Abstract

In this paper, we introduce a class of random walks with absorbing states on simplicial complexes. Given a simplicial complex of dimension d, a random walk with an absorbing state is defined which relates to the spectrum of the k‐dimensional Laplacian for 1 ≤ k ≤ d. We study an example of random walks on simplicial complexes in the context of a semi‐supervised learning problem. Specifically, we consider a label propagation algorithm on oriented edges, which applies to a generalization of the partially labelled classification problem on graphs. © 2016 Wiley Periodicals, Inc. Random Struct. Alg., 49, 379–405, 2016

Keywords: spectral theory, random walks, simplicial complexes

1. Introduction

The relation between spectral graph theory and random walks on graphs is well studied and has both theoretical and practical implications 2, 13, 14, 16. Consider an unweighted and undirected graph G = (V, E). A random walk on the graph G is defined via a Markov chain on the set of vertices, with transition probability matrix P = I – D −1 W where W is the graph adjacency matrix, and D is a diagonal matrix with D ii the degree of vertex i. The graph Laplacian is defined as Δ = D – W so P = D −1Δ. Connections between spectral properties of the graph Laplacian and properties of random walks are well understood 13, the mixing time of a random walk on a graph is one connection. A key observation is that the stationary distribution of a random walk on a graph is related to the harmonics of the graph Laplacian 13. Expander graphs are another important example that highlights the relationship between random walks and spectral graph theory 2, 7, 13.

Graphs are common objects on which stochastic processes are defined, however they are limited in their ability to represent interactions between more than two objects. Simplicial complexes provide a language to describe such high‐order interactions. In this paper we will define stochastic processes on simplicial complexes and examine what properties of random walks on graphs extend to random walks on simplicial complexes. The idea of extending results of random walks on graphs to random walks on simplicial complexes have only recently been explored 6, 17, 19, 20. There has also been recent activity studying the related problem of extending the notion of expander graphs to simplicial complexes and finding isoperimetric inequalities for simplicial complexes 8, 15, 17, 18, 21. In contrast, the understanding of quasirandom properties of hypergraphs is relatively well developed 4, 5, 10, 12.

The main objective of this paper is to define random walks on simplicial complexes by a Markov chain and relate the stationary distribution of the chain to harmonics of the Hodge Laplacian. The extension of the graph Laplacian to simplcial complexes goes back to the work of Eckmann 9 where higher‐order combinatorial or Hodge Laplacians were defined. The spectrum of the k‐th order Hodge Laplacian provides information on whether the k‐th (co)homology class is trivial. The size of the spectral gap can be considered a “measure” of how far the complex is from having nontrivial (co)homology. In this paper we will show that the stationary distribution of the random walk on the simplicial complex captures nontrivial k‐th (co)homology classes.

The main differences between random walks on graphs and random walks on simplicial complexes are as follows:

Detection of higher‐order homology groups: The topological properties studied on graphs are usually limited to connected components and cycles–these are the zeroth and first homology groups, H 0 and H 1 respectively. In the case of simplicial complexes we can examine higher dimensional analogs of cycles, represented by the higher homology groups H 2, H 3 respectively. Understanding the relationship between random walks on simplicial complexes and their homology is one of our aims.

The role of orientation: In order to study simplicial homology each simplex in a complex is required to have an orientation, an ordering on its vertices that can be either positive or negative. Conceptually, the biggest difference form the graph case is that for random walks on simplicial complexes a Markov chain needs to be defined on each orientation and the two chains need to be coupled.

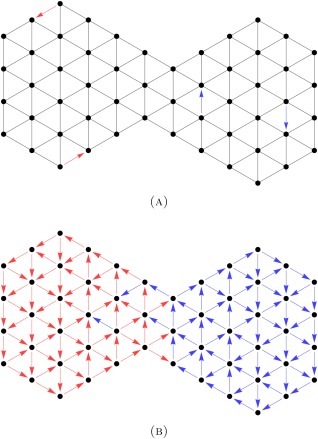

Defining neighbors: In a graph there is only one logical way to define neighboring vertices–if they are connected by an edge. Two simplexes in a simplicial complex can be connected in various ways, see Fig. 1. In this paper we focus on the case where two k‐simplexes are considered to be neighbors if they share a common (k – 1)‐dimensional face. In 17 two k‐simplexes are considered neighbors if they are faces of a common (k + 1)‐dimensional simplex (a coface). These different definitions of neighborhoods yield random walks with different properties.

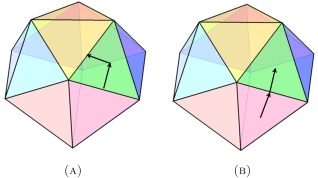

Figure 1.

(A) The image on the left corresponds to the random walk between two edges that share a triangle. (B) The image on the right corresponds to the random walk between two triangles that share an edge.

1.1. Motivation and Related Work

A strong motivation for studying random walks on simplicial complexes is a desire to capture geometry as well as topology. Consider two objects: (a) a solid three dimensional disc and (b) the same disc with a small hole punctured in the middle. Generate a graph G s from a random sample of the solid disc and a graph G p from a random sample of the punctured disc. If the puncture is small the spectra of the graph Laplacian of G s and the graph Laplacian G p will be very similar. In contrast if one generates a simplicial complex or triangulation of the solid and punctured disc, T s and T p respectively, the spectra of higher‐order Hodge Laplacians will not be the same since the inner boundary of the disc with a hole will be detected by the Hodge Laplacian.

The two operators needed to define the Hodge Laplacian are the boundary map and the coboundary map. Here we provide an intuition for these maps and describe how they relate to the different walks shown in Fig. 1. A rigorous formulation will be provided in Section 2.3. The boundary operator ∂ k maps k‐dimensional complexes to (k – 1) dimensional simplices, for example ∂ 1 maps edges to vertices and ∂ 2 maps triangles to edges. The coboundary operator δ k goes in the opposite direction, it defines a map from lower‐dimensional complexes to higher‐dimensional complexes. The k‐th Hodge Laplacian for dimension k > 0 is composed of two components

These two components will correspond to the two definitions of random walks displayed in Figure 1. One can define a random walk between triangles that share an edge, Fig. 1b, this corresponds to δ 1 ∂ 2 since each move in the walk can be thought of as a composition of moving from a triangle to an edge and back to a triangle. One can also define a random walk between edges that share a triangle, Fig. 1a, this corresponds to ∂ 2 δ 1 since each move in the walk can be thought of as a composition of moving from an edge to a triangle back to an edge. One can also define a walk that combines both types of walks. Walks corresponding to ∂ k + 1 δ k were introduced by Parzanchevski and Rosenthal 17. In this paper we will introduce walks corresponding to δ k–1 ∂ k as well as walks that relate to the Hodge Laplacian.

The following example motivates the walk in Fig. 1B. Consider the 2‐dimensional simplicial complex formed by a hollow tetrahedron (or any triangulation of the 2‐sphere). We know that the complex has nontrivial 2‐dimensional homology since there is a void. However, this homology cannot be detected by the random walk defined in 17, because there are no tetrahedrons that can be used by the walk to move between the triangles. In general, the walk defined in 17 can detect homology from dimension 0 to co‐dimension 1, but never co‐dimension 0. Hence, a new walk which can travel from triangles to triangles through edges is needed to detect the void.

In addition to the two types of random walks explored in this paper and in 17, a recent paper by Rosenthal 19 considers a notion of branching processes on simplicial complexes. We will return to a discussion of the similarities and differences of these Markov processes in Section 7.

1.2. Overview of paper

In Section 2 we state the notation used in this paper and define chains, cochains, and the Hodge Laplacian. In Section 3 we define a random walk from a k‐simplex to another k‐simplex through a (k – 1)‐dimensional face. We then relate the stationary distribution of the walk to the harmonics of the δ k–1 ∂ k component of the Hodge Laplacian and provide mixing times. In Section 4 we define a random walk from a k‐simplex to another k‐simplex through a (k + 1)‐dimensional coface and relate the stationary distribution to the harmonics of the ∂ k + 1 δ k component of the Hodge Laplacian. The results in this section can be considered a restatement of the results in 17. In Section 5 we define a walk that captures the harmonics of the entire Hodge Laplacian. In Section 6 we provide some examples of random walks to illustrate some of our ideas and present a novel machine learning algorithm based on random walks on simplicial complexes.

2. Definitions

In this section we define the notion of a simplicial complex X, the chain and cochain complexes associated to it, and the k‐Laplacian.

2.1. Simplicial Complexes

By a simplicial complex we mean an abstract finite simplicial complex. Simplicial complexes generalize the notion of a graph to higher dimensions. Given a set of vertices V, any nonempty subset σ ⊆ V of the form σ = {v 0, v 1,…,v j} is called a j‐dimensional simplex, or j‐simplex. A simplicial complex X is a finite collection of simplexes of various dimensions such that X is closed under inclusion, i.e., τ ⊆ σ and σ ∊ X implies τ ∊ X. While we will not need it for this paper, one can include the empty set in X as well (thought of as a (–1)‐simplex). Given a simplicial complex X, denote the set of j‐simplexes of X as X j. We say that X is d‐dimensional or that X is a d‐complex if but . Graphs are 1‐dimensional simplicial complexes. We will assume throughout that X is a d‐complex for some fixed d ≥ 1.

If , and , then we call τ a face of σ and σ a coface of τ. Every j‐simplex σ for j ≥ 1 has exactly j + 1 faces but may have any number of cofaces. Given σ ∊ X j we define (called the degree of σ) to be the number of cofaces of σ. Two simplexes are upper adjacent if they share a coface and lower adjacent if they share a face. The number of simplexes upper adjacent to a j‐simplex σ is while the number of simplexes lower adjacent to σ is where the sum is over all faces τ of σ.

Orientation plays a major role in the geometry of a simplicial complex. For j > 0, an orientation of a j‐simplex σ is an equivalence class of orderings of its vertices, where two orderings are equivalent if they differ by an even permutation. Notationally, an orientation is denoted by placing one of its orderings in square brackets, as in [v 0,…,v j]. Every j‐simplex σ has two orientations which we think of as negatives of each other. We abbreviate these two orientations as σ + and (which orientation σ + corresponds to is chosen arbitrarily). For any j ≥ 1, we will use to denote a choice of positive orientation σ + for each j‐simplex σ. The set of all oriented j‐simplexes will be denoted by , so that and for any choice of orientation .

An oriented simplex σ + = [v 0,…,v j] induces an orientation on the faces of σ as . Conversely, an oriented face of σ induces an orientation σ + = [v 0,…,v j] on σ. Two oriented j‐simplexes σ + and are said to be similarly oriented, and we write , if σ and are distinct, lower adjacent j‐simplexes and σ + and induce the opposite orientation on the common face (if σ and are upper adjacent as well, this is the same as saying that σ + and induce the same orientation on the common coface). If they induce the same orientation on the common face, then we say they are dissimilarly oriented and write . We say that a d‐complex X is orientable if there is a choice of orientation such that for every pair of lower adjacent simplexes , the oriented simplexes are similarly oriented.

Orientation is typically not a property of vertices of a graph and the term oriented graph is taken to mean a directed graph. For j = 0 there are no distinct orderings, and one can think of each vertex v as being positively oriented by default (so, v + = v) and having an oppositely‐oriented counterpart . However, introducing orientation to a graph is not standard. The collection of oriented 0‐simplexes, does not reduce to the standard graph setting, since graphs are not considered to be oriented. We will discuss orientation in more detail in Section 3. We will see in Section 3 that the random walks we define on simplicial complexes do not reduce to a simple random walk on a graph.

2.2. Chain and Cochain Complexes

Given a simplicial complex X, we can define the chain and cochain complexes of X over . The space of j‐chains is the vector space of linear combinations of oriented j‐simplexes with coefficients in , with the stipulation that the two orientations of a simplex are negatives of each other in C j (as implied by our notation). Thus, any choice of orientation provides a basis for C j. The space of j‐cochains is then defined to be the vector space dual to C j. These spaces are isomorphic and we will make no distinction between them. Usually, we will work with cochains using the basis elements , where is defined on a basis element as

The boundary map is the linear map defined on a basis element [v 0,…,v j] as

The coboundary map is then defined to be the transpose of the boundary map. In particular, for ,

When there is no confusion, we will denote the boundary and coboundary maps by ∂ and δ. It holds that ∂∂ = δδ = 0, so that (C j, ∂ j) and (C j, δ j) form chain and cochain complexes respectively.

The homology and cohomology vector spaces of X over are

It is known from the universal coefficient theorem that H j is the vector space dual to H j. Reduced (co)homology can also be used, and it is equivalent to including the nullset as a (–1)‐dimensional simplex in X.

2.3. The Hodge Laplacian

The k‐Laplacian of X is defined to be

where

The Laplacian is a symmetric positive semi‐definite matrix, as is each part and . From Hodge theory, it is known that

and the space of cochains decomposes as

where the orthogonal direct sum ⊕ is with respect to the “usual” inner product

For much of this paper we will focus on the half of the Laplacian. Trivially, and the smallest nontrivial eigenvalue of is therefore given by

where denotes the Euclidean norm on C k. A cochain f that achieves the minimum is an eigenvector of λ k. It is easy to see that any such f is also an eigenvector of Δk with eigenvalue λ k and that, therefore, λ k relates to homology:

Remark 2.1

Given a choice of orientation can be written as a matrix with rows and columns indexed by , the entries of which are given by

Changing the choice of orientation amounts to a change of basis for . If the row and column indexed by σ + are instead indexed by σ –, all the entries in them switch sign except the diagonal entry. Alternatively, can be characterized by how it acts on cochains

Note that since is a cochain, .

The remainder of this section states some relevant properties on the behavior of .

Definition 2.2

A d‐complex X is called k‐connected (1 ≤ k ≤ d) if for every two k‐simplexes σ, there exists a chain of k‐simplexes such that σ i is lower adjacent to σ i + 1 for all i. For a general d‐complex X, such chains define equivalence classes of k‐simplexes, and the subcomplexes induced by these are called the k‐connected components of X.

Definition 2.3

A d‐complex X is called disorientable if there is a choice of orientation of its d‐simplexes such that all lower adjacent d‐simplexes are dissimilarly oriented. In this case, the d‐cochain is called a disorientation.

Remark 2.4

Disorientability was defined in 17 and shown to be a higher‐dimensional analog of bipartiteness for graphs. Note that one can also define X to be k‐disorientable if the k‐skeleton of X (the k‐complex given by the union ) is disorientable, but this can only happen when k = d. For example if k < d then there exists a (k + 1)‐simplex . Given any two dissimilarly oriented faces of σ +, say, and , we find that the simplex cannot be dissimilarly oriented to both of them simultaneously.

The following Lemma is similar to Proposition 2.7 in 17.

Lemma 2.5

Let X be a d‐complex, 1 ≤ k ≤ d and .

is the disjoint union of where Xi are the k‐connected components of X.

The spectrum of is contained in .

The kernel of is exactly .

The upper bound (k + 1)M is attained if and only if k = d and X has a d‐connected component that is both disorientable and of constant (d – 1)‐degree.

Statement (1) follows from the fact that can be written as a block diagonal matrix with each block corresponding to a component X i. Statement (3) is easy to verify.

For statement (2), let f be an eigenvector of with eigenvalue λ, let be a choice of orientation such that for all and suppose . Then by Remark 2.1,

where the third inequality results from the fact that any k‐simplex is lower adjacent to at most (k + 1)(M – 1) other k‐simplexes. Therefore, λ ≤ (k + 1)M.

It now remains to prove statement (4). Looking back at the inequalities, it holds that λ = (k + 1)M only if σ – ∼ τ + and f(σ +) = f(τ +) whenever σ and τ are lower adjacent, and the faces of σ all have degree M. But since f(σ +) = f(τ +), the same reasoning can be applied to f(σ +) for all σ lower adjacent to τ and eventually to all k‐simplexes in the same k‐connected component X i. Ultimately, this implies that X i has constant (k – 1)‐degree and is k‐disorientable (and hence k = d).

To see that this bound is indeed attainable, consider a disorientable d‐complex with constant (d – 1)‐degree M (this includes, for instance, the simplicial complex induced by a single d‐simplex). Let be a choice of orientation such that all lower adjacent d‐simplexes are dissimilarly oriented. Then a disorientation f on X d will satisfy

for every τ +.

3. Random walks and

In this section we will define a random walk on a d‐complex. The stationary distribution of the random walk is related to the harmonics of for 1 ≤ k ≤ d. The mixing time of the walk is a function of the spectral gap of . A notion of maximal degree M will appear in our results.

The following random walk is a Markov chain that is related to .

Definition 3.1

The state space is the set of oriented complexes as well as a death state .

Neighbors: two oriented k‐cells σ and are called neighbors, which we denote as if they share a (k – 1) face and have opposite orientation on the shared face.

where denotes the opposite orientation of is the notation for moving to an oriented neighboring cell, and is the notation for moving to a disoriented neighboring cell. The probability of transitioning into a death state is where Lk(σ) are the set of simplexes that are oriented or disoriented neighbors of σ. The transition probabilities are constrained to ensure P is a stochastic matrix.

The intuition behind the steps in the transition matrix follows. We first consider the case where there is no death state. In this case with probability α 0 one stays in the same state. The probability of moving to an oriented neighboring cell is α 1. The probability of moving in one step to disoriented neighboring cell is α 2. The death state is needed when the total probability, 1 – β, of either moving to a new cell or staying at the same cell is less than one. In this case the remaining probability β corresponds to moving to an absorption or death state. There is also an interpretation of the Markov chain as two coupled Markov chains. Again for simplicity we consider the case where there is no death state. Consider one Markov chain on the state space and another chain on . The two chains are coupled as follows: at each time step one can either jump to another state in the chain or jump to the corresponding state of opposite orientation in the other chain and proceed in the new chain.

We now specify the parameter values of the transition matrix P.

Definition 3.2

We define transition matrix P based on Definition 3.1 with parameter values

where M is the maximal degree corresponds to the order of , and 0 < p < 1.

We selected this parameter specification for ease of analysis and to impose certain intuitive properties of the walk. The value 0 < p < 1 determines the laziness of the walk, the probability of not changing states. We set the probability of moving to a neighbor as equal to the probability of moving to a disoriented neighbor, α 1 = α 2. One reason for the existence of the death state is that if α 1 = α 2 the total transition probability at each state may not sum to one so we use the death state as an absorption state. A natural choice for α 1 is a uniform probability of transitioning to each of the neighbors of a complex. We set , this would be a uniform transition probability if all complexes had maximal degree M, this is the analog of a M‐regular graph. We suspect that the qualitative phenomena and insights we derive for the specified walk will transfer to walks defined by other parameters, such as the more natural uniform walk.

In the remainder of this section we will relate the stationary distribution of the stochastic matrix P to the geometry of the simplicial complex on which the random walk is defined. Specifically we are interested in relating the stationary distribution to the harmonics of . From Hodge theory we know that the relevant geometry is characterized by the space of k‐cochains, f ∊ C k. A k‐cochain is antisymmetric under exchange of any pairs of indices which implies the following constraint

Given the transition matrix P we can compute the following expected value from a k‐cochain at time t as

where p t(σ) is the probability vector over states X + at time t and is the probability vector over states X – at time t. We will be interested in the convergence of the following “expectation process”

If we consider the random walk as two coupled chains, on X + and X – respectively, the above can be thought of as the difference in the marginal probability vectors of each chain.

In the case of a graph with a stochastic matrix P G one studies the convergence of p t the probability vector over states at time t to a stationary distribution π. The rate of convergence of the probability vector p t to π as well as the stationary distribution π are related to harmonics of the graph Laplacian. The graph case has some fundamental differences from the case of simplicial complexes in that graphs are not defined with orientation and the antisymmetric constraint that is required of k‐cochains is not relevant.

Note that the orientation of the complexes does not affect the Hodge Laplacian. One can define the following propagation matrix on that corresponds to the random walk P in Definition 3.2.

Definition 3.3

The propagation matrix B of the lower k‐walk is defined to be a square matrix indexed by with

There is a natural relation between the propagation matrix and the Markov matrix. We define a matrix Q with rows indexed by and columns indexed by S. The values of the matrix are specified as follows: Q ij = 1 if state i and state j are neighbors, Q ij = –1 if state i and state j with reverse orientation are neighbors, and Q ij = 0 if state j is a death state. This matrix has the following property QP = BQ. We now state some properties of the propagation matrix and its relation to the transition probability matrix P.

Proposition 3.4

The propagation matrix B is given by

In addition, B satisfies QP = BQ and

The first claim is a straightforward computation based on Definition 3.3. The second claim is equivalent to the equality QP = BQ, which we now prove. If and is the column of P indexed by , then the column of QP indexed by is . Using the definition of Q, the following holds

Similarly, note that where is the vector assigning 1 to and 0 to all other elements in S. If is the zero vector. Otherwise, if then and

For what follows, we define to be the marginal difference of the random walk on X starting at τ + at time t. Also, let be a choice of orientation and denote .

Corollary 3.5

The spectrum of B is contained in , with the upper bound acheived by cochains in ker ∂k and the lower bound acheived if and only if k < = d and there is a disorientable d‐connected component of constant (d – 1)‐degree.

Statement (1) is easy to verify using Lemma 2.5 and Proposition 3.4. Statement (3) follows from the inequality where A is a matrix, f is a vector, and is the spectral norm of A.

It remains now to prove statement (2). If τ has a coface σ, let (with σ + being any orientation of σ) which implies . Let be an orthogonal basis for C k such that are eigenvectors of B. Then,

By Corollary 3.5 we know that when p ≠ 0,1. We rescale B to define the normalized propagation matrix

for which the trivial convergence due to is eliminated. We also define the normalized marginal difference The next two theorems show that the homology of X can be determined from the limiting behavior of the normalized marginal difference.

Theorem 3.6

The limit of the normalized marginal difference exists for all τ+ if and only if has no eigenvalue λ ≤ –1. Furthermore, whenever exists, where is the projection map onto ker ∂k.

Note that by Corollary 3.5, the spectrum of is upper bounded by 1 and the eigenspace of the eigenvalue 1 is exactly ker ∂ k. Let f 1,…,f i be an orthogonal basis for C k such that f 1, …, f i are eigenvectors of with eigenvalues γ 1,…,γ i. Then any can be written as a linear combination so that

Since the f j form a basis, converges if and only if converges for each j. In other words, converges if and only if for every j, α j = 0 or γ j > –1. Furthermore, the limit (when it exists) is always

Finally, suppose has an eigenvalue λ ≤ –1. Then there is an eigenvector f such that does not converge. Since the set of cochains spans , f can be written as a linear combination of the cochains and therefore must not converge for some τ +.

Theorem 3.7

where denotes the projection map onto ker δk.

The same holds when or k = d or there are no disorientatable d‐connected components of constant (d – 1)‐degree.

The proof follows mostly from Theorem 3.6. According to Theorem 3.6, exists for all τ + if and only if the spectrum of is contained in (–1, 1]. Using Corollary 3.5 and the definition , we know that the spectrum of is contained in . Now each of the following statements imply each other

which proves that the spectrum of is indeed contained in (–1, 1] when . Since the span all of C k, the span all of ker ∂ k, and hence the span all of ker δ k.

In the case that , the spectrum of is contained in [–1, 1]. However, as long as –1 is not actually an eigenvalue of , the result still holds. According to Corollary 3.5, –1 is an eigenvalue if and only if k = d and there is a disorientable d‐connected component of constant (d – 1)‐degree. The case p = 1 is trivial () and is not considered.

Finally, if the spectrum of B lies in (–1, 1] and λ is the eigenvalue of contained in (–1, 1) with largest absolute value, then

for all f. To see this, let f 1,…,f i be an orthonormal basis for C k such that f 1,…,f i are eigenvectors of with eigenvalues γ 1,…,γ i. Then any f can be written as a linear combination so that and

In particular, if then the spectrum of is contained in [0,1] and therefore .

Note the dependence of the theorem on both the probability p of remaining in a state and on M. We can think of M as the maximum amount of “branching”, where M = 2 means there is no branching, as in a pseudomanifold of dimension d = k, and large values of M imply a high amount of branching. In particular, the walk must become more and more lazy for larger values of M in order to prevent the marginal difference from diverging. However, since for all M a lazy probability of at least will always ensure convergence. While there is no explicit dependence on k or the dimension d, it is easy to see that M must always be at least d – k + 1 (for instance, it is not possible for a triangle complex to have maximum vertex degree 1). A natural question is how varying the transition matrix P in Definition 3.2 will change the scaling behavior of the walk with respect to M and p.

We would also like to know whether the normalized marginal difference always converges to 0. Note that if τ + has a coface, then we already know that stays bounded away from 0 according to Corollary 3.5. However, if τ has no coface, then may be perpendicular to ker ∂ k, allowing to die in the limit as we see in the following corollary.

Corollary 3.8

If τ has no coface, Hk = 0, and if then

The same is true when and or k = d and there are no disorientable d‐connected components of constant (d – 1)‐degree,

Under all conditions stated, converges. If τ has no coface, then is in the orthogonal complement of im ∂ k+1, because all elements of im ∂ k+1 are supported on oriented faces of (k + 1)‐simplexes. If H k = 0 then ker ∂ k = im ∂ k+1 and

4. Random walks and

The random walk described by Parzanchevski and Rosenthal in 17 is the “dual” of the random walk defined in the previous section in that one traverses between simplexes via cofaces rather than faces. We include Rosenthal and Parzanchevski's proposed walk in this section so the paper is self contained and we can compare the walk on with the one in the previous section as well as a walk corresponding to the full Hodge Laplacian. We do not present any new results in this section.

Let X be a d‐complex, 0 ≤ k ≤ d – 1, and 0 ≤ p < 1.

Definition 4.1

The State space is the set of oriented complexes as well as a death state, .

Co‐neighbors: two oriented k‐cells are called co‐neighbors, which we denote as if they share a (k + 1) coface and have opposite orientation on the shared face.

for all .

The definition of the walk in 17 is slightly different. There is no death state in 17 because the case of k = d – 1 was examined and it was assumed that every k‐simplex had at least one coface. As in the previous section we can relate the above Markov matrix to and QPtν is the marginal difference after t steps for the random walk via co‐neighbors. Here Q is the same matrix as in the previous section. There is again a propagation matrix A such that QPtν = AtQν and A relates to . As before the marginal difference converges to 0 for all initial distributions. We scale A by a constant to provide a normalized propagation matrix and a normalized marginal distribution that describes the limiting behavior. The limiting behavior of the normalized marginal difference reveals homology similar to Theorem 3.7

There are a few differences between the walk across faces and the walk across cofaces. The norm of the normalized marginal difference for the walk across cofaces starting at a single oriented simplex stays bounded away from 0 (see Proposition 2.8 of 17), whereas this need not hold for the walk across faces (as in Corollary 3.8). This is because in the walk across cofaces every starting point has some nonzero inner product with an element of . The second difference is the threshold values for p in Theorem 3.7 and Theorem 2.9 of 17. For the walk across faces, homology can be detected for (where ) whereas for the walk across cofaces the threshold is . Hence, the walk across cofaces is sensitive to the dimension while the walk across faces is sensitive to the maximum degree. In both cases, is always sufficient to detect homology and allows us to put a bound on the rate of convergence.

5. Random walk related to the full Hodge Laplacian

The existence of random walks across faces and cofaces that respectively correspond to the two parts of the Hodge Laplacian suggests that there may be a random walk for the entire Laplacian, . In this section, we state results for a random walk that captures both parts of the Hodge Laplacian. One can define a weighted notion of the Hodge Laplacian to weighted Laplacians

where W j denotes a diagonal matrix with diagonal entries equal to positive weights, one for each j‐simplex. In general, one can also define an operator L k that is a generalized notion of the k‐th Hodge Laplacian.

Definition 5.1

Let be a choice of orientation. A generalized Laplacian matrix is a square matrix L such that

the rows and columns of L are indexed by ,

L has nonnegative diagonal entries,

whenever L has a zero on the diagonal, all other entries in the same row or column are also zero,

L is a non‐negative operator.

Definition 5.2

Let be a choice of orientation, L a generalized Laplacian matrix, and p ∊ [0, 1]. Given L we define a normalization matrix as a diagonal matrix with if and otherwise.

We define the p‐lazy propagation matrix related to L to be

where . The case K = 0 is degenerate and is not considered. In addition, we define the normalized p‐lazy propagation matrix

Note that whenever K = 1, . In particular, this is true in the graph case when L = Δ0.

We will now define the Markov transition matrix in terms of the propagation matrix.

Definition 5.3

Let be a choice of orientation, L a generalized Laplacian matrix, p ∊ [0, 1], and let A L,p be defined as above. The state space is which is a (2n + 1) × (2n + 1) matrix where the number of unoriented complexes is n. The Markov transition matrix is defined as

where the first n rows and columns correspond to , the second n rows and columns correspond to , and the last row and column correspond to the death state. The elements of matrix A correspond to transition probabilities between simplexes of the same orientation and for i, j = 1,…,n. The elements of matrix correspond to transition probabilities between simplexes of the opposite orientation and for i, j = 1,…,n. The elements in the column vector v are the probabilities of entering the death set from one of the complexes and

The row vector is the transpose of v. The 1 in the lower diagonal states implies that one does not leave the death state.

The following lemma shows that P L,p is a left stochastic matrix.

Lemma 5.4

Let be a choice of orientation, L a generalized Laplacian, and p ∊ [0, 1]. The matrix defined above is a left stochastic matrix for an absorbing Markov chain on the state space S (i.e., ) such that Θ is an absorbing state and Prob(σ → σ) = p for all σ ≠ Θ.

It is clear by the definition of P L,p that Θ is an absorbing state. To see that Prob(σ → σ) = p for all s ≠ Θ, note that

and hence by the definition of P L,p,

for all σ. It is also clear by the definition of P L,p that the entries are nonnegative for any . Hence, in order to show that P L,p is left stochastic we need only to prove that for all . By the symmetries inherent in P L,p, the value of the sum is the same for σ = σ + as it is for σ = σ –. For any σ = σ +,

The following theorem relates the Markov transition matrix P L,p to the generalized Hodge Laplacian L.

Theorem 5.5

Let be a choice of orientation, L a generalized Laplacian matrix, p ∊ [0, 1], and let AL,p and PL,p be defined as above. In addition, let Q be defined as in Section 3. Then

In other words, the evolution of the marginal differences after n steps with initial distribution ν is governed by the propagation matrix: .

Using the definition of Q

Similarly, note that where 1 s is the vector assigning 1 to s ∊ S and 0 to all other elements in S. If is the zero vector. Otherwise, if s = τ ± then . Thus,

Finally, we conclude with a few results motivating the normalized propagation matrix and showing how the limiting behavior of the marginal difference relates to the kernel and spectrum of L.

Theorem 5.6

Let be a choice of orientation, L a generalized Laplacian matrix with (Λ > 0). Then for the following statements hold:

for every initial distribution ν,

for every initial distribution ν, where denotes the projection map onto the kernel of L,

The proof is the same as in the proofs of Corollary 3.5 and Theorem 3.7 and mostly boil down to statements about the spectra of A L,p and . Note that since where the eigenspace of the eigenvalue 1 is equal to the kernel of L, and the largest eigenvalue of less than 1 is .

As an example of the applicability of this framework, is used with L = Δk to perform label propagation on edges in the next section.

6. Walks on triangle complexes and random walks for semi‐supervised learning

In this section we provide some examples of random walks on simplicial complexes to provide some intuition. In addition we extend the label propagation algorithm used in machine learning for semi‐supervised learning from graphs to simplicial complexes.

6.1. Triangle Complexes

We begin by reviewing local random walks on graphs as defined by Fan Chung in 3. Given a graph G = (V, E) and a designated “boundary” subset , a ‐lazy random walk on can be defined to satisfy a Dirichlet boundary condition on S (meaning a walker is killed whenever it reaches S). The walker starts on a vertex and at each step remains in place with probability or else jumps to one of the adjacent vertices with equal probability. The boundary condition is enforced by declaring that whenever the walker would jump to a vertex in S, the walk ends. Thus, the left stochastic matrix P for this walk can be written as

where denotes that vertices v and are adjacent and dv is the number of edges connected to v. Note that P is indexed only by , and that its columns sums may be less than 1. The probability of dying is implicitly encoded in P as the difference between the column sum and 1. As was shown in 3, P is related to a local Laplace operator also indexed by . If D is the degree matrix and A the adjacency matrix, the graph Laplacian of G is Δ = D – A. We denote the local Laplacian as ΔS, where S in the subscript means rows and columns indexed by S have been deleted. The relation between P and ΔS is

Hence, the existence and rate of convergence to a stationary distributions can be studied in terms of the spectrum of the local Laplace operator.

Now suppose we are given an orientable 2‐dimensional non‐branching simplicial complex X = (V, E, T) where T is the set of triangles (subsets of V of size 3). Non‐branching means that every edge is contained in at most 2 triangles. We can define a random walk on triangles fundamentally identical to a local walk on a graph which reveals the 2‐dimensional homology of X. The ‐lazy 2‐walk on T starts at a triangle t 0 and at each step remains in place with probability or else jumps to the other side of one of the three edges. If no triangle lies on the other side of the edge, the walk ends. The transition matrix B for this walk is given by

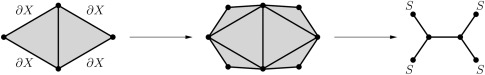

where denotes t and share an edge. This is the same transition matrix as P, in the case that dv = 3 for all . In this case, the analog of the set S is the set of triangles that do not share an edge, this is the boundary of X. To draw an explicit connection, imagine adding a triangle to each boundary edge, obtaining a larger complex . See Fig. 2

Figure 2.

Making the Dirichlet boundary condition explicit, and translating into a graph.

Then take the “dual graph” G = (V, E) of by thinking of triangles as vertices (so, ) and connecting vertices in G with an edge if the corresponding triangles in share an edge. We do not add a vertex for each outer face. Choose the vertices corresponding to the added triangles to be the boundary set S. Now the matrix P associated to the local random walk on G is indistinguishable from the matrix B associated to the random walk on X. In addition, it can be seen that ΔS on G is the same as Δ2, the 2‐dimensional Laplacian on X defined with respect to a given orientation (recall that we have assumed orientability). The following states the relation between the transition matrices and Laplacians:

See Section 2 for the definition of Δ2, and the appendix of 21 for more on the connection between ΔS and Δ2.

It is a basic fact that the kernel of Δ2 corresponds to the 2‐dimensional homology group of X over . Therefore, there exists a stationary distribution for the random walk if and only if X has nontrivial homology in dimension 2. Additionally, the rate of convergence to the stationary distribution (if it exists) is governed by the spectral gap of Δ2. In particular, the following statements hold:

Given a starting triangle t 0, the marginal distribution of the random walk after n steps is where is the vector assigning a 1 to t 0 and 0 to all other triangles. For any t 0, the marginal distrubition converges, i.e., exists.

The limit is equal to 0 for all starting triangles t 0 if and only if X has trivial homology in dimension 2 over .

- The rate of convergence is given by

where λ 2 is the smallest nonzero eigenvalue of Δ2.

The example given here is constrained by certain assumptions (orientability and the non‐branching property), which allows for the most direct interpretation with respect to previous work done on graphs.

6.2. Label Propagation on Edges

In machine learning random walks on graphs have been used for semi‐supervised learning. In this section we will generalize a class of algorithms on graphs called “label propagation” algorithms to simplicial complexes, specifically we extend the algorithm described in 23 (for more examples, see 1, 11, 22). The goal of semi‐supervised classification learning is to classify a set of unlabelled objects {v 1, …, vu}, given a small set of labelled objects and a set E of pairs of objects {vi, vj} that one believes a priori to share the same class. Let G = (V, E) be the graph with vertex set and let P be the probability matrix for the usual random walk, i.e.,

where dj is the degree of vertex j. We denote the classes an object belongs to as c = 1,…, C and an initial distribution is the a priori confidence that each vertex is in class c, a recursive label propagation process proceeds as follows.

- For t = 1,…, ℓ and c = 1,…,C:

- Set

- Reset for all vi labelled as c.

Consider as an estimate of the relative confidence that each object is in class c.

- For each unlabelled point vi, i ≤ u, assign the label

The number of steps ℓ is set to be large enough such that is close to its limit . If G is connected, it can be shown that is independent of the choice of . Even if G is disconnected, the algorithm can be performed on each connected component separately and again the limit for each component will be independent of the choice of .

We will now adapt the label propagation algorithm to higher dimensional walks, namely, walks on oriented edges. Given any random walk on the set of oriented edges (and an absorbing death state Θ), its probability transition matrix P could be used to propagate labels in the same manner as the above algorithm. However, this will treat and label the two orientations of a single edge separately as though they are unrelated. We will use walks that are related to the 1‐forms and harmonics of the Hodge Laplacian. A major difference with propagation on a goal is that the labels will be oriented. For example, given an oriented edge e + and a class c, the propagation algorithm may assign a positive confidence that e + belongs to class c or a negative confidence that e + belongs to class c, which we view as a positive confidence that e + belongs to class –c or, equivalently, that e – belongs to class c. This construction applies to systems in which every class has two built‐in orientations or signs, so the class information has a directed sense of “flow”.

For example, imagine water flowing along a triangle complex in two dimensions. Given an oriented edge, the water may flow in the positive or negative direction along the edge. A “negative” flow of water in the direction of e + can be interpreted as a positive flow in the direction of e –. Perhaps the flow along a few edges is observed and one wishes to infer the direction of the flow along all the other edges. Unlike in the graph case, a single class of flow already presents a classification challenge. Alternately, consider multiple streams of water colored according to the C classes, in which case we may want to know which stream dominates the flow along each edge and in which direction. In order to make these inferences, it is necessary to make some assumption about how labels should propagate from one edge to the next. When considering water flow, it is intuitive to make the following two assumptions.

Local Consistency of Motion. If water is flowing along an oriented edge [vi, vj] in the positive direction, then for every triangle [vi, vj, vk] the water should also tend to flow along [vi, vk] and [vk, vj] in the positive directions.

Preservation of Mass. The total amount of flow into and out of each vertex (along edges connected to the vertex) should be the same.

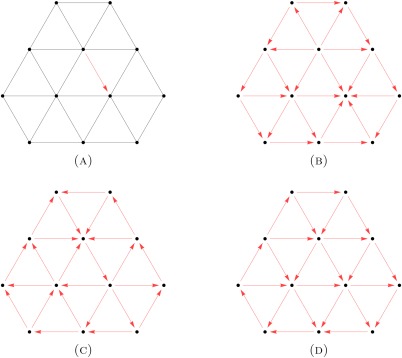

In fact, either one of these assumptions is sufficient to infer oriented class labels given the observed flow on a few edges. Depending on which assumptions one chooses, different normalized propagation matrices (see Section 5) may be applied. For example, setting the Laplacian matrix L to will enforce local consistency of motion without regard to preservation of mass, while will do the opposite. A reasonable way of preserving both assumptions is by using the full Hodge 1‐Laplacian L = Δ1, see Fig. 3 for a visual example of the contrast between walks.

Figure 3.

(A) An edge labelled on a 2‐complex. (B) Label propagation with , note the gradient like flows. (C) Label propagation with , note the short cycles or curl structure. (D) Label propagation with Δ1, note the cycle or Harmonic around the boundary.

We now state a simple algorithm, analogous to the one for graphs, that propagates labels on edges to infer a partially‐observed flow. Let X be a simplicial complex of dimension d ≥ 1 and let be a choice of orientation for the set of edges. Without loss of generality, assume that oriented edges have been classified with class c (not –c). Similar to the graph case, we apply a recursive label propagation process to an initial distribution vector measuring the a priori confidence that each oriented edge is in class c. See Algorithm 1 for the procedure. The result of the algorithm is a set of estimates of the relative confidence that each edge is in class c with some orientation. [Color figure can be viewed in the online issue, which is available at wileyonlinelibrary.com.]

After running the algorithm, an unlabelled edge ei is assigned the oriented class where .

We now prove that given enough iterations ℓ the algorithm converges and the resulting assigned labels are meaningful. The proof uses the same methods as the one found in 23 for the graph case.

Proposition 6.1

Using the notation of Section 5, assume that L is a Laplacian matrix with . Let be the normalized p‐lazy propagation matrix as defined in Definition 5.2. If and if no vector in kerL is supported on the set of unclassified edges, then Algorithm 1 converges. That is,

where A4 and A3 are submatrices of and ψc is the class function on edges labelled with ±c (for which ). In addition, depends neither on the initial distribution nor on the lazy probability p.

First, note that we are only interested in the convergence of for ei not labelled ±c. Partition and according to whether ei is labelled ±c or not as

The recursive definition of in Algorithm 1 can now be rewritten as . Solving for in terms of yields

In order to prove convergence of , it suffices to prove that A 4 has only eigenvalues strictly less than 1 in absolute value. This ensures that converges to zero (eliminating dependence on the initial distribution) and that converges to as k → ∞. We will prove that by relating Spec(A 4) to as follows.

First, partition L and DL in a similar way to as

so that

Hence Spec(A 4) is determined by , or to be more specific, . Furthermore, note that and are similar matrices that share the same spectrum. It turns out that the spectrum of is bounded within the spectrum of , which in turn is equal to by similarity. Let g be an eigenvector of with eigenvalue λ and let g 1,…, gi be an orthonormal basis of eigenvectors of (such a basis exists since it is a symmetric matrix) with eigenvalues γ 1,…, γi ∊ [0, Λ]. We can write

for some α 1,…, αi, where 0 c is the vector of zeros with length equal to the number of edges classified as ±c. Then

Because we assumed that γj ∊ [0, Λ] for all j, it would be a contradiction if λ >Λ or λ < 0. The case λ = 0 is possible if and only if αjγj = 0 for all j and therefore . Since we assumed that no vector in ker L is supported on the unlabelled edges, we conclude that . Finally, since we assumed that , we conclude that .

To see that the solution does not depend on p, note that I – A 4 is a submatrix of so that does not depend on p. Then write as

and note that is an off‐diagonal submatrix of and therefore does not depend on p either.

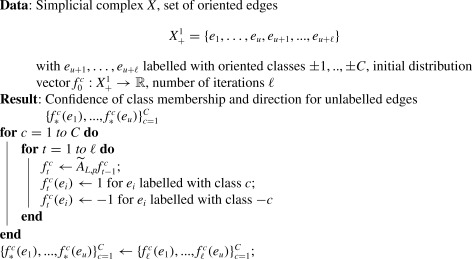

Algorithm 1. Edge propagtion algorithm.

1.

Note that while the limit exists, the matrix I – A 4 could be ill‐conditioned. In practice, it may be better to approximate with for large enough t. Also, the algorithm will converge faster for smaller values of p and if .

6.2.1. Simulation Study

We use two simulations to illustrate how Algorithm 1 works. In the first example, we describe how a single oriented edge of one class is propagated via the different walks. In the second example, we describe the case where to oriented edges with different class labels are propagated.

6.2.1.1. Propagating a single edge with one label

Figure 3A shows a simplicial complex in which a single oriented edge e 1 has been labelled red and all other edges are unlabelled. Figure 3B displays the result of propagating this single label 1000 steps using Algorithm 1 with , with the parameter p = 0.9, and f 0 equal to the indicator function on e 1 (edge e 1 is labelled red). The edges in Fig. 3B are oriented according to the sign of f 1000. Figures 3C and 3D display the results of propagating the same red edge by and L = Δ1 respectively. Propagation by results in gradient like flows while propagation by results in a more curl like structure. Propagation by the walk corresponding to the full Laplacian Δ1 results in longer cycles or harmonics as can be seen by the cycle around the boundary of the simplcial complex.

6.2.1.2. Propagating edges of different labels

Figure 4A shows a simplicial complex in which two edges have been labelled with class c = 1 (indicated by the red color) and two other edges have been labelled with class c = 2 (indicated by the blue color). Figure 4B displays the result of 1000 iterations of Algorithm 1 with L = Δ1, p = 0.9, and equal to the indicator function on the oriented edges labelled with classes c = 1, 2. The orientation of the edges are given by the sign of , if , or , if . Notice that only a small number of labels are needed to induce large‐scale circular motion. Near the middle, a few blue labels mix in with the red due to the asymmetry of the initial labels.

Figure 4.

(A)A2‐complex with two different labels on four edges. (B) Edge propagation with two classes with Δ1. [Color figure can be viewed in the online issue, which is available at wileyonlinelibrary.com.]

7. Discussion

In this paper, we introduced a random walk on simplicial complexes with a stationary distribution that is related to part of the k‐th Hodge Laplacian . We compared our result to the result in 17 which focused on the walk corresponding to part of the k‐th Hodge Laplacian . We also state a walk that corresponds to the full Hodge Laplacian Δk.

There remain many open questions about random walks on simplicial complexes and the spectral theory of higher order Laplacians. Possible future directions of research include:

What is the continuum limit for these random walks for a model where the random complex is generated by sampling a manifold.

Is it possible to use conditioning techniques from stochastic processes such as Doob's h‐transform to analyze these walks?

What applications do these walks have to problems in machine learning and statistics?

Acknowledgements

SM would like to thank Anil Hirani, Misha Belkin, and Jonathan Mattingly for useful comments. JS would like to thank Kevin McGoff for proofreading and useful comments.

Supported by NIH (System Biology) (to S.M.) (5P50‐GM081883); AFOSR (to S.M.) (FA9550‐10‐1‐0436); NSF (to S.M.) (CCF‐1049290); NSF (to J.S.) (DMS‐1045153; DMS‐12‐09155).

The copyright line for this article was changed on 14 February 2017 after original online publication.

Contributor Information

Sayan Mukherjee, Email: jbergen@uic.edu.

John Steenbergen, Email: sayan@stat.duke.edu.

References

- 1. Callut J., Françoisse K., Saerens M., and Dupont P., Semi‐supervised classification from discriminative random walks, Machine learning and knowledge discovery in databases, Springer‐Verlag, Berlin‐Heidelberg‐New York, 2008, pp. 162–177. [Google Scholar]

- 2. Chung F. R. K., Spectral graph theory, American Mathematical Society, Providence, RI, 1997. [Google Scholar]

- 3. Chung F. R. K., Random walks and local cuts in graphs, Linear Algebra Appli Vol. 423 (2007), 22–32. [Google Scholar]

- 4. Chung F. R. K., Quasi‐random hypergraphs revisited, Random Struct Algorithms 40 (2012), 39–48. [Google Scholar]

- 5. Chung F. R. K. and Graham R. L., Quasi‐random hypergraphs, Random Struct Algorithms 1 (1990), 105–124. [Google Scholar]

- 6. Cohen E., Mubyi D., Ralli P., and Tetali P., Inverse expander mixing for hypergraphs, Arxiv preprint arXiv:1407.2285, 2014.

- 7. Dodziuk J., Difference equations, isoperimetric inequality and transience of certain random walks, Trans Am Math Soc 284 (1984), 787–794. [Google Scholar]

- 8. Dotterrer D. and Kahle M., Coboundary expanders, J Topol Anal 4 (2012), 499–514. [Google Scholar]

- 9. Eckmann B., Harmonische Funktionen und Randwertaufgaben in einem Komplex, Comment Math Helv 17 (1944), 240–255. [Google Scholar]

- 10. Friedman J. and Wigderson A., On the second eigenvalue of hypergraphs, Combinatorica 15 (1995), 43–65. [Google Scholar]

- 11. Jaakkola T. and Szummer M., Partially labeled classification with M, arkov random walks, Advances in neural information processing systems (NIPS), MIT Press, Cambridge, MA, Vol. 14, 2002, pp. 945–952. [Google Scholar]

- 12. Kahle M., Sharp vanishing thresholds for cohomology of random flag complexes, Ann Math 179 (2014), 1085–1107. [Google Scholar]

- 13. Levin D. A., Peres Y., and Wilmer E. L., Markov chains and mixing times, American Mathematical Society, Providence, RI, 2008. [Google Scholar]

- 14. Lovász L., Random walks on graphs: A survey, In Miklós D., Sós V. T. and Szőnyi T., editors, Combinatorics, Paul Erdős is eighty, Vol. 2, János Bolyai Mathematical Society, Budapest‐Hungary, 1996, pp. 353–398. [Google Scholar]

- 15. Lubotzky A., Ramanujan complexes and high dimensional expanders, Japanese Journal of Mathematics 9 (2014), 137–169. [Google Scholar]

- 16. Meilă M. and Shi J., A random walks view of spectral segmentation, In AI and Statistics (AISTATS) 2001, Key West, Florida, 2001. [Google Scholar]

- 17. Parzanchevski O. and Rosenthal R., Simplicial complexes: Spectrum, homology and random walks, arXiv:1211.6775v2, 2012.

- 18. Parzanchevski O., Rosenthal R., and Tessler R. J., Isoperimetric inequalities in simplicial complexes, Combinatorica, 1–33. [Google Scholar]

- 19. Rosenthal R., Simplicial branching random walks and their applications, Arxiv preprint arXiv:1412.5406, 2014.

- 20. Steenbergen J., Towards a spectral theory for simplicial complexes, PhD thesis, Duke University, Durham, NC, 2013, AAI3605065. [Google Scholar]

- 21. Steenbergen J., Klivans C., and Mukherjee S., A Cheeger‐type inequality on simplicial complexes, Adv Appl Math 56 (2014), 56–77. [Google Scholar]

- 22. Zhou D. and Schölkopf B., Learning from labeled and unlabeled data using random walks, Pattern recognition, Springer, Berlin‐Heidelberg, 2004, pp. 237–244. [Google Scholar]

- 23. Zhu X., Semi‐supervised learning with graphs, PhD thesis, Carnegie Mellon University, Language Technologies Institute, School of Computer Science, Pittsburgh, PA, 2005. [Google Scholar]