Abstract

Objective

Evidence suggests that musicians, as a group, have superior frequency resolution abilities when compared to non-musicians. It is possible to assess auditory discrimination using either behavioral or electrophysiologic methods. The purpose of this study was to determine if the auditory change complex (ACC) is sensitive enough to reflect the differences in spectral processing exhibited by musicians and non-musicians.

Design

Twenty individuals (10 musicians and 10 non-musicians) participated in this study. Pitch and spectral ripple discrimination were assessed using both behavioral and electrophysiologic methods. Behavioral measures were obtained using a standard three interval, forced choice procedure and the ACC was recorded and used as an objective (i.e. non-behavioral) measure of discrimination between two auditory signals. The same stimuli were used for both psychophysical and electrophysiologic testing.

Results

As a group, musicians were able to detect smaller changes in pitch than non-musicians. They also were able to detect a shift in the position of the peaks and valleys in a ripple noise stimulus at higher ripple densities than non-musicians. ACC responses recorded from musicians were larger than those recorded from non-musicians when the amplitude of the ACC response was normalized to the amplitude of the onset response in each stimulus pair. Visual detection thresholds derived from the evoked potential data were better for musicians than non-musicians regardless of whether the task was discrimination of musical pitch or detection of a change in the frequency spectrum of the rippled noise stimuli. Behavioral measures of discrimination were generally more sensitive than the electrophysiologic measures; however, the two metrics were correlated.

Conclusions

Perhaps as a result of extensive training, musicians are better able to discriminate spectrally complex acoustic signals than non-musicians. Those differences are evident not only in perceptual/behavioral tests, but also in electrophysiologic measures of neural response at the level of the auditory cortex. While these results are based on observations made from normal hearing listeners, they suggest that the ACC may provide a non-behavioral method of assessing auditory discrimination and as a result might prove useful in future studies that explore the efficacy of participation in a musically based, auditory training program perhaps geared toward pediatric and/or hearing-impaired listeners.

Keywords: Music perception, auditory evoked potentials, acoustic change complex, auditory training

INTRODUCTION

Like speech, music is a spectrally complex acoustic signal. In order to perceive and fully appreciate music, the listener must be able to detect and process brief and subtle variations in the temporal and/or spectral content of an ongoing acoustic signal. For individuals with hearing loss, understanding speech in noise or music can be particularly challenging. Assistive devices like a hearing aid or cochlear implant (CI) can help, but studies show that many hearing-impaired individuals continue to struggle, even with well-fit assistive devices (e.g., Takahashi et al. 2007; Gifford & Revit 2010; Looi et al. 2012; Limb & Roy 2014; Kochkin 2015).

Auditory training is one approach that has been suggested as a way to improve the ability of a listener to process spectrally complex acoustic signals like speech or music. Numerous studies show that individuals with extensive musical training are able to discriminate sounds based on the musical pitch more quickly and more accurately than non-musicians (Tervaniemi et al. 2005; Parbery-Clark et al. 2009). Musicians also have been shown to outperform non-musicians on tests of how well they are able to understand speech in noise (Parbery-Clark et al. 2009; Slater et al. 2015). Music-based auditory training has also been shown to improve specific aspects of cognitive-linguistic functioning such as auditory attention, working memory and phonological processing (Fujioka et al. 2006; Parbery-Clark et al. 2009; Shahin 2011). Observations like these, coupled with the fact that music is an engaging stimulus for many listeners, have fueled an interest in the development of music-based training tools for use with hearing-impaired populations (Chen et al. 2010; Yucel et al. 2009; Shahin et al. 2011). In fact, today, many CI manufacturers, professional associations, and advocacy groups recommend using musical stimuli in aural rehabilitation programs and encourage pediatric CI users to participate in music-related activities. While such suggestions are logical, well-intentioned and often well-received, efficacy studies are lacking. Additionally, long-term music training typically begins in childhood and involves a level of commitment of time and resources that may not be practical for many and may lead to considerable frustration for others. Complicating things further, children are now often identified as having a hearing loss within the first year of life. Establishing the efficacy of a music-based auditory training program for pre-school aged children is likely to be a difficult task. 
Toward that end, it would be helpful if there were a reliable, non-behavioral method of evaluating the impact that training might have on auditory perception.

In this study, we focus on normal hearing listeners and examine the impact that long-term training in music has on the ability of the listener to use spectral cues to discriminate between two spectrally complex sounds. Our long-term goals are to determine if an objective (i.e., non-behavioral) method of assessing auditory discrimination might play a role (1) in deciding which CI users might benefit from participation in a music-based auditory training program, and eventually (2) in establishing efficacy of such a program for pediatric CI recipients. This report represents a first step toward that goal by providing a direct comparison of behavioral and electrophysiologic measures of auditory discrimination in individuals with and without musical training.

There are a number of different cortical auditory evoked potentials (CAEPs) that have been used to assess neural processing of acoustic signals. The most commonly recorded CAEP is the P1-N1-P2 onset response. This auditory evoked potential can be elicited using long duration, spectrally complex acoustic signals (like speech syllables or musical tones) presented in a passive listening paradigm. It has a fairly large amplitude, and studies have shown that it is possible to measure the P1-N1-P2 response complex in individuals who use conventional hearing aids or a CI to communicate (e.g., Friesen & Tremblay 2006; Tremblay et al. 2006a, 2006b; Brown et al. 2015). The presence of this CAEP is typically interpreted as evidence that the acoustic signal has been detected. The impact that factors like sleep, attention and development have on the P1-N1-P2 onset response has also been described previously in the literature (for review, see Ponton et al. 1996; or Picton 2011).

Several groups of investigators have previously shown that the amplitude of the P2 component of the P1-N1-P2 complex can be enhanced by auditory training and that musicians, as a group, often have larger P2 amplitudes than non-musicians (Tremblay et al. 2001, 2007, 2009; Shahin et al. 2003, 2004, 2007, 2011; Trainor et al. 2003). However, this finding is not universal. For example, Alain et al. (2007) reported finding smaller P2 amplitudes following training using speech sounds. More recently, Zhang et al. (2015) also recorded smaller N1-P2 responses from musicians compared to non-musicians when they used click trains as the stimulus for evoked potential testing.

All of these studies have focused on the P1-N1-P2 onset response. Clearly, detection of sound is important; however, it seems likely that the primary advantage enjoyed by musicians relative to non-musicians might be related to their ability to discriminate between two sounds or to detect subtle changes in the quality of a sound. The auditory change complex (ACC) is a variant of the P1-N1-P2 response that has previously been used in studies exploring the impact of musical training on auditory perception. This averaged evoked potential is essentially a second P1-N1-P2 response that is generated if the listener is able to perceive a change in some aspect of an ongoing acoustic signal. The ACC is not a difference potential and does not require the use of an odd-ball paradigm. It typically is larger in amplitude, has more consistent morphologic features and can be recorded more efficiently than the mismatch negativity. The ACC can be recorded using long duration acoustic stimuli presented in the sound field that are then “processed” either by a hearing aid or a CI (e.g., Martin et al. 2008; Billings et al. 2012; Martinez et al. 2013; Brown et al. 2015). There also is evidence in the literature that the magnitude of the ACC response is at least roughly correlated with the salience of the “change” used to evoke it (e.g., Martin & Boothroyd 2000; Brown et al. 2008; Kim et al. 2009). In all of these studies, subtle changes in stimulus features resulted in small ACC responses. More obvious or clearly detectable changes resulted in larger amplitude ACC responses. It is for all these reasons that the ACC is the focus of this investigation.

Perhaps most directly relevant to the current investigation are results of two studies. In the first study, ACC responses were recorded from normal hearing musicians and non-musicians (Itoh et al. 2012). The stimuli were two chord progressions – one with a more distinct change than the other. Musicians not only were found to have more robust N1 change responses than non-musicians but the effects of attention were also greater for musicians than non-musicians. In the second study, psychophysical and electrophysiologic measures of spectral-ripple discrimination for three normal hearing listeners were tested using stimuli that were vocoded to simulate perception using a CI (Won et al. 2011). While the evoked potential estimates of auditory discrimination were not as sensitive as the behavioral measures, the ACC amplitudes clearly decreased as the spectral ripple density increased. As a group, these studies suggest that the ACC may, indeed, provide a reasonable estimate of the ability of the listener to discriminate between two spectrally complex signals.

This report describes results of a study comparing the impact that long-term musical training has on both behavioral and electrophysiological measures of auditory discrimination. We hypothesized that musicians would be able to discriminate smaller changes in pitch and/or have higher (i.e., better) spectral ripple discrimination thresholds than non-musicians, and that those differences in spectral acuity would be evident in both the behavioral and electrophysiologic measures. If our hypotheses are correct, the results of this study will advance our understanding of how musical training affects auditory processing at the level of the cortex and support use of an evoked potential such as the ACC as a tool to assess efficacy of a music-based auditory training program. If long-term musical training does not affect the ACC, it seems unlikely that this evoked response would prove useful as a metric of progress in a short-term training program geared toward hearing-impaired listeners.

METHODS

I. Subjects

Twenty individuals participated in this study. Our goal was to construct two groups of subjects whose musical background and experience were very different. Most of the musically trained subjects were either faculty or graduate students in the School of Music at the University of Iowa. Individuals without musical training were recruited from among the faculty and/or graduate students in the Department of Communication Sciences and Disorders at the University of Iowa. Potential subjects were asked to rank themselves on music training using two 10-point Likert scales. These scales were quick to administer and served as a screening tool that we used to help construct our two subject groups. On the first scale, number 1 was labeled “Non-musician – No music classes beyond elementary school” and number 10 was labeled “Professional musician - Extensive music classes and music practice”. On the second scale, number 1 was labeled “No involvement with music – past or present” and number 10 was labeled “Regular involvement with music for many years”. Individuals who ranked themselves between 9 and 10 on both scales were recruited to participate as part of a subject group we refer to as “musicians”. Individuals who ranked themselves between 1 and 3 on both scales were invited to participate as part of a subject group we refer to as “non-musicians”. Four individuals who ranked themselves between those extremes were excused from further participation.

All 20 study participants then were asked to complete the Iowa Musical Background Questionnaire (IMBQ). The IMBQ has been widely used to quantify musical background and experience (Gfeller et al. 2000). Our goal was to define/quantify the extent to which our two subject groups differed in terms of their musical training and overall experience. The average score on the IMBQ for the musicians was 68.89 (SD = 54). The mean score obtained by the non-musicians was 13.1 (SD = 9.59). This difference between the two groups was statistically significant (t(11) = 5.18, p = 0.0003).

None of the study participants reported a significant history of hearing loss or neurologic disorders. The average age of the musically trained study participants was 42.2 years (SD = 16.04). The average age of non-musicians was 38.4 years (SD = 12.03). Unpaired t tests revealed that the age difference between the two groups was not statistically significant (t(18) = 0.5993, p = 0.5564). The group of musicians consisted of five males and five females. The group of non-musicians consisted of two males and eight females.

All 20 study participants had audiometric thresholds that were ≤ 20 dB HL for frequencies ranging from 250 to 2000 Hz. All 10 non-musicians passed the hearing screening at 4000 Hz. One of the non-musicians had a mild high frequency hearing loss at 8000 Hz. Three of the musicians had a mild high frequency hearing loss at 4000 and/or 8000 Hz (i.e., audiometric thresholds between 25 and 40 dB HL).

A non-auditory measure of attention and working memory known as the Visual Monitoring Task (VMT) was also administered to subjects in both groups (Knutson 2006). Mean performance on the VMT was 99.08% (SD = 1.29) for the musicians and 90.3% (SD = 13.63) for the non-musicians. This difference was not statistically significant (t(9) = 2.03, p = 0.0726).

II. Behavioral Test Procedures

Behavioral measures of speech understanding in background noise and timbre recognition were obtained from each study participant. Both measures are reported widely in the literature as being sensitive to the effects of long-term musical training. Two additional adaptive psychophysical tests were used to measure pitch and spectral ripple discrimination thresholds. All four tests were completed in a sound-treated booth using stimuli presented in the sound field at 60-65 dBA from a speaker located approximately 4 feet from the listener at 0° azimuth. Details related to each of these behavioral tests are provided below.

1. Speech understanding in noise

Two lists of AZ Bio sentences (Spahr et al. 2012) were presented in the sound field at 60 dBA. The 10-talker babble was presented from the same speaker at a signal-to-noise ratio of 0 dB. The ability of the study participant to identify key words in each sentence was assessed. Results from both lists were averaged and scores expressed as percent correct.

2. Timbre recognition

This was a closed set task. Study participants were given a card with six common musical instruments depicted and labelled. A sequence of four notes was played, and each study participant was asked to identify which musical instrument he/she thought had played that sequence of sounds. The stimuli were generated digitally using MIDI technology and professional composition software (Finale Songwriter 2012). Each stimulus was played four times in random order (24 stimuli total). Feedback was not provided. Results were scored as percent correct.

3. Pitch discrimination

This task required that the study participants listen to a series of three notes that were generated digitally using MIDI technology and professional composition software (Finale Songwriter 2012 and Adobe Audition, Adobe Systems, Inc. ver. 3). Two of the three notes were simulations of a clarinet playing C4. This stimulus was labelled the “standard” stimulus. The third stimulus, labelled the “different” or “variable” stimulus, was a digitally generated sample of a clarinet playing a note that was higher in pitch than middle C. All three stimuli were 400 ms in duration and gated on and off using a 5 ms linear ramp. The three stimuli were presented in random order, and the level of each of the three stimuli was randomly varied by +/− 2 dB in order to eliminate potential loudness cues. The three listening intervals were separated by two 400 ms silent intervals. A three interval, three alternative forced choice paradigm was used and participants were asked to indicate which of the three intervals contained the different stimulus. Feedback was not provided. A 2-down, 1-up stepping rule, which estimates the 70.7% point on the psychometric function, was used (Levitt 1971). Initially the standard and variable stimuli were separated by 2 semitones (200 cents) and a 1 semitone step size was used. If the study participant was able to correctly discriminate two tones that differed by 1 semitone, the step size was halved to 50 cents. The smallest step size used was 5 cents. The process stopped after 10 reversals were obtained and the mean of the last 6 reversals was computed. This adaptive procedure was completed four times. The musical pitch discrimination threshold was defined as the mean of the last three of the four pitch discrimination thresholds.
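The adaptive rule described above can be illustrated with a minimal Python sketch. It is not the software used in this study. The function name `run_staircase`, the `respond` callback, the rule of halving the step at every reversal, the 5 cent floor on the pitch difference, and the `max_trials` guard are all assumptions made for illustration; the text specifies only the starting difference, the first two step sizes, the minimum step size, and the number of reversals.

```python
def run_staircase(respond, start_cents=200.0, start_step=100.0, min_step=5.0,
                  n_reversals=10, n_average=6, max_trials=1000):
    """2-down, 1-up adaptive track over a pitch difference in cents.

    respond(delta) must return True when the listener picks the odd interval.
    Returns the mean of the last n_average reversal points.
    """
    delta, step = start_cents, start_step
    correct_in_a_row = 0
    last_direction = 0            # -1 = task made harder, +1 = task made easier
    reversals = []
    trials = 0
    while len(reversals) < n_reversals and trials < max_trials:
        trials += 1
        if respond(delta):
            correct_in_a_row += 1
            if correct_in_a_row < 2:
                continue          # two consecutive correct answers are needed
            direction = -1
        else:
            direction = +1        # a single error makes the task easier
        correct_in_a_row = 0
        if last_direction and direction != last_direction:
            reversals.append(delta)
            step = max(min_step, step / 2.0)   # assumed schedule: halve at each reversal
        last_direction = direction
        delta = max(min_step, delta + direction * step)   # assumed 5 cent floor
    tail = reversals[-n_average:]
    return sum(tail) / len(tail) if tail else delta


# Hypothetical listener who always hears differences of 30 cents or more.
threshold = run_staircase(lambda cents: cents >= 30.0)
print(f"estimated threshold: {threshold:.1f} cents")
```

With a real listener the responses are probabilistic, so the track converges on the 70.7% correct point rather than on a hard boundary as in this deterministic example.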

4. Spectral ripple discrimination

Spectral ripple discrimination thresholds were also measured psychophysically for each study participant. MATLAB (MathWorks, Inc. US R2011b) was used to generate the ripple noise stimuli. The noise was comb filtered to create sinusoidal peaks and valleys (“spectral ripples”) in the frequency domain. The modulation depth was held constant at 30 dB. A three interval, three alternative forced choice paradigm was used. Two intervals contained ripple noise that we describe as “cosine phase noise”. The third interval contained a sample of rippled noise in which the position of the peaks and valleys in the frequency domain had been shifted by 180 degrees; we describe this stimulus as inverted or “sine phase”. Ripple discrimination thresholds were measured by systematically varying the spectral ripple density from 0.25 to 18 ripples per octave (rpo). The stimuli used for this task were RMS balanced but during the adaptive procedure, the presentation level was varied slightly (+/− 2 dB) to minimize the extent to which the listener could use loudness cues. The study participant's task was to identify the interval that was “different”. Feedback was not provided. At low ripple densities, the task is very simple and most normal hearing listeners describe the difference between the two stimuli as a change in pitch. At higher ripple densities the task becomes much more difficult. Ripple density was systematically varied and testing continued until 10 reversals were obtained. A mean spectral ripple discrimination threshold for each run was computed by averaging the results of the last 6 reversals in each run. The whole procedure was repeated four times and spectral ripple discrimination threshold was defined as the mean of the last three runs.

III. Electrophysiologic Test Procedures

1. Stimuli

ACC responses were recorded using the same stimuli used for the behavioral measures of pitch and spectral ripple discrimination described above; however, in order to record the ACC responses, two 400 ms segments that were RMS balanced were concatenated and digitally edited to ensure that there were no audible pops or clicks at the transition point. The result was a single 800 ms stimulus that consisted of two segments that differed from each other based on either musical pitch or the location of peaks and valleys of a ripple noise stimulus.

One set of stimuli was constructed by concatenating two digitally generated samples of a clarinet playing a continuous note for 400 ms. The first note played was middle C (C4), the fundamental frequency of which is 262 Hz. The second note was always higher in pitch than middle C. The magnitude of the pitch change ranged from a few cents to several semitones (C4-F4).
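For readers less familiar with the cent scale, the relationship between a pitch change in cents and the resulting fundamental frequency can be sketched as follows. One octave is 1200 cents and one equal-tempered semitone is 100 cents. The function name `shift_by_cents` is ours, and the example pitch changes are illustrative rather than a list of the contrasts tested.

```python
def shift_by_cents(f0_hz, cents):
    """Frequency lying `cents` above f0_hz (1200 cents = one octave)."""
    return f0_hz * 2.0 ** (cents / 1200.0)


C4_HZ = 262.0  # fundamental frequency of middle C as given in the text

# Illustrative pitch changes: two small steps, one semitone, and C4 to F4 (500 cents).
for cents in (15, 25, 100, 500):
    print(f"{cents:>4} cents above C4: {shift_by_cents(C4_HZ, cents):.2f} Hz")
```

Because the scale is logarithmic, a fixed change in cents corresponds to a fixed frequency ratio, not a fixed difference in Hz.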

The second set of stimuli was also digitally generated and was composed of 256 sinusoids combined at random phases to create a noise with a bandwidth from 100 to 6400 Hz. This noise was then comb filtered using varying ripple densities and a 30 dB modulation depth. The relative position of the peaks and valleys of the 800 ms segment of ripple noise was reversed after 400 ms. A set of stimuli was created using ripple densities that ranged from 1 rpo to 12 rpo. Discriminating a shift in the position of the spectral peaks became more difficult as ripple density increased.
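A minimal sketch of how a stimulus of this kind might be synthesized is given below. It is an approximation, not the MATLAB code used in the study: rather than comb filtering a noise, it scales the amplitude of each sinusoid directly, and it assumes that the 256 components are spaced evenly on a logarithmic frequency axis and that the ripple is sinusoidal in dB with a 30 dB peak-to-valley depth. The function names, the 16 kHz sampling rate, and the reuse of the same random phases in both segments are also assumptions. RMS balancing and smoothing of the transition point are not included.

```python
import math
import random


def log_spaced(f_low=100.0, f_high=6400.0, n=256):
    """n component frequencies spaced evenly on a log axis (an assumption)."""
    ratio = (f_high / f_low) ** (1.0 / (n - 1))
    return [f_low * ratio ** k for k in range(n)]


def ripple_gains_db(freqs_hz, density_rpo, depth_db=30.0, inverted=False, f_low=100.0):
    """Sinusoidal spectral envelope in dB along log2 frequency.

    Peak-to-valley distance equals depth_db; inverted=True shifts the
    ripple by 180 degrees so that peaks and valleys trade places.
    """
    phase = math.pi if inverted else 0.0
    return [(depth_db / 2.0)
            * math.cos(2.0 * math.pi * density_rpo * math.log2(f / f_low) + phase)
            for f in freqs_hz]


def synthesize(freqs_hz, gains_db, phases, duration_s, fs=16000):
    """Sum the sinusoids sample by sample (slow, but dependency-free)."""
    amps = [10.0 ** (g / 20.0) for g in gains_db]
    n_samples = int(round(duration_s * fs))
    return [sum(a * math.sin(2.0 * math.pi * f * i / fs + p)
                for a, f, p in zip(amps, freqs_hz, phases))
            for i in range(n_samples)]


def ripple_pair(density_rpo, segment_s=0.4, fs=16000, seed=0):
    """Standard ripple segment followed by its inverted counterpart."""
    rng = random.Random(seed)
    freqs = log_spaced()
    phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in freqs]
    first = synthesize(freqs, ripple_gains_db(freqs, density_rpo),
                       phases, segment_s, fs)
    second = synthesize(freqs, ripple_gains_db(freqs, density_rpo, inverted=True),
                        phases, segment_s, fs)
    return first + second
```

Calling `ripple_pair(1.0)` would return the samples of an 800 ms stimulus at 1 rpo; the pure-Python synthesis takes a few seconds at that length, so a vectorized implementation would be preferable in practice.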

2. Recording parameters

Standard disposable disc electrodes were used for recording the auditory evoked responses. The recording electrode sites included the vertex (Cz), both mastoids (M1 and M2), and the inion (Oz). The ground electrode for all of the recording channels was located off center on the forehead. Two additional electrodes were placed above and lateral to one eye and used to monitor eye blinks. Electrode impedance ranged from 1000 to 3000 ohms and was monitored throughout the recording session. Online artifact rejection was used to exclude from averaging any sweeps containing eye blinks or voltage excursions greater than 80 μV in the eye blink channel or in one or more of the other five recording channels. The rate of artifact rejection was typically under 10%.

An optically isolated, Intelligent Hearing System differential amplifier (IHS8000) was used to amplify (gain = 10,000) and band-pass filter (single pole RC: 1-30 Hz) the raw EEG activity. A National Instruments Data Acquisition board (DAQCard-6062E) was used to sample the ongoing EEG activity at a rate of 10,000 Hz per channel. Two recordings of 100 sweeps each were obtained for each stimulus. These two recordings were then combined off-line to create a single evoked potential. Custom-designed LabVIEW™ software (National Instruments Corp.) was used to compute and display the averaged waveforms. No baseline correction was required.
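The logic of artifact rejection followed by averaging can be sketched in a few lines of Python. This is a simplified, single-channel illustration and not the LabVIEW software used here. It assumes that a sweep is rejected when the absolute voltage of any sample exceeds the criterion, and that the two recordings are combined by weighting each by its number of accepted sweeps; neither detail is specified in the text, and the function names are hypothetical.

```python
def average_sweeps(sweeps, reject_uv=80.0):
    """Average sweeps (lists of samples in microvolts), skipping any sweep
    whose absolute voltage exceeds reject_uv. Returns (average, n_accepted)."""
    kept = [s for s in sweeps if max(abs(v) for v in s) <= reject_uv]
    if not kept:
        raise ValueError("all sweeps were rejected")
    n = len(kept)
    average = [sum(column) / n for column in zip(*kept)]
    return average, n


def combine(avg_a, n_a, avg_b, n_b):
    """Combine two averaged recordings, weighted by accepted sweep counts."""
    total = n_a + n_b
    return [(a * n_a + b * n_b) / total for a, b in zip(avg_a, avg_b)]


# Toy example: the third sweep contains a 100 microvolt excursion and is dropped.
avg, n_kept = average_sweeps([[1.0, 2.0, 3.0], [3.0, 4.0, 5.0], [0.0, 100.0, 0.0]])
print(avg, n_kept)
```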

3. Testing procedures

During testing, study participants were seated in a comfortable chair inside a double-wall sound booth. No active listening task was required but the study participants were instructed to stay awake and were allowed to read or watch captioned videos. Subject state was monitored carefully using both audio and video recording systems, and frequent breaks were provided.

The stimuli used for electrophysiologic testing were presented at 65 dBA SPL in the sound field from a speaker located approximately 4 ft from the study participant at 0° azimuth. The stimulation rate was varied randomly, with one stimulus presented every 2.8 to 3.8 seconds. This relatively slow stimulation rate and the addition of some jitter in the timing between consecutive stimuli helped minimize the effects of adaptation. MATLAB software was used to control stimulus timing and to trigger the averaging computer.

Recording a full set of evoked potential data typically required approximately three hours. Testing usually started using either a stimulus with a ripple density of 1 rpo or a pitch change of one semitone (100 cents). Both conditions typically elicited clear onset and change responses. Data were recorded in blocks of 100 sweeps and using progressively more challenging contrasts until it was clear that the ACC was no longer evident in the averaged recordings. To save time, once a clear no response condition was identified, stimuli with smaller pitch changes or more densely spaced spectral ripples were not used. As a result, not all subjects were tested using all of the stimulus contrasts.

IV. Data Analysis

For each study participant, waveforms recorded differentially between Cz-M1, Cz-M2 and Cz-Oz were combined to create a single composite waveform for each stimulus that was analyzed using a custom MATLAB program. This program automatically identified the minimum and subsequent maximum values in the 400 ms interval that followed either the onset of the stimulus or the introduction of the change in the stimulus. These automatically selected points were examined and labelled N1 and P2 respectively. In rare instances (well under 5% of the time) the results of the automatic peak picking algorithm were overruled by the experimenter and the N1 and/or P2 peaks were picked manually. This happened only in instances where the recordings were noisy and when the latencies of the N1 or P2 peaks determined using the automatic peak picking algorithm were inconsistent with latencies recorded from the same subject using similar stimuli. A second examiner who was blinded to subject identity and to the stimulus condition also examined the waveforms off-line and confirmed peak location and identified the visual detection threshold for each recording series. Peak-to-peak amplitude was measured between the N1 peak and the following positive peak (P2). Normalization was used to facilitate comparison of ACC amplitudes across subjects and/or groups. This was accomplished off-line by dividing the peak-to-peak amplitude of the ACC response by the peak-to-peak amplitude of the onset response recorded simultaneously. Finally, grand mean waveforms were computed for each stimulus contrast.
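The automatic peak picking and normalization steps can be approximated with the short Python sketch below. It is not the custom MATLAB program used in this study. The function names are hypothetical, and the sketch assumes that sample 0 of the waveform coincides with stimulus onset (that is, any pre-stimulus baseline has already been removed from the array) and that the change occurs 400 ms later.

```python
def pick_n1_p2(waveform_uv, fs_hz, window_start_ms, window_ms=400.0):
    """Return sample indices of N1 (minimum) and P2 (subsequent maximum)
    within the window that starts at window_start_ms."""
    start = int(round(window_start_ms * fs_hz / 1000.0))
    stop = min(len(waveform_uv), start + int(round(window_ms * fs_hz / 1000.0)))
    segment = waveform_uv[start:stop]
    n1 = min(range(len(segment)), key=segment.__getitem__)
    p2 = max(range(n1, len(segment)), key=segment.__getitem__)   # only after N1
    return start + n1, start + p2


def peak_to_peak(waveform_uv, n1_idx, p2_idx):
    """N1-to-P2 amplitude in microvolts."""
    return waveform_uv[p2_idx] - waveform_uv[n1_idx]


def normalized_acc(waveform_uv, fs_hz, onset_ms=0.0, change_ms=400.0):
    """ACC peak-to-peak amplitude divided by onset peak-to-peak amplitude."""
    onset = peak_to_peak(waveform_uv, *pick_n1_p2(waveform_uv, fs_hz, onset_ms))
    acc = peak_to_peak(waveform_uv, *pick_n1_p2(waveform_uv, fs_hz, change_ms))
    return acc / onset


# Toy waveform sampled at 1 kHz: onset response of 8 uV, change response of 4 uV.
wave = [0.0] * 800
wave[100], wave[200] = -4.0, 4.0     # onset N1 and P2
wave[500], wave[600] = -2.0, 2.0     # ACC N1 and P2
print(normalized_acc(wave, fs_hz=1000))
```

In noisy recordings the global minimum in the window is not always the true N1, which is why the automatically selected peaks were reviewed by the experimenter and a second examiner.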

To compare performance between the two groups, t tests and linear regression models with correlated errors were used. When performing a two sample t test, the variances were tested for equality using a Folded F test. If the variances were not significantly different, then a pooled variance was used. If the variances were significantly different, then a Satterthwaite adjustment was used. The linear regression model with correlated errors, which accounts for within-subject correlation, was used to compare normalized amplitudes of the various pitch and spectral ripple change stimuli between musicians and non-musicians. We used a heterogeneous AR(1) correlation structure, which allows each pitch to have its own variance and allows the correlation between pitches to decay as the pitches get farther apart. Linear regression analysis and correlations were used to compare evoked potential peak-to-peak amplitudes with perceptual measures. SAS® version 9.3 was used for all of the statistical analyses.
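The two forms of the t statistic described above can be reproduced from summary statistics with the following Python sketch. It is offered as an illustration and is not the SAS code used for the analyses. The function names are ours, p-values are not computed (they require the F and t distributions, which the standard library does not provide), and the example assumes 10 subjects per group.

```python
import math


def folded_f(sd1, sd2):
    """Folded F statistic: the larger sample variance divided by the smaller."""
    v1, v2 = sd1 ** 2, sd2 ** 2
    return max(v1, v2) / min(v1, v2)


def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Two sample t statistic and degrees of freedom using a pooled variance."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    t = (m1 - m2) / math.sqrt(pooled_var * (1.0 / n1 + 1.0 / n2))
    return t, df


def satterthwaite_t(m1, sd1, n1, m2, sd2, n2):
    """Unequal-variance t statistic with Satterthwaite degrees of freedom."""
    a, b = sd1 ** 2 / n1, sd2 ** 2 / n2
    t = (m1 - m2) / math.sqrt(a + b)
    df = (a + b) ** 2 / (a ** 2 / (n1 - 1) + b ** 2 / (n2 - 1))
    return t, df


# Age (similar variances) and VMT scores (very different variances),
# using the group means and SDs reported in the Subjects section.
print(pooled_t(42.2, 16.04, 10, 38.4, 12.03, 10))
print(folded_f(1.29, 13.63))
print(satterthwaite_t(99.08, 1.29, 10, 90.3, 13.63, 10))
```

Applied to the reported means and standard deviations, the pooled form gives a t value close to the 0.5993 reported for age with 18 degrees of freedom, and the Satterthwaite form gives a t value close to the 2.03 reported for the VMT with approximately 9 degrees of freedom.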

All of the procedures used to recruit, evaluate and test study participants were reviewed and approved by the Institutional Review Board at the University of Iowa. Study participants were compensated for participation in this study.

RESULTS

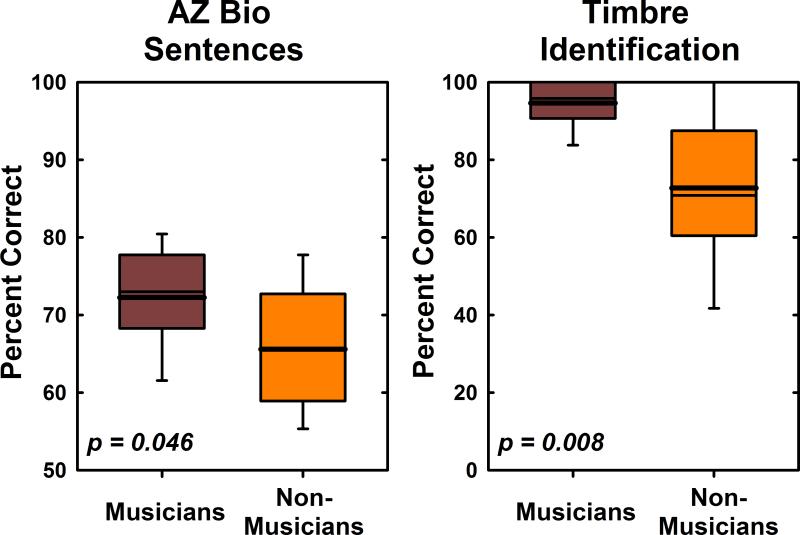

Figure 1 shows results of tests of speech understanding in noise and musical timbre identification for musicians and non-musicians. Musicians performed significantly better than non-musicians on the AZ Bio test administered at 0 dB SNR (t(18) = 2.14, p = 0.046). They also were able to more accurately identify instruments based on timbre cues than non-musicians (t(9) = 3.35, p = 0.008). Note that the degrees of freedom used to compare performance on the timbre identification task were adjusted to account for differences in variance between the two subject groups (see Methods). The boundaries of the box plots shown in Figure 1 define the 75th and 25th percentiles of the distribution. The whiskers indicate the 5th and 95th percentiles of the distribution. Mean performance is indicated by the thicker solid line. The median is marked using the thinner line.

Figure 1.

Box plots illustrate performance by both groups of study participants on a speech perception in noise task (AZ Bio sentence test at 0 dB SNR) and on a timbre identification task.

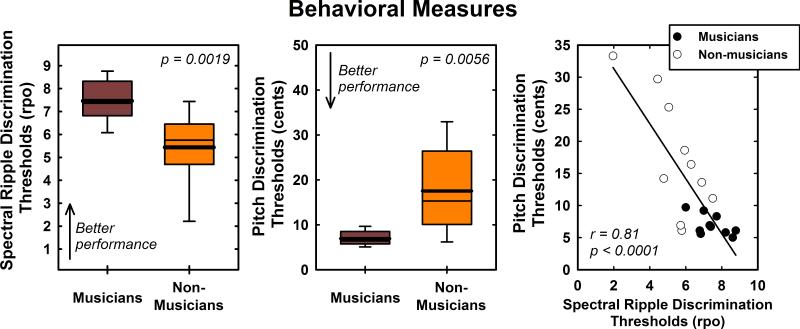

Behavioral measures of pitch and spectral ripple discrimination thresholds also differed for musicians and non-musicians (see Figure 2). Musicians, as a group, were found to have higher (better) spectral ripple discrimination thresholds than non-musicians (t(18) = 3.63, p = 0.0019). They were also able to perceive smaller changes in pitch (lower pitch discrimination thresholds) than the group of individuals without musical training (t(10) = −3.56, p = 0.0056). The box plots in Figure 2 follow the same conventions as those shown in Figure 1.

Figure 2.

Box plots show performance of musicians and non-musicians on the spectral ripple and pitch discrimination tasks. The scatter plot shows the correlation between these two behavioral measures of auditory discrimination. Note that better performance on the spectral ripple discrimination task is indicated by higher threshold values while better performance on the pitch discrimination task is indicated by lower threshold values.

The scatter plot in Figure 2 compares performance by both musicians (filled symbols) and non-musicians (open symbols) on these two behavioral measures of auditory discrimination. Linear regression analysis revealed a strong and statistically significant correlation (r² = 0.66, p < 0.0001) between spectral ripple discrimination and pitch discrimination thresholds, suggesting these two tests may be tapping into the same basic perceptual skill.

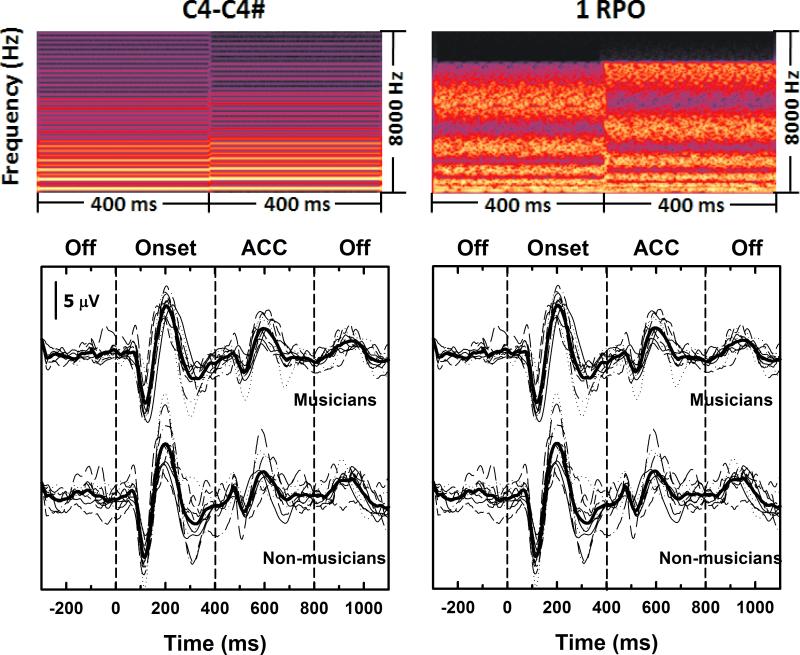

Figure 3 includes spectrograms of two stimulus pairs (C4-C4# and 1 rpo) used for evoked potential testing. These stimulus contrasts were clearly detected by all 20 study participants. The lower panels show evoked potential recordings obtained using these two stimulus contrasts. Thin lines are used to show data from individual subjects. The stimulus onset occurs at the 0 ms point in each recording. The stimulus change occurs at the 400 ms point. Grand mean recordings are shown using the thick line. Results from musicians and non-musicians are plotted separately. Recordings from individual study participants represent an average of 100 sweeps. These recordings include a pre-stimulus baseline, an onset N1-P2 response and a smaller amplitude ACC response.

Figure 3.

Waveforms recorded from all 20 study participants are shown for two example stimuli. Thinner dashed lines are waveforms recorded from individual participants. The thicker solid lines are grand average waveforms. Waveforms recorded from musicians and non-musicians have been separated. Spectrograms of the stimuli used to evoke these responses are also shown.

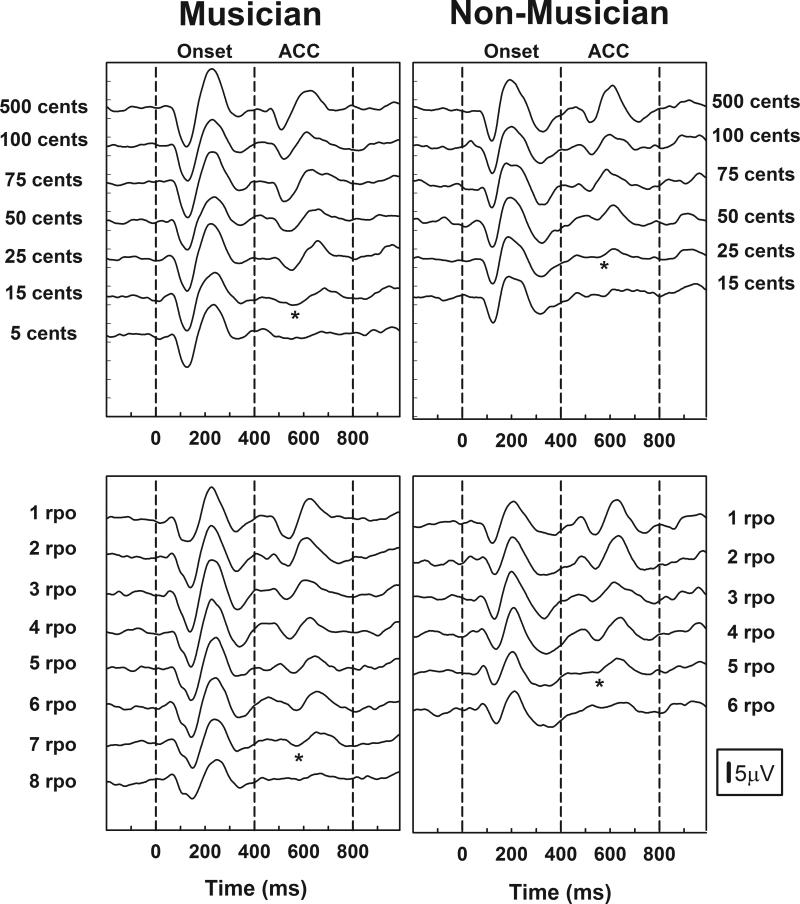

Figure 4 shows a series of waveforms recorded from a musician and a non-musician. The two upper panels show waveforms evoked using a change in pitch. The parameter is the magnitude of the pitch change measured in cents where 100 cents is equal to one semitone. The two lower panels show responses recorded using ripple noise stimuli. Once again, the stimulus onset occurs at the 0 ms point in each recording, and the stimulus change is introduced at the 400 ms point. Both are indicated with dashed lines.

Figure 4.

Cortical onset and ACC responses recorded from a musician and a non-musician are shown. The upper panels show results evoked using a change in pitch. The parameter is the magnitude of the pitch change. The lower panels show results obtained using a shift in the position of the peaks and valleys of the ripple noise stimuli. The parameter is density of the spectral ripples. The asterisks indicate the visual detection thresholds for the ACC.

Onset responses are clearly identifiable in all of the recordings from both study participants. As the change in pitch becomes smaller or as ripple density increases, it becomes progressively more difficult to discriminate the two signals in each stimulus pair and the ACC amplitude, measured from N1 to P2, decreases. Asterisks indicate the visual detection threshold (VDT) identified for each of these two subjects. For the musician, a difference in pitch as small as 15 cents and ripple densities as large as 7 rpo resulted in the recording of a measurable ACC. For the non-musician, the smallest pitch difference that evoked a measurable ACC was 25 cents and spectral ripple densities of ≤ 5 rpo were required to elicit an ACC response.
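
To give a sense of scale for the pitch thresholds described above, cent values can be converted to frequency ratios. The following is a minimal sketch, assuming equal temperament and a nominal C4 of about 261.63 Hz. The source does not state the exact fundamental of the synthetic clarinet note, and the function names are illustrative only.

```python
def cents_to_ratio(cents: float) -> float:
    """Frequency ratio for a pitch interval in cents (1200 cents per octave)."""
    return 2.0 ** (cents / 1200.0)


def shifted_frequency(base_hz: float, cents: float) -> float:
    """Frequency reached by raising base_hz by the given number of cents."""
    return base_hz * cents_to_ratio(cents)


if __name__ == "__main__":
    C4_HZ = 261.63  # assumed nominal C4; not taken from the source
    for cents in (100, 25, 15):
        delta_hz = shifted_frequency(C4_HZ, cents) - C4_HZ
        print(f"{cents:>3} cents above C4: +{delta_hz:.2f} Hz")
```

Under these assumptions, the 15-cent and 25-cent thresholds correspond to shifts of roughly 2.3 Hz and 3.8 Hz in the fundamental, compared with about 15.6 Hz for a full semitone.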

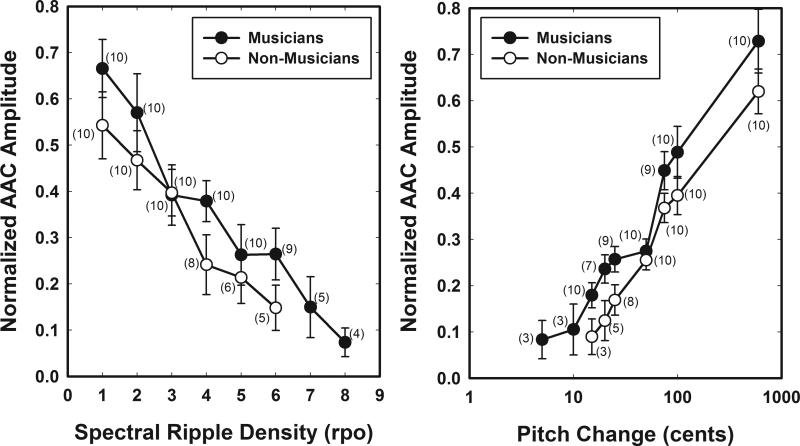

Figure 5 shows average normalized ACC amplitude measures evoked using a change in pitch or a change in the location of the spectral peaks of a rippled noise stimulus (see Figure 3). Results recorded from musicians are shown using filled symbols. Results recorded from non-musicians are plotted using open symbols. Error bars indicate ± 1 standard error around the mean. The numbers in parentheses indicate the number of subjects tested using each stimulus contrast.

Figure 5.

The effects of changes in spectral ripple density and pitch on normalized ACC response amplitudes are shown. Filled symbols indicate results obtained from the musicians. Open symbols indicate results obtained from non-musicians. The error bars represent one standard error around the mean. The numbers in parentheses are the number of subjects in each group included in the mean calculation.

The amplitude of the ACC response measured between the N1 and P2 peaks was normalized to the amplitude of the onset response. A normalized amplitude of 1 means the ACC response was as large as the onset response. A normalized amplitude of 0 means no ACC response was detected. As the density of the spectral ripples is increased or the magnitude of the pitch change is reduced, normalized ACC amplitude values tend to decrease. There also appear to be group effects. That is, musicians appear to have slightly larger normalized amplitudes than non-musicians for the same stimulus contrasts.
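
The normalization described above can be sketched in a few lines. This is an illustrative example rather than the analysis code used in the study: the latency windows (50 to 300 ms after stimulus onset and after the 400 ms change point) and the automatic peak picking are assumptions, and the function names are hypothetical.

```python
def n1_p2_amplitude(times_ms, waveform_uv, window_ms):
    """N1-to-P2 amplitude inside a latency window.

    N1 is taken as the most negative sample in the window and P2 as the
    most positive sample at or after N1 (a simple stand-in for peak picking).
    """
    start, stop = window_ms
    samples = [v for t, v in zip(times_ms, waveform_uv) if start <= t <= stop]
    if not samples:
        raise ValueError("latency window contains no samples")
    n1_index = min(range(len(samples)), key=lambda i: samples[i])
    n1 = samples[n1_index]
    p2 = max(samples[n1_index:])
    return p2 - n1


def normalized_acc_amplitude(
    times_ms,
    waveform_uv,
    onset_window_ms=(50.0, 300.0),   # assumed window for the onset N1-P2
    acc_window_ms=(450.0, 700.0),    # assumed window after the 400 ms change
):
    """ACC N1-P2 amplitude divided by the onset N1-P2 amplitude."""
    onset = n1_p2_amplitude(times_ms, waveform_uv, onset_window_ms)
    acc = n1_p2_amplitude(times_ms, waveform_uv, acc_window_ms)
    if onset == 0:
        raise ValueError("onset response has zero amplitude")
    return acc / onset


if __name__ == "__main__":
    # Synthetic waveform for illustration only (not study data).
    times = list(range(0, 801, 10))
    wave = [0.0] * len(times)
    wave[times.index(100)], wave[times.index(200)] = -4.0, 4.0  # onset N1, P2
    wave[times.index(500)], wave[times.index(600)] = -1.0, 1.0  # ACC N1, P2
    print(normalized_acc_amplitude(times, wave))
```

In this synthetic case the ACC is one quarter the size of the onset response, so the normalized amplitude falls between the 0 (no ACC) and 1 (ACC equal to onset) reference points described above.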

In order to evaluate the statistical significance of the trends shown in Figure 5, two separate MANOVAs were computed. Subject group and stimulus type were considered to be main effects. The dependent variable was normalized ACC amplitude. The independent variable was the magnitude of the change in pitch or spectral ripple density. Not all study participants were tested using all stimulus contrasts. The number of study participants tested using each of the contrasts shown is indicated on the figure. Because subject numbers in each group are small for the more challenging stimulus contrasts, statistical analysis was limited to stimulus contrasts less than 7 rpo and pitch changes greater than 10 cents. Normalized ACC amplitudes were found to decrease significantly as spectral ripple density increased (F(1,98) = 64.82, p < 0.0001) and as the magnitude of the pitch change decreased (F(1,113) = 97.52, p < 0.0001). Group effects, that is the difference between musicians and non-musicians, were also found to be statistically significant for both stimulus types (Pitch: F(1,18) = 12.30, p = 0.0025; Ripple Noise: F(1,18) = 6.99, p = 0.0165). No interaction was found for either the data set collected using rippled noise stimuli or stimuli that included a change in pitch.

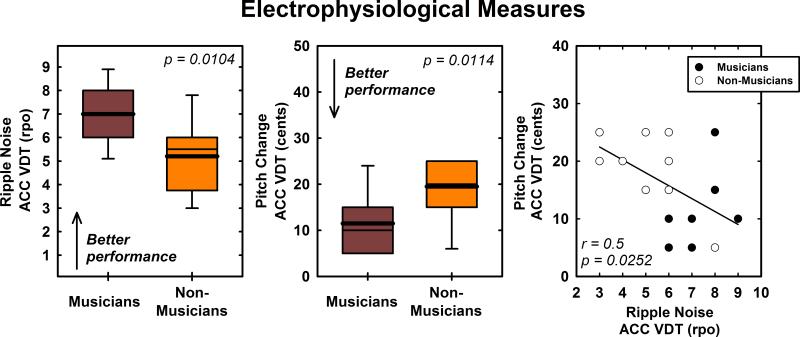

Figure 6 shows VDTs for the ACC response computed using both the pitch change and ripple noise paradigms. VDTs recorded from musicians using ripple noise stimuli are higher (better) than VDTs recorded from non-musicians using the same stimuli (t(18) = 2.86, p = 0.0104). VDTs recorded using changes in pitch were also significantly lower (better) for musicians compared with non-musicians (t(18) = −2.82, p = 0.0114). The scatter plot in Figure 6 shows the relationship between these two electrophysiologic measures of “discrimination”. Linear regression analysis performed using data from both subject groups combined revealed a statistically significant correlation between these two metrics (r = 0.5, p = 0.0252).
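
The group comparisons above report t statistics with 18 degrees of freedom, which is what an equal-variance (pooled) two-sample t-test yields for two groups of 10. The sketch below shows that calculation under this assumption; the source does not specify the exact test variant or software, and the function name is hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t_statistic(group_a, group_b):
    """Student's two-sample t statistic with pooled variance.

    Returns (t, df), where df = len(group_a) + len(group_b) - 2.
    """
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two observations")
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * variance(group_a)
                  + (n_b - 1) * variance(group_b)) / df
    standard_error = sqrt(pooled_var * (1.0 / n_a + 1.0 / n_b))
    if standard_error == 0:
        raise ValueError("both groups have zero variance")
    return (mean(group_a) - mean(group_b)) / standard_error, df


if __name__ == "__main__":
    # Made-up thresholds for illustration only (not study data).
    group_a = [1.0, 2.0, 3.0]
    group_b = [2.0, 3.0, 4.0]
    t_value, df = pooled_t_statistic(group_a, group_b)
    print(f"t({df}) = {t_value:.3f}")
```

The sign of t depends only on the order of subtraction. This is why a "better" result appears as a positive t for ripple density, where higher thresholds are better, and as a negative t for pitch, where lower thresholds are better.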

Figure 6.

Box plots show visual detection thresholds for ACC responses evoked using either a shift in the peaks and troughs of a ripple noise stimulus or a shift in pitch of a musical tone. Results obtained from musicians are contrasted with results recorded from non-musicians. The scatterplot shows the correlation between these two metrics. The line represents the results of a linear regression computed using combined data from both subject groups.

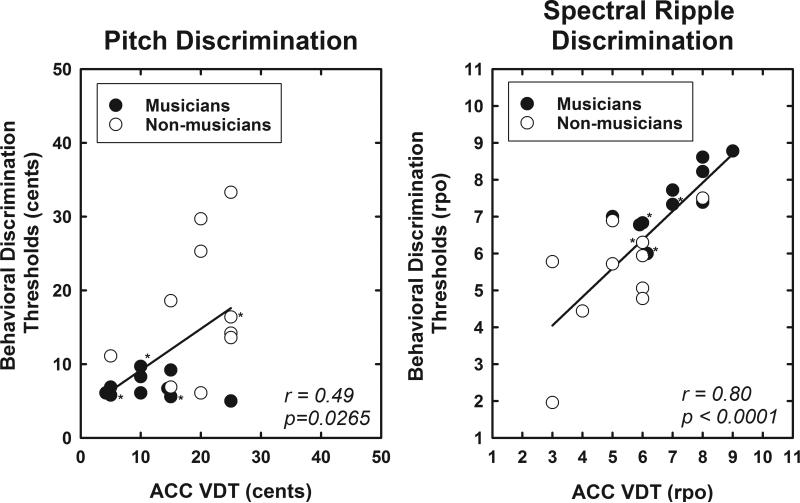

Finally, Figure 7 shows the relationship between ACC VDTs recorded using electrophysiologic techniques and behavioral estimates of auditory discrimination. A strong and statistically significant correlation between the electrophysiologic and behavioral measures is evident when the ripple noise stimulus is used (r² = 0.64, p < 0.0001). A weaker, but still statistically significant, correlation was obtained when the pitch change stimuli were used (r² = 0.24, p = 0.0265). In this analysis, data from both musicians and non-musicians have been combined. The asterisks in Figure 7 indicate specific data points recorded from study participants with a mild hearing loss at 4000 and/or 8000 Hz. While somewhat anecdotal given the small numbers, note that the three musicians with mild loss of hearing in the high frequencies do have slightly worse spectral ripple discrimination thresholds than their peers with audiometric thresholds that were better than 20 dB HL for frequencies above 4000 Hz. No such trend is apparent in the pitch discrimination data.
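
For readers comparing the correlation reported as r for Figure 6 with the r² values reported for Figure 7, the following sketch computes a least-squares line together with both statistics. It is a generic illustration with made-up numbers, not a reconstruction of the authors' analysis, and the function name is hypothetical.

```python
from math import sqrt


def linear_fit(x, y):
    """Ordinary least-squares fit of y on x.

    Returns (slope, intercept, r, r_squared), where r is Pearson's
    correlation coefficient.
    """
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("x and y must have equal length of at least 3")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    if sxx == 0 or syy == 0:
        raise ValueError("x and y must each vary")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r = sxy / sqrt(sxx * syy)
    return slope, intercept, r, r * r


if __name__ == "__main__":
    # Made-up values for illustration only (not study data).
    slope, intercept, r, r_squared = linear_fit([1.0, 2.0, 3.0], [1.0, 3.0, 2.0])
    print(f"slope={slope:.2f} intercept={intercept:.2f} r={r:.2f} r^2={r_squared:.2f}")
```

Because r² is simply the square of r in a simple linear regression, an r of 0.5 corresponds to an r² of 0.25, which places the Figure 6 correlation on the same scale as the r² values given for Figure 7.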

Figure 7.

Scatterplots show the relationship between ACC based VDTs and behavioral measures of auditory discrimination for the pitch change and the ripple noise stimuli. The lines show the results of a linear regression computed using combined data from both subject groups. Asterisks indicate responses recorded from individuals with mild hearing loss for frequencies ≥ 4000 Hz.

DISCUSSION

In this study we used both behavioral and electrophysiologic methods to determine how long-term musical training impacts the ability of a listener to detect a change in a spectrally complex acoustic signal. Our goal was to determine if the ACC response was sensitive enough to reflect changes in perceptual acuity associated with such training.

The musicians who participated in this study scored higher on measures of speech discrimination in noise and were able to use timbre cues to identify musical sounds with greater accuracy than individuals without musical training (see Figure 1). This finding was expected and generally consistent with previously published results. For example, Parbery-Clark et al. (2009) found that musicians performed better than non-musicians on a battery of behavioral measures that included frequency discrimination, working memory function and speech perception in noise. A variety of explanations has been offered to explain these perceptual differences. For example, it has been suggested that the act of listening to speech in the presence of noise is similar to the concurrent analysis of auditory units and sound segregation that musicians are trained to employ (Parbery-Clark et al. 2009; Zendel & Alain 2009; Shahin 2011). Another explanation relates to the fact that musical perception and performance require sophisticated top-down processing (Strait et al. 2010), enhanced pitch discrimination (Tervaniemi et al. 2005) and superior working memory (Chan et al. 1998). These abilities, which musicians may have developed with practice, could also facilitate the formation of ‘perceptual anchors’ that may also enhance performance on speech-in-noise tasks. Finally, there is a growing body of literature that suggests that the neural resources responsible for processing music are also involved with speech-language processing (e.g., Kraus & Banai 2007; Musacchia et al. 2008; Patel 2014).

In this study we compared how well musicians and non-musicians are able to discriminate small changes in two different spectrally complex acoustic signals: a noise stimulus that has been filtered to create spectral ripples and a synthetic version of a note played on a clarinet. Psychophysical testing showed that, as expected, musicians had better frequency and spectral ripple discrimination thresholds than the non-musicians (see Figure 2). These results are consistent with observations reported by others that suggest musicians are able to discriminate stimuli on the basis of pitch more accurately than non-musicians (Kishon-Rabin et al. 2001; Besson et al. 2007). The fact that no significant difference between the two groups was measured on the VMT suggests that this difference in perceptual acuity may be more related to practice or auditory training than simply to differences in working memory.

Our goal was to determine if we could use electrophysiologic methods to assess sensitivity to change in the spectral content of an acoustic signal. We focused on the ACC. While other researchers have used evoked potential techniques to evaluate the impact of training, most have focused on onset responses and have shown that the amplitude of the second positive peak of the P1-N1-P2 onset response can be enhanced following a period of training using either music or speech sounds (Tremblay et al. 2001; Tremblay & Kraus 2002; Shahin et al. 2003; Trainor et al. 2003; Musacchia et al. 2008; Orduña et al. 2012). While relevant, the P1-N1-P2 onset response is typically characterized as a metric that is related to detection of an acoustic signal rather than discrimination between two signals. In this study our primary focus was on auditory discrimination rather than detection and so we chose to focus on the ACC rather than the onset response. Our study participants were normal hearing adults and as a result, the onset responses that were recorded were generally well formed and characterized by clear N1 and P2 peaks (see Figure 3). The ACC responses we recorded tended to be smaller in amplitude than the onset responses as measured between the N1 and P2 peaks. The amplitude of the ACC response also decreased as the magnitude of the stimulus change decreased (see Figures 4 and 5). This finding is consistent with similar results previously reported from our lab and from others (e.g., Martin & Boothroyd 2000; Brown et al. 2008, 2015; Kim et al. 2009; Won et al. 2011) and suggests that the ACC might provide an objective (i.e., non-behavioral) metric that may predict perception. In this report, we use a peak-to-peak metric to quantify amplitude (N1-P2) rather than a simple measure of P2 amplitude relative to a baseline or P2 latency because the ACC response has a relatively small amplitude and this transition is the prominent feature of that response.

In order to facilitate comparison across subjects, we normalized the amplitude of the ACC response to the amplitude of the onset response. These normalized amplitude measures increased as the two halves of the acoustic stimulus became more different (e.g., as the difference in pitch between the first and second halves of the stimulus increased or as the spectral ripple density decreased). Small normalized ACC responses were obtained when the change between the first and second half of the acoustic signal was small. Larger normalized change responses were recorded when the change in the acoustic stimulus was larger and presumably more easily detected by the listener (see Figures 4 and 5). This finding was also expected and similar to results reported previously using other subject populations and using a range of different stimulus types (e.g., Martin & Boothroyd 2000; Brown et al. 2008; Kim et al. 2009; Itoh et al. 2012).

Our results showed that the electrophysiologic estimates of auditory discrimination (i.e., the VDTs) were not as sensitive as the behavioral measures of discrimination; however, the two metrics are correlated with each other (see Figure 7). Additionally, the results of this study demonstrate that musicians are not only able to discriminate smaller changes in pitch than non-musicians, but they also have higher (better) spectral ripple discrimination thresholds than non-musicians; both of those differences in perceptual acuity are mirrored in VDTs recorded using the same stimuli and the same stimulation paradigm. We also demonstrate that musicians tend to have larger normalized ACC amplitudes than non-musicians for both types of stimuli (see Figure 5). While the magnitude of the differences we report is not large, the differences are statistically significant and confirm that, at least on a group level, the normalized ACC amplitude metric is, in fact, sensitive enough to reflect differences in the way musicians and non-musicians process spectrally complex acoustic stimuli.

These results are consistent with previous data reported by Itoh et al. (2012), who showed larger N1 change responses from musicians than non-musicians when evoked using two different changes in a musical chord. One change was perceptually more subtle than the other, and the results of that study showed that the more subtle change in pitch was associated with a smaller evoked response. Behavioral and physiologic measures of spectral ripple discrimination have also been compared previously by Won et al. (2011). These investigators measured ACC responses from three normal hearing listeners tested on ripple noise stimuli that were processed using an 8 channel vocoder. Consistent with the present report, normalized ACC amplitudes decreased as ripple density increased and behavioral measures were more sensitive than electrophysiological measures of spectral ripple discrimination. A previous study by the same group (Won et al. 2010) reported correlations between behavioral measures of spectral ripple and complex-tone pitch discrimination and measures of speech perception in noise in cochlear implant users. No electrophysiologic data are included in that report, but the link between these two measures of change in a spectrally complex signal and speech understanding in noise is compelling.

The results of this study add to a growing body of literature that suggests that musical training or practice can alter both the way sound is perceived and the neural processes associated with that perception, and that these changes are measurable (e.g., Trainor et al. 2003; Tervaniemi et al. 2005; Fujioka et al. 2006; Alain et al. 2007; Fu & Galvin 2007; Shahin 2011; Krishnan et al. 2012).

While we have focused on individuals with essentially normal hearing who have had long-term training, this work has application to clinical populations. For example, today children as young as one year of age who were born deaf are considered to be candidates for cochlear implantation. Outcomes with a cochlear implant can vary widely. Most cochlear implant users have very poor spectral resolution, and most struggle to understand speech in background noise or to appreciate music. These challenges affect both adult and pediatric cochlear implant recipients. Recent evidence has shown that participation in music-based auditory training programs can lead to improved performance by pediatric cochlear implant users (Yucel et al. 2009; Chen et al. 2010). Based on observations like these, many cochlear implant teams are recommending the incorporation of musical activities into the auditory habilitation program for these children. This is also the population where non-behavioral measures of progress might be most welcome. Results of the current study suggest the ACC might serve that purpose. Future work is needed to expand this study to include hearing-impaired populations.

Finally, while these results are promising, we would note that these recordings can be time-consuming to obtain, require the application of recording electrodes, and depend on having a subject who is able to stay alert but remain relatively quiet during the testing process. The finding that spectral ripple and pitch discrimination thresholds can be estimated using either behavioral or electrophysiological methods and are sensitive to the effects of training is compelling; however, the results we report were collected using individuals with many years of musical training. An important next step will be to evaluate the sensitivity of these electrophysiologic measures to changes in auditory processing associated with participation in short-term musical training programs.

CONCLUSIONS

In adults, long-term training in music performance and/or exposure to music over a lifetime results in improvements in the ability to discriminate small changes in musical pitch and to process subtle changes in complex spectral cues. These differences in perceptual acuity are evident both in physiologic measures of neural processing at the level of the auditory cortex and in behavioral measures of discrimination between acoustic signals that vary based on differences in musical pitch and/or variations in the spectrum of a noise stimulus.

ACKNOWLEDGMENTS

The authors gratefully acknowledge the study participants who very generously gave their time to participate in this study. We thank Dr. Jacob Oleson for help in statistical analysis and Wenjun Wang for help in developing perception testing software. This study was supported by NIH/NIDCD grants R01 DC012082 and P50 DC000242.

Footnotes

Conflicts of interest:

None of the authors report a conflict of interest.

REFERENCES

- Alain C, Snyder J, He Y, et al. Changes in auditory cortex parallel rapid perceptual learning. Cereb Cortex. 2007;17:1074–1084. doi: 10.1093/cercor/bhl018.

- Besson M, Schön D, Moreno S, et al. Influence of musical expertise and musical training on pitch processing in music and language. Restor Neurol Neurosci. 2007;25:399–410.

- Billings C, Papesh M, Penman T, et al. Clinical use of aided cortical auditory evoked potentials as a measure of physiological detection or physiological discrimination. Int J Otolaryngol. 2012; Article ID 365752, 14 pages. doi: 10.1155/2012/365752.

- Brown CJ, Etler C, He S, et al. The electrically evoked auditory change complex: preliminary results from nucleus cochlear implant users. Ear Hear. 2008;29:704–717. doi: 10.1097/AUD.0b013e31817a98af.

- Brown CJ, Jeon EK, Chiou LK, et al. Cortical auditory evoked potentials recorded from Nucleus Hybrid cochlear implant users. Ear Hear. 2015;36:723–732. doi: 10.1097/AUD.0000000000000206.

- Chen JKC, Chuang AYC, McMahon C, et al. Music training improves pitch perception in prelingually deafened children with cochlear implants. Pediatrics. 2010;125:e793–800. doi: 10.1542/peds.2008-3620.

- Finale Songwriter. MakeMusic Inc., Boulder, CO; 2012.

- Friesen LM, Tremblay KL. Acoustic change complexes recorded in adult cochlear implant listeners. Ear Hear. 2006;27:678–685. doi: 10.1097/01.aud.0000240620.63453.c3.

- Fu Q, Galvin J. Perceptual learning and auditory training in cochlear implant recipients. Trends Amplif. 2007;11:193–205. doi: 10.1177/1084713807301379.

- Fujioka T, Ross B, Kakigi R, et al. One year of musical training affects development of auditory cortical-evoked fields in young children. Brain. 2006;129:2593–2608. doi: 10.1093/brain/awl247.

- Gfeller K, Christ A, Knutson J, et al. Musical backgrounds, listening habits and aesthetic enjoyment of adult cochlear implant recipients. J Am Acad Audiol. 2000;11:390–406.

- Itoh K, Okumiya-Kanke Y, Nakayama Y, et al. Effects of musical training on the early auditory cortical representation of pitch transitions as indexed by change-N1. Eur J Neurosci. 2012;36:3580–3592. doi: 10.1111/j.1460-9568.2012.08278.x.

- Kim JR, Brown CJ, Abbas PJ, et al. The effect of changes in stimulus level on electrically evoked cortical auditory potentials. Ear Hear. 2009;30:320–329. doi: 10.1097/AUD.0b013e31819c42b7.

- Kishon-Rabin L, Amir O, Vexler Y, et al. Pitch discrimination: Are professional musicians better than non-musicians? J Basic Clin Physiol Pharmacol. 2001;12:125–144. doi: 10.1515/jbcpp.2001.12.2.125.

- Knutson JF. Psychological aspects of cochlear implantation. In: Cooper H, Craddock L, editors. Cochlear Implants: A Practical Guide. Whurr Publishers Limited; London: 2006. pp. 151–178.

- Kochkin S. Customer satisfaction with hearing instruments in the digital age. Hearing J. 2005;58:30–39.

- Kraus N, Banai K. Auditory-processing malleability: focus on language and music. Curr Dir Psychol Sci. 2007;16:105–110.

- Krishnan A, Gandour J, Bidelman G. Experience-dependent plasticity in pitch encoding: from brainstem to auditory cortex. Neuroreport. 2012;23:498–502. doi: 10.1097/WNR.0b013e328353764d.

- Levitt H. Transformed up-down methods in psychoacoustics. J Acoust Soc Am. 1971;49:467–477.

- Limb CJ, Roy AT. Technological, biological, and acoustical constraints to music perception in cochlear implant users. Hear Res. 2014;308:13–26. doi: 10.1016/j.heares.2013.04.009.

- Looi V, Gfeller K, Driscoll V. Music appreciation and training for cochlear implant recipients: A review. Semin Hear. 2012;33:307–334. doi: 10.1055/s-0032-1329222.

- Madsen SMK, Moore BCJ. Music and Hearing Aids. Trends Hear. 2014;18:2331216514558271. doi: 10.1177/2331216514558271.

- Martin BA, Boothroyd A. Cortical, auditory, evoked potentials in response to changes of spectrum and amplitude. J Acoust Soc Am. 2000;107:2155–2161. doi: 10.1121/1.428556.

- Martin BA, Tremblay KL, Korczak P. Speech evoked potentials: From the laboratory to the clinic. Ear Hear. 2008;29:285–313. doi: 10.1097/AUD.0b013e3181662c0e.

- Martinez AS, Eisenberg LS, Boothroyd A. The acoustic change complex in young children with hearing loss: a preliminary study. Semin Hear. 2013;34:278–287. doi: 10.1055/s-0033-1356640.

- Musacchia G, Strait D, Kraus N. Relationships between behavior, brainstem and cortical encoding of seen and heard speech in musicians and non-musicians. Hear Res. 2008;241:34–42. doi: 10.1016/j.heares.2008.04.013.

- Orduña I, Liu EH, Church BA, et al. Evoked-potential changes following discrimination learning involving complex sounds. Clin Neurophysiol. 2012;123:711–719. doi: 10.1016/j.clinph.2011.08.019.

- Parbery-Clark A, Skoe E, Lam C, et al. Musician enhancement for speech-in-noise. Ear Hear. 2009;30:653–661. doi: 10.1097/AUD.0b013e3181b412e9.

- Patel AD. Can nonlinguistic musical training change the way the brain processes speech? The expanded OPERA hypothesis. Hear Res. 2014;308:98–108. doi: 10.1016/j.heares.2013.08.011.

- Picton TW. Human Auditory Evoked Potentials. Plural Publishing; San Diego: 2011. Late Auditory Evoked Potentials: Changing the Things Which Are. pp. 335–398.

- Ponton CW, Don M, Eggermont JJ, et al. Maturation of human cortical auditory function: differences between normal hearing children and children with cochlear implant. Ear Hear. 1996;17:430–437. doi: 10.1097/00003446-199610000-00009.

- Gifford RH, Revit LJ. Speech Perception for Adult Cochlear Implant Recipients in a Realistic Background Noise: Effectiveness of Preprocessing Strategies and External Options for Improving Speech Recognition in Noise. J Am Acad Audiol. 2010;21:441–488. doi: 10.3766/jaaa.21.7.3.

- Shahin AJ. Neurophysiological influence of musical training on speech perception. Front Psychol. 2011;2:126. doi: 10.3389/fpsyg.2011.00126.

- Shahin A, Bosnyak DJ, Trainor LJ, et al. Enhancement of neuroplastic P2 and N1c auditory evoked potentials in musicians. J Neurosci. 2003;23:5545–5552. doi: 10.1523/JNEUROSCI.23-13-05545.2003.

- Shahin AJ, Roberts LE, Pantev C, et al. Enhanced anterior-temporal processing for complex tones in musicians. Clin Neurophysiol. 2007;118:209–220. doi: 10.1016/j.clinph.2006.09.019.

- Shahin A, Roberts LE, Trainor LJ. Enhancement of auditory cortical development by musical experience in children. Neuroreport. 2004;15:1917–1921. doi: 10.1097/00001756-200408260-00017.

- Slater J, Skoe E, Strait DL, et al. Music training improves speech-in-noise perception: longitudinal evidence from a community-based music program. Behav Brain Res. 2015;291:244–252. doi: 10.1016/j.bbr.2015.05.026.

- Strait DL, Kraus N, Parbery-Clark A, et al. Musical experience shapes top-down auditory mechanisms: evidence from masking and auditory attention performance. Hear Res. 2010;261:22–29. doi: 10.1016/j.heares.2009.12.021.

- Spahr JJ, Dorman MF, Litvak LM, et al. Development and validation of AzBio sentence lists. Ear Hear. 2012;33:112–117. doi: 10.1097/AUD.0b013e31822c2549.

- Takahashi G, Martinez CD, Beamer S. Subjective measures of hearing aid benefit and satisfaction in the NIDCD/VA follow-up study. J Am Acad Audiol. 2007;18:323–349. doi: 10.3766/jaaa.18.4.6.

- Tervaniemi M, Just V, Koelsch S, et al. Pitch discrimination accuracy in musicians vs nonmusicians: an event-related potential and behavioral study. Exp Brain Res. 2005;161:1–10. doi: 10.1007/s00221-004-2044-5.

- Tremblay KL. Training-related changes in the brain: Evidence from human auditory evoked potentials. Semin Hear. 2007;28:120–132.

- Tremblay KL, Kraus N. Auditory training induces asymmetrical changes in cortical neural activity. J Speech Lang Hear Res. 2002;45:564–572. doi: 10.1044/1092-4388(2002/045).

- Tremblay KL, Kraus N, McGee T, et al. Central auditory plasticity: changes in the N1-P2 complex after speech sound training. Ear Hear. 2001;22:79–90. doi: 10.1097/00003446-200104000-00001.

- Tremblay KL, Shahin AJ, Picton T, et al. Auditory training alters the physiological detection of stimulus-specific cues in humans. Clin Neurophysiol. 2009;120:128–135. doi: 10.1016/j.clinph.2008.10.005.

- Tremblay KL, Billings CJ, Friesen LM, et al. Neural representation of amplified speech sounds. Ear Hear. 2006a;27:93–103. doi: 10.1097/01.aud.0000202288.21315.bd.

- Tremblay KL, Kalstein L, Billings CJ, et al. The neural representation of consonant-vowel transitions in adults who wear hearing aids. Trends Amplif. 2006b;10:155–162. doi: 10.1177/1084713806292655.

- Trainor LJ, Shahin A, Roberts LE. Effects of musical training on the auditory cortex in children. Ann NY Acad Sci. 2003;999:506–513. doi: 10.1196/annals.1284.061.

- Won JH, Drennan WR, Kang RS, et al. Psychoacoustic abilities associated with music perception in cochlear implant users. Ear Hear. 2010;31:796–805. doi: 10.1097/AUD.0b013e3181e8b7bd.

- Won JH, Clinard CG, Kwon S, et al. Relationship between behavioral and physiological spectral-ripple discrimination. J Assoc Res Otolaryngol. 2011;12:375–393. doi: 10.1007/s10162-011-0257-4.

- Yucel E, Sennaroglu G, Belgin E. The family oriented musical training for children with cochlear implants: speech and musical perception results of two year follow-up. Int J Pediatr Otorhinolaryngol. 2009;73:1043–1052. doi: 10.1016/j.ijporl.2009.04.009.

- Zendel BR, Alain C. Concurrent sound segregation is enhanced in musicians. J Cogn Neurosci. 2009;21:1488–1498. doi: 10.1162/jocn.2009.21140.

- Zhang L, Peng W, Chen J, et al. Electrophysiological evidences demonstrating differences in brain functions between non-musicians and musicians. Sci Rep. 2015;5:13796. doi: 10.1038/srep13796.