Abstract

Background

Failed treatment trials are common in major depressive disorder and treatment-resistant depression, and remotely performed multifaceted, centralized structured interviews can potentially enhance signal detection by ensuring that enrolled patients meet eligibility criteria.

Methods

We assessed the use of a specific remote structured interview that validated the diagnosis, level of treatment resistance, and depression severity. The objectives were to (1) assess the rate at which patients who were deemed eligible for participation in trials by site investigators were ineligible, (2) assess the reasons for ineligibility, (3) compare rates of ineligibility between academic and nonacademic sites, (4) compare eligibility between US and non-US sites, and (5) report the placebo response rates in trials utilizing this quality assurance approach, comparing its placebo response rates with those reported in the literature. Methods included a pooled analysis of 9 studies that utilized this methodology (SAFER interviews).

Results

Overall, 15.33% of patients who had been deemed eligible at research sites were not eligible after the structured interviews. The most common reason was that patients did not meet the study requirements for level of treatment resistance. Pass rates were significantly higher at non-US than at US sites (94.7% vs 83.3%; P < 0.001). There was no significant difference between academic and nonacademic sites (87.8% vs 84.2%; P = 0.08). Placebo response rates ranged from 13.0% to 27.3%, below the 30% to 40% average in antidepressant clinical trials, suggesting a possible benefit of the quality assurance provided by these interviews.

Conclusions

The use of a remote structured interview by experienced clinical researchers was feasible and possibly contributed to lower-than-average placebo response rates. The difference between US and non-US sites should be the subject of further research.

Key Words: clinical trials, methodology, remote, rater, depression

Novel treatments for major depressive disorder (MDD) are needed, given the morbidity and mortality that persist despite available treatments. Drug development for MDD is presently hindered by high placebo response rates in clinical trials. Potentially efficacious new medications for MDD and treatment-resistant depression (TRD) may be abandoned if early trials fail to separate drug from placebo, and the rise in placebo response rates over the years has made such signal detection increasingly difficult.1,2

One factor that may contribute to higher placebo response rates is the enrollment of inappropriate subjects into depression studies, which increases the risk that nonspecific factors influence study outcomes. Investigators often feel compelled to recruit and enroll participants as quickly as possible because of the financial and systemic pressures inherent in large-scale multisite clinical trials. These pressures may affect the suitability and appropriateness of enrolled study participants.3,4 The process is further complicated by the fact that some potential study subjects are motivated by remuneration (eg, “professional patients”) or other secondary gains from participation.5 Quality assurance measures are therefore considered a necessary element to enhance the precision of patient selection into randomized trials for the treatment of MDD and TRD.

The investigators developed and refined a multifaceted, centralized, remote structured interview to be applied after in-person patient screening at individual research sites. Its goal is to restrict the enrolled population to patients with the protocol-specified history, diagnosis, and disease severity. SAFER is an acronym for state versus trait, assessability, face validity, ecological validity, and rule of the three Ps (described in detail below). The SAFER Interview and the Antidepressant Treatment Response Questionnaire (ATRQ) are 2 clinical trial tools developed at Massachusetts General Hospital (MGH) to increase the precision of the diagnosis and course assessment of MDD and TRD. They are well suited for use in a clinical setting, enrich the qualitative assessment of MDD and TRD, and, when combined with a rating scale for symptom severity, provide a comprehensive quality assurance process. For simplicity, the entire remote interview is referred to as the “SAFER Interview,” although components of the ATRQ and a depression severity rating were typically included and used together with it in the studies described in this article.

The objectives of this study were (1) to assess the rate at which patients who are deemed eligible for enrollment into clinical trials of MDD and TRD by site investigators are deemed ineligible based on these tools, (2) to determine the reasons why these patients were deemed ineligible, (3) to compare rates of ineligibility between academic and nonacademic sites, (4) to compare eligibility between US and non-US sites, and (5) to report the placebo response rates in trials utilizing these tools, comparing them with placebo response rates reported in the literature.

METHODS

A review of independent remote SAFER Interviews conducted in 9 consecutive clinical drug trials in MDD and TRD was performed. In each trial, the interview occurred after each subject had passed screening procedures at the study site and was considered to be eligible for the trial by the site investigators.

Raters

The qualifications of the SAFER remote raters are as follows: (1) psychiatrist or psychologist, (2) at least 2 years of clinical research experience, (3) fluent in English and, if applicable, in the language of the patient being interviewed, and (4) completed training in the instruments used in the SAFER Interview. The North American interviews were performed by clinical researchers from MGH. Interviews outside North America were performed by independent contractors hired and trained by the MGH Clinical Trials Network and Institute (CTNI). The contractors met the same experience requirements and completed the same training as the MGH SAFER raters.

Rater training consisted of a didactic presentation of materials, observation of interviews, performance of mock interviews by the trainee, and observation of the trainee's study interviews by an experienced senior rater. Upon completion of training, each rater's qualifications, experience, and training performance were reviewed by CTNI leadership before the rater began conducting interviews.

Procedures

The SAFER Interview was performed remotely by raters, who telephoned the patient directly at home or on their cell phone. During the interview, the rater administered the SAFER Criteria Inventory (SCI), the MGH ATRQ, and a structured severity scale for MDD (either the Montgomery-Asberg Depression Rating Scale or the 17-item Hamilton Depression Rating Scale). When a patient's eligibility remained unclear after the interview, the case was adjudicated with the MGH SAFER ratings team via a secure e-mail system. If additional information was needed to determine eligibility, the rater contacted the clinical staff at the study site and/or requested pharmacy or medical records. Raters were encouraged to gather additional information and to communicate closely with sites to support the accuracy and collegiality of the independent remote interview process.

SAFER Criteria Inventory

The SAFER Criteria Inventory was created to assess the patient's presentation and history to determine whether the patient meets criteria for the disorder being studied as it is observed in real-world settings. The SCI has been described in detail previously.6,7 Originally developed for use in trials of MDD and TRD, the SCI has since been adapted and used in clinical trials for other psychiatric disorders such as schizophrenia, bipolar depression, obsessive-compulsive disorder, and attention-deficit/hyperactivity disorder.

To summarize, the SCI is a 9-item clinician-administered structured interview based on the following criteria: (1) state versus trait: the identified symptoms must reflect the current state of illness and not longstanding traits that are unlikely to change across a treatment trial; (2) assessability: the patient's symptoms can be reliably assessed with standardized, reliable rating instruments; (3) face validity: the patient's presentation is consistent with current knowledge of the illness (the symptoms are specific and map onto the nosological entity, ie, the Diagnostic and Statistical Manual of Mental Disorders; there is a clear change from the previous level of function; and the symptoms are similar to previous episodes if the illness is recurrent); (4) ecological validity: the patient's symptoms reflect the characteristics of the illness in a real-world setting (frequency, intensity, duration, course, and impact over at least 4 weeks); and (5) rule of the 3 Ps: the identified symptoms must be pervasive, persistent, and pathological, and must interfere with function and quality of life.
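To make the structure of the five SAFER criteria concrete, the sketch below records a rater's judgment on each criterion as a yes/no value. It is a simplified, hypothetical representation: the class name `SaferCriteria`, its field names, and the rule that a patient passes only when all five criteria are met are assumptions for illustration, and the actual 9-item SCI scoring rules are not reproduced here.

```python
from dataclasses import dataclass, fields


@dataclass
class SaferCriteria:
    """Hypothetical yes/no record of a rater's judgment on each SAFER criterion."""
    state_not_trait: bool      # symptoms reflect the current state, not longstanding traits
    assessable: bool           # symptoms can be rated reliably with standardized instruments
    face_valid: bool           # presentation fits the diagnosis and is a change from prior function
    ecologically_valid: bool   # real-world frequency, intensity, duration, course, impact over >= 4 weeks
    three_ps: bool             # pervasive, persistent, pathological, and impairing

    def unmet(self) -> list[str]:
        """Names of the criteria the rater judged as not met, in declaration order."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def passes(self) -> bool:
        # Assumption for illustration: the patient passes only if no criterion is unmet.
        return not self.unmet()


patient = SaferCriteria(state_not_trait=True, assessable=True, face_valid=True,
                        ecologically_valid=False, three_ps=True)
print(patient.passes())  # prints: False
print(patient.unmet())   # prints: ['ecologically_valid']
```

Listing the unmet criteria, rather than returning only pass or fail, mirrors how the Results break ineligibility down by component.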

Massachusetts General Hospital Antidepressant Treatment Response Questionnaire8,9

The MGH ATRQ is a clinician-rated questionnaire that examines the patient's antidepressant treatment history, using specific anchor points to define the adequacy of both dose and duration of each antidepressant trial and the degree of symptomatic improvement obtained with each trial. This validated questionnaire10 allows the level of treatment resistance in depression to be determined. To verify that depression severity met eligibility requirements, the remote raters administered either the Montgomery-Asberg Depression Rating Scale or the 17-item Hamilton Depression Rating Scale, as required by each protocol.11,12
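The logic of an ATRQ-style determination, which counts antidepressant trials that were adequate in dose and duration yet produced insufficient improvement, can be sketched as follows. The 6-week minimum duration, the 50% improvement cutoff, the data structure, and the function names are illustrative assumptions rather than the ATRQ's actual anchors, which are drug specific; the number of failed adequate trials required is set by each protocol.

```python
from dataclasses import dataclass

# Illustrative thresholds (assumptions): the real ATRQ uses drug-specific dose anchors.
MIN_ADEQUATE_WEEKS = 6      # minimum weeks at an adequate dose
NONRESPONSE_CUTOFF = 0.50   # improvement below 50% counts as an inadequate response


@dataclass
class AntidepressantTrial:
    label: str              # hypothetical label, e.g. "drug A"
    adequate_dose: bool     # rater judged the dose to meet the drug's minimum anchor
    weeks_at_dose: int      # duration at that dose, in weeks
    improvement: float      # fractional symptom improvement, 0.0 to 1.0


def is_adequate_failure(trial: AntidepressantTrial) -> bool:
    """True if the trial was adequate in dose and duration but improvement was insufficient."""
    return (trial.adequate_dose
            and trial.weeks_at_dose >= MIN_ADEQUATE_WEEKS
            and trial.improvement < NONRESPONSE_CUTOFF)


def count_adequate_failures(history: list[AntidepressantTrial]) -> int:
    return sum(is_adequate_failure(t) for t in history)


def meets_resistance_requirement(history: list[AntidepressantTrial], required_failures: int) -> bool:
    # required_failures comes from the protocol and may differ between MDD and TRD trials.
    return count_adequate_failures(history) >= required_failures


history = [
    AntidepressantTrial("drug A", adequate_dose=True, weeks_at_dose=8, improvement=0.20),    # counts
    AntidepressantTrial("drug B", adequate_dose=True, weeks_at_dose=3, improvement=0.10),    # too short
    AntidepressantTrial("drug C", adequate_dose=False, weeks_at_dose=12, improvement=0.30),  # dose too low
]
print(count_adequate_failures(history))                             # prints: 1
print(meets_resistance_requirement(history, required_failures=2))  # prints: False
```

The example shows why a self-reported history of several "failed" medications may still fall short of a protocol's resistance requirement once dose and duration are checked.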

Statistical Methods

For continuous measures, descriptive statistics consisted of means and standard deviations; for rates, they consisted of percentages or proportions. Continuous measures were compared with independent-samples t tests, and rates were compared with chi-square tests or Fisher exact tests.
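As an illustration of the rate comparisons, the sketch below implements a chi-square test with Yates continuity correction for a 2 × 2 table using only the Python standard library, and applies it to the pass/fail counts reported in the Results for academic versus nonacademic and for US versus non-US sites. Whether the published analyses applied a continuity correction is not stated; on the academic versus nonacademic counts, the corrected test gives a P value of roughly 0.08, close to the value in the Abstract, whereas the uncorrected test gives roughly 0.07. For tables with small expected counts, an exact test such as `scipy.stats.fisher_exact` would be used instead.

```python
import math


def chi2_2x2_yates(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Chi-square test with Yates continuity correction for the 2 x 2 table

        [[a, b],
         [c, d]]

    Returns (chi-square statistic, P value) with 1 degree of freedom.
    """
    n = a + b + c + d
    # Yates correction: shrink |ad - bc| by n/2, but never below zero.
    corrected = max(0.0, abs(a * d - b * c) - n / 2)
    chi2 = n * corrected ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # With 1 df, chi2 = z**2, so the upper-tail P value is erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# Rows: academic, nonacademic sites; columns: passed, failed (counts from the Results).
chi2, p = chi2_2x2_yates(325, 45, 1990, 374)
print(f"academic vs nonacademic: chi2 = {chi2:.2f}, P = {p:.3f}")

# Rows: US, non-US sites; columns: passed, failed.
chi2, p = chi2_2x2_yates(1996, 401, 319, 18)
print(f"US vs non-US: chi2 = {chi2:.2f}, P = {p:.2g}")
```

The closed-form 2 × 2 formula avoids computing expected counts cell by cell, and the `erfc` identity gives an exact 1-df P value without a statistics library.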

RESULTS

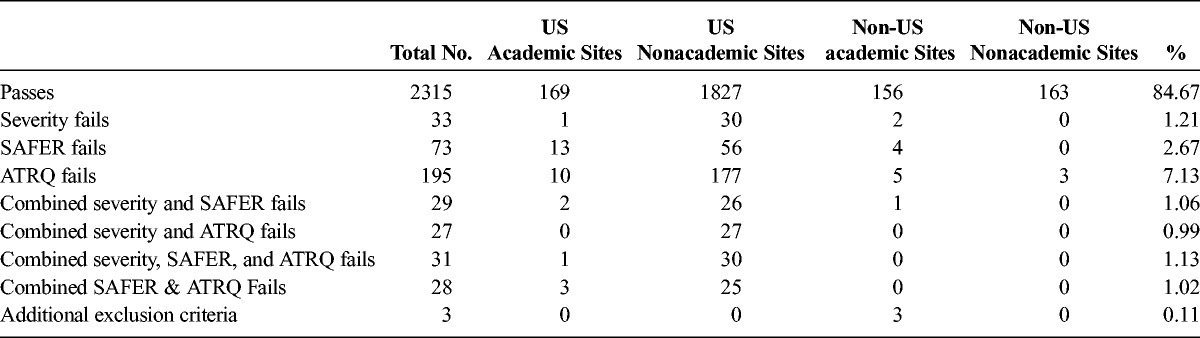

Across 7 independent trials of MDD and 2 of TRD, 2734 independent remote SAFER Interviews were performed. Of the 2734 patients interviewed, 2315 (84.67%) were deemed eligible for continued screening (ie, “passed” the SAFER Interview). The results are summarized in Table 1. Among the excluded patients, 33 (1.21%) failed only the severity criteria specified by the depression rating scales in the study inclusion criteria, 73 (2.67%) failed only the SAFER criteria, 195 (7.13%) failed only the ATRQ criteria for treatment resistance, and 3 (0.11%) met additional protocol exclusion criteria revealed during the interview, outside the scope of the severity, SAFER, or ATRQ measures. The remaining 115 (4.21%) patients failed 2 or more components of the remote interview. In total, 419 (15.33%) patients were deemed ineligible upon completion of the interview.
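The exclusion categories above are mutually exclusive, so they can be checked and converted to percentages with simple arithmetic. The sketch below (the dictionary layout and variable names are illustrative) reproduces the reported percentages of all interviews and also shows each category's share of the 419 exclusions, which makes clear that ATRQ-only failures account for nearly half of them.

```python
# Outcomes of the 2734 remote interviews (counts from the Results).
TOTAL_INTERVIEWS = 2734
PASSED = 2315
excluded = {
    "severity only": 33,
    "SAFER only": 73,
    "ATRQ only": 195,
    "other protocol exclusion": 3,
    "2 or more components": 115,
}

n_excluded = sum(excluded.values())
# The categories are mutually exclusive, so passes plus exclusions must equal all interviews.
assert n_excluded + PASSED == TOTAL_INTERVIEWS

for reason, n in excluded.items():
    pct_all = 100 * n / TOTAL_INTERVIEWS   # percentage of all interviews
    pct_excl = 100 * n / n_excluded        # share of all exclusions
    print(f"{reason:<26}{n:>5}{pct_all:>8.2f}%{pct_excl:>8.1f}%")
print(f"{'total ineligible':<26}{n_excluded:>5}{100 * n_excluded / TOTAL_INTERVIEWS:>8.2f}%")
```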

TABLE 1.

Overall MDD and TRD Trials Results

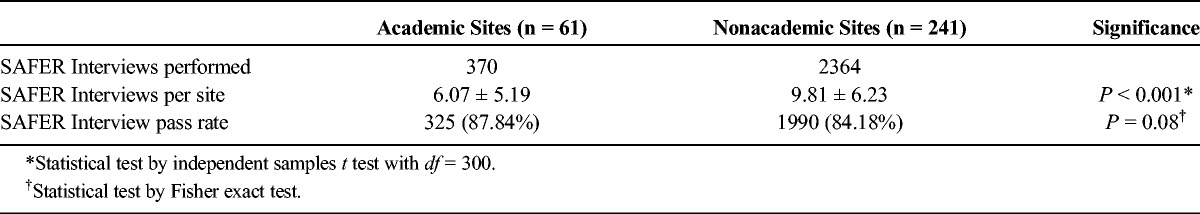

Academic Versus Nonacademic Study Sites

Results from SAFER Interviews were compared between academic study sites and nonacademic sites and are summarized in Table 2. All 9 of the independent trials of MDD and TRD utilized at least 1 academic site. Across the academic sites in these 9 trials, 370 independent remote SAFER Interviews were performed. Of the 370 interviews, 325 (87.84%) patients were deemed eligible for continued screening. Of the remaining patients, 3 (0.81%) did not meet severity criteria only, 17 (4.59%) did not meet SAFER criteria only, 15 (4.05%) did not meet ATRQ criteria for treatment resistance only, and 3 (0.81%) met additional protocol exclusion criteria, outside the scope of the severity, SAFER, or ATRQ measures. The remaining 7 (1.89%) patients did not meet criteria on a combination of 2 or more components of the remote interview. A total of 45 (12.16%) patients were deemed ineligible upon completion of the interview.

TABLE 2.

Comparison of SAFER Interview Results at Academic Versus Nonacademic Sites

Across the nonacademic sites in these 9 trials, 2364 independent remote SAFER Interviews were performed. Of the 2364 interviews, 1990 (84.18%) patients were deemed eligible for continued screening. Of the remaining patients, 30 (1.27%) did not meet severity criteria only, 56 (2.37%) did not meet SAFER criteria only, 180 (7.61%) did not meet ATRQ criteria for treatment resistance only, and 0 (0.00%) met additional protocol exclusion criteria outside the scope of the severity, SAFER, or ATRQ measures. The remaining 108 (4.57%) patients did not meet criteria on a combination of 2 or more components of the remote interview. A total of 374 (15.82%) patients were deemed ineligible upon completion of the interview.

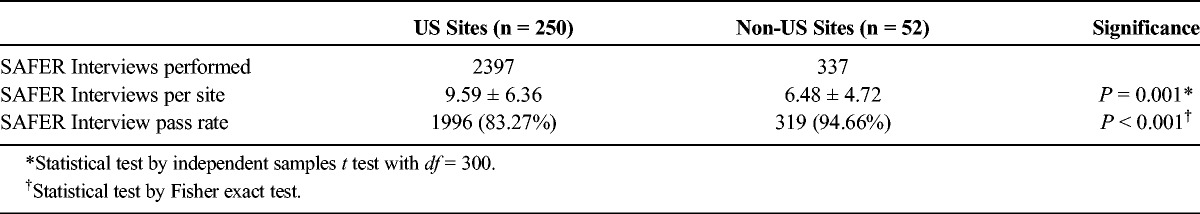

US Versus Non-US Study Sites

Results from SAFER Interviews were compared between study sites in the United States and sites outside the United States, which included Australia, Belgium, Bulgaria, Canada, Germany, Hungary, Moldova, Poland, Romania, Russia, and Ukraine. Of the 9 independent trials of MDD and TRD, 7 used at least some sites located in the United States. Across these 7 trials, 2397 independent remote SAFER Interviews were performed in the United States. Of the 2397 US interviews, 1996 (83.27%) patients were deemed eligible for continued screening. Of the remaining patients, 31 (1.29%) did not meet severity criteria only, 69 (2.88%) did not meet SAFER criteria only, 187 (7.80%) did not meet ATRQ criteria for treatment resistance only, and 0 (0.00%) met additional protocol exclusion criteria outside the scope of the severity, SAFER, or ATRQ measures. The remaining 114 (4.76%) patients did not meet criteria on a combination of 2 or more components of the remote interview. A total of 401 (16.73%) patients were deemed ineligible upon completion of the interview.

Of the 9 independent trials of MDD and TRD, 4 used sites located outside of the United States. Across these 4 trials, 337 independent remote SAFER Interviews were performed outside of the United States. Of the 337 interviews, 319 (94.66%) patients were deemed eligible for continued screening. Of the remaining patients, 2 (0.59%) did not meet severity criteria only, 4 (1.19%) did not meet SAFER criteria only, 8 (2.37%) did not meet ATRQ criteria for treatment resistance only, and 3 (0.89%) met additional protocol exclusion criteria outside the scope of the severity, SAFER, or ATRQ measures. The remaining patient (0.30%) did not meet criteria on a combination of 2 or more components of the remote interview. A total of 18 (5.34%) patients were deemed ineligible upon completion of the interview.
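Because US and non-US sites together make up the full sample, the regional counts can be cross-checked against the overall totals, and the two failure proportions can be compared directly. In the sketch below (dictionary keys and names are illustrative), the crude risk ratio is a descriptive figure derived here from the reported counts; it is not a result reported by the study and does not account for clustering of patients within sites or trials.

```python
# Interview outcomes by region (counts from the Results).
us = {"severity": 31, "SAFER": 69, "ATRQ": 187, "other": 0, "combined": 114, "passed": 1996}
non_us = {"severity": 2, "SAFER": 4, "ATRQ": 8, "other": 3, "combined": 1, "passed": 319}


def failure_rate(group: dict[str, int]) -> float:
    """Proportion of interviewed patients judged ineligible."""
    total = sum(group.values())
    return (total - group["passed"]) / total


rate_us, rate_non_us = failure_rate(us), failure_rate(non_us)
print(f"US: {rate_us:.2%}  non-US: {rate_non_us:.2%}  crude risk ratio: {rate_us / rate_non_us:.2f}")
# prints: US: 16.73%  non-US: 5.34%  crude risk ratio: 3.13

# The two regions partition the full sample, so their counts must sum to the overall totals.
overall = {k: us[k] + non_us[k] for k in us}
assert sum(overall.values()) == 2734 and overall["passed"] == 2315
```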

Because there were fewer non-US sites, interview results were compared at the site level using independent-samples t tests. Significantly more SAFER Interviews per site were performed at sites in the United States (P ≤ 0.001), and the mean per-site pass rate was significantly higher at sites outside the United States (P = 0.010) (Table 3).
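The per-site comparison can be sketched as a pooled-variance (Student) independent-samples t test. The paper does not state whether the pooled or the Welch (unequal-variance) form was used, so the choice here is an assumption, and the per-site pass rates below are hypothetical values invented for illustration, not study data. A P value would then be read from the t distribution with the returned degrees of freedom, for example with `scipy.stats.ttest_ind`, whose default `equal_var=True` corresponds to this form.

```python
import math
from statistics import mean, variance


def student_t(x: list[float], y: list[float]) -> tuple[float, int]:
    """Pooled-variance independent-samples t statistic and its degrees of freedom."""
    nx, ny = len(x), len(y)
    # statistics.variance uses the n - 1 (sample) denominator.
    pooled = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / (nx + ny - 2)
    se = math.sqrt(pooled * (1 / nx + 1 / ny))
    return (mean(x) - mean(y)) / se, nx + ny - 2


# HYPOTHETICAL per-site pass rates (one value per site), not the study data.
non_us_sites = [0.95, 1.00, 0.92, 0.97]
us_sites = [0.78, 0.85, 0.81, 0.90, 0.76, 0.84]

t, df = student_t(non_us_sites, us_sites)
print(f"t = {t:.2f} on {df} df")
```

Analyzing one pass rate per site treats the site, rather than the patient, as the unit of analysis, which keeps a few high-volume US sites from dominating the comparison.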

TABLE 3.

Comparison of SAFER Interview Results at US Versus Non-US Sites

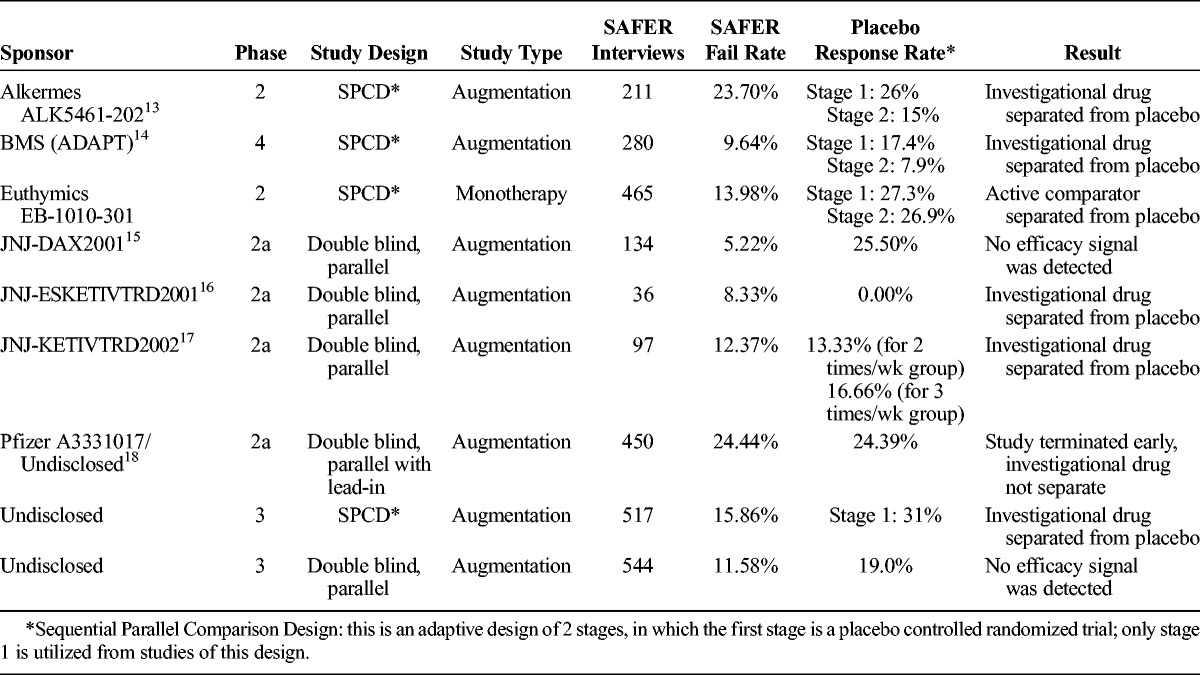

Placebo Response Rates

Placebo response rates may indicate whether the SAFER Interviews improved study quality and the potential for signal detection. We did not have a control group of studies conducted in parallel, but we report the placebo response rates in Table 4.

TABLE 4.

Studies Using the SAFER Interviews and Placebo Response Rates

In all of the studies, placebo response rates were within a range of 13.0% to 27.3%, below the 30% to 40% average reported in antidepressant clinical trials.1,2,19
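Response in antidepressant trials is conventionally defined as at least a 50% reduction from baseline on the primary depression rating scale; the paper does not restate the definition used in each trial, so that threshold is an assumption in the sketch below. The function names and the placebo-arm scores are hypothetical, chosen only to show how a response rate is computed and compared with the 30% to 40% range cited above.

```python
def is_responder(baseline: float, endpoint: float, threshold: float = 0.50) -> bool:
    """Assumed definition: at least a 50% reduction from the baseline total score."""
    return (baseline - endpoint) / baseline >= threshold


def response_rate(scores: list[tuple[float, float]]) -> float:
    """Proportion of (baseline, endpoint) score pairs classified as responders."""
    return sum(is_responder(b, e) for b, e in scores) / len(scores)


# HYPOTHETICAL placebo-arm (baseline, endpoint) MADRS totals, for illustration only.
placebo_arm = [(30, 14), (28, 20), (34, 22), (26, 25), (32, 20)]
rate = response_rate(placebo_arm)
print(f"placebo response rate: {rate:.1%}")              # prints: placebo response rate: 20.0%
print("below the 30% literature floor:", rate < 0.30)    # prints: below the 30% literature floor: True
```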

DISCUSSION

As these findings demonstrate, a substantial proportion of patients who were initially deemed eligible by research site clinicians proved ineligible after a comprehensive remote evaluation by experienced interviewers. The remote interviews consisted of the SAFER tool, the ATRQ, and a measure of depression severity. Across the trials described here, 15.33% of patients were determined not to meet enrollment criteria on at least 1 component; without the remote interview, these patients would otherwise have been enrolled. The elements of the interview most likely to lead to ineligibility were the verification of treatment resistance (as determined by the ATRQ) and the SAFER tool. Interestingly, failure rates were higher at US than at non-US sites. We also found a nonsignificant trend toward higher failure rates at nonacademic than at academic sites. Finding that approximately 1 in 7 patients deemed eligible by site investigators was ineligible on independent interview is remarkable, because the sites were aware of the process in place and were therefore perhaps more likely to avoid enrolling inappropriate patients.

Although remote interviews in psychiatric research are not new, this is a novel application of a multidimensional interview conducted by experienced clinical psychiatrists and psychologists for quality assurance in clinical trials. Previous studies have demonstrated that interviews can be conducted remotely in trials of depression and other psychiatric disorders with good precision with the use of severity measures, and good acceptability among participants.20–22

The implications for precision in clinical trials of MDD and TRD are far reaching, for both drug development and public health. Certainly, remote quality assurance interviews by MD- and PhD-level clinical researchers carry costs, both financial and in the additional effort required to incorporate this step into trial implementation. However, failed trials are much more costly financially and, more importantly, present barriers to advances in the care of patients with psychiatric disorders. In addition, because placebo response rates have risen, the risk of obscuring positive findings has increased, making drug development more expensive and reducing the incentive to develop drugs for psychiatric disorders.1,2 Higher placebo response rates necessitate larger trials to achieve adequate statistical power, raising the overall cost of clinical research, a cost ultimately passed along to patients through higher prices for the medications that are eventually marketed.

It is widely appreciated that likely placebo responders are problematic to include in clinical trials, because high placebo response rates increase the risk of failed trials. McCann et al5 have described these participants as those “destined to succeed” and have modeled the substantial “threat” they pose to drug development, much greater than that posed by participants who are “destined to fail,” or unlikely to respond to any treatment condition. As they discuss, the complicated, overlapping relationships among nonadherence, “professional subjects” motivated by financial gain, and placebo response are aspects of clinical research that need to be addressed concurrently. However, individuals who are unlikely to respond to any treatment, such as those living in socioeconomic deprivation or facing other situational stresses, are also of concern in trial enrollment and may affect outcomes in studies with lower placebo response rates.23 A remote interview that screens out those “destined” for response or nonresponse, regardless of study arm, is an important tool for conducting trials that will advance the field.

The significant difference in SAFER Interview failure rates between US and non-US sites is intriguing. Unlike most of the non-US countries in which these studies were conducted, the United States lacks a national systematic health care system. As a result, there are broad disparities in access to mental health services in the United States, and those who enter MDD/TRD trials may live with financial or environmental stressors that diminish the observable impact of an efficacious biological treatment. Similarly, the financial incentives offered to trial participants are likely to be a stronger motivation for those in more dire socioeconomic situations. Although we do not have specific data on each site's patient remuneration practices, to our knowledge patients were remunerated in most of the US trials and not in most of those outside the United States. It is possible, even probable, that financial payments to patients influence the recruitment and enrollment of ineligible patients.

We also saw a slight, nonsignificant trend toward a higher failure rate at nonacademic sites. A major difference between academic and nonacademic sites is the volume of patients screened and enrolled in trials of depression.23 Nonacademic sites generally recruit more patients in less time per trial. Recruitment strategies may also differ, with some sites drawing on databases of patients from previous studies. The lack of a significant difference is consistent with a previous analysis of one of the studies included here.24 The current findings extend that analysis to a larger number of patients and studies.

The strengths of this study include its assessment of the impact of remote interviews on quality assurance in clinical trials of MDD/TRD. These interviews address multiple aspects of patient eligibility, are conducted by highly experienced clinician researchers trained in remote interviewing, and incorporate a systematic process for resolving eligibility determinations that individual raters find inconclusive. The study also had limitations. The interviews were conducted by MD- and PhD-level clinical researchers, and it is not known whether reinterview at the sites or interview by individuals of other disciplines would have yielded similar results. We did not have a “control” group of studies, matched for study drug, indication, study sites, and study size but conducted without the remote interview, with which to compare outcomes such as drug and placebo response rates. Nonetheless, the placebo response rates observed in the 9 consecutive trials examined (13.0%–27.3%) were well below the 30% to 40% average reported in standard antidepressant clinical trials,1,2,19 suggesting meaningful utility for clinical research.

In summary, the remote interview based on the SAFER, MGH ATRQ, and symptom severity instruments permits greater vigilance over patients enrolled into clinical trials and provides quality assurance rendered by experienced clinician researchers. These and other quality assurance measures should be implemented to ensure that the drug development process is precise and efficient and can ultimately bring effective drugs to market for patients who are not adequately treated with available options.

AUTHOR DISCLOSURE INFORMATION

Ms Flynn, Mr Pooley, and Dr Baer declare no conflicts of interest.

Dr Freeman (past 24 months): Investigator Initiated Trials (research): Takeda, JayMac; Advisory boards: Janssen, Sage, JDS Therapeutics, Sunovion; Independent Data Safety and Monitoring Committee: Janssen (Johnson & Johnson); Medical Editing: GOED newsletter. Dr Freeman is an employee of Massachusetts General Hospital and works with the MGH National Pregnancy Registry [Current Registry Sponsors: Alkermes, Inc. (2016–present); Otsuka America Pharmaceutical Inc (2008–present); Forest/Actavis (2016–present); Sunovion Pharmaceuticals, Inc (2011–present)]. As an employee of MGH, Dr Freeman works with the MGH CTNI, which has had research funding from multiple pharmaceutical companies and the National Institute of Mental Health.

Dr Mischoulon has received research support from Fisher Wallace, Nordic Naturals, MSI, and PharmoRx Therapeutics. He has received honoraria for speaking from the MGH Psychiatry Academy. He has received royalties from Lippincott Williams & Wilkins for the published book Natural Medications for Psychiatric Disorders: Considering the Alternatives.

Dr Mou has received consulting fees from Valera Health.

Disclosures (lifetime): Maurizio Fava, MD.

All disclosures can be viewed online at http://mghcme.org/faculty/faculty-detail/maurizio_fava.

Research support: Abbott Laboratories; Acadia Pharmaceuticals; Alkermes Inc; American Cyanamid; Aspect Medical Systems; AstraZeneca; Avanir Pharmaceuticals; AXSOME Therapeutics; BioResearch; BrainCells Inc; Bristol-Myers Squibb; CeNeRx BioPharma; Cephalon; Cerecor; Clintara LLC; Covance; Covidien; Eli Lilly and Company; EnVivo Pharmaceuticals Inc; Euthymics Bioscience Inc; Forest Pharmaceuticals Inc; FORUM Pharmaceuticals; Ganeden Biotech Inc; GlaxoSmithKline; Harvard Clinical Research Institute; Hoffman-LaRoche; Icon Clinical Research; i3 Innovus/Ingenix; Janssen R&D LLC; Jed Foundation; Johnson & Johnson Pharmaceutical Research & Development; Lichtwer Pharma GmbH; Lorex Pharmaceuticals; Lundbeck Inc; MedAvante; Methylation Sciences Inc (MSI); National Alliance for Research on Schizophrenia & Depression; National Center for Complementary and Alternative Medicine; National Coordinating Center for Integrated Medicine; National Institute of Drug Abuse; National Institute of Mental Health; Neuralstem Inc; NeuroRx; Novartis AG; Organon Pharmaceuticals; PamLab LLC; Pfizer Inc; Pharmacia-Upjohn; Pharmaceutical Research Associates Inc; Pharmavite LLC; PharmoRx Therapeutics; Photothera; Reckitt Benckiser; Roche Pharmaceuticals; RCT Logic LLC (formerly Clinical Trials Solutions LLC); Sanofi-Aventis US LLC; Shire; Solvay Pharmaceuticals Inc; Stanley Medical Research Institute; Synthelabo; Takeda Pharmaceuticals; Tal Medical; VistaGen; Wyeth-Ayerst Laboratories.

Advisory board/consultant: Abbott Laboratories; Acadia; Affectis Pharmaceuticals AG; Alkermes Inc; Amarin Pharma Inc; Aspect Medical Systems; AstraZeneca; Auspex Pharmaceuticals; Avanir Pharmaceuticals; AXSOME Therapeutics; Bayer AG; Best Practice Project Management Inc; Biogen; BioMarin Pharmaceuticals Inc; Biovail Corporation; BrainCells Inc; Bristol-Myers Squibb; CeNeRx BioPharma; Cephalon Inc; Cerecor; CNS Response Inc; Compellis Pharmaceuticals; Cypress Pharmaceutical Inc; DiagnoSearch Life Sciences (P) Ltd; Dainippon Sumitomo Pharma Co Inc; Dov Pharmaceuticals Inc; Edgemont Pharmaceuticals Inc; Eisai Inc; Eli Lilly and Company; EnVivo Pharmaceuticals Inc; ePharmaSolutions; EPIX Pharmaceuticals Inc; Euthymics Bioscience Inc; Fabre-Kramer Pharmaceuticals Inc; Forest Pharmaceuticals Inc; Forum Pharmaceuticals; GenOmind LLC; GlaxoSmithKline; Grunenthal GmbH; Indivior; i3 Innovus/Ingenix; Intracellular; Janssen Pharmaceutica; Jazz Pharmaceuticals Inc; Johnson & Johnson Pharmaceutical Research & Development LLC; Knoll Pharmaceuticals Corp; Labopharm Inc; Lorex Pharmaceuticals; Lundbeck Inc; MedAvante Inc; Merck & Co Inc; MSI; Naurex Inc; Nestle Health Sciences; Neuralstem Inc; Neuronetics Inc; NextWave Pharmaceuticals; Novartis AG; Nutrition 21; Orexigen Therapeutics Inc; Organon Pharmaceuticals; Osmotica; Otsuka Pharmaceuticals; Pamlab LLC; Pfizer Inc; PharmaStar; Pharmavite LLC; PharmoRx Therapeutics; Precision Human Biolaboratory; Prexa Pharmaceuticals Inc; PPD; Puretech Ventures; PsychoGenics; Psylin Neurosciences Inc; RCT Logic LLC (formerly Clinical Trials Solutions LLC); Rexahn Pharmaceuticals Inc; Ridge Diagnostics Inc; Roche; Sanofi-Aventis US LLC; Sepracor Inc; Servier Laboratories; Schering-Plough Corporation; Shenox Pharmaceuticals; Solvay Pharmaceuticals Inc; Somaxon Pharmaceuticals Inc; Somerset Pharmaceuticals Inc; Sunovion Pharmaceuticals; Supernus Pharmaceuticals Inc; Synthelabo; Taisho Pharmaceutical; Takeda Pharmaceutical Company Limited; Tal Medical Inc; Tetragenex Pharmaceuticals Inc; TransForm Pharmaceuticals Inc; Transcept Pharmaceuticals Inc; Vanda Pharmaceuticals Inc; VistaGen.

Speaking/publishing: Adamed Co; Advanced Meeting Partners; American Psychiatric Association; American Society of Clinical Psychopharmacology; AstraZeneca; Belvoir Media Group; Boehringer Ingelheim GmbH; Bristol-Myers Squibb; Cephalon Inc; CME Institute/Physicians Postgraduate Press Inc; Eli Lilly and Company; Forest Pharmaceuticals Inc; GlaxoSmithKline; Imedex LLC; MGH Psychiatry Academy/Primedia; MGH Psychiatry Academy/Reed Elsevier; Novartis AG; Organon Pharmaceuticals; Pfizer Inc; PharmaStar; United BioSource Corp; Wyeth-Ayerst Laboratories.

Stock/Other Financial Options:

Equity Holdings: Compellis; PsyBrain Inc.

Royalty/patent, other income: Patents for Sequential Parallel Comparison Design, licensed by MGH to Pharmaceutical Product Development LLC (PPD); and patent application for a combination of Ketamine plus Scopolamine in Major Depressive Disorder, licensed by MGH to Biohaven.

Copyright for the MGH Cognitive & Physical Functioning Questionnaire, Sexual Functioning Inventory, Antidepressant Treatment Response Questionnaire, Discontinuation-Emergent Signs & Symptoms, Symptoms of Depression Questionnaire, and SAFER; Lippincott, Williams & Wilkins; Wolters Kluwer; World Scientific Publishing Co. Pte. Ltd.

REFERENCES

- 1. Montgomery SA. The failure of placebo-controlled studies. ECNP Consensus Meeting, September 13, 1997, Vienna. European College of Neuropsychopharmacology. Eur Neuropsychopharmacol. 1999;9:271–276.

- 2. Iovieno N, Papakostas GI. Correlation between different levels of placebo response rate and clinical trial outcome in major depressive disorder: a meta-analysis. J Clin Psychiatry. 2012;73:1300–1306.

- 3. Hall MA, Friedman JY, King NM, et al. Commentary: per capita payments in clinical trials: reasonable costs versus bounty hunting. Acad Med. 2010;85:1554–1556.

- 4. Puttagunta PS, Caulfield TA, Griener G. Conflict of interest in clinical research: direct payment to the investigators for finding human subjects and health information. Health Law Rev. 2002;10:30–32.

- 5. McCann DJ, Petry NM, Bressell A, et al. Medication nonadherence, “professional subjects,” and apparent placebo responders: overlapping challenges for medications development. J Clin Psychopharmacol. 2015;35:566–573.

- 6. Desseilles M, Witte J, Chang TE, et al. Massachusetts General Hospital SAFER criteria for clinical trials and research. Harv Rev Psychiatry. 2013;21:269–274.

- 7. Targum SD, Pollack MH, Fava M. Redefining affective disorders: relevance for drug development. CNS Neurosci Ther. 2008;14:2–9.

- 8. Fava M, Davidson KG. Definition and epidemiology of treatment-resistant depression. Psychiatr Clin North Am. 1996;19:179–200.

- 9. Fava M. Diagnosis and definition of treatment-resistant depression. Biol Psychiatry. 2003;53:649–659.

- 10. Chandler GM, Iosifescu DV, Pollack MH, et al. Validation of the Massachusetts General Hospital Antidepressant Treatment History Questionnaire (ATRQ). CNS Neurosci Ther. 2010;16:322–325.

- 11. Williams JB, Kobak KA. Development and reliability of a structured interview guide for the Montgomery Asberg Depression Rating Scale (SIGMA). Br J Psychiatry. 2008;192:52–58.

- 12. Williams JB. A structured interview guide for the Hamilton Depression Rating Scale. Arch Gen Psychiatry. 1988;45:742–747.

- 13. Fava M, Memisoglu A, Thase ME, et al. Opioid modulation with buprenorphine/samidorphan as adjunctive treatment for inadequate response to antidepressants: a randomized double-blind placebo-controlled trial. Am J Psychiatry. 2016;173:499–508.

- 14. Fava M, Mischoulon D, Iosifescu D, et al. A double-blind, placebo-controlled study of aripiprazole adjunctive to antidepressant therapy among depressed outpatients with inadequate response to prior antidepressant therapy (ADAPT-A Study). Psychother Psychosom. 2012;81:87–97.

- 15. Kent JM, Daly E, Kezic I, et al. Efficacy and safety of an adjunctive mGlu2 receptor positive allosteric modulator to a SSRI/SNRI in anxious depression. Prog Neuropsychopharmacol Biol Psychiatry. 2016;67:66–73.

- 16. Singh JB, Fedgchin M, Daly E, et al. Intravenous esketamine in adult treatment-resistant depression: a double-blind, double-randomization, placebo-controlled study. Biol Psychiatry. 2016;80:424–431.

- 17. Singh J, Fedgchin M, Daly E, et al. Onset of efficacy of ketamine in treatment-resistant depression: a double-blind, randomized, placebo-controlled, dose frequency study. Poster presented at: 27th European College of Neuropsychopharmacology (ECNP) Congress; October 18–21, 2014; Berlin, Germany.

- 18. Fava M, Ramey T, Pickering E, et al. A randomized, double-blind, placebo-controlled phase 2 study of the augmentation of a nicotinic acetylcholine receptor partial agonist in depression: is there a relationship to leptin levels? J Clin Psychopharmacol. 2015;35:51–56.

- 19. Sonawalla SB, Rosenbaum JF. Placebo response in depression. Dialogues Clin Neurosci. 2002;4:105–113.

- 20. Shen J, Kobak KA, Zhao Y, et al. Use of remote centralized raters via live 2-way video in a multicenter clinical trial for schizophrenia. J Clin Psychopharmacol. 2008;28:691–693.

- 21. Williams JB, Ellis A, Middleton A, et al. Primary care patients in psychiatric clinical trials: a pilot study using videoconferencing. Ann Gen Psychiatry. 2007;6:24.

- 22. Dobscha SK, Corson K, Solodky J, et al. Use of videoconferencing for depression research: enrollment, retention, and patient satisfaction. Telemed J E Health. 2005;11:84–89.

- 23. Dunlop BW, Rapaport MH. Antidepressant signal detection in the clinical trials vortex. J Clin Psychiatry. 2015;76:e657–e659.

- 24. Dording CM, Dalton ED, Pencina MJ, et al. Comparison of academic and nonacademic sites in multi-center clinical trials. J Clin Psychopharmacol. 2012;32:65–68.