Abstract

The relationship between autistic traits and gaze-oriented attention to fearful and happy faces was investigated at the behavioral and neuronal levels. Upright and inverted dynamic face stimuli were used in a gaze-cueing paradigm while ERPs were recorded. Participants responded faster to gazed-at than to non-gazed-at targets and this Gaze Orienting Effect (GOE) diminished with inversion, suggesting it relies on facial configuration. It was also larger for fearful than happy faces but only in participants with high Autism Quotient (AQ) scores. While the GOE to fearful faces was of similar magnitude regardless of AQ scores, a diminished GOE to happy faces was found in participants with high AQ scores.

At the ERP level, a congruency effect on target-elicited P1 component reflected enhanced visual processing of gazed-at targets. In addition, cue-triggered early directing attention negativity and anterior directing attention negativity reflected, respectively, attention orienting and attention holding at gazed-at locations. These neural markers of spatial attention orienting were not modulated by emotion and were not found in participants with high AQ scores. Together these findings suggest that autistic traits influence attention orienting to gaze and its modulation by social emotions such as happiness.

Keywords: Autism-spectrum Quotient, ERPs, Emotions, Gaze, Attention

1. INTRODUCTION

Gaze direction is a powerful nonverbal cue, which informs us about others’ attention focus. The ability to orient one’s attention to the location indicated by others’ gaze to determine their object of focus is called joint attention. Research on joint attention often employs gaze-cueing paradigms (Friesen & Kingstone, 1998), in which a central face looking to the side is followed by a lateral target. Participants typically respond faster to gazed-at (congruent) targets than to non-gazed-at (incongruent) targets, regardless of the task. This reliable effect, known as the Gaze Orienting Effect (GOE), reflects our propensity to attend to the location indicated by another’s gaze (see Frischen, Bayliss & Tipper, 2007 for an in-depth review of the GOE).

While gaze direction provides information regarding the location of the viewer’s attention, facial expressions provide information regarding the viewer’s feelings toward the object of focus and allow for inferences regarding the nature of that object. For instance, observing a fearful face looking to the side should provide a strong incentive to orient to the gazed-at location because it indicates the presence of a possible danger. In accordance with this idea, many studies found a larger GOE for fearful than neutral and happy faces, which was interpreted as reflecting a boost in attention-orienting toward a threat (Bayless, Glover, Taylor, & Itier, 2011; Fox, Mathews, Calder, & Yiend, 2007; Graham, Friesen, Fichtenholtz, & LaBar, 2010; Lassalle & Itier, 2013; Mathews, Fox, Yiend, & Calder, 2003; Neath, Nilsen, Gittsovich, & Itier, 2013; Pecchinenda, Pes, Ferlazzo, & Zoccolotti, 2008; Putman, Hermans, & Van Honk, 2006; Tipples, 2006). However, some studies did not find this GOE enhancement for fearful faces (e.g., Bayliss, Frischen, Fenske, & Tipper, 2007; Fichtenholtz, Hopfinger, Graham, Detwiler, & LaBar, 2007, 2009; Galfano et al., 2011; Hietanen & Leppänen, 2003). Although the reasons for those discrepant findings are not entirely clear, several factors might influence the emotional modulation of the GOE. The first one is the Stimulus Onset Asynchrony (SOA), as Graham et al. (2010) reported that the GOE was modulated by emotions when the SOA was 475 to 575 ms but not when it was 275 ms. Another potentially influential factor is the task, as localization tasks seem more relevant than other tasks, given that the direction of another’s gaze indicates where the object of interest is located in the environment (Lassalle & Itier, 2013). Whether the cue is static or dynamic could also impact the GOE emotional modulation, as emotions are processed better when presented dynamically (Sato, Kochiyama, Yoshikawa, Naito, & Matsumura, 2004). 
Finally, participants’ anxiety could affect the emotional modulation of the GOE, since some previous studies reported a GOE enhancement for fear only in highly anxious participants (e.g., Fox et al., 2007; Mathews et al., 2003). More recent studies, however, reported a GOE increase for fear even in non-anxious participants (Bayless et al., 2011; Lassalle & Itier, 2013; Neath et al., 2013). Following up on those recent findings, the present study included only nonclinically anxious participants and used a 500 ms SOA, a localization task and a dynamic face cue. This dynamic face cue averted its gaze prior to expressing an emotion, in accordance with previous studies (Graham et al., 2010; Neath et al., 2013). This sequence dissociates the gaze shift from eye size, which varies with emotion (larger for fearful than for happy faces), so that any modulation of the GOE by emotion cannot be attributed to eye size alone.

The neural processes underlying gaze-oriented attention and its modulation by emotions are poorly understood but Event Related Potentials (ERPs) have been used to track their time course during cue and target presentations, allowing for a better characterization of the cognitive stages involved in attention orienting than response times alone. Attention shift is usually indexed by the amplitude of the P1 ERP component recorded to the target. P1 is a positive deflection occurring at parieto-occipital sites around 100ms after target presentation and reflects the early visual processing of the target. P1 is known to be sensitive to attention (Mangun, 1995; Luck, Woodman, & Vogel, 2000) and in cueing paradigms P1 amplitude is enhanced for congruent relative to incongruent targets, reflecting a facilitation of visual processing for the cued targets. This effect has been shown with both arrow cues (Eimer, 1997; Mangun & Hillyard, 1991) and gaze cues (Hietanen, Leppänen, Nummenmaa, & Astikainen, 2008; Schuller & Rossion, 2001, 2004, 2005).

While the congruency effects observed on P1 amplitude reflect the impact of attention orienting on subsequent processes such as the visual processing of the target, the ERPs associated with cue presentation are a direct measure of attention-orienting itself and include the Early Directing Attention Negativity (EDAN) and the Anterior Directing Attention Negativity (ADAN) (Praamstra, Boutsen, & Humphreys, 2005; Simpson et al., 2006). EDAN is an ERP component occurring at posterior locations between 200 and 300ms after cue presentation and is thought to reflect actual attention-orienting to the cued location. In contrast, ADAN ERP component occurs anteriorly between 300 and 500ms after cue presentation and reflects attention holding at the cued location. Although most previous studies found that EDAN and ADAN were triggered by arrow cues, Lassalle & Itier (2013) recently reported their presence in response to gaze cues as well. This new finding opens the door to further investigation of the processes involved in gaze-triggered attention prior to target and response onsets.

Even fewer studies have investigated how these ERPs are modulated by the emotion of the face cue, and most reported null findings at the ERP and behavioral levels (Fichtenholtz et al., 2007, 2009; Holmes et al., 2010; Galfano et al., 2011). Lassalle and Itier (2013) found no evidence for an emotional modulation of EDAN or ADAN components. In addition, a congruency effect on P1 amplitude was observed for fearful, surprised and happy facial expressions but not for angry or neutral expressions, while the GOE was enlarged for fearful, surprised and angry emotions compared to neutral and happy emotions. The difference between the ERP and behavioral results suggests that additional cognitive processes involved in integrating emotion and gaze signals occurred between the target presentation and the behavioral response for some emotions. Together, the findings of this study suggest that emotions impact attention orienting rather late, between 600–800ms after cue onset, and differently depending on the emotion.

The importance of studying joint attention is highlighted by research on Autism Spectrum Condition (ASC), a neurodevelopmental condition characterized by deficits in social interactions and communication (Diagnostic and Statistical Manual of Mental Disorders, DSM V, 2013). Individuals with ASC present with many abnormalities related to human face processing, including a lack of focus on the eye region and a difficulty with identity recognition from faces (Itier & Batty, 2009; Tanaka & Sung, 2013 for reviews). Although those with autism do not seem to have difficulty discriminating others’ gaze directions and facial expressions per se (Harms, Martin, & Wallace, 2010; Nuske, Vivanti, & Dissanayake, 2014; Pelphrey, Morris, & McCarthy, 2005), they could be impaired in the interpretation of these cues as indicating others’ intentions and state of mind (Pelphrey et al., 2005). Importantly, infants later diagnosed with ASC exhibit a clinical deficit in joint attention (Dawson et al., 2004). This deficit has been linked to later impairment in the ability to understand others’ mental states or theory of mind (ToM), making joint attention an important building block in the normal development of ToM and social cognition (Baron-Cohen, 1995). A full understanding of these mechanisms is necessary to develop effective and targeted interventions to alleviate the social deficits of individuals with ASC. However, the behavioral and neural mechanisms underlying this joint attention deficit are currently unclear. Specifically, studies that have used the GOE as an experimental proxy for joint attention have reported mixed results with most failing to show a clear GOE deficit in participants with ASC (see Nation & Penny, 2008 for a review). These null findings might be attributed, in part, to the high heterogeneity of ASC, and GOE impairments might be typical of only some subtypes of ASC.

Autistic-like traits exist in the general population as a continuum and can be indexed using the Autism-Spectrum Quotient (AQ) questionnaire (Baron-Cohen et al., 2001). The AQ test is composed of five subscales, each indexing a domain of behavioral particularity in autism (increased attention to details, decreased social skills, imagination, communication and ability to switch attention). Studying the links between the GOE and these specific behavioural domains in the general population could provide new insight into the joint attention deficits seen in ASC. In addition to the behavioural response (GOE), monitoring ERP components associated with attention orienting and exploring their relationship to autistic traits might also provide additional hints regarding the neural processes underlying joint attention and the way in which they break down in ASC.

To the best of our knowledge, only one study so far has reported a negative correlation between AQ scores and the magnitude of the GOE (Bayliss, Di Pellegrino and Tipper, 2005), indicating that the more an individual exhibits autistic traits, the less she orients attention towards gaze direction. That study, however, only tested neutral faces and it remains unknown whether the GOE could be modulated by facial expressions differentially depending on individuals’ autistic traits. This question is pertinent given that the emotional component of joint attention appears important for evaluating the stimuli present in the environment and for understanding others’ mental states (Bayliss et al., 2007; Mundy & Sigman, 1989; Shamay-Tsoory, Tibi-Elhanany & Aharon-Peretz, 2007). Perhaps individuals with ASC are able to orient their attention to others’ gaze, but have difficulty modulating their gaze-oriented attention with facial expression, which is critical in social contexts. In accordance with this idea, Uono, Sato and Toichi (2009) showed that, in contrast to typical individuals, those with ASC do not exhibit a larger GOE for fearful than neutral faces. To orient their attention, people with ASC might thus not integrate gaze and emotion cues to the same extent as neurotypicals, which could be linked to their deficits in ToM and social skills.

Here, we investigated further the relationship between AQ score and the GOE in the general population, and whether it varied with the facial expression of the cue (fearful or happy). We also investigated whether the ERP correlates of attention to gaze reviewed above were differentially modulated by gaze and emotion cues depending on autistic traits. Finally, we used inverted faces to determine whether the larger GOE for fearful relative to happy faces could be due to a difference in the type of processing engaged by the two emotions. Configural processing is disrupted by face inversion (e.g. Tanaka & Farah, 1993; Rossion, 2009), which also hinders emotion recognition (e.g., Derntl et al., 2009) and decreases the GOE (Langton & Bruce, 1999; Kingstone et al., 2000; Graham et al., 2010). However, some studies found that the recognition of fear is more impaired by inversion than the recognition of happiness (e.g., McKelvie et al., 1995; Prkachin et al., 2003), suggesting that the processing of fearful faces may be more configural than the processing of happy faces, which could impact the GOE. Based on previous literature, we expected a larger GOE for fearful than happy expressions, and a general decrease in GOE with inversion (Langton & Bruce, 1999; Kingstone et al., 2000; Graham et al., 2010). However, if the two emotions were processed differently, we expected a larger impact of inversion on the GOE for the emotion processed most configurally (fear). Lastly, we expected a negative correlation between the AQ score and the overall GOE (Bayliss et al., 2005), and based on Uono et al. (2009), a stronger negative correlation between the AQ score and the GOE for fearful compared to happy faces. For ERPs, we expected to reproduce Lassalle & Itier (2013) results with a congruency effect for P1 and the presence of EDAN and ADAN. Given the lack of clear emotion effect on these ERPs in that study, we did not expect them to be influenced by emotion in the present study. 
However, we hoped to see overall correlations between the ERP components and AQ scores, reflecting an abnormal processing of gaze cues in participants with high autistic traits.

2. METHODS

2.1. Participants

Three hundred and forty-six (346) Math students from the University of Waterloo (UW) were pre-screened based on the completion of three questionnaires for which they received $5 and a chocolate bar.

The first questionnaire assessed emotion recognition. Eight faces (four females) from the MacBrain Face Stimulus set¹ (Tottenham et al., 2009), each displaying fearful, surprised, angry, happy, and neutral expressions, were presented printed on paper². Participants had to recognize the five presented emotions above chance level to rule out any emotion recognition impairment.

The second questionnaire assessed participants’ trait anxiety using the State-Trait Inventory for Cognitive and Somatic Anxiety questionnaire (STICSA; Ree, French, Macleod & Locke, 2008). Only students with scores below the high trait anxiety score of 43³ were selected, as high trait anxiety affects the emotional modulation of the GOE (e.g., Mathews et al., 2003) and we aimed to replicate previous studies showing an emotional modulation of gaze-oriented attention in non-anxious participants (e.g., Lassalle & Itier, 2013).

The third questionnaire, the Autism-spectrum Quotient test (AQ; Baron-Cohen et al., 2001), assessed participants’ autistic traits (see the “AQ calculation and correlations” section for details). A score of 26 indicates a clinical diagnosis of ASC in 83% of the respondents (Woodbury-Smith et al., 2005). Here, the AQ score was used to assess its possible effect on the GOE (see Data Analysis section). Recruitment in the Faculty of Mathematics ensured finding individuals with high autistic traits but biased the gender ratio toward males (Hango, 2013).

To be further eligible for the EEG experiment, participants had to be free of neurological or psychiatric illness. Of the 346 math students tested, 224 were eligible but 78 ended up participating in the EEG study. Ten participants were rejected, one for attempting to participate twice and scoring inconsistently on the AQ questionnaire, two for uncertain scores on the AQ (questionnaires with missing values or unclear responses) and seven because of too few trials per condition for the P1 ERP component after artifact rejection (<40 trials).

The final sample included 68 right-handed participants (21 females) with normal or corrected-to-normal vision, between 18 and 29 years old (mean=20.90, SD=2.07), with anxiety scores in the normal range (mean=33.51, SD=6.10) and AQ scores ranging from 7 to 37 (mean=21.31, SD=7.40). Twenty-two participants scored 26 or above on the AQ (see Table 1a for more details), although the exact number of individuals with a formally diagnosed ASC was unknown. In the remainder of the manuscript, we thus refer to individuals with high AQ rather than individuals with ASC, although we do discuss ASC. The study was approved by the University of Waterloo Research Ethics Board; participants gave informed written consent and received $20 for their participation in the EEG study.

Table 1.

Details pertaining to AQ and STICSA scores in the whole group, as well as in the low and high AQ groups.

| | a. Overall: Mean (SD) | a. Overall: Range | b. Low AQ group: Mean (SD) | b. Low AQ group: Range | c. High AQ group: Mean (SD) | c. High AQ group: Range |
|---|---|---|---|---|---|---|
| AQ | 21.38 (7.48) | 7–37 | 13.68 (2.52) | 7–18 | 29.64 (3.89) | 24–37 |
| STICSA | 33.94 (5.24) | 23–42 | 32.08 (5.22) | 24–42 | 35.64 (3.87) | 27–42 |
| Age | 20.90 (2.07) | 18–29 | 21.28 (2.54) | 18–29 | 20.44 (2.27) | 18–28 |
| N (Female) | 68 (21) | | 25 (9) | | 25 (8) | |

2.1. EEG experiment

2.1.1. Stimuli and procedure

The fearful and happy faces of the emotion recognition questionnaire were used in this computer task. Eye gaze was manipulated using Photoshop (Version 11.0). The iris was cut and pasted in the corners of the eyes to obtain leftward or rightward gaze. We applied an elliptical mask to hide hair, ears, and shoulders. Contrast and luminance were equated using the SHINE toolbox (Willenbockel et al., 2010). The face pictures subtended a visual angle of 8.02° horizontally and 12.35° vertically. They were presented at the center of the monitor, on a white background.

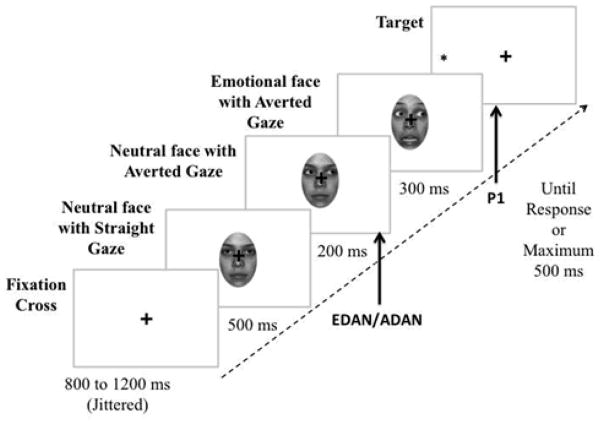

Participants sat 67cm in front of a monitor in a quiet, dimly-lit and electrically shielded room, with their head restrained by a chin-rest. Each trial started with a centered fixation cross (1.28°×1.28°), presented for a jittered amount of time (800–1200ms). A neutral face with straight gaze was then shown for 500ms, followed by the same face with a rightward or leftward gaze for 200ms. Next, the same individual expressing either happiness or fear was presented for 300ms while gaze remained averted (Figure 1). This sequential presentation induced the perception of a face averting gaze to the side and dynamically expressing an emotion. The face sequence was presented upright or inverted. A black asterisk target (.85°×.85°) then appeared on either side of the fixation cross at 7.68° eccentricity and remained on the monitor until the response or for a maximum of 500ms. The centered fixation cross remained for the entire trial time, superimposed onto the face.

Figure 1.

Example of an incongruent trial in which the cue expresses fear. The arrows indicate the stage at which the ERP components are being measured (EDAN/ADAN when the cue is presented and P1 when the target is presented).

The experiment was programmed using Experiment Builder and consisted of 10 blocks of 128 trials separated by a self-paced break, resulting in a total of 1280 trials, with 80 trials for each of the 16 basic conditions. A condition consisted of a combination of a particular Emotion (happy, fearful) with a specific Orientation (upright, inverted), a particular Gaze Direction (rightward, leftward) and a Congruency type (congruent, incongruent). There were an equal number of congruent and incongruent trials, and they were presented in a random order in each block.
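The trial arithmetic of this design can be checked with a short Python sketch. The factor and variable names are illustrative and are not taken from the original Experiment Builder script; the per-block count additionally assumes that conditions were balanced within each block, which the text does not state explicitly.

```python
from itertools import product

# Factor levels as described in the text (names are illustrative).
FACTORS = {
    "emotion": ("happy", "fearful"),
    "orientation": ("upright", "inverted"),
    "gaze_direction": ("leftward", "rightward"),
    "congruency": ("congruent", "incongruent"),
}

N_BLOCKS = 10
TRIALS_PER_BLOCK = 128

# Every combination of factor levels is one basic condition.
conditions = list(product(*FACTORS.values()))
total_trials = N_BLOCKS * TRIALS_PER_BLOCK
trials_per_condition = total_trials // len(conditions)

# Assumption: conditions are balanced within each block.
trials_per_condition_per_block = TRIALS_PER_BLOCK // len(conditions)

print(len(conditions), total_trials, trials_per_condition)
```

Because left and right gaze directions are later averaged together, each Emotion by Orientation by Congruency cell of the behavioral analysis draws on up to 160 trials before exclusions.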

Throughout the experiment, participants had to maintain fixation on the central cross and remain still. Initial fixation on the cross was ensured using an eye-tracking device (Eyelink 1000), which was calibrated between blocks. Twenty practice trials were run before the start of the first block. Participants were told gaze direction did not predict target location and were required to press, using both hands, the keyboard left key “C” when the target was on the left and the right key “M” when it was on the right, as accurately and as fast as possible.

2.1.2. Electrophysiological recordings

The EEG was recorded with an Active two Biosemi system, using a 66-channel elastic cap (extended 10/20 system) plus 3 pairs of extra electrodes, for a total of 72 recording sites. Horizontal and vertical eye movements were monitored from the outer canthi and infra orbital ridges, respectively, using two pairs of ocular sites (LO1, LO2, IO1, IO2). A third pair of electrodes was situated over the mastoids (TP9/TP10). EEG was acquired using a 516Hz sampling rate, a Common Mode Sense (CMS) active electrode and a Driven Right Leg (DRL) passive electrode serving as ground. Offline, an average reference was computed and used for data analysis.

2.2. Data analysis

2.2.1. Reaction times (RTs)

Responses were considered correct if the key matched the side of the target and if RTs were above 100ms and below 1200ms. Mean RTs for correct answers were calculated according to facial expressions and congruency, with left and right target conditions averaged together. For each subject, only RTs within 2.5 standard deviations from the mean of each condition were kept in the mean RT calculation (Van Selst & Jolicoeur, 1994). On average, 7% of trials were excluded.
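The exclusion steps above can be sketched in Python as follows. This is a minimal single-pass illustration, not the authors' script: the function names are hypothetical, the sample standard deviation is assumed, and Van Selst and Jolicoeur (1994) describe several trimming variants, so the exact procedure used may differ.

```python
from statistics import mean, stdev

RT_MIN_MS = 100    # responses at or below this are treated as anticipations
RT_MAX_MS = 1200   # responses at or above this are treated as too slow
SD_CUTOFF = 2.5    # trimming criterion in standard deviations


def trimmed_mean_rt(rts_ms, correct):
    """Mean RT of the retained correct trials for one subject and condition."""
    # Keep correct responses with RTs above 100 ms and below 1200 ms.
    valid = [rt for rt, ok in zip(rts_ms, correct)
             if ok and RT_MIN_MS < rt < RT_MAX_MS]
    if len(valid) < 2:
        return mean(valid) if valid else float("nan")
    m, sd = mean(valid), stdev(valid)
    # Assumption: one pass, dropping trials beyond 2.5 SD of the condition mean.
    kept = [rt for rt in valid if abs(rt - m) <= SD_CUTOFF * sd]
    return mean(kept)


def gaze_orienting_effect(mean_rt_incongruent, mean_rt_congruent):
    """GOE = RT(incongruent) - RT(congruent); positive values indicate cueing."""
    return mean_rt_incongruent - mean_rt_congruent
```

Applied per subject and per condition, `trimmed_mean_rt` yields the cell means entered in the ANOVA, and `gaze_orienting_effect` yields the GOE used in the correlations.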

Mean RTs were analyzed using a 2 (Emotions: fearful, happy) by 2 (Congruency: congruent, incongruent) by 2(Orientation: upright, inverted) repeated measures Analysis Of Variance (ANOVA). When the Emotion by Congruency interaction was significant, further analyses were conducted separately for congruent and incongruent trials, using the factors Emotion and Orientation.

2.2.2. ERP to targets

ERPs were time-locked to target onset (−100ms to +500ms). Based on previous literature and data inspection, we selected PO7/PO8 and O1/O2 as the electrodes of interest for P1 analysis and only the side contralateral to the target was analyzed.

P1 peak was measured between 70 and 130ms after target onset, using an automated procedure. Individual data were then inspected to check that the correct peak was measured and manual peak measures were performed if necessary. P1 amplitudes and latencies were analyzed using a 2(Emotions: fear, happy) by 2(Congruency: congruent, incongruent) by 2(Orientations: upright, inverted) by 2(Electrodes: Parietal-Occipital or Occipital) by 2(Hemisphere: right, left) repeated measures ANOVA.
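The automated peak measurement can be illustrated with the following Python sketch, which picks the most positive sample in the 70 to 130ms window. The function name and the synthetic waveform are assumptions made for illustration; the software actually used for peak detection is not specified in the text.

```python
import math


def p1_peak(times_ms, amplitudes_uv, window=(70, 130)):
    """Return (latency_ms, amplitude_uv) of the most positive sample in window."""
    lo, hi = window
    in_window = [(t, amp) for t, amp in zip(times_ms, amplitudes_uv)
                 if lo <= t <= hi]
    if not in_window:
        raise ValueError("no samples fall inside the search window")
    # max() with a key returns the first sample reaching the largest amplitude.
    return max(in_window, key=lambda pair: pair[1])


# Synthetic target-locked waveform (-100 to +500 ms): a positive deflection
# centred on 100 ms followed by a larger, later positivity centred on 300 ms.
times = list(range(-100, 501, 2))
waveform = [5.0 * math.exp(-((t - 100) / 20) ** 2)
            + 8.0 * math.exp(-((t - 300) / 30) ** 2) for t in times]

latency, amplitude = p1_peak(times, waveform)
print(latency, round(amplitude, 2))
```

Restricting the search to the window keeps the later, larger positivity from being picked up as P1, which is also why a visual check of each individual waveform remains useful.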

2.2.3. ERPs to gaze cue

ERPs were time-locked to the gaze shift (from −100ms to +500ms). In accordance with previous studies (e.g., Holmes et al., 2010; Lassalle & Itier, 2013) and after data inspection, EDAN was measured at posterior electrodes (P7, P8, PO7, PO8) between 200 and 300ms after cue onset (i.e., between 0 and 100ms after emotion onset) while the ADAN component was measured at anterior electrodes (F5, F6, F7, F8, FC5, FC6, FT7, FT8) between 300 and 500ms after cue onset (i.e., between 100 and 300ms after emotion onset). For each component, the mean amplitude across the defined time window was averaged across the electrodes for a given hemisphere.

For each hemisphere we tested whether amplitudes were more negative for gaze directed toward the contralateral side than for gaze directed toward the ipsilateral side, indicating the presence of EDAN or ADAN components (e.g., Holmes et al., 2010). Mean amplitudes for the ipsilateral and contralateral conditions were calculated for each emotion and hemisphere and analyzed using a 2(Emotion) by 2(Gaze laterality: contralateral, ipsilateral) by 2(Orientation) by 2(Hemisphere) repeated measures ANOVA.
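The window-and-electrode averaging described above can be sketched in Python as follows. The data layout (one list of amplitudes per electrode) and the function names are assumptions made for illustration, as are the inclusive window boundaries; electrodes are assigned to hemispheres by the 10/20 convention (odd numbers left, even numbers right).

```python
from statistics import mean

# Time windows (ms after gaze-cue onset) and electrode groups from the text.
WINDOWS = {"EDAN": (200, 300), "ADAN": (300, 500)}
ELECTRODES = {
    "EDAN": {"left": ("P7", "PO7"), "right": ("P8", "PO8")},
    "ADAN": {"left": ("F5", "F7", "FC5", "FT7"),
             "right": ("F6", "F8", "FC6", "FT8")},
}


def window_mean(times_ms, erp_by_electrode, component, hemisphere):
    """Mean amplitude over the component window, averaged across electrodes."""
    lo, hi = WINDOWS[component]
    per_electrode = []
    for name in ELECTRODES[component][hemisphere]:
        samples = [v for t, v in zip(times_ms, erp_by_electrode[name])
                   if lo <= t <= hi]
        per_electrode.append(mean(samples))
    return mean(per_electrode)


def laterality_effect(times_ms, erp_gaze_left, erp_gaze_right,
                      component, hemisphere):
    """Ipsilateral minus contralateral mean amplitude for one hemisphere.

    A positive value means the contralateral condition was more negative,
    which is the signature of EDAN/ADAN.
    """
    # For the left hemisphere, rightward gaze is the contralateral condition.
    if hemisphere == "left":
        contra, ipsi = erp_gaze_right, erp_gaze_left
    else:
        contra, ipsi = erp_gaze_left, erp_gaze_right
    return (window_mean(times_ms, ipsi, component, hemisphere)
            - window_mean(times_ms, contra, component, hemisphere))
```

Computing the effect as ipsilateral minus contralateral matches the definition of the laterality effect used for the correlations with AQ scores.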

For all analyses, the significance level was set at α = .05. Greenhouse-Geisser correction for sphericity was applied when necessary and Bonferroni corrections were used for multiple comparisons.

2.2.4. AQ calculation and correlations

The AQ is a 50-item questionnaire comprising five subscales assessing i) social skills, ii) attention to details, iii) attention switching, iv) communication, and v) imagination. For each subscale, participants were presented with 10 statements and indicated whether they definitely agreed, slightly agreed, slightly disagreed, or definitely disagreed. AQ scores were computed using the two-point scoring method as used in Baron-Cohen et al. (2001): half of the statements received a 1 for “agree” answers and the other half received a 1 for “disagree” answers, regardless of whether it was “slightly” or “definitely”. High AQ scores indicate a high number of autistic traits, such as high attention to details, low social skills, limited communicative and imaginative abilities, and difficulty with attention switching.
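The two-point scoring rule can be sketched in Python as follows. The function name and data layout are hypothetical, and the scoring key in the example is a made-up four-item toy: the real assignment of items to "agree" or "disagree" scoring must be taken from the published questionnaire and is not reproduced here.

```python
AGREE = {"definitely agree", "slightly agree"}
DISAGREE = {"definitely disagree", "slightly disagree"}


def score_aq(responses, agree_keyed_items):
    """Two-point AQ scoring.

    responses: dict mapping item number -> one of the four response labels.
    agree_keyed_items: item numbers scored 1 for an "agree" response; every
        other item is scored 1 for a "disagree" response.
    """
    total = 0
    for item, answer in responses.items():
        if answer not in AGREE | DISAGREE:
            # Missing or unclear answers make the score uncertain.
            raise ValueError(f"item {item}: unrecognised response {answer!r}")
        keyed = AGREE if item in agree_keyed_items else DISAGREE
        if answer in keyed:
            total += 1
    return total


# Toy example with a made-up key (NOT the real AQ key).
toy_key = {1, 3}
toy_responses = {
    1: "slightly agree",        # agree-keyed, agreed      -> 1
    2: "definitely disagree",   # disagree-keyed, disagreed -> 1
    3: "definitely disagree",   # agree-keyed, disagreed    -> 0
    4: "slightly agree",        # disagree-keyed, agreed    -> 0
}
print(score_aq(toy_responses, toy_key))
```

A subscale score is obtained the same way by passing only the 10 items of that subscale, and the overall score is the sum of the five subscale scores.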

Pearson correlations were performed between AQ scores and i) the GOE (for happy upright, happy inverted, fearful upright, and fearful inverted separately) as well as ii) the ERP components (congruency effect on P1 and laterality effect on EDAN/ADAN). For each subject and emotion, the congruency effect on P1 was calculated as the difference in P1 amplitude between congruent and incongruent conditions, and the laterality effect on EDAN/ADAN as the difference in amplitude between ipsilateral and contralateral conditions. Correlations were done using the overall AQ score (which is the sum of the 5 subscale scores) and each subscale score. Only significant effects are reported unless otherwise stated.

We also computed Pearson correlations between STICSA scores and i) the GOE and ii) AQ scores, as the enhancement of the GOE for fear relative to neutral/happy faces has been linked to anxiety levels (e.g., Fox et al., 2007; Mathews et al., 2003; Putman et al., 2006), and anxiety is often comorbid with autism (Van Steensel et al., 2011). Finally, we computed partial correlations between AQ scores and GOE, controlling for STICSA scores.
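For readers less familiar with partial correlations, the following Python sketch shows the standard first-order formula, in which the correlation between two variables (here, AQ and GOE) is adjusted for their shared correlation with a third (here, STICSA). The function names are illustrative, the statistical software actually used is not specified in the text, and significance testing is not covered.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def partial_r(x, y, z):
    """First-order partial correlation of x and y, controlling for z."""
    rxy, rxz, ryz = pearson_r(x, y), pearson_r(x, z), pearson_r(y, z)
    return (rxy - rxz * ryz) / sqrt((1 - rxz ** 2) * (1 - ryz ** 2))
```

When the control variable is uncorrelated with both variables of interest, the partial correlation equals the plain Pearson correlation. With a weak correlation between the control variable and the others, the two values stay close.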

3. RESULTS

3.1. Behavioral results

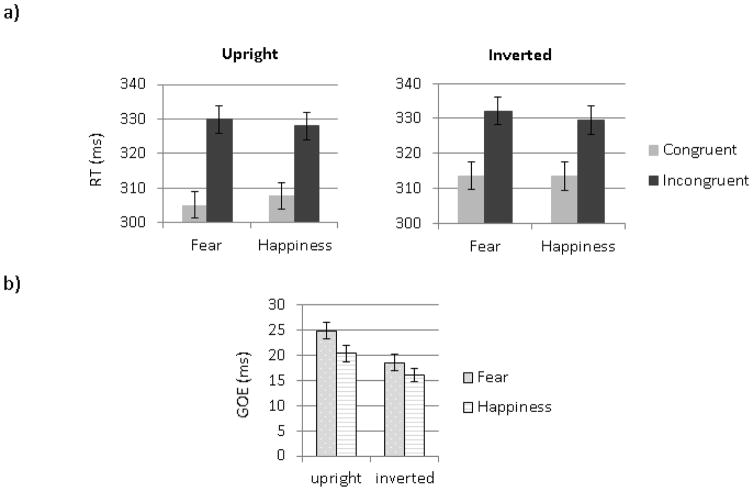

A main effect of Orientation (F (1, 67) =75.85, MSE=36.20, p< .01, ηp2= .53) was due to longer RTs for inverted than upright faces. The main effect of Congruency (F (1, 67) =235.59, MSE=228.92, p< .01, ηp2= .78) reflected faster responses to congruent than to incongruent targets (Figure 2a). This congruency effect was larger for upright than inverted faces (Figure 2b), as revealed by a significant Orientation by Congruency interaction (F (1, 67) =22.59, MSE= 42.79, p< .01, ηp2= .25). An Emotion by Congruency interaction (F (1, 67) =14.07, MSE=30.16, p< .01, ηp2= .17) was due to a larger GOE for fearful than happy faces (Figure 2b). A separate analysis for the congruent and incongruent conditions showed a significant effect of Emotion in both conditions (F (1, 67)= 4.53, MSE=24.11, p= .04, ηp2=.06 and F (1, 67)= 11.80, MSE=29.58, p<.01, ηp2=.15, respectively). This Emotion effect was due to slightly faster RTs for fearful than happy faces in the congruent condition and to slightly longer RTs for fearful than happy faces in the incongruent condition, making the incongruent-congruent difference in RT larger for fearful than happy faces. The three-way interaction of Orientation by Congruency by Emotion was not significant (F=1.61, p=.21).
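The reported effect sizes can be cross-checked from the F ratios, since partial eta squared equals F·df_effect / (F·df_effect + df_error). The short Python sketch below applies this identity to the four effects reported above with 1 and 67 degrees of freedom; the function name is illustrative.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (F value, reported partial eta squared) for effects with df = (1, 67).
reported = {
    "Orientation": (75.85, 0.53),
    "Congruency": (235.59, 0.78),
    "Orientation x Congruency": (22.59, 0.25),
    "Emotion x Congruency": (14.07, 0.17),
}

computed = {}
for effect, (f_value, eta_reported) in reported.items():
    computed[effect] = partial_eta_squared(f_value, 1, 67)
    print(f"{effect}: computed {computed[effect]:.2f}, "
          f"reported {eta_reported:.2f}")
```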

Figure 2.

Behavioral results. (a) Mean RTs to face cues presented in the upright condition (left panel) and in the inverted condition (right panel). (b) Mean GOE for fearful and happy faces (GOE = RTinc − RTcong). Error bars represent SE.

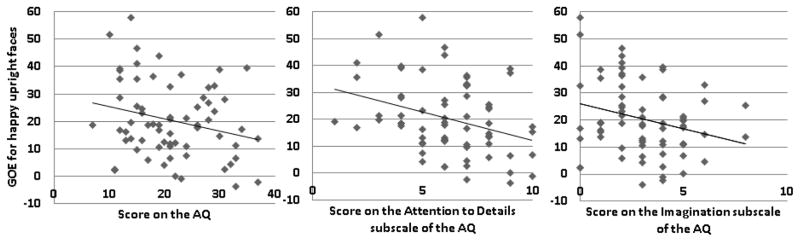

There was a significant negative correlation between the GOE for happy upright faces and the overall AQ (r=−.25, p=.04), the Attention to Detail subscale (r=−.33, p<.01) and the Imagination subscale (r=−.25, p=.04). Thus the higher the autistic traits (particularly strong attention to detail and weak imagination), the smaller the GOE to happy upright faces (Table 2, Figure 3). No correlations were found for fearful faces. AQ and STICSA scores were correlated (r=.25, p=.04) such that participants with many autistic traits also tended to be more anxious, in accordance with previous research (Van Steensel et al., 2011). However, the STICSA scores did not correlate with any of the GOEs (fear upright, fear inverted, happy upright, happy inverted).

Table 2.

Correlations between the AQ scores (overall AQ and scores on the five subscales) and (i) the GOE (for each condition and orientation), (ii) the congruency effect on P1, and (iii) the laterality effects for ADAN and EDAN.

| Condition | overall AQ | Social Skills | Imagination | Attention to Details | Attention Switching | Communication | STICSA score |
|---|---|---|---|---|---|---|---|
| GOE Happy Up | r=−.25* | r=−.10 | r=−.25* | r=−.33** | r=−.09 | r=−.11 | r=−.08 |
| GOE Happy Inv | r=−.19 | r=−.17 | r=−.22 | r=−.14 | r=−.05 | r=−.08 | r=−.08 |
| GOE Fear Up | r=−.10 | r=−.01 | r=−.14 | r=−.14 | r=−.03 | r=−.04 | r=.08 |
| GOE Fear Inv | r=−.05 | r=.06 | r=−.04 | r=−.19 | r=−.04 | r=−.03 | r=.06 |
| congruency effect on P1 | r=−.18 | r=−.09 | r=−.19 | r=−.13 | r=−.10 | r=−.13 | |
| ADAN | r=−.18 | r=−.12 | r=−.17 | r=−.06 | r=−.11 | r=−.16 | |
| EDAN | r=.01 | r=.00 | r=.00 | r=−.13 | r=−.10 | r=−.02 | |
| STICSA Score | r=.25* | | | | | | |

Note: ** reflects significance <.01, * reflects significance <.05.

Figure 3.

Correlations between the GOE for happy upright faces (on the y-axis) and (a) total AQ scores (on the x-axis), (b) AQ Imagination subscale scores and (c) AQ Attention to Detail subscale scores.

Given that AQ and STICSA scores were correlated, we also calculated the partial correlation between the AQ scores and the GOEs controlling for participants’ anxiety (results reported in Table 3)⁴. A trend remained toward a significant partial correlation between the GOE to happy upright faces and the overall AQ score (r=−.24, p=.06). The relationships between the GOE for happy upright faces and the Attention to Detail subscale (r=−.33, p<.01) as well as the Imagination subscale (r=−.24, p=.05) both remained significant. In addition, a significant negative partial correlation emerged between the GOE to happy inverted faces and the score on the Imagination subscale (r=−.24, p=.05).

Table 3.

Partial correlations (controlling for anxiety scores) between the AQ scores (overall AQ and scores on the five subscales) and (i) the GOE (for each condition and orientation), (ii) the congruency effect on P1, (iii) the laterality effects for ADAN and EDAN.

| Condition | overall AQ | Social Skills | Imagination | Attention to Details | Attention Switching | Communication |
|---|---|---|---|---|---|---|
| GOE Happy Up | r=−.23~ | r=−.08 | r=−.24* | r=−.33** | r=−.08 | r=−.10 |
| GOE Happy Inv | r=−.21 | r=−.20 | r=−.24* | r=−.14 | r=−.05 | r=−.09 |
| GOE Fear Up | r=−.10 | r=−.00 | r=−.14 | r=−.14 | r=−.03 | r=−.04 |
| GOE Fear Inv | r=−.07 | r=.04 | r=−.06 | r=−.19 | r=−.04 | r=.02 |
| congruency effect on P1 | r=−.15 | r=−.04 | r=−.16 | r=−.13 | r=−.10 | r=−.11 |
| ADAN | r=−.17 | r=−.10 | r=−.16 | r=−.07 | r=−.10 | r=−.15 |
| EDAN | r=−.01 | r=−.02 | r=−.01 | r=.13 | r=−.11 | r=−.03 |

Note: ** reflects significance <.01, * reflects significance <.05 and ~ indicates a trend (<.07).

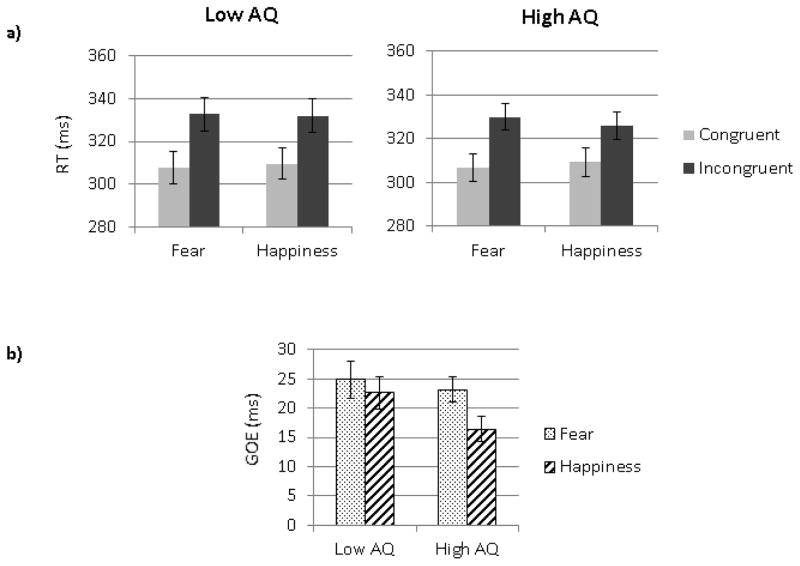

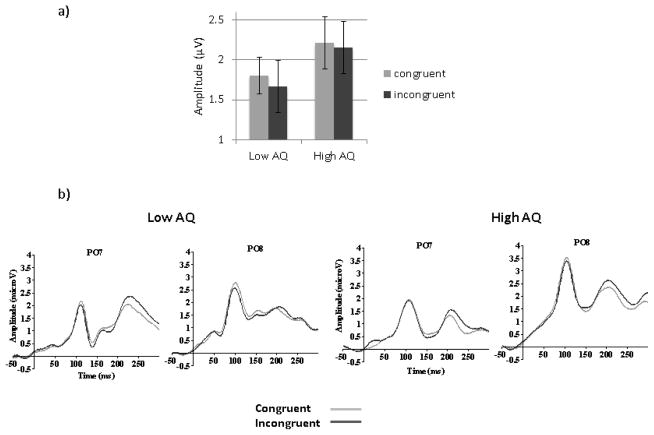

To allow a closer comparison with Bayliss et al. (2005), we also analyzed the RT data by grouping the 25 participants with the lowest AQ scores (9 females) and the 25 participants with the highest AQ scores (8 females). The Low AQ group (Age: mean=21.28 [SD=2.54]) and the High AQ group (Age: mean=20.44 [SD=2.27]) were contrasted using a 2 (Group) by 2 (Emotion) by 2 (Congruency) by 2 (Orientation) mixed ANOVA on RTs. This analysis revealed a main effect of Orientation (F(1, 48)=62.58, MSE=37.45, p<.01, ηp2=.57), with faster responses for upright than inverted faces, and a main effect of Congruency (F(1, 48)=195.61, MSE=242.29, p<.01, ηp2=.80), with faster responses to congruent than incongruent trials. The interaction between Emotion and Congruency was significant (F(1, 48)=17.55, MSE=28.80, p<.01, ηp2=.27) but was qualified by the three-way interaction between Group, Congruency and Emotion (F(1, 48)=4.36, MSE=28.80, p=.04, ηp2=.08). Each group was thus analyzed separately. An interaction between Congruency and Emotion was found in the High AQ group (F(1, 24)=40.53, MSE=14.00, p=.01, ηp2=.63), characterized by a larger GOE for fearful than for happy faces, but not in the Low AQ group (F=1.46, p=.24), for which the GOE was similar between emotions, as seen in Figure 4. The larger GOE for fearful than happy faces in the High AQ group was due both to faster RTs for fearful than happy faces in the congruent condition (F(1, 24)=9.52, MSE=9.52, p<.01, ηp2=.28) and to slower RTs for fearful than happy faces in the incongruent condition (F(1, 24)=18.11, MSE=24.25, p<.01, ηp2=.43). A follow-up ANOVA revealed that the GOE to fearful faces did not differ between groups (F(1, 48)=.24, MSE=54.29, p=.63, ηp2<.01), while the GOE to happy faces was smaller in the High AQ than in the Low AQ group (F(1, 48)=4.30, MSE=225.65, p=.04, ηp2=.08). The four-way interaction of Group by Orientation by Congruency by Emotion was not significant.
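The extreme-groups step described above can be sketched as follows. This is an illustration only: it assumes the GOE is computed per participant as the mean incongruent RT minus the mean congruent RT, and the participant records, field names and RT values are hypothetical rather than taken from the study.

```python
from statistics import mean


def goe(rt_incongruent, rt_congruent):
    """Gaze Orienting Effect in ms (assumed here: incongruent minus congruent mean RT)."""
    return mean(rt_incongruent) - mean(rt_congruent)


def extreme_groups(participants, n_per_group):
    """Return the n lowest-AQ and the n highest-AQ participants as two lists."""
    if 2 * n_per_group > len(participants):
        raise ValueError("groups would overlap: too few participants")
    ranked = sorted(participants, key=lambda p: p["aq"])
    return ranked[:n_per_group], ranked[-n_per_group:]


# Hypothetical records; the study split a larger sample into two groups of 25.
participants = [
    {"id": "p4", "aq": 19, "rt_cong": [300, 296], "rt_incong": [318, 314]},
    {"id": "p1", "aq": 8, "rt_cong": [291, 289], "rt_incong": [313, 311]},
    {"id": "p6", "aq": 30, "rt_cong": [305, 303], "rt_incong": [316, 312]},
    {"id": "p2", "aq": 12, "rt_cong": [284, 286], "rt_incong": [305, 309]},
    {"id": "p5", "aq": 24, "rt_cong": [298, 302], "rt_incong": [311, 313]},
    {"id": "p3", "aq": 15, "rt_cong": [295, 293], "rt_incong": [312, 316]},
]

low, high = extreme_groups(participants, n_per_group=2)
for label, group in (("Low AQ", low), ("High AQ", high)):
    group_goe = mean(goe(p["rt_incong"], p["rt_cong"]) for p in group)
    print(f"{label}: mean GOE = {group_goe:.1f} ms")
```

Because the two groups are selected from the tails of the AQ distribution rather than assigned at random, any variable that covaries with AQ (such as anxiety) will also tend to differ between them.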

Figure 4.

(a) Mean RTs to face cues presented in the congruent and incongruent conditions in the low AQ group and the high AQ group. (b) Mean GOE for fearful and happy faces in the two groups. Error bars represent SE.

As indicated by Table 1b, the low and high AQ groups differed on AQ scores (t(48)=−17.34, p<.01) and anxiety scores (t(48)=2.74, p<.01), but not on age (t(48)=1.23, p=.22). Thus, the high AQ group was more anxious than the low AQ group, which was to be expected given the overall correlation we observed between STICSA and AQ scores (see footnote 5). This limitation is addressed in more detail in the discussion.

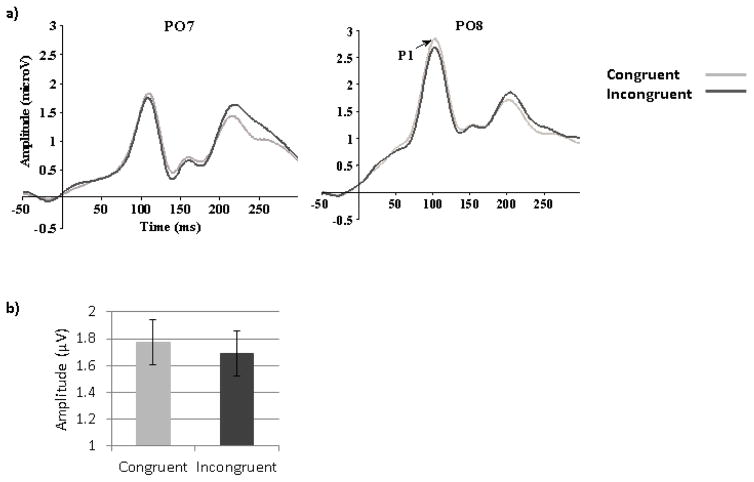

3.2. ERP to the target (P1)

The average number of trials per condition for P1 component was 67.56 (SD=.56). Analysis of P1 amplitude showed the expected main effect of Congruency (F (1, 67) =7.48, MSE= .14, p<.01, ηp2=.10) with larger amplitude in the congruent than in the incongruent condition (Figure 5). In addition there was a main effect of Hemisphere (F (1, 67) =12.07, MSE=26.57, p<.01, ηp2= .15) with larger amplitudes in the right than in the left hemisphere, as well as a main effect of Electrode (F (1,67)=6.00, MSE=11.21, p=.02, ηp2= .08) with larger amplitudes at Occipital than at Parieto-Occipital sites. In addition, the interaction between Hemisphere and Congruency was significant (F(1,67)=3.84, MSE=.36, p=.05, ηp2= .05) such that the Congruency effect was significant in the right hemisphere (F(1,67)=10.88, MSE=.26, p=.03, ηp2= .14) but interacted with Electrode in the left hemisphere (F(1,67)=3.88, MSE=.10, p=.05, ηp2= .06) as it was only present at PO7.
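As a reading aid for the effect sizes reported alongside each F test, the sketch below applies the standard relation between an F ratio, its degrees of freedom and partial eta squared. It assumes the reported ηp2 values follow this textbook formula; the function name is illustrative.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Main effect of Congruency on P1 amplitude, F(1, 67) = 7.48
print(f"{partial_eta_squared(7.48, 1, 67):.2f}")   # 0.10, as reported

# Main effect of Hemisphere on P1 amplitude, F(1, 67) = 12.07
print(f"{partial_eta_squared(12.07, 1, 67):.2f}")  # 0.15, as reported
```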

Figure 5.

Target-triggered ERPs. (a) ERP waveforms featuring the P1 component at PO7 (left hemisphere, left panel) and PO8 (right hemisphere, right panel) for the congruent and the incongruent conditions (happy and fearful faces averaged together). (b) Mean P1 amplitudes to congruent and incongruent targets (averaged across happy and fearful faces).

No significant correlations (Pearson or partial) with AQ scores were found for the P1 congruency effect when the whole group was used (Tables 2 and 3). When the High and Low AQ groups were contrasted using a 2 (Group) by 2 (Emotion) by 2 (Congruency) by 2 (Orientation) by 2 (Hemisphere) by 2 (Electrode) mixed ANOVA, a main effect of Congruency was also found (F(1, 48)=4.28, MSE=2.08, p=.02, ηp2=.11). Although no Group by Congruency interaction was found, planned analyses for each group revealed that the effect of Congruency was significant in the Low AQ group (F(1, 24)=4.99, MSE=.74, p=.04, ηp2=.22) but not in the High AQ group (F(1, 24)=1.22, MSE=.62, p=.28, ηp2=.05), as seen in Figure 6.

Figure 6.

(a) Mean P1 amplitudes to congruent and incongruent targets (averaged across happy and fearful faces) in low and high AQ participants. Error bars represent SE. (b) P1 waveforms at PO7 and PO8 for the congruent and the incongruent conditions (happy and fearful faces averaged together), for high and low AQ groups.

3.3. ERP to the gaze cue

ERPs were time locked to the onset of gaze shift and thus included the onset of facial expression 200ms later. The average number of trials per condition for EDAN/ADAN components was 131.98 (SD=1.01).

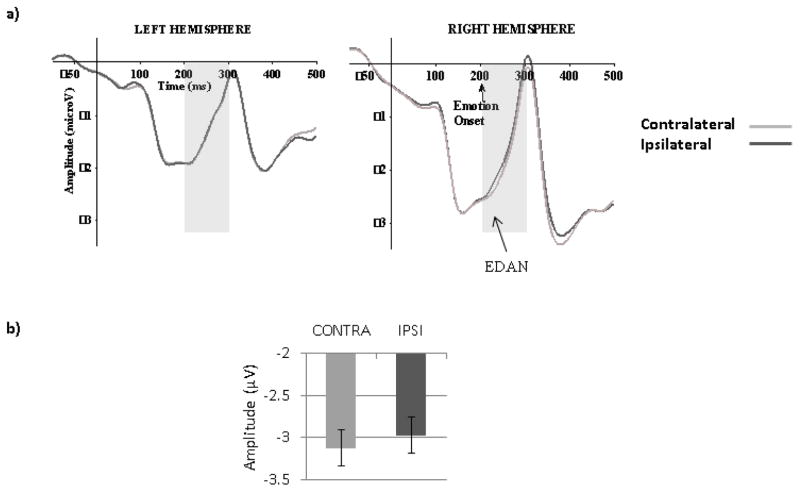

3.3.1. Early Directing Attention Negativity (EDAN)

A main effect of Hemisphere (F (1, 67) =6.32, MSE=26.39, p=.01, ηp2=.09) was due to more negative amplitudes in the right than the left hemisphere. As expected, there was a main effect of Gaze laterality (F (1, 67) =7.70, MSE=.86, p< .01, ηp2= .12) such that amplitudes were more negative for contralateral than ipsilateral gaze (Figure 7), indicative of EDAN. No other significant effects were found.

Figure 7.

Early Directing Attention Negativity (EDAN). (a) ERP waveforms for contralateral (contra) and ipsilateral (ipsi) gaze cues (averaged across emotions, orientations and electrodes: P7 and PO7 for the left hemisphere, P8 and PO8 for the right hemisphere). The grey zone indicates the time limits of the analysis (200–300ms). (b) Mean amplitudes for contra and ipsi gaze directions, between 200 and 300ms, averaged across emotions, hemispheres, electrodes and orientations.
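The laterality measure behind EDAN can be illustrated with a short sketch: mean amplitude in the 200–300ms window is computed for gaze directed contralaterally and ipsilaterally to each hemisphere, and the two are compared. The code assumes the usual convention that rightward gaze is contralateral for left-hemisphere electrodes and leftward gaze is contralateral for right-hemisphere electrodes. The data layout, names and amplitude values are hypothetical toy inputs, not the recorded ERPs.

```python
from statistics import mean


def window_mean(waveform, times_ms, start_ms, end_ms):
    """Mean amplitude of the samples whose time stamp lies in [start_ms, end_ms]."""
    values = [v for v, t in zip(waveform, times_ms) if start_ms <= t <= end_ms]
    return sum(values) / len(values)


def laterality_effect(erp, times_ms, start_ms=200, end_ms=300):
    """Contralateral minus ipsilateral mean amplitude, averaged over hemispheres.

    erp[hemisphere][gaze] holds one waveform (in microvolts) per combination of
    hemisphere ("left"/"right") and gaze direction ("gaze_left"/"gaze_right").
    """
    contra = [
        window_mean(erp["left"]["gaze_right"], times_ms, start_ms, end_ms),
        window_mean(erp["right"]["gaze_left"], times_ms, start_ms, end_ms),
    ]
    ipsi = [
        window_mean(erp["left"]["gaze_left"], times_ms, start_ms, end_ms),
        window_mean(erp["right"]["gaze_right"], times_ms, start_ms, end_ms),
    ]
    return mean(contra) - mean(ipsi)


# Toy waveforms sampled every 50 ms (hypothetical values in microvolts).
times_ms = list(range(0, 501, 50))
flat = lambda level: [level] * len(times_ms)
erp = {
    "left": {"gaze_right": flat(-1.5), "gaze_left": flat(-0.5)},
    "right": {"gaze_left": flat(-1.5), "gaze_right": flat(-0.5)},
}

# A negative value means more negative amplitudes for contralateral gaze.
print(laterality_effect(erp, times_ms))
```

Calling the same function with `start_ms=300, end_ms=500` on frontal-site waveforms would give the corresponding ADAN measure under the same assumptions.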

No significant (Pearson or partial) correlations with AQ scores were found with the whole group (Tables 2 and 3). When the Low and High AQ groups were analyzed using a 2 (Group) by 2 (Orientation) by 2 (Emotion) by 2 (Hemisphere) by 2 (Gaze laterality: contralateral, ipsilateral) mixed ANOVA, the EDAN analysis revealed a main effect of Laterality (F(1, 48)=4.53, MSE=.99, p=.04, ηp2=.09). However, planned analyses for each group revealed no effect of Laterality in either the Low (F=2.50, p=.13) or the High AQ group (F=2.09, p=.16), probably due to a lack of power.

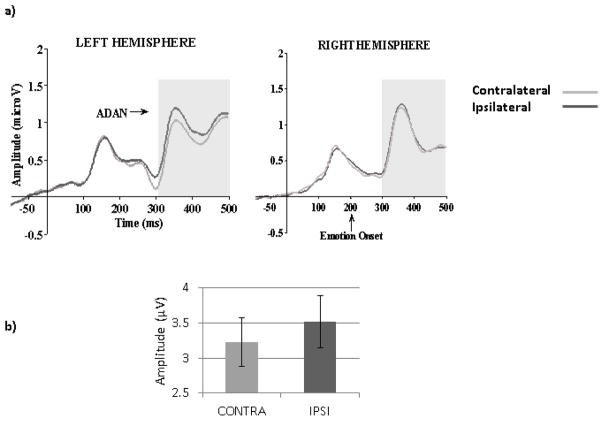

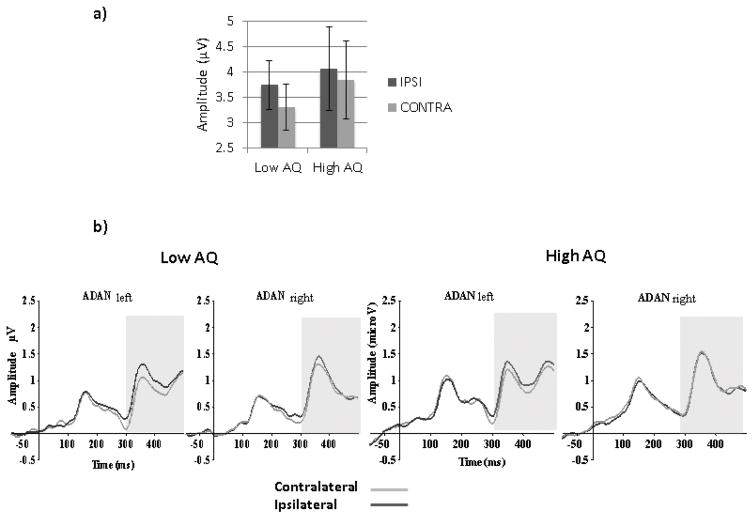

3.3.2. Anterior Directing Attention Negativity (ADAN)

A main effect of Orientation (F(1, 67)=17.35, MSE=8.52, p<.01, ηp2=.21) reflected larger amplitudes for upright than inverted faces. The Orientation by Hemisphere interaction (F(1, 67)=5.03, MSE=5.45, p=.03, ηp2=.07) revealed that the effect of Orientation was significant only in the left hemisphere (F(1, 67)=21.48, MSE=7.04, p<.01, ηp2=.24). The expected effect of Gaze Laterality was also significant (F(1, 67)=8.62, MSE=2.70, p<.01, ηp2=.11), with less positive amplitudes in the contralateral than in the ipsilateral condition, indicative of ADAN (Figure 8). The Gaze Laterality by Hemisphere interaction (F(1, 67)=3.86, MSE=2.54, p=.05, ηp2=.06) revealed that the Gaze Laterality effect was significant only in the left hemisphere (F(1, 67)=11.09, MSE=2.85, p<.01, ηp2=.14).

Figure 8.

Anterior Directing Attention Negativity (ADAN). (a) ERP waveforms for contralateral (contra) and ipsilateral (ipsi) gaze cues (averaged across emotions, orientations and electrodes: F5, F7, FC5, FT7 for the left hemisphere, F6, F8, FC6, FT8 for the right hemisphere). The grey zone marks the time limits of the analysis (300–500ms). (b) Mean amplitudes for contra and ipsi gaze directions between 300 and 500ms averaged across orientations, emotions, hemispheres and electrodes.

No significant (Pearson or partial) correlations with AQ scores were found for the ADAN laterality effect when the whole group was used (Tables 2 and 3). When the High and Low AQ groups were contrasted using a 2 (Group) by 2 (Emotion) by 2 (Gaze laterality: contralateral, ipsilateral) by 2 (Orientation) by 2 (Hemisphere) mixed ANOVA, a main effect of Laterality was found (F(1, 48)=7.79, MSE=2.71, p<.01, ηp2=.14). Although no interaction with Group was seen, planned analyses for each group revealed that the effect of Laterality was present in the Low AQ group (F(1, 24)=6.99, MSE=2.82, p=.01, ηp2=.23) but not in the High AQ group (F(1, 24)=1.63, MSE=2.61, p=.21, ηp2=.06), as seen in Figure 9.

Figure 9.

(a) Mean amplitudes for contra- and ipsilateral gaze directions between 300 and 500ms averaged across orientations, emotions, hemispheres and electrodes in low AQ and high AQ participants. Error bars represent SE. (b) Ipsi- and contralateral ERP waveforms showing ADAN component for the two AQ groups and both hemispheres.

4. DISCUSSION

We investigated the behavioral and ERP correlates of the modulation of gaze-oriented attention by fearful and happy faces using a localization task in a non-anxious sample. We also examined the relationship between these correlates and autistic traits as indexed by the AQ score. We used a dynamic sequence in which the facial expression occurred after the gaze shift, as in real life one usually orients gaze toward a stimulus before reacting to it. This sequence also ensured an identical eye aperture at gaze shift across conditions, preventing the larger eye size of fearful faces from facilitating gaze shift and thus contributing to the larger GOE observed.

4.1. Influence of facial expressions and autistic traits on the Gaze Orienting Effect

We found the traditional GOE with faster responses to congruent than incongruent trials (Driver et al., 1999; Friesen & Kingstone, 1998). We also found a smaller GOE for inverted than upright faces, in line with previous studies (e.g., Graham et al., 2010; Hori et al., 2005; Kingstone et al., 2000; Langton & Bruce, 1999). These results confirm that the efficiency of gaze cues is decreased when configural processing of faces is disrupted by inversion. The lack of orientation by congruency by emotion interaction also revealed that inversion impacted fearful and happy faces to the same extent, suggesting the two emotions were processed similarly (in this particular task), as also found by previous studies (e.g., Derntl et al., 2009; Fallshore & Bartholow, 2003).

Across our large sample, the GOE was larger for fearful than happy faces, reproducing previous findings in non-anxious individuals (e.g., Bayless et al., 2011; Neath et al., 2013). However, this emotional difference was dependent on individuals’ autistic traits. We found an inverse correlation between the size of one’s GOE for happy faces and one’s score on the AQ such that higher levels of autistic traits were associated with smaller GOEs to happy facial expressions. This finding was contrary to what we initially expected. Given the lack of enhancement of the GOE by fearful faces for autistic individuals reported by Uono and colleagues (2009), we expected an inverse relationship between autistic traits and the size of the GOE to fearful faces, not happy faces. In contrast, our result suggests that individuals with high autistic traits orient their attention less when looking at upright happy faces compared to individuals with low autistic traits. This finding was apparent in the correlation analyses with happy upright faces as well as when low AQ and high AQ groups were contrasted. Importantly, this resulted in a lack of GOE difference between fearful and happy faces in individuals with low AQ, while a clearly larger GOE for fearful than happy faces was seen in individuals with high AQ.

Although the mean anxiety scores in our low and high AQ groups were below the clinical cutoff score and thus within the normal range, individuals with high AQ were slightly more anxious than individuals in the low AQ group. This is not surprising, given that a large proportion of individuals with ASC also suffer from anxiety (Van Steensel et al., 2011). However, this raises the concern that the difference in GOE between facial expressions seen only in high AQ participants could be due to their higher anxiety rather than their higher autistic traits, given previous reports of GOE modulation by emotion in high but not in low anxious participants (e.g. Fox et al., 2007; Mathews et al., 2003). This possibility is, however, unlikely for two reasons. First, significant correlations between AQ subscores and GOE remained when STICSA scores were controlled for. Second, previous experiments showed an impact of anxiety level on the GOE to fearful faces (Fox et al., 2007; Mathews et al., 2003; Putman et al., 2006) while the AQ scores in the present experiment are correlated with the GOE to happy faces (the GOE of the two groups differs only for happy faces). Nevertheless, we acknowledge that anxiety might play some role in our analysis and that future studies should try to disentangle these factors. It would be especially important to assess social anxiety given that 17% of individuals with ASC are diagnosed with social anxiety (Van Steensel et al., 2011) and that some of the social deficits exhibited by individuals with autism appear to be mediated by their comorbid social anxiety (Kleinhans et al., 2010).

A negative correlation between AQ scores and the GOE for neutral faces was also reported by Bayliss et al. (2005), suggesting that gaze-oriented attention to neutral faces could also be sensitive to autistic traits. The lack of difference between fearful and neutral or happy faces reported previously (e.g., Holmes et al., 2006; Tipples, 2006) could thus be due, at least in part, to participants’ low autistic traits as AQ was not monitored in previous studies. However, in another study, the GOE to fearful and neutral faces did not differ in a group of individuals with Asperger syndrome with presumably high autistic traits (Uono et al., 2009). Thus, the extent to which the GOE to neutral faces can be modulated by autistic traits remains unclear.

Participants in the present study were mostly male because they were recruited in a Mathematics Department (Hango, 2013). Because males tend to have higher autistic traits than females (Baron-Cohen et al., 2001), one concern was that the correlations with AQ scores were driven by participants’ gender, with low AQ scores seen in females and high AQ scores in males. However, a nearly equal number of females was found in the low and high AQ groups (9 and 8, respectively), ruling out this possibility. Bayliss et al. (2005) showed that the GOE (to neutral faces) was larger for female than for male participants. It is thus remarkable that in our male-dominant sample, with a likely smaller overall GOE, the correlations with AQ emerged for happy faces. Although we cannot be sure that the same correlations would be seen in a female-dominant sample, we can at least be sure that the difference between our AQ groups was not due to a different gender ratio.

The result of a smaller GOE for happy faces with higher AQ scores could be due to the social interaction implied by a happy expression. It has been shown that people experiencing pleasant situations are more likely to smile when they are interacting socially than when they are alone (Jones, Collins & Hong, 1991; Kraut & Johnston, 1979). A happy face looking away might thus be interpreted as smiling at someone else rather than at something. Due to the decreased reward associated with social interaction in individuals with high AQ, they may orient their attention to a smiling face less than individuals with low AQ. This idea is in line with current research suggesting a diminished reward value of happy facial expression in individuals with ASC compared to typical individuals (Sepeta et al., 2012). A fearful expression, in contrast, reflects the presence of a danger in the environment and our results suggest that individuals are sensitive to fearful gaze regardless of their AQ, likely because of the evolutionary relevance of threat for survival.

Interestingly, we found a significant inverse correlation between the GOE for happy upright faces and the score on the Imagination subscale of the AQ. “Imagination” might be required to associate the faces presented in experimental conditions (i.e., grey-scale pictures presented on a computer monitor) with real faces sending social signals in a natural context (i.e., happy faces smiling at another, fearful faces reacting to a danger in the environment). This might be especially true for happy faces since participants were alone in a closed room.

In addition, we also found an inverse correlation between the Attention-to-detail subscale of the AQ and the GOE for happy upright faces. Attention-orienting to gaze also seems to engage configural processing to a greater extent than featural processing as the GOE is diminished with face inversion (e.g., Graham et al., 2010; Jenkins & Langton, 2003; Kingstone et al., 2000; Langton & Bruce, 1999). High AQ individuals tend to focus more on details and less on the whole, which constitutes a core feature of the weak central coherence theory of autism (Happé & Frith, 2006). In accordance with this theory, a decrease in holistic processing for faces has been reported in ASC (e.g. Wallace, Coleman, & Bailey, 2008). Thus upright faces might be processed more featurally than configurally in individuals with autism. Their increased attention to the features of the emotional faces in the present experiment could have resulted in different visual exploration strategies for happy and fearful faces presented upright. Indeed, the salient features, characteristic of fearful expressions, are the eyes (Whalen et al., 2004) while the salient feature, characteristic of happy expressions, is the mouth (Leppänen & Hietanen, 2007). Given that attention to the eye region is also essential to perform a gaze-orienting task, a tendency to process upright facial emotions based on their salient features would decrease the gaze-orienting performance for happy relative to fearful faces. However, the difference in the type of processing engaged by the two groups would not be apparent with inversion given both groups would process inverted faces based on their features. Note that this idea explains the correlation found for upright faces and the lack thereof for inverted faces but does not explain the lack of orientation by congruency by group effect when the low and high AQ groups were compared, possibly because of a lack of power.

Weigelt, Koldewyn, and Kanwisher (2012) reported that the face processing abnormalities observed in autism are more apparent when information needs to be extracted from the eye region. This idea is in line with the social motivation hypothesis of autism (Dawson, Webb, & McPartland, 2005) according to which individuals with ASC avoid any social stimuli. Recently, Tanaka and Sung (2013) have proposed that the face processing deficits seen in ASD could be due to this active avoidance of socially charged stimuli (such as eye contact) that is perceived as an invitation for social interaction (Kleinke, 1986) and triggers physiological hyperarousal as well as increased amygdala activation in participants with autism (Dalton et al., 2005; Kylliäinen & Hietanen, 2006). To avoid negative overarousal, individuals with ASC would adopt a compensatory strategy and tend to focus on facial features other than the eyes, such as the mouth (Tanaka & Sung, 2013). Because it signals a potential social interaction, a smiling face could also be associated with negative overarousal in individuals with ASC. The lack of rewarding value of a happy face and its negative overarousal could prompt individuals with ASC or high AQ to actively avoid the eye region, which would hinder gaze-oriented attention. Thus, the social motivation hypothesis could explain the reduction of gaze-oriented attention to happy faces for individuals with high AQ relative to those with low AQ. It remains to be tested, however, whether happy faces trigger negative overarousal and eye avoidance in individuals with high autistic traits, more so than fearful faces.

4.2. Influence of autistic traits and emotion cues on ERPs associated with gaze-oriented attention

P1 amplitude to targets was enhanced in the congruent condition compared to the incongruent condition, as previously shown with neutral face cues (Hietanen et al., 2008; Lassalle & Itier, 2013; Schuller & Rossion, 2001, 2004, 2005), reflecting an enhancement of the target visual processing at the cued location (Hopf & Mangun, 2000). This congruency effect did not differ between fearful and happy conditions. To our knowledge, only one study investigated the effect of the face cue emotion on this P1 congruency effect and found a larger congruency effect for targets preceded by fearful and happy faces compared to targets preceded by neutral faces (Lassalle & Itier, 2013). Although fearful and happy conditions were not directly compared in that study as they were presented in different sessions, the results suggested these emotions yielded similar congruency effects on P1, in line with the present results. Because a neutral face condition was not employed in the present experiment, we cannot claim that emotion did not have any influence. In fact, a comparison with Lassalle and Itier’s (2013) results suggests that emotional faces influenced gaze-oriented attention at target level compared to neutral faces, but similarly for fearful and happy expressions. However, it is also possible that the presentation of the facial expression was too short in the present design to allow for an emotional modulation of the P1 congruency effect. According to Lassalle and Itier (2013), emotional modulation of gaze-oriented attention starts being observed around 600ms after cue and emotion onset. Since the emotion was presented for only 300ms after gaze shift in the present study, by the time P1 occurred only 400ms on average had elapsed, which might not have been sufficient to integrate emotion and gaze cues.

EDAN and ADAN cue-triggered ERP components, indexing respectively attention orienting to the cued location and attention holding at the cued location, were also analyzed. Hietanen et al. (2008) found evidence for both components with arrow cues but not with schematic neutral face cues. However, using emotional faces and a discrimination task, Holmes et al. (2010) showed the presence of ADAN but not EDAN, while Lassalle and Itier (2013) reported both EDAN and ADAN using emotional faces and a localization task. In the current experiment, we also used a localization task, which we believe is more relevant than a discrimination or a detection task in gaze cueing studies, given that the cue indicates a location in the environment. While Lassalle and Itier (2013) used a sequence in which both gaze and emotion were changed 500ms prior to target onset, we used a sequence in which the face cue averted its gaze first for 200ms before expressing an emotion for 300ms (500ms SOA as well). Nevertheless, despite using a different sequence, we replicated the findings of Lassalle and Itier (2013) regarding the presence of EDAN and ADAN in response to gaze cues, and the lack of a modulation of these components by facial emotion.

These ERP findings reinforce the idea that attention orienting to gaze can be reliably tracked during both cue and target presentations using ERPs. The emotional content of the gaze cue does not seem to impact these attention stages until at least P1 if we take into consideration Lassalle and Itier’s (2013) results of larger congruency effects for emotional than neutral faces, i.e. around 600ms after gaze shift (with an average 100ms latency for P1). However, the differences in gaze-oriented attention between fearful and happy faces do not appear until the response, i.e. approximately 300ms after target onset given an average response time of 300ms. Thus, fear might trigger different processes than happiness between 600 and 800ms after gaze shift, a similar time interval as suggested by Lassalle and Itier (2013) using a different dynamic sequence and design. Alternatively, it is possible that emotion influences brain processes devoted to gaze-oriented attention earlier but that this modulation is not detectable with scalp ERPs.
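The timing argument above can be laid out explicitly. Taking gaze-shift onset as $t = 0$ and using the approximate values quoted in the text (a 200ms gaze-only interval, a 300ms emotion interval, a P1 latency of about 100ms and a mean response time of about 300ms), the key events fall at:

$$
\begin{aligned}
t_{\text{emotion onset}} &= 200\ \text{ms} \\
t_{\text{target onset}} &= 200 + 300 = 500\ \text{ms} \\
t_{\text{P1}} &\approx 500 + 100 = 600\ \text{ms} \\
t_{\text{response}} &\approx 500 + 300 = 800\ \text{ms}
\end{aligned}
$$

Measured from emotion onset rather than from gaze shift, P1 therefore occurs at about $600 - 200 = 400$ms, and the 600 to 800ms window is the interval between the P1 and the behavioral response.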

Interestingly, although no correlation was found between the ERPs associated with gaze-orienting and AQ scores across the entire group of participants, the planned analyses for each AQ group revealed that P1 congruency effect and ADAN laterality effect were present in the low AQ group but not in the high AQ group. That the brain correlates associated with gaze-oriented attention could be weaker or completely absent in individuals with high AQ scores suggests autistic traits modulate the neural processes associated with attention orienting to gaze in the general population. Given that gaze-oriented attention is an experimental proxy for joint attention, the present results also suggest a link between autistic traits and joint attention in the general population, as predicted by the clinical deficits of joint attention observed in individuals with ASC (Dawson et al., 2004; Okada, Sato, Murai, Kubota, & Toichi, 2003). Based on the present results, it is possible that the deficit in joint attention observed in ASC is rooted in gaze-oriented attention deficits occurring during the processing of both the face cue and target. Future studies will need to extend these results, comparing the target and cue-triggered ERPs of individuals with and without a formally diagnosed ASC in gaze cueing experiments. Studies should also test whether autistic traits are linked to the neural processes involved in attention orienting to non-gaze cues or whether this relationship is specific to social stimuli.

5. CONCLUSION

While fearful faces seem to trigger a large and mandatory gaze orienting effect, likely due to the environmental threat they signal, the degree to which attention is oriented by happy faces seems to depend on individuals’ autistic traits, with high AQ individuals showing less sensitivity to happy faces than individuals with low AQ. Thus, autistic-like traits influence the way social signals are processed. Moreover, the presence of the P1 congruency effect and the ADAN laterality effect in individuals with low AQ but not in individuals with high AQ suggests that overall less attention is allocated to the gazed-at direction in individuals with a high level of autistic traits, a finding in line with the clinical deficit of joint attention in individuals with ASC. Future studies will have to replicate the observed influence of autistic traits on the brain processes devoted to attention and on the allocation of attention resources to faces signaling a social interaction. Future work should also try to disentangle the relative impact of autistic traits and trait anxiety (including social anxiety) on the observed effects.

Acknowledgments

This work was supported by the Canada Foundation for Innovation [#213322], the Canada Research Chair Program [#959-213322], and an Early Researcher Award from the Ontario government [#ER11-08-172] to RJI.

Footnotes

1. Development of the MacBrain Face Stimulus Set was overseen by Nim Tottenham and supported by the John D. and Catherine T. MacArthur Foundation Research Network on Early Experience and Brain Development. Please contact Nim Tottenham at tott0006@tc.umn.edu for more information concerning the stimulus set.

2. The faces were exactly the same as the ones presented in the ERP experiment; see section “Reaction times” for a full description of the face stimuli used.

3. According to Van Dam et al. (2013), a cut-off of 43 should be used in research settings to indicate probable cases of clinical anxiety (sensitivity=.73, specificity=.74, classification accuracy=.74).

4. Note that anxiety could not be used as a covariate in the ANOVA as it would violate the assumption of a linear relationship between the covariate and the dependent variable, given that anxiety and the GOEs do not correlate (Owen & Froman, 1998).

5. Note, however, that anxiety cannot be used as a covariate in this analysis, as the groups were non-randomly selected (see Miller & Chapman, 2011, for a detailed explanation).

References

- American Psychiatric Association. Diagnostic and statistical manual of mental disorders. 5. Arlington, VA: American Psychiatric Publishing; 2013. [Google Scholar]

- Baron-Cohen S. Mindblindness: An essay on autism and theory of mind. Cambridge, MA: The MIT press; 1995. [Google Scholar]

- Baron-Cohen S, Wheelwright S, Skinner R, Martin J, Clubley E. The autism-spectrum quotient (AQ): Evidence from asperger syndrome/high-functioning autism, males and females, scientists and mathematicians. Journal of autism and developmental disorders. 2001;31(1):5–17. doi: 10.1023/a:1005653411471. [DOI] [PubMed] [Google Scholar]

- Bayless SJ, Glover M, Taylor MJ, Itier RJ. Is it in the eyes? Dissociating the role of emotion and perceptual features of emotionally expressive faces in modulating orienting to eye gaze. Visual Cognition. 2011;19(4):483–510. doi: 10.1080/13506285.2011.552895. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bayliss AP, Di Pellegrino G, Tipper SP. Sex differences in eye gaze and symbolic cueing of attention. The Quarterly Journal of Experimental Psychology. 2005;58(4):631–650. doi: 10.1080/02724980443000124. [DOI] [PubMed] [Google Scholar]

- Bayliss AP, Frischen A, Fenske MJ, Tipper SP. Affective evaluations of objects are influenced by observed gaze direction and emotional expression. Cognition. 2007;104(3):644–653. doi: 10.1016/j.cognition.2006.07.012. [DOI] [PubMed] [Google Scholar]

- Dalton KM, Nacewicz BM, Johnstone T, Schaefer HS, Gernsbacher MA, Goldsmith HH, et al. Gaze fixation and the neural circuitry of face processing in autism. Nature Neuroscience. 2005;8:519–526. doi: 10.1038/nn1421. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dawson G, Toth K, Abbott R, Osterling J, Munson J, Estes A, Liaw J. Early social attention impairments in autism: social orienting, joint attention, and attention to distress. Developmental psychology. 2004;40(2):271. doi: 10.1037/0012-1649.40.2.271. [DOI] [PubMed] [Google Scholar]

- Dawson G, Webb SJ, McPartland J. Understanding the nature of face processing impairment in autism: Insights from behavioral and electrophysiological studies. Developmental Neuropsychology. 2005;27(3):403–424. doi: 10.1207/s15326942dn2703_6. [DOI] [PubMed] [Google Scholar]

- Derntl B, Seidel EM, Kainz E, Carbon CC. Recognition of emotional expressions is affected by inversion and presentation time. Perception. 2009;38(12):1849–1862. doi: 10.1068/p6448. [DOI] [PubMed] [Google Scholar]

- Driver J, Davis G, Ricciardelli P, Kidd P, Maxwell E, Baron-Cohen S. Gaze perception triggers reflexive visuospatial orienting. Visual cognition. 1999;6(5):509–540. [Google Scholar]

- Eimer M. Uninformative symbolic cues may bias visual-spatial attention: Behavioral and electrophysiological evidence. Biological Psychology. 1997;46(1):67–71. doi: 10.1016/S0301-0511(97)05254-X. [DOI] [PubMed] [Google Scholar]

- Fallshore M, Bartholow J. Recognition of emotion from inverted schematic drawings of faces. Perceptual and Motor Skills. 2003;96(1):236–244. doi: 10.2466/pms.2003.96.1.236. [DOI] [PubMed] [Google Scholar]

- Fichtenholtz HM, Hopfinger JB, Graham R, Detwiler JM, LaBar KS. Happy and fearful emotion in cues and targets modulate event-related potential indices of gaze-directed attentional orienting. Social cognitive and affective neuroscience. 2007;2(4):323–333. doi: 10.1093/scan/nsm026. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fichtenholtz HM, Hopfinger JB, Graham R, Detwiler JM, LaBar KS. Event-related potentials reveal temporal staging of dynamic facial expression and gaze shift effects on attentional orienting. Social Neuroscience. 2009;4(4):317–331. doi: 10.1080/17470910902809487.

- Fox E, Mathews A, Calder AJ, Yiend J. Anxiety and sensitivity to gaze direction in emotionally expressive faces. Emotion. 2007;7(3):478–486. doi: 10.1037/1528-3542.7.3.478.

- Friesen CK, Kingstone A. The eyes have it! Reflexive orienting is triggered by nonpredictive gaze. Psychonomic Bulletin & Review. 1998;5(3):490–495.

- Frischen A, Bayliss AP, Tipper SP. Gaze cueing of attention: visual attention, social cognition, and individual differences. Psychological Bulletin. 2007;133(4):694–724. doi: 10.1037/0033-2909.133.4.694.

- Galfano G, Sarlo M, Sassi F, Munafò M, Fuentes LJ, Umiltà C. Reorienting of spatial attention in gaze cuing is reflected in N2pc. Social Neuroscience. 2011;6(3):257–269. doi: 10.1080/17470919.2010.515722.

- Graham R, Friesen CK, Fichtenholtz HM, LaBar KS. Modulation of reflexive orienting to gaze direction by facial expressions. Visual Cognition. 2010;18(3):331–368.

- Hango D. Gender differences in science, technology, engineering, mathematics and computer science (STEM) programs at university. Science. 2013;6(6.6):5–8.

- Happé F, Frith U. The weak coherence account: Detail-focused cognitive style in autism spectrum disorders. Journal of Autism and Developmental Disorders. 2006;36(1):5–25. doi: 10.1007/s10803-005-0039-0.

- Harms MB, Martin A, Wallace GL. Facial emotion recognition in autism spectrum disorders: A review of behavioral and neuroimaging studies. Neuropsychology Review. 2010;20(3):290–322. doi: 10.1007/s11065-010-9138-6.

- Hietanen JK, Leppänen JM. Does facial expression affect attention orienting by gaze direction cues? Journal of Experimental Psychology: Human Perception and Performance. 2003;29(6):1228–1243. doi: 10.1037/0096-1523.29.6.1228.

- Hietanen JK, Leppänen JM, Nummenmaa L, Astikainen P. Visuospatial attention shifts by gaze and arrow cues: an ERP study. Brain Research. 2008;1215:123–136. doi: 10.1016/j.brainres.2008.03.091.

- Holmes A, Richards A, Green S. Anxiety and sensitivity to eye gaze in emotional faces. Brain and Cognition. 2006;60(3):282–294. doi: 10.1016/j.bandc.2005.05.002.

- Holmes A, Mogg K, Garcia LM, Bradley BP. Neural activity associated with attention orienting triggered by gaze cues: A study of lateralized ERPs. Social Neuroscience. 2010;5(3):285–295. doi: 10.1080/17470910903422819.

- Hopf JM, Mangun GR. Shifting visual attention in space: an electrophysiological analysis using high spatial resolution mapping. Clinical Neurophysiology. 2000;111(7):1241–1257. doi: 10.1016/s1388-2457(00)00313-8.

- Hori E, Tazumi T, Umeno K, Kamachi M, Kobayashi T, Ono T, Nishijo H. Effects of facial expression on shared attention mechanisms. Physiology & Behavior. 2005;84(3):397–405. doi: 10.1016/j.physbeh.2005.01.002.

- Itier RJ, Batty M. Neural bases of eye and gaze processing: The core of social cognition. Neuroscience & Biobehavioral Reviews. 2009;33(6):843–863. doi: 10.1016/j.neubiorev.2009.02.004.

- Jenkins J, Langton SR. Configural processing in the perception of eye-gaze direction. Perception. 2003;32(10):1181–1188. doi: 10.1068/p3398.

- Jones SS, Collins K, Hong HW. An audience effect on smile production in 10-month-old infants. Psychological Science. 1991;2(1):45–49.

- Kingstone A, Friesen CK, Gazzaniga MS. Reflexive joint attention depends on lateralized cortical connections. Psychological Science. 2000;11:159–166. doi: 10.1111/1467-9280.00232.

- Kleinhans NM, Richards T, Weaver K, Johnson LC, Greenson J, Dawson G, Aylward E. Association between amygdala response to emotional faces and social anxiety in autism spectrum disorders. Neuropsychologia. 2010;48(12):3665–3670. doi: 10.1016/j.neuropsychologia.2010.07.022.

- Kleinke CL. Gaze and eye contact: a research review. Psychological Bulletin. 1986;100(1):78–100.

- Kraut RE, Johnston RE. Social and emotional messages of smiling: An ethological approach. Journal of Personality and Social Psychology. 1979;37(9):1539–1553.

- Kylliainen A, Hietanen JK. Skin conductance responses to another person’s gaze in children with autism. Journal of Autism and Developmental Disorders. 2006;36:517–525. doi: 10.1007/s10803-006-0091-4.

- Langton SRH, Bruce V. Reflexive visual orienting in response to the social attention of others. Visual Cognition. 1999;6:541–567.

- Lassalle A, Itier RJ. Fearful, surprised, happy and angry facial expressions modulate gaze-oriented attention: behavioral and ERP evidence. Social Neuroscience. 2013; in press. doi: 10.1080/17470919.2013.835750.

- Leppänen JM, Hietanen JK. Is there more in a happy face than just a big smile? Visual Cognition. 2007;15(4):468–490.

- Luck SJ, Woodman GF, Vogel EK. Event related potential studies of attention. Trends in Cognitive Sciences. 2000;4(11):432–440. doi: 10.1016/S1364-6613(00)01545-X.

- Mangun GR. Neural mechanisms of visual selective attention. Psychophysiology. 1995;32(1):4–18. doi: 10.1111/j.1469-8986.1995.tb03400.x.

- Mangun GR, Hillyard SA. Modulations of sensory-evoked brain potentials indicate changes in perceptual processing during visual-spatial priming. Journal of Experimental Psychology: Human Perception and Performance. 1991;17(4):1057. doi: 10.1037//0096-1523.17.4.1057.

- Mathews A, Fox E, Yiend J, Calder A. The face of fear: Effects of eye gaze and emotion on visual attention. Visual Cognition. 2003;10(7):823–835. doi: 10.1080/13506280344000095.

- McKelvie SJ. Emotional expression in upside-down faces: Evidence for configurational and componential processing. British Journal of Social Psychology. 1995;34(3):325–334. doi: 10.1111/j.2044-8309.1995.tb01067.x.

- Miller GA, Chapman JP. Misunderstanding analysis of covariance. Journal of Abnormal Psychology. 2001;110(1):40–48. doi: 10.1037/0021-843X.110.1.40.

- Mundy P, Sigman M. The theoretical implications of joint-attention deficits in autism. Development and Psychopathology. 1989;1(03):173–183. doi: 10.1017/S0954579400000365.

- Nation K, Penny S. Sensitivity to eye gaze in autism: Is it normal? Is it automatic? Is it social? Development and Psychopathology. 2008;20(01):79–97. doi: 10.1017/S0954579408000047.

- Neath K, Nilsen ES, Gittsovich K, Itier RJ. Attention Orienting by Gaze and Facial Expressions Across Development. Emotion. 2013;13(3):397–408. doi: 10.1037/a0030463.

- Nuske HJ, Vivanti G, Dissanayake C. Reactivity to fearful expressions of familiar and unfamiliar people in children with autism: An eye-tracking pupillometry study. Journal of Neurodevelopmental Disorders. 2014;6(1):14. doi: 10.1186/1866-1955-6-14.

- Okada T, Sato W, Murai T, Kubota Y, Toichi M. Eye gaze triggers visuospatial attentional shift in individuals with autism. Psychologia. 2003;46(4):246–254.

- Owen SV, Froman RD. Focus on quantitative methods: Uses and abuses of the analysis of covariance. Research in Nursing and Health. 1998;21(6):557–562. doi: 10.1002/(SICI)1098-240X(199812)21:6<557::AID-NUR9>3.0.CO;2-Z.

- Pecchinenda A, Pes M, Ferlazzo F, Zoccolotti P. The combined effect of gaze direction and facial expression on cueing spatial attention. Emotion. 2008;8(5):628–634. doi: 10.1037/a0013437.

- Pelphrey KA, Morris JP, McCarthy G. Neural basis of eye gaze processing deficits in autism. Brain. 2005;128(5):1038–1048. doi: 10.1093/brain/awh404.

- Praamstra P, Boutsen L, Humphreys GW. Frontoparietal control of spatial attention and motor intention in human EEG. Journal of Neurophysiology. 2005;94(1):764–774. doi: 10.1152/jn.01052.2004.

- Prkachin GC. The effects of orientation on detection and identification of facial expressions of emotion. British Journal of Psychology. 2003;94(1):45–62. doi: 10.1348/000712603762842093.

- Putman P, Hermans E, Van Honk J. Anxiety meets fear in perception of dynamic expressive gaze. Emotion. 2006;6(1):94–102. doi: 10.1037/1528-3542.6.1.94.

- Ree MJ, French D, MacLeod C, Locke V. Distinguishing cognitive and somatic dimensions of state and trait anxiety: Development and validation of the State-Trait Inventory for Cognitive and Somatic Anxiety (STICSA). Behavioural and Cognitive Psychotherapy. 2008;36(3):313.

- Rossion B. Distinguishing the cause and consequence of face inversion: The perceptual field hypothesis. Acta Psychologica. 2009;132(3):300–312. doi: 10.1016/j.actpsy.2009.08.002.

- Sato W, Kochiyama T, Yoshikawa S, Naito E, Matsumura M. Enhanced neural activity in response to dynamic facial expressions of emotion: An fMRI study. Cognitive Brain Research. 2004;20(1):81–91. doi: 10.1016/j.cogbrainres.2004.01.008.

- Schuller AM, Rossion B. Spatial attention triggered by eye gaze increases and speeds up early visual activity. Neuroreport. 2001;12(11):2381–2386. doi: 10.1097/00001756-200108080-00019.

- Schuller AM, Rossion B. Perception of static eye gaze direction facilitates subsequent early visual processing. Clinical Neurophysiology. 2004;115(5):1161–1168. doi: 10.1016/j.clinph.2003.12.022.

- Schuller AM, Rossion B. Spatial attention triggered by eye gaze enhances and speeds up visual processing in upper and lower visual fields beyond early striate visual processing. Clinical Neurophysiology. 2005;116(11):2565–2576. doi: 10.1016/j.clinph.2005.07.021.

- Sepeta L, Tsuchiya N, Davies MS, Sigman M, Bookheimer SY, Dapretto M. Abnormal social reward processing in autism as indexed by pupillary responses to happy faces. Journal of Neurodevelopmental Disorders. 2012;4(17). doi: 10.1186/1866-1955-4-17.

- Shamay-Tsoory SG, Tibi-Elhanany Y, Aharon-Peretz J. The green-eyed monster and malicious joy: The neuroanatomical bases of envy and gloating (schadenfreude). Brain. 2007;130(6):1663–1678. doi: 10.1093/brain/awm093.

- Simpson GV, Dale CL, Luks TL, Miller WL, Ritter W, Foxe JJ. Rapid targeting followed by sustained deployment of visual attention. Neuroreport. 2006;17(15):1595–1599. doi: 10.1097/01.wnr.0000236858.78339.52.

- Tanaka JW, Farah MJ. Parts and wholes in face recognition. The Quarterly Journal of Experimental Psychology. 1993;46(2):225–245. doi: 10.1080/14640749308401045.

- Tanaka JW, Sung A. The “Eye Avoidance” Hypothesis of Autism Face Processing. Journal of Autism and Developmental Disorders. 2013:1–15. doi: 10.1007/s10803-013-1976-7.

- Tipples J. Fear and fearfulness potentiate automatic orienting to eye gaze. Cognition & Emotion. 2006;20(2):309–320.

- Tottenham N, Tanaka JW, Leon AC, McCarry T, Nurse M, Hare TA, Marcus DJ, Westerlund A, Casey BJ, Nelson C. The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Research. 2009;168(3):242–249. doi: 10.1016/j.psychres.2008.05.006.

- Uono S, Sato W, Toichi M. Dynamic fearful gaze does not enhance attention orienting in individuals with Asperger’s disorder. Brain and Cognition. 2009;71(3):229–233. doi: 10.1016/j.bandc.2009.08.015.

- Van Dam NT, Gros DF, Earleywine M, Antony MM. Establishing a trait anxiety threshold that signals likelihood of anxiety disorders. Anxiety, Stress & Coping. 2013;26(1):70–86. doi: 10.1080/10615806.2011.631525.

- Van Selst M, Jolicoeur P. A solution to the effect of sample size on outlier elimination. The Quarterly Journal of Experimental Psychology. 1994;47(3):631–650.

- Van Steensel FJA, Bögels SM, Perrin S. Anxiety disorders in children and adolescents with autistic spectrum disorders: A meta-analysis. Clinical Child and Family Psychology Review. 2011;14(3):302–317. doi: 10.1007/s10567-011-0097-0.

- Wallace S, Coleman M, Bailey A. An investigation of basic facial expression recognition in autism spectrum disorders. Cognition and Emotion. 2008;22(7):1353–1380. doi: 10.1080/02699930701782153.

- Weigelt S, Koldewyn K, Kanwisher N. Face identity recognition in autism spectrum disorders: A review of behavioral studies. Neuroscience & Biobehavioral Reviews. 2012;36(3):1060–1084. doi: 10.1016/j.neubiorev.2011.12.008.

- Whalen PJ, Kagan J, Cook RG, Davis FC, Kim H, Polis S, … Johnstone T. Human amygdala responsivity to masked fearful eye whites. Science. 2004;306(5704):2061. doi: 10.1126/science.1103617.

- Willenbockel V, Sadr J, Fiset D, Horne GO, Gosselin F, Tanaka JW. Controlling low-level image properties: the SHINE toolbox. Behavior Research Methods. 2010;42(3):671–684. doi: 10.3758/BRM.42.3.671.

- Woodbury-Smith MR, Robinson J, Wheelwright S, Baron-Cohen S. Screening adults for Asperger syndrome using the AQ: A preliminary study of its diagnostic validity in clinical practice. Journal of Autism and Developmental Disorders. 2005;35(3):331–335. doi: 10.1007/s10803-005-3300-7.