Abstract

A role for the hippocampus in memory is clear, although the mechanism for its contribution remains a matter of debate. Converging evidence suggests that hippocampus evaluates the extent to which context-defining features of events occur as expected. The consequence of mismatches, or prediction error, signals from hippocampus is discussed in terms of its impact on neural circuitry that evaluates the significance of prediction errors: Ventral tegmental area (VTA) dopamine cells burst fire to rewards or cues that predict rewards (Schultz et al., 1997). Although the lateral dorsal tegmentum (LDTg) importantly controls dopamine cell burst firing (Lodge & Grace, 2006) the behavioral significance of the LDTg control is not known. Therefore, we evaluated LDTg functional activity as rats performed a spatial memory task that generates task-dependent reward codes in VTA (Jo et al., 2013; Puryear et al., 2010) and another VTA afferent, the pedunculopontine nucleus (PPTg, Norton et al., 2011). Reversible inactivation of the LDTg significantly impaired choice accuracy. LDTg neurons coded primarily egocentric information in the form of movement velocity, turning behaviors, and behaviors leading up to expected reward locations. A subset of the velocity-tuned LDTg cells also showed high frequency bursts shortly before or after reward encounters, after which they showed tonic elevated firing during consumption of small, but not large, rewards. Cells that fired before reward encounters showed stronger correlations with velocity as rats moved toward, rather than away from, rewarded sites. LDTg neural activity was more strongly regulated by egocentric behaviors than that observed for PPTg or VTA cells that were recorded by Puryear et al. and Norton et al. While PPTg activity was uniquely sensitive to ongoing sensory input, all three regions encoded reward magnitude (although in different ways), reward expectation, and reward encounters. Only VTA encoded reward prediction errors. 
LDTg may inform VTA about learned goal-directed movement that reflects the current motivational state, and this in turn may guide VTA determination of expected subjective goal values. When combined, it is clear that the LDTg and PPTg provide only a portion of the information that dopamine cells need to assess the value of prediction errors, a process that is essential to future adaptive decisions and switches of cognitive (i.e. memorial) strategies and behavioral responses.

Keywords: lateral dorsal tegmentum, reward processing, velocity, motivation, dopamine, prediction error

Introduction

Our memories shape future decisions, and the decisions we make determine what and how memories are updated. It should be expected, then, that many fundamental memory-related processes of the brain (e.g. different associative algorithms, motivation, attention, memory updating, and response selection) work closely with decision neurocircuitry to continuously guide experience-dependent and adaptive behaviors. There are significant, though largely separate, literatures that describe brain mediation of memory and decision systems, and we are only beginning to understand how the memory and decision systems work together.

Multiple memory processors in the brain

Amnesic populations illustrate not only that many regions of the brain play important roles in memory, but also that different brain areas do so for different reasons. Temporal lobe patients (such as patient HM) show severe but selective anterograde episodic memory impairment while procedural memory remains intact (Bayley et al., 2005; Milner, 2005). Patients suffering from basal ganglia dysfunction show selective impairment in habit learning and procedural memory (Knowlton et al., 1996; Yin & Knowlton, 2006). Amygdala damage results in poor emotional regulation of memory (Adolphs et al., 2005; Paz & Pare, 2013). Frontal patients suffer from inadequate working memory (Baddeley & Della Sala, 1996; Goldman-Rakic, 1996). These classic distinctions of the mnemonic consequences of damage to different brain areas in humans have been replicated in rodents by demonstrating not only double but often triple dissociations of functions of structures like the hippocampus, striatum, amygdala, and prefrontal cortex (e.g. Chiba et al., 2002; Gilbert & Kesner, 2002; Gruber & McDonald, 2012; Kametani & Kesner, 1989; Kesner et al., 1993; Kesner et al., 1989; Kesner & Williams, 1995; Packard, 1999; Packard & McGaugh, 1996; White & McDonald, 2002). Moreover, the often clever behavioral paradigms developed for rodents have inspired the generation of increasingly specific hypotheses about memory functions that have been tested in human subjects (e.g. Hopkins et al., 1995, 2004; Kesner & Hopkins, 2001, 2006).

Neurophysiological investigations of memory-related brain regions both confirmed and challenged the view that there are multiple memory systems in the brain. Spatial and conjunctive context-dependent coding were identified in the hippocampus (e.g., O’Keefe & Dostrovsky, 1971; O’Keefe & Nadel, 1978; see recent reviews in Mizumori, 2008b) and this was consistent with the view that hippocampus mediates episodic memory (Tulving, 2002). Response-related codes were found in the striatum (Eshenko & Mizumori, 2007; Jog et al., 1999; Yeshenko et al., 2004), supporting the hypothesis that striatum mediates habit or response learning (Knowlton et al., 1996). Frontal cortical neurons remain active during delay periods (Goldman-Rakic, 1995), a finding that one might expect from a brain region that is importantly involved in working memory (Fuster, 2006, 2008, 2009). Other studies, however, showed that these striking neural correlates of behavior were not unique to the hippocampus, striatum and frontal cortex. Egocentric movement-related firing by hippocampal interneurons and pyramidal neurons was reported long ago (e.g. Vanderwolf, 1969). Parietal cortical neurons also showed strong representations of behavioral responses (e.g. Fogassi et al., 2005; McNaughton et al., 1994). Delay cells were found in many regions of the cortex in addition to the prefrontal cortex, for example in somatosensory cortex (Meftah et al., 2009), parietal cortex (Snyder et al., 1997), frontal eye fields (Curtis et al., 2004), and in temporal cortex (Kurkin et al., 2011). The fact that single unit evidence aligned only generally, and not specifically, with the lesion literature suggested that many regions of the brain use similar types of information during a single mnemonic operation. There is some evidence that this is indeed the case (e.g. Eshenko & Mizumori, 2007; Mizumori et al., 2004; Yeshenko et al., 2004) in that spatial and movement correlates have been described for brain areas thought to mediate different forms of memory. It should be noted, however, that most of the published single unit data came from studies of rodents and primates that used different recording methods while subjects performed different types of tasks. Regardless, converging evidence strongly suggested that the different memory regions of the brain work in parallel, perhaps to compete over control of behavioral responses (Mizumori et al., 2004; Packard & Goodman, 2013; Poldrack & Packard, 2003). A remaining challenge for memory researchers is to understand how different memory processing areas of the brain interact to enable animals to behaviorally adapt during environmental change.

Interactions amongst multiple memory processors: Prediction error signaling

To understand how brain areas interact during memory guided behavior, it is useful to identify the nature of the output messages from the structures of interest. In this regard, it is worth noting that there is growing evidence that there may be a common significance to the output messages of many brain structures, and that is to relay the extent to which experience-based predictions about task specific features, outcomes, and learned responses are correct. In fact, an emerging view is that the brain evolved in large part to allow organisms to accurately predict the outcomes of events and behaviors (e.g. Buzsaki, 2013, Buzsaki & Moser, 2013; Llinas & Roy, 2009; Mizumori & Jo, 2013). To predict behavioral outcomes, it is necessary to retain information over time until the outcome occurs. The retention period could vary as needed by different goals. Different brain areas are indeed known to generate and retain sequences of information, an ability that can be accounted for by state-dependent changes in network dynamics (Mauk & Buonomano, 2004), internally-generated oscillatory activity (Pastalkova et al., 2008), and/or dedicated ‘time cells’ (Kraus et al., 2013). Temporal functions such as these should contribute to organisms’ ability to adapt to environments and ultimately to evolve societies of increasing complexity. The latter, in turn, would require coordinated and sophisticated additional mechanisms to make decisions and predictions in dynamic and conditional environments. According to this view, the underlying neural mechanisms of predictions (and the assessment of their accuracy) are likely to be highly conserved across species (Adams et al., 2013; Watson & Platt, 2008).

The ability to make predictions about the outcomes of choices has been described as a mechanism by which sensory and motor systems adjust after errors in prediction (Scheidt et al., 2012; Tanaka et al., 2009), and theoretical models explain how resolution of prediction errors results in new learning and memory (Rescorla & Wagner, 1972). The ability of the brain to accurately predict behavioral outcomes or future events has been most studied in the context of the role of dopamine in reinforcement-based learning. Ventral tegmental area (VTA) dopamine neurons appear to be involved in the regulation of motivation and learning by signaling the subjective value of the outcomes of behavioral actions (Arnsten et al., 2012; Bromberg-Martin et al., 2010; Heinrichs & Zaksanis 1998; Noel et al., 2013; Rubia et al., 2009; Schultz, 2010; Wise 2008). Dopamine neurons (burst) fire to novel rewards and stimuli (Redgrave and Gurney, 2006), then they come to fire to cues that predict reward outcomes (Fiorillo et al., 2003, 2005; Ljungberg et al., 1992; Mirenowicz & Schultz, 1994). When rewards differ from what was expected, dopamine cells increase or decrease firing, depending on whether the reward was greater or less than expected, respectively (Fiorillo et al., 2008; Schultz et al., 1997). Convergent evidence suggests that sensory, behavioral, and mnemonic information contribute to dopamine cell prediction of the expected value of behavioral outcomes (e.g. Schultz, 2010; Schultz et al., 1997).
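The logic of prediction-error-driven learning can be illustrated with a minimal sketch of a single-cue, Rescorla-Wagner-style update. This is only an illustration of the general principle and not a model fitted to any data described here; the function name, the learning rate `alpha`, the starting prediction `v0`, and the reward sequence are all hypothetical choices.

```python
def rescorla_wagner(rewards, alpha=0.1, v0=0.0):
    """Return (values, errors) for a sequence of reward outcomes.

    values[t] is the prediction held before trial t; errors[t] is the
    prediction error (outcome minus prediction) on trial t.
    alpha and v0 are illustrative assumptions, not values from the study.
    """
    v = v0
    values, errors = [], []
    for r in rewards:
        delta = r - v          # prediction error: outcome minus expectation
        values.append(v)
        errors.append(delta)
        v += alpha * delta     # move the prediction toward the outcome
    return values, errors


# Ten rewarded trials followed by one unexpected omission.
values, errors = rescorla_wagner([1.0] * 10 + [0.0], alpha=0.5)
print(errors[0] > 0)    # first, unpredicted reward gives a positive error
print(errors[-1] < 0)   # omission of a predicted reward gives a negative error
```

In this sketch the error shrinks as the reward becomes predicted and turns negative when a predicted reward is withheld, which is the qualitative pattern attributed to dopamine neurons above.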

The existence of prediction error signals in sensory, motor and midbrain regions raises the question of whether prediction error signaling occurs in brain areas that mediate more complex learning and memory such as the hippocampus. Recent investigation of this question led to the proposal of a Context Discrimination Hypothesis (CDH) that postulates that single hippocampal neurons provide multidimensional (context-defining) data to population-based network computations that ultimately determine whether expected contextual features of a situation have changed (e.g. Mizumori et al., 1999, 2000, 2007a, 2008a,b; Smith and Mizumori, 2006a,b). Specifically, hippocampal representations of spatial context information (O’Keefe & Nadel, 1978; Nadel & Payne, 2002; Nadel & Wilner, 1980) may contribute to a match-mismatch analysis that evaluates the present context in terms of how similar it is to the context that an animal expected to encounter based on past experiences (e.g. Anderson & Jeffery, 2003; Gray, 1982, 2000; Hasselmo, 2005; Hasselmo et al., 2002; Jeffery et al., 2004; Lisman & Otmakhova, 2001; Manns et al., 2007a; Mizumori et al., 1999, 2000; Smith & Mizumori, 2006a,b; Nadel, 2008; Vinogradova, 1995). Human brain imaging studies provide comparable evidence for a match-mismatch function of hippocampus (Chen et al., 2011; Dickerson et al., 2011; Duncan et al., 2012a,b; Foerde & Shohamy, 2011; Kuhl et al., 2010; Kumaran & Maguire, 2007). Detected mismatches can be used to identify novel situations, initiate learning-related neural plasticity mechanisms, and distinguish different contexts, functions that are necessary to define significant events or episodes. When a match is computed, the effect of hippocampal output should be to strengthen currently active memory networks located elsewhere in the brain (e.g. neocortex).

Context discrimination, or the detection of a mismatch between expected and experienced context-specific information, could be considered an example of an error in predicting the contextual details of the current situation, referred to as context prediction errors. Upon receipt of the context prediction error message, efferent midbrain structures may respond with changes in excitation or inhibition that are needed to evaluate the subjective value of the context prediction error signal (e.g. Humphries & Prescott, 2010; Lisman & Grace, 2005; Mizumori et al., 2004; Penner & Mizumori, 2012a,b). On the other hand, a hippocampal signal indicating that there was no prediction error may engage plasticity mechanisms that enable new information to be incorporated into existing memory schemas (e.g. Bethus et al., 2010; Mizumori et al., 2007a,b; Tse et al., 2007). Thus, hippocampal context analyses become critical for the formation of new episodic memories not only because prediction signals provide a mechanism that separates in time and space one meaningful event from the next, but also because the outcome of the prediction error computation engages appropriate neuroplasticity mechanisms in efferent structures that promote subsequent adaptive decisions and updated memories (Mizumori, 2013; Mizumori & Jo, 2013).

If the generation of midbrain prediction error signals is fundamental to learning and memory functions, it would be expected that reward and prediction error signaling by dopamine neurons is observed during not only reinforcement learning, but also hippocampal-dependent learning. Puryear et al. (2010) and Jo et al. (2013) recorded VTA dopamine neurons as rats used a hippocampal-dependent strategy to retrieve small or large rewards that were located in predictable locations on an 8 arm radial maze. Similar to reports from primate classical and instrumental conditioning studies (e.g. Schultz et al., 1997; Schultz, 2010), rat dopamine neurons showed the largest phasic response when large rewards were encountered. Other dopamine neurons showed increased firing just prior to reward encounters, and this anticipatory response was significantly reduced when the prefrontal cortex was inactivated (Jo et al., 2013). Puryear et al. showed that dopamine reward responsiveness changed following experimental manipulation of contextual features of the test room, manipulations that also result in hippocampal place field remapping (as reviewed in Moser et al., 2008 and Penner & Mizumori, 2012a). Both Puryear et al. and Jo et al. showed that when rats encountered locations that were unexpectedly missing expected rewards, dopamine cells exhibited a transient inhibition of firing (i.e. a negative reward prediction error signal). VTA dopamine responses to reward, then, reflect reward processing that is relevant to different types of memories.

Role of the pedunculopontine nucleus (PPTg) in dopamine reward processing

The context sensitivity of dopamine cell reward responses suggests that hippocampal context information somehow influences dopamine reward responsiveness. It is not clear, however, how hippocampus provides VTA with relevant context information, especially since there are no direct connections from the hippocampus to the VTA. To begin to address this issue, our attention focused on the roles of the pedunculopontine nucleus (PPTg) and the lateral dorsal tegmentum (LDTg) since they are considered to be two of the most important regulators of dopamine cell burst firing (Lodge & Grace, 2006). Norton et al. (2011) recorded PPTg neural activity as rats solved the same spatial working memory task as that used in the Puryear et al. (2010) and Jo et al. (2013) studies. Forty-five percent of recorded PPTg neurons were either excited or inhibited upon reward acquisition, and there was no evidence for prediction error signaling. Thus, the prediction error signals appear to arrive in the VTA via a route that does not involve the PPTg (perhaps via the rostromedial tegmentum since it is thought to relay error signals from the lateral habenula; Hong et al., 2011; Matsumoto and Hikosaka, 2007, 2009). A separate population of PPTg neurons exhibited firing rate correlations with the velocity of an animal’s movement across the maze. A small number of cells encoded reward in conjunction with a specific type of egocentric movement (i.e. turning behavior). The context-dependency of PPTg reward responses was tested by observing the impact of changes in visuospatial and reward information. Visuospatial, but not reward, manipulations significantly altered PPTg reward-related activity. Movement-related responses, however, were not affected by either type of manipulation. These results suggest that PPTg neurons conjunctively encode both reward and behavioral response information, and that the reward information is processed in a context-dependent manner.
It appears that the PPTg is involved in a functional neural circuit between hippocampus and the VTA.

PPTg responses to rewards tended to persist for the duration of reward consumption, whereas VTA cells show phasic high frequency burst firing to rewards just as rats encountered rewards. Also, the duration of the VTA response was short compared to the duration of reward consumption. Thus, while PPTg may initiate VTA dopaminergic reward-encounter responses, other intrinsic or extrinsic mechanisms must subsequently down regulate dopamine burst firing to rewards (perhaps via inhibitory input from accumbens or pallidum; Zahm & Heimer, 1990; Zahm et al., 1996).

Role of the lateral dorsal tegmentum (LDTg) in dopamine reward processing

The lateral dorsal tegmental nucleus (LDTg) is thought to importantly control dopamine phasic firing to rewards (Lodge & Grace, 2006). The LDTg provides glutamatergic, GABAergic, and cholinergic input to not only mesoaccumbens-projecting dopamine neurons but also the GABA neurons of the VTA (Clement et al., 1991; Cornwall et al., 1990; Forster & Blaha, 2000; Forster et al., 2002). LDTg stimulation results in dopamine cell burst firing and increased dopamine release in the nucleus accumbens while LDTg lesions reduce dopamine burst firing (Grace et al., 2007; Lodge and Grace, 2006). LDTg’s control over dopamine cell activity appears amplified since it also controls the extent to which activation of other afferent systems (e.g. PPTg) impacts burst firing by dopamine cells (Lodge & Grace, 2006). Although the LDTg is strategically situated to exert multiple forms of control over the adaptive responses of dopamine neurons, the nature of the information transmitted is not known. Stimulation of only those LDTg neurons that project to VTA dopamine neurons enhances conditioned place preferences, suggesting that LDTg relays reward-relevant information (Lammel et al., 2012). However, LDTg lesions result in the attenuation of drug-induced stereotypy and sensitization (Forster et al., 2002; Laviolette et al., 2000; Nelson et al., 2007), effects likely mediated via mesencephalic and medullary efferents that control patterned limb movements (Brudzynski et al., 1988; Mogenson et al., 1980). Here, we test the nature of LDTg neural codes in a task that a) has a strong and directed locomotion component, b) has specific reward-based econometric demands, c) induces VTA and PPTg neural responses to reward value (Jo et al., 2013; Martig et al., 2011b; Norton et al., 2011; Puryear et al., 2010), and d) requires VTA functionality (Martig et al., 2009, 2011a). In Experiment 1, the LDTg was inactivated while rats performed a spatial-reward discrimination task.
In Experiment 2, LDTg neural activity was recorded as rats performed the same task. LDTg neural responses were then compared to those of VTA and PPTg cells that were recorded in previous studies (Norton et al., 2011; Puryear et al., 2010).

2. Materials and methods

2.1. Subjects

Male Long-Evans rats (Simonsen Laboratories) were cared for and housed according to regulations from the University of Washington’s Institutional Animal Care and Use Committee and NIH guidelines. Behavioral testing occurred during the light phase of a 12:12 hr light:dark schedule. Water was continually available, but access to food was restricted to maintain rats at about 85% of their free feeding weights.

2.2. Apparatus

Behavioral training of a differential reward spatial memory task was conducted on an 8-arm radial maze as described previously (e.g. Norton et al., 2011; Puryear et al, 2010). The black Plexiglas maze consisted of a central platform (19.5 cm dia) that was elevated 79 cm off the ground with eight radially-extending arms (or alleys; 58 × 5.5 cm). At the end of alternating maze arms was a small receptacle that contained either small (100μL) or large (300μL) amounts of diluted Ensure chocolate milk. Licking the milk generated an electrical pulse that timestamped the exact time that the rat obtained the reward. Access to the chocolate milk rewards was controlled remotely by moving the proximal segment of individual maze arms up or down relative to the central platform. Visual cues were placed on the black curtains that surrounded the maze (Fig 1A).

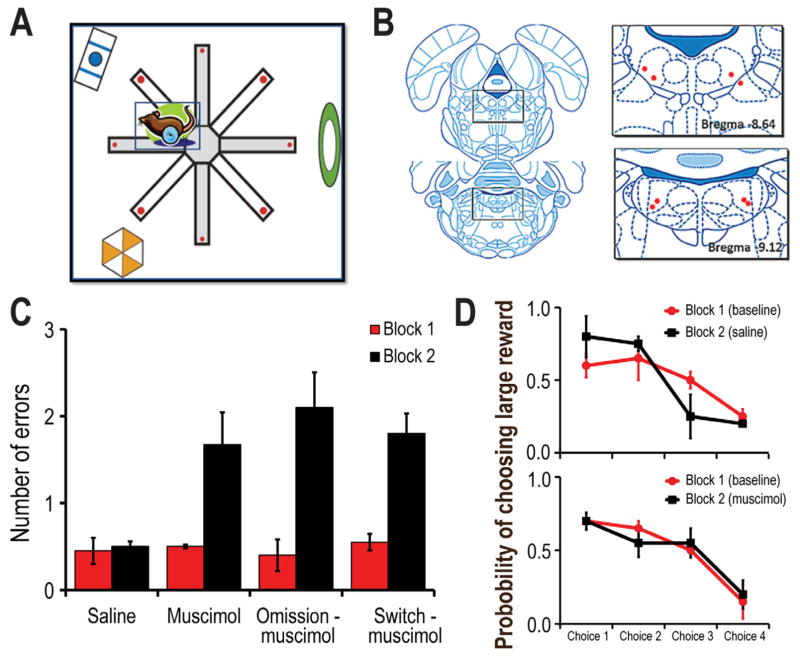

Figure 1.

A) Schematic illustration of a top-down view of the radial maze. The maze was enclosed by a black curtain with distinct visual cues that served to guide choices. Alternating maze arms contained large (big red dots) or small (small red dots) amounts of chocolate milk. B) Histological depiction of the cannula tips within the LDTg. C) Summary of the mean (+ SE) number of errors made per trial before (Block 1) and after (Block 2) either saline or muscimol infusion into the LDTg. Block 2 consisted of either no manipulation, reward omission, or reward switch conditions. Muscimol infusion significantly increased the number of errors regardless of the reward manipulation. D) Probability of choosing a large reward maze arm on choices 1–4 during the test phase of a session (in which only two large and two small reward arms remained to be selected). Top: Rats preferentially selected the large reward arm during the first and second choices of the test phase during baseline or saline trials. Bottom: Muscimol infusion did not impact rats’ preference for large rewards even though a significant increase in errors per trial was observed (see C).

2.3. Surgery

Experiment 1

Reversible inactivation of the LDTg was accomplished via local infusion of the GABA agonist, muscimol. Guide cannula were obtained from Plastics One (Roanoke, VA/C232-SP). Each guide cannula (22 ga) allowed for microinfusion of 0.1 μL of muscimol via an injection tube (C232I/SP 28 ga). Bilateral cannula were implanted after rats achieved asymptotic performance on the maze task. Rats were deeply anesthetized with isoflurane, then secured in a stereotaxic apparatus (Kopf Instruments). Cannula were placed near the dorsal tip of the LDTg (A-P: −8.8 anterior/posterior, M-L: +/− 2.5 medial/lateral, and D-V: 6 mm). Cannula assemblies were secured to the skull with acrylic cement.

Experiment 2

Rats were deeply anesthetized with isoflurane just prior to the surgical implantation of the recording assemblies. At that time, an antibiotic (Baytril, 5 mg/kg) and analgesic (Metacam, 1 mg/kg) were also administered. The skull was exposed and stereotaxically placed holes were made to allow for bilateral implantation of recording electrodes dorsal to the LDTg (A-P: −8.8 anterior/posterior, M-L: +/− 2.5 medial/lateral, and D-V: 4–5 mm). A reference electrode was also implanted near the anterior cortex (ventral to the brain surface 1–2mm), and a ground screw secured to the skull. The microdrives were then secured to the skull with screws and acrylic cement.

3. Behavioral testing

3.1. Differential Reward Behavioral Training

Rats were habituated to the testing environment by initially allowing unconstrained exploration of the maze until the randomly placed rewards were consistently consumed. Subsequently, training on the differential reward spatial memory task began. Such training consisted of two blocks of 5 trials (2 min intertrial interval). For each trial, rats collected the eight rewards by visiting each maze arm once. Repeat visits were considered errors. The first four choices of each trial comprised the Study phase in which four different arms were individually presented to the rat. The order of presentation was randomly determined for each trial except that two of the arms contained small rewards while the other two arms contained large rewards. Upon return to the central platform after the fourth (forced) choice, all eight of the maze arms were simultaneously available to begin the Test phase. At this time rats selected the four remaining rewards from un-entered arms. Reward locations for large and small rewards were held constant for individual rats. However the assignment of small and large reward locations was counterbalanced across rats. Presurgical maze training continued until rats performed 10 trials within an hour for each of three consecutive days.
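The error measure can be made concrete with a short sketch. It assumes, for illustration only, that each trial is logged as a list of forced Study-phase arms followed by the ordered list of arm entries made during the Test phase; the function name and the example arm numbers are hypothetical.

```python
def count_errors(study_arms, test_choices):
    """Count repeat visits (errors) during the Test phase of one trial.

    study_arms: the four arms presented during the Study phase.
    test_choices: arm entries made during the Test phase, in order.
    """
    visited = set(study_arms)
    errors = 0
    for arm in test_choices:
        if arm in visited:
            errors += 1        # re-entering an already visited arm is an error
        else:
            visited.add(arm)   # first entry into a still-baited arm
    return errors


study = [1, 3, 4, 6]          # forced choices (hypothetical arm numbers)
test = [2, 5, 1, 7, 7, 8]     # free choices; arms 1 and 7 are re-entered
print(count_errors(study, test))  # 2
```

Under this scoring, re-entry into either a Study-phase arm or an arm already chosen during the Test phase counts as an error.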

Following the surgical implantation of guide cannula or recording electrodes (see description above), trials 1–5 (Block 1) were conducted in a familiar environment according to previously learned task rules. Trials 6–10 (Block 2) tested behavioral and neural responses during conditions in which either the expected reward locations were switched such that locations that previously contained large rewards now contained the small reward (reward switch condition), or two (pseudorandomly determined) rewards (one small and one large) were omitted from the food cups during the Study phase (reward omission condition). On other days, trials 6–10 were identical to trials 1–5; comparison of unit activity across these blocks was used to verify the stability of the recordings and the behavioral correlates. The order of Block 2 conditions was randomly determined for each cell tested.

3.2. LDTg inactivation

Following recovery from surgery, rats were retrained on the familiar maze task until asymptotic performance was achieved (4 or fewer errors per block for 2 sessions). Rats were then habituated to the injection procedure with 1 control (saline, or SAL) infusion that took place 2 days before the LDTg inactivation protocol began. During infusion days, rats were removed from the maze after Block 1 of trials, then infused either with SAL or muscimol (MUSC; 1 μg/μL, 0.1 μL; Sigma-Aldrich). All infusions occurred at a controlled rate of 0.05 μL/min with the aid of a motorized syringe pump (KD Scientific, Holliston MA) during the intertrial interval between trials 5 and 6. Animals were placed into their home cages then returned to the maze 15 minutes later for Block 2 testing.

3.3. LDTg electrode preparation, neural recordings, and data analysis

Custom built microdrives held two tetrodes each (e.g. Martig et al., 2011a; Norton et al., 2011; Puryear et al., 2010). Each tetrode was made of four 25 μm tungsten wires that were gold plated to achieve impedances of 100 to 400 kΩ. Tetrodes were threaded through a 30 G stainless steel cannula guide so that they extended 2–3 mm beyond the cannula tip.

The rats were given one week after surgery to recover before retraining on the spatial memory task. The location of the rat on the maze was monitored by a camera located on the ceiling above the center of the maze. The camera detected the infrared diodes connected to the rat’s head stage (sampling rate=30 Hz; pixel resolution=2.5 cm). During retraining, the recording electrodes were slowly lowered to the target region of the LDTg (no more than about 250 μm per day) such that the electrodes were situated within the dorsal aspect of the LDTg by the time rats consistently performed 10 maze trials daily (two blocks of 5 trials each). All cellular recordings were made using the digital Neuralynx data acquisition system (Neuralynx, Bozeman, MT), and subsequently sorted into signals from individual cells with the aid of an Offline Sorter routine (Plexon, Inc., Dallas TX) that allows segregation of spikes based on clustering parameters such as spike amplitude, spike duration, and waveform principal components. Cells were analyzed further if the waveform amplitude was at least three times that of the background cellular activity, and if the cluster boundaries were consistent across trials of a session.

The behavioral correlates of unit activity were analyzed using custom Matlab software (MathWorks Inc. Natick, MA). Given our hypothesis that the LDTg regulates VTA dopamine cell responses to reward in this task, reward-related responding was evaluated for LDTg cells using the same methods that were used to identify VTA reward responses in prior studies (e.g. Jo et al., 2013; Puryear et al., 2010). Neural data were organized into perievent histograms (PETHs) that were centered on the time of reward encounters (± 2.5 sec; 50 ms bins). A significant reward response showed: 1) peak firing rates that occurred within 150 ms of reward acquisition, and 2) peak rates that were significantly greater than the average firing rate for the block of trials being evaluated (Wilcoxon signed-rank test, p<.05).
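The perievent analysis can be sketched as follows. The original analysis used custom Matlab software that is not reproduced here, so this Python version is only an illustration under stated assumptions: spike and reward times are given in seconds, the function names are hypothetical, and only the first criterion (peak latency within 150 ms) is checked. The signed-rank comparison against the block's mean rate is omitted.

```python
def peth(spike_times, event_times, window=2.5, bin_width=0.05):
    """Mean firing rate (spikes/s) in each bin of a perievent histogram.

    Bins span [-window, +window] seconds around each event.
    """
    n_bins = round(2 * window / bin_width)
    counts = [0] * n_bins
    for ev in event_times:
        for s in spike_times:
            offset = s - (ev - window)           # time since window start
            if 0 <= offset < 2 * window:
                idx = min(int(offset / bin_width), n_bins - 1)
                counts[idx] += 1
    scale = len(event_times) * bin_width         # converts counts to spikes/s
    return [c / scale for c in counts]


def peak_latency(rates, window=2.5, bin_width=0.05):
    """Centre of the peak bin relative to the event, in seconds."""
    peak_bin = max(range(len(rates)), key=rates.__getitem__)
    return (peak_bin + 0.5) * bin_width - window


# Hypothetical data: two reward encounters and a handful of spikes.
rewards = [10.0, 20.0]
spikes = [10.02, 10.03, 12.01, 17.3, 20.01, 20.04]
rates = peth(spikes, rewards)
print(abs(peak_latency(rates)) <= 0.150)  # True: peak lies within 150 ms
```

With 50 ms bins the latency is resolved only to the bin centre, so the 150 ms criterion corresponds to the three bins on either side of the reward encounter.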

VTA cells that are recorded in the same task as the one used in this experiment also show strong correlations with an animal’s velocity (Puryear et al., 2010). Thus, given the proposed role of the LDTg in locomotion (e.g. Shabani et al., 2010), we correlated the firing rates of LDTg neurons with the velocity of the animals as they traversed the maze. Based on animal tracking data that were collected during the recording sessions (30 Hz), we determined the ‘instantaneous’ velocity of the animal by dividing the distance between two consecutive tracked points by the inverse of the video sampling rate (Gill & Mizumori, 2006; Puryear et al., 2010; Yeshenko et al., 2004). Each cell’s firing rate was then correlated with these velocity measures (Pearson’s linear correlation; α = .05) within the range of 3–30 cm/s. A minimum cutoff was employed so that the velocity analysis did not include times when the animal was not moving, i.e. when rewards were being consumed.
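The velocity analysis can be sketched in a few lines. This is an illustrative reconstruction rather than the original Matlab code: it assumes positions in cm sampled at 30 Hz and a firing rate already computed for each inter-frame interval (how rates were binned is not specified above). The function names and example values are hypothetical, and the significance test at α = .05 is not included.

```python
import math


def instantaneous_velocity(positions, fs=30.0):
    """Speed (cm/s) between consecutive (x, y) positions sampled at fs Hz."""
    dt = 1.0 / fs                                # inverse of the sampling rate
    return [math.dist(p, q) / dt for p, q in zip(positions, positions[1:])]


def pearson_r(x, y):
    """Pearson's linear correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def velocity_correlation(velocities, rates, vmin=3.0, vmax=30.0):
    """Correlate firing rate with velocity inside the 3-30 cm/s range."""
    pairs = [(v, r) for v, r in zip(velocities, rates) if vmin <= v <= vmax]
    v, r = zip(*pairs)
    return pearson_r(v, r)


# Hypothetical track: the rat accelerates along x, then stops at the reward.
track = [(0.0, 0.0), (0.2, 0.0), (0.6, 0.0), (1.2, 0.0), (2.0, 0.0), (2.0, 0.0)]
rates = [2.0, 4.0, 6.0, 8.0, 20.0]   # last value: firing while stationary
vel = instantaneous_velocity(track)  # about 6, 12, 18, 24 and 0 cm/s
print(round(velocity_correlation(vel, rates), 3))  # 1.0
```

The stationary sample, where firing is high during reward consumption, falls below the 3 cm/s cutoff and is excluded, which is the purpose of the minimum velocity described above.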

3.4. Histology

After completion of testing, the rats were overdosed with a pentobarbital sodium and sodium phenytoin mixture (50 mg/ml; 1.0 cc; Beuthanasia, Schering-Plough Animal Health, Union, NJ). To mark electrode tracks, lesions were created by passing a current of 0.01 mA for 25 seconds through each recording channel. The rats were then perfused with 30 mL of 0.1% phosphate buffered saline followed by 30 mL of 10% of a formalin/saline solution. The brain was sunk overnight in 30% sucrose. Coronal brain slices (40 μm) were taken with a vibratome. The sections were stained with cresyl violet, and then digitized to determine the exact location of the lesions and electrode tracks. The locations of recorded cells were determined using standard histological reconstruction methods. Only data from cells that were identified to be recorded in the LDTg were used for subsequent analysis.

4. Results

4.1. Experiment 1: LDTg inactivation impairs accurate choice behavior

Placement of the guide cannula in the LDTg was verified histologically for rats that completed all behavioral test sessions (Fig. 1B). On different days, the LDTg of each rat (n=4) was infused with either SAL or MUSC prior to each of three Block 2 conditions (no reward manipulation, reward omission, reward location switch). Since the testing conditions were familiar to the rats during Block 1 trials, their performance during this block was considered as the baseline for comparison to Block 2. Figure 1C illustrates that LDTg inactivation produced a significant increase in errors regardless of the nature of the Block 2 condition. A repeated measures ANOVA revealed a significant block effect [F(1,12) = 39.76, p<.001] and a significant interaction effect between manipulation groups and block condition [F(3,12) = 4.43, p<.03]. Post hoc Tukey tests showed that animals that were infused with muscimol made more errors during Block 2 compared to Block 1 (p<.05). Given the potentially prominent role of the LDTg in the control of locomotion, it is worth noting that LDTg inactivation did not produce an observable lack of motor coordination, although it did increase the time to complete a trial during Block 2 compared to Block 1 as demonstrated by a significant main effect of block [F(1,12) = 32.95, p<.001]. The longer latency likely did not reflect general disorientation but rather the extra time that it took to find all of the correct locations. Evidence supporting this conclusion is that both SAL and MUSC treated rats showed the same accuracy when preferentially choosing large reward arms during the first two free choices of the Test Phase (Fig. 1D). Thus, while LDTg inactivation altered neither reward discrimination nor learned reward-location associations per se, it resulted in impaired trial-specific memory.

4.2. LDTg neural codes during differential reward spatial task performance

Five additional rats completed the behavioral training regimen described above, and contributed histologically verified (Fig. 3A) LDTg single unit data to the analysis described below.

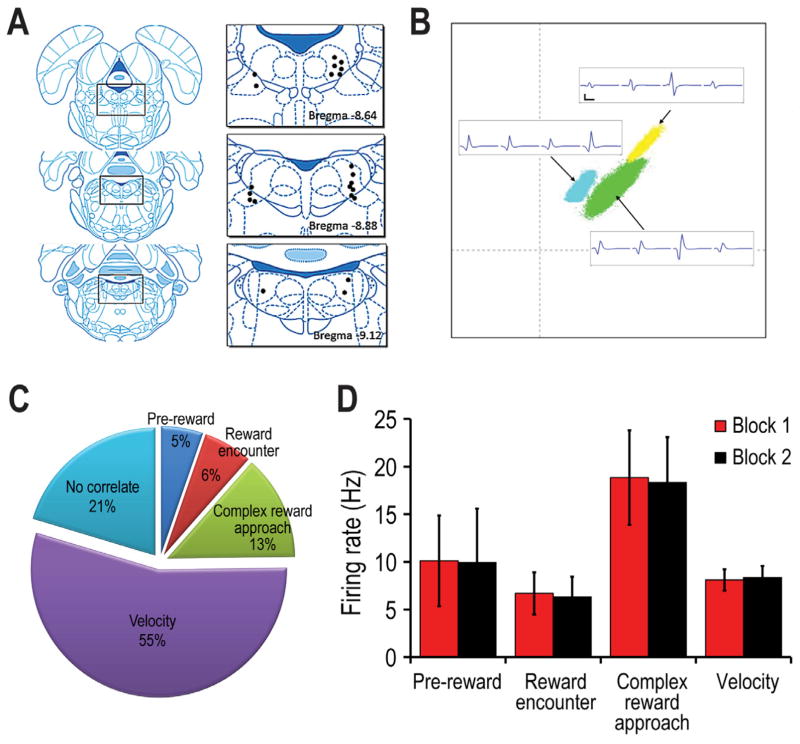

Figure 3.

A) Histological summary of LDTg recording sites. Multiple cells were recorded at the same site, and these are represented by a single dot. B) Sample cluster analysis that compares specific features of LDTg spikes, along with corresponding analog traces of LDTg neural signals. (Calibration: 500 μsec, 50 μV) C) The proportion of recorded LDTg cells whose firing was correlated with reward (pre-reward + velocity, reward encounter + velocity, complex reward approach) or velocity (only). 21% of the LDTg cells showed no clear correlate (NCC). D) The mean (+ SE) firing rate of the different categories of LDTg neurons when measured across the whole recording session (see text for explanations).

Behavioral performance

LDTg single unit activity was recorded as rats performed at asymptotic levels in terms of choice accuracy. During baseline performance (Block 1), rats made on average 0.71 errors per trial (Fig. 2A). Consistent with past reports (e.g. Norton et al., 2011; Puryear et al., 2010) and with data in Figure 1D, rats showed a significant preference for maze arms with large rewards early in the Test phase of Block 1 trials (Fig. 2B) of baseline and reward omission trials. However, when the locations of the large and small rewards were switched, rats initially chose locations that were previously associated with large rewards. This is shown by the lower preference for choosing the (new) locations of large reward arms in Block 2 (F(3,24) = 18.66, p<.001). This finding shows that, rather than following any sort of sensory cue emanating from the reward itself, rats’ choices were guided by the learned association between a location and rewards of particular magnitudes. Figure 2C compares the velocity of movement as rats approached the reward locations and during the first 2 sec after rewards were encountered. Rats ran faster toward expected large rewards than toward expected small rewards (t(4) = 11.26, p < 0.001).

Figure 2.

A) Summary of the mean (+SE) number of errors made per trial during Blocks 1 and 2 of testing as LDTg single unit activity was recorded. Block 2 included either reward switch or reward omission conditions. Neither manipulation significantly impacted the error count. B) Top Similar to Figure 1D, rats preferentially selected the large reward arms during the test phases of the trials. Random reward omission did not change this preference. Bottom Switching the location of the large and small rewards resulted in a significant reduction in choices to maze arms that previously contained large rewards, indicating that the expectation for rewards of particular magnitudes significantly affected choice behavior. C) Mean (+/− SE) velocity of the rats before and after they arrived at the reward location (T0). It can be seen that the peak velocity was higher when rats approached locations that were expected to have large rewards as compared to approaches to locations that contained small rewards.

LDTg Cell Firing Characteristics: A total of 176 units were recorded from 5 rats. Many of these units were tested in more than one session in order to verify the reliability of the unit-behavioral correlates. Thus the following analysis was conducted for 113 unique and histologically verified recorded LDTg neurons (Fig. 3A). Figure 3B exemplifies the simultaneous recording of multiple LDTg neurons. About 79.6% (90/113 cells) of recorded LDTg cells showed significant variations in firing rate relative to specific aspects of task performance (Fig. 3C). A large segment of the recorded cells showed significant correlations with the ongoing velocity of a rat’s movement across the maze (velocity cells) and/or firing relative to encounters with reward (reward cells). A number of cells showed dramatically elevated firing prior to reward encounters (complex (multiphasic) reward approach cells). The mean firing rates of the cells of different behavioral categories are shown in Fig. 3D. There was no evidence for location-selective LDTg neural firing.

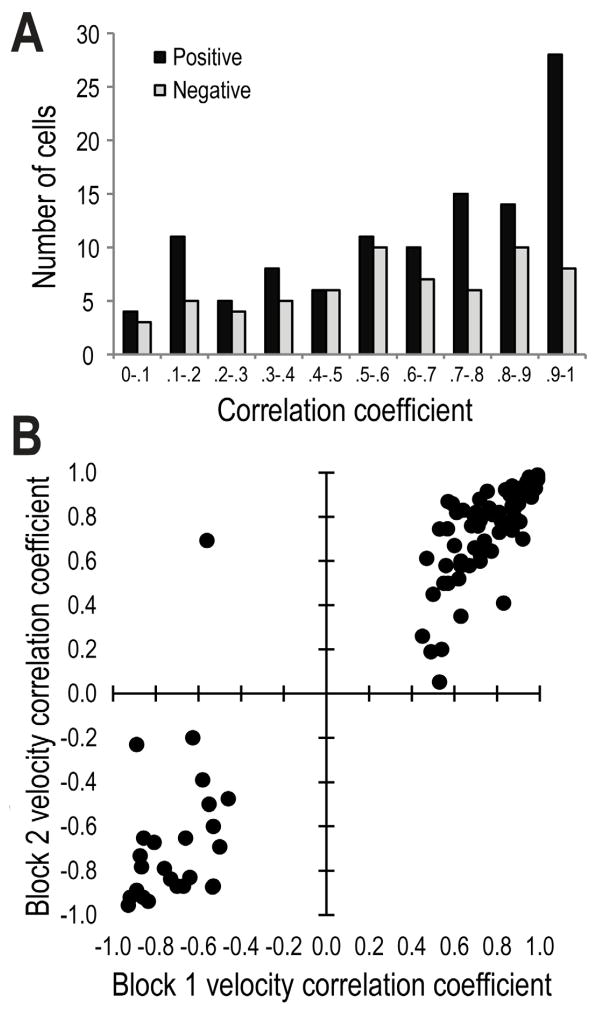

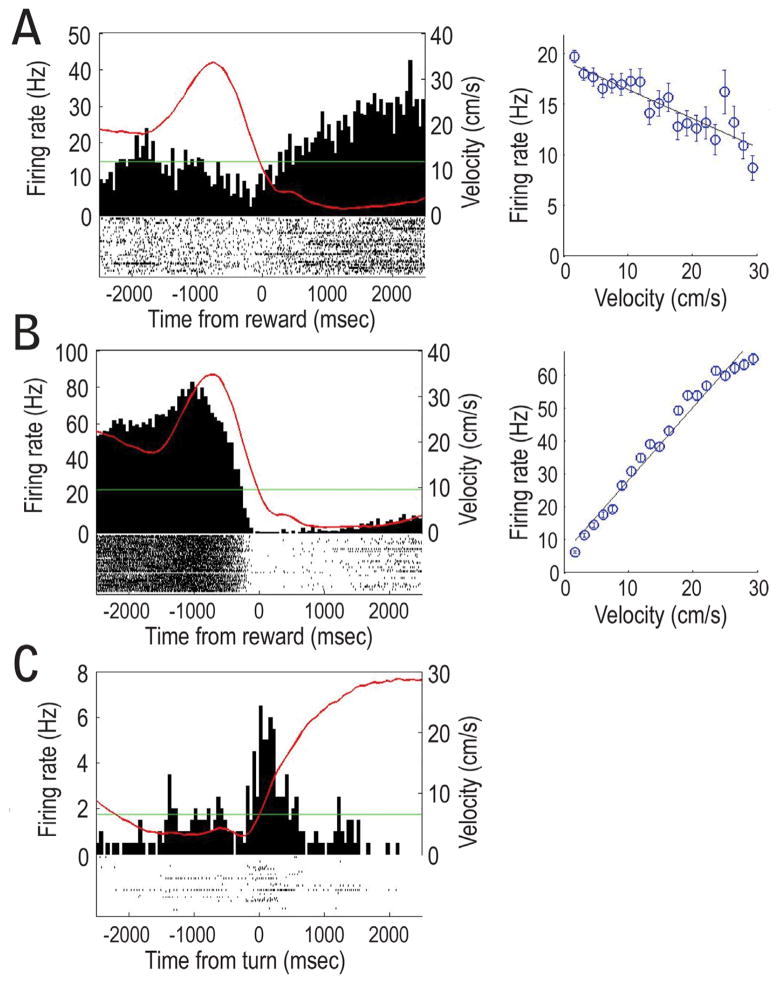

MOVEMENT-RELATED LDTg RESPONSES were observed for a majority of LDTg neurons (70%: 79/113; 62 were correlated to only velocity while 17 were correlated with both reward and velocity). Velocity correlations were observed to be strongly positive or negative (ranging from +0.99 to −0.97; Fig. 4). However, cells that showed the strongest correlation with velocity (e.g. r > + 0.50) tended to be positively correlated rather than negatively correlated. As a group, the strength of the velocity correlation did not change from Block 1 to Block 2 (Fig. 4). Also, the mean firing rates of these velocity-correlated cells were not affected by the experimental manipulations of Block 2 (Fig. 3D). Figures 5A and B provide individual cell examples of strong negative and positive correlations between firing rates and the velocity of movement as the rat headed outbound on maze arms (T0 corresponds to the time of reward encounters at the ends of maze arms). A minority (n=10) of the velocity cells fired relative to specific behaviors of the rat, such as when it made 180 degree turns at the arm ends (Fig. 5C). Seventeen velocity-correlated cells also showed significant responding to reward. These conjunctive velocity-reward cells are described below.
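The velocity correlation described above can be illustrated with a minimal sketch. It assumes that spike times and velocity samples share a common time base and that firing rate is computed in fixed-width bins; the bin width, the function names (`binned_rates`, `pearson_r`), and the example values are hypothetical and are not taken from the analysis pipeline used in this study.

```python
from math import sqrt

def binned_rates(spike_times, t_start, t_stop, bin_s):
    """Count spikes in consecutive bins of width bin_s and convert to Hz."""
    n_bins = int(round((t_stop - t_start) / bin_s))
    counts = [0] * n_bins
    for t in spike_times:
        idx = int((t - t_start) // bin_s)
        if 0 <= idx < n_bins:
            counts[idx] += 1
    return [c / bin_s for c in counts]

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

# Hypothetical data: four 1-s bins, velocity sampled once per bin (cm/s).
spikes = [0.1, 1.1, 1.5, 2.1, 2.4, 2.7, 3.1, 3.3, 3.5, 3.7]
velocity = [10.0, 20.0, 30.0, 40.0]
rates = binned_rates(spikes, 0.0, 4.0, 1.0)
r_value = pearson_r(rates, velocity)
```

A positive `r_value` corresponds to a cell that fires more as the animal moves faster, and a negative value to a cell that fires less; both signs were observed among the recorded cells.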

Figure 4.

A) Frequency histogram of R-values that reflect the correlation between the firing rates of LDTg neurons and an animal’s velocity of movement across the maze (whole session data). A large proportion of these cells showed very strong correlations (R-value of >.70) especially for cells with positive correlations. B) Scatterplot of the significant R-values (> about + 0.40) for Block 1 trials relative to Block 2 trials. R-values were not significantly different between Blocks 1 and 2.

Figure 5.

A) An example of LDTg neural firing that was negatively correlated with movement velocity. The red line in the histogram reflects the rat’s velocity of movement as it approached the reward location. The scattergram shows the negative correlation between firing rate of the cell on the left and the velocity of movement. B) An example of a strongly positive correlation between LDTg firing and velocity. C) Sensitivity of LDTg cells to egocentric behaviors was also evident when rats made 180 degree turns at the ends of the maze arms (T0). This cell responded during the turns made at the end of the maze arms.

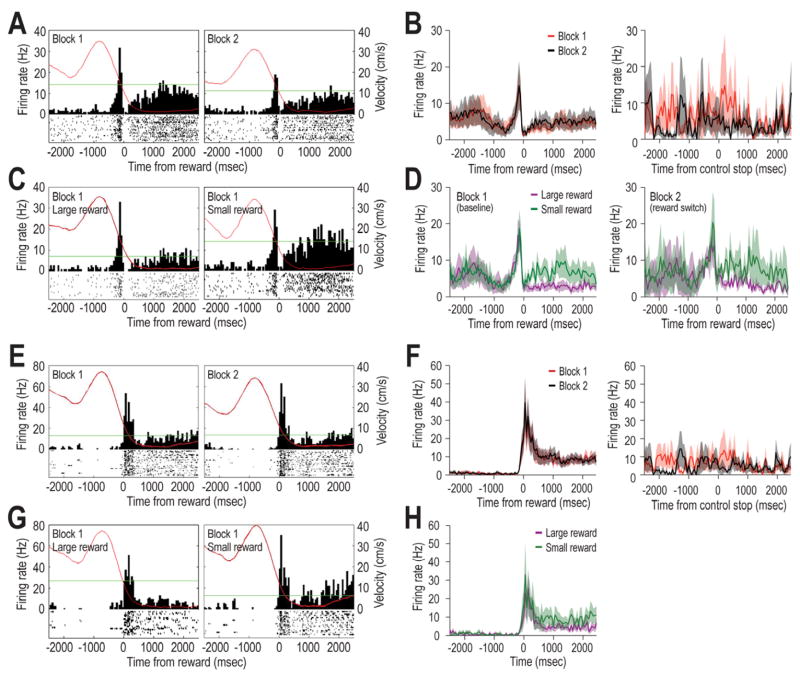

REWARD-RELATED LDTg RESPONSES were observed for 25% of recorded cells. Of these, 6/113 cells (5%) showed a peak response just before reward encounters (pre-reward cells), and 7/113 cells (6%) burst fired shortly after the onset of reward encounter (reward encounter cells). Figures 6A and 6E provide individual cell responses as examples. A third pattern (referred to as complex reward approach responses) showed neural activity that included a combination of excitatory and inhibitory responses close to the time of reward (examples shown in Fig. 7; n= 15; 13%) as well as phasic responding leading up to the reward (between 500 and 1000 ms prior to reward, Fig. 7A and 7B). The average (whole session) mean firing rates were found to vary significantly across the different categories of reward-related cells (Fig. 3D) and this difference did not change across Blocks 1 and 2 (F (2, 95) = .88, p>.05). No significant differences were observed in terms of reward responsiveness after reward switch or omission manipulations. Therefore, data from these experimental conditions were combined, and will be referred to generally as Block 2 effects.

Figure 6.

Examples of reward responses of LDTg neurons. A) LDTg pre-reward cells showed bursts of firing just prior to reward encounters (T0) during Blocks 1 and 2 of maze testing. B) A population summary illustrates that the pre-reward responses of LDTg neurons did not change in Block 2, and this was the case regardless of whether Block 2 included reward switch or reward omission trials. The Control Stop population histogram (right) shows firing when the rat stopped forward movement during times other than when rewards were encountered (e.g. on the central platform of the maze; T0). No distinct bursts were observed in these cases, indicating that the burst firing by pre-reward cells was not due to cessation of movement per se. C) A pre-reward cell that fired similarly before reward encounters regardless of the subsequent magnitude of reward. However this cell differentially responded during the consumption of small and large rewards, a pattern that was also reflected in the population summary shown in D). The right histogram in D) illustrates that during the reward switch condition, pre-reward cells continued to fire selectively during the consumption of small rewards even when the reward was in a new location on the maze. E) Reward encounter cells fired bursts just after reward encounters (T0). This burst did not change between Blocks 1 and 2 (reward omission trials). F) The population histogram illustrates the generality of the lack of response to unexpected reward omissions. The right histogram illustrates that the burst response at reward encounters was not due to the cessation of forward movement per se. G) An LDTg cell that did not distinguish encounters with large and small rewards. H) This lack of discrimination of reward magnitudes was also evident in the population response. Similar to pre-reward cells, reward encounter cells showed significantly elevated firing during the subsequent consumption of small, but not large, rewards. 
Inadvertently, reward switch trials were not conducted while recording reward encounter cells.

Figure 7.

Individual cell examples of complex reward approach neural correlates of LDTg neurons (T0 = food encounter; red lines reflect the animal’s velocity relative to food encounters). A and B) Two examples of dramatically increased LDTg firing as the rat ran outbound on maze arms toward the reward. The firing rate was not correlated with the rat’s velocity, and the peak rates occurred well outside the typical time window for classification as a reward responsive neuron. These correlates did not change when the locations of large and small rewards were switched (Block 2). C) Other complex reward approach neurons showed a multiphasic response around the time of reward encounter. As rats started to go down a maze arm, the firing of this cell was significantly reduced, and this quiet period was quickly followed by a short period of very high firing, which in turn was followed by another brief period of inhibition just prior to the reward encounter. Just after encounters with rewards, cell firing again was elevated briefly. This complex pattern of phasic responding did not change after the reward manipulations, nor did it differ between large and small reward choices.

Fig. 6A shows a pre-reward response of an LDTg neuron that appeared to attenuate during Block 2. This apparent effect, however, was not common since the population summary (Fig. 6B, left graph) shows that there was no consistent change across blocks. The population histogram in the far right panel of Figure 6B illustrates that there was no specific response of pre-reward cells to the cessation of forward movement per se (T0 = control stop) since no change in firing was observed when the rat stopped forward movement at non-rewarded locations. This shows that the pre-reward response was not a mere reflection of the cessation of forward movement. Fig. 6C compares responses of a representative LDTg neuron prior to receiving a large or small reward during Block 1. It can be seen that the pre-reward neural responses were similar regardless of the expected reward magnitude (e.g. small vs large reward), but that the firing rate was higher when consuming a small, rather than large, reward (Wilcoxon; z=−6.69, p<.01). This pattern was also observed in the population summary (Fig. 6D, left panel; Wilcoxon; z = −1.99, p<.05), indicating that the same LDTg neurons code reward expectation as well as consumption of relatively small rewards. Three of the pre-reward cells were tested for their response after the expected locations of the large and small reward were switched. As can be seen in the right graph of Figure 6D, these pre-reward cells continued to increase firing during the consumption of small rewards even when it was found in a different location (Wilcoxon; z =−6.26, p<.01).

Fig. 6E shows a reward encounter cell response that did not change across blocks of trials, and this is confirmed in the adjacent population histogram (Fig. 6F). The reward encounter responses could not be accounted for by the cessation of movement per se since no such response was observed in the population histogram in the fourth column (T0 = control stop). The bottom row (Fig. 6G) illustrates that the reward encounter response did not differ depending on the magnitude of reward, and this is also verified in the adjacent population summary (Fig. 6H). However, similar to the pre-reward cells, reward encounter cells discriminated between consumption of large and small rewards by exhibiting higher firing rates during small reward consumption (Wilcoxon; z = −6.02, p<.01), although to a lesser degree than pre-reward cells. In summary, a) pre-reward and reward encounter responses were not due to the fact that rats ceased forward movement at the time of reward, b) there was no evidence that LDTg neurons signal reward prediction errors (since responses did not change during the reward omission condition), and c) pre-reward and reward encounter neurons showed greater responses toward the smaller rewards.
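The event-aligned histograms referred to above (firing relative to T0, where T0 is either a reward encounter or a control stop) can be sketched as follows. This is an illustrative reconstruction under stated assumptions, not the authors' code: the window limits, bin width, function name `peri_event_histogram`, and example times are all hypothetical.

```python
def peri_event_histogram(spike_times, event_times, window=(-1.0, 1.0), bin_s=0.5):
    """Mean firing rate (Hz) per bin, aligned to each event time (T0)."""
    n_bins = int(round((window[1] - window[0]) / bin_s))
    counts = [0] * n_bins
    for t0 in event_times:
        for t in spike_times:
            rel = t - t0  # spike time relative to T0
            if window[0] <= rel < window[1]:
                idx = int((rel - window[0]) // bin_s)
                counts[min(idx, n_bins - 1)] += 1  # guard against float rounding
    # Normalize by number of events and bin width to obtain a rate.
    return [c / (len(event_times) * bin_s) for c in counts]

# Hypothetical example: a cell that fires just before two reward encounters.
spike_times = [9.9, 19.8, 19.9]
reward_times = [10.0, 20.0]
hist = peri_event_histogram(spike_times, reward_times)
```

Running the same function with control-stop times in place of `reward_times` gives the comparison used to rule out cessation of movement as the source of the burst.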

Given the heterogeneous nature of LDTg neuronal types, it was of interest to assess whether the morphology of LDTg neural signals (e.g. standard biphasic, triphasic, inverted biphasic) or spike duration was associated with a particular behavioral correlate. No clear relationships were found with respect to reward codes: cells with biphasic, inverted biphasic or triphasic response profiles responded to rewards, both with and without velocity correlations. Interestingly, velocity (only) cells showed a greater proportion of biphasic (normal and inverted) signals compared to triphasic signals (χ²(2) = 17.43, p<.001). Thus, our analysis suggests that while reward-related information is likely relayed from the LDTg by different types of cells, cells that code only movement velocity could represent a unique cell population.

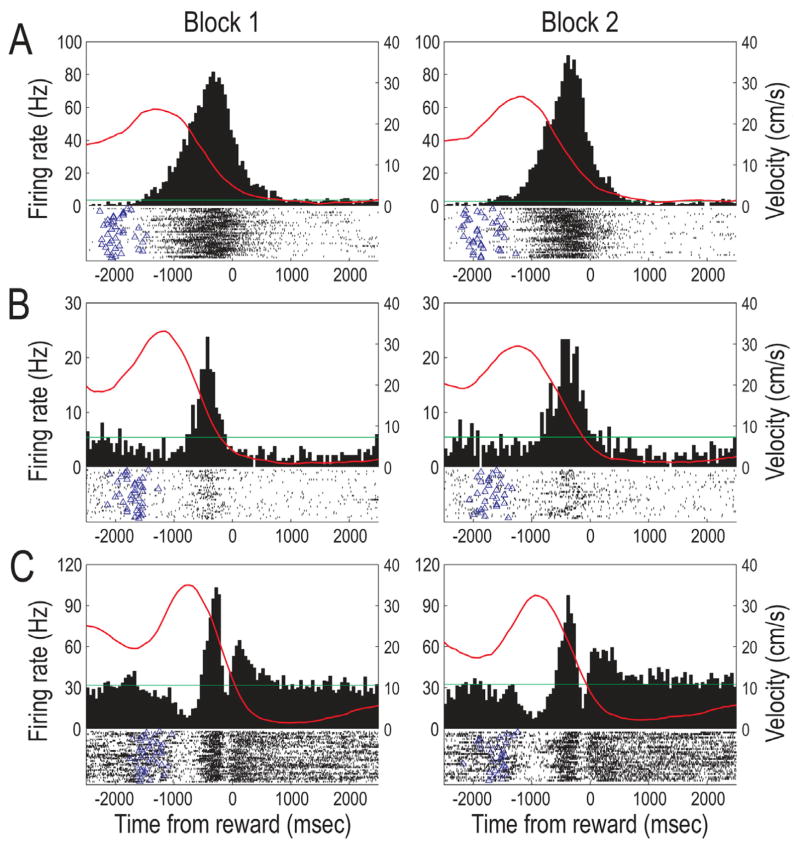

CONJUNCTIVE MOVEMENT AND REWARD RESPONSES were observed for a portion of the velocity and reward responsive cells described above (60.7%, or 17/28, of all reward cells and 21.5%, or 17/79, of all velocity cells). It was of interest to determine whether reward or velocity was differentially coded by these conjunctive reward-movement cells compared to LDTg cells that showed only velocity or only reward codes. Both pre-reward and reward encounter responses were exhibited by conjunctive cells, and these reward responses did not change after either reward manipulation. To determine whether the velocity codes differed between conjunctive cells and cells that coded only velocity, we compared the r-values of the velocity correlations for pre-reward conjunctive cells, reward encounter conjunctive cells, and velocity (only) cells. Figure 8A (top panel) compares the absolute values of the velocity correlation for pre-reward, reward encounter, and velocity (only) categories of LDTg neural correlates as a function of Block 1 vs 2 trials. Scatter plot insets show individual cell data along the range of correlation values from −1.0 to 1.0 (Red dots: pre-reward cells; blue dots: reward encounter cells). While the velocity correlation did not change across Blocks of trials (p>.05), reward encounter cells showed significantly higher velocity correlations than either pre-reward or velocity only cells, F(2,71) = 16.87, p<.01 (Tukey test: p<.01 for each post hoc comparison). From an examination of the scatterplot distribution of the cells’ correlation scores, it is clear that pre-reward cells showed firing that was either very strongly positively or very strongly negatively correlated with velocity. In contrast, the reward encounter cells tended to show mostly negative correlations with velocity.

Figure 8.

Comparison of the velocity correlation for LDTg, PPTg, and VTA neurons. A) (Top) The mean (+SE) velocity correlation coefficient is shown for pre-reward, reward encounter, and velocity (only) LDTg neurons. Velocity correlations for the entire recording session were compared between Blocks 1 and 2. While there were no effects of Block, reward encounter cells were more strongly correlated with velocity than pre-reward or velocity (only) cells. The scatterplot inset compares individual cell correlations for Block 1 vs Block 2. Pre-reward cells (shown by the red dots) were either strongly positively or strongly negatively correlated with velocity. In contrast, reward encounter cells (blue dots) were only negatively correlated with velocity. (Bottom) The velocity correlation of LDTg neurons as rats approached reward locations on the maze (outbound), or as rats began to move inward on the maze arms towards the central platform (inbound) after making a 180 degree turn at the maze arm ends. Pre-reward cell firing was more strongly correlated with velocity when rats moved outbound than inbound, an effect not observed for reward encounter or velocity (only) cells. [Note: a single dot in the scattergram inset may correspond to more than one cell with the same r-value] The velocity correlation value is much higher for reward encounter cells in the top figure because that summary included velocities during behaviors that were not included in the bottom figure (e.g. turns at the arm ends and crossing the central platform). B) Pedunculopontine (PPTg) neural activity that was reported in a study by Norton et al. (2011) was reanalyzed here so that direct comparisons could be made to the LDTg responses. Top In contrast to LDTg responses, PPTg reward encounter cells (bar graphs) showed weaker correlations relative to pre-reward and velocity (only) cells. 
The scatterplot shows that while pre-reward PPTg cells (red dots) showed only positive correlations that were not sensitive to reward manipulations that occurred in Block 2 trials, the activity of PPTg reward encounter cells (blue dots) was more weakly positively or negatively correlated with velocity. Bottom Unlike LDTg, PPTg neurons did not show different velocity correlations during outbound and inbound movement trajectories. The scatterplot, however, illustrates that while some of the PPTg neurons showed stronger velocity correlations in the outbound direction, many did not. C) Ventral tegmental (VTA) neural activity that was acquired by Puryear et al. (2010) was reanalyzed here so that direct comparisons could be made to the LDTg and PPTg responses. Top Similar to PPTg, there was no change across Blocks of trials in terms of the velocity correlation coefficient for any type of cell response. However, velocity (only) cells showed stronger correlations with velocity than the other cell types, especially the reward encounter cells. In the scatterplot inset it can be seen that while reward encounter cells (blue dots) showed a range of positive and negative correlations with velocity, pre-reward cells (red dots) showed predominantly positive correlations. Bottom Also similar to PPTg neural responses, there was no significant difference in velocity correlation as rats moved in the outbound and inbound directions. D) A comparison of the behaviors of rats from this (LDTg) and our earlier studies of PPTg and VTA neural activity is shown in terms of the velocity of the rats’ movements toward (left) and away from (right) the goal location. It can be seen that the pattern of changes in velocity is nearly identical. Thus, the differential responses of these groups of cells during outbound and inbound trajectories cannot be accounted for by different patterns of behaviors across the studies. 
E) Mean velocity for the LDTg, PPTg, and VTA populations of recorded neurons during outbound (left) and inbound (right) trajectories. It can be seen that the average firing rates did not differ across groups, or as a function of direction of movement.

Given the hypothesis that the LDTg may be important for specific goal-directed behaviors (e.g., Shabani et al., 2010), we examined whether velocity correlations were strongest during goal-directed behaviors. It was reasoned that in the present task there are different expectations for the outcomes of behavior when rats moved toward rewards (outbound journeys on maze arms) and away from the rewards (inbound journeys on maze arms). Thus, these different trajectories of movement may be associated with different degrees of velocity-correlated cell firing. The bottom panel of Figure 8A compares the velocity correlation as a function of movement in the outbound (2 s prior to reward encounters) or inbound (2 s after rats turned around at the arm ends) directions on maze arms. As was found when velocity correlations were analyzed for the whole recording session, a multifactor ANOVA revealed significant differences in velocity correlation across pre-reward, reward consumption, and velocity (only) groups of cells, F(2,71) = 5.45, p<.01 (Tukey test: p<.01; bottom graph). Specifically, velocity (only) cells showed stronger velocity correlations compared to reward encounter cells (p<.01). Generally, outbound correlations of reward cells were higher than inbound correlations, F(1,2) = 3.96, p=.05, and this effect was most striking for the pre-reward and reward encounter cells (Tukey test: p<.05). The scatterplot of individual cells’ data confirms the stronger correlations in the outbound direction for the pre-reward cells.

The strong velocity correlations demonstrate that LDTg cells are precisely tuned to the ongoing behavior of the animals. It could be argued that this result is confounded by the reward response since reward-related firing occurred only at times when forward movement was very low or nonexistent. This is not likely to be the case since the outbound velocity correlation was based on spikes collected earlier than 300 ms prior to reward encounters. Thus the outbound correlations reflected only active movement as rats approached rewards. The higher correlation for outbound trajectories was present for approaches to both large and small rewards, and this is consistent with the above data indicating that LDTg neural codes are not affected by an animal’s expectations for a particular reward magnitude. In sum, then, the pattern of results supports the view that while the LDTg codes aspects of locomotion in terms of movement velocity generally, the precision of the movement code varies depending on whether or not the movement is goal-directed.
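The outbound and inbound windows described above can be made concrete with a short sketch. It assumes a single trial with binned firing rate and velocity on a shared time axis; the outbound window spans the 2 s before the reward encounter but excludes the final 300 ms, and the inbound window spans the 2 s after the turn. The function names, the 0.25-s sampling, and the example values are hypothetical, and how trials were pooled in the actual analysis is not specified here.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def select_window(times, values, start, stop):
    """Keep the values whose sample time satisfies start <= t < stop."""
    return [v for t, v in zip(times, values) if start <= t < stop]

def directional_correlations(times, rates, velocity, t_reward, t_turn,
                             span_s=2.0, exclude_s=0.3):
    """Velocity correlation for outbound and inbound segments of one trial."""
    out_win = (t_reward - span_s, t_reward - exclude_s)  # drop last 300 ms
    in_win = (t_turn, t_turn + span_s)
    r_out = pearson_r(select_window(times, rates, *out_win),
                      select_window(times, velocity, *out_win))
    r_in = pearson_r(select_window(times, rates, *in_win),
                     select_window(times, velocity, *in_win))
    return r_out, r_in

# Hypothetical trial: reward encounter at 2.0 s, turn completed at 3.0 s.
times = [i * 0.25 for i in range(24)]
velocity = [float(i % 5) for i in range(24)]
rates = [2 * v if t < 2.5 else 10 - v for t, v in zip(times, velocity)]
r_out, r_in = directional_correlations(times, rates, velocity, 2.0, 3.0)
```

Comparing the absolute values of `r_out` and `r_in` across cells is one way to express the outbound versus inbound contrast reported for pre-reward cells.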

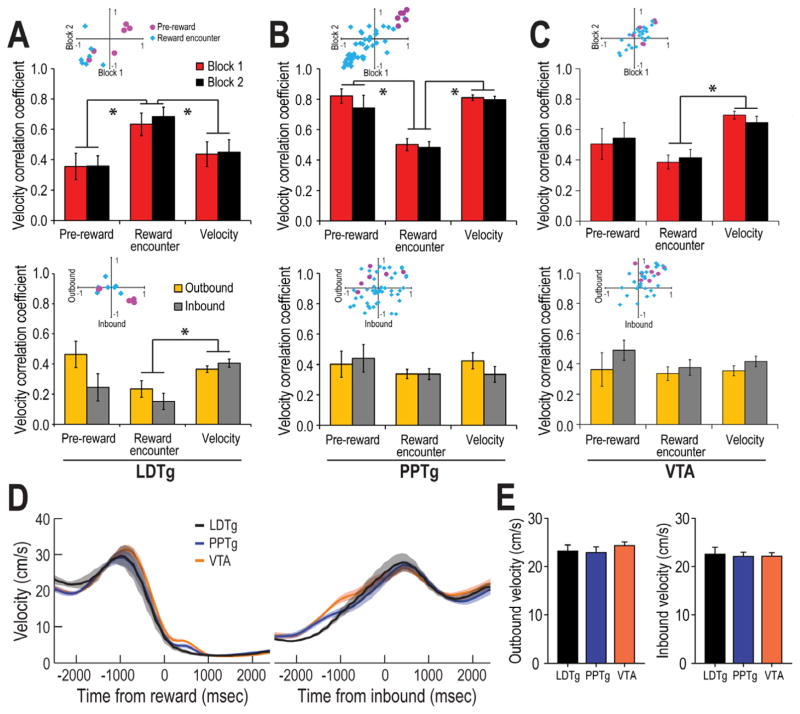

COMPARISONS OF LDTg, VTA, AND PPTg NEURAL RESPONSES: Similar to the LDTg data presented thus far, Norton et al. (2011) suggested that the PPTg provides reward and movement velocity information to dopamine neurons of the VTA. While there appear to be slight differences in the details of the reward information that may be passed on to dopamine cells from the PPTg and the LDTg (Table 1), insufficient information was provided in the Norton et al. study to determine whether conjunctive reward and movement codes exist in the PPTg, and if so, whether the PPTg velocity codes of such conjunctive cells were stronger during goal-directed behaviors. Since the PPTg data were collected from rats that performed the identical task as the one used in this study, we reanalyzed the PPTg data that were collected by Norton et al. to determine whether the PPTg contains conjunctive reward and movement cells (as defined here). Using the identical analysis methods as those used for the LDTg cells above, 24 of 105 recorded PPTg cells showed conjunctive reward and movement codes (18 reward encounter conjunctive cells and 6 pre-reward conjunctive cells; Fig. 8B, top panel). A multifactor ANOVA revealed a significant effect of correlate type, F(2,79) = 24.61, p<.01, with reward encounter cells showing significantly lower velocity correlations than pre-reward or velocity cells (Tukey test: p<.01). This contrasts with the finding of significantly higher velocity correlations for reward encounter LDTg cells. Similar to LDTg velocity correlations, however, PPTg pre-reward cells showed mostly strongly positive velocity correlations while reward encounter cells showed mostly negative correlations between firing rates and velocity (see scatterplot in Fig. 8B, top). 
The bottom graph of Figure 8B illustrates that, in contrast to LDTg neurons, there was no evidence that the velocity correlations of PPTg neurons differentiated outbound and inbound movement trajectories on the maze (all p’s > .05).

Table 1.

Comparison of reward and velocity properties of LDTg neurons reported in this study with those of PPTg (Norton et al., 2011) and VTA (Puryear et al., 2010) cells reported earlier. Applying the same analysis to the three sets of data, it is clear that all structures encode combinations of reward and egocentric movement (in this case movement velocity). While reward information was found to be encoded either separately or in conjunction with velocity by VTA and PPTg cells, LDTg reward neurons were always also correlated with velocity, and there was a smaller proportion of such reward-velocity conjunctive neurons in the LDTg. The LDTg showed a greater proportion of cells that coded only velocity (and not reward), and other behavioral movement correlates such as turns and behaviors related to reward approach. All three structures respond differently depending on reward magnitude, albeit in different ways (see text). Also, all three brain regions show evidence of reward expectation (i.e. pre-reward cell activity) and reward encounter codes (although to varying degrees). Only the VTA shows evidence of reward prediction error responsiveness, and only the PPTg shows activity that is correlated with reward consumption per se, regardless of the reward magnitude.

| General correlate | VTA* | PPTg** | LDTg |
|---|---|---|---|
| Reward only | yes (19.1%) | yes (27.6%) | no |
| Velocity only | yes (36.0%) | yes (27.6%) | yes (54.9%) |
| Conjunctive (reward & velocity) | yes (27.0%) | yes (22.9%) | yes (15%) |
| Turn only | no | no | yes (8.8%) |
| Complex correlate | no | no | yes (13.3%) |
| Reward Cells | | | |
| Reward Encounter | yes (57.3%) | yes (34.3%) | yes (5.3%) |
| Reward Consumption | no | yes | no |
| Pre-reward | yes (7.3%) | yes (11.4%) | yes (6.2%) |
| Reward Magnitude | yes | yes | yes |
| Reward Prediction Error | yes | no | no |

Finally, VTA (dopamine) cells that were recorded and reported by Puryear et al. (2010) were reanalyzed according to the metrics described for Figure 8C. Similar to what was found for the PPTg data, velocity correlations of VTA neurons varied according to the different categories of cells, F(2,67) = 10.15, p<.01, with reward encounter cells showing lower overall correlations compared to pre-reward and velocity (only) cells (Tukey test: p<.01). In contrast to the LDTg, but similar to PPTg, the VTA scatterplots show that pre-reward cells showed mostly positive correlations with velocity while reward encounter cells showed both positive and negative correlations with velocity. When VTA velocity correlations were compared for outbound and inbound trajectories (Fig. 8C bottom), no differences were observed (all p’s>.05).

In sum, LDTg reward encounter neurons showed higher velocity correlations than similarly correlated PPTg and VTA cells, when compared to pre-reward and velocity (only) cells (Fig. 8A, 8B, and 8C). Also, LDTg reward-correlated neurons showed stronger correlations with velocity when animals moved toward goal locations than when they moved away from reward locations. A similar analysis with PPTg and VTA data did not reveal a comparable distinction for goal-oriented behaviors. These regional differences in velocity correlation could not be accounted for by different velocities expressed by rats from the three separate studies. Figures 8D and 8E show that the velocity of movement did not differ among rats from the LDTg, PPTg, and VTA studies during outbound (F(2,17) = 0.67, p = 0.53) or inbound journeys (F(2,17) = 0.05, p = 0.95).
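The between-study velocity comparison above is a one-way ANOVA across three groups of rats. The following sketch shows how the F statistic and its degrees of freedom arise from group data. The function name `one_way_anova` and the example values are hypothetical and are not the study's data; the p-value is omitted because it requires the F distribution from a statistics package.

```python
def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of groups of observations."""
    all_values = [v for g in groups for v in g]
    grand_mean = sum(all_values) / len(all_values)
    # Between-group sum of squares: group size times squared mean deviation.
    ss_between = sum(
        len(g) * (sum(g) / len(g) - grand_mean) ** 2 for g in groups
    )
    # Within-group sum of squares: deviations from each group's own mean.
    ss_within = sum(
        sum((v - sum(g) / len(g)) ** 2 for v in g) for g in groups
    )
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Hypothetical mean outbound velocities for three small groups of rats.
example_groups = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [3.0, 4.0, 5.0]]
f_stat, df_b, df_w = one_way_anova(example_groups)
```

With three groups, the between-group degrees of freedom equal 2, as in the F(2,17) values reported above; the within-group value of 17 corresponds to 20 animals in total across the three studies.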

5. Discussion

The LDTg exerts significant control over the responses of VTA neurons (Grace et al., 2007; Lodge and Grace, 2006). This study assessed for the first time the nature of the information that is reflected in this control as rats performed a task that requires both LDTg (Fig. 1) and VTA (Martig et al., 2009), and that elicits locomotion and reward neural responses in VTA (Puryear et al., 2010) and PPTg (Norton et al., 2011). The most common LDTg neural correlate was the rats’ locomotion velocity, and a small number of cells responded to specific aspects of encountered rewards.

5.1. Locomotion-related coding by LDTg neurons

Consistent with reports that LDTg controls goal-directed behaviors (e.g. Lammel et al., 2012; Nelson et al., 2007; Shabani et al., 2010), the firing rate of a large proportion (70%) of LDTg neurons showed strong positive or negative correlation with an animal’s movement velocity (Figs. 4 and 5). The majority of the velocity correlated cells (78.5%) were not impacted by reward manipulations nor were they responsive to rewards themselves. The strong control of velocity over the tonic (i.e. nonburst) activity of a large segment of LDTg neurons may reflect a role for the LDTg in signaling the current motivational state to efferent structures since it is known that rats run faster or slower under conditions of strong or weak motivation (e.g. Puryear et al., 2010). In this way, the LDTg may regulate dopamine cell responses to reward according to the current motivation. Indeed, it is known that the firing by dopamine neurons signals motivated behaviors via sustained (or weak) extrasynaptic dopamine release in nucleus accumbens (Cagniard et al., 2006; Floresco et al., 2003; Ikemoto, 2007; Pecina et al., 2003), the latter of which scales with the excitation response of dopamine neurons to rewards (Cousins et al., 1994; Salamone & Correa, 2002). However, since these cells did not discriminate expected large from small rewards, it appears that a motivational signal from the LDTg likely reflects the general state of motivation (e.g. hunger) rather than specific reward-based motivation (such as differential motivation to seek out large vs small rewards).

If the LDTg controls dopamine cell responses to reward by relaying information regarding the current general motivation state, one might have expected that LDTg inactivation (Exp. 1) would result in a general memory impairment. Instead errors were made selectively when rats needed to find small reward locations. Perhaps the LDTg motivational influence is especially impactful when normally low motivation drives behavior (such as when expected rewards are small). Interestingly, LDTg firing during reward consumption was selective to the consumption of small rewards (Fig. 6). Since this small reward consumption response was observed only for the reward cells, while the strongest velocity correlates were observed for cells that did not encode reward information, it appears that the LDTg may make multiple functional contributions to the dopaminergic system evaluation of rewards.

5.2. Reward coding by LDTg neurons

Approximately one quarter (24.8%) of LDTg neurons showed burst firing just before or after encounters with rewards. These reward responses did not reflect movement velocity per se, since most of the variations in firing occurred at a time when the animal remained still at the ends of the maze arms. These reward responses were predicted given the LDTg’s control over dopamine cell burst firing (Grace et al., 2007). LDTg pre-reward responses may signal upcoming reward encounters generally, since the pre-reward response did not change when large and small reward locations were unexpectedly switched or rewards were unexpectedly omitted. Since dopamine neural responses scale relative to the expected or experienced magnitude of reward (Schultz, 2010; Schultz et al., 1997; Puryear et al., 2010), it was hypothesized that LDTg reward responses would as well. This hypothesis was confirmed, but in an unexpected way. Pre-reward or reward encounter responses themselves did not vary as a function of reward magnitude. However, these same cells showed greater subsequent firing during the consumption of small, not large, rewards (Fig. 6), a pattern that is in the opposite direction from what is observed for dopamine cells (Jo et al., 2013; Puryear et al., 2010; Schultz et al., 1997; Schultz, 1998, 2010). This preferential firing for small rewards continued to be observed when the small reward was placed in a different location (reward switch condition). It is not clear why the pre-reward cells showed greater differential firing than reward consumption cells, but in both cases, these cells may contribute to the bias of dopamine cells to respond to larger rewards if they inhibit dopamine cells from firing to small rewards. This scenario is similar to the inverse relationship between lateral habenula and VTA neural responses to rewards and prediction errors (Bromberg-Martin & Hikosaka, 2011; Matsumoto & Hikosaka, 2007, 2009; Schultz, 2010).

5.3. Conjunctive reward and movement coding by LDTg neurons

A minority (15%) of LDTg cells conjunctively coded velocity and reward information. The velocity correlation was stronger during outbound journeys (i.e. movement toward expected rewards), especially for pre-reward cells. Thus, some of the LDTg velocity codes may be used as a metric of ongoing behaviors that increases the temporal precision of predictions about future goal encounters. During the course of experimentation, rats repeatedly traveled down the same maze arms to known goal locations. Thus, rats likely developed a pattern of run speeds and behaviors that reliably predicted the time and distance to food. This information, when passed on to VTA cells, may regulate the tonic activity of dopamine neurons in a velocity-dependent manner such that when an animal stops moving forward (i.e. arrives at the reward site), dopamine neurons rapidly transition to an ‘up state’ of greater excitability (Wilson, 1993; Wilson and Kawaguchi, 1996), in which they are prepared to respond rapidly should new information about rewards be received. In this way, LDTg neural coding of reward expectations can enable adaptive responding by dopamine cells.
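The comparison of velocity correlations across journey directions can be sketched as follows. This is an illustrative Python example only, assuming that firing rate and speed have already been binned and that each bin is labeled as outbound (toward the expected reward) or inbound. The function name `split_velocity_correlation` and all numerical values are hypothetical and do not come from the recorded data.

```python
import numpy as np

def split_velocity_correlation(rate, speed, outbound):
    """Return (r_outbound, r_inbound): Pearson r between firing rate and
    speed, computed separately for outbound and inbound time bins."""
    rate = np.asarray(rate, dtype=float)
    speed = np.asarray(speed, dtype=float)
    outbound = np.asarray(outbound, dtype=bool)
    r_out = float(np.corrcoef(rate[outbound], speed[outbound])[0, 1])
    r_in = float(np.corrcoef(rate[~outbound], speed[~outbound])[0, 1])
    return r_out, r_in

# Synthetic illustration: four outbound bins followed by four inbound bins.
speed = np.array([10, 20, 30, 40, 10, 20, 30, 40], dtype=float)  # cm/s
rate = np.array([3, 5, 7, 9, 5, 4, 6, 5], dtype=float)           # spikes/s
outbound = np.array([True] * 4 + [False] * 4)

r_out, r_in = split_velocity_correlation(rate, speed, outbound)
print(r_out, r_in)
```

In this toy case the hypothetical cell tracks speed closely on outbound bins and only weakly on inbound bins, which mirrors the direction of the effect described above without reproducing its magnitude.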

LDTg velocity cells appear physiologically capable of tracking changes in velocity with the temporal resolution that dopamine cells use to track reward encounters (on the order of tens to hundreds of milliseconds). Further, the finding that most velocity-tuned LDTg cells did not code the presence of reward suggests the hypothesis that the LDTg precisely predicts the time of future reward encounters based on learned response sequences. Should the LDTg become dysfunctional (as with LDTg inactivation in Experiment 1), errors would not be expected to arise because animals cannot recall where (larger) rewards are located (Fig. 1). Rather, as rats make more choices in a trial, memory load increases along with the corresponding uncertainty. Perhaps, in times of greater uncertainty, animals depend more heavily on their ability to predict action-outcomes, especially if the behavioral options are familiar. This could explain the impaired choice accuracy only at the end of trials when small rewards remained to be retrieved.

5.4. Neural systems regulation of decisions during active navigation

A vast literature shows that dopamine cells signal reward value based on information about expected reward encounters (e.g. expected timing, probability, location, magnitude and sensory qualities of reward relative to behavior), actual reward experiences, and the perceived costs of obtaining rewards. These dopamine cell responses to reward likely reflect the integration of information from a multitude of afferent sources regardless of whether a rat forages for food in a spatially extended environment (as in this study) or presses levers in an operant chamber (as reviewed in Penner & Mizumori, 2012b). When foraging within familiar environments, rats may consistently choose one route over another based on learned associations between food and particular locations. Moreover, after a route is selected, the approach behaviors required to obtain food may occur essentially habitually. In that way, an animal can track its own behavior to improve its prediction of the time to reward encounters. Our data suggest that the LDTg may be one of the brain structures that contributes precise movement state information as a rat approaches a known food location. Such movement information is hypothesized to inform efferent structures (e.g. dopamine cells) when to expect rewards. This is important not only to reinforce the most recent choice if rewards are actually encountered, but also in the event that a reward is not found at an expected location. The high rate of firing by LDTg neurons as a rat approaches a known reward location may depolarize dopamine neurons sufficiently that they transition to a more excitable ‘up state’. In this state, dopamine neurons can promptly signal a reward prediction error input from the lateral habenula (Hong et al., 2011; Matsumoto & Hikosaka, 2007). Such error signals are ultimately transmitted to cortex, which in turn may destabilize spatial representations in hippocampus so that new information may be incorporated (Mizumori & Jo, 2013). Indeed, altered activity of VTA neurons results in less reliable place fields of hippocampal neurons (Martig et al., 2011a).

In addition to prediction error signals, dopamine neurons are known to increase firing in expectation of upcoming reward encounters during our task (Puryear et al., 2010; Jo et al., 2013). The expectation of reward encounters could be relayed to the VTA by PPTg and LDTg as both contain neurons that burst shortly before reward encounters. LDTg, but not PPTg, pre-reward responses may be guided by velocity information as they show stronger velocity correlations as animals approach known goal locations (Fig. 8). This stronger correlation is hypothesized to reflect greater motivation, which in turn may improve the precision of VTA and/or PPTg pre-reward firing (e.g. Lodge & Grace, 2006). The prefrontal cortex (PFC) may also bias reward expectancy codes of at least the VTA neurons since PFC neurons exhibit reward expectancy properties (Pratt et al., 2001), and PFC inactivation selectively disrupts expectancy neural codes of VTA neurons recorded using the same task as the one used in this study (Jo et al., 2013). VTA reward expectancy responses scale to the magnitude of expected rewards, but this effect likely does not come from the mPFC (Pratt et al., 2001), PPTg (Norton et al., 2011), or LDTg since those neurons are not sensitive to expected reward magnitudes.

One of the current challenges in memory research is to understand the mechanisms by which different memory systems of the brain interact to allow animals to switch cognitive strategies when behavioral contingencies change. To resolve this issue, it is important to understand how the brain detects and responds to changes in expected behavioral outcomes. The latter process is a complex one that we are just beginning to understand. However, the midbrain dopamine system is likely an important component of this process, as it is thought to be essential for computing the value of behavioral outcomes. The determined value of an outcome is somewhat subjective in that it can be pushed up or down depending on the conditions of the sensory environment and the motivation of the animal. It is suggested here that context and sensory information are provided to VTA dopamine cells by the PPTg (Table 1; Norton et al., 2011), while motivational information (as reflected in ongoing behaviors) is provided by the LDTg. In this way, motivational state information can regulate the influence of sensory and context information on processing by VTA neurons, perhaps via LDTg regulation of PPTg-VTA synapses.

Acknowledgments

We thank Sarah Egler for assistance with LDTg data collection. This research was supported by NIMH Grant MH 58755 to SJYM.

References

- Adams RA, Shipp S, Friston KJ. Predictions not commands: active inference in the motor system. Brain Structure and Function. 2013;218:611–643. doi: 10.1007/s00429-012-0475-5.

- Adolphs R, Tranel D, Buchanan TW. Amygdala damage impairs emotional memory for gist but not details of complex stimuli. Nature Neuroscience. 2005;8:512–518. doi: 10.1038/nn1413.

- Anderson MI, Jeffery KJ. Heterogeneous modulation of place cell firing by changes in context. The Journal of Neuroscience. 2003;23:8827–8835. doi: 10.1523/JNEUROSCI.23-26-08827.2003.

- Arnsten AFT, Wang MJ, Paspalas CD. Neuromodulation of thought: Flexibilities and vulnerabilities in prefrontal cortical network synapses. Neuron. 2012;76:223–239. doi: 10.1016/j.neuron.2012.08.038.

- Baddeley A, Della Sala S. Working memory and executive control. Philosophical Transactions of the Royal Society of London B: Biological Sciences. 1996;351:1397–1403. doi: 10.1098/rstb.1996.0123.

- Bayley PJ, Frascino JC, Squire LR. Robust habit learning in the absence of awareness and independent of the medial temporal lobe. Nature. 2005;436:550–553. doi: 10.1038/nature03857.

- Bethus I, Tse D, Morris RGM. Dopamine and memory: modulation of the persistence of memory for novel hippocampal NMDA receptor-dependent paired associates. The Journal of Neuroscience. 2010;30:1610–1618. doi: 10.1523/JNEUROSCI.2721-09.2010.

- Bromberg-Martin ES, Matsumoto M, Hikosaka O. A pallidus-habenula-dopamine pathway signals inferred stimulus values. Journal of Neurophysiology. 2010;104:1068–1076. doi: 10.1152/jn.00158.2010.

- Brudzynski SM, Wu M, Mogenson GJ. Modulation of locomotor activity induced by injections of carbachol into the tegmental pedunculopontine nucleus and adjacent areas in the rat. Brain Research. 1988;451:119–125. doi: 10.1016/0006-8993(88)90755-x.

- Buzsaki G. Time, space and memory. Nature. 2013;497:568–569. doi: 10.1038/497568a.

- Buzsaki G, Moser EI. Memory, navigation and theta rhythm in the hippocampal-entorhinal system. Nature Neuroscience. 2013;16:130–138. doi: 10.1038/nn.3304.

- Cagniard B, Beeler JA, Britt JP, McGehee DS, Marinelli M, Zhuang X. Dopamine scales performance in the absence of new learning. Neuron. 2006;51:541–547. doi: 10.1016/j.neuron.2006.07.026.

- Chen J, Olsen RK, Preston AR, Glover GH, Wagner AD. Associative retrieval processes in the human medial temporal lobe: Hippocampal retrieval success and CA1 mismatch detection. Learning and Memory. 2011;18:523–528. doi: 10.1101/lm.2135211.

- Chiba AA, Kesner RP, Jackson P. Two forms of spatial memory: A double dissociation between the parietal cortex and the hippocampus in the rat. Behavioral Neuroscience. 2002;116:874–883. doi: 10.1037//0735-7044.116.5.874.

- Clement JR, Toth DD, Highfield DA, Grant SJ. Glutamate-like immunoreactivity is present within cholinergic neurons of the laterodorsal tegmental and pedunculopontine nuclei. Advances in Experimental Medicine and Biology. 1991;295:127–142. doi: 10.1007/978-1-4757-0145-6_5.

- Cornwall J, Cooper JD, Philipson OT. Afferent and efferent connections of the laterodorsal tegmental nucleus in the rat. Brain Research Bulletin. 1990;25:271–284. doi: 10.1016/0361-9230(90)90072-8.

- Cousins MS, Wei W, Salamone JD. Pharmacological characterization of performance on a concurrent lever pressing/feeding choice procedure: effects of dopamine antagonist, cholinomimetic, sedative and stimulant drugs. Psychopharmacology. 1994;116:529–537. doi: 10.1007/BF02247489.

- Curtis CE, Rao VY, D’Esposito M. Maintenance of spatial and motor codes during oculomotor delayed response tasks. The Journal of Neuroscience. 2004;24:3944–3952. doi: 10.1523/JNEUROSCI.5640-03.2004.