Abstract

Public health and other community-based practitioners have access to a growing number of evidence-based interventions (EBIs), and yet EBIs continue to be underused. One reason for this underuse is that practitioners often lack the capacity (knowledge, skills, and motivation) to select, adapt, and implement EBIs. Training, technical assistance, and other capacity-building strategies can be effective at increasing EBI adoption and implementation. However, little is known about how to design capacity-building strategies or tailor them to differences in capacity required across varying EBIs and practice contexts. To address this need, we conducted a scoping study of frameworks and theories detailing variations in EBIs or practice contexts and how to tailor capacity-building to address those variations. Using an iterative process, we consolidated constructs and propositions across 24 frameworks and developed a beginning theory to describe salient variations in EBIs (complexity and uncertainty) and practice contexts (decision-making structure, general capacity to innovate, resource and values fit with EBI, and unity vs. polarization of stakeholder support). The theory also includes propositions for tailoring capacity-building strategies to address salient variations. To have wide-reaching and lasting impact, the dissemination of EBIs needs to be coupled with strategies that build practitioners’ capacity to adopt and implement a variety of EBIs across diverse practice contexts.

Keywords: capacity building, dissemination, evidence-based interventions, knowledge translation, prevention support

A growing number of organizations are disseminating evidence-based interventions (EBIs) for clinical, public health, and other practitioners to adopt and implement into practice (Briss, Brownson, Fielding, & Zaza, 2004; Leeman, Sommers, Leung, & Ammerman, 2011). EBIs include a wide range of programs, practices, and policies that researchers and others have demonstrated to be effective at improving targeted outcomes (Rabin, Brownson, Haire-Joshu, Kreuter, & Weaver, 2008). Disseminating EBIs generally is insufficient to change practice, however, and practitioners continue to underuse the available EBIs (Hannon et al., 2013). One reason why EBIs are underused is that practitioners often lack the capacity to adopt, adapt, and implement EBIs within their practice settings.

A broad literature is developing that describes and evaluates strategies that are effective at building practitioners’ capacity to use EBIs. This literature employs diverse terminology to refer to capacity building, including “technical assistance,” “facilitation,” “knowledge brokering,” and “prevention support,” among other terms (Wandersman et al., 2008; Ward, House, & Hamer, 2009). We use the term “capacity building” (CB) to encompass this broader literature and define it as any strategy or combination of strategies that seeks to provide practitioners with menus of EBIs and increase their motivation and ability to adopt and implement those EBIs (Flaspohler, Duffy, Wandersman, Stillman, & Maras, 2008). Although we recognize the importance of also building capacity at the levels of organizations and systems, for the purposes of this review we have excluded strategies that directly target those levels from our definition of CB. CB strategies include training, technical assistance, tools, and other strategies (Leeman, Calancie, et al., 2015; Wandersman et al., 2008). Although extensive research has demonstrated that CB is effective at promoting practitioners’ EBI adoption and implementation (Durlak & DuPre, 2008; Mitton, Adair, McKenzie, Patten, & Waye Perry, 2007), researchers typically have provided limited details on the design of CB strategies or the theory guiding which strategies were selected. Furthermore, most of the CB literature has taken a “one-size-fits-all” approach with little attention to differences in the types of capacity required to adopt and implement different types of EBIs across varying contexts. For example, different capacities will be required to adopt and implement EBIs to enact smoke-free policies than for EBIs to support individual-level smoking cessation. In addition, capacity needs will differ between contexts, such as between a large, academically affiliated health care center and a small, geographically isolated department of public health.

Scholars have developed many frameworks describing CB but few of these frameworks provide guidance on how to tailor CB to address the needs of different EBIs and contexts (McCormack et al., 2013; Ward et al., 2009). Developing more comprehensive theory is essential to advancing understanding of what CB strategies may work best under what circumstances. The purpose of this review was to advance theory to guide the design of CB strategies, with a specific focus on strategies to adopt and implement community-based prevention EBIs. The central questions guiding the review were the following: (a) In what ways do EBIs and contexts vary? (b) How might CB strategies be tailored to address those variations?

Conceptual Framework

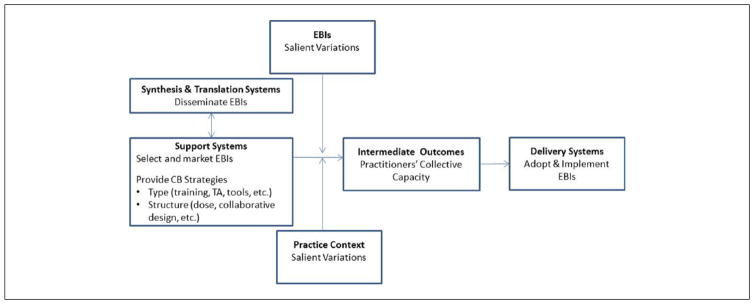

The review was guided by a framework that builds on Wandersman et al.’s (2008) Interactive Systems Framework for Dissemination and Implementation. The Interactive Systems Framework posits that EBI implementation requires interaction among three systems: synthesis and translation systems disseminate EBIs, and support systems provide training, technical assistance, tools, and other CB strategies to promote delivery systems’ adoption and implementation of EBIs into practice. As summarized in Table 1, Leeman, Calancie, et al. (2015) have previously documented the types of CB strategies that support systems use and variations in the ways they are structured (e.g., dose, mode). The review framework (Figure 1) posits that CB strategies affect practitioners’ capacity, which in turn affects the extent and quality of delivery systems’ EBI adoption and implementation. The effectiveness of CB is moderated by characteristics of both EBIs and practice contexts. In this review, we focused on identifying salient variations in EBIs and practice contexts and then developing theory to guide support systems in tailoring their CB strategies contingent on those variations.

Table 1.

Definitions of Capacity Building Strategy Types and Structures.

| CB strategies | Definitions |
|---|---|
| Types | |
| EBI selection and marketing | The identification of specific EBIs and the way they are communicated to specific delivery-system audiences |
| Training | The provision of preplanned education or skill building sessions to a group |
| Tools | Media or technology resources for use in planning or implementing an intervention |
| Technical assistance | The provision of interactive, individualized education, skill building, and problem-solving sessions |
| Assessment and feedback | Collecting and providing data-based feedback on delivery system performance |
| Peer networking | Bringing practitioners together to learn from each other via in-person or distance trainings and technical assistance sessions |
| Incentives | Financial compensation and in-kind resources to incentivize progress or build capacity |
| Structures | |
| Dose | The duration, frequency, and amount of CB provided |
| Level of CB provider/recipient collaboration | “The relationship between support providers and recipients varies in the extent of collaboration with some providers functioning as advisors while others function as fully engaged participatory partners” (Leeman, Calancie, et al., 2015) |
| Target audience | CB strategies vary in whether the intended recipients are those who will make the adoption decision, plan the intervention, implement the intervention, and/or manage those who implement the intervention |

Note. CB = capacity building; EBIs = evidence-based interventions. Adapted from Leeman, Calancie, et al. (2015) and Wandersman, Chien, & Katz (2012).

Figure 1.

Conceptual framework guiding the review.

Note. CB = capacity building; TA = technical assistance; EBIs = evidence-based interventions. Adapted from Leeman, Calancie, et al. (2015) and Wandersman et al. (2008).

Review Methods

We conducted a scoping study to identify relevant frameworks followed by the extraction of framework constructs and propositions (Levac, Colquhoun, & O’Brien, 2010). We then synthesized constructs using an analytic approach similar to that used by Damschroder et al. (2009) and others (Contandriopoulos, Lemire, Denis, & Tremblay, 2010; Michie, van Stralen, & West, 2011) to consolidate existing dissemination/implementation science frameworks. The team that conducted the study included members of the Capacity-Building Technical Assistance and Training workgroup of the Cancer Prevention and Control Research Network, a Centers for Disease Control and Prevention and National Institutes of Health–funded network of 10 centers nationwide (Fernandez et al., 2014).

Search Methods

To identify relevant frameworks, we assessed those included in three prior reviews of dissemination/implementation frameworks (Damschroder et al., 2009; Meyers, Durlak, & Wandersman, 2012; Tabak, Khoong, Chambers, & Brownson, 2012). We also solicited recommendations from members of the workgroup. To be included, frameworks had to address variations in EBIs and/or contexts and the potential effect those variations might have on EBI adoption or implementation. The diversity of terminology and extensiveness of the literature limited our ability to identify and include all potentially relevant frameworks. The search was designed to be broad but not exhaustive, with the goal of creating a beginning theory that might be further developed over time.

Data Abstraction and Synthesis

All authors participated in the abstraction process. For each publication, two authors extracted the following information: evidence base for the framework, constructs related to salient variations in EBIs and contexts, and propositions regarding how to tailor CB to address those variations. The two authors then compared extractions and resolved discrepancies via consensus. The lead authors (JL, LC) then reviewed all extractions to identify themes, and iteratively developed and refined the themes to create consolidated lists of constructs and propositions. The two lead authors then selected constructs and propositions to be included in the beginning theory, with priority given to findings that occurred most frequently across frameworks and to findings derived from a systematic review of the literature or a research study. Secondary priority was given to frameworks derived from authors’ reflections on their applied, field-based experience or from nonsystematic review of the literature. The goal of the synthesis was to reconcile conflicting or overlapping constructs and propositions and integrate them into a single comprehensive, yet parsimonious provisional theory. A summary report was created outlining the provisional theory and detailing the full list of consolidated constructs and propositions with related citations. The summary report was presented to Capacity-Building Technical Assistance and Training workgroup members to get their feedback and further refine the theory to best fit review findings.

Results

Included Frameworks

Twenty-four frameworks were included in the review (Table 2). Seven of the frameworks were derived from systematic reviews of the literature, nine from empirical studies, five from authors’ applied experience, and three from a non-systematic review of existing literature and theory. The theories cited most frequently as contributing to frameworks included Diffusion of Innovations (n = 6), Actor-Network Theory (n = 2), Complexity Science (n = 2), and the Interactive Systems Framework (n = 2).

Table 2.

Purpose, Evidence Base, and Underlying Theory or Framework of the Articles Informing the Development of a Beginning Theory Providing Guidance on How to Tailor Capacity-Building Strategies to Address Variations in Interventions and Practice Contexts.

| Citation | Country | Purpose | Evidence base for framework | Underlying theory/framework |
|---|---|---|---|---|
| Atun, de Jongh, Secci, Ohiri, and Adeyi (2010)a | United Kingdom | Present an analytical framework that identifies critical elements that affect adoption, diffusion, and assimilation of innovations within health systems | Literature | Diffusion of Innovations and others |
| Clavier, Senechal, Vibert, and Potvin (2012)d | Canada | Propose a theory-based model of translation practices at the nexus between academic researchers and practitioners in participatory research | Empirical: Focus groups with intermediaries | Actor-network theory, sociology of intermediate actors |
| Contandriopoulos et al. (2010)d | Canada | Develop an integrated interdisciplinary framework for understanding collective-level knowledge exchange interventions | Systematic review | Multiple |
| Damschroder et al. (2009)a,b | United States | Provide an overarching typology to promote implementation theory development | Systematic review | Multiple |
| DeGroff, Schooley, Chapel, and Poister (2010)d | United States | Explore challenges in public health problems, systems, and data and suggest approaches to performance measurement in public health | Applied experience | NA |
| Denis, Hebert, Langley, Lozeau, and Trottier (2002)d | Canada | Describe how the mapping of an innovation’s benefits and risks onto the interests, values, and power distribution of the adopting system is critical to understanding how innovations diffuse | Empirical: Multicase study | Actor-network theory |
| Dreisinger et al. (2012)a | United States | Identify individual, organizational, and intervention characteristics that contribute to an intervention’s readiness for widespread dissemination | Empirical: Interviews with 64 staff in 19 programs | Diffusion of innovations |
| Durlak and DuPre (2008)b | United States | Assess impact of implementation on program outcomes and identify factors affecting the implementation process | Systematic review | Interactive systems framework |
| Elwyn, Taubert, and Kowalczuk (2007)a | United Kingdom | Explore applicability of sticky knowledge (a business model) to implementation of evidence-based practice in health care | Sticky knowledge is based on empirical, cross-sectional survey | Communication theory, knowledge transfer |
| Fixsen, Naoom, Blase, Friedman, and Wallace (2005)a,b,c | United States | Create a topographical map of implementation as seen through evaluation of factors related to implementation attempts | Systematic review | NA |
| Greenhalgh, Robert, Macfarlane, Bate, and Kyriakidou (2004)a,b,c | United Kingdom | Create a parsimonious and evidence-based model for considering the diffusion of innovations in health service organizations | Systematic review | Multiple |
| Kitson et al. (2008)a,b,c; Rycroft-Malone (2004)a,b,c | United Kingdom | Describe an implementation framework and integrate work to date that used the framework | Empirical: Case studies | Multiple |
| Lanham et al. (2012)d | United States | Reexamine two cases of successful scale up and spread of innovations in clinical settings | Empirical: Two case studies | Complexity science |
| Le, Anthony, Broheim, Holland, and Perry (2014)d | United States | Examine technical assistance through interviews with skilled providers to further a more evidence-based approach | Empirical: Interviews with 14 technical assistance providers | Theories of change, adult learning, facilitation, etc. |
| Leeman, Baernholdt, and Sandelowski (2007)c | United States | Develop a provisional taxonomy of implementation methods and link them to theoretical constructs | Systematic review | Diffusion of innovations, contingency theory, behavioral change theories |
| May et al. (2009)a; May et al. (2011)a | United Kingdom | Propose a theory of normalization processes for implementation, embedding, and integration in conditions marked by complexity and emergence | Empirical: Three phases of qualitative studies | Normalization process theory |
| Mendel, Meredith, Schoenbaum, Sherbourne, and Wells (2008)a,c | United States | Provide a framework for understanding contexts and how they influence diffusion and for identifying strategies to promote adoption and implementation | Applied experience | Diffusion of innovations, social cognitive theory, agency theory |
| Ogilvie et al. (2011)d | United Kingdom | Present a framework for evaluating complex public health interventions | Applied experience | NA |
| Rogers (2003)a,b | United States | Describe diffusion of innovations theory | Empirical: 40 years of research | Diffusion of innovations, social learning theory |
| Scheirer (2013)d | United States | Suggest a framework for analyzing the sustainability of six types of interventions | Applied experience | Congruence and open-systems theories |
| Snowden and Boone (2007)d | United States | Present a framework to guide decision making in business and government agencies | Applied experience | Complexity science |
| Wandersman et al. (2008)a,b | United States | Propose a framework that specifies interactive systems of activities that are necessary to bridge the gap between science and practice | Literature | Diffusion of innovations, technology transfer model, integrated systems framework |
| Weiner (2009)a | United States | Theorize organizational determinants of effective implementation of complex innovations in worksites | Literature | Theory of implementation of complex innovations |
| Yuan et al. (2010)a | United States | Propose a blueprint for national quality improvement campaigns | Systematic review | Conceptual framework of diffusion |

Note. Source of framework:

From Tabak et al. (2012).

From Meyers et al. (2012).

Capacity Building Training and Technical Assistance Workgroup.

Variations in EBIs and Contexts: How to Tailor CB Contingent on Those Variations

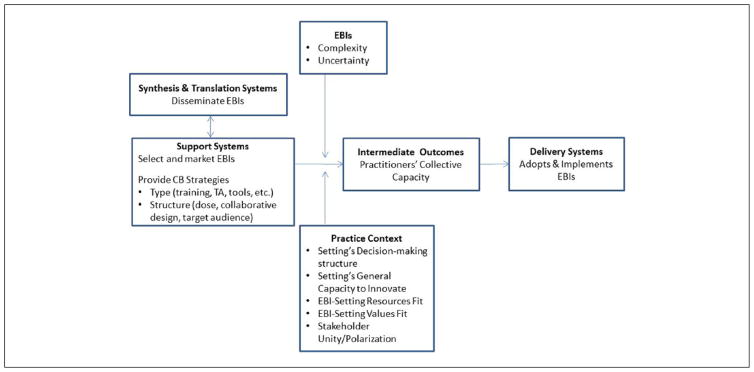

Figure 2 presents characteristics of EBIs and contexts whose variations moderated the relationship between CB strategies and the delivery system’s successful adoption and implementation of EBIs. In relation to EBIs, the two most salient factors were complexity and uncertainty. Salient contextual factors included the adoption/implementation setting’s decision-making structure and general capacity to innovate, the EBI’s fit with the setting’s capacity and values, and the extent of stakeholder unity/polarization in support of the EBI. Table 3 defines each salient characteristic and offers propositions for tailoring CB contingent on those characteristics with further details provided in the online appendix (available online at heb.sagepub.com/supplemental).

Figure 2.

Revised framework of salient variations in EBIs and contexts.

Note. CB = capacity building; TA = technical assistance; EBIs = evidence-based interventions.

Table 3.

Guidance on Tailoring Capacity Building Contingent on Salient Variations in Evidence-Based Interventions and Contexts.

| Salient variation (factor) | EBI selection/marketing | CB structure and types (in addition to training, TA, tools) | CB focus |
|---|---|---|---|
| EBI: Complexity | When high: Select a different EBI; market EBIs in formats that promote their adaptability and triability | When high: Provide more CB (dose) than when lower | When high: Focus on capacity to assess local contexts, select and adapt EBIs to fit context, and develop infrastructure to manage interdependency |
| EBI: Uncertainty | When low: Select standardized EBIs. When high: Select broad, flexible EBIs | When high: Provide more CB (dose) than when lower; strengthen CB provider/recipient collaboration; facilitate peer networking; assess and provide feedback on performance | When low: Focus on capacity to adapt to local context, and implement with fidelity. When high: Focus on capacity to engage stakeholders, facilitate ongoing and open communication, collect local data, develop a shared understanding of problem, and collectively formulate an intervention plan |
| Context: Setting’s Decision-Making Structure | | When hierarchical, centralized: Tailor and deliver CB to those working at different levels of the organization (target audience). When horizontal, decentralized: Deliver CB to the coalition or team (target audience) that will plan and implement the EBI | When hierarchical, centralized: Focus on organizational leaders’ capacity to adopt and support the intervention; middle managers’ capacity to implement, supervise, and sustain the intervention; and practitioners’ capacity to deliver the intervention. When horizontal, decentralized: Focus on capacity to engage partners and to facilitate collaborative decision making |
| Context: Settings’ Overall Capacity | When low: Select EBIs that embed change in existing technologies or operating procedures | When low: Efforts to build capacity to adopt and implement EBIs may not be successful | When low: Focus on building overall capacity prior to focusing on EBIs |
| Context: EBI-Setting Resources Fit | When poor fit: If possible, select an EBI that provides a better fit | When poor fit: Provide incentives (funding or in-kind resources) to build capacity | When poor fit: Focus on capacity to adapt EBI and/or acquire additional resources |
| Context: EBI-Setting Values Fit | When poor fit: Select an EBI that provides a better fit | When poor fit: CB provider/recipient collaboration; facilitate peer networking; assess and provide feedback on performance | When poor fit: Focus on capacity (motivation) to adopt and implement EBIs |
| Context: Stakeholder Unity/Polarization | When polarized: Select an EBI that provides a better fit; reframe EBI marketing | When polarized: Provide more CB (dose) than when lower | When unified: Focus on capacity for a technical, rational approach to adoption/implementation. When polarized: Focus on capacity for strategic and political approaches to adoption/implementation |

Note. Variation in CB type/structure is italicized. EBI = evidence-based intervention; TA = technical assistance; CB = capacity building.

EBI complexity refers to the extent to which the overall task of adopting and implementing an EBI is intricate or complicated. An EBI’s complexity increases with the number of socioecological levels it targets; the diversity and interdependence of stakeholders (e.g., from different settings, sectors, disciplines), organizations, and/or systems levels required to adopt and implement the EBI; and the duration and number of components and episodes that make up EBI implementation (Atun et al., 2010; Damschroder et al., 2009; Greenhalgh et al., 2004; Kitson et al., 2008; Lanham et al., 2012; Rogers, 2003; Scheirer, 2013). The diversity and interdependence of involved stakeholders is a central feature contributing to EBI complexity. Stakeholders include any individual or group that has a stake in the task and may include those who adopt, support, oppose, implement, and/or benefit from an EBI. Scheirer (2013) has proposed a typology of interventions that largely categorizes them according to their place on a continuum of stakeholder diversity and interdependence. At one end of the continuum, individual providers can implement interventions independently (e.g., prescribing a nicotine patch); further along the continuum, interventions require coordination among multiple staff over time (e.g., delivering a smoking cessation program); and still further along the continuum, interventions require collaboration across sectors (e.g., enacting policy to ban smoking in public settings).

When task complexity is higher as opposed to lower, support systems may explore marketing EBIs in formats that maximize their adaptability and triability (Atun et al., 2010; May et al., 2009). Maximizing EBIs’ adaptability involves combining clearly defined core components with more peripheral components that can be adapted to meet the needs of local settings and stakeholders (Damschroder et al., 2009). Maximizing EBIs’ triability involves marketing them in formats that allow stakeholders to pilot them on a small scale prior to committing to full-scale implementation (Damschroder et al., 2009). When EBI complexity is high, support systems also may need to provide more CB (dose frequency and duration; Le et al., 2014) and to focus CB on building practitioners’ capacity to assess local contexts, select and adapt EBIs to fit with contexts, and develop the administrative and other infrastructure necessary to manage interdependency throughout the process of planning, implementing, and sustaining the intervention (Leeman et al., 2007; Scheirer, 2013; Snowden & Boone, 2007).

EBI uncertainty refers to the lack of an evidence base to guide intervening. Although only a few authors used the term “uncertainty,” authors used other terms (e.g., “unproven knowledge”; Elwyn et al., 2007) to refer to similar concepts. Uncertainty is high when guidance on how to implement an intervention is limited, the evidence base for available EBIs is weak, or the potential to translate an EBI to a new context is unknown (DeGroff et al., 2010; Elwyn et al., 2007; Kitson et al., 2008; Yuan et al., 2010). The evidence base for translating EBIs to new contexts often is less certain when those contexts are complex adaptive systems that are composed of numerous elements that interact in ways that cannot be predicted (Lanham et al., 2013; Snowden & Boone, 2007). For example, EBIs that change public policies (e.g., tobacco control policies) typically occur within complex systems whose interacting elements are hard to control or predict.

When EBI uncertainty is low and causal links between EBIs and outcomes are clear, support systems might select EBIs that are packaged into standardized formats and focus on building practitioners’ capacity to select, adapt, and implement EBIs with fidelity (Le et al., 2014; Snowden & Boone, 2007). For example, they may select from one of Cancer Control Planet’s packaged intervention programs (http://rtips.cancer.gov/rtips/index.do). When uncertainty is high, practitioners have little information about what EBIs may work or whether they will work within their practice contexts. Support systems therefore need to select EBIs that are less standardized and have more flexible formats (Dreisinger et al., 2012; Lanham et al., 2013; Snowden & Boone, 2007), such as the Guide to Community Preventive Services recommended strategies (www.the-communityguide.org). When uncertainty is high, support systems also will need to provide more CB (dose frequency and duration), and strengthen collaborative relationships between CB providers and recipients. In numerous frameworks and theories, scholars have pointed to the influence of the CB provider/recipient relationship on EBI adoption and implementation (Atun et al., 2010; Clavier et al., 2012; Elwyn et al., 2007; Rogers, 2003). Relationships are stronger when the individuals providing CB are consistent over time and are perceived to have mastered the recipients’ “norms, values, and languages” (Clavier et al., 2012). When EBIs are uncertain, collaborative CB provider/recipient relationships have the potential to maintain recipients’ continued motivation despite a lack of clarity about the initiatives’ direction and potential benefits.

In cases of high uncertainty, support systems should focus on building practitioners’ capacity to engage stakeholders in collectively developing a shared understanding of the problem and context and in formulating an intervention plan that is both evidence-informed and context specific (DeGroff et al., 2010; Elwyn et al., 2007; Lanham et al., 2013; Le et al., 2014; May et al., 2009; May et al., 2011). Priority should be given to developing practitioners’ capacity to engage stakeholders, facilitate ongoing communication, and encourage both dissent and a diversity of viewpoints (Lanham et al., 2013; Snowden & Boone, 2007). When the evidence base for intervening is highly uncertain, support systems may want to build practitioners’ capacity to create an environment that promotes experimentation and allows innovations to emerge over time (Lanham et al., 2013; Snowden & Boone, 2007).

Additional CB strategies that may be useful when uncertainty is high include peer networking and assessment and feedback of performance data (DeGroff et al., 2010). Peer networking provides an opportunity for practitioners to learn how other practitioners have successfully engaged stakeholders, achieved desired outcomes, and overcome barriers. Support systems may use assessment and feedback to provide data that practitioners can use to monitor for improvements in performance following, for example, pilot studies and other forms of experimentation (DeGroff et al., 2010; Yuan et al., 2010).

Adoption/implementation setting’s decision-making structure refers to whether the decision to adopt and implement an EBI is centralized within a hierarchical organizational structure or decentralized across a horizontal structure (DeGroff et al., 2010; Rogers, 2003; Wandersman et al., 2008). Decision making in health care organizations is often centralized within a hierarchy, with the organization’s leaders making an initial decision to adopt an intervention and then deploying responsibility for implementation to middle managers and staff. In contrast, decision making in community coalitions is often decentralized and involves collaborative deliberation across all organizations participating in the coalition.

The intended audience and focus of capacity building will differ depending on the decision-making structure. When decision making is structured hierarchically, capacity building may need to be targeted to those working at different levels of the organization with a focus on strengthening organizational leaders’ overall motivation to adopt and support the intervention; middle managers’ capacity to implement, supervise, and sustain the intervention; and practitioners’ capacity to deliver the intervention (Fixsen et al., 2005; Scheirer, 2013; Weiner, 2009). When decision making is decentralized, capacity-building may need to be delivered to the coalition or team that will plan and implement the EBI. CB will need to focus on building practitioners’ capacity to engage partners and to facilitate collaborative decision making in addition to implementing the EBI (Clavier et al., 2012; Contandriopoulos et al., 2010).

Adoption/implementation setting’s overall capacity to innovate refers to the setting’s overall capacity to adopt and implement new interventions; some settings have greater capacity than do others. Factors that contribute to the capacity to innovate include strong leadership, a learning culture, and past success with innovations (Dreisinger et al., 2012; Kitson et al., 2008; Rogers, 2003; Weiner, 2009). High levels of staff turnover and overall turbulence may limit innovation capacity (Dreisinger et al., 2012; Snowden & Boone, 2007).

When general capacity is low, support systems may have more success if they select and market EBIs that embed change in existing technologies, information systems, or policies rather than EBIs that require individual or collective behavior change (Scheirer, 2013). For example, EBIs might involve changing policy governing the foods and beverages served at worksite celebrations or changing the type of equipment on a playground. Prior to promoting EBIs that require collective behavior change, support systems may first need to invest in building overall capacity (e.g., leadership development; Scheirer, 2013).

EBI-setting resources fit refers to the match between EBIs and the existing resources and infrastructure of the systems into which they will be implemented. Wandersman et al. (2008) refer to this as “innovation-specific” as opposed to “general” capacity. Innovation-specific capacity includes stakeholders’ individual and collective knowledge, skills, and self-efficacy in relation to the targeted problem, intervention options, and/or the selected EBI (Weiner, 2009). Capacity also encompasses the infrastructure and other resources of both the implementation settings (e.g., existing staff’s skill mix, space, equipment) and the wider economic, political, and social context (e.g., Atun et al., 2010). For example, does the EBI align with available funding streams or staffing?

If there is a poor fit between the EBI and existing capacity, support systems may need to identify an EBI that provides a better fit. Alternatively, they might build practitioners’ capacity to adopt and implement the EBI and thereby improve its fit and/or to acquire additional resources or new partners (Wandersman et al., 2008). CB providers also might need to provide funding or other supports such as free materials or direct assistance to support intervention planning or delivery (Contandriopoulos et al., 2010; Dreisinger et al., 2012).

EBI-setting values fit is the extent to which stakeholders perceive the EBI as fostering “fulfillment of their values” (Weiner, 2009). Many frameworks identify potential adopters’ perceptions of an intervention’s advantages relative to its alternatives as central to successful adoption and implementation (e.g., Damschroder et al., 2009). Stakeholders’ perceptions of an EBI’s relative advantage are influenced by their values, which determine the problems stakeholders view as high priority and in need of change and the outcomes they view as most important (Damschroder et al., 2009; Greenhalgh et al., 2004; Weiner, 2009).

When there is a poor fit between the EBI and existing values, support systems may need to identify an EBI that provides a better fit. Support systems also might reframe their EBI marketing to better align with stakeholders’ values and alter their perceptions of its attributes (Rogers, 2003). Providers also might consider facilitating peer networking and providing funding or other incentives. Facilitating interactions with peers who have adopted and implemented an EBI can be an effective way to improve potential adopters’ perceptions of the EBI and motivation to use it, particularly if those peers are opinion leaders within the potential adopters’ organization or professional networks (Greenhalgh et al., 2004; Leeman et al., 2007; Rogers, 2003; Yuan et al., 2010). Funding and other types of incentives are another strategy that can be used to influence perceptions of an intervention’s advantage to those adopting and implementing it into practice (Leeman et al., 2007).

Stakeholder unity/polarization refers to the extent to which stakeholders share similar or divergent values in relation to an EBI and the intensity of their endorsement of divergent values (Contandriopoulos et al., 2010; Denis et al., 2002; Weiner, 2009). The perceived benefits and risks of an EBI may not be distributed evenly across stakeholder groups. As a result, stakeholders may be polarized in their views of the problem, its priority, and/or its potential solutions (Contandriopoulos et al., 2010; Denis et al., 2002). Stakeholders’ positions on a proposed EBI may be further polarized when stakeholder groups have a history of conflict (Clavier et al., 2012).

When stakeholders are unified, capacity-building strategies can focus on a content-based, technical, rational approach to adoption/implementation (Contandriopoulos et al., 2010). When stakeholders are polarized, CB providers may need to look for another EBI or reframe their EBI marketing to better map the EBI’s costs and benefits to the values of relevant stakeholder groups. In such instances, capacity building will need to focus on a relationship-based, strategic, and political approach (Contandriopoulos et al., 2010; Denis et al., 2002). CB providers will need to build practitioners’ capacity to assess stakeholders’ positions and to strategically build support and manage opposition. Greater doses of CB also may be required than for interventions where stakeholders are more unified.
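The context-related propositions above can be read as a set of contingent rules. The following is a minimal sketch in Python of how a support system might encode them, not part of the theory itself. The names `ContextProfile` and `tailor_capacity_building` are hypothetical, and the yes/no coding of each contextual factor is a simplifying assumption; in practice these factors are likely to be matters of degree.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ContextProfile:
    """Hypothetical yes/no coding of a practice context (illustrative only)."""
    hierarchical: bool          # True = hierarchical, False = decentralized
    general_capacity_low: bool  # overall capacity to innovate is low
    resource_fit_poor: bool     # poor EBI-setting resources fit
    values_fit_poor: bool       # poor EBI-setting values fit
    polarized: bool             # True = polarized, False = unified stakeholders


def tailor_capacity_building(ctx: ContextProfile) -> List[str]:
    """Return tailoring options suggested by the propositions for this context."""
    options = []
    if ctx.hierarchical:
        options.append("Target leaders, middle managers, and practitioners at each level")
    else:
        options.append("Deliver CB to the coalition or team; build capacity to engage partners")
    if ctx.general_capacity_low:
        options.append("Favor EBIs that embed change in technologies, information systems, or policies")
        options.append("Invest in overall capacity (e.g., leadership development)")
    if ctx.resource_fit_poor:
        options.append("Identify a better-fitting EBI, build EBI-specific capacity, or provide funding and materials")
    if ctx.values_fit_poor:
        options.append("Identify a better-fitting EBI, reframe EBI marketing, facilitate peer networking, or offer incentives")
    if ctx.polarized:
        options.append("Use a relationship-based, strategic, and political approach; plan for greater doses of CB")
    else:
        options.append("Use a content-based, technical, rational approach")
    return options


# Example: a decentralized, low-capacity setting with polarized stakeholders.
profile = ContextProfile(
    hierarchical=False,
    general_capacity_low=True,
    resource_fit_poor=False,
    values_fit_poor=False,
    polarized=True,
)
for option in tailor_capacity_building(profile):
    print(option)
```

Each rule returns a list of options rather than a single prescription because the propositions are framed as things support systems "may need" to do and are not mutually exclusive.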

Discussion

Numerous foundations, governmental agencies, consultants, and universities are serving in the role of support systems as they deliver training, technical assistance, and other CB strategies to build practitioners’ capacity to adopt and implement EBIs. The aim of this review was to create a beginning theory that support systems might use to guide their CB efforts. We identified numerous frameworks that described CB strategies and factors that may influence their design and effectiveness. By reviewing these frameworks, we were able to create a beginning theory that consolidates salient variations in EBIs and contexts and begins to identify propositions for tailoring CB contingent on those variations.

As detailed in Tables 2 and 3, multiple frameworks supported the identified variations in EBIs and contexts and the propositions for tailoring CB strategies. Findings should be interpreted with caution, however, as the search was not exhaustive and the strength of the evidence base in support of identified frameworks was mixed. While the majority of the 24 frameworks were based on systematic reviews of the literature or empirical studies (n = 16), 8 were derived from authors’ applied experience or from a nonsystematic retrieval and integration of existing literature and theory. Additional intervention research is needed to compare the effectiveness of different CB designs across variations in EBIs and contexts. The beginning theory offers a starting point for guiding CB but will require further research to verify the identified variations in EBIs and contexts and to assess their role in moderating the effectiveness of different CB designs.

Implications for Practice

Little is known about how to design CB strategies and even less about how best to tailor them to practitioners’ varying needs. When interviewed, CB providers have reported that their mandate was “too vague,” “underformalized,” and “ill-defined” (Clavier et al., 2012). The proposed beginning theory represents an initial step toward filling this gap. Support systems might use the beginning theory (Figure 2) to characterize EBIs and contexts. They might then use the list of propositions (Table 3) to design their CB strategies contingent on those characterizations. For example, if a support system planned to promote after school practitioners’ use of obesity prevention EBIs, they might assess available EBIs and identify several whose complexity and uncertainty levels are low. They would then tailor capacity building to focus on adapting EBIs to the local context and implementing them with fidelity to their core components. Support systems might also assess the after school system’s context. Are the after school programs centrally organized under the direction of a regional office or decentralized with decision making controlled locally? Who are the key stakeholders involved in both adoption and implementation and how well do the proposed EBIs fit with their values? What general and EBI-specific capacity do after schools have to implement the EBIs? Following this initial assessment, the support system might then further tailor CB strategies to fit the context following the propositions outlined in Table 3.

As another example, a support system may want to promote public health practitioners’ use of EBIs to create health-supporting policies (Leeman, Myers, Ribisl, & Ammerman, 2015). EBIs that target changes to policy often have high levels of both complexity and uncertainty. The support system, therefore, might employ CB strategies that capitalize on practitioners’ tacit knowledge of what works in their community (Kothari et al., 2012) by facilitating peer networking and providing data-based feedback on what is working and what is not. The focus of CB would be on engaging stakeholders in collaboratively developing a shared understanding of both problems and potential solutions.

Conclusions

To have wide-reaching and lasting impact, the dissemination of EBIs needs to be coupled with strategies that build practitioners’ capacity to adopt and implement interventions within their diverse and ever-changing practice contexts. The proposed theory offers much-needed guidance on how to tailor capacity-building strategies to address variations in interventions and practice contexts. Additional research is needed to further develop and test the theory.

Acknowledgments

Funding

The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This publication was supported by Cooperative Agreement Numbers U48DP00194409, U48DP001949, U48DP0010909, and U48DP001934 from the Centers for Disease Control and Prevention and the National Cancer Institute to Cancer Prevention and Control Research Network sites. Marieke A. Hartman was supported by the postdoctoral fellowship, University of Texas School of Public Health Cancer Education and Career Development Program, National Cancer Institute (NIH Grant R25CA57712) and NIH Grant R01CA163526. Esther Thatcher was supported by the National Institute of Nursing Research (Grant 5T32NR00856). The findings and conclusions presented here are those of the authors and do not necessarily represent the official position of the Centers for Disease Control and Prevention or the National Institutes of Health.

Footnotes

Declaration of Conflicting Interests

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Additional supporting information is available at heb.sagepub.com/supplemental.

References

- Atun R, de Jongh T, Secci F, Ohiri K, Adeyi O. Integration of targeted health interventions into health systems: A conceptual framework for analysis. Health Policy and Planning. 2010;25:104–111. doi: 10.1093/heapol/czp055.

- Briss PA, Brownson RC, Fielding JE, Zaza S. Developing and using the guide to community preventive services: Lessons learned about evidence-based public health. Annual Review of Public Health. 2004;25:281–302. doi: 10.1146/annurev.publhealth.25.050503.153933.

- Clavier C, Senechal Y, Vibert S, Potvin L. A theory-based model of translation practices in public health participatory research. Sociology of Health & Illness. 2012;34:791–805. doi: 10.1111/j.1467-9566.2011.01408.x.

- Contandriopoulos D, Lemire M, Denis JL, Tremblay E. Knowledge exchange processes in organizations and policy arenas: A narrative systematic review of the literature. Milbank Quarterly. 2010;88:444–483. doi: 10.1111/j.1468-0009.2010.00608.x.

- Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science. 2009;4:50. doi: 10.1186/1748-5908-4-50.

- DeGroff A, Schooley M, Chapel T, Poister TH. Challenges and strategies in applying performance measurement to federal public health programs. Evaluation and Program Planning. 2010;33:365–372. doi: 10.1016/j.evalprogplan.2010.02.003.

- Denis JL, Hebert Y, Langley A, Lozeau D, Trottier LH. Explaining diffusion patterns for complex health care innovations. Health Care Management Review. 2002;27(3):60–73. doi: 10.1097/00004010-200207000-00007.

- Dreisinger ML, Boland EM, Filler CD, Baker EA, Hessel AS, Brownson RC. Contextual factors influencing readiness for dissemination of obesity prevention programs and policies. Health Education Research. 2012;27:292–306. doi: 10.1093/her/cyr063.

- Durlak JA, DuPre EP. Implementation matters: A review of research on the influence of implementation on program outcomes and the factors affecting implementation. American Journal of Community Psychology. 2008;41:327–350. doi: 10.1007/s10464-008-9165-0.

- Elwyn G, Taubert M, Kowalczuk J. Sticky knowledge: A possible model for investigating implementation in healthcare contexts. Implementation Science. 2007;2:44. doi: 10.1186/1748-5908-2-44.

- Fernandez ME, Melvin CL, Leeman J, Ribisl KM, Allen JD, Kegler MC, … Hebert JR. The Cancer Prevention and Control Research Network: An interactive systems approach to advancing cancer control implementation research and practice. Cancer Epidemiology, Biomarkers & Prevention. 2014;23:2512–2521. doi: 10.1158/1055-9965.EPI-14-0097.

- Fixsen DL, Naoom SF, Blase KA, Friedman RM, Wallace F. Implementation research: A synthesis of the literature. Tampa: University of South Florida, National Implementation Research Network; 2005.

- Flaspohler P, Duffy J, Wandersman A, Stillman L, Maras MA. Unpacking prevention capacity: An intersection of research-to-practice models and community-centered models. American Journal of Community Psychology. 2008;41:182–196. doi: 10.1007/s10464-008-9162-3.

- Greenhalgh T, Robert G, Macfarlane F, Bate P, Kyriakidou O. Diffusion of innovations in service organizations: Systematic review and recommendations. Milbank Quarterly. 2004;82:581–630. doi: 10.1111/j.0887-378X.2004.00325.x.

- Hannon PA, Maxwell AE, Escoffery C, Vu T, Kohn M, Leeman J, … DeGroff A. Colorectal Cancer Control Program grantees’ use of evidence-based interventions. American Journal of Preventive Medicine. 2013;45:644–648. doi: 10.1016/j.amepre.2013.06.010.

- Kitson AL, Rycroft-Malone J, Harvey G, McCormack B, Seers K, Titchen A. Evaluating the successful implementation of evidence into practice using the PARiHS framework: Theoretical and practical challenges. Implementation Science. 2008;3:1. doi: 10.1186/1748-5908-3-1.

- Kothari A, Rudman D, Dobbins M, Rouse M, Sibbald S, Edwards N. The use of tacit and explicit knowledge in public health: A qualitative study. Implementation Science. 2012;7:20. doi: 10.1186/1748-5908-7-20.

- Lanham HJ, Leykum LK, Taylor BS, McCannon CJ, Lindberg C, Lester RT. How complexity science can inform scale-up and spread in health care: Understanding the role of self-organization in variation across local contexts. Social Science & Medicine. 2013;93:194–202. doi: 10.1016/j.socscimed.2012.05.040.

- Le LT, Anthony B, Broheim SM, Holland CM, Perry DF. A technical assistance model for guiding service and systems change. Journal of Behavioral Health Services & Research. 2014 doi: 10.1007/s11414-014-9439-2. Advance online publication.

- Leeman J, Baernholdt M, Sandelowski M. Developing a theory-based taxonomy of methods for implementing change in practice. Journal of Advanced Nursing. 2007;58:191–200. doi: 10.1111/j.1365-2648.2006.04207.x.

- Leeman J, Calancie L, Hartman M, Escoffery C, Hermman A, Tague L, … Samuel-Hodge C. What strategies are used to build practitioners’ capacity to implement community-based interventions and are they effective? A systematic review. Implementation Science. 2015 doi: 10.1186/s13012-015-0272-7. Advance online publication.

- Leeman J, Myers A, Ribisl K, Ammerman A. Disseminating policy and environmental change interventions: Insights from obesity prevention and tobacco control. International Journal of Behavioral Medicine. 2015;22:301–311. doi: 10.1007/s12529-014-9427-1.

- Leeman J, Sommers J, Leung MM, Ammerman A. Disseminating evidence from research and practice: A model for selecting evidence to guide obesity prevention. Journal of Public Health Management and Practice. 2011;17:133–140. doi: 10.1097/PHH.0b013e3181e39eaa.

- Levac D, Colquhoun H, … Brien KK. Scoping studies: Advancing the methodology. Implementation Science. 2010;5:69. doi: 10.1186/1748-5908-5-69.

- May CR, Finch T, Ballini L, MacFarlane A, Mair F, Murray E, … Rapley T. Evaluating complex interventions and health technologies using normalization process theory: Development of a simplified approach and web-enabled toolkit. BMC Health Services Research. 2011;11:245. doi: 10.1186/1472-6963-11-245.

- May CR, Mair F, Finch T, MacFarlane A, Dowrick C, Treweek S, … Montori VM. Development of a theory of implementation and integration: Normalization process theory. Implementation Science. 2009;4:29. doi: 10.1186/1748-5908-4-29.

- McCormack L, Sheridan S, Lewis M, Boudewyns V, Melvin CL, Kisteler C, … Lohr KN. Communication and dissemination strategies to facilitate the use of health-related evidence. Evidence Reports/Technology Assessments, No. 213. 2013 doi: 10.23970/ahrqepcerta213. Retrieved from http://effectivehealthcare.ahrq.gov/ehc/products/433/1756/medical-evidence-communication-executive-131120.pdf.

- Mendel P, Meredith LS, Schoenbaum M, Sherbourne CD, Wells KB. Interventions in organizational and community context: A framework for building evidence on dissemination and implementation in health services research. Administration and Policy in Mental Health. 2008;35(1–2):21–37. doi: 10.1007/s10488-007-0144-9.

- Meyers DC, Durlak JA, Wandersman A. The quality implementation framework: A synthesis of critical steps in the implementation process. American Journal of Community Psychology. 2012;50:462–480. doi: 10.1007/s10464-012-9522-x.

- Michie S, van Stralen MM, West R. The behaviour change wheel: A new method for characterising and designing behaviour change interventions. Implementation Science. 2011;6:42. doi: 10.1186/1748-5908-6-42.

- Mitton C, Adair CE, McKenzie E, Patten SB, Waye Perry B. Knowledge transfer and exchange: Review and synthesis of the literature. Milbank Quarterly. 2007;85:729–768. doi: 10.1111/j.1468-0009.2007.00506.x.

- Ogilvie D, Cummins S, Petticrew M, White M, Jones A, Wheeler K. Assessing the evaluability of complex public health interventions: Five questions for researchers, funders, and policymakers. Milbank Quarterly. 2011;89:206–225. doi: 10.1111/j.1468-0009.2011.00626.x.

- Rabin BA, Brownson RC, Haire-Joshu D, Kreuter MW, Weaver NL. A glossary for dissemination and implementation research in health. Journal of Public Health Management & Practice. 2008;14:117–123. doi: 10.1097/01.PHH.0000311888.06252.bb.

- Rogers EM. Diffusion of Innovations. New York, NY: Free Press; 2003.

- Rycroft-Malone J. The PARIHS framework—A framework for guiding the implementation of evidence-based practice. Journal of Nursing Care Quality. 2004;19:297–304. doi: 10.1097/00001786-200410000-00002.

- Scheirer MA. Linking sustainability research to intervention types. American Journal of Public Health. 2013;103:e73–e80. doi: 10.2105/AJPH.2012.300976.

- Snowden DJ, Boone ME. A leader’s framework for decision making. Harvard Business Review. 2007 Nov;:1–9.

- Tabak RG, Khoong EC, Chambers DA, Brownson RC. Bridging research and practice: Models for dissemination and implementation research. American Journal of Preventive Medicine. 2012;43:337–350. doi: 10.1016/j.amepre.2012.05.024.

- Wandersman A, Chien VH, Katz J. Toward an evidence-based system for innovation support for implementing innovations with quality: Tools, training, technical assistance, and quality assurance/quality improvement. American Journal of Community Psychology. 2012;50:445–459. doi: 10.1007/s10464-012-9509-7.

- Wandersman A, Duffy J, Flaspohler P, Noonan R, Lubell K, Stillman L, … Saul J. Bridging the gap between prevention research and practice: The interactive systems framework for dissemination and implementation. American Journal of Community Psychology. 2008;41:171–181. doi: 10.1007/s10464-008-9174-z.

- Ward VL, House AO, Hamer S. Knowledge brokering: Exploring the process of transferring knowledge into action. BMC Health Services Research. 2009;9:12. doi: 10.1186/1472-6963-9-12.

- Weiner BJ. A theory of organizational readiness for change. Implementation Science. 2009;4:67. doi: 10.1186/1748-5908-4-67.

- Yuan CT, Nembhard IM, Stern AF, Brush JE, Jr, Krumholz HM, Bradley EH. Blueprint for the dissemination of evidence-based practices in health care. Issue Brief (Commonwealth Fund) 2010;86:1–16.
