Abstract

The brain is highly energy consuming, therefore is under strong selective pressure to achieve cost-efficiency in both cortical connectivities and activities. However, cost-efficiency as a design principle for cortical activities has been rarely studied. Especially it is not clear how cost-efficiency is related to ubiquitously observed multi-scale properties: irregular firing, oscillations and neuronal avalanches. Here we demonstrate that these prominent properties can be simultaneously observed in a generic, biologically plausible neural circuit model that captures excitation-inhibition balance and realistic dynamics of synaptic conductance. Their co-emergence achieves minimal energy cost as well as maximal energy efficiency on information capacity, when neuronal firing are coordinated and shaped by moderate synchrony to reduce otherwise redundant spikes, and the dynamical clusterings are maintained in the form of neuronal avalanches. Such cost-efficient neural dynamics can be employed as a foundation for further efficient information processing under energy constraint.

Author summary

The adult human brain consumes more than 20% of the resting metabolism, despite constituting only 2% of the body’s mass. Most energy is consumed by the cerebral cortex with billions of neurons, mainly to restore ion gradients across membranes for generating and propagating action potentials and synaptic transmission. Even small increases in the average spike rate of cortical neurons could cause the cortex to exceed the energy budget for the whole brain. Consequently, the cortex is likely to be under considerable selective pressure to reduce spike rates but to maintain efficient information processing. Experimentally, cortical activities are ubiquitously observed at multiple scales with prominent features: irregular individual firing, synchronized oscillations and neuronal avalanches. Do these features of cortical activities reflect cost-efficiency on the aspect of information capacity? We employ a generic but biologically plausible local neural circuit to compare various dynamical modes with different degrees of synchrony. Our simulations show that these features of cortical activities can be observed simultaneously and their co-emergence indeed robustly achieves maximal energy efficiency and minimal energy cost. Our work thus suggests that basic neurobiological and dynamical mechanisms can support the foundation for efficient neural information processing under the energy constraint.

Introduction

Complex spatiotemporal patterns are ubiquitously observed in spontaneous cortical activities in vitro and in vivo, with prominent features at multiple scales: irregular individual firing [1–3], synchronized oscillations [4–6] and neuronal avalanches [7–10]. Specially, neuronal avalanches form spatiotemporal clusters of synchronized activities interrupted by periods of silence, yet individual neurons discharge spikes in a rather random way, which is close to a Poisson process [10]. The sizes of spiking clusters in neuronal avalanches follow a power-law distribution, suggesting that such activities are generated by a scale-invariant dynamics, as the system poised at a critical state [11, 12]. Therefore, self-organized criticality has been considered as an overriding organizing mechanism for the cortical activities at different scales [13–15].

These multi-scale cortical activities are believed to have different implications in information processing. Firstly, irregular firing can be robustly generated in large-size networks, in which excitatory and inhibitory currents to each neuron are dynamically balanced, as a result to increase the accuracy and speed of information relay in terms of firing rate [2]. Secondly, synchronous oscillations are thought to be crucial for neural integration, cognition, and behavior [4–6]. Abnormally strong synchrony can indicate dysfunction of the underlying cortical network, e.g., excessive synchrony during epileptic seizures [16] and Parkinson’s disease [17], while abnormally weak synchrony can be associated with disorders such as schizophrenia [18] and autism [19]. Finally, neuronal avalanches have been demonstrated to optimize the response range of stimulus intensities [20, 21], the amount of information that can be stored and transferred [7, 22], the variability of spontaneous synchrony [23] to allow flexible switching between states, and the information representation in an adaptive sensory neuronal network [15, 24]. Consequently, complex spatiotemporal patterns are significant on numerous aspects of neural information processing.

However, cortical activities should be constrained by its restricted energy budget. Actually, the human brain consumes 20% of the body’s energy despite constituting only 2% of the body’s mass. Thus, optimal brain functioning requires careful balancing of the brain’s energy budget. Nonetheless, the brain is remarkably energy-efficient when compared to the computer CPU [25], since neurons fire sparsely and the majority of them are at quiescent state for any given time [26]. Cost-efficiency is therefore supposed to be an important organizing principle for cortical connectivities and activities, and should be reflected in the above-mentioned features of cortical activities. This concept has been extraordinarily successful in explaining brain structure, including the scaling between white and gray matters across species [27], the spatial placement of the neural components [28, 29] with wiring length minimization and the features of brain connectome by a trade-off with functional values [30, 31]. It has also been employed to well explain optimal behavioral patterns [32]. Therefore, it is highly desirable to investigate whether the principle of cost-efficiency is reflected in the ubiquitously observed features in cortical activities.

To assess the impact of these dynamical features on energy consumption and information processing, we employ a generic but biologically plausible neural circuit to compare various dynamical modes with different synchrony degrees. Since cortical energy usage is dominated by the generation and propagation of action potentials and synaptic transmission, the energy cost is generally proportional to the mean firing rate and can be roughly estimated by the spike rate. On the other hand, information processing and transmission is limited by the repertoire of different activated configurations available to the population, whose extent can be quantified by the entropy H, also known as information capacity [33, 34]. H is important because it defines an upper limit on various aspects of information processing, e.g., a population with low entropy will present a bottleneck for information transmission in the cortex. Therefore, following Ref. [35], the energy efficiency here is introduced as information capacity per energy unit. In this way, our results show that irregular firing, synchronized oscillations and neural avalanches can be observed simultaneously in the regime of moderate synchrony, while their co-emergence indeed robustly achieves maximal energy efficiency and minimal spike rate in comparison to the other synchrony regimes. The superior efficiency at moderate synchrony is attributed to the dynamical mechanism for coordinating and shaping individual firing to reduce otherwise redundant spikes. Thus, co-emergence of the experimentally observed multi-scale cortical activities achieves cost-efficiency in terms of information capacity.

Results

Neural circuit model

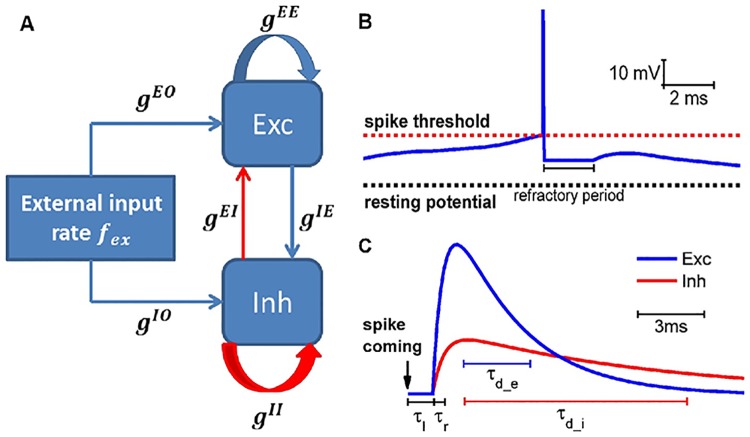

Here we consider a generic model of neuronal networks with basic biological characteristics: excitation-inhibition (E-I) balance, conductance-based synaptic currents and realistic synaptic dynamics. The model was proposed in [36] to study the emergence of gamma oscillations from sparse firing of neurons. We simulate large random networks of E-I spiking neurons with E-I ratio γ = 4: 1 and interconnection probability C = 0.2, sketched in Fig 1A. Besides, each neuron receives some independent external excitatory projections, which represent input from other neural circuits or external stimuli. Neuronal spiking dynamics is described by the integrate-and-fire (IF) model with refractory period and leaky current (an example in Fig 1B), while conductance-based synaptic currents are used to model the synaptic transmission from presynaptic neurons to postsynaptic neurons (details in Methods). When a presynaptic spike arrives, the unitary conductance change is modelled as a bi-exponential function with conduction delay time τl, rise time τr and decay time τd (see Fig 1C). Moreover, the synaptic strengths are chosen to realize an E-I balanced state, in which neurons fire irregularly [2, 37] (details in Methods). The synaptic decay times were found important to determine the frequencies of the oscillations [36]. In this study, we explore the parameter space of E-I synaptic decay times (τd_e, τd_i) to investigate various dynamical modes and to study whether cost-efficiency on the aspect of information capacity can be achieved.

Fig 1. Schematic representation of network architecture, neuronal integration and spike, synaptic conductance traces.

(A) The local recurrent neuronal network consists of excitatory (Exc) and inhibitory (Inh) spiking neurons with synaptic connections (blue, excitatory; red, inhibitory) and inputs from other neural circuits or external stimuli. (B) The voltage trace of one IF neuron with refractory period and leaky current. (C) The unitary conductance response to a pre-synaptic spike is described by a bi-exponential function with latency τl, rise time τr and decay time τd.

Co-emergence of multi-scale cortical activities

The above model has been previously shown to generate sparsely synchronized oscillations, which consist of irregular and sparse individual spikes but synchronized oscillating population activities [36]. Two different underlying dynamical mechanisms have been discovered: E-I loop for gamma oscillations (30 ∼ 80 Hz) or inhibition-inhibition (I-I) loop for sharp-wave ripples (∼200 Hz), which happens at two different parameter regions of (τd_e, τd_i), where excitatory currents or inhibitory currents dominate the fast dynamics, respectively [36]. Here we focus on the former one with a continuous transition from asynchronous states to synchronized states induced by the E-I loop for gamma oscillations.

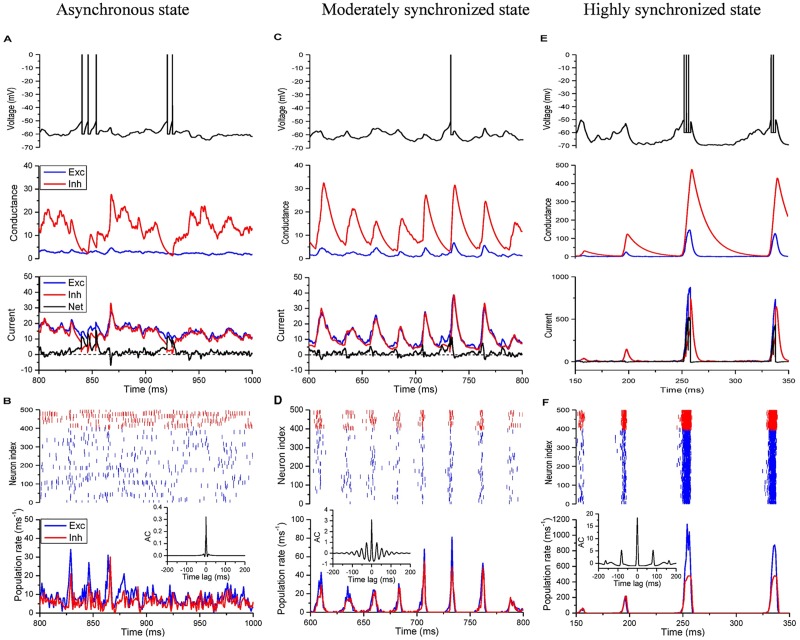

In Fig 2, we show three examples with different synchrony degrees (synchrony defined in Methods):

Fig 2. Multi-scale dynamics of E-I balanced network with various synchrony degree.

Left panel: asynchronous state (τd_e = 6 ms, τd_i = 6 ms); Middle panel: moderately synchronized state (τd_e = 4 ms, τd_i = 10 ms); Right panel: highly synchronized state (τd_e = 2 ms, τd_i = 14 ms). (A, C, E) Time series of membrane potential, input conductances, and input currents of a randomly selected neuron. (B, D, F) Network activity. Top, raster plot of a subset 500 neurons (Exc 400 (blue), Inh 100 (red)); bottom, the average excitatory and inhibitory population activity in 1-ms bins; inset, autocorrelation (AC) of the excitatory population activity. Middle and right panels show that the population rhythm is mainly determined by inhibitory decay time τd_i, and the delayed negative feedback from inhibitory population suppresses the firing of the excitatory population, leaving a window for integration, whose size controls the burst of individual activities (C, E).

Asynchronous irregular state (τd_e = 6 ms, τd_i = 6 ms);

Moderately synchronized state (τd_e = 4 ms, τd_i = 10 ms);

Highly synchronized state (τd_e = 2 ms, τd_i = 14 ms).

For the first case, the individual neuron fires spikes irregularly, due to the incoming E-I balanced currents with their mean cancelled and large fluctuations left (Fig 2A), while the population activity is asynchronous (Fig 2B) [37]. Secondly, individual spiking is driven by commonly modulated E-I conductance (Fig 2C), due to the moderately synchronized population activity (Fig 2D). The resulting currents to each neuron are tightly coupled with a little time lag of inhibition behind excitation, closely resembling the observation in in vivo intracellular recordings [38]. Finally, the fast dynamics is dominated by the excitatory currents (Fig 2E) and the population activity is highly synchronized by strong E-I loop (Fig 2F). And each neuron is driven by the feedback currents with large E-I time lag, which allow neurons to fire once or even more spikes in each lag window (Fig 2E). Therefore, with different parameters (τd_e, τd_i), different cortical activities at both neuron and population levels can be simultaneously generated in this model.

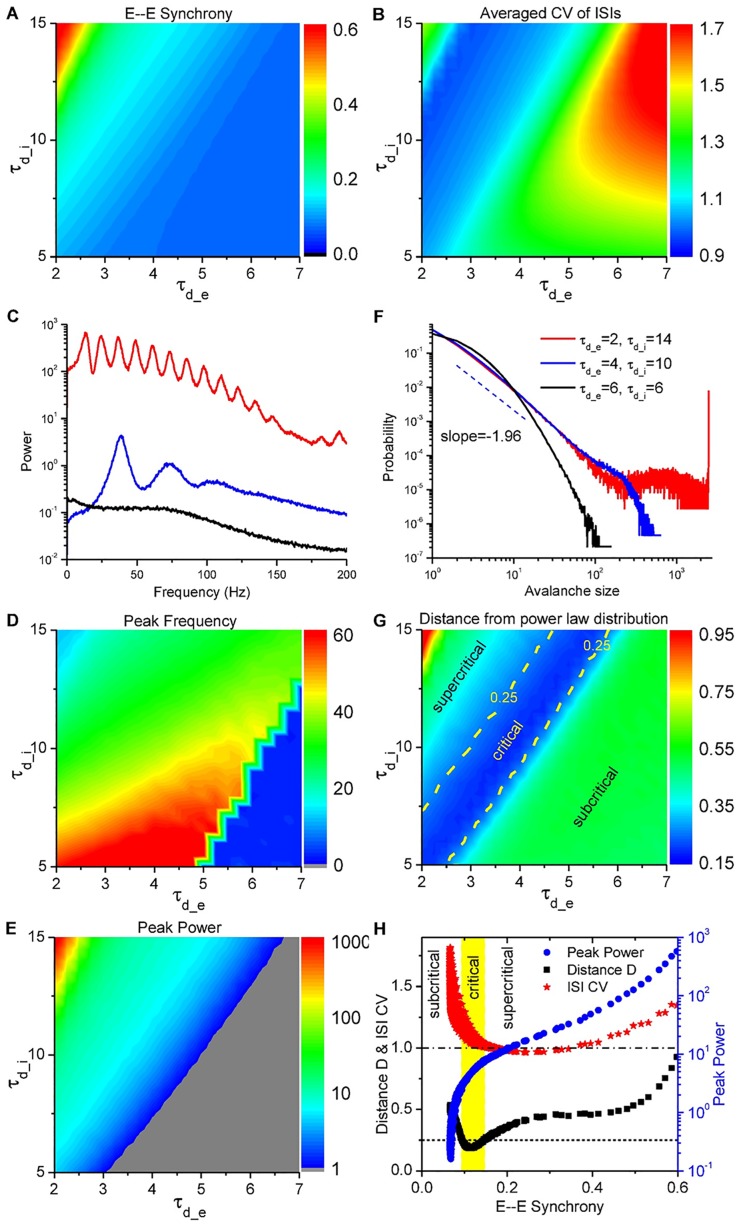

In summary, by decreasing τd_e and increasing τd_i, stronger and stronger synchrony can be induced in the population activities as shown in Fig 3A, while individual spikes are still irregular as shown in Fig 3B. That is because, faster excitation and slower inhibition lead to the formation of a stronger E-I delayed-feedback loop [6, 36, 39]. As a result, increasing synchrony will induce the emergence of collective oscillations, where the maximal power shifts to nonzero frequencies in the power spectra of the population activities as shown in Fig 3C and 3D, and the maximal power also increases with the synchrony degree as shown in Fig 3E. On the other hand, increasing either synchrony or τd_i slows down the population rhythm (Fig 3D). Actually, after one bump of excitatory and inhibitory activities, another round cannot be initiated until the residual inhibitory conductance decays to low enough values to be conquered by the external excitatory inputs (Fig 2C and 2E). Therefore, large τd_i (∼10 ms) will limit the rhythm of collective oscillations into gamma band (30 ∼ 80 Hz) (Fig 3D), which is thought to be important for sensory processing, motor activity, and cognitive functions [5].

Fig 3. Co-existence of multi-scale cortical activities at moderately synchronized states.

(A) Average pairwise 1-ms synchrony between excitatory neurons (E—E Synchrony); (B) Average CV (standard deviation/mean) of the inter-spike intervals (ISIs) over the excitatory population; (C) Power spectra of population activity for 3 different parameter sets indicated in (F); (D) Peak frequency; (E) Peak power. (F) Avalanche size distributions for 3 different parameter sets. (G) Distance of avalanche size distribution from the best-fitted power-law distribution; (H) ISI CV (red), distance from power-law (black) and peak power (blue) vs. E—E Synchrony, showing the co-existence of irregular firing, synchronized oscillations and neuronal avalanches at moderately synchronized states. (A, B, D, E, G) in the parameter space (τd_e, τd_i) (unit: ms).

Moreover, synchronized oscillations with different synchrony degrees temporally split population activities into random clusters, presenting subcritical, critical, or supercritical avalanche dynamics. Here the avalanches are characterized following the spike-based avalanche analysis in vivo of pyramidal neurons [10] (details in Methods). The subcritical dynamics has an exponentially decaying avalanche size distribution; the supercritical one has much more chance of large-size avalanches, while the critical dynamics shows a power-law avalanche size distribution. The avalanche size distributions for the above three examples are plotted in Fig 3F. We can find that the moderately synchronized case is critical, while the asynchronous one is subcritical and the highly synchronized one is supercritical, which is also supported by the distributions of spike number and duration in each avalanche, and waiting time between two consecutive avalanches (see S1 Fig). The criticality at the moderately synchronized states can also be indicated by the exponentially-modulated and small-amplitude sinusoidal autocorrelation of the excitatory population activity, as shown in Fig 2D, bottom inset. To more quantitatively characterize the criticality, we introduce here a distance D of the avalanche size distribution from the best-fitted power-law function, with respect to the average avalanche size (details in Methods). From Fig 3G, we find that neuronal avalanche, as the critical dynamics, coincides with the moderately synchronized states, while the subcritical dynamics occurs in the asynchronous region and the supercritical one in the highly synchronized region. Besides, the moderately synchronized states also correspond to the oscillation onset (also indicated in Fig 2D, bottom inset), where the oscillation power goes through a clear transition from low to high as shown in Fig 3H. Therefore, neuronal avalanches and gamma oscillations emerge jointly, which is consistent with in vivo observations [8, 40]. Specifically, neuronal avalanches are achieved by aggregating different groups of neurons into clusters at different time instants and sparsely synchronized oscillations emerge when the clusters are organized with typical time-scales.

Furthermore, moderate synchrony will feedback to shape individual spikes to be irregularly tonic with coefficients of variation (CV) close to 1 as shown in Fig 3H for the excitatory population, where CV is defined as the standard deviation over the mean of inter-spike intervals (ISIs) (details in Methods). Distributions of CV separately for excitatory and inhibitory neurons in the various dynamical states with different synchrony degree are also given in S2 Fig for various parameter sets (τd_e, τd_i). The distribution profiles in the critical states with moderate synchrony are consistent with those of experimental data in various cortex areas, as shown in [41, 42]. As shown in Fig 3B, CV is larger than 1 in the whole asynchronous region, indicating burst in the spikes of individual neurons, that is, several spikes in a short interval followed by a long period of silence. And CV is larger at larger τd_e and τd_i. The generation of burst is due to the effects of both conductance-based currents and slow synaptic conductance. Firstly, large bumps of conductance inputs to each neuron drastically reduce neuronal effective membrane time constant, so that neurons response promptly to positive currents and fire spikes more frequently [43, 44]. Secondly, the slow synaptic dynamics will induce long time-scale autocorrelation of net currents [45, 46], whose fluctuations drive the postsynaptic neurons to generate grouped spikes with short ISIs in between (Fig 2A and S3 Fig). As a result, larger synaptic decay time introduces longer excursion of current fluctuations and induces more bursts in individual activities as shown in Fig 3B [46]. On the other hand, in the highly synchronized region, the currents can also drive neurons to show burst activities (S3 Fig), because of the large E-I time lag in currents. However, in the moderately synchronized region, bursts are reduced by the instantaneously correlated and moderately modulated E-I currents (Figs 2C, 3H and S3 Fig). That is because the current fluctuations are smoothed out by the modulation and neuronal integration is limited in the little E-I time lag of rising phase (Fig 2C).

Cost-efficient information capacity

To examine whether cost-efficiency can be achieved on the aspect of information capacity, here we first introduce the definition of the population spike pattern and its corresponding energy cost and efficiency, in analogy to the work by Levy and Baxter [35]. The population spike pattern is defined within a time window Δτ in two scenarios: Binary scenario, each neuron has just two states, spiking or non-spiking; Analog scenario, neuron’s state is represented by its spike count.

Assume that a resting neuron consumes r unit of energy within Δτ, due to its leaky current, and a spike costs one extra unit of energy. In this way, 1/r measures the relative energy constraint level on the spike pattern (details in Methods). If we consider a population with n neurons, which fire m spikes on average in each time window Δτ, the generated pattern can be described by its activity level ρ = m/n, energy cost E = nr + m and energy efficiency η = H/E, where the entropy H measures the abundance of different activated configurations available to the population, representing its information capacity (detailed formulation in Methods). Here we just consider excitatory neurons in the pattern, so the activity level can also be given as ρ = vE Δτ, where vE is the mean firing rate of excitatory neurons.

Actually, with the given activity level ρ, the theoretical upper-bound efficiency has been derived by Levy and Baxter [35], based on the maximal entropy principle [47] (a unified derivation also presented in Methods: Energy efficiency optimization). That is, for each given ρ, the optimal efficiency ηopt is written as

| (1) |

| (2) |

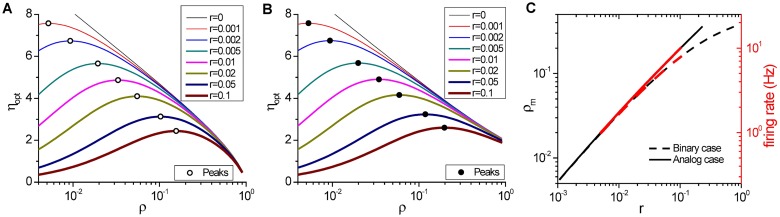

where f(ρ) = −ρlog2 ρ − (1 − ρ)log2(1 − ρ) represents the Shannon’s entropy of a binary event with probability ρ (more details discussed in Methods: Energy efficiency optimization). Note that the optimal efficiency is independent of the number of neurons n but dependent on the parameter r. As shown in Fig 4, increasing r from 0 to ∞ will shift the value ρm for the maximal ηopt from ρm = 0 to ρm = 0.5 in binary scenario or from ρm = 0 to ρm = 1 in analog scenario. Therefore, a pattern with a lower firing rate vE does not always imply a higher energy efficiency ηopt.

Fig 4. Effect of relative resting energy r on optimized energy efficiency ηopt(ρ).

(A, B) The optimized energy efficiency ηopt(ρ) vs. activity level ρ for various values of relative resting energy r in both binary (A) and analog scenarios (B). Larger r shifts the value of ρm for maximal ηopt(ρ) monotonically from ρm → 0 at r = 0 to ρm = 0.5 in binary scenario (open circles in (A)) or ρm = 1 in analog scenario (solid points in (B)) at r → ∞. (C) The monotonic dependence of ρm as well as its corresponding firing rate v (v = ρ/Δτ, Δτ = 20 ms) on r in both binary (black dashed line) and analog scenarios (black solid line). To achieve the maximal energy efficiency ηopt(ρ), the neuronal firing rate is constrained in the range of 1 ∼ 8 Hz for binary patterns (red dashed line) or 1 ∼ 10 Hz for analog patterns (red solid line) with r in the empirical range 0.005 ∼ 0.1, respectively.

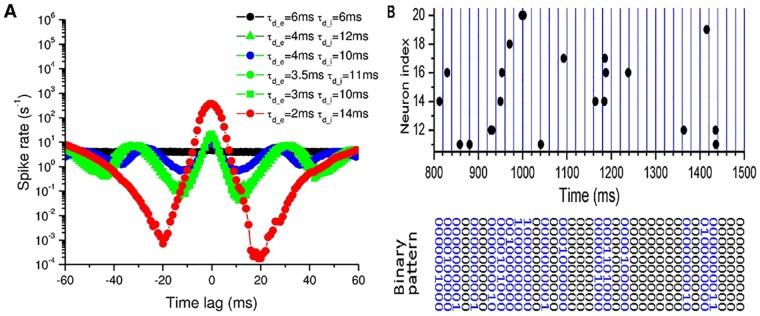

Generally, the spatiotemporal spike patterns should be discretized by both spatial and temporal resolutions. The former can be naturally set by one neuron, while the latter needs a typical time scale. From the viewpoint of population coding, a pattern is reasonable to include the co-activated neurons within this typical time window, which therefore should be determined by the time scale of cross correlations between neurons [48]. In our simulation, spike series of different neurons are coincident within 20 ms for most parameter pairs (τd_e, τd_i) as shown in Fig 5A, thus the spike patterns can be splited into bins with Δτ = 20 as shown in Fig 5B. Such a time scale is also biologically plausible in neural circuit, e.g., reading out the patterns by downstream neurons through the synaptic current time of a few milliseconds and the membrane time of 10 ∼ 20 ms, and learning by spike-timing dependent plasticity (STDP) [49] with precision of spike timing < 20 ∼ 30 ms. Actually, the time window can not be larger, otherwise the spike pattern tends to involve more than one spikes for each neuron, which is not energy efficient as discussed in Methods: Energy efficiency optimization. We have also checked smaller bin sizes for the spike patterns, and found that decreasing Δτ will weaken the advantage of the critical regime in terms of energy efficiency, as shown in S5 Fig. Therefore, we select Δτ = 20 ms as the proper time window to split the spike trains into patterns.

Fig 5. Definition of spatiotemporal spike patterns.

(A) Examples of cross-correlogram between neuron pairs for various parameter sets (τd_e, τd_i) show that spike coincidence happens within 20-ms windows; the average firing rate of one neuron is plotted relative to the time at which the other neuron spikes, averaged over 2000 pairs of randomly selected excitatory neurons. Black, blue, red points are the respective subcritical, critical supercritical cases as exampled in Fig 2. Three more cases around the critical region are shown as green points. (B) Schematics of mapping spiking patterns of 10 randomly selected neurons into binary strings; black, patterns without any spike; blue, binary patterns with spikes.

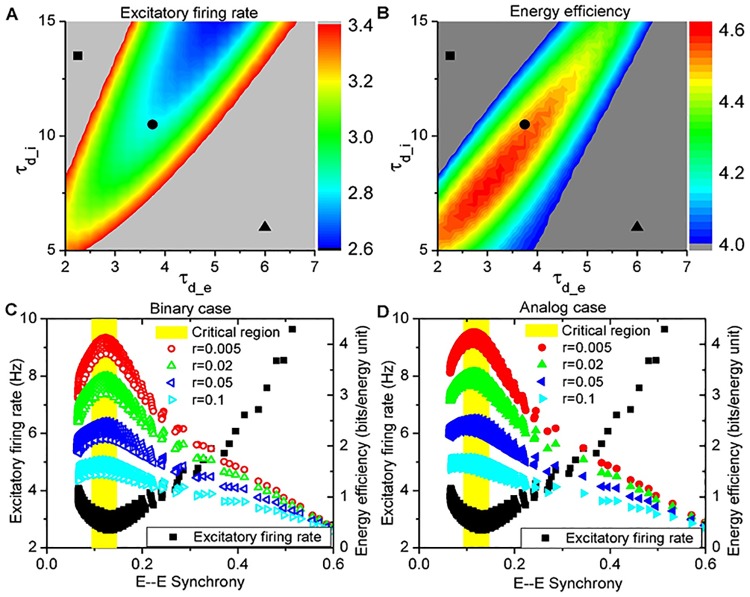

From our simulations, as shown in Fig 6A and 6B, one can find that the firing rate vE is minimal and energy efficiency ηsim is maximal in the parameter region for critical dynamics, where irregular firing, synchronized oscillations and neuronal avalanches emerge altogether. Actually, the inhibitory firing rate vI is also minimal in this region, as shown in S4 Fig. Therefore, the spike patterns of cortical activities with moderate synchrony, where the prominent multi-scale dynamical features emerge together, can achieve cost-efficiency on the aspect of information capacity. What is more, such cost-efficiency is robust in both binary and analog scenarios as shown in Fig 6C and 6D (more data in S6 Fig, upper panel), as long as the parameter r is in the empirical range (0.005 ∼ 0.1) [50–52]. These results are significant because theoretically the optimal energy efficiency ηopt is not always achieved in the pattern with the lowest firing rate as indicated in Eqs (1 and 2) and Fig 4.

Fig 6. Cost-efficient information capacity in the critical region.

(A) Average excitatory firing rate vE; (B) Energy efficiency ηsim in analog scenario at r = 0; (C, D) Energy efficiency ηsim at various r (colors) and average excitatory firing rate vE (black) vs. E—E Synchrony in both binary (C) and analog (D) scenarios. Cost-efficiency is achieved robustly in the critical region across the empirical range of r. n = 40 for all patterns. (A, B) in the parameter space (τd_e, τd_i) (unit: ms).

The minimal firing rate vE in the moderately synchronized states, as shown in Fig 6C and 6D, can be ascribed to the reduction of burst activities through the specific feedback currents. In the asynchronous states, one can find that bursts in the spike trains with intermittent periods of long silence, which is indicated in S3 Fig, make vE slightly larger than that in the critical region. While strong synchrony also drives neurons to show burst activities and much enhances the firing rate vE (S3 Fig), moderate synchrony is just enough to reduce the bursts, which will be generated by fluctuating and balanced currents in the asynchronous states, but avoids to induce burst, when each neuron receives the currents with just a little E-I time lag in the currents (Fig 2C; S3 Fig). Therefore, the moderate synchrony can both coordinate and shape individual spikes to reduce the bursts and render the firing rate vE to be minimal in this critical region, as shown in Fig 6C and 6D. Besides, in the critical region, slower population rhythm at larger τd_i further lowers vE, as indicated in Fig 6A.

Such reduction of burst activities also makes the critical dynamics with moderate synchrony to achieve maximal energy efficiency ηsim robustly, as shown in Fig 6C and 6D. As analyzed in Methods: Energy efficiency optimization, the upper bound ηopt can only be achieved when neurons are active independently with an identical probability, so the energy efficiency ηsim in our simulations is reduced from the corresponding upper bound ηopt by two main sources of correlations—the temporal correlation due to burst and synchronization among neurons. Actually, as shown in S7 Fig from the simulation, increasing CV decreases the energy efficiency in the asynchronous states (synchrony degree < 0.1) for both binary and analog scenarios and various r.

Specifically, in the binary scenario with r = 0, the effect of burst on reducing energy efficiency can be isolated by eliminating the redundant spikes, because just one spike fired by each neuron contributes to the simulated entropy Hsim in each time window Δτ. If these redundant spikes were not taken into consideration in the energy cost, then the energy efficiency can boost from ηsim = Hsim/m to Bηsim = Hsim/mn, with B = m/mn denoting the burst level and mn representing the average number of spiking neurons in each time window Δτ. Thus the reduction of energy efficiency due to the burst can be given as

| (3) |

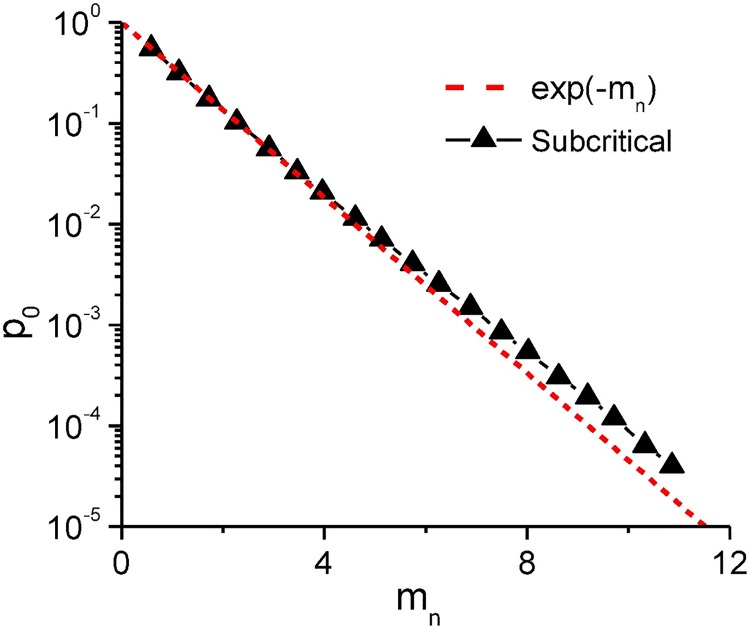

Except for the burst, the other temporal correlation in individual spiking series seems to be ignorable, which can be inferred from the dependence of the probability p0 of empty patterns on the number of spiking neurons mn at various sample size n, as shown in Fig 7. The dependence is fitted well with the ideal case of sparse patterns (mn ≪ n)

| (4) |

where neurons fire spikes in a random way. Thus, in the asynchronous states, neurons seem to be active in a random way except for the burst activities. Therefore, the remaining gap between Bηsim and ηopt can be approximately ascribed to the synchronization, given as

| (5) |

yielding the total reduction of energy efficiency as

| (6) |

Fig 7. Probability of empty patterns.

Dependence of the probability p0 of empty patterns on the number of spiking neurons mn for and the subcritical state in our simulations at various sample size n. Dashed line represents the ideal case with all neurons firing randomly. Parameter set (τd_e, τd_i) is indicated by the triangle in Fig 6A and 6B.

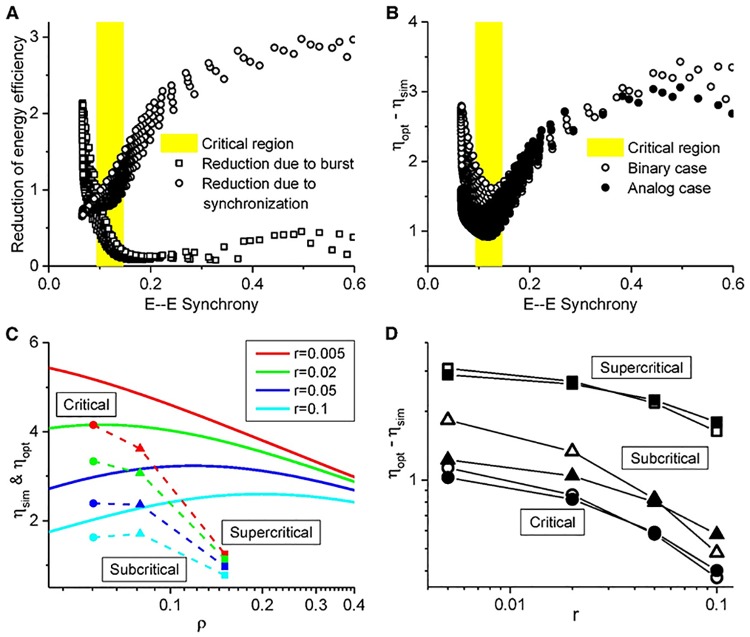

As shown in Fig 3H, red, burst activities can be shaped by moderate synchrony in our simulations, and thus there is a trade-off of their contributions to the reduction of energy efficiency, as shown in Fig 8A for the case r = 0. Interestingly, the total reduction ηopt − ηsim (or RB + RS) is just right minimized in the critical region, as shown in Fig 8B for both binary and analog cases. Actually, in the later case, burst activities also limit the available configurations, whose effect is similar to that by synchronization, although different spike counts within Δτ represent different patterns and spikes are not redundant any more. Such mechanism is robust to minimize the total reduction R and then to maximize the simulated energy efficiency ηsim for any r chosen from the empirical range (0.005 ∼ 0.1), even though larger r shifts the maximum of ηopt to larger ρ, as indicated by the solid lines in Fig 8C, or to the corresponding subcritical and supercritical regions in the parameter space (τd_e, τd_i), as shown in S6 Fig bottom panel. Thus, the energy efficiency reduction ηopt − ηsim keeps minimal in the critical region in both binary and analog scenarios, as shown in Fig 8D, and the simulated energy efficiency ηsim perserved maximal in the critical region pretty well for r ranging from 0.005 to 0.1, as shown in S6 Fig top panel. Therefore, the critical dynamics can robustly achieve a maximal energy efficiency ηsim.

Fig 8. Trade-off in energy efficiency reduction.

(A) Energy efficiency reduction by burst and synchronization in binary scenario; (B) Energy efficiency reduction is minimal in the critical region in both binary and analog scenarios; r = 0 for (A, B). (C) Comparison of simulated energy efficiency ηsim with the upper bound ηopt at various states for various r. The optimum is represented by solid lines and the simulated by symbols. (D) ηopt − ηsim vs. r in both binary (open circles) and analog (solid points) scenarios. Energy efficiency reduction keeps minimal in the critical region at various r in both scenarios. (C, D) Parameters (τd_e, τd_i) are indicated in Fig 6A and 6B with corresponding symbols; n = 40 for all patterns.

Furthermore, the spike patterns generated by the critical dynamics are sparse. Specifically, the minimal firing rate vE reaches around 3 Hz (Fig 6A), against 30 ∼ 80 Hz of the primary population rhythm (Fig 3E), indicating that a single pyramidal cell fires only once in every 10 ∼ 20 population cycles, which is consistent with the experimental observation [53]. This implies that the activity level in each configuration is low (ρ = ΔτvE ∼ 0.06), suggesting that such spatiotemporal spike patterns can be reconciled with the ‘sparse coding’ scheme [26, 54, 55], where a small proportion of neurons fire at any one time and a few spikes can be distributed among a large number of neurons in many different ways. Interestingly, despite being very sparse, the critical dynamics still frequently generates readable configurations with large number of neurons simultaneously activated. Actually, as shown in Fig 9, the frequency of large number of activating neurons, which is comparable to the experimental observation [48], is 2-order larger than that of the asynchronous case. Therefore, such critical dynamics not only achieves cost-efficiency on the aspect of information capacity, but also is feasible for information processing.

Fig 9. Spiking neuron number distribution.

Probability distributions of the activated neuron number for the selected states, indicated in Fig 6A and 6B with the corresponding symbols. n = 40 for all patterns. The distribution in the critical region is close to the experimental data [48] (red).

Discussion

To summarize, biologically realistic synaptic dynamics in E-I balanced networks provides a scheme to generate cortical activities with prominent multi-scale features: irregular individual firing, synchronized oscillations and neuronal avalanches. Interestingly, the generated spike patterns can simultaneously achieve the lowest mean firing rate and the maximal energy efficiency on the aspect of information capacity. Therefore our work establishes the link between the underlying design principle of cost-efficiency and the generically observed features of cortical activities. Such cost-efficient neural dynamics are caused by an E-I delayed-feedback loop with suitable strength, and the resulted moderate synchrony can coordinate irregular neuronal spikes into neuronal avalanches and shape them to reduce otherwise redundant spikes. Here we argue that such cost-efficient spike patterns could provide a foundation for further efficient information processing, learning and memory by employing sensitive, flexible and coherent responses in the network with low-rate and sparse firing. In the following, we go further to discuss the novelty of our results with comparison to previous understandings of the dynamical mechanism underlying the co-emergence of multi-scale cortical activities. Then we will discuss the potential benefits of cost-efficient cortical activities in information processing and the cost-efficiency in the co-organision of cortical connectivities and activities.

Comparisons to previous understandings

Neuronal avalanche has been widely studied in models, such as the random branching model [7], the excitatory neuronal network model with short-term synaptic plasticity [13] and the E-I balanced Ising model [56]. However, most previous work treats neuronal avalache as the state with a critical activity level, therefore the critical states generated in these models are always asynchronous without displaying oscillations. On the other hand, synchronized oscillations are mainly investigated by the interplay between excitatory and inhibitory population, emphasizing the role of inhibitory neurons [38, 57]. Such model can reconcile irregular individual firing and synchronized population oscillations. To this end, we suggest here the critical synchronization to account for the co-emergence of these salient dynamical features. That is, E-I balance and suitable E-I synaptic dynamics induce E-I population oscillations, which moderately modulate the feedback currents with a little E-I time lag, and drive neurons to fire irregularly and continuously in the form of avalanches. Unfortunately, we should point out that the analytical understanding of neuronal response to such correlated and modulated E-I inputs is highly challenging, partly because of the complicate interaction between multiple time scales of synaptic filters and the high-conductance membrane [44]. Therefore, how all of these features can be simultaneously reconciled at this critical state is still unsolved analytically.

Nonetheless, the co-occurrence of moderate synchrony and critical states is not only to occur in the model here, but also can be found in a broad class of network models, e.g., one recent example in Ref. [58] and a current-based neuronal network model with simulation results shown in S8 Fig. Here the critical states with neuronal avalanches are considered as the onset of population oscillations, and Fig 3(A), 3(E) and 3(H) show that the moderate synchronous state occurs at the oscillation onset. This scenario has been employed to analyze the population frequency close to the critical points via linear stability analysis, when the model here was first introduced by Brunel and Wang [36]. What is more, the corresponding normal form at such critical points was also derived in by Brunel and Hakim [57, 59] for different models, with the generic underlying dynamical mechanisms of I-I loop or E-I loop, and different synaptic or voltage integrative (current-based or conductance-based model) mechanisms.

Note however, that the critical states in the current-based model can occur in the states with a relatively wide range of synchrony, not only the moderately synchronized states but also some state with rather weak synchronization (Synchrony measured in 1-ms window can be low to the value ∼0.01, see S8 Fig. (F)). The underlying mechanism can be attributed to the effective time scale τeff of membrane potential integration. In the current-based model, τeff is equal to the membrane time constant τE (discussion focusing on excitatory neurons, but it is the same for inhibitory neurons), while in the conductance-based model, , which is dynamical with dependence on the total incoming conductance, and can be reduced to 1 ∼ 2 ms in the so-called high-conductance states [43, 44, 60]. Therefore, in the conductance-based model, the population activity should be coordinated into a group with strictly moderate 1-ms pairwise synchrony to support the critical dynamics with neuronal avalanches. However, in the current-based model, due to much larger and constant τeff, the population oscillation may induce the cross-correlation between neuronal spiking in larger time lags, which is suitable to organize the population activity in order to support critical dynamics with neuronal avalanches, even though synchronization in the 1-ms window can be rather weak. Therefore, such critical dynamics can occur in the states with a wide range of synchrony. As discussed above, it is still hard to analyze neuronal response to such correlated, balanced and modulated E-I inputs. We hope our work will stimulate more theoretical analysis on the intricate relationships among these properties.

Actually, I-I loop can also generate sparsely synchronized oscillations, and we have also simulated the transition due to the I-I loop. It is found from the simulation that the firing rate change in this transition is abrupt, which is in some sense like the subcritical Hopf bifurcation in terms of the macroscopic state. This is totally different from the one due to the E-I loop, which can be described as a supercritical Hopf bifurcation, where the oscillation amplitude increases gradually from 0 and we can find a large parameter regime for the critical states due to the effects of finite system size and external noise. Therefore, there is little critical regime for the transition due to the I-I loop. It is not clear now why they are so different, because the reduced equation derived by Brunel and Hakim [57] has shown that the dynamics of the population averaged firing rate goes through a supercritical Hopf bifurcation in a simplified and purely inhibitory neuronal network. Thus, it is not clear which property of our model makes the transition due to the I-I loop as a subcritical Hopf bifurcation, which is often accompanied by a hysteresis. It is also unclear how to investigate such kind of hysteresis in the neuronal network dynamics and what is its functional role in the cortex. This topic will be our further work in future.

On the other hand, previous studies in both experiments and theoretical models have shown that mutual information or entropy measures has a maximum at criticality or avalanche dynamics [7, 22, 61]. However, all of those studies consider the scenario of the critical point in a transition from a quiescent state to a fully activated state in a driven system [7, 22, 61]. As discussed above, we are here considering the transition from an asynchronous state to a highly synchronized state. Thus, our results do not contradict with previous facts. Furthermore, our results are not only to extend the existing understanding, but to start from the basic idea of the first fundamental principle?cost-efficiency, and demonstrates that there exists one biological plausible neuronal network model which can accomplish this principle under the constraint of commonly observed multi-scale dynamical features of cortical activities. Therefore, the novelty of our results is completely not mitigated by the existing facts. Different from the usual view that entropy measures show a maximum at critical points or avalanche dynamics, here we also study the nontrivial change of the firing rate and study the energy efficiency as the ratio of the entropy over the energy (linearly dependent on the firing rate). Different from the common view that the firing rate increases monotonically during the transition, here the firing rate and firing patterns have a nontrivial trade-off since moderate synchrony can coordinate and shape irregular individual activities to simultaneously minimize firing rate (by reducing the redundant spikes) and reduce the entropy under the corresponding rate (due to synchronization), and it is the trade-off in the critical regime that robustly maximize the energy efficiency. Such a trade-off shown in Fig 8, to our best knowledge, has not been discovered yet. Besides, the previous experimental observation is obtained from the electrodes’ signals, like LFPs [7, 22]. So our results are expected to be further tested in the experiments with neuronal resolutions.

Functional benefits in information processing

The E-I balanced network has been shown as an efficient candidate for rate coding and information transmission [2, 62]. Such rate sensitivity also provides a dynamical basis for orientation selectivity without the need of neural maps [63]. Moreover, the sensitivity of neuronal response to weakly correlated inputs can surprisingly induce highly nontrivial patterns [64, 65].

On the other hand, activity in gamma frequencies is thought to play a major role in the propagation of information across cortical areas [66–69]. Synchronous spiking during gamma activity is supposed to allow these neurons to efficiently cooperate in the recruitment for their postsynaptic targets, thereby facilitating the transmission of information, and also regulate the efficiency, thereby contributing to the merger, or ?binding?, of information originating from distinct regions. And such information transmission during gamma oscillations depends on the precise timing of the oscillation. However, even within a specific cortical location, the instantaneous frequency of gamma oscillations changes from one moment to the next, and this ongoing modulation in oscillation frequency (or phase) affects the precise timing of neuronal spiking with that cortical location, thereby altering the efficacy with which information is transmitted to downstream regions.

In consistent with previous in vivo observations [70], cycle-to-cycle fluctuations in the oscillation amplitude reflect underlying fluctuations of both excitatory and inhibitory synaptic currents, yet excitation and inhibition remain balanced during each oscillation cycle. What is more, such fluctuation can be maximized at the critical states, as reflected in the dynamical properties of neuronal avalanches. Thus, the instantaneous E-I balance in the critical dynamics may translate ongoing fluctuation of oscillation amplitudes into the variability of inter-event interval or oscillation phase [70].

Therefore, co-emergence of these salient cortical activities may provide a dynamical substrate for signal transmission with high flexibility and capacity, while neuronal spikes are sparse and irregular.

Finally, the temporal correlation of spikes is crucial for spike-timing dependent plasticity (STDP), which is a solid biophysical substrate for learning [71]. STDP can also feedback to drive the network into the critical state with moderate synchrony, which is at the border between synchronization and desynchronization [72]. Thus, a recurrent network endowed with STDP could self-organize into the critical dynamics, and then provide the dynamical foundation for efficient learning. On the other hand, the oscillation frequency could also make impact in the learning process. Of special interest are the beta/gamma-band (13 ∼ 30/30 ∼ 80 Hz) oscillations, where two avalanches are separated by a few tens of milliseconds (15 ∼ 80 ms). As a result, synapses within the same cluster will be altered significantly by STDP, while the synapses crossing two different clusters are slightly modified. Thus a network endowed with STDP could evolve into modules with stronger connections within a cluster and relatively weaker connections between clusters, providing a potential substrate for memorizing each signal in each cluster.

Co-organization of network structures and activities

In our further work, preliminary numerical simulations indicate that such cost-efficient critical states are robust in 2-dimensional lattices, whose connection probability decay exponentially with distance. If the neural circuits are geometrically constrained and the wiring is required to be economical, a good candidate for the realistic network structure is the hierarchical module, featured by dense, short-range connections and sparse, long-range connections [30, 31]. Our previous work has shown that such connection topology can increase the range of parameters for critical dynamics and therefore supports its robustness, because the module renders the activities hard to spread beyond the local modules to the whole network [73, 74]. In this way, the geometrical constraint is likely to further shape the spike patterns. Therefore, it is significant in the future to study the cost-efficiency on both cortical connectivities and activities.

Methods

Recurrent E-I network model

The model studied here was introduced in [36], whose biological basis and related discussions can be dated back to the work by Amit and Brunel [75, 76]. While the model did not consider all the anatomical and neurobiological details, it captures essential features in neuronal spiking, synaptic dynamics and network coupling, as detailed in the following realistic properties:

Neuronal property: leaky integrate-and-fire neurons with realistic membrane time constants, resting membrane potential, spike threshold, reset potential and refractory periods for pyramids and interneurons (fast spiking interneuron with short membrane time constant);

Synaptic property: realistic synaptic time courses with synaptic time constants: latency, rise time and decay time taken from slice data;

Network property: realistic connection probability and E-I ratio in population size.

In particular, we model large recurrent networks with excitatory (Exc) and inhibitory (Inh) neurons (N = 2500, NE: NI = 4: 1), randomly connected with a given connection probability C = 0.2. Each neuron receives on average KE excitatory and KI inhibitory synaptic inputs from other neurons within the network, and also KO excitatory synaptic inputs from outside, mimicking connections within the same cortical area and inputs from other areas in the cortex (KO = KE = 400, KI = 100), respectively. The external synaptic inputs are modelled as uncorrelated Poisson-type spike trains, with input rate fex = 2.5 Hz for each connection.

Both excitatory and inhibitory neurons are simplified as leaky integrate-and-fire neurons. The dynamics of sub-threshold membrane potential VE (VI) for excitatory (inhibitory) neurons are described as

| (7) |

| (8) |

| (9) |

where i = 1, …, NE, and k = E, I.

Here gEO, gIO, gEE, gEI, gIE, gII denote the synaptic strengths of conductance for external input to Exc, external input to Inh, Exc to Exc, Inh to Exc, Exc to Inh and Inh to Inh. Their values are set to satisfy the balanced condition [2, 77], e.g., gEO = 0.05, gIO = 0.08, gEE = 0.04, gIE = 0.08, gEI = 0.6, gII = 0.96, in units of the resting membrane conductance gL = 10 nS. EE (EI) is the reversal potential for excitatory (inhibitory) synaptic currents, with EE = 0 mV, EI = −70 mV. One corresponding current-based neuronal network model is also employed to investigate the multi-scale activities, whose results are summarized in S8 Fig. The model is similar to the conductance-based model, only with the last two for both excitatory and inhibitory synaptic currents in Eq (7) replaced by the averaged potentials 〈V〉, which is set to be 〈V〉 = −60 mV for all cases. Though the modification of the model appears small, but the dynamical features of the current-based and conductance-based models can be quite different, because the latter model has an intrinsic dynamics of the so-called effective time scale for membrane potential integration, which depends on the total incoming conductance [43, 44, 60].

The membrane time constants are set as τE = 20 ms, τI = 10 ms, and the leaky potential is VL = −70 mV. When the membrane potential reaches the spike threshold θ = −50 mV, a spike is emitted, the membrane potential is reset to −60 mV, and synaptic integration is halted for 2 ms (1 ms) for excitatory (inhibitory) neurons, mimicking the refractory period in real neurons.

∂O i, ∂E i, ∂I i denote the set of incoming external, excitatory, inhibitory neighbors, respectively. sE(t − tjn), sI(t − tjn) are the time courses of synaptic conductance induced by the nth presynaptic spike coming at tjn from jth excitatory or inhibitory incoming connection, respectively. They are described as a delayed difference of exponentials with three parameters: latency τl, rise time τr, and decay time τd. They are given as

| (10) |

where k = E, I and Θ(t) is the Heaviside function, with Θ(t) = 0 for t ≤ 0 and Θ(t) = 1 for t > 0. For both excitatory and inhibitory synapses, τl = 1 ms and τr = 0.5 ms. The decay times τd_e, τd_i for excitatory and inhibitory synapses are employed as parameters around typical values (2 ∼ 5 ms for τd_e [78, 79], 5 ∼ 15 ms for τd_i [80, 81]) for investigating the network dynamical modes.

Simulation methods

Simulations are done using a finite difference integration scheme based on the second-order Runge-Kutta algorithm with time step dt = 0.05 ms [82, 83]. Each network is simulated for 2000 s with the initial 1 s discarded. Networks are simulated on a cluster of 16 nodes (8 processors each node) running Linux, using custom written codes in C++.

Autocorrelation of population activity

The instantaneous population activity A(t) is determined by the number of spikes in the full network per 1-ms bin. The autocorrelation of the population activity in the insets in Fig 2B, 2D and 2F is defined as [62]

| (11) |

where k = E, I and 〈Ak(t)〉 is the mean activity of kth population.

Irregularity of individual spikes

For each neuron, inter-spike interval (ISI) is measured by the time distance of two consecutive spikes, each of which has a precise spiking time. The irregularity of individual spikes is characterized by the coefficients of variation (CV) of the ISI distribution, which is the ratio of the standard deviation (SD) to the mean of the ISI distribution. CV values close to 0 indicate regular spikes, values near 1 indicate irregular spikes, and values much larger than 1 indicate bursts. For burst activities, the neuron is likely to fire several spikes in a short interval followed by a longer period of silence. The averaged CV over the excitatory population is used to characterize the irregularity of individual activities throughout the population.

Synchrony index of spike trains

The spatiotemporal clustering of individual spikes is characterized by the pair-wise spiking synchronization. We adopt the average instantaneous cross-correlation of neuronal spiking time to quantify the degree of synchrony. The pair coherence between neuron i and j is defined as

| (12) |

where Bi(k) (Bj(k)) is the spike train of neuron i(j). Bi(k) = 0 or 1 (k = 1, …, l), represents no spike or one spike generated in the kth 1-ms bin.Kij measures the probability of neuron i and j spiking together within 1-ms bins, and the average over all pairs Kij is taken as the synchrony index.

Peak frequency analysis

The series of population firing rate with the mean detrended are Fourier transformed to calculate the power spectrum. To estimate the peak frequency, a Gaussian kernel is used to smooth the power spectrum and then to catch the peak frequency and peak power.

Neuronal avalanches definition and quantification

Following recent observation of spike-based neuronal avalanches in vivo [10], in which just spikes of pyramidal neurons are taken into consider, we here also define neuronal avalanches using spikes in excitatory population. The window size δt is employed to bin the spike train of the whole excitatory population. An avalanche is defined as a sequence of consecutive non-empty bins, flanked by empty bins.δt ranges from the simulation step size dt to 20 dt (from 0.05 ms to 1 ms), and the results are almost the same.

Here the avalanche size s is measured as the number of neurons firing in an active period. Due to individual burst activity in some cases, a neuron may fire several spikes in this period. We have also defined the avalanche size as the total number of spikes in this sequence and found there is no qualitative difference in our results. The duration of the avalanches and the waiting time between two consecutive avalanches are also examined.

To characterize neuronal avalanches, the distribution P(s) of avalanche sizes is first visually inspected and then quantified by the distance from the best-fitted power-law distribution Pfit(s), which is defined as the ratio of the average size difference per avalanche to the average size of the best-fitted power-law distribution, as follows:

| (13) |

Energy efficiency of information capacity

Spike trains of excitatory neurons are binned by windows of Δτ = 20 ms into sequences of spike count (s = 0, 1, …, 10) in analog scenario or binary sequences of spiking (1) and non-spiking (0) in binary scenario. In binary scenario, in case where there is more than one spike in a bin, we denote it as ‘1’. Information theoretic quantities such as the entropy depend on the full distribution of states for the population. Estimating these quantities could be difficult, because finite data sets lead to systematic errors. In this work, we perform long time simulations (2000 s) and sample n excitatory neurons’ spike trains to investigate the spike patterns. Here the sampled size is set as n = 40, and the number of available configurations is very large. We try our best to reduce the statistical variability by taking 100 random samples and averaging the obtained entropy values of each subset of chosen neurons.

We denote p0 as the probability of the empty configuration with no spike, and correspondingly pi as the probability of ith unique nontrivial configuration with mi spikes distributed in n sampled neurons during the 2000 s simulation time. Fig 5B presents one schematic example of the binary spike patterns of 10 sampled neurons. Then the information capacity can be defined as the entropy of all these configurations

| (14) |

In each time window Δτ, each neuron, spiking or not, costs r energy unit due to the leaky currents and one spike costs one extra unit of energy. Then, the average energy expansion per configuration is given as

| (15) |

where m = ∑i mi pi is the average spike count over all configurations. Here, the energy efficiency is defined as the ratio of information capacity to energy cost, as follows

| (16) |

with the unit bits/energy. In this way, the spike pattern is constrained by the activity level ρ = m/n, and 1/r measures the relative energy constraint on the spike pattern. If r → ∞, the spikes expend no extra energy and the energy has no constraint on the spike pattern. If r = 0, the energy cost of resting neurons can be ignored, then the energy efficiency is simplified as η = H/m, which characterizes how much information one spike can express. Decreasing r increases the energy constraint on the energy efficiency of the spike patterns. Empirically, r cannot be ignored, which ranges from 0.005 to 0.1 [50–52].

Energy efficiency optimization

The optimization of energy efficiency provides its theoretical upper bound with given ρ, which can be expressed as

| (17) |

with given spike expenditures m = ∑i mi pi and population size n. By introducing Lagrangian multiplier λ and μ to assume

| (18) |

such optimization subject to the constraint of spike expenditures m = ρn can be solved in both binary and analog scenarios by the principle of maximum entropy [47].

Our results are identical with the previous work in Ref. [35], where the information capacity is estimated by assuming independent and random neuronal activities, and the binary and analog patterns are dealt from different perspectives: fraction of active neurons in binary scenario and firing frequencies in analog scenario. Actually, both scenarios can be unified in the unique framework of the distribution of spike patterns. Here, we derive strictly the optimal energy efficiency with given activity level ρ = m/n in both scenarios, and summarize the results into the formula, which can be used to discuss the significant effect of the relative resting energy r on the constraint of activity level or neuronal firing rate.

Binary scenario

In this scenario, each neuron can be considered as a binary signaling device with two states: spiking irrespective of the spike count in the time bin or non-spiking. This happens where connections between neurons of upstream and downstream have short-term depression [84, 85], where just the first spike makes a significant contribution, while the subsequent spikes within a short time window have little effect on the downstream neurons.

To maximize the energy efficiency of binary patterns, each neuron is naturally assumed to fire at most one spike in each pattern. Thus, the fraction of nontrivial patterns with k spikes can be given as:

| (19) |

where is the number of different unique patterns with k spikes distributed in n neurons. Then the corresponding maximization can be written as:

| (20) |

with Pk ≥ 0, , . Substituting Pk into the last two summation equations yields:

| (21) |

| (22) |

and then we arrive

| (23) |

| (24) |

which gives us the probability of the ith pattern as

| (25) |

Such distribution of spike patterns shows that each neuron is to be active independently with an identical probability ρ, which is consistent with the assumption in Ref. [35].

So the optimized energy efficiency can be given as

| (26) |

where f(ρ)≡ − (1 − ρ)log2(1 − ρ) − ρlog2 ρ is Shannon’s entropy function of a binary event with probability ρ. This function tells us that the optimized entropy Hopt(ρ) can achieve the maximal value at ρ = 0.5, where each neuron expresses one bit information and all kinds of unique binary patterns can be generated equally.

However, the optimized energy efficiency ηopt not only depends on the activity level ρ but also the parameter r, as shown in Fig 4. So the value of ρm for maximal ηopt depends on the value of r. When r ≫ ρ, the denominator in Eq 26 can be considered as constant, so the optimization of energy efficiency is equivalent to the entropy optimization. In this case, ηopt peaks at ρm = 0.5. On the other hand, when r → 0, Eq 26 can be simplified to ηopt(ρ) = f(ρ)/ρ, whose peak is achieved at ρm → 0. It is monotonic dependence of the value ρm on r, as shown by the open circles in Fig 4A. By setting , we can get the relationship between ρm and r:

| (27) |

whose solutions are shown in Fig 4C, dashed line.

Analog scenario

This is a more general scenario where burst activity can transmit information. In this case, the spike count sj of the jth neuron in each pattern expresses information, therefore the activity level ρ = m/n can be larger than 1. Here, the pattern time window is Δτ = 20 ms and the excitatory neuron has a refractory period τrp = 2 ms, so we set the spike count sj in each pattern to range from 0 to 10. In this way, the fraction of patterns with k spikes can be given as

| (28) |

where is the number of different unique patterns with k spikes () distributed in n neurons. Similar to that in binary scenario, from , , we can get

| (29) |

| (30) |

where and for e−μ < 1.

Then we can get

| (31) |

| (32) |

which gives us the probability of the ith pattern as

| (33) |

where .

So the maximum entropy principle [47] will lead to the optimized energy efficiency as

| (34) |

which shows that the optimized entropy Hopt(ρ) achieves maximal at ρ′ = 0.5 or ρ = 1, that is m = n, where all kinds of unique analog patterns can be generated equally. However, like that in binary scenario, the optimized energy efficiency ηopt also depends on both activity level ρ and the parameter r. Fig 4B shows the dependence of ηopt on ρ at various values of r and the value of ρm for maximal ηopt is also monotonically determined by the value of r (solid points in Fig 4B). The dependence of ρm on r can be given by the equation

| (35) |

whose solution is shown in Fig 4C, solid line.

Finally, the constraint of r on the activity level ρm will limit the firing rate of neurons v (v = ρ/Δτ) ranging from 1 ∼ 10 Hz for both scenarios, see Fig 4C.

Supporting information

It is shown that the scale-free behavior in the moderately synchronized case is not reflected in size distribution, but also in temporal dynamics, although the synchronized oscillations start to emerge.

(TIF)

CV distribution for excitatory population (blue columns), inhibitory population (red columns); The distribution profiles at critical states are consistent with those of experimental data in various cortex areas, as shown in [41, 42].

(TIF)

(A) ISI distribution of individual spiking activities at the asynchronous state compared to that of Poisson process with identical firing rate, shown in linear-log scales. The comparison shows us that neurons at the asynchronous state have higher probability to fire temporally clustered spikes and also higher probability to be silent for long periods. (B) ISI distributions in linear-linear scale shows strong burst activity at the asynchronous state (black) and highly synchronized state (red), which is reduced by moderate synchrony at the critical state (blue).

(TIF)

Due to faster spiking of inhibitory neurons (membrane time constant: 10 ms for inhibitory neurons and 20 ms for excitatory neurons), excitatory and inhibitory populations are analyzed separately. (A) The average firing rate vI of inhibitory population is about twice of the excitatory one vE, but both have similar distribution shape in the whole parameter space (τd_e, τd_i). (B) The average firing rate vI of inhibitory population is also minimal in the critical regime.

(TIF)

It is shown that decreasing the bin size will weaken the advantage of the critical regime in terms of energy efficiency.

(TIF)

The simulated energy efficiency ηsim (top panel) and the optimal one ηopt with its corresponding activity level (bottom panel) of analog patterns in the parameter space (τd_e, τd_i) (unit: ms), for various values of r indicated in the plot. It shows that ηsim preserves maximal in the critical region pretty well for r ranging from 0.005 to 0.1, although larger r will shift the maximum of ηopt to the subcritical as well as supercritical regions with larger activity level or firing rate.

(TIF)

For the asynchronous states with synchrony degree less than 0.1, increasing the averaged CV over the excitatory population will decrease the energy efficiency for both binary and analog scenarios and various r.

(TIF)

The model’s details are described in Methods: Recurrent E-I network model. (A) Raster of an excitatory subpopulation in the asynchronous irregular states with bursts in individual neuron’s spiking; (B) The averaged CV over the excitatory population in the parameter space (τd_e, τd_i); One can find that indivdual spiking behaviours are also shaped by the synchrony (compared with (E)). (C) Avalanches size distributions for 3 different states: subcritical, critical and supercritical states as also indicated in (F) (unit for both τd_e and τd_i: ms); (D) Distance D of avalanche size distribution from the best-fitted power-law distribution; (E) Average pairwise 1-ms synchrony between excitatory neurons (E—E Synchrony); (F) Distance D from power-law distribution vs. E—E Synchrony, showing the co-existence of neuronal avalanches and moderate synchrony. The three solid dots correspond to the three cases shown in (C), with respective colors.

(TIF)

Data Availability

All relevant data are within the paper and its Supporting Information files.

Funding Statement

This work was partially supported by Hong Kong Baptist University (HKBU) Strategic Development Fund, NSFC-RGC Joint Research Scheme HKUST/NSFC/12-13/01 (or N-HKUST 606/12), NSFC (Grant Nos. 11275027) and NSFC (Grant Nos. 11225526 and 11121403). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Softky WR, Koch C. The highly irregular firing of cortical cells is inconsistent with temporal integration of random EPSPs. J Neurosci. 1993;13(1):334–350. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2. van Vreeswijk C, Sompolinsky H. Chaos in neuronal networks with balanced excitatory and inhibitory activity. Science. 1996;274(5293):1724–1726. 10.1126/science.274.5293.1724 [DOI] [PubMed] [Google Scholar]

- 3. Shadlen MN, Newsome WT. The variable discharge of cortical neurons: implications for connectivity, computation, and information coding. J Neurosci. 1998;18(10):3870–3896. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4. Buzsaki G. Rhythms of the Brain. Oxford University Press; 2006. [Google Scholar]

- 5. Fries P. Neuronal gamma-band synchronization as a fundamental process in cortical computation. Annu Rev Neurosci. 2009;32:209–224. 10.1146/annurev.neuro.051508.135603 [DOI] [PubMed] [Google Scholar]

- 6. Wang XJ. Neurophysiological and computational principles of cortical rhythms in cognition. Physiol Rev. 2010;90(3):1195–1268. 10.1152/physrev.00035.2008 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7. Beggs JM, Plenz D. Neuronal avalanches in neocortical circuits. J Neurosci. 2003;23(35):11167–11177. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8. Gireesh ED, Plenz D. Neuronal avalanches organize as nested theta-and beta/gamma-oscillations during development of cortical layer 2/3. Proc Natl Acad Sci USA. 2008;105(21):7576–7581. 10.1073/pnas.0800537105 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Petermann T, Thiagarajan TC, Lebedev MA, Nicolelis MA, Chialvo DR, Plenz D. Spontaneous cortical activity in awake monkeys composed of neuronal avalanches. Proc Natl Acad Sci USA. 2009;106(37):15921–15926. 10.1073/pnas.0904089106 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Bellay T, Klaus A, Seshadri S, Plenz D. Irregular spiking of pyramidal neurons organizes as scale-invariant neuronal avalanches in the awake state. Elife. 2015;4:e07224 10.7554/eLife.07224 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11. Chialvo DR. Emergent complex neural dynamics. Nat Phys. 2010;6(10):744–750. 10.1038/nphys1803 [DOI] [Google Scholar]

- 12. Poil SS, Hardstone R, Mansvelder HD, Linkenkaer-Hansen K. Critical-state dynamics of avalanches and oscillations jointly emerge from balanced excitation/inhibition in neuronal networks. J Neurosci. 2012;32(29):9817–9823. 10.1523/JNEUROSCI.5990-11.2012 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13. Levina A, Herrmann JM, Geisel T. Dynamical synapses causing self-organized criticality in neural networks. Nat Phys. 2007;3(12):857–860. 10.1038/nphys758 [DOI] [Google Scholar]

- 14. Millman D, Mihalas S, Kirkwood A, Niebur E. Self-organized criticality occurs in non-conservative neuronal networks during’up’ states. Nat Phys. 2010;6(10):801–805. 10.1038/nphys1757 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15. Shew WL, Clawson WP, Pobst J, Karimipanah Y, Wright NC, Wessel R. Adaptation to sensory input tunes visual cortex to criticality. Nat Phys. 2015;11(8):659–663. 10.1038/nphys3370 [DOI] [Google Scholar]

- 16. Steriade M. Neuronal substrates of sleep and epilepsy. Cambridge University Press; 2003. [Google Scholar]

- 17. Bolam JP, Ingham CA, Magill PJ. The basal ganglia VIII. Springer Science & Business Media; 2006. [Google Scholar]

- 18. Uhlhaas PJ, Singer W. Abnormal neural oscillations and synchrony in schizophrenia. Nat Rev Neurosci. 2010;11(2):100–113. 10.1038/nrn2774 [DOI] [PubMed] [Google Scholar]

- 19. Yizhar O, Fenno LE, Prigge M, Schneider F, Davidson TJ, O’Shea DJ, et al. Neocortical excitation/inhibition balance in information processing and social dysfunction. Nature. 2011;477(7363):171–178. 10.1038/nature10360 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20. Kinouchi O, Copelli M. Optimal dynamical range of excitable networks at criticality. Nat Phys. 2006;2(5):348–351. 10.1038/nphys289 [DOI] [Google Scholar]

- 21. Shew WL, Yang H, Petermann T, Roy R, Plenz D. Neuronal avalanches imply maximum dynamic range in cortical networks at criticality. J Neurosci. 2009;29(49):15595–15600. 10.1523/JNEUROSCI.3864-09.2009 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22. Shew WL, Yang H, Yu S, Roy R, Plenz D. Information capacity and transmission are maximized in balanced cortical networks with neuronal avalanches. J Neurosci. 2011;31(1):55–63. 10.1523/JNEUROSCI.4637-10.2011 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23. Yang H, Shew WL, Roy R, Plenz D. Maximal variability of phase synchrony in cortical networks with neuronal avalanches. J Neurosci. 2012;32(3):1061–1072. 10.1523/JNEUROSCI.2771-11.2012 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24. Shriki O, Yellin D. Optimal Information Representation and Criticality in an Adaptive Sensory Recurrent Neuronal Network. PLoS Comput Biol. 2016;12(2):1–19. 10.1371/journal.pcbi.1004698 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25. Merolla PA, Arthur JV, Alvarez-Icaza R, Cassidy AS, Sawada J, Akopyan F, et al. A million spiking-neuron integrated circuit with a scalable communication network and interface. Science. 2014;345(6197):668–673. 10.1126/science.1254642 [DOI] [PubMed] [Google Scholar]

- 26. Laughlin SB, Sejnowski TJ. Communication in neuronal networks. Science. 2003;301(5641):1870–1874. 10.1126/science.1089662 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27. Zhang K, Sejnowski TJ. A universal scaling law between gray matter and white matter of cerebral cortex. Proc Natl Acad Sci USA. 2000;97(10):5621–5626. 10.1073/pnas.090504197 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28. Cherniak C, Mokhtarzada Z, Rodriguez-Esteban R, Changizi K. Global optimization of cerebral cortex layout. Proc Natl Acad Sci USA. 2004;101(4):1081–1086. 10.1073/pnas.0305212101 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29. Chen BL, Hall DH, Chklovskii DB. Wiring optimization can relate neuronal structure and function. Proc Natl Acad Sci USA. 2006;103(12):4723–4728. 10.1073/pnas.0506806103 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30. Bullmore E, Sporns O. The economy of brain network organization. Nat Rev Neurosci. 2012;13(5):336–349. [DOI] [PubMed] [Google Scholar]

- 31. Chen Y, Wang S, Hilgetag CC, Zhou C. Trade-off between multiple constraints enables simultaneous formation of modules and hubs in neural systems. PLoS Comput Biol. 2013;. 10.1371/journal.pcbi.1002937 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32. Daffertshofer A, Huys R, Beek PJ. Dynamical coupling between locomotion and respiration. Biol Cybern. 2004;90(3):157–164. 10.1007/s00422-004-0462-x [DOI] [PubMed] [Google Scholar]

- 33. Shannon CE. A mathematical theory of communication. SIGMOBILE Mob Comput Commun Rev. 2001;5(1):3–55. 10.1145/584091.584093 [DOI] [Google Scholar]

- 34. Dayan P, Abbott LF. Theoretical neuroscience. Cambridge, MA: MIT Press; 2001. [Google Scholar]

- 35. Levy WB, Baxter RA. Energy efficient neural codes. Neural Comput. 1996;8(3):531–543. 10.1162/neco.1996.8.3.531 [DOI] [PubMed] [Google Scholar]

- 36. Brunel N, Wang XJ. What determines the frequency of fast network oscillations with irregular neural discharges? I. Synaptic dynamics and excitation-inhibition balance. J Neurophysiol. 2003;90(1):415–430. 10.1152/jn.01095.2002 [DOI] [PubMed] [Google Scholar]

- 37. Renart A, de la Rocha J, Bartho P, Hollender L, Parga N, Reyes A, et al. The asynchronous state in cortical circuits. Science. 2010;327(5965):587–590. 10.1126/science.1179850 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38. Okun M, Lampl I. Instantaneous correlation of excitation and inhibition during ongoing and sensory-evoked activities. Nat Neurosci. 2008;11(5):535–537. 10.1038/nn.2105 [DOI] [PubMed] [Google Scholar]

- 39. Wilson HR, Cowan JD. Excitatory and inhibitory interactions in localized populations of model neurons. Biophys J. 1972;12(1):1 10.1016/S0006-3495(72)86068-5 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40. Wang SJ, Ouyang G, Guang J, Zhang M, Wong KM, Zhou C. Stochastic Oscillation in Self-Organized Critical States of Small Systems: Sensitive Resting State in Neural Systems. Phys Rev Lett. 2016;116(1):018101 10.1103/PhysRevLett.116.018101 [DOI] [PubMed] [Google Scholar]

- 41. Shinomoto S, Shima K, Tanji J. Differences in spiking patterns among cortical neurons. Neural Comput. 2003;15(12):2823–2842. 10.1162/089976603322518759 [DOI] [PubMed] [Google Scholar]

- 42. Shinomoto S, Kim H, Shimokawa T, Matsuno N, Funahashi S, Shima K, et al. Relating neuronal firing patterns to functional differentiation of cerebral cortex. PLoS Comput Biol. 2009;5(7):e1000433 10.1371/journal.pcbi.1000433 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43. Shelley M, McLaughlin D, Shapley R, Wielaard J. States of high conductance in a large-scale model of the visual cortex. J Comput Neurosci. 2002;13(2):93–109. 10.1023/A:1020158106603 [DOI] [PubMed] [Google Scholar]

- 44. Moreno-Bote R, Parga N. Membrane potential and response properties of populations of cortical neurons in the high conductance state. Phys Rev Lett. 2005;94(8):088103 10.1103/PhysRevLett.94.088103 [DOI] [PubMed] [Google Scholar]

- 45. Brunel N, Sergi S. Firing frequency of leaky intergrate-and-fire neurons with synaptic current dynamics. J Theor Biol. 1998;195(1):87–95. 10.1006/jtbi.1998.0782 [DOI] [PubMed] [Google Scholar]

- 46. Moreno-Bote R, Parga N. Role of synaptic filtering on the firing response of simple model neurons. Phys Rev Lett. 2004;92(2):028102 10.1103/PhysRevLett.92.028102 [DOI] [PubMed] [Google Scholar]

- 47. Jaynes ET. Information theory and statistical mechanics. Phys Rev. 1957;106(4):620 10.1103/PhysRev.106.620 [DOI] [Google Scholar]

- 48. Schneidman E, Berry MJ, Segev R, Bialek W. Weak pairwise correlations imply strongly correlated network states in a neural population. Nature. 2006;440(7087):1007–1012. 10.1038/nature04701 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49. Bi Gq, Poo Mm. Synaptic modifications in cultured hippocampal neurons: dependence on spike timing, synaptic strength, and postsynaptic cell type. J Neurosci. 1998;18(24):10464–10472. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50. Yarowsky P, Kadekaro M, Sokoloff L. Frequency-dependent activation of glucose utilization in the superior cervical ganglion by electrical stimulation of cervical sympathetic trunk. Proc Natl Acad Sci USA. 1983;80(13):4179–4183. 10.1073/pnas.80.13.4179 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51. Yarowsky P, Crane A, Sokoloff L. Metabolic activation of specific postsynaptic elements in superior cervical ganglion by antidromic stimulation of external carotid nerve. Brain Res. 1985;334(2):330–334. 10.1016/0006-8993(85)90226-4 [DOI] [PubMed] [Google Scholar]

- 52. Laughlin SB. Energy as a constraint on the coding and processing of sensory information. Curr Opin Neurol. 2001;11(4):475–480. 10.1016/S0959-4388(00)00237-3 [DOI] [PubMed] [Google Scholar]

- 53. Fisahn A, Pike FG, Buhl EH, Paulsen O. Cholinergic induction of network oscillations at 40 Hz in the hippocampus in vitro. Nature. 1998;394(6689):186–189. 10.1038/28179 [DOI] [PubMed] [Google Scholar]

- 54. Olshausen BA, Field DJ. Sparse coding of sensory inputs. Curr Opin Neurobiol. 2004;14(4):481–487. 10.1016/j.conb.2004.07.007 [DOI] [PubMed] [Google Scholar]

- 55. Ohiorhenuan IE, Mechler F, Purpura KP, Schmid AM, Hu Q, Victor JD. Sparse coding and high-order correlations in fine-scale cortical networks. Nature. 2010;466(7306):617–621. 10.1038/nature09178 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56. Benayoun M, Cowan JD, van Drongelen W, Wallace E. Avalanches in a stochastic model of spiking neurons. PLoS Comput Biol. 2010;6(7):e1000846 10.1371/journal.pcbi.1000846 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57. Brunel N, Hakim V. Fast global oscillations in networks of integrate-and-fire neurons with low firing rates. Neural Comput. 1999;11(7):1621–1671. 10.1162/089976699300016179 [DOI] [PubMed] [Google Scholar]

- 58. Teramae Jn, Tsubo Y, Fukai T. Optimal spike-based communication in excitable networks with strong-sparse and weak-dense links. Sci Rep. 2012;2:485 EP. 10.1038/srep00485 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 59. Brunel N. Dynamics of sparsely connected networks of excitatory and inhibitory spiking neurons. J Comput Neurosci. 2000;8(3):183–208. 10.1023/A:1008925309027 [DOI] [PubMed] [Google Scholar]