Abstract

Objectives

Evaluate web-based patient-reported outcome (wbPRO) collection in subjects with multiple sclerosis (MS) in terms of feasibility, reliability, adherence, and subject-perceived benefits; and quantify the impact of MS-related symptoms on perceived well-being.

Methods

Thirty-one subjects with MS completed wbPROs targeting MS-related symptoms over six months using a customized web portal. Demographics and clinical outcomes were collected in person at baseline and six months.

Results

Approximately 87% of subjects completed wbPROs without assistance, and wbPROs strongly correlated with standard PROs (r > 0.91). All wbPROs were completed less frequently in the second three months (p < 0.05). Frequent wbPRO completion was significantly associated with a higher Expanded Disability Status Scale (EDSS) score (p = 0.026). Nearly 52% of subjects reported improved understanding of their disease, and approximately 16% wanted individualized wbPRO content. Over half (63.9%) of perceived well-being variance was explained by MS symptoms, notably depression (rs = −0.459), fatigue (rs = −0.390), and pain (rs = −0.389).

Conclusions

wbPRO collection was feasible and reliable. More disabled subjects had higher completion rates, yet most subjects failed requirements in the second three months. Remote monitoring has potential to improve patient-centered care and communication between patient and provider, but tailored PRO content and other innovations are needed to combat declining adherence.

Keywords: multiple sclerosis, patient engagement technology, patient empowerment, patient-provider communication, patient-reported outcomes, personalized medicine

INTRODUCTION

Patient-centered care has helped patients and providers find common ground[1], and electronic symptom monitoring can improve health-related quality of life[2]. When care providers use a patient-centered approach, patients utilize health care services less often[3] with reduced associated cost[4,5]. For these reasons, both elements are part of a consensus vision for the future of MS care[6]. Internet and mobile health technologies (mHealth) can facilitate symptom reporting and patient-centered care by regularly gathering health-related information at low cost, changing the patient role through unprecedented data access and control. As a result, over 500 studies have assessed mHealth interventions, with remote monitoring of chronic conditions being one of the most common and consistent targets[7]. On average, these interventions have had a small but significant positive effect on targeted behaviors[8].

MS is a promising target for mHealth because of the progressive nature of the disease, the unpredictability of relapses, and the importance of ongoing assessment. Over 80% of persons with MS use the internet on a weekly basis and 90% can navigate an electronic health record[9], making internet-based interventions technically feasible in MS. Consequently, a growing number of mHealth studies have focused on the MS population. An informational website for MS patients and their families received positive feedback[10], and MSDialog, a mobile and web-based patient-reported outcome (PRO) platform, was well-received by patients and care providers[11]. Data collected through novel modalities can be presented via interfaces such as the MS Bioscreen, a clinical data visualization tool developed specifically for MS[12].

Despite these successes, proving the real-world effectiveness of mHealth remains a top priority of the field[13,14]. Most studies have recruited highly motivated subjects, so positive results may not generalize to a broader population[15]. Moreover, participant interest seems to decline over time, casting doubts on long-term feasibility. For instance, a phone-based diabetes management intervention had a 50% dropout rate[16], and call completion for an interactive voice response service decreased over a three to six month period[17]. In a mobile-enabled weight loss intervention, adherence to dietary self-monitoring declined from roughly 70% to less than 20% over the course of the study[18]. Further, a mobile intervention for irritable bowel syndrome found a 25% decline in meal entries between weeks one and two[19]. In contrast, comparatively little is known regarding sustained mHealth compliance in MS.

The current study addresses mHealth engagement and adherence in MS by evaluating web-based PRO (wbPRO) collection in terms of feasibility, reliability, adherence, and subject-perceived benefits. While our wbPROs are similar to those used in other studies, we have conducted a detailed exploration of the dynamics of remote monitoring in persons with MS. As a secondary objective, the question “How are you feeling today?” (HAYFT) was used to study the influence of MS-related symptoms on perceived well-being. This relationship is complicated by symptom co-occurrence[20], so sustained and repeated PRO collection is required for accurate analysis.

METHODS

Recruitment and Study Procedures

All study procedures were approved by the University of Virginia (UVA) Institutional Review Board for Health Sciences Research. Interested subjects were recruited from the University of Virginia James Q. Miller MS Clinic outpatient population. Written consent was obtained prior to initiation of study procedures. Recruited subjects had clinically definite MS[21] with Expanded Disability Status Scale (EDSS) ≤ 6.5. Subjects with EDSS above 6.5 were excluded to ensure that all subjects were ambulatory, as several of the selected assessments (below) are not appropriate in a non-ambulatory population. Internet access via desktop or tablet (not phone) was also required.

Subjects participated over a period of six months, with in-person assessment at baseline and six months. These baseline and six month assessments included collection of demographic information; neurologic exam by Neurostatus-certified staff; and completion of several PROs, including the MS Walking Scale (MSWS-12)[22], Modified Fatigue Impact Scale (MFIS)[23], Godin Leisure Time Exercise Questionnaire (GLTEQ)[24], Patient-Determined Disease Steps (PDDS)[25], and Performance Scales (PS) covering 11 distinct symptom domains such as mobility and vision[26].

Web-Based Patient-Reported Outcome (wbPRO) Collection

A UVA-hosted web portal allowed subjects to report symptoms from home and view their symptom history. The web portal was created specifically for this study. Its navigation page features the “How are you feeling today?” (HAYFT) question, scored from 1 to 10, and links to the following four questionnaires: MSWS-12, MFIS, GLTEQ, and PS. The 11 PS were adapted for the portal and labeled as the “Symptom Tracker”. The history of responses to HAYFT, MSWS-12, MFIS, GLTEQ, and each PS could be viewed as a graph or a table. A dedicated “Symptom Tracker” page allowed subjects to compare severity between symptoms and view recent trends. Subjects were oriented to the web portal at baseline visit with a 15-minute, face-to-face tour and tutorial.

Subjects were required to complete each of the five questionnaires at least once per month. Additional use of the web portal was encouraged but not required. Subjects rated the utility of the web portal and provided free-response feedback at the six month visit.
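The monthly requirement can be made concrete with a short sketch. The Python below is illustrative only and is not the study's portal code: the function names `monthly_counts` and `meets_requirement`, the data layout (a mapping from questionnaire name to a list of study-month numbers, one entry per completion), and the example subject are all assumptions introduced here.

```python
from collections import Counter

# The five questionnaires named in the text; PS is the "Symptom Tracker".
QUESTIONNAIRES = ("HAYFT", "MSWS-12", "MFIS", "GLTEQ", "PS")
STUDY_MONTHS = range(1, 7)  # six-month study period, months 1..6


def monthly_counts(completion_months):
    """Count completions per study month for one questionnaire."""
    counts = Counter(completion_months)
    return {month: counts.get(month, 0) for month in STUDY_MONTHS}


def meets_requirement(completions, months=STUDY_MONTHS):
    """True if every questionnaire was completed at least once in each month."""
    for name in QUESTIONNAIRES:
        done = set(completions.get(name, []))
        if any(month not in done for month in months):
            return False
    return True


# Hypothetical subject (not study data): adherent except for MFIS in month 5.
example = {name: [1, 2, 3, 4, 5, 6] for name in QUESTIONNAIRES}
example["MFIS"] = [1, 2, 3, 4, 6]

first_half_ok = meets_requirement(example, range(1, 4))
full_study_ok = meets_requirement(example)
```

Under these assumptions, the hypothetical subject meets the requirement in months 1 to 3 but not over the full six months, because a single missed questionnaire in a single month is enough to fail.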

Data Analysis

Data were analyzed in Matlab R2015b. Groups were compared by t-test or Mann-Whitney rank sum test as appropriate for interval and ordinal variables, respectively. Spearman correlations were used because Pearson correlations are not appropriate for ordinal data. Subject-adjusted correlations were calculated by subtracting subject-specific mean values from all measurements before computing the correlation. Thus the subject-adjusted correlation measures the association between changes in one measurement and changes in the other while allowing for subject-specific offsets. A linear mixed-effects model was used to achieve a similar effect, but it is less appropriate for ordinal data, and clinical interpretation of model coefficients is limited by correlations between predictors. The linear mixed-effects model was therefore used solely to estimate the total HAYFT variance explained jointly by the predictors.
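The subject-adjusted correlation can be illustrated with a minimal sketch. The analysis itself was performed in Matlab; the Python below is not the authors' code, and all function names and the example values are assumptions chosen for illustration. It centers each subject's measurements on that subject's own mean and then computes a Spearman correlation (Pearson correlation of tie-averaged ranks) on the centered values.

```python
from collections import defaultdict
from math import sqrt


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        tied_rank = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = tied_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def spearman(x, y):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def subject_centered(subjects, values):
    """Subtract each subject's own mean from that subject's measurements."""
    groups = defaultdict(list)
    for subject, value in zip(subjects, values):
        groups[subject].append(value)
    means = {s: sum(v) / len(v) for s, v in groups.items()}
    return [value - means[subject] for subject, value in zip(subjects, values)]


def subject_adjusted_spearman(subjects, x, y):
    """Spearman correlation after removing subject-specific offsets."""
    return spearman(subject_centered(subjects, x), subject_centered(subjects, y))


# Hypothetical data (not study data): subject B reports both higher fatigue
# and higher HAYFT overall, but within each subject HAYFT falls as fatigue rises.
subjects = ["A", "A", "A", "B", "B", "B"]
fatigue = [1, 2, 3, 4, 5, 6]
hayft = [5, 4, 3, 9, 8, 7]

pooled = spearman(fatigue, hayft)
adjusted = subject_adjusted_spearman(subjects, fatigue, hayft)
```

In this constructed example the pooled correlation is positive because of the between-subject offset, whereas the subject-adjusted correlation is −1, reflecting the within-subject relationship between changes in fatigue and changes in HAYFT.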

RESULTS

Subject Demographics and Disability Outcome Measures

Thirty-one subjects completed all study requirements. By design, recruited subjects were evenly dispersed across the disability spectrum up to an EDSS of 6.5. Nine had mild disability (EDSS 0 to 2.5), 11 had moderate disability (EDSS 3 to 4.5), and 10 had severe disability (EDSS 5 to 6.5). All subjects were ambulatory, but 13 used assistive devices inside the home (7 cane; 4 walker; 2 hand bars), and three additional subjects used a cane outside of the home. Demographics and outcome measures at baseline visit are summarized in Table 1. Subjects were 93.5% female (29/31), with a median age of 48 years (range: 27 years to 61 years). Instrumental activities of daily living were reported on a three-point scale (0 No help; 1 Some help; 2 Unable to do)[27]. No subjects experienced an MS relapse during the study, and the largest EDSS progression was 1.5 (median = 0, range = −2 to 1.5, IQR = −0.5 to 0.5).

Table 1.

Demographics and Selected Outcome Measures at Initial Visit

| N (Female/Male) | 31 (29/2) |

| Age, median (range) [IQR] | 48 (27 – 61) [44 – 56] |

| Years since onset, median (range) [IQR] | 15.5 (4 – 37) [10.25 – 19.75] |

| Years since diagnosis, median (range) [IQR] | 12 (3 – 31) [9.25 – 15] |

| MS Subtype: | |

| Relapsing-remitting disease | 21 |

| Progressive disease | 10 |

| EDSS, median (range) [IQR] | 3.5 (2 – 6.5) [2.5 – 6] |

| PDDS, median (range) [IQR] | 3 (0 – 7) [1 – 4.5] |

| IADL Score, median (range) [IQR] | 2 (0 – 9) [0 – 4] |

| MSWS-12 Score, median (range) [IQR] | 31.3 (0 – 100) [8.9 – 79.7] |

| MFIS Total, median (range) [IQR] | 36 (0 – 67) [23 – 49] |

| T25FW (seconds), median (range) [IQR] | 4.95 (3.3 – 67.4) [3.9 – 12.7] |

IQR: Inter-Quartile Range; MS: Multiple Sclerosis; EDSS: Expanded Disability Status Scale; PDDS: Patient Determined Disease Steps; IADL: Instrumental Activities of Daily Living; MSWS-12: MS Walking Scale; MFIS: Modified Fatigue Impact Scale; T25FW: Timed 25-Foot Walk

Feasibility and Reliability of wbPRO Collection

Only 12.9% of subjects had technical difficulty with the log-in process. In each case, the problem was resolved by a phone call with the study coordinator. Most subjects (87.1%) completed all questionnaires in the first month per study requirements. In total, 77.4% had no difficulty with the web portal, while 22.6% had some difficulty. Subjects who reported difficulty were examined for shared characteristics such as age, disease severity, disease subtype, employment status, and education, but none were identified.

Table 2 quantifies the reliability of wbPROs, measured as the correlation between wbPROs and standard PROs. First, standard PROs from baseline and six month visits were compared to wbPROs from the first and last months, respectively. These completions were up to two weeks apart, so there is some longitudinal component to the comparison. Standard and web-based MSWS-12 scores were most highly correlated (r = 0.973 at both the baseline and six month visits). MFIS totals were least highly correlated on average, though correlation was still strong (r = 0.944 and r = 0.912 at baseline visit and six month visit, respectively). For comparison, the baseline PROs and six month PROs are less strongly correlated (Table 2, part c); in fact, these values are lower than their counterparts when comparing month 1 wbPROs with month 6 wbPROs (Table 2, part d). Importantly, no subjects reported difficulty with the web-based questionnaires themselves.

Table 2.

Reliability of wbPRO collection based on correlation between wbPROs and standard PROs

| | Correlation between PROs (r) | | |

|---|---|---|---|

| Encounter | MSWS-12 | MFIS | Symptom Tracker |

| (a) Baseline (web-based/standard) | 0.973 | 0.944 | 0.922 |

| (b) Six Months (web-based/standard) | 0.973 | 0.912 | 0.959 |

| (c) Baseline vs Six Months (both standard) | 0.933 | 0.780 | 0.886 |

| (d) Baseline vs Six Months (both web-based) | 0.957 | 0.794 | 0.941 |

| (e) Baseline (standard) vs Six Months (web-based) | 0.936 | 0.816 | 0.801 |

| (f) Baseline (web-based) vs Six Months (standard) | 0.944 | 0.789 | 0.934 |

PRO: Patient-Reported Outcome; MSWS-12: MS Walking Scale; MFIS: Modified Fatigue Impact Scale

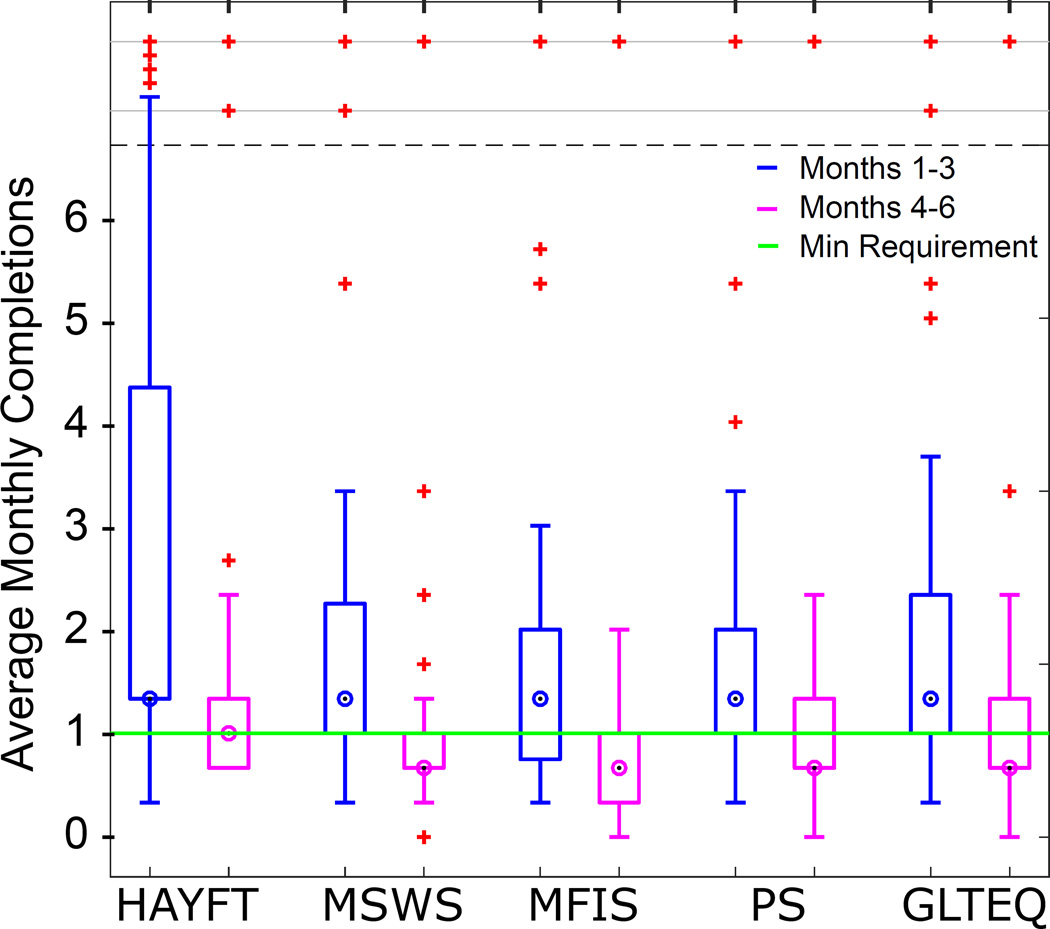

Trends in wbPRO Completion

Figure 1 shows average monthly completions for each questionnaire among all subjects in the first half of the study (months 1 – 3) compared to the second half (months 4 – 6). A majority of subjects met requirements in the first half. In the second half, a majority met the HAYFT requirement but failed to meet MSWS-12, MFIS, PS, and GLTEQ requirements. The median number of completions for the four other questionnaires was 2, one below the minimum requirement. The decline in total completions was significant by paired t-test for all questionnaires (HAYFT p = 0.001; MSWS-12 p = 0.031; MFIS p = 0.008; PS p = 0.011; GLTEQ p = 0.004).
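The paired comparison of first-half and second-half completions can be sketched as follows. This is an illustrative Python example rather than the Matlab analysis used in the study; the function name `paired_t_statistic` and the completion counts are hypothetical. It returns only the t statistic and degrees of freedom; obtaining a p-value additionally requires the t-distribution, which is omitted here.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t_statistic(first_half, second_half):
    """Return (t, degrees_of_freedom) for a paired t-test on matched samples."""
    if len(first_half) != len(second_half):
        raise ValueError("paired samples must have equal length")
    # Work on per-subject differences, which is what makes the test "paired".
    diffs = [a - b for a, b in zip(first_half, second_half)]
    n = len(diffs)
    standard_error = stdev(diffs) / sqrt(n)  # stdev is the sample (n-1) form
    return mean(diffs) / standard_error, n - 1


# Hypothetical completion counts for five subjects (not study data).
months_1_to_3 = [3, 4, 3, 6, 3]
months_4_to_6 = [2, 3, 3, 4, 1]

t_stat, dof = paired_t_statistic(months_1_to_3, months_4_to_6)
```

A positive t statistic here indicates more completions in the first half than in the second, which is the direction of the decline described above.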

Figure 1.

Adherence declined between the first and second halves of the study as measured by questionnaire completion. All differences are statistically significant by paired t-test (p < 0.05).

There were several notable outliers in terms of completion rates: for example, one subject completed HAYFT every day (185 completions). Low household income (< $10,000) was the only demographic or disease-related factor significantly associated (p < 0.001) with “super-completer” status (> 100 total completions).

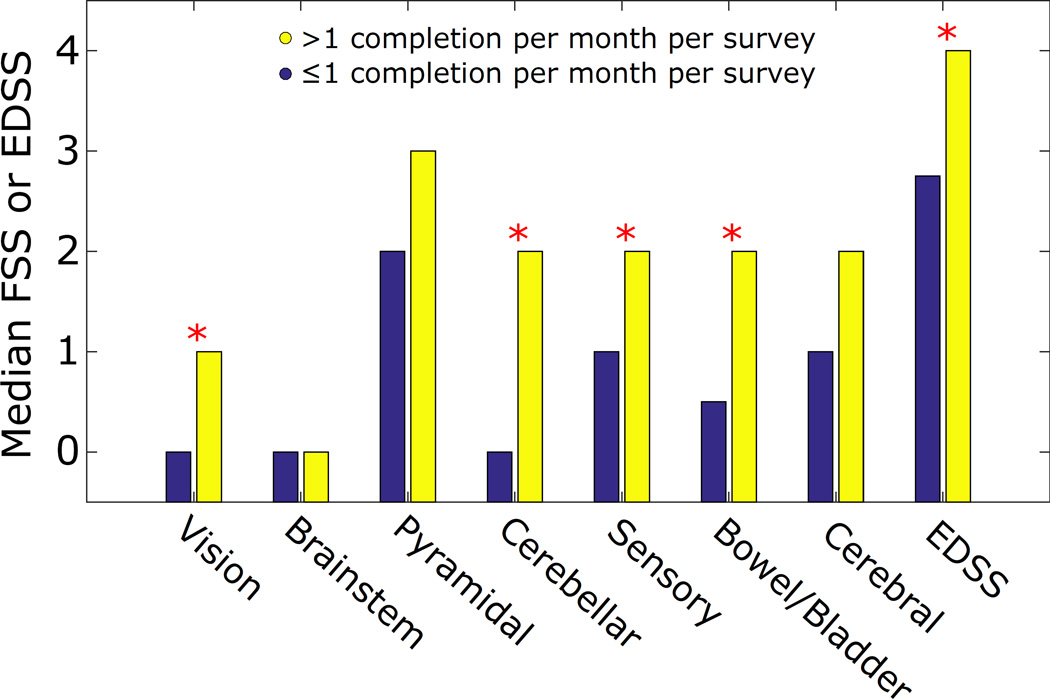

Subjects who exceeded requirements (> 1 completion per month per survey, on average) had higher EDSS (p = 0.026) than others by Mann-Whitney rank sum test. Figure 2 shows differences in median Functional System Scores (FSS) and EDSS between these groups. Subjects who exceeded requirements also had higher FSS, with significant differences found in vision (p = 0.049), cerebellar (p = 0.006), sensory (p = 0.032), and bowel/bladder (p = 0.023) scores by Mann-Whitney rank sum test. The same trend was present in the remaining FSS but did not reach statistical significance.

Figure 2.

Median EDSS and FSS were higher among frequent questionnaire completers (> 1 completion per month per survey, on average) than among infrequent completers. Differences between groups in EDSS and four FSS (Vision, Cerebellar, Sensory, and Bowel/Bladder) were statistically significant (p < 0.05).

Subject Feedback

Responses to the data utility survey and correlations between responses may be found in Table 3. Over 60% of subjects viewed all results monthly, matching the minimum requirement for completion. Over 10% of subjects viewed HAYFT or the Symptom Tracker weekly or daily (9.7% weekly and 3.2% daily for each). The GLTEQ was viewed least often. A plurality of subjects felt that most questionnaires were “Moderately” useful in helping them understand their MS; only GLTEQ was more often seen as “Not at all” useful. The Symptom Tracker was perceived as the most useful, with 25.8% reporting it as “Quite a bit” useful. The MSWS-12 was seen as “Extremely” useful by one subject, a category not reported by any subjects for the other questionnaires. Results were similar for the second utility question regarding communication with care providers. Subjects most often felt that the Symptom Tracker, MSWS-12, and MFIS were “Moderately” useful, whereas they most often felt HAYFT and GLTEQ were “Not at all” useful. In general, fewer subjects saw the questionnaires as useful when communicating compared to understanding their MS. One notable exception was the MFIS, which 16.7% of subjects found “Quite a bit” useful when communicating with their provider.

Table 3.

Results from the data utility survey given to subjects at the six-month visit. Subjects were asked (a) “On average, how often did you view the following graphs or tables?”; (b) “How useful was each piece of information in helping you to understand your MS?”; and (c) “How useful was each piece of information in helping you to communicate about your MS with your doctor?”. Viewing frequency is correlated with perceived utility (d), with statistically significant correlations indicated by asterisk (p < 0.05).

| | (a) Viewing Frequency | | | | | (b) Utility: Understanding MS | | | | | (c) Utility: Communicating with Care Provider | | | | | (d) Correlations between Viewing Frequency and Utility | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Questionnaire | Never | A Little | Monthly | Weekly | Daily | Not at all | A Little | Moderately | Quite a bit | Extremely | Not at all | A Little | Moderately | Quite a bit | Extremely | Frequency vs Understanding MS (rs) | Frequency vs Communicating with Provider (rs) |

| HAYFT | 6.5 | 19.4 | 61.3 | 9.7 | 3.2 | 25.8 | 29.0 | 29.0 | 16.1 | 0.0 | 30.0 | 26.7 | 26.7 | 13.3 | 3.3 | 0.454* | 0.560* |

| Symptom Tracker | 3.2 | 22.6 | 61.3 | 9.7 | 3.2 | 19.4 | 19.4 | 35.5 | 25.8 | 0.0 | 26.7 | 16.7 | 36.7 | 16.7 | 3.3 | 0.488* | 0.473* |

| MSWS-12 | 3.2 | 22.6 | 64.5 | 6.5 | 3.2 | 19.4 | 22.6 | 41.9 | 12.9 | 3.2 | 23.3 | 16.7 | 46.7 | 10.0 | 3.3 | 0.301 | 0.307 |

| MFIS | 6.5 | 19.4 | 67.7 | 3.2 | 3.2 | 19.4 | 22.6 | 45.2 | 12.9 | 0.0 | 26.7 | 16.7 | 33.3 | 16.7 | 6.7 | 0.543* | 0.507* |

| GLTEQ | 19.4 | 16.1 | 61.3 | 3.2 | 0.0 | 38.7 | 29.0 | 22.6 | 9.7 | 0.0 | 46.7 | 16.7 | 26.7 | 10.0 | 0.0 | 0.571* | 0.454* |

HAYFT: “How are you feeling today?”; MSWS-12: MS Walking Scale; MFIS: Modified Fatigue Impact Scale; GLTEQ: Godin Leisure-Time Exercise Questionnaire

Frequency of viewing was positively correlated with perceived utility on both questions: when subjects viewed results more often, they were more likely to report them as useful. This association reached statistical significance for HAYFT, Symptom Tracker, MFIS, and GLTEQ, but not MSWS-12.

There were three clear themes identified in the free response feedback. First, a majority of subjects (51.6%) commented that monitoring helped them understand aspects of their disease. For example, one subject wrote “I found it very useful because I could see what days were good and why and what days were bad and why”. A smaller but substantial portion (16.1%) said the symptom history would be useful when communicating with a care provider, for instance: “I think it would be more useful to the doctor to have an overview of the symptoms between office visits”. Lastly, subjects commented on the lack of disability-specific content and/or timing in symptom questionnaires (16.1%). One said “Because my walking is so bad I felt a lot did not pertain to me”, while another said “I probably would not answer the questions within periods where my symptoms remained largely unchanged.”

Determinants of “How are you feeling today?” (HAYFT)

A majority of PRO results were correlated with HAYFT, as shown in Table 4. Correlation was strongest for depression (rs = −0.459) followed by fatigue (rs = −0.390), pain (rs = −0.389), bladder/bowel (rs = −0.365) and the MFIS (rs = −0.351). Changes in symptoms were most strongly correlated with changes in HAYFT for vision (rs = −0.341) followed by fatigue (rs = −0.318), bladder/bowel (rs = −0.293), and depression (rs = −0.227), as demonstrated by the subject-adjusted correlations.

Table 4.

Spearman correlations and subject-adjusted Spearman correlations between “How are you feeling today?” and other questionnaire results. Statistically significant correlations (p < 0.001) are emphasized in bold.

| | Non-adjusted | | Subject-Adjusted | |

|---|---|---|---|---|

| Measure | rs | p | rs | p |

| Mobility | −0.007 | 0.9016 | −0.100 | 0.0806 |

| Hand Function | −0.062 | 0.2890 | −0.139 | 0.0162 |

| Vision | −0.245 | < 0.0001 | −0.341 | < 0.0001 |

| Fatigue | −0.390 | < 0.0001 | −0.318 | < 0.0001 |

| Sensory | −0.268 | < 0.0001 | −0.176 | 0.0050 |

| Spasticity | −0.214 | 0.0006 | −0.088 | 0.1617 |

| Cognitive | −0.091 | 0.1073 | −0.190 | 0.0008 |

| Pain | −0.389 | < 0.0001 | −0.194 | 0.0018 |

| Depression | −0.459 | < 0.0001 | −0.227 | 0.0001 |

| Bladder/Bowel | −0.365 | < 0.0001 | −0.293 | < 0.0001 |

| Tremor | −0.257 | < 0.0001 | 0.106 | 0.0690 |

| MSWS-12 | −0.287 | < 0.0001 | −0.052 | 0.3472 |

| MFIS | −0.351 | < 0.0001 | 0.023 | 0.7107 |

| GLTEQ | 0.282 | < 0.0001 | 0.081 | 0.1284 |

MSWS-12: MS Walking Scale; MFIS: Modified Fatigue Impact Scale; GLTEQ: Godin Leisure-Time Exercise Questionnaire

The linear mixed-effects model explained almost 60% of HAYFT response variance (r2 = 0.5886, adjusted r2 = 0.5693) when only the 11 PS (Symptom Tracker) were used as predictor variables. When the MSWS-12 score and MFIS score were added to the model, this figure increased to almost 64% (r2 = 0.6392, adjusted r2 = 0.6094).
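For reference, the adjusted statistic reported above is commonly defined as shown below. This is the textbook form, with n the number of observations and p the number of predictors (both symbols are introduced here for illustration); whether the analysis software applies exactly this form to a mixed-effects model is an assumption.

```latex
R^2_{\mathrm{adj}} = 1 - \left(1 - R^2\right)\frac{n - 1}{n - p - 1}
```

Because the penalty factor is at least 1 and grows with p, the adjusted value never exceeds the unadjusted value, which is consistent with the pairs of values reported above.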

DISCUSSION

Although this study involved longitudinal measurement of PROs, our results are primarily cross-sectional with respect to disease status, which progressed minimally in only a few subjects. The longitudinal results instead concern day-to-day symptom variability and long-term adherence to, and perception of, the intervention. Since disease status did not change, trends in questionnaire completion are likely to be the result of continued exposure to the web portal.

Difficulties with log-in and web portal navigation occurred at rates consistent with the literature. For example, Haase et al. found that 83% of patients can quickly adjust to new software and 87% report weekly internet use[9], similar to the 87% of our cohort who did not require technical assistance. Contrary to expectations, there were no trends between technical difficulty and age, disease severity, or other recorded demographic characteristics.

Importantly, wbPRO completion was reliable based on comparison to standard, paper PROs. While not surprising, this result reinforces a foundational assumption of remote symptom reporting, namely that remote symptom reports may be trusted. Our wbPROs faithfully reproduced the standard PROs, whereas a less strict web-based interpretation might have produced different results. No subjects reported difficulty with the questionnaires themselves. Some variability between standard PROs and web-based PROs was expected given the subjective nature of the questionnaires and the time delay between completions (up to 2 weeks). These cross-sectional correlations were stronger than the corresponding longitudinal correlations, suggesting that variability was within expected limits. Moreover, longitudinal correlations were similar regardless of the modality (paper/web-based).

The HAYFT analysis can be viewed in two different lights. On the one hand, the mixed effects model explained the majority of HAYFT variability, highlighting the influence of symptoms on daily well-being. The correlations show that depression, fatigue, and bowel and bladder function are among the most important symptoms in this regard. Subject-adjusted correlations relate changes in symptoms to changes in HAYFT while allowing for subject-specific baselines. The results suggest that vision, fatigue, and bladder and bowel symptoms were the most important drivers of HAYFT in this study. Importantly, this analysis relies on intra-subject symptom variability: symptoms can only drive HAYFT responses if they are changing.

On the other hand, a large portion of HAYFT variance – roughly 40% – remains unexplained by the model despite our inclusion of subject-specific intercept parameters. Some of this variance may be attributed to unrecorded symptoms and higher-order effects, but a substantial portion remains. Thus in this population, factors not related to disease seem to be just as important as MS-related symptoms as drivers of perceived well-being.

Declining completion rates are a major obstacle to mHealth adoption that future interventions must address. Much like other remote interventions[16–19], our subjects were initially engaged with high completion rates, but engagement dropped as subjects became more realistic about intervention benefits. Like other interventions, our subjects may have been more motivated than the general population; if so, these challenges will be even more pronounced outside of a research setting. Free-response feedback points to an important contributing factor: the intervention was not adequately tailored to the disability status of individual subjects. Some subjects felt that questions were not relevant to them, while others grew tired of reporting the same unchanged symptoms. Additional themes related to wbPRO design might have been useful in the development of future tools, but unfortunately none were identified.

The association between disease severity and completion rates is promising, as subjects with more severe symptoms tended to report them more often. Further, correlations between viewing frequency and perceived utility suggest that the web portal was useful when subjects were engaged. Nevertheless, completion rates were low in most subjects beyond month 3, pointing to a need for more innovative, individualized approaches to monitoring.

Many studies have combated low compliance by opting for short form PROs, reducing patient burden. Our results partly support this approach: patients rated the single-question performance scales (symptom tracker) as the most useful of the five questionnaires. Ultimately, however, there is a trade-off between PRO length and score validity. Instead of reducing the number of questions, we favor development of adaptive technologies which leverage mobile computation to tailor PRO content and timing to the subject. Further work is needed to develop adaptive PROs and evaluate their benefits in MS and other clinical populations.

CONCLUSION

Most subjects with MS had no technical difficulties completing PROs through a custom web interface. Reliability was high, as measured by the correlation between paper and wbPROs. Voluntary completion varied substantially, and higher completion rates were associated with more severe disability as measured by EDSS. Adherence was low in the second half of the study, with a majority of subjects failing to meet requirements. Feedback showed that subjects who used the web portal more often were more positive about its potential benefits. Importantly, free-response feedback pointed to lack of person-specific PRO content and timing as causes of poor long-term adherence. Disability symptoms and factors not related to disease were equally important to perceived well-being, with depression, fatigue, and bladder and bowel function being the most important symptoms by correlational analysis.

This study contributes to the growing mHealth evidence base in MS care by evaluating wbPRO collection in terms of feasibility, reliability, adherence over a six month period, and subject-perceived benefits. Persons with MS are able to utilize mHealth tools, but their willingness to do so could be improved by incorporating individualization into mHealth platforms. While mHealth does have the potential to facilitate patient-centered care and improve engagement, reaching this potential will require innovative solutions to combat poor compliance and adherence.

WHAT WAS ALREADY KNOWN?

eHealth interventions have had small but significant positive effects on targeted behaviors in diabetes, weight-loss, and other conditions.

Most eHealth studies have recruited highly motivated subjects, so positive results may not generalize, and several clues point to poor long-term adherence.

Persons with multiple sclerosis are able to use the internet, but little is known regarding their interest in eHealth or adherence to a long-term eHealth intervention.

WHAT KNOWLEDGE HAS BEEN ADDED?

Declining adherence is a critical and underemphasized barrier to eHealth impact in multiple sclerosis and potentially other chronic diseases.

Severely disabled subjects have no difficulty completing web-based patient-reported outcomes; in fact, they complete them more often than others.

Subjects see the potential of eHealth to improve their understanding of disease and communication with providers, but many would like to see tailored content and other innovations.

Depression, fatigue, and pain are the most prominent drivers of perceived well-being in multiple sclerosis.

HIGHLIGHTS

Web-based patient-reported outcome collection is evaluated in multiple sclerosis.

Subjects reliably reported symptoms related to fatigue and mobility through a custom web portal.

A statistically significant decline in adherence occurred over the six month study period.

More disabled subjects completed patient-reported outcomes more frequently.

Tailored content and other innovations are needed to combat declining adherence.

Acknowledgments

This research was conducted as a research activity of the Broadband Wireless Access and Applications Center, an NSF-supported industry-university cooperative research center (NSF award #1266311). Dr. Goldman is supported by the National Institutes of Health-National Institute of Neurologic Disorders and Stroke (K23NS62898).

Footnotes

COMPETING INTERESTS

MME, SDP, KS, and JCL report no competing interests. MDG reports grants from National Institutes of Health-National Institute of Neurologic Disorders and Stroke (K23NS62898), grants from Biogen Idec, grants from Novartis, other from Acorda, other from Biogen Idec, other from Novartis, personal fees from Novartis, and personal fees from Sarepta outside the submitted work.

CONTRIBUTIONS

MME: study design, data collection, data analysis and interpretation, drafting the manuscript; SDP: study design, data interpretation, revising the manuscript; KS: study design, data interpretation, revising the manuscript; JCL: study design, data interpretation, revising the manuscript; MDG: study design, data collection, data interpretation, revising the manuscript.

Contributor Information

Matthew M. Engelhard, Department of Systems and Information Engineering, University of Virginia, Charlottesville, VA, USA, P.O. Box 400747, Charlottesville, VA, 22904, mme@virginia.edu, 434-924-5393 (phone), 434-982-2972 (fax)

Stephen D. Patek, Department of Systems and Information Engineering, University of Virginia, Charlottesville, VA, USA

Kristina Sheridan, MITRE Corporation, McLean, VA, USA

John C. Lach, Department of Electrical and Computer Engineering, University of Virginia, Charlottesville, VA, USA

Myla D. Goldman, Department of Neurology, University of Virginia, Charlottesville, VA, USA

REFERENCES

- 1.Stewart M, Brown JB, Donner A, McWhinney IR, Oates J, Weston WW, Jordan J. The impact of patient-centered care on outcomes. J. Fam. Pract. 2000;49:796–804. doi: see also this commentary by Ronal Epstein; http://www.jfponline.com/Pages.asp?AID=2593&UID= [PubMed] [Google Scholar]

- 2.Basch E, Deal AM, Kris MG, Scher HI, Hudis CA, Sabbatini P, Rogak L, Bennett AV, Dueck AC, Atkinson TM, Chou JF, Dulko D, Sit L, Barz A, Novotny P, Fruscione M, Sloan JA, Schrag D. Symptom Monitoring With Patient-Reported Outcomes During Routine Cancer Treatment: A Randomized Controlled Trial. J. Clin. Oncol. 2015;34 doi: 10.1200/JCO.2015.63.0830. JCO.2015.63.0830. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Bertakis KD, Azari R. Patient-Centered Care is Associated with Decreased Health Care Utilization. J. Am. Board Fam. Med. 2011;24:229–239. doi: 10.3122/jabfm.2011.03.100170. [DOI] [PubMed] [Google Scholar]

- 4. Bertakis KD, Azari R. Determinants and outcomes of patient-centered care. Patient Educ. Couns. 2011;85:46–52. doi: 10.1016/j.pec.2010.08.001.

- 5. Epstein RM, Franks P, Shields CG, Meldrum SC, Miller KN, Campbell TL, Fiscella K. Patient-centered communication and diagnostic testing. Ann. Fam. Med. 2005;3:415–421. doi: 10.1370/afm.348.

- 6. Rieckmann P, Boyko A, Centonze D, Coles A, Elovaara I, Havrdova E, Hommes O, Lelorier J, Morrow SA, Oreja-Guevara C, Rijke N, Schippling S. Future MS care: A consensus statement of the MS in the 21st Century Steering Group. J. Neurol. 2013. doi: 10.1007/s00415-012-6656-6.

- 7. Ali EE, Chew L, Yap KY-L. Evolution and current status of mhealth research: a systematic review. BMJ Innov. 2016;2:33–40.

- 8. Webb TL, Joseph J, Yardley L, Michie S. Using the internet to promote health behavior change: a systematic review and meta-analysis of the impact of theoretical basis, use of behavior change techniques, and mode of delivery on efficacy. J. Med. Internet Res. 2010;12:e4. doi: 10.2196/jmir.1376.

- 9. Haase R, Schultheiss T, Kempcke R, Thomas K, Ziemssen T. Modern communication technology skills of patients with multiple sclerosis. Mult. Scler. 2013;19:1240–1. doi: 10.1177/1352458512471882.

- 10. Colombo C, Filippini G, Synnot A, Hill S, Guglielmino R, Traversa S, Confalonieri P, Mosconi P, Tramacere I. Development and assessment of a website presenting evidence-based information for people with multiple sclerosis: the IN-DEEP project. BMC Neurol. 2016;16:30. doi: 10.1186/s12883-016-0552-0.

- 11. Greiner P, Sawka A, Imison E. Patient and Physician Perspectives on MSdialog, an Electronic PRO Diary in Multiple Sclerosis. Patient. 2015;8:541–550. doi: 10.1007/s40271-015-0140-1.

- 12. Gourraud P-A, Henry RG, Cree BAC, Crane JC, Lizee A, Olson MP, Santaniello AV, Datta E, Zhu AH, Bevan CJ, Gelfand JM, Graves JS, Goodin DS, Green AJ, von Büdingen H-C, Waubant E, Zamvil SS, Crabtree-Hartman E, Nelson S, Baranzini SE, Hauser SL. Precision medicine in chronic disease management: The multiple sclerosis BioScreen. Ann. Neurol. 2014;76:633–642. doi: 10.1002/ana.24282.

- 13. van Heerden A, Tomlinson M, Swartz L. Point of care in your pocket: a research agenda for the field of m-health. Bull. World Health Organ. 2012;90:393–394. doi: 10.2471/BLT.11.099788.

- 14. Barbour V, Clark J, Connell L, Ross A, Simpson P, Veitch E, Winker M. A Reality Checkpoint for Mobile Health: Three Challenges to Overcome. PLoS Med. 2013;10:e1001395. doi: 10.1371/journal.pmed.1001395.

- 15. Burke LE, Ma J, Azar KMJ, Bennett GG, Peterson ED, Zheng Y, Riley W, Stephens J, Shah SH, Suffoletto B, Turan TN, Spring B, Steinberger J, Quinn CC. Current Science on Consumer Use of Mobile Health for Cardiovascular Disease Prevention: A Scientific Statement from the American Heart Association. Circulation. 2015. doi: 10.1161/CIR.0000000000000232.

- 16. Katz R, Mesfin T, Barr K. Lessons From a Community-Based mHealth Diabetes Self-Management Program: “It’s Not Just About the Cell Phone.” J. Health Commun. 2012;17:67–72. doi: 10.1080/10810730.2012.650613.

- 17. Aikens JE, Zivin K, Trivedi R, Piette JD. Diabetes self-management support using mHealth and enhanced informal caregiving. J. Diabetes Complications. 2014;28:171–176. doi: 10.1016/j.jdiacomp.2013.11.008.

- 18. Burke LE, Styn MA, Sereika SM, Conroy MB, Ye L, Glanz K, Sevick MA, Ewing LJ. Using mHealth Technology to Enhance Self-Monitoring for Weight Loss: A Randomized Trial. Am. J. Prev. Med. 2012;43:20–26. doi: 10.1016/j.amepre.2012.03.016.

- 19. Zia JK, Schroeder J, Munson SA, Fogarty J, Nguyen L, Barney P, Heitkemper MM, Ladabaum U. Feasibility and Usability Pilot Study of a Novel Irritable Bowel Syndrome Food and Gastrointestinal Symptom Journal Smartphone App. Clin. Transl. Gastroenterol. 2015;7. doi: 10.1038/ctg.2016.9. In Press.

- 20. Newland PK, Flick LH, Thomas FP, Shannon WD. Identifying symptom co-occurrence in persons with multiple sclerosis. Clin. Nurs. Res. 2014;23:529–43. doi: 10.1177/1054773813497221.

- 21. Polman CH, Reingold SC, Banwell B, Clanet M, Cohen JA, Filippi M, Fujihara K, Havrdova E, Hutchinson M, Kappos L, Lublin FD, Montalban X, O’Connor P, Sandberg-Wollheim M, Thompson AJ, Waubant E, Weinshenker B, Wolinsky JS. Diagnostic criteria for multiple sclerosis: 2010 revisions to the McDonald criteria. Ann. Neurol. 2011;69:292–302. doi: 10.1002/ana.22366.

- 22. Hobart JC, Riazi A, Lamping DL, Fitzpatrick R, Thompson AJ. Measuring the impact of MS on walking ability: The 12-Item MS Walking Scale (MSWS-12). Neurology. 2003;60:31–36. doi: 10.1212/wnl.60.1.31.

- 23. Ritvo P, Fischer JS, Miller DM, Andrews H, Paty DW, LaRocca NG. MSQLI: Multiple Sclerosis Quality of Life Inventory. A User’s Manual. New York: National MS Society; 1997.

- 24. Godin G, Shephard RJ. A simple method to assess exercise behavior in the community. Can. J. Appl. Sport Sci. 1985;10:141–146.

- 25. Rizzo M, Hadjimichael O, Preiningerova J, Vollmer T. Prevalence and treatment of spasticity reported by multiple sclerosis patients. Mult. Scler. 2004;10:589–595. doi: 10.1191/1352458504ms1085oa.

- 26. Schwartz CE, Vollmer T, Lee H; the North American Research Consortium on Multiple Sclerosis Outcomes Study Group. Reliability and validity of two self-report measures of impairment and disability for MS. Neurology. 1999;52:63–63. doi: 10.1212/wnl.52.1.63.

- 27. Lawton MP, Brody EM. Assessment of Older People: Self-Maintaining and Instrumental Activities of Daily Living. Gerontologist. 1969;9:179–186.