Abstract

Introduction. Implementations of electronic health records (EHR) have received mixed reviews of their outcomes. Complaints about these systems have led to many attempts to develop useful measures of end-user satisfaction. However, despite the complaints of many physicians, most user satisfaction assessments do not focus on high-level reasoning. Our study attempts to identify some of these high-level determinants of satisfaction.

Method. We developed a user satisfaction survey instrument based on previously identified clinical and non-clinical tasks that are important to clinicians. We surveyed a sample of in-patient physicians and used exploratory factor analysis to identify underlying high-level cognitive tasks. We used the results to create distinct variables representing the latent structure and tested them as predictors of user satisfaction.

Results. Our findings identified 3 latent high-level tasks that were associated with end-user satisfaction: a) high-level clinical reasoning, b) communicating/coordinating care, and c) following the rules/compliance.

Conclusion. We identified latent variables associated with satisfaction. Identifying communication/coordination and high-level clinical reasoning as important determinants of user satisfaction can guide the development and design of more usable electronic health records with higher user satisfaction.

Introduction

Healthcare institutions have increasingly implemented electronic health records (EHRs) over the last decade. These systems are expensive and have received mixed reviews. Despite many positive outcomes, clinicians continue to complain about poor usability, lack of cognitive support, and designs that fail to match their workflow. EHRs that provide cognitive support should be expected to match users’ task-based mental processes, such as perception, memory, reasoning and behavior.

The degree to which systems fail to support clinical reasoning, interrupt complex thought processes, and increase (rather than decrease) cognitive load has been cited as a major source of dissatisfaction.1–3 Studies show that irrelevant display clutter can impair clinicians’ ability to perform tasks and lead them to miss critical information.4

User satisfaction is a combination of ease of use and usefulness, that is, the degree to which the system supports work.5,6 In this paper, we focus on how well the system supports users’ perceived performance of high-level reasoning tasks. We created and validated an instrument based on high-level reasoning tasks as one way to explore the underlying mental representations.

Background

Dissatisfaction with EHR Design

EHR systems with poor designs significantly increase the mental workload of clinicians performing high-level cognitive tasks, thereby reducing user satisfaction, increasing frustration, and prompting ineffective workarounds.7,8 Such poorly designed systems can also affect patient safety.9,10 A 2009 report by the National Research Council studied how health information technology (HIT) was used in 8 medical centers and noted that none of the deployed EHRs provided the much-needed cognitive support to clinicians, i.e., support for the high-level reasoning and decision making that spans several low-level transactional tasks such as ordering and prescribing. According to the report, IT applications are largely designed to automate simple clinical actions or business processes.11 These narrow, task-specific automation systems, which make up the majority of today’s health care IT, provide little cognitive support to clinicians. As Stead and Lin noted:

“Today, clinicians spend a great deal of time and energy searching and sifting through raw data about patients and trying to integrate these data with their general medical knowledge to form relevant mental abstractions and associations relevant to the patient’s situation. Such sifting effort force clinicians to devote precious cognitive resources to the details of data and make it more likely that they will overlook some important higher-level consideration.” 11

This refrain is common among clinicians, but the exact nature of these higher-level “considerations” is not clear. Although EHR adoption has been increasing steadily, in part due to federal incentives, research suggests that the design and implementation of EHRs do not align with the cognitive and workflow requirements and preferences of physicians.12

Prior research on identifying the cognitive tasks involved in healthcare delivery has come from a variety of traditions. Ethnographic studies assessing users’ needs in clinical environments list behavioral actions, but these often do not make sense clinically or cognitively. For example, Tang, Jaworski, Fellencer, et al. (1996)13 observed 38 clinicians across several sites and found 5 types of activities, including reading, writing, talking, and assessing the patient. Although accurate, such descriptions of activity types do not translate into an understanding of cognitive processing goals.

Complexity in health care decision-making arises from a multitude of factors, including sicker patients, a general increase in the prevalence of chronic disease, and an increased emphasis on team care. The delivery of high-quality health care increasingly involves multiple clinicians (primary care physicians, specialists, nurses, technicians and others), all of whom must work together to deliver effective coordinated care.14 Each clinician has specific, limited interactions with the patient and thus a somewhat different view, so that information becomes fragmented or compartmentalized into silos of facts and disconnected symptoms. Although the ONC (Office of the National Coordinator for Health IT) encourages the use of EHRs across the continuum of care, problems with continuity of care persist.15

A qualitative study conducted by AHRQ on clinicians’ recommendations for improving clinical communication and patient safety found that HIT and the organization of EHR information were the safety solutions clinicians most frequently recommended for problems in clinician-to-clinician communication. Moreover, clinicians envisioned the EHR becoming the desired “one source of truth” for a patient’s medical information.16 How this source of truth is organized, accessed and displayed remains problematic.

Mental Representations of Tasks

Information systems research on “task-technology fit” (TTF) also focuses on task definition. TTF was originally proposed by Goodhue17 and others and later expanded by Ammenwerth and Lee13 to examine the degree to which a system supports users’ tasks. The premise is that satisfaction (and use) derive from a good match between the tasks and the technology. Some of this work examined the impact of data representation on task performance7,17 and found that performance dropped rapidly when the data representation did not reflect cognitive processing. Other work in this tradition focused on the dimensions of tasks rather than on the cognitive representation of behavior.17

In this paper, we define clinical tasks based on Action Identification Theory. Assessing clinicians’ high-level cognitive actions or tasks requires exploring the mental representation of action. According to Action Identification Theory,18 behavior is controlled by hierarchical representations, groups of associated neurons and pathways that include behaviors, schemas, and goals. The specific movements involved in an action, such as typing, searching or scrolling, are at the “bottom.” The higher-level components of the representation include goals, intentions and associated values. A core principle is that expert behavior is controlled and cued at the highest level possible. In other words, when physicians start to investigate the best treatment for a cancer or initiate a plan to help a patient control their diabetes, they are not really engaged in typing, sifting, sorting and scrolling, or even in looking for hemoglobin A1C trends over time. They are actually looking to see whether the treatment protocols are working. That high-level action identification is supported by a myriad of lower-level information representations and physical actions. The other key aspect of this theory is that behavior functioning at the highest level of its representation is more fluid, uses fewer cognitive resources and is more satisfying. Consider what happens when an expert musician is asked to think about where to put their fingers: they may become distracted and lose focus. Similarly, high-level reasoning is interrupted when it cannot “flow” because the task has become harder to perform.

The high-level identities of many clinical tasks have not been made explicit. As a result, designing EHRs to support those tasks is difficult. In addition, evaluating how well systems support those tasks remains indirect and is often limited to simple usability testing. General usability metrics often neglect high-level reasoning tasks and do not distinguish difficulties with high-level reasoning from difficulties caused by the interface itself.

Objectives

In this study, our goal was to explore the characteristics of high-level tasks by generating task-based survey questions from prior work (observations, interviews, and surveys)19,20 and validating the instrument using factor analysis to determine latent task structures. If the factor analysis revealed items clustering together in a way that reflected the tasks, we would have established one form of validation.19 We used exploratory factor analysis, an analytic technique for examining latent structure in a set of items. The ultimate goal is to develop a method of measuring user satisfaction that addresses high-level reasoning tasks and provides useful direction for the design of effective EHRs.

Method

Design: The study was conducted in 3 phases. First, we identified an initial set of 51 questions addressing clinician tasks from prior literature and our own work. The questions rated most frequent and important were retained after pilot testing and used to build the final survey instrument, which was administered to practicing clinicians across all levels of service in multiple specialties. Second, we conducted an exploratory factor analysis of the survey results. Finally, we created scales based on the item loadings on the derived factors and tested their reliability with Cronbach’s alpha. The aggregate ratings of those scales were used to predict the overall judgment of how well the system supported work performance. Details of each phase are given below.

Setting and Sample

The University of Utah Institutional Review Board approved this study. Data were collected at a large (>500-bed) academic hospital in Salt Lake City, Utah. We identified and targeted 189 residents, fellows and attendings who were currently posted at the University of Utah hospitals or the Huntsman Cancer Institute and were using the Epic EHR for in-patient care. We contacted the targeted participants in person or by email, using either a public or a personal link to the survey; all links were created with the REDCap survey tool.21 Targeted participants were approached over one month, in December 2015, 18 months after the Epic implementation.

For the purposes of statistical analysis, service domains were broadly categorized into “Medical Specialties,” which include Internal Medicine, Anesthesiology, Physical Medicine and Rehab, Psychiatry, Neurology, Pediatrics, Cardiology, Gastroenterology, Rheumatology and Family Medicine, and “Surgical Specialties,” which include General Surgery, Cardiovascular Surgery, Obstetrics & Gynecology, Neurosurgery, Plastic Surgery, Orthopedics and Urology.

Phase I: Item Selection and Evaluation

An initial set of 51 tasks was created based on our prior research19,20 on user satisfaction and information needs, as well as on the general areas of concern noted by Stead and Lin.11 Their report highlighted 7 information-sensitive domains identified in the Institute of Medicine’s vision for 21st-century health care: a) integrate patient information from various sources, b) integrate the evidence base with daily practice, c) develop tools for portfolio management of patients at the individual and population levels, d) rapidly integrate new knowledge into a ‘learning’ health system, and e) extend care (treatment and monitoring) beyond hospitals through better communication and technology, thereby empowering patients to take part in the decision-making process more effectively.11 All items were identified as tasks that clinicians needed to accomplish during the course of their work. We designed the questions to be at about the same level of abstraction and to reflect general domains of work, e.g., patient assessment, diagnosis, and teamwork.

The items were extensively pilot-tested and then rated by 8 physicians for frequency/importance on a scale from 1 (very low) to 5 (very high). The final set included the questions rated in the top 50% (n=21). The final survey questionnaire had 3 parts. The first part included demographic questions about the participant, including name, department affiliation and level year. In the second part, clinicians were asked to respond to the question “While caring for hospitalized patients, how well does Epic help you to…” on a Likert scale ranging from 1 (very poorly) to 5 (very well). The third part was a free-text comment box where participants could add any additional comments. The questions used in the survey instrument are listed in Table 2.
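The item-screening step can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the procedure the study used: the ratings are hypothetical, each item is scored by the mean of its pilot ratings, and “top 50%” is taken to mean a mean rating above the median of all item means. The text does not specify how frequency and importance were combined or how ties were handled.

```python
import statistics

# Hypothetical pilot data: item -> 1-5 frequency/importance ratings from raters.
# (The study used 8 raters and 51 items; these values are illustrative only.)
pilot_ratings = {
    "Document my plan of care": [5, 4, 5],
    "Comply with billing requirements": [4, 4, 3],
    "Hypothetical item A": [2, 3, 2],
    "Hypothetical item B": [1, 2, 2],
}


def top_half(ratings):
    """Return items whose mean rating lies above the median of all item means.

    Assumption: items tied exactly at the median are dropped.
    """
    means = {item: statistics.mean(scores) for item, scores in ratings.items()}
    cutoff = statistics.median(means.values())
    return [item for item, m in means.items() if m > cutoff]


retained = top_half(pilot_ratings)
print(retained)  # ['Document my plan of care', 'Comply with billing requirements']
```

A rank-based cut (keeping the first half of items after sorting) would be an equally reasonable reading of “top 50%”; the median cut is used here only because it is simple to state.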

Table 2.

Survey questionnaire with descriptive results

| No. | Survey question: “How well does Epic help you to…” | N | Range | Min | Max | Mean | SD |
|---|---|---|---|---|---|---|---|
| 1 | Efficiently do your work overall | 90 | 4 | 1 | 5 | 3.60 | .909 |
| 2 | Discover why the patient is receiving a specific treatment and/or medication | 90 | 4 | 1 | 5 | 3.13 | .997 |
| 3 | Identify a patient’s current severity of illness | 90 | 4 | 1 | 5 | 3.07 | .958 |
| 4 | Track clinical parameters that need continuous monitoring | 90 | 4 | 1 | 5 | 3.74 | .966 |
| 5 | Review appropriateness for drug therapy | 88 | 4 | 1 | 5 | 3.15 | .891 |
| 6 | Prevent and monitor for adverse drug events | 85 | 4 | 1 | 5 | 3.31 | .900 |
| 7 | Integrate external sources of patient information | 83 | 4 | 1 | 5 | 3.01 | 1.110 |
| 8 | Comprehend the relationship and dependencies between medication and labs | 86 | 4 | 1 | 5 | 3.10 | .921 |
| 9 | Follow the sequence and timeline of a clinical event | 89 | 4 | 1 | 5 | 3.33 | .963 |
| 10 | Integrate patient preferences into the care plan | 86 | 4 | 1 | 5 | 3.06 | .998 |
| 11 | Document my plan of care | 88 | 4 | 1 | 5 | 3.85 | .865 |
| 12 | Communicate goals of care to the team | 89 | 4 | 1 | 5 | 3.49 | .919 |
| 13 | Determine if the patient’s team are on the same page and agree on the clinical plan | 88 | 4 | 1 | 5 | 3.02 | 1.005 |
| 14 | Communicate results of hospitalization to the Primary Care Provider | 83 | 4 | 1 | 5 | 3.27 | 1.049 |
| 15 | Organize information to order a referral | 83 | 4 | 1 | 5 | 3.30 | .959 |
| 16 | Write an effective discharge summary | 80 | 4 | 1 | 5 | 3.55 | .940 |
| 17 | Comply with billing requirements | 70 | 4 | 1 | 5 | 3.63 | .871 |
| 18 | Track my compliance with guidelines in treating current patients | 75 | 4 | 1 | 5 | 2.97 | .944 |
| 19 | Identify rules for admission/discharge/transfer | 77 | 4 | 1 | 5 | 2.88 | .986 |
| 20 | Identify patients who require a higher acuity level of care | 83 | 4 | 1 | 5 | 3.17 | .922 |
| 21 | Access relevant clinical knowledge at Point of care | 87 | 4 | 1 | 5 | 3.13 | 1.043 |

Phase II: Validation of Factor Structure and Variable Creation

Factor analysis has long been used in the social sciences to assess the latent structure underlying perceptions and mental variables that cannot be measured directly (e.g., intelligence). Many methods have been devised for determining these factors (latent variables), all of which center on the pattern of correlations among variables.22,23 A pattern of correlations among the questions may reveal clusters suggesting that those questions measure the same construct, and inspecting the resulting patterns of correlations (factors) provides evidence of a latent structure. We conducted an exploratory factor analysis of the correlation matrix with varimax rotation.24 Recommended sample sizes vary, ranging from as few as 5 to 10 or more participants per question. In our analysis, each common factor was over-determined, with at least 5 measured variables representing it. With as few as 3-4 measured variables per common factor, a sample of approximately 100 can be sufficient,25 which is consistent with our sample size.
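The rotation step can be illustrated with a short NumPy sketch. It assumes principal-component extraction from the item correlation matrix and plain varimax without Kaiser normalization; the paper does not state its extraction method or software, so `principal_loadings`, `varimax` and the synthetic data below are hypothetical illustrations rather than the study’s analysis.

```python
import numpy as np


def principal_loadings(data: np.ndarray, n_factors: int) -> np.ndarray:
    """Unrotated loadings from the item correlation matrix (principal components).

    data: respondents x items. Loadings = eigenvector * sqrt(eigenvalue).
    """
    corr = np.corrcoef(data, rowvar=False)          # items are columns
    eigvals, eigvecs = np.linalg.eigh(corr)          # ascending order
    order = np.argsort(eigvals)[::-1][:n_factors]    # largest eigenvalues first
    return eigvecs[:, order] * np.sqrt(eigvals[order])


def varimax(loadings: np.ndarray, max_iter: int = 100, tol: float = 1e-6) -> np.ndarray:
    """Orthogonal varimax rotation (SVD-based iteration, no Kaiser normalization)."""
    p, k = loadings.shape
    rotation = np.eye(k)
    criterion = 0.0
    for _ in range(max_iter):
        rotated = loadings @ rotation
        # Gradient of the varimax criterion with respect to the rotation matrix.
        grad = loadings.T @ (rotated**3 - rotated @ np.diag((rotated**2).sum(axis=0)) / p)
        u, s, vt = np.linalg.svd(grad)
        rotation = u @ vt                            # nearest orthogonal matrix
        new_criterion = s.sum()
        if criterion != 0 and new_criterion < criterion * (1 + tol):
            break                                    # no meaningful improvement
        criterion = new_criterion
    return loadings @ rotation


# Hypothetical data: 90 respondents, 9 items driven by 3 independent latent factors.
rng = np.random.default_rng(0)
latent = rng.normal(size=(90, 3))
items = np.repeat(latent, 3, axis=1) + 0.5 * rng.normal(size=(90, 9))
rotated = varimax(principal_loadings(items, 3))
print(np.round(rotated, 2))
```

Because the rotation is orthogonal, each item’s communality (row sum of squared loadings) is unchanged; varimax only redistributes loadings so that each item loads strongly on as few factors as possible.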

Items that loaded on a factor with a loading greater than 0.5, and did not load greater than 0.4 on any other factor, were combined (summed and averaged) to create 3 new variables (scales). The reliability of these new variables was assessed using Cronbach’s alpha, with an acceptability criterion of greater than 0.80.26
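The item-to-scale rule can be expressed compactly. The Python sketch below applies it to six rows copied from Table 4, comparing absolute loadings against the 0.5 and 0.4 thresholds (using absolute values is an assumption; the text does not say how negative loadings were treated). The function name `assign_item` is hypothetical.

```python
from typing import Optional, Sequence

# Rotated loadings copied from Table 4 (Factor 1, Factor 2, Factor 3).
LOADINGS = {
    11: [0.807, 0.026, 0.322],   # Care plan documentation
    14: [0.703, 0.304, -0.079],  # Communicate with PCP
    8: [0.076, 0.758, 0.107],    # Med-Lab relation
    17: [0.166, 0.028, 0.731],   # Billing compliance
    6: [0.439, 0.460, 0.338],    # Adverse drug events
    7: [0.499, 0.461, 0.077],    # Integrate external
}


def assign_item(row: Sequence[float], primary: float = 0.5,
                cross: float = 0.4) -> Optional[int]:
    """Return the 1-based factor an item is assigned to, or None.

    An item is kept if its largest absolute loading exceeds `primary`
    and no other absolute loading exceeds `cross`.
    """
    magnitudes = [abs(x) for x in row]
    best = max(range(len(magnitudes)), key=magnitudes.__getitem__)
    if magnitudes[best] <= primary:
        return None  # no loading strong enough
    if any(m > cross for j, m in enumerate(magnitudes) if j != best):
        return None  # cross-loads on another factor
    return best + 1


assignments = {q: assign_item(row) for q, row in LOADINGS.items()}
print(assignments)  # {11: 1, 14: 1, 8: 2, 17: 3, 6: None, 7: None}
```

Questions 6 and 7 come out unassigned here, matching the two items the analysis reports as having no loading above 0.5.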

Phase III. Predictive Validity Assessment

The rating of overall efficiency was then regressed on the 3 newly defined variables using linear regression to validate their association with performance.

Results

Study population

Of the 189 targeted clinicians (residents, fellows, and attendings) working in the system at the time, 47.6% (n=90) participated in the survey. The distribution of respondents by level and domain is presented in Table 1. Two participants did not disclose their service category (department), and one participant did not disclose their level year of service.

Table 1.

Participant sample size (n = 90) based on broad speciality and level of service

| Level | Surgery (n) | Medicine (n) |
|---|---|---|
| PGY 1 | 4 | 15 |
| PGY 2 | 4 | 14 |
| PGY 3 | 6 | 4 |
| PGY 4 | 5 | 6 |
| PGY 5 | 4 | 0 |
| PGY 6 | 3 | 0 |
| PGY 7 | 2 | 0 |
| Fellow | 5 | 14 |
| Attending | 0 | 4 |
| Total | 33 | 57 |

PGY - Post Graduate Year

Descriptive Results

The descriptive statistics for each survey question (n, range, min, max, mean and SD) are listed in Table 2. The number of respondents per question ranged from 70 to 90, and mean satisfaction scores ranged from 2.88 to 3.85 (maximum 5).

Level of service

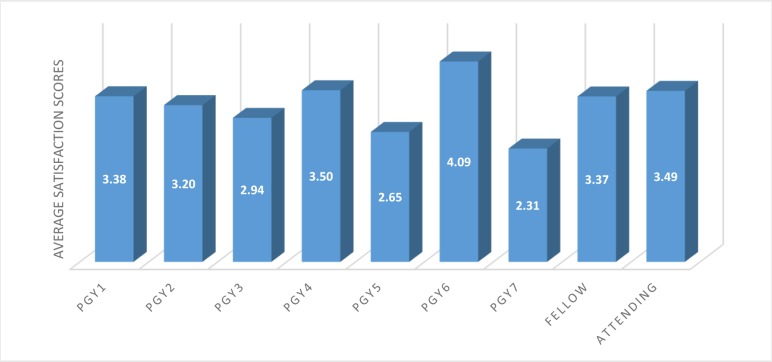

The participant sample included clinicians from post graduate year 1 to post graduate year 7, fellows, and attending physicians. A total user satisfaction score was calculated for each participant, and participants were grouped by level of service. The average satisfaction score for each level of service was calculated by dividing the total score of all participants at that level by the product of the number of participants at that level and the number of questions answered (Figure 1).
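The per-level average can be sketched as follows. The responses and the function name `average_by_level` are hypothetical. When every participant answers the same number of questions, dividing by (participants × questions answered) equals dividing by the total number of answered items; because respondents skipped some questions (Table 2), this sketch assumes the denominator is the count of answered items.

```python
from collections import defaultdict

# Hypothetical responses: (level of service, item ratings); None marks a skipped item.
responses = [
    ("PGY 1", [4, 3, None, 5]),
    ("PGY 1", [3, 3, 4, 4]),
    ("Fellow", [2, None, 3, 3]),
]


def average_by_level(rows):
    """Mean rating per level = sum of answered ratings / number of answered ratings."""
    totals = defaultdict(int)
    counts = defaultdict(int)
    for level, ratings in rows:
        answered = [r for r in ratings if r is not None]
        totals[level] += sum(answered)
        counts[level] += len(answered)  # answered items only, per participant
    return {level: totals[level] / counts[level] for level in totals}


averages = average_by_level(responses)
print(averages)
```

Here the two PGY 1 participants contribute 26 points over 7 answered items, so their level average is 26/7.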

Figure 1.

Average satisfaction scores (min=0, max=5), categorized by level of service.

Factor Analysis

An exploratory factor analysis of the correlation matrix was conducted with varimax rotation. Eigenvalues measure how much of the observed variables’ variance is explained by each factor; any factor with an eigenvalue ≥ 1 explains more variance than a single observed variable, which is the minimum acceptable level for inclusion as a factor. The initial eigenvalues show that the first factor explained 48.85% of the variance, the second 6.75%, the third 6.11% and the fourth 5.26% (see Table 3). Although 4 factors had eigenvalues above 1.0, the fourth explained only 5.26% of the variance with an eigenvalue of just 1.04, so it was excluded. The number of factors was therefore constrained to 3, based on inspection of the scree plot, which showed 1 factor with a large eigenvalue (amount of variance) and 2 smaller factors that we retained for theoretical reasons. Together, the first 3 factors explained 62% of the variance.
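The link between eigenvalues and percentage of variance can be shown with a small NumPy sketch. For a correlation matrix the total variance equals the number of items, so each factor’s share is its eigenvalue divided by the item count (21 here). The helper `eigen_summary` and the synthetic data are hypothetical; the eigenvalue > 1 count is the Kaiser criterion described above.

```python
import numpy as np


def eigen_summary(data: np.ndarray):
    """Eigenvalues, % variance and Kaiser count for the item correlation matrix.

    data: respondents x items. The trace of a correlation matrix equals the
    number of items, so each eigenvalue's share of variance is eigenvalue / p.
    """
    corr = np.corrcoef(data, rowvar=False)
    eigvals = np.sort(np.linalg.eigvalsh(corr))[::-1]  # largest first
    pct_variance = 100 * eigvals / corr.shape[0]
    n_kaiser = int((eigvals > 1.0).sum())               # factors with eigenvalue > 1
    return eigvals, pct_variance, n_kaiser


# Worked check with the first eigenvalue in Table 3 (21 items):
print(round(100 * 10.26 / 21, 2))  # 48.86, matching the reported 48.85% up to rounding

# Synthetic illustration (hypothetical data): 90 respondents, 21 items.
rng = np.random.default_rng(1)
general = rng.normal(size=(90, 1))
items = general + rng.normal(size=(90, 21))
eigvals, pct, n_kaiser = eigen_summary(items)
print(np.round(pct[:4], 2), n_kaiser)
```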

Table 3.

Factors, eigenvalues and variance

| Factor | Initial Eigenvalues | % of Variance |
|---|---|---|
| 1 | 10.26 | 48.85 |
| 2 | 1.42 | 6.75 |
| 3 | 1.22 | 6.11 |
| 4 | 1.04 | 5.26 |

Table 4 displays the items and their associated factor loadings. We examined the questions loading on each of the 3 factors and labeled the factors as follows: 1) communication/coordination (items associated with shared responsibility for care, e.g., communicating with the team or the primary care physician (PCP)); 2) high-level clinical reasoning (complex thinking about patient information, e.g., linking medications and labs); and 3) following the rules/compliance (e.g., following billing rules and clinical guidelines). These labels describe the underlying constructs. Two questions (6 and 7) did not show a strong association (loading above 0.5) with any factor.

Table 4.

Factor loadings for survey questions

| Q. no. | Survey question | Factor 1: Communication/Coordination | Factor 2: High-Level Clinical Reasoning | Factor 3: Rule Following/Compliance |
|---|---|---|---|---|
| 11 | Care plan documentation | 0.807 | 0.026 | 0.322 |
| 14 | Communicate with PCP | 0.703 | 0.304 | -0.079 |
| 13 | Patient’s team sync | 0.687 | 0.209 | 0.440 |
| 12 | Communicate goals | 0.671 | 0.321 | 0.323 |
| 9 | Follow timelines | 0.617 | 0.408 | 0.336 |
| 4 | Track Parameters | 0.565 | 0.013 | 0.378 |
| 1 | Work Efficiency | 0.537 | 0.286 | 0.224 |
| 15 | Info for Referral | 0.515 | 0.432 | 0.452 |
| 7 | Integrate external | 0.499 | 0.461 | 0.077 |
| 8 | Med-Lab relation | 0.076 | 0.758 | 0.107 |
| 10 | Patient Preference | 0.239 | 0.676 | 0.312 |
| 20 | Identify Acuity need | 0.321 | 0.655 | 0.396 |
| 3 | Identify severity | 0.459 | 0.651 | 0.145 |
| 5 | Drug Appropriateness | 0.091 | 0.620 | 0.473 |
| 2 | Why Treatment | 0.506 | 0.592 | 0.128 |
| 6 | Adverse Drug Events | 0.439 | 0.460 | 0.338 |
| 18 | Guideline Compliance | 0.261 | 0.365 | 0.773 |
| 17 | Billing Compliance | 0.166 | 0.028 | 0.731 |
| 19 | Identify ADT rules | 0.146 | 0.477 | 0.681 |
| 21 | Knowledge at POC | 0.321 | 0.362 | 0.622 |
| 16 | Discharge Summary | 0.439 | 0.248 | 0.569 |

Scale Construction

Composite scores were created for each of the 3 factors by summing and averaging the items assigned to that factor in Table 4 (questions 6 and 7 were excluded). Higher scores indicate higher satisfaction. The reliability of each scale was tested, and all were acceptable as measured by Cronbach’s alpha (see Table 5).
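Cronbach’s alpha and the composite score can be computed as in this NumPy sketch. The ratings and function names are hypothetical, not the study’s code. Averaging (rather than summing) the items is assumed for the composite, consistent with the 1-5 range of the means in Table 5; the choice does not affect alpha.

```python
import numpy as np


def cronbach_alpha(items: np.ndarray) -> float:
    """Cronbach's alpha for a respondents x k matrix of item ratings.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total score))
    """
    k = items.shape[1]
    item_variances = items.var(axis=0, ddof=1).sum()
    total_variance = items.sum(axis=1).var(ddof=1)
    return k / (k - 1) * (1 - item_variances / total_variance)


def composite_score(items: np.ndarray) -> np.ndarray:
    """Scale score per respondent: sum of the item ratings divided by the item count."""
    return items.mean(axis=1)


# Hypothetical 1-5 ratings from 3 respondents on a 2-item scale.
ratings = np.array([[1, 2],
                    [2, 1],
                    [3, 3]], dtype=float)
print(cronbach_alpha(ratings))   # ~0.667
print(composite_score(ratings))  # [1.5 1.5 3. ]
```

Against the 0.80 criterion used in the study, this toy scale would fail; the values in Table 5 (0.850 to 0.875) reflect much more consistent responding across 5 to 8 items.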

Table 5.

Descriptive statistics of aggregated variables

| Factors | No. of items | Mean | SD | Cronbach’s Alpha |
|---|---|---|---|---|
| Communication/Coordination | 8 | 3.42 | 0.76 | 0.861 |
| High-level clinical reasoning | 6 | 3.16 | 0.73 | 0.875 |
| Compliance/Follow the Rules | 5 | 3.23 | 0.74 | 0.850 |

Regression and Predictive Validity

The overall efficiency rating was regressed on the 3 constructed variables (communication/coordination, compliance/follow the rules, and high-level clinical reasoning) using forward entry. The overall model was significant (R=0.54; p<0.01), with communication/coordination entering first as the largest component; high-level clinical reasoning entered on the second step, producing a significant R² change (R=0.59; p=0.04). Compliance (follow the rules) was not significant after the other 2 variables were included in the model.
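Forward entry with an R² change test can be sketched in NumPy. Assumptions: predictors enter greedily by largest R² gain, and an F-to-enter threshold of 3.84 (roughly p < .05 for large samples) stands in for the p-value criterion a statistics package would typically use; the data and names are hypothetical. For reference, the reported R values correspond to R² ≈ 0.29 (0.54²) and R² ≈ 0.35 (0.59²), an R² change of about 0.06.

```python
import numpy as np


def r_squared(X: np.ndarray, y: np.ndarray) -> float:
    """R^2 of an ordinary least-squares fit of y on X (intercept added)."""
    X1 = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(X1, y, rcond=None)
    resid = y - X1 @ beta
    centered = y - y.mean()
    return 1.0 - (resid @ resid) / (centered @ centered)


def forward_entry(predictors: dict, y: np.ndarray, f_to_enter: float = 3.84):
    """Greedy forward entry: at each step add the predictor with the largest
    R^2 gain, stopping when its F-change statistic falls below f_to_enter."""
    n = len(y)
    selected, steps, r2_prev = [], [], 0.0
    while len(selected) < len(predictors):
        candidates = [name for name in predictors if name not in selected]
        trials = {
            name: r_squared(np.column_stack([predictors[s] for s in selected + [name]]), y)
            for name in candidates
        }
        best = max(trials, key=trials.get)
        k = len(selected) + 1                      # predictors in the new model
        r2_new = trials[best]
        f_change = (r2_new - r2_prev) / ((1.0 - r2_new) / (n - k - 1))
        if f_change < f_to_enter:
            break
        selected.append(best)
        steps.append((best, r2_new, r2_new - r2_prev, f_change))
        r2_prev = r2_new
    return steps


# Hypothetical data: 90 respondents, three scale scores, one outcome rating.
rng = np.random.default_rng(42)
scales = {name: rng.normal(size=90) for name in ("communication", "reasoning", "compliance")}
outcome = 0.8 * scales["communication"] + 0.4 * scales["reasoning"] + 0.5 * rng.normal(size=90)
for name, r2, delta, f in forward_entry(scales, outcome):
    print(f"{name}: R^2={r2:.2f}, change={delta:.2f}, F={f:.1f}")
```

The F-change formula compares the gain in R² from one added predictor with the residual variance per remaining degree of freedom (n − k − 1), which is the quantity behind a “significant R² change” at each step.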

Discussion

Our exploratory factor analysis explained 62% of the overall variance in the items, and the factor structure supported 3 latent high-level cognitive tasks. These can be thought of as latent variables referring to overarching “groups” of actions. They also illustrate how clinicians are trying to use the EHR to accomplish their work and identify high-level intentions for action in the clinical environment.

The overall means of these 3 variables were not meaningfully different, although the variables correlated significantly with one another. All means fell near the middle of the scale (3.16 to 3.42), indicating a moderate level of satisfaction. The validity of the constructs was also supported by the regression results, in which communication/coordination and high-level reasoning were the significant predictors of the overall efficiency rating.

Current user satisfaction studies focus on specific functionalities and technical attributes of the system rather than on a deep understanding of the cognitive processes that influence end-users’ use of the system.27–29 Specific models such as the Technology Acceptance Model (TAM) propose user perceptions of ease of use and usefulness as major determinants of user satisfaction.30 In addition, the DeLone and McLean information systems success model suggests other determinants of user satisfaction, such as user characteristics, system usefulness and service quality.31 None of these address satisfaction with the system’s ability to support high-level cognitive work. A popular usability instrument, the System Usability Scale (SUS), is a 10-item questionnaire that focuses on users’ perceptions of a system’s usability and learnability. However, very little can be inferred from the SUS about a system’s ability to support high-level cognitive tasks, communication or compliance with regulations.

Our research suggests that user satisfaction is determined by factors well beyond those proposed by the above models. We identified 3 high-level task factors, a) the ability to communicate/coordinate care between teams, b) the ability to conduct cognitive tasks, and c) the ability to abide by the rules, as distinct determinants associated with end-user satisfaction with our EHR system. Our findings are consistent with common clinician complaints, observed in previous research,1,32 about the lack of support for carrying out cognitive tasks and collaborating between teams. Of the 3 high-level task factors, communication/coordination had the strongest relationship with the overall efficiency rating and hence with perceived user satisfaction.

These findings suggest that an EHR’s ability to support these high-level tasks could be a crucial factor in determining overall user satisfaction. However, we may not have identified all of the attributes related to high-level action identities. Different contexts would likely involve a wider variety of high-level tasks, as well as different amounts of non-EHR support. This pattern would explain why a system may be well received by users in one context but rejected in another. Because clinicians’ tasks vary from one setting to another, system vendors and optimizers should try to understand this variation and optimize their systems accordingly.

Implications for Providing Cognitive Support

Clinicians’ cognitive resources are limited; therefore, any task that forces them to divert attention from patient care to data acquisition and interpretation should be avoided. Functionalities that support coordination, communication, shared views and negotiated responsibilities might be more important than previously realized. In addition, systems should provide a holistic picture of the data in the complete patient context, along with its relevance to the situation at hand. Such a holistic picture could substantially reduce the cognitive effort required to conduct high-level tasks.

Clinical Implications

Our study shows that the ability to communicate and coordinate between clinical teams with relative ease and efficiency is associated with significantly higher user satisfaction among clinicians. As health care evolves from an individual doctor-patient relationship to a multidisciplinary, team-based approach, the ability of electronic health records to support collaboration between teams is likely to be crucial to a successful EHR implementation. Another important determinant of user satisfaction was the system’s ability to support the high-level cognitive tasks that clinicians conduct. These findings should prompt EHR vendors to modify their systems to support these functionalities. They should also encourage clinical informaticians to undertake system optimization projects that study cognitive tasks within different contexts and implement practical solutions.

Limitations

The sample size was only minimally acceptable for a factor analysis. The use of a single item to assess overall satisfaction might limit reliability. Finally, the study was conducted at only one site, which may limit generalizability. Even so, this study was intended to be exploratory and involved a newly developed instrument that others can use and further validate.

Conclusion

Our research increases our understanding of the determinants of end-user satisfaction with electronic health record (EHR) systems. Our findings suggest that a system’s ability to support high-level tasks has a greater influence on end-user satisfaction than the determinants that are currently measured. These findings could be adopted by system vendors and system optimizers to create effective and efficient electronic health records that provide real value to end users.

Acknowledgements

This study was funded by the University of Utah Health Science Center to the Department of Biomedical Informatics.

References

- 1.Han YY, Carcillo JA, Venkataraman ST, et al. Unexpected increased mortality after implementation of a commercially sold computerized physician order entry system. Pediatrics. 2006;116(6):1506–1512. doi: 10.1542/peds.2005-1287. [DOI] [PubMed] [Google Scholar]

- 2.Bowman S. Impact of electronic health record systems on information integrity: quality and safety implications; Perspectives in health information management / AHIMA, American Health Information Management Association; 2013. p. 1c. [PMC free article] [PubMed] [Google Scholar]

- 3.Wipfli R, Ehrler F, Bediang G, Betrancourt M, Lovis C. How Regrouping Alerts in Computerized Physician Order Entry Layout Influences Physicians’ Prescription Behavior: Results of a Crossover Randomized Trial. JMIR human factors. 2016;3(1):e15. doi: 10.2196/humanfactors.5320. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Moacdieh N, Sarter N. Clutter in electronic medical records: examining its performance and attentional costs using eye tracking. Hum Factors. 2015;57(4):591–606. doi: 10.1177/0018720814564594. [DOI] [PubMed] [Google Scholar]

- 5.Park KS, Lim CH. A structured methodology for comparative evaluation of user interface design using usability criteria and measures. International Journal of Industrial Ergonomics. 1999;23(5-6):379–389. [Google Scholar]

- 6.Hadji B, Degoulet P. Information system end-user satisfaction and continuance intention: A unified modeling approach. J Biomed Inform. 2016;61:185–193. doi: 10.1016/j.jbi.2016.03.021. [DOI] [PubMed] [Google Scholar]

- 7.Peute LW, De Keizer NF, Van Der Zwan EP, Jaspers MW. Reducing clinicians’ cognitive workload by system redesign; a pre-post think aloud usability study. Studies in Health Technology and Informatics. 2011;169:925–929. [PubMed] [Google Scholar]

- 8.EHR Usability Task-Force. Defining and testing EMR usability: principles and proposed methods of EMR usability evaluation and rating. Healthcare Information and Management Systems Society. 2009.

- 9.Khajouei R, de Jongh D, Jaspers MW. Usability evaluation of a computerized physician order entry for medication ordering. Studies in Health Technology and Informatics. 2009;150:532–536. [PubMed] [Google Scholar]

- 10. Middleton B, Bloomrosen M, Dente MA, et al. Enhancing patient safety and quality of care by improving the usability of electronic health record systems: recommendations from AMIA. Journal of the American Medical Informatics Association. 2013;20(e1):e2–e8. doi: 10.1136/amiajnl-2012-001458.

- 11. Stead WW, Lin H, Davidson SB, et al. Computational technology for effective health care: immediate steps and strategic directions. National Academies Press; 2009. http://search.ebscohost.com/login.aspx?direct=true&scope=site&db=nlebk&db=nlabk&AN=272051.

- 12. American Medical Association. Improving Care: Priorities to Improve Electronic Health Record Usability. 2014. https://www.aace.com/files/ehr-priorities.pdf.

- 13. Ammenwerth E, Spötl HP. The time needed for clinical documentation versus direct patient care. A work-sampling analysis of physicians’ activities. Methods Inf Med. 2009;48(1):84–91.

- 14. McDonald KM, Sundaram V, Bravata DM, et al. Closing the Quality Gap: A Critical Analysis of Quality Improvement Strategies (Vol. 7: Care Coordination). Rockville (MD); 2007.

- 15. HealthIT.gov. Improve care coordination using electronic health records. Providers & Professionals. 2016. https://www.healthit.gov/providers-professionals/improved-care-coordination.

- 16. Woods DM, Holl JL, Angst D, et al. Improving clinical communication and patient safety: clinician-recommended solutions. In: Henriksen K, Battles JB, Keyes MA, Grady ML, editors. Advances in Patient Safety: New Directions and Alternative Approaches (Vol. 3: Performance and Tools). Rockville (MD); 2008.

- 17. Goodhue DL, Thompson RL. Task-technology fit and individual performance. MIS Quarterly. 1995 Jun:213–236.

- 18. Vallacher RR, Wegner DM. Action identification theory. In: Handbook of Theories of Social Psychology. 2012. pp. 327–348.

- 19. Weir CR, Nebeker JJ, Hicken BL, Campo R, Drews F, Lebar B. A cognitive task analysis of information management strategies in a computerized provider order entry environment. J Am Med Inform Assoc. 2007;14(1):65–75. doi: 10.1197/jamia.M2231.

- 20. Weir CR, Crockett R, Gohlinghorst S, McCarthy C. Does user satisfaction relate to adoption behavior? An exploratory analysis using CPRS implementation. Proc AMIA Symp. 2000:913–917.

- 21. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)--a metadata-driven methodology and workflow process for providing translational research informatics support. Journal of Biomedical Informatics. 2009;42(2):377–381. doi: 10.1016/j.jbi.2008.08.010.

- 22. Cattell RB. The scree test for the number of factors. Multivariate Behavioral Research. 1966;1(2):245–276. doi: 10.1207/s15327906mbr0102_10.

- 23. Tinsley HE, Tinsley DJ. Uses of factor analysis in counseling psychology research. Journal of Counseling Psychology. 1987;34(4):414–424.

- 24. Wastell DG. PCA and varimax rotation: some comments on Rosler and Manzey. Biological Psychology. 1981;13:27–29. doi: 10.1016/0301-0511(81)90025-9.

- 25. MacCallum RC, Widaman KF, Preacher KJ, Hong S. Sample size in factor analysis: the role of model error. Multivariate Behavioral Research. 2001;36(4):611–637. doi: 10.1207/S15327906MBR3604_06.

- 26. Cronbach LJ. Coefficient alpha and the internal structure of tests. Psychometrika. 1951;16(3):297–334.

- 27. Wirtz J, Mattila A. Exploring the role of alternative perceived performance measures and needs-congruency in the consumer satisfaction process. Journal of Consumer Psychology. 2001;11(3):181–192.

- 28. Khalifa M, Liu V. Satisfaction with internet-based services: the role of expectations and desires. International Journal of Electronic Commerce. 2002;7(2):31–49.

- 29. Yoo S, Jung SY, Kim S, et al. A personalized mobile patient guide system for a patient-centered smart hospital: lessons learned from a usability test and satisfaction survey in a tertiary university hospital. Int J Med Inform. 2016;91:20–30. doi: 10.1016/j.ijmedinf.2016.04.003.

- 30. Davis FD. Perceived usefulness, perceived ease of use, and user acceptance of technology. MIS Quarterly. 1989;13(3):319–340.

- 31. DeLone W, McLean E. The DeLone and McLean model of information systems success: a ten-year update. Journal of Management Information Systems. 2003;19(4):9–30.

- 32. Crabtree BF, Miller WL, Tallia AF, et al. Delivery of clinical preventive services in family medicine offices. Annals of Family Medicine. 2005;3(5):430–435. doi: 10.1370/afm.345.