Abstract

There are numerous methods to study workflow. However, few produce the kinds of in-depth analyses needed to understand EHR-mediated workflow. Here we investigated variations in clinicians’ EHR workflow by integrating quantitative analysis of patterns of users’ EHR-interactions with in-depth qualitative analysis of user performance. We characterized 6 clinicians’ patterns of information-gathering using a sequential process-mining approach. The analysis revealed 519 different screen transition patterns performed across 1569 patient cases. No single pattern was followed for more than 10% of patient cases, the 15 most frequent patterns accounted for over half of patient cases (53%), and 27% of cases exhibited unique patterns. By triangulating quantitative and qualitative analyses, we found that participants’ EHR-interactive behavior was associated with their routine processes, patient case complexity, and EHR default settings. The proposed approach has significant potential to inform resource allocation for observation and training. In-depth observations helped us to explain variation across users.

Introduction

Health information technologies (HIT), such as electronic healthcare records (EHRs), are expected to bring significant advancements to healthcare delivery through improved management and availability of patient information1. Thus far, there have been mixed results from HIT implementation and use. Problems include EHRs not integrating smoothly into clinical work processes and altering workflow, as seen in changes to the sequence in which tasks are performed2, the time required to complete tasks2, the allocation of tasks among workers2, and the development of workarounds3, some of which result in adverse events4 that compromise patient safety and the quality of care delivered. The absence of a focus on system usability and on understanding patterns of workflow is a major impediment to adoption and widespread use.

Usability studies typically employ user-satisfaction surveys, focus groups, expert inspections and experiments involving usability testing5. Although these methods are informative, they involve a reliance on subjective judgment, may lack reliability and do not provide a sufficiently rich window into the clinical workflow process. Alternatively, there have been numerous studies of workflow that vary in method and scope6. There is a need to scrutinize EHR workflow in situ to surface patterns of interaction, characterize the distributions of those patterns and elucidate the factors that underlie them. In this study, we integrate a quantitative process mining analysis of sequential patterns of data access with a qualitative analysis of user performance to investigate and explain clinicians’ work processes. Specifically, we focus on EHR workflow associated with a routine information gathering task (InfoGather).

Background

There is ample evidence to suggest that the implementation of HIT can negatively impact clinical workflows and thereby create staff dissatisfaction, inefficiency and HIT-mediated errors4. Current technologies place a burden on clinicians’ working memory and increase cognitive load, which is associated with medical errors and risks to patient safety7. Cognitive load reflects the demands on a user’s working memory, and is a function of task complexity, the user’s skill level, and system usability8. The productive use of HIT is partly dependent on the degree to which it can provide cognitive support for tasks that comprise clinical workflow. It is also reasonable to assume that experienced practitioners can develop efficient and effective methods for executing routine tasks (such as information gathering, progress note documentation and order entry) that better leverage the affordances provided by the EHR. We can also hypothesize that some clinicians employ suboptimal strategies that result in unnecessarily complex and inefficient trajectories that are more time consuming and error prone. These patterns are empirically discoverable through automated computational approaches that identify patterns of interaction. Further, we recognize that EHR workflow is not performed in a vacuum, but rather is connected to a web of actions, interactions, relationships and dependencies between clinicians and work components (e.g., patient, clinician, information, tools, etc.). This necessitates convergent methods to surface the various factors that shape interaction. The regularities of cognitive work can only be discovered through detailed, time-intensive study of the specific setting9.

We have developed a methodological framework that draws on three research traditions8, 10: cognitive engineering, distributed cognition and computational ethnography. Each framework provides a theoretical lens, identifying important foci and a set of methods that illuminate different facets of workflow. The cognitive engineering approach focuses on both the usability of the system or interface in question and the analysis of users’ skills and knowledge11. In analyzing performance, the focus is on cognitive functions such as attention, perception, memory, comprehension, problem solving, and decision making. The approach has a lengthy history in the study of human-computer interaction in general11 and in its application to EHRs12. The cognitive engineering approach has also been used to explain why users employ suboptimal or inefficient procedures or strategies in interacting with systems13. The theory of distributed cognition (DCog)9 conceptualizes cognition as distributed across people and artifacts, and dependent on knowledge in both internal (e.g., memory) and external (e.g., visual displays, paper notes) representations as well as their interactions14. One can employ DCog to characterize workflow as the sequence, or propagation, of internal and external representational states across media, settings and time9.

Computational Ethnography is an emerging set of methods for conducting human-computer interaction studies5. It combines the richness of ethnographical methods with the advantages of automated computational approaches. Zheng and colleagues define computational ethnography as “a family of computational methods that leverages computer or sensor-based technologies to unobtrusively or nearly unobtrusively record end users’ routine, in situ activities in health or healthcare related domains for studies of interest to human-computer interaction.” Sequential pattern analysis employs log files to search for recurring patterns within a large number of event sequences. The analysis can be used effectively in combination with other forms of data such as ethnography or video-capture of end-users performing clinical tasks. Zheng et al.15 investigated users’ interaction with an EHR by uncovering hidden navigational patterns in EHR logfile data. Various patterns were seen to be at variance from optimal pathways as suggested by designers and individuals in clinical management. Similarly, Kannampallil et al.16 used workflow logfile data to compare the information-seeking strategies of clinicians in critical care settings. Specifically, they characterized how distributed information was searched, retrieved and used during clinical workflow.

In a previous feasibility study, we conducted a process mining analysis with manually-curated event log data from (Morae™) video recordings13. We found patient case complexity was associated with the complexity of the clinician-EHR interactive behavior for the computer-based pre-rounds information gathering task. Two analyses were conducted. The first characterized the most common patterns of screen transitions. The second analysis quantified the frequency of each screen transition pattern. We observed 27 total screen-transition patterns, each employed 2 to 7 times. We also correlated patterns with interaction measures including mouse clicks and task duration. The objective was to characterize the difference in complexity for each pattern. We observed that, on average, a screen transition resulted in 2 to 2.5 mouse clicks. The task durations per patient were highly variable and may be associated with other factors such as variation in clinical case complexity.

The objectives of this study are to explain variation in EHR workflow by integrating quantitative analysis of empirical patterns with an in-depth qualitative analysis of user performance. This study is part of a larger research project in which we seek to characterize, evaluate, diagnose and improve clinicians’ workflow in post-operative hospital care10.

Methods

Clinical Setting & Participants

Research was conducted in the Colon & Rectal Surgery Department (CRS) at Mayo Clinic, Rochester, MN, an academic tertiary healthcare center equipped with a comprehensive EHR since 2005. Patient data are accessed through a customized interface, Synthesis. In CRS Rochester, patients are cared for primarily by surgeons, fellows, resident physicians, hospitalists, nurses, and pharmacists. Hospitalist, in this context, refers to nurse practitioners (NPs) and physician assistants (PAs) who have responsibilities similar to those of a resident physician. This study was centered on the hospitalist or resident physician, who share responsibilities for coordinating across members of the patient’s care team, delivering direct patient care, order entry and documentation. Surgery residents work for an attending physician’s service for 6 weeks before cycling to their next service. To date, we have observed four hospitalists, a PA (H1) and three NPs (H2, H3 and H4), and two residents, a 2nd year (R1) and a 4th year (R2). H1, H2, H3 and H4 were experienced users of the system and routinely performed the tasks we observed. At observation, they had worked in the unit between 2 and 3 years. R1 and R2 were doing a rotation in the unit and were less experienced users of the system.

This work represents an extension of a surgery practice redesign project, which sought to understand clinical processes and information needs to inform design of new technologies that can improve patient safety and quality and efficiency of health care delivery. It was reviewed by the Mayo Clinic Institutional Review Board (IRB) and judged to be exempt as human subjects’ research.

Pre-Rounds Information Gathering Task (InfoGather)

The data were collected as the clinicians were completing the pre-rounds information gathering task (InfoGather). In the context of workflow, InfoGather occurs close to the start of the day shift, at approximately 6:00 am. Hospitalists and residents round together immediately afterwards. The goals of the task are to access the most recent information on patients’ medical status, review care plans, and anticipate patient needs for the current day17. It is the clinicians’ first task and serves to anchor their understanding of their patients and their workload. To conduct the task, each clinician reviews patient data in the computer and paper-based information resources, and annotates a paper document that is subsequently referenced and modified throughout their shift.

Data Collection: System event log files & Observation

We observed clinicians in the context of their routine workflow and collected ethnographic data from an electronic source: system-generated log files for the observed participants from Synthesis, the primary EHR application used by participants for the task.

Synthesis is a customized interface developed by the Mayo Clinic Hospital in Rochester, MN for EHR data aggregation and visualization. We retrieved system-generated event log files for six participants for the six-week period that coincided with the residents’ (R1 and R2) rotation in the CRS department. We also retrieved EHR event log files for the four hospitalists for an additional two-week period that coincided with other observations in the department. At minimum, each event (row) in a log file has a User ID (i.e., clinician ID), an Event Description (e.g., “Activated tab: Labs”) and a Time Stamp (with date and time). Events that are associated with a patient chart also have the patient’s clinic number.
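The log structure described above can be turned into per-case screen sequences with a few lines of Python. The sketch below is illustrative only: the tuple layout, the timestamp format, the sample values, and the choice to treat each (user, patient) pair as one case are assumptions made for the example. Only the "Activated tab: ..." wording is taken from the text.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical rows: (user_id, event_description, time_stamp, clinic_number).
# All values are invented; the real log layout may differ.
rows = [
    ("U1", "Activated tab: Summary", "2000-01-01 06:01:10", "P1"),
    ("U1", "Activated tab: Labs", "2000-01-01 06:01:40", "P1"),
    ("U1", "Activated tab: Summary", "2000-01-01 06:03:05", "P2"),
    ("U1", "Activated tab: Vital Signs", "2000-01-01 06:02:10", "P1"),
]

PREFIX = "Activated tab: "

def build_cases(rows):
    """Group tab activations by (user, patient) and order them by time."""
    cases = defaultdict(list)
    for user, description, stamp, patient in rows:
        if not description.startswith(PREFIX):
            continue  # ignore events that are not tab activations
        when = datetime.strptime(stamp, "%Y-%m-%d %H:%M:%S")
        cases[(user, patient)].append((when, description[len(PREFIX):]))
    # Sort each case chronologically and keep only the screen names.
    return {key: [screen for _, screen in sorted(events)]
            for key, events in cases.items()}

cases = build_cases(rows)
```

Sorting within each case matters because rows for different patients can be interleaved in a raw log, as in the sample above.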

EHR event log files record users’ interactions with the EHR interface, including selection of a patient chart in the Navigation Panel as well as of screen tabs and their associated subtabs in a patient’s chart. The Synthesis application window includes a list of patient records in a panel on the left side of the screen (Navigation Panel). Synthesis includes a number of screens, separated into tabs, for viewing patient data (top of Figure 1). There are a total of 13 tabs, including Summary, Labs, Medications, Vital Signs, Intake/Output, Documents/Images, Assessment/Cares, Allergies/Immunizations, Patient Facts, Clinical Problem List, Orders and Viewers/Reports. Several tabs have subtabs, which allow access to other screens. For example, the Labs tab has 7 subtabs, including Labs, Microbiology, Pathology and Pending Labs. For all participants, the Summary screen is divided into six equally sized sections, each with a predefined subset of patient data for Allergies, Intake/Output, Medications, Documents, Vital Signs, and Labs. For example, only a patient’s lab data from the last 24 hours are shown in the Summary screen. The clinician would go to the Labs tab to see all past lab results.

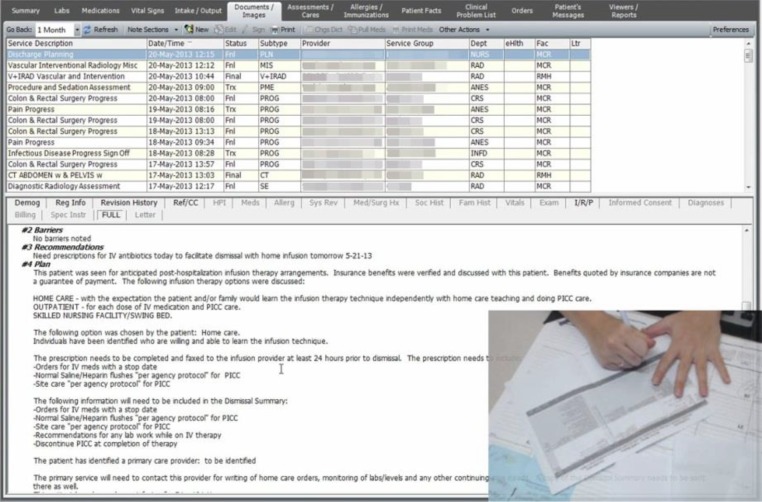

Figure 1.

Screen capture from Morae™ video of an EHR display. The tabs across the top of the screen (and the menus below) constitute the EHR “screens” reflected in the process mining analysis. The inset picture is an image from the webcam capturing the participant annotating a paper document, a printout of the handoff document.

We employed Morae™ video capture and think-aloud protocol of participants engaging in InfoGather to allow for retrospective task analysis. Morae™ software is used for usability studies and it records user activity with no interruption to the user’s work18. The software provides a screen capture (see Figure 1), and allows use of a webcam to capture audio of participants verbalizing their thoughts (think-aloud) as well as video recording of the participant’s face or hands (inset image in the lower right corner in Figure 1).

We have a broader understanding of the context of the work environment because it was the setting for a larger research project in which we also conducted semi-structured interviews of clinicians from varying roles (e.g., hospitalist, senior resident, and nurse), collected artifacts including paper documents, observed clinicians’ work across tasks and reviewed patient charts. Interview questions aimed to reveal details of clinicians’ key clinical work activities, to include purpose or goal, tasks associated with each activity and resources used. Retrospective patient chart review was performed to understand the complexity of a patient’s clinical state.

Sequential Data Analysis

We employed temporal data mining (i.e., process mining) methods to identify clinicians’ patterns of EHR interaction performed to complete InfoGather. The analyses were conducted using a business process mining tool, Disco™ version 1.9.3, a process-mining workbench used for business process management. Process mining has been used for a wide range of purposes in relation to business19 and for adherence to guidelines in healthcare20. The input of Disco is a set of event logs (in our case, EHR logfiles associated with CRS clinicians), which can be processed, analyzed and visualized. Logfiles were preprocessed using Python. Code was written to de-identify log files by replacing clinician IDs and patient clinic numbers with a study ID. Video recordings of observed cases were reviewed with associated log files to understand how the Event Descriptions aligned with users’ behavior.
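As an illustration of the de-identification step, the following sketch replaces raw identifiers with sequential study IDs. It is not the study's code: the helper name `make_pseudonymizer`, the ID prefixes, and the sample rows are all hypothetical, and a real pipeline would also need to protect the mapping itself.

```python
from itertools import count

def make_pseudonymizer(prefix):
    """Return a function that maps each distinct raw ID to a stable study ID."""
    mapping = {}
    counter = count(1)

    def pseudonymize(raw_id):
        # Assign the next study ID the first time a raw ID is seen.
        if raw_id not in mapping:
            mapping[raw_id] = f"{prefix}{next(counter):03d}"
        return mapping[raw_id]

    return pseudonymize

clinician_id = make_pseudonymizer("C")  # hypothetical prefix for clinicians
patient_id = make_pseudonymizer("P")    # hypothetical prefix for patients

# Invented rows: (clinician ID, event description, patient clinic number).
raw_rows = [
    ("jdoe", "Activated tab: Labs", "1234567"),
    ("jdoe", "Activated tab: Summary", "7654321"),
    ("asmith", "Activated tab: Labs", "1234567"),
]
deidentified = [(clinician_id(u), d, patient_id(p)) for u, d, p in raw_rows]
```

Keeping the mapping stable means that the same clinician or patient always receives the same study ID, so cases can still be grouped after de-identification.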

Quantitative descriptors examined in this study include the number of cases per screen pattern, screen frequency and screen transitions, which were derived from the log files. These descriptors allow us to quantify and compare participants’ interactive behavior required for the task. The quantities provide relative measures of work and reveal insights into the usability of EHR tools, patient case complexity and individual clinician’s interactive strategies. We describe and examine the variation across patient cases and individual clinicians. Further, we integrate other methods (e.g., chart review, sequential analysis, qualitative analysis) to better explore the factors that contribute to the variation.
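A minimal sketch of how two of these descriptors could be derived once each case is represented as an ordered list of screen codes. The sample sequences are invented, and counting a transition as each move from one screen to the next is an assumption made for illustration rather than a definition taken from the process-mining tool.

```python
from collections import Counter

# Invented example cases, each an ordered list of screen codes.
sequences = [
    ["N", "D", "I"],
    ["N", "D", "I"],
    ["N", "S", "L", "S"],
    ["N", "D"],
]

# Cases per screen pattern: identical sequences count as the same pattern.
pattern_counts = Counter(" - ".join(seq) for seq in sequences)

# Screen transitions per case: one transition for each move between screens.
transitions_per_case = [len(seq) - 1 for seq in sequences]
```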

Qualitative Analysis & Case Study

We conducted qualitative analysis of clinicians’ think-aloud verbalizations to explain patterns of EHR workflow. One of our objectives was to investigate the causes of repeat screen viewing. This was accomplished by reviewing video recordings of clinicians performing the task. In addition, a case study was selectively used to present detailed analysis of clinical work. It provides an illustration of observed behavior with qualitative data interwoven with quantitative descriptors to better understand users’ behavior. For the selected case study, patient case complexity was evidenced by its quantitative descriptors: screen transitions, mouse clicks and task duration for the selected patient case were each more than twice H1’s average across H1’s observed cases. We previously published these quantitative descriptors and sequential analysis for a subset of cases, the 66 observed patient cases across five clinicians13.

Results

Screen transition pattern analysis

To investigate clinicians’ EHR interaction patterns for InfoGather, a routine computer-based task, we applied process mining to EHR-generated event log files. Our sample consisted of 1569 patient cases across 6 clinician participants. Participants accessed and viewed 26 different EHR screens. Among them are 12 of the 13 main display tabs (the seven most viewed are shown in Table 1). Also included is the Navigation panel (N), which is a collapsible vertical panel on the left of the EHR interface. We defined it as a screen in this study because it is relevant to users’ EHR-interaction for accessing patient charts. Navigation was accessed for nearly all patients (99.7%, Table 1) because it includes the patient list and the search field for a user to access a patient chart. Once a patient chart has been opened during the user’s session, the chart can be reopened by selecting the patient in the Navigation panel (N) or by selecting the chart’s tab along the top of the Synthesis screen. Summary (S), Labs (L), and Vital Signs (V) were viewed for more than half of all cases, and Documents/Images (D) and Intake/Output (I) screens were viewed for more than two-thirds of all cases suggesting the importance of these displays as information sources. Seven screens (D, I, S, L, V, VwR and M) were viewed more than once for some cases and up to 7 times (S and L) (see max repetitions in Table 1). Repeat viewing is analyzed in greater detail below.

Table 1.

Screen statistics. Case Frequency is the number of cases in which the screen was viewed at least once. Absolute Frequency is the total number of times the screen was viewed. Max Repetitions is the highest number of times the screen was viewed within a single case.

| Screen | Screen Symbol | Case Frequency (% total cases) | Absolute Frequency | Max Repetitions |
|---|---|---|---|---|
| Navigation panel | N | 1565 (99.7) | 1649 | 3 |
| Documents / Images | D | 1055 (67.2) | 1426 | 6 |
| Intake / Output | I | 1212 (77.2) | 1425 | 5 |
| Summary | S | 870 (55.4) | 1171 | 7 |
| Labs | L | 828 (52.8) | 976 | 7 |
| Vital Signs | V | 836 (53.3) | 966 | 5 |
| Viewers/Reports | VwR | 179 (11.4) | 182 | 2 |
| Medications | M | 140 (8.9) | 162 | 3 |
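The three statistics defined in the Table 1 caption can be computed directly from per-case screen sequences. The sketch below uses invented sequences and a hypothetical function name, and it is not the process-mining tool's implementation.

```python
from collections import Counter

def screen_statistics(sequences):
    """Return (case frequency, absolute frequency, max repetitions) per screen."""
    case_freq, abs_freq, max_reps = Counter(), Counter(), Counter()
    for seq in sequences:
        views = Counter(seq)  # views of each screen within this one case
        for screen, n in views.items():
            case_freq[screen] += 1   # screen seen at least once in this case
            abs_freq[screen] += n    # every view counts
            max_reps[screen] = max(max_reps[screen], n)
    return case_freq, abs_freq, max_reps

# Invented example cases.
sequences = [["N", "S", "L", "S"], ["N", "D", "I"], ["N", "S"]]
case_freq, abs_freq, max_reps = screen_statistics(sequences)
```

In this toy sample, Summary (S) is viewed in two cases, three times in total, and at most twice within one case.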

There were 519 variants of screen sequence patterns (Patterns in Table 2). The 15 most frequent patterns account for just over half of all cases (52.6%). All patterns start at the Navigation panel (N). Upon selecting a patient in Navigation (N), the user is immediately transferred to a screen in the newly opened patient’s chart. As represented in the most frequent patterns (Table 2), transitions lead from Navigation to Documents/Images (N-D), Navigation to Summary (N-S), and Navigation to Viewers/Reports (N-VwR). This is because each user had one of these three screens set as the default opening screen. Documents/Images (D) displayed when H2 and H3 opened a patient’s chart, whereas Summary (S) was set as the default for H1, R1 and R2, and Viewers/Reports (VwR) was the default for H4. Due to default settings, H1, R1, and R2 navigated through the Summary screen (S) for all of their patients, but observation of their behavior revealed that only R1 used Summary (S) to access patient data for the task. Because Summary (S) is not used by H1 and R2, navigating through this screen is an unnecessary “cost” for these clinicians.
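Because every pattern begins at Navigation (N), a user's default opening screen can be inferred from the log as the screen that most often follows N at the start of a case. The sketch below illustrates that idea with invented cases. In the study the defaults were also confirmed by observation, so this is only one plausible way to recover them.

```python
from collections import Counter, defaultdict

def default_screens(cases):
    """Infer each clinician's default screen as the most common screen after N."""
    first_after_nav = defaultdict(Counter)
    for clinician, seq in cases:
        if len(seq) >= 2 and seq[0] == "N":
            first_after_nav[clinician][seq[1]] += 1
    return {clinician: counts.most_common(1)[0][0]
            for clinician, counts in first_after_nav.items()}

# Invented example cases: (clinician, ordered screen codes).
cases = [
    ("H2", ["N", "D", "I"]),
    ("H2", ["N", "D"]),
    ("H4", ["N", "VwR", "I", "V", "L"]),
]
defaults = default_screens(cases)
```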

Table 2.

Screen sequence patterns and frequency measures for InfoGather. Screen sequences are shown for the 10 most frequent patterns. Frequency gives the number of patient cases per pattern. The clinician columns (H1 to R2, “Percent of Clinician’s Patterns”) give the share of each clinician’s total cases that followed the pattern, expressed as a fraction; Total is the sum of these shares across clinicians. EHR screen codes: N (Navigation Panel), D (Documents/Images), S (Summary), L (Labs), V (Vital Signs), I (Intake/Output), VwR (Viewers/Reports).

| Pattern | Screen Sequence | Frequency (cases/pattern) | H1 | H2 | H3 | H4 | R1 | R2 | Total |
|---|---|---|---|---|---|---|---|---|---|
| 1 | N – D – I | 132 | 0 | 0.23 | 0.27 | 0 | <0.01 | 0 | 0.50 |
| 2 | N – VwR – I – V – L | 67 | 0 | 0 | 0 | 0.41 | 0 | 0 | 0.41 |
| 3 | N – S – V – I – D | 65 | 0.13 | 0 | 0 | 0 | 0.01 | 0.12 | 0.26 |
| 4 | N – S | 95 | <0.01 | 0 | 0 | 0 | 0.22 | 0.03 | 0.26 |
| 5 | N – S – V – I | 66 | 0.16 | 0 | 0 | 0 | 0.02 | 0.06 | 0.24 |
| 6 | N – S – L – V – I – D | 68 | 0.24 | 0 | 0 | 0 | 0 | 0 | 0.24 |
| 7 | N – D – I – V – L | 46 | 0 | 0.19 | 0 | 0 | 0 | 0 | 0.19 |
| 8 | N – D | 52 | <0.01 | 0.04 | 0.12 | 0 | 0.02 | 0 | 0.18 |
| 9 | N – S – D | 45 | 0 | 0 | 0 | 0 | 0.05 | 0.13 | 0.18 |
| 10 | N – D – I – L | 38 | 0 | 0.02 | 0.12 | 0 | 0 | 0 | 0.14 |
| 11-101 | 91 sequences | 2-40 each | - | - | - | - | - | - | - |
| 102-519 | 418 sequences | 1 each* | 0.21 | 0.22 | 0.18 | 0.27 | 0.38 | 0.30 | 1.56 |
| Total (case count) | | 1569 | 288 | 248 | 274 | 162 | 393 | 204 | |

*The percent of cases in which clinicians had a unique pattern served as a preliminary measure of variation.

Table 2 also indicates the share of each clinician’s patient cases for which the clinician followed each pattern (normalized by the clinician’s total to reduce the bias of varying sample sizes). The most frequent screen transition pattern occurred for 132 cases: Navigation to Documents/Images to Intake/Output (Pattern 1: N-D-I). It was followed by H2 for 23% of H2’s cases, by H3 for 27% of H3’s cases, and by R1 one time (0.3% of R1’s cases). Pattern 2, Navigation to Viewers/Reports to Intake/Output to Vital Signs to Labs (N-VwR-I-V-L), occurred for 67 cases and was followed by one provider only: H4, for 41% of H4’s cases. Among the top 15 patterns, three other patterns were each followed by a single provider: 24% of H1’s cases (Pattern 6: N-S-L-V-I-D), 19% of H2’s cases (Pattern 7: N-D-I-V-L), and 11% of H4’s cases (Pattern 14: N-VwR-I-V-L-D). Due to the screen default settings, H2 and H3 sometimes followed the same patterns, H1, R1 and R2 occasionally followed the same patterns, and none of them followed H4’s patterns. More than half of the remaining cases (418; 26.6% of total cases) exhibited a pattern that appeared only once (Patterns 102-519). We use the share of a clinician’s cases whose sequence pattern appeared only once as the measure of variation. Thus, H3’s task performance had the least variation (18% of H3’s cases had a pattern that appeared once), whereas R1’s task performance had the most variation (38% of R1’s cases had a pattern that appeared one time) (Table 2).
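The variation measure can be made concrete with a short sketch: for each clinician, count the cases whose pattern occurs only once in the whole sample and divide by that clinician's total cases. The cases below are invented, and the function name is hypothetical.

```python
from collections import Counter

def unique_pattern_share(cases):
    """Share of each clinician's cases whose pattern appears once in the sample."""
    pattern_counts = Counter(pattern for _, pattern in cases)
    totals, singles = Counter(), Counter()
    for clinician, pattern in cases:
        totals[clinician] += 1
        if pattern_counts[pattern] == 1:  # pattern seen in exactly one case
            singles[clinician] += 1
    return {clinician: singles[clinician] / totals[clinician]
            for clinician in totals}

# Invented example cases: (clinician, screen sequence pattern).
cases = [
    ("H1", "N-S-V-I"), ("H1", "N-S-V-I"), ("H1", "N-S-L-S"),
    ("R1", "N-S"), ("R1", "N-S-L"), ("R1", "N-S-D-L"), ("R1", "N-S"),
]
shares = unique_pattern_share(cases)
```

Dividing by each clinician's own case count is what keeps the measure comparable across clinicians with different sample sizes.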

Although some of the complexity can be accounted for by users’ system settings, some may be accounted for by the interface or may reflect provider efficiency. For example, Pattern 6, employed by a single clinician for 24% of the clinician’s cases, involved sequential transitions from left to right corresponding to the order of tabs along the top of the screen. Similarly, a different clinician employed sequential transitions that correspond to the order of tabs from right to left (Pattern 7). Still other variation may reflect differences in patient case complexity, which can be inferred from observations as discussed in the next section.
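Whether a pattern follows the on-screen tab order can be checked mechanically. The sketch below assumes that the first six tabs appear on screen in the order in which they are listed in the Methods (Summary, Labs, Medications, Vital Signs, Intake/Output, Documents/Images) and ignores screens outside that list, such as Navigation. The function name is hypothetical.

```python
# Assumed left-to-right order of the first six tabs, using the screen codes
# from Table 1. Screens not in this list (e.g., N, VwR) are ignored.
TAB_ORDER = ["S", "L", "M", "V", "I", "D"]

def tab_direction(sequence):
    """Classify a screen sequence by its direction along the tab bar."""
    positions = [TAB_ORDER.index(s) for s in sequence if s in TAB_ORDER]
    steps = [b - a for a, b in zip(positions, positions[1:])]
    if steps and all(step > 0 for step in steps):
        return "left-to-right"
    if steps and all(step < 0 for step in steps):
        return "right-to-left"
    return "mixed"

pattern_6 = tab_direction(["N", "S", "L", "V", "I", "D"])
pattern_7 = tab_direction(["N", "D", "I", "V", "L"])
```

Under this assumption, Pattern 6 is classified as left-to-right and Pattern 7 as right-to-left, while a sequence that revisits a tab is classified as mixed.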

Analysis of Repeated Views: Of the 66 observed patient cases, 31 had at least one screen that was viewed two times (1/9 for H1, 7/21 for H2, 11/16 for H3, 10/12 for R1, and 2/8 for R2). For example, in the screen sequence N-D-I-L-D, which H3 followed for three patients, Documents/Images (D) was viewed twice per patient. We inferred reasons for redundant screen viewing from clinicians’ observed behavior. For most of H3’s patients, Documents/Images (D) was viewed twice per patient because doing so appeared to be the clinician’s routine process. H3 first viewed D when a patient’s chart was opened because D was set as the default screen for this user. H3 explained, “Whenever I launch a patient, I'm looking at the notes to make sure there was no weird note put in overnight.” H3 would view D towards the end of the task as well, which would allow H3 to review the notes in the context of what H3 had learned about the patient during the task. H2 also had D set as the default screen, but, unlike for H3, the default did not appear to be useful to H2 for several cases. Instead, H2 seemed to use a two-phase approach. First, for most cases, H2 exhibited a consistent screen sequence (i.e., N-D-I-V-L) at the start of the task. Then, for some patients, H2 also visited additional screens, perhaps to see if anything had been missed. R1 had the highest percentage of cases with redundant screen viewing. This is not surprising because R1, a second-year resident physician, was relatively inexperienced with the EHR and the CRS practice. R1 could not easily synthesize and consolidate information from the EHR. R1 selectively used screens with representations that provided better cognitive support. For example, R1 viewed both the Summary (S) and Labs (L) screens consecutively and multiple times per task. R1 stated, “the way they do electrolytes [in the tabular form in the Labs screen], I can’t even sort through that in my mind very quickly so I go back to the skeleton here [on the Summary screen].” In this case, the most recent lab values are represented succinctly in fishbone format on the Summary screen, which was the preferred representation.
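Repeat viewing within a case is straightforward to detect from a screen sequence. The sketch below, with a hypothetical function name, returns every screen viewed more than once, using the N-D-I-L-D sequence mentioned above as the example.

```python
from collections import Counter

def repeated_screens(sequence):
    """Return the screens viewed more than once in a case, with view counts."""
    counts = Counter(sequence)
    return {screen: n for screen, n in counts.items() if n > 1}

repeats = repeated_screens(["N", "D", "I", "L", "D"])
```

Such a flag only identifies where repetition occurred; the reasons for it still have to be inferred from observation, as in the analysis above.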

Case Study: Micro-Analysis of Qualitative and Quantitative data

To explain variation in clinicians’ patterns, we drew on in-depth observation and qualitative analysis of clinicians’ think-aloud. Here, we present a detailed task analysis for one patient case observed in H1’s InfoGather workflow. H1’s screen transition pattern was not repeated for any other case (Pattern 113: N-S-L-S-L-V-I-D). A review of the patient’s chart conveyed the clinical complexity of the patient case in the reason for admission, length of stay, surgical procedures, number of medical services involved in care, and discharge requirements. The patient’s hospitalization was a readmission for a leak and infection. A leak is an abnormal break in the wall of an organ, such as the colon, that allows for an abnormal transfer of contents from the organ to another organ or the body cavity. Observation was conducted on the thirteenth day of the patient’s hospitalization, which was the discharge day. During the patient’s stay, the patient underwent a re-operation and CRS consulted three other services to assist in patient care—critical care, pain service and infectious disease. These consultations are indicative of patient complexity and increased communication needs because information was distributed across additional members of the patient’s care team.

To complete InfoGather, H1 reviewed patient information in the EHR and annotated a paper artifact (a paper printout of the electronic handoff document) with patient data, tasks and reminders. Table 3 gives H1’s think-aloud verbalizations, EHR screens viewed and running time for the one patient case. The verbalizations revealed the patient information that H1 gathered from each screen (screen captured by the Morae™ video recording). For example, as shown in Table 3, blood pressure is read on the Vital Signs screen (time 01:15), and oral intake volume is read on the Intake/Output screen (time 02:18). H1’s verbalizations also reveal data gathered from the Pain Service and Infectious Disease Service notes on the Documents/Images screen (D) in the patient’s chart (time 02:55 to 08:22).

Table 3.

Case study narrative. H1’s think-aloud, screens viewed and running time for one complex patient case.

| Time (mm:ss) | Screen Viewed | H1 Narrative |
|---|---|---|
| 00:00 | Navigation (N) | |
| 00:01 | Summary (S) | |
| 00:02 | Labs (L) | alright so she has… great. |
| 00:03 | Summary (S) | |
| 01:08 | Labs (L) | She’s a mess. |
| | | I'm thinking I'd like to hear everything going on [with this patient] because my electronic service list can only tell me so much. |
| 01:15 | Vital Signs (V) | It looks like she’s a little hypotensive so I go all the way back to the beginning, which is only 24 hours. |
| | | I'm trying to go back to see her admit blood pressure so that if I get called about her blood pressure today, at least I'll be familiar if she came with low blood pressure. Alright, I feel better. |
| 02:18 | Intake/Output (I) | And again, it’s oral intake. I ignore the intermittent infusions. I ignore tubal ligations. number 1 drain nothing. number 2 drain.. |
| 02:55 | Documents/Images (D) | Okay, this is good; I need to know this. This is the discharge planning note cause she will go home with IV antibiotics. So I need to make sure ____for her. So I write down kind of what I need. |
| | | IV meds, ____. ____ care… per agency protocol. Ordering IV antibiotics is very difficult, I mean outpatient, when I'm setting them up for outpatient. Because all of these things have to be there before they dismiss but we’re not supposed to write things ahead of time so it gets kind of hard. recommending lab work and dc PICC at end of therapy. |
| | | Okay. So then what I do, since IV antibiotics I have to find infectious disease [note]. |
| | | So Zosyn 3.375 q six. PO qd through the 25th… continuous infusion…13.5 |
| 05:58 | | …400 3d. Let’s see what pain service is wanting. |
| | | Because she is an involved patient, that’s why I'm looking at this stuff. …_____…_____….. Tylenol and Topamax… |
| | | So I'm looking at her Sinogram. It says [drain #1] should be flushed daily with 10cc of saline. So then I have to go into MICS |
| 08:22 | MICS: Home Screen | [I] click on [Surgeon] patient, [then] her name, [then] Inpatient Order entry to see if it was done. |
| 08:27 | MICS: Order List | [Order says to] Drain flush twice daily. Okay. |

Discussion

We characterized clinicians’ EHR-interactions for the pre-rounds information gathering task (InfoGather) by applying process-mining methods to EHR-generated event log files. We hypothesized that there would be a few screen patterns that could explain a majority of the cases because it is a relatively simple task, there are not many screens that have primary patient data, and clinicians may have preferred patterns of screen transitions, which they follow for most of their patients. Screen viewing patterns may be motivated by an intent to seek out new information (e.g., new lab results). An alternative hypothesis is that there is a large amount of variation in EHR-interactive patterns, which may be explained by the differences in patient problems and patient states. Patients with similar problems and profiles (e.g., age and comorbidities) may require the same information-gathering strategy for the task.

There were 519 variant screen sequence patterns describing the 1569 cases. No pattern described more than 10% of the sample. Fifteen patterns (3%) accounted for just over half of all cases. Because a majority of cases can be described by 3% of the screen patterns, EHR systems could perhaps be designed to better facilitate information gathering across screens. On the other hand, 418 patterns occurred for only 1 patient case, which suggests that there is much task variation across users or patient types. No pattern of three or more screens was followed by more than 3 of the 6 clinicians (Pattern 8, N-D, which employs 2 screens, was followed by 4 of the 6 participants). Observations helped to explain some of the screen pattern variation. Across the 6 participants, there were 3 different settings that determined the first screen displayed when a patient chart was opened: H1, R1, and R2 had Summary (S) set as default, H2 and H3 had Documents/Images (D) set as default, and H4 had Viewers/Reports (VwR) set as default. These different default settings contributed to the variation in screen patterns.
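The descriptive statistics above (number of variant patterns, share of the most frequent pattern, share covered by the top 15 patterns, and number of patterns seen only once) can be sketched as follows. This is a minimal illustration, not the tooling used in the study: the function name `summarize_patterns`, the input layout (one tuple of screen codes per patient case), and the toy data are all assumptions introduced here.

```python
from collections import Counter


def summarize_patterns(cases, top_k=15):
    """Summarize exact-match screen sequence patterns.

    cases: list of screen sequences, one per patient case,
           e.g. ("S", "L", "D"). The layout is a hypothetical one.
    """
    total = len(cases)
    if total == 0:
        return {"n_cases": 0, "n_patterns": 0, "max_share": 0.0,
                "top_k_share": 0.0, "n_singletons": 0}
    # Two cases share a pattern only if their sequences match exactly.
    counts = Counter(tuple(seq) for seq in cases)
    top = counts.most_common(top_k)
    return {
        "n_cases": total,
        "n_patterns": len(counts),
        # Share of cases following the single most frequent pattern.
        "max_share": top[0][1] / total,
        # Share of cases covered by the top_k most frequent patterns.
        "top_k_share": sum(n for _, n in top) / total,
        # Patterns that occurred for exactly one patient case.
        "n_singletons": sum(1 for n in counts.values() if n == 1),
    }


# Toy data for illustration only; not taken from the study's event logs.
toy_cases = [("S", "L"), ("S", "L"), ("D", "N"), ("S", "L", "D"), ("D", "N")]
toy_summary = summarize_patterns(toy_cases, top_k=1)
```

Applied to the full set of cases with `top_k=15`, `top_k_share` would correspond to the "just over half of all cases" figure and `n_singletons` to the count of patterns that occurred for only 1 patient case.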

We hypothesized that the clinicians with less expertise would have more variation in screen transitions. The 6-clinician participant pool limits our ability to address this issue, but it can seed hypotheses for further testing. We expected R1, the least experienced user of the EHR among study participants as well as the less expert clinician, to have the most pattern variation. We observed that 38% of R1’s cases exhibited a pattern that occurred only once, compared to a 22% average across the four hospitalists. More variation may be indicative of an incomplete mental model (e.g., understanding of where needed patient information is located or knowledge of potential shortcuts to access data). This is consistent with research showing that more experienced users develop robust mental models21. When a user follows the same pattern for many patients, it may be indicative of the user’s spatial mental model of the system, that is, of where information is distributed across sources (applications and their screens). It may also reflect the user’s information needs and preferred information sources. R2, in relation to R1, had more clinical expertise and was a more experienced user of the EHR. As expected, R2’s pattern variation was lower than R1’s (30% versus 38%). In relation to the hospitalists, we defined R2 as having equal or more clinical expertise but being a less experienced user of the EHR in the CRS department. As expected, R2’s pattern variation was also greater than the hospitalists’ (30% versus 22% average).
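The per-clinician variation measure discussed above (the share of a clinician's cases whose pattern occurred only once) could be computed along these lines. This sketch assumes that "occurred only once" is judged against the whole sample rather than within one clinician's cases; the function name `unique_pattern_share`, the `(clinician, sequence)` record layout, and the toy records are hypothetical.

```python
from collections import Counter, defaultdict


def unique_pattern_share(records):
    """Share of each clinician's cases whose pattern is a singleton.

    records: list of (clinician_id, screen_sequence) pairs, one per case.
    Assumption: a pattern is a singleton if it occurs exactly once
    across ALL clinicians' cases.
    """
    pattern_counts = Counter(tuple(seq) for _, seq in records)
    n_cases = defaultdict(int)
    n_unique = defaultdict(int)
    for clinician, seq in records:
        n_cases[clinician] += 1
        if pattern_counts[tuple(seq)] == 1:
            n_unique[clinician] += 1
    return {c: n_unique[c] / n_cases[c] for c in n_cases}


# Toy records for illustration only; not the study's data.
toy_records = [
    ("R1", ("S", "L")),
    ("R1", ("S", "D", "L")),   # occurs once in the sample
    ("H1", ("S", "L")),
    ("H1", ("S", "L")),
]
toy_shares = unique_pattern_share(toy_records)
```

With only 6 participants, differences between such shares are descriptive; the sketch does not address whether they are statistically meaningful.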

Event log file analysis revealed that residents viewed more and different screens, whereas the hospitalists viewed fewer unique screens during the task. This may be additional evidence that residents are less experienced users of the system and, consequently, not as certain where to find patient data. Alternatively, it could be evidence that residents and hospitalists differ in terms of their information needs (e.g., residents are monitoring different or additional patient care goals so they need different or additional patient data). Further evidence that hospitalists and residents differ in terms of information needs and task goals came from R2’s think-aloud, in which the resident appeared to do more decision-making on patients’ care plans than the hospitalists did.

We hypothesized that most clinicians would conduct the task similarly because of the routine nature of the task and because, though there may be some variation in care goals across patients, there were care goals common to all patients in the unit. Any number of reasons may explain variation in these cases, including each clinician’s idiosyncratic strategies and patient-case differences. A second-order analysis in which the sequence need not be an exact match (e.g., different starting point, but otherwise the same pattern) may reveal additional similarities not detected by the first-order analysis. For example, the number of variant patterns is in part due to the variation in chart default settings. Looking at similarities in smaller units of screen sequences may be more informative about shared processes and information needs across clinicians.
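One way such a second-order analysis might look is sketched below, under two assumptions that are not from the study: (a) the effect of the chart default setting can be approximated by dropping the first screen of each sequence, and (b) "smaller units" can be approximated by contiguous n-grams of screens. All function names and the toy sequences are hypothetical.

```python
from collections import Counter


def drop_first_screen(seq):
    """Ignore the starting screen, which may only reflect a default setting."""
    return tuple(seq[1:])


def ngrams(seq, n=2):
    """Contiguous sub-sequences of n screens; empty if seq is shorter than n."""
    return [tuple(seq[i:i + n]) for i in range(len(seq) - n + 1)]


def clinicians_per_ngram(cases_by_clinician, n=2):
    """For each n-gram, count how many clinicians used it at least once."""
    usage = Counter()
    for cases in cases_by_clinician.values():
        seen = set()
        for seq in cases:
            seen.update(ngrams(seq, n))
        usage.update(seen)  # each clinician contributes at most 1 per n-gram
    return usage


# Toy sequences for illustration only: three different default screens,
# followed by the same two-screen unit.
toy_by_clinician = {
    "H1": [("S", "N", "D")],
    "H2": [("D", "N", "D")],
    "H4": [("VwR", "N", "D")],
}
first_order = {seq for cases in toy_by_clinician.values() for seq in cases}
second_order = {drop_first_screen(seq) for seq in first_order}
toy_usage = clinicians_per_ngram(toy_by_clinician, n=2)
```

In the toy data the three exact-match patterns collapse to a single pattern once the starting screen is ignored, which is the kind of similarity an exact-match analysis would miss.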

We triangulated quantitative variables with patient chart review and qualitative data and found clinicians’ EHR-interactive behavior was associated with their routine processes, patient case complexity, variant screen sequence patterns, and EHR default settings. We presented a case study to help convey these findings. In particular, this case study exemplifies non-routine requirements of the InfoGather task. Our data suggest the selected patient case is complex because the quantitative descriptors (reported in 13) are more than twice H1’s average interactivity across all nine observed patients. Data presented in this study further support this assertion: 1) H1 described the patient as “an involved patient”. 2) To complete InfoGather, H1 followed a screen transition pattern variant that was unique in our sample. The pattern involved 7 transitions between main-tab EHR screens and 12 transitions including the EHR’s subtabs and other clinical applications. 3) Among the 7 EHR screens, H1 visited two screens twice (S and L). This may suggest that redundant screen viewing for a patient case is also an indicator of patient case complexity. On the other hand, redundant screen viewing may suggest users’ inefficient task performance, an indicator of system usability or of task complexity (e.g., conflicting data gathered from another screen or from the paper handoff document may cause the clinician to return to a previously viewed screen). As an outlier case, it surfaces some complexities of completing the task and negotiating the information sources.

Process mining enables researchers to variably focus data analysis on clinicians, patients, time, tasks and interactions, thereby providing insights into different dimensions of workflow. The level of analysis is limited only by the granularity of the event logs. A limitation of our data collection is that we only looked at event logs from one clinical information system and in one setting. Future work will combine event logs generated from additional clinical information systems. As we examine how automated event log files are useful to study EHR workflow, we can also inform what user behavior is captured in systems’ event log files. That is, we can determine if there are particular user-computer activities that event logs could capture and that would be informative of users’ cognitive work.

We conducted qualitative analysis on the subset of observed cases to investigate why some screens were viewed two or more times (redundant screen viewing) for one patient case. Three clinicians repeated screen viewing for a third or more of their patient cases. Reasons included clinicians’ lack of experience, lack of cognitive support provided by the system to synthesize and consolidate patient findings, system default settings, and clinicians’ deliberate routine work processes. It was assumed that default settings improved workflow so that clinicians would not have to visit a screen twice. It could be that the default setting serves them better on another task. Future design could support task-based navigation through the system, which could reduce cognitive workload.
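Redundant screen viewing, as defined above (a screen viewed two or more times for one patient case), can be flagged directly from the screen sequences. The following is a minimal sketch; the function names and toy sequences are hypothetical, and it assumes each element of a sequence is one distinct screen visit (consecutive duplicate log events, if any, would need to be collapsed first).

```python
from collections import Counter


def revisited_screens(seq):
    """Return {screen: visit_count} for screens viewed two or more times."""
    counts = Counter(seq)
    return {screen: n for screen, n in counts.items() if n >= 2}


def redundant_share(cases):
    """Share of cases in which at least one screen was viewed more than once."""
    if not cases:
        return 0.0
    flagged = sum(1 for seq in cases if revisited_screens(seq))
    return flagged / len(cases)


# Toy sequences for illustration only; not the study's observed cases.
toy_case = ("S", "L", "D", "S", "L")
toy_revisits = revisited_screens(toy_case)
toy_share = redundant_share([("S", "L"), ("S", "L", "S"), ("D",)])
```

Such a flag only identifies where repeated viewing occurred; as the paragraph above notes, explaining why it occurred required observation and qualitative analysis.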

Future research will employ regression analysis to determine if there are associations between measures of patient complexity (e.g., procedure, primary diagnosis, number of medications at admission, days in hospital, number of services involved in care, etc.) and measures of task complexity (e.g., number of screen transitions, task duration, etc.). The case study supports further investigation of this. If there is an association between patient case complexity and task complexity, it could be used to quantify increased workload on clinicians from complex patients. It could also provide evidence toward the development of advanced clinical decision support systems that facilitate team awareness and collaboration in the care of complex patients.
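As an indication of what the simplest form of such an analysis might look like, the sketch below fits an ordinary least-squares line relating one patient complexity measure to one task complexity measure and reports the Pearson correlation. The study does not specify a regression model, so the single-predictor form, the function name `fit_line`, and the toy values are all assumptions; a real analysis would likely involve multiple predictors and account for repeated measures per clinician.

```python
import math


def fit_line(x, y):
    """Ordinary least-squares fit of y = intercept + slope * x.

    Returns (slope, intercept, pearson_r).
    """
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    if sxx == 0:
        raise ValueError("x has no variance; slope is undefined")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Correlation is reported as 0.0 when y is constant.
    r = sxy / math.sqrt(sxx * syy) if syy > 0 else 0.0
    return slope, intercept, r


# Hypothetical toy values, NOT study data:
# x = number of services involved in care, y = number of screen transitions.
toy_services = [1, 2, 3, 4]
toy_transitions = [3, 5, 7, 9]
toy_slope, toy_intercept, toy_r = fit_line(toy_services, toy_transitions)
```

The toy values were chosen to lie exactly on a line so that the arithmetic is easy to check by hand; real event log data would not behave this way.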

Cognitive studies grounded in the DCog framework examine clinical work in the context of actual practice and can identify issues in human and system performance. A contribution of our approach to cognitive research is that we leverage large data sets of system-generated event log files to investigate users’ behavior in conjunction with observational methods to explain variation in the empirical findings. In this study, we demonstrated the value of integrating quantitative data analysis with qualitative data to examine EHR workflow. This study indicates that process mining techniques can be used to evaluate variation in task behavior across many clinicians, which can potentially be used to direct resource allocation for observation or training when patterns of interaction seem aberrant or inconsistent with clinical pathways. This study presents a part of a larger methodological framework that we are developing for the study of clinical and EHR workflow10. In the larger research project, we are studying workflow from multiple perspectives (e.g., tasks, clinicians, patients, tool). As we branch beyond analysis of a single task, we are exploring how to use system log files to examine care team coordination activities, particularly to study patient-centered clinical workflow. Variation in team processes surfaced through process mining can be used to focus observation efforts.

Conclusion

The presented study approach addresses the need for an integrated, in-depth approach that facilitates broad investigation of workflow across many settings, clinicians and patient cases, while also facilitating detailed analysis. We used system-generated event log files to characterize clinicians’ EHR-interaction patterns for a routine computer-based task, along with observation to explain variation in the patterns. As demonstrated in this study, computational ethnography can be integrated with observation to balance the advantages and limitations of individual data collection methods and to enable collection of a broad and rich data set for studying clinical work.

Acknowledgements

We would like to thank the Mayo Clinic Office of Information and Knowledge Management (OIKM) for funding this research initiative and the Research Fellowship for Stephanie Furniss’s doctoral work. The work was partially supported by a Mayo Clinic Professional Service Award to David Kaufman. A special thanks to the clinicians in the Colon & Rectal Surgery Division who graciously volunteered to participate in this study. We are grateful for the efforts of Robert Sunday and Katherine Wright, who assisted in data collection.

References

- 1. Blumenthal D. Stimulating the Adoption of Health Information Technology. N Engl J Med. 2009;360(15):1477–1479. doi: 10.1056/NEJMp0901592.

- 2. Ash JS, Berg M, Coiera E. Some unintended consequences of information technology in health care: the nature of patient care information system-related errors. J Am Med Inform Assoc. 2004;11(2):104–112. doi: 10.1197/jamia.M1471.

- 3. Unertl KM, Weinger MB, Johnson KB, Lorenzi NM. Describing and Modeling Workflow and Information Flow in Chronic Disease Care. J Am Med Inform Assoc. 2009;16(6):826–836. doi: 10.1197/jamia.M3000.

- 4. Koppel R, Metlay JP, Cohen A, et al. Role of Computerized Physician Order Entry Systems in Facilitating Medication Errors. JAMA. 2005;293(10):1197–1203. doi: 10.1001/jama.293.10.1197.

- 5. Zheng K, Hanauer DA, Weibel N, Agha Z. Computational Ethnography: Automated and Unobtrusive Means for Collecting Data in situ for Human–Computer Interaction Evaluation Studies. In: Patel VL, Kannampallil T, Kaufman DR, editors. Cognitive Informatics in Health and Biomedicine: Human Computer Interaction. New York: Springer; 2015. pp. 111–140.

- 6. Holden RJ, Carayon P, Gurses AP, et al. SEIPS 2.0: a human factors framework for studying and improving the work of healthcare professionals and patients. Ergonomics. 2013;56(11):1669–1686. doi: 10.1080/00140139.2013.838643.

- 7. Horsky J, Kaufman DR, Oppenheim MI, Patel VL. A framework for analyzing the cognitive complexity of computer-assisted clinical ordering. J Biomed Inform. 2003;36(1-2):4–22. doi: 10.1016/s1532-0464(03)00062-5.

- 8. Kaufman DR, Kannampallil TG, Patel VL. Cognition and Human Computer Interaction in Health and Biomedicine. In: Cognitive Informatics for Biomedicine. Springer; 2015. pp. 9–34.

- 9. Hutchins E. Cognition in the Wild. Cambridge, Mass: MIT Press; 1995.

- 10. Furniss SK, Mirkovic J, Larson DW, Burton MM, Kaufman DR. A micro-analytic framework for characterizing workflow in the pre-rounds data gathering process. BMI technical report to be submitted to the Journal of Biomedical Informatics. 2016.

- 11. Norman DA. Cognitive engineering. In: User Centered System Design: New Perspectives on Human-Computer Interaction. 1986. pp. 31–61.

- 12. Kushniruk AW, Kaufman DR, Patel VL, Levesque Y, Lottin P. Assessment of a computerized patient record system: a cognitive approach to evaluating medical technology. MD Comput. 1996;13(5):406–415.

- 13. Kaufman DR, Furniss SK, Grando MA, Larson DW, Burton MM. A Sequential Data Analysis Approach to Electronic Health Record Workflow. Context Sensitive Health Informatics (CSHI) conference; 2015; p. 196.

- 14. Rogers Y. HCI theory: classical, modern, and contemporary. Synthesis Lectures on Human-Centered Informatics. 2012;5(2):1–129.

- 15. Zheng K, Padman R, Johnson MP, Diamond HS. An Interface-driven Analysis of User Interactions with an Electronic Health Records System. J Am Med Inform Assoc. 2009;16(2):228–237. doi: 10.1197/jamia.M2852.

- 16. Kannampallil TG, Franklin A, Mishra R, Almoosa KF, Cohen T, Patel VL. Understanding the nature of information seeking behavior in critical care: implications for the design of health information technology. Artif Intell Med. 2013;57(1):21–29. doi: 10.1016/j.artmed.2012.10.002.

- 17. Burton MM. Best Practice (Methods and Tools) for Clinical Workflow Analysis. HIMSS Conference & Exhibition; 2013.

- 18. Patel VL, Kannampallil TG. Cognitive informatics in biomedicine and healthcare. J Biomed Inform. 2015;53:3–14. doi: 10.1016/j.jbi.2014.12.007.

- 19. van der Aalst WMP, Reijers HA, Weijters AJ, et al. Business process mining: An industrial application. Inf Syst. 2007;32(5):713–732.

- 20. Grando MA, Schonenberg M, van der Aalst WMP. Semantic Process Mining for the Verification of Medical Recommendations. HEALTHINF; 2011. pp. 5–16.

- 21. Kieras DE, Bovair S. The role of a mental model in learning to operate a device. Cognitive Science. 1984;8(3):255–273.