Abstract

To determine how the Rapid Assessment Process (RAP) can be adapted to evaluate the readiness of primary care clinics for acceptance and use of computerized clinical decision support (CDS) related to clinical management of working patients, we used a unique blend of ethnographic methods for gathering data. First, knowledge resources, which were prototypes of CDS content areas (diabetes, lower back pain, and asthma) containing evidence-based information, decision logic, scenarios and examples of use, were developed by subject matter experts. A team of RAP researchers then visited five clinic settings to identify barriers and facilitators to implementing CDS about the health of workers in general and the knowledge resources specifically. Methods included observations, semi-structured qualitative interviews and graphic elicitation interviews about the knowledge resources. We used both template and grounded hermeneutic approaches to data analysis. Preliminary results indicate that the methods succeeded in generating specific actionable recommendations for CDS design.

Introduction

The majority of adults in the U.S. work, and on average they spend more than half their waking hours at work.1 Therefore, primary care providers treating adults encounter many situations where the patient’s work environment affects their health and/or the management of their health conditions. And although primary care providers most often are the first to see patients with medical issues such as asthma symptoms that may be caused by workplace exposures, they do not routinely ask patients about their work2–6. Occupational health physicians have developed evidence-based guidelines for helping to manage many such patients, but primary care providers are rarely aware of their existence7. Though computerized clinical decision support (CDS) holds potential for increasing awareness and providing guidance in the care of working patients, it must be developed and implemented to fit the context and workflow of those who would benefit from having the information8.

This qualitative evaluation project was part of a larger project funded by the National Institute for Occupational Safety and Health (NIOSH) of the Centers for Disease Control and Prevention. The NIOSH project is designing, developing, and pilot testing clinical decision support (CDS) for the health of working patients in primary care outpatient settings. The first step in this project was the development of three knowledge resources (KRs) containing evidence-based information, decision logic, scenarios and examples of use. The KRs were prepared by three subject matter expert (SME) groups for three topics that are related to the health of patients who work and that were considered especially pertinent to a primary care practice. The SME groups were guided through the guideline and KR development process by an informatician with expertise in these procedures (Dr. Richard N. Shiffman). The three KRs focused on dealing with work environment factors that impact the management of a chronic disease (diabetes), guidance for return-to-work (RTW) after lower back pain diagnosis not related to work, and diagnosis and management of work-related/work-exacerbated asthma. The goal of the qualitative study was to identify the barriers and facilitators related to CDS for the clinical management of working patients in a variety of primary care settings, including assessment of the technical and organizational feasibility of implementing the CDS represented by each KR. Having used the Rapid Assessment Process (RAP) in the past, the research team members considered it most appropriate for this study.

RAP is a way of gathering, analyzing, and interpreting ethnographic data that is both effective and efficient. The process is expedited through the consistent use of structured tools, which are developed for each individual study and consolidated into a field manual. RAP is conducted by teams that include those inside the organization as well as outside researchers. RAP also provides feedback to internal stakeholders. In summary, RAP “depends heavily on triangulation of data from different sources. A field manual is developed prior to the study and generally includes 1) site inventory profiles, 2) observation guides, 3) interview question guides, and 4) rapid survey instruments.”9, p. 80 As with traditional ethnography, methodological approaches must be tailored specifically to the needs of the project10–12.

The RAP methodology has mainly been applied to assessing clinical systems that have already been implemented and within which CDS is embedded11–12, but for this project we aimed to adapt it for assessing both the clinical context and the KR prototypes prior to full development and implementation. Because we needed direct comments about each of the three KRs, we explored using graphic elicitation interviewing techniques in addition to our classical semi-structured interview methods. Graphic elicitation interviewing involves asking the subject to look at a graphical artifact to stimulate discussion, providing “contemplative verbal responses.”13, p. 53 Umoquit et al.13 have compared the use of graphic elicitation, which involves use of a visual aid available to both the interviewer and interviewee, with participatory diagramming, in which the interviewee is tasked with developing a graphic. They found that graphic elicitation was best for focusing on exactly what the researchers were interested in and for producing thoughtful verbal comments on researcher-identified issues. Participatory diagramming produced fewer detailed comments about those issues, but it encouraged creativity and new ideas. For our purposes, to gather verbal commentaries about specific aspects of the KRs, we selected graphic elicitation as our method of choice along with semi-structured interviewing.

For the study described here, our research question was: How can RAP be further adapted for assessment of the readiness of the organizational and technological context for CDS and, in addition, for evaluation of the CDS content?

Methods

Selection of methodological approaches

Because of the timeline for the larger project and the need to request human subjects approval from NIOSH, OHSU and five different organizations serving as sites, we had only five months within which to conduct site visits, analyze data, and report results. We fortunately had a large enough team of trained researchers so that some visits by sub-teams could take place at the same time. Our most difficult decisions concerned how to make the best use of limited interview time so that we both learned about context and received detailed input about the KRs. We opted to conduct two-part interviews using two different interviewing strategies.

For gathering input on the KRs, we wanted interviewees to evaluate a CDS concept with which they may not have been familiar, especially if they were non-clinicians. Further, we needed them to react in an abstract way because the CDS was not yet built. We decided we needed a variety of tools to help us describe the CDS ideas to our subjects. We designed two artifacts in addition to the extremely detailed documents developed by each of the three SME groups: a brief easily understood one-paragraph description of the CDS and a flowchart showing what the CDS would do. We planned to get general assessments of the usefulness of the CDS from non-clinical and non-technical interviewees using these less detailed descriptions. For others, we used different artifacts depending on the expertise of the interviewee.

We also decided that interviews alone would not be sufficient. To learn about the workflow within which the proposed CDS might best fit, we opted to conduct observations. This form of methodological triangulation serves to verify whether or not workflow described during interviews is accurate. Researchers shadowed clinic staff and clinicians and observed work activities throughout each clinic while writing detailed field notes. These observations also provided an opportunity to see how patient work information was recorded in the EHR.

Development of a Field Manual

Our multidisciplinary team of nine occupational health experts and informaticians from four different U.S. locations convened in Portland, OR for three days of training and planning. The training had RAP as its focus since some team members were familiar with ethnographic techniques but not with RAP in particular. Decisions were made together as we developed the field manual for this study. For our field manual, we put together a “site inventory profile,” which was a checklist of kinds of CDS and other related factors. We gathered some information prior to our visit to help us develop our interview questions and lists of foci for observations and we also gathered some site inventory profile information on site. We conducted phone discussions with a contact person at each site to learn more about the site and to mutually identify individuals to interview and observe. As is our practice, our field manual included a master interview guide with all of the questions we wanted to have answered. For each individual, we selected the most appropriate questions depending on his or her role. As with other field manuals we have prepared, the field manual also included a fieldwork observation guide for use in writing field notes. It briefly noted foci for fieldwork at that particular site. Finally, we included the three different artifacts for each of the three KRs, which would help us with the graphic elicitation interviews.

Human Subjects Protection

This study was approved by the Institutional Review Boards (IRBs) at NIOSH, OHSU, and two of our study sites. IRB review and/or official determinations (if a site did not have an IRB, it had other mechanisms for reviewing our protocol) were also obtained from the other three sites.

Site selection

We deliberately selected geographically diverse sites and both large and small organizations so that we could observe whether these differences might influence the ability to customize, implement, and gain user satisfaction with the proposed CDS, and so that we could get a sense of the capability of sites and the applicability of our findings across a variety of clinical settings. We also purposely selected sites that varied in organizational structure and had different electronic health record systems (EHRs).

We conducted this qualitative assessment across several clinics within two Federally Qualified Health Centers (FQHCs) using the NextGen EHR, two organizations of very different sizes that use Epic, and one very large, complex organization that uses Allscripts. Sites were located in California, Mississippi, New York, Massachusetts, and Ohio.

Subject selection

With the assistance of an inside contact person at each site, we selected individuals representing different roles on health care teams, including physicians, nurses, pharmacists, medical assistants, social workers, care coordinators, educators, clerical and administrative staff, and information technology and CDS specialists.

Data collection

The interviews explored present CDS usage in general, work information in commercial EHRs, capability for implementing new CDS, information needs related to managing care of working patients, workflow, and assessment of the three KRs.

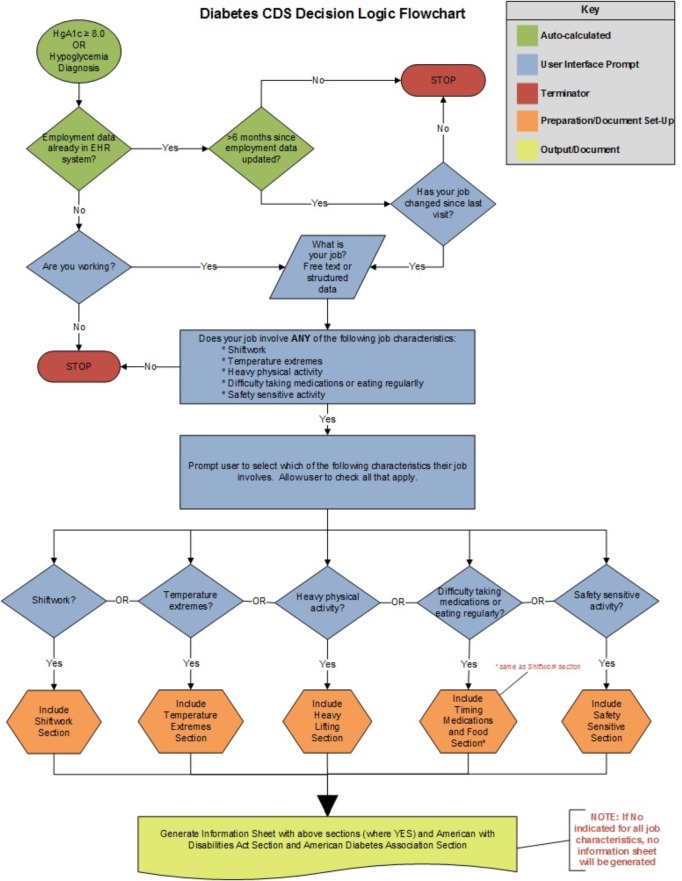

We developed tailored interview guides for individuals depending on their roles in the organization. During the first half of the interview, we asked general questions about CDS, information to help manage care of working patients (such as availability of the patient’s work information in the EHR), technical issues, and workflow (Table 1). A sample of some questions asked of information technology-related staff is given in Table 2. Figure 1 shows an example of one of the three flowcharts used in the second part of the interview as a graphic elicitation tool. For the second part of each interview, we asked specific questions about the KRs (see Table 3 for an example), assisted by the artifacts (text description, flowchart, or full KR) selected as most appropriate. Subjects representing all roles were first shown the paragraph summarizing each of the proposed CDS modules. Subsequently, depending on the role of the interviewee and his or her level of understanding and use of CDS, we used the graphic elicitation technique. We often showed the flowchart in addition to the paragraph and on rare occasions we showed the full KRs.

Table 1.

Topics in interview guides

| Interviewee group | Part 1 question areas | Part 2 question areas |
| --- | --- | --- |
| 1. Clinical interviewees | Background and role of interviewee; Your work patterns; About CDS; About CDS for clinical management of patients who work | The three knowledge resources: how useful, who should be involved in asking questions and educating, and where each fits into the workflow |
| 2. Informatics and IT | Background and role of interviewee; Clinician work patterns and clinic workflows; About your EHR; About training, support, and customization; About CDS; About CDS for clinical management of patients who work | The three knowledge resources: how useful, who should be involved in asking questions and educating, and where each fits into the workflow |
| 3. Management and staff | Background and role of interviewee; Your work patterns; About CDS; About CDS for clinical management of patients who work | The three knowledge resources: how useful, who should be involved in asking questions and educating, and where each fits into the workflow |

Table 2.

Sample of questions asked of technical experts

| Topic | Question or prompt |
| --- | --- |
| 4. About work patterns | Could you briefly describe the work pattern or different work patterns of clinicians in your clinic: what are their days like? |
| | What do they do before, during, and after patient visits? |
| 5. About your EHR system | I see here that your organization uses X EHR system. |
| | Can you give an overview of the configuration of your EHR system? Are there separate interfaces/modules for the registration vs. billing vs. patient chart? |
| | (if there are separate modules) Do you know how/if data are shared among the different modules? |
| | How are data entered into your EHR system? (probe -- are any data entered directly by patients via a tablet, kiosk, or patient portal/PHR system?) |
| | Could you describe how the process works for requesting, testing, and deploying modifications to your EHR system? |
| 6. About clinical decision support | We define decision support to include alerts, reminders, order sets, reference sources, data displays like flow sheets, and documentation templates. |
| | What kinds of CDS do you have here and what seems to have been most helpful? |
| | What employment or occupational information is currently captured in your EHR system (such as employer name, occupation/job title, etc.)? Is it in structured form? Where is it in the system? |

Figure 1.

Flowchart for use during graphic elicitation interview: diabetes example

Table 3.

Questions for graphic elicitation interviews: diabetes example

| Part 2 of interviews: CDS Knowledge Resources |

| Explanation: An outside panel has come up with three ideas for CDS which might help primary care providers meet patients’ needs more effectively. The three are: using CDS to help identify and manage work-related asthma, analyzing the impact of working conditions on refractory diabetes, and providing structured return-to-work letters for patients with low back pain. We would like to discuss each of them with you. |

| [Hand copy of most appropriate artifact to person] |

| Interviewee reads: |

| Refractory diabetes: A diabetic patient’s working conditions, such as working more or different hours or working in hot environments, can contribute to hyper- or hypoglycemic episodes. Also, for some “safety sensitive” jobs, a worker with impaired cognition due to low blood sugar could be at risk for injury to himself or to others. The CDS would prompt providers to ask specific work-related questions and would generate educational information for the provider and patient based on the responses. |

| Ask: |

| How useful would this information be to those in your clinic? |

| Which clinic personnel are likely to be involved in either gathering patient data or acting on decisions/information like this? |

| Who would be asking the required questions? |

| Who would/should be educating the patient? |

| At what point in the clinic workflow would/should this happen? |

We also adjusted our questioning during the interview so that we could probe intriguing topics. Two interviewers attended each interview, with a few exceptions due to scheduling. We tried to include one occupational health specialist and one informatics specialist on each team so that each team had the expertise to probe either clinical or technical answers given by interviewees. One interviewer was the official interviewer and the other remained silent until appropriate times when he or she was allowed to ask questions during the first part of the interview. In this way, the process remained true to recommended semi-structured interview techniques (e.g., it did not become a conversation), but the assistant interviewers had an opportunity to ask follow-up questions in their particular areas of expertise.10 Another important task for the assistant interviewer was to write field notes during the interview. The assistant interviewers noted nonverbal interactions and areas to further explore as well as what the person was saying. For the second part of the interview, when we used the graphic elicitation technique to discuss the flowcharts and/or KRs, both of the interviewers played a more active role since more of a dialogue and question-and-answer interchange was necessary. All interviews were recorded and transcribed.

During our observations, we observed all activities throughout the clinic, from patient registration through patients leaving the clinic, so that we could trace workflow in a general sense. We shadowed individual providers and staff members while they were interacting with patients and also when they were performing other duties. We also conducted informal interviews with those we were observing when there were opportunities.

At the end of each day, the team met to debrief about findings for that day, to share insights from each researcher’s unique perspective, and to plan for the next day. When we had two teams in the field in different geographic locations, we met via teleconference.

Data analysis

Preliminary data analyses took place between interviews. Using notes taken by the assistant interviewer, the researchers briefly reviewed what had been learned so that new questions for upcoming interviews could be developed as needed based on prior interviews. After completion of the site visits, interview notes and transcripts were entered into NVivo (QSR International, Doncaster, Victoria, Australia), a program that facilitates the organization and retrieval of qualitative data for analysis.

We used both grounded hermeneutic and template approaches to data analysis14-15. For in-depth analysis of all data, we used the former, which begins with the words of our interviewees. Our core research team broke into dyads and each dyad was assigned a set number of transcripts. Individuals read the assigned transcripts, noting all recurring or potentially important expressions and key phrases, and the dyads then met to compare and agree on their findings. The researchers next met so each dyad could discuss results with the larger team, which reached consensus on meaning and terminology for themes. One dyad also analyzed and categorized all comments related to the KRs using a template of predetermined terms related to the questions we asked.

The interpretive process was both iterative and flexible. Our team met repeatedly to interpret the results. We wrote a short report of the findings for each site we studied for two reasons: first, we thought the organizations would find the reports useful, and second, each report was a form of “member checking,”14 a qualitative technique to further establish trustworthiness of results by asking insiders for feedback.

Results

We conducted five site visits over an 8-week period between July 21st and September 11th, 2015. Team members included two occupational health physicians, three NIOSH staff members (a scientist, a nurse, and an informatician), four informatics faculty members (a social scientist, a nurse practitioner, a physician, and a laboratory specialist), and a project manager. We interviewed 41 clinicians not deeply involved in informatics, 23 individuals who were informaticians or information technology specialists, 15 managers or staff members, and four quality improvement specialists, for a total of 83 interviews. We spent a total of 30 hours observing in ten clinics within these five organizations. We believe we reached data saturation in that we reached a point where we were hearing the same answers repeatedly and not learning anything new.

Lessons Learned About Methods

Timeline: This was planned as a one-year project, but half of that time was needed to secure the necessary IRB approvals. Although we were able to gather a great deal of information in eight weeks, and to analyze it to produce the needed reports within a three-month period after that, this time frame was too short to allow for truly in-depth analysis within the contract period. Analysis was still ongoing three months later. Another problem with the short timeline was that, even with nine researchers available to conduct site visits, some had to be done simultaneously and therefore the lead investigator (JA) was not able to attend all visits. In the past, we have found that having the lead investigator attend all visits provided consistency in data gathering (J. Ash, personal observation).

Two-part interview format: The two-part interview strategy worked well most of the time. By progressing from questions about CDS in general to specifics about the KRs, we were able to set the stage so that interviewees knew the context of our questions and were more likely to be comfortable talking with us. We ran out of time during a few of the interviews, which were normally scheduled for a half hour. These occurrences could have indicated that the interviewees were comfortable with talking, but were likely due primarily to trying to cover so much during each interview.

Graphic elicitation interviews: These yielded immensely useful information about the KRs. We always started the second part of the interview by handing the interviewee one paragraph about one KR at a time (or paragraphs about all three KRs if the interviewee preferred seeing them all at once). For the interviewees with more expertise in clinical and/or technical areas, the paragraph started a dialogue, the interviewee asked questions, and the flowchart would then be discussed. This situation made the second part of the interviews, collecting information about the KRs, especially challenging because the interviewers not only had to adapt each question to the skill level of the interviewee, but also bring out the graphical representation of the CDS at the right time. Usually semi-structured ethnographic interviews using RAP are not dialogues: the interviewee answers questions posed by the interviewer, who tries to do more listening than talking. However, we found that our subjects could not answer our questions without the ability to ask interviewers about details of the CDS flow. The graphic elicitation interviews were used to provide more details simply and quickly, in order to help the interviewees answer our questions and move back to more of an interview (vs. a dialogue). In this manner, we saved considerable time by only needing to bring out the full KR documentation a few times when interviewees had very specific questions.

Assistant interviewer: The role of the assistant interviewer was more important in this project than in others we have conducted because the site visits followed one another in such rapid succession that transcripts from prior visits were not available to help us prepare for the next visit. In fact, because of the tight timeline, it was very important that the interview notes were detailed enough for us to write preliminary reports for each SME group. The reports made recommendations about each KR based on these field notes without having all of the transcripts completed. Subsequent examination of the transcripts provided more nuanced information, but did not change the big picture of the information collected.

Subject selection: By observing and interviewing staff in all roles, including clerical staff, we were able to assess all activities in the clinics and gain a broad picture of clinic workflows and the way patient work information data are currently collected in the EHRs. Some of these interviewees were unable to provide much feedback about the KRs, but they all were able to describe their daily work and the way they used the EHR in detail.

Observations: The notes from the observations provided critical insights into the use of the EHR and CDS. They allowed us to obtain more detailed information about the workflow, availability of multiple roles within the clinic, and differences in how these roles are used across the clinics. They also allowed us to modify or verify our interpretations of what was said in the interviews about workflow and roles. Staff members often fail to describe their work activities accurately and completely, most likely because they take many of them for granted and are trying to be succinct during interviews. Observations serve to fill in the gaps and proved to be another valuable technique for triangulating data.

Lessons Learned About CDS for Clinical Management of Working Patients

Preliminary analysis of the interview and observation data resulted in themes that reflect facilitators or barriers to development and implementation of the proposed CDS and are indicative of the depth of knowledge we were able to obtain using RAP. Full results will be reported when analysis is complete, but analysis of the transcripts and field notes up to this point indicates that there are many facilitators related to development and acceptance of decision support tools as outlined in the KRs developed by the SME groups. The most important and somewhat unexpected finding was that it is technically feasible to develop any of the three proposed CDS tools. There are also a number of barriers to implementation and use. Not surprisingly, interviewees most consistently noted that the proposed CDS might add to their time burdens.

Responses about the Three Knowledge Resources

From the graphic elicitation interviews we learned that in general the three proposed CDS modules included some terminology, especially when offering recommendations, that needed explanation. For example, the term “safety sensitive activity” was not immediately understood and could be changed to “activity with potential safety risks.” We learned that informatics innovations that facilitate patients entering data about their work may have potential to relieve some clinic time burdens, that culturally sensitive patient education materials are needed, and that providers do not necessarily need to be the targets of the CDS. We were able to gain feedback on each of the KRs that was very detailed, especially when the interviewee had both clinical and informatics knowledge. As an example, one interviewee with an informatics background suggested ways to use a service-oriented architecture approach for one of the KRs. Although we gained useful feedback from medical assistants and other non-provider staff about workflow, we found that they were less interested in either the flowcharts or KRs.

Discussion

We believe that use of the Rapid Assessment Process is effective for gaining a good deal of information in a short amount of time. For this project, because of circumstances beyond our control, we were forced to work even more rapidly than usual. As others have found when conducting RAP evaluations, flexibility and creativity are needed so that the mix of methods can best fit the situation10. Workarounds such as the use of detailed assistant interviewer notes and splitting up the team to conduct simultaneous site visits helped us to stay on schedule, but they are not optimal. On the other hand, we succeeded in gathering a great deal of information about the facilitators and barriers to developing and implementing CDS for the health of working patients in primary care settings based on a combination of interviews and observations.

Enhancing RAP by using graphic elicitation interviews such as those described by Umoquit et al.13 to assess CDS ideas allowed us to gather rich and specific feedback about the KRs. Like Crilly et al.16, we found that the artifacts we presented to interviewees served as a reference point and were more effective with many interviewees than simply asking a series of questions. Additionally, like Larkin and Simon, we found the artifacts most useful for interviewees with the interest and ability to interpret them quickly17. The modifications to RAP used for this study might be adopted for other studies of CDS with different content and contexts, though we would recommend a longer timeline.

Recommendations and Conclusion

The use of RAP to evaluate the content and concept of CDS after it has been outlined in a form that is understandable to users but before it has been built can offer a useful description of the perceived value and context within which the CDS can work best. By adding graphic elicitation interviews to the RAP process, researchers can gather detailed feedback for CDS developers and implementers.

Acknowledgements

This project was supported by CDC/NIOSH Contract 200-2015-61837 as part of NORA project #927ZLDN. We would like to thank the following for service on the research team: Sherry Baron, MD, MPH, Genevieve Barkocy Luensman, PhD, Margaret Filios, RN, MSc, Nedra Garrett, MS, Richard N. Shiffman, MD, MCIS, and James McCormack, PhD. We appreciate the help of the following at our sites: Rose H. Goldman, MD, MPH, Laura Brightman, MD, Stacey Curry, MPH, Larry J. Knight, MSA, MSHRM, Deborah Lerner, MD, Herb K. Schultz, Michael Rabovsky, MD, Joseph Conigliaro, MD, MPH, and Nicole Moodhe Donoghue. Finally, we would like to acknowledge and thank the clinics and the clinicians and staff at each site for their participation and support of this work.

References

- 1. U.S. Department of Labor, Bureau of Labor Statistics. Economic News Release, June 24, 2016. www.bls.gov/news.release/atus.nr0.htm, accessed July 1, 2016.

- 2. Goldman RH, Peters JM. The occupational and environmental health history. JAMA. 1981;246(24):2831–2836.

- 3. Shofer S, Haus BM, Kuschner WG. Quality of occupational history assessments in working age adults with newly diagnosed asthma. Chest. 2006;130(2):455–462. doi: 10.1378/chest.130.2.455.

- 4. Politi BJ, Arena VC, Schwerha J, Sussman N. Occupational medical history taking: how are today’s physicians doing? A cross-sectional investigation of the frequency of occupational history taking by physicians in a major U.S. teaching center. J Occup Environ Med. 2004;46(6):550–555. doi: 10.1097/01.jom.0000128153.79025.e4.

- 5. Mazurek JM, Storey E. Physician-patient communication regarding asthma and work. Am J Prev Med. 2012;43(1):72–75. doi: 10.1016/j.amepre.2012.03.021.

- 6. Mazurek JM, White GE, Moorman JE, Storey E. Patient-physician communication about work-related asthma: what we do and do not know. Ann Allergy Asthma Immunol. 2015;11(4):97–102. doi: 10.1016/j.anai.2014.10.022.

- 7. Newman LS. Occupational illness. NEJM. 1995;333(17):1128–1134. doi: 10.1056/NEJM199510263331707.

- 8. Lomotan EA, Hoeksema LJ, Edmonds DE, Ramirez-Garnica G, Shiffman RN, Horwitz LI. Evaluating the use of a computerized clinical decision support system for asthma by pediatric pulmonologists. Int J Med Inform. 2012;81(3):157–165. doi: 10.1016/j.ijmedinf.2011.11.004.

- 9. Ash JS, Sittig DF, McMullen CK, McCormack J, Wright A, Bunce A, Wasserman J, Mohan V, Cohen DJ, Shapiro M, Middleton B. Studying the vendor perspective on clinical decision support. Proceedings AMIA 2011; 2011. pp. 80–7.

- 10. Beebe J. Rapid Assessment Process: An Introduction. Walnut Creek, CA: AltaMira Press; 2001.

- 11. McMullen CK, Ash JS, Sittig DF, Bunce A, Guappone K, Dykstra R, Carpenter J, Richardson J, Wright A. Rapid assessment of clinical information systems in the healthcare setting: An efficient method for time-pressed evaluation. Meth Inform Med; 2011. pp. 299–307.

- 12. Ash JS, Sittig DF, McMullen CK, Guappone K, Dykstra R, Carpenter J. A rapid assessment process for clinical informatics interventions. Proceedings AMIA; 2008 Nov. pp. 26–30.

- 13. Umoquit MJ, Dobrow MJ, Lemieux CL, Ritvo PG, Urbach DR, Wodchis WP. The efficiency and effectiveness of utilizing diagrams in interviews: an assessment of participatory diagramming and graphic elicitation. BMC Med Research Methodology; 2008. p. 53.

- 14. Crabtree BF, Miller WL. Doing Qualitative Research. 2nd ed. Thousand Oaks, CA: Sage; 1999.

- 15. Berg BL, Lune H. Qualitative Research Methods for the Social Sciences. 8th ed. Boston, MA: Pearson; 2012.

- 16. Crilly N, Blackwell AF, Clarkson PJ. Graphic elicitation: Using research diagrams as interview stimuli. Qualitative Research. 2006;6:341–366.

- 17. Larkin JH, Simon HA. Why a diagram is (sometimes) worth ten thousand words. Cognitive Science. 1987;11(1):65–99.