Abstract

Clinicians today face increased patient loads, decreased reimbursements and potential negative productivity impacts of using electronic health records (EHR), but have little guidance on how to improve clinic efficiency. Discrete event simulation models are powerful tools for evaluating clinical workflow and improving efficiency, particularly when they are built from secondary EHR timing data. The purpose of this study is to demonstrate that these simulation models can be used for resource allocation decision making as well as for evaluating novel scheduling strategies in outpatient ophthalmology clinics. Key findings from this study are that: 1) secondary use of EHR timestamp data in simulation models represents clinic workflow, 2) simulations provide insight into the best allocation of resources in a clinic, 3) simulations provide critical information for schedule creation and decision making by clinic managers, and 4) simulation models built from EHR data are potentially generalizable.

Introduction

Physicians today are pressured to see more patients in less time for less reimbursement due to persistent concerns about the accessibility and cost of healthcare.1,2 Furthermore, clinicians are concerned that the adoption of electronic health records (EHRs) has negatively impacted their productivity.3–5 For example, at Oregon Health & Science University (OHSU), which completed a successful EHR implementation in 2006 that received national publicity, ophthalmologists currently see 3-5% fewer patients than before EHR implementation and require >40% additional time for each patient encounter.6

Facing these pressures, clinicians lack guidance on how to improve their efficiency while dealing with increased patient loads and time requirements of EHR use. For example, ophthalmologists typically see 15-30 patients or more in a half-day session, utilize multiple exam rooms simultaneously, work with ancillary staff (e.g., technicians, ophthalmic photographers), and examine patients in multiple stages (e.g., before and after dilation of eyes, before and after ophthalmic imaging studies). This creates enormous challenges in workflow and scheduling, and large variability in operational approaches.7 Approaches toward improving the efficiency of clinical workflow using EHRs would have significant real-world impact.

Clinic workflow bottlenecks result when patients arrive and clinic resources (e.g. staff, exam rooms, and providers) are not available to serve them. This mismatch of arrivals and availability can be increased by ad-hoc scheduling protocols that increase patient wait time.8 Testing different scheduling strategies and resource allocation in real clinics is impractical, however, since patient and provider time is too valuable for experimentation. Empirical models of clinical processes using discrete event simulation (DES) can evaluate different clinic configurations effectively before implementing them in clinical settings. DES requires large amounts of workflow timing data, which is available as timestamped EHR data.9 DES has been used for quality improvement in healthcare and scheduling, but not using EHR and detailed workflow data.10–13

In this paper, we present the process and results of simulating four outpatient ophthalmology clinics at OHSU, through discrete event simulation using secondary EHR data. Ophthalmology is an ideal domain for these studies because it is a high-volume field that combines both medical and surgical practices. Our results show that simulations can provide insight into the benefits and drawbacks of adding or removing clinic staff and exam rooms, as well as strategies for improving patient scheduling.

Background: Discrete Event Simulation

Discrete event simulation is a method for analyzing processes with high variability. The processes are broken down into a series of discrete steps whose time requirements are represented by probability distributions rather than constant values. When a simulation model is executed, these distributions are sampled to produce a time spent in each step. Simulations are repeated many times to determine the average behavior of the system. Simulation models cannot be used to solve for absolute optimality; instead they are used to evaluate different scenarios to determine relative behavior.

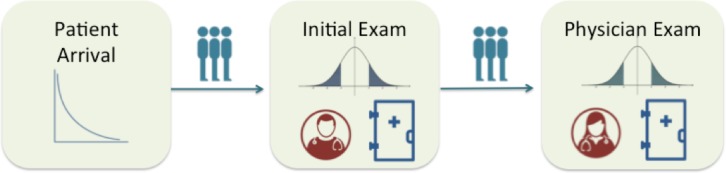

For example, a clinic workflow can be modeled as a sequence of steps, such as patient arrival and an initial exam by a nurse or staff member followed by a physician exam, as shown in Figure 1. Each of these steps takes a variable amount of time, so each needs its own probability distribution. Each exam requires the use of finite clinic resources: a nurse or a doctor, and an exam room. When the simulation model runs, a time value is determined for each step by sampling its distribution. As the model runs and more patients arrive, queues of waiting patients can form for each exam. This happens when patients arrive faster than exams are completed and/or there are not enough nurses, doctors or exam rooms for all waiting patients. To determine the expected behavior of the model over time, the simulation must be repeatedly executed.

Figure 1:

Example Discrete Event Simulation for outpatient clinic. Patients arrive according to a certain probability distribution. They first see a nurse for an initial exam before they are examined by a physician. Each of the exams requires resources—in this case a nurse or a physician and an exam room. Each exam time is represented by a probability distribution. Because there are finite resources in a clinic, there are potential queues of waiting patients for each of the exams.

Discrete event simulations can be used to evaluate changes to the model. For the example of the outpatient clinic, simulations can determine the effect of increasing the number of exam rooms or changing the appointment scheduling, which will both affect the patient arrivals. Each of these changes can greatly impact metrics such as average patient wait time or number of patients seen in a given time period. Simulation provides an easy and rapid way to evaluate these changes without interfering with clinic operations.
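The two-stage example above can be sketched in a few lines of Python. This is a minimal illustration and not the Arena model used in the study: the arrival jitter, the lognormal exam-time parameters, the session size, and the function names are all assumptions, and exam rooms are left out so that only staff count varies. Each resource is tracked with a heap of "next free" times rather than a full event calendar.

```python
import heapq
import random
import statistics


def serve_fifo(arrivals, n_servers, sample_duration):
    """Serve patients first-come-first-served with n_servers identical servers.

    Returns (start_times, end_times), aligned with the sorted arrival times.
    """
    free_at = [0.0] * n_servers            # when each server is next idle
    heapq.heapify(free_at)
    starts, ends = [], []
    for t in sorted(arrivals):
        earliest = heapq.heappop(free_at)
        start = max(t, earliest)           # patient waits if all servers are busy
        end = start + sample_duration()    # sample this step's time from its distribution
        heapq.heappush(free_at, end)
        starts.append(start)
        ends.append(end)
    return starts, ends


def simulate_session(n_staff, rng, n_patients=12, slot_minutes=15.0):
    """Simulate one half-day session; return mean total wait per patient (minutes)."""
    # Assumed arrival pattern: scheduled slot plus jitter, on average 5 minutes early.
    arrivals = sorted(max(0.0, i * slot_minutes + rng.gauss(-5.0, 5.0))
                      for i in range(n_patients))
    # Stage 1: initial exam by staff (assumed lognormal, median about 12 minutes).
    staff_start, staff_end = serve_fifo(
        arrivals, n_staff, lambda: rng.lognormvariate(2.5, 0.4))
    # Stage 2: a single physician sees patients in the order they become ready.
    ready = sorted(staff_end)
    doc_start, _ = serve_fifo(ready, 1, lambda: rng.lognormvariate(2.3, 0.4))
    wait_for_staff = sum(s - a for s, a in zip(staff_start, arrivals))
    wait_for_doc = sum(s - r for s, r in zip(doc_start, ready))
    return (wait_for_staff + wait_for_doc) / n_patients


def mean_wait(n_staff, n_reps=200, seed=0):
    """Average the session-level wait over many replications."""
    return statistics.mean(
        simulate_session(n_staff, random.Random(seed + r)) for r in range(n_reps))


if __name__ == "__main__":
    for n in (1, 2, 3, 4):
        print(f"{n} staff: mean wait {mean_wait(n):.1f} min")
```

Because every replication reuses the same seed for each staffing level, the comparison between scenarios is made on identical random draws, which is a common way to compare relative behavior rather than absolute values.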

Discrete event simulations are used to improve various healthcare operations, from emergency department configurations to operating room scheduling.14 Outpatient clinics have used discrete event simulations for resource allocation decision making as well as scheduling improvements,15–17 but these studies mostly focus on individual clinics and base their models on limited data, namely multiple days of time-motion studies. In our study, we use multiple years' worth of EHR timing data to create models that more precisely represent the variability of clinic workflows. We also parameterize the model so that it may be used for multiple clinics.

Methods

This study was approved by the Institutional Review Board at Oregon Health & Science University (OHSU).

Study Environment

OHSU is a large academic medical center in Portland, Oregon. The ophthalmology department includes over 50 faculty providers, who perform over 90,000 annual outpatient examinations. The department provides primary eye care, and serves as a major referral center in the Pacific Northwest and nationally. We selected 4 outpatient ophthalmology clinics to study: 1) pediatric ophthalmology (LR), 2) comprehensive eye care (LL), 3) glaucoma (MP), and 4) cornea (WC). These 4 clinics represent the diversity of outpatient care in ophthalmology at OHSU.

Over several years, an institution-wide EHR system (EpicCare; Epic Systems, Madison, WI) was implemented throughout OHSU. This vendor develops software for mid-size and large medical practices, is a market share leader among large hospitals, and has implemented its EHR systems at over 200 hospital systems in the United States. In 2006, all ophthalmologists at OHSU began using this EHR. All ambulatory practice management, clinical documentation, order entry, medication prescribing, and billing tasks are performed using components of the EHR.

Workflow Modeling and Reference Data Collection

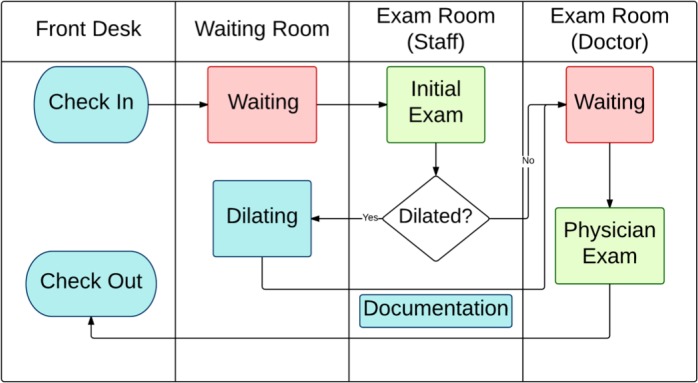

Interviews with staff and observations of each of the four clinics were performed to determine the basic patient flow. All four clinics had the same basic clinic flow as shown in Figure 2. Patients check in and wait to be seen. An ancillary staff member performs an initial exam in an exam room. At the end of this exam, the patient’s eyes may be dilated. If this is the case, the patient returns to the waiting room while waiting for the dilation to take effect—about 25 to 30 minutes. After the dilation, the patient is returned to an exam room and waits for the physician exam.

Figure 2:

Clinic Workflow. Flowchart representation of the workflow for all clinics. Patients see a staff member for an initial exam followed by a physician exam. Patients’ eyes may be dilated before the physician examines them.

If the patient’s eyes were not dilated, the patient remains in the exam room and waits for the physician. While the patient is waiting for the physician exam, the staff member must complete the documentation of the initial exam before the physician can start the exam. After the physician’s exam, the patients check out and leave. Because OHSU is an academic institution, trainees (residents and fellows) may also examine the patient. Trainees’ exams, however, do not occur at regular points in the workflow. Because of this added complexity, we focus on workflows without trainees in this initial study.

Once the workflow was understood, we performed time-motion studies for 3 to 6 half-day sessions at each of the clinics. One to two observers recorded timestamps of physicians and staff as they entered and exited exam rooms; these timestamps were processed later to determine the duration of time spent in exam rooms with patients. This observational timing data was used to validate the use of EHR timestamp data to represent workflows and is also used to validate the simulation models in this study.9

Simulation Model Parameters and Their EHR Sources

To build the models for all four clinics, we first had to determine the necessary parameters and the EHR sources for them. In a prior study, we identified sources of workflow data within the EHR.9 We use the clinical data warehouse and ophthalmology datamart for OHSU’s EHR (EpicCare; Epic Systems, Madison, WI). While these timestamps are specific to OHSU’s implementation in ophthalmology, comparable timestamps are available for other vendors, installations and specialties.

The parameters are based on the clinic workflow, clinic resources and clinic scheduling:

Start and End of Patient Encounter: Check in and check out timestamps. These timestamps are available in the ophthalmology datamart.

Start and End of Staff and Physician Exams: Audit log timestamps. Timestamps from the audit log can be used to represent the beginning and ending of individual exams during the course of the office visit, from which the duration of these exams can be calculated. Data about providers and workstations was also required to determine the proper context for the timestamps.

Dilation rate: Structured ophthalmology documentation form and ophthalmology datamart. Eye dilation information is entered in the structured ophthalmology documentation form of the EHR and is available from the ophthalmology datamart.

Staff Documentation time: Audit log timestamps. The ancillary staff members must complete their documentation of the initial exam before the provider can start their exam. Using the audit log data combined with user and workstation data, we could measure the documentation time by measuring the time spent on the staff workstations after the initial exam.

Number of ancillary staff and exam rooms: interviews and audit log data. We used a combination of interviews and analysis of EHR users to determine typical values of these parameters. For many clinics, these parameters could vary from day to day, depending on other providers’ clinics in their specialty who shared these resources.

The number and length of the scheduling blocks and number of patients per block: interviews and clinic encounter data from ophthalmology datamart. We were able to obtain scheduling templates for each of the four clinics that guided how patients are scheduled; however, clinics rarely follow this template for every clinic day. Urgent patients are added on, schedules are overbooked, patients cancel or do not show. We reviewed the encounter data from the ophthalmology datamart to determine representative schedules for each of the clinics.

Patient arrival patterns: check in and appointment times from ophthalmology datamart. While all the clinics have only scheduled patients (no walk-ins), patients do not usually arrive at their scheduled time. This variation can have a great impact on the number of patients at the clinic at a given time. Since patients are scheduled, we measured the difference between their scheduled appointment time and their check in time. For all clinics, the large majority of patients are early.
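Two of the timing parameters listed above, the arrival offset and the exam duration, reduce to simple timestamp arithmetic. The sketch below is illustrative only: the record layout, the field names, the `EXAM` workstation prefix, and the rule of taking the first and last audit entry are assumptions, not the processing rules actually applied to the OHSU audit log.

```python
from datetime import datetime

FMT = "%Y-%m-%d %H:%M"


def minutes_between(start, end):
    """Elapsed minutes from `start` to `end` (negative if `end` is earlier)."""
    delta = datetime.strptime(end, FMT) - datetime.strptime(start, FMT)
    return delta.total_seconds() / 60.0


def arrival_offset(encounter):
    """Check in time minus appointment time; negative means the patient was early."""
    return minutes_between(encounter["appointment"], encounter["check_in"])


def exam_duration(audit_rows, role):
    """Span between the first and last audit entry by `role` on an exam-room workstation.

    Workstation context matters: entries made at a desk are treated as
    documentation time, not time with the patient (hypothetical naming rule).
    """
    stamps = sorted(row["time"] for row in audit_rows
                    if row["role"] == role and row["workstation"].startswith("EXAM"))
    return minutes_between(stamps[0], stamps[-1]) if stamps else None


# Hypothetical records for one encounter.
encounter = {"appointment": "2014-03-04 09:00", "check_in": "2014-03-04 08:48"}
audit_rows = [
    {"time": "2014-03-04 08:55", "role": "staff", "workstation": "EXAM-2"},
    {"time": "2014-03-04 09:09", "role": "staff", "workstation": "EXAM-2"},
    {"time": "2014-03-04 09:15", "role": "staff", "workstation": "DESK-1"},
    {"time": "2014-03-04 09:40", "role": "physician", "workstation": "EXAM-2"},
    {"time": "2014-03-04 09:52", "role": "physician", "workstation": "EXAM-2"},
]

if __name__ == "__main__":
    print(arrival_offset(encounter))
    print(exam_duration(audit_rows, "staff"))
    print(exam_duration(audit_rows, "physician"))
```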

EHR Dataset

Once we identified the parameters and their sources, we gathered datasets of office encounters for each of the four clinics for two years: 2013 and 2014. As with any large dataset, some encounters had incomplete data; we used only those that had valid check in and check out times. Further, we focused only on encounters that did not use trainees, since our initial models did not include them, as mentioned previously. Next, we wanted to find clinic days that represented a “typical” half-day session. This would include patient visits that happened during regularly scheduled half-day clinic sessions without large numbers of no-shows or overbooks. Therefore, we restricted the data to clinic sessions whose number of patients fell between the 1st and 3rd quartiles of all clinic sessions’ volume. We plan to address the atypical clinic days with future models. Finally, for each clinic, we separated the dataset into a training set for building the model (80% of the data) and a test set for validating the model (20% of the data), in order to avoid overfitting the model.
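The "typical session" restriction and the 80/20 split can be sketched as follows. This is an assumption-laden illustration: the `session_id` field is hypothetical, the quartiles use Python's inclusive method, and the split is a seeded random shuffle at the encounter level, none of which the text specifies.

```python
import random
import statistics
from collections import defaultdict


def typical_sessions(encounters):
    """Keep sessions whose patient count lies between Q1 and Q3 of all session volumes."""
    by_session = defaultdict(list)
    for enc in encounters:
        by_session[enc["session_id"]].append(enc)
    volumes = [len(encs) for encs in by_session.values()]
    q1, _, q3 = statistics.quantiles(volumes, n=4, method="inclusive")
    return {sid: encs for sid, encs in by_session.items()
            if q1 <= len(encs) <= q3}


def split_train_test(items, train_fraction=0.8, seed=0):
    """Shuffle reproducibly, then cut into training and test sets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = int(round(train_fraction * len(shuffled)))
    return shuffled[:cut], shuffled[cut:]
```

Holding out the test set before any distribution is fitted keeps the validation data independent of the model parameters.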

Simulation Models & Validation

We used Arena simulation software18 to build a model of each clinic’s workflow using the training dataset. We used the same basic model for all four clinics, but customized it for each. We validated the 4 clinics’ simulation models by comparing metrics (total average exam time and average wait time) to the EHR test dataset.

Model Experiments

Once the clinic models were validated, we used them to evaluate different clinic scenarios. Specifically, we tested the effect of adding clinic resources such as staff members and exam rooms. Additionally, we tested various scheduling strategies in an attempt to better manage the negative impact of patient encounter (visit) variability on the clinic flow. Previous studies have shown that scheduling longer encounters with higher variability at the end of the day helps reduce wait time.19 To simulate this scenario, we identified the patient encounters that were the longest 1/5 of all encounters in the two year dataset. We then tested schedules with varying numbers of long encounters as well as different placements of these long encounters.
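Identifying the longest fifth of encounters, and counting how many of them fall in each session, can be sketched as below. The tuple layout `(session_id, duration_minutes)`, the function names, and the handling of ties at the cutoff are assumptions made for illustration.

```python
import statistics
from collections import Counter


def long_threshold(durations, fraction=0.2):
    """Shortest duration that still belongs to the longest `fraction` of encounters."""
    ordered = sorted(durations)
    n_long = max(1, round(len(ordered) * fraction))
    return ordered[len(ordered) - n_long]


def long_counts_summary(encounters, fraction=0.2):
    """Return (min, median, max) number of long encounters per session.

    `encounters` is a list of (session_id, duration_minutes) pairs.
    """
    cutoff = long_threshold([d for _, d in encounters], fraction)
    counts = Counter({sid: 0 for sid, _ in encounters})   # keep sessions with zero
    for sid, duration in encounters:
        if duration >= cutoff:
            counts[sid] += 1
    values = list(counts.values())
    return min(values), statistics.median(values), max(values)
```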

Results

EHR, Observed and Simulated Encounters

Table 1 gives the number of encounters in the EHR, observed and simulated datasets. The table shows how the number of encounters decreased in the EHR dataset as we restricted the set to encounters with complete data, no trainees, and during a typical day (number of patients is in 1st – 3rd quartile of ½-day clinic volume). The EHR training dataset was used to determine the probability distributions used in the simulation model. The test data, observed data without trainees and the simulated data sets are those that are used for validation.

Table 1:

Number of encounters in the EHR data including the training and test datasets, the observed data and the simulated data.

| Clinic | EHR: Total* | EHR: w/o Trainees | EHR: Typical Clinic† | EHR: Training | EHR: Test‡ | Observed: Total | Observed: w/o Trainees‡ | Simulated‡ |
|---|---|---|---|---|---|---|---|---|
| Cornea (WC) | 7128 | 4665 | 2789 | 2239 | 550 | 47 | 17 | 18000 |
| Comprehensive (LL) | 3830 | 3564 | 2652 | 2161 | 591 | 29 | 21 | 11000 |
| Glaucoma (MP) | 5252 | 3743 | 2288 | 1839 | 449 | 26 | 27 | 15000 |
| Pediatrics (LR) | 5227 | 4950 | 3130 | 2486 | 644 | 148 | 120 | 13000 |

\* Excludes encounters with incomplete time stamp data

† Clinics with 1st - 3rd quartile of 1/2 day clinic volume

‡ Used for validation

Simulation Model Parameters

Building the simulation models required generating probability distributions for the patient arrivals, staff exams, staff documentation and provider exams and determining values for clinic parameters, shown in Table 2. The probability distributions were fit to the EHR training data. We used the average dilation rate for each clinic to determine the likelihood that a patient’s eyes are dilated after the initial exam, but we used median values for the other parameters (number of staff, number of exam rooms, number of scheduling blocks, block length and total number of patients per half-day clinic session) since the model requires discrete values instead of continuous averages.
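Fitting a distribution to training durations and then sampling from it can be sketched as follows. The lognormal family is purely an assumption chosen for illustration, since the text does not state which families were fit; the dilation draw follows the description above of using each clinic's average dilation rate as a probability.

```python
import math
import random
import statistics


def fit_lognormal(durations_minutes):
    """Estimate lognormal parameters (mu, sigma) from positive durations."""
    logs = [math.log(d) for d in durations_minutes if d > 0]
    return statistics.mean(logs), statistics.stdev(logs)


def sample_encounter(staff_exam_params, dilation_rate, rng):
    """Draw one simulated encounter: a staff exam time and a dilation outcome."""
    mu, sigma = staff_exam_params
    return {
        "staff_exam_minutes": rng.lognormvariate(mu, sigma),
        "dilated": rng.random() < dilation_rate,   # Bernoulli draw at the clinic's rate
    }


if __name__ == "__main__":
    # Hypothetical training durations in minutes.
    params = fit_lognormal([8.0, 10.5, 12.0, 14.5, 21.0, 9.5, 30.0])
    rng = random.Random(42)
    # 0.64 is the Comprehensive (LL) dilation rate from Table 2.
    print(sample_encounter(params, 0.64, rng))
```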

Table 2:

Model Parameters per ½ Day Clinic Session. Parameter values for each of the four clinics that represent a typical ½ day session. Values were determined from audit log and ophthalmology datamart encounter information. All values are medians, except for the dilation rate, which is an average over all ½ day sessions in the clinic dataset.

| Clinic | Dilation Rate | # Staff | # Exam Rooms | # Schedule Blocks | Block Length (Minutes) | # Patients |
|---|---|---|---|---|---|---|
| Cornea (WC) | 10% | 3 | 4 | 13 | 15 | 18 |
| Comprehensive (LL) | 64% | 2 | 4 | 10 | 15 | 11 |
| Glaucoma (MP) | 7% | 2 | 5 | 9 | 20 | 15 |
| Pediatrics (LR) | 43% | 2 | 6 | 11 | 15 | 13 |

Validation of Simulation Models

We used the parameters and distributions to build a simulation model for each clinic in Arena. We ran each model 1000 times and compared the patient wait time and total exam time to the averages of the EHR test data set and observed dataset; see Table 1 for the sizes of these datasets. Note that the simulated data set had a very large n because we ran the models for 1000 half-day clinics to determine the long-term behavior of the system.

Validating the model is crucial for ensuring that the models are representative. The validation results, given in Table 3, show that the simulated wait times and total exam times (staff + provider) are close to those measured from the EHR test data set: within 5.5% for wait time and 6.8% for exam time on average. For three of the four clinics, the simulated mean wait time and mean exam time were each within 5% of the EHR test data set. Only two differences of the means were statistically significant: Glaucoma (MP) wait times and Pediatric (LR) exam times.

Table 3:

Model Validation. Mean patient wait time and mean exam time per ½ day clinic session were compared for the simulated dataset and the test data set from the EHR data for each clinic. Wait time error ranged from just under 2% to just under 12%, and exam time error ranged from 3.3% to 15.2%. The only statistically significant differences between the simulation and EHR data were for the Glaucoma (MP) clinic wait times and the Pediatric (LR) clinic exam times.

| Clinic | Wait: Simulated (min) | Wait: EHR (min) | Wait: Error (%) | Wait: p-value | Exam: Simulated (min) | Exam: EHR (min) | Exam: Error (%) | Exam: p-value |
|---|---|---|---|---|---|---|---|---|
| Cornea (WC) | 42.6 | 44.3 | 3.8% | 0.8 | 33.2 | 34.7 | 4.3% | 0.8 |
| Comprehensive (LL) | 42.5 | 41.7 | 1.8% | 0.5 | 21.4 | 22.4 | 4.6% | 0.3 |
| Glaucoma (MP) | 33.5 | 31.9 | 4.8%* | <0.001 | 26.6 | 27.5 | 3.3% | 0.1 |
| Pediatric (LR) | 30.7 | 34.7 | 11.6% | 0.4 | 21.7 | 25.6 | 15.2%* | <0.001 |
| Average | | | 5.5% | | | | 6.8% | |
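The error columns of Table 3 can be approximately recomputed from the reported means. The sketch assumes the error is the absolute difference relative to the EHR mean, which the text does not state explicitly; because the table's means are rounded to one decimal, the recomputed percentages can differ from the published ones by a few tenths of a point.

```python
import statistics

# (simulated mean, EHR mean) in minutes, copied from Table 3.
TABLE_3 = {
    "Cornea (WC)":        {"wait": (42.6, 44.3), "exam": (33.2, 34.7)},
    "Comprehensive (LL)": {"wait": (42.5, 41.7), "exam": (21.4, 22.4)},
    "Glaucoma (MP)":      {"wait": (33.5, 31.9), "exam": (26.6, 27.5)},
    "Pediatric (LR)":     {"wait": (30.7, 34.7), "exam": (21.7, 25.6)},
}


def percent_error(simulated, ehr):
    """Absolute difference of the means as a percentage of the EHR mean (assumed definition)."""
    return abs(simulated - ehr) / ehr * 100.0


def mean_error(metric):
    """Average percent error across the four clinics for 'wait' or 'exam'."""
    return statistics.mean(percent_error(*row[metric]) for row in TABLE_3.values())


if __name__ == "__main__":
    for clinic, row in TABLE_3.items():
        print(f"{clinic}: wait {percent_error(*row['wait']):.1f}%, "
              f"exam {percent_error(*row['exam']):.1f}%")
    print(f"Average: wait {mean_error('wait'):.1f}%, exam {mean_error('exam'):.1f}%")
```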

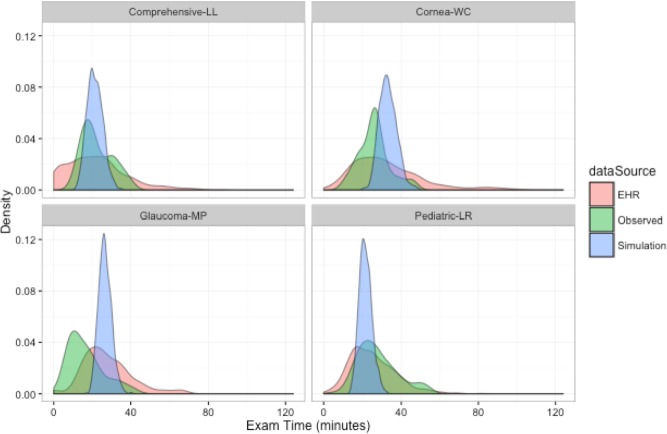

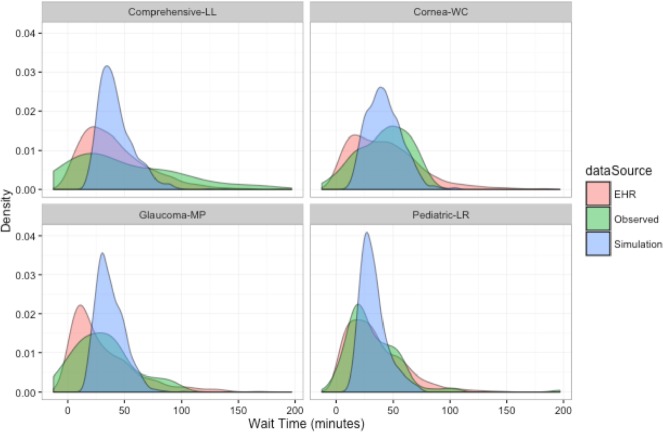

Because of the differences in size between the datasets, statistical tests do not conclusively represent the similarity or difference between the distributions of datasets. Instead, we present visualizations of the datasets’ distributions for comparison. Figures 3 and 4 show the densities of the three different data sets for wait time and total exam time. The means are close in all the graphs and there is significant overlap for all the datasets in all the clinics, which indicates that the simulated models are representative of real clinic workflows. Because the simulation models have a large n and the plotted data is average clinic wait time instead of individual encounter wait time (a limitation of the simulation software), the plots of the simulated data have a much narrower distribution than the other 2 datasets.

Figure 3:

Exam time densities of the three datasets: simulated, EHR test data and observed data. There is significant overlap of the three different datasets, which indicates that the simulated model is representative of the real clinic workflow.

Figure 4:

Wait time densities of the three datasets: simulated, EHR test data and observed data. There is significant overlap of the three different datasets, which indicates that the simulated model is representative of the real clinic workflow.

Model Experiments

Once the models were validated, we were able to use them to experiment with different clinic configurations:

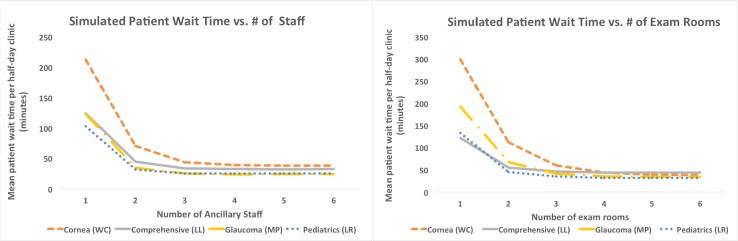

1. Experiment 1: Varying number of staff and exam rooms

Simulation models were used to determine the effect of changing the availability of resources. For our four clinic models, we investigated the impact of varying the number of available exam rooms and ancillary staff members. Clinic managers commonly make decisions regarding these resources, but do not have good data for supporting them. Figure 5 plots the simulated patient wait time against number of exam rooms and number of staff members. For each experiment, the non-varying resource was held constant at the value given in Table 2. Patient wait time decreases as the number of exam rooms and staff members increases from 1 to 3, but levels off after that point. Since there is still only one physician in the clinic, adding more resources does not improve wait times since the single physician becomes the bottleneck.

Figure 5:

Experiment 1: Effect of number of staff and exam rooms on simulated patient wait time. Data shows that patient wait time decreases up to a point when staff and exam room numbers are increased. For all clinics, there appears to be only a small benefit for greater than 3 exam rooms and greater than 3 staff members.

2. Experiment 2: Impact of varying numbers of “long” encounters per clinic session

Simulation models were used to examine the impact of encounters that are “long” and have high variability, since long encounters monopolize resources, cause delays and create queues of waiting patients.19,20 To investigate the impact that long encounters have on clinic wait times, we first defined long encounters to be the longest 20% of all encounters for a clinic in the two year dataset. On average, we would expect that 20% of patients in a half-day clinic session would fall into this category, but in reality, the number of long encounters per half-day clinic session may vary when schedules do not limit them. We looked at the datasets and determined the minimum, maximum and median numbers of long encounters per ½ day clinic session for each provider. We then used the simulated models to measure the range in patient wait times for these differing numbers of long encounters per session. Table 4 shows these results. For all four provider clinics, the wait times increase dramatically as the number of long encounters per session increases. For example, the Cornea (WC) clinic’s wait time increases from 26.9 minutes when there are 0 long encounters to 61.9 minutes when there are 9 long encounters, which is an increase of over 100%. The other clinics show increases of roughly 67% to 146% between their minimum and maximum. Keeping the number of long encounters steady (close to the median) will help reduce the variability in wait times from session to session, while still meeting the demands for longer encounters over time.

Table 4:

Experiment 2: Simulated Patient Wait Times for Different Number of Long Encounters per ½-Day Clinic Session. When a clinic session has a larger number of long encounters, the simulated average patient wait time increases and the reverse is true for fewer number of long encounters. Ideally, clinics should try to keep the number of long encounters steady per clinic session to avoid the variability in wait times.

| Clinic | Minimum: n | Minimum: Mean Wait (min) | Median: n | Median: Mean Wait (min) | Maximum: n | Maximum: Mean Wait (min) |
|---|---|---|---|---|---|---|
| Cornea (WC) | 0 | 26.9 | 3 | 33.6 | 9 | 61.9 |
| Comprehensive (LL) | 0 | 34.6 | 2 | 40.0 | 6 | 59.6 |
| Glaucoma (MP) | 0 | 20.3 | 3 | 28.1 | 8 | 50.0 |
| Pediatrics (LR) | 0 | 24.9 | 2 | 27.0 | 7 | 41.5 |

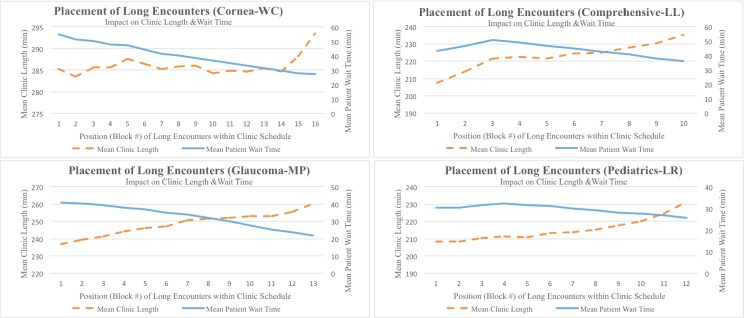

3. Experiment 3: Determining the optimal arrangement of encounters in a clinic session using competing metrics.

After having established above that keeping the number of “long” encounters steady over all clinic sessions reduces the variability of wait time, simulation models were used to investigate the best placement of long encounters in a clinic session. Anecdotally, we observed that schedulers often put encounters expected to be longer at the start of the session, so that the clinic does not run over time; however, this may worsen patient wait time.

To investigate the impact of long encounter placement on these two competing metrics, we ran simulations to determine the average patient wait time and clinic length for different placements of long encounters. Results are shown in Figure 6. Long encounters placed at block 1 are at the start of the clinic session; larger block numbers represent appointment slots closer to the end of the clinic session. The graphs show that mean patient wait time was minimized by scheduling the long encounters at the end of the session, but this has the effect of increasing clinic length. The converse is also true: clinic length is minimized by scheduling the long encounters at the beginning of the session, but this also maximizes the mean patient wait time. These graphs and corresponding data can help clinic managers determine the best compromise between the two competing interests for their clinics.

Figure 6:

Experiment 3: Impacts of Placement of Long Encounters in a ½-day Clinic Session. There is a tradeoff between average patient wait time and clinic length with respect to where long encounters are scheduled in a clinic session. Long encounters at the start of the session minimize overall clinic length and long encounters at the end of the session minimize patient wait time. Graphical analyses can help clinic managers find the placement that best balances the two competing metrics.
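The tradeoff between wait time and clinic length can be reproduced with a deliberately simplified, deterministic toy: one physician, one punctual patient per 15-minute block, and fixed exam durations. All numbers and function names here are invented for illustration; the study's models sample durations from fitted distributions and include staff, rooms, and dilation.

```python
def run_schedule(durations, slot_minutes=15.0):
    """Single physician, one patient per slot, punctual arrivals.

    Returns (mean patient wait, clinic length), both in minutes.
    """
    free_at = 0.0                      # when the physician is next available
    waits = []
    for i, duration in enumerate(durations):
        arrival = i * slot_minutes
        start = max(arrival, free_at)  # wait if the physician is still busy
        waits.append(start - arrival)
        free_at = start + duration
    return sum(waits) / len(waits), free_at


def place_long(n_patients, n_long, first_long_slot, short=10.0, long=30.0):
    """Build a schedule with `n_long` consecutive long encounters starting at a given slot."""
    durations = [short] * n_patients
    for i in range(first_long_slot, first_long_slot + n_long):
        durations[i] = long
    return durations


if __name__ == "__main__":
    for first_slot in range(0, 7):
        wait, length = run_schedule(place_long(8, 2, first_slot))
        print(f"long encounters start at block {first_slot + 1}: "
              f"mean wait {wait:.1f} min, clinic length {length:.0f} min")
```

In this toy, early long encounters delay every later patient but are absorbed by the slack in later slots, while late long encounters delay almost no one but push the session past its scheduled end.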

Discussion

This study has the following key findings: 1) secondary use of EHR timestamp data in simulation models accurately represents clinic workflow, 2) simulations can provide insight into the best allocation of resources in a clinic, 3) simulations can provide critical information for schedule creation and decision making by clinic managers, and 4) simulation models built from EHR data can be generalizable.

1. Secondary use of EHR timestamp data in simulation models accurately represents clinic workflows. Typically, secondary uses of EHR data have been for clinical research, quality assurance, and public health, rather than for operational purposes.21–23 While emergency departments have used EHR timing data for tracking patients and quality assurance,24–27 our study focuses on using EHR data for modeling outpatient workflows and studying clinic resource allocations and scheduling strategies. Our study shows that the data needed to study workflow can be mined from the EHR and that it represents general trends of clinic workflow in simulation models: simulated average patient wait time and average exam time are within 5% of the EHR data for three of the four clinics. Patient flow is a concern for all areas of healthcare, in both inpatient and outpatient settings.28 As patients move through the stages of their care, bottlenecks occur at points where demand for finite resources (providers, beds, etc.) exceeds availability. Using large-scale EHR timestamp data provides models that accurately represent this variability and can be used to study various clinic configurations.

2. Simulations can provide insight into the best allocation of resources in a clinic. Adding resources to clinics can help reduce patient wait times, but only to a certain point: 3 staff members and 3 exam rooms for all four of the clinics. As long as there is a single physician for the clinic, additional resources will cause this physician to become the bottleneck. Therefore, the decreasing marginal benefit of additional staff members or exam rooms should be carefully weighed against the costs of adding these resources.

3. Simulations can provide critical information for schedule creation and decision making by clinic managers. Limiting the number of long encounters per clinic session and placing them wisely can help reduce wait time and clinic length. Keeping the number of long encounters stable for all clinic sessions can help reduce variability in wait times from session to session. Further, the placement of the long encounters affects wait time and clinic length in opposing ways: the closer the long encounters are to the end of the clinic session, the lower the average wait time and the longer the clinic. The simulated data provides the information clinic managers need to decide how best to balance these two competing metrics. We are currently testing schedules in clinic that place long encounters about an hour from the end of the clinic session, since this minimizes wait time without unduly increasing the length of the clinic. Preliminary results show that the schedule implementation is effectively minimizing patient wait time.

4. Simulation models built from EHR data can be generalizable. By creating models for multiple outpatient clinics, we show that this approach is not limited to an individual clinic. As long as the simulation parameters are modified for each clinic, the models will still be representative. Further, even though the four clinics we modeled were quite different in terms of their clinic parameters (exam distributions, number of staff, number of exam rooms, number of patients, etc.), they all displayed the same general trends in all of the experiments. This gives us confidence that these trends are accurate and generalizable to other clinics.

Limitations

There are several limitations to our study. First, in order to identify audit log entries that correspond to exams, we had to discern when a staff member or physician was using the EHR during a patient interaction. At OHSU, we have uniquely named workstations in each exam room, which makes identifying patient interactions easier. If laptops were used, it would be much more difficult to determine when a provider was with a patient versus charting in an office. Second, the EHR timestamps do not always capture time spent with patients when the staff or doctor is not using the EHR. While we can determine what times the providers are not using the EHR, we cannot pinpoint what they are doing at those times. Third, the difference in size between the datasets that we used for the simulation validation (namely, the small observed set) limits our ability to use formal statistical methods for comparison. We are currently investigating methods for improving our observed clinic data collection so that we can easily increase the size of this dataset. Fourth, we limited this initial study to encounters that did not include trainees because of the differences in how trainees interact with the workflow. We are expanding our models to include this important activity to determine its impact on resources and scheduling. Finally, scheduling long encounters wisely improves patient wait times, but requires predictions of encounter length. We have performed preliminary studies using the relationship between clinical and demographic factors and visit length using timing data from the EHR;29 we are continuing to study this area, including adding physicians’ input.

Conclusion and Future Directions

Simulation models based on secondary EHR timestamp data can be powerful tools for improving clinic workflows. Multiple years' worth of clinic data provide an accurate representation of workflow variability in the models, which allows them to evaluate the impact of clinic changes, whether adding staff or exam rooms or adopting novel scheduling approaches. The consistency of trends across the multiple clinic models indicates that the observed trends are real and generalizable. This implies that secondary use of EHR timestamp data for simulations broadens EHRs from a repository of clinical data to a holistic tool for managing clinical workflow.

Acknowledgements

Supported by grants 1T15 LM007088, 1K99 LM012238, and P30EY0105072 from the National Institutes of Health (Bethesda, MD) and unrestricted departmental support from Research to Prevent Blindness (New York, NY).

References

- 1. Blumenthal D, Collins S. Health care coverage under the Affordable Care Act: a progress report. N Engl J Med. 2014;371:275–81. doi: 10.1056/NEJMhpr1405667.

- 2. Hu P, Reuben DB. Effects of managed care on the length of time that elderly patients spend with physicians during ambulatory visits: National Ambulatory Medical Care Survey. Med Care. 2002 Jul;40(7):606–13. doi: 10.1097/00005650-200207000-00007.

- 3. Shea S, Hripcsak G. Accelerating the use of electronic health records in physician practices. N Engl J Med. 2010;362(3):192–5. doi: 10.1056/NEJMp0910140.

- 4. Chiang MF, Boland MV, Margolis JW, Lum F, Abramoff MD, Hildebrand PL. Adoption and perceptions of electronic health record systems by ophthalmologists: an American Academy of Ophthalmology survey. Ophthalmology. 2008;115(9):1591–7. doi: 10.1016/j.ophtha.2008.03.024.

- 5. Boland MV, Chiang MF, Lim MC, Wedemeyer L, Epley KD, McCannel CA. Adoption of electronic health records and preparations for demonstrating meaningful use: an American Academy of Ophthalmology survey. Ophthalmology. 2013;120(8):1702–10. doi: 10.1016/j.ophtha.2013.04.029.

- 6. Chiang MF, Read-Brown S, Tu DC, Choi D, Sanders DS, Hwang TS. Evaluation of electronic health record implementation in ophthalmology at an academic medical center (an American Ophthalmological Society thesis). Trans Am Ophthalmol Soc. 2013;(111):34–56.

- 7. Chiang MF, Boland MV, Brewer A, Epley KD, Horton MB, Lim MC. Special Requirements for electronic health record systems in ophthalmology. Ophthalmology. 2011;118(8):1681–7. doi: 10.1016/j.ophtha.2011.04.015.

- 8. Gupta D, Denton B. Appointment scheduling in health care: Challenges and opportunities. IIE Trans. 2008:800–19.

- 9. Hribar MR, Read-Brown S, Reznick LG, Lombardi L, Parikh M, Yackel TR, et al. Secondary Use of EHR Timestamp data: Validation and Application for Workflow Optimization. 2015 AMIA Annual Symposium Proceedings; 2015.

- 10. Ceglowski R, Churilov L, Wasserthiel J. Combining Data-Mining and Discrete Event Simulation for a Value-Added View of a Hospital Emergency Department. J Oper Res Soc. 2007:246–54.

- 11. Glowacka KJ, Henry RM, May JH. A Hybrid Data Mining/Simulation Approach for Modelling Outpatient No-Shows in Clinic Scheduling. J Oper Res Soc. 2009:1056–68.

- 12. Rutberg MH, Wenczel S, Devaney J, Goldlust EJ, Day TE. Incorporating Discrete Event Simulation into Quality Improvement Efforts in Health Care Systems. Am J Med Qual. 2013;XX(X):1–5. doi: 10.1177/1062860613512863.

- 13. Hung GR, Whitehouse SR, O'Neill C, Gray AP, Kissoon N. Computer Modeling of Patient Flow in a Pediatric Emergency Department Using Discrete Event Simulation. Pediatr Emerg Care. 2007;23(1):5–10. doi: 10.1097/PEC.0b013e31802c611e.

- 14. Gunal MM, Pidd M. Discrete event simulation for performance modelling in health care: a review of the literature. J Sim. 2010 Mar;4(1):42–51.

- 15. Clague JE, Reed PG, Barlow J, Rada R, Clarke M, Edwards RH. Improving outpatient clinic efficiency using computer simulation. Int J Health Care Qual Assur Inc Leadersh Health Serv. 1997;10(4-5):197–201. doi: 10.1108/09526869710174177.

- 16. Hashimoto F, Bell S. Improving outpatient clinic staffing and scheduling with computer simulation. J Gen Intern Med. 1996 Mar;11(3):182–4. doi: 10.1007/BF02600274.

- 17. Cayirli T, Veral E. Outpatient Scheduling in Health Care: A Review of the Literature. Prod Oper Manag. 2003:519–49.

- 18. Arena Simulation Software [Internet]. 2015 [cited 2015 Feb 3]. Available from: https://www.arenasimulation.com/

- 19. Rohleder TR, Klassen KJ. Using client-variance information to improve dynamic appointment scheduling performance. Omega. 2000:293–302.

- 20. Cayirli T, Veral E, Rosen H. Assessment of Patient Classification in Appointment System Design. Prod Oper Manag. 2008:338–53.

- 21. Sandhu E, Weinstein S, McKethan A, Jain SH. Secondary uses of electronic health record data: benefits and barriers. Jt Comm J Qual Patient Saf. 2012;38(1):34–40. doi: 10.1016/s1553-7250(12)38005-7.

- 22. Linder JA, Haas JS, Iyer A, Labuzzetta MA, Ibara M, Celeste M, et al. Secondary use of electronic health record data: spontaneous triggered adverse drug event reporting. Pharmacoepidemiol Drug Saf. 2010;19(12):1211–5. doi: 10.1002/pds.2027.

- 23. Hersh WR. Adding value to the electronic health record through secondary use of data for quality assurance, research, and surveillance. Am J Manag Care. 2007;13(6 part 10):277–8.

- 24. Boger E. Electronic tracking board reduces ED patient length of stay at Indiana hospital. J Emerg Nurs. 2003 Feb;29(1):39–43. doi: 10.1067/men.2003.13.

- 25. Gordon BD, Flottemesch TJ, Asplin BR. Accuracy of Staff-Initiated Emergency Department Tracking System Timestamps in Identifying Actual Event Times. Ann Emerg Med. 2008 Nov;52(5):504–11. doi: 10.1016/j.annemergmed.2007.11.036.

- 26. Wiler JL, Gentle C, Halfpenny JM, Heins A, Mehrotra A, Mikhail MG, et al. Optimizing Emergency Department Front-End Operations. Ann Emerg Med. 2010 Feb;55(2):142–60.e1. doi: 10.1016/j.annemergmed.2009.05.021.

- 27. Eitel DR, Rudkin SE, Malvehy MA, Killeen JP, Pines JM. Improving Service Quality by Understanding Emergency Department Flow: A White Paper and Position Statement Prepared For the American Academy of Emergency Medicine. J Emerg Med. 2010 Jan;38(1):70–9. doi: 10.1016/j.jemermed.2008.03.038.

- 28. Hall RW. Patient Flow: The New Queueing Theory for Healthcare. ORMS Today [Internet]. 2006 Jun. Available from: http://www.orms-today.org/orms-6-06/patientflow.html

- 29. Aaker G, Read-Brown S, Sanders D, Hribar MR, Reznick L, Yackel TR, et al. Identification of factors leading to increase pediatric ophthalmology visit times using electronic health record data. Chicago, IL: American Academy of Ophthalmology; 2014.