Abstract

Preventive interventions are often designed and tested with the immediate program period in mind, with little thought given to the possibility that the intervention sample might be followed up for years, or even decades, beyond the initial trial. However, depending on the type of intervention and the nature of the outcomes, long-term follow-up may well be appropriate. The advantages of long-term follow-up of preventive interventions are discussed. They include the capacity to examine program effects across multiple later-life outcomes, the ability to examine the etiological processes involved in the development of the outcomes of interest, and the ability to provide more concrete estimates of the relative benefits and costs of an intervention. In addition, researchers have identified potential methodological risks of long-term follow-up, such as inflation of type 1 error through post-hoc selection of outcomes, selection bias, and problems stemming from attrition over time. The present paper offers seven recommendations for the design or evaluation of studies for potential long-term follow-up, organized under four areas: Intervention Logic Model, Developmental Theory and Measurement Issues; Design for Retention; Dealing with Missing Data; and Unique Considerations for Intervention Studies. These recommendations include conceptual considerations in the design of a study, pragmatic concerns in the design and implementation of data collection for long-term follow-up, and criteria for evaluating whether an existing intervention has potential for long-term follow-up. Concrete examples are provided from existing intervention studies that have been followed up over the long term.

Keywords: Intervention, Assessment Design, Longitudinal, Follow-up, Retention, Developmental

Experiments are usually designed to investigate only immediate or short-term causal effects, and to date it is rare to find studies in which participants are followed up for much more than one year after an experiment (Farrington, 2006). With long-term intervention follow-up, however, one may be able to model within-person intervention effects such as cumulative outcomes (e.g., weight status or telomere length), stability and continuity versus inconsistency, developmental sequences and cascades, and different manifestations of the same underlying construct at different ages.

Caveats have been raised, however, about the proper conduct of prevention trials that are especially relevant to long-term follow-up (e.g., Holder, 2009). For example, there are concerns about outcome variable selection, including a concern for statistical “fishing” for long-term effects, and inflation of type 1 error by capitalizing on chance findings. In addition, long-term follow-up is vulnerable to selection bias: perhaps only willing, compliant participants would agree to be part of a long-term study. A related risk of long-term follow-up is that of differential attrition: different kinds of people could be lost from intervention and control conditions, leading to artificial group differences over time (for example, if the more disordered individuals are lost from the intervention condition over time).
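To make the "fishing" concern concrete, the sketch below computes the familywise error rate, the probability of at least one spurious significant result when k outcomes are each tested at level alpha and the intervention truly has no effect on any of them: FWER = 1 - (1 - alpha)^k. It assumes the tests are independent, which real outcome batteries rarely are, so the numbers are illustrative only. The Bonferroni adjustment is included merely as one familiar correction; the remedy emphasized in this paper is to restrict tests to outcomes specified in advance by the intervention logic model.

```python
# Familywise error rate: chance of at least one false-positive "effect"
# when k outcomes are tested and the intervention truly affects none of them.
# Assumes the k tests are independent (real outcomes are usually correlated).


def familywise_error_rate(alpha: float, k: int) -> float:
    """P(at least one p < alpha among k independent tests, all nulls true)."""
    return 1.0 - (1.0 - alpha) ** k


def bonferroni_alpha(alpha: float, k: int) -> float:
    """Per-test level that keeps the familywise rate at or below alpha."""
    return alpha / k


for k in (1, 5, 20):
    print(f"{k:>2} outcomes: FWER = {familywise_error_rate(0.05, k):.3f}")

# Bonferroni-corrected per-test alpha for 20 outcomes
print(f"per-test alpha for k=20: {bonferroni_alpha(0.05, 20):.4f}")
```

With alpha = .05, five independent outcomes give roughly a 23% chance of at least one false positive, and twenty give roughly 64%.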

Aim of the Present Paper

While a compelling argument can be made for the benefits of the long-term follow-up of interventions, the actual design and planning considerations, particularly those that would minimize the concerns raised by Holder (2009) and others, have received less discussion. How does a research team plan for long-term follow-up while reducing concerns about bias? What strategies can be learned from existing interventions that have been followed up over the long term? The longitudinal follow-up of participants from a prevention trial requires consideration of numerous ethical and logistical issues related to recruitment, consent and sample maintenance. The present paper focuses on four areas to aid in the design and evaluation of studies proposing the long-term follow-up of a preventive intervention: intervention logic model, design for retention, dealing with missing data, and unique considerations for intervention studies. As a whole, the paper is intended to help researchers think proactively about designing a study that permits a greater likelihood of sample retention, with a developmentally prescient measurement package that permits appropriate follow-up of plausible long-term intervention outcomes. The present paper builds on several excellent resources that detail practical aspects of enhancing longitudinal follow-up (Magnusson & Bergman, 1990; Stouthamer-Loeber & van Kammen, 1995; van Kammen & Stouthamer-Loeber, 1998), but focuses in particular on the long-term follow-up of preventive intervention studies.

Intervention Logic Model – Developmental Theory – Measurement Considerations

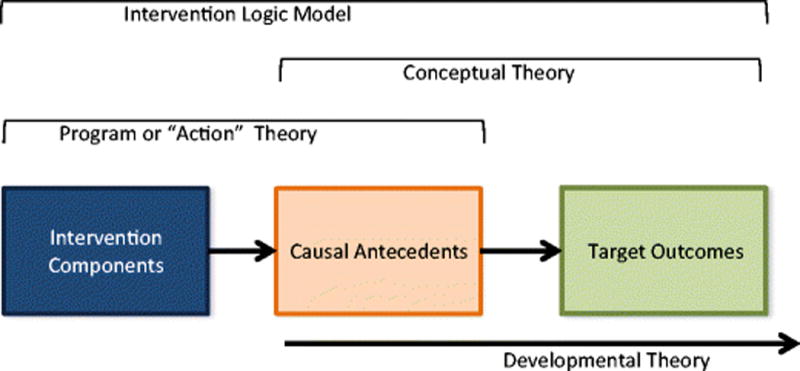

The first recommendation for designing or evaluating a study proposing the long-term follow-up of a preventive intervention is that, consistent with the Society for Prevention Research’s standards of evidence, the intervention should have a clearly specified logic model (Flay et al., 2005; Gottfredson et al., in press). As illustrated in Figure 1, an intervention logic model consists of two overlapping components: a causal theory linking causal antecedents to outcomes, and a program or “action” theory linking intervention components to causal antecedents (Chen, 1990; Gest & Davidson, 2011; MacKinnon, 2008). Specifying a logic model is critical not only because it represents the hypothesized mechanism by which the intervention is expected to achieve its effects, but also because it helps reduce the concern about capitalization on chance findings (fishing) raised by Holder (2009) and others.

Figure 1.

Intervention Logic Model. The intervention logic model specifies the theoretical mechanisms through which the intervention is expected to influence target outcomes. It consists of an action theory reflecting how the treatment will affect known antecedents of the target outcomes, and a conceptual theory drawn from etiological findings on which mediators are known to be related to the outcomes of interest. The developmental theory then specifies how these intervention consequences may unfold over time (see Figure 2).

Developmental Theory

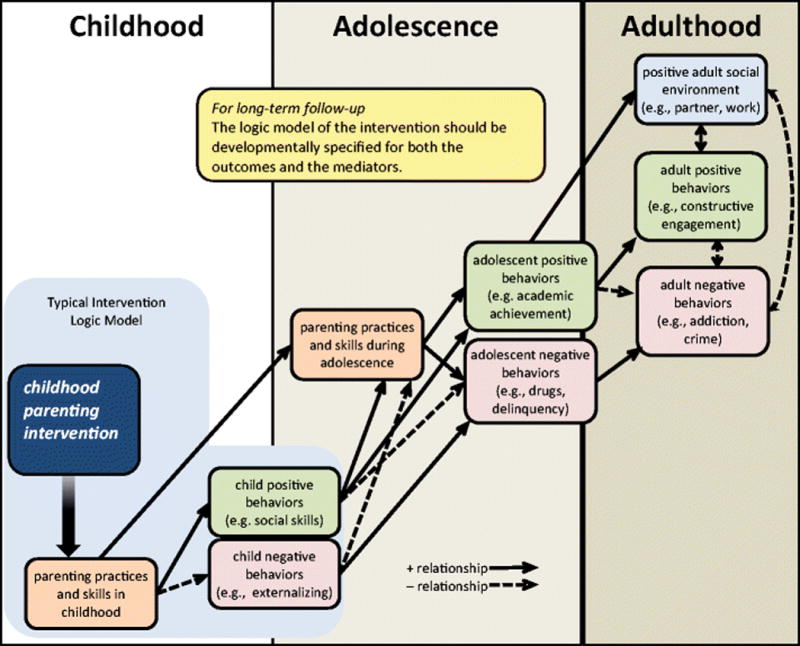

The second recommendation for designing or evaluating a study seeking to conduct a long-term follow-up of an intervention is that the intervention logic model should be considered within a developmental framework. This is perhaps the most crucial component of a priori study design for facilitating long-term follow-up. Consider, for example, an intervention designed to improve parenting practices among parents of 5- to 8-year-old children through a series of workshops and self-administered DVD and web-based lessons. Assuming that the intervention has the intended effects of changing family processes and child behavior, how might these changes play out over time? Figure 2 illustrates how an intervention logic model, typically focused on the proximal targets of the intervention, could be projected forward developmentally into subsequent environmental and behavioral consequences. Childhood-focused parenting practices learned through the intervention could later be translated by parents into the parenting skills needed to address adolescent issues. Child competencies gained, and problem behavior reduced, could in turn affect parenting practices as well as adolescent behaviors such as academic achievement, drug use and delinquency. This changed system of parenting and adolescent behavior could, in turn, affect the adult social environment experienced by the intervention participant as well as his or her positive and negative functioning. Positive developmental cascades such as these have been discussed extensively by Masten and others (Masten & Cicchetti, 2010; Patterson, Forgatch, & DeGarmo, 2010). It is the developmental specification of the intervention logic model that both permits and limits the long-term follow-up of intervention outcomes. It permits long-term follow-up by providing a plausible theory of why earlier intervention procedures might lead to effects across developmental periods or generations. It also limits the analysis, reducing the risk of type 1 error, by focusing analyses only on those outcomes that are plausible within a specified developmental cascade framework.

Figure 2.

Illustration of a developmentally-specified intervention logic model.

The Nature of Development and the Timing of Follow-up

How long does it take for addiction (for example) to develop? Are there multiple paths to addiction? Is it reversible? Is it continuous or stage-like? Collins (1991) discussed the importance of thinking about how the phenomenon under study is expected to unfold developmentally, and suggested that researchers ask: Is its development cumulative or noncumulative? Is its development unitary or multi-path? Is its development reversible? Will the growth of the phenomenon be continuous and quantitative, or will it be characterized by movement through a series of qualitatively different stages? Similarly, Cohen (1991) emphasized that, during the design phase of a study, it is essential to match the temporal spacing of follow-up assessments to the expected nature and rate of change of the phenomenon under study.

Concrete Examples from Existing Intervention Studies

In a review of 46 randomized experimental trials of parenting interventions with at least 1 year of follow-up, Sandler and colleagues (2011) noted that there was good evidence that parenting interventions could prevent a wide range of problem outcomes and promote competencies from one to 20 years later. However, they also showed that very few of these studies actually investigated the processes by which the parenting program might have produced the observed long-term effects, and they called for more intervention studies to do so.

Recent studies provide examples of a cascading-pathways model of the long-term effects of childhood intervention. Patterson and colleagues (2010) examined the long-term effects of a randomized controlled trial of a preventive intervention that used the Parent Management Training–Oregon Model (PMTO). They reviewed results from mediational analyses that supported four different potential developmental cascade models through which initial intervention outcomes may have played out over the course of nine years: a social learning model, a comorbid behavior model, a collateral environmental change model, and an interpersonal process model. One interesting feature of tests of the PMTO intervention is that intervention effects remained stable (Patterson & Fleischman, 1979), or even increased, after the end of the intervention (Beldavs, Forgatch, Patterson, & DeGarmo, 2006; Forgatch & DeGarmo, 2007). The finding of effect sizes increasing over time supports the assertion that the intervention created a system change that resulted in positive developmental cascades.

Similarly, our own study, the Seattle Social Development Project (SSDP), also included a preventive intervention nested within a longitudinal study, and provides an example of the long-term follow-up of childhood intervention. The SSDP intervention began as a delinquency and drug prevention program and sought to improve opportunities, involvement, rewards and life skills for children in elementary school. Guided theoretically by the Social Development Model (SDM, Catalano & Hawkins, 1996), we identified and developed methods of management and instruction that could be used by public school teachers and adult caretakers to set children on a positive developmental course by promoting opportunities for children’s active involvement in the classroom and family, developing children’s skills for participation, and encouraging reinforcement from teachers and parents for children’s effort and accomplishment. Early results examining intervention efficacy found significant intervention effects on targeted outcomes such as early delinquency and alcohol initiation (Hawkins et al., 1992). Importantly, Hawkins et al. also examined the effects of the intervention on theoretically targeted mediators of their intervention logic model and found that youths in the full-intervention condition reported better family communication, family involvement, attachment to family and family management as well as better school rewards, attachment, and commitment compared to controls.

The Social Development Model is a life-course developmental theory (Catalano & Hawkins, 1996; Elder, 1998) that recognizes that modal positive and problem behaviors and the nature of interactions of the individual and the environment change with development. The SDM has been articulated in submodels that include childhood, adolescence, the transition to adulthood, and adulthood. Different malleable individual characteristics and socialization forces are salient within each of these developmental submodels. The sources of opportunities, involvement, rewards, and bonding for particular behaviors change at different stages of the lifespan. For example, we have shown that the influence of bonding to family of origin on the onset of daily smoking declines in the transition to adulthood (Hill, Hawkins, Catalano, Abbott, & Guo, 2005), while experiences in the family of cohabitation, such as pregnancy and partner substance use, are important influences in adulthood (Bailey, Hill, Hawkins, Catalano, & Abbott, 2008; Epstein, Hill, Bailey, & Hawkins, 2013).

Similarly, salient outcomes change developmentally, as illustrated in Figure 2. It is this foundation of a developmentally specified theory guiding the intervention logic model that has permitted the examination of long-term effects of the SSDP intervention into adolescence and adulthood across a range of relevant outcomes. For example, by age 18 (six years post-intervention), youths in the full intervention, compared with controls, had significantly less school misbehavior, lifetime violence, and heavy alcohol use, and greater school commitment, attachment, and achievement (Hawkins, Catalano, Kosterman, Abbott, & Hill, 1999; Hawkins, Guo, Hill, Battin-Pearson, & Abbott, 2001). Also by age 18, youths in the full intervention had significantly lower lifetime prevalence of sexual intercourse, early pregnancy (or causing pregnancy), and multiple sex partners than controls (Hawkins et al., 1999). These findings were replicated at age 21; in addition, the full-intervention group showed a significantly higher probability of condom use during last intercourse (among single individuals) and a lower incidence of lifetime STI diagnosis compared with controls (Lonczak, Abbott, Hawkins, Kosterman, & Catalano, 2002). By age 21, the full intervention group, compared with controls, also showed significantly better outcomes with respect to education, employment, and mental health, and less crime (Hawkins, Kosterman, Catalano, Hill, & Abbott, 2005). By ages 24–27, the full intervention group, compared with controls, showed significantly better socioeconomic attainment and mental health (Hawkins, Kosterman, Catalano, Hill, & Abbott, 2008). Effect sizes (Cohen’s d) at ages 24–27 ranged from 0.27 (educational attainment) to 0.46 (mental health DSM-IV criterion index).
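For readers less familiar with the metric, the sketch below shows the common pooled-standard-deviation form of Cohen's d for a treatment versus control comparison. The group means, standard deviations and sample sizes are hypothetical, not SSDP data, and the cited SSDP papers may have used a different estimator or covariate adjustment. Whether a positive d indicates benefit depends on how each outcome is scored.

```python
import math


def cohens_d(mean_t: float, sd_t: float, n_t: int,
             mean_c: float, sd_c: float, n_c: int) -> float:
    """Standardized mean difference (treatment minus control), pooled SD."""
    pooled_var = ((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / (n_t + n_c - 2)
    return (mean_t - mean_c) / math.sqrt(pooled_var)


# Hypothetical group summaries (not SSDP data): a 0.3-point difference on a
# scale whose SD is 1.0 in both groups corresponds to d = 0.3.
d = cohens_d(mean_t=3.3, sd_t=1.0, n_t=150, mean_c=3.0, sd_c=1.0, n_c=150)
print(f"d = {d:.2f}")
```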

Examining intervention differences in the onset of sexually transmitted infections (STI) through age 30, Hill et al. (2013) found significant main effects of the intervention on STI onset (by age 30, 41.1% lifetime STI in the control condition vs. 27% in the full-treatment condition). Interestingly, the difference in STI onset between intervention and control conditions did not reach significance until age 16, four years after the end of the intervention. This finding of intervention differences increasing over time mirrors those discussed earlier by Patterson et al. (2010) and supports a cascading model of the long-term effects of preventive intervention. Additionally, significant ethnicity-by-intervention effects were found for the full-intervention condition, such that the reduction in STI was significantly stronger for African Americans and Asian Americans than for Caucasian Americans. We conducted mediational analyses of these intervention effects among the African American participants by extending the logic model of the intervention into adolescence, and found that (1) intervention condition significantly predicted the targeted mediators of prosocial adolescent family environment, school bonding and age of initiation of sexual behavior, and (2) these variables partially mediated intervention effects on STI hazard.
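The two reported cumulative proportions can be translated into a few familiar absolute and relative summaries, as in the sketch below. These are crude, unadjusted figures computed only from the two percentages in the text; they ignore censoring and the timing of onset that a hazard-based analysis accounts for, so they are descriptive only. The function name is hypothetical.

```python
def risk_summary(p_control: float, p_treatment: float) -> dict:
    """Crude summaries of a difference between two cumulative proportions."""
    risk_difference = p_control - p_treatment   # absolute reduction
    relative_risk = p_treatment / p_control     # treatment relative to control
    return {
        "risk_difference": risk_difference,
        "relative_risk": relative_risk,
        "relative_reduction": 1.0 - relative_risk,
        "number_needed_to_treat": 1.0 / risk_difference,
    }


# Cumulative lifetime STI proportions by age 30, as reported in the text
summary = risk_summary(p_control=0.411, p_treatment=0.27)
for name, value in summary.items():
    print(f"{name}: {value:.3f}")
```

On these crude figures, the full-intervention group had about 14 percentage points fewer lifetime STI cases by age 30, a relative reduction of about one-third.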

As with the Parent Management Training-Oregon Model (Patterson et al., 2010) intervention described earlier, the initial SSDP intervention logic model was focused on childhood problem behavior and its antecedents. However, guided by a developmentally articulated theory, we have been able to project and test theoretically plausible intervention effects and their mediators forward into adolescence and young adulthood. Thus, two critical recommendations drawn from our work, and from findings of others examining long-term intervention effects and their mechanisms, are to specify a clear intervention logic model, and think it forward developmentally.

Measurement Considerations

Our third recommendation in planning or evaluating a study for long-term intervention follow-up is that the instrument design should take potential future developmental cascades into account. Explicitly specifying an intervention logic model and then projecting it forward developmentally has measurement implications for the original design of the study. Like our own research group and Patterson et al. (2010), many researchers now conducting long-term follow-ups of their interventions initially focused their conceptualization and measurement package on immediate intervention targets. However, recognizing that intervention effects on the mediators and outcomes might cascade into other domains and behaviors encourages researchers to establish early measures of those cascading behaviors at the outset. For example, reducing depression or risky sexual behavior and improving physical health in adulthood may not be foci of a preventive intervention directed toward young children; but if these were plausible distal consequences of successful intervention, the designers of the study would be prescient to include simple early measures of affective disorder and early sexual behavior, as well as height and weight, at developmentally appropriate points in the assessment. Potential cascading intervention outcomes could be added sequentially, at age-appropriate waves of assessment.

One substantial challenge of long-term follow-up is striking a balance between asking developmentally-appropriate questions as the sample ages versus keeping the questions unchanged to permit consistent comparisons and repeated measures analyses. In some cases, the forms of the questions and response options may need to be adapted by age for the same respondents. For example, one may use simple sentence structures and response formats for a younger child (6–9 year old) interview (e.g., Does your family usually eat dinner together? Yes – No), and then more informative structures and responses for the older child (10+ year old) interviews (How many evenings a week does your family usually eat meals together? 0 times a week, 1–2 times a week, 3–4 times a week, 5–6 times a week, 7 times a week). In other cases, entirely new domains become relevant in adolescence (e.g., sexual risk behavior) that were not asked before, and other domains (e.g., school misbehavior) become irrelevant in adulthood. Striking this balance between measurement consistency across time and developmentally-appropriate assessment is an ongoing, serious discussion at each assessment.
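One way to retain some comparability across the two versions of the family-meals item is to collapse the older-child frequency responses onto the younger-child Yes/No scale, as sketched below. The cutoff for "usually" is a hypothetical analytic choice, and the two items are not worded identically ("dinner" versus "meals"), so the harmonized variable is approximate and discards the extra detail the older-child format was designed to capture.

```python
# Older-child (10+) response options for the family-meals item, in order.
FREQUENCY_OPTIONS = [
    "0 times a week",
    "1-2 times a week",
    "3-4 times a week",
    "5-6 times a week",
    "7 times a week",
]


def to_younger_child_scale(response: str, cutoff: str = "5-6 times a week") -> str:
    """Collapse the 5-category item onto the younger-child Yes/No item.

    The cutoff is a hypothetical analytic decision: responses at or above it
    are coded 'Yes' to 'Does your family usually eat dinner together?'.
    """
    if response not in FREQUENCY_OPTIONS:
        raise ValueError(f"unrecognized response: {response!r}")
    rank = FREQUENCY_OPTIONS.index(response)
    return "Yes" if rank >= FREQUENCY_OPTIONS.index(cutoff) else "No"


# "3-4 times a week" is coded "No" under the default cutoff,
# but "Yes" if the analyst lowers the cutoff to that category.
print(to_younger_child_scale("3-4 times a week"))
print(to_younger_child_scale("3-4 times a week", cutoff="3-4 times a week"))
```

Making the cutoff an explicit parameter keeps the analytic decision visible, so its sensitivity can be checked rather than buried in recoding syntax.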

Pragmatic lessons learned about project management and data collection

Our fourth recommendation in planning or evaluating a study for long-term follow-up is that data collection efforts should be implemented to maximize sample retention and data validity. This section provides practical guidance to those designing or evaluating an intervention study for the possibility of long-term follow-up. We draw on our own experience conducting the follow-up field periods for a range of interventions as well as traditional longitudinal panel studies. In 1990, the Social Development Research Group at the University of Washington established a central unit to provide survey research services to its investigators. During the last twenty-five years, this unit has developed the systems, procedures and broad knowledge base necessary to ensure successful recruitment and retention across a variety of survey modes and study populations, including general households and specialized populations such as children, at-risk youth, ethnic and other cultural groups, people undergoing treatment for drug addiction, adjudicated delinquents, teachers, and key informants including elected and other public officials. This professionalization of the data collection staff (rather than relying on whichever undergraduate students are available at the moment) has greatly enhanced recruitment and retention.

Maintain a consistent field management staff over multiple waves of data collection

It is impossible to document everything that occurs over the course of a data collection wave, and it is often the random, unique bits of information about the field period or particular respondents that make or break the next experience or interaction. Over time and across waves, field managers can become an invaluable library of historical knowledge that thoughtfully informs next steps. Retaining this historical knowledge is hard if the design has intermittent assessments that lead to regular staff turnover. It is typically between field periods, during the downtime, that valuable staff and project knowledge and skills are lost. Identify activities within and across projects to which field managers can contribute during this downtime, thus preventing staff attrition between major field periods.

Data Collection Issues

In addition to thinking about how the causal model and measurement battery may change over time, consider the natural life transitions that participants may experience beyond the original grant timeline, such as graduating from school, living independently from parents, getting married and/or having children. Sample dispersion is inevitable and will take researchers beyond the original catchment area. How might these and other changes affect survey instruments and the choice of data collection modes? How will you follow up with participants who move away? Select a plan that optimizes success, consistency and retention while minimizing respondent burden and overall study costs. Longitudinal follow-up with low attrition can be costly because of the additional effort required of field staff and management: as the number of cases completed per month decreases during a field period, the cost per completed case increases. Plan for adequate funding by building enough time into the grant application to respond effectively to the portion of the study sample that will be most resistant to participating. The following points summarize our primary recommendations.

Design consent materials to anticipate long-term follow-up

Leave the door open for long-term follow up even if current funding only gets you part way there. This is especially important with respect to human subjects (Institutional Review Board, IRB) review issues and obtaining consent from the sample. Ideally, you want to avoid having to reconsent your sample under new or different terms at a later date. Specific considerations with regard to the IRB application include:

Data retention. Request to retain data indefinitely, and include this language in the consent form;

Certificates of Confidentiality. If appropriate, apply for a federal Certificate of Confidentiality (http://grants.nih.gov/grants/policy/coc) and describe it in the consent form. Be aware that a Certificate of Confidentiality provides some protection, but is not an absolute guarantee against disclosure (Beskow, Dame, & Costello, 2008; Wolf et al., 2012).

Incarceration. If there is any chance that some participants may become incarcerated, mention this in your IRB application since prisoners are a protected population needing special review.

Children. Ask for assent from the child and consent from parents until age 18, and reconsent the person after their 18th birthday.

Multi-modal contacts. Consider in advance what variations in participant correspondence might arise and have them approved by your IRB so that you are not delayed at the time the situation arises. For example, draft multiple versions of reminder contacts to be delivered via a variety of media (text, email, Facebook) and get them all approved prior to the start of the field period.

When designing your consent form, consider the following:

Keep the language open-ended with respect to time. In all study correspondence, keep language open-ended for a potentially long-term follow up, but be careful not to imply that you intend to interview them for the rest of their life. It is a delicate balance between keeping the door open for potential continuation versus scaring off potential participants because they think you are asking them for a lifetime commitment. Examples: If you agree to this study, we will interview you annually; We would like to continue to interview you over time/as you grow older; This study is currently funded until [date], however funding will be sought to continue the study beyond [date].

Obtain consent to collect locating information. Set the stage for on-going contact and the need for collecting locating information. In the consent form include language such as: Since this is a long-term study, it is extremely important that we are able to get in touch with you for follow-up interviews. Therefore, we will maintain contact with you even if you move or your child changes schools. To help keep us in touch, we will ask you for information such as a current address or telephone numbers. If you move, other contacts may be made as needed with you, your child’s school and/or other names provided by you to verify address and telephone information.

Enable others to provide information. Obtain explicit, written consent from the respondent to contact others (schools, other “locators”) for updated contact information. Example consent language: By providing my signature below, I agree to allow study staff to contact my child’s school and other contact persons regarding our whereabouts, to obtain updated address or telephone numbers.

Develop a database to track sample members over time

From the outset, it will be essential to have a database capable of tracking address histories (with associated start and stop dates), contact histories, locator information, details about respondents and interview experiences, survey statuses and details for each wave, problem codes, and other anecdotal information. In addition, due to rapidly advancing technologies, there is an increasing need for a project database to be able to interface with information coming from a variety of locations and devices: web surveying platforms, interviewers in the field with smart phones or other mobile devices, and field supervisors at remote locations. Your database must be adaptable and supportable over the long haul, so pick a platform that has some longevity and is user friendly. For example, Microsoft Access currently is commonly used, sometimes in conjunction with Microsoft SQL Server depending on the size and budget of the project. Terminal server installations allow ease of access from either inside or outside of the office. Note that address histories should be tracked not only for locating purposes, but also in the event that geo-coded built environment data (e.g., neighborhood poverty or tobacco or alcohol outlet density measures) could be built into the dataset over time for analysis purposes.
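The address-history requirement can be sketched as a simple data model in which each address carries start and stop dates, so that a participant's address on any given date can be recovered later, whether for locating or for linking geo-coded neighborhood measures. This is a Python sketch under our own assumptions: the class, field and method names are hypothetical, and in a database such as Access or SQL Server the same structure would typically be a participant table joined to separate address-history and contact-history tables.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import Optional


@dataclass
class AddressSpell:
    """One address with the dates the participant lived there."""
    street: str
    city: str
    start: date
    stop: Optional[date] = None  # None means this is the current address

    def covers(self, day: date) -> bool:
        return self.start <= day and (self.stop is None or day <= self.stop)


@dataclass
class ContactAttempt:
    """One entry in the contact history (doubles as paradata)."""
    when: date
    mode: str     # e.g. "phone", "text", "mail", "in-person"
    outcome: str  # e.g. "completed", "no answer", "refused"


@dataclass
class Participant:
    participant_id: str
    addresses: list[AddressSpell] = field(default_factory=list)
    contacts: list[ContactAttempt] = field(default_factory=list)

    def move(self, street: str, city: str, moved_on: date) -> None:
        """Close the open address spell the day before the move; open a new one."""
        for spell in self.addresses:
            if spell.stop is None:
                spell.stop = moved_on - timedelta(days=1)
        self.addresses.append(AddressSpell(street, city, moved_on))

    def address_on(self, day: date) -> Optional[AddressSpell]:
        """Address in effect on a given date, e.g. for linking geo-coded data."""
        for spell in self.addresses:
            if spell.covers(day):
                return spell
        return None


# Usage with made-up records
p = Participant("P0001")
p.move("123 Example St", "Seattle", date(1990, 9, 1))
p.move("456 Sample Ave", "Tacoma", date(2001, 6, 15))
p.contacts.append(ContactAttempt(date(2002, 3, 1), "phone", "completed"))
print(p.address_on(date(1995, 1, 1)).city)  # Seattle
print(p.address_on(date(2005, 1, 1)).city)  # Tacoma
```

Closing the previous spell automatically on each move keeps the history gap-free, which is what later geo-coded linkage depends on.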

Be thoughtful in study branding

In designing your study materials beyond the actual content of the assessment (e.g., flyers, newsletters, letterhead, etc.), again it will be essential to look at them with an eye on the future. Your project identity will need staying power. You want it to promote bonding or branding to your study. Think carefully about your study name and logo. Choose something that is memorable, meaningful and will remain relevant as your population ages. Will it still resonate with them if they are older? If they have moved away? A study called “The Boston Kids Project” will be less appealing and relevant to participants who are no longer “kids” and/or have moved away from Boston.

Collect strategic locator information

Another essential element of your first contact with respondents should be to collect detailed “locator” contact information. We ask respondents to nominate at least 3 people who will always know where they are (locators). Request the full name, including middle name or middle initial, of all parent figures (for minors) and their relationship to the respondent. Focus on collecting “good” locators: people who are likely to stay in touch with the respondent no matter what, such as a parent, grandparent, or lifelong family friend. Avoid less stable locators such as a youth’s best friend or a teenager’s current boyfriend or girlfriend. Train your interviewers that collecting contact and locating information is just as important as collecting the survey data, and ask them to probe for clarifying details if the information is ambiguous or incomplete. In addition to human locator contacts, one should ideally also collect the respondent’s birth date, social security number, addresses, multiple phone numbers (home, cell, work), email addresses, and social network sites. If possible, collect these data on the 3 locators as well. Given rising concerns about identity theft, our suggestion would be to NOT ask for all of this at the point of recruitment. Ask for this information after the study has had a chance to build some rapport and trust, perhaps after the initial data collection is completed. Often it is not possible to collect social security numbers from respondents; each study presents different privacy concerns. Asking for as many pieces of information as possible to reduce future attrition and loss to follow-up is a wise strategy, but anticipate that some of these requests will be denied by the IRB and/or by any agencies or government bodies providing sample information.
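Interviewers' probing for locator details could be supported by a simple completeness check run before the interview closes, as sketched below. The stable and less-stable relationship categories and the minimum of three locators come from the guidance above; the name heuristic (fewer than three name parts suggests a missing middle name or initial), the single phone field, and all names and numbers are hypothetical simplifications.

```python
from dataclasses import dataclass

# Relationship categories taken from the guidance above; extend as needed.
STABLE = {"parent", "grandparent", "lifelong family friend"}
LESS_STABLE = {"best friend", "boyfriend", "girlfriend"}


@dataclass
class Locator:
    full_name: str
    relationship: str
    phone: str = ""


def locator_problems(locators: list[Locator], minimum: int = 3) -> list[str]:
    """Return interviewer-facing warnings; an empty list means none found."""
    problems = []
    if len(locators) < minimum:
        problems.append(f"only {len(locators)} locator(s); ask for at least {minimum}")
    if not any(loc.relationship in STABLE for loc in locators):
        problems.append("no stable locator (parent, grandparent, lifelong family friend)")
    for loc in locators:
        # Crude heuristic: fewer than three name parts suggests no middle name/initial
        if len(loc.full_name.split()) < 3:
            problems.append(f"{loc.full_name!r}: probe for middle name or initial")
        if loc.relationship in LESS_STABLE:
            problems.append(f"{loc.full_name!r}: less stable locator ({loc.relationship})")
        if not loc.phone:
            problems.append(f"{loc.full_name!r}: no phone number recorded")
    return problems


roster = [
    Locator("Maria L. Example", "parent", "555-0100"),
    Locator("Sam Sample", "best friend"),
]
for warning in locator_problems(roster):
    print(warning)
```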

Maintain the sample during and between waves

In a long-term intervention follow-up, participants will have no study activities to complete for months or years between assessments. During this time, it is important to keep in touch with participants to maintain their commitment to the project and to keep their contact information up to date. Over time, this becomes trickier and more tailored; one size does not fit all. For most studies, an important key to successful longitudinal follow-up is maintaining a positive relationship with participants, and the key to this is to genuinely treat these as relationships. Participants do not want to feel like “research subjects”; they typically want to be treated as collaborating, thinking, consenting people. One way to do this is to provide them with systematic updates of study findings. In the past, these updates were typically delivered via a newsletter in the mail. As social networking has grown in popularity, many studies now update participants on new findings or important deadlines via a study website, Facebook page or Twitter account. These media can also be used to tell participants how many people have already completed their study activities, in an attempt to reach participants who have yet to complete them. As discussed later, however, this must be done very carefully, and in some cases avoided altogether, so as not to bias findings, for example by creating expectancies or demand characteristics or by alerting participants to hypotheses (for a thoughtful discussion, see also Fernandez, Skedgel, & Weijer, 2004).

Other strategies include letting respondents know what other participants are saying about the study (including quotes from open-ended responses regarding participation); sending birthday and/or holiday cards (which can double as a locating strategy between waves by using Address Service Requested); and providing adequate incentives that escalate as respondents get older and that honor their time and contribution to your study. For incentives, estimate the average hourly wage of your sample and compensate accordingly. Additional reimbursement may be offered to cover lost time at work, travel costs, or childcare for participants for whom these are barriers to participation. In our experience, we have consistently obtained IRB approval to do so because we are removing barriers to participation that participants from lower socioeconomic backgrounds may disproportionately face relative to the rest of the sample. Submit all potential fall-back strategies with the initial IRB application.

It is important to understand your sample and the ways its members like to communicate. Stay current and relevant; it is essential to stay on the cutting edge of contact strategies, especially when dealing with youth and young adult populations. Texting and Facebook may be better avenues than phone messages or email for contacting youth or young adults, and new social networking platforms will rise in prominence over time. Equip your interviewers with the current technologies of the day (e.g., currently, smartphones) so they can access social networking media while in the field. For other populations, a postal letter may still speak volumes. Try different strategies and see what people respond to.

Use paradata (information about the process of survey data collection, such as number of contact attempts, interviewer observations, etc., Nicolaas, 2011) and information collected in past waves to guide your approach with individuals or groups of respondents. Draft multiple versions of reminder contacts to be delivered via a variety of media, and use them as needed, with all or specific segments of your population. Be prepared to offer multiple ways to complete the survey for a small percentage of respondents (e.g., in-person vs. web-based vs. telephone vs. paper-and-pencil), or have a planned approach for offering alternate methods to all at defined points in your field period (e.g., start with a web-based offer, and follow-up in person with web non-responders). Be flexible and willing to accommodate individual needs. Your preferred mode of data collection might be the primary barrier to a completed interview for a portion of participants. For example, the technology-phobic person might request to do the survey on paper rather than the web; or the exceedingly private individual might not want an in-person interview and would prefer to do it on-line. Although accommodating special requests is more work, efforts to accommodate individual needs improve the retention rate, and create a positive lasting impression on the respondent. In many cases, it boils down to just listening to their concerns, validating them, and doing your best to be flexible and accommodating when possible. This is the cornerstone of effective refusal prevention.
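A planned, web-first approach with in-person follow-up can be written down as an explicit decision rule that field staff apply to each respondent's paradata. The sketch below is a minimal illustration only. The `Paradata` record, the `next_contact` function, and the cut-offs (21 days of web-only contact, at most 3 web invitations) are hypothetical and are not the SSDP protocol. Any real rule would be tuned to the study's own field period and IRB-approved procedures.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Paradata:
    """Hypothetical per-respondent paradata tracked during a field period."""
    days_into_field: int                  # days since the field period opened
    web_invites_sent: int                 # web invitations/reminders already sent
    completed: bool = False
    requested_mode: Optional[str] = None  # e.g., "paper" if the respondent asked for it


def next_contact(p: Paradata, web_only_days: int = 21, max_web_invites: int = 3) -> str:
    """Choose the next contact step under an illustrative web-first design.

    The default cut-offs are placeholders, not recommendations.
    """
    if p.completed:
        return "none"
    if p.requested_mode is not None:
        # Honor an explicit request, e.g., paper for a technology-averse respondent.
        return p.requested_mode
    if p.days_into_field < web_only_days and p.web_invites_sent < max_web_invites:
        return "web reminder"
    # Web non-responders past either cut-off are followed up in person.
    return "in-person visit"


print(next_contact(Paradata(days_into_field=5, web_invites_sent=1)))   # web reminder
print(next_contact(Paradata(days_into_field=30, web_invites_sent=3)))  # in-person visit
print(next_contact(Paradata(30, 3, requested_mode="paper")))           # paper
```

When the rule lives in code or in the case-management system, escalation stays consistent across interviewers. The same paradata fields are also recorded along the way, so they can later serve as auxiliary variables in the analysis.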

Invest in locating and reconnecting with lost participants

Inevitably, some respondents will lose interest or experience burnout, some will become disillusioned, and some will become lost. Some are lost due to geographic mobility. All 808 of our SSDP study participants started out in 5th grade classrooms in Seattle. By age 33 they lived in 38 states and 9 countries. However, we still obtained a 92% response rate, and 90% of all interviews were conducted in person. The remainder were completed by web (7%), paper (2%), or telephone (1%). Expect that your sample will disperse and that even local participants could be hard to find. The sample maintenance strategies outlined above will help to address many of these issues, but what do you do when you simply can’t find your respondents?

There is an established literature documenting successful locating strategies (Cotter, Burke, Stouthamer-Loeber, & Loeber, 2005; Farrington, Gallagher, Morley, St. Ledger, & West, 1990; Haggerty et al., 2008). These strategies include the use of locating information gathered at earlier data collection points, contact with participants’ social and family networks, frequent telephone calls and in-person visits, the use of public records such as state and national death records, the use of public databases easily accessed through the internet, and the use of paid searches of the National Change of Address (NCOA) database and commercial databases.

In locating, you must employ the same strategies you use with respondents to establish relationships and build rapport with all potential gatekeepers, locators, and others in the respondent’s social and community networks. This takes time, but it is worth the effort to build trust, as these locators and gatekeepers are often your key to unlocking useful information and can even become your allies in getting in contact with your respondent. Use your university or government affiliation (if relevant) and any available consent or release forms to help establish your legitimacy. Devote as much thought and effort to contacting gatekeepers and locators as you do to contacting respondents. Vary the approach and message to appeal to different sensibilities. Make use of emerging media approaches and social networking sites such as Facebook, LinkedIn, Twitter, and text messaging, both as tools for locating participants and for contacting respondents and locators. Interviewer staff members can, for example, establish a work-related Facebook page, complete with photos and friends; photos can be taken at the office, and “friends” can be the other interviewers on the team. At all times, remain ethical and within the bounds of your IRB-approved protocol, and protect the confidentiality of study participants.

Successful locating is a well-honed skill that takes significant time. For this reason, it may be best to centralize locating efforts with one person or a small team and to keep locating tasks separate from interviewing tasks. Devote adequate resources to locating as you build your proposed project budget.

Minimizing and Dealing with Missing Data

Our fifth recommendation is to minimize missing data and to deal with it properly when it occurs.

Attrition is a serious threat to the internal and external validity of findings in long-term follow-up studies. One of the great challenges of long-term follow-up of an intervention is that the maximum sample size is fixed at the outset (those who received the intervention plus the control group) and can only decline from then on through death and attrition. The main SSDP longitudinal panel was constituted when study participants were 10 years old. The follow-up retention rates at ages 11, 12, 13, 14, 15, 16, 18, 21, 24, 27, 30, and 33 were 87%, 69%, 81%, 97%, 97%, 96%, 94%, 96%, 95%, 94%, 91%, and 92%, respectively. Three points are important to note here. First, retention improved over time. Failing to reach a participant in one wave does not mean that person is out of the study. Except for those who are deceased or have asked to be removed from the study, attempt to locate and interview all of the original baseline participants at each wave, regardless of prior success. Second, note the drop in retention at age 12. This drop to 69% resulted from a change in consent procedures from passive consent through classroom administration to active consent (parent signatures required). Be aware that active consent of child participants will likely require extra efforts to maximize retention. Third, high retention is possible in a long-term follow-up, even twenty-three years after the end of the intervention.
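Retention figures of this kind translate directly into effective sample size, and therefore into statistical power. The sketch below gives a rough illustration using a normal approximation for a two-sided, two-group comparison of means. It assumes that the retained sample is split evenly between two arms, that attrition is random, and that the true effect is a hypothetical standardized difference of d = 0.2. The SSDP design was more complex than two equal arms, so the numbers are illustrative only. The function name `approx_power` is our own.

```python
from statistics import NormalDist


def approx_power(n_total: int, retention: float, d: float, alpha: float = 0.05) -> float:
    """Normal-approximation power for a two-sided, two-group comparison of means.

    Assumes the retained sample is split evenly between two arms and that
    attrition is random (an optimistic assumption).
    """
    z = NormalDist()
    n_per_group = n_total * retention / 2
    # Noncentrality: d divided by its standard error, sqrt(2 / n_per_group).
    ncp = d * (n_per_group / 2) ** 0.5
    z_crit = z.inv_cdf(1 - alpha / 2)
    return z.cdf(ncp - z_crit)  # ignores the negligible opposite tail


# 808 baseline participants (SSDP) and a hypothetical small effect, d = 0.2
for rate in (0.92, 0.69):
    print(f"retention {rate:.0%}: power ~ {approx_power(808, rate, 0.2):.2f}")
```

Under these assumptions, power is roughly 0.78 at 92% retention and roughly 0.66 at 69% retention. Attrition is rarely random, so this calculation is best read as an optimistic bound on what attrition costs.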

Maintaining a high proportion of the original sample is essential for maintaining statistical power to detect hypothesized relationships among variables of interest (Hansen & Collins, 1994). Furthermore, Hansen, Tobler, and Graham (1990) established that a target retention rate of 87% for studies of three or more years’ duration is required to minimize threats to internal and external validity. Minimizing attrition is particularly important in studies where problem behavior such as violence or drug use and abuse is of primary interest. Research has shown that individuals who engage in frequent antisocial behavior or who are exposed to high levels of risk for problem behaviors are the most difficult to locate and interview at follow-up (Cotter, Burke, Loeber, & Navratil, 2002; Cotter et al., 2005). In our own SSDP study, we compared data for participants who completed their interviews early in the field period at age 24 with data from the last 20% of participants interviewed (Fleming, Marchesini, Haggerty, Hill, & Catalano, under review), and found that late completers were more likely to be African American, male, and high school dropouts. Those interviewed late in the field period were less likely to have a credit card and more likely to receive public assistance. Late completers were also more likely to have been incarcerated, to have started a fight in the prior year, and to be daily smokers. Thus, different types of people will be lost to the study if one stops the field period early, compromising not only power but also external validity. The value of information gained in long-term follow-up can be compromised as a function of attrition. Importantly, failing to maximize retention may also increase the risk of type 2 error: not finding an intervention effect that is truly there.
If the intervention was successful in reducing problem behavior, and higher-risk individuals tend to be assessed later in a field period, stopping the field period early increases the chance of differential attrition by intervention condition. Specifically, one risks losing more high-risk participants from the control condition. That group then appears artificially less risky, which works against one’s chance of detecting a significant intervention effect.
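A small, entirely hypothetical roster makes this mechanism concrete. In the roster, the intervention halves the prevalence of problem behavior (40% vs. 20%), and high-risk participants tend to complete their interviews late. If the field period stops at day 60, the study loses three of the four high-risk controls but only one of the two high-risk intervention participants. The estimated effect therefore shrinks toward zero, even though the true effect is unchanged. The roster values and function names are illustrative assumptions.

```python
# Hypothetical roster: (condition, problem_behavior 0/1, day the interview was completed)
roster = (
    [("control", 1, day) for day in (30, 70, 75, 85)]        # high-risk controls, mostly late
    + [("control", 0, day) for day in (10, 15, 20, 25, 40, 50)]
    + [("intervention", 1, day) for day in (40, 80)]         # fewer high-risk after intervention
    + [("intervention", 0, day) for day in (10, 12, 15, 20, 25, 30, 35, 45)]
)


def interviewed(rows, condition, stop_day):
    """Outcomes for one condition among those interviewed by stop_day."""
    return [y for c, y, day in rows if c == condition and day <= stop_day]


def estimated_effect(rows, stop_day):
    """Control minus intervention prevalence among those interviewed by stop_day."""
    ctl = interviewed(rows, "control", stop_day)
    trt = interviewed(rows, "intervention", stop_day)
    return sum(ctl) / len(ctl) - sum(trt) / len(trt)


for stop in (90, 60):
    kept_ctl = len(interviewed(roster, "control", stop))
    kept_trt = len(interviewed(roster, "intervention", stop))
    print(f"stop at day {stop}: retained {kept_ctl}/10 control, {kept_trt}/10 intervention, "
          f"estimated effect {estimated_effect(roster, stop):.3f}")
```

With the full field period, the estimated effect is 0.200. When the field period stops at day 60, retention is 7/10 in the control arm and 9/10 in the intervention arm, and the estimated effect falls to about 0.032 (1/7 minus 1/9).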

The prior section discussed design and implementation strategies to maximize retention over the long term. Inevitably, however, some attrition will occur, and these data are likely not to be missing at random. Further, it is not unusual in long-term studies for individuals to be missing from one wave of data collection but present in subsequent waves. Research has shown that many missing data strategies (e.g., listwise and pairwise deletion, or mean substitution) can produce biased estimates of means, variances, covariances, and standard errors (Graham, 2009). Graham and others have shown that techniques such as maximum likelihood estimation and multiple imputation provide unbiased parameter estimates and standard errors when the data are missing at random. When the data are not missing at random, these techniques still provide the least biased estimates compared with other methods.

Graham (2009) and others have emphasized that, when using these procedures, probably the single best strategy for reducing bias due to missing data is to include good auxiliary variables in the missing data model. Good auxiliary variables are covariates that are useful for predicting missing values. One potential set of good auxiliary variables could come from the interview paradata discussed above. For example, keep track of the mode used, number of contact attempts, number of broken appointments, and interview location for each person, and include these paradata in the analysis dataset. These data are useful to analysts not only as a validity check on responses but also for improving parameter estimation in the presence of missing data, to the extent that they reflect potential mechanisms of missingness. Thus, our fifth recommendation is that analyses of long-term intervention follow-up should employ these missing data techniques (e.g., maximum likelihood or multiple imputation) to make maximum use of all available data in the face of attrition.
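The sketch below illustrates why auxiliary paradata matter. It simulates a hypothetical outcome that is related to the number of contact attempts. Harder-to-reach respondents (more attempts) are more likely to be missing, so the data are missing at random given the paradata. The sketch compares the complete-case mean with a multiple-imputation estimate whose imputation model includes contact attempts, and pools the imputed estimates with Rubin's rules. It is a deliberately simplified, single-predictor version of normal linear-regression imputation written with the Python standard library. All data-generating values and function names are assumptions. Real analyses would use established missing-data software with a much richer imputation model.

```python
import random
import statistics


def simulate(n=2000, seed=1):
    """Hypothetical data: (contact_attempts, true_outcome, observed_outcome_or_None)."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        attempts = rng.randint(1, 10)                  # paradata: contact attempts
        y = 2.0 + 0.5 * attempts + rng.gauss(0, 1)     # outcome rises with attempts
        missing = rng.random() < 0.05 * attempts       # harder to reach, more often missing
        rows.append((attempts, y, None if missing else y))
    return rows


def multiply_impute_mean(rows, m=20, seed=2):
    """Estimate the outcome mean by normal-regression multiple imputation on attempts."""
    rng = random.Random(seed)
    obs = [(a, y) for a, _, y in rows if y is not None]
    xs, ys = [a for a, _ in obs], [y for _, y in obs]
    n_obs, mx, my = len(obs), statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    b1 = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    rss = sum((y - my - b1 * (x - mx)) ** 2 for x, y in zip(xs, ys))
    estimates, within = [], []
    for _ in range(m):
        # Draw residual variance and coefficients from their posterior (flat prior)
        # so that the imputations reflect parameter uncertainty.
        sigma2 = rss / rng.gammavariate((n_obs - 2) / 2, 2)   # scaled inverse chi-square
        a_star = my + rng.gauss(0, (sigma2 / n_obs) ** 0.5)   # intercept at mean attempts
        b_star = b1 + rng.gauss(0, (sigma2 / sxx) ** 0.5)
        completed = [
            y if y is not None else a_star + b_star * (a - mx) + rng.gauss(0, sigma2 ** 0.5)
            for a, _, y in rows
        ]
        estimates.append(statistics.fmean(completed))
        within.append(statistics.variance(completed) / len(completed))
    # Rubin's rules: total variance = mean within-imputation + (1 + 1/m) * between.
    q_bar = statistics.fmean(estimates)
    total = statistics.fmean(within) + (1 + 1 / m) * statistics.variance(estimates)
    return q_bar, total ** 0.5


rows = simulate()
full = statistics.fmean(y for _, y, _ in rows)                  # knowable only in a simulation
cc = statistics.fmean(y for _, _, y in rows if y is not None)   # listwise deletion
mi, se = multiply_impute_mean(rows)
print(f"full data {full:.2f} | complete cases {cc:.2f} | MI {mi:.2f} (SE {se:.2f})")
```

The imputation model conditions on contact attempts, so the multiple-imputation estimate should land close to the full-data mean. The complete-case mean, by contrast, is pulled downward because hard-to-reach respondents are under-represented. If contact attempts were dropped from the imputation model, the estimate would be roughly as biased as the complete-case mean.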

Unique Considerations of Intervention Studies

The long-term follow-up of intervention studies presents some unique challenges over and above those of the traditional longitudinal study. These include validity threats arising from attrition, threats to intervention construct validity arising from program participants’ knowledge of intervention results, and threats to etiological analyses due to the presence of the intervention.

Differential attrition by condition

In intervention studies that seek to examine long-term follow-up, a major threat to internal validity is differential attrition by intervention condition. An examination of differential attrition by condition asks not so much whether different numbers of participants were lost in the intervention and control conditions (which many studies nonetheless report), but rather whether different kinds of people were lost in the different conditions (which many published intervention studies do not report). In a violence prevention program, for example, if fewer high-risk individuals are followed up in the intervention condition than in the control condition, the remaining intervention group will appear artificially less risky. The implementation of CONSORT reporting for interventions provides a great advance (Grant et al., 2013; Moher et al., 2010). However, CONSORT diagrams document how many participants were retained in each condition, not necessarily whether different kinds of participants dropped out across conditions. At a minimum, studies testing intervention effects over time must examine whether those lost to attrition differed across groups on baseline measures of the outcome (e.g., aggression or violence) and on predictors of that outcome (e.g., risk factors for violence).
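The sketch below illustrates the minimum baseline check recommended above, using hypothetical baseline aggression scores. Each condition loses the same number of participants (3 of 9), so a CONSORT-style count would look identical across arms. The contrast of interest is whether those lost differ from those retained more in one condition than in the other. In a saturated 2 × 2 linear model, this difference-in-differences equals the condition × attrition interaction. The large-sample z statistic treats the four cells as independent samples and is unreliable with cells this small; it is included only to show the computation. All data and function names are illustrative.

```python
from statistics import fmean, variance

# Hypothetical baseline aggression scores by condition and follow-up status.
baseline = {
    ("control", "retained"): [2, 3, 3, 4, 2, 3],
    ("control", "lost"): [3, 4, 3],
    ("intervention", "retained"): [2, 3, 3, 2, 4, 3],
    ("intervention", "lost"): [5, 6, 5],
}


def attrition_gap(condition):
    """Mean baseline score of those lost minus those retained, within one condition."""
    return fmean(baseline[(condition, "lost")]) - fmean(baseline[(condition, "retained")])


def differential_attrition():
    """Difference in attrition gaps between conditions, plus a rough z statistic."""
    did = attrition_gap("intervention") - attrition_gap("control")
    # Standard error of a contrast among four independent cell means.
    se = sum(variance(cell) / len(cell) for cell in baseline.values()) ** 0.5
    return did, did / se


for cond in ("control", "intervention"):
    print(f"{cond}: lost minus retained = {attrition_gap(cond):.2f}")
did, z = differential_attrition()
print(f"difference in gaps = {did:.2f}, z = {z:.2f}")
```

In this example, the attrition gap is 0.50 in the control arm and 2.50 in the intervention arm. The intervention group has therefore lost its most aggressive members, and its follow-up means would look better than they should. The same logic applies to baseline predictors of the outcome as well as to the outcome itself.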

Registration as a Clinical Trial

In October 2014, the US National Institutes of Health published a revised definition of a clinical trial (NOT-OD-15-015). The revision clarified that the concept includes not only drug trials but also many of the social-behavioral interventions common to prevention science. Its current definition of a clinical trial is “A research study in which one or more human subjects are prospectively assigned to one or more interventions (which may include placebo or other control) to evaluate the effects of those interventions on health-related biomedical or behavioral outcomes.” Prevention researchers have argued that preventive interventions, as clinical trials, should be registered in a database such as ClinicalTrials.gov (Sanders & Kirby, 2014). Many peer-reviewed journals will not publish results unless the trial has been registered (for a current list see: http://www.icmje.org/journals-following-the-icmje-recommendations). The challenge of registering an intervention in such a database is that one must stipulate the targeted primary and secondary outcomes of the intervention, and it might seem odd at the point of registration to include potential outcomes 20 years into the future. One possible approach is to describe the potential for long-term follow-up in the study description and to specify the immediate anticipated outcomes for the current time frame. The study record can then be edited with each subsequently funded follow-up period to reflect the targeted outcomes of that follow-up.

History and disclosure of study findings

Researchers have noted that disclosing intervention findings to research participants reflects a moral obligation, founded in the ethical principle of respect for human dignity, to avoid treating human participants as a means to an end (Fernandez et al., 2004). However, this moral imperative generates a second risk of long-term follow-up that has not consistently been considered. Program participants who learn of program results may, in turn, change their willingness to participate and their responses in subsequent assessments. Researchers conducting intervention studies are thus torn between two concerns. An ethical standard encourages sharing program findings with participants, while the methodological concern is that participant self-selection and demand characteristics may contaminate the validity of intervention effects. In our own SSDP study, we have erred on the side of providing information about etiological findings in communications with participants, while being cautious about discussing intervention effects. In general, we have sought to maintain participant interest in the study not through frequent discussion of intervention findings in newsletters and websites, but rather through discussion of what we are learning from our etiological analyses.

Another historical consideration is that participants in both intervention and control groups might take part in a variety of other interventions that could affect study findings. At a minimum, the researcher may seek to monitor and assess these. However, especially in interventions in which participants are blind to condition, participants may not be aware of the interventions they have experienced or of the condition to which they were assigned. Unless such collateral interventions can be accurately assessed and controlled, this threat may introduce either random or systematic bias into the intervention analyses.

Etiological Analyses in Intervention Samples

Special care must be taken when conducting studies of etiology on samples that contain interventions. Analyses that do not take the intervention into account may be subject to threats to validity, because the intervention may have changed the etiological processes themselves. Our analyses of SSDP intervention effects have found differences between groups in the levels and prevalences of risk and protective factors and outcomes (Hawkins et al., 1999; Hill et al., 2013; Lonczak et al., 2002). Even so, we have found little evidence of differences among the intervention groups in the etiological processes (e.g., in the relationships between predictors and outcomes). To be cautious, in our etiological studies we have nonetheless used analysis methods that model and control for the presence of a nested intervention (e.g., Bailey et al., 2008; Hill et al., 2010; Oesterle, Hawkins, Hill, & Bailey, 2010). These studies have indicated that models constraining the parameters to be equal across intervention and control groups did not substantially decrease fit relative to unconstrained models. We nevertheless continue this practice in all ongoing etiological analyses. In particular, we recommend that etiological analyses in intervention samples test for model equivalence between the intervention and control groups. Where important differences are found, researchers should model these effects.
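The comparison of constrained and unconstrained models can be illustrated in its simplest form. The sketch below tests a single predictor-outcome slope that is either allowed to differ between intervention and control groups or constrained to be equal, using a likelihood-ratio test. It assumes ordinary least squares with normally distributed, equal-variance errors, a separate intercept for each group, and simulated data with hypothetical slopes. The SSDP etiological analyses cited above used richer multiple-group models, so this is an analogy and not a reproduction of them. The function names are our own.

```python
import math
import random


def _center(xs, ys):
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    return [(x - mx, y - my) for x, y in zip(xs, ys)]


def _slope(pairs):
    return sum(dx * dy for dx, dy in pairs) / sum(dx * dx for dx, _ in pairs)


def slope_equivalence_test(group_a, group_b):
    """LR test that the x-y slope is equal in two groups (each keeps its own intercept)."""
    groups = [_center(*group_a), _center(*group_b)]
    # Unconstrained model: a separate slope in each group.
    rss_u = 0.0
    for g in groups:
        b = _slope(g)
        rss_u += sum((dy - b * dx) ** 2 for dx, dy in g)
    # Constrained model: one pooled within-group slope.
    pooled = [pair for g in groups for pair in g]
    b_c = _slope(pooled)
    rss_c = sum((dy - b_c * dx) ** 2 for dx, dy in pooled)
    # Gaussian LR statistic; clamp tiny negative rounding error to zero.
    lr = max(len(pooled) * math.log(rss_c / rss_u), 0.0)
    p = math.erfc(math.sqrt(lr / 2))  # upper tail of chi-square with 1 df
    return lr, p


def simulate_group(rng, slope, n=500):
    """Hypothetical group: outcome = 1 + slope * predictor + N(0, 1) noise."""
    xs = [rng.gauss(0, 1) for _ in range(n)]
    ys = [1.0 + slope * x + rng.gauss(0, 1) for x in xs]
    return xs, ys


rng = random.Random(7)
control = simulate_group(rng, 0.4)
same_process = simulate_group(rng, 0.4)
changed_process = simulate_group(rng, 0.9)
print("equal slopes:     LR = %.2f, p = %.3g" % slope_equivalence_test(control, same_process))
print("different slopes: LR = %.2f, p = %.3g" % slope_equivalence_test(control, changed_process))
```

A non-significant test, as expected in the first comparison, is consistent with the intervention having left this etiological relationship unchanged. A significant test, as in the second comparison, signals that the relationship should be modeled separately by condition.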

Benefit-Cost Analysis

Benefit-cost analyses have been advocated as necessary to wisely select among a set of potential interventions available for implementation (Foster & McCombs-Thornton, 2012). Conventional benefit-cost analysis requires data on important economic indicators in adulthood (such as criminal justice system contacts, healthcare utilization, labor market earnings). However, with the exceptions discussed in this article, follow-up data from child or adolescent interventions are typically not collected more than a year after the trial has ended. Health economists have suggested that intervention trial results should be extrapolated forward to selected time horizons to better estimate the overall benefits of the intervention (Plotnick, 1994), and successful examples have been provided (Aos, Lieb, Mayfield, Miller, & Pennucci, 2004; Kuklinski, Briney, Hawkins, & Catalano, 2012). However, many models are available for this purpose, and the choice of extrapolation model can lead to very different benefit-cost estimates (Latimer, 2013; Slade & Becker, 2014). Accurate, less biased estimates of benefits of an intervention can more reliably be obtained through actual data assessed in long-term follow-up (Slade & Becker, 2014). Furthermore, interim benefit-cost analyses of a prevention trial may suggest the need to gather, in future follow-ups, data on an expanded set of measures to better capture the full economic impact of early preventive interventions.
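Simple discounting arithmetic shows how sensitive benefit estimates are to the choice of extrapolation model. The sketch below assumes a hypothetical benefit of $1,000 per participant per year observed for 5 years, a 25-year time horizon, and a 3% annual discount rate. It compares two illustrative extrapolation rules: in one, the effect persists unchanged; in the other, it decays by 20% per year after the last observation. Neither rule is drawn from the studies cited; they simply bracket plausible assumptions. The function names are our own.

```python
def present_value(annual_benefits, rate=0.03):
    """Discount benefits received at the end of years 1, 2, ... to present value."""
    return sum(b / (1 + rate) ** t for t, b in enumerate(annual_benefits, start=1))


def extrapolate(observed, horizon, decay):
    """Extend an observed benefit stream to `horizon` years, shrinking the last
    observed annual benefit by `decay` each additional year (decay=0 keeps it constant)."""
    last = observed[-1]
    extra = [last * (1 - decay) ** k for k in range(1, horizon - len(observed) + 1)]
    return observed + extra


observed = [1000.0] * 5  # hypothetical benefits measured during follow-up
for label, decay in (("constant effect", 0.0), ("20%/year decay", 0.2)):
    pv = present_value(extrapolate(observed, horizon=25, decay=decay))
    print(f"{label:>16}: present value ${pv:,.0f}")
```

Under these assumptions, the constant-effect rule yields a present value of roughly $17,400 per participant, versus roughly $7,600 under the decay rule. This is more than a twofold difference, driven entirely by an untested assumption about years 6 to 25, which observed long-term follow-up data would replace with evidence.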

Summary of Design Recommendations

In sum, we have developed seven recommendations gleaned from our experience conducting a long-term follow-up of preventive interventions at the Social Development Research Group.

1. The intervention must have a clearly specified intervention logic model.

2. The intervention logic model should be conceptualized forward and considered developmentally.

3. The instrument design and measurement package should take future potential developmental cascades into account.

4. The data collection efforts should be implemented to maximize sample retention and data validity.

5. Analytic strategies that use all available data in the face of missingness during follow-up, such as multiple imputation and maximum likelihood estimation, should be employed.

6. Analyses of long-term intervention effects must be conducted to test and adjust for differential attrition by intervention condition.

7. Etiological analyses on intervention samples should take the potential validity threat of intervention contamination into account.

Evaluation of Intervention Follow-up Potential

This paper has been written primarily to convey lessons learned from ongoing longitudinal studies to those planning a long-term follow-up of their intervention. Reviewers can also use these suggestions to evaluate the potential of a proposed long-term intervention follow-up (e.g., Is there a clearly specified intervention logic model? Has the logic model been projected longitudinally in a manner that motivates and defines the scope of the follow-up?). In addition, it is reasonable for reviewers to require some evidence of intervention efficacy, either on the targeted outcomes themselves or at least on the proposed mediators of the intervention logic model. Some targeted processes, such as school tolerance of and responses to bullying, change relatively rapidly. In such cases, one might prefer to see intervention effects on targeted behaviors before investing in a longer-term follow-up. For example, the Olweus Bullying Prevention Program has been shown to produce significant intervention effects 8 months following implementation (Olweus & Limber, 2010). Whether this change in school climate has a cascading impact on other outcomes, such as better adolescent mental health and attainment, could still be proposed and tested. Other processes of change are slower, and evidence of intervention effects on the mediators may be sufficient. For example, Hawkins and colleagues note that community-wide change through coordinated prevention planning may take several years to unfold (Hawkins, Catalano, et al., 2008). In their randomized controlled trial testing the Communities That Care prevention system, Hawkins and colleagues reported intervention effects on targeted risk factors (e.g., school bonding, delinquent peers) and on targeted outcomes (e.g., substance use and delinquency). These effects emerged as significant 2 years after implementation (Brown, Hawkins, Arthur, Briney, & Abbott, 2007; Hawkins, Brown, et al., 2008).
Thus, a criterion of demonstrated efficacy is reasonable, but the nature and duration of the change process involved should be considered, as well as whether the effects shown are on mediators or on targeted outcomes.

Funding considerations

The first author of this paper attended a meeting of longitudinal researchers at NIH where an agency representative opened the meeting with the question “I would like to know how to end longitudinal studies.” Granted, longitudinal follow-up with low attrition can be costly, and funding agencies are reluctant to set aside significant portions of their budgets for extended periods. However, the question reflected a misapprehension of how most long-term follow-ups are conducted. Most such studies do not request long-term set-aside support; rather, they depend on a patchwork of funding from a range of sources over time. A potential funder need only consider the scientific merits of the currently proposed period of follow-up. The SSDP study has continued from 1980 to the present through a wide range of private, NIJ, and NIH support. This cobbled-together funding has succeeded for two reasons. First, we recruited a sufficiently large sample in the first place to ensure that it would remain adequate even after attrition. Second, we employed the many strategies detailed in the present article to maintain the sample. The message to researchers is to build the study well at the outset, anticipating some attrition, and to expect to patch together funding over time from a range of government and foundation sources. The message to funders is that funding a longitudinal grant does not commit resources for the entire future life of the study: the request is just for the 3- to 5-year window under consideration.

When designing a preventive intervention, researchers hope that the intervention will produce a meaningful, positive, lasting change in the lives of those who experience it. Results from long-term follow-ups of interventions such as those described here have shown that meaningful positive developmental cascades of intervention effects are possible. We hope that consideration of these seven recommendations will improve the design, implementation, and evaluation of the next generation of prevention programs.

Acknowledgments

This project was supported by the National Institute on Drug Abuse (NIDA; R01DA009679, R01DA024411-05-07), and grant 21548 from the Robert Wood Johnson Foundation. The content is solely the responsibility of the authors. The authors gratefully acknowledge our study participants for their continued contribution to the longitudinal studies. They also acknowledge the SDRG Survey Research Division for their hard work maintaining high panel retention. Earlier versions of this manuscript were presented at an expert panel meeting on “Impact of Early Interventions on Trajectories of Violence” in October of 2010 organized by CDC as well as at the Society for Prevention Research meeting in 2011. The paper has been expanded to consider long-term follow-up of preventive interventions in general.

Footnotes

Conflict of Interest. The authors declare that they have no competing interests.

Ethical Approval. Formal ethics review is not applicable for this theoretical/methodological paper; however, the work is consistent with COPE guidelines.

Informed Consent. Informed consent is not applicable for this work.

References

- Aos S, Lieb R, Mayfield J, Miller M, Pennucci A. Benefits and costs of prevention and early intervention programs for youth. Olympia, WA: Washington State Institute for Public Policy; 2004. [Google Scholar]

- Bailey JA, Hill KG, Hawkins JD, Catalano RF, Abbott RD. Men’s and women’s patterns of substance use around pregnancy. Birth: Issues in Perinatal Care. 2008;35(1):50–59. doi: 10.1111/j.1523-536X.2007.00211.x. [DOI] [PubMed] [Google Scholar]

- Beldavs Z, Forgatch MS, Patterson GR, DeGarmo G. Reducing the detrimental effects of divorce: Enhancing the parental competence of single mothers. In: Heinrichs N, Haalweg K, Döpfner M, editors. Strengthening families: Evidence-based approaches to support child mental health. Munster: Psychotherapie-Verlag; 2006. pp. 143–185. [Google Scholar]

- Beskow LM, Dame L, Costello EJ. Certificates of Confidentiality and Compelled Disclosure of Data. Science. 2008;322:1054–1055. doi: 10.1126/science.1164100. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brown EC, Hawkins JD, Arthur MW, Briney JS, Abbott RD. Effects of Communities That Care on prevention services systems: Findings from the Community Youth Development Study at 1.5 years. Prevention Science. 2007;8(3):180–191. doi: 10.1007/s11121-007-0068-3. [DOI] [PubMed] [Google Scholar]

- Catalano RF, Hawkins JD. The Social Development Model: A theory of antisocial behavior. In: Hawkins JD, editor. Delinquency and crime: Current theories. New York: Cambridge University Press; 1996. pp. 149–197. [Google Scholar]

- Chen HT. Theory-driven evaluations. Thousand Oaks, CA, US: Sage Publications, Inc; 1990. [Google Scholar]

- Cohen P. A source of bias in longitudinal investigations of change. In: Collins LM, Horn JL, editors. Best methods for the analysis of change: Recent advances, unanswered questions, future directions. Washington, DC, US: American Psychological Association; 1991. pp. 18–30. [Google Scholar]

- Collins LM. Measurement in longitudinal research. In: Collins LM, Horn JL, editors. Best methods for the analysis of change: Recent advances, unanswered questions, future directions. Washington, DC, US: American Psychological Association; 1991. pp. 137–148. [Google Scholar]

- Cotter RB, Burke JD, Loeber R, Navratil JL. Innovative retention methods in longitudinal research: A case study of the developmental trends study. Journal of Child and Family Studies. 2002;11(4):485–498. [Google Scholar]

- Cotter RB, Burke JD, Stouthamer-Loeber M, Loeber R. Contacting participants for follow-up: How much effort is required to retain participants in longitudinal studies? Evaluation and Program Planning. 2005;28:15–21. [Google Scholar]

- Elder GH., Jr The life course as developmental theory. Child Development. 1998;69(1):1–12. [PubMed] [Google Scholar]

- Epstein M, Hill KG, Bailey JA, Hawkins JD. The effect of general and drug-specific family environments on comorbid and drug-specific problem behavior: A longitudinal examination. Developmental Psychology. 2013;49(6):1151–1164. doi: 10.1037/a0029309. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Farrington DP. Key longitudinal-experimental studies in criminology. Journal of Experimental Criminology. 2006;2:121–141. [Google Scholar]

- Farrington DP, Gallagher B, Morley L, St Ledger RJ, West DJ. Minimizing attrition in longitudinal research: Methods of tracing and securing cooperation in a 24-year follow-up study. In: Magnusson D, Bergman L, editors. Data Quality in Longitudinal Research. Cambridge: Cambridge University Press; 1990. pp. 122–147. [Google Scholar]

- Fernandez CV, Skedgel C, Weijer C. Considerations and costs of disclosing study findings to research participants. Canadian Medical Association Journal. 2004;170(9):1417–1419. doi: 10.1503/cmaj.1031668. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Flay BR, Biglan A, Boruch RF, Castro FG, Gottfredson D, Kellam S, Ji P. Standards of evidence: Criteria for efficacy, effectiveness and dissemination. Prevention Science. 2005;6(3):151–175. doi: 10.1007/s11121-005-5553-y. [DOI] [PubMed] [Google Scholar]

- Fleming CB, Marchesini G, Haggerty KP, Hill KG, Catalano RF. The Importance of High Completion Rates: Evidence from Two Longitudinal Studies of the Etiology and Prevention of Problem Behaviors. Evaluation and Program Planning (under review) [Google Scholar]

- Forgatch MS, DeGarmo DS. Accelerating recovery from poverty: Prevention effects for recently separated mothers. Journal of Early and Intensive Behavioral Intervention. 2007;4(4):681–702. doi: 10.1037/h0100400. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Foster EM, McCombs-Thornton K. The economics of evidence-based practice in disorders of childhood and adolescence. In: Sturmey P, Hersen M, editors. Handbook of evidence-based practice in clinical psychology, Vol 1: Child and adolescent disorders. Hoboken, NJ, US: John Wiley & Sons Inc; 2012. pp. 103–127. [Google Scholar]

- Gest SD, Davidson AJ. A developmental perspective on risk, resilience, and prevention. In: Underwood MK, Rosen LH, editors. Social development: Relationships in infancy, childhood, and adolescence. New York, NY US: Guilford Press; 2011. pp. 427–454. [Google Scholar]

- Gottfredson DC, Cook TD, Gardner FEM, Gorman-Smith D, Howe GW, Sandler IN, Zafft KM. Standards of Evidence for Efficacy, Effectiveness, and Scale up Research in Prevention Science: Next Generation. Prevention Science. doi: 10.1007/s11121-015-0555-x. (in press)

- Graham JW. Missing data analysis: making it work in the real world. Annual Review of Psychology. 2009;60:549–576. doi: 10.1146/annurev.psych.58.110405.085530.

- Grant S, Mayo-Wilson E, Hopewell S, Macdonald G, Moher D, Montgomery P. Developing a reporting guideline for social and psychological intervention trials. Journal of Experimental Criminology. 2013;9(3):355–367. doi: 10.1186/1745-6215-14-242.

- Haggerty KP, Fleming CB, Catalano RF, Petrie RS, Rubin RJ, Grassley MH. Ten years later: Locating and interviewing children of drug abusers. Evaluation and Program Planning. 2008;31(1):1–9. doi: 10.1016/j.evalprogplan.2007.10.003.

- Hansen WB, Collins LM. Seven ways to increase power without increasing N. In: National Institute on Drug Abuse Research Monograph Series Vol. 142. Washington, DC: U.S. Department of Health and Human Services; 1994. pp. 184–195.

- Hansen WB, Tobler NS, Graham JW. Attrition in substance abuse prevention research: A meta-analysis of 85 longitudinally followed cohorts. Evaluation Review. 1990;14(6):677–685.

- Hawkins JD, Brown EC, Oesterle S, Arthur MW, Abbott RD, Catalano RF. Early effects of Communities That Care on targeted risks and initiation of delinquent behavior and substance use. Journal of Adolescent Health. 2008;43(1):15–22. doi: 10.1016/j.jadohealth.2008.01.022.

- Hawkins JD, Catalano RF, Arthur MW, Egan E, Brown EC, Abbott RD, Murray DM. Testing Communities that Care: The rationale, design and behavioral baseline equivalence of the community youth development study. Prevention Science. 2008;9(3):178–190. doi: 10.1007/s11121-008-0092-y.

- Hawkins JD, Catalano RF, Kosterman R, Abbott R, Hill KG. Preventing adolescent health-risk behaviors by strengthening protection during childhood. Archives of Pediatrics and Adolescent Medicine. 1999;153(3):226–234. doi: 10.1001/archpedi.153.3.226.

- Hawkins JD, Catalano RF, Morrison DM, O’Donnell J, Abbott RD, Day LE. The Seattle Social Development Project: Effects of the first four years on protective factors and problem behaviors. In: McCord J, Tremblay RE, editors. Preventing antisocial behavior: Interventions from birth through adolescence. New York: Guilford Press; 1992. pp. 139–161.

- Hawkins JD, Guo J, Hill KG, Battin-Pearson S, Abbott RD. Long-term effects of the Seattle Social Development intervention on school bonding trajectories. Applied Developmental Science: Special issue: Prevention as altering the course of development. 2001;5(4):225–236. doi: 10.1207/S1532480XADS0504_04.

- Hawkins JD, Kosterman R, Catalano RF, Hill KG, Abbott RD. Promoting positive adult functioning through social development intervention in childhood: Long-term effects from the Seattle Social Development Project. Archives of Pediatrics and Adolescent Medicine. 2005;159(1):25–31. doi: 10.1001/archpedi.159.1.25.

- Hawkins JD, Kosterman R, Catalano RF, Hill KG, Abbott RD. Effects of social development intervention in childhood 15 years later. Archives of Pediatrics & Adolescent Medicine. 2008;162:1133–1141. doi: 10.1001/archpedi.162.12.1133.

- Hill KG, Bailey JA, Hawkins JD, Catalano RF, Kosterman R, Oesterle S, Abbott RD. The onset of STI diagnosis through age 30: Results from the Seattle Social Development Project intervention. Prevention Science. 2013. doi: 10.1007/s11121-013-0382-x.

- Hill KG, Hawkins JD, Bailey JA, Catalano RF, Abbott RD, Shapiro VB. Person-Environment Interaction in the Prediction of Alcohol Abuse and Alcohol Dependence in Adulthood. Drug and Alcohol Dependence. 2010 Mar 16; doi: 10.1016/j.drugalcdep.2010.02.005. [Epub ahead of print]

- Hill KG, Hawkins JD, Catalano RF, Abbott RD, Guo J. Family influences on the risk of daily smoking initiation. Journal of Adolescent Health. 2005;37(3):202–210. doi: 10.1016/j.jadohealth.2004.08.014.

- Holder H. Prevention programs in the 21st century: what we do not discuss in public. Addiction. 2009;105:578–581. doi: 10.1111/j.1360-0443.2009.02752.x.

- Kuklinski MR, Briney JS, Hawkins JD, Catalano RF. Cost-benefit analysis of communities that care outcomes at eighth grade. Prevention Science. 2012;13(2):150–161. doi: 10.1007/s11121-011-0259-9.

- Latimer NR. Survival analysis for economic evaluations alongside clinical trials—Extrapolation with patient-level data: Inconsistencies, limitations, and a practical guide. Medical Decision Making. 2013;33(6):743–754. doi: 10.1177/0272989X12472398.

- Lonczak HS, Abbott RD, Hawkins JD, Kosterman R, Catalano RF. Effects of the Seattle Social Development Project on sexual behavior, pregnancy, birth, and sexually transmitted disease outcomes by age 21 years. Archives of Pediatrics and Adolescent Medicine. 2002;156(5):438–447. doi: 10.1001/archpedi.156.5.438.

- MacKinnon DP. Introduction to Statistical Mediation Analysis. New York, NY: Taylor & Francis Group/Lawrence Erlbaum Associates; 2008.

- Magnusson D, Bergman LR, editors. Data quality in longitudinal research. New York, NY, US: Cambridge University Press; 1990.

- Masten AS, Cicchetti D. Developmental cascades. Development and Psychopathology. 2010;22(3):491–495. doi: 10.1017/S0954579410000222.

- Moher D, Hopewell S, Schulz KF, Montori V, Gøtzsche PC, Devereaux PJ, Altman DG. CONSORT 2010 Explanation and Elaboration: updated guidelines for reporting parallel group randomised trials. Journal of Clinical Epidemiology. 2010;63(8):e1–e37. doi: 10.1016/j.jclinepi.2010.03.004.

- Nicolaas G. Survey Paradata: A Review. 2011. Retrieved February 2015 from Economic and Social Research Council National Center for Research Methods: http://eprints.ncrm.ac.uk/1719/

- Oesterle S, Hawkins JD, Hill KG, Bailey JA. Men’s and women’s pathways to adulthood and their adolescent precursors. Journal of Marriage and Family. 2010;72(5):1436–1453. doi: 10.1111/j.1741-3737.2010.00775.x.

- Olweus D, Limber SP. Bullying in school: Evaluation and dissemination of the Olweus Bullying Prevention Program. American Journal of Orthopsychiatry. 2010;80(1):124–134. doi: 10.1111/j.1939-0025.2010.01015.x.

- Patterson GR, Fleischman MJ. Maintenance of treatment effects: Some considerations concerning family systems and follow-up data. Behavior Therapy. 1979;10(2):168–185.

- Patterson GR, Forgatch MS, DeGarmo DS. Cascading effects following intervention. Development and Psychopathology. 2010;22(4):949–970. doi: 10.1017/S0954579410000568.

- Plotnick RD. Applying benefit-cost analysis to substance use prevention programs. International Journal of the Addictions. 1994;29(3):339–359. doi: 10.3109/10826089409047385.

- Sanders MR, Kirby JN. Surviving or Thriving: Quality Assurance Mechanisms to Promote Innovation in the Development of Evidence-Based Parenting Interventions. Prevention Science. 2014:1–11. doi: 10.1007/s11121-014-0475-1.

- Sandler IN, Schoenfelder EN, Wolchik SA, MacKinnon DP. Long-Term Impact of Prevention Programs to Promote Effective Parenting: Lasting Effects but Uncertain Processes. Annual Review of Psychology. 2011;62:299–329. doi: 10.1146/annurev.psych.121208.131619.

- Slade EP, Becker KD. Understanding proximal–distal economic projections of the benefits of childhood preventive interventions. Prevention Science. 2014;15(6):807–817. doi: 10.1007/s11121-013-0445-z.

- Stouthamer-Loeber M, van Kammen WB. Data collection and management: A practical guide. Thousand Oaks, CA, US: Sage Publications, Inc; 1995.

- van Kammen WB, Stouthamer-Loeber M. Practical aspects of interview data collection and data management. In: Bickman L, Rog DJ, editors. Handbook of applied social research methods. Thousand Oaks, CA, US: Sage Publications, Inc; 1998. pp. 375–397.

- Wolf LE, Dame LE, Patel MJ, Williams BA, Austin JA, Beskow LM. Certificates of confidentiality: Legal counsels’ experiences with and perspectives on legal demands for research data. Journal of Empirical Research on Human Research Ethics. 2012;7(4):1–9. doi: 10.1525/jer.2012.7.4.1.