Significance

Wearable displays are becoming increasingly important, but the accessibility, visual comfort, and quality of current generation devices are limited. We study optocomputational display modes and show their potential to improve experiences for users across ages and with common refractive errors. With the presented studies and technologies, we lay the foundations of next generation computational near-eye displays that can be used by everyone.

Keywords: virtual reality, augmented reality, 3D vision, vision correction, computational optics

Abstract

From the desktop to the laptop to the mobile device, personal computing platforms evolve over time. Moving forward, wearable computing is widely expected to be integral to consumer electronics and beyond. The primary interface between a wearable computer and a user is often a near-eye display. However, current generation near-eye displays suffer from multiple limitations: they are unable to provide fully natural visual cues and comfortable viewing experiences for all users. At their core, many of the issues with near-eye displays are caused by limitations in conventional optics. Current displays cannot reproduce the changes in focus that accompany natural vision, and they cannot support users with uncorrected refractive errors. With two prototype near-eye displays, we show how these issues can be overcome using display modes that adapt to the user via computational optics. By using focus-tunable lenses, mechanically actuated displays, and mobile gaze-tracking technology, these displays can be tailored to correct common refractive errors and provide natural focus cues by dynamically updating the system based on where a user looks in a virtual scene. Indeed, the opportunities afforded by recent advances in computational optics open up the possibility of creating a computing platform in which some users may experience better quality vision in the virtual world than in the real one.

Emerging virtual reality (VR) and augmented reality (AR) systems have applications that span entertainment, education, communication, training, behavioral therapy, and basic vision research. In these systems, a user primarily interacts with the virtual environment through a near-eye display. Since the invention of the stereoscope almost 180 years ago (1), significant developments have been made in display electronics and computer graphics (2), but the optical design of stereoscopic near-eye displays remains almost unchanged from the Victorian age. In front of each eye, a small physical display is placed behind a magnifying lens, creating a virtual image at some fixed distance from the viewer (Fig. 1A). Small differences in the images displayed to the two eyes can create a vivid perception of depth, called stereopsis.

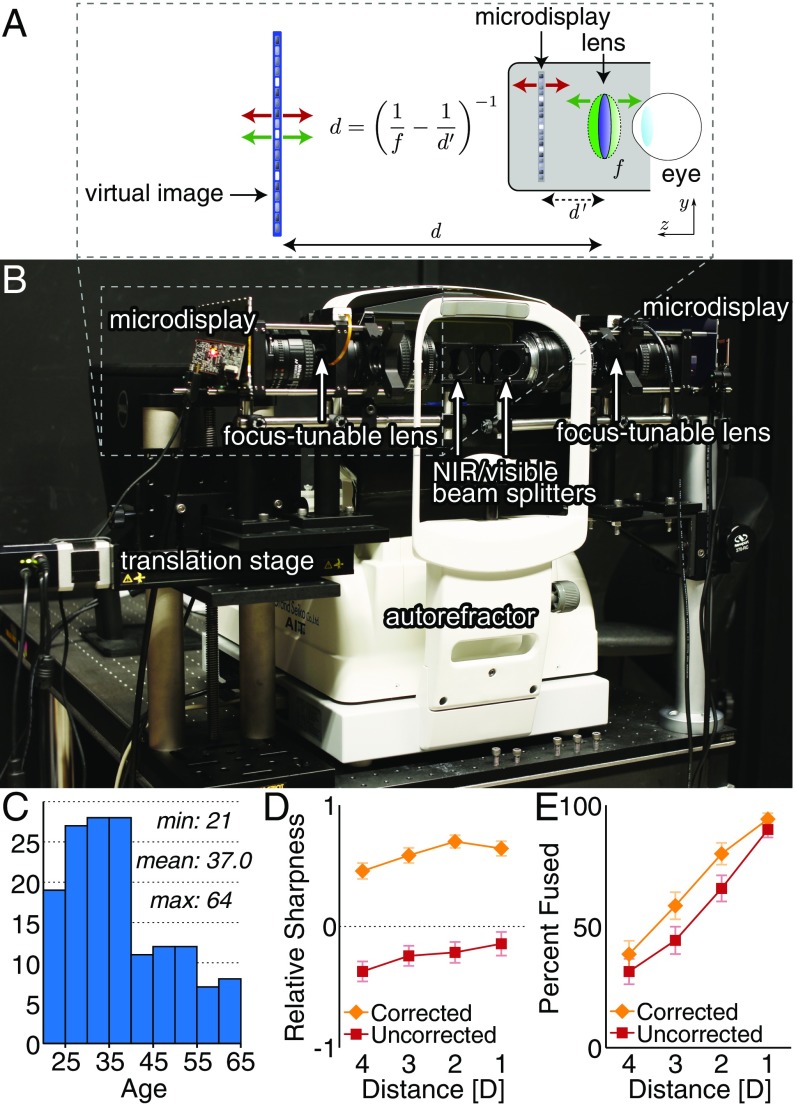

Fig. 1.

(A) A typical near-eye display uses a fixed focus lens to show a magnified virtual image of a microdisplay to each eye (the eyes cannot accommodate to the very near physical distance of the microdisplay). The focal length of the lens and the distance to the microdisplay determine the distance of the virtual image. Adaptive focus can be implemented using either a focus-tunable lens (green arrows) or a fixed focus lens and a mechanically actuated display (red arrows), so that the virtual image can be moved to different distances. (B) A benchtop setup designed to incorporate adaptive focus via focus-tunable lenses and an autorefractor to record accommodation. A translation stage adjusts intereye separation, and NIR/visible light beam splitters allow for simultaneous stimulus presentation and accommodation measurement. (C) Histogram of user ages from our main studies. (D and E) The system from B was used to test whether common refractive errors could quickly be measured and corrected for in an adaptive focus display. Average (D) sharpness ratings and (E) fusibility for Maltese cross targets are shown for each of four distances: 1–4 D. The x axis is reversed to show nearer distances to the left. Targets were shown for 4 s. Red data points indicate users who did not wear refractive correction, and orange data points indicate users for whom correction was implemented on site by the tunable lenses. Values of -1, 0, and 1 correspond to responses of blurry, medium, and sharp, respectively. Error bars indicate SE across users.

However, this simple optical design lacks a critical aspect of 3D vision in the natural environment: changes in stereoscopic depth are also associated with changes in focus. When viewing a near-eye display, users’ eyes change their vergence angle to fixate objects at a range of stereoscopic depths, but to focus on the virtual image, the crystalline lenses of the eyes must accommodate to a single fixed distance (Fig. 2A). For users with normal vision, this asymmetry creates an unnatural condition known as the vergence–accommodation conflict (3, 4). Symptoms associated with this conflict include double vision (diplopia), compromised visual clarity, visual discomfort, and fatigue (3, 5). Moreover, a lack of accurate focus also removes a cue that is important for depth perception (6, 7).

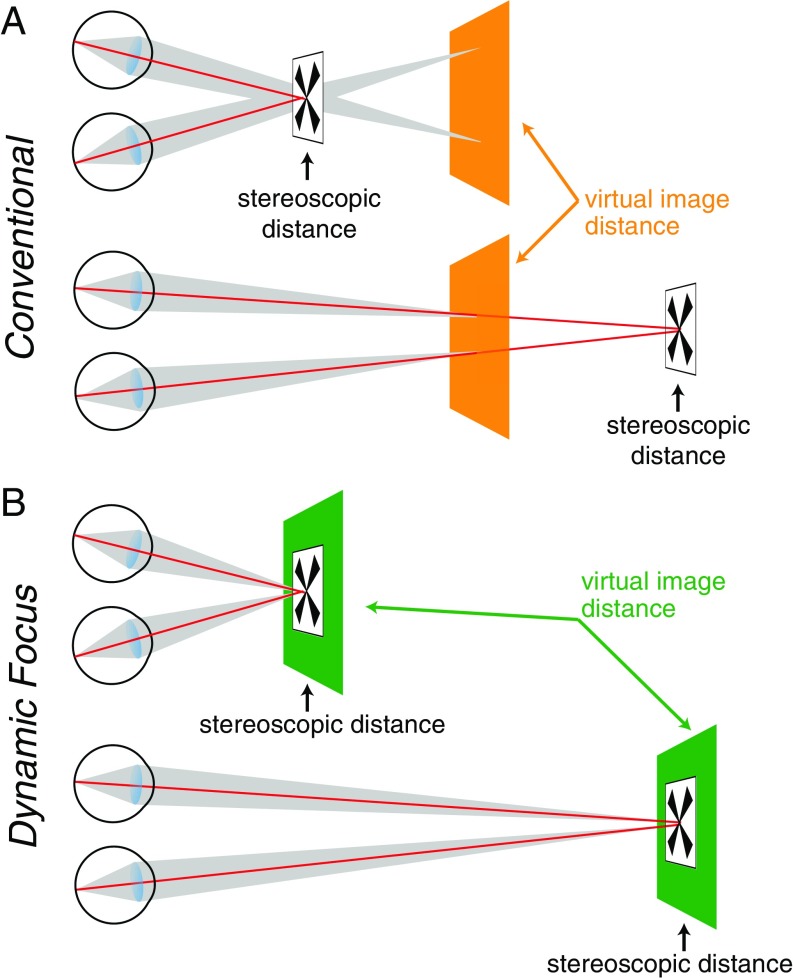

Fig. 2.

(A) The use of a fixed focus lens in conventional near-eye displays means that the magnified virtual image appears at a constant distance (orange planes). However, by presenting different images to the two eyes, objects can be simulated at arbitrary stereoscopic distances. To experience clear and single vision in VR, the user’s eyes have to rotate to verge at the correct stereoscopic distance (red lines), but the eyes must maintain accommodation at the virtual image distance (gray areas). (B) In a dynamic focus display, the virtual image distance (green planes) is constantly updated to match the stereoscopic distance of the target. Thus, the vergence and accommodation distances can be matched.

The vergence–accommodation conflict is clearly an important problem to solve for users with normal vision. However, how many people actually have normal vision? Correctable visual impairments caused by refractive errors, such as myopia (near-sightedness) and hyperopia (far-sightedness), affect approximately one-half of the US population (8). Additionally, essentially all people in middle age and beyond are affected by presbyopia, a decreased ability to accommodate (9). For people with these common visual impairments, the use of near-eye displays is further restricted by the fact that it is not always possible to wear optical correction.

Here, we first describe a near-eye display system with focus-tunable optics—lenses that change their focal power in real time. This system can provide correction for common refractive errors, removing the need for glasses in VR. Next, we show that the same system can also mitigate the vergence–accommodation conflict by dynamically providing near-correct focus cues at a wide range of distances. However, our study reveals that this conflict should be addressed differently depending on the age of the user. Finally, we design and assess a system that integrates a stereoscopic eye tracker to update the virtual image distance in a gaze-contingent manner, closely resembling natural viewing conditions. Compared with other focus-supporting display designs (10–18) (details are in SI Appendix), these adaptive technologies can be implemented in near-eye systems with readily available optoelectronic components and offer uncompromised image resolution and quality. Our results show how computational optics can increase the accessibility of VR/AR and improve the experience for all users.

Results

Near-Eye Display Systems with Adaptive Focus.

In our first display system, a focus-tunable liquid lens is placed between each eye and a high-resolution microdisplay. The focus-tunable lenses allow for adaptive focus, that is, real-time control of the distance to the virtual image of the display (Fig. 1A, green arrows). The lenses are driven by the same computer that controls the displayed images, allowing for precise temporal synchronization between the virtual image distance and the onscreen content. Thus, the distance can be adjusted to match the requirements of a particular user or particular application. Details are in SI Appendix, and related systems are described in refs. 19–21. This system was table-mounted to allow for online measurements of the accommodative response of the eyes via an autorefractor (Fig. 1B), similar to the objective measurements in ref. 14, but the compact liquid lenses can fit within conventional-type head-mounted casings for VR systems. Adaptive focus can also be achieved by combining fixed focus lenses and a mechanically adjustable display (Fig. 1A, red arrows) (11). This approach is used for our second display system, which has the advantage of having a much larger field of view, and it will be discussed later. To assess how adaptive focus can be integrated into VR systems so as to optimize the display for the broadest set of users, we conducted a series of studies examining ocular responses and visual perception in VR. Our main user population was composed of adults with a wide range of ages (n = 153, age range = 21–64 y old) (Fig. 1C) and different refractive errors (79 wore glasses and 19 wore contact lenses).
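The two adaptive focus routes described above (tuning the lens power or moving the display) can be illustrated with the thin-lens relation. The following is a minimal sketch, not the prototype's control code: it assumes an ideal thin lens, measures all distances from the lens, ignores eye relief, and uses a hypothetical 40-mm focal length chosen only for illustration. The function names are likewise hypothetical.

```python
def virtual_image_diopters(focal_length_m, display_distance_m):
    """Virtual image distance in diopters for an ideal thin magnifier lens.

    With the display at or inside the focal length, the thin-lens relation
    gives 1/d_image = 1/d_display - 1/f (distances measured from the lens).
    """
    if not 0.0 < display_distance_m <= focal_length_m:
        raise ValueError("display must sit at or inside the focal length")
    return 1.0 / display_distance_m - 1.0 / focal_length_m


def lens_power_for(display_distance_m, target_diopters):
    """Focus-tunable route: lens power (D) that places the image at target_diopters."""
    return 1.0 / display_distance_m - target_diopters


def display_distance_for(focal_length_m, target_diopters):
    """Actuated-display route: display distance (m) needed with a fixed lens."""
    return 1.0 / (1.0 / focal_length_m + target_diopters)


# Hypothetical 40 mm lens: move the virtual image from optical infinity (0 D) to 4 D.
for target in (0.0, 1.0, 2.0, 4.0):
    d_display = display_distance_for(0.040, target)
    print(f"{target:.1f} D -> display at {d_display * 1000:.2f} mm from the lens")
```

Under these assumptions, small changes in display position or lens power move the virtual image over a large range of distances, which is why either route can serve as an adaptive focus mechanism.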

Correcting Myopia and Hyperopia in VR.

Before examining the vergence–accommodation conflict, we first tested whether a simple procedure can measure a user’s refractive error and correct it natively in a VR system with adaptive focus. Refractive errors, such as myopia and hyperopia, are extremely common (22) and result when the eye’s lens does not produce a sharp image on the retina for objects at particular distances. Although these impairments can often be corrected with contact lenses or surgery, many people wear eyeglasses. Current generation VR/AR systems require the user to wear their glasses beneath the near-eye display system. Although wearing glasses is technically possible with some systems, user reviews often cite problems with fit and comfort, which are likely to increase as the form factor of near-eye displays decreases.

Users (n = 70, ages 21–64 y old) were first tested using a recently developed portable device that uses a smartphone application to interactively determine a user’s refractive error without clinician intervention, including the spherical lens power required for clear vision (NETRA; EyeNetra, Inc.) (23). After testing, each user performed several tasks in VR without wearing his/her glasses. Stimuli were presented under two conditions: uncorrected (the display’s virtual image distance was 1.3 m) and corrected (the virtual image was adjusted to appear at 1.3 m after the correction was applied). Note that the tunable lenses do not correct astigmatism. We assessed the sharpness and fusibility of a Maltese cross under both conditions. The conditions were randomly interleaved along with four different stereoscopic target distances: 1–4 diopters (D; 1.0, 0.5, 0.33, and 0.25 m, respectively). Users were then asked (i) how sharp the target was (blurry, medium, or sharp) and (ii) whether the target was fused (i.e., not diplopic).
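For readers less familiar with diopters, the sketch below converts between diopters and meters and shows one simple way a spherical prescription could be folded into the virtual image distance. It is an illustration under stated assumptions rather than the procedure used in the study: it treats the prescription as a thin lens at the eye (zero vertex distance), ignores astigmatism, and the function names and the example prescription of -2 D are hypothetical.

```python
import math


def diopters_to_meters(diopters):
    """Convert an optical distance in diopters to meters (0 D is optical infinity)."""
    return math.inf if diopters == 0 else 1.0 / diopters


def corrected_image_diopters(target_diopters, spherical_rx_diopters):
    """Image distance (D) that an uncorrected eye would need in order to see the
    target as a corrected eye would.

    Assumption: the spectacle lens acts as a thin lens at the eye, so its power
    simply offsets the vergence of the light. A myopic prescription (negative)
    pulls the image closer; a hyperopic one (positive) pushes it farther away
    and can yield a negative value, which a given display may not support.
    """
    return target_diopters - spherical_rx_diopters


# The four stereoscopic target distances used in the study.
print([round(diopters_to_meters(d), 2) for d in (1, 2, 3, 4)])

# Hypothetical -2 D myope viewing the 1.3 m reference distance without glasses.
needed = corrected_image_diopters(1.0 / 1.3, -2.0)
print(f"image at {needed:.2f} D ({diopters_to_meters(needed):.2f} m)")
```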

As expected, the corrected condition substantially increased the perceived sharpness of targets at all distances (Fig. 1D). This condition also increased users’ ability to fuse targets (Fig. 1E). Logistic regressions indicated significant main effects for both condition and distance. The odds ratios for correction were 4.05 [95% confidence interval (CI) = 3.25–5.05] and 1.54 (CI = 1.20–1.98) for sharpness and fusibility, respectively. The distance odds ratios were 0.77 and 0.21, respectively (all P < 0.01), indicating reductions in both sharpness and fusibility for nearer distances.

Importantly, the VR-corrected sharpness and fusibility were comparable with those reported by people wearing their typical correction, who participated in the next study (called the conventional condition). Comparing responses between these two groups of users reveals that, across all distances, the average sharpness values for the corrected and conventional conditions were 0.60 and 0.63, respectively. The percentages fused were 68 and 74%, respectively. This result suggests that fast, user-driven vision testing can provide users with glasses-free vision in VR that is comparable with the vision that they have with their own correction.

We also assessed overall preference between the two conditions (corrected and uncorrected) in a less structured session. A target moved sinusoidally in depth within a complex virtual scene, and the user could freely toggle between conditions to select the one that was more comfortable to view; 80% of users preferred the corrected condition, which is significantly above chance (binomial probability distribution). Those who preferred the uncorrected condition may have had inaccurate corrections or modest changes in clarity that were not noticeable in the virtual scene (SI Appendix has additional discussion). Future work can incorporate the refractive testing directly into the system by also using the focus-tunable lenses to determine the spherical lens power that results in the sharpest perceived image and then store this information for future sessions.
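The comparison against chance in a two-alternative preference task can be sketched with an exact one-sided binomial tail. The counts below (56 of 70) are hypothetical, chosen only to match the reported 80% figure; the actual number of respondents in the preference session is not stated here, and this is not necessarily the exact test the authors ran.

```python
from math import comb


def binomial_tail(successes, trials, p_chance=0.5):
    """Probability of observing at least `successes` out of `trials`
    if each user picked a condition at random with probability p_chance."""
    return sum(
        comb(trials, i) * p_chance**i * (1.0 - p_chance) ** (trials - i)
        for i in range(successes, trials + 1)
    )


# Hypothetical counts: 56 of 70 users (80%) prefer the corrected condition.
p_value = binomial_tail(56, 70)
print(f"one-sided P = {p_value:.2e}")
```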

Driving the Eyes’ Natural Accommodative Response Using Dynamic Focus.

Even in the absence of an uncorrected refractive error, near-eye displays suffer from the same limitations as any conventional stereoscopic display: they do not accurately simulate changes in optical distance when objects move in depth (Fig. 2A). To fixate and fuse stereoscopic targets at different distances, the eyes rotate in opposite directions to place the target on both foveas; this response is called vergence (red lines in Fig. 2A). However, to focus the displayed targets sharply on the retinas, the eyes must always accommodate to the virtual display distance (gray lines in Fig. 2A). In natural vision, the vergence and accommodation distances are the same, and thus, these two responses are neurally coupled. The discrepancy created by conventional near-eye displays (the vergence–accommodation conflict) can, in principle, be eliminated with an adaptive focus display by producing dynamic focus: constantly updating the virtual distance of a target to match its stereoscopic distance (Fig. 2B) (19, 20).
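The size of the conflict described above is commonly expressed as the difference, in diopters, between the stereoscopic (vergence) distance and the virtual image (accommodation) distance. The sketch below computes that difference and the corresponding vergence angle. The 63-mm interpupillary distance is an assumed typical adult value, not a parameter taken from the study, and the function names are hypothetical.

```python
import math


def vergence_angle_deg(distance_m, ipd_m=0.063):
    """Angle between the two lines of sight when fixating a point straight ahead."""
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))


def conflict_diopters(stereo_distance_d, image_distance_d):
    """Vergence-accommodation mismatch in diopters."""
    return abs(stereo_distance_d - image_distance_d)


fixed_image_d = 1.0 / 1.3  # conventional mode: virtual image fixed at 1.3 m
for target_d in (1.0, 2.0, 3.0, 4.0):
    conventional = conflict_diopters(target_d, fixed_image_d)
    dynamic = conflict_diopters(target_d, target_d)  # image follows the target
    angle = vergence_angle_deg(1.0 / target_d)
    print(f"{target_d:.0f} D: vergence {angle:.1f} deg, "
          f"conflict {conventional:.2f} D (conventional) vs {dynamic:.2f} D (dynamic)")
```

In this simplified picture the mismatch grows as targets come closer in the conventional mode and vanishes in the dynamic mode, which is the motivation for updating the virtual image distance.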

Using the autorefractor integrated in our system (Fig. 1B), we examined how the eyes’ accommodative responses differ between conventional and dynamic focus conditions and in particular, whether dynamic focus can drive normal accommodation by restoring correct focus cues. Users (n = 64, ages 22–63 y old) viewed a Maltese cross that moved sinusoidally in depth between 0.5 and 4 D at 0.125 Hz (mean = 2.25 D, amplitude = 1.75 D), while the accommodative distance of the eyes was continuously measured. Users who wore glasses were tested as described previously with the NETRA, and their correction was incorporated. In the conventional condition, the virtual image distance was fixed at 1.3 m; in the dynamic condition, the virtual image was matched to the stereoscopic distance of the target. Because of dropped data points from the autorefractor, we were able to analyze 24 trials from the dynamic condition, which we compare with 59 trials for the conventional condition taken from across all test groups.

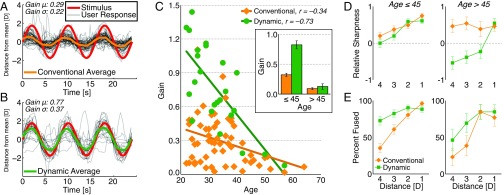

The results are shown in Fig. 3 A and B. Despite the fixed accommodative distance in the conventional condition, on average, there was a small accommodative response (orange line in Fig. 3A) (mean gain = 0.29) to the stimulus. This response is likely because of the cross-coupling between vergence and accommodative responses (24). However, the dynamic display mode (green line in Fig. 3B) elicited a significantly greater accommodative gain (mean = 0.77; partially paired one-tailed Wilcoxon tests, P < 0.001), which closely resembles natural viewing conditions (25). These results show that it is indeed possible to drive natural accommodation in VR with a dynamic focus display (SI Appendix has supporting analysis).

Fig. 3.

(A and B) Accommodative responses were recorded under conventional and dynamic display modes while users watched a target move sinusoidally in depth. The stimulus was shown for 4.5 cycles, and the response gain was calculated as the relative amplitude between the response and stimulus for 3 cycles directly after a 0.5-cycle buffer. The stimulus position (red), each individual response (gray), and the average response (orange indicates conventional focus and green indicates dynamic focus in all panels) are shown with the mean subtracted for each user. Phase is not considered because of manual starts for measurement. (C) The accommodative gains plotted against the user’s age show a clear downward trend with age and a higher response in the dynamic condition. Inset shows means and SEs of the gains for users grouped into younger and older cohorts relative to 45 y old. (D and E) Average (D) sharpness ratings and (E) fusibility were recorded for Maltese cross targets at each of four fixed distances: 1–4 D. The x axis is reversed to show nearer distances to the left. Error bars indicate SE.
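The gain computation described in this caption can be sketched as follows. The caption specifies the window (3 cycles after a 0.5-cycle buffer) and that phase is ignored, but not how the amplitudes were extracted; the quadrature (sine and cosine) projection used here is one phase-independent choice and is an assumption. The 5-Hz sampling rate, the synthetic response, and the function names are also hypothetical, and the projection assumes uniformly sampled data without dropped points.

```python
import math


def sinusoid_amplitude(samples, times, freq_hz):
    """Amplitude of the freq_hz component, independent of phase.

    Assumes uniform sampling over a whole number of cycles, so that the sine
    and cosine projections are orthogonal. The mean is subtracted first.
    """
    mean = sum(samples) / len(samples)
    omega = 2.0 * math.pi * freq_hz
    s = sum((x - mean) * math.sin(omega * t) for x, t in zip(samples, times))
    c = sum((x - mean) * math.cos(omega * t) for x, t in zip(samples, times))
    return 2.0 * math.hypot(s, c) / len(samples)


def accommodative_gain(stimulus, response, times, freq_hz=0.125,
                       buffer_cycles=0.5, n_cycles=3):
    """Response amplitude divided by stimulus amplitude over the analysis window."""
    period = 1.0 / freq_hz
    start = buffer_cycles * period
    stop = start + n_cycles * period
    keep = [i for i, t in enumerate(times) if start <= t < stop]
    t_win = [times[i] for i in keep]
    stim_amp = sinusoid_amplitude([stimulus[i] for i in keep], t_win, freq_hz)
    resp_amp = sinusoid_amplitude([response[i] for i in keep], t_win, freq_hz)
    return resp_amp / stim_amp


# Synthetic trial: 4.5 cycles at 0.125 Hz sampled at a hypothetical 5 Hz.
times = [k / 5.0 for k in range(180)]
stimulus = [2.25 + 1.75 * math.sin(2 * math.pi * 0.125 * t) for t in times]
# Hypothetical response: smaller amplitude, different mean, arbitrary phase lag.
response = [2.0 + 0.77 * 1.75 * math.sin(2 * math.pi * 0.125 * t - 0.6) for t in times]
print(f"gain = {accommodative_gain(stimulus, response, times):.2f}")
```

Real autorefractor traces with dropped samples would need resampling or a least-squares sinusoid fit instead of this direct projection.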

The ability to accommodate degrades with age (i.e., presbyopia) (26). Thus, we examined how the age of our users affected their response gain. For both conditions, accommodative gain was significantly negatively correlated with age (Fig. 3C) (P < 0.01 for both the conventional and dynamic conditions). This correlation is illustrated further in Fig. 3C, Inset, in which average gains are shown for users grouped by age (≤45 and >45 y old). Although the gains are much greater for the dynamic condition than conventional among the younger age group, the older group had similar gains for the two conditions. From these results, we predicted that accurate focus cues in near-eye displays would mostly benefit younger users and in fact, may be detrimental to the visual perception of older users in VR. We examine this question below.

Optimizing Optics for Younger and Older Users.

A substantial amount of research supports the idea that mitigating the vergence–accommodation conflict in stereoscopic displays improves both perception and comfort, and this observation has been a major motivation for the development of displays that support multiple focus distances (3, 5, 7, 12–15, 27). However, the fact that accommodative gain universally deteriorates with age suggests that the effects of the vergence–accommodation conflict may differ for people of different ages (28–30) and even that multifocus or dynamic display modes may be undesirable for older users. Because presbyopes do not accommodate to a wide range of distances, these individuals essentially always have this conflict in their day to day lives. Additionally, presbyopes cannot focus to near distances, and therefore, using dynamic focus to place the virtual image of the display nearby would likely decrease image quality. To test this hypothesis, we assessed sharpness and fusibility with conventional and dynamic focus in younger (≤45 y old, n = 51) and older (>45 y old, n = 13) users.

For the younger group, sharpness was slightly reduced for closer targets in both conditions. However, for the older group, perceived sharpness was high for all distances in the conventional condition and fell steeply at near distances in the dynamic condition (Fig. 3D). A logistic regression using age, condition, and distance showed significant main effects of distance and condition. The distance odds ratio was 0.56 (CI = 0.46–0.69), and the ratio for the dynamic condition was 0.60 (CI = 0.48–0.75; P < 0.001), indicating reductions in sharpness at nearer distances. However, the effect of condition was modified by an interaction with age, indicating that sharpness in the older group was reduced significantly more by dynamic mode (odds ratio = 0.70, CI = 0.56–0.87, P < 0.01). Indeed, for targets 2 D (50 cm) and closer, older users tended to indicate that the dynamic condition was blurry and that the conventional condition was sharp. The fusibility results for the two age groups were more similar: dynamic focus facilitated fusion at closer distances (Fig. 3E). Significant main effects of condition (odds ratio of 1.75, CI = 1.23–2.49) and distance (odds ratio of 0.27, CI = 0.18–0.39) were modified by an interaction (odds ratio of 1.69, CI = 1.27–2.25, all P < 0.01). The interaction indicated that the improvement in fusibility associated with dynamic focus increased at nearer distances. Although dynamic focus provides better fusion for young users, in practice, a more conventional display mode may be preferable for presbyopes. The ideal mode for presbyopes will depend on the relative weight given to sharpness and fusion in determining the quality of a VR experience. In addition, a comfortable focus distance for all images in the conventional condition obviates the need to wear traditional presbyopic correction at all.

We also tested overall preferences while users viewed a target moving in a virtual scene. Interestingly, in both the younger and older groups, only about one-third of the users expressed a preference for the dynamic condition (35% of younger users and 31% of older users). This result was initially surprising given the substantial increase in fusion experienced by younger users in the dynamic mode. One potential explanation is that the target in the dynamic condition may have been modestly less sharp (Fig. 3D) and that people strongly prefer sharpness over diplopia. However, two previous studies have also reported overall perceptual and comfort improvements using dynamic focus displays (19, 20). To understand this difference, we considered the fact that our preference test involved a complex virtual scene. Although users were instructed to maintain fixation on the target, if they did look around the scene even momentarily, the dynamic focus (yoked to the target) would induce a potentially disorienting, dynamic vergence–accommodation conflict. That is, unless the dynamic focus is adjusted to the actual distance of fixation, it will likely degrade visual comfort and perception. To address this issue, we built and tested a second system that enabled us to track user gaze and update the virtual distance accordingly.

A Gaze-Contingent Focus Display.

Several types of benchtop gaze-contingent display systems—systems that update the displayed content based on where the user fixates in a scene—have been proposed in the literature, including systems that adjust binocular disparity, depth of field rendering, and focus distance (11, 19, 31, 32). Gaze-contingent depth of field displays can simulate the changes in depth of field blur that occur when the eyes accommodate near and far, but they do not actually stimulate accommodation and thus, have not been found to improve perception and comfort (19, 32).

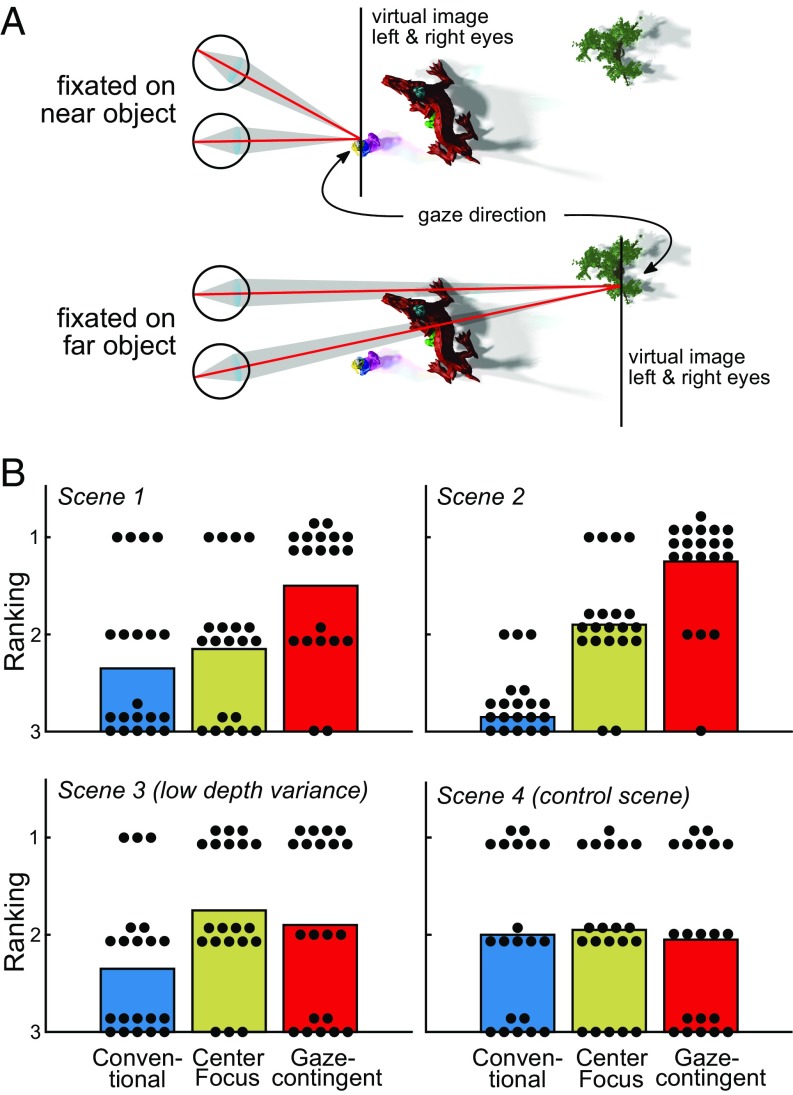

To address the issue of simulating correct accommodative distances in a gaze-contingent manner, we built a second wearable near-eye display system implementing gaze-contingent focus. Our system builds on Samsung’s Gear VR platform, but we modify it by adding a stereoscopic eye tracker and a motor that mechanically adjusts the distance between screen and magnifying lenses in real time (Fig. 1A, red arrows). To place the virtual image at the appropriate distance in each rendered frame, we use the eye tracker to determine where the user is looking in the VR scene, calculate the distance of that location, and adjust the virtual image accordingly (Fig. 4A). This system enabled us to perform comparisons with conventional focus under more naturalistic viewing conditions, in which users could freely look around a VR scene by moving both their head and eyes. Unlike the previous experiments, there was no specific fixation target, and they could move their head to look around the scene. Within each scene, the order of the conditions (conventional, center focus, and gaze-contingent focus) was randomized, and the user was asked to rank them on their perceived image quality.
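The per-frame update described above (find where the user is looking, read the scene depth there, and move the virtual image to that distance) can be sketched as follows. This is a simplified illustration, not the prototype's implementation: it assumes a single combined gaze point in pixel coordinates and a per-pixel depth map in meters, whereas the actual system uses a stereoscopic eye tracker. The function names and the 0–4 D clamp range are assumptions.

```python
import math


def focus_for_gaze(depth_map_m, gaze_px, min_d=0.0, max_d=4.0):
    """Virtual image distance (D) for the scene point under the gaze.

    depth_map_m: rows of per-pixel scene depths in meters (math.inf for far).
    gaze_px: (x, y) gaze position in pixels; clamped to the image bounds.
    """
    rows, cols = len(depth_map_m), len(depth_map_m[0])
    x = min(max(int(gaze_px[0]), 0), cols - 1)
    y = min(max(int(gaze_px[1]), 0), rows - 1)
    depth = depth_map_m[y][x]
    if depth <= 0:
        raise ValueError("scene depth must be positive")
    diopters = 0.0 if math.isinf(depth) else 1.0 / depth
    # Keep the request inside the range the focus mechanism can reach.
    return min(max(diopters, min_d), max_d)


def center_focus(depth_map_m, min_d=0.0, max_d=4.0):
    """Center focus mode: use the depth at the middle of the view as a gaze proxy."""
    rows, cols = len(depth_map_m), len(depth_map_m[0])
    return focus_for_gaze(depth_map_m, (cols // 2, rows // 2), min_d, max_d)


# Tiny hypothetical depth map (meters): far background and two near objects.
depth_map = [
    [math.inf, 2.0],
    [0.5, 0.2],
]
print(focus_for_gaze(depth_map, (0, 1)))  # gaze on the object at 0.5 m
print(center_focus(depth_map))            # center pixel, clamped to max_d
```

In the conventional mode the same function would simply be bypassed and the image left at a fixed distance; the three conditions compared in the study differ only in which depth, if any, drives the focus mechanism.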

Fig. 4.

(A) The gaze-contingent focus mode places the virtual image at the same depth as the object in the user’s gaze direction. This mode allows the vergence (red lines) and accommodation (gray areas) responses to match at the object depth, which is similar to natural viewing conditions. Note that this illustration does not depict one of the VR scenes used in the experiment (SI Appendix shows screenshots of these stimuli). (B) User rankings for each condition in four different scenes. Conditions were conventional (the virtual image remains at a fixed optical distance), center focus (the virtual image is adjusted to the scene depth at the center of the view), and gaze-contingent focus. Bar heights indicate average rankings, and black circles show individual responses.

Based on the insights from our experiments with the benchtop system, we expected users to prefer the gaze-contingent focus condition, particularly when viewing objects at close distances (i.e., 3–4 D). However, if the depth variation in a scene is very gradual or small, an eye tracker may not be necessary. Instead, the depth of the point in the center of the scene directly in front of the viewer (regardless of whether they are fixating it or not) could be used as a proxy for gaze (center focus). To test these hypotheses, we designed four VR scenes. Scenes 1 and 2 contained large depth variations and nearby objects (up to 4 D). Scene 3 contained objects within a farther depth range (0–2.5 D), and the depth variation was mostly gradual. Finally, scene 4 was a control scene that only contained objects at far distances.

Twenty users (age range = 21–38 y old) ranked three conditions (conventional, center focus, and gaze-contingent focus) for all scenes. We used this age group, because our previous study suggested that younger users would primarily benefit from gaze-contingent focus. As expected, users preferred gaze-contingent focus for scenes with large depth changes and nearby objects (Fig. 4B, scenes 1 and 2). Ordinal assessments (Friedman tests) showed a significant effect of condition, and follow-up tests indicated that, for scene 1, gaze-contingent focus was ranked significantly higher than conventional and for scene 2, both center focus and gaze-contingent focus were ranked higher (all P < 0.05, Bonferroni corrected). For the scenes with little to no depth variation (scenes 3 and 4), there was no significant difference between conditions.

Discussion

Near-eye displays pose both a challenge and an opportunity for rethinking visual displays in the spirit of designing a computing platform for users of all ages and abilities. Although the past few years have seen substantial progress toward consumer-grade VR platforms, conventional near-eye displays still pose unique challenges in terms of displaying visual information clearly and comfortably to a wide range of users. The optocomputational displays described here contribute substantially to solving issues of visual quality for users with both normal vision and common refractive errors. Key to these improvements is the idea of an adaptive focus display—a display that can adapt its focus distance in real time to the requirements of a particular user. This adaptive focus can be used to correct near- or far-sightedness and in combination with a mobile eye tracker, can create focus cues that are nearly correct for natural viewing—if they benefit the user. Moving forward, the ability to focus to both far and near distances in VR will be particularly important for telepresence, training, and remote control applications. Similar benefits likely also apply for AR systems with transparent displays that augment the view of the real world. However, AR systems pose additional challenges, because the simulated focus cues for the digital content should also be matched to the physical world.

As an alternative to adaptive focus, two previous studies have examined a low-cost multifocus display solution called monovision (19, 20). In monovision display systems, the left and right eye receive lenses of different powers, enabling one eye to accommodate to near distances and the other eye to accommodate to far distances. We also examined accommodative gain and perceptual responses in monovision (a difference of 1.5 D was introduced between the two eyes, but there was no dynamic focus). We found that monovision reduced sharpness and increased fusion slightly but not significantly, and users had no consistent preference for it. The monovision display also did not drive accommodation significantly more than conventional near-eye displays (SI Appendix).

The question of potential negative consequences with long-term use of stereoscopic displays has been raised; however, recent extensive studies have not found evidence of short-term visuomotor impairments or long-term changes in balance or impaired eyesight associated with viewing stereoscopic content (33, 34). In fact, AR and VR near-eye displays have the potential to provide practical assistance to people with existing visual impairments beyond those that are correctable by conventional optics. Near-eye displays designed to provide enhanced views of the world that may increase functionality for people with impaired vision (e.g., contrast enhancement and depth enhancement) have been in development since the 1990s (35, 36). However, proposed solutions have suffered from a variety of limitations, including poor form factor and ergonomics and restricted platform flexibility. The move to near-eye displays as a general purpose computing platform will hopefully open up possibilities for incorporating low-vision enhancements into increasingly user-friendly display systems. Thus, in the future, AR/VR platforms may become accessible and even essential for a wide variety of users.

Materials and Methods

Display Systems.

The benchtop prototype uses Topfoison TF60010A Liquid Crystal Displays with a resolution of pixels and a screen diagonal of 5.98 in. The optical system for each eye offers a field of view of 34.48° and comprises three Nikon Nikkor 50-mm f/1.4 camera lenses. The focus-tunable lens (Optotune EL-10-30-C) dynamically places the virtual image at any distance between 0 and 5 D and changes its focal length by shape deformation within 15 ms. The highest rms wavefront error exhibited by the lens placed in a vertical orientation (according to Optotune) is 0.3 (measured at 525 nm). No noticeable pupil swim was reported. Two additional camera lenses provide a 1:1 optical relay system that increases the eye relief so as to provide sufficient spacing for a near-IR (NIR)/visible beam splitter (Thorlabs BSW20R). The left one-half of the assembly is mounted on a Zaber T-LSR150A Translation Stage that allows interpupillary distance adjustment. A Grand Seiko WAM-5500 Autorefractor records the accommodation state of the user’s right eye at about 4–5 Hz with an accuracy of 0.25 D through the beam splitter. The wearable prototype is built on top of Samsung’s Gear VR platform with a Samsung Galaxy S7 Phone (field of view = 96°, resolution = 1,280 × 1,440 per eye). A SensoMotoric Instruments (SMI) Mobile ET-HMD Eye Tracker is integrated in the Gear VR. This binocular eye tracker operates at 60 Hz over the full field of view. The typical accuracy of the gaze tracker is listed as . We mount an NEMA 17 Stepper Motor (Phidgets 3303) on the SMI Mobile ET-HMD Eye Tracker and couple it to the focus adjustment mechanism of the Gear VR, which mechanically changes the distance between phone and internal lenses. The overall system latency is approximately 280 ms for a sweep from 4 to 0 D (optical infinity). For reference, a typical response time for human accommodation is around 300–400 ms (discussion is in SI Appendix) (37).

Experiments.

Informed consent was obtained from all study participants, and the procedures were approved by the Stanford University Institutional Review Board. Details are in SI Appendix.

Data Availability.

Dataset S1 includes the raw data from both studies.

Acknowledgments

We thank Joyce Farrell, Max Kinateder, Anthony Norcia, Bas Rokers, and Brian Wandell for helpful comments on a previous draft of the manuscript. N.P. was supported by a National Science Foundation (NSF) Graduate Research Fellowship. E.A.C. was supported by Microsoft and Samsung. G.W. was supported by a Terman Faculty Fellowship, an Okawa Research Grant, an NSF Faculty Early Career Development (CAREER) Award, Intel, Huawei, Samsung, Google, and Meta. Research funders played no role in the study execution, interpretation of data, or writing of the paper.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1617251114/-/DCSupplemental.

References

- 1. Wheatstone C. Contributions to the physiology of vision. Part the first. On some remarkable, and hitherto unobserved, phenomena of binocular vision. Philos Trans R Soc Lond. 1838;128:371–394.

- 2. Sutherland IE. 1968. A head-mounted three dimensional display. Proceedings of Fall Joint Computer Conference (ACM, New York), pp 757–764.

- 3. Kooi FL, Toet A. Visual comfort of binocular and 3D displays. Displays. 2004;25:99–108.

- 4. Lambooij M, Fortuin M, Heynderickx I, IJsselsteijn W. Visual discomfort and visual fatigue of stereoscopic displays: A review. J Imaging Sci Technol. 2009;53(3):1–14.

- 5. Shibata T, Kim J, Hoffman DM, Banks MS. The zone of comfort: Predicting visual discomfort with stereo displays. J Vis. 2011;11(8):11. doi: 10.1167/11.8.11.

- 6. Cutting JE, Vishton PM. Perceiving layout and knowing distances: The interaction, relative potency, and contextual use of different information about depth. In: Epstein W, Rogers S, editors. Perception of Space and Motion. Academic Press; San Diego: 1995. pp. 69–117.

- 7. Hoffman DM, Girshick AR, Akeley K, Banks MS. Vergence-accommodation conflicts hinder visual performance and cause visual fatigue. J Vis. 2008;8(3):1–30. doi: 10.1167/8.3.33.

- 8. Vitale S, Ellwein L, Cotch M, Ferris F, Sperduto R. Prevalence of refractive error in the United States, 1999-2004. Arch Ophthalmol. 2008;126(8):1111–1119. doi: 10.1001/archopht.126.8.1111.

- 9. Duane A. Normal values of accommodation at all ages. J Am Med Assoc. 1912;LIX(12):1010–1013.

- 10. Traub AC. Stereoscopic display using rapid varifocal mirror oscillations. Appl Opt. 1967;6(6):1085–1087. doi: 10.1364/AO.6.001085.

- 11. Shiwa S, Omura K, Kishino F. Proposal for a 3-D display with accommodative compensation: 3DDAC. J Soc Inf Disp. 1996;4(4):255–261.

- 12. Rolland J, Krueger M, Goon A. Multifocal planes head-mounted displays. Appl Opt. 2000;39(19):3209–3215. doi: 10.1364/ao.39.003209.

- 13. Akeley K, Watt S, Girshick A, Banks M. A stereo display prototype with multiple focal distances. ACM Trans Graph. 2004;23(3):804–813.

- 14. Liu S, Cheng D, Hua H. 2008. An optical see-through head mounted display with addressable focal planes. Proceedings of ISMAR (IEEE Computer Society, Washington, DC), pp 33–42.

- 15. Love GD, et al. High-speed switchable lens enables the development of a volumetric stereoscopic display. Opt Express. 2009;17(18):15716–15725. doi: 10.1364/OE.17.015716.

- 16. Lanman D, Luebke D. Near-eye light field displays. ACM Trans Graph. 2013;32(6):1–10.

- 17. Huang FC, Chen K, Wetzstein G. The light field stereoscope: Immersive computer graphics via factored near-eye light field display with focus cues. ACM Trans Graph. 2015;34(4):1–12.

- 18. Banks MS, Hoffman DM, Kim J, Wetzstein G. 3D displays. Annu Rev Vis Sci. 2016;2(1):397–435. doi: 10.1146/annurev-vision-082114-035800.

- 19. Konrad R, Cooper EA, Wetzstein G. 2016. Novel optical configurations for virtual reality: Evaluating user preference and performance with focus-tunable and monovision near-eye displays. ACM CHI Conference on Human Factors in Computing System (ACM, New York), pp 1211–1220.

- 20. Johnson PV, et al. Dynamic lens and monovision 3D displays to improve viewer comfort. Opt Express. 2016;24:11808–11827. doi: 10.1364/OE.24.011808.

- 21. Llull P, et al. OSA Imaging and Applied Optics 2015. Optical Society of America; Washington, DC: 2015. Design and optimization of a near-eye multifocal display system for augmented reality; p. JTH3A.5.

- 22. World Health Organization 2014 Visual Impairment and Blindness. Available at www.who.int/mediacentre/factsheets/fs282/en/. Accessed September 29, 2016.

- 23. Pamplona VF, Mohan A, Oliveira MM, Raskar R. Netra: Interactive display for estimating refractive errors and focal range. ACM Trans Graph. 2010;29(4):77:1–77:8.

- 24. Fincham EF, Walton J. The reciprocal actions of accommodation and convergence. J Physiol. 1957;137(3):488–508. doi: 10.1113/jphysiol.1957.sp005829.

- 25. Charman WN, Heron G. On the linearity of accommodation dynamics. Vision Res. 2000;40(15):2057–2066. doi: 10.1016/s0042-6989(00)00066-3.

- 26. Heron G, Charman WN. Accommodation as a function of age and the linearity of the response dynamics. Vision Res. 2004;44(27):3119–3130. doi: 10.1016/j.visres.2004.07.016.

- 27. Schowengerdt BT, Seibel EJ. True 3-D scanned voxel displays using single or multiple light sources. J Soc Inf Disp. 2006;14(2):135–143.

- 28. Watt S, Ryan L. Age-related changes in accommodation predict perceptual tolerance to vergence-accommodation conflicts in stereo displays (abs.) J Vis. 2015;15:267.

- 29. Yang SN, et al. Stereoscopic viewing and reported perceived immersion and symptoms. Optom Vis Sci. 2012;89(7):1068–1080. doi: 10.1097/OPX.0b013e31825da430.

- 30. Read JC, Bohr I. User experience while viewing stereoscopic 3D television. Ergonomics. 2014;57(8):1140–1153. doi: 10.1080/00140139.2014.914581.

- 31. Peli E, Hedges TR, Tang J, Landmann D. 53.2: A binocular stereoscopic display system with coupled convergence and accommodation demands. SID Dig. 2012;32(1):1296–1299.

- 32. Mauderer M, Conte S, Nacenta MA, Vishwanath D. 2014. Depth perception with gaze-contingent depth of field. ACM CHI Conference on Human Factors in Computing System (ACM, New York), pp 217–226.

- 33. Read JC, et al. Viewing 3D TV over two months produces no discernible effects on balance, coordination or eyesight. Ergonomics. 2015;59(8):1073–1088. doi: 10.1080/00140139.2015.1114682.

- 34. Read JC, et al. Balance and coordination after viewing stereoscopic 3D television. R Soc Open Sci. 2015;2(7):140522. doi: 10.1098/rsos.140522.

- 35. Massof RW, Rickman DL. Obstacles encountered in the development of the low vision enhancement system. Optom Vis Sci. 1992;69(1):32–41. doi: 10.1097/00006324-199201000-00005.

- 36. van Rheede JJ, et al. Improving mobility performance in low vision with a distance-based representation of the visual scene. Invest Ophthalmol Vis Sci. 2015;56(8):4802. doi: 10.1167/iovs.14-16311.

- 37. Campbell FW, Westheimer G. Dynamics of accommodation responses of the human eye. J Physiol. 1960;151:285–295. doi: 10.1113/jphysiol.1960.sp006438.
