Abstract

Background

Use of electronic clinical decision support (eCDS) has been recommended to improve implementation of clinical decision rules. Many eCDS tools, however, are designed and implemented without taking into account the context in which clinical work is performed. Implementation of the pediatric traumatic brain injury (TBI) clinical decision rule at one Level I pediatric emergency department includes an electronic questionnaire triggered when ordering a head computed tomography using computerized physician order entry (CPOE). Providers use this CPOE tool in less than 20% of trauma resuscitation cases. A human factors engineering approach could identify the implementation barriers that are limiting the use of this tool.

Objectives

The objective was to design a pediatric TBI eCDS tool for trauma resuscitation using a human factors approach. The hypothesis was that clinical experts will rate a usability-enhanced eCDS tool better than the existing CPOE tool for user interface design and suitability for clinical use.

Methods

This mixed-methods study followed usability evaluation principles. Pediatric emergency physicians were surveyed to identify barriers to using the existing eCDS tool. Using standard trauma resuscitation protocols, a hierarchical task analysis of pediatric TBI evaluation was developed. Five clinical experts, all board-certified pediatric emergency medicine faculty members, then iteratively modified the hierarchical task analysis until reaching consensus. The software team developed a prototype eCDS display using the hierarchical task analysis. Three human factors engineers provided feedback on the prototype through a heuristic evaluation, and the software team refined the eCDS tool using a rapid prototyping process. The eCDS tool then underwent iterative usability evaluations by the five clinical experts using video review of 50 trauma resuscitation cases. A final eCDS tool was created based on their feedback, with content analysis of the evaluations performed to ensure all concerns were identified and addressed.

Results

Among 26 emergency physicians (EPs; 76% response rate), the main barriers to using the existing tool were that the information displayed is redundant and does not fit clinical workflow. After the prototype eCDS tool was developed based on the trauma resuscitation hierarchical task analysis, the human factors engineers rated it better than the CPOE tool for nine of 10 standard user interface design heuristics on a three-point scale. The eCDS tool was also rated better for clinical use on the same scale in 84% of 50 expert–video pairs and was rated equivalent in the remainder. Clinical experts also rated barriers to use of the eCDS tool as being low.

Conclusions

An eCDS tool for diagnostic imaging designed using human factors engineering methods has improved perceived usability among pediatric emergency physicians.

Traumatic brain injury (TBI) is a leading cause of childhood morbidity and mortality. In the United States, pediatric head trauma is responsible for 7,400 deaths, 60,000 hospitalizations, and more than 600,000 emergency department (ED) visits annually.1 More than half of children with head injuries undergo computed tomography (CT), with most having minor head trauma. Fewer than 10% of those scanned have TBI, and fewer than 1% have clinically important TBI.2 Given the number of children evaluated each year for head injury, the established risks of radiation exposure3,4 and the added cost from overuse of head CT represent avoidable health care burdens.

Similar to other clinical conditions for which testing or treatment is either overused or inconsistently applied,5–8 a Pediatric Emergency Care Applied Research Network (PECARN) clinical decision rule (CDR) has been derived and validated to guide the use of head CT in injured children.2 The current PECARN criteria for TBI are stratified by age: < 2 years old and ≥ 2 years old.2 Each list contains seven conditions, signs, or symptoms for which the patient must be assessed to determine the risk of clinically significant TBI. According to the PECARN rule, high-risk features are altered mental status, low Glasgow Coma Scale (GCS) score, and skull fracture. Medium risk is defined as the absence of high-risk criteria and the presence of at least one of the remaining four age-specific criteria. Low risk is defined as the absence of all seven age-specific criteria.
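The three-level stratification described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the study software (which was written in HTML and JavaScript): the criterion labels follow Table 2 of this paper, while the identifier names and the function `risk_level` are assumptions introduced here. It expects all seven criteria for the age group to have been assessed.

```python
from typing import Mapping

# Criterion labels follow Table 2; the identifiers themselves are illustrative.
HIGH_RISK = {
    "under_2": ("abnormal_mental_status", "gcs_below_15", "palpable_skull_fracture"),
    "2_and_over": ("abnormal_mental_status", "gcs_below_15", "basilar_skull_fracture"),
}
OTHER = {
    "under_2": ("severe_mechanism", "loss_of_consciousness",
                "scalp_hematoma", "not_normal_per_parent"),
    "2_and_over": ("severe_mechanism", "loss_of_consciousness",
                   "severe_headache", "vomiting"),
}


def risk_level(age_years: float, findings: Mapping[str, bool]) -> str:
    """Classify a fully assessed patient as 'high', 'medium', or 'low' risk.

    Raises KeyError if any of the seven age-specific criteria is missing.
    """
    group = "under_2" if age_years < 2 else "2_and_over"
    # Any high-risk feature present means high risk.
    if any(findings[name] for name in HIGH_RISK[group]):
        return "high"
    # No high-risk feature, but at least one of the remaining four is present.
    if any(findings[name] for name in OTHER[group]):
        return "medium"
    # All seven age-specific criteria are absent.
    return "low"
```

The function deliberately returns only a risk level; how each level maps to an imaging recommendation is left to the rule itself and to local protocol.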

Unfortunately, physicians often fail to adopt CDRs and other forms of evidence-based practice through traditional means of knowledge acquisition such as journal articles and scientific presentations.9–12 This implementation gap has led to interest in electronic clinical decision support (eCDS) tools as a means to improve physician adoption rates.13,14 An eCDS tool is deployed within the electronic health record system, often connected to computerized physician order entry (CPOE) functionality, to help the provider in his or her decision-making process. Many existing eCDS tools have been designed and implemented without taking into account the context in which clinical work is performed. As a result, implementation research confirms little demonstrable improvement in physician adoption or quality of care using these systems.15,16 At the very least, poor implementation of eCDS systems leads to wasted effort and money by failing to accomplish its goal.17,18 At worst, it leads to unnecessary patient morbidity or mortality by serving as a time-consuming distraction to physicians.19,20

In the ED at Children’s National Medical Center (CNMC), the current PECARN CDR implementation for head CT imaging after TBI is a CDR questionnaire delivered when ordering a head CT using Cerner FirstNet (Cerner Corporation, North Kansas City, MO). Although more than half of clinicians use it in their daily practice, it is used in less than 20% of trauma resuscitation cases.21 Rather than citing physician resistance to the existing CPOE tool, a human factors engineering approach seeks to address this noncompliance by identifying the usability challenges and workflow gaps that serve as barriers to use. Trauma resuscitations at CNMC involve a coordinated team of emergency medicine and trauma surgery providers, which includes a trauma recorder (typically a nurse or physician) who collects the elements of assessment and management as they are uttered by other team members and a nurse outside the trauma bay who enters orders into the CPOE. In the trauma bay, there is a wall-mounted 40-inch monitor that displays pertinent patient information. The patient information presently displayed is only vital signs data, but the trauma flowsheet is being converted from paper form to a digital tablet entry to enable the monitor to display the ongoing collective assessment in real time. A human factors evaluation may discover that the CPOE tool, which is being presented to the nurse outside the trauma bay who is entering the orders, is being delivered to the wrong team member and well after the decision has already been made to perform the test. Our objective is to use human factors engineering methods to design a pediatric TBI eCDS tool that is more appropriate than the existing CPOE tool for the fast-paced environment of trauma resuscitations.

METHODS

Study Design

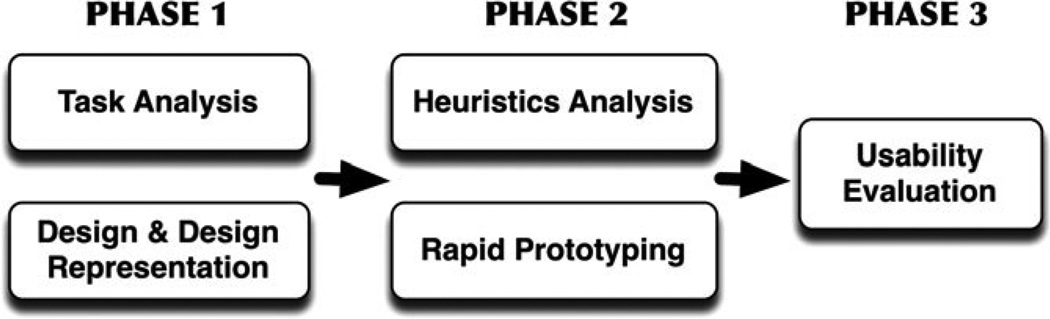

For this study we used a mixed-methods approach that is an adaptation of the Hix and Hartson22 star life cycle usability evaluation (Figure 1). This study was approved by our institutional review board (IRB).

Figure 1.

Research approach as a modified Star Life Cycle Evaluation.

Study Setting and Population

The CNMC is an urban tertiary care teaching hospital with Level I trauma and burn center designation. The ED sees almost 87,000 visits annually, with over 500 trauma resuscitations. In addition to the study physicians, we recruited into our team three usability experts (human factors engineers with domain knowledge of medicine) and five clinical experts (board-certified pediatric emergency medicine academic faculty members).

Study Protocol

We used a video surveillance system already in place at our hospital that allows for performance improvement review of trauma resuscitations. Each trauma bay is equipped with two ceiling-mounted cameras that record video and audio. All of the audiovisual streams, including the vital signs monitor, are stored together on a server and can be reviewed simultaneously through a secure portal.

Usability Evaluation Phase 0: Survey of Providers

We created an anonymous survey for CNMC pediatric EM staff physicians to identify barriers to use of the existing CPOE tool for pediatric TBI. We developed the questions from identified barriers to use of electronic decision support from a prior systematic review and pilot-tested the questions prior to the actual survey.23 Electronic survey data were collected via e-mail solicitation and managed using Research Electronic Data Capture (REDCap) electronic data capture tools hosted in the Biostatistics Division of Children’s National Health System.24

Usability Evaluation Phase 1a: Hierarchical Task Analysis

We performed a hierarchical task analysis to reorganize the existing PECARN TBI decision rule for pediatric TBI.25 Using existing trauma protocols (Advanced Trauma Life Support, Pediatric Advanced Life Support, and our own department protocols), the study physicians performed a hierarchical task analysis of pediatric TBI evaluation. The clinical experts then iteratively modified the hierarchical task analysis until no new modifications were made (saturation) and the clinical experts were in consensus. The PECARN TBI assessment criteria were then identified within the hierarchical task analysis to understand how they fit the workflow pattern of how trauma patients are assessed once they present to the ED.

Usability Evaluation Phase 1b: Design and Design Representation

Guided by the hierarchical task analysis, the software team (KY, SC) developed a computer display of a prototype eCDS. We created a Microsoft PowerPoint slideshow with embedded links to navigate between the different states of the tool. The goal of the prototype was to display the information in a way that correlated with workflow, making it natural and intuitive to the providers who were assessing the trauma patient.

Usability Evaluation Phase 2a: Heuristic Evaluation

For the heuristic analysis, a team of three human factors engineers with medical domain knowledge was consulted. These experts evaluated the design of the existing CPOE tool and our prototype eCDS tool based on Jakob Nielsen’s “10 Usability Heuristics for User Interface Design,” which is a commonly used and well-respected reference for user interface design.26 This consensus evaluation provided a score for each tool for the 10 usability heuristics on a three-point scale (heuristic met, partially met, not met).

Usability Evaluation Phase 2b: Rapid Prototyping

The prototype eCDS was programmed by the software team using HTML and JavaScript because they allowed for portability between systems without added software development. Using this interface, the tool can be run on any computer or portable device with a Web browser. Iterative refinements were made and tested based on the heuristic evaluation and continuous researcher review of the prototype performance with routine video review of trauma resuscitation cases.

Usability Evaluation Phase 3: Near-live Usability Testing

The eCDS tool was subjected to iterative usability evaluation by the clinical experts using video review of trauma resuscitation cases. Based on prior usability evaluation sample size literature,27 five clinical experts, all of whom are emergency physicians, completed 10 simulations each over a 1-month period. To vary case content, the 10 most recent trauma resuscitation videos were accessed from the server at the time of each expert session. For usability evaluation, we conducted “near-live” video-guided simulated resuscitations using a “talk-aloud” protocol.28

The “near-live” simulation was set up so that the clinical expert was viewing two display screens. The first display showed recorded video footage of the resuscitation in addition to the audio stream and vital sign monitor from the case. The second display projected the eCDS prototype that was displaying real-time information as the trauma resuscitation was being viewed. During the simulations, a member of the research team was the trauma recorder acting as a “Wizard of Oz,”29 picking up cues from the video and triggering the eCDS tool. Similar to their behavior as members of the trauma resuscitation team, the clinical experts were encouraged to verbalize their thoughts, perceptions, and actions as they worked through the simulations with the eCDS tool.

Qualitative Analysis

The participants’ utterances and feedback during the simulation were recorded by direct observation and with a digital audio recorder to understand the decision-making in real time. In addition, physician experiences were explored at the end of each case-based simulation using semistructured interviews and a survey that rated perceptions of the eCDS utility on a three-point Likert scale. Audio and written transcripts were reviewed independently by two study investigators (KY, JJ) using NVivo 10 (QSR International, Melbourne, Australia). Content analysis was performed inductively to generate themes, informed by previously identified barriers to use of eCDS.23 Data were double-coded and examined for discrepancies to establish consensus.

RESULTS

The Phase 0 implementation survey was completed by 26 CNMC pediatric emergency medicine providers (76% response rate). The primary barriers to use of the existing CPOE tool were that the information provided is redundant and does not fit clinical workflow (Table 1).

Table 1.

Survey of Clinician Barriers to Use of Existing CPOE Tool

| Barrier | CPOE Tool, % |
|---|---|
| Information redundancy | 69.2 |
| Fit to clinical workflow | 53.8 |
| Too much time to complete | 30.8 |
| Medicolegal concerns | 15.4 |
| Family expectations | 15.4 |

N = 26.

CPOE = computerized physician order entry.

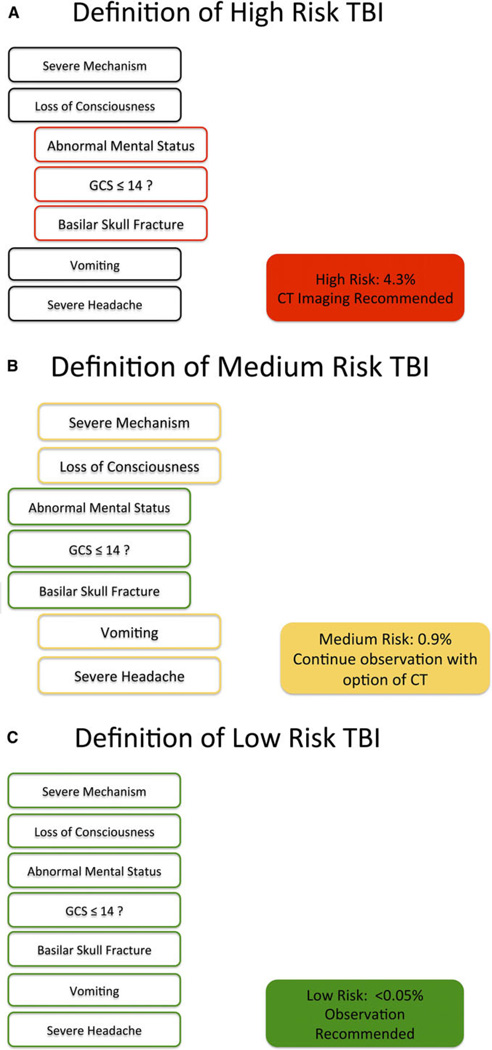

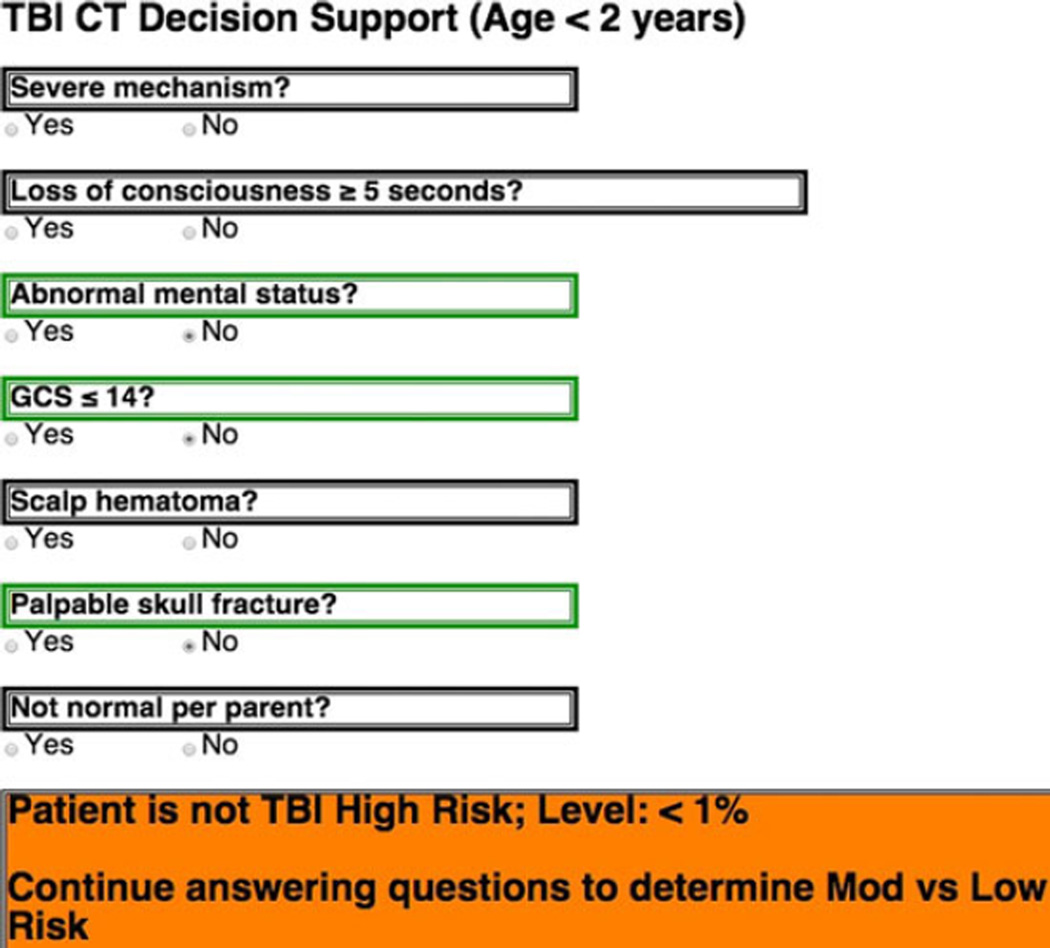

We developed and iteratively modified a trauma resuscitation hierarchical task analysis until we reached clinical expert consensus. The Phase 1a hierarchical task analysis was then used to reorganize the original PECARN rule for the Phase 1b prototype eCDS tool (Table 2). To make the tool concise and not distracting to the user, we presented the criteria in the form of short yes or no questions abstracted from the PECARN rule. In addition, we included color markers and a status indicator that would not only draw the users’ attention to important changes in the assessment, but also provide a familiar indication of the severity of the assessed criteria and the patient’s risk level. Red indicated the presence of a high-risk criterion, yellow indicated medium-risk criteria, and green indicated low-risk criteria (Figure 2).

Table 2.

Reorganization of PECARN TBI rule by age

| HTA-modified TBI Rule | |
|---|---|
| Age ≥ 2 yr | Age < 2 yr |
| Severe mechanism | Severe mechanism |
| Loss of consciousness | Loss of consciousness |
| Abnormal mental status | Abnormal mental status |
| GCS < 15 | GCS < 15 |
| Severe headache | Scalp hematoma |
| Basilar skull fracture | Palpable skull fracture |
| Vomiting | Not normal per parent |

GCS = Glasgow Coma Scale; HTA = hierarchical task analysis; PECARN = Pediatric Emergency Care Applied Research Network; TBI = traumatic brain injury.

Figure 2.

Organization of (A) high-, (B) intermediate-, and (C) low-risk elements. CT = computed tomography; GCS = Glasgow Coma Scale; TBI = traumatic brain injury.

During Phase 2a, the human factors engineers scored the prototype eCDS tool better for user interface design on all but one of the 10 usability heuristic measures when compared to the CPOE tool (Data Supplement S1, available as supporting information in the online version of this paper). The eCDS tool was considered deficient in the “help and documentation” category for not having links to the pediatric GCS and criteria for severe mechanism of injury for reference. To address this deficiency, we planned to add wall-mounted severe mechanism of injury posters in every trauma resuscitation room to accompany the existing GCS reference posters.

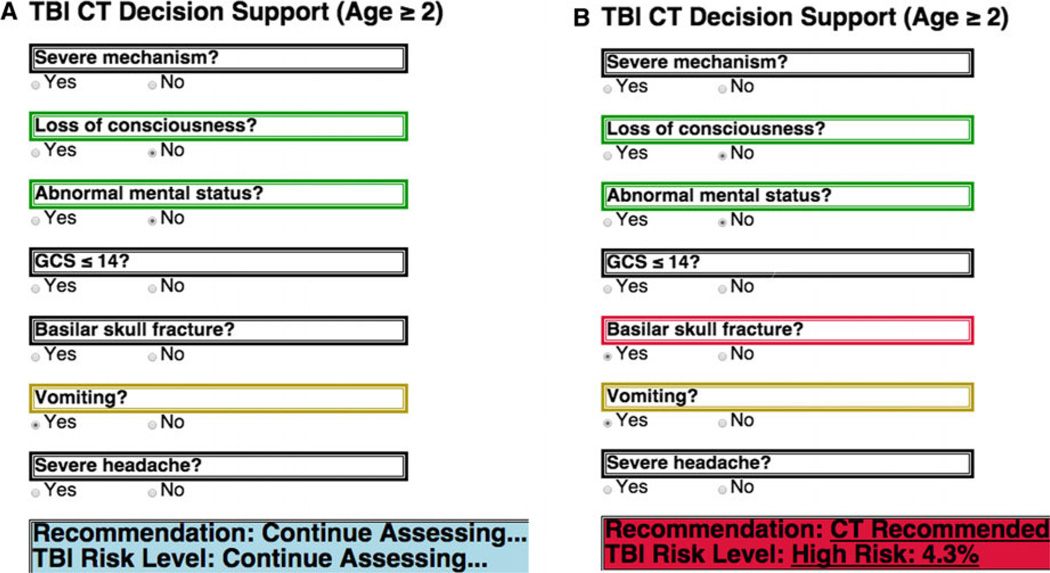

As a result of the heuristic evaluation, design improvements were incorporated during Phase 2b rapid prototyping before usability testing. Major changes included phrasing each of the seven questions so that all “yes” answers were positive findings and that “yes” and “no” options were clearly visible. When an answer is selected, the query area is marked and the question above it changes to a color that indicates the contribution of that finding to risk: red indicating contribution to high risk, yellow indicating contribution to medium risk, and green indicating no contribution to risk. The decision bar on the bottom of the tool is made to stand out from the rest as it displays the recommendation. This bar prompts the user to continue assessing the trauma until enough criteria are met for a recommendation to be made. If a user error is made, or a criterion is reassessed and changes during the resuscitation, the yes/no status is visible and can be corrected. The human factors engineers advised that changes in the state of the responses be automatically reflected in the recommendation.
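The color feedback described in this paragraph amounts to a small lookup on the criterion and its answer. The Python sketch below is one way to express it and is not the study's JavaScript; the function name `question_color`, the identifier names, and the `"neutral"` state for unanswered questions are assumptions made for illustration.

```python
from typing import Optional

# High-risk criteria across both age groups (labels from Table 2; identifiers illustrative).
HIGH_RISK_CRITERIA = {
    "abnormal_mental_status",
    "gcs_below_15",
    "basilar_skull_fracture",
    "palpable_skull_fracture",
}


def question_color(criterion: str, answer: Optional[bool]) -> str:
    """Return the display color for one yes/no question.

    answer is True for "yes" (positive finding), False for "no",
    and None when the question has not been answered yet.
    """
    if answer is None:
        return "neutral"  # assumption: unanswered questions carry no risk color
    if not answer:
        return "green"    # a "no" answer makes no contribution to risk
    # A "yes" answer contributes to high risk or to medium risk.
    return "red" if criterion in HIGH_RISK_CRITERIA else "yellow"
```

Because every question is phrased so that "yes" is the positive finding, a single boolean per criterion is enough to drive the color.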

In anticipation of integration into the electronic display of the trauma flowsheet, we condensed the tool into a single column occupying about a third of the screen, so that other pertinent patient information could be displayed on the monitors in the trauma bay. We used a continuous loop that reassessed when a new criterion was checked off so that the system state would update instantaneously (sample code available from author on request). The recommendation made by the tool then would reflect real-time updates in the trauma assessment (Figure 3). In addition, any input was made to be reversible so that a wrong input could be changed immediately. Likewise, if criteria changed after patient reassessment during the resuscitation, the system would change immediately to reflect the new information and update the recommendation.

Figure 3.

Examples of (A) updating eCDS tool and (B) change in recommendation. CT = computed tomography; GCS = Glasgow Coma Scale; TBI = traumatic brain injury.
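The immediate, reversible updating described above can be illustrated with a small state holder that recomputes the recommendation whenever an answer changes. Note the substitution: this sketch is event-driven (it re-evaluates on each input) rather than the continuous loop used in the prototype, and it is written in Python rather than JavaScript. The class `LiveAssessment` and the placeholder evaluator `count_positive` are hypothetical names introduced here.

```python
from typing import Callable, Dict, Iterable, List, Optional

Answers = Dict[str, Optional[bool]]


class LiveAssessment:
    """Holds yes/no answers and recomputes the recommendation on every change."""

    def __init__(self, criteria: Iterable[str],
                 evaluate: Callable[[Answers], str]) -> None:
        # None means the criterion has not been assessed yet.
        self.answers: Answers = {name: None for name in criteria}
        self._evaluate = evaluate
        self._listeners: List[Callable[[str], None]] = []
        self.recommendation = evaluate(self.answers)

    def subscribe(self, listener: Callable[[str], None]) -> None:
        """Register a callback (for example, a display refresh)."""
        self._listeners.append(listener)

    def set_answer(self, criterion: str, answer: Optional[bool]) -> None:
        """Record, change, or clear an answer, then refresh the recommendation."""
        if criterion not in self.answers:
            raise KeyError(criterion)
        self.answers[criterion] = answer
        self.recommendation = self._evaluate(self.answers)
        for listener in self._listeners:
            listener(self.recommendation)


def count_positive(answers: Answers) -> str:
    """Placeholder evaluator (hypothetical): reports how many findings are positive."""
    return f"{sum(1 for a in answers.values() if a)} positive finding(s)"
```

Since `set_answer` overwrites the stored value, correcting a wrong input or recording a changed finding after reassessment uses the same call as the first entry, and the displayed recommendation follows automatically.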

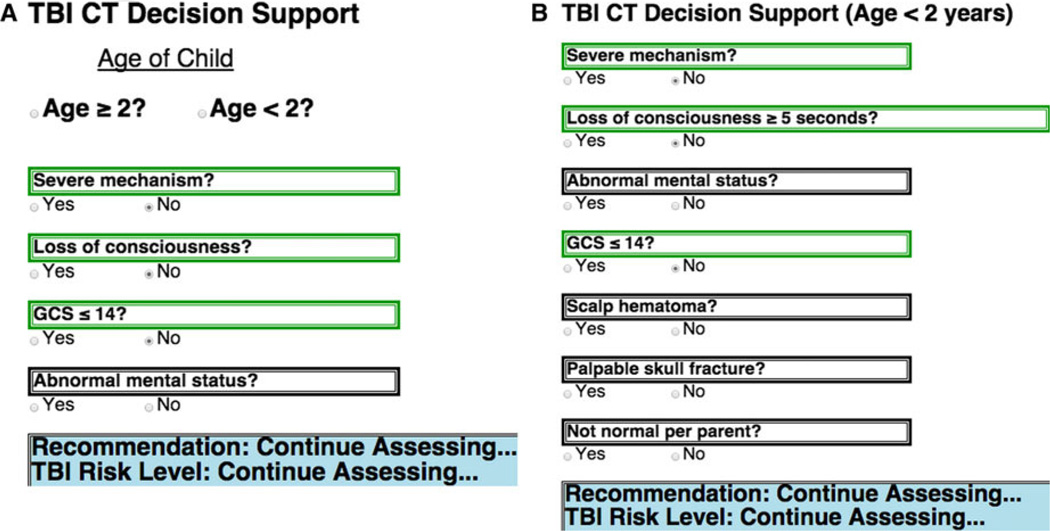

Recommendations from the Phase 3 usability testing with clinical experts led to further substantive improvements in the eCDS tool. One major consideration that needed to be accounted for was that the PECARN rule is stratified into two criteria sets based on age and that the age of a patient presenting to a trauma resuscitation was not always known immediately. The appropriate PECARN TBI criteria could not be displayed immediately, and nothing could be displayed on the eCDS tool screen until age was determined. Because reorganization of the two criteria sets had shown significant overlap early in the assessment (Table 2), an initial starting display was created so that the common criteria could be displayed for assessment without age being known, and information could carry over to the age-specific list once the age was determined (Figure 4).

Figure 4.

The eCDS tool allows collection of risk score elements prior to knowing age. CT = computed tomography; eCDS = electronic clinical decision support; GCS = Glasgow Coma Scale; TBI = traumatic brain injury.
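The age-unknown starting display can be sketched as follows: show only the criteria common to both age-specific lists, then expand to the full list once age is known while keeping the answers already entered. This Python sketch is illustrative and is not the study code; the lists mirror Table 2, and the function names are assumptions.

```python
from typing import Dict, List, Optional

# Criterion order follows Table 2; identifiers are illustrative.
TWO_AND_OVER: List[str] = [
    "severe_mechanism", "loss_of_consciousness", "abnormal_mental_status",
    "gcs_below_15", "severe_headache", "basilar_skull_fracture", "vomiting",
]
UNDER_2: List[str] = [
    "severe_mechanism", "loss_of_consciousness", "abnormal_mental_status",
    "gcs_below_15", "scalp_hematoma", "palpable_skull_fracture",
    "not_normal_per_parent",
]


def shared_criteria() -> List[str]:
    """Criteria that appear in both age-specific lists, in display order."""
    return [name for name in TWO_AND_OVER if name in UNDER_2]


def start_unknown_age() -> Dict[str, Optional[bool]]:
    """Initial display when age is not yet known: shared criteria, all unanswered."""
    return {name: None for name in shared_criteria()}


def apply_age(answers: Dict[str, Optional[bool]],
              age_years: float) -> Dict[str, Optional[bool]]:
    """Expand to the age-specific list, carrying over answers already entered."""
    full_list = UNDER_2 if age_years < 2 else TWO_AND_OVER
    return {name: answers.get(name) for name in full_list}
```

With the lists as given in Table 2, the shared set is the first four rows, which is why assessment can begin before age is established.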

The other consideration was for the tool to provide intermediate feedback to the provider about how the assessment is going even if a final recommendation cannot yet be made. Specifically, it was possible to indicate that the patient was no longer considered high risk but should continue to be assessed to determine low versus intermediate risk. This prompt could be triggered only if all three of the age-specific high-risk PECARN criteria were absent (Figure 5).

Figure 5.

Intermediate decision support guidance.
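The intermediate guidance can be expressed as a function over partially completed answers. The Python sketch below is a hedged illustration, not the study code: the function name `decision_bar` and the message strings are hypothetical, and each answer is `True`, `False`, or missing/`None` when not yet assessed.

```python
from typing import Mapping, Optional, Sequence


def decision_bar(answers: Mapping[str, Optional[bool]],
                 high_risk: Sequence[str],
                 other: Sequence[str]) -> str:
    """Return the status text for a possibly incomplete assessment."""
    # Any positive high-risk criterion settles the risk level immediately.
    if any(answers.get(name) is True for name in high_risk):
        return "high risk"
    # Until all high-risk criteria are answered, high risk cannot be excluded.
    if any(answers.get(name) is None for name in high_risk):
        return "continue assessment"
    # From here on, every high-risk criterion has been answered "no".
    if any(answers.get(name) is True for name in other):
        return "medium risk"
    if any(answers.get(name) is None for name in other):
        return "not high risk: continue assessment"
    return "low risk"
```

The fourth branch is the intermediate prompt: it is reachable only after all of the high-risk criteria have been marked absent, which matches the trigger condition stated above.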

After five clinical experts gave feedback on 10 video cases each (with a three-point scale of better, equivalent, or worse), the iteratively refined eCDS tool was rated better than the existing CPOE tool in 84% of respondent–case pairs and rated equivalent in the remainder. Content analysis did not identify any new barriers to eCDS tool use. Barriers to acceptability were not matching clinical workflow (6%), medicolegal concerns (4%), and the timing/redundancy (8%) of data display (Table 3). Although not directly comparable, these rates were substantially lower than the previously solicited CPOE tool barriers (Table 1).

Table 3.

Frequency of Identification of Barriers to Decision Support

| Barrier | New eCDS Tool, % (Expert–Video Pairs), n = 50 |
|---|---|
| Information redundancy | 8 |
| Fit to clinical workflow | 6 |
| Medicolegal concerns | 4 |

eCDS = electronic clinical decision support.
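Because the denominator is 50 expert–video pairs, each pair corresponds to 2 percentage points, so the reported percentages convert to whole counts. The short Python sketch below does that arithmetic; the helper name `pairs_from_percent` is an assumption introduced here, and the counts are derived from the reported percentages rather than stated in the paper.

```python
def pairs_from_percent(percent: float, total: int = 50) -> int:
    """Convert a reported percentage of expert-video pairs into a count."""
    return round(percent * total / 100)


# Percentages as reported in the Results and Table 3.
reported = {
    "rated better than CPOE tool": 84,
    "information redundancy": 8,
    "fit to clinical workflow": 6,
    "medicolegal concerns": 4,
}
counts = {label: pairs_from_percent(pct) for label, pct in reported.items()}

# Pairs not rated "better" were rated "equivalent".
equivalent = 50 - counts["rated better than CPOE tool"]
```

Under these figures, 42 of 50 pairs rated the new tool better and 8 rated it equivalent, with barriers noted in 4, 3, and 2 pairs, respectively.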

DISCUSSION

Our study demonstrates the importance of considering workflow and usability in design of CDS tools. Use of the PECARN TBI decision rule occurs in less than 20% of trauma resuscitations at our institution and physicians cite workflow and usability as major obstacles. Using a human factors approach and iterative design, we were able to substantially improve the match to workflow and perceived usability of the tool to support decision making in the trauma resuscitation setting.

The Agency for Healthcare Research and Quality has promoted the use of human factors analyses of usability and workflow to improve health information technology (IT) implementation.30 Usability evaluation methods have improved work in ambulatory care settings by engaging end users and simulating the clinical realities of use.31 Previous work applying paper checklist interventions has shown that human factors implementation is possible in operating rooms and intensive care units.32 Little human factors research has been conducted in other critical care medical settings,33–35 including trauma resuscitation rooms.36 This study is a novel translation of human factors usability and workflow methods to inform and improve diagnostic imaging eCDS in the pediatric trauma resuscitation environment.

The survey-identified barriers are consistent with prior research in that the two primary errors with health IT are problems with data entry and data display and poor provider communication and coordination with clinical workflow.23,37 The value of human factors methods for health IT implementation has been highlighted previously38,39 and is considered core content in the specialty of clinical informatics.40 The benefit of a human factors approach to improve usability and workflow of CDS was apparent in our study, as those two primary barriers were markedly reduced with the new eCDS tool (Table 3).

Dissemination and implementation remain a challenge for the translation of research findings into clinical practice, including emergency care.41 In contrast to clinical practice guidelines that have had well-publicized difficulties with adoption,42–44 CDRs have been a focus of clinical researchers because they are designed with bedside application in mind. Despite this intent, challenges remain for their implementation, as highlighted by previous work and our experience with the CPOE tool in the trauma resuscitation room.45–48 Part of this implementation barrier is inherent in CDR research methodology. Elements of CDRs are selected for reliability and then the statistical modeling organizes the criteria for optimal discrimination of the outcome of interest. When disseminated as peer-reviewed publications, CDRs are often presented in a way that matches the statistical model output or are perhaps organized in a way perceived to be easier to remember. Our work highlights how engaging usability experts and end-user practitioners to consider the clinical work environment can help reorganize and adapt CDRs as eCDS tools to better fit workflow and promote adoptability. When considered within a conceptual framework for implementation science,49 human factors design is a valuable strategy for improving intervention characteristics. Our study complements other ongoing human factors research using recommended implementation planning strategies50 to achieve better use of the PECARN TBI CDR.51

LIMITATIONS

The results of this study should be considered with some limitations in mind. First and foremost, we are describing a usability study to design a better eCDS tool and therefore are focused on implementation outcomes, not clinical outcomes. In fact, usability testing should not be undertaken in the clinical environment without extensive protections against patient harms. This is one of the major issues identified in prior studies and case reports where electronic health record features are put into production without scrutiny by human factors methods.17–20 Instead, we opted to use sound human factors methods to do simulated usability testing. Having now demonstrated that the new eCDS tool is usable and acceptable using valid human factors techniques, we have IRB approval to pilot test the eCDS tool in the trauma bay for feasibility and effect on actual clinical care, with all members of the trauma team participating.

Because of the workflow-specific nature of this usability evaluation, the eCDS tool end product is specific to the institution and clinical area where it was studied. It cannot and should not be transplanted into a different location, but rather the whole human factors engineering approach outlined should be undertaken to design a custom-fit eCDS tool. Couched within some of the popular conceptual frameworks of implementation science, our usability approach considers the inner setting (culture) and individual characteristics when planning an implementation, and adheres to the recommendation that implementation approaches should be “multifaceted, multilevel implementation plans tailored to local contexts.”49,50

The usability evaluation approach operates under a risk of bias. In particular, the heuristic evaluation and usability evaluation steps involve person-to-person communication with the study investigators. This interaction may result in a bias to perform evaluations that favor the eCDS tool. A prior systematic review did show that those eCDS tools implemented where they were created are often found to be more successful.52 Rather than consider those studies to be biased, it could be argued that those studies used better implementation methods. This highlights the tension between mitigating bias in the execution of an empirical scientific approach and pursuing an evidence-based mixed-methods implementation approach. Creating blinding to mitigate bias would limit the depth and richness of these key improvement steps, where exchanges of ideas allowed for substantive iterative changes to the eCDS tool.

Our study design did not allow direct comparison of the relative merits of the existing CPOE tool and the new eCDS tool. The CPOE tool barriers in Phase 0 were elicited as a general question to providers anonymously, and the eCDS tool barriers in Phase 3 were evaluated on an expert–video pair case-by-case basis. It is therefore not possible to compare the proportions of reported barriers. Our formative assessment was focused on creating a refined eCDS tool, and the future summative evaluation with testing in the live clinical environment would provide better comparison measures. A planned follow-up anonymous survey of eCDS tool users would allow a more direct comparison with the CPOE tool, in addition to other outcome metrics to measure effective implementation.53

CONCLUSIONS

This study shows the utility of human factors engineering in the design of an electronic clinical decision support tool. The iteratively refined electronic clinical decision support tool is now ready for live clinical feasibility and acceptability testing. The goal of this work is to incorporate the electronic clinical decision support tool into the process of care to display real-time guidance to medical providers for head CT imaging in children with head injuries during trauma resuscitations. Effective implementation of an electronic clinical decision support tool may reduce the number of unnecessary CT requests for children at very low risk for traumatic brain injury. This methodologic approach could be applied to improve the implementation of electronic clinical decision support tools for other medical conditions evaluated in the ED.

Acknowledgments

This project was supported by the National Institutes of Health Clinical and Translational Science Award (CTSA) program, grants UL1TR000075 and KL2TR000076. Dr. Yadav, an associate editor for this journal, had no role in the peer-review process or publication decision for this paper.

The authors acknowledge Dr. Elizabeth Carter who provided statistical assistance in support of the study and our clinical experts, Drs. Oluwakemi Badaki, Paul Mullan, Karen O’Connell, Kathleen Brown, and Shireen Atabaki.

Footnotes

Presented at The Human Factors and Ergonomics Society International Symposium on Human Factors and Ergonomics in Health Care, Chicago, IL, March 2014; and the Society for Academic Emergency Medicine Annual Meeting, Dallas, TX, May 2014.

The authors have no potential conflicts to disclose.

Supporting Information

The following supporting information is available in the online version of this paper:

Data Supplement S1. Heuristics evaluation and screenshots of the original eCDS tool.

References

- 1.Faul M, Xu L, Wald MM, Coronado VG. Traumatic Brain Injury in the United States: Emergency Department Visits, Hospitalizations and Deaths 2002–2006. Atlanta, GA: Centers for Disease Control and Prevention, National Center for Injury Prevention and Control; 2010. [Google Scholar]

- 2.Kuppermann N, Holmes JF, Dayan PS, et al. Identification of children at very low risk of clinically-important brain injuries after head trauma: a prospective cohort study. Lancet. 2009;374:1160–1170. doi: 10.1016/S0140-6736(09)61558-0. [DOI] [PubMed] [Google Scholar]

- 3.Brenner DJ. Estimating cancer risks from pediatric CT: going from the qualitative to the quantitative. Pediatr Radiol. 2002;32:228–223. doi: 10.1007/s00247-002-0671-1. [DOI] [PubMed] [Google Scholar]

- 4.Brenner DJ, Hall EJ. Computed tomography–an increasing source of radiation exposure. N Engl J Med. 2007;357:2277–2284. doi: 10.1056/NEJMra072149. [DOI] [PubMed] [Google Scholar]

- 5.Centor RM, Witherspoon JM, Dalton HP, Brody CE, Link K. The diagnosis of strep throat in adults in the emergency room. Med Decis Making. 1981;1:239–246. doi: 10.1177/0272989X8100100304. [DOI] [PubMed] [Google Scholar]

- 6.Stiell IG, Lesiuk H, Wells GA, et al. The Canadian CT Head Rule study for patients with minor head injury: rationale, objectives, and methodology for phase I (derivation) Ann Emerg Med. 2001;38:160–169. doi: 10.1067/mem.2001.116796. [DOI] [PubMed] [Google Scholar]

- 7. Wells PS, Anderson DR, Rodger M, et al. Excluding pulmonary embolism at the bedside without diagnostic imaging: management of patients with suspected pulmonary embolism presenting to the emergency department by using a simple clinical model and d-dimer. Ann Intern Med. 2001;135:98–107. doi: 10.7326/0003-4819-135-2-200107170-00010.

- 8. Carpenter CR, Keim SM, Seupaul RA, Pines JM. Best Evidence in Emergency Medicine Investigator Group. Differentiating low-risk and no-risk PE patients: the PERC Score. J Emerg Med. 2009;36:317–322. doi: 10.1016/j.jemermed.2008.06.017.

- 9. Institute of Medicine. Committee on Quality of Health Care in America. Crossing the Quality Chasm. Washington DC: National Academies Press; 2001.

- 10. Lang E, Wyer P, Haynes RB. Knowledge translation: closing the evidence-to-practice gap. Ann Emerg Med. 2007;49:355–363. doi: 10.1016/j.annemergmed.2006.08.022.

- 11. Gaddis GM, Greenwald P, Huckson S. Toward improved implementation of evidence-based clinical algorithms: clinical practice guidelines, clinical decision rules, and clinical pathways. Acad Emerg Med. 2007;14:1015–1022. doi: 10.1197/j.aem.2007.07.010.

- 12. Eichner J, Das M. Challenges and barriers to clinical decision support (CDS) design and implementation experienced in the Agency for Healthcare Research and Quality CDS demonstrations. Washington, DC: Agency for Healthcare Research and Quality, US Department of Health and Human Services Publication; 2010.

- 13. Handler JA, Feied CF, Coonan K, Vozenilek J, Gillam M, Smith MS. Computerized physician order entry and online decision support. Acad Emerg Med. 2004;11:1135–1141. doi: 10.1197/j.aem.2004.08.007.

- 14. Holroyd BR, Bullard MJ, Graham TA, Rowe BH. Decision support technology in knowledge translation. Acad Emerg Med. 2007;14:942–948. doi: 10.1197/j.aem.2007.06.023.

- 15. Karsh BT. Clinical Practice Improvement and Redesign: How Change in Workflow Can Be Supported by Clinical Decision Support. Washington, DC: Agency for Healthcare Research and Quality, US Department of Health and Human Services; 2009.

- 16. Bright TJ, Wong A, Dhurjati R, et al. Effect of clinical decision-support systems: a systematic review. Ann Intern Med. 2012;157:29–43. doi: 10.7326/0003-4819-157-1-201207030-00450.

- 17. Wears RL, Perry SJ, Shapiro M, Beach C, Croskerry P, Behara R. A comparison of manual and electronic status boards in the emergency department: what’s gained and what’s lost? Proc Human Factors Ergonom Soc Ann Mtng. 2003;47:1415–1419.

- 18. Gaikwad R, Sketris I, Shepherd M, Duffy J. Evaluation of accuracy of drug interaction alerts triggered by two electronic medical record systems in primary healthcare. Health Informat J. 2007;13:163–177. doi: 10.1177/1460458207079836.

- 19. Koppel R, Wetterneck T, Telles JL, Karsh BT. Workarounds to barcode medication administration systems: their occurrences, causes, and threats to patient safety. J Am Med Inform Assoc. 2008;15:408–423. doi: 10.1197/jamia.M2616.

- 20. Bisantz AM, Wears RL. Forcing functions: the need for restraint. Ann Emerg Med. 2009;53:477–479. doi: 10.1016/j.annemergmed.2008.07.019.

- 21. Atabaki S, Chamberlain J, Shahzeidi S, et al. Reduction of cranial computed tomography for pediatric blunt head trauma through implementation of computerized provider order entry order sets. Annual Meeting of the International Brain Injury Association; March 21–25, 2012; Edinburgh, Scotland.

- 22. Hix D, Hartson HR. Developing User Interfaces: Ensuring Usability Through Product & Process. Hoboken, NJ: Wiley; 1993.

- 23. Kawamoto K. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ. 2005;330:765. doi: 10.1136/bmj.38398.500764.8F.

- 24. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)–A metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42:377–381. doi: 10.1016/j.jbi.2008.08.010.

- 25. Salmon P, Jenkins D, Stanton N, Walker G. Hierarchical task analysis vs. cognitive work analysis: comparison of theory, methodology and contribution to system design. Theoret Issues Ergonom Sci. 2010;11:504–531.

- 26. Nielsen J. Usability Engineering. Boston, MA: Academic Press; 1993.

- 27. Virzi RA. Refining the test phase of usability evaluation: how many subjects is enough? Hum Factors. 1992;34:457–468.

- 28. Li AC, Kannry JL, Kushniruk A, et al. Integrating usability testing and think-aloud protocol analysis with “near-live” clinical simulations in evaluating clinical decision support. Int J Med Informat. 2012;81:761–772. doi: 10.1016/j.ijmedinf.2012.02.009.

- 29. Dahlbäck N, Jönsson A, Ahrenberg L. Wizard of Oz studies–why and how. Knowledge Based Sys. 1993;6:258–266.

- 30. Agency for Healthcare Research and Quality. Workflow Assessment for Health IT Toolkit Evaluation. Federal Register. 2012. Available from: http://healthit.ahrq.gov/health-it-tools-and-resources/workflow-assessment-health-it-toolkit. Accessed Jun 27, 2015.

- 31. McQueen L, Mittman BS, Demakis JG. Overview of the Veterans Health Administration (VHA) Quality Enhancement Research Initiative (QUERI). J Am Med Inform Assoc. 2004;11:339–343. doi: 10.1197/jamia.M1499.

- 32. Winters BD, Gurses AP, Lehmann H, Sexton JB, Rampersad CJ, Pronovost PJ. Clinical review: checklists–translating evidence into practice. Crit Care. 2009;13:210. doi: 10.1186/cc7792.

- 33. Baxter GD, Monk AF, Tan K, Dear PR, Newell SJ. Using cognitive task analysis to facilitate the integration of decision support systems into the neonatal intensive care unit. Artif Intell Med. 2005;35:243–257. doi: 10.1016/j.artmed.2005.01.004.

- 34. Malhotra S, Jordan D, Shortliffe E, Patel VL. Workflow modeling in critical care: piecing together your own puzzle. J Biomed Inform. 2007;40:81–92. doi: 10.1016/j.jbi.2006.06.002.

- 35. Effken JA, Loeb RG, Johnson K, Johnson SC, Reyna VF. Using cognitive work analysis to design clinical displays. Stud Health Technol Inform. 2001;84:127–131.

- 36. Carter EA, Waterhouse LJ, Kovler ML, Fritzeen J, Burd RS. Adherence to ATLS primary and secondary surveys during pediatric trauma resuscitation. Resuscitation. 2013;84:66–71. doi: 10.1016/j.resuscitation.2011.10.032.

- 37. Ash JS, Berg M, Coiera E. Some unintended consequences of information technology in health care: the nature of patient care information system-related errors. J Am Med Inform Assoc. 2004;11:104–112. doi: 10.1197/jamia.M1471.

- 38. Wears RL, Berg M. Computer technology and clinical work: still waiting for Godot. JAMA. 2005;293:1261–1263. doi: 10.1001/jama.293.10.1261.

- 39. Wears RL. Health information technology and victory. Ann Emerg Med. 2015;65:143–145. doi: 10.1016/j.annemergmed.2014.08.024.

- 40. Gardner RM, Overhage JM, Steen EB, et al. Core content for the subspecialty of clinical informatics. J Am Med Inform Assoc. 2009;16:153–157. doi: 10.1197/jamia.M3045.

- 41. Bernstein SL, Stoney CM, Rothman RE. Dissemination and implementation research in emergency medicine. Acad Emerg Med. 2015;22:229–236. doi: 10.1111/acem.12588.

- 42. Cabana MD, Rand CS, Powe NR, et al. Why don’t physicians follow clinical practice guidelines? JAMA. 1999;282:1458–1465. doi: 10.1001/jama.282.15.1458.

- 43. Grimshaw JM, Thomas RE, MacLennan G, et al. Effectiveness and efficiency of guideline dissemination and implementation strategies. Health Technol Assess. 2004;8:iii–iv, 1–72. doi: 10.3310/hta8060.

- 44. Scholes J. Commentary: Cabana M, et al. (1999) Why don’t physicians follow clinical practice guidelines? A framework for improvement. Nurs Crit Care. 2007;12:211–212.

- 45. Stiell IG, Bennett C. Implementation of clinical decision rules in the emergency department. Acad Emerg Med. 2007;14:955–959. doi: 10.1197/j.aem.2007.06.039.

- 46. Stiell IG, Clement CM, Grimshaw J, et al. Implementation of the Canadian C-Spine Rule: prospective 12 centre cluster randomised trial. BMJ. 2009;339:b4146. doi: 10.1136/bmj.b4146.

- 47. Bessen T, Clark R, Shakib S, Hughes G. A multifaceted strategy for implementation of the Ottawa ankle rules in two emergency departments. BMJ. 2009;339:b3056. doi: 10.1136/bmj.b3056.

- 48. Shojania KG, Jennings A, Mayhew A, Ramsay C, Eccles M, Grimshaw J. Effect of point-of-care computer reminders on physician behaviour: a systematic review. Can Med Assoc J. 2010;182:E216–E225. doi: 10.1503/cmaj.090578.

- 49. Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4:50. doi: 10.1186/1748-5908-4-50.

- 50. Powell BJ, McMillen JC, Proctor EK, et al. A compilation of strategies for implementing clinical innovations in health and mental health. Med Care Res Rev. 2012;69:123–157. doi: 10.1177/1077558711430690.

- 51. Sheehan B, Nigrovic LE, Dayan PS, et al. Informing the design of clinical decision support services for evaluation of children with minor blunt head trauma in the emergency department: a sociotechnical analysis. J Biomed Inform. 2013;46:905–913. doi: 10.1016/j.jbi.2013.07.005.

- 52. Garg AX. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA. 2005;293:1223–1238. doi: 10.1001/jama.293.10.1223.

- 53. Proctor E, Silmere H, Raghavan R, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health. 2010;38:65–76. doi: 10.1007/s10488-010-0319-7.
