Abstract

Interest in higher-order tensors has recently surged in data-intensive fields, with a wide range of applications including image processing, blind source separation, community detection, and feature extraction. A common paradigm in tensor-related algorithms advocates unfolding (or flattening) the tensor into a matrix and applying classical methods developed for matrices. Despite the popularity of such techniques, how the functional properties of a tensor change upon unfolding is currently not well understood. In contrast to the body of existing work which has focused almost exclusively on matricizations, we here consider all possible unfoldings of an order-k tensor, which are in one-to-one correspondence with the set of partitions of {1, …, k}. We derive general inequalities between the lp-norms of arbitrary unfoldings defined on the partition lattice. In particular, we demonstrate how the spectral norm (p = 2) of a tensor is bounded by that of its unfoldings, and obtain an improved upper bound on the ratio of the Frobenius norm to the spectral norm of an arbitrary tensor. For specially-structured tensors satisfying a generalized definition of orthogonal decomposability, we prove that the spectral norm remains invariant under specific subsets of unfolding operations.

Key words and phrases: higher-order tensors, general unfoldings, partition lattice, operator norm, orthogonality

1. Introduction

Tensors of order 3 or greater, known as higher-order tensors, have recently attracted increased attention in many fields across science and engineering. Methods built on tensors provide powerful tools to capture complex structures in data that lower-order methods may fail to exploit. Among numerous examples, tensors have been used to detect patterns in time-course data [7, 17, 22, 29] and to model higher-order cumulants [1, 2, 14]. However, tensor-based methods are fraught with challenges. Tensors are not simply matrices with more indices; rather, they are mathematical objects possessing multilinear algebraic properties. Indeed, extending familiar matrix concepts such as norms to tensors is non-trivial [12, 18], and computing these quantities has proven to be NP-hard [4–6].

The spectral relations between a general tensor and its lower-order counterparts have yet to be studied. There are generally two types of approaches underlying many existing tensor-based methods. The first approach flattens the tensor into a matrix and applies matrix-based techniques in downstream analyses, notably higher-order SVD [3, 10] and TensorFace [25]. Flattening is computationally convenient because of the ubiquity of well-established matrix-based methods, as well as the connection between tensor contraction and block matrix multiplication [19]. However, matricization leads to a potential loss of the structure found in the original tensor. This motivates the key question of how much information a flattening retains from its parent tensor.

The second approach either handles the tensor directly or unfolds it into objects of order-3 or higher. Recent work on bounding the spectral norm of sub-Gaussian tensors reveals that solving for the convex relaxation of tensor rank by unfolding is suboptimal [24]. Interestingly, in the context of tensor completion, unfolding a higher-order tensor into a nearly cubic tensor requires smaller sample sizes than matricization [28]. These results are probabilistic in nature and focus only on a particular class of tensors. Assessing the general impact of unfolding operations on an arbitrary tensor and the role of the tensor's intrinsic structure remains challenging.

The primary goal of this paper is to study the effect of unfolding operations on functional properties of tensors, where an unfolding is any lower-order representation of a tensor. We study the operator norm of a tensor viewed as a multilinear functional because this quantity is commonly used in both theory and applications, especially in tensor completion [15,28] and low-rank approximation problems [23, 27]. Given an order-k tensor, we represent each possible unfolding operation using a partition π of [k] = {1, …, k}, where a block in π corresponds to the set of modes that should be combined into a single mode. Each unfolding is a rearrangement of the elements of the original tensor into a tensor of lower order. Here we study the lp operator norms of all possible tensor unfoldings, which together define what we coin a “norm landscape” on the partition lattice. A partial order relation between partitions enables us to find a path between an arbitrary pair of unfoldings and establish our main inequalities relating their operator norms. For specially-structured tensors satisfying a generalized definition of orthogonal decomposability, we show that the spectral norm (p = 2) remains invariant under unfolding operations corresponding to a specific subset of partitions. To our knowledge, our results represent the first attempt to provide a full picture of the norm landscape over all possible tensor unfoldings.

The remainder of this paper is organized as follows. In Section 2, we introduce some notation, and relate the spectral norm and the general lp-norm of a tensor. We then describe in Section 3 general tensor unfoldings defined on the partition lattice. In Section 4, we present our main results on the inequalities between the operator norms of any two tensor unfoldings and describe how the norm landscape changes over the partition lattice. In Section 5, we generalize the notion of orthogonal decomposable tensors and prove that the spectral norm is invariant within a specific set of tensor unfoldings. We conclude in Section 6 with discussions about our findings and avenues of future work.

2. Higher-order tensors and their operator norms

An order-k tensor 𝒜 = 〚ai1 … ik〛 ∈ 𝔽d1×⋯×dk over a field 𝔽 is a hypermatrix with dimensions (d1, …, dk) and entries ai1…ik ∈ 𝔽, for 1 ≤ in ≤ dn, n = 1, …, k. In this paper, we focus on real tensors, 𝔽 = ℝ. The total dimension of 𝒜 is denoted by $\dim(\mathcal{A}) = d_1 d_2 \cdots d_k$.

The vectorization of 𝒜, denoted Vec(𝒜), is defined as the operation rearranging all elements of 𝒜 into a column vector. For ease of notation, we use the shorthand [n] to denote the n-set {1, …, n} for n ∈ ℕ+, and sometimes write when space is limited. We use Sd−1 = {x ∈ ℝd : ‖x‖2 = 1} to denote the (d − 1)-dimensional unit sphere, and Id to denote the d × d identity matrix.

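For any two tensors 𝒜 = 〚ai1 … ik〛, ℬ = 〚bi1 … ik〛 ∈ ℝd1×⋯×dk of identical order and dimensions, their inner product is defined as

$$\langle \mathcal{A}, \mathcal{B} \rangle = \sum_{i_1=1}^{d_1} \cdots \sum_{i_k=1}^{d_k} a_{i_1 \ldots i_k}\, b_{i_1 \ldots i_k},$$

while the tensor Frobenius norm of 𝒜 is defined as

$$\|\mathcal{A}\|_F = \langle \mathcal{A}, \mathcal{A} \rangle^{1/2} = \Big( \sum_{i_1, \ldots, i_k} a_{i_1 \ldots i_k}^2 \Big)^{1/2},$$

both of which are analogues of standard definitions for vectors and matrices.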

Following [12], we define the covariant multilinear matrix multiplication of a tensor 𝒜 ∈ ℝd1×⋯×dk by matrices $M_n = [\![ m^{(n)}_{ij} ]\!] \in \mathbb{R}^{d_n \times s_n}$, n ∈ [k], as

$$\mathcal{A}(M_1, \ldots, M_k) = \Big[\!\Big[ \sum_{j_1=1}^{d_1} \cdots \sum_{j_k=1}^{d_k} a_{j_1 \ldots j_k}\, m^{(1)}_{j_1 i_1} \cdots m^{(k)}_{j_k i_k} \Big]\!\Big],$$

which results in an order-k tensor in ℝs1×⋯×sk. This operation multiplies the nth mode of 𝒜 by the matrix Mn for all n ∈ [k]. Just as a matrix may be multiplied in up to two modes by matrices of consistent dimensions, an order-k tensor can be multiplied by up to k matrices in k modes. In the case of k = 2, 𝒜 is a matrix and $\mathcal{A}(M_1, M_2) = M_1^{\top} \mathcal{A} M_2$. Sometimes we are interested in multiplying by vectors rather than matrices, in which case we obtain the k-multilinear functional 𝒜: ℝd1×⋯× ℝdk → ℝ given by

$$\mathcal{A}(x_1, \ldots, x_k) = \sum_{i_1=1}^{d_1} \cdots \sum_{i_k=1}^{d_k} a_{i_1 \ldots i_k}\, x_{1, i_1} \cdots x_{k, i_k}, \tag{1}$$

where $x_n = (x_{n,1}, \ldots, x_{n,d_n})^{\top} \in \mathbb{R}^{d_n}$, n ∈ [k]. Note that multiplying by a vector in r modes in the manner defined in (1) reduces the order of the output tensor to k − r, whereas multiplying by matrices leaves the order unchanged. Although the coordinate representation of a tensor as a hypermatrix provides a concrete description, viewing it instead as a multilinear functional provides a coordinate-free, basis-independent perspective which allows us to better characterize the spectral relations among different tensor unfoldings.
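To make (1) concrete, the following Python/NumPy sketch evaluates 𝒜(x1, …, xk) by contracting one mode at a time, so that each vector lowers the order by one, as noted above. The function name `multilinear_form` is an illustrative choice of ours, not part of any library.

```python
import numpy as np

def multilinear_form(A, vectors):
    """Evaluate A(x_1, ..., x_k) = sum over (i_1, ..., i_k) of a_{i_1...i_k} x_1[i_1] ... x_k[i_k]."""
    if A.ndim != len(vectors):
        raise ValueError("need exactly one vector per mode")
    result = A
    for x in vectors:
        # Contract the current leading mode with x; the order of `result` drops by one.
        result = np.tensordot(x, result, axes=([0], [0]))
    return float(result)  # a 0-d array remains after k contractions

rng = np.random.default_rng(0)
A = rng.standard_normal((2, 3, 4))
xs = [rng.standard_normal(d) for d in A.shape]
value = multilinear_form(A, xs)
```

For k = 2 the same function returns the bilinear form x1ᵀ A x2.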

We define the operator norm, or induced norm, of a tensor 𝒜 using the associated k-multilinear functional (1).

Definition 2.1 (Lim [12])

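Let 𝒜 ∈ ℝd1×⋯×dk be an order-k tensor. For any 1 ≤ p ≤ ∞, the lp-norm of the multilinear functional associated with 𝒜 is defined as

$$\|\mathcal{A}\|_p = \sup_{x_n \in \mathbb{R}^{d_n} \setminus \{0\},\ n \in [k]} \frac{\mathcal{A}(x_1, \ldots, x_k)}{\|x_1\|_p \cdots \|x_k\|_p}, \tag{2}$$

where ‖xn‖p denotes the vector lp-norm of xn.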

Remark 2.2

The special case of p = 2 is called the spectral norm, frequently denoted ‖𝒜‖σ. By (2), ‖𝒜‖σ is the maximum value obtained as the inner product of the tensor 𝒜 with a rank-1 tensor, x1 ⊗ ⋯ ⊗ xk, of Frobenius norm 1 and of the same dimensions. This point of view provides an equivalent definition of ‖𝒜‖σ in terms of the best rank-1 tensor approximation to 𝒜, and we note that the rank-1 constraint becomes weaker as more unfolding is applied. See Section 4 for further details.

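Because we restrict all entries of 𝒜 and of x1, …, xk to be real, we need not take the absolute value of 𝒜(x1, …, xk) as in [12]: replacing x1 by −x1 flips the sign of 𝒜(x1, …, xk), so the supremum in (2) is always nonnegative. It is worth mentioning that the tensor lp-norms defined by (2) are not extensions of the classical matrix lp-norms when p ≠ 2. To see this, recall that for an m × n matrix A, one usually defines the lp operator norm as

$$\|A\|_p = \sup_{x \in \mathbb{R}^n \setminus \{0\}} \frac{\|Ax\|_p}{\|x\|_p}. \tag{3}$$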

In general, (2) and (3) do not agree even for matrices, as the following example illustrates:

Example 2.3

Let A = 〚aij〛 be the 2 × 2 matrix and consider p = 1. Solving (3), we have

However, instead using (2) gives

which is neither the classical matrix l1-norm (equal to 5), nor the entry-wise l1-norm (equal to ∑i,j |aij | = 6).
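A small numerical check makes the gap between (2) and (3) tangible. The Python/NumPy sketch below uses a hypothetical matrix (not the one in Example 2.3) and computes three quantities: the classical norm (3) with p = 1 via `np.linalg.norm(A, 1)` (the maximum absolute column sum), the entry-wise l1-norm, and the bilinear norm (2) with p = 1, evaluated by brute force over the vertices ±ei of the l1 unit ball. The helper names are ours.

```python
import numpy as np

def signed_basis(d):
    # Vertices of the l1 unit ball in R^d: the vectors +e_i and -e_i.
    eye = np.eye(d)
    return [s * eye[i] for i in range(d) for s in (1.0, -1.0)]

def bilinear_l1_norm(A):
    # Definition (2) with p = 1: sup of x^T A y / (||x||_1 ||y||_1). The form is linear in
    # each argument separately, so the sup is attained at vertices of the l1 balls.
    m, n = A.shape
    return max(float(x @ A @ y) for x in signed_basis(m) for y in signed_basis(n))

A = np.array([[1.0, -2.0],
              [3.0, 4.0]])               # hypothetical example matrix
classical = np.linalg.norm(A, 1)        # (3) with p = 1: maximum absolute column sum
entrywise = np.abs(A).sum()             # entry-wise l1-norm
bilinear = bilinear_l1_norm(A)          # (2) with p = 1
```

For this matrix the three values are 6, 10 and 4. More generally, the vertex argument shows that (2) with p = 1 equals the largest absolute entry of any matrix.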

Throughout this paper, we adopt Definition 2.1 and always use ‖·‖p to denote the lp-norm defined therein, even for matrices. In fact, (3) defines an operator norm by viewing the matrix as a linear operator from ℝd2 to ℝd1, whereas (2) defines an operator norm in which the matrix defines a bilinear functional from ℝd1 × ℝd2 to ℝ. These two definitions are equivalent when p = 2, but otherwise represent two different operators and result in two distinct operator norms. To be consistent with our treatment of tensors as k-multilinear functionals, we formulate matrices as bilinear functionals.

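For a given tensor, its lp-norm and lq-norm mutually control each other, and the comparison bound is polynomial in the total dimension of the tensor, $\dim(\mathcal{A}) = \prod_{n \in [k]} d_n$.

Proposition 2.4 (lp-norm vs. lq-norm)

Let 𝒜 ∈ ℝd1× ⋯ ×dk be an order-k tensor. Suppose ‖·‖p and ‖·‖q are two norms defined in (2) with q ≥ p ≥ 1. Then,

$$\dim(\mathcal{A})^{1/q - 1/p}\, \|\mathcal{A}\|_q \le \|\mathcal{A}\|_p \le \|\mathcal{A}\|_q.$$

Proof

Starting from Definition 2.1, we have

$$\|\mathcal{A}\|_p = \sup_{x_n \ne 0} \frac{\mathcal{A}(x_1, \ldots, x_k)}{\prod_{n \in [k]} \|x_n\|_p} = \sup_{x_n \ne 0} \left( \frac{\mathcal{A}(x_1, \ldots, x_k)}{\prod_{n \in [k]} \|x_n\|_q} \cdot \frac{\prod_{n \in [k]} \|x_n\|_q}{\prod_{n \in [k]} \|x_n\|_p} \right). \tag{4}$$

For any q ≥ p ≥ 1, the equivalence of vector norms tells us

$$\|x\|_q \le \|x\|_p \le d^{1/p - 1/q}\, \|x\|_q \quad \text{for all } x \in \mathbb{R}^d. \tag{5}$$

Applying (5) to xn, for n ∈ [k], gives

$$\Big( \prod_{n \in [k]} d_n \Big)^{1/q - 1/p} \le \frac{\prod_{n \in [k]} \|x_n\|_q}{\prod_{n \in [k]} \|x_n\|_p} \le 1. \tag{6}$$

Inserting (6) into (4) and noting $\prod_{n \in [k]} d_n = \dim(\mathcal{A})$, we find

$$\dim(\mathcal{A})^{1/q - 1/p}\, \|\mathcal{A}\|_q \le \|\mathcal{A}\|_p \le \|\mathcal{A}\|_q,$$

which completes the proof.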
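As a sanity check of Proposition 2.4, take p = 1 and q = ∞, for which it predicts $\dim(\mathcal{A})^{-1}\|\mathcal{A}\|_\infty \le \|\mathcal{A}\|_1 \le \|\mathcal{A}\|_\infty$. For a small matrix both sides of (2) can be computed exactly: the p = 1 case is the largest absolute entry, and the p = ∞ case is a maximum over sign vectors, because a bilinear form attains its maximum over the cube at its vertices. This brute-force Python sketch is only practical for tiny dimensions, and its function names are illustrative.

```python
import itertools
import numpy as np

def sign_vectors(d):
    # Vertices of the l-infinity unit ball in R^d: all vectors with entries +1 or -1.
    return [np.array(s) for s in itertools.product((1.0, -1.0), repeat=d)]

def bilinear_l1_norm(A):
    # Definition (2) with p = 1: attained at signed basis vectors, i.e. max |a_ij|.
    return float(np.abs(A).max())

def bilinear_linf_norm(A):
    # Definition (2) with p = inf: brute force over the 2^m * 2^n vertex pairs.
    m, n = A.shape
    return max(float(x @ A @ y) for x in sign_vectors(m) for y in sign_vectors(n))

rng = np.random.default_rng(0)
A = rng.standard_normal((3, 4))
total_dim = A.size                      # dim(A) = d_1 * d_2 = 12
l1, linf = bilinear_l1_norm(A), bilinear_linf_norm(A)
```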

3. Partitions and general tensor unfoldings

Any higher-order tensor can be transformed into different lower-order tensors by modifying its indices in various ways. The most common transformations are n-mode flattenings, or matricizations, which rearrange the elements of an order-k tensor into a $d_n \times \prod_{i \ne n} d_i$ matrix. Specifically, the n-mode matricization of a tensor 𝒜 ∈ ℝd1×⋯×dk is obtained by mapping the tensor index (i1, …, ik) to the matrix index (in, m), where

$$m = 1 + \sum_{\substack{j \in [k] \\ j \ne n}} (i_j - 1) \prod_{\substack{l < j \\ l \ne n}} d_l. \tag{7}$$

Recently there has been much interest in studying the relationship between tensors and their matrix flattenings [9]. We present here a more general analysis by considering all possible lower-order tensor unfoldings rather than just matricizations. Using the blocks of a partition of [k] to specify which modes are combined into a single mode of the new tensor, we establish a one-to-one correspondence between the set of all partitions of [k] and the set of lower-order tensor unfoldings. The partition lattice then describes the underlying relationship between possible tensor unfoldings of a tensor 𝒜.

For any k ∈ ℕ+, a partition π of [k] is a collection of disjoint, nonempty subsets (or blocks) B1, …, Bℓ ⊆ [k] satisfying $\bigcup_{i=1}^{\ell} B_i = [k]$. The set of all partitions of [k] is denoted 𝒫[k]. We use |π| to denote the number of blocks in π, and |B| to denote the number of elements in a block B ∈ π. We say that a partition π is a level-ℓ partition if |π| = ℓ. The set of all level-ℓ partitions of [k] contains S(k, ℓ) elements, where S(k, ℓ) is the Stirling number of the second kind.
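The level counts are easy to check by brute force for small k. This Python sketch (helper names are illustrative) enumerates all partitions of [k] recursively, placing the first element either into one block of a partition of the remaining elements or into a new singleton block, and compares the tally per level with the standard recurrence S(k, ℓ) = ℓ S(k − 1, ℓ) + S(k − 1, ℓ − 1).

```python
from collections import Counter

def set_partitions(elements):
    """Yield every partition of the list `elements`, each as a list of blocks (lists)."""
    if not elements:
        yield []
        return
    first, rest = elements[0], elements[1:]
    for partition in set_partitions(rest):
        # Either add `first` to one of the existing blocks ...
        for i in range(len(partition)):
            yield partition[:i] + [[first] + partition[i]] + partition[i + 1:]
        # ... or put it in a new singleton block.
        yield [[first]] + partition

def stirling2(k, l):
    """Stirling number of the second kind via S(k, l) = l*S(k-1, l) + S(k-1, l-1)."""
    if k == l:
        return 1
    if l == 0 or l > k:
        return 0
    return l * stirling2(k - 1, l) + stirling2(k - 1, l - 1)

# Number of level-l partitions of [4] for l = 1, ..., 4.
level_counts = Counter(len(p) for p in set_partitions([1, 2, 3, 4]))
```

For k = 4 this gives 1, 7, 6 and 1 partitions at levels 1 to 4, i.e., the 15 nodes of the lattice in Figure 1.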

The following partial order naturally relates partitions satisfying a basic compatibility constraint and the resulting structure plays a key role in our work.

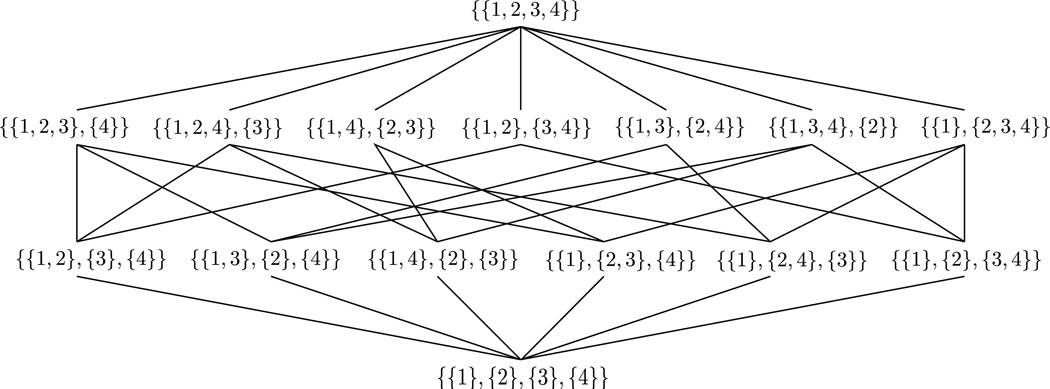

Definition 3.1 (Partition Lattice)

A partition π1 ∈ 𝒫[k] is called a refinement of π2 ∈ 𝒫[k] if each block of π1 is a subset of some block of π2; conversely, π2 is said to be a coarsening of π1. This relationship defines a partial order, expressed as π1 ≤ π2, and we say that π1 is finer than π2 while π2 is coarser than π1. If either π1 ≤ π2 or π2 ≤ π1, then π1 and π2 are comparable. According to this partial order, the least element of 𝒫[k] is 0[k] ≔ {{1}, …, {k}}, while the greatest element is 1[k] ≔ {{1, …, k}}. Equipped with this notion, 𝒫[k] generates a partition lattice by connecting any two comparable partitions that differ by exactly one level. An example is illustrated in Figure 1. Henceforth, 𝒫[k] may represent either the set of all partitions of [k] or the partition lattice it generates depending on context.

Figure 1.

The partition lattice 𝒫[4].

It is clear that many partitions are not comparable, including every pair of distinct partitions at the same level. To relate arbitrary partitions, we use the lattice structure. For any two partitions π1, π2 ∈ 𝒫[k], we define their greatest lower bound (or meet) π1 ∧ π2 as

$$\pi_1 \wedge \pi_2 = \{ B \cap B' : B \in \pi_1,\ B' \in \pi_2,\ B \cap B' \ne \emptyset \}.$$

In words, π1 ∧ π2 consists of all nonempty intersections of blocks in π1 and π2; it is the coarsest partition that refines both π1 and π2, and it is unique for a given pair (π1, π2).

Example 3.2

Figure 1 illustrates 𝒫[4] in lattice form. Recall that an edge connects two partitions if and only if they are comparable and their levels differ by exactly one. Two partitions are comparable if and only if there exists a monotone path between them, that is, a path along which the level changes in only one direction. To illustrate Definition 3.1 and the greatest lower bound, take π1 = {{1, 4}, {2, 3}} and π2 = {{1, 3, 4}, {2}}. Then π1 ∧ π2 = {{1, 4}, {2}, {3}}, 0[4] = {{1}, {2}, {3}, {4}}, and 1[4] = {{1, 2, 3, 4}}.
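The greatest lower bound and the refinement order translate directly into set operations. In the following Python sketch (helper names are ours), partitions are sets of frozensets so that block order is irrelevant; it reproduces the computation in Example 3.2.

```python
def as_partition(blocks):
    """Represent a partition as a set of frozensets, so that block order does not matter."""
    return {frozenset(b) for b in blocks}

def meet(pi1, pi2):
    """Greatest lower bound pi1 ∧ pi2: all nonempty intersections B ∩ B' with B in pi1, B' in pi2."""
    return {B & Bp for B in pi1 for Bp in pi2 if B & Bp}

def refines(pi1, pi2):
    """True if pi1 <= pi2, i.e. every block of pi1 lies inside some block of pi2."""
    return all(any(B <= Bp for Bp in pi2) for B in pi1)

pi1 = as_partition([[1, 4], [2, 3]])
pi2 = as_partition([[1, 3, 4], [2]])
m = meet(pi1, pi2)   # {{1, 4}, {2}, {3}}
```

The result refines both π1 and π2, while π1 and π2 themselves are not comparable.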

The following definition generalizes the concept of n-mode flattenings defined in (7) to general unfoldings induced by arbitrary partitions π ∈ 𝒫[k].

Definition 3.3 (General Tensor Unfolding)

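Let 𝒜 ∈ ℝd1×⋯×dk be an order-k tensor and π = {B1, …, Bℓ} ∈ 𝒫[k]. The partition π defines a mapping $\phi_\pi : [d_1] \times \cdots \times [d_k] \to [D_1] \times \cdots \times [D_\ell]$ such that

$$\phi_\pi(i_1, \ldots, i_k) = (j_1, \ldots, j_\ell), \qquad j_s = 1 + \sum_{n \in B_s} (i_n - 1) \prod_{\substack{m \in B_s \\ m < n}} d_m, \quad s \in [\ell], \tag{8}$$

where

$$D_s = \prod_{n \in B_s} d_n, \quad s \in [\ell].$$

Clearly, ϕπ is a bijection, so its inverse is well defined. Thus ϕπ induces an unfolding action 𝒜 ↦ Unfoldπ(𝒜) such that

$$\operatorname{Unfold}_\pi(\mathcal{A})_{j_1 \ldots j_\ell} = a_{\phi_\pi^{-1}(j_1, \ldots, j_\ell)}$$

for all $(j_1, \ldots, j_\ell) \in [D_1] \times \cdots \times [D_\ell]$ or, equivalently,

$$\operatorname{Unfold}_\pi(\mathcal{A})_{\phi_\pi(i_1, \ldots, i_k)} = a_{i_1 \ldots i_k}$$

for all (i1, …, ik) ∈ [d1]×⋯× [dk]. Thus Unfoldπ(𝒜) is an order-ℓ tensor of dimensions (D1, …, Dℓ), and we call it the tensor unfolding of 𝒜 induced by π.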
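In array libraries, a general unfolding is a transpose followed by a reshape. The NumPy sketch below assumes 0-based mode labels and, within each block, lets the lower-numbered mode vary fastest (`order="F"`); this is one convention among several, and by Remark 3.6 the choice does not matter. The helper name `unfold` is ours.

```python
import numpy as np

def unfold(A, partition):
    """Unfold_pi(A): merge the modes in each block of `partition` (0-based mode labels).

    Within a block, the lower-numbered mode varies fastest (column-major, order="F").
    """
    blocks = [sorted(block) for block in partition]
    order = [n for block in blocks for n in block]
    if sorted(order) != list(range(A.ndim)):
        raise ValueError("the blocks must partition the modes of A")
    new_shape = [int(np.prod([A.shape[n] for n in block])) for block in blocks]
    # Bring the modes of each block next to each other, then merge consecutive modes.
    return np.transpose(A, order).reshape(new_shape, order="F")

A = np.arange(16.0).reshape(2, 2, 2, 2)   # an order-4 tensor in R^{2x2x2x2}
T3 = unfold(A, [[0, 1], [2], [3]])         # pi = {{1,2},{3},{4}}: shape (4, 2, 2)
M44 = unfold(A, [[0, 1], [2, 3]])          # pi = {{1,2},{3,4}}: 4 x 4 matrix
M82 = unfold(A, [[0, 1, 2], [3]])          # pi = {{1,2,3},{4}}: 8 x 2 matrix
```

These are the three unfoldings listed in Example 3.5; one can also confirm numerically that the Frobenius norm and inner products are unchanged, as stated in Remark 3.7.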

Remark 3.4

By the definition of ϕπ in (8), Unfold0[k](𝒜) = 𝒜 and Unfold1[k](𝒜) = Vec(𝒜).

Example 3.5

Consider an order-4 tensor 𝒜 = 〚aijkl〛 ∈ ℝ2×2×2×2. We provide a subset of the possible tensor unfoldings to elucidate both the manner in which the operation works and the natural association with partitions.

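- For π = {{1, 2}, {3}, {4}}, Unfoldπ(𝒜) is an order-3 tensor of dimensions (4, 2, 2) with entries given by

$$\operatorname{Unfold}_\pi(\mathcal{A})_{:,k,l} = (a_{11kl},\ a_{21kl},\ a_{12kl},\ a_{22kl})^{\top}$$

for all (k, l) ∈ [2] × [2].
- For π = {{1, 2}, {3, 4}}, Unfoldπ(𝒜) is a 4 × 4 matrix

$$\operatorname{Unfold}_\pi(\mathcal{A}) = \begin{pmatrix} a_{1111} & a_{1121} & a_{1112} & a_{1122} \\ a_{2111} & a_{2121} & a_{2112} & a_{2122} \\ a_{1211} & a_{1221} & a_{1212} & a_{1222} \\ a_{2211} & a_{2221} & a_{2212} & a_{2222} \end{pmatrix}.$$

- For π = {{1, 2, 3}, {4}}, Unfoldπ(𝒜) is an 8 × 2 matrix

$$\operatorname{Unfold}_\pi(\mathcal{A}) = \begin{pmatrix} a_{1111} & a_{1112} \\ a_{2111} & a_{2112} \\ a_{1211} & a_{1212} \\ a_{2211} & a_{2212} \\ a_{1121} & a_{1122} \\ a_{2121} & a_{2122} \\ a_{1221} & a_{1222} \\ a_{2221} & a_{2222} \end{pmatrix}.$$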

Remark 3.6

Different conventions exist for ordering the elements within each merged mode. The ordering is irrelevant for our purposes, since permuting the indices within a mode leaves every norm considered here unchanged, so we do not spell it out hereafter.

Remark 3.7

The unfolding operation leaves the Frobenius norm unchanged; that is, ‖𝒜‖F = ‖Unfoldπ(𝒜)‖F for all π ∈ 𝒫[k]. More generally, the inner product remains invariant under all unfoldings: 〈𝒜, ℬ〉 = 〈Unfoldπ(𝒜), Unfoldπ(ℬ)〉 for all π ∈ 𝒫[k], where 𝒜, ℬ ∈ ℝd1×⋯×dk are two order-k tensors of the same dimensions.

4. Operator norm inequalities on the partition lattice

In this section, we compare the operator norms of different unfoldings of a tensor, in particular relative to that of the original tensor. We first focus on the spectral norm (p = 2) and then discuss extensions to general lp-norms.

Recall that for an order-k tensor 𝒜 ∈ ℝd1×⋯×dk, its spectral norm is defined as

$$\|\mathcal{A}\|_\sigma = \sup_{x_n \in S^{d_n - 1},\ n \in [k]} \mathcal{A}(x_1, \ldots, x_k). \tag{9}$$

The maximization of the polynomial form 𝒜(x1, …, xk) on the unit sphere is closely related to the best (in the least-squares sense) rank-1 tensor approximation. Specifically, for an order-k tensor 𝒜 ∈ ℝd1×⋯×dk, the problem of determining the spectral norm is equivalent to finding a scalar λ and a rank-1 norm-1 tensor x1 ⊗⋯⊗ xk that minimize the function

$$f(\lambda, x_1, \ldots, x_k) = \|\mathcal{A} - \lambda\, x_1 \otimes \cdots \otimes x_k\|_F^2.$$

The corresponding value of λ is equal to ‖𝒜‖σ. Since x1 ⊗⋯⊗ xk must have the same order and dimensions as 𝒜, the rank-1 condition becomes less strict the more unfolded the tensor. In particular, for the vectorized unfolding, Vec(𝒜), the best rank-1 tensor approximation is simply Vec(𝒜) itself. For unfoldings into a matrix, Mat(𝒜), the best rank-1 approximation is σ1u1v1ᵀ, where σ1 is the largest singular value of Mat(𝒜) and u1, v1 are the corresponding left and right singular vectors. For higher-order unfoldings, no closed form of the best rank-1 approximation is known in general. Nevertheless, as the order of the unfolding increases, the set of rank-1 tensors over which the supremum in (9) is taken becomes more restricted. This observation implies that the spectral norm of a tensor unfolding decreases as the order of the unfolded tensor increases; that is, the spectral norm preserves the partial order on partitions.
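For orders three and higher, a common heuristic for (9) is the alternating rank-1 power iteration (often called the higher-order power method), which maximizes over one vector at a time while holding the others fixed. The NumPy sketch below is our own illustration, not a method from this paper: the value it returns is attained at unit vectors and is therefore only a lower bound on ‖𝒜‖σ, since the iteration can stall at a local maximum. The example compares that lower bound with the spectral norms of the three matricizations, which by the observation above cannot be smaller.

```python
import numpy as np

def contract_all_but(A, xs, n):
    """Contract A with xs[m] in every mode m != n, leaving a vector in R^{d_n}."""
    g = A
    # Go from the last mode down so that lower mode indices stay valid after each contraction.
    for m in reversed(range(A.ndim)):
        if m != n:
            g = np.tensordot(g, xs[m], axes=([m], [0]))
    return g

def power_method_lower_bound(A, sweeps=100, seed=0):
    """Alternating rank-1 power iteration for sup A(x_1, ..., x_k) over unit vectors (heuristic)."""
    rng = np.random.default_rng(seed)
    xs = [rng.standard_normal(d) for d in A.shape]
    xs = [x / np.linalg.norm(x) for x in xs]
    value = 0.0
    for _ in range(sweeps):
        for n in range(A.ndim):
            g = contract_all_but(A, xs, n)
            norm = np.linalg.norm(g)
            if norm > 0:
                xs[n] = g / norm   # best x_n with the other vectors held fixed
            value = float(norm)    # equals A(x_1, ..., x_k) at the current vectors
    return value, xs

# A rank-1 tensor 3 * a ⊗ b ⊗ c with unit a, b, c has spectral norm exactly 3.
a, b, c = (v / np.linalg.norm(v) for v in (np.ones(2), np.arange(1.0, 4.0), np.ones(4)))
rank_one_value, _ = power_method_lower_bound(3.0 * np.einsum("i,j,k->ijk", a, b, c))

R = np.random.default_rng(1).standard_normal((3, 4, 5))
lower, _ = power_method_lower_bound(R)
mat_norms = [np.linalg.norm(np.moveaxis(R, n, 0).reshape(R.shape[n], -1), 2) for n in range(3)]
```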

Proposition 4.1 (Monotonicity)

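For all partitions π1, π2 ∈ 𝒫[k] satisfying π1 ≤ π2,

$$\|\operatorname{Unfold}_{\pi_1}(\mathcal{A})\|_\sigma \le \|\operatorname{Unfold}_{\pi_2}(\mathcal{A})\|_\sigma.$$

In particular, we have the global extrema

$$\|\mathcal{A}\|_\sigma = \min_{\pi \in \mathcal{P}_{[k]}} \|\operatorname{Unfold}_\pi(\mathcal{A})\|_\sigma, \qquad \|\mathcal{A}\|_F = \max_{\pi \in \mathcal{P}_{[k]}} \|\operatorname{Unfold}_\pi(\mathcal{A})\|_\sigma.$$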

Proof

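Suppose π1, π2 ∈ 𝒫[k], and π1 is a one-step refinement of π2. Without loss of generality, assume π2 is obtained by merging two blocks B1, B2 ∈ π1 into a single block B ∈ π2. Let ℬ = Unfoldπ1(𝒜) ∈ ℝd1×⋯×dℓ denote the tensor unfolding induced by π1 = {B1, …, Bℓ}, where here dj denotes the dimension of the jth mode of ℬ. Then, 𝒞 = Unfoldπ2(𝒜) is a (d1 · d2, d3, …, dℓ)-dimensional tensor. By definition of the spectral norm,

$$\begin{aligned} \|\mathcal{B}\|_\sigma &= \sup_{x_j \in S^{d_j - 1},\ j \in [\ell]} \mathcal{B}(x_1, x_2, x_3, \ldots, x_\ell) \\ &= \sup_{x_j \in S^{d_j - 1},\ j \in [\ell]} \mathcal{C}(\operatorname{Vec}(x_1 \otimes x_2), x_3, \ldots, x_\ell) \\ &\le \sup_{y \in S^{d_1 d_2 - 1},\ x_j \in S^{d_j - 1},\ 3 \le j \le \ell} \mathcal{C}(y, x_3, \ldots, x_\ell) \\ &= \|\mathcal{C}\|_\sigma, \end{aligned}$$

where the third line comes from the fact that the set {Vec(x1 ⊗ x2) : (x1, x2) ∈ $S^{d_1-1} \times S^{d_2-1}$} is contained in the unit sphere $S^{d_1 d_2 - 1}$.

In general, if π1 ≤ π2, we can obtain Unfoldπ2(𝒜) from Unfoldπ1(𝒜) by a finite sequence of one-step merges. Applying the above argument to each successive merge gives the desired result.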

The following lemma will play a key role in proving our main results:

Lemma 4.2 (One-Step Inequality)

For 1 < ℓ ≤ k, let ℬ ∈ ℝd1×⋯×dℓ be an order-ℓ tensor unfolding of an order-k tensor 𝒜 induced by the partition {B1, …, Bℓ} ∈ 𝒫[k], and let 𝒞 be an order-(ℓ − 1) tensor unfolding of ℬ induced by merging blocks Bi and Bj for some distinct i, j ∈ [ℓ]. Then,

$$\frac{1}{\sqrt{\min(d_i, d_j)}}\, \|\mathcal{C}\|_\sigma \le \|\mathcal{B}\|_\sigma \le \|\mathcal{C}\|_\sigma. \tag{10}$$

Proof

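The upper bound follows readily from Proposition 4.1. To prove the lower bound, without loss of generality, assume 𝒞 corresponds to the merging of blocks Bℓ−1 and Bℓ so that 𝒞 is a (d1, …, dℓ−2, dℓ−1·dℓ)-dimensional tensor. Note that $S^{d_1-1} \times \cdots \times S^{d_{\ell-2}-1} \times S^{d_{\ell-1} d_\ell - 1}$ is a compact set and the multilinear functional of 𝒞 is continuous, so the supremum (9) is attained in that set for 𝒞. Then, there exists $(x_1^*, \ldots, x_{\ell-2}^*, y^*) \in S^{d_1-1} \times \cdots \times S^{d_{\ell-2}-1} \times S^{d_{\ell-1} d_\ell - 1}$ such that

$$\|\mathcal{C}\|_\sigma = \mathcal{C}(x_1^*, \ldots, x_{\ell-2}^*, y^*). \tag{11}$$

Define $\mathcal{C}^* = \mathcal{C}(x_1^*, \ldots, x_{\ell-2}^*, \cdot) \in \mathbb{R}^{d_{\ell-1} d_\ell}$, the vector obtained by contracting 𝒞 with $x_1^*, \ldots, x_{\ell-2}^*$ in its first ℓ − 2 modes. By the self-duality of the Frobenius norm in $\mathbb{R}^{d_{\ell-1} d_\ell}$ and the optimality of y*, we can rewrite (11) as

$$\|\mathcal{C}\|_\sigma = \sup_{y \in S^{d_{\ell-1} d_\ell - 1}} \langle \mathcal{C}^*, y \rangle = \|\mathcal{C}^*\|_F. \tag{12}$$

On the other hand, if we define $\operatorname{Mat}(\mathcal{C}^*) = \mathcal{B}(x_1^*, \ldots, x_{\ell-2}^*, \cdot, \cdot) \in \mathbb{R}^{d_{\ell-1} \times d_\ell}$, then 𝒞* is simply the vectorization of Mat(𝒞*). Hence, by Remark 3.7, we obtain

$$\|\mathcal{C}^*\|_F = \|\operatorname{Mat}(\mathcal{C}^*)\|_F. \tag{13}$$

Using the definition of Mat(𝒞*), we can write

$$\|\operatorname{Mat}(\mathcal{C}^*)\|_\sigma = \sup_{(x, y) \in S^{d_{\ell-1}-1} \times S^{d_\ell - 1}} \mathcal{B}(x_1^*, \ldots, x_{\ell-2}^*, x, y) \le \|\mathcal{B}\|_\sigma. \tag{14}$$

Recall that Mat(𝒞*) is a matrix of size dℓ−1 × dℓ, so its rank is at most min(dℓ−1, dℓ), meaning [8]

$$\|\operatorname{Mat}(\mathcal{C}^*)\|_F \le \sqrt{\min(d_{\ell-1}, d_\ell)}\, \|\operatorname{Mat}(\mathcal{C}^*)\|_\sigma. \tag{15}$$

Using (14) in conjunction with (15), (13), and (12), we then obtain

$$\|\mathcal{C}\|_\sigma = \|\mathcal{C}^*\|_F = \|\operatorname{Mat}(\mathcal{C}^*)\|_F \le \sqrt{\min(d_{\ell-1}, d_\ell)}\, \|\operatorname{Mat}(\mathcal{C}^*)\|_\sigma \le \sqrt{\min(d_{\ell-1}, d_\ell)}\, \|\mathcal{B}\|_\sigma,$$

which proves the lower bound.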

Remark 4.3

Both bounds in the one-step inequality (10) are sharp. The sharpness of the upper bound will be discussed in Section 5. For the lower bound, consider an order-ℓ tensor unfolding $\mathcal{B} = \sum_{i \in [d_1]} e_{1,i} \otimes e_{2,i} \otimes \mathcal{D}$, where {e1,i : i ∈ [d1]} is the standard orthonormal basis of ℝd1, {e2,i : i ∈ [d1]} is a set of d1 distinct standard basis vectors in ℝd2, and 𝒟 is an arbitrary (d3, …, dℓ)-dimensional tensor. Assume d1 ≤ d2 and consider the two blocks B1 = {1}, B2 = {2}. The unfolding operation specified in Lemma 4.2 with B1 and B2 gives $\mathcal{C} = \big( \sum_{i \in [d_1]} \operatorname{Vec}(e_{1,i} \otimes e_{2,i}) \big) \otimes \mathcal{D}$, which is an order-(ℓ − 1) tensor unfolding obtained by merging the first two modes of ℬ into a single mode. It follows that ‖ℬ‖σ = ‖𝒟‖σ and $\|\mathcal{C}\|_\sigma = \sqrt{d_1}\, \|\mathcal{D}\|_\sigma$, and therefore the lower bound in (10) is attained.

More generally, we can establish inequalities relating the spectral norms of two tensor unfoldings corresponding to two arbitrary partitions π1, π2 ∈ 𝒫[k] that are not necessarily comparable. To do so, we must first introduce the following definition.

Definition 4.4

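Given an order-k tensor 𝒜 ∈ ℝd1×⋯×dk, we define the map dim𝒜 : 𝒫[k] ×𝒫[k] → ℕ+ as

$$\dim_{\mathcal{A}}(\pi_1, \pi_2) = \prod_{B \in \pi_1} \max_{B' \in \pi_2} D_{\mathcal{A}}(B, B'), \qquad D_{\mathcal{A}}(B, B') = \prod_{n \in B \cap B'} d_n,$$

where π1, π2 ∈ 𝒫[k].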

We label this quantity as dim𝒜(·, ·) because it involves a product of a subset of the dimensions of 𝒜, and dim𝒜(π1, π2) ≤ dim(𝒜) with equality only when π1 = π1 ∧ π2. Intuitively, dim𝒜(π1, π2) reflects the overlap between the unfoldings induced by π1 and π1 ∧ π2. Example 4.6 presents a concrete illustration.

Remark 4.5

We set D𝒜(B, B′) = 0 when B ∩ B′ = ∅. This does not affect the multiplication in Definition 4.4 because a block of π1 cannot be disjoint from every block of π2. Note that D𝒜(B, B′) = D𝒜(B′, B), but in general dim𝒜(π1, π2) ≠ dim𝒜(π2, π1).

Example 4.6

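To illustrate the above map, let 𝒜 ∈ ℝd1×d2×d3×d4 and consider the partitions π1 = {{1, 2}, {3, 4}} and π2 = {{1, 2, 3}, {4}}, for which π1∧π2 = {{1, 2}, {3}, {4}}. From Definition 4.4,

$$D_{\mathcal{A}}(\{1,2\}, \{1,2,3\}) = d_1 d_2, \quad D_{\mathcal{A}}(\{1,2\}, \{4\}) = 0, \quad D_{\mathcal{A}}(\{3,4\}, \{1,2,3\}) = d_3, \quad D_{\mathcal{A}}(\{3,4\}, \{4\}) = d_4.$$

Then,

$$\dim_{\mathcal{A}}(\pi_1, \pi_2) = d_1 d_2 \max(d_3, d_4).$$

Exchanging arguments, we find

$$\dim_{\mathcal{A}}(\pi_2, \pi_1) = \max(d_1 d_2, d_3)\, d_4.$$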
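The map dim𝒜 is straightforward to compute. The Python sketch below transcribes it as dim𝒜(π1, π2) = ∏_{B∈π1} max_{B′∈π2} D𝒜(B, B′) with D𝒜(B, B′) = ∏_{n∈B∩B′} dn (set to 0 for disjoint blocks, as in Remark 4.5), evaluates Example 4.6 for hypothetical dimensions (d1, d2, d3, d4) = (2, 3, 7, 5), and forms the two factors √(dim(𝒜)/dim𝒜(π1, π2)) and √(dim𝒜(π2, π1)/dim(𝒜)), assuming these are the factors appearing in Theorem 4.8. Helper names are ours.

```python
from math import prod

def overlap_dim(dims, B, Bp):
    """D_A(B, B'): product of d_n over n in B ∩ B', or 0 if the blocks are disjoint."""
    common = B & Bp
    return prod(dims[n] for n in common) if common else 0

def dim_A(dims, pi1, pi2):
    """dim_A(pi1, pi2): product over B in pi1 of max over B' in pi2 of D_A(B, B')."""
    return prod(max(overlap_dim(dims, B, Bp) for Bp in pi2) for B in pi1)

dims = {1: 2, 2: 3, 3: 7, 4: 5}      # hypothetical mode dimensions d_1, ..., d_4
pi1 = [{1, 2}, {3, 4}]
pi2 = [{1, 2, 3}, {4}]
total = prod(dims.values())           # dim(A) = 210
a12 = dim_A(dims, pi1, pi2)           # d1*d2*max(d3, d4) = 42
a21 = dim_A(dims, pi2, pi1)           # max(d1*d2, d3)*d4 = 35
upper = (total / a12) ** 0.5          # sqrt(dim(A) / dim_A(pi1, pi2))
lower = (a21 / total) ** 0.5          # sqrt(dim_A(pi2, pi1) / dim(A))
```

With equal dimensions d, these ratios reduce to the powers d^{c1} and d^{−c2} that appear in Remark 4.9.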

Remark 4.7

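As in Lemma 4.2, if π2 is a one-step coarsening of π1 obtained by merging two blocks Bi, Bj ∈ π1 of dimensions $d_i = \prod_{n \in B_i} d_n$ and $d_j = \prod_{n \in B_j} d_n$ into a single block Bi ∪ Bj ∈ π2, then

$$\dim_{\mathcal{A}}(\pi_1, \pi_2) = \dim(\mathcal{A}), \qquad \dim_{\mathcal{A}}(\pi_2, \pi_1) = \frac{\dim(\mathcal{A})}{\min(d_i, d_j)}.$$

Hence, Lemma 4.2 can be written as

$$\sqrt{\frac{\dim_{\mathcal{A}}(\pi_2, \pi_1)}{\dim(\mathcal{A})}}\, \|\operatorname{Unfold}_{\pi_2}(\mathcal{A})\|_\sigma \le \|\operatorname{Unfold}_{\pi_1}(\mathcal{A})\|_\sigma \le \sqrt{\frac{\dim(\mathcal{A})}{\dim_{\mathcal{A}}(\pi_1, \pi_2)}}\, \|\operatorname{Unfold}_{\pi_2}(\mathcal{A})\|_\sigma.$$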

Having introduced Definition 4.4, we can now state our main result on how the spectral norms of two arbitrary unfoldings of a tensor are related:

Theorem 4.8 (Spectral norm inequalities)

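Let 𝒜 ∈ ℝd1×⋯×dk be an arbitrary order-k tensor, and π1, π2 any two partitions in 𝒫[k]. Then,

$$\sqrt{\frac{\dim_{\mathcal{A}}(\pi_2, \pi_1)}{\dim(\mathcal{A})}}\, \|\operatorname{Unfold}_{\pi_2}(\mathcal{A})\|_\sigma \le \|\operatorname{Unfold}_{\pi_1}(\mathcal{A})\|_\sigma \le \sqrt{\frac{\dim(\mathcal{A})}{\dim_{\mathcal{A}}(\pi_1, \pi_2)}}\, \|\operatorname{Unfold}_{\pi_2}(\mathcal{A})\|_\sigma.$$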

Remark 4.9

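- (a) Note that π1 and π2 need not be comparable.
- (b) If dn = d for all n ∈ [k], then the result reduces to

$$d^{-c_2/2}\, \|\operatorname{Unfold}_{\pi_2}(\mathcal{A})\|_\sigma \le \|\operatorname{Unfold}_{\pi_1}(\mathcal{A})\|_\sigma \le d^{c_1/2}\, \|\operatorname{Unfold}_{\pi_2}(\mathcal{A})\|_\sigma,$$

where $c_1 = k - \sum_{B \in \pi_1} \max_{B' \in \pi_2} |B \cap B'|$ and $c_2 = k - \sum_{B \in \pi_2} \max_{B' \in \pi_1} |B \cap B'|$. For k = 4, the inequalities in (b) are sharp. For example, consider the tensor 𝒜 = Id ⊗ Id, and partitions π = {{1, 2}, {3, 4}} and π′ = {{1}, {2}, {3}, {4}}, for which we have ‖Unfoldπ(𝒜)‖σ = d and ‖Unfoldπ′(𝒜)‖σ = 1. If π1 = π and π2 = π′, then c1 = 2 and $d^{-c_1/2} \|\operatorname{Unfold}_{\pi_1}(\mathcal{A})\|_\sigma = \|\operatorname{Unfold}_{\pi_2}(\mathcal{A})\|_\sigma$, so the upper bound is attained. On the other hand, if π1 = π′ and π2 = π, then c2 = 2 and $\|\operatorname{Unfold}_{\pi_2}(\mathcal{A})\|_\sigma = d^{c_2/2} \|\operatorname{Unfold}_{\pi_1}(\mathcal{A})\|_\sigma$, so the lower bound is attained. This particular tensor is discussed further in Example 4.15.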

Proof of Theorem 4.8

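The main idea is to apply Lemma 4.2 to some appropriate sequence of partitions connecting π1 and π2 in the partition lattice. To do so, we consider Unfoldπ1∧π2(𝒜) and compare its spectral norm to that of Unfoldπ1(𝒜). Since π1 ∧ π2 ≤ π1, from Proposition 4.1 we have

$$\|\operatorname{Unfold}_{\pi_1 \wedge \pi_2}(\mathcal{A})\|_\sigma \le \|\operatorname{Unfold}_{\pi_1}(\mathcal{A})\|_\sigma. \tag{16}$$

Let π2 = {B1, …, Bℓ1}, and let B1,1, …, B1,ℓ2 denote the blocks of π1 ∧ π2 contained in B1, i.e., the nonempty sets among B1 ∩ B′ for B′ ∈ π1; note that $B_1 = \bigcup_{j \in [\ell_2]} B_{1,j}$. Now, order these blocks such that

$$D_{1,1} \ge D_{1,2} \ge \cdots \ge D_{1,\ell_2}, \qquad D_{1,j} = \prod_{n \in B_{1,j}} d_n, \tag{17}$$

so that $D_{1,1} = \max_{B' \in \pi_1} D_{\mathcal{A}}(B_1, B')$. Consider a sequence (𝒯1, 𝒯2, …, 𝒯ℓ2−1) of unfoldings of 𝒜 where 𝒯j, for 1 ≤ j ≤ ℓ2 − 1, is obtained from the tensor Unfoldπ1∧π2(𝒜) by an unfolding operation corresponding to merging the blocks B1,1, …, B1,j+1 into a single block. Using Lemma 4.2 and (17), we obtain

$$\|\mathcal{T}_1\|_\sigma \le \sqrt{\min(D_{1,1}, D_{1,2})}\, \|\operatorname{Unfold}_{\pi_1 \wedge \pi_2}(\mathcal{A})\|_\sigma = \sqrt{D_{1,2}}\, \|\operatorname{Unfold}_{\pi_1 \wedge \pi_2}(\mathcal{A})\|_\sigma.$$

Similarly for all i ∈ [ℓ2 − 2], since the merged block of 𝒯i has dimension $D_{1,1} \cdots D_{1,i+1} \ge D_{1,i+2}$,

$$\|\mathcal{T}_{i+1}\|_\sigma \le \sqrt{D_{1,i+2}}\, \|\mathcal{T}_i\|_\sigma.$$

Combining these inequalities gives

$$\|\mathcal{T}_{\ell_2 - 1}\|_\sigma \le \Big( \prod_{j=2}^{\ell_2} D_{1,j} \Big)^{1/2} \|\operatorname{Unfold}_{\pi_1 \wedge \pi_2}(\mathcal{A})\|_\sigma = \left( \frac{\prod_{n \in B_1} d_n}{\max_{B' \in \pi_1} D_{\mathcal{A}}(B_1, B')} \right)^{1/2} \|\operatorname{Unfold}_{\pi_1 \wedge \pi_2}(\mathcal{A})\|_\sigma.$$

We can iterate the same line of argument with Bi for i = 2, …, ℓ1 to obtain

$$\|\operatorname{Unfold}_{\pi_2}(\mathcal{A})\|_\sigma \le \prod_{B \in \pi_2} \left( \frac{\prod_{n \in B} d_n}{\max_{B' \in \pi_1} D_{\mathcal{A}}(B, B')} \right)^{1/2} \|\operatorname{Unfold}_{\pi_1 \wedge \pi_2}(\mathcal{A})\|_\sigma = \sqrt{\frac{\dim(\mathcal{A})}{\dim_{\mathcal{A}}(\pi_2, \pi_1)}}\, \|\operatorname{Unfold}_{\pi_1 \wedge \pi_2}(\mathcal{A})\|_\sigma.$$

Together with (16), this last inequality means

$$\|\operatorname{Unfold}_{\pi_2}(\mathcal{A})\|_\sigma \le \sqrt{\frac{\dim(\mathcal{A})}{\dim_{\mathcal{A}}(\pi_2, \pi_1)}}\, \|\operatorname{Unfold}_{\pi_1}(\mathcal{A})\|_\sigma. \tag{18}$$

By symmetry,

$$\|\operatorname{Unfold}_{\pi_1}(\mathcal{A})\|_\sigma \le \sqrt{\frac{\dim(\mathcal{A})}{\dim_{\mathcal{A}}(\pi_1, \pi_2)}}\, \|\operatorname{Unfold}_{\pi_2}(\mathcal{A})\|_\sigma. \tag{19}$$

Finally, combining (18) and (19) completes the proof.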

We may immediately establish several corollaries of Theorem 4.8.

Corollary 4.10

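All order-k tensors 𝒜 ∈ ℝd1×⋯×dk satisfy

$$\sqrt{\frac{\max_{n \in [k]} d_n}{\dim(\mathcal{A})}}\, \|\mathcal{A}\|_F \le \|\mathcal{A}\|_\sigma \le \|\mathcal{A}\|_F.$$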

Proof

Taking π1 = 0[k] and π2 = 1[k] in Theorem 4.8 yields the result.

Corollary 4.10 gives a worst-case upper bound on the ratio of the Frobenius norm to the spectral norm for an arbitrary tensor. This bound is sharper than the one recently found by Friedland and Lim [5, Lemma 5.1], namely $\|\mathcal{A}\|_F \le \dim(\mathcal{A})^{1/2} \|\mathcal{A}\|_\sigma$.
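Taking π1 = 0[k] and π2 = 1[k] in Theorem 4.8 yields ‖𝒜‖F ≤ (dim(𝒜)/max_n dn)^{1/2} ‖𝒜‖σ, which improves on dim(𝒜)^{1/2} by a factor of (max_n dn)^{1/2}. The Python sketch below (an illustrative helper and hypothetical dimensions) computes both factors and checks the matrix case k = 2, where the bound reads ‖A‖F ≤ √min(m, n) ‖A‖σ and is attained by a partial identity matrix.

```python
import numpy as np

def frobenius_to_spectral_factors(dims):
    """Factors c in ||A||_F <= c * ||A||_sigma: (dim(A)/max_n d_n)^{1/2} and dim(A)^{1/2}."""
    total = int(np.prod(dims))
    return (total / max(dims)) ** 0.5, total ** 0.5

new, old = frobenius_to_spectral_factors((3, 5, 4))   # sqrt(60 / 5) = sqrt(12) versus sqrt(60)

# For a matrix (k = 2) the new factor is sqrt(min(m, n)), attained by a partial identity.
E = np.eye(3, 5)
fro, spec = np.linalg.norm(E, "fro"), np.linalg.norm(E, 2)
matrix_factor, _ = frobenius_to_spectral_factors(E.shape)
```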

We now give a set of inequalities comparing the spectral norms of unfoldings at level ℓ to that at either level k or level 1. For ease of exposition, we assume dn = d for all n ∈ [k].

Corollary 4.11 (Bottom-Up Inequality)

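Let 𝒜 ∈ ℝd×⋯×d be an order-k tensor with the same dimension d in all modes. For all levels 1 ≤ ℓ ≤ k and partitions π ∈ 𝒫[k] with |π| = ℓ,

$$\|\mathcal{A}\|_\sigma \le \|\operatorname{Unfold}_\pi(\mathcal{A})\|_\sigma \le d^{(k - \ell)/2}\, \|\mathcal{A}\|_\sigma. \tag{20}$$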

Proof

Take π2 = 0[k] in Theorem 4.8.

Remark 4.12

The existing work most closely related to our own is that of Hu [9], who bounds the nuclear norm of a tensor by that of its matricization. Since the nuclear norm and spectral norm are dual to each other in tensor space, many of our results apply to the nuclear norm as well. In particular, letting ℓ = 2 in the bottom-up inequality (20) reproduces Hu's results.

Corollary 4.13 (Top-Down Inequality)

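Let 𝒜 ∈ ℝd×⋯×d be an order-k tensor with the same dimension d in all modes. For all levels 1 ≤ ℓ ≤ k and partitions π ∈ 𝒫[k] with |π| = ℓ,

$$d^{-(k - \max_{B \in \pi} |B|)/2}\, \|\mathcal{A}\|_F \le \|\operatorname{Unfold}_\pi(\mathcal{A})\|_\sigma \le \|\mathcal{A}\|_F.$$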

Proof

Take π1 = 1[k] in Theorem 4.8.

Corollary 4.14

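Let 𝒜 ∈ ℝd×⋯×d be an order-k tensor with the same dimension d in all modes. For all levels 1 ≤ ℓ ≤ k and all partitions π ∈ 𝒫[k] with |π| = ℓ,

$$d^{-(k - \lceil k/\ell \rceil)/2}\, \|\mathcal{A}\|_F \le \|\operatorname{Unfold}_\pi(\mathcal{A})\|_\sigma \le \|\mathcal{A}\|_F.$$

Proof

Every level-ℓ partition of [k] has a block of size at least ⌈k/ℓ⌉, and this size is attained by the most balanced level-ℓ partitions; hence the minimum of the maximal block sizes across all level-ℓ partitions of [k] is ⌈k/ℓ⌉. Apply Corollary 4.13.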

The above corollaries bound the amount by which norms can vary over a specific level ℓ. They imply that the ratios ‖Unfoldπ(𝒜)‖σ/‖𝒜‖σ and ‖Unfoldπ(𝒜)‖σ/‖𝒜‖F fall in the intervals $[1, d^{(k-\ell)/2}]$ and $[d^{-(k - \lceil k/\ell \rceil)/2}, 1]$, respectively. Therefore, in the worst case, ‖Unfoldπ(𝒜)‖σ recovers ‖𝒜‖σ or ‖𝒜‖F only up to a poly(d) factor. Note that the factor $d^{(k-\ell)/2}$ has an exponent linear in the difference between the orders of the original tensor and its unfolding. This means that the potential deviation between their spectral norms depends only on the difference in their orders rather than on the actual orders themselves, and that the deviation accumulates multiplicatively, with a loss of up to a factor of $\sqrt{d}$ in precision at each level. In contrast, the factor $d^{-(k - \lceil k/\ell \rceil)/2}$ depends on more than just the gap between k and ℓ, with a larger impact for unfoldings whose orders are close to k.

We provide a low-order example that reaches the poly(d) scaling factor in Corollary 4.11.

Example 4.15

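Consider the order-4 tensor 𝒜 = Id ⊗ Id, whose entries are $a_{ijkl} = \delta_{ij} \delta_{kl}$. Straightforward calculation shows that ‖𝒜‖σ = 1. Furthermore, by symmetry, the spectral norm of an unfolding induced by any partition of level 3 or level 2 must fall into one of the following five representative cases:

For π = {{1, 2}, {3}, {4}} (or {{1}, {2}, {3, 4}}), Unfoldπ(𝒜) = Vec(Id) ⊗ Id and ‖Unfoldπ(𝒜)‖σ = √d.

For π = {{1, 3}, {2}, {4}} (or any other level-3 partition merging one mode from {1, 2} with one mode from {3, 4}), ‖Unfoldπ(𝒜)‖σ = 1.

For π = {{1, 2}, {3, 4}}, Unfoldπ(𝒜) = Vec(Id) Vec(Id)ᵀ and ‖Unfoldπ(𝒜)‖σ = d.

For π = {{1, 3}, {2, 4}} (or {{1, 4}, {2, 3}}), Unfoldπ(𝒜) is a d² × d² permutation matrix and ‖Unfoldπ(𝒜)‖σ = 1.

For π = {{1, 2, 3}, {4}} (or any other level-2 partition with a block of size 3), ‖Unfoldπ(𝒜)‖σ = √d.

Therefore

$$\max_{|\pi| = 3} \|\operatorname{Unfold}_\pi(\mathcal{A})\|_\sigma = \sqrt{d} = d^{(4-3)/2} \|\mathcal{A}\|_\sigma, \qquad \max_{|\pi| = 2} \|\operatorname{Unfold}_\pi(\mathcal{A})\|_\sigma = d = d^{(4-2)/2} \|\mathcal{A}\|_\sigma,$$

so the upper bound in the bottom-up inequality (20) is attained for ℓ = 3 and ℓ = 2.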
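The level-2 values in this example are ordinary matrix spectral norms, so they can be confirmed with an SVD. The following NumPy sketch (d = 3 is an arbitrary choice, and `matricize` is an illustrative helper using 0-based modes) builds 𝒜 = Id ⊗ Id and evaluates three representative matricizations; the level-3 values are not computed because they are not matrix norms.

```python
import numpy as np

def matricize(A, row_modes):
    """Level-2 unfolding: modes in `row_modes` index rows, the remaining modes index columns."""
    col_modes = [n for n in range(A.ndim) if n not in row_modes]
    n_rows = int(np.prod([A.shape[n] for n in row_modes]))
    return np.transpose(A, list(row_modes) + col_modes).reshape(n_rows, -1)

d = 3
I = np.eye(d)
A = np.einsum("ij,kl->ijkl", I, I)   # A = I_d ⊗ I_d, entries delta_ij * delta_kl

level2 = {                           # keys use the paper's 1-based blocks
    "{{1,2},{3,4}}": np.linalg.norm(matricize(A, [0, 1]), 2),     # expected d
    "{{1,3},{2,4}}": np.linalg.norm(matricize(A, [0, 2]), 2),     # expected 1
    "{{1,2,3},{4}}": np.linalg.norm(matricize(A, [0, 1, 2]), 2),  # expected sqrt(d)
}
frobenius = np.linalg.norm(A)        # level 1 (vectorization): d
```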

We conclude this section by generalizing Theorem 4.8 to lp-norms.

Theorem 4.16 (lp-norm inequalities)

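Let 𝒜 ∈ ℝd1×⋯×dk be an arbitrary order-k tensor, and π1, π2 any two partitions in 𝒫[k]. Then,

- (a) For any 1 ≤ p ≤ 2,

$$\dim(\mathcal{A})^{\frac{1}{2} - \frac{1}{p}} \sqrt{\frac{\dim_{\mathcal{A}}(\pi_2, \pi_1)}{\dim(\mathcal{A})}}\, \|\operatorname{Unfold}_{\pi_2}(\mathcal{A})\|_p \le \|\operatorname{Unfold}_{\pi_1}(\mathcal{A})\|_p \le \dim(\mathcal{A})^{\frac{1}{p} - \frac{1}{2}} \sqrt{\frac{\dim(\mathcal{A})}{\dim_{\mathcal{A}}(\pi_1, \pi_2)}}\, \|\operatorname{Unfold}_{\pi_2}(\mathcal{A})\|_p.$$

- (b) For any 2 ≤ p ≤ ∞,

$$\dim(\mathcal{A})^{\frac{1}{p} - \frac{1}{2}} \sqrt{\frac{\dim_{\mathcal{A}}(\pi_2, \pi_1)}{\dim(\mathcal{A})}}\, \|\operatorname{Unfold}_{\pi_2}(\mathcal{A})\|_p \le \|\operatorname{Unfold}_{\pi_1}(\mathcal{A})\|_p \le \dim(\mathcal{A})^{\frac{1}{2} - \frac{1}{p}} \sqrt{\frac{\dim(\mathcal{A})}{\dim_{\mathcal{A}}(\pi_1, \pi_2)}}\, \|\operatorname{Unfold}_{\pi_2}(\mathcal{A})\|_p.$$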

Proof

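We only prove (a) since (b) follows similarly. For any given 1 ≤ p ≤ 2, taking q = 2 in Proposition 2.4 implies that the bound between the lp-norm and spectral norm depends only on the total dimension of the tensor, $\dim(\mathcal{A}) = \prod_{n \in [k]} d_n$. Because the total dimension is invariant under any unfolding operation, we have

$$\dim(\mathcal{A})^{\frac{1}{2} - \frac{1}{p}}\, \|\operatorname{Unfold}_\pi(\mathcal{A})\|_\sigma \le \|\operatorname{Unfold}_\pi(\mathcal{A})\|_p \le \|\operatorname{Unfold}_\pi(\mathcal{A})\|_\sigma \tag{21}$$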

for all π ∈ 𝒫[k]. Combining (21) with Theorem 4.8 gives the desired results.

5. Orthogonal decomposability and norm equality on upper cones

We have seen that the unfolding operation may change the spectral norm by up to a poly(d) factor for an arbitrary 𝒜. This is undesirable in many flattening-based algorithms, such as [3, 25]. However, for some specially-structured tensors, the operator norm on the partition lattice may not change much either globally or locally. We demonstrate such a behavior for the following class of tensors:

Definition 5.1 (π-orthogonal decomposable)

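Let 𝒜 ∈ ℝd1×⋯×dk be an order-k tensor and consider any partition π ∈ 𝒫[k]. Then 𝒜 is called π-orthogonal decomposable, or π-OD, over ℝ if it admits the decomposition

$$\mathcal{A} = \sum_{n=1}^{r} \lambda_n\, x_n^{(1)} \otimes x_n^{(2)} \otimes \cdots \otimes x_n^{(k)}, \tag{22}$$

where λ1 ≥ λ2 ≥ ⋯ ≥ λr ≥ 0, and the set of vectors $\{ x_n^{(i)} \in \mathbb{R}^{d_i} : n \in [r],\ i \in [k] \}$ satisfies

$$\Big\langle \bigotimes_{i \in B} x_n^{(i)},\ \bigotimes_{i \in B} x_m^{(i)} \Big\rangle = \begin{cases} 1 & \text{if } n = m, \\ 0 & \text{if } n \ne m, \end{cases}$$

for all B ∈ π and all n, m ∈ [r].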

A concept similar to π-OD, referred to as biorthogonal eigentensor decomposition [28], is introduced in the tensor completion literature when k = 3 and π = {{1}, {2, 3}}. Informally speaking, π-OD imposes an orthogonality constraint on every block of singular vectors.

Remark 5.2 (0[k]-OD)

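When π = 0[k] in Definition 5.1, we obtain the special case of 0[k]-OD tensors, which admit the decomposition (22) while satisfying

$$\langle x_n^{(i)}, x_m^{(i)} \rangle = \begin{cases} 1 & \text{if } n = m, \\ 0 & \text{if } n \ne m, \end{cases}$$

for all i ∈ [k] and all n, m ∈ [r].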

The definition of 0[k]-OD tensors generalizes the definition of orthogonal decomposable tensors presented in [21], as we require neither symmetry nor equality of dimension across modes. In fact, a 0[k]-OD tensor 𝒜 is a diagonalizable tensor [3], meaning that the core tensor output from higher-order SVD is superdiagonal (i.e., entries are zero unless i1 = ⋯ = ik).

Lemma 5.3

Consider an order-k tensor 𝒜 ∈ ℝd1×⋯×dk.

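- (a) Let π1, π2 ∈ 𝒫[k] and π1 ≤ π2. If 𝒜 is π1-OD, then 𝒜 is π2-OD.
- (b) Let π ∈ 𝒫[k] and π ≠ 1[k]. If 𝒜 is π-OD with decomposition (22), then

$$\|\operatorname{Unfold}_\pi(\mathcal{A})\|_\sigma = \lambda_1. \tag{23}$$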

Proof

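Part (a): For any two finite sets of vectors {xi} and {yi} for which xi, yi ∈ ℝdi, we have

$$\Big\langle \bigotimes_{i \in B} x_i,\ \bigotimes_{i \in B} y_i \Big\rangle = \prod_{i \in B} \langle x_i, y_i \rangle \tag{24}$$

for all B ⊆ [k]. Suppose B ∈ π2. If π1 ≤ π2, then there exist blocks C1, …, Ct ∈ π1 such that C1 ∪ ⋯ ∪ Ct = B. So, for all n, m ∈ [r],

$$\Big\langle \bigotimes_{i \in B} x_n^{(i)},\ \bigotimes_{i \in B} x_m^{(i)} \Big\rangle = \prod_{s=1}^{t} \Big\langle \bigotimes_{i \in C_s} x_n^{(i)},\ \bigotimes_{i \in C_s} x_m^{(i)} \Big\rangle = \begin{cases} 1 & \text{if } n = m, \\ 0 & \text{if } n \ne m, \end{cases}$$

which implies that 𝒜 is π2-OD if 𝒜 is π1-OD.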

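Part (b): Write π = {B1, …, Bℓ}. Note that π ≠ 1[k] implies ℓ ≥ 2. Letting τ = {B1, B1ᶜ}, where B1ᶜ denotes the complement of B1 with respect to [k], we have |τ| = 2 and π ≤ τ. By Lemma 5.3(a), 𝒜 is τ-OD, so 𝒜 admits a decomposition of the form

$$\mathcal{A} = \sum_{n=1}^{r} \lambda_n \Big( \bigotimes_{i \in B_1} x_n^{(i)} \Big) \otimes \Big( \bigotimes_{i \in B_1^c} x_n^{(i)} \Big), \tag{25}$$

where

$$\Big\langle \bigotimes_{i \in B_1} x_n^{(i)},\ \bigotimes_{i \in B_1} x_m^{(i)} \Big\rangle = \Big\langle \bigotimes_{i \in B_1^c} x_n^{(i)},\ \bigotimes_{i \in B_1^c} x_m^{(i)} \Big\rangle = \begin{cases} 1 & \text{if } n = m, \\ 0 & \text{if } n \ne m, \end{cases} \tag{26}$$

for all n, m ∈ [r].

Now define $x_n = \operatorname{Vec}\big( \bigotimes_{i \in B_1} x_n^{(i)} \big)$ and $y_n = \operatorname{Vec}\big( \bigotimes_{i \in B_1^c} x_n^{(i)} \big)$ for all n ∈ [r]. By (26), both {xn} and {yn} are sets of orthonormal vectors. By the definition of Unfoldτ(𝒜), (25) implies

$$\operatorname{Unfold}_\tau(\mathcal{A}) = \sum_{n=1}^{r} \lambda_n\, x_n y_n^{\top},$$

which is simply the matrix SVD of Unfoldτ(𝒜). Hence ‖Unfoldτ(𝒜)‖σ = λ1. Using monotonicity (cf. Proposition 4.1), we have

$$\|\operatorname{Unfold}_\pi(\mathcal{A})\|_\sigma \le \|\operatorname{Unfold}_\tau(\mathcal{A})\|_\sigma = \lambda_1. \tag{27}$$

Conversely, by the definition of the spectral norm, we have

$$\begin{aligned} \|\operatorname{Unfold}_\pi(\mathcal{A})\|_\sigma &\ge \operatorname{Unfold}_\pi(\mathcal{A}) \Big( \operatorname{Vec}\big( \textstyle\bigotimes_{i \in B_1} x_1^{(i)} \big), \ldots, \operatorname{Vec}\big( \textstyle\bigotimes_{i \in B_\ell} x_1^{(i)} \big) \Big) \\ &= \sum_{n=1}^{r} \lambda_n \prod_{s=1}^{\ell} \Big\langle \textstyle\bigotimes_{i \in B_s} x_n^{(i)},\ \bigotimes_{i \in B_s} x_1^{(i)} \Big\rangle \\ &= \sum_{n=1}^{r} \lambda_n \prod_{s=1}^{\ell} \prod_{i \in B_s} \langle x_n^{(i)}, x_1^{(i)} \rangle \\ &= \lambda_1, \end{aligned} \tag{28}$$

where the third line comes from (24) and the last line follows from the fact that 𝒜 is π-OD. Combining (27) and (28), we conclude ‖Unfoldπ(𝒜)‖σ = λ1.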

Remark 5.4

The condition π ≠ 1[k] in Lemma 5.3(b) is needed for (23) to hold. For instance, consider the 2 × 2 matrix A = 2e1 ⊗ e1 + e1 ⊗ e2, where {ei : i ∈ [2]} is the canonical basis of ℝ². Then A is 1[2]-OD with λ1 = 2, but ‖A‖σ = √5 > λ1.
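The counterexample is small enough to verify directly. The NumPy sketch below confirms that the two rank-1 terms of A are orthonormal as elements of ℝ^{2×2}, which is the 1[2]-OD condition, while the spectral norm of A is √5 rather than λ1 = 2.

```python
import numpy as np

e1, e2 = np.eye(2)
terms = [np.outer(e1, e1), np.outer(e1, e2)]
A = 2.0 * terms[0] + 1.0 * terms[1]

# 1[2]-OD check: the rank-1 terms have Gram matrix equal to the identity.
gram = np.array([[np.vdot(s, t) for t in terms] for s in terms])
spectral = np.linalg.norm(A, 2)   # sqrt(5), since the row factors e1, e1 are not orthogonal
```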

Theorem 5.5 (Norm equality on upper cones)

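If 𝒜 is π-OD, then for any partition τ in the upper cone Uπ = {τ ∈ 𝒫[k] : π ≤ τ < 1[k]} of π, we have

$$\|\operatorname{Unfold}_\tau(\mathcal{A})\|_\sigma = \|\operatorname{Unfold}_\pi(\mathcal{A})\|_\sigma.$$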

Proof

If Uπ is empty there is nothing to prove; otherwise π ≠ 1[k]. If 𝒜 is π-OD, then by Lemma 5.3(b), we have ‖Unfoldπ(𝒜)‖σ = λ1. Given any τ ∈ Uπ, by Lemma 5.3(a), 𝒜 is also τ-OD. Since τ ≠ 1[k], Lemma 5.3(b) again gives ‖Unfoldτ(𝒜)‖σ = λ1. Therefore, ‖Unfoldτ(𝒜)‖σ = ‖Unfoldπ(𝒜)‖σ.

Theorem 5.5 states that the spectral norm is invariant for π-OD 𝒜 under any unfolding induced by the partitions in the upper cone Uπ of π. This lies in contrast with the poly(d) factor we have seen for unstructured tensors.

Corollary 5.6

If 𝒜 is 0[k]-OD, then for all partitions π ≠ 1[k], we have

$$\|\operatorname{Unfold}_\pi(\mathcal{A})\|_\sigma = \|\mathcal{A}\|_\sigma.$$

Corollary 5.6 implies that for 0[k]-OD tensors, the spectral norm is invariant under all unfolding operations except vectorization. Lastly, since π1, π2 ∈ Uπ1∧π2 whenever π1, π2 ≠ 1[k], we obtain the following corollary:
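Corollary 5.6 can be checked numerically by building a 0[k]-OD tensor from factor matrices with orthonormal columns (obtained here from a reduced QR factorization). In the NumPy sketch below, the dimensions (4, 5, 6) and weights (3, 2, 0.5) are hypothetical; the three single-mode matricizations should all have spectral norm λ1 = 3, whereas the vectorization has norm ‖𝒜‖F = (Σ λn²)^{1/2} = √13.25, which illustrates why vectorization is excluded.

```python
import numpy as np

def random_orthonormal(d, r, rng):
    """A d x r matrix with orthonormal columns (requires r <= d)."""
    q, _ = np.linalg.qr(rng.standard_normal((d, r)))   # reduced QR: q has shape (d, r)
    return q

rng = np.random.default_rng(1)
dims, lambdas = (4, 5, 6), np.array([3.0, 2.0, 0.5])
U = [random_orthonormal(d, len(lambdas), rng) for d in dims]

# A = sum_n lambda_n u_n^(1) ⊗ u_n^(2) ⊗ u_n^(3), with orthonormal factors in every mode (0[3]-OD).
A = np.einsum("n,in,jn,kn->ijk", lambdas, *U)

mat_norms = [
    np.linalg.norm(A.reshape(4, 30), 2),                      # pi = {{1},{2,3}}
    np.linalg.norm(np.moveaxis(A, 1, 0).reshape(5, 24), 2),   # pi = {{2},{1,3}}
    np.linalg.norm(np.moveaxis(A, 2, 0).reshape(6, 20), 2),   # pi = {{3},{1,2}}
]
vec_norm = np.linalg.norm(A)   # pi = 1[3]: the vectorization, i.e. the Frobenius norm
```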

Corollary 5.7

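Let π1, π2 ∈ 𝒫[k] with π1 ≠ 1[k] and π2 ≠ 1[k]. If 𝒜 is (π1 ∧ π2)-OD, then

$$\|\operatorname{Unfold}_{\pi_1}(\mathcal{A})\|_\sigma = \|\operatorname{Unfold}_{\pi_2}(\mathcal{A})\|_\sigma.$$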

6. Discussion

In this paper, we presented a new framework representing all possible tensor unfoldings by the partition lattice and established a set of general inequalities quantifying the impact of tensor unfoldings on the operator norms of the resulting tensors. We showed that the comparison bounds scale polynomially in the dimensions {dn} of the tensor, with powers depending on the corresponding partition and block sizes for any pair of tensor unfoldings being compared. As a direct consequence, we demonstrated how the operator norm of a general tensor is lower and upper bounded by that of its unfoldings.

In general, an unfolding operation may inflate the operator norm by up to a poly(d) factor, as seen in Corollary 4.11. Note that the quantity dim(𝒜) plays a key role in the worst-case inflation factor and is a manifestation of the curse of dimensionality. Specifically, dim(𝒜) can be quite large as the mode dimensions and tensor order increase, with particular sensitivity to the latter. In such settings, our main result seems to bode poorly for flattening-based algorithms; however, we believe that it should be interpreted with caution because our comparison bounds deal with arbitrary tensors rather than those often sought in applications. In fact, π-OD tensors permit much tighter bounds in which some unfoldings, including certain matricizations, leave the operator norm relatively unaffected. In practice, π-OD tensors, or those within a small neighborhood around π-OD tensors, arise widely in statistical and machine learning applications [1, 11, 16, 26].

Additionally, our work enables us to compare different unfoldings at the same level ℓ. Work on problems featuring nuclear-norm regularization has shown that not all n-mode flattenings are equally preferable [13]. Indeed, as illustrated in Example 4.15, the operator norms of different level-2 unfoldings (i.e., matricizations) of the same tensor can differ substantially. Several algorithms have recently been proposed to account for this behavior. For example, Tomioka et al. [23] consider a weighted sum of the norms of all single-mode matricizations. Other techniques include two-mode matricization [26] and square matricization [15, 20], in which the original tensor is reshaped into a matrix by flattening along multiple modes. Our work provides general bounds for evaluating the effectiveness of such schemes. In particular, the results presented here are used in the theoretical analysis of a recently proposed two-mode higher-order SVD algorithm [26].
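Since each unfolding corresponds to a partition of the modes, the level-2 unfoldings compared above can be enumerated mechanically. The sketch below is an illustration under our own conventions: modes are 0-based, and the modes within a block are merged in increasing order by a row-major reshape, which only permutes entries and so does not change any norm. It lists all two-block partitions of the modes of an order-4 tensor, covering both the four single-mode matricizations and the three square matricizations, and reports the spectral norm of each. The names `unfold` and `two_block_partitions` are hypothetical.

```python
import itertools
import numpy as np

def unfold(T, partition):
    """Merge each block of modes in `partition` (tuples of 0-based indices) into one mode."""
    order = [m for block in partition for m in block]
    shape = [int(np.prod([T.shape[m] for m in block])) for block in partition]
    return np.transpose(T, order).reshape(shape)

def two_block_partitions(k):
    """All partitions of {0, ..., k-1} into two nonempty blocks, i.e. all matricizations."""
    parts = []
    for size in range(1, k):
        for first in itertools.combinations(range(k), size):
            if first[0] != 0:  # keep mode 0 in the first block so each split is listed once
                continue
            second = tuple(m for m in range(k) if m not in first)
            parts.append((first, second))
    return parts

rng = np.random.default_rng(1)
T = rng.standard_normal((3, 3, 3, 3))
frob = np.linalg.norm(T.ravel())  # Frobenius norm, identical for every unfolding

# Spectral norm of each matricization; each lies between the tensor spectral norm and frob.
norms = {part: float(np.linalg.norm(unfold(T, part), 2)) for part in two_block_partitions(T.ndim)}
for part, value in norms.items():
    print(part, round(value, 3))
```

The same `unfold` helper also covers the extreme cases: the partition into singletons returns the tensor unchanged, and the single-block partition returns its vectorization.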

We have not attempted to characterize the degree to which operator norm relations on the partition lattice restrict the original tensor. Essentially, this is the converse problem of asking whether π-OD, in addition to being sufficient, is also a necessary condition for Theorem 5.5 and Corollary 5.6. If it is not, it would be useful to determine the extent to which such equalities inform us about the intrinsic structure of the original tensor. From a practical standpoint, comparing the norms of different matricizations is relatively simple, but how best to use this information to learn about the original tensor remains an open question.

In closing, we emphasize that although this work focuses on theory rather than computational tractability, it has practical implications as well. Because computing the operator norm of a level-ℓ tensor is generally computationally prohibitive for ℓ ≥ 3, exploiting level-2 unfoldings, whose operator norms are ordinary matrix spectral norms, may be attractive when the inflation caused by unfolding is small. Alternatively, for more precise calculations, a number of approximation algorithms exist for higher-order tensor problems [23, 27], at the cost of increased computation. Given that a trade-off between accuracy and computation is often unavoidable, our work may help in striking an appropriate, application-specific balance when working with higher-order tensors.
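As a rough illustration of this trade-off, the sketch below brackets the spectral norm of an order-3 tensor. Each single-mode matricization yields an upper bound at the cost of one matrix SVD. Any unit vectors x, y, z yield a lower bound |𝒜(x, y, z)|; here they come from a few sweeps of the higher-order power method, a standard alternating heuristic that we substitute for illustration and that is not an algorithm from this paper or from [23, 27]. The function name `spectral_norm_bracket`, the number of sweeps, and the random test tensor are arbitrary assumptions.

```python
import numpy as np

def matricize(T, mode):
    """Single-mode matricization: rows indexed by `mode`, columns by the other modes."""
    return np.moveaxis(T, mode, 0).reshape(T.shape[mode], -1)

def spectral_norm_bracket(T, sweeps=50):
    """Return (lower, upper) bounds on the spectral norm of an order-3 tensor T."""
    # Upper bound: the smallest spectral norm among the three single-mode matricizations.
    upper = min(np.linalg.norm(matricize(T, n), 2) for n in range(3))
    # Start each factor at the top left singular vector of the matching matricization.
    x, y, z = (np.linalg.svd(matricize(T, n), full_matrices=False)[0][:, 0] for n in range(3))
    # Higher-order power method: update one unit vector at a time, holding the others fixed.
    for _ in range(sweeps):
        x = np.einsum("ijk,j,k->i", T, y, z)
        x /= np.linalg.norm(x)
        y = np.einsum("ijk,i,k->j", T, x, z)
        y /= np.linalg.norm(y)
        z = np.einsum("ijk,i,j->k", T, x, y)
        z /= np.linalg.norm(z)
    # For unit x, y, z the value |T(x, y, z)| never exceeds the spectral norm.
    lower = abs(float(np.einsum("ijk,i,j,k->", T, x, y, z)))
    return lower, upper

rng = np.random.default_rng(2)
T = rng.standard_normal((6, 7, 8))
lower, upper = spectral_norm_bracket(T)
print(f"{lower:.3f} <= spectral norm of T <= {upper:.3f}")
```

When the two bounds are close, the cheap matricization bound may already be adequate; a wide gap signals that a more careful, and more expensive, computation is warranted.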

Acknowledgments

This research is supported in part by a Math+X Research Grant from the Simons Foundation, a Packard Fellowship for Science and Engineering, and an NIH training grant T32-HG000047.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- 1. Anandkumar A, Ge R, Hsu D, Kakade SM, Telgarsky M. Tensor decompositions for learning latent variable models. The Journal of Machine Learning Research. 2014;15(1):2773–2832.

- 2. Cardoso J-F. Eigen-structure of the fourth-order cumulant tensor with application to the blind source separation problem. International Conference on Acoustics, Speech, and Signal Processing (ICASSP). 1990:2655–2658.

- 3. De Lathauwer L, De Moor B, Vandewalle J. A multilinear singular value decomposition. SIAM Journal on Matrix Analysis and Applications. 2000;21(4):1253–1278.

- 4. Friedland S, Lim L-H. Computational complexity of tensor nuclear norm. SIAM Journal on Optimization. 2016;26(4):2378–2393.

- 5. Friedland S, Lim L-H. Nuclear norm of higher-order tensors. Mathematics of Computation. (to appear).

- 6. Hillar CJ, Lim L-H. Most tensor problems are NP-hard. Journal of the ACM. 2013;60(6): Article 45.

- 7. Hoff PD. Multilinear tensor regression for longitudinal relational data. The Annals of Applied Statistics. 2015;9(3):1169–1193. doi: 10.1214/15-AOAS839.

- 8. Horn RA, Johnson CR. Matrix Analysis. Cambridge University Press; 2012.

- 9. Hu S. Relations of the nuclear norm of a tensor and its matrix flattenings. Linear Algebra and its Applications. 2015;478:188–199.

- 10. Kroonenberg PM. Three-Mode Principal Component Analysis: Theory and Applications. Vol. 2. DSWO Press; 1983.

- 11. Kuleshov V, Chaganty A, Liang P. Tensor factorization via matrix factorization. Proceedings of the 18th International Conference on Artificial Intelligence and Statistics (AISTATS). 2015:507–516.

- 12. Lim L-H. Singular values and eigenvalues of tensors: a variational approach. Proceedings of the IEEE International Workshop on Computational Advances in Multi-Sensor Adaptive Processing (CAMSAP). 2005:129–132.

- 13. Liu J, Musialski P, Wonka P, Ye J. Tensor completion for estimating missing values in visual data. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2013;35(1):208–220. doi: 10.1109/TPAMI.2012.39.

- 14. McCullagh P. Tensor notation and cumulants of polynomials. Biometrika. 1984;71(3):461–476.

- 15. Mu C, Huang B, Wright J, Goldfarb D. Square deal: Lower bounds and improved relaxations for tensor recovery. Proceedings of the 31st International Conference on Machine Learning (ICML). 2014:73–81.

- 16. Nickel M, Tresp V, Kriegel H-P. A three-way model for collective learning on multi-relational data. Proceedings of the 28th International Conference on Machine Learning (ICML). 2011:809–816.

- 17. Omberg L, Golub GH, Alter O. A tensor higher-order singular value decomposition for integrative analysis of DNA microarray data from different studies. Proceedings of the National Academy of Sciences. 2007;104(47):18371–18376. doi: 10.1073/pnas.0709146104.

- 18. Qi L. Eigenvalues of a real supersymmetric tensor. Journal of Symbolic Computation. 2005;40(6):1302–1324.

- 19. Ragnarsson S, Van Loan CF. Block tensor unfoldings. SIAM Journal on Matrix Analysis and Applications. 2012;33(1):149–169.

- 20. Richard E, Montanari A. A statistical model for tensor PCA. Advances in Neural Information Processing Systems (NIPS). 2014;27:2897–2905.

- 21. Robeva E. Orthogonal decomposition of symmetric tensors. SIAM Journal on Matrix Analysis and Applications. 2016;37:86–102.

- 22. Sun J, Tao D, Faloutsos C. Beyond streams and graphs: dynamic tensor analysis. Proceedings of the 12th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. 2006:374–383.

- 23. Tomioka R, Hayashi K, Kashima H. Estimation of low-rank tensors via convex optimization. 2010. arXiv:1010.0789.

- 24. Tomioka R, Suzuki T. Spectral norm of random tensors. 2014. arXiv:1407.1870.

- 25. Vasilescu MAO, Terzopoulos D. Multilinear analysis of image ensembles: Tensorfaces. Proceedings of the European Conference on Computer Vision (ECCV). 2002:447–460.

- 26. Wang M, Song YS. Orthogonal tensor decompositions via two-mode higher-order SVD (HOSVD). 2016. arXiv:1612.03839.

- 27. Yu Y, Cheng H, Zhang X. Approximate low-rank tensor learning. 7th NIPS Workshop on Optimization for Machine Learning. 2014.

- 28. Yuan M, Zhang C-H. On tensor completion via nuclear norm minimization. Foundations of Computational Mathematics. 2015:1–38.

- 29. Zhao L, Zaki MJ. Tricluster: an effective algorithm for mining coherent clusters in 3D microarray data. Proceedings of the 2005 ACM SIGMOD International Conference on Management of Data. 2005:694–705.