Abstract

Injury and violence prevention strategies have greater potential for impact when they are based on scientific evidence. Systematic reviews of the scientific evidence can contribute key information about which policies and programs might have the greatest impact when implemented. However, systematic reviews have limitations, such as lack of implementation guidance and contextual information, that can limit the application of knowledge. “Technical packages,” developed by knowledge brokers such as the federal government, nonprofit agencies, and academic institutions, have the potential to be an efficient mechanism for making information from systematic reviews actionable. Technical packages provide information about specific evidence-based prevention strategies, along with the estimated costs and impacts, and include accompanying implementation and evaluation guidance to facilitate adoption, implementation, and performance measurement. We describe how systematic reviews can inform the development of technical packages for practitioners, provide examples of technical packages in injury and violence prevention, and explain how enhancing review methods and reporting could facilitate the use and applicability of scientific evidence.

Keywords: Systematic review, injury, violence, implementation

Over the past thirty years, public health practice has evolved such that state, tribal, local, and territorial (STLT) health agency practitioners are expected to use the best available evidence when selecting policy and program strategies. These expectations are reflected in major federal funding initiatives across areas of health and social policy that seek to increase the number of strategies supported by rigorous effectiveness research and that prioritize funds for strategies with “top tier” evidence (Haegerich, Gorman-Smith, Wiebe, & Yonas, 2010). Broadly, evidence encompasses information gathered from research (e.g., public health surveillance and program evaluations in the scientific literature); information about population characteristics, needs, values, and preferences; and information about available resources, including practitioner expertise, all within a given environment and organizational context (Brownson, Fielding, & Maylahn, 2009).

Evidence supporting injury and violence prevention is strong and reflects a broad spectrum of education, behavior change, policy, engineering, and environmental strategies (Haegerich, Dahlberg, Simon, Baldwin, Sleet, Greenspan, & Degutis, 2014). The need for prevention strategies is critical: injuries and violence are leading causes of death across the lifespan and pose a significant public health burden. For example, unintentional injury and homicide are among the five leading causes of death for individuals aged 1–44 years. Drug overdose is the leading cause of injury death for all ages, followed by motor vehicle injury. The burden of homicide falls particularly on young people aged 15–24 years (CDC, 2014). Given this health burden and the strong research foundation for prevention, injury and violence prevention is an area of public health and social policy that needs greater application of evidence to practice (Haegerich et al., 2010; Sogolow, Sleet, & Saul, 2007). As conveners across multiple disciplines with a focus on improving community wellness, STLT health agencies are uniquely positioned to build collaboration and capacity across sectors invested in injury and violence prevention (e.g., transportation, justice, mental health, and substance abuse services) and to promote increased use of evidence-based strategies.

Leaders of the movement toward evidence-based public health have identified three clear needs: (1) identify the evidence of effectiveness for different strategies; (2) translate that evidence into recommendations; and (3) increase the extent to which that evidence is used in public health practice (Brownson, Baker, Leet, & Gillespie, 2003).

Systematic reviews are a fundamental tool for meeting the first need (Brownson et al., 2009), as they efficiently summarize the findings of multiple studies. Although useful, systematic reviews can be difficult for STLT agency practitioners to use. Common barriers include lack of access, awareness, familiarity, usefulness, and training; for example, practitioners are not always familiar with, or do not always have access to, electronic tools that help them identify evidence, such as PubMed (Wallace, Nwosu, & Clarke, 2012). The Institute of Medicine (2001) issued a landmark call to action to close the gap between research and practice, citing a 17-year delay between the discovery of evidence using rigorous methods and the use of that evidence in practice settings (Balas & Boren, 2000). Federal agencies, nonprofit foundations, and academic institutions can serve as knowledge brokers to help close this gap by translating systematic review findings into practice guidance. Such translation activities can be bolstered by developing relationships with, providing support to, and building the capacity of STLT agency practitioners (Dobbins et al., 2009), for example, through training and technical assistance to enhance implementation of evidence-based strategies, and through evaluation guidance that allows for monitoring and improvement.

Several organizations are facilitating access to scientific findings to improve public health and social policy. These include the Cochrane and Campbell Collaborations, which prepare, maintain, and promote the accessibility of systematic reviews, and the Community Preventive Services Task Force and the US Preventive Services Task Force, which develop public health and clinical practice recommendations based on systematic reviews (Davies & Boruch, 2001; Grimshaw, Santesso, Cumpston, Mayhew, & McGowan, 2006; Harris et al., 2001; Briss et al., 2000).

One translation mechanism available to knowledge brokers to enhance dissemination of evidence-based strategies and support their adoption and implementation is the “technical package” for STLT agency practitioners (Frieden, 2014). This mechanism combines evidence translation with capacity building for implementation and evaluation. In this paper, we review the use and limitations of systematic reviews for public health action, describe the concept of technical packages as a mechanism for translating systematic review findings into actionable recommendations, and provide examples of technical packages in injury and violence prevention to illustrate their potential contributions to practice improvement. We also suggest how systematic review methods can be improved to facilitate their use, applicability, and integration within technical packages.

The Use and Limitations of Systematic Reviews

A systematic review is “a scientific investigation that focuses on a specific question and that uses explicit, planned scientific methods to identify, select, assess, and summarize the findings of similar but separate studies. It may or may not include a quantitative synthesis of the results from separate studies (meta-analysis)” (IOM, 2011, p. 241). Well-conducted systematic reviews summarize a body of research evidence, identify the research questions, explain how the strategies investigated link to the outcomes, and critically appraise study quality using a rigorous method. Organizations dedicated to improving evidence-based decisions, such as the Cochrane Collaboration, have outlined fundamental criteria for high-quality systematic reviews that attend to processes such as defining review questions, developing criteria for including studies, searching for studies, collecting studies and data, assessing risk of bias, analyzing data and undertaking meta-analyses, addressing reporting bias, presenting results, interpreting results, and drawing conclusions (see Higgins & Green, 2008, for more details). Reviews can provide epidemiologic information about factors that place individuals and communities at risk for a given health burden, as well as evaluative information about the effectiveness of prevention strategies.

Although systematic reviews can help practitioners identify “best bets” for public health impact, they have limitations. Investigators often employ strict criteria for study inclusion, summarize evidence for strategies without prioritizing them for specific populations or contexts, and do not typically address implementation or fit. As a result, reviews consider only a portion of the information needed to inform public health action.

Limited scope and criteria

Investigators usually employ strict inclusion criteria (e.g., randomized trials or high-quality quasi-experimental studies) or limit the review to narrowly defined programs when conducting systematic reviews (Valentine, Wilson, Rindskopf et al., 2016). Hence, the included studies are often few in number, conducted in tightly constrained environments within specific local contexts, and limited in follow-up time for detecting sustained health impact. Some public health topics, such as prescription drug overdose, are emerging research areas in which few strategies are known to be effective (Haegerich, Paulozzi, Manns, & Jones, 2014). Further, evidence for community-level or policy strategies is often less plentiful than evidence for strategies that modify individual (e.g., problem-solving) and relationship (e.g., communication) factors (David-Ferdon & Simon, 2014; US Department of Health and Human Services, 2001). When few high-quality studies are available for review, the synthesized evidence can become truncated, identifying few promising or effective strategies for STLT agencies to consider.

As a general principle, strategies should be implemented at a large scale when there is strong evidence of their effectiveness. Implementing strategies widely before they have been rigorously evaluated carries risks, including inefficient use of resources and iatrogenic effects. Tension arises when a health problem has reached epidemic levels (e.g., prescription drug overdose), few effective strategies have been identified, and STLT agencies require guidance given the consequences of inaction (e.g., declining health of a population); yet history suggests that null results are often found when strategies are rigorously evaluated or evaluated at scale (Epstein & Klerman, 2012). When systematic reviews can point to only a small number of strategies but the public health burden is high, it is necessary to prioritize the strategies with the most rigorous supporting data while also offering supplemental guidance to address the problem. A mechanism is needed to review additional research evidence; assess population characteristics, needs, values, and preferences; and leverage resources, including practitioner expertise, to make evidence-based public health decisions (Brownson et al., 2009). A mechanism is also needed to provide guidance on implementation and evaluation metrics, so that strategies with limited evidence are piloted only in a defined area and practitioners are guided in collecting data on intended outcomes. This approach can help identify which strategies should be prioritized for more rigorous evaluation to build the evidence base before large-scale rollout (Epstein & Klerman, 2012).

Lack of prioritization

In some areas, such as youth violence and motor vehicle injury, there is an extensive research history, with systematic reviews of numerous prevention strategies. STLT agency practitioners need guidance to select among these strategies appropriately. Selecting too many strategies for implementation could result in more costly and unwieldy programs with a lower likelihood of success (Frieden, 2010). To prioritize interventions adequately and choose those most likely to succeed in a specific context, practitioners need information on variables such as quality of materials, flexibility, time requirements, complexity, and cost, which have been found to predict adherence, implementation of core components, dosage, and sustainability (Mihalic & Irwin, 2003). Yet this information is commonly not presented in systematic reviews. For example, intervention cost and return on investment are important issues for practitioners and often a deciding factor for implementation (Raghavan, 2012). Acting on misguided, costly recommendations could waste scarce resources and result in unintended consequences, such as exacerbating the outcomes that interventions intend to prevent (Braveman, 2007). If economic information were shared more readily alongside evidence of intervention effectiveness from systematic reviews, STLT agency practitioners could use it to make informed decisions about whether to pursue a specific strategy.

Absence of information about implementation and fit

Systematic reviews often provide minimal or uneven information that can be used to address implementation and variation in fit across environments (Roen et al., 2006; Paulsell, Thomas, Monahan, & Seftor, 2016). Only recently have systematic review methods been extended to incorporate qualitative research, such as program implementation data (Higgins & Green, 2008). STLT agencies vary in the populations they serve, the risk and protective factors those populations experience, and community capacity and infrastructure, making it difficult to generalize findings and identify the best interventions to implement. For example, systematic reviews indicate that publicized sobriety checkpoint programs can significantly reduce alcohol-impaired driving and associated fatalities (Bergen et al., 2014). When communities implement these programs, however, the local environment varies (e.g., legal restrictions, law enforcement capacity). Although broad implementation of sobriety checkpoints could be beneficial, experience shows that it is challenging because state laws authorizing checkpoint use and enforcement practices vary. Guidance beyond what is typically included in systematic reviews is needed so that implementers can anticipate and plan for legislative barriers (Lavis et al., 2005) and the local enforcement environment. A translation mechanism that goes beyond systematic review findings, discussing implementation challenges and the different ways strategies can be implemented, may better equip STLT agencies to address their unique community assets and challenges and to increase their use of evidence-based strategies. Thus, systematic reviews provide necessary, but not sufficient, guidance for STLT agencies.

The Technical Package Concept

What are technical packages?

As described by Frieden (2014), technical packages outline a narrow range of evidence-based strategies to address a specific public health problem, along with their estimated costs and impacts. The purpose is to give STLT agencies concrete, evidence-based guidance to help them select, implement, and evaluate prevention strategies that improve health and social outcomes. The audience includes STLT public health practitioners. Scientists and program administrators within the federal government, nonprofit agencies, and academic institutions, serving as knowledge brokers (Meyer, 2010; Ward, House, & Hamer, 2009), develop technical packages. STLT public health practitioners can use technical packages when planning programmatic efforts (e.g., in response to funding opportunity announcements). A narrow range of evidence-based strategies is desirable so that STLT practitioners are guided toward the most effective, feasible, scalable, and sustainable interventions identified through rigorous evaluation. At the same time, several options are desirable so that STLT practitioners can select the strategies that address the key risk and protective factors apparent in their own communities. Within the package, users can find a suite of interventions that, when implemented together, create synergy and enhance public health impact (Frieden, 2014). Interventions are typically selected for the package based on feasibility of implementation and on evidence from systematic reviews or rigorous evaluation studies (e.g., randomized controlled trials or quasi-experimental evaluations) showing impact on the health outcome or on the risk and protective factors associated with it. Interventions with emerging evidence that address high-burden issues and known risk factors might also be selected, with refinements made when results of rigorous evaluation become available.
Technical packages can further evidence-based public health action by placing research evidence within the context of population and community needs, capacities, and resources, and of environmental and organizational contexts (Brownson et al., 2009). They may also facilitate translation when implementation and evaluation guidance are included. In injury and violence prevention, technical packages are a new method for translating scientific information, and knowledge brokers in the public health field are beginning to conceptualize their components and how they could be structured.

How are systematic reviews used in technical packages?

Systematic reviews are valuable tools for the development of technical packages as they assist in identifying at-risk populations and promising or effective strategies based on research. For example, epidemiologic systematic reviews on risk and protective factors that contribute to injury burden, such as individual (e.g., socio-demographics), relationship (e.g., supervision), community (e.g., social capital), and societal (e.g., existing policies and systems) factors can identify which populations have the greatest injury burden, the factors that increase their risk, and the contexts that need to be modified to mitigate harm. Evaluation systematic reviews on policy, program, and practice effectiveness can assist in identifying which types of strategies have impacts on knowledge, attitudes, behaviors, health, and other violence/injury outcomes over multiple trials, contexts, and samples. They can signal which strategies might be promising when there is a limited evidence base, and indicate which strategies might be most effective when rigorous studies are plentiful.

How do technical packages extend traditional evidence synthesis?

Technical packages go beyond communicating to STLT agency practitioners which strategies are most promising or effective based on the scientific evidence; other mechanisms serve that more limited purpose. For example, evidence-based program registries typically provide a compilation of programs intended to prevent specific health outcomes. Blueprints for Healthy Youth Development is one such registry; it provides information about evidence-based programs that affect outcomes such as problem behavior (e.g., youth violence), education, emotional well-being, physical health, and positive relationships (http://www.blueprintsprograms.com/). Although programs are rated so that users can judge the level of evidence of effectiveness (e.g., model, promising), along with benefits and costs, no guidance is provided on how to select a particular program or set of programs from the list, or on which are most feasible, scalable, sustainable, or synergistic. Registries are typically limited to programs and do not broadly include system changes or policies; that is, registries tend to focus on interventions with specific, structured implementation at the individual, family, or community level. In the rare instances where system changes or policies are included, they are usually specific to the organization implementing the intervention (e.g., school policy and systems change to improve the behavioral environment, as in Positive Behavioral Interventions and Supports, archived in the Substance Abuse and Mental Health Services Administration’s (SAMHSA) National Registry of Evidence-Based Programs and Practices) and do not represent broader legislation or regulation, for example, at the state level.
Technical packages extend traditional evidence synthesis and registries by highlighting strategies that target constellations of risk and protective factors at multiple levels of the social ecology; thus, when implemented in combination, the strategies might have synergistic impact. Other mechanisms, such as planning systems, help communities plan, implement, and evaluate the impact of programs. Getting to Outcomes (Wandersman, Imm, Chinman, & Kaftarian, 2000), for example, leads practitioners through a 10-step process addressing underlying needs and conditions, goals and objectives, best practices, fit with community context, resources needed, program plans, process evaluation, outcome evaluation, improvement plans, and sustainability. Planning systems typically do not specify which prevention strategies are preferable or likely to have the greatest impact in a given context.

What are the key components of technical packages?

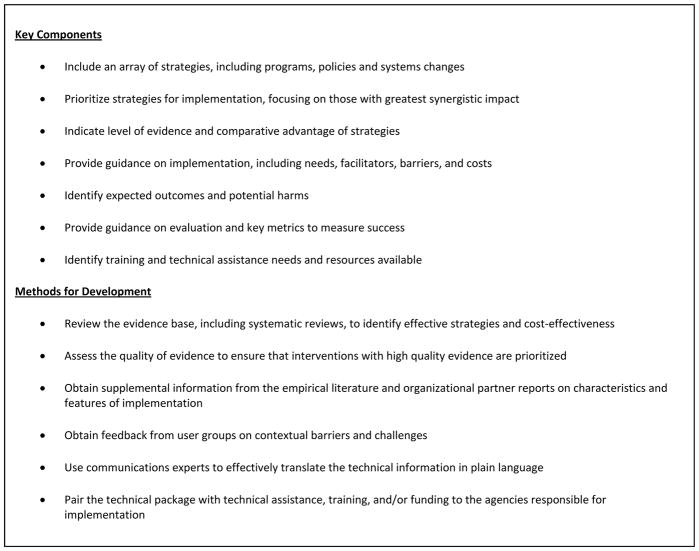

Technical packages triage and prioritize the list of available strategies for STLT agency practitioners, focusing on those likely to have the greatest synergistic impact (that is, an effect on outcomes greater than the combined effect of the same interventions implemented separately) (see Figure 1 for a summary of the key components of technical packages). Technical packages can highlight new and innovative strategies that show promise but require further evaluation, as well as well-established strategies for which the evidence is strong. Strategies can be prioritized by examining effect estimates across studies, selecting interventions with specific components to provide coverage across outcomes and mechanisms of action, identifying potential harms of specific interventions, assessing the applicability of interventions to specific settings, or gathering information on acceptability or cost constraints (e.g., see Glasziou et al., 2014). Importantly, technical packages can also provide guidance on implementation and evaluation. The mere discovery of prevention strategies that work does not ensure their use. Even after “what works” has been identified and materials have been packaged for widespread use, most evidence-based strategies are not adopted and implemented in practice settings, and when they are adopted, they are often implemented ineffectively (Fixsen, Naoom, Blase, Friedman, & Wallace, 2005; Sloboda, Dusenbury, & Petras, 2014). Translation research (sometimes termed “dissemination” or “implementation” research) has illuminated factors that impede or promote the adoption and use of evidence-based strategies (Fixsen et al., 2005; Wandersman et al., 2008; Glasgow, Lichtenstein, & Marcus, 2003).
Hence, well-designed technical packages must penetrate the “black box” between research and practice, and incorporate the spectrum of activities that run from scientific discovery to research synthesis, capacity building, dissemination, adoption and implementation (Wandersman et al., 2008; Noonan & Emshoff, 2013).
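The prioritization criteria described above (effect estimates across studies, coverage across outcomes and mechanisms, potential harms, applicability, and cost) can be made concrete with a small scoring sketch. Everything in it is a hypothetical assumption for illustration: the strategy names, criterion scores, weights, and the greedy coverage rule are not drawn from any review and are not the method used by any package developer.

```python
from dataclasses import dataclass

# Hypothetical criterion weights, chosen only for illustration.
WEIGHTS = {"effect": 0.4, "applicability": 0.3, "cost": 0.2, "harm": 0.1}


@dataclass(frozen=True)
class Strategy:
    name: str
    effect: float          # strength of pooled evidence of effect, 0-1
    applicability: float   # fit to likely implementation settings, 0-1
    cost: float            # relative cost, 0-1 (higher = more expensive)
    harm: float            # potential for harm, 0-1 (higher = more risk)
    targets: frozenset     # social-ecological levels the strategy addresses


def score(s: Strategy) -> float:
    """Weighted score: evidence and applicability add; cost and harm subtract."""
    return (WEIGHTS["effect"] * s.effect
            + WEIGHTS["applicability"] * s.applicability
            - WEIGHTS["cost"] * s.cost
            - WEIGHTS["harm"] * s.harm)


def prioritize(strategies, max_items):
    """Walk strategies from highest to lowest score, keeping only those that
    cover at least one level not already covered, up to max_items."""
    chosen, covered = [], set()
    for s in sorted(strategies, key=score, reverse=True):
        if len(chosen) == max_items:
            break
        if s.targets - covered:  # adds at least one new level
            chosen.append(s)
            covered |= s.targets
    return chosen, covered


# Hypothetical candidate strategies scored on the criteria above.
candidates = [
    Strategy("A", 0.8, 0.7, 0.5, 0.1, frozenset({"individual"})),
    Strategy("B", 0.7, 0.8, 0.3, 0.1, frozenset({"individual", "relationship"})),
    Strategy("C", 0.5, 0.6, 0.2, 0.0, frozenset({"community"})),
    Strategy("D", 0.6, 0.5, 0.6, 0.2, frozenset({"societal"})),
]
chosen, covered = prioritize(candidates, max_items=3)
print([s.name for s in chosen])  # ['B', 'C', 'D']
```

Strategy A scores higher than C and D but is skipped because B already covers the individual level, which mirrors the idea of selecting interventions for coverage across levels rather than by effect size alone. The greedy rule is only one simple choice; in practice, weights and trade-offs would more plausibly be settled through expert and stakeholder deliberation.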

Figure 1.

Key Components of Technical Packages and Methods for Development

Consistent with diffusion of innovation principles, technical packages should attend to the factors that influence whether STLT practitioners will try something new. That is, technical packages should communicate clear messages, be easy to use, include evidence-based strategies that can be implemented on a trial basis, illustrate how the included strategies are better than policies or practices previously tried (i.e., have a relative advantage), and show that they will have observable results (Rogers, 1962). These factors enhance efforts to promote evidence-based strategies and stimulate adoption. Further, adoption and use are driven by the fit of the strategy with the current needs and preferences of the implementing organization, as well as by the climate for implementation: the organizational culture, administrative support, training, and other reinforcements for implementing that particular strategy (e.g., Klein & Sorra, 1996). After a new strategy is adopted, the quality of implementation (that is, whether the technical package is used effectively) rests on staff selection, implementer training, and other “core components” that explain implementation outcomes (Fixsen, Blase, Naoom, & Wallace, 2009). Therefore, to ensure adoption and use, developers of technical packages should understand their intended audience’s needs and preferences when developing content, to maximize natural spread of the interventions. Because information alone is rarely sufficient to change practice or policy, developers are also encouraged to scale up the interventions by disseminating and marketing the materials to STLT agency practitioners (and potentially to other audiences, including elected officials and other key decision makers in states) and by training implementers to a degree that reflects the difficulty of the package’s specifications, while emphasizing how the package fits the organization’s characteristics.

Technical packages begin in the knowledge creation funnel of the Knowledge to Action cycle (Straus, Tetroe, & Graham, 2009), in which translation is viewed as a dynamic and complex process. The technical package is a product developed by knowledge brokers who clearly identify the problem to be addressed, review and select evidence, adapt the evidence to likely implementation contexts, assess barriers to evidence use, and select and prioritize interventions for implementation. The technical package also serves as a tool that guides users through the action cycle, providing instructions on adaptation, monitoring, evaluation, and sustainability. The technical package process is dynamic: packages are updated as programmatic efforts are implemented and evaluation activities identify successes and barriers in changing intended outcomes.

What is the evidence that technical packages can improve public health?

Although evaluation is needed to understand the utility and impact of technical packages, some evidence suggests that they are a promising translation strategy. For example, in tobacco control, use of a global policy technical package consisting of six evidence-informed interventions (MPOWER) was associated with a significant decrease in smoking across five WHO regions in an exploratory analysis, with greater decreases in countries with higher levels of implementation (Dubray et al., 2014). Further, the Communities That Care (CTC) prevention system is consistent with the technical package concept, supplemented by intensive implementation and evaluation technical assistance. CTC is a data-driven strategic prevention planning model that supports communities in assessing local risk and protective factors, connects this information to a suite of evidence-based interventions to reduce violence, delinquency, and substance use among young people, and provides guidance on mobilizing community stakeholders to collaborate in selecting, installing, and monitoring the prevention system (Hawkins et al., 2012). In a randomized trial of CTC, use of evidence-based programs increased in intervention communities compared with control communities (Fagan et al., 2011), and after several years, significant reductions were detected in delinquency, violence, and substance use, even after intensive implementation support ended (Hawkins et al., 2012). Further evaluation of the technical package concept is needed to understand whether package dissemination alone can result in successful implementation and health impact, or whether technical assistance is needed for successful use, and if so, how much.
Consistent with principles for evaluating complex interventions (Bamberger, 2012), evaluation strategies might include mixed-methods designs that combine quantitative and qualitative data to assess intervention adoption and fidelity of implementation, track technical assistance, and assess key outcomes.

Exemplars in Injury and Violence Prevention

To illustrate the concept of the technical package, show how systematic reviews are an important component, and highlight opportunities and challenges in development, we provide exemplars in the areas of prescription drug overdose, youth violence, and motor vehicle injury prevention, three areas of great public health burden (see Table 1). The exemplars illustrate initial conceptual frameworks. The packages are in various stages of development and have not yet been fully released or implemented with key audiences; when available, they can be accessed from the CDC Injury Center website (http://www.cdc.gov/injury/). We highlight the health burden that justifies each injury topic, the systematic review foundation for selecting evidence-based strategies, the limitations of the evidence base for informing practice, the importance of providing supplemental implementation and evaluation guidance, and the challenges faced when developing technical packages for population-level impact while attending to adaptation and unique community needs. The process for developing these specific technical packages has varied and is ongoing; however, common features of development are summarized in Figure 1. These packages complement other injury and violence prevention packages recently published in the areas of child abuse and neglect and sexual violence prevention, available at http://www.cdc.gov/violenceprevention/pub/technical-packages.html (Fortson, Klevens, Merrick, Gilbert, & Alexander, 2016; Basile, DeGue, Jones, Freire, Dills, Smith, & Rainford, 2016).

Table 1.

Illustrations of Technical Package Development in Injury and Violence Prevention

| Point of Consideration | Prescription Drug Overdose | Youth Violence | Tribal Motor Vehicle Injury |
|---|---|---|---|
| Why Prevention is Important: Public Health Burden | | | |
| Example Sources of Evidence | | | |
| Potential Package Components | | | |
| Key Stakeholders for Implementation | | | |
| Challenges | | | |

Note: PDMP = prescription drug monitoring program; BAC = blood alcohol concentration

Prescription drug overdose prevention

The United States is in the midst of a prescription opioid overdose epidemic. From 1999 to 2014, more than 165,000 Americans died from overdoses involving prescription opioids. Deaths are starting to decline for the first time in 15 years, but large numbers of people continue to die from these drugs: more than 14,000 in 2014 (CDC, 2016). In parallel with death trends, opioid prescriptions quadrupled from 1999 to 2008 (CDC, 2011). Because overdose deaths and opioid sales rose in lockstep, many researchers and organizations have focused on inappropriate prescribing, and the policies and systems that have supported it, as a key driver (Dowell, Kunins, & Farley, 2013; Kenan, Mack, & Paulozzi, 2012; Kirschner, Ginsburg, & Sulmasy, 2014).

States have implemented interventions to address problematic prescribing and risky patient behaviors, but the evidence base is limited. In a systematic review, Haegerich, Paulozzi, Manns, and Jones (2014) summarized the evidence for prevention approaches. The investigators reviewed multiple strategies within one comprehensive review and analyzed effects on provider behavior, patient behavior, and health outcomes. Overall, few evaluation studies were available, and study quality was low. There is some promising evidence that prescription drug monitoring programs (PDMPs), insurer strategies (e.g., patient review and restriction, drug utilization review, prior authorization), pain clinic legislation, clinical guidelines, and naloxone distribution programs improve prescribing and patient outcomes (Haegerich et al., 2014). These strategies are considered promising rather than effective because, although outcomes were positive, the evaluation studies used designs with methodological weaknesses, such as lack of baseline data and comparison groups, inadequate statistical testing, small sample sizes, self-reported outcomes, and short-term follow-up. The systematic review provides evidence to support an initial prioritization of interventions for states and health systems, but it lacks the detail needed to create a technical package. This gap is unfortunate, given the urgency of the problem and frequent state requests for technical assistance to address the epidemic.

In response, CDC is funding state health departments through a new $70 million program, Prescription Drug Overdose Prevention for States. The program is designed to enhance, implement, and evaluate state-level interventions that have the potential to address the problem: enhancement of PDMPs, implementation of insurer and pharmacy benefit manager mechanisms (e.g., prior authorization, drug utilization review), and evaluation of state policies, rules, or regulations (e.g., pain clinic regulation, doctor shopping, Good Samaritan laws). Because the quality of evidence for these strategies is low, the evidence base needs to grow through evaluations of these efforts, with careful attention to implementation factors that influence both adoption and health outcomes. The funded states will engage in both implementation and evaluation, giving them an opportunity to serve as laboratories for greater innovation and for evaluating “what works.” In essence, as in other recent federal initiatives (e.g., teen pregnancy prevention; Goesling, Oberlander, & Trivits, 2016), systematic review findings are directly informing funding for programmatic efforts, with built-in evaluation components to further develop the evidence base.

To scale up this program, CDC is drafting a technical package that will draw on both the systematic review and what is known about effective knowledge translation: responding to the target audience’s expressed needs and preferences; making the package easy to use by providing a short, prioritized list of options; offering technical assistance and training on the suite of interventions in the package to build implementation capacity; disseminating materials when audiences are ready to receive them; providing clear implementation guidance and training; and providing evaluation metrics so states can monitor their own progress. For PDMPs, for example, effective components likely include universal use (all controlled substances are included and all prescribers check the PDMP before writing a prescription), real-time data, and active management (reports are proactively sent to stakeholders to alert them to aberrant prescribing or use). However, each state’s PDMP has a different configuration and set of rules governing its use. Table 2 provides example content to be included in the technical package. Whereas the systematic review provides information on the purpose, target population, and rationale for PDMPs, along with key findings about impact, the package adds information about needed resources, implementation activities, opportunities, challenges, key evaluation metrics, resources and tools, and example case studies of success in states.

Table 2.

Illustration of the Prescription Drug Overdose Prevention Technical Package – Prescription Drug Monitoring Program Strategy

| Strategy Example | Prescription Drug Monitoring Programs |
|---|---|
| Description | |
| Purpose | |
| Target Population | |
| Essential Components | |
| Role of State Health Department | |
| Rationale | |
| Evidence Level | |
| Implementation Activities | |
| Resources | |
| Opportunities | |
| Challenges | |
| Key Evaluation Metrics | |
| Data Sources | |
| Case Study | |
| Resources and Tools | |
| Evidence Resources | |

Youth Violence Prevention

Youth violence occurs when young people intentionally use physical force or power to threaten or harm others. It takes many forms, such as fighting, bullying, threats with weapons, and gang-related violence. Youth violence is a significant public health issue; homicide is the third leading cause of death among 10- to 24-year-olds (CDC, 2014). A strong research base and several systematic reviews demonstrate that youth violence can be prevented (e.g., USDHHS, 2001; Center for the Study and Prevention of Violence, n.d.; CDC, n.d.; Office of Justice Programs, n.d.; Substance Abuse and Mental Health Services Administration, n.d.). Evidence-based approaches include strategies that build youth’s skills to avoid violence, as well as parenting and family-focused strategies that provide support and teach communication, problem-solving, monitoring, and behavior management skills. Systematic reviews have found these strategies effective in reducing youth violence both among broad groups of youth and among youth at greatest risk (e.g., chronic offenders, youth in families with high levels of conflict). Research on community- and societal-level prevention strategies is emerging (Masho, Bishop, Edmonds, & Farrell, 2014; Webster, Whitehill, Vernick, & Curriero, 2012; MacDonald, Golinelli, Stokes, & Bluthenthal, 2010), but it is not yet developed enough to support systematic reviews. Growing benefit-cost information shows that the financial savings of evidence-based programs can far outweigh their costs (Washington State Institute for Public Policy, n.d.; Center for the Study and Prevention of Violence, n.d.).

The accessibility of information about evidence-based approaches has improved with free, online systematic reviews (e.g., Community Guide, Blueprints for Healthy Youth Development). Although useful, these resources cover strategies for a wide range of health topics (i.e., not just youth violence), their information is sometimes out of date, and communities still need to sift through technical information across multiple resources and publications to determine which interventions to prioritize based on evidence and needs (Mihalic & Elliott, 2015). These systematic reviews also typically lack information about the community capacity and infrastructure required to implement evidence-based approaches successfully (Puddy & Wilkins, 2011).

CDC provides technical assistance and develops tools to increase the capacity of STLT agencies to use evidence-based approaches to prevent youth violence. For instance, CDC’s Preventing Youth Violence: Opportunities for Action and its companion guide (David-Ferdon & Simon, 2014) have components consistent with a technical package. The guide presents the trends, disparities, and causes of youth violence that need to be considered when designing a strategic prevention approach. It also presents a range of evidence-based prevention strategies and cost estimates informed by systematic reviews, and it discusses online systematic reviews (e.g., Blueprints for Healthy Youth Development, Community Guide), the capacity needed to implement and sustain prevention strategies, and evidence-based action steps for multiple groups (e.g., public health, community leaders and members, parents and other caregivers, and youth).

CDC’s Preventing Youth Violence: Opportunities for Action and its companion guide provide a foundation for a technical package currently in development at CDC. This technical package will address limitations of the existing guides, which present what is broadly known about prevention strategies rather than focusing on the most effective, feasible, scalable, or sustainable strategies. Although the guides present some examples of cost-effectiveness data, estimates are not available for every prevention strategy because these data are still emerging. Nonetheless, these resources help bridge the gap between science and practice and make what is known about preventing youth violence accessible.

Developers of the youth violence technical package are wrestling with the fact that the causes of youth violence and the appropriate prevention strategies can vary significantly across communities. Although an ideal technical package might have a focused list of specific prevention strategies, for youth violence this approach could yield too narrow a range of programs. Research comparing the relative effectiveness of evidence-based programs is also limited, which makes prioritizing programs difficult. The technical package currently in development is envisioned to provide a menu of evidence-based prevention strategies (e.g., strengthen youth’s skills), complemented by approaches that advance each strategy (e.g., universal school-based programs) and examples of specific programs (e.g., Good Behavior Game, Life Skills Training). Implementation guidance for the technical package will also be developed; it may include case studies illustrating how communities could begin with the general menu of strategies and ultimately select the approaches and programs that are most feasible, scalable, and sustainable given their risk factor and asset profile, as well as more information about program costs and delivery considerations. The technical package could be further supplemented by technical assistance and tools, such as CDC’s STRYVE Strategies Selector Tool (http://vetoviolence.cdc.gov/stryve/strategy_pdf.html), which helps communities build capacity to select approaches that match local needs, implement them effectively, and evaluate their effects.

Motor Vehicle Injury Prevention

American Indians and Alaska Natives (AI/AN) are at increased risk for motor vehicle crash (MVC)-related injury and death, with rates 1.5 to 3 times higher than those of other Americans. Several factors contribute to this increased risk, including low rates of seat belt and child safety seat use and a high prevalence of alcohol-impaired driving (NHTSA, 2014). To address this disparity, CDC and the Indian Health Service (IHS) have funded tribal motor vehicle safety programs. CDC funded 12 Tribal Motor Vehicle Injury Prevention Programs (TMVIPP), and the IHS Tribal Injury Prevention Cooperative Agreement Program (TIPCAP) funded 35 tribes, to tailor effective motor vehicle injury prevention interventions to tribal communities. Both programs required implementation of evidence-based motor vehicle safety strategies systematically reviewed by the Community Guide and recommended by the Community Preventive Services Task Force, including increasing child safety seat use, increasing seat belt use, and decreasing impaired driving (Dinh-Zarr et al., 2001; Shults et al., 2001; Zaza et al., 2001). Tribes selected strategies for implementation and then prioritized available interventions for each strategy.

To gather agency and tribal perspectives, CDC convened six tribes and the four federal agencies funding them (CDC, IHS, Bureau of Indian Affairs, and National Highway Traffic Safety Administration) to identify essential components of tribal traffic safety programs. Based on tribal and agency experiences and evaluation findings, a list of essential components was developed in four areas: 1) program requirements and administration, 2) partnership and collaboration, 3) data collection and program evaluation, and 4) tailoring effective strategies. The list served as a resource for federal agencies to inform future AI/AN programs.

A technical package, the Tribal Motor Vehicle Injury Prevention Best Practices Guide, is currently in development for tribes, based on lessons learned from the CDC and IHS programs, the essential components of tribal traffic safety programs, and the Community Preventive Services Task Force recommendations. It includes a description of the evidence-based policies and programs, along with guidance on adapting them for tribal populations and on implementation strategies. Effective policies and programs identified through systematic reviews include child safety seat laws, community-wide information and enhanced enforcement campaigns, and safety seat distribution and education programs; primary seat belt enforcement laws and enhanced enforcement programs; and, for preventing alcohol-impaired driving, 0.08% blood alcohol concentration (BAC) laws, publicized sobriety checkpoints, and multicomponent programs (Bergen et al., 2014; Dinh-Zarr et al., 2001; Elder et al., 2004; Elder et al., 2005; Shults et al., 2001; Shults et al., 2009; Zaza et al., 2001). Case examples are being included in the technical package to summarize tribal experience in implementing the selected strategies, garnering support, and identifying resources and tools available for evidence-based strategies. The technical package is accompanied by the Roadway to Safer Tribal Communities Toolkit, which consists of fact sheets, brochures, posters, a short video, and a tutorial on CDC’s Web-based Injury Statistics Query and Reporting System (WISQARS) featuring AI/AN examples (http://www.cdc.gov/motorvehiclesafety/native/toolkit.html).

There are currently 547 federally recognized tribes in the US, with varying cultures, governments, laws, infrastructure, and capacity. The Community Guide recommendations are based on research in non-tribal communities, and their applicability to tribal populations with different infrastructure and policy options can be limited. For example, enhanced enforcement, though strongly recommended, is not an option for tribes without police departments or detention facilities. Supplemental information and guidance are therefore needed to complement systematic reviews of strategies, even when those strategies are broadly recognized as effective. For tribes, a technical package that provides successful, tailored tribal case examples can ease the skepticism that often accompanies efforts to implement model programs in a dissimilar setting.

To stimulate adoption of best practices in tribal communities using the technical package, CDC is collaborating with the Federal Highway Administration to provide technical assistance to tribes through the three regional Tribal Technical Assistance Program (TTAP) centers in the western US. This pilot program has the potential to reach 207 tribes in 10 states.

Improving Systematic Reviews to Facilitate Their Use

While systematic reviews contribute to technical package development, as the exemplars illustrate, review methods could be enhanced to facilitate their use. For example, systematic reviews could better incorporate logic models that illustrate the components of the policy or practice (e.g., duration, administration), the characteristics of the target population, the settings for implementation, and the causal pathways that influence health outcomes (Anderson et al., 2011). Including more contextual information within systematic reviews can reduce barriers to dissemination by making explicit the common factors that can inhibit adoption, such as requirements for implementation strategies, scale-up, cost and resources, setting characteristics, incentives or regulations, reach, and sustainability. Such data might come from qualitative components of original quantitative research studies, related quality improvement efforts in the field, and marketing or opinion polling of the target population (Glasgow & Emmons, 2007), and could be highlighted on systematic review translation websites (Paulsell et al., 2016). Conducting systematic reviews in stages, for example by developing an overview of reviews or an evidence gap map (Da Silva et al., 2016), can help identify where evidence is strong and where it is missing, and can better inform which interventions should be prioritized for implementation and which should be rigorously evaluated if they are selected for implementation. When few studies are available for synthesis, Bayesian analysis could be considered to generate estimates of intervention effectiveness (Valentine et al., 2016).
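To illustrate how a Bayesian approach can still yield an estimate when only a handful of studies exist, the sketch below applies a conjugate normal-normal update. It assumes each study reports an effect estimate with a known sampling variance, treats the between-study variance (`tau2`) as fixed and known, and places a normal prior on the pooled effect. The function name `posterior_pooled_effect`, the prior values, and the effect sizes are hypothetical and are not drawn from any review discussed here. Full Bayesian meta-analyses usually also place a prior on the between-study variance rather than fixing it; this sketch omits that step for simplicity.

```python
import math


def posterior_pooled_effect(effects, variances, tau2=0.0, prior_mean=0.0, prior_var=1.0):
    """Hypothetical helper: conjugate normal-normal update for a pooled effect mu.

    Assumes each study estimate y_i ~ Normal(mu, v_i + tau2), with the
    within-study variances v_i and the between-study variance tau2 treated
    as known, and a Normal(prior_mean, prior_var) prior on mu.
    Returns the posterior mean and posterior standard deviation of mu.
    """
    if len(effects) != len(variances):
        raise ValueError("effects and variances must have the same length")
    # Start from the prior: its precision and its precision-weighted mean
    precision = 1.0 / prior_var
    weighted_sum = prior_mean / prior_var
    for y, v in zip(effects, variances):
        w = 1.0 / (v + tau2)  # precision contributed by one study
        precision += w
        weighted_sum += w * y
    return weighted_sum / precision, math.sqrt(1.0 / precision)


# Hypothetical example: two small studies with standardized effects 0.2 and 0.4
mean, sd = posterior_pooled_effect([0.2, 0.4], [0.04, 0.04], tau2=0.0, prior_mean=0.0, prior_var=1.0)
print(f"posterior mean = {mean:.3f}, posterior SD = {sd:.3f}")
# prints: posterior mean = 0.294, posterior SD = 0.140
```

With only two studies, the prior pulls the estimate (0.294) toward zero relative to the simple inverse-variance average of 0.3; a more diffuse prior (larger `prior_var`) reduces that shrinkage, and a larger `tau2` widens the posterior to reflect heterogeneity between studies.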

Reporting guidelines for systematic reviews could also include criteria that encourage authors to specify the policy and practice implications of their findings. Decision makers have reported that including summaries with policy recommendations in reviews can facilitate the use of evidence (Innvaer, Vist, Trommald, & Oxman, 2002). The PRISMA statement (Moher, Liberati, Tetzlaff, & Altman, 2009), a reporting guideline for systematic reviews, asks authors to provide a general interpretation of the results in the context of other evidence and implications for future research, and to consider the relevance of the findings to key groups. However, PRISMA, or the guidance for other systematic review reporting and assessment tools, could be expanded to address explicitly the degree to which authors provide contextual information about implementation factors, cost considerations, applicability to diverse populations, policy considerations, and use in developing practice recommendations.

Conclusion

Systematic reviews are valuable for constructing technical packages for STLT agency practitioners that describe evidence-based strategies, along with their estimated costs and impacts. Technical packages are a new development in injury and violence prevention. As the concept evolves across public health, it will be important to share lessons learned across health topics, including how technical packages have influenced strategy adoption, implementation, and health impact. Evaluations can test their utility and fill gaps in our knowledge about how to disseminate and implement evidence-based strategies (Brownson, Dreisinger, Colditz, & Proctor, 2012). As injury and violence prevention technical packages are developed and communities implement them, it is critical to collect data to evaluate local use and to determine needs for modification and supplementary technical assistance. As the evidence base grows, a revision loop should be implemented so that technical packages continue to provide the most up-to-date evidence and implementation guidance for communities.

Footnotes

Author Note: The conclusions in this report are those of the authors and do not necessarily represent the official position of the Centers for Disease Control and Prevention.

References

- Anderson LM, Petticrew M, Rehfuess E, Armstrong R, Ueffing E, Baker P, … Tugwell P. Using logic models to capture complexity in systematic reviews. Research Synthesis Methods. 2011;2(1):33–42. doi: 10.1002/jrsm.32.

- Balas E, Boren SA. Managing Clinical Knowledge for Health Care Improvement. Yearbook of Medical Informatics National Library of Medicine. 2000;2000:65–70.

- Bamberger M. Introduction to mixed methods in impact evaluation. Impact Evaluation Notes. 2012;3:1–38.

- Basile KC, DeGue S, Jones K, Freire K, Dills J, Smith SG, Raiford JL. STOP SV: A Technical Package to Prevent Sexual Violence. Atlanta, GA: National Center for Injury Prevention and Control, Centers for Disease Control and Prevention; 2016.

- Bergen G, Pitan A, Qu S, Shults RA, Chattopadhyay SK, Elder RW … Community Preventive Services Task Force. Publicized sobriety checkpoint programs: a Community Guide systematic review. American Journal of Preventive Medicine. 2014;46(5):529–39.

- Braveman P. When do we know enough to recommend action? The need to be bold but not reckless. Journal of Epidemiology and Community Health. 2007;61(11):931.

- Briss PA, Zaza S, Pappaioanou M, Fielding J, Wright-De Aguero L, Truman BI, … Harris JR. Developing an evidence-based guide to community preventive services: methods. American Journal of Preventive Medicine. 2000;18(1):35–43. doi: 10.1016/s0749-3797(99)00119-1.

- Brownson RC, Baker EA, Leet TL, Gillespie KN. Evidence-based public health. New York, NY: Oxford University; 2003.

- Brownson RC, Dreisinger M, Colditz GA, Proctor EK. The path forward in dissemination and implementation research. In: Brownson RC, Colditz GA, Proctor EK, editors. Dissemination and implementation research in health: Translating science to practice. New York: Oxford; 2012. pp. 498–508.

- Brownson RC, Fielding JE, Maylahn CM. Evidence-based public health: A fundamental concept for public health practice. Annual Review of Public Health. 2009;30:175–201. doi: 10.1146/annurev.publhealth.031308.100134.

- Center for the Study and Prevention of Violence. Blueprints for healthy youth development. n.d. Retrieved October 19, 2014, from http://www.colorado.edu/cspv/blueprints/

- Centers for Disease Control and Prevention. The guide to community preventive services: The Community Guide. n.d. Retrieved September 4, 2014, from http://www.thecommunityguide.org/index.html.

- Centers for Disease Control and Prevention. Vital signs: Overdoses of prescription opioid pain relievers – United States, 1999–2008. MMWR. 2011;60(43):1487–1492.

- Centers for Disease Control and Prevention. Web-based Injury Statistics Query and Reporting System (WISQARS). Atlanta, GA: Centers for Disease Control and Prevention, National Center for Injury Prevention and Control; 2014. Retrieved October 19, 2014, from http://www.cdc.gov/injury/wisqars/index.html.

- Centers for Disease Control and Prevention. Wide-ranging online data for epidemiologic research (WONDER). Atlanta, GA: Centers for Disease Control and Prevention, National Center for Health Statistics; 2016. Retrieved from http://wonder.cdc.gov.

- Da Silva NR, Zaranyika H, Langer L, Randall N, Muchiri E, Stewart R. Making the most of what we already know: A three-stage approach to systematic reviewing. Evaluation Review. 2016. doi: 10.1177/0193841X16666363.

- David-Ferdon C, Simon TR. Preventing youth violence: opportunities for action. Atlanta, GA: Centers for Disease Control and Prevention; 2014.

- Davies P, Boruch R. The Campbell Collaboration does for public policy what Cochrane does for health. British Medical Journal. 2001;323(7308):294–295. doi: 10.1136/bmj.323.7308.294.

- Dinh-Zarr TB, Sleet DA, Shults RA, Zaza S, Elder RW, Nichols JL, Thompson RS, Sosin DM; Task Force on Community Preventive Services. Reviews of evidence regarding interventions to increase the use of safety belts. American Journal of Preventive Medicine. 2001;21(4S):48–65. doi: 10.1016/s0749-3797(01)00378-6.

- Dobbins M, Robeson P, Ciliska D, Hanna S, Cameron R, O’Mara L, … Mercer S. A description of a knowledge broker role implemented as part of a randomized controlled trial evaluating three knowledge translation strategies. Implementation Science. 2009;4(23):1–16. doi: 10.1186/1748-5908-4-23.

- Dowell D, Kunins HV, Farley T. Opioid analgesics – risky drugs, not risky patients. JAMA. 2013;309(21):2219–2220. doi: 10.1001/jama.2013.5794.

- Dubray J, Schwartz R, Chaiton M, O’Connor S, Cohen JE. The effect of MPOWER on smoking prevalence. Tobacco Control. 2014;0:1–3. doi: 10.1136/tobaccocontrol-2014-051834.

- Elder RW, Nichols JL, Shults RA, Sleet DA, Barrios LC, Compton R; Task Force on Community Preventive Services. Effectiveness of school-based programs for reducing drinking and driving and riding with drinking drivers: A systematic review. American Journal of Preventive Medicine. 2005;28(5S):288–304. doi: 10.1016/j.amepre.2005.02.015.

- Elder RW, Shults RA, Sleet DA, Nichols JL, Thompson RS, Rajab W; Task Force on Community Preventive Services. Effectiveness of mass media campaigns for reducing drinking and driving and alcohol-involved crashes: A systematic review. American Journal of Preventive Medicine. 2004;27:57–65. doi: 10.1016/j.amepre.2004.03.002.

- Epstein D, Klerman JA. When is a program ready for rigorous impact evaluation? The role of a falsifiable logic model. Evaluation Review. 2012;36:375–401. doi: 10.1177/0193841X12474275.

- Fagan AA, Arthur MW, Hanson K, Briney JS, Hawkins JD. Effects of Communities that Care on the adoption and implementation fidelity of evidence-based prevention programs in communities: Results from a randomized controlled trial. Prevention Science. 2011;12(3):223–234. doi: 10.1007/s11121-011-0226-5.

- Fixsen DL, Blase KA, Naoom SF, Wallace F. Core implementation components. Research on Social Work Practice. 2009;19(5):531–540.

- Fixsen DL, Naoom SF, Blase KA, Friedman RM, Wallace F. Implementation research: A synthesis of the literature. Tampa, FL: University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Research Network; 2005.

- Fortson BL, Klevens J, Merrick MT, Gilbert LK, Alexander SP. Preventing child abuse and neglect: A technical package for policy, norm, and programmatic activities. Atlanta, GA: National Center for Injury Prevention and Control, Centers for Disease Control and Prevention; 2016.

- Frieden TR. A framework for public health action: the health impact pyramid. American Journal of Public Health. 2010;100(4):590–595. doi: 10.2105/AJPH.2009.185652.

- Frieden TR. Six components necessary for effective public health program implementation. American Journal of Public Health. 2014;104(1):17–22. doi: 10.2105/AJPH.2013.301608.

- Glasgow RE, Emmons KM. How can we increase translation of research into practice? Types of evidence needed. Annual Review of Public Health. 2007;28:413–433. doi: 10.1146/annurev.publhealth.28.021406.144145.

- Glasgow RE, Lichtenstein E, Marcus AC. Why don’t we see more translation of health promotion research to practice? Rethinking the efficacy-to-effectiveness transition. American Journal of Public Health. 2003;93:1261–1267. doi: 10.2105/ajph.93.8.1261.

- Glasziou PP, Chalmers I, Green S, Michie S. Intervention synthesis: A missing link between a systematic review and practical treatment(s). PLOS Medicine. 2014;11(8):e1001690. doi: 10.1371/journal.pmed.1001690.

- Goesling B, Oberlander S, Trivits L. High-stakes systematic reviews: A case study from the field of teen pregnancy prevention. Evaluation Review. 2016. doi: 10.1177/0193841X16664658.

- Grimshaw JM, Santesso N, Cumpston M, Mayhew A, McGowan J. Knowledge for knowledge translation: The role of the Cochrane collaboration. Journal of Continuing Education in the Health Professions. 2006;26(1):55–62. doi: 10.1002/chp.51.

- Haegerich TM, Dahlberg LL, Simon TR, Baldwin GT, Sleet DA, Greenspan AI, Degutis LC. Prevention of injury and violence in the USA. The Lancet. 2014;384(9937):64–74. doi: 10.1016/S0140-6736(14)60074-X.

- Haegerich TM, Gorman-Smith D, Wiebe DJ, Yonas M. Advancing research in youth violence prevention to inform evidence-based policy and practice. Injury Prevention. 2010;16(5):358. doi: 10.1136/ip.2010.029009.

- Haegerich TM, Paulozzi LJ, Manns BJ, Jones CM. What we know, and don’t know, about the impact of state policy and systems-level interventions on prescription drug overdose. Drug and Alcohol Dependence. 2014. doi: 10.1016/j.drugalcdep.2014.10.001.

- Harris RP, Helfand M, Woolf SH, Lohr KN, Mulrow CD, Teutsch SM, Atkins D; Methods Work Group, Third US Preventive Services Task Force. Current methods of the US Preventive Services Task Force: A review of the process. American Journal of Preventive Medicine. 2001;20(3):21–35. doi: 10.1016/s0749-3797(01)00261-6.

- Hawkins JD, Oesterle S, Brown EC, Monahan KC, Abbott RD, Arthur MW, Catalano RF. Sustained decreases in risk exposure and youth problem behaviors after installation of the Communities That Care prevention system in a randomized trial. Archives of Pediatrics and Adolescent Medicine. 2012;166(2):141–148. doi: 10.1001/archpediatrics.2011.183.

- Higgins JPT, Green S. Cochrane handbook for systematic reviews of interventions. West Sussex: John Wiley & Sons; 2008.

- Innvaer S, Vist G, Trommald M, Oxman A. Health policy-makers’ perceptions of their use of evidence: A systematic review. Journal of Health Services Research and Policy. 2002;7(4):239–244. doi: 10.1258/135581902320432778.

- Institute of Medicine. Crossing the quality chasm: A new health system for the 21st century. Washington, DC: National Academies; 2001.

- Institute of Medicine. Finding what works in health care: Standards for systematic reviews. Washington, DC: National Academies; 2011.

- Kenan K, Mack K, Paulozzi L. Trends in prescriptions for oxycodone and other commonly used opioids in the United States, 2000–2010. Open Medicine. 2012;6(2):e41–e47.

- Kirschner N, Ginsburg J, Sulmasy LS. Prescription drug abuse: Executive summary of a policy position paper from the American College of Physicians. Annals of Internal Medicine. 2014;160(3):198–200. doi: 10.7326/M13-2209.

- Klein KJ, Sorra JS. The challenge of innovation implementation. Academy of Management Review. 1996;21:1055–1080.

- Lavis J, Davies H, Oxman A, Denis JL, Golden-Biddle K, Ferlie E. Towards systematic reviews that inform health care management and policy-making. Journal of Health Services Research and Policy. 2005;10(Suppl 1):35–48. doi: 10.1258/1355819054308549.

- MacDonald J, Golinelli D, Stokes RJ, Bluthenthal R. The effect of business improvement districts on the incidence of violent crimes. Injury Prevention. 2010;16(5):327–332. doi: 10.1136/ip.2009.024943.

- Masho SW, Bishop DL, Edmonds T, Farrell AD. Using surveillance data to inform community action: The effect of alcohol sale restrictions on intentional injury related ambulance pickups. Prevention Science. 2014;15(1):22–30. doi: 10.1007/s11121-013-0373-y.

- Meyer M. The rise of the knowledge broker. Science Communication. 2010;32(1):118–127.

- Mihalic SF, Elliott DS. Evidence-based programs registry: Blueprints for Healthy Youth Development. Evaluation and Program Planning. 2015;48:124–131. doi: 10.1016/j.evalprogplan.2014.08.004.

- Mihalic SF, Irwin K. Blueprints for Violence Prevention: From research to real-world settings – Factors influencing the successful replication of model programs. Youth Violence and Juvenile Justice. 2003;1(4):307–329.

- Moher D, Liberati A, Tetzlaff J, Altman DG; The PRISMA Group. Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. PLOS Medicine. 2009;6:e1000097. doi: 10.1371/journal.pmed.1000097.

- National Highway Traffic Safety Administration. Fatality Analysis Reporting System. Retrieved October 15, 2014, from http://www.nhtsa.gov/FARS.

- Noonan RK, Emshoff JE. Translating research to practice: Putting “what works” to work. In: DiClemente RJ, Salazar LF, Crosby RA, editors. Health behavior theory for public health: Principles, foundations, and applications. Burlington, MA: Jones and Bartlett; 2013. pp. 309–334.

- Office of Justice Programs, US Department of Justice. CrimeSolutions.gov. n.d. Retrieved October 19, 2014, from http://www.crimesolutions.gov/

- Paulsell D, Thomas J, Monahan S, Seftor N. A trusted source of information: How systematic reviews can support user decisions about adopting evidence-based programs. Evaluation Review. 2016. doi: 10.1177/0193841X16665963.

- Puddy RW, Wilkins N. Understanding Evidence Part 1: Best Available Research Evidence. A Guide to the Continuum of Evidence of Effectiveness. Atlanta, GA: Centers for Disease Control and Prevention; 2011.

- Raghavan R. The role of economic evaluation in dissemination and implementation research. In: Brownson RC, Colditz GA, Proctor EK, editors. Dissemination and implementation research in health: Translating science to practice. New York: Oxford; 2012. pp. 94–113.

- Roen K, Arai L, Roberts H, Popay J. Extending systematic reviews to include evidence on implementation: Methodological work on a review of community-based initiatives to prevent injuries. Social Science and Medicine. 2006;63(4):1060–1071. doi: 10.1016/j.socscimed.2006.02.013.

- Rogers E. Diffusion of innovations. New York: The Free Press; 1962.

- Shults RA, Elder RW, Nichols JL, Sleet DA, Compton R, Chattopadhyay SK; Task Force on Community Preventive Services. Effectiveness of multicomponent programs with community mobilization for reducing alcohol-impaired driving. American Journal of Preventive Medicine. 2009;37(4):360–371. doi: 10.1016/j.amepre.2009.07.005.

- Shults RA, Elder RW, Sleet DA, Nichols JL, Alao MA, Carande-Kulis VG, Zaza S, Sosin DM, Thompson RS; Task Force on Community Preventive Services. Reviews of evidence regarding interventions to reduce alcohol-impaired driving. American Journal of Preventive Medicine. 2001;21(4S):66–88. doi: 10.1016/s0749-3797(01)00381-6.

- Sloboda Z, Dusenbury L, Petras H. Implementation science and the effective delivery of evidence-based prevention. In: Sloboda Z, Petras H, editors. Defining Prevention Science. New York: Springer; 2014. pp. 293–314.

- Sogolow ES, Sleet DA, Saul J. Dissemination, implementation, and widespread use of injury prevention interventions. In: Doll L, Bonzo S, Mercy J, Sleet D, editors. Handbook of injury and violence prevention. New York: Springer; 2007. pp. 493–510.

- Straus SE, Tetroe J, Graham I. Defining knowledge translation. Canadian Medical Association Journal. 2009;181(3–4):165–168. doi: 10.1503/cmaj.081229.

- Substance Abuse and Mental Health Services Administration. National registry of evidence-based programs and practices (NREPP). n.d. Retrieved October 19, 2014, from http://www.nrepp.samhsa.gov/

- US Department of Health and Human Services. Youth violence: a report of the surgeon general. Rockville, MD: US Department of Health and Human Services, Centers for Disease Control and Prevention, National Center for Injury Prevention and Control; Substance Abuse and Mental Health Services Administration, Center for Mental Health Services; National Institutes of Health, National Institute of Mental Health; 2001.

- Valentine JC, Wilson SJ, Rindskopf D, Lau TS, Tanner-Smith EE, Yeide M, LaSota R, Foster L. The challenge of synthesis when there are only a few studies to review. Evaluation Review. 2016. doi: 10.1177/0193841X16674421.

- Wallace J, Nwosu B, Clarke M. Barriers to the uptake of evidence from systematic reviews and meta-analyses: A systematic review of decision makers’ perceptions. BMJ Open. 2012;2(5):1–13. doi: 10.1136/bmjopen-2012-001220.

- Wandersman A, Duffy J, Flaspohler P, Noonan R, Lubell K, Stillman L, Saul J. Bridging the gap between prevention research and practice: The interactive systems framework for dissemination and implementation. American Journal of Community Psychology. 2008;41(3–4):171–181. doi: 10.1007/s10464-008-9174-z.

- Wandersman A, Imm P, Chinman M, Kaftarian S. Getting to outcomes: A results-based approach to accountability. Evaluation and Program Planning. 2000;23(3):389–395.

- Ward V, House A, Hamer S. Knowledge brokering: the missing link in the evidence to action chain? Evidence & Policy: A Journal of Research, Debate, and Practice. 2009;5(3):267–279. doi: 10.1332/174426409X463811.

- Washington State Institute for Public Policy. Cost-benefits results. n.d. Retrieved October 19, 2014, from http://www.wsipp.wa.gov/BenefitCost.

- Webster DW, Whitehill JM, Vernick JS, Curriero FC. Effects of Baltimore’s Safe Streets program on gun violence: A replication of Chicago’s CeaseFire program. Journal of Urban Health. 2012;90(1):27–40. doi: 10.1007/s11524-012-9731-5.

- Zaza S, Sleet DA, Thompson RS, Sosin DM, Bolen JC; Task Force on Community Preventive Services. Reviews of evidence regarding interventions to increase use of child safety seats. American Journal of Preventive Medicine. 2001;21(4S):31–47. doi: 10.1016/s0749-3797(01)00377-4.