Abstract

Population health research offers numerous examples of work that erroneously forces the causes of health to sum to 100%. This is surprising: clear refutations of this error extend back 80 years. Because public health analysis, action, and allocation of resources are ill served by faulty methods, I consider why this error persists.

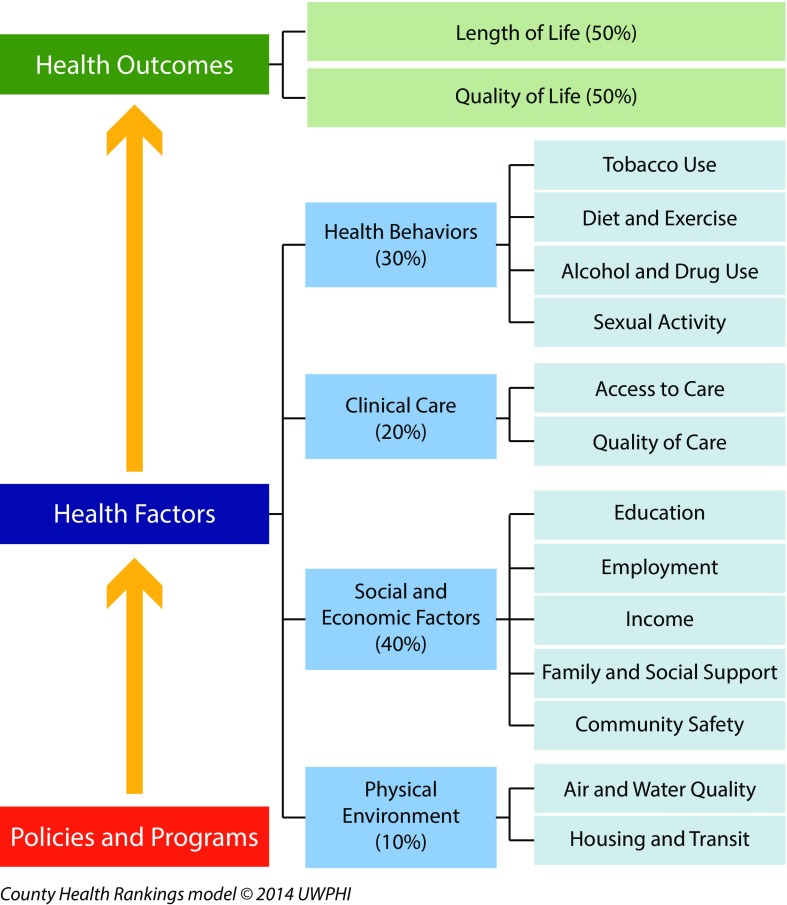

I first review several high-profile examples, including Doll and Peto’s 1981 opus on the causes of cancer and its current interpretations; a 2015 high-publicity article in Science claiming that two thirds of cancer is attributable to chance; and the influential Web site “County Health Rankings & Roadmaps: Building a Culture of Health, County by County,” whose model sums causes of health to equal 100%: physical environment (10%), social and economic factors (40%), clinical care (20%), and health behaviors (30%).

Critical analysis of these works and earlier historical debates reveals that underlying the error of forcing causes of health to sum to 100% is the still dominant but deeply flawed view that causation can be parsed as nature versus nurture. Better approaches exist for tallying risk and monitoring efforts to reach health equity.

Can the causes of population health be parsed into components that add up to 100%? For example, can they be divvied up into X% social and (100 − X)% biological (or, more specifically, genetic)? These questions are consequential for how health professionals and the broader public conceptualize determinants of health, and for policy decisions about allocation of resources to improve population health and promote health equity.

The quick answer is “no”—causes of health cannot be forced to sum to 100%. For at least 80 years, since the mid-1930s, the scientific and mathematical argument has been clear: to the extent that multiple factors contribute, independently and synergistically, to the causal pathways leading to any particular health outcome, the sum of their contributions must exceed 100%.1–8 Moreover, the percentage of variation in outcomes that is “explained” by particular factors is not equivalent to the proportion of risk causally attributable to these factors.1–4,8

Even so, the contemporary literature is studded with high-profile examples of works premised on the view that the causes of health sum to 100% and that also elide distinctions between explaining variation and quantifying causal impact. Three examples that I will discuss pertain to Sir Richard Doll and Sir Richard Peto’s initial 1981 opus on the causes of cancer9 and its subsequent revision10; a 2015 high-publicity article in Science claiming two thirds of cancer is attributable to chance11; and several influential projects that seek to monitor and rank the health status and health determinants of US states and counties by using an approach that assumes that social, economic, medical, behavioral, and biological causes of health can be partitioned and summed to 100%.12,13

Why might such errors abound—especially in this era of quantitative, evidence-based approaches to public health and medicine?14,15 And why does what might, at first glance, seem like an arcane technical issue matter?

I present the novel argument that this problem persists because of entrenched views that causally pit nature versus nurture.1–3 What appears to be, at one level, a technical debate, is in fact much deeper—and deeply worrisome. Public health is ill served by inaccurate science. We cannot claim our arguments are evidence-based if the evidence is distorted to imply that causes of health sum to 100%. As an alternative, I present more mathematically tenable and transparent approaches that are available to analyze the determinants of population health and their contributions to health equity.

FORCING CAUSES OF HEALTH TO SUM TO 100%

Common to the 3 examples I present are 2 errors. One involves the misuse of population attributable fractions. The other is erroneously equating explaining variation with explaining causation.

Example 1: “The Causes of Cancer”

There is perhaps no better introduction to the tension between portraying the causes of risk as adding up to 100% and knowing that, if there are multiple causes, the sum must be more than 100%, than the classic 1981 article by Doll and Peto, “The Causes of Cancer: Quantitative Estimates of Avoidable Risks of Cancer in the United States Today” (Table 1).9 This article was commissioned by the US government to help inform priorities for the vividly named War on Cancer, declared 10 years earlier, in 1971, under the Nixon administration.

TABLE 1—

Doll and Peto’s “The Causes of Cancer” and the Changing Sum of Population Attributable Fractions: 1981 vs 1985

| Factor | 1981: Best Estimate, % | 1981: Range of Acceptable Estimates, % | 1985: Best Estimate, % | 1985: Range of Acceptable Estimates, % |
|---|---|---|---|---|
| Tobacco | 30 | 25–40 | 30 | 25–40 |
| Alcohol | 3 | 2–4 | 3 | 2–4 |
| Diet | 35 | 10–70 | 35 | 10–70 |
| Food additives | < 1 | −5 to 2 | < 1 | −5 to 2 |
| Reproductive and sexual behavior | 7 | 1–13 | Sexual behavior: 1 | 1 |
| Yet-to-be-discovered analogs of reproductive factors | | | Up to 6 | 0–12 |
| Occupation | 4 | 2–8 | 4 | 2–8 |
| Pollution | 2 | < 1–5 | 2 | 1–5 |
| Industrial products | < 1 | < 1–2 | < 1 | < 1–2 |
| Medicines and medical procedures | 1 | 0.5–3 | 1 | 0.5–3 |
| Geophysical factors | 3 | 2–4 | 3 | 2–4 |
| Infection | 10? | 1–? | 10? | 1–? |
| Unknown | ? | ? | ? | ? |
| Total | | | 200 or moreᵃ | |

Note. The 1981 columns reproduce the initial presentation,9(p1256) “Proportions of cancer deaths attributed to various different factors”; the 1985 columns reproduce the revised presentation,10(p12) “Percentage of all U.S. or U.K. cancer deaths that might be avoidable.”

ᵃThe 1981 table did not provide a row for “total”; however, the percentages of “best estimates” sum to 97%, not including the unknown “?.” By contrast, the 1985 version of the table stated, “Since once cancer may have two or more causes, the grand total in such a table will probably, when more knowledge is available, greatly exceed 200. (It is merely a coincidence that the suggested figures … happen to add up to about 100.)”10

The approach used by Doll and Peto to apportion risk was what may be termed the population attributable fraction (PAF). This concept was independently proposed in 1951 by Doll and by Cornfield, and in 1953 by Levin.7 Ever since, the epidemiological literature has had lively discussion on what this concept means and what formulas should be used for its computation.5–7 Indeed, in 2015, an entire issue of Annals of Epidemiology focused on the PAF.7

The basic idea is to calculate the percentage of cases that would have been prevented if everyone had the same risk of exposure as persons with the most favorable exposure (which potentially may be no exposure).5–7 The calculation of the PAF thus depends on both the prevalence of exposure and the risk associated with the exposure (noting that it matters if this risk estimate does or does not take into account confounding—in other words, the presence of other factors that account for the observed exposure–outcome association but are not themselves affected by the exposure5,6).

A deceptively simple formula used to calculate the PAF in the presence of confounding is6

$$\text{PAF} = p_d \times \frac{RR - 1}{RR},$$

where $p_d$ equals the proportion of cases exposed to the risk factor, and RR equals the adjusted relative risk for exposure to that risk factor. For example, if the adjusted RR equaled 3, and the proportion of cases exposed to the risk factor was 30%, the PAF would equal 20% (0.30 × 2/3); if the adjusted RR equaled 2 and the proportion of cases exposed were the same (30%), the PAF would equal 15% (0.30 × 1/2).
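The two worked examples can be checked with a few lines of Python. This is a minimal sketch of the case-based formula PAF = p_d × (RR − 1)/RR (often credited to Miettinen); the function name `paf_case_based` is hypothetical, and the RR passed in is assumed to be a confounder-adjusted estimate obtained elsewhere, so the sketch inherits every validity threat discussed below.

```python
def paf_case_based(p_cases_exposed: float, adjusted_rr: float) -> float:
    """Case-based population attributable fraction: PAF = p_d * (RR - 1) / RR.

    p_cases_exposed: proportion of cases exposed to the risk factor (p_d).
    adjusted_rr: confounder-adjusted relative risk for the exposure.
    """
    if not 0.0 <= p_cases_exposed <= 1.0:
        raise ValueError("p_cases_exposed must lie in [0, 1]")
    if adjusted_rr <= 0:
        raise ValueError("adjusted_rr must be positive")
    return p_cases_exposed * (adjusted_rr - 1.0) / adjusted_rr


# The two examples from the text: 30% of cases exposed, adjusted RR of 3 or 2.
for rr in (3.0, 2.0):
    print(f"RR = {rr}: PAF = {paf_case_based(0.30, rr):.0%}")
# RR = 3.0: PAF = 20%
# RR = 2.0: PAF = 15%
```

Note that the PAF is bounded above by p_d: however large the RR, an exposure cannot account for more cases than are exposed.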

Many well-known threats exist to valid calculation and interpretation of the PAF.5–7 Apart from issues of confounding, questions abound, including (1) Is the underlying assumption of a true causal mechanism sound?; (2) How is measurement of the exposure and outcome affected by misclassification and uncertainty?; and (3) How is time accounted for? (e.g., in relation to the life course timing and duration of the exposure, the etiologic period, and the time period after exposure during which cases are accrued). None of these are simple questions with simple answers.

Even if ideal data existed to compute a PAF accurately, however, the critical point is that if different causes produce an outcome, the sum of the PAFs for the separate causes necessarily must exceed 100%.5–7 Why? Consider the classic case of smoking, asbestos, and lung cancer. As concisely explained by Greenland and Robins in 1988, “if some persons develop lung cancer solely because of their exposure to asbestos and smoking, such persons will contribute to the excess and etiologic fractions for both asbestos and smoking,” and in such a scenario, the attributable risks among the jointly exposed “can (and in fact do) sum to more than one.”5(p1193) Rather than consider this a problem, a 2002 report from the World Health Organization optimistically averred, “the key message of multicausality is that different sets of interventions can produce the same goal”16(p18) (Table A, available as a supplement to the online version of this article at http://www.ajph.org).
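Greenland and Robins's point can be made concrete with a toy sufficient-cause tally. The case counts below are invented for illustration, and the sketch assumes that each case arises through exactly one causal pathway and that removing an exposure prevents every case whose pathway requires it (and no other case). Under those assumptions, the attributable fractions for smoking, asbestos, and all other causes sum to well over 100%.

```python
from fractions import Fraction

# Hypothetical lung cancer cases, grouped by the single pathway that produced each one.
# Each key lists the named component causes that pathway requires; unnamed
# complementary components are left implicit.
CASES_BY_PATHWAY = {
    frozenset({"smoking"}): 100,
    frozenset({"asbestos"}): 50,
    frozenset({"smoking", "asbestos"}): 150,  # cases that need BOTH exposures
    frozenset({"other"}): 100,
}


def attributable_fraction(exposure, cases_by_pathway=CASES_BY_PATHWAY):
    """Fraction of all cases that would not occur if `exposure` were removed,
    assuming removal blocks every pathway that requires it and nothing else."""
    total = sum(cases_by_pathway.values())
    prevented = sum(n for pathway, n in cases_by_pathway.items() if exposure in pathway)
    return Fraction(prevented, total)


pafs = {cause: attributable_fraction(cause) for cause in ("smoking", "asbestos", "other")}
for cause, paf in pafs.items():
    print(f"{cause:9s} {paf} ({float(paf):.1%})")
print("sum:", sum(pafs.values()))  # 11/8, i.e., 137.5%
```

The 150 jointly caused cases are counted in full by both the smoking and the asbestos fractions, which is exactly why the total exceeds one.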

In Doll and Peto’s 1981 article, however, they transformed multicausality into a statistical nuisance, stating that “If a variety of factors commonly interact to produce cancer, this may greatly facilitate prevention of the disease, but it complicates the attribution of risk.”9(p1219) Thus, despite being well aware that causal interaction between 2 agents meant “that it is inappropriate to present a neat balance sheet adding up to 100% indicating the proportion of all cancers that are preventable by strategies X, Y, and Z,”9(p1220) in their famous table, shown in Table 1, they did just this, whereby the “proportions of cancer deaths attributed to various different factors” tallied to just under 100%.9

As justification for their approach, Doll and Peto problematically posited that “the proportion of present-day U.S. cancer death rates that can be prevented by various separate methods is small.”9(p1220) This questionable assumption allowed them to state,

We shall therefore ignore the anomaly in the rest of this paper and hope that, once it has been pointed out, no one will fall into the trap of adding together proportions that are not, in fact, mutually exclusive. 9(p1220)

Their caveat, however, appears to have gone unheeded: their PAFs were, and remain, widely quoted and interpreted as summing to 100% in both peer-reviewed scientific articles and the popular media.17,18

Interestingly, in an update published by Peto in 1985, the table presented virtually identical PAFs, but included 1 radical change: a new line for “total” percentage, equaling “200 or more,” with the footnote acknowledging that PAFs can sum to more than 100% when a cancer has 2 or more causes10(p12) (Table 1). The chapter also amplified a key point only alluded to in the 1981 article:

Cancer arises from three things: nature, nurture, and luck. In large populations nature and luck seem generally to average out and only nurture remains. So, study of cancer rates in relation to nurture tells us about the role of external factors, which is, both for government policy and for individual choices, the only thing of practical importance. 10(p6)

This cleavage of “nature, nurture, and luck” is fundamental to the rest of this analysis.

Example 2: Two Thirds of Cancer Due to Chance

In January 2015, an article published in Science by Tomasetti and Vogelstein11 received enormous publicity—and backlash—for its controversial claim that two thirds of cancer is attributable to chance.19 Eliciting both admiration20 and caustic criticism8 in major scientific journals, the article’s relevance lies in its appeal to the idea that causes must sum to 100%.

In brief, Tomasetti and Vogelstein posited that variation in cancer risk among organs (e.g., why colon cancer is common and bone cancer is rare) is the result of chance mutations arising from stem cell divisions (see the box on the next page).11(p78) Considering a selected number of cancer sites, they found that “the lifetime risk of cancers of many different types is strongly correlated (r = 0.81) with the total number of divisions of the normal self-renewing cells maintaining that tissue’s homeostasis.”11(p78) They then squared this correlation to obtain an R² value (0.81 × 0.81 ≈ 0.65), leading them to assert that “65% (39% to 81%; 95% CI [confidence interval]) of the differences in cancer risk among different tissues can be explained by the total number of stem cell divisions in those tissues.”11(p78) Premised on the view that causes sum to 100%, they concluded that if 65% of cancer risk is attributable to chance (i.e., chance errors occurring in stem cell division), then “only a third of the variation in tissue risk among tissues is attributable to environmental factors or inherited predispositions.”11(p78)

Is Explaining 100% of Variation the Same as Accounting for Causal Contributions? The Illustrative Case of Why the Claim Two Thirds of Cancer Is Attributable to Chance Is Spurious

| a. Yes: Tomasetti and Vogelstein (2015)11 |

| Abstract. “Some tissue types give rise to human cancers millions of times more often than other tissue types. Although this has been recognized for more than a century, it has never been explained. Here, we show that the lifetime risk of cancers of many different types is strongly correlated (0.81) with the total number of divisions of the normal self-renewing cells maintaining that tissue’s homeostasis. These results suggest that only a third of the variation in cancer risk among tissues is attributable to environmental factors or inherited predispositions. The majority is due to ‘bad luck,’ that is, random mutations arising during DNA replication in normal, noncancerous stem cells. This is important not only for understanding the disease but also for designing strategies to limit the mortality it causes.”(p78) |

| b. No: Rose (1985)22 |

| “If everyone smoked 20 cigarettes a day, then clinical, case–control and cohort studies alike would lead us to conclude that lung cancer was a genetic disease; and in one sense that would be true, since if everyone is exposed to the necessary agent, then the distribution of cases is wholly determined by individual susceptibility.”(p32) |

| “Within Scotland and other mountainous parts of Britain there is no discernible relation between local cardiovascular death rates and the softness of the public water supply. The reason is apparent if one extends the enquiry to the whole of the UK. In Scotland, everyone's water is soft. . . . Everyone is exposed, and other factors operate to determine the varying risk. |

| Epidemiology is often defined in terms of study of the determinants of the distribution of the disease; but we should not forget that the more widespread is a particular cause, the less it explains the distribution of cases. The hardest cause to identify is the one that is universally present, for then it has no influence on the distribution of disease.”(p32–33) |

| c. No: Weinberg and Zaykin (2015)8 |

| “R2 does not explain much. . . . Consider the following hypothetical thought experiment. Suppose an evil agent exposes the entire US population to a powerful new carcinogen that doubles the incidence of all 31 cancers. One might conclude that the fraction explained by this exposure must be one-half, because half the cases would not have occurred had they not been exposed; one might then reason that the fraction explained by stochastic errors in stem cell division would now be correspondingly smaller. But even with the new two-fold higher incidence numbers, the correlation would not change at all, because the points (all being on a log scale) would rigidly shift upward, each by log (2). The fraction of the variability in log incidence that is ‘explained’ (in the statistical sense) by the number of stem cell divisions would remain at 2-thirds. Similarly, if the population were administered an anticancer vaccine that could prevent the occurrence of half of cancers, regardless of type, the correlation would still be 2-thirds. Clearly one cannot infer from the Tomasetti and Vogelstein data that 2-thirds of cancer is unpreventably due to bad luck.” |

| “One cannot partition causes into fractions that add up to 1. . . . environmental exposures, germ-line genetic variants and random events like replicative errors typically act in concert; the effects cannot be treated as separable. It is a mistake to assume that one can partition etiologic factors into contributions that sum to 1.0, as in the notion that two-thirds of cancers are due to bad luck and therefore at most one-third could be due to environmental and inherited genetic factors.” |

| “Because of joint effects, contributing causes often have attributable fractions that add to more than 1.0. The intellectual disability syndrome secondary to phenylketonuria is a well known example where the fraction attributable to genetics is 1.0, while the fraction attributable to environment is also 1.0, because the outcome requires both a dysfunctional metabolic gene and an environmental exposure (dietary phenylalanine). As another example, the fact that 100% of prostate cancer is due to a stochastic event (the random inheritance of a Y chromosome) does not relieve us of the need to search out other causes, some of which may be preventable.” |
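Weinberg and Zaykin's thought experiment in the box above can be checked numerically. The sketch below uses made-up lifetime risks for six hypothetical tissues (not Tomasetti and Vogelstein's data) and computes R² between log stem cell divisions and log lifetime risk. Multiplying every risk by 2 (the "evil agent") or by 0.5 (the vaccine) shifts every log risk by the same constant, so R² is unchanged even though the number of cases doubles or halves.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical, made-up values for six tissues (illustration only).
LOG10_DIVISIONS = [7.0, 8.5, 9.0, 10.2, 11.0, 12.1]
LIFETIME_RISK = [0.0003, 0.001, 0.004, 0.003, 0.02, 0.05]


def r_squared_vs_divisions(risks):
    """R² of log10(risk) on log10(stem cell divisions), as on a log-log plot."""
    log_risk = [math.log10(r) for r in risks]
    return pearson_r(LOG10_DIVISIONS, log_risk) ** 2


baseline = r_squared_vs_divisions(LIFETIME_RISK)
doubled = r_squared_vs_divisions([2.0 * r for r in LIFETIME_RISK])  # carcinogen doubles every cancer
halved = r_squared_vs_divisions([0.5 * r for r in LIFETIME_RISK])   # vaccine prevents half of every cancer
print(f"R² baseline {baseline:.4f}, doubled {doubled:.4f}, halved {halved:.4f}")
```

The "fraction explained" stays fixed while the burden of disease changes twofold in either direction, which is why R² cannot be read as the share of cancer that is unpreventable.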

The article was immediately subjected to 2 kinds of criticisms.8,19,21 Biological critiques questioned the very data employed (e.g., extrapolation of mouse data on stem cell replication to humans).21 Methodologically, the article was chastised for confusing explaining variation with explaining causation,8 a well-known error famously elaborated in Sir Geoffrey Rose’s classic 1985 article “Sick Individuals and Sick Populations” (see the box on the next page).22

The larger point, articulated in the elegant and concise analysis by Weinberg and Zaykin,8 is that “one cannot partition causes into fractions that add up to 1”8(p3)—and they further challenged the view that “chance” means prevention is not possible. They thus not only recalled Brian MacMahon’s classic 1968 analysis of why phenylketonuria can legitimately be described as a disease whose etiology is 100% genetic and 100% environmental4 (see the box on the next page), but also extended the argument, by pointing out that 100% of prostate cancer could be framed as attributable to a chance (stochastic) event—inheriting a Y chromosome—but this does not mean no other causes are at play, “some of which may be preventable.”8(p3)

Example 3: Summing Health Factors to 100%

Constraining causes to add to 100% also problematically underlies the methodology of several current and highly influential efforts to rank US states and counties in relation to their health profiles and health determinants.12,13 These include County Health Rankings & Roadmaps, which is funded by the Robert Wood Johnson Foundation12 (Figure 1), and America’s Health Rankings, which focuses on state rankings and is sponsored by the United Health Foundation and the American Public Health Association13 (Figure A, available as a supplement to the online version of this article at http://www.ajph.org). Both projects are intended to influence public and policymakers’ awareness of health issues and to guide allocation of resources to improve health rankings and health equity.

FIGURE 1—

Portraying the Determinants of Health as Summing to 100%

Source. Adapted from Remington et al.,23 which “is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly credited.”

Tellingly, for both initiatives, the sum of contributions of component factors equals 100%. For County Health Rankings (CHRs), the contributions of the specified health determinants to the specified health outcomes total 100% as follows: 10% for physical environment, 40% for social and economic factors, 20% for clinical care, and 30% for health behaviors.12 By contrast, for America’s Health Rankings, the 100% is parsed, for a total score, as follows: 25% for behavior, 22.5% for community and environment, 12.5% for policies, 15% for clinical care, and 25% for outcomes.13

To support their choice of percentages, the projects’ Web sites and methodological publications12,13,23 state that they relied on expert input, literature reviews, and weights used by other analogous efforts. One such example is the highly cited 2002 article by McGinnis et al.24 Ranking genetic determinants higher, it stated,

On a population basis, using the best available estimates, the impacts of various domains on early deaths in the United States distribute roughly as follows: genetic predisposition, about 30 percent; social circumstances, 15 percent; environmental exposures, 5 percent; behavioral patterns, 40 percent; and shortfalls in medical care, 10 percent.24(p83)

Having made this allocation, it also called for attention to intersections and interactions between these factors (Table B, available as a supplement to the online version of this article at http://www.ajph.org).

The CHR also provided as justification their “own analysis of the variation of outcomes explained by each factor,”23(p3) an approach problematically replicated by 2 studies seeking to validate the CHR percentages.25,26 In these analyses, the 4 health factors on average explained only 54% of variation in health outcomes between counties within states—a far cry from 100%, with this percentage strikingly ranging from a low of 2.7% for Arizona to a high of 87.2% for Wyoming.26 In addition, the finding that the relative contributions of the 4 factors to this 54% of explained variation “were largest for social and economic factors (47%), followed by health behaviors (34%); clinical care (16%); and physical environment (3%)”26(p132) was curiously interpreted as meaning, “The findings provide support for the CHR model weightings”26(p134)—when the results instead suggest, even by the flawed logic of the approach used, that the CHR weightings should effectively be halved (and a large “unknown” factor should be added).
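Taking the validation study's own figures at face value, the arithmetic behind "effectively halved" is simple: each factor's share of the explained variation must be multiplied by the 54% that was explained at all. The sketch below performs only that rescaling (the dictionary and function names are illustrative); it inherits the flawed variance-explained logic and does not turn these percentages into causal contributions.

```python
# Figures quoted in the text (ref. 26): the 4 factors explained 54% of between-county
# variation, and their relative contributions to that explained share were 47/34/16/3.
EXPLAINED_SHARE = 0.54
RELATIVE_CONTRIBUTION = {
    "social and economic factors": 0.47,
    "health behaviors": 0.34,
    "clinical care": 0.16,
    "physical environment": 0.03,
}
# CHR model weights quoted in the text.
CHR_WEIGHTS = {
    "social and economic factors": 0.40,
    "health behaviors": 0.30,
    "clinical care": 0.20,
    "physical environment": 0.10,
}


def share_of_total_variation(explained, relative):
    """Rescale shares of the explained variation to shares of ALL variation,
    adding the residual as an 'unexplained' category."""
    shares = {factor: explained * part for factor, part in relative.items()}
    shares["unexplained"] = 1.0 - sum(shares.values())
    return shares


shares = share_of_total_variation(EXPLAINED_SHARE, RELATIVE_CONTRIBUTION)
for factor, share in shares.items():
    weight = CHR_WEIGHTS.get(factor)
    weight_txt = f"{weight:.0%}" if weight is not None else "not in model"
    print(f"{factor:30s} {share:6.1%}   CHR weight: {weight_txt}")
```

Against CHR weights of 40%, 30%, 20%, and 10%, the rescaled figures come to roughly 25%, 18%, 9%, and 2%, with 46% of the variation left unexplained.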

The problem by now should be clear. Forcing causes of population health to sum to 100%, whether framed in terms of PAF or via wrongly conflating explaining variation with explaining causation, is not accurate, methodologically or substantively. It is clearly disturbing that such flawed and discredited approaches to reckoning with causal contributions continue to be published in prestigious scientific journals and to be employed in major public health initiatives.

THE ROOT PROBLEM OF NATURE VS NURTURE

Why does this problem of seeking to force causes to sum to 100% persist in public health and medicine, despite multiple refutations? And what are the alternatives?

I submit that one key reason for the endurance of this scientific error is the longstanding preoccupation within science, medicine, and public health of pitting nature against nurture and arguing over which contributes more to shaping individuals’ traits and population health.1–3,27 To the extent that these debates remain politically and ideologically fraught, so too will the scientific literature see repeated rounds of work that seek to make causes add up to 100%, and rebuttals demonstrating the fallacy of this approach. Better data alone will not resolve this issue. What is required instead is clarity about the underlying intellectual and social tensions, to spur better understanding and methods.

In the box on the next page, I provide key examples drawn from the past century’s substantive and statistical debates over quantifying the causal effects of nature and nurture.1–3,28–31 The box starts with Sir Francis Galton’s radical treatment, in 1874, of nature and nurture as disjoint, a view integral to eugenics (a term he coined) and its promotion of better heredity, which in Galton’s view trumped the environment.3,28 It extends to new insights from ecological evolutionary developmental biology (“eco–evo–devo”) that productively challenge the flawed assumptions underpinning the nature versus nurture divide,29,30 building on the insight the renowned experimental embryologist, geneticist, and statistician Lancelot Hogben captured, in 1933, in his novel formulation, “the interdependence of nature and nurture.”1(p91) Aware of the political charge surrounding this issue, Hogben pointedly observed, “Curiously enough, eugenicists who profess to be interested in promoting knowledge about human inheritance bitterly oppose social reforms directed to equalize the human environment.”1(p30)
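Hogben's "inherent relativity" can be illustrated with a toy model in which phenotype equals a genotype value times an environment value, with every genotype meeting every environment equally often (the independence Fisher invoked). The model, the function name `variance_shares`, and all numbers are hypothetical. Holding the genotypes and the causal mechanism fixed and changing only the range of environments, the share of variance "explained" by genotype falls from about 92% to about 29%, and in neither population do the genotype and environment shares sum to 100%, because part of the variance belongs to their interaction.

```python
from itertools import product
from statistics import fmean, pvariance


def variance_shares(genotypes, environments, phenotype):
    """Shares of phenotype variance 'explained' by genotype and by environment
    (main effects only), assuming a balanced design: each genotype meets each
    environment equally often."""
    cells = [(g, e, phenotype(g, e)) for g, e in product(genotypes, environments)]
    total_var = pvariance([y for _, _, y in cells])
    g_means = [fmean([y for g2, _, y in cells if g2 == g]) for g in genotypes]
    e_means = [fmean([y for _, e2, y in cells if e2 == e]) for e in environments]
    return pvariance(g_means) / total_var, pvariance(e_means) / total_var


def multiplicative(g, e):
    """Hypothetical norm of reaction: phenotype = genotype value x environment value."""
    return g * e


GENOTYPES = [1.0, 2.0]
for envs in ([1.0, 1.2], [1.0, 3.0]):  # narrow vs wide range of environments
    g_share, e_share = variance_shares(GENOTYPES, envs, multiplicative)
    print(f"environments {envs}: genotype {g_share:.0%}, "
          f"environment {e_share:.0%}, sum {g_share + e_share:.0%}")
```

Fisher would reply that a logarithmic scale makes this particular product additive; even so, the genetic share on that scale would still depend on how much the environment happens to vary in the population studied.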

Fundamental Debates Over Partitioning “Cause”: “Nature vs Nurture” vs the “Interdependence of Nature & Nurture”—From Galton (1874) to Hogben vs Fisher (1933) to Eco–Evo–Devo (2015)

| a. Sir Francis Galton: among the first (if not the first) to frame nature and nurture as disjoint domains (1874)28 |

| “The phrase ‘nature and nurture’ is a convenient jingle of words, for it separates under two distinct heads the innumerable elements of which personality is composed. Nature is all that a man brings with himself into the world; nurture is every influence from without that affects him after his birth. The distinction is clear: the one produces the infant such as it actually is, including its latent faculties of growth of body and mind; the other affords the environment amid which the growth takes place, by which natural tendencies may be strengthened or thwarted, or wholly new ones implanted.”(p12) |

| “When nature and nurture compete for supremacy on equal terms in the sense to be explained, the former proves the stronger. It is needless to insist that neither is self-sufficient; the highest natural endowments may be starved by defective nurture, while no carefulness of nurture can overcome the evil tendencies of an intrinsically bad physique, weak brain, or brutal disposition.”(p12–13) |

| Insight from Keller (2010)3 |

| “… the assumption already implicit in Francis Galton’s catchy phrase, ‘Nature and Nurture’ (1874) is that there exist two domains, each separate from the other, waiting to be conjoined. Galton was hardly the first to write about nature and nurture as distinguishable concepts, but he may have been the first to treat them as disjoint.”(p11) |

| b. Lancelot Hogben–R.A. Fisher 1933 exchange over partitioning causation in relation to heredity vs environment: Hogben’s emphasis on their interdependence (as informed by his own experiments with fruit flies under different conditions) versus Fisher’s assertion of their independence.29(p738–739, 749) |

| Hogben to Fisher: “Suppose you say that 90 percent of the observed variance is due to heredity, do you mean that the variance would be reduced by 10 per cent, if the environment were uniform? Do you mean that the variance would be reduced by 90 per cent, if all genetic differences were eliminated? Perhaps you might think the question silly; but if you could suggest an alternative form of words, it might help.” |

| Fisher to Hogben: “Dear Hogben, your question is a sound one . . . if each genotype has an equal chance of experiencing with their proper probabilities, each of the available kinds of environments, then the variance is additive, and the statements you have are equivalent.” |

| Hogben to Fisher: “Dear Fisher, I don’t think you quite got the difficulty I am trying to raise. It concerns an inherent relativity in the concepts of nature and nurture.” |

| Fisher to Hogben: “You are on a question of non-linear interactions of environment and heredity . . . it would be very difficult to find a case in which this would be of the least use, as exceptional types of interaction are best treated on their merits, and many become additive or nearly so as to cause no trouble when you choose a more appropriate metric.” |

| Insight from Tabery (2008)29 |

| “R.A. Fisher, one of the founders of population genetics and the creator of statistical analyses of variance, introduced the biometric concept as he attempted to resolve one of the main problems in the biometric tradition of biology – partitioning the relative contribution of nature and nurture responsible for variation in a population. Lancelot Hogben, an experimental embryologist and also a statistician, introduced the developmental concept as he attempted to resolve one of the main problems in the developmental tradition of biology – determining the role that developmental relationships between genotype and environment played in the generation of variation.”(p717) |

| Insight from Keller (2010)3 |

| “ . . . as the Swiss primatologist Hans Kummer remarked some years ago . . . trying to determine how much of a trait is produced by nurture, or how much by genes and how much by environment, is as useless as asking whether the drumming we hear in the distance is made by the percussionist or his instrument . . . the point is a logical one about which there ought, at least in principle, to be no debate: causes that interact in such ways simply cannot be parsed; it makes no sense to ask how much is due to one and how much to the other.”(p7) |

| “Questions about differences between groups require a different kind of analysis than do questions about individuals. . . . For group differences, the question we need to ask is, how much of the variation we hear in the sound of drums is due to variation in drummers, and how much is due to variation in drums? And to answer this question, we must turn to the statistical analysis of populations. Which is precisely how Fisher reformulated Galton’s question, and he was clearly aware of the importance of such a shift. Introducing his very first paper on the topic, Fisher warned that while ‘it is desirable on the one hand that elementary ideas . . . should be clearly understood; and easily expressed in ordinary language,’ nonetheless ‘loose phrases about the ‘percentage of causation’ which obscure the essential distinction between the individual and the population, should be carefully avoided’ (1918, 399–400). Perhaps he even had Galton in mind.”(p54) |

| c. Hogben (1933)1—in which his chapter titled “The interdependence of Nature and Nurture,”(p91) explained the fallacy of equating explaining variation with explaining cause, and argued for an approach to quantify causal impacts akin to the population attributable fraction. |

| “Clearly we are on safe ground when we speak of a genetic difference between two groups measured in one and the same environment or in speaking of difference due to environment when identical stocks are measured under different conditions of development. Are we on equally safe ground when we speak on the contribution of heredity and environment to the measurement of genetically different individuals or groups measured in different kinds of environments? . . . The question is easily seen to be devoid of a definite meaning.”(p97) |

| “On the basis of such statements as the previous quotation about stature [by Fisher], it is often argued that the results of legislation directed towards more equitable distribution of medical care must be small, and that in consequence we must look to selection for any noteworthy improvement in a population. . . . The gross nature of the fallacy is easily seen with the help of a parable. Imagine a city after a prolonged siege or blockade ending over a number of years. The available supplies of food containing the necessary vitamins have long since been exhausted in the open market. Young children still growing are stunted in consequence and weigh on average 20 per cent. less than prewar children. One biochemist has a small stock of crystallized vitamins which he has reserved for his family of 4, who grow up normally. There are, let us say, a million stunted children to 4 healthy ones. A party of rabid environmentalists is clamouring for peace. The Government appoints an official inquiry of statisticians. They report that far less than 1 per cent. of the observed variance with respect to body-weight is due to differences in diet, that the improvement produced by change in diet if peace were made would therefore be negligible, and that eugenic selection would solve the problem of how to keep a community alive with vitamins if the war could be prolonged for a few more millennia. It requires no subtlety to see what is wrong with this conclusion. If only 4 in a million and 4 children had sufficient vitamins for normal growth, the effect of differences in the vitamin content of the diet to the observed variance in the population would be a statistically negligible quantity. In spite of this, the mean bodyweight of the population could be increased by 25 per cent. if all children had received a ration with a vitamin content equivalent to the greatest amount available to any child in the same population.”(p116–117) |

| Related insights from the new field of ecological evolutionary developmental biology (“eco–evo–devo”) about the interdependence of nature and nurture, and the ubiquity of flexible phenotypes. |

| –From Gilbert and Epel (2015)30 |

| “A quiet biological revolution, driven by new technologies in molecular, cell, and developmental biology and ecology has made the biology of the twenty-first century a different science than that of the twentieth. . . . Some unexpected ideas must be integrated into our new thinking about inheritance, development, evolution, and health. These include the following: |

| “ . . . ‘eco-evo-devo’ seeks to bring into evolutionary biology the rules by which an organism’s genes, environment, and development interact to create the variation and selective pressures needed for evolution.”(pxiv) |

| –From Piersma and van Gils (2011)31 |

| “Bodies ‘express ecology’ by being sufficiently plastic, by taking on different structure, form or composition in different environments,” including “phenotypic plasticity expressed by single reproductively mature organisms throughout their life, phenotypic flexibility – reversible within-individual variation.”(p3) |

| How big is a python’s gut or heart?—it depends: “Prey capture . . . elicits a burst of physiological activity, with drastic upregulation of many metabolic processes. Immediately, the heart starts to grow. With a doubling of the rate of heartbeat, and as blood is shunted away from the muscles to the gut, blood flow to the gut increases by an order of magnitude. Within two days, the wet mass of the intestine more than doubles.”(p85) |

| “. . . the older identical twins become, the easier it is to tell them apart! . . . organisms and their particular environments are inseparable: changes in an individual’s shape, size, and capacity will often be direct functions of the ecological demands placed upon them. Bodies express ecology.”(p174) |

As the excerpts in the box additionally make clear, by the mid-1930s the contours of the causal problem were well defined, and the fatal flaw of equating explanation of variation with explanation of causation was well articulated.1–3,29,30 Less appreciated is that in the 1930s new ideas also began to germinate, seeded by public health concerns, that anticipated the formal development of the PAF in the 1950s7 and that point to better ways of quantifying and communicating the impact of diverse factors on population health, a topic central to contemporary initiatives to promote Health in All Policies32 and to advance health equity impact assessment.33

For example, in his 1933 parable (see the box), Hogben tallied what the effect on childhood stunting would be if the vitamin levels of vitamin-deprived children were raised to those of children with adequate vitamin levels.1(p116–117) He pointedly observed that if virtually all children were vitamin-deprived, studies would not find vitamin level to be associated with stunting. His thought experiment thus anticipates Rose’s 1985 insight that uniform exposure across groups means the exposure will not be detected as a cause of group differences in outcomes,22 and it underscores the problems of parsing cause without appreciation of context.
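Hogben's arithmetic can be reproduced in a few lines. The minimal Python sketch below is not from the source: it uses the parable's own proportions (1,000,000 stunted children, 4 adequately fed children, a 20% weight shortfall) plus two assumed values that Hogben does not give, a normal mean weight of 20 kg and a within-group standard deviation of 2 kg. It contrasts the share of weight variance that differences in diet "explain" with the gain in mean weight if every child received the best available ration.

```python
# Hogben's 1933 siege parable, with two ASSUMED values (normal mean weight and
# within-group SD) that the parable itself does not specify.
N_DEPRIVED = 1_000_000      # stunted children (from the parable)
N_ADEQUATE = 4              # the biochemist's children (from the parable)
DEFICIT = 0.20              # stunted children weigh 20% less (from the parable)
NORMAL_MEAN_KG = 20.0       # assumption: mean weight with adequate vitamins
WITHIN_SD_KG = 2.0          # assumption: same within-group SD in both groups


def variance_share_and_mean_gain():
    """Return (share of weight variance due to diet, relative gain in mean weight)."""
    n = N_DEPRIVED + N_ADEQUATE
    p = N_ADEQUATE / n                         # proportion with adequate diet
    deprived_mean = NORMAL_MEAN_KG * (1 - DEFICIT)
    gap = NORMAL_MEAN_KG - deprived_mean       # difference in group means

    # Two groups with equal within-group variance:
    # total variance = within + between, with between = p * (1 - p) * gap**2.
    between = p * (1 - p) * gap ** 2
    within = WITHIN_SD_KG ** 2
    share_due_to_diet = between / (within + between)

    # Counterfactual: every child receives the best available ration.
    current_mean = (1 - p) * deprived_mean + p * NORMAL_MEAN_KG
    mean_gain = NORMAL_MEAN_KG / current_mean - 1
    return share_due_to_diet, mean_gain


share, gain = variance_share_and_mean_gain()
print(f"variance 'explained' by diet: {share:.6%}")
print(f"potential increase in mean weight: {gain:.1%}")
```

Changing the assumed standard deviation changes the variance share but leaves the potential gain in mean weight untouched (about 25%, since restoring a 20% shortfall multiplies weight by 1/0.8). That gap is exactly the difference between explaining variation and quantifying avertable harm.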

AN ALTERNATIVE: HEALTH EQUITY PAFS

The alternative approach sketched by Hogben can be seen in more developed form in a new study that estimates the impact on premature mortality of raising the minimum wage to $15 per hour in New York City.34 Its results (including sensitivity analyses accounting for the impact of such a raise on other income levels and for possible job loss) indicate not only the potential average citywide reduction in premature mortality rates (an estimated 4% to 8% decline, translating to 2800 to 5500 premature deaths prevented) but also that “[m]ost of these avertable deaths would be realized in lower-income communities, in which residents are predominantly people of color.”34(p1036) Analogous methods have been used to estimate the proportion and number of premature deaths that could be averted if everyone had the same (already achieved, not hypothetical) age-specific mortality rates as persons in the most affluent groups.35,36 These estimates do not require summing to 100% the contributions of the myriad specific and interdependent exposure-to-outcome pathways involved.
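The counterfactual calculation behind such estimates can be sketched as follows. This is a hypothetical illustration, not the method or data of the cited studies: the three income groups, the two age strata, and all counts are invented. For each group, expected deaths are obtained by applying the most affluent group's age-specific rates to that group's population, and deaths averted are observed minus expected.

```python
from dataclasses import dataclass


@dataclass
class Stratum:
    population: int
    deaths: int


# HYPOTHETICAL premature-mortality data by income group, with two age strata
# (ages 25-44 and 45-64) listed in the same order for every group.
DATA = {
    "most affluent": [Stratum(100_000, 50), Stratum(100_000, 250)],
    "middle income": [Stratum(250_000, 200), Stratum(250_000, 1_000)],
    "low income": [Stratum(150_000, 180), Stratum(150_000, 900)],
}
REFERENCE = "most affluent"


def deaths_averted(data, reference):
    """Deaths avertable per group if each age stratum had the reference group's rates."""
    ref_rates = [s.deaths / s.population for s in data[reference]]
    result = {}
    for group, strata in data.items():
        observed = sum(s.deaths for s in strata)
        # Indirect standardization: reference age-specific rates x group population.
        expected = sum(s.population * rate for s, rate in zip(strata, ref_rates))
        result[group] = (observed, observed - expected)
    return result


by_group = deaths_averted(DATA, REFERENCE)
total_observed = sum(obs for obs, _ in by_group.values())
total_averted = sum(avt for _, avt in by_group.values())
print(f"deaths averted: {total_averted:.0f} of {total_observed} "
      f"({total_averted / total_observed:.1%})")
for group, (obs, avt) in by_group.items():
    print(f"  {group}: {avt:.0f} averted ({avt / obs:.1%} of {obs})")
```

With these invented inputs, roughly 42% of deaths are avertable, the largest number in the low-income group, and nothing in the calculation apportions deaths among specific causal pathways. A group whose rates fall below the reference rates in some stratum would contribute a negative value there; this sketch does not truncate such values.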

An additional positive feature of this PAF-based approach, building on the ranking approach of County Health Rankings12 and America’s Health Rankings,13 is that it lends itself to ranking which communities would benefit most from a change in exposures, albeit without constraining causes to add to 100%. Thus, for any given community (however defined, for example, by geography or by social or economic group), it would be possible to compute both the proportion and the absolute number of adverse outcomes averted were the distribution of exposures changed, thereby showing who would benefit most. The health equity impact could thus be transparently portrayed. Moreover, the magnitude of this impact could be compared across different scenarios involving diverse exposures, all without imposing the assumption that the contributions of component causes sum to 100%. Such approaches could be used not only for within-country health equity analyses but also for analyses of PAFs involving between-country inequities and the global burden of health inequities.
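Ranking by proportion and ranking by absolute number can give different answers, which is one reason to report both. The sketch below uses Levin's formula for the PAF of a binary exposure, PAF = p(RR - 1) / [1 + p(RR - 1)], where p is exposure prevalence and RR the relative risk; it assumes an unconfounded RR and a counterfactual in which the exposure is removed entirely. The three communities and their case counts, prevalences, and relative risks are hypothetical.

```python
def levin_paf(prevalence, relative_risk):
    """Levin's population attributable fraction for a binary exposure.

    Assumes the relative risk is unconfounded and the counterfactual is
    complete removal of the exposure.
    """
    excess = prevalence * (relative_risk - 1)
    return excess / (1 + excess)


# HYPOTHETICAL communities: (observed cases, exposure prevalence, relative risk)
COMMUNITIES = {
    "Community A": (2_000, 0.10, 2.0),
    "Community B": (400, 0.60, 2.0),
    "Community C": (900, 0.40, 2.0),
}


def rank_communities(communities):
    """Return rows (name, PAF, cases averted) ranked by proportion and by number."""
    rows = []
    for name, (cases, prevalence, rr) in communities.items():
        paf = levin_paf(prevalence, rr)
        rows.append((name, paf, paf * cases))
    by_proportion = sorted(rows, key=lambda row: row[1], reverse=True)
    by_number = sorted(rows, key=lambda row: row[2], reverse=True)
    return by_proportion, by_number


by_proportion, by_number = rank_communities(COMMUNITIES)
print("ranked by proportion averted:", [row[0] for row in by_proportion])
print("ranked by number averted:    ", [row[0] for row in by_number])
```

With these inputs, Community B has the largest proportion of cases averted while Community C has the largest number; the two rankings answer different equity questions, and neither requires the PAFs of different exposures to sum to 100%.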

Granted, in this era of Big Data and fast computing, more computationally intensive simulation approaches, including agent-based modeling, could be used heuristically to quantify potential impacts of changing distributions and levels of exposures.37 These approaches likewise do not require causes to be independent or to have their relative contributions sum to 100%. As is well recognized, however, simulations are only as good as their data and their assumptions—and as Hogben also warned, when powerful statistical techniques are employed, it is always necessary to be alert to “the danger of concealing assumptions which may have no factual basis behind an impressive façade of flawless algebra.”1(p121)
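As a minimal illustration of a simulation approach, the sketch below runs an individual-level Monte Carlo microsimulation. It is deliberately simple and is not a full agent-based model: agents do not interact, and the group shares and risks are hypothetical. Each agent's outcome is evaluated once under observed conditions and once under a counterfactual in which everyone has the reference group's risk, and the simulated proportion averted is compared with the closed-form answer.

```python
import random

# HYPOTHETICAL inputs: (population share, annual risk) by income group.
GROUPS = {"high": (0.2, 0.010), "middle": (0.5, 0.020), "low": (0.3, 0.035)}
REFERENCE_GROUP = "high"


def simulate(n_agents=200_000, seed=1):
    """Proportion of cases averted if every agent had the reference group's risk.

    Each agent gets one uniform draw that is reused in both scenarios
    (common random numbers), so the comparison is paired at the agent level.
    """
    rng = random.Random(seed)
    names = list(GROUPS)
    weights = [GROUPS[g][0] for g in names]
    ref_risk = GROUPS[REFERENCE_GROUP][1]
    observed = counterfactual = 0
    for _ in range(n_agents):
        group = rng.choices(names, weights=weights)[0]
        u = rng.random()
        observed += u < GROUPS[group][1]      # case under observed conditions
        counterfactual += u < ref_risk        # case under the counterfactual
    return (observed - counterfactual) / observed


def analytic():
    """Closed-form proportion averted for the same hypothetical inputs."""
    mean_risk = sum(share * risk for share, risk in GROUPS.values())
    return (mean_risk - GROUPS[REFERENCE_GROUP][1]) / mean_risk


print(f"simulated: {simulate():.1%}, analytic: {analytic():.1%}")
```

Because the same random draw is reused in both scenarios, the simulated proportion should land close to the analytic value (about 56% here). Any richer model would add assumptions that, per Hogben's warning, need to be stated explicitly.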

The basic point is that the public’s health and the work to attain health equity are both ill-served by, and do not require, methodological approaches that err in assuming causes sum to 100% and that confuse explaining variation with explaining causation. Nor need it be assumed that cause can be discretely apportioned, in Peto’s words, among “nature, nurture, and luck.”10(p6) To the contrary, these 3 components are at play in every case and every non-case, and are in no way mutually exclusive.38,39
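A worked example shows why component causes cannot be forced to sum to 100%. The sketch below assumes two independent binary exposures, A and B, with hypothetical risks in which no cases occur without at least one exposure and the joint effect exceeds the sum of the separate effects. Each exposure's PAF is computed by removing that exposure alone while leaving the other unchanged.

```python
from itertools import product

# HYPOTHETICAL risk of disease by joint exposure status (a, b): no cases occur
# without at least one exposure, and the two exposures act synergistically.
RISK = {(0, 0): 0.00, (1, 0): 0.01, (0, 1): 0.01, (1, 1): 0.05}
PREV_A, PREV_B = 0.3, 0.4  # assumed prevalences; A and B assumed independent


def population_risk(prev_a, prev_b):
    """Average risk in the population for given exposure prevalences."""
    total = 0.0
    for a, b in product((0, 1), repeat=2):
        weight = (prev_a if a else 1 - prev_a) * (prev_b if b else 1 - prev_b)
        total += weight * RISK[(a, b)]
    return total


observed = population_risk(PREV_A, PREV_B)
paf_a = 1 - population_risk(0.0, PREV_B) / observed   # remove A only
paf_b = 1 - population_risk(PREV_A, 0.0) / observed   # remove B only
paf_ab = 1 - population_risk(0.0, 0.0) / observed     # remove both
print(f"PAF(A) = {paf_a:.1%}, PAF(B) = {paf_b:.1%}, sum = {paf_a + paf_b:.1%}")
print(f"PAF(A and B jointly) = {paf_ab:.1%}")
```

With these inputs PAF(A) is about 62% and PAF(B) about 72%, summing to about 134%, while removing both exposures averts 100% of cases: cases arising from the synergy are preventable by removing either exposure and so are counted under each.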

The signal contribution of population health sciences is that they render it possible to see (i.e., detect) the population-level forces not visible at the individual level that structure risks of exposure and outcomes across social groups.38,39 To quote from Hogben once again,

A human society may be crudely compared to a badly managed laboratory in which there are many cages each containing a pair of rats and their offspring. The rats are of different breeds. The cages are at different distances from the window. Different cages receive different rations. Rats in the same cage cannot all get to the feeding trough together. So some get more meal than others. The cage corresponds to the family as a unit of environment. The rats in each cage constitute the family as a genetic unit.1(p107)

As this metaphor makes clear, it is essential to analyze causes and compute PAFs keeping in mind both space and place in the distribution of resources, powers, and structural determination of health inequities over time, within and across historical generations.

Indeed, as Greenland and Robins observed nearly 30 years ago, regarding the interpretation of PAFs,

[T]he dependency of epidemiologic measures on cofactor distributions points out the need to avoid considering such measures as biological constants. Rather, epidemiologic measures are characteristics of particular populations under particular conditions. . . .5(p1195)

By implication, PAFs and percentage variation explained are and must be historically contingent, and this is yet another reason that no fixed summing to 100% is possible.
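The dependence of PAFs on cofactor distributions can be made concrete. In the hypothetical sketch below, exposure A raises risk only in the presence of cofactor B (the risks and A's 30% prevalence are assumptions), and A and B are taken to be independent. Holding A's prevalence and effect fixed, its PAF is recomputed as B's prevalence changes.

```python
# HYPOTHETICAL risks by (A, B) exposure status: A raises risk only when B is present.
RISK = {(0, 0): 0.01, (1, 0): 0.01, (0, 1): 0.01, (1, 1): 0.05}


def paf_of_a(prev_a, prev_b):
    """PAF for exposure A at given prevalences, assuming A and B are independent."""
    def avg_risk(pa):
        return sum(
            (pa if a else 1 - pa) * (prev_b if b else 1 - prev_b) * RISK[(a, b)]
            for a in (0, 1)
            for b in (0, 1)
        )
    # Counterfactual: A removed, distribution of cofactor B unchanged.
    return 1 - avg_risk(0.0) / avg_risk(prev_a)


for prev_b in (0.1, 0.5, 0.9):
    print(f"prevalence of B = {prev_b:.0%}: PAF(A) = {paf_of_a(0.3, prev_b):.1%}")
```

Under these assumptions the PAF for A rises from about 11% to about 52% as B's prevalence goes from 10% to 90%, with no change in A itself, so a PAF estimated in one population or era cannot be carried over as a constant to another.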

In summary, in matters of life, health, and death, we humans, like other biological species, are not and never have been purely biological organisms or purely social beings; we are both, simultaneously. It is only through living in and engaging with our co-constituted societies and ecosystems, in real time, that we develop and express our existence as simultaneously social and biological beings.1,3,27,30,39,40 These are our embodied realities, as underscored by the ecosocial theory of disease distribution, with its emphasis on how we literally embody, biologically, our societal and ecological context, thereby producing population patterns of health, disease, and well-being.27,29

It is way past time to reject the false premises and logic of the idea that causes can be discretely apportioned among nature, nurture, and chance, and, related, that component causes must add up to 100%. Behind such approaches lie assumptions that involve deeper debates about causes of social inequalities, including in health, and untenable approaches to analyzing both biology and health, and, thus, health inequities. Public health claims to scientific rigor are compromised by inaccurate assumptions and methods. The focus should shift to valid and transparent methods to quantify the toll of health inequities and progress toward their eradication—and we have the technical capacity to use these approaches now.

ACKNOWLEDGMENTS

This work was supported in part by the American Cancer Society Clinical Research Professorship, awarded to N. Krieger.

HUMAN PARTICIPANT PROTECTION

This research did not involve human participants.

REFERENCES

1. Hogben L. Nature and Nurture. London, England: Williams & Norgate Ltd; 1933.

2. Tabery J. Beyond Versus: The Struggle to Understand the Interaction of Nature and Nurture. Cambridge, MA: MIT Press; 2014.

3. Keller EF. The Mirage of a Space Between Nature and Nurture. Durham, NC: Duke University Press; 2010.

4. MacMahon B. Gene–environment interaction in human disease. J Psychiatr Res. 1968;6(suppl 1):393–402.

5. Greenland S, Robins JM. Conceptual problems in the definition and interpretation of attributable fractions. Am J Epidemiol. 1988;128(6):1185–1197. doi: 10.1093/oxfordjournals.aje.a115073.

6. Rockhill B, Newman B, Weinberg C. Use and misuse of population attributable fractions. Am J Public Health. 1998;88(1):15–19. doi: 10.2105/ajph.88.1.15.

7. Poole C. A history of the population attributable fraction and related measures. Ann Epidemiol. 2015;25(3):147–154. doi: 10.1016/j.annepidem.2014.11.015.

8. Weinberg CR, Zaykin D. Is bad luck the main cause of cancer? J Natl Cancer Inst. 2015;107(7):djv125. doi: 10.1093/jnci/djv125.

9. Doll R, Peto R. The causes of cancer: quantitative estimates of avoidable risks of cancer in the United States today. J Natl Cancer Inst. 1981;66(6):1191–1308.

10. Peto R. The preventability of cancer. In: Vessey MP, Gray M, editors. Cancer Risks and Prevention. Oxford, England: Oxford University Press; 1985. pp. 1–14.

11. Tomasetti C, Vogelstein B. Variation in cancer risk among tissues can be explained by the number of stem cell divisions. Science. 2015;347(6217):78–81. doi: 10.1126/science.1260825.

12. County Health Rankings & Roadmaps: Building a Culture of Health, County by County. Our approach. A Robert Wood Johnson Foundation Program/University of Wisconsin Population Health Institute, School of Medicine and Public Health. Available at: http://www.countyhealthrankings.org/our-approach. Accessed August 5, 2015.

13. America’s Health Rankings. About the annual report. Available at: http://www.americashealthrankings.org/about/annual. Accessed August 5, 2016.

14. Brownson RC, Fielding JE, Maylahn CM. Evidence-based public health: a fundamental concept for public health practice. Annu Rev Public Health. 2009;30:175–201. doi: 10.1146/annurev.publhealth.031308.100134.

15. Ben-Shlomo Y, Brookes S, Hickman M, editors. Epidemiology, Evidence-Based Medicine and Public Health. 6th ed. Chichester, West Sussex, England: Wiley-Blackwell; 2013.

16. The World Health Report 2002: Reducing Risks, Promoting Healthy Life. Geneva, Switzerland: World Health Organization; 2002.

17. Hilgartner S. The dominant view of popularization: conceptual problems, political uses. Soc Stud Sci. 1990;20:519–539.

18. Blot WJ, Tarone RE. Doll and Peto’s quantitative estimates of cancer risks: holding generally true for 35 years. J Natl Cancer Inst. 2015;107(4):djv044. doi: 10.1093/jnci/djv044.

19. Couzin-Frankel J. Backlash greets bad luck cancer story and coverage. Science. 2015;347(6219):224–225. doi: 10.1126/science.347.6219.224.

20. Luzzatto L, Pandolfi PP. Causality and chance in the development of cancer. N Engl J Med. 2015;373(1):84–88. doi: 10.1056/NEJMsb1502456.

21. Rozhok AI, Wahl GM, DeGregori J. A critical examination of the “bad luck” explanation of cancer risk. Cancer Prev Res (Phila). 2015;8(9):762–764. doi: 10.1158/1940-6207.CAPR-15-0229.

22. Rose G. Sick individuals and sick populations. Int J Epidemiol. 1985;14(1):32–38. doi: 10.1093/ije/14.1.32.

23. Remington PL, Catlin BB, Gennuso KP. The County Health Rankings: rationale and methods. Popul Health Metr. 2015;13:11. doi: 10.1186/s12963-015-0044-2.

24. McGinnis JM, Williams-Russo P, Knickman JR. The case for more active policy attention to health promotion. Health Aff (Millwood). 2002;21(2):78–93. doi: 10.1377/hlthaff.21.2.78.

25. Park H, Roubal AM, Jovaag A, Gennuso KP, Catlin BB. Relative contributions of a set of health factors to selected health outcomes. Am J Prev Med. 2015;49(6):961–969. doi: 10.1016/j.amepre.2015.07.016.

26. Hood CM, Gennuso KP, Swain GR, Catlin BB. County Health Rankings: relationships between determinant factors and health outcomes. Am J Prev Med. 2016;50(2):129–135. doi: 10.1016/j.amepre.2015.08.024.

27. Krieger N. Epidemiology and the People’s Health: Theory and Context. New York, NY: Oxford University Press; 2011.

28. Galton F. English Men of Science: Their Nature and Nurture. London, England: MacMillan & Co; 1874.

29. Tabery J. R.A. Fisher, Lancelot Hogben, and the origin(s) of genotype–phenotype interaction. J Hist Biol. 2008;41(4):717–761. doi: 10.1007/s10739-008-9155-y.

30. Gilbert SF, Epel D. Ecological Developmental Biology: The Environmental Regulation of Development, Health, and Evolution. Sunderland, MA: Sinauer Assoc; 2015.

31. Piersma T, van Gils JA. The Flexible Phenotype: A Body-Centered Integration of Ecology, Physiology, and Behavior. New York, NY: Oxford University Press; 2011.

32. Conference Outcome Documents. Health in All Policies (HiAP) Framework for Country Action. Health Promot Int. 2014;29(suppl 1):i19–i28. doi: 10.1093/heapro/dau035.

33. Heller J, Givens ML, Yuen TK et al. Advancing efforts to achieve health equity: equity metrics for Health Impact Assessment practice. Int J Environ Res Public Health. 2014;11(11):11054–11064. doi: 10.3390/ijerph111111054.

34. Tsao TY, Konty KJ, Van Wye G et al. Estimating potential reductions in premature mortality in New York City from raising the minimum wage to $15. Am J Public Health. 2016;106(6):1036–1041. doi: 10.2105/AJPH.2016.303188.

35. Galea S, Tracy M, Hoggatt KJ, DiMaggio C, Karpati A. Estimated deaths attributable to social factors in the United States. Am J Public Health. 2011;101(8):1456–1465. doi: 10.2105/AJPH.2010.300086.

36. Chen JT, Rehkopf DH, Waterman PD et al. Mapping and measuring social disparities in premature mortality: the impact of census tract poverty within and across Boston neighborhoods, 1999–2001. J Urban Health. 2006;83(6):1063–1084. doi: 10.1007/s11524-006-9089-7.

37. Luke DA, Stamatakis KA. Systems science methods in public health: dynamics, networks, and agents. Annu Rev Public Health. 2012;33:357–376. doi: 10.1146/annurev-publhealth-031210-101222.

38. Smith GD. Epidemiology, epigenetics, and the “gloomy prospect”: embracing randomness in population health research and practice. Int J Epidemiol. 2011;40(3):537–562. doi: 10.1093/ije/dyr117.

39. Krieger N. Who and what is a “population?” Historical debates, current controversies, and implications for understanding “population health” and rectifying health inequities. Milbank Q. 2012;90(4):634–681. doi: 10.1111/j.1468-0009.2012.00678.x.

40. Grene M. People and other animals. In: Grene M, editor. The Understanding of Nature: Essays in the Philosophy of Biology. Dordrecht, The Netherlands: D. Reidel; 1974. pp. 346–360.