Abstract

Objective

To compare two approaches to measuring racial/ethnic disparities in the use of high‐quality hospitals.

Data Sources

Simulated data.

Study Design

Through simulations, we compared the “minority‐serving” approach of assessing differences in risk‐adjusted outcomes at minority‐serving and non‐minority‐serving hospitals with a “fixed‐effect” approach that estimated the reduction in adverse outcomes if the distribution of minority and white patients across hospitals was the same. We evaluated each method's ability to detect and measure a disparity in outcomes caused by minority patients receiving care at poor‐quality hospitals, which we label a “between‐hospital” disparity, and to reject it when the disparity in outcomes was caused by factors other than hospital quality.

Principal Findings

The minority‐serving and fixed‐effect approaches correctly identified between‐hospital disparities in quality when they existed and rejected them when racial differences in outcomes were caused by other disparities; however, the fixed‐effect approach has many advantages. It does not require an ad hoc definition of a minority‐serving hospital, and it estimated the magnitude of the disparity accurately, while the minority‐serving approach underestimated the disparity by 35–46 percent.

Conclusions

Researchers should consider using the fixed‐effect approach for measuring disparities in use of high‐quality hospital care by vulnerable populations.

Keywords: Race, disparities, hospital readmissions

Unequal use of high‐quality hospital care is an important and potentially modifiable cause of racial and ethnic disparities in health. Minority and nonminority patients tend to receive care from different providers (Bach et al. 2004; Jha et al. 2007). Minorities have worse hospital outcomes across a wide range of medical conditions (Alexander et al. 1995; Skinner et al. 2005; Jencks et al. 2009; Joynt 2011), cardiovascular diseases (Mensah et al. 2005), surgical procedures (Silber et al. 2009), and perinatal care (Howell et al. 2013), raising the question whether minority patients are systematically and disproportionately receiving care from hospitals that provide lower clinical quality of care.

To study this phenomenon, researchers often identify hospitals that are “minority serving” and report the difference in risk‐adjusted outcomes between “minority‐serving” and “non‐minority‐serving” hospitals (Groeneveld et al. 2005; Groeneveld et al. 2007; Pippins et al. 2007; Lathan et al. 2008; Jha et al. 2010; Ly et al. 2010; Brooks‐Carthon et al. 2011; Joynt 2011; Pollack et al. 2011; Cheng et al. 2012; Haider et al. 2012; López and Jha 2013). There are several reasons why this “minority‐serving” approach might not be optimal. First, it requires making ad hoc decisions on the definition of a minority‐serving hospital and the cutoffs for categorizing a hospital as minority serving or not. There are no theoretical guidelines for making these decisions, and different definitions of minority‐serving could lead to different conclusions about racial disparities. Second, the minority‐serving approach does not provide a measure of the magnitude of the disparity. It returns an odds ratio or risk ratio on minority‐serving hospitals, but whether this represents a large number of excess events for minorities depends on the number of minorities who received care from minority‐serving hospitals. If hospitals that treat a high percentage of minorities tend to be small, then worse outcomes at these facilities may not reflect the quality of hospital care received by the greater minority community. Third, the minority‐serving methods estimate what we label a between‐hospital disparity—that is, a disparity in outcomes caused by minority patients receiving care from hospitals that provide lower quality of care to all of their patients. However, a between‐hospital disparity is only one of the three pathways by which racial and ethnic differences in risk‐adjusted hospital outcomes can materialize. A second pathway is via minority patients receiving worse quality of care than nonminority patients at all hospitals, regardless of the underlying quality of care at the hospital. 
We label this a “within‐hospital” disparity because it would be evidenced by racial differences in patient outcomes within each hospital. A third pathway is through factors that are beyond the control of the hospital, such as poor living conditions or social support inadequacies that make posthospital self‐management more difficult for minority patients. We label this a “hospital‐independent” disparity. These between‐hospital, within‐hospital, and hospital‐independent pathways are not mutually exclusive, and a significant odds ratio on patient race from a logistic regression of risk‐adjusted hospital outcomes gives no indication of which of these three scenarios occurred. Whether the minority‐serving methods are robust to disparities in outcomes caused by these different pathways is not known.

We describe an alternative approach to quantify between‐hospital racial disparities in hospital outcomes like 30‐day readmissions. This alternative approach does not require ad hoc definitions of minority‐serving hospitals. Rather, it estimates a fixed effect for each hospital and then predicts the number of adverse hospital outcomes that would occur if the distribution of minority patients across hospitals were the same as that of nonminority patients. The difference between the observed and predicted outcomes is the estimate of the number of adverse outcomes attributable to between‐hospital disparities. If white patients are systematically receiving care from higher‐quality hospitals, then this “fixed‐effect” approach would generate fewer predicted than observed adverse outcomes for minority patients. Using simulations in which we impose between‐hospital, within‐hospital, and hospital‐independent disparities, we compared the minority‐serving approach with the fixed‐effect approach in terms of their respective abilities to find a between‐hospital disparity when one exists, reject a between‐hospital disparity when the disparity is caused by within‐hospital or hospital‐independent disparities, and provide an accurate measure of the magnitude of the disparity caused by between‐hospital disparities.

Methods

Researchers assessing disparities in risk‐adjusted hospital outcomes for minorities commonly estimate an equation similar to:

$$
\text{logit}\,\Pr(y_{ij} = 1) = \beta_0 + \beta_1' x_i + \beta_2 r_i \tag{1}
$$

where $y_{ij}$ is hospital readmission or death within a specified period of time (typically 30 days) for patient i whose index hospitalization occurred at hospital j, $x_i$ is a vector of patient health characteristics, and $r_i$ is an indicator for whether the patient is a member of a minority group. A significant and positive coefficient on the minority indicator suggests that there are excess outcomes for minority patients. To estimate whether these excess outcomes are due to minorities receiving care from hospitals that provide lower‐quality care, the minority‐serving approach involves estimating a model of the form:

$$
\text{logit}\,\Pr(y_{ij} = 1) = \beta_0 + \beta_1' x_i + \beta_2 r_i + \beta_3 m_j \tag{2}
$$

where $m_j$ is an indicator for whether hospital j is a “minority‐serving hospital.” How to define “minority serving” is not clear. It is commonly defined using a percentile of a distribution of minorities, but which percentile and which distribution are up to the researcher. For example, a researcher could look at the distribution of minority patient discharges across hospitals in a community and define minority serving as the 5 percent (or 10 percent or any other percentile) of hospitals that treated the greatest number of minorities. Alternatively, the researcher could look at the percent of discharges at each hospital who were minority patients and define minority serving as the 5 percent of hospitals with the highest percentage of minority discharges. Having chosen a definition for minority serving, researchers report the sign and significance of $\beta_3$ as a test of the hypothesis that risk‐adjusted outcomes are different at minority‐serving hospitals compared with all other hospitals. Different definitions of minority serving can result in different inferences.
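To illustrate how the choice of definition can change which hospitals are flagged, the following minimal sketch classifies the same hospitals by number and by percent of black discharges. The discharge counts, the 20 percent cutoff, and the function name `top_fraction` are hypothetical and chosen only for illustration; they are not taken from the study.

```python
def top_fraction(scores, fraction):
    """Return the ids of hospitals ranked in the top `fraction` by score."""
    n_top = max(1, round(len(scores) * fraction))
    ranked = sorted(scores, key=scores.get, reverse=True)
    return set(ranked[:n_top])

# Hypothetical discharges per hospital: (black discharges, total discharges).
discharges = {
    "A": (300, 3000), "B": (40, 80), "C": (120, 2000), "D": (10, 500),
    "E": (90, 150), "F": (5, 400), "G": (200, 2500), "H": (15, 60),
    "I": (60, 1200), "J": (2, 300),
}

# Definition 1: rank hospitals by the number of black discharges.
by_number = {h: black for h, (black, total) in discharges.items()}
# Definition 2: rank hospitals by the percent of discharges who were black.
by_percent = {h: black / total for h, (black, total) in discharges.items()}

ms_by_number = top_fraction(by_number, 0.20)
ms_by_percent = top_fraction(by_percent, 0.20)

print("By number: ", sorted(ms_by_number))
print("By percent:", sorted(ms_by_percent))
```

In this made‐up example the two definitions flag entirely different hospitals: the count‐based rule selects the large hospitals, while the percent‐based rule selects small hospitals with a high share of black patients.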

In the fixed‐effect approach, we estimate a fixed effect for each hospital as shown in equation (3):

$$
\text{logit}\,\Pr(y_{ij} = 1) = \beta_1' x_i + \beta_2 r_i + \sum_{k=1}^{J} \beta_{3k}\, I(j = k) \tag{3}
$$

where J is the total number of hospitals and I(·) is the indicator function that is equal to 1 if patient i's index hospitalization was at hospital k (that is, j = k), and zero otherwise. In other words, equation (3) is equation (2) with the minority‐serving variable replaced by a series of dummy variables, one for each hospital.

Using the estimated coefficients from (3), we then calculate the difference between the number of observed readmissions for minority patients and the expected readmissions if each minority patient had received care at the hospitals where nonminority patients tended to receive care. Specifically, for each minority patient, we take a weighted average of the predicted probability of readmission for that patient at every hospital, where the percentages of white patients who were treated at each hospital are used as weights, as shown in equation (4).

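$$
p_i = \sum_{j=1}^{J} w_j \, \frac{\exp\left(\hat{\beta}_1' x_i + \hat{\beta}_2 r_i + \hat{\beta}_{3j}\right)}{1 + \exp\left(\hat{\beta}_1' x_i + \hat{\beta}_2 r_i + \hat{\beta}_{3j}\right)} \tag{4}
$$

where $w_j$ is the percentage of all white patients in the sample whose index hospitalization was at hospital j, and the $\hat{\beta}$'s are estimated coefficients from equation (3), with $\hat{\beta}_{3j}$ the estimated fixed effect for hospital j. Finally, we take the sum of $p_i$ over all minority patients in the sample. The difference between the observed readmissions for minority patients and the predicted readmissions from (4) is the contribution of between‐hospital disparities to readmissions. Standard errors for this between‐hospital disparity measure were calculated using a bootstrap procedure.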
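The reweighting step described above can be sketched in a few lines. This is a minimal illustration, assuming a single risk variable and coefficients that have already been estimated; the coefficient values, hospital labels, and function names are hypothetical, and the bootstrap for standard errors is not shown.

```python
import math

def inv_logit(z):
    """Logistic function: maps a linear predictor to a probability."""
    return 1.0 / (1.0 + math.exp(-z))

def counterfactual_probability(x, r, beta_x, beta_r, hospital_effects, white_shares):
    """Average the patient's predicted readmission probability over all hospitals,
    weighting each hospital by its share of white patients."""
    return sum(
        white_shares[j] * inv_logit(beta_x * x + beta_r * r + hospital_effects[j])
        for j in hospital_effects
    )

def between_hospital_excess(minority_patients, beta_x, beta_r, hospital_effects, white_shares):
    """Observed minority readmissions minus those expected under the white
    distribution of patients across hospitals."""
    observed = sum(p["y"] for p in minority_patients)
    expected = sum(
        counterfactual_probability(p["x"], 1, beta_x, beta_r, hospital_effects, white_shares)
        for p in minority_patients
    )
    return observed - expected

# Hypothetical inputs: two hospitals, with white patients concentrated at H1.
hospital_effects = {"H1": -2.0, "H2": -1.0}   # assumed fixed effects (log-odds)
white_shares = {"H1": 0.8, "H2": 0.2}         # shares of white patients, sum to 1
minority_patients = [{"x": 0.0, "y": 1}, {"x": 0.5, "y": 0}, {"x": -0.3, "y": 0}]

excess = between_hospital_excess(minority_patients, 0.4, 0.0, hospital_effects, white_shares)
print(f"Estimated excess readmissions: {excess:.3f}")
```

A positive value means more readmissions were observed among minority patients than would be expected had they been distributed across hospitals as white patients were.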

Simulations

We tested the minority‐serving approach and the proposed fixed‐effect approach using simulations. In each simulation, we created a racial disparity in risk‐adjusted outcomes by imposing a between‐hospital, within‐hospital, or hospital‐independent disparity, respectively. We also ran a simulation in which all three types of disparities were present. For each type of disparity, we imposed three levels of severity: low, medium, and high. We then compared how the minority‐serving and fixed‐effect approaches performed in each of these scenarios.

We generated simulated data for hospital quality, patient risk factors, and patient outcomes for each of the scenarios listed above, using readmission after hospitalization for heart failure as our hospital outcome. For simplicity, we assumed that there are only two races: black and white. The simulations required seven inputs, which are outlined in Table 1. Each simulation drew a random sample of 125 hospitals from the 663 hospitals that recorded race in the 2009 Healthcare Cost and Utilization Project (HCUP) files (HCUP 2009). We used HCUP so that the sample was representative of U.S. hospitals, and we sampled 125 hospitals to make the simulation similar in size to an empirical study we were conducting of heart failure at the Veterans Administration.

Table 1.

Values of Experimental and Incidental Parameters for the Simulations

| Parameter | Symbol | Baseline (No Disparity) | Within‐Hospital Treatment Disparity, Medium (Low, High) | Between‐Hospital Treatment Disparity, Medium (Low, High) | Hospital‐Independent Disparity, Medium (Low, High) |
|---|---|---|---|---|---|
| Experimental parameters | | | | | |
| Probability that a black patient received appropriate treatment relative to a white patient's probability at the same hospital | $\alpha_2$ | 1.0 | 0.7 (0.9, 0.5) | 1.0 | 1.0 |
| For every 1 percentage point increase in black discharges at a hospital, the change in probability that any patient received appropriate treatment at the hospital | $\alpha_1$ | 0.0 | 0.0 | −0.75 (−0.5, −1.0) | 0.0 |
| Irrespective of treatment received, the odds that a black patient survives a hospital stay relative to a white patient's odds | $\alpha_3$ | 1.0 | 1.0 | 1.0 | 0.925 (0.95, 0.90) |
| Incidental parameters (common across all scenarios) | | | | | |
| Number of hospitals, n | | 125 | | | |
| Patients per hospital, mean (Q1, Q3) | | 246.1 (26, 1,549) | | | |
| Percentage of black discharges, % (Q1, Q3) | $\mu_R$ | 16% (2%, 23%) | | | |
| Probability of appropriate treatment, mean (SD) | $\beta_0$ | 0.67 (0.2) | | | |
| Probability of hospital‐free 30‐day survival for white patients who were treated appropriately | $\beta_1$ | 0.9 | | | |
| Relative risk of hospital‐free 30‐day survival if patient was not treated appropriately | $\beta_2$ | 0.8 | | | |

We assumed that there was only one appropriate in‐hospital treatment required on admission for heart failure, and each patient either received appropriate treatment or did not. Whether the patient received the treatment was a function of the overall quality of care at the hospital and, in the within‐hospital treatment disparity scenario, the patient's race. We assumed that each patient's health risks (such as age, comorbidity, and other factors commonly included in hospital risk adjustment models) were observable and represented by a single variable ($x_i$) that was normally distributed across patients. Based on differences in Charlson (Deyo et al. 1992) comorbidity scores for black and white patients in the 2009 HCUP, we assumed risk was distributed N(0,1) for white patients and N(0.2,1) for black patients. The simulated outcome was a dichotomous variable representing readmission, which was generated by a Bernoulli random number generator with a patient‐specific probability of readmission. The probability of readmission increased with observed health risk, decreased with appropriate treatment, and, in the hospital‐independent disparity scenario, increased if the patient was black. These relationships are depicted in equations (5)–(11), where $r_i$ is 1 if patient i is black and 0 otherwise, $R_j$ is the proportion of hospital j's patients who are black, $t_{ij}$ is an indicator for whether patient i was treated appropriately, $T_j$ is the proportion of patients treated appropriately at hospital j, $x$ is patient health risk, $1 - s$ is the patient‐specific probability of readmission, and $y$ is an indicator for 30‐day readmission.

| (5) |

| (6) |

| (7) |

| (8) |

| (9) |

| (10) |

| (11) |

Values for the experimental parameters (α) and the incidental parameters (β) are given in Table 1 for each scenario. To effect a between‐hospital disparity, we varied the $\alpha_1$ parameter, which reflects how the probability of appropriate treatment at a hospital changes for every percentage point increase in black discharges at the hospital. Alternative values tested for $\alpha_1$ were −0.75 (e.g., the probability of appropriate treatment was 7.5 percentage points lower at a hospital with 25 percent black patients than at a hospital with 15 percent black patients), −0.5, and −1.0. To effect a within‐hospital disparity, we varied the $\alpha_2$ parameter, which reflects the ratio of the probability that a black patient was treated appropriately to the probability that a white patient was treated appropriately at any hospital. Alternative values for $\alpha_2$ were 0.5 (i.e., black patients were half as likely to receive appropriate treatment), 0.7, and 0.9. To effect a hospital‐independent disparity, we varied $\alpha_3$, which shifts the odds of hospital‐free survival for black patients independent of treatment. Alternative values were log(0.95) (i.e., the odds of hospital‐free survival for blacks were 95 percent of those for whites), log(0.925), and log(0.975).
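The data‐generating process described in this section can be sketched as follows. The functional forms below are assumptions made for illustration, not the authors' equations: treatment probability is taken to be linear in the hospital's share of black patients, the within‐hospital parameter multiplies that probability for black patients, and health risk and race shift the log‐odds of hospital‐free survival. The coefficient on health risk (`risk_effect`) is hypothetical, and hospital‐level variation in baseline quality (the SD of 0.2 in Table 1) is ignored.

```python
import math
import random

def logit(p):
    return math.log(p / (1.0 - p))

def inv_logit(z):
    return 1.0 / (1.0 + math.exp(-z))

def treatment_probability(share_black, alpha1, beta0=0.67, mean_share=0.16):
    """Assumed form: hospital-level probability of appropriate treatment,
    shifted by alpha1 per unit of black share relative to the mean share."""
    p = beta0 + alpha1 * (share_black - mean_share)
    return min(1.0, max(0.0, p))

def simulate_patient(share_black, alpha1, alpha2, alpha3, rng,
                     beta1=0.9, beta2=0.8, risk_effect=0.5):
    """Draw race, health risk, treatment, and readmission for one patient."""
    r = 1 if rng.random() < share_black else 0       # 1 = black patient
    x = rng.gauss(0.2 * r, 1.0)                       # N(0,1) white, N(0.2,1) black
    p_treat = treatment_probability(share_black, alpha1) * (alpha2 if r else 1.0)
    t = 1 if rng.random() < p_treat else 0            # appropriate treatment
    base = beta1 if t else beta1 * beta2              # survival before adjustments
    # Assumed: risk and race act on the log-odds of hospital-free survival.
    s = inv_logit(logit(base) - risk_effect * x + r * math.log(alpha3))
    y = 1 if rng.random() < 1.0 - s else 0            # readmission indicator
    return {"r": r, "x": x, "t": t, "y": y}

# Medium between-hospital disparity at a hospital with 25 percent black patients.
rng = random.Random(0)
patients = [simulate_patient(0.25, -0.75, 1.0, 1.0, rng) for _ in range(1000)]
readmission_rate = sum(p["y"] for p in patients) / len(patients)
print(f"Simulated readmission rate: {readmission_rate:.3f}")
```

Under these assumptions, `treatment_probability(0.15, -0.75)` exceeds `treatment_probability(0.25, -0.75)` by 0.075, which matches the 7.5 percentage point example given in the text.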

Estimating the Between‐Hospital Treatment Disparity

For each scenario, we report the estimated excess readmissions due to black race and, among black patients, the number of readmissions due to a between‐hospital disparity. We estimated both quantities using recycled predictions (Basu 2005; Kleinman and Norton 2009). We estimated excess readmissions due to race from a logistic regression of readmissions as a function of patient risk and patient race using equation (1). Excess readmissions were estimated as the difference between total observed black readmissions and the sum over all black patients of the predicted probability of readmission with the coefficient on black race set equal to zero. For the minority‐serving approach, we estimated the model in equation (2), where the minority‐serving indicator was set to 1 for a hospital if the hospital was in the top 10 percent of hospitals in terms of the percent of black patients among its discharges for heart failure. We estimated readmissions due to between‐hospital disparities as the sum of the observed readmissions for black patients minus the sum over all black patients of the predicted probability from equation (2) with the minority‐serving indicator ($m_j$) set to zero. The latter sum gives the expected readmissions for black patients if there were no differences in readmission rates between minority‐serving and other hospitals. For the fixed‐effect approach, we measured the between‐hospital disparity using equations (3) and (4).
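The recycled‐predictions calculation can be sketched as below, assuming the logistic regression has already been fitted. The coefficient values, variable names, and patient records are hypothetical; the same function covers both cases in the text by zeroing either the race coefficient or the minority‐serving indicator.

```python
import math

def inv_logit(z):
    return 1.0 / (1.0 + math.exp(-z))

def predicted_probability(patient, coefs, zeroed=()):
    """Predicted readmission probability, with the variables named in
    `zeroed` treated as if they were equal to zero."""
    z = coefs["intercept"]
    for name, b in coefs.items():
        if name == "intercept" or name in zeroed:
            continue
        z += b * patient[name]
    return inv_logit(z)

def recycled_excess(patients, coefs, zeroed):
    """Observed readmissions minus the sum of predicted probabilities
    computed with the `zeroed` variables switched off."""
    observed = sum(p["y"] for p in patients)
    expected = sum(predicted_probability(p, coefs, zeroed) for p in patients)
    return observed - expected

# Hypothetical fitted coefficients from a model like equation (2).
coefs = {"intercept": -1.8, "x": 0.5, "black": 0.1, "minority_serving": 0.2}

# Hypothetical black patients: risk score, hospital type, and outcome.
black_patients = [
    {"x": 0.3, "black": 1, "minority_serving": 1, "y": 1},
    {"x": -0.2, "black": 1, "minority_serving": 0, "y": 0},
    {"x": 1.1, "black": 1, "minority_serving": 1, "y": 1},
]

excess_race = recycled_excess(black_patients, coefs, zeroed=("black",))
excess_ms = recycled_excess(black_patients, coefs, zeroed=("minority_serving",))
print(f"Excess with race zeroed: {excess_race:.3f}")
print(f"Excess with minority-serving zeroed: {excess_ms:.3f}")
```

Passing the set of variables to switch off keeps one code path for both estimates, so the only difference between them is which coefficient is removed from the linear predictor.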

Results

The Abilities of the Minority‐Serving and Fixed‐Effect Approaches to Detect Disparities Caused by Between‐Hospital Differences in the Quality of Care

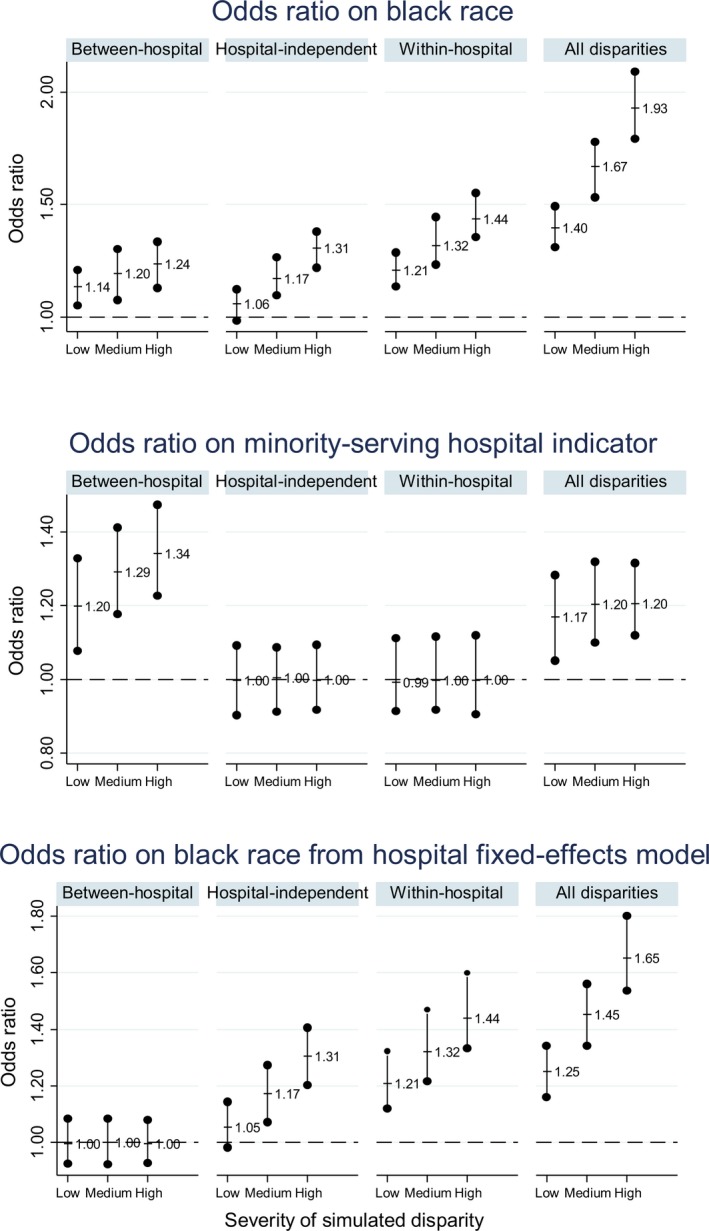

Across all simulations, there was an average of 19,959 white and 4,962 black patients. The simulation results are shown in Figure 1. For each graph, the x‐axis represents increasing levels of disparity, the y‐axis represents the logistic regression odds ratio, the dashed marks indicate the mean odds ratio across all 200 simulations, and error bars represent the 2.5th to 97.5th percentile of the empirical distribution of estimates.

Figure 1.

- Legend: Estimated Odds Ratios on Race and Minority‐Serving Hospital Variables from Three Models of Hospital Readmission (Rows), for Four Types of Disparity Mechanisms (Panels within Rows), and Three Degrees of Severity (Bars within Panels). Row 1 shows odds ratios on black race from logistic regressions of readmissions as a function of patient health risks. An odds ratio of 1.0 indicates no risk‐adjusted disparity in outcomes. Row 2 shows the odds ratio on the minority‐serving indicator from a similar logistic regression, but with the addition of a dummy variable equal to one if the patient's index admission was at a minority‐serving hospital. An odds ratio of 1.0 suggests that there was no disparity caused by black patients using lower‐quality hospitals (i.e., a between‐hospital disparity). Row 3 shows the odds ratios on black race from the same logistic regression as in row 1, but with dummy variables for each hospital. An odds ratio of 1.0 implies that all of the disparity in risk‐adjusted outcomes was caused by a between‐hospital disparity.

The top panel shows the odds ratio on race from the risk‐adjusted hospital outcome equation for each scenario. We chose the simulation parameters to generate relatively modest odds ratios on race, approximately 1.1–1.4. The middle panel depicts the odds ratio on the dummy variable for minority‐serving facilities (equation (2)), which represents the minority‐serving approach to assessing disparities in access to high‐quality care. As expected, the odds ratios on minority‐serving indicators were >1.0 in scenarios where the disparity in outcome was caused by a between‐hospital disparity. In these scenarios, the probability of receiving appropriate treatment decreased with increasing minority representation at a hospital, so minority‐serving hospitals had higher odds of readmission. The odds ratios on minority‐serving dummy variables were centered on 1.0 when disparities were caused by within‐hospital disparities or hospital‐independent factors.

The lower panel represents the fixed‐effect approach. It depicts the odds ratio on black race from a model that included hospital‐level fixed effects (equation (3)). As expected, the odds ratio on black race was centered around 1.0 when the disparity in outcome was caused by between‐hospital disparities, but not when the racial disparity was caused by a within‐hospital or hospital‐independent disparity.

The Abilities of the Minority‐Serving and Fixed‐Effect Approaches to Measure the Extent of Readmissions Caused by Between‐Hospital Disparities

Table 2 shows readmissions and estimates of excess readmissions from each simulation. For the medium‐level between‐hospital disparity scenario ($\alpha_1$ = −0.75), the mean number of black readmissions across the 200 simulations was 1,718. There was a mean of 213 more readmissions among black patients than could be explained by differences in patient risk. Because excess black readmissions were caused entirely by between‐hospital disparities in this simulation, this mean of 213 more readmissions represents the true expected number of excess readmissions due to between‐hospital disparities. The fixed‐effect approach yielded a mean estimate of 213 readmissions due to between‐hospital disparities, while the minority‐serving approach generated a mean of 140 readmissions, an underestimate of 34 percent. For the large disparity, the fixed‐effect approach estimated a mean of 254 excess readmissions due to between‐hospital disparities when the true value was 252; the minority‐serving approach estimated 159, an underestimate of 37 percent.
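As a worked check, the underestimation percentages can be reproduced from the rounded means in Table 2 for the between‐hospital scenario, taking the mean excess readmissions due to race as the true value (as the text does for this scenario). For the minority‐serving approach at the small, medium, and large disparity levels:

$$
\frac{150 - 98}{150} \approx 0.35, \qquad \frac{213 - 140}{213} \approx 0.34, \qquad \frac{252 - 159}{252} \approx 0.37
$$

The same calculation for the fixed‐effect approach at the large disparity level gives a small overestimate, $(254 - 252)/252 \approx 0.008$, or less than 1 percent.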

Table 2.

Comparison of the Measure of the Magnitude of the Disparity between Traditional and Fixed‐Effect Methods, by Type and Magnitude of the Mechanism Causing the Disparity

| | Small Disparity | Medium Disparity | Large Disparity |
|---|---|---|---|
| Results for between‐hospital disparity scenario | | | |
| Mean of observed black readmissions, n (95% range) | 1,679 (1,160, 2,279) | 1,718 (1,207, 2,395) | 1,752 (1,205, 2,397) |
| Mean estimate of excess readmission due to race, n (95% range) | 150 (46, 252) | 213 (78, 377) | 252 (120, 410) |
| Minority‐serving approach: mean estimate of excess readmits due to minority serving, n (95% range) | 98 (29, 184) | 140 (57, 273) | 159 (75, 275) |
| Fixed‐effect approach: mean estimate of excess readmissions due to between‐hospital disparity, n (95% range) | 152 (68, 266) | 213 (98, 372) | 254 (141, 405) |
| Results for hospital‐independent disparity scenario | | | |
| Mean of observed black readmissions, n (95% range) | 1,748 (1,191, 2,532) | 1,852 (1,296, 2,702) | 1,957 (1,316, 2,695) |
| Mean estimate of excess readmission due to race, n (95% range) | 220 (132, 358) | 334 (208, 558) | 443 (290, 660) |
| Minority‐serving approach: mean estimate of excess readmits due to minority serving, n (95% range) | −4 (−56, 53) | −1 (−56, 68) | −2 (−55, 70) |
| Fixed‐effect approach: mean estimate of excess readmissions due to between‐hospital disparity, n (95% range) | −6 (−68, 69) | 0 (−66, 78) | −2 (−74, 78) |
| Results for within‐hospital disparity scenario | | | |
| Mean of observed black readmissions, n (95% range) | 1,558 (1,076, 2,149) | 1,691 (1,131, 2,233) | 1,819 (1,194, 2,431) |
| Mean estimate of excess readmission due to race, n (95% range) | 63 (−17, 130) | 186 (96, 294) | 316 (181, 449) |
| Minority‐serving approach: mean estimate of excess readmits due to minority serving, n (95% range) | −1 (−48, 47) | 0 (−56, 36) | −0 (−53, 45) |
| Fixed‐effect approach: mean estimate of excess readmissions due to between‐hospital disparity, n (95% range) | −2 (−59, 45) | 0 (−57, 57) | 5 (−53, 62) |
| Results for combined within‐, between‐, and hospital‐independent disparity scenario | | | |
| Mean of observed black readmissions, n (95% range) | 1,933 (1,338, 2,610) | 2,175 (1,462, 2,975) | 2,353 (1,685, 3,125) |
| Mean estimate of excess readmission due to race, n (95% range) | 411 (247, 587) | 650 (411, 934) | 846 (605, 1,145) |
| Minority‐serving approach: mean estimate of excess readmits due to minority serving, n (95% range) | 92 (27, 181) | 114 (37, 204) | 119 (50, 214) |
| Fixed‐effect approach: mean estimate of excess readmissions due to between‐hospital disparity, n (95% range) | 147 (59, 246) | 193 (89, 335) | 227 (123, 376) |

Figures represent the mean estimate across 200 simulations within each scenario. Figures in parentheses represent the empirical range (2.5th percentile to 97.5th percentile) of the estimates.

For the hospital‐independent and within‐hospital disparity scenarios, none of the excess black readmissions were caused by between‐hospital disparities. In these scenarios, the true number of excess readmissions due to between‐hospital disparities was zero, and both the minority‐serving approach and the fixed‐effect approach provided accurate estimates. For example, for the medium hospital‐independent disparity, the minority‐serving and fixed‐effect approaches estimated that −1 and zero readmissions, respectively, were due to between‐hospital disparities.

For the combined within‐, between‐, and hospital‐independent scenarios, both approaches underestimated readmissions due to between‐hospital disparities, but the minority‐serving approach underestimated them more. For example, for the medium disparity scenario, the minority‐serving approach estimated that 114 readmissions were due to between‐hospital disparities when the true figure was 213 (the same value as in the between‐hospital disparity scenario), an underestimate of 46 percent. The fixed‐effect approach estimated 193 readmissions due to between‐hospital disparities, an underestimate of 9 percent.

The Sensitivity of the Results of the Minority‐Serving Approach to Alternative Definitions of a Minority‐Serving Hospital

Table 3 shows how alternative definitions of “minority serving” affected the assessment of whether a between‐hospital disparity exists and the estimates of the number of readmissions due to between‐hospital disparities. The first two columns show the results of simulations in which we defined a hospital as minority serving if it was in the top decile of hospitals; the second two columns use the top quartile as the cutoff. Within each of these cutoffs, we defined minority‐serving hospitals both by the percent of black patients and by the number of black patients at the hospital. The data represent estimates from 200 simulations of the medium between‐hospital disparity scenario. The estimates of excess readmissions due to race were fairly constant at around 200 readmissions. Because excess black readmissions were caused entirely by between‐hospital disparities in this simulation, this figure represents the true number of excess readmissions due to between‐hospital disparities. The highest estimate of 209 differs from the lowest estimate of 194 by 7.7 percent. If we use the minority‐serving approach to estimate between‐hospital disparities, we observe odds ratios on the minority‐serving hospital variable that range from 1.15 to 1.29. As with the simulations in Table 2, the minority‐serving approach underestimates the true readmissions by about one third, and the estimates themselves vary across definitions of minority‐serving status, from a high of 181 to a low of 124 (a 46 percent difference). The mean bias, which is defined as the difference between the readmissions attributed to between‐hospital disparities and the readmissions due to race, ranged from −74.1 to −28.7 for the minority‐serving approach and from −2.8 to 2.8 for the fixed‐effect approach. Using the top quartile of hospitals as the cutoff generated more accurate measures of the between‐hospital disparity than did using the top decile as the cutoff.

Table 3.

Results of Four Simulations of the Effects of Alternative Definitions of a Minority‐Serving Hospital on Estimates of Excess Black Hospitalizations Due to Between‐Hospital Differences in Hospital Quality, from Simulations in Which the Disparity Was Caused by Between‐Hospital Differences in Quality

| Alternative Definitions of a Minority‐Serving Hospital | Top Decile of Hospitals in Terms of Percent of Black Patients at the Hospital | Top Decile of Hospitals in Terms of Number of Black Patients at the Hospital | Top Quartile of Hospitals in Terms of Percent of Black Patients at the Hospital | Top Quartile of Hospitals in Terms of Number of Black Patients at the Hospital |
|---|---|---|---|---|
| Mean of observed black readmissions, n (95% range) | 1,705 (1,121, 2,278) | 1,802 (1,145, 2,427) | 1,719 (1,133, 2,281) | 1,783 (1,210, 2,480) |
| Mean estimate of excess readmission due to race, n (95% range) | 208 (86, 363) | 198 (82, 347) | 209 (86, 375) | 194 (82, 321) |
| Minority‐serving approach | | | | |
| Odds ratio on minority‐serving variable, mean (95% range) | 1.29 (1.17, 1.40) | 1.18 (1.09, 1.28) | 1.22 (1.12, 1.31) | 1.15 (1.07, 1.23) |
| Mean estimate of excess readmissions due to minority serving, n (95% range) | 135 (59, 248) | 124 (46, 223) | 181 (81, 304) | 141 (64, 252) |
| Mean bias, mean (95% range) | −73.1 (−159.7, 4.8) | −74.1 (−165.8, −7.4) | −28.7 (−130.8, 53.9) | −53.0 (−147.1, 37.3) |
| Fixed‐effect approach | | | | |
| Mean estimate of excess readmissions due to between‐hospital disparities, n (95% range) | 206 (103, 354) | 201 (80, 349) | 208 (100, 357) | 191 (102, 309) |
| Mean bias, mean (95% range) | −2.4 (−75.4, 68.0) | 2.8 (−69.1, 70.6) | −1.7 (−92.3, 78.5) | −2.8 (−75.5, 75.5) |
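The bias rows in Table 3 can be approximately reproduced from the rounded means in the same table, using the definition of bias given in the text (the estimate attributed to between‐hospital disparities minus the excess readmissions due to race). The short check below is only a sketch of that arithmetic; small differences from the reported figures are expected because the table reports the mean of the per‐simulation bias, while this uses rounded means.

```python
# Rounded means from Table 3: (excess due to race, minority-serving estimate,
# fixed-effect estimate) for each definition of a minority-serving hospital.
table3 = {
    "top decile, percent black": (208, 135, 206),
    "top decile, number black": (198, 124, 201),
    "top quartile, percent black": (209, 181, 208),
    "top quartile, number black": (194, 141, 191),
}

def bias(estimate, truth):
    """Estimate minus the true excess readmissions (negative = underestimate)."""
    return estimate - truth

bias_minority_serving = {k: bias(ms, race) for k, (race, ms, fe) in table3.items()}
bias_fixed_effect = {k: bias(fe, race) for k, (race, ms, fe) in table3.items()}

for k in table3:
    print(f"{k}: minority-serving {bias_minority_serving[k]:+d}, "
          f"fixed-effect {bias_fixed_effect[k]:+d}")
```

The minority‐serving bias is large and negative under every definition, and smallest in magnitude under the top‐quartile, percent‐based definition, while the fixed‐effect bias stays within a few readmissions of zero.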

Discussion

We conducted a simulation study to compare two approaches to measuring the degree to which unequal use of high‐quality hospitals, which we labeled a “between‐hospital disparity,” results in racial disparities in hospital outcomes. The minority‐serving approach, which compares risk‐adjusted outcomes across minority‐serving and non‐minority‐serving hospitals, generally performed well in simulations. The minority‐serving approach correctly identified a between‐hospital disparity in simulations where such a disparity was imposed and rejected a between‐hospital disparity in simulations where the disparity in outcomes was caused by “within‐hospital” or “hospital‐independent” disparities. The minority‐serving approach also confirmed the existence of a between‐hospital disparity when we combined the three pathways into the same simulation.

There are, however, two significant shortcomings to the minority‐serving approach, which suggest an alternative approach is warranted. First, the minority‐serving approach commonly returns an odds ratio or risk ratio on “minority‐serving” hospitals, and an odds ratio alone does not give an indication of how urgent or costly the between‐hospital disparity is to the minority community. A large odds ratio could belie a small effect on the community if minority‐serving hospitals treat few patients or the outcome is rare. A policy maker, who is interested in reducing the burden of poor hospital care for minorities, needs information on the number and costs of outcomes that are attributable to minority patients receiving care from poor‐quality hospitals; an odds ratio alone does not provide this information. Moreover, our simulations showed that when one employs the minority‐serving method and performs the math necessary to translate the odds ratio on the minority‐serving variable into the number of outcomes attributed to “minority‐serving” hospitals, the results underestimated the true effect of between‐hospital disparities in quality on outcomes. The minority‐serving method underestimated the true effect by a third to a half. The policy maker would substantially underestimate the costs of the between‐hospital disparity and thus would risk underinvesting in efforts to ameliorate it.

The proposed “fixed‐effect” approach addresses these shortcomings by replacing a minority‐serving indicator variable with hospital‐specific fixed effects in the outcome equation, and estimating the difference between realized outcomes for minorities and expected outcomes if minority patients were distributed across hospitals in the same proportions as the white patients in the sample. This fixed‐effect measure of between‐hospital disparities performed better in simulations than the minority‐serving methods. Whereas the minority‐serving estimate underestimated the true between‐hospital disparity by one third to one half, the fixed‐effect method was generally within a few percentage points of the true disparity.

The second drawback of the minority‐serving approach is that it relies on an ad hoc definition of “minority serving,” and the simulations found that alternative definitions of minority serving yielded significant differences in the odds ratio on minority‐serving hospitals, and on the derived measure of excess outcomes attributed to minority‐serving hospitals. It is important to note, however, that the better performance of definitions of minority serving based on the percent, rather than the number, of minorities at a hospital is an artifact of the way the simulation was constructed. In the simulation, we imposed a between‐hospital disparity based on the percent of minorities at each hospital, so definitions of minority serving based on the number of minorities at a hospital were disadvantaged.

There are other problems with the minority‐serving approach that we did not explore through simulation. The specification of minority serving is dichotomous: any hospital below the chosen cutoff is not minority serving, no matter how similar it is to hospitals above the cutoff. In a multiyear study, a hospital close to the cutoff could switch its minority‐serving status from year to year. This is particularly important for urban communities with large hospitals, which often are safety‐net hospitals treating large numbers of Medicaid patients. Whether these hospitals fall to one side or the other of the cutoff could have important consequences for the measure of between‐hospital disparities.

Using a percentile cutoff to define minority serving also means that whether a hospital is minority serving or not depends on the sample from which it is drawn. The same hospital may be “minority serving” in a national sample of hospitals, but not in a local sample of hospitals if there are more minorities in the local sample. In addition, researchers must decide what patients to include in the sample to define a “minority‐serving” hospital. For example, in a study of hospital outcomes of low‐birthweight births, should the minority‐serving status be based on all births at the hospital or only low‐birthweight births? For a study of black‐white differences in hospital outcomes of heart failure, should the minority‐serving status be based on all minority patients at the hospital or only black patients?
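The sample dependence of a percentile cutoff is easy to demonstrate. The sketch below assumes, purely for illustration, that “minority serving” means being in the top decile of hospitals by percent minority; the rule, the hospital labels, and the percentages are all hypothetical.

```python
def minority_serving_flags(percent_minority, top_fraction=0.10):
    """Flag hospitals in the top `top_fraction` of a sample by percent minority."""
    n_flagged = max(1, round(top_fraction * len(percent_minority)))
    ranked = sorted(percent_minority, key=percent_minority.get, reverse=True)
    flagged = set(ranked[:n_flagged])
    return {hospital: hospital in flagged for hospital in percent_minority}


# Hospital "H" treats 40 percent minority patients in both samples.
national_sample = {"H": 40, "N1": 5, "N2": 8, "N3": 12, "N4": 3,
                   "N5": 15, "N6": 20, "N7": 10, "N8": 25, "N9": 30}
local_sample = {"H": 40, "L1": 55, "L2": 60, "L3": 45, "L4": 50,
                "L5": 35, "L6": 65, "L7": 48, "L8": 52, "L9": 58}

national = minority_serving_flags(national_sample)
local = minority_serving_flags(local_sample)
print("H minority serving in national sample:", national["H"])
print("H minority serving in local sample:   ", local["H"])
```

The hospital's own patient mix is unchanged between the two calls; only the comparison set differs.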

The fixed‐effect approach has other advantages. The method lends itself easily to estimating between‐hospital disparities for other subgroups of patients. To estimate between‐hospital disparities for Hispanic patients, there is no need to define a “Hispanic‐serving” hospital. There is no need to estimate a separate outcome model at all. The researcher only needs to solve equation (4) for the set of all Hispanic patients in the database. To estimate between‐hospital disparities for low‐income versus high‐income patients, a researcher needs only to recalculate the weights in (4) to reflect the percentage of high‐income patients who use each hospital and solve equation (4) over the set of all low‐income patients. By generating the number of outcomes attributable to between‐hospital disparities, rather than an odds ratio, the fixed‐effect approach also facilitates estimating the costs of the disparity. Simply multiply the difference between the observed events and the expected events from equation (4) by a measure of the cost of each event. The fixed‐effect approach could also be applied to nonhospital settings, such as explorations of racial differences in the use of high‐quality ambulatory surgical care facilities, dialysis facilities, or individual surgeons.
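Both steps mentioned above, recalculating the weights for a new reference group and converting excess events into a cost, are small computations. The following sketch assumes the expected event count has already been obtained from the fitted model; the function names, hospital labels, event counts, and cost per event are hypothetical.

```python
from collections import Counter


def hospital_shares(hospital_ids):
    """Share of a reference group's patients treated at each hospital."""
    counts = Counter(hospital_ids)
    total = sum(counts.values())
    return {hospital: n / total for hospital, n in counts.items()}


def disparity_cost(observed_events, expected_events, cost_per_event):
    """Cost of the events attributed to the between-hospital disparity."""
    return (observed_events - expected_events) * cost_per_event


# Hypothetical reference group: hospitals used by four high-income patients.
high_income_hospitals = ["A", "A", "A", "B"]
weights = hospital_shares(high_income_hospitals)

# Hypothetical counts for the low-income group and a made-up cost per event.
cost = disparity_cost(observed_events=120, expected_events=95.5,
                      cost_per_event=10_000.0)
print(weights)
print(f"cost attributable to the disparity: {cost:,.0f}")
```

Changing the comparison from race to income only changes which patients feed `hospital_shares` and which patients are summed over; the outcome model itself is not re-estimated.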

The fixed‐effect approach is a simple extension of the use of hospital‐level dummy variables to control for racial disparities in the use of high‐quality hospitals that has been used in other studies (Barnato et al. 2005; Lucas et al. 2006; Hasnain‐Wynia et al. 2007; Gaskin et al. 2008; Howell et al. 2008; Silber et al. 2009), although to our knowledge no author has produced the statistic we described. As others have shown (Barnato et al. 2005; Lucas et al. 2006; Hasnain‐Wynia et al. 2007; Gaskin et al. 2008; Silber et al. 2009), a comparison of the coefficients on race from logistic regressions that do (equation (3)) and do not (equation (1)) include hospital‐level dummy variables indicates the extent to which the site of care contributes to a racial difference in risk‐adjusted outcomes. If disparities are caused by where a minority patient receives care, then controlling for where a patient receives care by including hospital‐level dummy variables will reduce the apparent disparity. For example, Hasnain‐Wynia et al. (2007) showed that the adjusted sign and significance of racial differences in the probability of receiving a hospital quality‐of‐care measure diminished after including hospital dummy variables in the risk‐adjusted model, which suggests that much of the disparity in quality of care was due to between‐hospital disparities. Conversely, Silber et al. (2009) found that racial disparities in the probability of a poor surgical outcome (specifically, racial differences in failure to resuscitate) persisted after including hospital‐level dummy variables, which suggested that disparities existed both within and between hospitals. However, neither of these studies produced an estimate of the number of hospital outcomes that were attributable to between‐hospital disparities in care, as we described in this study.

The proposed fixed‐effect approach is similar to the approach of Howell et al. (2008), who included hospital‐level dummy variables in a logistic regression of 30‐day neonatal mortality. Howell et al. then repeatedly randomly reassigned black mothers to hospitals such that the distribution of black mothers across hospitals was the same as the distribution of white mothers. The fixed‐effect approach described here achieves the same result, except that rather than randomly reassigning black patients to hospitals, the fixed‐effect approach systematically reassigns black patients to every hospital. The proposed fixed‐effect approach is similar in some ways to the Oaxaca–Blinder decomposition methods (Oaxaca 1973) in which differences in outcomes between two groups are attributed to differences in mean values of the explanatory variables and differences in estimated coefficients on those variables between the groups. In the Oaxaca–Blinder approach, separate coefficients are estimated in equation (3) for black and white patients. This may not be feasible for most applications because of the small number of black patients at many hospitals. In addition, the Oaxaca–Blinder approach is appropriate for linear models. The proposed fixed‐effect approach achieves a similar result, but in the context of non‐linear models commonly used for hospital outcome assessments.
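For reference, the standard two‐fold Oaxaca–Blinder decomposition for a linear outcome model can be written as follows. The notation is illustrative and is not taken from the numbered equations of this paper: subscripts W and B denote white and black patients, \bar{X} is a group's vector of mean explanatory variables, and \hat{\beta} is a group's vector of estimated coefficients.

```latex
\bar{Y}_W - \bar{Y}_B
  = \underbrace{(\bar{X}_W - \bar{X}_B)^{\top}\hat{\beta}_W}_{\text{differences in mean explanatory variables}}
  + \underbrace{\bar{X}_B^{\top}(\hat{\beta}_W - \hat{\beta}_B)}_{\text{differences in coefficients}}
```

The identity relies on the fact that, in a linear model with an intercept, each group's mean outcome equals its mean covariate vector times its coefficient vector. That property does not carry over directly to a logistic model, which is one reason the decomposition is usually described as suited to linear models.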

Limitations

As with all risk adjustment models, the conclusions are only as good as the risk adjustment model used. If the estimated coefficients on the hospital dummy variables in the risk‐adjusted outcome model are a poor reflection of the quality of care at hospitals, then the estimates of the contribution of disparities in use of high‐quality care will be suspect. This could be especially true for small hospitals for which the variance in the estimated coefficient can be great. Future research should explore using the fixed‐effect approach described here with Bayesian shrinkage estimators of the hospital‐level effects. The fixed‐effect approach would not be appropriate for especially rare hospital outcomes such that some hospitals would have no events and therefore no estimated coefficient in equation (3). The simulations were necessarily simplifications of a more complex process (there is not a single treatment for hospitalized patients), so it is difficult to choose incidental parameters such as the treatment effectiveness. By the same token, distinguishing between hospital‐independent and within‐hospital disparities in empirical analyses would be difficult as it would require measuring all the aspects of treatment that account for within‐hospital disparities. Finally, the favorable performance of the fixed‐effect approach in simulations might be expected given the close match between the statistical model of the fixed‐effect approach and the conceptual models that motivated the simulations. However, the simulations showed that the fixed‐effect models could distinguish the pathway by which disparities materialized, despite the considerable statistical noise typical for hospital readmission models.
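As a rough indication of what shrinkage of hospital‐level effects might look like, the sketch below pulls each estimated effect toward a precision‐weighted mean in proportion to its noise. This is one simple empirical Bayes style estimator chosen for illustration, not a method evaluated in this study; the between‐hospital variance is treated as known, and all names and numbers are hypothetical.

```python
def shrink_hospital_effects(estimates, std_errors, between_variance):
    """Shrink noisy hospital effects toward the precision-weighted mean.

    estimates: estimated hospital effects (log-odds).
    std_errors: standard errors of those estimates.
    between_variance: assumed variance of true effects across hospitals
        (treated as known here, which is a simplification).
    """
    weights = [1.0 / (between_variance + se ** 2) for se in std_errors]
    grand_mean = sum(w * e for w, e in zip(weights, estimates)) / sum(weights)
    shrunk = []
    for est, se in zip(estimates, std_errors):
        # Reliability is near 1 for precise estimates and near 0 for noisy ones.
        reliability = between_variance / (between_variance + se ** 2)
        shrunk.append(grand_mean + reliability * (est - grand_mean))
    return shrunk


# A large hospital (small standard error) and a small hospital (large standard error).
effects = shrink_hospital_effects(
    estimates=[1.0, -1.0], std_errors=[0.1, 1.0], between_variance=0.25
)
print([round(e, 3) for e in effects])
```

The estimate from the small hospital moves much further toward the mean than the estimate from the large hospital, which is the behavior that would temper the influence of imprecisely estimated hospital coefficients.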

Conclusion

The minority‐serving approach of comparing risk‐adjusted hospital outcomes between minority‐serving and nonminority‐serving hospitals accurately identified a between‐hospital treatment disparity in simulations when one existed; however, the minority‐serving approach underestimated the number of outcomes attributed to racial differences in the use of high‐quality hospitals. An alternative approach that considers the expected outcomes of minority patients if they were distributed across hospitals in the same way as nonminority patients performed better, required no ad hoc assumptions about the definition of a minority‐serving hospital, and should be considered when addressing the consequences of racial disparities in the use of high‐quality hospitals or other facilities. A hospital's quality should not depend on the racial/ethnic composition of its patient population. Accurately measuring the disparity in outcomes caused by racial differences in the use of high‐quality hospital care is an important first step toward closing the racial and ethnic gap in health.

Supporting information

Appendix SA1: Author Matrix.

Acknowledgments

Joint Acknowledgment/Disclosure Statement: We acknowledge the helpful suggestions of the external reviewers. The study was supported by grants from the Health Services Research and Development (HSR&D) Service, the Office of Research and Development, the Department of Veterans Affairs (IIR 09‐354), and the National Institute on Minority Health and Health Disparities R01MD007651 (Howell). Dr. Wong is supported by a VA HSR&D Career Development Award (CDA 13‐024). The views expressed in this article are those of the authors and do not necessarily reflect the position or policy of the Department of Veterans Affairs, the United States Government, the University of Washington, the Yale School of Medicine, or the Mount Sinai School of Medicine. Dr. Wong reports ownership of common stock in Community Health Systems Inc. and UnitedHealth Group Inc.

Disclosures: No other disclosures.

Disclaimers: None.

References

- Alexander, M., Grumbach K., Selby J., Brown A. F., and Washington E. 1995. “Hospitalization for Congestive Heart Failure: Explaining Racial Differences.” Journal of the American Medical Association 274 (13): 1037–42.

- Bach, P. B., Pham H. H., Schrag D., and Tate R. 2004. “Primary Care Physicians Who Treat Blacks and Whites.” New England Journal of Medicine 351 (6): 575–84.

- Barnato, A. E., Lucas F. L., Staiger D., and Wennberg D. 2005. “Hospital‐Level Racial Disparities in Acute Myocardial Infarction Treatment and Outcomes.” Medical Care 43 (4): 308–19.

- Basu, A. 2005. “Extended Generalized Linear Models: Simultaneous Estimation of Flexible Link and Variance Functions.” Stata Journal 5 (4): 501.

- Brooks‐Carthon, J. M., and Kutney‐Lee A. 2011. “Quality of Care and Patient Satisfaction in Hospitals with High Concentrations of Black Patients.” Journal of Nursing Scholarship 43 (3): 301–10.

- Cheng, E. M., Keyhani S., Ofner S., Williams L. S., Hebert P. L., Ordin D. L., and Bravata D. M. 2012. “Lower Use of Carotid Artery Imaging at Minority‐Serving Hospitals.” Neurology 79: 138–44.

- Deyo, R. A., Cherkin D. C., and Ciol M. A. 1992. “Adapting a Clinical Comorbidity Index for Use with ICD‐9‐CM Administrative Databases.” Journal of Clinical Epidemiology 45 (6): 613–9.

- Gaskin, D. J., Spencer C. S., Richard P., Anderson G. F., Powe N. R., and LaVeist T. A. 2008. “Do Hospitals Provide Lower‐Quality Care to Minorities Than to Whites?” Health Affairs 27 (2): 518–27.

- Groeneveld, P. W., Kruse G. B., Chen Z., and Asch D. A. 2007. “Variation in Cardiac Procedure Use and Racial Disparity among Veterans Affairs Hospitals.” American Heart Journal 153 (2): 320–7.

- Groeneveld, P. W., Laufer S. B., and Garber A. M. 2005. “Technology Diffusion, Hospital Variation, and Racial Disparities among Elderly Medicare Beneficiaries: 1989–2000.” Medical Care 43 (4): 320–9.

- Haider, A. H., Ong'uti S., Efron D. T., Oyetunji T. A., Crandall M. L., Scott V. K., Haut E. R., Schneider E. B., Powe N. R., Cooper L. A., and Cornwell E. E. 2012. “Association between Hospitals Caring for a Disproportionately High Percentage of Minority Trauma Patients and Increased Mortality: A Nationwide Analysis of 434 Hospitals.” Archives of Surgery 147 (1): 63–70.

- Hasnain‐Wynia, R., Baker D. W., Nerenz D., Feinglass J., Beal A. C., Landrum M. B., Behal R., and Weissman J. S. 2007. “Disparities in Health Care Are Driven by Where Minority Patients Seek Care: Examination of the Hospital Quality Alliance Measures.” Archives of Internal Medicine 167 (12): 1233–9.

- HCUP. 2009. National Inpatient Sample Healthcare Cost and Utilization Project (HCUP). Rockville, MD: Agency for Healthcare Research and Quality.

- Howell, E. A., Hebert P., Chatterjee S., Kleinman L. C., and Chassin M. R. 2008. “Black/White Differences in Very Low Birth Weight Neonatal Mortality Rates among New York City Hospitals.” Pediatrics 121 (3): e407–15.

- Howell, E. A., Zeitlin J., Hebert P. L., Balbierz A., and Egorova N. 2013. “Paradoxical Trends and Racial Differences in Obstetric Quality and Neonatal and Maternal Mortality.” Obstetrics and Gynecology 121 (6): 1201.

- Jencks, S. F., Williams M. V., and Coleman E. A. 2009. “Rehospitalizations among Patients in the Medicare Fee‐for‐Service Program.” New England Journal of Medicine 360 (14): 1418–28.

- Jha, A. K., Orav E. J., Zhonghe L., and Epstein A. M. 2007. “Concentration and Quality of Hospitals That Care for Elderly Black Patients.” Archives of Internal Medicine 167 (11): 1177–82.

- Jha, A. K., Stone R., Lave J., Chen H., Klusaritz H., and Volpp K. 2010. “The Concentration of Hospital Care for Black Veterans in Veterans Affairs Hospitals: Implications for Clinical Outcomes.” Journal for Healthcare Quality 32 (6): 52–61.

- Joynt, K. E., Orav E. J., and Jha A. K. 2011. “Thirty‐day Readmission Rates for Medicare Beneficiaries by Race and Site of Care.” Journal of the American Medical Association 305 (7): 675–81.

- Kleinman, L. C., and Norton E. C. 2009. “What's the Risk? A Simple Approach for Estimating Adjusted Risk Measures from Nonlinear Models Including Logistic Regression.” Health Services Research 44 (1): 288–302.

- Lathan, C. S., Neville B. A., and Earle C. C. 2008. “Racial Composition of Hospitals: Effects on Surgery for Early‐Stage Non–Small‐Cell Lung Cancer.” Journal of Clinical Oncology 26 (26): 4347–52.

- López, L., and Jha A. K. 2013. “Outcomes for Whites and Blacks at Hospitals That Disproportionately Care for Black Medicare Beneficiaries.” Health Services Research 48 (1): 114–28.

- Lucas, F. L., Stukel T. A., Morris A. M., Siewers A. E., and Birkmeyer J. D. 2006. “Race and Surgical Mortality in the United States.” Annals of Surgery 243 (2): 281.

- Ly, D. P., Lopez L., Isaac T., and Jha A. K. 2010. “How Do Black‐Serving Hospitals Perform on Patient Safety Indicators?: Implications for National Public Reporting and Pay‐for‐Performance.” Medical Care 48 (12): 1133–7.

- Mensah, G. A., Mokdad A. H., Ford E. S., Greenlund K. J., and Croft J. B. 2005. “State of Disparities in Cardiovascular Health in the United States.” Circulation 111 (10): 1233–41.

- Oaxaca, R. 1973. “Male‐Female Wage Differentials in Urban Labor Markets.” International Economic Review 14 (3): 693–709.

- Pippins, J. R., Fitzmaurice G. M., and Haas J. S. 2007. “Hospital Characteristics and Racial Disparities in Hospital Mortality from Common Medical Conditions.” Journal of the National Medical Association 99 (9): 1030.

- Pollack, C. E., Bekelman J. E., Liao K. J., and Armstrong K. 2011. “Hospital Racial Composition and the Treatment of Localized Prostate Cancer.” Cancer 117 (24): 5569–78.

- Silber, J. H., Rosenbaum P. R., Romano P. S., Rosen A. K., Wang Y., Teng Y., Halenar M. J., Even‐Shoshan O., and Volpp K. G. 2009. “Hospital Teaching Intensity, Patient Race, and Surgical Outcomes.” Archives of Surgery 144 (2): 113–20.

- Skinner, J., Chandra A., Staiger D., Lee J., and McClellan M. 2005. “Mortality after Acute Myocardial Infarction in Hospitals That Disproportionately Treat Black Patients.” Circulation 112 (17): 2634–41.
