Abstract

Currently, electric wheelchairs are commonly used to improve mobility in disabled people. In severe cases, users are unable to control the wheelchair by themselves because their motor functions are impaired. To restore mobility, a brain-controlled wheelchair (BCW) is a promising system that would allow the patient to control the wheelchair by their thoughts. P300 is a reliable brain electrical signal, a component of visual event-related potentials (ERPs), that can be used for interpreting user commands. This research aimed to propose a prototype BCW that allows patients with severe motor disabilities to practically control a wheelchair in their home environment. The users were able to select from 9 possible destination commands in the automatic mode and from 4 directional commands (forward, backward, turn left and turn right) in the shared-control mode. These commands were selected via the designed P300 processing system. The wheelchair was steered to the desired location by the implemented navigation system. Safety of the user was ensured during wheelchair navigation by the included obstacle detection and avoidance features. A combination of P300 and EOG was used as a hybrid BCW system. The user could fully operate the system, including enabling the P300 detection system, shifting modes and issuing stop/cancel commands, by performing different numbers of consecutive blinks to generate eye-blinking patterns. The results revealed that the prototype BCW could be operated in either of the proposed modes. With the new design of the LED-based P300 stimulator, the average accuracies of the P300 detection algorithm in the shared-control and automatic modes were 95.31 and 83.42% with 3.09 and 3.79 bits/min, respectively. The P300 classification error was acceptable, as the user could cancel an incorrect command by blinking 2 times. Moreover, the proposed navigation system had a flexible design that could be interfaced with other assistive technologies.
This research developed 3 alternative input modules: an eye tracker module and chin and hand controller modules. The user could select the most suitable assistive technology based on his/her level of disability. Other existing assistive technologies could also be connected to the proposed system in the future using the same protocol.

Keywords: P300, EOG, ROS, BCW, BCI

Introduction

Disabled people face difficulties in daily life because some normal function has been impaired. They cannot walk or move normally if their motor function is impaired, for example due to muscle weakness, spinal cord injury (SCI), Huntington’s disease (HD), ALS, cerebral palsy or stroke. Many research groups have sought to allow disabled patients to regain their movement abilities. These patients may be able to restore some normal functions, e.g., standing, walking or grasping, by using functional electrical stimulation (FES) to directly stimulate their muscles (John et al. 2008) or exoskeletons to support their body (Hugh 2009). Nevertheless, the limitations of FES and exoskeletons remain a major issue. Muscles need to be trained to increase their mass before FES can be used, and FES works for only a short period of time due to muscle fatigue. The most common way to improve mobility in disabled people is the use of an electric wheelchair, which the patient controls by hand. Some disabled people who have lost hand function, for example quadriplegic patients, may be able to use their remaining functions to control a customized wheelchair that supports a specific control input module, such as a tongue movement detector (Jeonghee et al. 2013), a tooth-click controller (Tyler et al. 2008), a Sip and Puff switch (SnP) (Michael et al. 2008), a sniff controller (Anton et al. 2010), a chin pointer (Torsten and Rainer 2007), a head pointer (Gwang et al. 2007), voice recognition or an eye tracker (Susumu et al. 2006). Tongue movement detectors and tooth-click controllers employ the tongue to control wheelchair direction. These techniques have fast control responses because the tongue can be moved rapidly within the oral cavity. However, the patients need to have a device in their mouth, which may be uncomfortable. SnP switches and sniff controllers use air pressure from the patient’s lungs to control pneumatic sensors. 
A pattern of inhaling and exhaling can be used to control wheelchair direction. These methods are the most popular for wheelchair control because the systems are reasonably priced and less complex. However, the tube or straw must be cleaned frequently, and patients with limited respiratory function cannot use these techniques. Chin and head pointers require the patient to perform head-neck movements to control the wheelchair direction. These techniques are among the most accurate control methods and are low in cost but are limited to patients who have normal head-neck function. Voice recognition is generally used in typing applications and could also be used for wheelchair control. However, this technique is sensitive to noise, whereas the wheelchair must be controllable in various environments. Pupil direction can be detected by an eye tracker and mapped to the wheelchair direction. However, light intensity causes problems with eye trackers, and cameras mounted in front of the user may obstruct their vision.

Brain-computer interface (BCI) techniques can be used as assistive technology for disabled people by processing their brain waves or electroencephalograms (EEGs) (Jonathan et al. 2002). BCIs allow disabled people to type (Farwell and Donchin 1988), control electric devices (Yunyong and Yodchanan 2012) and drive machines (Yunyong and Yodchanan 2010; Karl et al. 2013), including electric wheelchairs, which are then called brain-controlled wheelchairs (BCWs). Presently, BCWs can be categorized into three different types based on the brain phenomenon used. The first brain phenomenon is event-related desynchronization (ERD) and event-related synchronization (ERS). Users can control wheelchairs to move in the left or right directions based on motor imagery (imagining moving to the left or right). The mu band (11–13 Hz) and beta band (18–25 Hz) at the contralateral side of the motor cortex change in power when the user prepares to move (ERD) and after actual movement (ERS) (Kyuwan and Andrzej 2008; Kazuo et al. 2005; Johan et al. 2007). The user can freely control the wheelchair by using this technique, but it yields low accuracy and requires significant effort and training time from the user. Another brain phenomenon is the steady-state visual evoked potential (SSVEP). When the subject looks at a flickering light source, the same frequency appears in the occipital area together with its harmonic frequencies. Four flickering LEDs, each with a different frequency, are assigned to different directions (forward, turn left or turn right) (Christian et al. 2009). This approach gives a fast response and high accuracy. However, focusing on a flickering light causes the user’s eyes to fatigue easily. Moreover, the SSVEP technique has a limited number of commands due to the harmonic frequencies. The final brain phenomenon used in BCWs is the P300 signal. This signal occurs as a positive peak at a latency of approximately 300 ms after stimulus onset. 
Target commands, such as wheelchair direction or destination, shown on a monitor or LED panel are randomly flashed or changed in appearance to induce the P300 component. The user can select the command by staring or focusing on the desired target (Jonathan et al. 2002; Inaki et al. 2009; Rossella et al. 2008; Brice et al. 2006, 2010). The P300 technique is usually characterized by high accuracy, less fatigue and a high number of commands compared to SSVEP and ERD/ERS techniques.

Each type of brain-controlled wheelchair carries both advantages and disadvantages. ERD/ERS BCWs offer free-will control, require no stimulation and can be used by users with impaired sensory organs, but modulating the ERD/ERS signal is usually difficult. P300 and SSVEP BCWs usually need only simple calibration, and no extensive training is required; almost all users can achieve a high success rate. However, these systems need stimulators, which makes control less natural for the users. SSVEP BCWs give the fastest response and highest accuracy, but they provoke fatigue and can cause epileptic seizures.

Present BCWs use different wheelchair control methods, which can be classified into 3 types: low-level navigation (Varona-Moya et al. 2015), high-level navigation (Ng et al. 2014; Zhang et al. 2015) and shared-control navigation (Duan et al. 2014). Low-level navigation offers simple navigation commands, for example forward, turn left or turn right. The users design their own path to reach the desired destination without any assistance from the system. Most ERD/ERS BCWs use low-level navigation. High-level navigation offers comfort to the users through high-level commands such as “go to bedroom”. The wheelchair must be equipped with various sensors for environment sensing, whose data are processed by an intelligent system. Shared-control navigation allows both the user and the system to control the wheelchair. The user gives a simple command and the system assists with navigation. This method minimizes command errors and control time.

Low-level and shared-control navigation allow the user to control the wheelchair freely. However, the users need to issue a series of commands and may become fatigued over longer distances due to physical limitations. Moreover, an accident may occur in some critical environments, such as a corridor or an obstructed area. These problems can be reduced by high-level navigation, that is, the integration of a navigation system in the electric wheelchair. Some research groups have developed P300-based BCWs that allow the users to control the wheelchair by a single command selection (Rossella et al. 2008; Brice et al. 2006, 2010). The users can go to a defined location on a map (e.g., living room, bedroom, kitchen or toilet) automatically using the navigation system. This system offers comfort to the users. Nevertheless, the users lack freedom of control because they are restricted to the pre-defined locations on the map.

In this study, we propose a prototype of an automated navigation system for a P300-based BCW. The proposed system offers 2 modes of operation: automatic navigation and shared-control. Automatic navigation mode allows the user to send the wheelchair to a desired location on a pre-determined map by a single command. The wheelchair safely navigates to the destination by means of path planning, obstacle detection and avoidance features. When the users reach the desired destination, they can freely control the wheelchair direction in shared-control mode. The destinations in automatic navigation mode and the directional commands in shared-control mode are selected through a P300 detection system. The combination of P300 and the eye-blinking signal is proposed in this study. Defined patterns of eye blinking corresponding to 2, 3, or 4 consecutive blinks were used for the stop/cancel command, enabling the P300 system and mode switching, respectively. The users could fully operate the proposed system by themselves. Moreover, the proposed automated navigation system was designed to be compatible with other assistive technologies. This research offers 3 additional modules, namely hand control, chin pointer and eye tracker modules, as alternative assistive technologies. These alternative input modules could be selected based on the disability level of the patient. Users who retain hand control (even if only for short periods of time, as in patients with muscle weakness or cerebral palsy, or the elderly) may consider using hand control. Users who cannot control their hands but can perform head-neck movements (as in spinal cord injury) may be suitable candidates for the chin pointer. Eye trackers and BCWs are intended for severe motor disability, such as in quadriplegic patients.

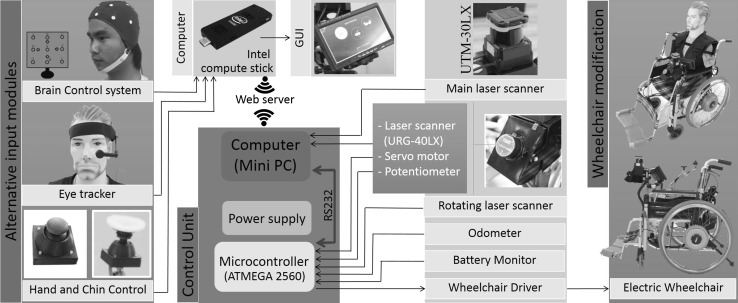

Figure 1 shows an overview of the proposed system in which the users can choose the most suitable assistive technologies for themselves. These alternative input modules can be plugged and processed using a computer (Intel compute stick). The classified output command from the compute stick is sent to another computer unit (mini PC) via wireless communication. This mini PC was used for wheelchair navigation by processing information from a main laser scanner, a rotating laser scanner and an odometer. The odometry data were acquired and processed via a microcontroller connected to the computer through serial communication (RS232). This microcontroller was also used as a peripheral processor, such as to control the rotational mechanism of a rotating laser scanner, to monitor the power from batteries and to drive the wheelchair according to the command velocities from the navigation system. The user can monitor the wheelchair status and their selected commands via a graphic user interface (GUI) on the monitor screen connected to the compute stick.

Fig. 1.

Overview of the proposed system; the main control unit is composed of a computer, a microcontroller and a power supply. The alternative assistive technologies can be plugged into this control unit. All hardware for wheelchair navigation, including the wheelchair driver, was interfaced with this main control unit. The user could monitor the wheelchair status and their commands via a graphical user interface on the monitor screen connected to the computer

P300 based BCW

System overview

P300 is a type of event related potential (ERP) that can be elicited post-stimulation. The stimuli can be light (visual evoked potential, VEP), sound (auditory evoked potential, AEP), or tactile (somatosensory evoked potential, SSEP). The P300 signal is elicited as a positive pulse approximately 300 ms after stimulus onset. A clear P300 response can be observed by averaging several trials. The P300 signal can be applied to many applications, such as use in spelling systems (Farwell and Donchin 1988), to control electric devices (Yunyong and Yodchanan 2012), in mobile phones (Krzysztof and Piotr 2015) and in electric wheelchairs (Kyuwan and Andrzej 2008; Kazuo et al. 2005; Johan et al. 2007; Christian et al. 2009; Inaki et al. 2009; Rossella et al. 2008; Brice et al. 2006, 2010).

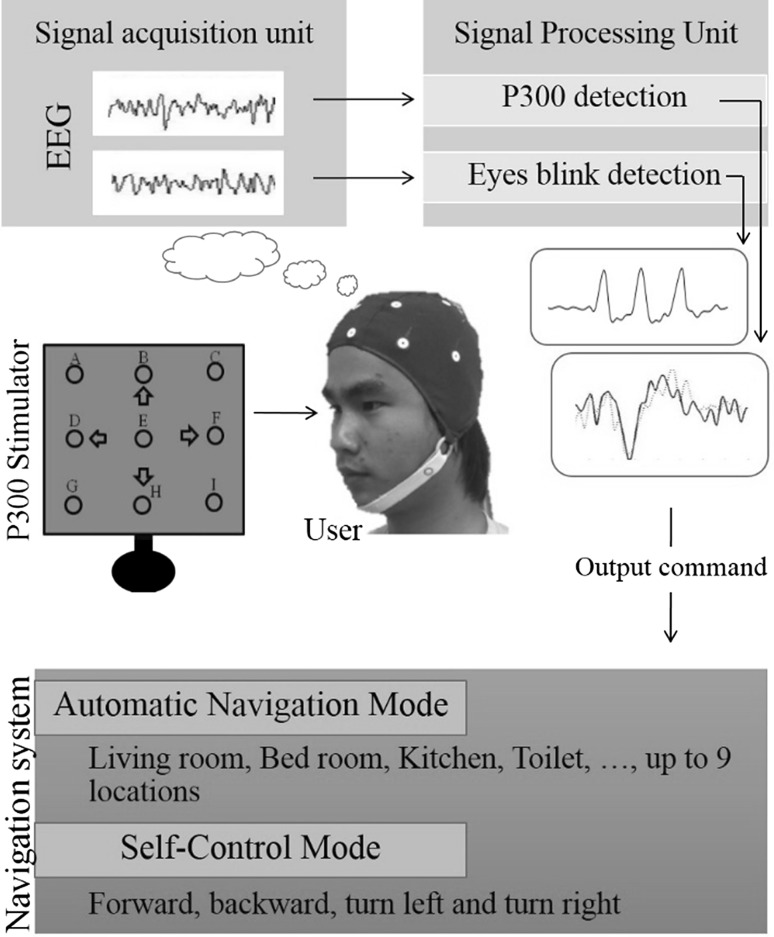

Our previous research demonstrated that the combination of eye blink artifacts and the P300 signal allowed the user to operate the wheelchair by themselves (Dilok and Yodchanan 2012). Our previous work offered 4 target destinations in automatic navigation mode and 4 directional commands in shared-control mode. Figure 2 shows the hybrid EOG and P300 system proposed in this study. The P300 stimulator was installed in front of the user, approximately 0.3 m from the subject’s eyes. The details of the P300 stimulator design are discussed in the next section. The EEG signals were recorded from the scalp via Ag/AgCl electrodes with conductive gel or salt solution. Generally, the P300 response occurs in the central, parietal and occipital areas. To obtain the P300 response, the electrodes were positioned at O2 (+), Cz (−) and Fz (ground) according to the 10–20 system EEG montage. Eye blink artifacts, or electrooculography (EOG) signals, can be clearly observed in the frontal area. Hence, electrodes were placed at Fp2 (+), C4 (−) and T4 (ground) for capturing the eye-blinking signal. These two weak signals (on the microvolt scale) were amplified by a bio-signal amplifier. This study used a BIOPAC amplifier for signal amplification and noise filtering. The amplified EEG signals were digitized by an NI USB-6008 data acquisition card. The digitized signals were processed to detect the P300 response and the eye-blinking signal by a LabVIEW program running on the compute stick. The proposed techniques for P300 detection and eye blink detection are discussed later.

Fig. 2.

P300-based BCW system; the user chooses the desired command by looking at the P300 stimulator. The output command was classified and sent to the navigation system. P300 and eye-blinking pattern were processed from the EEG signal measured at the user’s scalp

The P300 stimulator was initially off. The user could start the P300 stimulator by blinking 3 times within 2 s. Once the target stimuli were being flashed in sequence, the user could select the desired target command, such as the destination command in automatic navigation mode or the direction command in shared-control mode, by focusing only on the target to be selected. The user’s EEG signal was processed and classified to obtain the user command. The proposed system offered 9 destinations in automatic navigation mode and 4 directions in shared-control mode. The modes could be switched by blinking 4 times within 2 s. In case of emergency, the users could abort their command or stop the wheelchair by blinking 2 times within 2 s. The combination of the eye blink pattern and P300 allowed the wheelchair user to command the wheelchair in both automatic navigation and shared-control modes by themselves.
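The blink protocol above can be read as a small state machine. The following is a minimal sketch in Python, not the authors' LabVIEW implementation; the class name, the returned strings, the start-up mode and the choice to switch the stimulator off on a stop/cancel command are all illustrative assumptions.

```python
from enum import Enum


class Mode(Enum):
    AUTOMATIC = "automatic navigation"
    SHARED = "shared-control"


class HybridController:
    """Toy state machine for the blink-pattern protocol (hypothetical names)."""

    def __init__(self):
        self.mode = Mode.AUTOMATIC   # assumed start-up mode
        self.stimulator_on = False   # the stimulator is initially off

    def on_blinks(self, count):
        """Handle `count` blinks detected inside one 2 s window."""
        if count == 2:
            # Stop the wheelchair or cancel the command.
            # Assumption: this also switches the stimulator off.
            self.stimulator_on = False
            return "stop_or_cancel"
        if count == 3:
            self.stimulator_on = True   # start the P300 stimulator
            return "start_p300"
        if count == 4:
            # Toggle between automatic navigation and shared-control.
            self.mode = Mode.SHARED if self.mode is Mode.AUTOMATIC else Mode.AUTOMATIC
            return "switch_mode"
        return "ignored"

    def available_targets(self):
        """Targets selectable in the current mode: 9 destinations or 4 directions."""
        if self.mode is Mode.AUTOMATIC:
            return list("ABCDEFGHI")
        return ["forward", "backward", "left", "right"]
```

Keeping the blink handling separate from the P300 classifier mirrors the hybrid design: blinks only change the system state, while P300 selects among the targets that the current mode exposes.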

P300 stimulator design

The P300 stimulator used in this study was based on visual stimulation. The VEP response can be elicited when the subject focuses on a light source, e.g., a screen (LCD) or an LED. An LED light was used in this study because it is the most suitable for wheelchair control applications. In practice, the wheelchair might be employed in various locations that may have low light intensity. Less illumination can diminish P300 detection accuracy. Our previous study revealed that the LED-based P300 stimulator yields better performance than LCD monitors (Dilok and Yodchanan 2011). The accuracy of the LCD-based P300 stimulator is lower than that of the LED-based P300 stimulator when the light intensity is decreased.

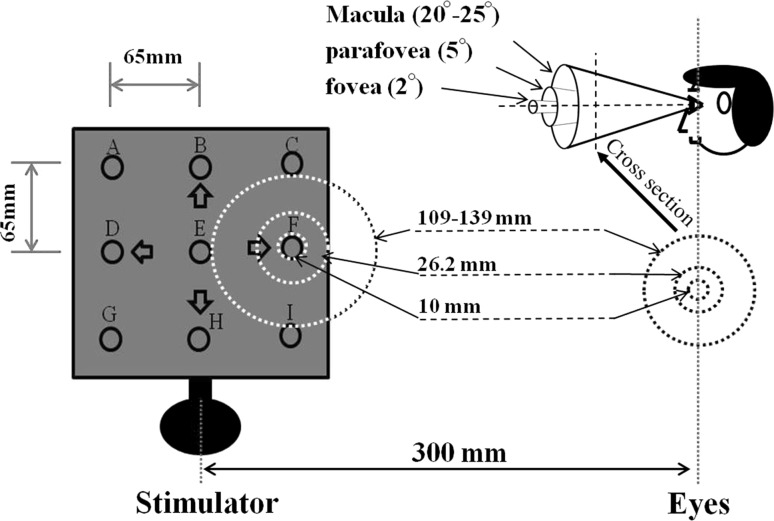

Figure 3 shows our P300 stimulator design for the proposed hybrid EOG and P300 system. This stimulator can be used for stimulating the target commands in both modes. The user can select 9 possible target destinations (A, B, C, …, I) and 4 directional targets (forward (B), backward (H), turn left (D) and turn right (F)) in the automatic navigation and shared-control modes, respectively. Each LED is controlled by a microcontroller embedded in the stimulator. This microcontroller is connected to the compute stick to receive start, mode shifting and stop commands and to send the stimulus sequence for P300 signal segmentation. After stimulus onset for each target, EEG data are segmented and averaged to yield a clear P300 response.

Fig. 3.

P300 stimulator design; visual field effect consideration to avoid non-target interference

The P300 detection accuracy and the command transfer rate decrease with the number of targets and depend on their positions. When a target is placed adjacent to a non-target, the non-target may distract the user and cause a low transfer rate and accuracy. In this study, the human visual field was considered (see Fig. 3) to eliminate interference between the target and non-target stimuli. Normally, the visual angles of the fovea, the parafovea and the macula are 2, 5 and 20–25 degrees, respectively (Horacio et al. 2011; Gray et al. 1997). When the stimulator was installed 300 mm from the user’s eyes, the diameter of the visual field projected onto the stimulator could be calculated by simple trigonometry. The visual field at the stimulator was approximately 10, 26.2 and 109–139 mm for the fovea, parafovea and macula angles, respectively. Therefore, our proposed P300 stimulator used a minimal clearance between targets of approximately 65 mm, so that non-target stimuli would not be presented within the fovea and parafovea fields. The target stimulus (an LED of 10 mm diameter) would appear at the focal point of the fovea. The clearance between target and non-target in shared-control mode was larger than in automatic navigation mode because the forward (B), backward (H), turn left (D) and turn right (F) targets were located 90 mm from one another. It was hypothesized that the accuracy and transfer rate in shared-control mode would be higher than in automatic mode due to the clearance and matrix size. In automatic navigation mode, the inter-stimulus interval (ISI) and the flash time interval were 400 ms and 100 ms, respectively, whereas in shared-control mode they were 200 ms and 100 ms, respectively. The overall size of the proposed P300 stimulator was 170 × 170 mm.
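The visual-field figures quoted above can be checked with a few lines of Python. This is a sketch under one assumption: the projected extent is taken as distance × tan(angle), which is the relation that reproduces the quoted values; the function name is hypothetical.

```python
import math


def projected_size_mm(distance_mm, visual_angle_deg):
    """Extent on the stimulator plane subtended by a visual angle.

    Assumption: size = distance * tan(angle), chosen because it reproduces
    the figures quoted in the text.
    """
    return distance_mm * math.tan(math.radians(visual_angle_deg))


DISTANCE_MM = 300  # stimulator-to-eye distance given in the text

for region, angle_deg in [("fovea", 2), ("parafovea", 5),
                          ("macula (low)", 20), ("macula (high)", 25)]:
    print(f"{region}: {projected_size_mm(DISTANCE_MM, angle_deg):.1f} mm")
```

Under this assumption the results are roughly 10.5, 26.2, 109.2 and 139.9 mm, in line with the rounded values in the text, and the 65 mm minimum clearance lies well outside the parafoveal extent.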

P300 detection

Many studies have sought to achieve high accuracy and transfer rates by using several EEG channels with a complicated algorithm. The best reported P300 detection accuracy was approximately 94.5% (Miami 2009; Ulrich et al. 2008), obtained with 8 EEG channels in a P300-based brain spelling system. In practice, placing a high number of electrodes on the scalp is time consuming and requires a skillful assistant to set up the device. Moreover, processing a complicated algorithm based on several EEG channels is computationally expensive.

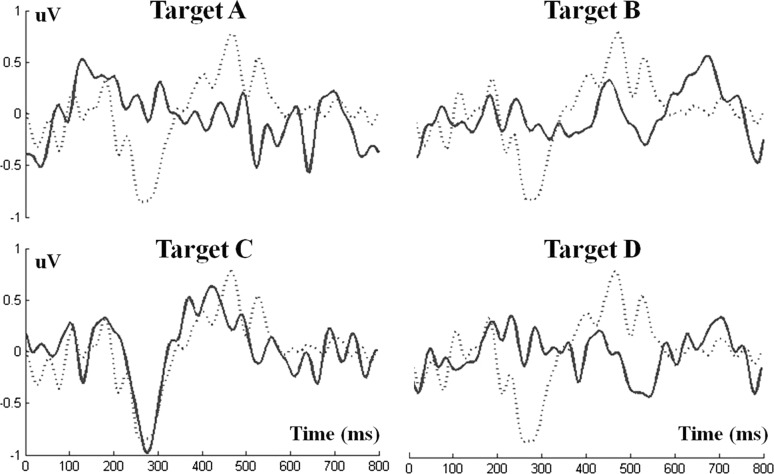

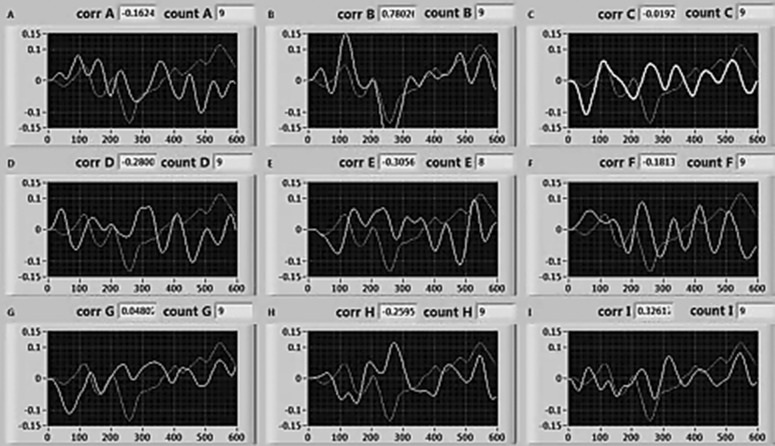

This research used one EEG channel for P300 detection. This EEG signal was captured from O2, Cz and Fz, which corresponded to the plus, minus and ground electrodes, respectively. Analog-to-digital conversion was performed at a 200 Hz sampling frequency. When the P300 stimulator was started, the targets were flashed one at a time in random order. The stimulated target sequence was sent to the compute stick as a marker for EEG signal segmentation. The EEG data were segmented for 800 ms after stimulus onset. Mean removal was applied to each trial to eliminate movement artifacts. All sub-trials of each target were averaged to remove background noise. The grand average was then filtered by a fourth-order Butterworth band-pass filter in the range of 0.5–25 Hz. Figure 4 (bold line) shows the grand average from 10 epochs of targets A, B, C and D. The user was focusing on target C, so its grand average contained clear N200 and P300 components. Because our designed P300 stimulator could elicit strong N200 and P300 responses, P300 detection could be performed by a template matching technique. Figure 4 (dotted line) shows a P300 template that was recorded prior to classification. The template could be recorded by focusing on one target on the P300 stimulator for 40 s (approximately 33 trials). This template was user dependent. Figure 4 demonstrates that target C was the best match to the P300 template.
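The segmentation and averaging steps described above can be sketched as follows. This is an illustrative pure-Python outline rather than the authors' LabVIEW code: the function names and the `(onset_index, target_label)` marker format are assumptions, and the 0.5–25 Hz Butterworth band-pass stage is omitted to keep the example dependency-free.

```python
FS_HZ = 200                            # sampling frequency from the text
EPOCH_MS = 800                         # segment length after stimulus onset
EPOCH_LEN = FS_HZ * EPOCH_MS // 1000   # 160 samples per epoch


def extract_epoch(eeg, onset_index):
    """Cut one 800 ms epoch starting at the stimulus onset and remove its mean."""
    epoch = eeg[onset_index:onset_index + EPOCH_LEN]
    if len(epoch) < EPOCH_LEN:
        raise ValueError("recording ends before the epoch is complete")
    mean = sum(epoch) / EPOCH_LEN
    return [v - mean for v in epoch]


def grand_averages(eeg, markers):
    """Average the mean-removed epochs of each target.

    `markers` is a list of (onset_index, target_label) pairs, standing in for
    the stimulus sequence sent by the stimulator (assumed format).
    """
    sums, counts = {}, {}
    for onset, target in markers:
        epoch = extract_epoch(eeg, onset)
        acc = sums.setdefault(target, [0.0] * EPOCH_LEN)
        for i, v in enumerate(epoch):
            acc[i] += v
        counts[target] = counts.get(target, 0) + 1
    return {t: [v / counts[t] for v in acc] for t, acc in sums.items()}
```

In a fuller pipeline, each grand average would then pass through the band-pass filter before being compared with the user's P300 template.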

Fig. 4.

Correlation coefficient calculation between the recorded P300 template and the current signal for the A, B, C and D targets; the subject was asked to concentrate on target C. Therefore, target C was the best match to the P300 template

The similarities between the recorded P300 template and a current grand averaged P300 response can be calculated according to the correlation coefficient as given in Eq. 1.

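$$r = \frac{1}{n-1}\sum_{i=1}^{n}\left(\frac{x(i)-\bar{x}}{S_x}\right)\left(\frac{y(i)-\bar{y}}{S_y}\right) \tag{1}$$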

where r is the correlation coefficient (a value between −1 and 1) between the P300 template and the current P300 response, x(i) is the template, y(i) is the input signal, which could be either a target or a non-target P300 signal, n is the number of samples, x bar and y bar are the means of the x(i) and y(i) signals, and Sx and Sy are the standard deviations of the x(i) and y(i) signals, respectively.

The correlation coefficients of targets A, B, C and D in Fig. 4 with respect to the template were −0.1147, 0.1881, 0.7293 and −0.1474, respectively. For decision making, the percentage difference between the greatest value (max1) and the second greatest value (max2) was calculated. If this percentage was greater than the set percent of detection (POD), which was initially 20%, the target with the maximum value would be selected as the output command. For example, in Fig. 4, the correlation coefficients of max1 (target C) and max2 (target B) were 0.7293 and 0.1881, respectively. The percentage difference was 54.12%, which was greater than the POD (20%). Therefore, target C would be classified as the output command. Each target stimulus would be repeated a maximum of 20 times. No output was sent to the navigation system if the percentage difference between max1 and max2 was still less than the defined POD at time-out. In contrast, an output command could be classified earlier if the target response matched the template.
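The template matching and decision rule can be illustrated with a short Python sketch. It is an assumption-laden outline, not the authors' implementation: the function names are hypothetical, and the margin is taken as (max1 − max2) × 100, which is the reading that reproduces the 54.12% in the worked example.

```python
import math


def correlation(x, y):
    """Pearson correlation coefficient between two equal-length sequences.

    Undefined (division by zero) if either sequence is constant.
    """
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def select_target(scores, pod_percent=20.0):
    """Return the winning target, or None when the margin is below the POD.

    `scores` maps target labels to correlation coefficients.
    Assumption: margin = (max1 - max2) * 100.
    """
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    (best, max1), (_, max2) = ranked[0], ranked[1]
    margin = (max1 - max2) * 100.0
    return best if margin > pod_percent else None


# Correlation coefficients reported for Fig. 4.
fig4_scores = {"A": -0.1147, "B": 0.1881, "C": 0.7293, "D": -0.1474}
print(select_target(fig4_scores))  # prints "C"
```

Returning `None` corresponds to the case in which no command is sent; a caller would keep accumulating repetitions (up to the 20-repetition limit) and re-evaluate after each one.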

Eye-blinking detection

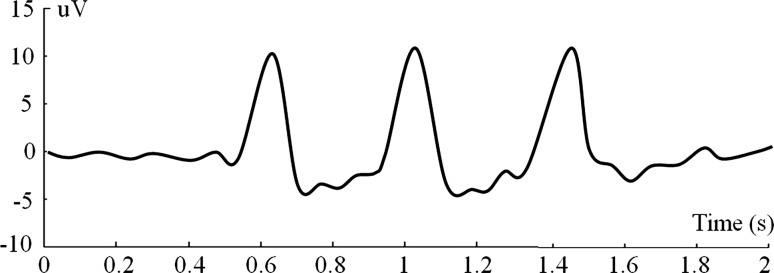

A pulse of the eye blink artifact could be clearly observed in the frontal area. Figure 5 shows an EEG signal containing 3 eye-blinking pulses. This EEG signal was captured from electrodes placed at Fp2, C4 and T4, which served as the plus, minus and ground electrodes, respectively. The signal was converted from analog to digital at a 200 Hz sampling frequency. A fourth-order Butterworth band-pass filter in the range of 1–10 Hz was applied to the EEG signal to remove the high-frequency components and the DC offset (zero frequency). A pulse of eye blinking could be detected by a template matching method. The proposed eye-blinking detection used a Gaussian function as the template because its shape is similar to the eye blink artifact pulse. Because the amplitude and width of a Gaussian function can be adjusted, the generated bell shape can be tuned individually for each user.

Fig. 5.

EEG signal contains 3 blinking artifacts

A pulse of eye blink artifact was detected by calculating the correlation coefficient between the template Gaussian function and the sampled EEG data using Eq. 1. A 250 ms (50-element) window of EEG data with 98% overlap was continuously matched to the 50-element (250 ms) array of the Gaussian function. Because the value of the correlation coefficient ranges from −1 to 1, the detection criterion (threshold) was set initially to 0.5 but could be adjusted. If 3, 4 or 2 blinks were detected, the P300 system would be started, the control mode would be switched, or the navigation would be stopped (or the selected command aborted), respectively.
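A sliding-window version of this detector might look like the sketch below. It assumes that a 98% overlap of a 50-sample window corresponds to a one-sample step and that consecutive above-threshold windows belong to the same blink; the template width `sigma` and all function names are illustrative choices, not values from the study.

```python
import math

WINDOW = 50        # 250 ms at 200 Hz
STEP = 1           # assumed: 98% overlap of a 50-sample window
THRESHOLD = 0.5    # initial detection criterion from the text


def gaussian_template(length=WINDOW, sigma=8.0):
    """Bell-shaped template; `sigma` (in samples) is an illustrative choice."""
    centre = (length - 1) / 2
    return [math.exp(-0.5 * ((i - centre) / sigma) ** 2) for i in range(length)]


def correlation(x, y):
    """Pearson correlation; a flat window is treated as no match (0.0)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def count_blinks(signal, template=None, threshold=THRESHOLD):
    """Count runs of consecutive windows whose correlation exceeds the threshold."""
    template = template or gaussian_template()
    blinks, inside = 0, False
    for start in range(0, len(signal) - WINDOW + 1, STEP):
        r = correlation(template, signal[start:start + WINDOW])
        if r > threshold and not inside:
            blinks += 1          # rising edge: a new blink begins
        inside = r > threshold
    return blinks
```

Because the correlation coefficient ignores amplitude, a practical detector would likely add an amplitude check so that low-level noise with a bell-like shape is not counted; that refinement is outside what the text describes. Passing a 2 s buffer (400 samples) to `count_blinks` yields the blink count that selects the command.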

Alternative input modules

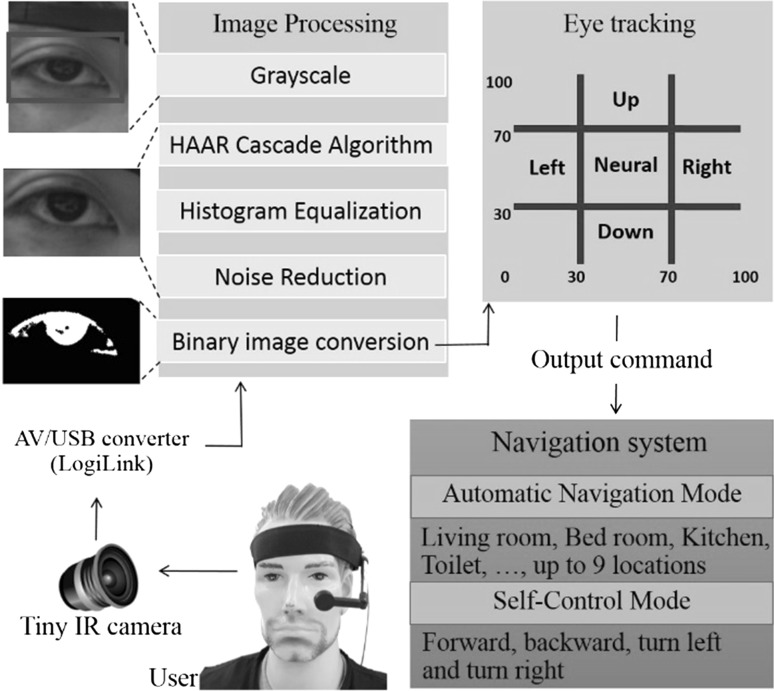

Eye tracking system

Some research groups have developed eye-controlled wheelchairs (Gautam 2014; Arai and Ronny 2011; Dilok et al. 2014) that allow disabled people to control the wheelchair direction using their eyes. Some systems include a proximity sensor to avoid collisions. However, the available systems use a large camera attached to goggles (Gautam 2014) or eyeglasses (Arai and Ronny 2011), which may obstruct the user’s vision. Moreover, controlling the wheelchair with eye movements over long distances causes user fatigue.

Our previous work (Dilok et al. 2014) demonstrated that our eye tracking-based wheelchair control allowed the wheelchair user to control the wheelchair direction and select a destination. The pupil direction was captured by a tiny camera and processed on an embedded board (Raspberry Pi). Use of a tiny camera diminished visual obstruction to the user. Moreover, the user had less fatigue when travelling long distances in automated navigation mode. However, our previous design used a CMOS camera (Pi camera for Raspberry Pi) whose accuracy depends on light intensity. Illumination control is not possible in this research because the wheelchair must be usable in various environments. Moreover, the customized glasses caused discomfort to the user due to their structure.

To eliminate the light intensity problem, a tiny infrared (IR) camera was used in this study. This IR camera was attached to a headband, as shown in Fig. 6. Figure 7 shows our prototype of the integrated headband-camera module. The camera was installed on an adjustable actuator, which was used to adjust the camera position individually for each user. The user could rotate the actuator around the x and z axes and translate it along the x axis. The analog output from the camera was converted to a digital image using a LogiLink AV/USB converter. The digitized image was then processed on the compute stick at 30 frames per second. The raw image was converted to a grayscale image. The user’s eye was localized in the image by a Haar cascade algorithm and segmented as the area of interest. The segmented image underwent histogram equalization and noise reduction for image quality enhancement. Finally, this image was converted to a binary image, as shown in Fig. 6. Summation of the binary image along the x and y axes was used for pupil direction classification. If the maximum values on both the x and y axes were located between 30 and 70% of the segmented image scale, this was considered the neutral condition. When the maximum value on the x axis was located above 70% or below 30% while the maximum value on the y axis remained neutral, these were considered the right and left conditions, respectively. If the maximum value on the y axis was located above 70% or below 30% while the maximum value on the x axis remained neutral, these were considered the up/forward and down/backward conditions, respectively.
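The projection-based classification rule can be sketched in Python as follows. The sketch assumes that pupil pixels are 1 in the binary image, that the position of each projection maximum is expressed as a fraction of the image width or height, and that the y axis is oriented as in the text (above 70% means up/forward); the function names are hypothetical.

```python
def projection_peaks(binary_image):
    """Return the (x, y) positions of the column-sum and row-sum maxima as fractions.

    `binary_image` is a list of rows of 0/1 values with at least 2 rows and
    2 columns; pupil pixels are assumed to be 1.
    """
    height, width = len(binary_image), len(binary_image[0])
    col_sums = [sum(row[x] for row in binary_image) for x in range(width)]
    row_sums = [sum(row) for row in binary_image]
    x_peak = col_sums.index(max(col_sums)) / (width - 1)
    y_peak = row_sums.index(max(row_sums)) / (height - 1)
    return x_peak, y_peak


def classify_gaze(x_peak, y_peak, low=0.30, high=0.70):
    """Map peak positions to a command using the 30%/70% bands from the text."""
    x_neutral = low <= x_peak <= high
    y_neutral = low <= y_peak <= high
    if x_neutral and y_neutral:
        return "neutral"
    if y_neutral:
        return "right" if x_peak > high else "left"
    if x_neutral:
        return "forward" if y_peak > high else "backward"
    return None  # both axes off-centre: not covered by the text
```

The case in which both axes leave the neutral band at the same time is not specified in the text, so the sketch returns `None` there rather than guessing a command.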

Fig. 6.

Eye tracker-based wheelchair control; pupil direction is captured by a tiny IR camera. The captured image is converted, processed and classified to obtain the output command

Fig. 7.

Integrated headband-camera; a tiny IR camera was mounted to an adjustable actuator attached at the headband. This camera could be adjusted in the x axis and rotated on the x and y axis individually

The users could control the wheelchair in both automated navigation and shared-control modes using the defined protocol. In automatic navigation mode, the users selected a destination shown on the monitor screen with the eye tracking system and confirmed their selection by blinking. After command confirmation, the wheelchair navigated automatically to the desired destination. The user could switch modes by closing their eye until a beep sounded; the wheelchair would then stop and change mode. In shared-control mode, the wheelchair was steered in the direction of the user’s eye movement.

Hand and chin control

Users who can use their hands, such as the elderly or SCI patients with injury below the C8 level, may consider using hand control. If their hands are not functional but head-neck movements can be performed, such as in SCI at the C5–C8 level or cerebral palsy, such users may select chin control. Both the hand (see Fig. 8 top right) and chin (see Fig. 8 bottom right) joysticks employed the same principle as the wheelchair’s own joystick. Each was composed of two variable resistors whose values changed according to the joystick direction. The two analog signals were acquired by the microcontroller and processed in the compute stick. Each customized joystick also embedded a push switch so that the joystick could be pressed for an additional command, such as mode shifting or command confirmation. The hand and chin joysticks were mounted at the end-effector of the 3 DOF actuator, as shown in Fig. 8 (left). This actuator could adjust the joystick position individually for each user.

Fig. 8.

Hand and chin input modules; hand (top right) and chin (left below) joysticks were mounted on a three degree of freedom (DOF) actuator installed on the wheelchair. The 3 DOF actuator could be used to adjust the joystick position individually for each user

Controlling the modified wheelchair by either hand or chin control is straightforward. The user can operate the wheelchair in both automatic navigation and shared-control modes. In automatic navigation mode, the user chooses a destination by using the hand or chin joystick to move the pointer on the GUI on the monitor screen. The users confirm their command by pressing the joystick. The selected command is sent to the navigation unit for the wheelchair navigation task. The user can switch between automatic navigation mode and shared-control mode by pressing the joystick for approximately 4 s until a beep occurs. In shared-control mode, the user controls the wheelchair direction using the hand or chin joystick.

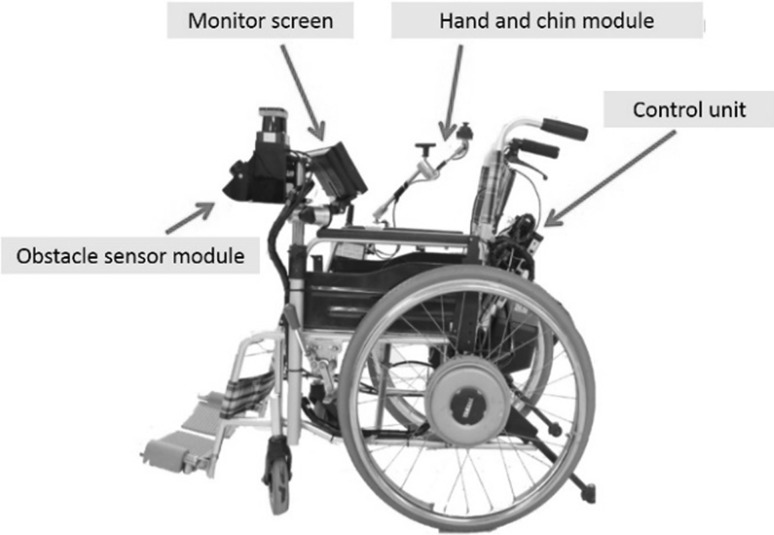

Wheelchair modification

Most disabled people already have their own familiar wheelchair. Therefore, the proposed system was developed as an add-on device that could be installed on an existing electric wheelchair. This study used a YAMAHA lightweight JWX-1 electric wheelchair. Figure 9 shows the main components that need to be installed on the wheelchair. The positioning of the components on the wheelchair is important because some components may obstruct the user when getting into and out of the wheelchair. Our previous works (Dilok and Yodchanan 2012) and existing studies (Kyuwan and Andrzej 2008; Kazuo et al. 2005; Johan et al. 2007; Christian et al. 2009; Inaki et al. 2009; Rossella et al. 2008; Brice et al. 2006, 2010) installed some components in front of the user. Even though such a component can be folded away, it causes many difficulties for the user and their assistant and makes the user feel uncomfortable. In this research, the obstacle sensor module and the monitor were mounted on a metal rod attached at the front-left side of the wheelchair, so the user can easily move into and out of the wheelchair. The position and angle of the obstacle sensor module and monitor can be adjusted according to the physical condition of each user. The control unit was installed on the back side of the wheelchair. For hand or chin users, the hand or chin joystick was mounted on the right armrest of the wheelchair.

Fig. 9.

System installation on a normal electric wheelchair; the first module, composed of obstacle sensors and a monitor, was installed at the front-left side. The second module, the control unit, was installed on the back side of the wheelchair. For hand and chin users, the hand or chin joystick was mounted at the right side of the wheelchair

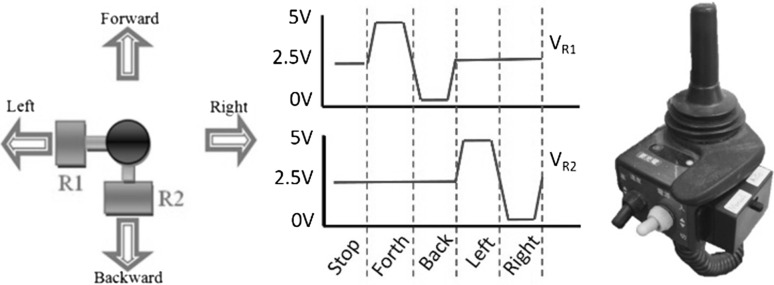

A normal electric wheelchair is controlled by a joystick mounted on the wheelchair. To control the wheelchair direction from the developed navigation system, the wheelchair’s joystick needs to be modified. Generally, a wheelchair’s joystick is composed of two variable resistors that change according to joystick movement (see Fig. 10 left). Figure 10 (middle) shows the voltage output from both variable resistors (VR1 and VR2) in each condition. The wheelchair is stopped when VR1 and VR2 are at 2.5 volts. The wheelchair moves forward, backward, turns left or turns right when VR1 is 5 or 0 volts and VR2 is 5 or 0 volts, respectively. Hence, the wheelchair can be controlled to move in the desired direction by generating a pattern of voltages that are delivered to the wheelchair. These two voltages were generated by an integrated circuit (IC) called a PCF8574. This digital-to-analog converter (DAC) IC is controlled by a microcontroller via an I2C bus. Figure 10 (right) shows the modified wheelchair’s joystick, which could switch between the former joystick and the proposed navigation system controls.
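The voltage pattern described above can be sketched as a small lookup table. This is only an illustration: the text gives the 2.5 V neutral level and the 0 V and 5 V extremes, while the assignment of VR1 to the forward/backward axis and VR2 to the left/right axis, the 8-bit code scaling, and all names (`COMMAND_VOLTAGES`, `voltage_to_code`, `command_to_codes`) are assumptions made for this sketch.

```python
# Hypothetical lookup of joystick-emulation voltages (VR1, VR2) per command.
# Assumption: VR1 is the forward/backward axis and VR2 the left/right axis,
# with the unused axis held at the 2.5 V neutral level.
NEUTRAL_V = 2.5
FULL_SCALE_V = 5.0

COMMAND_VOLTAGES = {
    "stop": (NEUTRAL_V, NEUTRAL_V),
    "forward": (5.0, NEUTRAL_V),
    "backward": (0.0, NEUTRAL_V),
    "left": (NEUTRAL_V, 5.0),
    "right": (NEUTRAL_V, 0.0),
}


def voltage_to_code(volts, bits=8):
    """Scale a 0-5 V target to an integer output code (bit depth is assumed)."""
    if not 0.0 <= volts <= FULL_SCALE_V:
        raise ValueError("voltage outside the 0-5 V range")
    return round(volts / FULL_SCALE_V * (2 ** bits - 1))


def command_to_codes(command):
    """Return the pair of output codes that emulates a joystick command."""
    vr1, vr2 = COMMAND_VOLTAGES[command]
    return voltage_to_code(vr1), voltage_to_code(vr2)
```

In a real controller the two codes would be written to the voltage-generating IC over the I2C bus; that step is omitted here because it depends on the hardware.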

Fig. 10.

Joystick modification; a pattern of control voltages was generated by an integrated circuit (IC) in the main control unit

Wheelchair navigation system

Obstacle sensor module

Safety and accuracy are the most important issues in a wheelchair navigation system. The system must be able to sense the environment and obstacles and to localize itself on the map. Our previous work implemented a sensor module that used a laser scanner (HOKUYO, URG-04LX 240) in conjunction with a 3D camera (Kinect Xbox) [33]. The system was localized by processing the distances to the detected environment over 0 to 240 degrees about the wheelchair. Obstacles beneath the 2D plane of the laser scanner were detected from the point cloud of the 3D camera. However, the URG-04LX 240 has a short detection range (4 meters), so the wheelchair would lose localization when the environment or markers were outside of the detection range. Moreover, the 3D camera has a narrow angle of detection (43.5 degrees), so the wheelchair could collide with obstacles when it turned left or right.

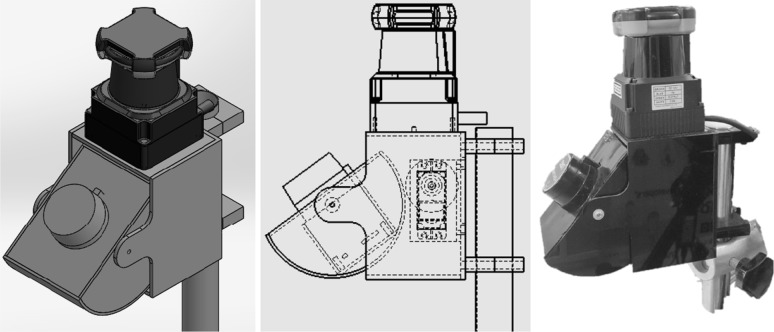

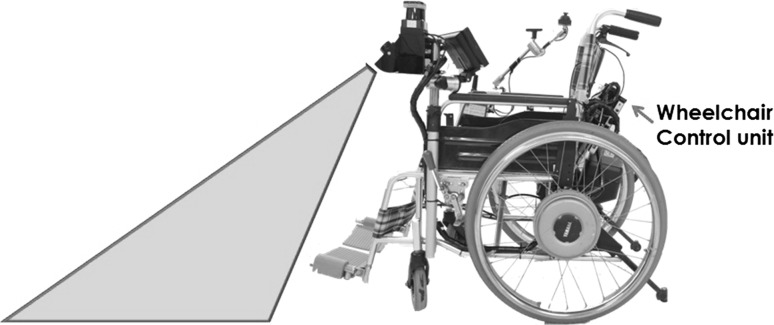

This research aimed to develop a robust navigation system for wheelchair control. The environment and obstacles were detected by a combination of a long-distance laser scanner and a rotating laser scanner. Figure 11 shows the implemented obstacle sensor module. The main laser scanner, the UTM-30LX (HOKUYO), which has a long detection distance of 30 meters and a wide detection angle of 270 degrees, was used for high-performance wheelchair localization. This main laser scanner was mounted on top of the module. The rotating laser scanner was the short-range URG-04LX, installed in a semicircular cylindrical case for 3D obstacle detection. This laser scanner was rotated in the range of −30 to −50 degrees from the horizontal plane by a servo motor, as shown in Fig. 12 (red area). The servo motor was driven at a rate of approximately 0.1 s/degree by the microcontroller in the main control unit. The rotation angle was measured by a linear potentiometer. The distances over the entire range (240 degrees) of the URG-04LX were matched with the angle information from the linear potentiometer for 3D obstacle reconstruction, as shown in Fig. 15. This wide-area modified 3D obstacle sensor could detect obstacles on the left and right sides of the wheelchair. The obstacle sensor module was fastened to the metal rod attached to the wheelchair, as shown in Fig. 12.
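Matching each range reading with the measured tilt angle amounts to a spherical-to-Cartesian conversion. The following is a minimal sketch of that idea, not the authors' implementation: the axis convention (x forward, y left, z up), the assumption that the scanner tilts about its lateral axis, the evenly spaced beams and the function name `scan_to_points` are all hypothetical.

```python
import math


def scan_to_points(ranges_m, tilt_deg, fov_deg=240.0):
    """Convert one tilted 2D scan into 3D points in the scanner's own frame.

    ranges_m: distances in meters, assumed evenly spaced over fov_deg.
    tilt_deg: tilt of the scan plane from horizontal (negative = downward),
              e.g. the angle read from the potentiometer.
    """
    n = len(ranges_m)
    phi = math.radians(tilt_deg)
    points = []
    for i, r in enumerate(ranges_m):
        if r is None or r <= 0.0:
            continue  # skip invalid or missing readings
        # In-plane beam angle, from -fov/2 to +fov/2 (0 if there is one beam).
        theta = math.radians(-fov_deg / 2 + fov_deg * i / (n - 1)) if n > 1 else 0.0
        forward = r * math.cos(theta)  # component along the scanner's forward axis
        x = forward * math.cos(phi)    # tilt rotates the forward component
        y = r * math.sin(theta)        # lateral component is unaffected by tilt
        z = forward * math.sin(phi)    # negative tilt gives points below the sensor
        points.append((x, y, z))
    return points
```

Accumulating the points from successive tilt angles gives a 3D point set in front of the wheelchair, which is the kind of data the obstacle layer of a cost map consumes.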

Fig. 11.

Obstacle sensor module; a main laser scanner (top) was used for sensing long distance environments in a fixed 2 dimensional plane, and a rotating laser scanner was used for short length obstacle detection in 3 dimensions in front of the wheelchair

Fig. 12.

Area of detection of the rotating laser scanner; obstacles in the red area would be detected

Fig. 15.

Wheelchair navigation on a 2D map; the wheelchair was controlled from one destination (green rod) to another destination (red rod) by following the planned path (green line). (Color figure online)

Main control unit

Figure 13 shows the main control unit, which was composed of three main components: a computer, a microcontroller and a power management unit. The computer, a Lenovo mini PC IdeaCentre Q190, was used for navigation processing and for communicating with the compute stick to obtain user commands from the alternative assistive technologies. This mini PC was attached to the black box, as shown in Fig. 13. The UTM-30LX and URG-04LX laser scanners were connected to the mini PC through the USB1 and USB2 ports, respectively. The microcontroller, an Arduino ATMEGA2560, communicated with the mini PC via a USB-to-serial converter at the USB3 port. The Arduino ATMEGA2560 and its peripheral circuit, which were designed to be compatible with the particular peripheral devices, were embedded in the black box. The Arduino ATMEGA2560 controlled the servo motor that revolved the rotating laser scanner by pulse width modulation (PWM) and read the rotation angle from the linear potentiometer via its ADC. Odometry was implemented by interfacing with the two encoders, one embedded in each wheel, via interrupt inputs. The wheelchair was controlled by the two analog signals generated by the digital-to-analog converter (DAC) IC, which communicated with the Arduino ATMEGA2560 via the I2C bus. The power for all devices was supplied by two rechargeable 22.2 V, 6 Ah lithium batteries. The power management circuit was designed to convert the battery input voltage into 5, 12 and 19 V outputs via a switching regulator circuit. The 5 V DC supply powered the microcontroller and its peripheral circuit, the laser scanners, the servo motor and the wheelchair driving circuit. The 12 V DC supply powered the monitor screen and cooling fans. The 19 V DC supply powered the mini PC.
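Odometry from two wheel encoders is commonly computed with a differential-drive model. The sketch below illustrates that standard model only; the encoder resolution, wheel radius and wheel separation are placeholder values chosen for the example, not measurements of the wheelchair used in this study, and `update_pose` is a hypothetical name.

```python
import math


def update_pose(pose, d_ticks_left, d_ticks_right,
                ticks_per_rev=1024, wheel_radius_m=0.28, wheel_base_m=0.55):
    """Advance an (x, y, heading) pose from encoder tick increments.

    All default geometry values are placeholders (assumptions), not values
    taken from the wheelchair described in the text.
    """
    x, y, heading = pose
    meters_per_tick = 2 * math.pi * wheel_radius_m / ticks_per_rev
    d_left = d_ticks_left * meters_per_tick
    d_right = d_ticks_right * meters_per_tick
    d_center = (d_left + d_right) / 2            # distance travelled by the base
    d_heading = (d_right - d_left) / wheel_base_m  # change in heading (rad)
    # Integrate along the mid-point heading of the step.
    x += d_center * math.cos(heading + d_heading / 2)
    y += d_center * math.sin(heading + d_heading / 2)
    return (x, y, heading + d_heading)
```

Calling the function once per encoder sampling interval accumulates the pose estimate; because wheel slip makes this estimate drift, navigation stacks typically correct it with a scan-based pose estimate.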

Fig. 13.

Main control unit; composed of a computer (mini PC), a microcontroller unit and a power management unit

Wheelchair navigation processing

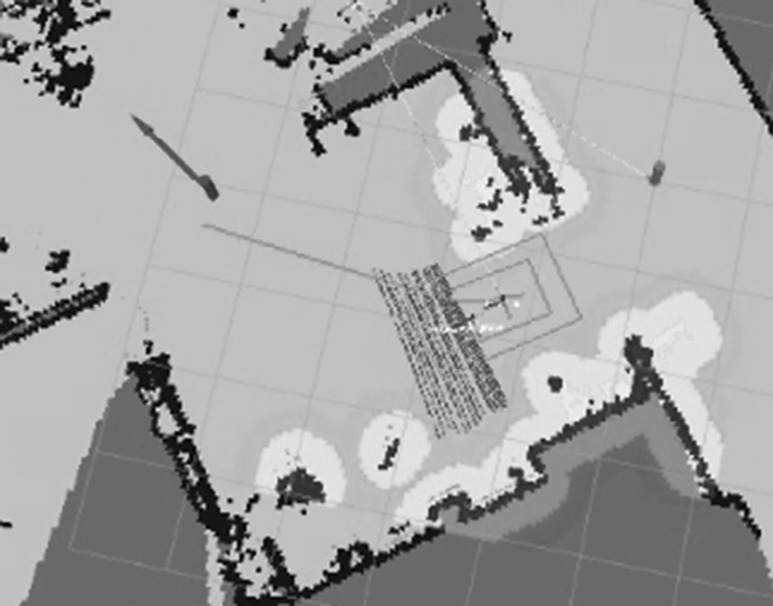

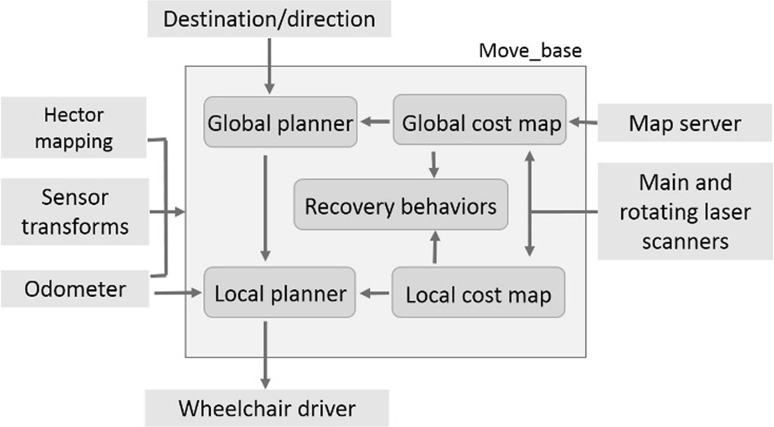

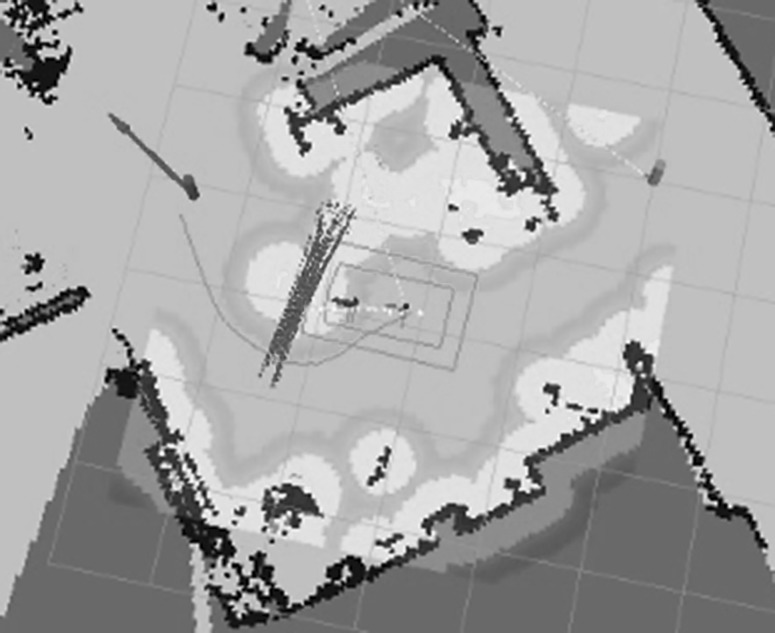

The navigation system was developed based on the open-source Robot Operating System (ROS) (Morgan et al. 2009). The ROS was installed and operated on the mini PC running Linux. The ROS provides packages that handle particular tasks, such as map building, path planning and navigation. All sensors and other peripheral devices must be configured for the ROS navigation system. The coordinates (x, y, z) of the laser scanners on the wheelchair must be defined to provide a transformation tree to the ROS packages. In this study, the base link (0, 0, 0) was the position between the two wheels of the wheelchair on the floor. The main and rotating laser scanners were positioned at (0.45, 0.19, 0.84) and (0.52, 0.19, 0.084), respectively. Therefore, all objects detected by each laser scanner could be transformed to the base frame. The wheelchair geometry (footprint) and clearance, shown as the small and large green boxes in Fig. 14, respectively, must be declared to prevent collision with obstacles.
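The role of the sensor coordinates can be illustrated with a translation-only transform into the base frame. This is a simplified sketch: it uses the two offsets quoted above, assumes the offsets are in meters and that each scanner frame is aligned with the base frame (no rotation), and the names `SENSOR_OFFSETS_M` and `to_base_frame` are hypothetical. In the actual system this bookkeeping is handled by the ROS transformation tree.

```python
# Sensor positions relative to the base link, as quoted in the text.
# Assumption: units are meters and each sensor frame is axis-aligned
# with the base frame, so only a translation is needed.
SENSOR_OFFSETS_M = {
    "main_laser": (0.45, 0.19, 0.84),
    "rotating_laser": (0.52, 0.19, 0.084),
}


def to_base_frame(point, sensor):
    """Translate a point (x, y, z) from a sensor frame to the base frame."""
    ox, oy, oz = SENSOR_OFFSETS_M[sensor]
    px, py, pz = point
    return (px + ox, py + oy, pz + oz)
```

For example, an obstacle detected 1 m straight ahead of the main laser scanner would lie about 1.45 m ahead of the base link under these assumptions.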

Fig. 14.

Overview of the navigation system in the ROS

Map building

A 2D map was generated by the hector mapping package (Stefan et al. 2013), which uses a simultaneous localization and mapping (SLAM) approach (Hugh and Tim 2006a,b). Environmental information acquired by the main laser scanner (UTM-30LX) was used for map building. This ROS package provides a 2D pose estimate that allows the system to localize the wheelchair position and heading on the map. Figure 14 shows a 2D map of the BCI lab at Mahidol University, where white, black and grey pixels refer to clear areas, obstacles and unexplored areas, respectively. The green box indicates the estimated current location and pose of the wheelchair on the 2D map. The user could record up to 9 goal destinations, such as a bedroom, living room, kitchen, or bathroom, on the map, as shown by the green rod in Fig. 15.

Navigation

Figure 14 shows an overview of the ROS navigation system. When the navigation system received a destination command, the global planner package determined a possible path (rough path) from the current location to the desired destination based on the global cost map from the map server. Figure 15 shows a planned path in green from the base frame (wheelchair) to the defined target destination (red rod). Then, the local planner package determined a local path based on the local cost map built from the odometry and the UTM-30LX and URG-04LX (rotating) laser scanners. The local cost map information was then updated in the 2D map as bright pixels, as in Fig. 15. Cyan pixels represent the clearance for collision prevention. The red lines indicate obstacle scanning by the URG-04LX laser scanner, rotated in steps of 1 degree from −30 to −50 degrees. To control the wheelchair, the move-base package was used to determine the command velocities (direction and velocity) that drive the base frame (wheelchair) along the local path. These command velocities were calculated from the local path planning information. As the wheelchair moved during navigation, it was localized, and its location and pose were updated by the hector mapping package.
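The idea of a global planner searching a cost map can be illustrated with a small grid search. The sketch below is a generic Dijkstra search over a 4-connected grid, written only to show the principle; it is not the ROS planner used in this work, and the cost scale, the `lethal` threshold and the function name `plan_path` are assumptions.

```python
import heapq


def plan_path(cost_map, start, goal, lethal=100):
    """Return the cheapest list of (row, col) cells from start to goal, or None.

    cost_map: 2D list of non-negative cell costs; cells with cost >= lethal
    are treated as obstacles (the threshold value is an assumption).
    """
    rows, cols = len(cost_map), len(cost_map[0])
    best = {start: 0}
    parent = {}
    queue = [(0, start)]
    while queue:
        cost, cell = heapq.heappop(queue)
        if cell == goal:
            # Walk back through the parents to rebuild the path.
            path = [cell]
            while cell in parent:
                cell = parent[cell]
                path.append(cell)
            return path[::-1]
        if cost > best.get(cell, float("inf")):
            continue  # stale queue entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and cost_map[nr][nc] < lethal:
                # Each step costs 1 plus the cost of the cell being entered.
                new_cost = cost + 1 + cost_map[nr][nc]
                if new_cost < best.get((nr, nc), float("inf")):
                    best[(nr, nc)] = new_cost
                    parent[(nr, nc)] = cell
                    heapq.heappush(queue, (new_cost, (nr, nc)))
    return None
```

Raising the cost of cells near obstacles, as a clearance (inflation) layer does, pushes the cheapest path away from walls without forbidding narrow passages such as doorways.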

During navigation, static or dynamic obstacles in front of the wheelchair would be detected in 3 dimensions by the UTM-30LX and URG-04LX laser scanners. Figure 16 shows obstacle detection and avoidance. When the obstacles were captured, the local cost map would update environment information in the global cost map to recalculate a new path by the global planner package. Then, the command velocities could be re-determined from the updated local path from the local planner package. The recovery behaviors package was used to monitor navigation behavior. The navigation would be aborted when the wheelchair was stuck.

Fig. 16.

Obstacle avoidance; when an obstacle is present in the system, the global planner would determine a new path (green line) to avoid collision with the object (brightened pixels). Cyan pixels represent the clearance to the obstacle. (Color figure online)

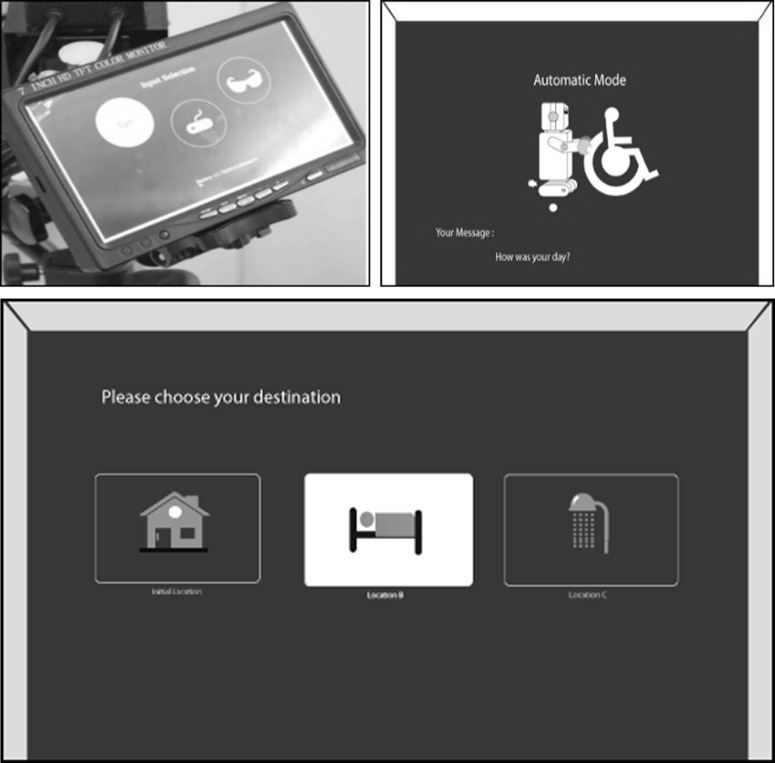

Graphic user interface

A graphic user interface (GUI) was displayed on the 5-inch TFT monitor screen, which was mounted on a metal rod at the front-left of the wheelchair (see Fig. 17). The screen position could be adjusted for each user. The monitor screen was connected to the compute stick via an HDMI port. The GUI was developed as a web server based on HTML 5 running on the compute stick. The user commands and wheelchair status were published wirelessly through an ROS socket. Figure 17 (top right) shows the wheelchair status when it was navigating to a destination. Figure 17 (bottom) shows the target selection in automatic navigation mode.

Fig. 18.

Average accuracy (● or blue line) and bit rate (▲ or red line) with respect to time for 4 subjects in shared-control mode (forward, backward, turn left and turn right). (Color figure online)

Fig. 17.

Graphic user interface (GUI); 5-inch TFT monitor screen (top left), wheelchair status during navigation to the destination (top right) and target selection in automatic navigation mode (bottom)

Results

P300 detection results

All experiments were conducted under the rules and regulations of the Center of Ethical Reinforcement for Human Research, Mahidol University (COA No. MU-CIRB 2015/065.2105). Four healthy right-handed subjects (two men, 23 and 24 years old, and two women, 24 and 30 years old) participated in this study. The first subject had experience with a BCI system, while the rest were new to BCIs. All subjects were recruited from the undergraduate and graduate student populations of the Department of Biomedical Engineering, Faculty of Engineering, Mahidol University. The subjects had no neurological or psychiatric problems and had normal or corrected-to-normal vision. Each subject was asked to sit in the wheelchair during the experiment. The P300 stimulator was installed approximately 300 mm in front of the subject. Before starting the experiment, each subject was trained and motivated to generate a P300 signal for approximately 10 min. The experiments were performed in the BCI laboratory on the 4th floor, Engineering Building 1, Salaya campus, Mahidol University. The experiment consisted of 2 studies: an offline study and an online study.

During the offline study, the P300 template was first recorded, which required the subject to concentrate on the desired target for 40 s (approximately 33 trials). The experiment was designed in 2 runs. Each run consisted of 17 commands: 9 commands in automatic mode (A, B, C, …, I) and 8 commands in shared-control mode (2 each for forward, backward, turn left and turn right movements). In total, each subject performed 18 destination selections in automatic mode and 16 direction selections in shared-control mode. For each command selection, the EEG signal was recorded during the task for 40 s for offline analysis.

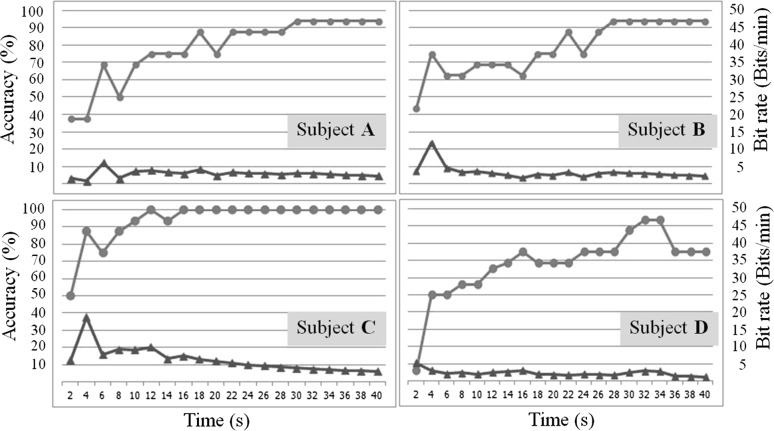

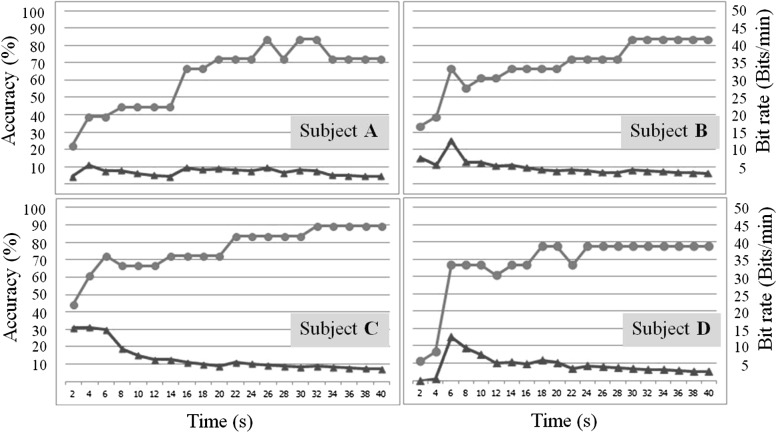

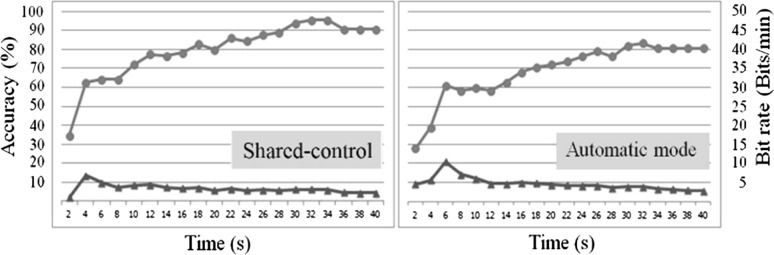

The EEG data were analyzed separately for the automatic navigation (9 targets, 3 × 3 matrix) and shared-control (4 targets, 2 × 2 matrix) modes. The correlation coefficients between the template and input signals were calculated by Eq. 1. System accuracy and information transfer rate (bit rate) were calculated every 2 s for 40 s. The bit rate is the amount of information communicated per unit time [3, 21]. Figures 18 and 19 show the accuracy (● in blue line) and bit rate (▲ in red line) with respect to time for 4 subjects in shared-control (directions forward, backward, turn left and turn right) and automatic modes (destinations A, B, C, …, and I), respectively. The results revealed that the accuracy was very poor when the P300 signal was classified using only the first 0–4 s of data. The accuracy increased as the time increased. Conversely, the bit rate tended to decrease as the time increased. One hundred percent accuracy was achieved by subject C in shared-control mode.
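For readers unfamiliar with bit rate, one widely used definition in the BCI literature is the Wolpaw information transfer rate, which depends on the number of targets, the accuracy and the time per selection. The sketch below implements that definition as an illustration; whether it is exactly the formula behind the values reported here is an assumption, and the function names are hypothetical.

```python
import math


def bits_per_selection(n_targets, accuracy):
    """Wolpaw information per selection (bits) for N targets and accuracy P."""
    if n_targets < 2 or not 0.0 <= accuracy <= 1.0:
        raise ValueError("need at least 2 targets and accuracy in [0, 1]")
    bits = math.log2(n_targets)
    if accuracy > 0.0:
        bits += accuracy * math.log2(accuracy)
    if accuracy < 1.0:
        # Errors are assumed to be spread evenly over the other targets.
        bits += (1 - accuracy) * math.log2((1 - accuracy) / (n_targets - 1))
    return bits


def bit_rate_per_min(n_targets, accuracy, seconds_per_selection):
    """Convert bits per selection into bits per minute."""
    return bits_per_selection(n_targets, accuracy) * 60.0 / seconds_per_selection
```

Under this definition, a longer analysis window raises accuracy but lengthens each selection, which is consistent with the opposite trends of accuracy and bit rate described above.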

Fig. 19.

Average accuracy (● or blue line) and bit rate (▲ or red line) with respect to time for 4 subjects in automatic navigation mode (A, B, C, …, I). (Color figure online)

Figure 20 shows the averaged accuracy (● in blue line) and bit rate (▲ in red line) with respect to time for all 4 subjects in shared-control mode (left) and automatic mode (right). The results revealed that the larger number of targets in automatic mode caused a decrease in accuracy compared to shared-control mode. This agrees with existing experiments showing that the size and inter-stimulus interval of the stimulator affect system accuracy. The average bit rate of all subjects was higher in automatic mode than in shared-control mode because of the higher number of targets (9 targets vs. 4 targets). The average accuracies of the P300 detection algorithm in shared-control and automatic modes were 95.31 and 83.42% with 3.09 and 3.79 bits/min, respectively. The average accuracy and bit rate of the proposed system with 1 EEG channel were comparable to those of existing P300 detection systems [29, 30], which use at least 4 EEG channels. Fewer electrodes would make actual usage easier and more practical. The lower accuracy in automatic mode was acceptable because the user could stop or cancel a mistaken command by blinking twice.

Fig. 20.

Average accuracy (● in blue line) and bit rate (▲in red line) of 4 subjects in shared-control mode (left) and automatic mode (right). (Color figure online)

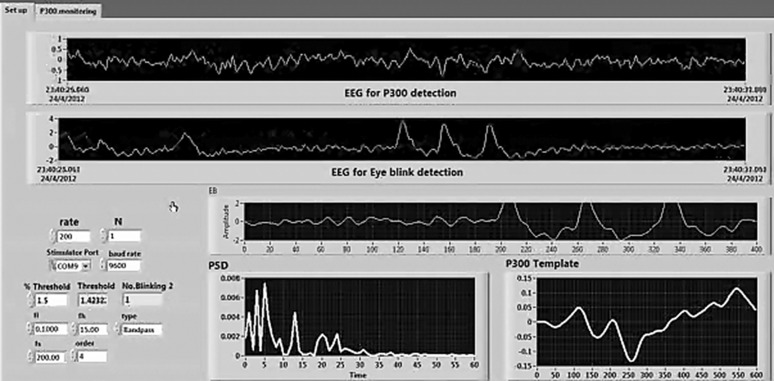

Online P300 classification was performed using software implemented in LabView. The software consisted of 2 screens, a setup screen and an output monitoring screen, as shown in Figs. 21 and 22, respectively. The raw EEG data for P300 processing (first graph) and eye blink detection (second graph), the PSD (lower left graph) and the P300 template (lower right graph) were displayed in the setup screen, as shown in Fig. 21. The filtering parameters and the percentage threshold for eye blink detection could be adjusted in this screen. The raw EEG data for eye blink detection in Fig. 21 contained 3 eye blink pulses; this condition enabled the P300 stimulator to begin stimulation. Figure 22 shows the output monitoring screen. Destination B was classified as the output command because the correlation coefficient between the P300 template (red line) and the grand averaged P300 response at destination B was the highest, 0.78 (max1), while the second highest, max2, was 0.32 at destination I. The percent difference between B and I was 40.4%, which was higher than the preset percent of P300 signal detection (POD, 20%).
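The decision rule described here, picking the target whose averaged response correlates best with the template and accepting it only when it is sufficiently separated from the runner-up, can be sketched as follows. This is a minimal illustration and not the LabView implementation: the percent difference is computed as (max1 − max2)/max1 × 100, which is an assumption because the exact definition is not given in this section, and the names `pearson` and `classify` are hypothetical.

```python
import math


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # a flat signal carries no shape information
    return cov / math.sqrt(var_a * var_b)


def classify(template, responses, pod_percent=20.0):
    """Return the winning target label, or None if the decision is ambiguous.

    responses: dict mapping each target label to its averaged response;
    at least two targets are required.
    """
    scores = {label: pearson(template, sig) for label, sig in responses.items()}
    ranked = sorted(scores, key=scores.get, reverse=True)
    max1, max2 = scores[ranked[0]], scores[ranked[1]]
    if max1 <= 0:
        return None  # nothing resembles the template
    # Assumed definition of the percent difference between the top two scores.
    percent_diff = (max1 - max2) / max1 * 100.0
    return ranked[0] if percent_diff >= pod_percent else None
```

Returning no decision when the top two scores are close lets the system keep averaging further epochs instead of issuing an uncertain command.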

Fig. 21.

The setup screen of the implemented software for EEG processing for online P300 classification

Fig. 22.

The output monitoring screen of the implemented software for EEG processing for online P300 classification

For the online experiment, the percent of eye blinking detection (threshold) and the percent of P300 signal detection (POD) were set at 60 and 20%, respectively. For P300 signal detection, the processing time varied depending on the percent difference between max1 and max2. The system could detect and classify an output command as early as the 6th epoch in shared-control mode and the 7th epoch in automatic mode. Given the different ISI in each mode, the time required to classify the P300 signal was 9.6 s/command in shared-control mode (ISI = 400 ms, 2 × 2 matrix, 6th epoch) and 12.6 s/command in automatic mode (ISI = 200 ms, 3 × 3 matrix, 7th epoch).
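The reported classification times are consistent with a simple product of the number of targets, the ISI and the number of epochs, on the assumption that every target flashes once per epoch. The helper below (a hypothetical name, `selection_time_s`) makes that arithmetic explicit.

```python
def selection_time_s(n_targets, isi_s, n_epochs):
    """Time to complete n_epochs, assuming each target flashes once per epoch."""
    return n_targets * isi_s * n_epochs


# Shared-control mode: 4 targets, ISI = 0.4 s, 6 epochs (about 9.6 s).
shared_time = selection_time_s(4, 0.4, 6)
# Automatic mode: 9 targets, ISI = 0.2 s, 7 epochs (about 12.6 s).
automatic_time = selection_time_s(9, 0.2, 7)
```

The shorter ISI in automatic mode partly offsets its larger matrix, which is why the two modes differ by only about 3 s per command.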

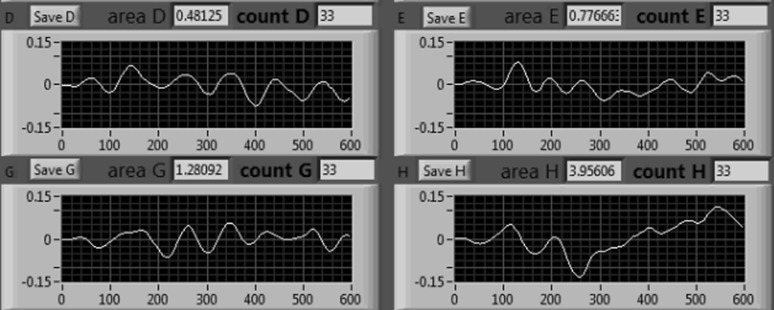

Figure 23 shows the difference in the powers of the N200 and P300 components between target and non-target stimuli. This figure was captured while the subject concentrated on destination H. The N200 and P300 amplitudes of the target and non-target stimuli were found to be affected by the visual field. The target stimulus had the highest N200 and P300 power of approximately 3.95. The power of a non-target stimulus decreased as its distance from the target increased. For example, destinations E and G, which were placed 65 mm away from destination H, had powers of approximately 0.77 and 1.28, respectively. The furthest non-target (destination D, 90 mm away from the target) had the lowest power of approximately 0.48. Therefore, the proposed P300 visual stimulator could diminish the interference between the target and non-target stimuli.

Fig. 23.

The effect of the visual field on the N200 and P300 components of the target and non-target stimuli

Navigation system test

Five disabled people (3 spinal cord injured patients and 2 cerebral palsy patients) and 5 healthy subjects participated in navigation system testing. The disabled patients were recruited from the Putthamonthon Independent Living Center (PILC), and the healthy subjects were recruited from the undergraduate population of the Department of Biomedical Engineering, Faculty of Engineering, Mahidol University. The experiments were performed at the BCI laboratory on the 4th floor, Engineering Building 1, Salaya campus, Mahidol University.

Each subject was asked to control the wheelchair on a round trip between locations A and B in both automatic navigation and shared-control modes. In automatic navigation mode, locations A and B were recorded beforehand on the 2D map, and the subject was asked to select the defined location. The two locations were approximately 10 meters apart, and the path between them included a doorway and a corner. In shared-control mode, the subject was required to manually control the wheelchair to reach the goal position. The experiment was repeated 6 times for statistical analysis. The time elapsed during each trial was recorded and used to quantitatively evaluate the system performance. Moreover, the proposed system was qualitatively evaluated by questionnaires completed by the subjects after operating the wheelchair. The alternative assistive technology used in this experiment was a chin controller. The subjects used their chin to manually control the wheelchair direction in shared-control mode and to select the destination in automatic navigation mode.

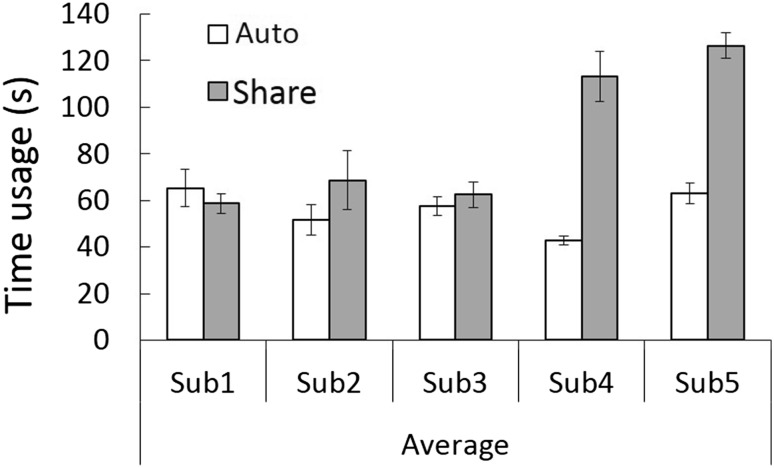

Figure 24 shows the average time used by each disabled subject in automatic navigation (white bar) and shared-control (gray bar) modes. Subjects 1–3 are the spinal cord injured patients, and Subjects 4–5 are the cerebral palsy patients. The results revealed that the automatic navigation system required less control time for most subjects. Subjects 4 and 5, the cerebral palsy patients, required a significantly longer time to operate the wheelchair in shared-control mode because these patients cannot perform head-neck movements well.

Fig. 24.

The average time required for wheelchair control in both automatic navigation (white bar) and shared-control (gray bar) modes for each disabled subject. Sub 1–3 are the spinal cord injured patients, and Sub 4–5 are the cerebral palsy patients

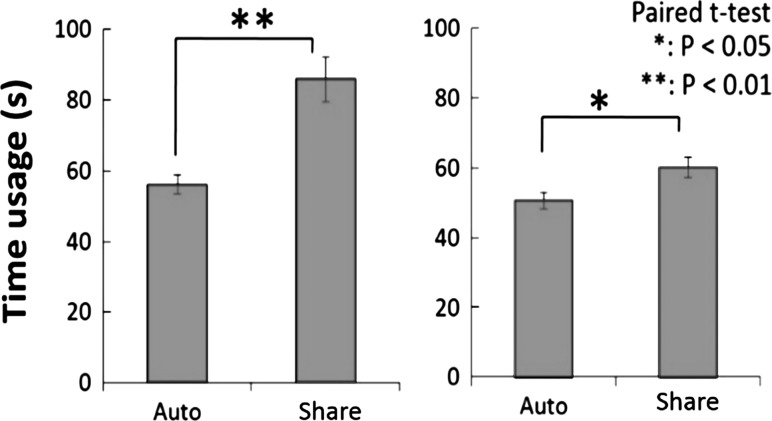

Figure 25 shows the grand averaged time used in both automatic navigation and shared-control modes for all disabled subjects (left graph) compared to healthy subjects (right graph). The statistical analysis (n = 30) employing a paired t test revealed that the automatic navigation system had a significantly lower operating time than the shared-control system (p < 0.01). A significant difference (p < 0.05) was also observed in the healthy subjects. This result indicated that the automatic navigation system could save operation time and induce less fatigue in the subjects. Automatic navigation would benefit disabled patients who have less skill or experience, or physical limitations, in wheelchair control.
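As a reminder of how such a paired comparison is computed, the sketch below calculates the paired t statistic from two matched lists of trial times (for example, one automatic-mode and one shared-control time per subject and repetition). It is a generic textbook calculation with a hypothetical function name; no data from this study are included, and the p value would still have to be read from a t distribution with the returned degrees of freedom.

```python
import math


def paired_t_statistic(times_a, times_b):
    """Return (t, degrees_of_freedom) for matched samples times_a and times_b."""
    if len(times_a) != len(times_b) or len(times_a) < 2:
        raise ValueError("need two matched samples with at least 2 pairs")
    diffs = [a - b for a, b in zip(times_a, times_b)]
    n = len(diffs)
    mean_diff = sum(diffs) / n
    # Unbiased sample variance of the pairwise differences.
    var_diff = sum((d - mean_diff) ** 2 for d in diffs) / (n - 1)
    if var_diff == 0:
        raise ValueError("differences have zero variance; t is undefined")
    t = mean_diff / math.sqrt(var_diff / n)
    return t, n - 1
```

A negative t with the automatic-mode times passed first would indicate shorter times in automatic mode.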

Fig. 25.

Grand average time usage in automatic navigation and shared-control modes for all disabled subjects (left graph) compared to all healthy subjects (right graph)

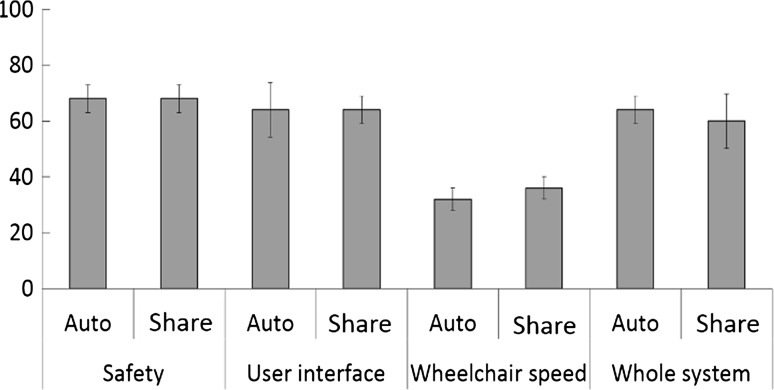

The satisfaction of the subjects with the proposed system was assessed after they had operated the wheelchair. Figure 26 shows the average percentages of satisfaction levels for (1) safety, (2) GUI, (3) wheelchair speed and (4) the entire system in both automatic navigation and shared-control modes based on a questionnaire completed by all subjects. The results show that the subjects were approximately 68% satisfied with safety, the GUI and the whole system. The satisfaction score for wheelchair speed was low because the speed was set to its minimum for safety. This speed could be adjusted, which could increase the satisfaction level.

Fig. 26.

Average percentage of satisfaction levels of (1) safety, (2) GUI, (3) wheelchair speed and (4) the entire system, in both automatic navigation (Auto: High-level navigation, the users select the destination and the system will fully navigate the wheelchair to the desired destination) and shared-control modes (Share: Shared-control navigation, the users select each command by themselves, the navigation system is used only for obstacle avoidance) based on a questionnaire

Discussions

EEG signal processing

The P300 signal usually has a small SNR. Therefore, existing studies need several electrodes and a complex classifier. In this study, the proposed LED-based P300 stimulator, designed according to human visual perception, could elicit strong ERP components, especially the P300 amplitude. The improved SNR required fewer electrodes and less computational time while giving an accuracy comparable to that of existing research. Allowing users to enable the P300 stimulator by themselves would be useful because continuous P300 stimulation may bother them. The P300 stimulator could be triggered in several ways based on (1) EMG, (2) EEG, or (3) EOG. (1) EMG is a reliable signal, but severely disabled people cannot voluntarily control the target muscle. (2) A strong alpha wave in the EEG can be generated consistently by closing the eyes. This triggering strategy is attractive because the alpha wave can be acquired by the same electrode as that used for P300 detection. However, closing the eyes for a while before a P300 task is not effective because of the eye’s dark/light adaptation. In addition, attaching one more electrode at the forehead could capture the user’s attention level. This is also a promising technique for triggering the P300 stimulator by controlling the alpha/beta wave ratio. (3) The eye-blink artifact from the EOG signal can be captured at the same electrode position as that used for attention detection. A single eye blink is not suitable for triggering the system because it occurs naturally and frequently. Instead, eye blinking patterns, such as 2, 3 and 4 consecutive blinks, could be used as three possible commands.

EEG signals were captured using an Ag/AgCl electrode cap, amplified by a BIOPAC amplifier box set, digitized by an NI USB-6008 DAQ card, and processed by the compute stick with integrated LabView software. The electrode cap was easy to set up, but most subjects reported that they felt uncomfortable when wearing the cap. Therefore, cup electrodes were used to obtain EEG signals at Fp2, T4 and C4 for the eye blinking detection signal and at O2, Cz and Fz for the P300 detection signal. In the future, a customized electrode headset with wireless communication would reduce the setup time.

Two, 3 or 4 consecutive eye blinks within 2 s are unusual because most people blink approximately once every 2 s or less often. Therefore, the defined eye blinking patterns could be used to activate functions. In healthy subjects, 100% accuracy of eye blinking detection was achieved using the proposed algorithm. Some of the disabled patients, such as spinal cord injured patients, could perform the eye blinking patterns correctly. However, in some severe cases, blinking is difficult. Nevertheless, eye blinking pattern detection is a promising tool that allows severely disabled patients to operate the entire system by themselves. The patients must be trained to blink their eyes in different patterns, and the optimal percentage threshold for eye blinking detection should be adjusted individually.
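The mapping from blink counts to commands can be sketched as a small pattern counter that operates on already-detected blink timestamps. This is an illustration under assumptions: the 2 s window comes from the text, two blinks for stop/cancel and three blinks for enabling the P300 stimulator follow the descriptions elsewhere in the paper, but assigning four blinks to mode shifting, and the names `BLINK_COMMANDS` and `detect_blink_command`, are hypothetical.

```python
# Assumed mapping from the number of consecutive blinks to a command.
# Two and three blinks follow the paper; four blinks for mode shifting
# is a guess made for this sketch.
BLINK_COMMANDS = {2: "stop_or_cancel", 3: "enable_p300", 4: "shift_mode"}


def detect_blink_command(blink_times_s, window_s=2.0):
    """Return the command for the first run of 2-4 blinks within window_s.

    blink_times_s: timestamps (seconds) of detected blinks.
    Returns None when no run matches a defined pattern.
    """
    times = sorted(blink_times_s)
    i = 0
    while i < len(times):
        # Extend the run while blinks stay within the window of the first one.
        j = i
        while j + 1 < len(times) and times[j + 1] - times[i] <= window_s:
            j += 1
        count = j - i + 1
        if count in BLINK_COMMANDS:
            return BLINK_COMMANDS[count]
        i = j + 1  # skip this run (a single blink or too many blinks)
    return None
```

Isolated single blinks fall through without triggering anything, which reflects the reason given above for not using a single blink as a command.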

The proposed brain-controlled wheelchair used visual evoked potentials (VEPs) of the P300 response for target command selection. The P300 stimulator was a crucial component for enhancing system accuracy and transfer rate because it allowed a strong P300 response to be recorded. Many studies have investigated the effect of the P300 stimulator on the P300 response, and a proper P300 stimulator can be developed according to the literature. This research used an LED-based P300 stimulator, as our previous study demonstrated that LEDs are the most suitable for brain-controlled wheelchair applications (Dilok and Yodchanan 2011). The LED-based P300 stimulator performed well in eliciting the P300 response. However, the shape and appearance of the target stimulus could not be changed due to hardware limitations.

The P300 detection algorithm used a correlation coefficient method, which calculated the similarity between the P300 template and the input P300 responses. The P300 template was recorded beforehand. The results showed that the average accuracies of the P300 detection algorithm in shared-control and automatic navigation modes were 95.31 and 83.42% with 3.09 and 3.79 bits/min, respectively. The speed of P300 signal classification was 9.6 s/command in shared-control mode and 12.6 s/command in automatic navigation mode. The performance difference between the modes was caused by differences in matrix size, the clearance between the target and non-target stimuli, and the ISI. The results of the proposed P300 system, which used only 1 EEG channel for P300 detection, were comparable with those of existing P300 signal processing systems (Miami 2009; Ulrich et al. 2008). This would make system setup easier and more practical. Because 100% accuracy in BCIs is rarely achieved, the remaining P300 classification error was acceptable, as the users could abort a mistaken command by blinking twice.

The proposed BCI system was preliminarily verified with a severe ALS patient (a 30-year-old male who could not move his body and could control his eye muscles only for a short time). This test was conducted without a navigation task to avoid placing heavy stress on the subject. The subject was seated with the P300 stimulator installed in front of him. The subject was informed about the experimental protocol, how to modulate the P300 signal and how to perform the eye-blink patterns. He was then trained to generate a P300 response to the desired target and to execute consecutive eye blinks. Due to the subject’s physical condition, only two training sessions were performed. Nevertheless, an acceptable average accuracy of 70% was obtained for a set of 4 tasks (enabling with EOG and executing with P300).

Navigation system

Wheelchair control for a BCW can be achieved in several ways. The simplest method is direct control, so-called low-level navigation, in which the wheelchair is driven in the desired direction. This method is easy to implement, and the user can command specific movements. However, the system does not assist in navigating the wheelchair and normally has no sensor for detecting obstacles, so a serious accident could occur. The next generation of BCWs comes with sensors that perceive the environment and help execute the user’s command. This shared-control navigation method offers obstacle detection and avoidance features. The sensor can be a camera, a 2D scanner (laser scanner) or a 3D scanner (3D camera or 3D laser scanner). A camera can capture the environment but cannot obtain an accurate distance to an obstacle. A 2D laser scanner performs well in terms of detection range, accuracy and speed; however, obstacles outside its 2D plane cannot be detected. A 3D camera is reasonably priced and can capture a 3-dimensional environment, but its detection field is narrow. A 3D laser scanner offers a wide detection range in 3 dimensions, but it is costly. The proposed obstacle sensor module, a combination of a static and a rotating laser scanner, would be a promising method for wide-range 3-dimensional obstacle detection. A high-performance BCW is embedded with an intelligent system that can automatically navigate the wheelchair to a predefined location on the map. Some BCWs implement this high-level navigation with markers that are used to localize the wheelchair position, and the wheelchair is navigated by following the markers. Nevertheless, the system may lose localization when the markers are obscured. Markerless navigation can be achieved by acquiring sensor information to perform simultaneous localization and mapping (SLAM). The system must create a map or read a floor plan to obtain the exact dimensions of the building. Unlike marker-based navigation, markerless navigation does not require modification of the building, but processing sensor data for wheelchair localization, navigation and obstacle avoidance is computationally expensive.

A high-performance BCW is a promising solution for users with severe motor disabilities. Automatic navigation from a single command would reduce the user’s stress, and safety could be ensured by the obstacle avoidance function.

This study implemented high-performance wheelchair control. High-level navigation was used in automatic mode, whereas shared-control mode used shared-control navigation. The proposed system can be installed on ordinary electric wheelchairs: the wheelchair is driven by generating two analog signals via the modified wheelchair joystick. The implemented navigation system was based on ROS navigation; map building, path planning, base driving and obstacle avoidance were handled by available ROS packages. Most wheelchair navigation systems use laser scanners for obstacle detection. However, obstacles located beneath or above the 2D scanning plane are not detected, so serious accidents could occur. The proposed navigation system therefore included a rotating laser scanner that continuously rotated between −20 and −50 degrees. As a result, obstacles beneath the 2D plane of the main laser scanner could be detected and safely avoided by the obstacle avoidance feature. However, transparent objects, such as glass, acrylic and clear plastics, would not be detected by the laser scanner because infrared light passes through them.
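As a rough illustration of why a downward-rotated scan plane reveals low obstacles, the sketch below projects a single range reading into wheelchair-fixed coordinates. It assumes that the −20 to −50 degree range refers to the downward pitch of the scan plane. The mounting height of 0.8 m, the function names and the frame convention (x forward, y left, z up) are hypothetical choices for illustration and are not values from this study.

```python
import math


def project_tilted_reading(range_m, bearing_deg, tilt_deg, sensor_height_m=0.8):
    """Convert one laser reading to (x, y, z) in a wheelchair-fixed frame.

    Assumed convention: x forward, y left, z up, origin on the floor directly
    below the sensor. bearing_deg is the beam angle inside the scan plane
    (0 = straight ahead); tilt_deg is the pitch of the scan plane
    (negative = pointing down). sensor_height_m is a hypothetical value.
    """
    bearing = math.radians(bearing_deg)
    tilt = math.radians(tilt_deg)
    # Coordinates of the hit point inside the scanner's own plane.
    px = range_m * math.cos(bearing)
    py = range_m * math.sin(bearing)
    # Pitch the plane about the y axis and lift it to the sensor height.
    x = px * math.cos(tilt)
    y = py
    z = sensor_height_m + px * math.sin(tilt)
    return x, y, z


def is_low_obstacle(point, floor_tolerance_m=0.05, main_plane_height_m=0.8):
    """True if the point lies above the floor but below the main 2D scan plane."""
    _, _, z = point
    return floor_tolerance_m < z < main_plane_height_m


if __name__ == "__main__":
    # With a -30 degree pitch, the floor is hit at 0.8 / sin(30 deg) = 1.6 m.
    floor_hit = project_tilted_reading(1.6, 0.0, -30.0)
    # A shorter reading along the same beam means something is in the way.
    box_hit = project_tilted_reading(1.0, 0.0, -30.0)
    print(is_low_obstacle(floor_hit), is_low_obstacle(box_hit))
```

In this sketch, a reading shorter than the expected floor distance along the same beam maps to a point above the floor, which is the kind of low obstacle that a single horizontal 2D scan would miss.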

The 2D map was built prior to the navigation task. In a crowded area, map building may fail and the navigation system may fail to localize the wheelchair; a clear map results in the best navigation performance. The user could add up to 9 of their favorite locations to the created map.

Alternative assistive technologies

The proposed navigation system was designed to support existing alternative input modules. This research offered 4 alternative assistive technologies: a brain control module, an eye tracker, a chin pointer and a hand control module. All modules employ the same protocol to indicate 13 possible commands from the user: 9 destination commands in automatic navigation mode and 4 direction commands in shared-control mode. The benefit of the alternative input modules is that the user can select the assistive device that suits his/her disability level. If the user has control of hand function, he/she may choose the hand control module. If the user does not have hand function but can perform head-neck movement, he/she may choose chin control. If the user cannot control any part of the body but still has eye and brain function, he/she may consider using the eye tracker or the brain control module, respectively. However, the classification accuracy and command rate differed among the assistive technologies, decreasing in the following order: hand control module, chin pointer, eye tracker and brain control module. The accuracy and command rate could be improved with training.
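One way to picture the shared command set is as a single enumeration that every input module emits, so that the navigation system does not need to know which device produced a command. The sketch below is only an illustration under stated assumptions: the names `Command`, `Mode` and `is_valid_in_mode` and the integer codes are hypothetical, and the actual message format of the protocol is not described here.

```python
from enum import Enum


class Mode(Enum):
    AUTOMATIC = "automatic"
    SHARED_CONTROL = "shared-control"


class Command(Enum):
    # 9 destination commands used in automatic navigation mode.
    # The integer codes are hypothetical, not the real protocol values.
    DEST_A = 1
    DEST_B = 2
    DEST_C = 3
    DEST_D = 4
    DEST_E = 5
    DEST_F = 6
    DEST_G = 7
    DEST_H = 8
    DEST_I = 9
    # 4 direction commands used in shared-control mode.
    FORWARD = 10
    BACKWARD = 11
    TURN_LEFT = 12
    TURN_RIGHT = 13


DESTINATIONS = frozenset(c for c in Command if c.name.startswith("DEST_"))
DIRECTIONS = frozenset(Command) - DESTINATIONS


def is_valid_in_mode(command, mode):
    """Destination commands belong to automatic mode, directions to shared control."""
    if mode is Mode.AUTOMATIC:
        return command in DESTINATIONS
    return command in DIRECTIONS


if __name__ == "__main__":
    # Any input module (brain, eye tracker, chin, hand) would emit the same values.
    print(len(Command), is_valid_in_mode(Command.DEST_C, Mode.AUTOMATIC))
```

Keeping the command set independent of the input device is what would let a new module be added without changing the navigation side.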

In the future, other assistive technologies, such as a tongue movement detector, a tooth-click controller, a sip-and-puff (SnP) controller, a sniff controller and voice recognition, could be integrated into our system and operated via the same communication protocol.

Conclusions

Many studies have sought to develop brain controlled wheelchairs by using different types of EEG signals, such as ERD/ERS (Kyuwan and Andrzej 2008; Kazuo et al. 2005; Johan et al. 2007), SSVEP (Christian et al. 2009), and P300 signals (Inaki et al. 2009; Rossella et al. 2008; Brice et al. 2006, 2010). The existing research on brain controlled wheelchairs can be divided into 2 types: wheelchairs that require the user to perform a series of commands (Kyuwan and Andrzej 2008; Kazuo et al. 2005; Johan et al. 2007; Christian et al. 2009; Inaki et al. 2009) and those that require the user to perform a single command (Rossella et al. 2008; Brice et al. 2006, 2010). Each type has advantages and disadvantages. With a series of commands, the user can freely control the wheelchair direction. However, controlling the wheelchair in this way over a long distance would fatigue the user. With a single command, the user needs to select only the destination and then allows the navigation system to steer the wheelchair automatically. This type of automatic navigation system is very convenient. However, the user lacks freedom and is limited to the defined destinations.

This research combined the advantages of each configuration. The proposed system offers two operation modes, shared-control and automatic navigation, which are controlled by a series of commands and by a single command, respectively. This system offers the user both convenience and freedom in wheelchair control.
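The interplay between the two modes and the eye-blink patterns reported in this paper (2 blinks to stop or cancel, 3 blinks to turn the P300 stimulator on or off, 4 blinks to change mode) can be sketched as a small state update. This is a hypothetical illustration, not the authors' implementation: the names `BcwState` and `handle_blinks` are invented, and treating 4 blinks as a toggle in both directions is an assumption, since the text states only the switch from automatic to shared-control mode explicitly.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class BcwState:
    mode: str = "automatic"                 # "automatic" or "shared-control"
    p300_enabled: bool = False              # whether the P300 stimulator is on
    pending_command: Optional[str] = None   # command currently being executed


def handle_blinks(state, blink_count):
    """Update the state for one detected pattern of consecutive blinks."""
    if blink_count == 2:
        # Stop or cancel a mistaken command.
        state.pending_command = None
    elif blink_count == 3:
        # Turn the P300 stimulation system on or off.
        state.p300_enabled = not state.p300_enabled
    elif blink_count == 4:
        # Change mode (assumed here to toggle in both directions).
        if state.mode == "automatic":
            state.mode = "shared-control"
        else:
            state.mode = "automatic"
    # Any other blink count is ignored in this sketch.
    return state


if __name__ == "__main__":
    state = BcwState()
    handle_blinks(state, 3)            # enable P300 detection
    state.pending_command = "DEST_A"   # a (possibly wrong) P300 selection
    handle_blinks(state, 2)            # cancel it
    handle_blinks(state, 4)            # switch to shared-control mode
    print(state)
```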

The proposed BCW allows users to drive an electric wheelchair by using a BCI system interfaced with a navigation system. Nine destination commands (A, B, C, …, I) in automatic navigation mode and four direction commands (forward, backward, turn left and turn right) in shared-control mode were provided to the users. These commands can be selected with the proposed hybrid P300 and eye-blink system. After a destination command was selected in automatic navigation mode, the wheelchair was steered automatically from its current position to the desired destination. Possible errors in detecting the user’s command were not a major issue because the user could abort a mistaken command with the defined eye-blinking pattern (blinking 2 times). Furthermore, when the user reached the destination in automatic mode, he/she could switch to shared-control mode by blinking 4 times and then freely control wheelchair movement in the forward, backward, left-turn and right-turn directions. The defined eye-blinking patterns also enabled users to turn the P300 stimulation system on or off by themselves by blinking 3 times. The proposed eye-blinking detection algorithm was able to detect these eye-blinking patterns. The proposed P300 detection algorithm with 1 EEG channel exhibited accuracy comparable to that of previous studies that used at least 4 EEG channels (Miami 2009; Ulrich et al. 2008), which would facilitate practical use of the system. The average accuracies of P300 detection in shared-control and automatic navigation modes were 95.31% and 83.42%, with 3.09 and 3.79 bits/min, respectively. One hundred percent accuracy was achieved in shared-control mode by subject C. The lower accuracy in automatic mode was acceptable because blinking 2 times stopped or canceled a mistaken command.
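The reported bit rates can be related to the accuracies through the widely used Wolpaw definition of information transfer rate. Whether this exact formula was used in this study is an assumption. The sketch below simply evaluates it for the reported accuracies, with 4 possible commands in shared-control mode and 9 in automatic mode, and back-computes the selection rate implied by the reported bits/min.

```python
import math


def bits_per_selection(n_classes, accuracy):
    """Wolpaw information transfer in bits per selection.

    Assumes n_classes equally likely targets and errors spread evenly
    over the remaining n_classes - 1 targets.
    """
    if n_classes < 2 or not 0.0 <= accuracy <= 1.0:
        raise ValueError("need n_classes >= 2 and 0 <= accuracy <= 1")
    bits = math.log2(n_classes)
    if accuracy > 0.0:
        bits += accuracy * math.log2(accuracy)
    if accuracy < 1.0:
        bits += (1.0 - accuracy) * math.log2((1.0 - accuracy) / (n_classes - 1))
    return bits


def implied_selections_per_min(bits_per_min, n_classes, accuracy):
    """Selection rate that would yield the given bits/min under this formula."""
    return bits_per_min / bits_per_selection(n_classes, accuracy)


if __name__ == "__main__":
    shared = bits_per_selection(4, 0.9531)   # roughly 1.65 bits per selection
    auto = bits_per_selection(9, 0.8342)     # roughly 2.02 bits per selection
    print(round(shared, 3), round(auto, 3))
    print(round(implied_selections_per_min(3.09, 4, 0.9531), 2))
    print(round(implied_selections_per_min(3.79, 9, 0.8342), 2))
```

If the Wolpaw definition applies, both modes correspond to roughly 1.87 selections per minute. The higher bit rate of the automatic mode would then come from its larger command set rather than from faster selection, despite its lower accuracy.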

Moreover, another 3 alternative user input modules were available in this research: an eye tracking system, a hand controlling system, and a chin controlling system. The user could select an appropriate input module according to his/her disability level. Further assistive technologies could be applied to our system with the same communication protocol in the future.

The implemented navigation system could perceive the environment without any modification of the environment, such as placing markers or barcodes on the ceiling or the floor (Rossella et al. 2008; Brice et al. 2006, 2010). The developed obstacle sensor module could detect objects and household structures in 3 dimensions. These environment-sensing data were used for map building, wheelchair localization, and obstacle detection and avoidance. A 2D map was built with exactly the same dimensions and shape as the actual home environment. The wheelchair could be navigated to the goal position in automatic navigation mode and driven in the desired direction in shared-control mode, while safety was ensured by the obstacle avoidance feature.

The automatic navigation mode exhibited better performance than the shared-control mode. The user could reach the desired destination in less time and with less fatigue when using the automatic navigation mode. Global and local path planning enabled the system to drive the wheelchair via the fastest route and at the optimal velocity.

Acknowledgements

This project was supported by the National Research Council of Thailand (NRCT). The development of the EEG acquisition system was supported by the National Broadcasting and Telecommunication Commission (NBCT). Finally, many thanks go to all of the disabled subjects from Putthamonthon Independent Living Center (PILC) and the healthy subjects who volunteered to participate in the experiments described in this paper.

References

- Anton P, Lee S, Aharon W, Roni K, Lior H, Yaara Y, Nachum S, Noam S. Sniffing enables communication and environmental control for the severely disabled. Proc Natl Acad Sci. 2010;107:14413–14418. doi: 10.1073/pnas.1006746107.

- Arai K, Ronny M. Eyes based eletric wheel chair control system. Int J Adv Comput Sci Appl. 2011;12:98–105.

- Brice R, Etienne B, Cuntai G, Haihong Z, Chee L T, Qiang Z, Marcelo A, Christian L (2006) A brain controlled wheelchair based on P300 and path guidance. In: Biomechatron (Biorob), pp 1001–1006

- Brice R, Etienne B, Cuntai G, Haihong Z. A brain controlled wheelchair to navigate in familiar environments. Neural Syst Rehabil Eng. 2010;18:590–598. doi: 10.1109/TNSRE.2010.2049862.

- Christian M, Thorsten L, Tim L, Thomas R, Axel G, Bernd KB (2009) Navigating a smart wheelchair with a brain-computer interface interpreting steady-state visual evoked potentials. In: Intelligent robots and systems, pp 1118–1125

- Dilok P, Yodchanan W (2011) Illuminant effect on LCD and LED stimulators for P300-based brain-controlled wheelchair. In: Biomedical engineering international conference (BMEiCON), pp 254–257

- Dilok P, Yodchanan W (2012) Semi-automatic P300-based brain-controlled wheelchair. In: Complex medical engineering (CME), pp 455–460

- Dilok P, Sarawin K, Pongsakom W, Boonyanuch W, Yodchanan W (2014) Automated navigation system for eye-based wheelchair controls. In: Biomedical engineering international conference (BMEiCON)

- Duan J, Li Z, Yang C, Xu P (2014) Shared control of a brain-actuated intelligent wheelchair. In: 11th world congress on intelligent control and automation (WCICA), pp 341–346

- Farwell LA, Donchin E. Talking off the top of your head: toward a mental prosthesis utilizing event-related brain potentials. Electroencephalogr Clin Neurophysiol. 1988;70:510–523. doi: 10.1016/0013-4694(88)90149-6.

- Gautam G. Eye movement based electronic wheel chair for physically challenged persons. Int J Sci Technol Res. 2014;3:206–212.

- Gray L, Galetta SL, Siegal T, Schatz N. The central visual field in homonymous hemianopia. Arch Neurol. 1997;54:312–317. doi: 10.1001/archneur.1997.00550150068018.

- Gwang ME, Kyeong K, Chul SK, James L, Soon CC, Bongsoo L, Hiroki H, Norio F, Ryoko F, Takashi W. Gyro-mouse for the disabled: ‘Click’ and ‘position’ control of the mouse cursor. Int J Control Autom Syst. 2007;5:147–154.

- Horacio AB, Shir BZ, Shlomo B, Marta K. Parafoveal perception during sentence reading?: an ERP paradigm using rapid serial visual presentation (RSVP) with flankers. Psychophysiology. 2011;48:523–531. doi: 10.1111/j.1469-8986.2010.01082.x.

- Hugh H. Exoskeletons and orthoses: classification, design challenges and future directions. Neuroeng Rehabil. 2009;6:2387–2396. doi: 10.1186/1743-0003-6-21.

- Hugh DW, Tim B. Simultaneous localization and mapping: part I. Robot Autom Mag. 2006;13:99–110.

- Hugh DW, Tim B. Simultaneous localization and mapping (SLAM): part II. IEEE Robot Autom Mag. 2006;13:108–117. doi: 10.1109/MRA.2006.1678144.

- Inaki I, Javier MA, Andrea K, Javier M. Non-invasive brain-actuated wheelchair based on a P300 neurophysiological protocol and automated navigation. IEEE Trans Robot. 2009;25:614–627. doi: 10.1109/TRO.2009.2020347.

- Jeonghee K, Hangue P, Joy B, et al. The tongue enables computer and wheelchair control for people with spinal cord injury. Sci Transl Med. 2013;5:213. doi: 10.1126/scitranslmed.3006296.

- Johan P, Jose del RM, Gerolf V, Eileen L, Ferran G, Pierre WF, Hendrik VB, Mamix N (2007) Adaptive shared control of a brain-actuated simulated wheelchair. In: Rehabilitation robotics

- John C, Lynne S, Jayme K. Neuromuscular electrical stimulation for motor restoration in hemiplegia. Topics Stroke Rehabil. 2008;15:412–426. doi: 10.1310/tsr1505-412.

- Jonathan RW, Niels B, Dennis JM, Gert P, Theresa MV. Brain-computer interfaces for communication and control. Clin Neurophysiol. 2002;113:767–791. doi: 10.1016/S1388-2457(02)00057-3.

- Karl L, Kaitlin C, Alexander D, Kaleb S. Quadcopter control in three-dimensional space using a noninvasive motor imagery-based brain–computer interface. J Neural Eng. 2013;10:4. doi: 10.1088/1741-2560/10/4/046003.

- Kazuo T, Kazuyuki M, Hua OW. Electroencephalogram-based control of an electric wheelchair. Robotics. 2005;21:762–766.

- Krzysztof D, Piotr W (2015) Brain-computer interface for mobile devices. J Med Inf Technol 24

- Kyuwan C, Andrzej C (2008) Control of a wheelchair by motor imagery in real time. In: Intelligent data engineering and automated learning, pp 330–337

- Miami B (2009) Feature extraction and classification of EEG signals for rapid P300 mind spelling. In: International conference on machine learning and applications

- Michael J, Kevin G, John A, Ruth F. A sip-and-puff wireless remote control for the Apple iPod. Assist Technol. 2008;20:107–110. doi: 10.1080/10400435.2008.10131937.

- Morgan Q, Brian G, Ken C, Josh F, Tully F, Jeremy L, Eric B, Rob W, Andrew N (2009) ROS: an open-source Robot Operating System. In: ICRA workshop on open source software

- Ng DW-K, Soh Y-W, Goh S-Y (2014) Development of an autonomous BCI wheelchair. In: IEEE symposium computational intelligence brain computer interfaces, pp 1–4