Abstract

Objective

To explore how different practices responded to the Data-driven Quality Improvement in Primary Care (DQIP) intervention in terms of their adoption of the work, reorganisation to deliver the intended change in care to patients, and whether implementation was sustained over time.

Design

Mixed-methods parallel process evaluation of a cluster trial, reporting the comparative case study of purposively selected practices.

Setting

Ten (30%) of the 33 primary care practices in Scotland, UK, that participated in the trial.

Results

Four practices were sampled because they had large, rapid reductions in targeted prescribing. All four had internal agreement that the topic mattered, made early plans to implement (including assigning responsibility for the work) and regularly evaluated progress. However, how they organised the work internally varied. Six practices were sampled because they had initial implementation failure. Implementation failure occurred at different stages depending on practice context, including internal disagreement about whether the work was worthwhile, and intention to implement but lack of capacity to implement or sustain implementation because of unfilled posts or sickness. Practice context was not fixed, and most practices with initially failed implementation adapted to deliver at least some elements. All interviewed participants valued the intervention because it was an innovative way to address an important aspect of safety (although one non-interviewed general practitioner in one practice disagreed). Participants felt that reviewing existing prescribing did influence their future initiation of targeted drugs, but raised concerns about sustainability.

Conclusions

Variation in implementation and effectiveness was associated with differences in how practices valued, engaged with and sustained the work required. Initial implementation failure varied with practice context, but was not static, with most practices at least partially implementing by the end of the trial. Practices organised their delivery of changed care to patients in ways which suited their context, emphasising the importance of flexibility in any future widespread implementation.

Trial registration number

Keywords: PRIMARY CARE, Prescribing, General Practice, Quality and Safety, Randomised Controlled Trials, Process Evaluation

Strengths and limitations of this study.

This is a comprehensive, preplanned process evaluation which includes a third of all practices which participated in the Data-driven Quality Improvement in Primary Care (DQIP) stepped-wedge cluster-randomised trial.

The evaluation sampled four practices which rapidly implemented the intervention and all six practices which failed to implement the intervention to some degree.

A strength of the study is the use of qualitative data from interviews and observational field notes, and quantitative data about key trial processes and practice-level effectiveness to examine implementation in detail.

A limitation is that we did not collect any data from practices prior to them receiving the intervention.

Background

High-risk prescribing in primary care is a major concern for healthcare systems internationally. Between 2% and 4% of emergency hospital admissions are caused by preventable adverse drug events (ADEs),1 2 at significant cost to healthcare systems.3 4 A large proportion of these admissions are caused by commonly prescribed drugs, with non-steroidal anti-inflammatory drugs (NSAIDs) and antiplatelets being frequently implicated, causing gastrointestinal, cardiovascular and renal adverse events.5–7

The Data-driven Quality Improvement in Primary Care (DQIP) intervention was systematically developed and optimised8–10 and comprised three intervention components: (1) professional education about the risks of NSAIDs and antiplatelets via an outreach visit by a pharmacist; (2) financial incentives to review patients at the highest risk of NSAID and antiplatelet ADEs, split into a participation fee of £350 and £15 per patient reviewed and (3) access to a web-based IT tool to identify such patients and support structured review. The intervention was evaluated in a pragmatic stepped-wedge cluster-randomised controlled trial11 in 33 practices from one Scottish health board, where all participating practices received the intervention but were randomised to one of 10 different start dates.8 Across all practices, targeted high-risk prescribing fell from 3.7% immediately before to 2.2% at the end of the intervention period (adjusted OR 0.63 (95% CI 0.57 to 0.68), p<0.0001). The intervention only incentivised review of ongoing high-risk prescribing, but led to reductions in ongoing (adjusted OR 0.60, 95% CI 0.53 to 0.67) and ‘new’ high-risk prescribing (adjusted OR 0.77, 95% CI 0.68 to 0.87). Notably, reductions in high-risk prescribing were sustained in the year after financial incentives stopped. In addition, there were significant reductions in emergency hospital admissions with gastrointestinal ulcer or bleeding (risk ratio (RR) 0.66, 95% CI 0.51 to 0.86) and heart failure (RR 0.73, 95% CI 0.56 to 0.95).12
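As a worked illustration of the financial incentive described above, the payment a practice could receive is the fixed participation fee plus the per-review fee. This is a sketch only: $n$ denotes the number of patients reviewed, and the value $n = 40$ is a hypothetical figure chosen for illustration, not trial data.

```latex
P(n) = \pounds 350 + \pounds 15 \times n,
\qquad \text{e.g. } n = 40:\quad P(40) = 350 + 15 \times 40 = \pounds 950
```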

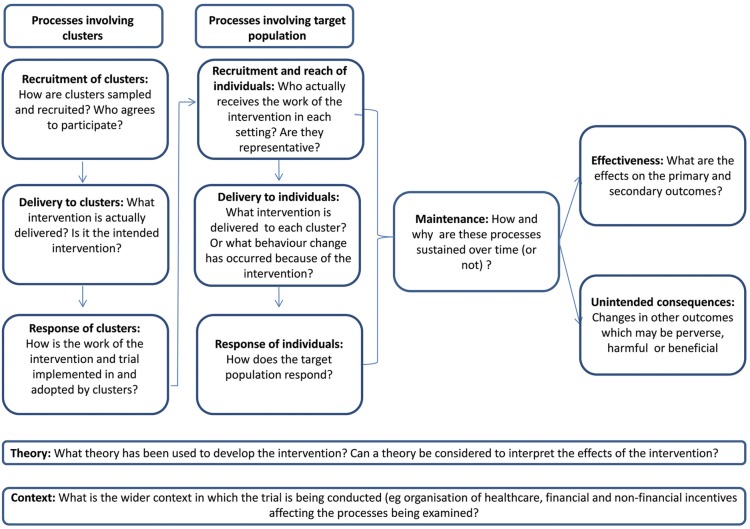

Alongside the main trial, we designed a mixed-methods process evaluation,13 14 based on a cluster-randomised trial process evaluation framework which we developed.15 Our framework emphasises the importance of considering two levels of intervention delivery and response that often characterise cluster-randomised trials of behaviour change interventions (although their importance will depend on intervention design). The first is the intervention that is delivered to clusters, which respond by adopting (or not) the intervention and integrating it with existing work. The second is the change in care which the cluster professionals deliver to patients. In DQIP, the delivery of the intervention to professionals was predefined, intended to be delivered with high fidelity across all practices by the research team, whereas the intervention delivered to patients was at the discretion of practices, who decided whether and how they reviewed patients and whether to change prescribing. We used this framework to structure our parallel process evaluation, mapping data collection to a logic model of how the DQIP intervention was expected to work (figure 1).

Figure 1.

DQIP process evaluation framework. DQIP, Data-driven Quality Improvement in Primary Care.

The aim of this analysis is to examine how different practices responded to the intervention delivered to them by the research team in terms of their adoption of the work, their reorganisation to deliver the intended change in care to patients and whether implementation was sustained over time.

Methods

The design was a mixed-methods comparative case study, with general practices as the unit of analysis.16 17 The overall design and methods have been described previously in the published protocol.18 In brief, we used a mixed-methods parallel process evaluation to examine the implementation of selected processes and their associations with change in high-risk prescribing at practice level. The quantitative element examined how change in prescribing at practice level was associated with practice characteristics and implementation of key processes and is reported separately (Dreischulte et al. Process evaluation of the Data-driven Quality Improvement in Primary Care (DQIP) trial: quantitative examination of representativeness of trial participants and heterogeneity of impact. Implementation Science 2016, Submitted). The qualitative element consisted of case studies in 10 of the 33 participating practices. Practice staff perceptions of the importance of different intervention components and when they had an effect are reported separately.19 The analysis reported here examines how practices adopted, implemented and maintained the intervention. Informed consent was obtained from all participants to participate and to publish anonymised data.

Case study sampling

Practices were purposively sampled to include those which had and had not initially reduced high-risk prescribing, judged by visual inspection of run charts of high-risk prescribing rates ∼4 months after starting the intervention. Our assumption was that change in high-risk prescribing was a good proxy for initial response to the intervention delivered to practices. Sampling was additionally structured to recruit practices starting the trial at different times, and to ensure a mix of smaller and larger practices (<5000 and ≥5000 registered patients), reflecting the stepped-wedge trial design and our a priori belief that smaller practices would more effectively implement the intervention (randomisation was stratified by list size).18 Ten (30%) practices were sampled and recruited to the process evaluation, including all six practices with no initial change in targeted prescribing.

Qualitative data collection

In each practice, the general practitioner (GP) most involved in the DQIP work (leading the review work), a GP less involved (who may have been involved in the review work to some degree), the practice manager and the practice pharmacist were invited for interview. All interviews were carried out by AG ∼6 months after the practice started the intervention, and the GP most involved was interviewed again 3–6 months later to explore changes over time. Interviews lasted ∼1 hour and were audio-recorded and transcribed verbatim. Additional data were field notes made by AG during the educational outreach visits (EOV), detailing responses to the educational component and informatics tool training. Data generation took place between September 2011 and December 2013 in parallel with intervention implementation. All data have been anonymised, including by using pseudonyms for practices.

Quantitative data collection

Data from the DQIP IT tool were used to sample practices, using run charts to visually assess change in performance in the first 4 months of implementation, but were not otherwise used alongside the qualitative analysis reported here, which was blind to any quantitative process or outcome data. Data from the same source were also used after qualitative analysis was complete to measure and categorise reach, delivery to the patient and maintenance. Reach was measured as the percentage of patients flagged as needing a review who had received at least one review; delivery to the patient as the percentage of flagged patients who had further action in response to initial review (eg, a medication change, or an invitation to consult); and maintenance as reach in the final 24 weeks of the intervention period. Effectiveness was defined as the relative change in the mean rate of high-risk prescribing (the trial primary outcome measure) between baseline (the 48 weeks before the intervention) and the final 24 weeks of the intervention. Quantitative data were only used to explore whether the qualitative judgements made about implementation were consistent with observed data on reach, delivery, maintenance and effectiveness. Associations between quantitative practice-level process and effectiveness data will be reported separately (Dreischulte et al 2016, Submitted).
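The four measures defined above are simple proportions and a relative change, and can be sketched as follows. This is a minimal illustration, not the analysis code used in the study: it assumes a hypothetical per-patient export from the DQIP IT tool with three yes/no fields (the `FlaggedPatient` record and its field names are our assumptions, not the tool's actual data format). Following the table 3 footnotes, patients without a recorded review are counted as having no change, and maintenance is computed over the patients eligible in the final 24 weeks.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class FlaggedPatient:
    """One patient flagged for review (hypothetical export format, not the real tool's)."""
    reviewed: bool                 # at least one review recorded during the intervention period
    further_action: bool           # e.g. medication change or invitation to consult
    reviewed_final_24_weeks: bool  # review recorded in the final 24 weeks


def percentage(numerator: int, denominator: int) -> float:
    """Return numerator/denominator as a percentage (0.0 if nobody is eligible)."""
    return 100.0 * numerator / denominator if denominator else 0.0


def reach(eligible: Sequence[FlaggedPatient]) -> float:
    """% of flagged patients with at least one review recorded."""
    return percentage(sum(p.reviewed for p in eligible), len(eligible))


def delivery(eligible: Sequence[FlaggedPatient]) -> float:
    """% of flagged patients with further action after review.

    Patients without a recorded review are assumed to have had no action.
    """
    return percentage(sum(p.reviewed and p.further_action for p in eligible), len(eligible))


def maintenance(eligible_final_24_weeks: Sequence[FlaggedPatient]) -> float:
    """Reach restricted to patients eligible in the final 24 weeks."""
    return percentage(
        sum(p.reviewed_final_24_weeks for p in eligible_final_24_weeks),
        len(eligible_final_24_weeks),
    )


def effectiveness(baseline_rate: float, final_rate: float) -> float:
    """Relative reduction (%) in the mean high-risk prescribing rate.

    baseline_rate: mean rate in the 48 weeks before the intervention.
    final_rate: mean rate in the final 24 weeks of the intervention.
    Negative values indicate an increase in high-risk prescribing.
    """
    return 100.0 * (baseline_rate - final_rate) / baseline_rate


if __name__ == "__main__":
    # Made-up patients for illustration only (not trial data); the same list is
    # reused as the final-24-week eligible set for brevity.
    patients = [
        FlaggedPatient(True, True, True),
        FlaggedPatient(True, False, False),
        FlaggedPatient(False, False, False),
        FlaggedPatient(True, True, False),
    ]
    print(reach(patients), delivery(patients), maintenance(patients))  # 75.0 50.0 25.0
    print(effectiveness(4.0, 1.0), effectiveness(5.0, 6.0))  # 75.0 -20.0
```

Returning 0.0 for an empty denominator is a convenience for the sketch; a real analysis might instead report such practices as missing.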

Researcher expectations of how the intervention would work

During intervention and process evaluation design, the research team expected that several processes had to happen for the intervention to reduce high-risk prescribing:

Practices would have to adopt the intervention, in the sense of being convinced that the work was worthwhile, engaging with the education and set-up process, and organising how the required work would be done.20 This is represented by the ‘response of clusters’ box in figure 1.

Practices would have to deliver the reviews to patients, using the informatics tool to identify patients, with a GP initially reviewing the records and taking any action they judged necessary (eg, stopping the drug, reviewing the patient in person or seeking advice from a specialist). This work is represented by the ‘reach’ and ‘delivery to patient’ boxes in figure 1.

Practices would then have to maintain this activity over the 48 weeks the intervention was active (‘maintenance’ in figure 1).

Analysis

Analysis was iterative with data generation to allow issues and themes identified to inform subsequent data generation and facilitate deeper exploration, and continued until no new themes emerged. Analysis was carried out by AG with BG contributing through discussion of data and interpretation until he became aware of the trial results, and was completed by AG before she knew the outcome of the trial.

A coding frame was developed inductively and deductively from field notes, initial interviews and topic guides (see online supplementary appendix), the process evaluation framework15 and the logic model,18 and was revised during analysis using the constant comparative method.21 NVivo V.8 was used to apply the coding frame systematically to all data. An in-depth description of each case study was constructed, detailing the characteristics and perceptions of all practice staff who participated in interviews, with additional data from the EOV observations and informal interviews. This enabled detailed exploration on a case-by-case basis. Analysis used the framework technique, which enabled comparison of the data by concept, theme and practice, and supported both cross-case and within-case comparisons.22 Analysis drew on normalisation process theory (NPT), which focuses attention on how interventions become integrated, embedded and routinised into social contexts.20 AG analysed the data twice: first inductively and deductively as described above, and then deductively based on NPT. Each NPT construct was defined specifically for DQIP, and these definitions are given in table 1. Coding charts and memos were developed for each NPT construct and for the higher-order constructs (coherence, cognitive participation, collective action and reflective monitoring). NPT interpretation and coding reliability were established through a workshop with NPT experts. The data were explored for negative cases.

Table 1.

Normalisation process theory constructs interpreted for the DQIP trial

| Coherence: How do participants understand and attribute value to DQIP? | Cognitive participation: Enrolment and engagement of individuals and groups | Collective action: Organising and doing the work | Reflective monitoring: Reflecting on progress and making necessary adjustments |
|---|---|---|---|
| Differentiation: How does DQIP differ from other prescribing quality improvement work? | Initiation: Agency, the capacity of individuals to make decisions and weigh up options | Interactional workability: How is DQIP operationalised? | Systematisation: How do practices make judgements about effectiveness? |
| Individual specification: How does DQIP cohere with other work? | Enrolment: Persuading others to take part | Skill set workability: How is the work allocated? Roles and responsibilities | Communal appraisal: Regular and organised formal monitoring and appraisal |
| Communal specification: Does the team have a shared understanding of DQIP? | Legitimation: Buying into the DQIP work; how or what do they value about DQIP? | Relational integration: How is DQIP understood and mediated by the people around it? | Individual appraisal: Unsystematic and informal appraisal of DQIP. What are the conclusions? |
| Internalisation: What past experiences do they relate DQIP work to? | Activation: What process have they decided on to do the work? What resources are required? | Contextual integration: Incorporation of DQIP into practice context | Reconfiguration: Appraisal may lead to changes. What have they changed? |

DQIP, Data-driven Quality Improvement in Primary Care.

bmjopen-2016-015281supp_appendix.pdf (512.4KB, pdf)

Results

Findings are based on data from 38 interviews (10 lead GPs of whom 9 were interviewed twice, 7 GPs less involved with DQIP, 9 practice managers and 3 practice pharmacists) and from ∼11 hours of field notes. Table 2 summarises the practice characteristics and intended and actual process for delivering the intervention to patients, ordered by the final qualitative judgement about the extent to which they implemented the intervention as intended by the research team (table 3).

Table 2.

Practice characteristics including planned and actual process for delivering care to patients

| Practice* | Randomised group† | Approximate list size and full-time equivalent (FTE) GPs | Sampling (initial change in prescribing; size) | Overall high-risk prescribing rate at baseline‡ (%) | Process for delivering the intervention to patients, both planned by the practice and actual (based on interview and observational data) |
|---|---|---|---|---|---|
| Orosay | 2 | 10 000; 6.5 FTE | Not reducing; large | 6.6 | Failure to legitimise and no process to implement agreed, but the most engaged GP said there was some change in clinical practice |
| Boreray | 10 | 6500; 3.5 FTE | Not reducing; large | 2.5 | Initially agreed to divide the work between GPs, but failed to implement because of understaffing/prioritisation of clinical work |
| Hellisay | 9 | 3000; 1.9 FTE | Not reducing; small | 7.0 | Initially agreed that one GP would review, but actually divided the work. Staff changes meant they could not maintain reviewing |
| Mingulay | 3 | 9000; 5 FTE | Not reducing; large | 3.2 | Initially agreed that one GP would review all patients in a set 2 hours/month. This was inadequate, and poor GP-to-GP communication further reduced impact |
| Gighay | 7 | 2500; 1.9 FTE | Not reducing; small | 3.4 | Initially agreed that one GP would review all patients and flag notes for when next seen, so relied on patients consulting and other GPs acting on the flag |
| Lingay | 4 | 3000; 2 FTE | Not reducing; small | 5.0 | Initially agreed to divide the work, but did not implement; one GP systematically and enthusiastically reviewed after a delay |
| Scalpay | 6 | 3000; 2 FTE | Reducing; small | 3.7 | Did not agree process at EOV, but rapid implementation by one GP systematically reviewing all patients |
| Hirta | 8 | 5500; 4.3 FTE | Reducing; large | 4.2 | Initially agreed to divide the work and rapidly delivered by all GPs initially reviewing. Once the initial bulk of reviews was done, one GP maintained reviewing |
| Monach | 1 | 3500; 2.7 FTE | Reducing; small | 7.1 | Initially agreed to divide the work, but actually rapid implementation by one GP doing all the reviewing |
| Taransay | 5 | 6000; 4 FTE | Reducing; large | 3.7 | Initially agreed to divide the work, with rapid implementation by all GPs carrying out the reviewing |

*Ordered from top to bottom in terms of the practices judged from qualitative analysis to have been the least (top) to most (bottom) successful implementers.

†Practice group in terms of when it started the intervention (1 = first group to start; 10 = last group to start).

‡Mean practice rate of high-risk prescribing in the 2 years before starting the intervention.

Table 3.

Comparison of overall qualitative assessment of implementation and quantitative measures of reach, delivery, maintenance and effectiveness

| Practice | Overall qualitative assessment of implementation* | Reach: % of eligible patients† with a review recorded at any point during the intervention period | Delivery to patients: % of eligible patients‡ with change in prescribing recorded§ | Maintenance: % of eligible patients‡ with a review recorded in the final 24 weeks of the intervention | Effectiveness: % reduction in high-risk prescribing¶ |
|---|---|---|---|---|---|
| Orosay | DQIP intervention was not adopted because there was a failure to collectively legitimise the intervention. This was too much work for one individual, so no process for implementation was agreed | 3 | 2 | 0.7 | 19 |
| Boreray | DQIP intervention was not adopted. Initially the GPs agreed to share the work, but failed to implement any changes because of understaffing and a prioritisation of clinical work | 2 | 0 | 0 | −24 |
| Hellisay | DQIP was adopted and initially delivered to patients, but with low maintenance. The GPs dealt with the initial bulk immediately, but staff changes meant they struggled to maintain reviewing consistently | 64 | 5 | 7 | 6 |
| Mingulay | DQIP was adopted with reasonable initial reach. One GP had 2 hours per month allocated to deliver review, but this was inadequate to address the numbers identified, and there was limited communication with other GPs | 62 | 29 | 48 | 28 |
| Gighay | DQIP was adopted with limited delivery to patients. They agreed that one GP would review all patients and flag notes for when next seen, so relied on patients consulting and other GPs acting on the flag | 83 | 14 | 43 | 56 |
| Lingay | DQIP intervention was not initially adopted. GPs initially agreed to divide the work, but problems with access to the informatics tool led to lost motivation. DQIP was implemented fully by one GP after a delay | 78 | 32 | 44 | 53 |
| Scalpay | DQIP was fully implemented from the start. The practice did not agree a process at the EOV, but one GP reviewed all patients | 95 | 19 | 50 | 67 |
| Hirta | DQIP was fully implemented from the start. The initial bulk of work was divided among all GPs and then one GP maintained reviewing | 90 | 45 | 43 | 75 |
| Monach | DQIP was fully implemented from the start. The practice initially agreed to divide the work, but one GP actually did all the reviewing | 89 | 38 | 47 | 77 |
| Taransay | DQIP was fully implemented from the start. DQIP was delivered by all GPs and co-ordinated by an administrative member of staff | 92 | 33 | 68 | 59 |

*Ordered from top to bottom in terms of the practices judged from qualitative analysis to have been the least (top) to most (bottom) successful implementers.

†Eligible defined as patients with one or more high-risk prescriptions issued during the 48-week intervention period.

‡Eligible defined as patients with one or more high-risk prescriptions issued during the second half (24 weeks) of the intervention period.

§Patients without a review recorded were assumed not to have a change in prescribing.

¶Defined as the relative change in high-risk prescribing in each practice in the final 24 weeks of the intervention compared with the 48 weeks before the intervention started (negative numbers indicate an increase in high-risk prescribing).

DQIP, Data-driven Quality Improvement in Primary Care.

Perceptions of the work required and initial plans to implement

All GPs interviewed said they believed the targeted prescribing to be a cause of potentially preventable harm which was worth addressing (although in one practice, one non-interviewed GP did not believe this—see below). There was a consistent perception that safety-focused prescribing improvement work was more engaging for GPs (and patients) compared with previous experience of interventions to reduce prescribing cost. Some GPs reported they had already reviewed NSAID prescribing under a local health board initiative but DQIP was more meaningful because it focused on and identified individuals at higher risk of adverse events. All but one interviewed GP perceived the DQIP intervention to be clearly differentiated from existing prescribing improvement activity, and to be of significant value.

Although practices were free to organise the work as they saw fit, the research team knew that approximately half of the patients requiring review over the year would be identified when the practice started the intervention. At the educational visit, the research team therefore encouraged practices to divide the work across more than one GP, and most practices initially agreed to do this. However, many found that this was neither feasible nor sustainable (eg, where many or most GPs in a practice worked part-time) or, in small practices, not necessary because one GP could comfortably manage the workload.

All practices, however, found that this initial volume of work required dedicated time and could not be done opportunistically. Three practices (Mingulay, Lingay and Hirta) allocated administrative time to carrying out the DQIP reviews; in the remaining practices, the GPs reviewed patients once routine work was finished.

…we ended up doing it in the evenings; it was after six o'clock, so it was sitting here at half past six, seven o'clock in the evening ploughing through patients’ notes. (Hellisay, GP 2, interview 1)

Case study practices which had not initially reduced high-risk prescribing

Six practices were sampled on the basis of having no initial change in high-risk prescribing, and were assumed by the research team to be initial ‘non-adopters’. However, the reasons for this varied across practices.

Failure to initially adopt the DQIP intervention. Three practices failed to initially adopt the DQIP intervention, although for different reasons and with different consequences. In Orosay and Boreray, non-adoption was persistent, whereas Lingay did adopt after a delay.

The GPs in Orosay never agreed how the work would be done, partly because they did not agree that it was important. There was poor attendance at the initial EOV and at a second visit requested by the practice after ∼6 months. One GP in Orosay was highly motivated and engaged, but another felt they had no obligation to participate despite the practice having received the initial payment, and a third did not believe the targeted prescribing needed review.

How much risk is each individual patient at? What will happen if we don't do anything? This GP's questioning resulted in a long discussion with this GP arguing that he had little experience of renal failure due to NSAIDs; he said he is an old GP and his experience shapes his prescribing. (Extract from field notes 17.05.12)

During practice visits and during interviews in Orosay, administrative staff indicated that working relationships among GPs were frayed at times and there was a belief that it was not appropriate for one GP to change another's prescribing. However, although patients were not reviewed as intended, there was some evidence individual GPs in Orosay did change their prescribing as a result of the educational intervention.

Yeah it's been because of DQIP really yes, aye. … It's merely made me sit up and pay a bit more attention… It's more now when I see them, and I didn't even know if their name's on the tool. (Orosay, GP 1, interview 1)

In Boreray, all GPs legitimised the work. The practice agreed a process for doing the work but never got started because of persistent clinical and administrative understaffing:

…we lost a partner… but the (other) doctors have cut their hours, they havnae got time…so it's kinda been a wee bit o’ upheaval in the last few months. So I was hoping they would take on a full-time Practice Manager… but they've taken on a part-time one. (Boreray, administrative interview)

As a result, the practice prioritised routine clinical work, although at the final interview, the GP said they had not known that each reviewed patient earned a £15 payment, and that knowing this might have led to different priorities.

Lingay experienced a ‘delayed implementation’. When examining the practice data during the EOV, it was obvious to the two GPs in the practice that many of the patients identified usually consulted with one of them. This GP was keen to review identified patients, but this was delayed by informatics access problems and little reviewing was initially done as motivation waned. Subsequently, one of the GPs was temporarily unable to do clinical work for health reasons which created time for doing work like DQIP. Once started, the GPs became highly motivated by seeing reductions in high-risk prescribing, and this led them to change their appointment system to protect specific time to do quality improvement work.

…we are making changes as of this month, we are staggering the times of surgeries so that there's actually going to be proper paperwork time. (Lingay, GP 1, interview 2)

Failure to consistently reach patients targeted by the DQIP intervention. In Mingulay, all professionals legitimised DQIP and agreed that one GP would do all the work in 2 hours of dedicated administrative time per month. However, this was not enough time for the GP to complete the initial large volume of reviews, a problem exacerbated by the informatics tool initially running slowly (as it did for some practices in the first wave of implementation). There was also poor communication with the other GPs in the practice because most worked part-time and there was heavy use of locums. The GP doing the work therefore felt that they never got on top of the reviewing, and perceived that stopped prescribing was often restarted.

The first few times we sort of went great guns and I got right down to, I only had 5 to review and then of course the next time I went on there was something like 19 ‘cause obviously there had been a couple that had re-triggered for whatever reason and then others that had obviously come on in that time and it was very frustrating. (Mingulay, GP 1, interview 1)

Failure to deliver the DQIP intervention to patients. In Gighay, one GP committed the practice to DQIP, attended the EOV and carried out all the review work, but made few changes, instead flagging the patient's record for the attention of whichever GP next saw them. As a result, GPs who had not received the educational intervention (written educational materials were not internally distributed) were responsible for delivering the change in prescribing, and delivery was therefore dependent on the patient attending for routine care and on the GP seeing them responding to the flag in the record.

Failure to maintain delivery of the DQIP intervention to patients. Hellisay was a two-GP practice where one GP attended the EOV and rapidly started reviewing patients. However, the other GP then left, and although a new GP replaced them, the remaining original GP then went off sick.

We blitzed the first numbers that we had, we then drifted along for a little bit doing some (reviews)… roundabout Christmas we stopped doing DQIP ‘cause Christmas is, is such a busy rush time, anyway DQIP dropped to the bottom of the priority list. Pretty much after Christmas Dr X went off sick. (Hellisay, GP 2, interview 1)

Reviewing was therefore not maintained, although the new partner said that, had they been aware of the financial incentive, it might have encouraged them to prioritise DQIP over other work.

Case study practices which had large initial reductions in high-risk prescribing

Four practices were sampled on the basis of having large initial reductions in high-risk prescribing. In these practices, virtually all prescribers attended the EOVs, and legitimation of and commitment to the work were strong and widespread. Two were small practices which initially decided to share the review work among all GPs, but within a couple of months of starting, one more motivated and/or IT-literate GP took responsibility for the work because it was ‘not too onerous for one GP’ (Scalpay, GP 1, interview 1). In the two large practices, the initial review work and patient communication were shared by all GPs. In Hirta, one GP then took responsibility for subsequent review work and consultations with patients, whereas in Taransay the work continued to be shared across GPs and was coordinated by a member of administrative staff.

Maintenance

No practice felt that DQIP put an unfeasible burden on practice resources, although it clearly required time to review records and communicate with patients, and two practices (Hellisay and Boreray) were unable to deliver the intervention because they were understaffed and prioritised competing demands. Most GPs did the required work outside routine opening hours, although in three practices GPs used time already allocated for administrative work. As a result, all practices primarily communicated with patients by telephone. DQIP work was therefore done as a separate activity rather than being fully integrated with existing work, with only Lingay modifying any broader processes or systems as a result of the DQIP work.

Participants perceived that the review work influenced their future prescribing, saying that it was more ‘hands on’ than other, pharmacist-led prescribing interventions and came with clear and concise prescribing advice.

cause you're thinking ‘oh …’, when you're prescribing anti-inflammatory, is there a reason I shouldn't be or should I prescribe a PPI as well, so I think it probably does focus your mind. (Scalpay, GP 1, interview 1)

Because participants felt this applied less to GPs who were not doing the reviewing, they used a range of strategies to try to prevent drugs being restarted without due consideration, including informal discussion in the coffee room or in practice meetings, and flagging the notes with a warning. GPs from the practices that successfully reduced the targeted high-risk prescribing continued to check the tool to ensure that no patients had been incorrectly restarted on high-risk drugs and that no new patients had been prescribed the targeted medication, and they expressed concern that they would no longer be able to check this once the trial ended.

…the worry is of course when, when the project stops whether things will drift back up again, I just wondered if, if this software could be left running… … ‘cause I think it's very, very useful … I think, it's like everything, unless you're constantly reminded of things it does, it does, it does tend to slip a little bit. (Monach, GP 1, interview 2)

Unintended consequences

Unintended consequences were explored in the interviews but none were identified.

Quantitative data in relation to qualitative assessment of the extent of implementation

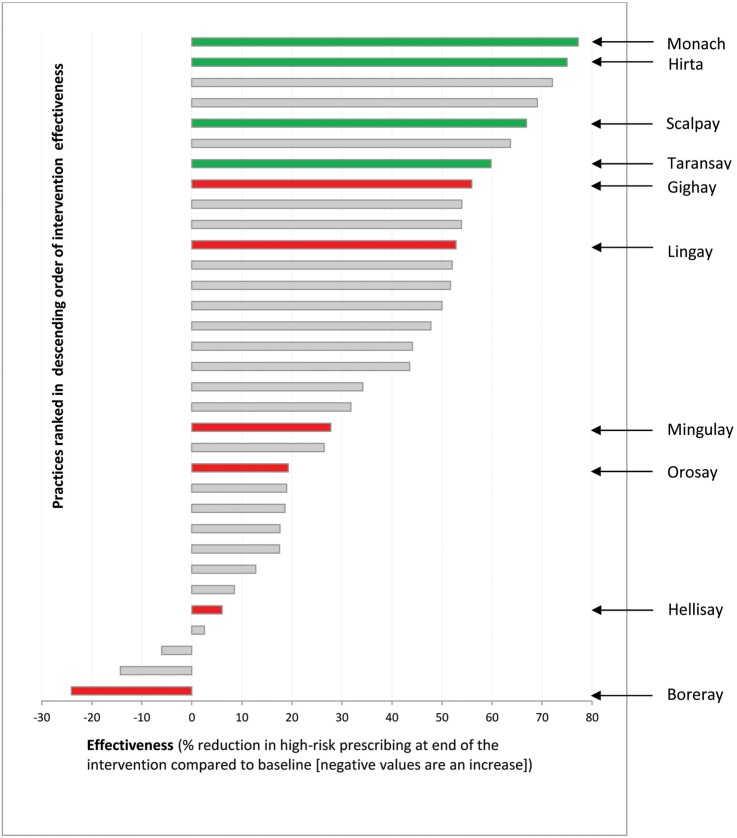

Table 2 summarises the qualitative assessment of the extent of practice adoption and implementation, made before the trial results and quantitative process data were known, with practices ordered from least to most successful adopters/implementers. It also shows quantitative measures of reach, delivery to patients, maintenance and effectiveness to contextualise the qualitative judgement. The four practices sampled as having rapid initial reductions in high-risk prescribing (Scalpay, Hirta, Monach, Taransay) were all qualitatively judged to have had rapid and sustained adoption and implementation. These four practices also had the highest quantitative measures of reach, and among the highest measures of delivery and maintenance. They had among the largest reductions in targeted high-risk prescribing of all practices in the trial (figure 2).

Figure 2. All trial practices ranked in order of intervention effectiveness, with case study practices identified. Practices marked in green were sampled because they were judged to have rapidly reduced the targeted prescribing initially; practices marked in red were sampled because they were judged not to have reduced the targeted prescribing initially.

The six practices sampled as having no initial reduction in high-risk prescribing (assumed to be ‘initial non-adopters’ during sampling) were more varied in their qualitative and quantitative assessment. Table 2 shows that the two practices qualitatively judged not to have adopted the intervention at all (Orosay and Boreray) had very low rates of reach, delivery and maintenance, all of which were measured using data from the informatics tool. Orosay did have a reduction in high-risk prescribing, consistent with qualitative data indicating that at least some GPs changed their prescribing practice even though the informatics tool was never systematically used, whereas high-risk prescribing increased in Boreray (table 2 and figure 2). The other four practices sampled as having no initial reduction in high-risk prescribing were qualitatively judged to have implemented with delay, or in a way that the practices themselves perceived as suboptimal. They had generally intermediate levels of reach over the whole intervention period (table 2). Consistent with the qualitative data, Hellisay had limited delivery of changed prescribing or follow-up to patients, and poor maintenance, with minimal change in the rate of high-risk prescribing. The remaining three practices had somewhat lower but overlapping levels of delivery to patients compared with the successful implementers, similar rates of maintenance, and somewhat lower effectiveness, consistent with the qualitative interpretation that these practices had delayed but ultimately successful implementation (table 2 and figure 2).

Discussion

This study examined implementation of the DQIP intervention in 10 practices (30% of trial practices) purposively selected for variation in initial reduction in targeted high-risk prescribing, which we assumed was a proxy for initial adoption and implementation. For the four practices selected on the basis of early reductions in high-risk prescribing, qualitative and quantitative data showed evidence of rapid and sustained adoption and implementation, and these practices were among the most effective in terms of practice-level change in high-risk prescribing. Our sample included all six practices that had no initial reduction in high-risk prescribing. These were all judged qualitatively either not to have adopted at all, because of disagreement about the value of the work, or to have experienced delay in implementation related to their organisational context, and the quantitative measures were largely consistent with this qualitative interpretation.

The way that practices did the work of implementation therefore appeared to be associated with effectiveness. NPT offers a useful theoretical lens through which to examine this work.20 The NPT construct of coherence refers to participants' understanding of the intervention. Interviewed professionals in all practices clearly perceived the intervention and the associated review work as different from existing quality improvement work because it focused on safety rather than cost. Cognitive participation relates to the enrolment and engagement of practices and clinicians in the DQIP work. In all practices there was at least one engaged GP, but collective engagement among the other GPs varied. Most or all GPs in nine of the case study practices strongly legitimised the DQIP intervention, perceiving it as an innovative way to address an important safety problem. However, in the one practice where there was openly stated disagreement about its value, implementation of the intervention failed from the outset (although there was evidence of changed prescribing practice by GPs who did legitimise the work, and of reduced high-risk prescribing, contrary to our belief that use of the informatics tool would be required for effectiveness).

Collective action focuses on how practices organised the work required for DQIP, which was usually done as a separate activity rather than being fully integrated with existing work or practice routines. In the smaller practices that successfully implemented the intervention, whether immediately or with delay, one GP did all the reviewing, partly because the number of patients made this feasible (contrary to our prior belief that intervention effectiveness would require sharing of the work). In the larger practices, the initial bulk of work was shared between GPs, which required coordination by an administrator, but continued reviewing was either shared or taken on by the most engaged GP.

Reflexive monitoring concerns how participants assessed their progress in delivering the intervention and responded to problems. Many GPs in practices that successfully implemented the intervention said that they regularly checked the tool to monitor progress. Interviewed GPs perceived that reviewing altered their own future prescribing but were concerned that this did not apply to their colleagues. They described using a number of strategies to influence colleagues' prescribing and so help ensure sustainability, but were uncertain whether these were effective (although the main trial analysis found significant reductions in new high-risk prescribing, and that reductions in total high-risk prescribing were sustained in the 48 weeks after financial incentives ceased).12

Overall, coherence appeared uniformly high across case study practices (perhaps unsurprisingly in practices that volunteered to participate in a trial), and so did not appear to influence implementation. However, varied perceptions of the legitimacy of the work and low engagement (cognitive participation) contributed substantially to poor adoption in two practices. More successful practices delivered the intervention in a variety of ways that did not always match the research team's prior beliefs about how best to deliver the intended care, highlighting the importance of allowing practices the flexibility to implement in ways that worked for them (collective action). Successful implementers also regularly reviewed their progress and used a range of strategies to change their colleagues' practice, addressing their own concerns about sustainability (reflexive monitoring).

Our other qualitative process evaluation paper reports that practice participants perceived all primary components of the intervention delivered to them by the research team (financial incentives, education, informatics) to be active.19 This paper complements and extends those findings by examining how practices actually responded to the intervention by adopting and implementing the work of delivering changed care to patients. These findings are also consistent with the quantitative examination of variation in effectiveness (Dreischulte et al 2016, Submitted). Six of the 10 case study practices were sampled because they did not initially reduce high-risk prescribing (assumed to be initial implementation failures), but most implemented after a delay and did achieve reductions in targeted prescribing. The practice where qualitative and quantitative data were most incongruent was Orosay, which was qualitatively judged to be an implementation failure (true in the sense that it made no use of the informatics tool and claimed no payments for reviewing) but which had a 19% reduction in targeted high-risk prescribing, consistent with the educational component altering clinical practice. This supports the idea that multicomponent interventions to change professional practice may be somewhat more effective than single-component interventions, since professionals, or the organisations they work in, may vary in how they respond (or not) to different elements.

In the wider literature, the intervention evaluated in the pharmacist-led information technology intervention for medication errors (PINCER) trial is the closest to DQIP in design and focus.23 The key differences were that PINCER was pharmacist-led, was delivered over 12 weeks, and used a more standardised intervention in terms of how the pharmacists completed reviews. The PINCER process evaluation found that some of the pharmacists doing reviews did not feel well integrated into the practice team, found the work repetitive and would have preferred some flexibility to tailor the intervention to different practice contexts.24 Both interventions produced large reductions in targeted prescribing, but the DQIP intervention had sustained effects 48 weeks after the intervention ceased, whereas the PINCER effect at 12 months was somewhat smaller than at 6 months.23 The DQIP findings suggest that GP-led reviewing may lead to more sustainable change by having greater influence on less involved GPs and by changing future initiation of high-risk prescribing. However, since both studies were carried out in volunteer practices, non-volunteer practices are likely to require greater effort to engage and greater support to deliver similar outcomes, which has implications for implementation across entire healthcare systems. From this perspective, pharmacist-led interventions have the potential advantage that they may make it easier to deliver at least some change in practices that are less engaged or resource constrained.24

These findings are likely to be generalisable beyond this immediate study and context. For example, Kennedy et al found that the Whole System Informing Self-management Engagement (WISE) intervention was not routinely adopted by practices because it was not perceived as a relevant or legitimate activity or a priority for general practice, and it did not fit within existing work.25 These findings align with the finding of this study that lack of coherence and cognitive participation were important barriers to implementation, which may therefore be important targets for intervention. Likewise, Berendsen et al26 found that the BeweegKuur (combined lifestyle intervention) was not implemented according to protocol and had poor sustainability because patients' expectations of the intervention were not met and tailoring to general practice and patient contexts was required. Moore et al27 have also shown how implementation of an exercise intervention varied by patient characteristics and context. Like this study, the process evaluation of the telephone triage for management of same-day consultation requests in general practice (ESTEEM) trial, which compared GP-led versus nurse-led telephone triage of same-day appointment requests, highlighted the importance of context for intervention implementation and suggested that allowing autonomy to deliver the intervention to suit practice and patient contexts was likely to be important for effective implementation.28

Our published paper examining professional perceptions of the key components of the intervention delivered to practices (education, financial incentives and the informatics tool) found that all components were ‘active’, although at different stages of recruitment and adoption.19 This paper adds that, within a clear definition of the work that practices were expected to do with patients, allowing practices the freedom to tailor implementation and develop their own processes to suit their context was important for effective implementation. It also shows that the characteristics associated with implementation varied with context: having a single motivated GP appeared sufficient for effective implementation in small practices, but shared vision and joint working appeared additionally important in larger practices.

Strengths and limitations

A strength of the study overall is that we were sufficiently resourced to develop and publish a preplanned and prespecified protocol18 and to carry out a rigorous process evaluation of a third of all practices included in the trial (Dreischulte et al 2016, Submitted).19 A strength of this analysis is its attention to context, illustrating how context influenced intervention implementation and that context is not fixed, for example in relation to staffing that varied over time. The sampling method we chose has advantages and disadvantages. Using run charts showing change in the targeted high-risk prescribing, we chose to sample six practices that did not appear to have implemented the intervention and four practices that had. We felt that purposive sampling for heterogeneity in this way would help us understand more clearly the barriers to and facilitators of trial delivery and intervention implementation. We recognise that sampling from the two ends of the distribution of initial response may limit generalisability to a wider population, but this is mitigated by the fact that the process evaluation included one third of the practices participating in the trial. In principle, this approach also risks regression to the mean, whereby outliers at both ends of the distribution would be expected to become more similar to each other over time, because being an outlier at one time point may reflect chance variation. We do not believe this was the case here, because the four initial responders remained among the most effective at trial end, and the two initial non-responders that delivered the largest reductions in high-risk prescribing by trial end were both judged qualitatively (blind to final trial results) to have substantially implemented the intervention after a delay.

Conclusions

The DQIP intervention successfully reduced targeted high-risk prescribing,12 and reductions were sustained in the 48 weeks after the intervention ceased. Overall, the four case study practices that immediately implemented the DQIP intervention had full or nearly full GP attendance at the EOV (consistent with collective engagement), rapidly identified and implemented a process for delivering the required work, and had at least one engaged and motivated GP who used a range of strategies to try to influence their colleagues' prescribing. In contrast, the six practices in which implementation was problematic were more variable, with initial implementation failure occurring at different stages of adoption and often linked to wider practice context or resources. However, context and resources were not fixed, and most of the initial implementation ‘failures’ went on to deliver some or all elements of the intervention, leading to reductions in targeted high-risk prescribing. In wider implementation, commissioners should pay careful attention to deploying educational and persuasive strategies to ensure that practices legitimise the work, should allow practices the freedom to tailor how they implement the work of review, and should offer additional support and facilitation early in implementation to practices struggling with short-term resource constraints or other contextual barriers.

Acknowledgments

We would like to thank all participating practices; the University of Dundee's Health Informatics Centre for data management and anonymisation; the Advisory and Trial Steering Groups; and Debby O'Farrell, who provided administrative support.

Footnotes

Twitter: Follow Aileen Grant @aileenmgrant

Contributors: BG and AG designed the study. AG led the data collection and analysis, supported by BG in analysis and interpretation. AG wrote the first draft of the manuscript with all authors commenting on subsequent drafts.

Funding: The study was supported by a grant (ARPG/07/02) from the Scottish Government Chief Scientist Office.

Disclaimer: The funder had no role in study design, data collection, analysis and interpretation, the writing of the manuscript or the decision to publish.

Competing interests: None declared.

Ethics approval: Fife and Forth Valley Research Ethics Committee (11/AL/0251).

Data sharing statement: No additional data are available.

References

- 1. Hakkarainen KM, Hedna K, Petzold M, et al. Percentage of patients with preventable adverse drug reactions and preventability of adverse drug reactions--a meta-analysis. PLOS ONE 2012;7:e33236. doi:10.1371/journal.pone.0033236

- 2. Howard R, Avery A, Bissell P. Causes of preventable drug-related hospital admissions: a qualitative study. Qual Saf Health Care 2008;17:109–16. doi:10.1136/qshc.2007.022681

- 3. National Institute for Health and Care Excellence (NICE). Costing statement: Medicines optimisation: implementing the NICE guideline on medicines information (NG5), 2015. https://www.nice.org.uk/guidance/ng5/resources/costing-statement-6916717 (accessed 11 May 2016).

- 4. Institute for Health Care Informatics, IMS. Responsible Use of Medicines Report, 2012. http://www.imshealth.com/en/thought-leadership/ims-institute/reports/responsible-use-of-medicines-report

- 5. Howard RL, Avery AJ, Slavenburg S, et al. Which drugs cause preventable admissions to hospital? A systematic review. Br J Clin Pharmacol 2007;63:136–47. doi:10.1111/j.1365-2125.2006.02698.x

- 6. Budnitz DS, Lovegrove MC, Shehab N, et al. Emergency hospitalizations for adverse drug events in older Americans. N Engl J Med 2011;365:2002–12. doi:10.1056/NEJMsa1103053

- 7. Leendertse AJ, Egberts ACG, Stoker LJ, et al. Frequency of and risk factors for preventable medication-related hospital admissions in the Netherlands. Arch Intern Med 2008;168:1890–6. doi:10.1001/archinternmed.2008.3

- 8. Dreischulte T, Grant A, Donnan P, et al. A cluster randomised stepped wedge trial to evaluate the effectiveness of a multifaceted information technology-based intervention in reducing high-risk prescribing of non-steroidal anti-inflammatory drugs and antiplatelets in primary medical care: the DQIP study protocol. Implement Sci 2012;7:24. doi:10.1186/1748-5908-7-24

- 9. Grant AM, Guthrie B, Dreischulte T. Developing a complex intervention to improve prescribing safety in primary care: mixed methods feasibility and optimisation pilot study. BMJ Open 2014;4:e004153. doi:10.1136/bmjopen-2013-004153

- 10. Guthrie B, McCowan C, Davey P, et al. High risk prescribing in primary care patients particularly vulnerable to adverse drug events: cross sectional population database analysis in Scottish general practice. BMJ 2011;342:d3514.

- 11. Brown CA, Lilford RJ. The stepped wedge trial design: a systematic review. BMC Med Res Methodol 2006;6:54. doi:10.1186/1471-2288-6-54

- 12. Dreischulte T, Donnan P, Grant A, et al. Safer prescribing--a trial of education, informatics, and financial incentives. N Engl J Med 2016;374:1053–64. doi:10.1056/NEJMsa1508955

- 13. Medical Research Council. Developing and evaluating complex interventions: new guidance, 2008. www.mrc.ac.uk/complexinterventionsguidance (accessed 4 Mar 2017).

- 14. Medical Research Council. Process evaluation of complex interventions: UK Medical Research Council (MRC) guidance, 2015. https://www.mrc.ac.uk/documents/pdf/mrc-phsrn-process-evaluation-guidance-final/ (accessed 4 Mar 2017).

- 15. Grant A, Treweek S, Dreischulte T, et al. Process evaluations for cluster-randomised trials of complex interventions: a proposed framework for design and reporting. Trials 2013;14:15. doi:10.1186/1745-6215-14-15

- 16. Stake R. The Art of Case Study Research. Thousand Oaks: Sage Publications, 1995.

- 17. Crowe S, Cresswell K, Robertson A, et al. The case study approach. BMC Med Res Methodol 2011;11:100. doi:10.1186/1471-2288-11-100

- 18. Grant A, Dreischulte T, Treweek S, et al. Study protocol of a mixed-methods evaluation of a cluster randomized trial to improve the safety of NSAID and antiplatelet prescribing: data-driven quality improvement in primary care. Trials 2012;13:154. doi:10.1186/1745-6215-13-154

- 19. Grant A, Dreischulte T, Guthrie B. Process evaluation of the Data-driven Quality Improvement in Primary Care (DQIP) trial: active and less active ingredients of a multi-component complex intervention to reduce high-risk primary care prescribing. Implement Sci 2017;12:4. doi:10.1186/s13012-016-0531-2

- 20. May C, Finch T. Implementing, embedding, and integrating practices: an outline of normalization process theory. Sociology 2009;43:535. doi:10.1177/0038038509103208

- 21. Glaser B, Strauss A. The discovery of grounded theory: strategies for qualitative research. Chicago: Aldine Publishing Co, 1967.

- 22. Ritchie J, Spencer L, O'Connor W. Carrying out qualitative analysis. In: Ritchie J, Lewis J, eds. Qualitative research practice: a guide for social science students and researchers. London: Sage Publications Ltd, 2003:219–62.

- 23. Avery AJ, Rodgers S, Cantrill JA, et al. A pharmacist-led information technology intervention for medication errors (PINCER): a multicentre, cluster randomised, controlled trial and cost-effectiveness analysis. Lancet 2012;379:1310–19. doi:10.1016/S0140-6736(11)61817-5

- 24. Cresswell KM, Sadler S, Rodgers S, et al. An embedded longitudinal multi-faceted qualitative evaluation of a complex cluster randomized controlled trial aiming to reduce clinically important errors in medicines management in general practice. Trials 2012;13:78. doi:10.1186/1745-6215-13-78

- 25. Kennedy A, Rogers A, Chew-Graham C, et al. Implementation of a self-management support approach (WISE) across a health system: a process evaluation explaining what did and did not work for organisations, clinicians and patients. Implement Sci 2014;9:129. doi:10.1186/s13012-014-0129-5

- 26. Berendsen BAJ, Kremers SPJ, Savelberg HHCM, et al. The implementation and sustainability of a combined lifestyle intervention in primary care: mixed method process evaluation. BMC Fam Pract 2015;16:37. doi:10.1186/s12875-015-0254-5

- 27. Moore GF, Moore L, Murphy S. Facilitating adherence to physical activity: exercise professionals' experiences of the National Exercise Referral Scheme in Wales: a qualitative study. BMC Public Health 2011;11:935. doi:10.1186/1471-2458-11-935

- 28. Murdoch J, Varley A, Fletcher E, et al. Implementing telephone triage in general practice: a process evaluation of a cluster randomised controlled trial. BMC Fam Pract 2015;16:47. doi:10.1186/s12875-015-0263-4

Supplementary Materials

bmjopen-2016-015281supp_appendix.pdf (PDF, 512.4 KB)