Abstract

Until recently, measurement and evaluation in sport science, especially agility testing, have not always included key elements of proper test construction. Tests are often published without reliability and validity analyses for a specific population. The purpose of the present study was to examine the test re-test reliability of four versions of the 3-Cone Test (3CT) and to provide guidance on proper test construction for testing agility in athletic populations. Forty male students enrolled in classes in the Department of Physical Education at a mid-Atlantic university participated. On each test day, participants performed 10 trials. In random order, they performed three trials to the right (3CTR, standard test), three to the left (3CTL), and two trials of each modified version (3CTAR and 3CTAL), which included a reactive component in which a visual cue was given to indicate direction. Intra-class correlation coefficients (ICC) indicated moderate to high reliability for the four tests: 3CTR 0.79 (95% CI 0.64-0.88), 3CTL 0.73 (0.55-0.85), 3CTAR 0.85 (0.74-0.92), and 3CTAL 0.79 (0.64-0.88). The standard error of the measurement (SEM) was small (range 0.09 to 0.10). Pearson correlations between tests were high on day one (0.82-0.92) as well as day two (0.72-0.85). These results indicate each version of the 3-Cone Test is reliable; however, further tests are needed with specific athletic populations. Only the 3CTAR and 3CTAL are tests of agility due to the inclusion of a reactive component. Future studies examining agility testing and training should incorporate technological elements, including automated timing systems and motion capture analysis. Such instrumentation will allow for optimal design of tests that simulate sport-specific game conditions.

Key points.

The commonly used 3-cone test (upside down “L” to the right) is a reliable change of direction speed (CODS) test when evaluating collegiate males.

A modification of the CODS 3-cone test (upside down “L” to the left instead of to the right) is also reliable for evaluating collegiate males.

A modification of the 3-cone test that adds a reaction and a choice of a cut to the left or right, making it an agility test, remains reliable in collegiate males.

There are moderate to high correlations between the four versions of the test.

Reaction remains critical to the design of agility testing and training protocols, and should be investigated similarly in various athletes, including novice/expert, male/female, and across nearly every sport.

Key words: Measurement, sport, change of direction speed (CODS), methodology

Introduction

The concept of agility has seen many changes of definition in the last 60 years. Definitions have appeared in measurement texts (Clarke, 1950; Cureton, 1947; McCloy and Young, 1954) and research studies/reviews (Chelladurai, 1976; Chelladurai and Yuhasz, 1977; Sheppard and Young, 2006; Young et al., 2001) which describe different elements of agility. In a review of the literature, five key descriptive elements of agility were identified: precision/accuracy, change of direction, body (parts), quickness/rapidity, and reaction; none of the 24 definitions found had all five elements. Differences in definition have altered the reliability and validity of tests designed to quantify agility for the purposes of skill acquisition (Beise and Peaseley, 1937; Mohr and Haverstick, 1956; Rarick, 1937), prediction of athletic success (Gates and Sheffield, 1940; Hoskins, 1934; Johnson, 1934; Larson, 1941; Lehsten, 1948), and possibly even the maintenance of fitness/health (Barnett et al., 2008). As evidence of this discrepancy in the literature, Craig (2004, p. 13) noted a “gap between the applied and the scientific knowledge”. This apparent historical miscommunication between practitioners and sport scientists has, at times, resulted in the misclassification or inappropriate application of change of direction speed (CODS) tests. Instead of being treated as their own category of assessment, such CODS tests have been misinterpreted as tests of agility. This knowledge gap may be due, at least in part, to the many different definitions of agility that persist in the literature. Each definition reflects the sub-disciplinary perspective from which the measurement is derived (biomechanics, motor learning, physical education, strength and conditioning, or skill coaching). The definition of agility adopted for the present investigation comes from Sheppard and Young (2006, p. 922): “a rapid whole-body movement with changing velocity or direction in response to a stimulus”.
This definition results in only a few options for testing “true” agility. The majority of available tests are CODS tests and should be recognized as such in future studies, as they test a distinct component, separate from agility. Only movements requiring reaction to a stimulus should be classified as “agility” (Farrow, 2005).

Of the 24 unique definitions, three early researchers included ‘reaction’ when describing agility (O’Conner and Cureton, 1945; Cureton, 1947; and Clarke, 1950). Unfortunately, these early definitions were not widely adopted. Several CODS tests were developed prior to, and even after, Chelladurai (1976) critiqued prior research for the absence of reaction to a stimulus in relation to agility and further suggested that the whole body should be in motion, not just a single limb. Chelladurai (1976) also developed an agility classification scheme that included ‘simple’, ‘complex’, and ‘universal’ agility. However, his classification scheme was also limited in that it still classified CODS tests as “simple” agility. Nearly 30 years later, and with no significant change in agility protocols, Sheppard and Young (2006, p. 922) indicated the need for “a simpler definition of agility [that] could be established by using an exclusion criterion, rather than an inclusion criterion”. With no acknowledged or standard criteria, tests were published (Draper, 1985; Graham, 2000; Hoffman et al., 2007; Semenick, 1990) and used by coaches; however, we would exclude these as ‘agility’ tests because they did not require a reaction to a stimulus.

This lack of consensus with respect to a definition of agility is stark when compared to other physical attributes like aerobic conditioning. Aerobic conditioning is based on either the direct measurement or an indirect estimation of maximal oxygen uptake. The measurement of physiological gas exchange is the “gold standard” laboratory test that has given rise to validated sub-maximal or field-based tests. The protocols are well established and highly researched. In the case of agility, many of the authors fail to report the procedures by which they arrived at a new test protocol, making replication difficult.

The lack of methodological clarity is less frequent in the literature published since 2008. In 2002, Young and colleagues first identified the limitations of Chelladurai’s (1976) model. The 2002 model proposed an alternative that focused solely on “universal” agility, and identified two major contributing factors to successful agility performance: CODS and perceptual/decision-making factors. Sheppard and Young (2006) described the uniqueness of Chelladurai’s 1976 model, and its usefulness for coaches and sport scientists to better classify skills specific to a particular sport. Such knowledge allows for the creation of tests / drills that target the sub-components of a skill or the overall skill itself. For example, the design of agility tests can be improved, but what impact does muscular strength or anthropometrics have on agility and its performance? These and other elements compose the model proposed by Sheppard and Young (2006). Spiteri et al. (2014) investigated some of these elements by measuring muscle cross-sectional area, strength, and electromyography in professional female basketball players and found that eccentric strength was correlated with CODS tests, but not with tests of agility. Combined, the work of Chelladurai (1976); Sheppard and Young (2006) and Young et al. (2002) has advanced ‘agility’ as a concept that can be investigated for the purpose of talent identification as well as monitoring progress following a training program.

The 3-Cone Test is an example of a CODS test that has been used, incorrectly in the authors’ opinion, to quantify ‘agility’. To provide consistent terminology for the 3-Cone Test, as well as for the modified versions examined in the present study, we propose 3CT as an acronym. In fact, the 3CT reported in the literature dictates that the runner makes a change of direction to the right (further detail in the methods section). To make that clear to practitioners, we identify this as 3-Cone Test-Right (3CTR).

In view of the high-profile use of the 3CTR by strength and conditioning professionals, and the lack of published data on the test, an investigation into the test’s reliability is warranted. In addition to replicating the reliability of the 3CTR test (Stewart et al., 2014), the goal of the present study was to re-design the test so that it met the definition of ‘agility’ provided by Sheppard and Young (2006), namely “a rapid whole-body movement with changing velocity or direction in response to a stimulus”, and to examine the test re-test reliability of three modified versions of the 3-Cone Test (3CTR).

In order to keep the terminology consistent, the version in which the runners are required to change direction to the left is 3-Cone Test-Left (3CTL) (additional details found in the methods). The two additional modifications to the 3CT require a reaction to a stimulus and are denoted as 3-Cone Test-Agility-Right (3CTAR) and 3-Cone Test-Agility-Left (3CTAL), respectively. Each of these tests is described in detail in the methods section. Generally, the test design is the same as the CODS versions, except that the runner is cued to go either right or left based on the stimulus provided by the tester.

The hypotheses were (a) the 3CTR will be reliable (intraclass correlation coefficient (ICC)>0.75) when testing untrained college-aged men; (b) the three modified versions of the 3CTR (3CTL, 3CTAR, and 3CTAL) will be reliable (ICC>0.75); and (c) correlation coefficients amongst the four tests will be moderate (r>0.75) to high (r>0.85).

Methods

Data were collected on 49 men (age, 20.97 ± 1.67 years; height, 1.81 ± 0.06 m; body mass 85.1 ± 18.3 kg); however, nine failed to complete at least one of the required trials, leaving a final sample size of 40. Convenience sampling was used to recruit participants from the student body at a mid-Atlantic university in the Department of Physical Education. An a priori power analysis indicated that a minimum sample size of n=34 would be required to achieve 95% statistical power with an r ≥ 0.7 at an alpha of 0.05. Each subject participated voluntarily and met all inclusion criteria, including no impairment or injury that would limit test performance. Prior to testing, the University’s Institutional Review Board approved the study, and all participants read and signed an informed consent form. Volunteers also completed a brief demographic questionnaire that included information regarding their participation in sport, injury history, and dominant side (right, n = 34; left, n = 6).

Test procedures

Four versions of the 3CT were administered. Each variation of the test required movement in the horizontal and transverse planes, with varying requirements of perceptual reaction. The four tests were the 3CTR, the 3CTL, and the two directions of the 3CTA (3CTAR and 3CTAL). Each test is described in detail later in this section. The 3CTA versions had a reactive component that required the runner to make a decision to go either right or left.

Familiarization and test re-test protocols

A test-retest design was implemented to establish reliability. The components of the test battery included the 3CTR test, and three modifications: 3CTL, 3CTAR and 3CTAL (descriptions below). Participants were tested on three occasions separated by at least 48 hours. Prior to each test, participants completed a dynamic warm-up of 8-10 minutes that included several exercises designed to prepare the lower body for maximal sprinting and high velocity changes of direction.

On day 1 (familiarization), all three tests were demonstrated by the lead researcher and performed by each participant as a means of introduction to the test patterns, and to determine an acceptable rest interval during the actual trials on days 2 and 3. Each participant performed 12 trials (four trials for each test). During the initial trial, the participant ran at a self-selected “half-speed,” while the second trial was at “three-quarter speed.” The final two trials were at full speed. The order of the familiarization trials was the same for all participants: 3CTR, 3CTL, and 3CTA. Each trial was separated by 50-65 seconds.

During testing on days 2 and 3, each participant completed 10 trials: three 3CTR, three 3CTL, and four 3CTA. The order of the three groups of tests was randomized using a Balanced Latin Square technique for each participant, and no participant completed the test groups in the same order on days 2 and 3. The four 3CTA trials were also randomized to include two trials in each direction (two right; two left). The fastest trial from each test version was used for analysis. Each trial was followed by a rest interval of 50-65 seconds. This interval corresponded to an average trial time of 8.53 seconds during familiarization and a 6:1 rest-to-work ratio.
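The ordering scheme described above can be illustrated with a short sketch. The source does not say how the Balanced Latin Square was generated or how orders were assigned to participants, so the construction below (the common 0, 1, n-1, 2, ... pattern with mirrored rows for an odd number of conditions) and the helper names `balanced_latin_square` and `assign_orders` are assumptions made for illustration only.

```python
import random


def balanced_latin_square(conditions):
    """Return orderings in which every condition fills every position equally
    often and every ordered adjacent pair occurs equally often."""
    n = len(conditions)
    rows = []
    for i in range(n):
        row = []
        for j in range(n):
            # First-row pattern is 0, 1, n-1, 2, n-2, ...; row i shifts it by i.
            offset = (j + 1) // 2 if j % 2 else (n - j // 2) % n
            row.append(conditions[(i + offset) % n])
        rows.append(row)
    if n % 2:
        # With an odd number of conditions, the mirrored rows are needed
        # to balance first-order carry-over effects.
        rows += [list(reversed(r)) for r in rows]
    return rows


def assign_orders(orders, rng):
    """Hypothetical helper: draw two different orderings, one per test day."""
    day2, day3 = rng.sample(orders, 2)
    return day2, day3


ORDERS = balanced_latin_square(["3CTR", "3CTL", "3CTA"])
day2_order, day3_order = assign_orders(ORDERS, random.Random(0))
print(day2_order, day3_order)
```

With three test groups the construction yields six orderings, so each participant can be given different orders on days 2 and 3, as the protocol requires.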

Protocol for 3-Cone Test (3CTR and 3CTL)

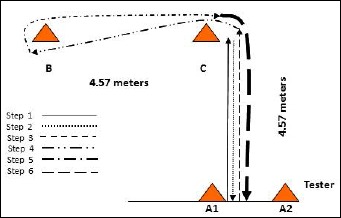

The 3CTR was arranged as a triangle, or inverted L-shape, with a start/finish line (Stewart et al., 2014). The aim was to run as fast as possible without making contact with a cone.

The time to complete the test was recorded to the nearest hundredth of a second using an electronic stopwatch (Sportline, 240). Participants started in a two-point stance; the watch was started on initiation of the first movement and stopped upon crossing the line at point A (Figure 1). The participant performed additional trials using the same procedure for the 3CTL, except that they went left at cone B and around cone D instead of right around cone C (Figure 2).

Figure 1.

3-Cone Test-Left (3CTL).

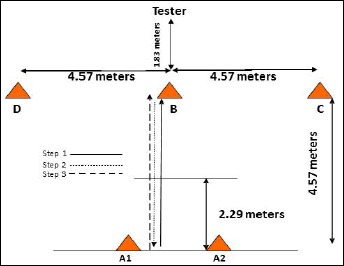

Figure 2.

3-Cone Test Agility-Left (3CTAL).

Protocol for 3-Cone Agility Test (3CTAR and 3CTAL)

The 3CTA test is a combination of 3CTR and 3CTL, having both the C and D cones as possible running patterns in the shape of a “T”. These modifications were necessary in order to have tests that require a response to a stimulus. The test variation included temporal reaction, and while this is not “universal” agility, as described by Chelladurai (1976), it allowed for comparisons between tests. The modifications to the 3CT were specific to step 3 of the 3CTL (Figure 2). During this step the subject reacted to a stimulus provided by the tester standing 4.11 m directly in front of them, or 1.83 m from B (Figure 2). During step 3, the runner turned to face cone B; as the runner passed the 2.29-m mark on the floor, the tester pointed to either cone C or D. Participants then completed steps 4-6 in the same pattern as either the 3CTR or 3CTL, depending on direction. The directional signal began with the fists of the tester together and both thumbs pointing upward directly in front of the chest of the tester. If the runner was assigned to go to their left, the tester pointed with the right thumb, and vice versa if the runner was to go to the right.

Data analysis

All data were analyzed using SPSS (Version 24; Chicago, IL) and Microsoft Excel (Version 2010). Statistical significance was set at p < 0.05. Reliability was determined via ICC model 3,1 for between-day analysis using the best trial each day for 3CTR, 3CTL, 3CTAR and 3CTAL. The ICC 3,1 “was an intra-rater design with a single tester representing the only tester of interest” (Beekhuizen et al., 2009, p. 2169). The ICC is a reliability coefficient that generates a ratio ranging from 0.00 to 1.00 to estimate the consistency of performance on repeated trials (a score of 0.00 indicates the measure was deemed unreliable) (Drouin, 2003). The ratio indicates how much of the variability in the observed measure is due to a change in the participant and how much is the result of measurement error. Standard error of measurement (SEM) was determined in conjunction with the ICC. In addition, the relationship between tests was assessed using the Pearson Product Moment Correlation. Bland Altman plots were drawn to demonstrate the level of agreement and bias (Bland and Altman, 1986). Lastly, the coefficient of variation (CV) was determined.
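As a reading aid, the following is a minimal sketch of how an ICC (3,1) can be obtained from a two-way ANOVA decomposition of a participants-by-days table, using the standard consistency form (BMS - EMS) / (BMS + (k - 1) EMS). The analysis in the study was run in SPSS; this Python function, its name `icc_3_1`, and the example times are illustrative assumptions, not the authors' procedure or data.

```python
def icc_3_1(scores):
    """ICC(3,1), consistency form, from a two-way ANOVA decomposition.

    scores: one row per participant, one column per test day.
    """
    n = len(scores)          # participants
    k = len(scores[0])       # test days
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_subjects = k * sum((m - grand) ** 2 for m in row_means)
    ss_days = n * sum((m - grand) ** 2 for m in col_means)
    ss_error = ss_total - ss_subjects - ss_days

    bms = ss_subjects / (n - 1)              # between-subjects mean square
    ems = ss_error / ((n - 1) * (k - 1))     # residual (error) mean square
    return (bms - ems) / (bms + (k - 1) * ems)


# Hypothetical best times (s) on two test days for five participants.
example_times = [
    [8.10, 8.25],
    [8.60, 8.40],
    [9.05, 9.20],
    [7.90, 8.00],
    [8.45, 8.30],
]
print(round(icc_3_1(example_times), 2))
```

Because the consistency form ignores a uniform shift between days, a data set in which every participant is exactly 0.1 s slower on the second day would still give an ICC of 1.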

Results

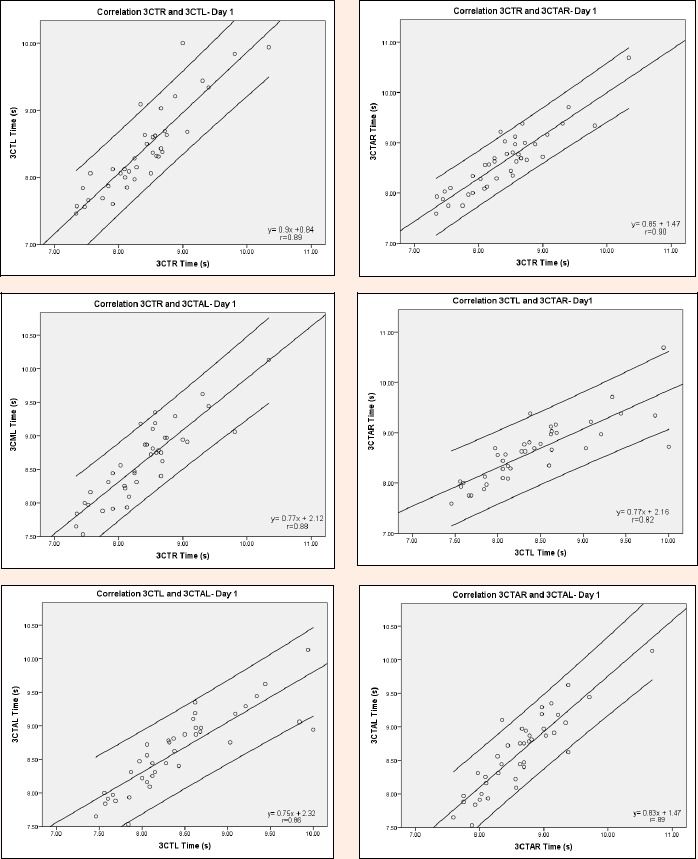

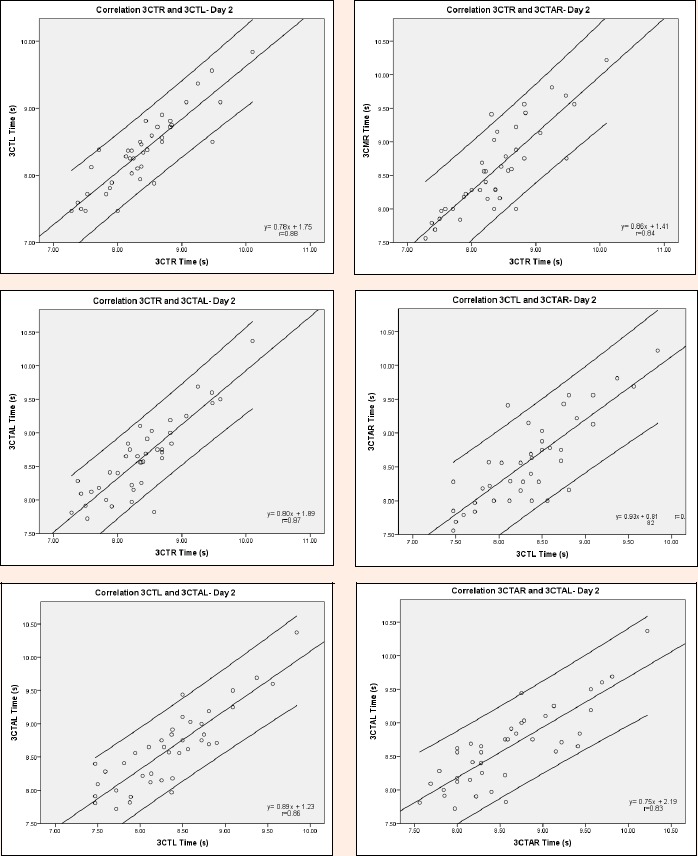

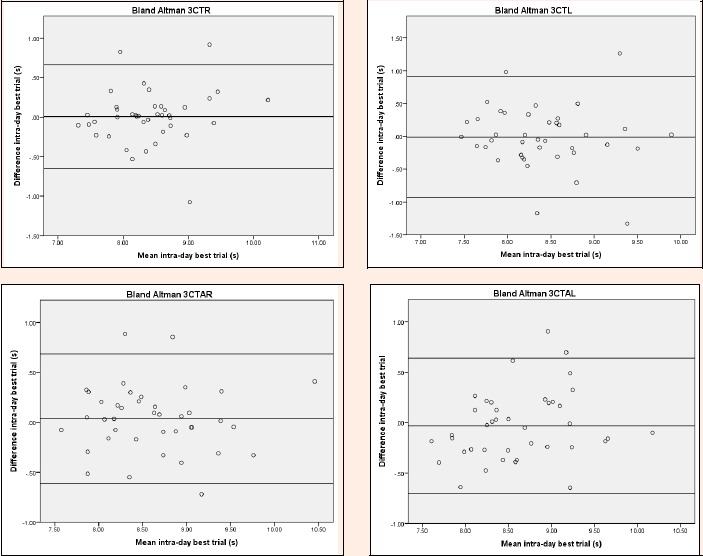

Table 1 shows the performance characteristics (means ± SD) of the fastest trials used for each of the four tests, as well as the ICC, SEM, mean time differences, and 95% confidence intervals (CIs). Small SEMs (0.09-0.10) indicate that the measurement error was minimal. All but one (3CTAR=0.85) of the ICCs for the four tests were lower than the 0.85 predicted at the outset of the study; however, according to Portney and Watkins (1992), >0.75 should still be considered ‘good’. The 3CTL fell just below at 0.73. Correlations between the tests are shown in Figure 3 (Day 1) and Figure 4 (Day 2), while correlations between tests by days were moderate (r = 0.72-0.85). Bland Altman plots between days for each of the tests are in Figure 5. Coefficients of variation for the best trial ranged from 6.4-7.6% across the eight cumulative trials.

Table 1.

Performance characteristics and reliability coefficients.

| Tests | Best Trial 1 Means ± SD | Best Trial 2 Means ± SD | Mean Difference (s) ± SD | ICC (95% CI) | SEM Trial 1 | SEM Trial 2 |
|---|---|---|---|---|---|---|
| 3CTR | 8.39 ± .65 | 8.39 ± .64 | -.00 ± .34 | .79 (.64 - .88) | .103 | .101 |
| 3CTL | 8.42 ± .66 | 8.36 ± .57 | -.01 ± .47 | .73 (.55 - .85) | .104 | .090 |
| 3CTAR | 8.63 ± .61 | 8.60 ± .65 | .04 ± .33 | .85 (.74 - .92) | .097 | .103 |
| 3CTAL | 8.62 ± .57 | 8.63 ± .59 | -.03 ± .34 | .79 (.64 - .88) | .091 | .093 |
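The text does not state which formula produced the SEM columns. A common definition of the standard error of measurement is SD × √(1 − ICC); the sketch below applies that definition, as an assumption, to the day-one values in Table 1 and also prints SD / √n (the standard error of the mean, with n = 40) so that both can be compared with the tabulated figures. The function names are hypothetical.

```python
import math


def sem_measurement(sd, icc):
    """Standard error of measurement under the common SD * sqrt(1 - ICC) form."""
    return sd * math.sqrt(1.0 - icc)


def se_mean(sd, n):
    """Standard error of the mean: SD / sqrt(n)."""
    return sd / math.sqrt(n)


# (test, day-one SD, ICC, SEM as tabulated for trial 1), copied from Table 1.
TABLE_1 = [
    ("3CTR", 0.65, 0.79, 0.103),
    ("3CTL", 0.66, 0.73, 0.104),
    ("3CTAR", 0.61, 0.85, 0.097),
    ("3CTAL", 0.57, 0.79, 0.091),
]
N_PARTICIPANTS = 40  # final sample size reported in the Methods

for name, sd, icc, tabulated in TABLE_1:
    print(
        f"{name}: SD*sqrt(1-ICC) = {sem_measurement(sd, icc):.3f}, "
        f"SD/sqrt(n) = {se_mean(sd, N_PARTICIPANTS):.3f}, "
        f"tabulated = {tabulated:.3f}"
    )
```

Which of the two quantities a reader needs depends on the question: the first describes the expected scatter of an individual's repeated scores, the second the precision of the group mean.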

Figure 3.

Day one Pearson Product Moment correlations between best trial of each version.

Figure 4.

Day two Pearson’s correlations between best trial of each version.

Figure 5.

Bland Altman plots by trial condition.
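For readers who want to reproduce the quantities a Bland Altman plot displays, the sketch below computes the bias (mean between-day difference) and the conventional 95% limits of agreement (bias ± 1.96 SD of the differences). The function names and the example times are illustrative assumptions; only the mean difference and SD passed to `limits_from_summary` in the last lines come from Table 1.

```python
import statistics


def bland_altman(day1, day2):
    """Return (bias, lower limit, upper limit) for paired day-1/day-2 times."""
    diffs = [b - a for a, b in zip(day1, day2)]
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample SD of the paired differences
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


def limits_from_summary(mean_diff, sd_diff):
    """Limits of agreement when only the summary statistics are available."""
    return mean_diff - 1.96 * sd_diff, mean_diff + 1.96 * sd_diff


# Hypothetical paired best times (s) for five participants.
day1 = [8.10, 8.60, 9.05, 7.90, 8.45]
day2 = [8.25, 8.40, 9.20, 8.00, 8.30]
print(bland_altman(day1, day2))

# 3CTR summary values from Table 1: mean difference -0.00 s, SD 0.34 s.
low, high = limits_from_summary(0.0, 0.34)
print(round(low, 2), round(high, 2))
```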

Discussion

The findings of the present study indicate each version of the 3CT was reliable. Therefore, 3CTR and 3CTL can be used to evaluate change of direction speed (CODS) in untrained college-aged men. Similarly, 3CTAR and 3CTAL can be used to evaluate agility in the same population.

Stewart et al. (2014) were the first to determine the reliability of the 3CTR, and found 3CTR times of 8.19 ± 0.46 s, an ICC of 0.80 (0.55-0.91), an SEM of 0.18, and a CV of 2.3%. Their subjects were slightly faster, with a nearly identical ICC (0.80 versus 0.79), a higher SEM, and a lower CV. It should be noted that their tests used electronic timing gates. The results of the present study confirm those found by Stewart et al. (2014) in a larger (40 versus 24 participants) population of non-athletic men.

In addition to replicating the findings of Stewart et al. (2014), the study goal was to determine the reliability of three additional versions of the test and to correlate the test versions to determine whether one or more tests is necessary to assess agility. Each version (3CTL, 3CTAR, and 3CTAL) of the 3CTR test was reliable, with ICC values of 0.73 or higher. As with the 3CTR, the values were slightly lower than the predicted value of 0.85, yet were similar to those reported by Stewart et al. (2014). Pearson correlation coefficients ranged between r = 0.72 and 0.92 across both test days, indicating that the tests are measuring very similar qualities of movement and therefore only one test is necessary; in practical terms, however, athletes should be trained to change direction using both legs. As for the versions that included a reaction component and are therefore considered ‘agility’ tests (Young et al., 2002), the correlations between 3CTAR and 3CTAL were r = 0.89 (day 1) and r = 0.83 (day 2), which result in shared variances of 79.7% and 68.5%. It can be concluded that the tests are measuring the same variable, but testing and training should not rely on making a change of direction using only the same foot.
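Shared variance is the squared correlation coefficient expressed as a percentage. The short sketch below shows the arithmetic; the function name is hypothetical. Applied to the two-decimal coefficients quoted above it gives values slightly different from the reported percentages, which presumably were computed from unrounded coefficients.

```python
def shared_variance_pct(r):
    """Percentage of variance two tests share, given their Pearson r."""
    return 100.0 * r * r


for label, r in (("day 1", 0.89), ("day 2", 0.83)):
    print(f"3CTAR vs 3CTAL, {label}: r = {r:.2f}, "
          f"shared variance = {shared_variance_pct(r):.1f}%")
```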

The current study was the first to use a manual cueing system to initiate a reaction. The cue was simple to apply and indicated in which direction to proceed, but further research is necessary to determine the point at which the cue is initiated to better simulate the game action being represented. Also to be considered is the timing of the initiation of the cue by the tester. Every attempt was made to provide the cue at the same time point to all participants; however, it is possible that some error was introduced. A main aim was to determine the reliability of the tests so future studies can focus on validation and e.g. comparisons between manual and electronic timing. Depending on the speed of the participant, using a cue at a standardized time point could affect the time each participant has to make the next change of direction (COD). For example, in 3CTAR or 3CTAL the cue was given at the same distance from the next change of direction (Figure 2), but if a light gate cue was given at a prescribed interval following the previous COD the faster one runs the closer the participant gets to the next COD. Vice versa, a slower participant will receive a light cue earlier having more time to make the change of direction. This may result in a participant slowing during the reactive step, thus slowing their overall time. This would not be a desired training adaptation. In a team game, moving as fast as possible in a controlled manner is the desired outcome. Young and Farrow (2013) described the need for progressing the athlete from CODS, to “generic”, then to “sport-specific” cues during training. The same can be assumed for testing. For example, Paul et al. (2016) found that a human or video stimulus was better than a light source. This finding, in combination with the principle of specificity, means the practice of using generic tests across different sports and skill levels is not appropriate. 
In the present sample of non-athletic men, tests going to the same side (i.e. 3CTR and 3CTAR) showed strong positive agreement: r = 0.90 (day 1) and r = 0.88 (day 2). Similar values were seen between 3CTL and 3CTAL (r = 0.86 on both days). The high correlations between reactive and non-reactive tests in the present study support the need for a more “sport-specific” cueing system when assessing athletes. For example, Farrow et al. (2005) reported r = 0.70 between reactive and standard tests when using a video simulation of a player in a game situation.

To identify any systematic bias, Bland Altman plots (Figure 5) were included; bias was not evident, as the points were evenly dispersed above and below the horizontal mean line. Additionally, to provide evidence in relation to the mean, a coefficient of variation (CV) score was calculated for each test on both days. A small percentage of the times were equal to the mean. The inclusion of these additional statistical procedures provides greater evidence for the conclusions drawn from the basic ICC and Pearson correlations. Bland Altman plots and CV provide additional statistical information that should be included in future test re-test investigations. This study also addresses inaccuracies in the literature regarding the 3-Cone Test. The first example comes from Hoffman et al. (2007). The 3CTR in Figure 1 of Hoffman et al. (2007, p. 129) shows the runner proceeding to the left (clockwise) when circling cone C when, in fact, the runner should be routed around the cone to the right (counterclockwise). This is likely an error in the figure, but as the first published model it could be confusing to an uninformed coach.

In addition to the incorrect path depicted by Hoffman et al. (2007), comparisons between the current data and those of Hoffman et al. (2007) should be made with caution, owing to differences in the test re-test protocols and the absence of any mention of the statistical analysis used to calculate reliability. Stewart et al. (2014) correctly described the 3-Cone Test, but unfortunately labeled it the “L-Run (aka 3-cone drill)”. The L-Run, published by Webb and Lander (1983), did not include a return to the start line after the first change of direction (step 2 on Figure 1). The L-run goes directly around ‘B’ to the right (no step 2 or 3). The current study provides an accurate account of the original 3-Cone Test. In addition, two previously undescribed versions (3CTL and 3CTA) are described.

To our knowledge, the present study is the first to make direct comparisons between identical tests, with and without a reaction stimulus using a manual cue. Farrow et al. (2005) compared a video reactive stimulus and a planned movement test. They found ICC’s of 0.80, which falls below the range (0.84-0.90) found in the present study across the four test versions of the 3-Cone Test. Morland et al. (2013) examined the use of a pre-planned route and either a light or a human stimulus, but did not report any correlations between tests. A consensus exists that ‘reaction’ remains a critical element of agility. Even with the high correlations (0.86-0.90) and shared variances (r2) of 73.9 and 81.0% between the non-reactive (3CTR and 3CTL) and reactive pairs (3CTAR and 3CTAL), the data confirm differences between agility and CODS tests. While the differences were not as great as hypothesized, both the cueing method and timing may need to be considered. A possible reason for similar results between the reactive and non-reactive version of 3CTR is that the cue was administered too early, thus allowing the participant to react and change direction at nearly the same velocity they were running at during the non-reactive test. In addition, more than a single reactive component may be necessary to fully assess agility (Matlak, et al., 2016). It also may be necessary to calculate ‘segment times’ (including reaction time), as reported by Spiteri (2015), instead of total test time in order to establish exactly where time was lost between trials. For example, a runner may get a bad start or lose balance during a change of direction - unrelated to the reaction component of the test. In that case, total time would not be representative of the influence reaction has on executing the test as fast as possible. Lastly, the use of a more sophisticated cueing system that simulates a specific game situation may be required. 
For example, the question of how much time does a person have to react to the situation in real-time at the velocity they will be traveling must be considered. This would include the distance to the cue. The manual cue given in the current study was at a distance equal to 2.29 metres from the required change in direction. This distance may have contributed to the lack of difference between the reactive (3CTAR/3CTAL) and non-reactive tests (3CTR/3CTL). A computerized cueing system, presently found in the new generation of reactive tests, could randomly provide a visual cue at various distances from the point of change of direction.
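The dependence of the available reaction time on running speed can be made concrete with a small calculation. The sketch below assumes the runner covers the 2.29 m between the cue and the change of direction at a constant speed, which is a simplification; the approach speeds are hypothetical values chosen for illustration and were not measured in the study.

```python
CUE_DISTANCE_M = 2.29  # distance from the cue point to the change of direction


def reaction_window_s(approach_speed_m_s, cue_distance_m=CUE_DISTANCE_M):
    """Time available between the cue and the change of direction,
    assuming constant speed over the cue distance."""
    if approach_speed_m_s <= 0:
        raise ValueError("approach speed must be positive")
    return cue_distance_m / approach_speed_m_s


def cue_distance_for_window(approach_speed_m_s, window_s):
    """Cue distance that would give every runner the same time window."""
    return approach_speed_m_s * window_s


# Hypothetical approach speeds (m/s) for a slower and a faster runner.
for speed in (3.0, 4.0, 5.0):
    print(f"{speed:.1f} m/s -> {reaction_window_s(speed):.2f} s to react")
```

A fixed cue distance therefore gives faster runners less time to respond, whereas a cueing system that adjusts the distance to the runner's speed, as `cue_distance_for_window` illustrates, would hold the time window constant instead.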

A limitation associated with the present study is the use of a convenience sample, which is a threat to external validity and, ultimately, generalizability. Before using any version of the 3CT, practitioners must first determine the reliability within their population. A second limitation was the consistency of maximum effort. The participants were asked to refrain from any strenuous activity the day prior to testing. However, it became obvious, once the data were analyzed, that a few individuals provided less than maximal effort on certain trials. In five of the 40 subjects (12.5%), test times decreased by more than one second from day 1 to day 2 for at least one test version. The test results from these participants had a significant influence on the ICC values. These types of discrepancies may necessitate re-testing or potential removal from the data set, due to an apparent lack of consistent effort.

In the absence of a “gold standard” test for agility and without access to a comparison sample, test validation in the current study is limited to face validity. The determination of validity was not the primary focus of the present study; however, based on a required definition of agility that incorporates “a rapid whole-body movement with changing velocity or direction in response to a stimulus” (Sheppard and Young, 2006, p. 922), the 3CTR and 3CTL are deemed reliable CODS tests, requiring future validation. The reaction variations of the test, 3CTAR and 3CTAL, appear to be reliable options for measuring agility. Future investigations should attempt to validate these tests with athletes of different sports and levels of participation, using either criterion or construct validity.

The current list of agility test options is quite limited and focused on a small number of sports and performance levels (Paul et al., 2015). The majority of these reactive agility tests employ either expensive video (Farrow et al., 2005; Gabbett et al., 2007; Serpell et al., 2010; Spiteri et al., 2014; Young et al., 2011) or photocell timing gate systems (Benvenuti et al., 2010; Green et al., 2011; Henry et al., 2011; Oliver and Meyers, 2009; Lockie et al., 2014; Sekulic et al., 2014). Crucially, no previous investigation has compared an existing test to a modified agility test using a manual cue to initiate a reaction.

Future research should identify a set of valid, sport/position-specific, developmental, and sex-specific tests of agility. The use of technology allows for multiple variations in test patterns that challenge both the temporal and spatial components described by Chelladurai (1976) and Sheppard and Young (2006). Video from actual game play can be used for testing and training purposes, as Serpell (2010) demonstrated using practice video clips. It is likely that, in the not too distant future, athletes could attend virtual training centres and interact with 3-dimensional images as part of their testing and training programs, much like a “virtual reality” video game. Conversely, alternative “field” accessible tests of agility should be developed for practitioners at the youth sport and physical education level. These tests should be valid, use appropriate technology and include the same characteristics described above.

Conclusion

Agility is dependent upon multiple physical and biomechanical attributes and, as researchers begin to consolidate information from disparate sources, the necessary factors for developing agility should emerge. The current investigation provides insights into test design, which could be sport or position-specific. The development of such tests will also create an opportunity for examining different training methodologies in order to improve an athlete’s sport-specific agility.

Acknowledgements

The authors declared no conflict of interests regarding the publication of this manuscript.

Biographies

Jason G. LANGLEY

Employment

Assistant Professor and Human Performance Lab Coordinator at the University of Southern Indiana.

Degree

PhD

Research interests

Human performance testing and training.

E-mail: jglangley@usi.edu

Robert CHETLIN

Employment

Associate Professor and Clinical Coordinator, Mercyhurst University Department of Sports Medicine

Degree

PhD

Research interests

Strength and conditioning.

E-mail: rchetlin@mercyhurst.edu

References

- Barnett L.M., Van Beurden E., Morgan P.J., Brooks L.O., Beard J.R. (2008) Does childhood motor skill proficiency predict adolescent fitness? Medicine Science and Sports & Exercise 40(12), 2137-2144. [DOI] [PubMed] [Google Scholar]

- Beekhuizen K.S., Davis M.D., Kolber M.J., Cheng M.-S.S. (2009) Test-retest reliability and minimal detectable change of the hexagon agility test. Journal of Strength and Conditioning Research 23(7), 2167-2171.

- Beise D., Peaseley V. (1937) The relation of reaction time, speed, and agility of big muscle groups on specific sport skills. The Research Quarterly 8(1), 133-142.

- Benvenuti C., Minganti C., Condello G., Capranica L., Tessitore A. (2010) Agility assessment in female futsal and soccer players. Medicina (Kaunas) 46(6), 415-420.

- Bland J.M., Altman D.G. (1986) Statistical methods for assessing agreement between two methods of clinical measurement. Lancet 1(8476), 307-310.

- Chelladurai P. (1976) Manifestations of agility. Canadian Association for Health, Physical Education, and Recreation, 36-40.

- Chelladurai P., Yuhasz M.S. (1977) Agility performance and consistency. Canadian Journal of Applied Sport Science 2, 37-41.

- Chaouachi A., Brughelli M., Chamari K., Levin G.T., Ben Abdelkrim N., Laurencelle L., Castagna C. (2009) Lower limb maximal dynamic strength and agility determinants in elite basketball players. Journal of Strength and Conditioning Research 23(5), 1570-1577.

- Clarke H.H. (1950) Application of measurement to health and physical education. 2nd edition. New York: Prentice-Hall Inc.

- Craig B.W. (2004) What is the scientific basis of speed and agility? Strength and Conditioning Journal 26(3), 13-14.

- Cureton T.K. (1947) Physical fitness appraisal and guidance. St. Louis: C.V. Mosby Company.

- Draper J.A., Lancaster M.G. (1985) The 505 test: a test of agility in the horizontal plane. Australian Journal of Science & Medicine in Sport 17(1), 15-18.

- Drouin J. (2003) How should we determine a measurement is appropriate for clinical practice? Athletic Therapy Today 8(4), 56-58.

- Farrow D., Young W., Bruce L. (2005) The development of a test of reactive agility for netball: a new methodology. Journal of Science and Medicine in Sport 8(1), 40-48.

- Gabbett T.J., Kelly J.N., Sheppard J.M. (2008) Speed, change of direction, and reactive agility of rugby league players. Journal of Strength and Conditioning Research 22(1), 174-181.

- Gabbett T., Rubinoff M., Thorburn L. (2007) Testing and training anticipation skills in softball fielders. International Journal of Sports Science and Coaching 2(1), 15-24.

- Gates D.P., Sheffield R.P. (1940) Tests of change of direction as measurement of different kinds of motor ability in boys in 7th, 8th, and 9th grades. Research Quarterly 11, 136-147.

- Graham J.F. (2000) Agility training. In: Training for Speed, Agility, and Quickness. Eds: Brown L.E., Ferrigno V.A., Santana J.C. Champaign, IL: Human Kinetics; 79-143.

- Green B.S., Blake C., Caulfield B.M. (2011) A valid field test protocol of linear speed and agility in rugby union. Journal of Strength and Conditioning Research 25(5), 1256-1262.

- Henry G., Dawson B., Lay B., Young W. (2011) Validity of a reactive agility test for Australian football. International Journal of Sports Physiology and Performance 6(4), 534-545.

- Hoffman J.R., Ratamess N.A., Klatt M., Faigenbaum A.D., Kang J. (2007) Do bilateral deficits influence direction-specific movement patterns? Research in Sports Medicine 15, 125-132.

- Hoskins R.N. (1934) The relationship of measurements of general motor capacity to the learning of specific psychomotor skills. Research Quarterly, 63-72.

- Jeffreys I. (2006) Motor learning: applications for agility, Part 1. Strength and Conditioning Journal 28(5), 72-72.

- Johnson L.W. (1934) Objective basketball tests for high school boys. Iowa City: State University of Iowa.

- Larson L.A. (1941) A factor analysis of motor ability variables and tests with tests for college men. Research Quarterly, 499-517.

- Lehsten N. (1948) A measure of basketball skills in high school boys. Physical Educator, 103-109.

- Lockie R.G., Jefferies M.D., McGann T.S., Callaghan S.J. (2014) Planned and reactive agility performance in semi-professional and amateur basketball players. International Journal of Sports Physiology and Performance 9(5), 766-771.

- Matlak J., Tihanyi J., Racz L. (2016) Relationship between reactive agility and change of direction speed in amateur soccer players. Journal of Strength and Conditioning Research 30(6), 1547-1552.

- McCloy C.H., Young N.D. (1954) Tests and measurements in health and physical education. 3rd edition. New York: Appleton-Century-Crofts Inc.

- Mohr D.R., Haverstick M.J. (1956) Relationship between height, jumping ability, and agility to volleyball skill. The Research Quarterly 27(1), 74-78.

- O'Conner M.E., Cureton T.K. (1945) Motor fitness tests for high school girls. Research Quarterly 16, 302-314.

- Oliver J.L., Meyers R.W. (2009) Reliability and generality of measures of acceleration, planned agility, and reactive agility. International Journal of Sports Physiology and Performance 4(3), 345-354.

- Paul D., Gabbett T., Nassis G. (2016) Agility in team sports: testing, training, and factors affecting performance. Sports Medicine 46, 421-442.

- Pauole K., Madole K., Garhammer J., Lacourse M., Rozenek R. (2000) Reliability and validity of the T-test as a measure of agility, leg power, and leg strength in college-aged men and women. Journal of Strength and Conditioning Research 14(4), 443-450.

- Portney L.G., Watkins M.P. (1992) Foundations of clinical practice. East Norwalk, CT: Appleton & Lange.

- Rarick L. (1937) An analysis of the speed factor in simple athletic activities. Research Quarterly 8(4), 89-105.

- Sekulic D., Krolo A., Spasic M., Uljevic O., Peric M. (2014) The development of a new stop’n’go reactive agility test. Journal of Strength and Conditioning Research 28(11), 3306-3312.

- Serpell B.G., Ford M., Young W.B. (2010) The development of a new test of agility for rugby league. Journal of Strength and Conditioning Research 24(12), 3270-3277.

- Sheppard J.M., Young W.B. (2006) Agility literature review: classifications, training and testing. Journal of Sports Sciences 24(9), 919-932.

- Sheppard J.M., Young W.B., Doyle T.L., Sheppard T.A., Newton R.U. (2006) An evaluation of a new test of reactive agility and its relationship to sprint speed and change of direction speed. Journal of Science and Medicine in Sport 9, 342-349.

- Spiteri T., Newton R., Nimphius S. (2015) Neuromuscular strategies contributing to faster multidirectional agility performance. Journal of Electromyography and Kinesiology 25(4), 629-636.

- Spiteri T., Nimphius S., Hart N.H., Specos C., Sheppard J.M., Newton R.U. (2014) Contribution of strength characteristics to change of direction and agility performance in female basketball players. Journal of Strength and Conditioning Research 28(9), 2415-2423.

- Stewart P.F., Turner A.N., Miller S.C. (2014) Reliability, factorial validity, and interrelationships of five commonly used change of direction speed tests. Scandinavian Journal of Medicine & Science in Sports 24, 500-506.

- Webb P., Lander J. (1983) An economical fitness testing battery for high school and college rugby teams. Sports Coach 7, 44-46.

- Wheeler K.W., Sayers M.G. (2010) Modification of agility running technique in reaction to a defender in rugby. Journal of Sports Science and Medicine 9, 445-451.

- Young W.B., Farrow D. (2013) The importance of sport-specific stimulus for training agility. Strength and Conditioning Journal 27(2), 39-43.

- Young W.B., Farrow D., Pyne D., McGregor W., Handke T. (2011) Validity and reliability of agility tests in junior Australian football players. Journal of Strength and Conditioning Research 25(12), 3399-3403.

- Young W.B., James R., Montgomery I. (2002) Is muscle power related to running speed with changes of direction? Journal of Sports Medicine and Physical Fitness 43, 282-288.

- Young W.B., McDowell M.H., Scarlett B.J. (2001) Specificity of sprint and agility training methods. Journal of Strength and Conditioning Research 15(3), 315-319.