Abstract

Background

Studies suggest that most hospitals now have relatively high adherence with recommended process measures for acute myocardial infarction (AMI). Little is known about hospitals with consistently poor adherence with AMI process measures, or whether these hospitals also have increased patient mortality.

Methods and Results

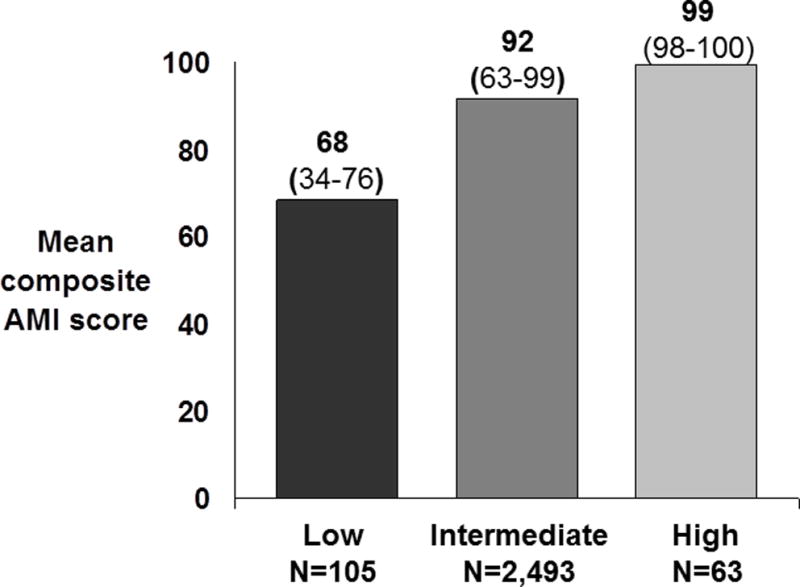

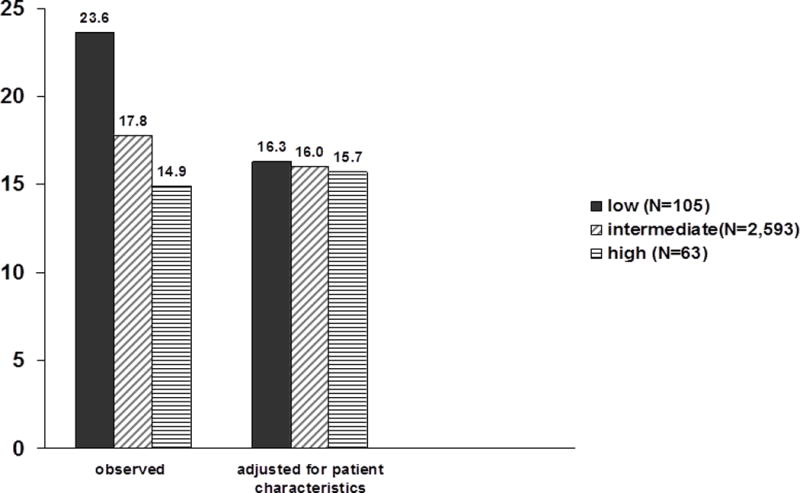

We conducted a retrospective study of 2,761 US hospitals reporting AMI process measures to the Centers for Medicare and Medicaid Services (CMS) Hospital Compare database during 2004–2006 that could be linked to 2005 Medicare Part A data. The main outcome measures were hospitals’ combined compliance with five AMI measures (aspirin and beta-blocker on admission and at discharge, and ACE inhibitor/ARB at discharge for patients with left ventricular systolic dysfunction) and risk-adjusted 30-day mortality for 2005. We stratified hospitals into those with low adherence (ranked in the lowest decile for AMI adherence in each of three consecutive years, 2004–2006; N=105), high adherence (ranked in the top decile in each of the three years; N=63), and intermediate adherence (all others; N=2,593). Mean composite AMI adherence varied significantly across low-, intermediate-, and high-performing hospitals (68% vs. 92% vs. 99%, P<.001). Low-performing hospitals were more likely than intermediate- and high-performing hospitals to be safety-net providers (19.2% vs. 11.0% vs. 6.4%, P=.005). Low-performing hospitals had higher unadjusted 30-day mortality rates (23.6% vs. 17.8% vs. 14.9%, P<.001). These differences persisted, though attenuated, after adjustment for patient characteristics (16.3% vs. 16.0% vs. 15.7%, P=.02).

Conclusion

Consistently low-performing hospitals differ substantially from other US hospitals. Targeting quality improvement efforts towards these hospitals may offer an attractive opportunity for improving AMI outcomes.

Keywords: myocardial infarction, hospital, quality of care

Several initiatives have been launched in recent years to collect hospital performance data and make it widely available to the public. The practice that began over 15 years ago in New York State1 has become commonplace, as the Centers for Medicare and Medicaid Services (CMS) now publicly reports performance of hospitals nationally on their website, Hospital Compare (http://www.hospitalcompare.hhs.gov). One rationale for public reporting is that it will motivate low-performing hospitals to improve and encourage patients to select higher quality hospitals.2 Yet questions linger about how best to assess hospital quality and motivate improvement.

Uncertainty remains about whether hospital performance is best measured using adherence to evidence-based process measures or patient outcomes (eg, risk-adjusted mortality).3–11 This uncertainty stems, in part, from conflicting data as to whether hospitals with better adherence with process measures have correspondingly better patient outcomes.9–11 In addition, it is unclear whether pay-for-performance initiatives based on adherence to process measures unfairly target low-performing hospitals with limited resources for quality improvement programs (eg, safety-net hospitals).12,13

While a number of prior studies have characterized hospitals’ adherence with CMS process measures,4,7–11,13–17 and how adherence may be changing over time,15–18 to the best of our knowledge there are no studies specifically focusing on hospitals with consistently poor adherence. In particular, it is unclear how many hospitals have consistently low adherence with CMS process measures, whether these hospitals face common challenges that were not considered in the development of these performance measures, and whether patients treated in hospitals with poor adherence have higher mortality than patients treated in hospitals with better adherence. Furthermore, identifying low-performing hospitals may offer a particularly good opportunity to target quality improvement investments at a small group of hospitals with the greatest potential for benefit.

Thus, our primary objective is to examine the organizational characteristics of hospitals with consistently low adherence with CMS process measures for AMI, as compared with US hospitals with intermediate and high performance. Our secondary objective is to examine the characteristics and risk-adjusted mortality of patients treated in low-performing hospitals, as compared with patients treated in intermediate- and high-performing hospitals.

Methods

The current study relied upon four primary hospital- and patient-level data sources: 1) the CMS Hospital Compare database for hospital performance on AMI process measures; 2) the American Hospital Association (AHA) Annual Survey for hospital characteristics; 3) Medicare Part A (MedPAR) data for AMI patient characteristics and outcomes; and 4) US Census data for patient socioeconomic status (SES) measures.

Hospital-level Data

We first identified all hospitals reporting performance on AMI process measures during 2004–2006 through the Hospital Compare website (www.hospitalcompare.hhs.gov) (N=3802). The Hospital Compare website provides performance information for most acute care non-federal hospitals in the US, including each hospital’s adherence with five core AMI process measures: 1) use of aspirin within 24 hours of arrival; 2) use of aspirin at discharge; 3) use of beta-blockers within 24 hours of arrival; 4) use of beta-blockers at discharge; and 5) use of an angiotensin-converting enzyme (ACE) inhibitor or angiotensin receptor blocker (ARB) for left ventricular systolic dysfunction. Although participation in Hospital Compare is voluntary, participation is nearly universal because CMS has created financial incentives for reporting most measures. There are three additional AMI process measures (time to reperfusion with thrombolytics, time to reperfusion with percutaneous coronary intervention [PCI], and smoking cessation counseling) that were not linked to financial incentives for reporting during the study period and for which fewer hospitals reported data; thus, our analyses focused on adherence with the five core measures. For each hospital and each measure, the Hospital Compare database provides the number of patients eligible for each therapy and the number of eligible patients receiving that specific therapy; hospital performance is assessed as the proportion of eligible patients receiving each recommended therapy. We downloaded these publicly available data from the Hospital Compare website.

We excluded all hospitals at which the total number of AMI patients eligible for each of the five therapies was less than 25 (N=965) in accordance with similar methodology employed by CMS and other investigators,7,14 because such hospitals are considered to have too few cases to allow for a statistically reliable assessment of performance. The remaining 2837 hospitals were linked to the 2005 AHA Annual Survey using each hospital’s unique provider number. The AHA survey provided data on hospitals’ structural and operational characteristics including teaching status, for-profit status, and bed size. We excluded 21 hospitals (<1% of the final sample) that we were unable to link to the AHA data.
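The per-measure adherence proportion and the minimum-case exclusion described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: the measure labels and the `MeasureCounts` record are hypothetical, the counts are made up, and because the text can be read as applying the 25-patient threshold either to each measure or to the five measures combined, the sketch assumes the combined count (the reading used in the limitations paragraph).

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical labels for the five core AMI measures; not Hospital Compare field names.
MEASURES = ("aspirin_arrival", "aspirin_discharge",
            "beta_blocker_arrival", "beta_blocker_discharge", "acei_arb_lvsd")


@dataclass
class MeasureCounts:
    eligible: int  # patients eligible for the therapy
    received: int  # eligible patients who received the therapy


def measure_adherence(counts: MeasureCounts) -> Optional[float]:
    """Proportion of eligible patients who received the therapy; None if nobody was eligible."""
    if counts.eligible == 0:
        return None
    return counts.received / counts.eligible


def meets_case_threshold(hospital: Dict[str, MeasureCounts], minimum: int = 25) -> bool:
    """Assumption: the 25-patient rule applies to eligible patients summed over the five measures."""
    total_eligible = sum(hospital[m].eligible for m in MEASURES)
    return total_eligible >= minimum


# Made-up counts for one small hospital: 4 eligible patients per measure, 3 treated.
small_hospital = {m: MeasureCounts(eligible=4, received=3) for m in MEASURES}
print(measure_adherence(small_hospital["aspirin_arrival"]))  # 0.75
print(meets_case_threshold(small_hospital))  # False (20 eligible in total)
```

Under the alternative per-measure reading, `meets_case_threshold` would instead check each `hospital[m].eligible` against the minimum.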

Our analyses used a number of additional hospital characteristics that we derived from the data sources described above. We identified each hospital’s location as urban or rural based upon each hospital’s zip code using rural-urban commuting area codes (http://www.ers.usda.gov/Data/RuralUrbanCommutingAreaCodes). We categorized hospitals as safety-net providers if the hospital’s Medicaid caseload for 2005 exceeded the mean for all U.S. hospitals by two standard deviations of the overall distribution for the study hospitals (mean 16.4%, SD 9.6%), thus adapting a method that has been previously used in research.12,19 Finally, we calculated nurse staffing ratios for each hospital as nurse full-time equivalents (FTEs) divided by adjusted patient days.20
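As a worked example of the safety-net rule, a caseload two standard deviations above the mean is 16.4% + 2 × 9.6% = 35.6%. The Python sketch below encodes that cutoff and the nurse staffing ratio; it is an illustration under stated assumptions, not the authors' code. Treating the cutoff as a strict inequality is an assumption, and the sketch applies no per-day rescaling to the staffing ratio because the text does not state what scaling produced the values reported in Table 1.

```python
# Values taken from the text: Medicaid caseload across study hospitals, mean 16.4%, SD 9.6%.
MEDICAID_MEAN_PCT = 16.4
MEDICAID_SD_PCT = 9.6


def safety_net_threshold(mean_pct: float = MEDICAID_MEAN_PCT,
                         sd_pct: float = MEDICAID_SD_PCT, k: float = 2.0) -> float:
    """Medicaid share (%) that a hospital must exceed: mean + k standard deviations."""
    return mean_pct + k * sd_pct


def is_safety_net(medicaid_pct: float) -> bool:
    # Assumption: a hospital exactly at the cutoff is not classed as safety-net.
    return medicaid_pct > safety_net_threshold()


def nurse_staffing_ratio(nurse_ftes: float, adjusted_patient_days: float) -> float:
    """Nurse FTEs divided by adjusted patient days (no rescaling applied here)."""
    return nurse_ftes / adjusted_patient_days


print(round(safety_net_threshold(), 1))          # 35.6
print(is_safety_net(40.0), is_safety_net(30.0))  # True False
```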

Patient-level Data

The study utilized two sources of patient-level data: 1) 2005 MedPAR data for characteristics and outcomes of AMI patients admitted to study hospitals; and 2) the US Census 2000 Summary File (publicly available at http://factfinder.census.gov) for zip code level SES measures for study patients.

We used the 2005 MedPAR data to identify all patients admitted to the study hospitals with AMI (International Classification of Diseases, Ninth Revision, Clinical Modification [ICD-9-CM] code 410.x) during 2005; hospitals identified in the Hospital Compare (Hospital Quality Alliance [HQA]) data that could not be linked to the MedPAR data (n=76) were excluded. The MedPAR files contain patient-level data on all hospitalizations of fee-for-service Medicare enrollees, including patient demographics, primary and secondary diagnoses, procedures, and discharge disposition. Patients in the MedPAR files are linked to the Medicare Denominator files to ascertain deaths occurring after hospital discharge. Deaths occurring after transfer were attributed to the initial admitting hospital rather than the receiving hospital.
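The cohort definition and the attribution rule above can be illustrated with a short Python sketch. It is hypothetical throughout: the record layout and hospital identifiers are made up, the sketch assumes diagnosis codes may be stored either with or without the decimal point, and it assumes the 30-day window is counted from the index admission date, which the text does not state explicitly. Because only the admitting hospital is recorded for each stay, a death after transfer is automatically charged to that hospital.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, Iterable, Optional, Tuple


def is_ami_code(dx: str) -> bool:
    """True for ICD-9-CM 410.x codes, whether stored as '410.71' or '41071' (assumed formats)."""
    return dx.replace(".", "").strip().startswith("410")


def died_within_30_days(admit: date, death: Optional[date]) -> bool:
    """Assumption: the 30-day window is counted from the index admission date."""
    return death is not None and 0 <= (death - admit).days <= 30


def mortality_by_admitting_hospital(
        stays: Iterable[Tuple[str, date, Optional[date]]]) -> Dict[str, float]:
    """Crude 30-day mortality per admitting hospital.

    Each stay is (admitting_hospital_id, admit_date, death_date or None). The receiving
    hospital of a transferred patient is deliberately not recorded, so deaths after
    transfer count against the admitting hospital.
    """
    deaths: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for hospital_id, admit, death in stays:
        totals[hospital_id] += 1
        deaths[hospital_id] += died_within_30_days(admit, death)
    return {h: deaths[h] / totals[h] for h in totals}


stays = [
    ("A", date(2005, 3, 1), date(2005, 3, 20)),  # died on day 19 (e.g., after transfer)
    ("A", date(2005, 3, 1), None),               # survived
    ("B", date(2005, 6, 1), date(2005, 8, 1)),   # died on day 61: outside the window
]
print(mortality_by_admitting_hospital(stays))  # {'A': 0.5, 'B': 0.0}
```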

We identified AMI location using ICD-9-CM diagnosis codes, and comorbid illnesses using the algorithm developed by Elixhauser et al.21 We also calculated each hospital’s Medicare AMI, PCI, and coronary artery bypass grafting (CABG) surgery volumes by summing the number of patients admitted to each hospital with AMI or for each procedure in the MedPAR data. We considered a hospital to have full revascularization capability if it performed at least two PCI and two CABG procedures on Medicare enrollees during 2005.
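A Python sketch of the AMI location grouping and the revascularization-capability rule follows. The four group names come from the risk-adjustment description in this paper, and the fourth-digit meanings are the standard ICD-9-CM 410 subdivisions, but the assignment of each digit to a group (for example, placing 410.2, inferolateral wall, in the inferior/posterior group) is an assumption, since the paper does not list the mapping. The function names are hypothetical.

```python
from typing import Optional

# Assumed assignment of the ICD-9-CM 410 fourth digit to the four location groups;
# the paper names the groups but not the digit-level mapping.
LOCATION_BY_FOURTH_DIGIT = {
    "0": "anterior_or_lateral",    # 410.0 anterolateral wall
    "1": "anterior_or_lateral",    # 410.1 other anterior wall
    "5": "anterior_or_lateral",    # 410.5 other lateral wall
    "2": "inferior_or_posterior",  # 410.2 inferolateral wall
    "3": "inferior_or_posterior",  # 410.3 inferoposterior wall
    "4": "inferior_or_posterior",  # 410.4 other inferior wall
    "6": "inferior_or_posterior",  # 410.6 true posterior wall
    "7": "subendocardial",         # 410.7 subendocardial infarction
    "8": "other",                  # 410.8 other specified sites
    "9": "other",                  # 410.9 unspecified site
}


def ami_location(dx: str) -> Optional[str]:
    """Location group for an ICD-9-CM 410.x code, or None if the code is not 410.x."""
    code = dx.replace(".", "").strip()
    if not code.startswith("410") or len(code) < 4:
        return None
    return LOCATION_BY_FOURTH_DIGIT.get(code[3])


def has_full_revascularization(pci_count: int, cabg_count: int) -> bool:
    """Definition from the text: at least two PCI and two CABG procedures in 2005."""
    return pci_count >= 2 and cabg_count >= 2


print(ami_location("410.71"), ami_location("41011"))  # subendocardial anterior_or_lateral
print(has_full_revascularization(15, 3), has_full_revascularization(40, 1))  # True False
```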

We linked each patient’s zip code of residence in the MedPAR files to 2000 US Census data to obtain socioeconomic measures (eg, median household income) of patients admitted to the study hospitals. Zip code centroids were also used to calculate distances between patients’ residences and admitting hospitals, and between admitting hospitals without revascularization and the closest hospitals providing full revascularization services. The final sample included 2761 hospitals admitting 186,700 AMI patients.
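The paper states that zip code centroids were used for distances but not which distance formula was applied. The Python sketch below assumes straight-line great-circle (haversine) distance on a spherical Earth, not road distance; the coordinates and site identifiers are hypothetical.

```python
import math
from typing import Dict, Tuple

LatLon = Tuple[float, float]
EARTH_RADIUS_MILES = 3958.8  # mean Earth radius (assumption: spherical Earth)


def haversine_miles(a: LatLon, b: LatLon) -> float:
    """Great-circle distance in miles between two (latitude, longitude) points in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_MILES * math.asin(math.sqrt(h))


def nearest_site(origin: LatLon, sites: Dict[str, LatLon]) -> Tuple[str, float]:
    """Closest site (e.g., a hospital with full revascularization) to a zip code centroid."""
    return min(((site_id, haversine_miles(origin, loc)) for site_id, loc in sites.items()),
               key=lambda pair: pair[1])


# Hypothetical centroids: one degree of latitude is roughly 69 miles.
print(round(haversine_miles((41.0, -91.5), (42.0, -91.5)), 1))  # 69.1
print(nearest_site((41.0, -91.5), {"H1": (41.5, -91.5), "H2": (43.0, -91.5)})[0])  # H1
```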

Identification of Low-, Intermediate-, and High-Performing Hospitals

We assessed hospital compliance with AMI process measures by calculating, for each hospital and each year, a composite AMI score reflecting overall compliance with the five AMI measures described previously. Using the methodology adopted by Williams et al,17 the score was computed as the total number of recommended treatments received by patients in a given hospital, summed across all five measures, divided by the total number of patients in that hospital who were eligible for each treatment, summed across the same five measures. Hospitals were then stratified into deciles based on their composite AMI score for each year (ie, 2004, 2005, and 2006). We defined low-performing hospitals as all hospitals ranking in the bottom decile on the AMI composite measure in each of the three years (N=105) and high-performing hospitals as hospitals ranking in the top decile in each of the three years (N=63). All other hospitals (N=2,593) were defined as intermediate-performing hospitals.
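The pooled composite score and the three-year decile rule can be sketched in Python as follows. This is an illustration under assumptions, not the authors' code: hospital identifiers, counts, and scores are made up, ties within a year are broken by sort order, and only hospitals scored in every year are classified.

```python
from typing import Dict, List, Tuple

Counts = List[Tuple[int, int]]  # (received, eligible) for each of the five measures


def composite_score(counts: Counts) -> float:
    """Pooled ratio: therapies received / eligible opportunities, summed over all measures."""
    received = sum(r for r, _ in counts)
    eligible = sum(e for _, e in counts)
    return received / eligible


def decile_ranks(scores: Dict[str, float]) -> Dict[str, int]:
    """Within-year decile (0 = lowest 10% of hospitals, 9 = highest); ties follow sort order."""
    ordered = sorted(scores, key=scores.get)
    n = len(ordered)
    return {hospital: (10 * i) // n for i, hospital in enumerate(ordered)}


def classify(yearly_scores: Dict[int, Dict[str, float]]) -> Dict[str, str]:
    """'low' if bottom decile every year, 'high' if top decile every year, else 'intermediate'."""
    deciles = {year: decile_ranks(scores) for year, scores in yearly_scores.items()}
    # Assumption: only hospitals with a score in every year are classified.
    hospitals = set.intersection(*(set(scores) for scores in yearly_scores.values()))
    labels = {}
    for hospital in sorted(hospitals):
        ranks = [deciles[year][hospital] for year in deciles]
        if all(r == 0 for r in ranks):
            labels[hospital] = "low"
        elif all(r == 9 for r in ranks):
            labels[hospital] = "high"
        else:
            labels[hospital] = "intermediate"
    return labels


# Made-up counts for one hospital-year: (received, eligible) per measure.
counts = [(90, 100), (80, 100), (95, 100), (85, 100), (20, 40)]
print(round(composite_score(counts), 3))  # 0.841

# Made-up scores for 20 hospitals; in 2006 hospitals H01 and H10 swap scores.
base = {f"H{i:02d}": 0.60 + i / 100 for i in range(20)}
year_2006 = dict(base)
year_2006["H01"], year_2006["H10"] = base["H10"], base["H01"]
labels = classify({2004: base, 2005: dict(base), 2006: year_2006})
print([h for h, label in labels.items() if label != "intermediate"])  # ['H00', 'H18', 'H19']
```

Because the composite pools numerators and denominators, measures with more eligible patients carry more weight than they would in a simple average of the five per-measure rates: the made-up counts give 370/440 ≈ 0.84, whereas the unweighted mean of the five rates is 0.80. H01 drops out of the low group because it leaves the bottom decile in one of the three years.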

Analyses

First, we compared the AMI composite performance scores and hospital characteristics (urban location, safety-net status, teaching status, bed size, nurse staffing ratios, and Medicare AMI volumes) of low-, intermediate-, and high-performing hospitals. For these comparisons we used analysis of variance with Bonferroni adjustment for multiple comparisons for continuous variables, and the Cochran-Armitage test for trend for comparisons of proportions across the three hospital categories. Second, using similar statistical methods, we compared the characteristics of AMI patients (eg, demographics, rates of comorbid illness, and SES measures) treated in low-, intermediate-, and high-performing hospitals, as well as rates of patient transfer and revascularization for patients admitted to these hospitals. Rates of revascularization were calculated for the 30 days after the initial admission and included procedures performed during the index admission, after transfer to other acute care hospitals, and during subsequent hospitalizations for coronary revascularization. Because we had MedPAR admission data only through December 31, 2005, we calculated transfer and revascularization rates for patients admitted through November 30, 2005, so that every patient had a complete 30-day follow-up window.
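The Cochran-Armitage test for trend named above needs only the event count and group size in each ordered category. A minimal Python sketch follows, assuming equally spaced scores 0, 1, 2 for the low, intermediate, and high categories (the paper does not state the scores) and the usual normal approximation without a continuity correction; it is not the SAS procedure the authors used.

```python
import math
from typing import Sequence, Tuple


def cochran_armitage(events: Sequence[int], totals: Sequence[int],
                     scores: Sequence[float] = (0.0, 1.0, 2.0)) -> Tuple[float, float]:
    """Two-sided Cochran-Armitage test for a linear trend in proportions.

    events[i] / totals[i] is the proportion in ordered group i, and scores[i] its
    assumed ordinal score. Returns (z statistic, two-sided p-value).
    """
    n = sum(totals)
    p_bar = sum(events) / n  # pooled proportion under the null of no trend
    t_stat = sum(s * (x - m * p_bar) for s, x, m in zip(scores, events, totals))
    sum_sn = sum(s * m for s, m in zip(scores, totals))
    sum_s2n = sum(s * s * m for s, m in zip(scores, totals))
    variance = p_bar * (1 - p_bar) * (sum_s2n - sum_sn ** 2 / n)
    z = t_stat / math.sqrt(variance)
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Transfers out of the admitting hospital, Table 3 (low, intermediate, high).
z, p = cochran_armitage(events=(607, 20480, 428), totals=(2001, 163736, 5263))
print(f"z = {z:.1f}, p = {p:.1e}")
```

With these counts z is strongly negative (transfer rates fall as adherence rises) and p is far below .001, in line with the P-trend reported in Table 3; the exact statistic could differ if the authors used other scores.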

Lastly, we compared crude and adjusted 30-day mortality rates for AMI patients hospitalized in low-, intermediate-, and high-performing hospitals. Crude mortality rates were compared using the Cochran-Armitage test for trend. Risk-adjusted 30-day mortality rates were constructed as the ratio of predicted to expected mortality at each hospital, multiplied by the mean overall 30-day mortality rate for this population (ie, hospital-specific risk-standardized mortality rates22). Predicted and expected 30-day mortality were estimated using multivariable mixed models that controlled for patient sociodemographic characteristics and comorbidity using fixed coefficients, and for individual hospital effects using random hospital coefficients. Thus, expected mortality at a given hospital reflects the probability of death based on patient characteristics alone and is independent of the hospital, whereas predicted mortality reflects the mortality of patients with the same characteristics at that specific hospital. Potential patient risk factors associated with mortality were identified in bivariate analyses (p<.01) or from previous studies. Candidate predictors included sociodemographic characteristics (age, gender, race, and median household income), comorbid conditions (as defined by Elixhauser et al), and location of myocardial infarction (categorized into four groups defined by ICD-9-CM codes: anterior or lateral, inferior or posterior, subendocardial, or other/unspecified locations). We then entered variables significantly associated with mortality into a stepwise multivariable logistic model. For our risk-adjustment models, we retained all variables independently related to mortality (p<.01), with the exception of variables whose observed relationships were inconsistent with previously demonstrated clinical effects (eg, a lower risk of death for a comorbidity known to increase the risk of death).
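Once a mixed model has been fit, the risk-standardized rate described above is simple arithmetic. The Python sketch below assumes a random-intercept logistic model in which "predicted" uses the hospital's own random effect and "expected" sets that effect to zero (an average hospital), a common construction that matches the description in the text. Model fitting is not shown, and the coefficients, intercept, covariates, and overall rate are all hypothetical.

```python
import math
from typing import List, Sequence


def expit(x: float) -> float:
    """Inverse logit."""
    return 1.0 / (1.0 + math.exp(-x))


def risk_standardized_rate(patients: List[Sequence[float]], beta: Sequence[float],
                           intercept: float, hospital_effect: float,
                           overall_rate: float) -> float:
    """Risk-standardized mortality rate for one hospital.

    predicted: each patient's risk at this hospital (intercept + hospital random effect);
    expected: the same patient's risk at an average hospital (random effect set to 0).
    RSMR = (sum of predicted / sum of expected) * overall mortality rate.
    """
    predicted = 0.0
    expected = 0.0
    for x in patients:
        linear = sum(b * xi for b, xi in zip(beta, x))
        predicted += expit(intercept + hospital_effect + linear)
        expected += expit(intercept + linear)
    return predicted / expected * overall_rate


# Hypothetical fitted values: covariates are (age in decades above 65, heart failure 0/1).
beta = [0.45, 0.60]
patients = [[1.5, 1.0], [0.5, 0.0], [2.0, 1.0]]
print(risk_standardized_rate(patients, beta, intercept=-2.2, hospital_effect=0.0,
                             overall_rate=0.17))  # 0.17
print(risk_standardized_rate(patients, beta, intercept=-2.2, hospital_effect=0.3,
                             overall_rate=0.17) > 0.17)  # True
```

A hospital with a zero random effect reproduces the population rate exactly, and a positive effect pushes its standardized rate above it, regardless of how sick its own patients are.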
Covariates included in the final model are displayed in Appendix 1. Hospital-level risk-standardized mortality rates were compared across the three hospital performance categories using analysis of variance.

To examine the robustness of our findings, we performed several sensitivity analyses. We repeated the analyses using alternative thresholds, defining low-performing hospitals as those in the bottom 15%, 20%, or 25% of hospitals based on their composite performance score, and high-performing hospitals as those in the corresponding top 15%, 20%, or 25%. We also repeated our analyses after excluding all patients initially admitted with AMI and subsequently transferred to another acute care facility, because such patients may differ systematically from patients who do not require transfer.

P-values were two-sided. Statistical significance was defined using a conservative criterion of P<.01. All analyses were performed using SAS statistical software version 9.1 (SAS Institute Inc, Cary, NC). This project was approved by the University of Iowa Institutional Review Board.

Results

Mean composite AMI process adherence ranged from 68% among low-performing hospitals to 99% among high-performing hospitals (Figure 1). Low-performing hospitals differed significantly from intermediate- and high-performing hospitals with respect to most hospital characteristics (Table 1). In particular, low-performing hospitals were more likely to be for-profit and more likely to be defined as safety-net providers; interestingly, only 5 low-performing hospitals were both for-profit and safety-net providers.

Figure 1.

Means and ranges of composite AMI scores for low-, intermediate-, and high-performing hospitals

Table 1.

Characteristics of hospitals with low, intermediate, and high compliance with AMI process measures during 2005

| Characteristic | Low (N=105) | Intermediate (N=2,593) | High (N=63) |
|---|---|---|---|
| Urban location (%)* | 30.5 (N=32) | 67.0 (N=1,710) | 88.9 (N=56) |
| Safety-net status (%)** | 19.2 (N=20) | 11.0 (N=282) | 6.4 (N=4) |
| Teaching status (%)* | 0 (N=0) | 9.8 (N=252) | 27.0 (N=17) |
| Nurse staffing ratio† (mean [SD]) | 2.5 [1.1] | 2.9 [1.0] | 3.2 [1.2] |
| Bed size† (median [IQR]) | 83 [57] | 182 [205] | 233 [198] |
| For-profit ownership (%)* | 29.8 (N=31) | 16.5 (N=425) | 11.1 (N=7) |
| Medicare AMI volume† (median [IQR]) | 23 [17] | 70 [106] | 114 [172] |

\* P-value for trend <.001.

\*\* P-value for trend =.005.

† P-value for overall and pairwise comparisons <.001. P-values for pairwise comparisons are adjusted for 3 comparisons within each variable using Bonferroni adjustments.

Hospitals with low AMI performance were much less likely to offer full revascularization services (PCI and CABG) than intermediate- and high-performing hospitals (6.7% [N=6] vs. 51.4% [N=1,052] vs. 77.8% [N=49]; P<.001). Low-performing hospitals without revascularization services were located farther from the nearest hospital with full revascularization services than were intermediate- and high-performing hospitals without revascularization services (mean 25 vs. 18 vs. 14 miles, P<.001).

The characteristics of Medicare beneficiaries with AMI admitted to low-performing hospitals differed significantly from those of patients admitted to intermediate- and high-performing hospitals (Table 2). In particular, patients admitted to low-performing hospitals tended to be older; were more likely to be female or black, to reside in lower-income zip codes, and to live farther from hospitals providing revascularization; and had higher rates of most comorbid illnesses. Patients admitted to low-performing hospitals were more likely to be transferred to another acute care hospital and less likely to receive revascularization within 30 days of admission than patients admitted to intermediate- and high-performing hospitals (Table 3).

Table 2.

Characteristics of Medicare patients admitted to hospitals with low, intermediate, and high AMI process compliance during 2005

| Patient characteristic | Low (N=2,134) | Intermediate (N=216,946) | High (N=7,783) | P-value* |
|---|---|---|---|---|
| Age (mean [SD]) | 80.4 [8.4] | 79.3 [8.1] | 79.3 [8.0] | <.001 |
| Race black (%) | 8.9 (N=197) | 7.8 (N=13,932) | 4.3 (N=250) | <.001 |
| Female gender (%) | 55.9 (N=1,239) | 51.0 (N=91,177) | 50.2 (N=2,888) | <.001 |
| Zip code median household income ($, mean [SD]) | 33,730 [11,710] | 43,150 [16,098] | 47,502 [16,153] | <.001 |
| Distance from home to nearest hospital with revascularization (miles, median [IQR]) | 18.7 [25.2] | 5.5 [14.3] | 4.7 [11.2] | <.001 |
| Comorbidities | | | | |
| Heart failure (%) | 49.3 (N=1,093) | 42.8 (N=76,438) | 39.2 (N=2,254) | <.001 |
| Arrhythmia (%) | 29.3 (N=650) | 32.0 (N=57,121) | 30.8 (N=1,772) | .06 |
| Chronic obstructive lung disease (%) | 28.0 (N=621) | 23.7 (N=42,375) | 21.2 (N=1,221) | <.001 |
| Fluid and electrolyte disorders (%) | 23.1 (N=513) | 20.1 (N=35,947) | 17.9 (N=1,027) | <.001 |
| Renal failure (%) | 11.2 (N=249) | 11.2 (N=19,948) | 10.7 (N=618) | .37 |
| Neurological disease (%) | 6.0 (N=132) | 5.4 (N=9,557) | 4.9 (N=280) | .04 |
| Paralysis (%) | 0.7 (N=15) | 0.5 (N=866) | 0.5 (N=26) | .40 |
| Liver disease (%) | 0.7 (N=16) | 0.5 (N=958) | 0.5 (N=30) | .49 |
| Lymphoma (%) | 0.4 (N=8) | 0.7 (N=1,243) | 0.7 (N=42) | .16 |
| Metastatic cancer (%) | 1.2 (N=27) | 1.2 (N=2,180) | 1.0 (N=56) | .15 |
| Weight loss (%) | 2.5 (N=55) | 1.8 (N=3,164) | 1.5 (N=89) | .015 |
| Dementia (%) | 6.2 (N=138) | 4.1 (N=7,325) | 3.2 (N=181) | <.001 |
| Coagulopathy (%) | 1.8 (N=39) | 3.5 (N=6,233) | 3.5 (N=202) | .013 |
| AMI location | | | | |
| Anterior and lateral (%) | 10.7 (N=236) | 12.7 (N=22,741) | 13.0 (N=748) | <.001 |
| Inferior and posterior (%) | 10.3 (N=229) | 13.7 (N=24,503) | 13.9 (N=799) | <.001 |
| Subendocardial (%) | 52.8 (N=1,171) | 62.3 (N=111,404) | 63.2 (N=3,634) | <.001 |
| Other site (%) | 26.2 (N=581) | 11.2 (N=20,082) | 9.9 (N=572) | <.001 |

\* For categorical variables (proportions), P-values represent P for trend across the three ordinal categories (low, intermediate, and high performance). For continuous variables (age, income, and distance), P-values represent P for overall as well as pairwise comparisons (patients admitted to low- vs. intermediate-compliance hospitals, and low- vs. high-compliance hospitals). P-values were adjusted for 3 comparisons within each variable using Bonferroni adjustments.

Table 3.

Transfer and revascularization rates at 30 days for patients admitted to hospitals with low, intermediate, and high AMI process compliance during January–November 2005

| Outcome | Low (N=2,001) | Intermediate (N=163,736) | High (N=5,263) | P-trend |
|---|---|---|---|---|
| Transfer to another hospital (%) | 30.3 (N=607) | 12.5 (N=20,480) | 8.1 (N=428) | <.001 |
| Revascularization at 30 days | | | | |
| PCI (%) | 14.5 (N=290) | 28.2 (N=46,201) | 33.0 (N=1,737) | <.001 |
| CABG (%) | 8.1 (N=162) | 10.4 (N=17,051) | 10.4 (N=546) | .003 |
| Total (%) | 22.6 (N=452) | 38.6 (N=63,252) | 43.4 (N=2,283) | <.001 |

Low-performing hospitals had significantly higher unadjusted 30-day mortality rates than intermediate- and high-performing hospitals (Figure 2 and Table 4). After adjustment for patient characteristics, mortality in low-performing hospitals remained higher than in intermediate- and high-performing hospitals, but the differences were modest. Results were similar when patients transferred out of the hospital were excluded from the analyses (Table 4). Sensitivity analyses using alternative definitions of low-, intermediate-, and high-performing hospitals did not significantly change our findings.

Figure 2.

Mean 30-day observed and adjusted mortality rates for low-, intermediate-, and high-performing hospitals; hospital mortality was adjusted for patient characteristics, structural hospital characteristics (eg, ownership, teaching status), and composite AMI performance score

Table 4.

Rates of unadjusted and adjusted 30-day mortality for hospitals with low, intermediate, and high AMI process compliance for all patients and after excluding patients transferred out

| 30-day hospital-specific mortality rates (%) | Low (N=105) | Intermediate (N=2,593) | High (N=63) | Overall P-value | P-value* low vs. intermediate | P-value* low vs. high |
|---|---|---|---|---|---|---|
| All patients | | | | | | |
| Unadjusted | 23.6 | 17.8 | 14.9 | <.001 | <.001 | <.001 |
| Adjusted for patient characteristics | 16.3 | 16.0 | 15.7 | .04 | .15 | .02 |
| Transferred patients excluded | | | | | | |
| Unadjusted | 30.5 | 21.6 | 16.6 | <.001 | <.001 | <.001 |
| Adjusted for patient characteristics | 16.6 | 16.0 | 15.6 | <.001 | .003 | <.001 |

\* P-values were adjusted for 3 comparisons within each variable using Bonferroni adjustments.

Discussion

Using linked data from Hospital Compare and Medicare, we found that hospitals with consistently low adherence to AMI process measures differ substantially from intermediate- and high-performing hospitals in a number of ways, including hospital location, for-profit status, safety-net status, and availability of revascularization services. In addition, low-performing hospitals serve a patient population that is older, more often black, and has more comorbid illness than that of higher-performing hospitals. We found that hospitals with consistently low adherence with AMI process measures had higher mortality rates than better-performing hospitals and that the higher mortality persisted after adjustment for patient risk factors, although differences across categories were more modest.

A number of our findings are important and merit further mention. First, low-performing hospitals appear organizationally different from higher-performing hospitals. Our data suggest that the category of low-performing hospitals is actually composed of two distinct subgroups. Many of the low-performing hospitals were small, rural, safety-net hospitals. At the same time, a substantial proportion of low-performing hospitals (N=31) were for-profit hospitals. This finding raises the question of why both safety-net and for-profit hospitals are over-represented among low-performing hospitals; it is likely that different mechanisms apply to each group.

It is well recognized that safety-net hospitals face significant financial constraints that have worsened in recent years.23 Moreover, a recent study by Laschober et al12 found evidence that smaller rural hospitals were less likely to have implemented formal quality improvement programs. Thus, it seems possible that safety-net hospitals lack the resources to engage in important quality improvement activities. The cause of the poor performance of for-profit hospitals in this study is less clear. While some prior studies have found a negative association between hospital quality of care and for-profit ownership status,7,24 other studies have found outcomes in for-profit and not-for-profit hospitals to be quite similar.25–28 For-profit hospitals might choose to distribute discretionary funds to their investors rather than invest in quality improvement, though such a strategy would seem risky if poor performance resulted in negative publicity and loss of market share. Alternatively, the finding could be explained by geographic variation in practice patterns, as most of the low-performing for-profit hospitals are located in the South (65%) and West (30%).

Our finding that low-performing hospitals had higher mortality rates than intermediate- and high-performing hospitals is also important. It is possible that low use of the evidence-based therapies captured by the process measures (eg, aspirin for AMI) is directly responsible for the higher mortality rates. Alternatively, poor adherence to AMI process measures may be the proverbial “canary in the coal mine,” identifying hospitals that are having difficulty in many dimensions of quality, including, but not limited to, use of the evidence-based therapies assessed in the CMS performance measures.

Our findings should be considered in light of several recent publications examining the effect of hospital adherence with process measures on hospital outcomes. Both Jha et al29 and Bradley et al30 have reported a strong correlation between hospital compliance with AMI process measures and improved patient outcomes. In contrast, Werner et al11 found a far weaker correlation between hospital compliance and patient outcomes.

The policy implications of these data are important. In many ways, the small group of low-performing hospitals offers a unique opportunity to improve patient outcomes by targeting quality improvement interventions at a discrete group of hospitals that generally serve large numbers of vulnerable patients. First, such interventions could include designing pay-for-performance programs that direct payments to the hospitals with the largest improvement rather than rewarding hospitals based on their absolute level of performance. In fact, the CMS Premier Hospital Quality Incentive Demonstration (HQID) pay-for-performance project is being revised to reward both top performance and improvement, which should provide a strong incentive for low-performing hospitals.31 Second, disproportionate share hospital (DSH) payments might be updated to encourage safety-net hospitals to invest in quality improvement activities.32 Additionally, because fewer than one fifth of these low-performing hospitals would be categorized as “safety-net” under current definitions, important research and policy questions arise as to how to better identify safety-net activities in order to allocate federal and local resources (eg, federal Medicaid DSH payments and state-level uncompensated care pools) more judiciously.33

Alternatively, one could argue that hospitals with consistently low performance merit more severe, punitive action rather than remediation; taking such a tack would be politically challenging, but not unprecedented.34 Future research should thus focus on understanding the specific organizational issues related to poor performance, as well as on treatment choices for patients in markets served by these low-performing hospitals.

The study has several limitations that need to be acknowledged. First, the analysis was limited to one year of Medicare data and to hospitals reporting at least 25 AMI cases for the combined measures per year, representing only 63% of US hospitals treating AMI patients. Second, adherence to process measures is self-reported by hospitals and is not subject to independent external validation; recent papers have voiced concern that hospitals might manipulate their results to enhance their apparent quality. Third, one could argue that our definitions of low-, intermediate-, and high-performing hospitals were based on somewhat arbitrary thresholds of AMI process measure compliance. While this is technically correct, we conducted extensive sensitivity analyses using alternative definitions, with similar results. Finally, mortality predictions are based on Medicare patients only and on the limited number of variables available within administrative data sets.

In summary, the current study provides evidence that hospitals with consistently low adherence with CMS AMI process measures differ substantially from better-performing US hospitals in terms of both hospital organizational characteristics and patient outcomes. Targeting quality improvement efforts at low-performing hospitals offers a unique opportunity for improving patient outcomes and reducing disparities in health care.

Supplementary Material

What we know

- A number of recent studies have characterized hospitals’ adherence with CMS process measures, and how adherence may be changing over time. Data suggest that, while overall quality has improved over time, some hospitals seem to lag significantly behind. Yet little is known about the characteristics and outcomes of these hospitals.

- Identification of low-performing hospitals may offer a particularly good opportunity to target investments in quality improvement in a small group of hospitals that offer the greatest opportunity for benefit.

What this article adds

- Analyses provide evidence that low-performing hospitals differ substantially from better-performing US hospitals. Low-performing hospitals have different organizational characteristics, including fewer beds, lower nurse staffing ratios, and lower volumes, and are less likely to provide specialty services such as coronary revascularization. Low-performing hospitals are more likely to be safety nets for vulnerable populations and appear to have somewhat worse mortality than other US hospitals.

- Thus, the study suggests that, by targeting policy and quality improvement efforts at low-performing hospitals, there is a unique opportunity for improving patient outcomes and reducing disparities in health care.

Acknowledgments

Sources of Funding

For data acquisition, management, and analysis, this research was supported, in part, by an award (HFP 04-149) from the Health Services Research and Development Service, Veterans Health Administration, Department of Veterans Affairs.

Dr. Popescu is supported by a Ruth L. Kirschstein National Research Service Award, and by a National Scientist Development award from the American Heart Association.

Dr. Vaughan-Sarrazin is a Research Scientist in the Center for Research in the Implementation of Innovative Strategies in Practice (CRIISP) at the Iowa City VA Medical Center, which is funded through the Department of Veterans Affairs, Veterans Health Administration, Health Services Research and Development Service. Dr. Werner is supported by R01 HS016478-01 from the Agency for Healthcare Research and Quality, by a Career Development Award from the Department of Veterans Affairs, Health Services Research and Development Service, by a T. Franklin Williams Award in Geriatrics, and by a Merit Award (IIR 06-196-2) from the Department of Veterans Affairs, Health Services Research and Development. Dr. Cram is supported by a K23 career development award (RR01997201) from the National Center for Research Resources at the NIH, by the Robert Wood Johnson Physician Faculty Scholars Program, and by R01 HL085347-01A1 from National Heart Lung and Blood Institute at the NIH. The views expressed in this article are those of the authors and do not necessarily represent the views of the Department of Veterans Affairs.

Footnotes

Disclosures: none

References

1. Hannan EL, Kilburn H, Jr, Racz M, Shields E, Chassin MR. Improving the outcomes of coronary artery bypass surgery in New York State. JAMA. 1994;271:761–766.

2. Barr JK, Giannotti TE, Sofaer S, Duquette CE, Waters WJ, Petrillo MK. Using public reports of patient satisfaction for hospital quality improvement. Health Serv Res. 2006;41:663–682. doi: 10.1111/j.1475-6773.2006.00508.x.

3. Jencks SF, Wilensky GR. The health care quality improvement initiative. A new approach to quality assurance in Medicare. JAMA. 1992;268:900–903.

4. Marciniak TA, Ellerbeck EF, Radford MJ, Kresowik TF, Gold JA, Krumholz HM. Improving the quality of care for Medicare patients with acute myocardial infarction: results from the Cooperative Cardiovascular Project. JAMA. 1998;279:1351–1357. doi: 10.1001/jama.279.17.1351.

5. Iezzoni LI. The risks of risk adjustment. JAMA. 1997;278:1600–1607. doi: 10.1001/jama.278.19.1600.

6. Thomas JW, Hofer TP. Research evidence on the validity of risk-adjusted mortality rate as a measure of hospital quality of care. Med Care Res Rev. 1998;55:371–404. doi: 10.1177/107755879805500401.

7. Jha AK, Li Z, Orav EJ, Epstein AM. Care in U.S. hospitals–the Hospital Quality Alliance program. N Engl J Med. 2005;353:265–274. doi: 10.1056/NEJMsa051249.

8. Werner RM, Bradlow ET, Asch DA. Hospital performance measures and quality of care. LDI Issue Brief. 2008;13:1–4.

9. Bradley EH, Herrin J, Elbel B, McNamara RL, Magid DJ, Nallamothu BK. Hospital quality for acute myocardial infarction: correlation among process measures and relationship with short-term mortality. JAMA. 2006;296:72–78. doi: 10.1001/jama.296.1.72.

10. Peterson ED, Roe MT, Mulgund J, DeLong ER, Lytle BL, Brindis RG, Smith SC, Jr, Pollack CV, Jr, Newby LK, Harrington RA, Gibler WB, Ohman EM. Association between hospital process performance and outcomes among patients with acute coronary syndromes. JAMA. 2006;295:1912–1920. doi: 10.1001/jama.295.16.1912.

11. Werner RM, Bradlow ET. Relationship between Medicare’s hospital compare performance measures and mortality rates. JAMA. 2006;296:2694–2702. doi: 10.1001/jama.296.22.2694.

12. Laschober M, Maxfield M, Felt-Lisk S, Miranda DJ. Hospital response to public reporting of quality indicators. Health Care Financ Rev. 2007;28:61–76.

13. Werner RM, Goldman LE, Dudley RA. Comparison of change in quality of care between safety-net and non-safety-net hospitals. JAMA. 2008;299:2180–2187. doi: 10.1001/jama.299.18.2180.

14. Williams SC, Koss RG, Morton DJ, Loeb JM. Performance of top-ranked heart care hospitals on evidence-based process measures. Circulation. 2006;114:558–564. doi: 10.1161/CIRCULATIONAHA.105.600973.

15. Bradley EH, Herrin J, Mattera JA, Holmboe ES, Wang Y, Frederick P. Hospital-level performance improvement: beta-blocker use after acute myocardial infarction. Med Care. 2004;42:591–599. doi: 10.1097/01.mlr.0000128006.27364.a9.

16. Bradley EH, Herrin J, Mattera JA, Holmboe ES, Wang Y, Frederick P. Quality improvement efforts and hospital performance: rates of beta-blocker prescription after acute myocardial infarction. Med Care. 2005;43:282–292. doi: 10.1097/00005650-200503000-00011.

17. Williams SC, Schmaltz SP, Morton DJ, Koss RG, Loeb JM. Quality of care in U.S. hospitals as reflected by standardized measures, 2002–2004. N Engl J Med. 2005;353:255–264. doi: 10.1056/NEJMsa043778.

18. Kroch E, Duan M, Silow-Carroll S. Hospital Performance Improvement: Trends on Quality and Efficiency—A Quantitative Analysis of Performance Improvement in U.S. Hospitals. The Commonwealth Fund; April 2007.

19. Ross JS, Cha SS, Epstein AJ, Wang Y, Bradley EH, Herrin J. Quality of care for acute myocardial infarction at urban safety-net hospitals. Health Aff (Millwood). 2007;26:238–248. doi: 10.1377/hlthaff.26.1.238.

20. Lindrooth RC, Bazzoli GJ, Needleman J, Hasnain-Wynia R. The effect of changes in hospital reimbursement on nurse staffing decisions at safety net and nonsafety net hospitals. Health Serv Res. 2006;41:701–720. doi: 10.1111/j.1475-6773.2006.00514.x.

21. Elixhauser A, Steiner C, Harris DR, Coffey RM. Comorbidity measures for use with administrative data. Med Care. 1998;36:8–27. doi: 10.1097/00005650-199801000-00004.

22. Krumholz HM, Wang Y, Mattera JA, Wang Y, Han LF, Ingber MJ. An administrative claims model suitable for profiling hospital performance based on 30-day mortality rates among patients with an acute myocardial infarction. Circulation. 2006;113:1683–1692. doi: 10.1161/CIRCULATIONAHA.105.611186.

23. Bazzoli GJ, Lindrooth RC, Kang R, Hasnain-Wynia R. The influence of health policy and market factors on the hospital safety net. Health Serv Res. 2006;41:1159–1180. doi: 10.1111/j.1475-6773.2006.00528.x.

24. Landon BE, Normand SL, Lessler A, O’Malley AJ, Schmaltz S, Loeb JM. Quality of care for the treatment of acute medical conditions in US hospitals. Arch Intern Med. 2006;166:2511–2517. doi: 10.1001/archinte.166.22.2511.

25. Mark BA, Harless DW. Nurse staffing, mortality, and length of stay in for-profit and not-for-profit hospitals. Inquiry. 2007;44:167–186. doi: 10.5034/inquiryjrnl_44.2.167.

26. Picone G, Chou SY, Sloan F. Are for-profit hospital conversions harmful to patients and to Medicare? Rand J Econ. 2002;33:507–523.

27. Sloan FA, Picone GA, Taylor DH, Chou SY. Hospital ownership and cost and quality of care: is there a dime’s worth of difference? J Health Econ. 2001;20:1–21. doi: 10.1016/s0167-6296(00)00066-7.

28. Sloan FA, Trogdon JG, Curtis LH, Schulman KA. Does the ownership of the admitting hospital make a difference? Outcomes and process of care of Medicare beneficiaries admitted with acute myocardial infarction. Med Care. 2003;41:1193–1205. doi: 10.1097/01.MLR.0000088569.50763.15.

29. Jha AK, Orav EJ, Li Z, Epstein AM. The inverse relationship between mortality rates and performance in the Hospital Quality Alliance measures. Health Aff (Millwood). 2007;26:1104–1110. doi: 10.1377/hlthaff.26.4.1104.

30. Bradley EH, Herrin J, Elbel B, McNamara RL, Magid DJ, Nallamothu BK. Hospital quality for acute myocardial infarction: correlation among process measures and relationship with short-term mortality. JAMA. 2006;296:72–78. doi: 10.1001/jama.296.1.72.

31. Overview of CMS Hospital Quality Incentive Demonstration Project payment method. Accessed October 20, 2008, at http://www.premierinc.com/quality-safety/tools-services/p4p/hqi/payment/project-payment-year4.jsp.

32. Zwanziger J, Khan N. Safety-net hospitals. Med Care Res Rev. 2008;65:478–495. doi: 10.1177/1077558708315440.

33. Goldman LE, Henderson S, Dohan DP, Talavera JA, Dudley RA. Public reporting and pay-for-performance: safety-net hospital executives’ concerns and policy suggestions. Inquiry. 2007;44:137–145. doi: 10.5034/inquiryjrnl_44.2.137.

34. Ettinger WH, Hylka SM, Phillips RA, Harrison LH, Jr, Cyr JA, Sussman AJ. When things go wrong: the impact of being a statistical outlier in publicly reported coronary artery bypass graft surgery mortality data. Am J Med Qual. 2008;23:90–95. doi: 10.1177/1062860607313141.
