Abstract

Background

Ascertainment of hospitalizations is critical to assess quality of care and the effectiveness and adverse effects of various therapies. Smartphones, mobile geo-locators that are ubiquitous, have not been leveraged to ascertain hospitalizations. Therefore, we evaluated the use of smartphone-based “geofencing” to track hospitalizations.

Methods and Results

Participants aged ≥ 18 years installed a mobile application programmed to “geofence” all hospitals using global positioning systems and cell phone tower triangulation and to trigger a smartphone-based questionnaire when located in a hospital for ≥ 4 hours. An “in-person” study included consecutive consenting patients scheduled for electrophysiology and cardiac catheterization procedures. A “remote” arm invited Health eHeart Study participants who consented and engaged with the study via the internet only. The accuracy of application-detected hospitalizations was confirmed by medical record review as the reference standard. Among 22 eligible “in-person” patients, 17 hospitalizations were detected (sensitivity 77%; 95% confidence interval [CI] 55-92%). The length of stay according to the application was positively correlated with the length of stay ascertained via the electronic medical record (r=0.53, p=0.03). In the remote arm, the application was downloaded by 3,443 participants residing in all 50 U.S. states; 243 hospital visits at 119 different hospitals were detected through the application. The positive predictive value for an application-reported hospitalization was 65% (95% CI 57-72%).

Conclusions

Mobile application-based ascertainment of hospitalizations can be achieved with modest accuracy. This first proof of concept may ultimately be applicable to “geofencing” other types of prespecified locations to facilitate healthcare research and patient care.

Keywords: hospitalizations, tracking, digital health

Ascertainment of hospitalizations and cardiovascular events is critical to assess disease occurrence, quality of care, and the effectiveness and adverse effects of various therapies.1 Yet, there is currently no optimal method to ascertain these data.2, 3 Self-reported data suffer from recall bias, and the use of medical records and administrative claims is resource-intensive.4 In addition, because there is no universal electronic medical record and researchers from other institutions may have limited access to these records, relying on electronic medical records alone may miss events at hospitals outside of a particular network.

Mobile devices are increasingly used for medical diagnostics,5 disease monitoring,6 and counseling.7 The use of smartphones is quickly becoming ubiquitous, and users are increasingly relying on smartphone applications (or “apps”) to monitor their health.8, 9 The emerging field of mobile health (mHealth) offers new opportunities for patients and providers to collect and share health care information and data. However, despite these advances, the location sensing capabilities of smartphones have not yet been leveraged to ascertain health care data.

Smartphone-based “geofencing,” a location-based program that defines geographical boundaries, may allow real-time tracking of medical visits and reduce the measurement error of retrospective reporting. An important advantage of such a “fence” is that it does not require continuous tracking of location, which would impinge on privacy and therefore likely significantly limit applicability. Instead, a location is identified only when the fence is crossed. Additionally, once validated and optimized, this technology could be expanded into other arenas, such as grocery stores, fast food restaurants, gymnasiums, pharmacies and liquor stores, allowing real-time collection of health-related behaviors and eventually, real-time interventions. Therefore, as a proof of concept, we sought to evaluate the use of smartphone-based “geofencing” for tracking hospitalizations among (1) participants with a known hospital visit (the “in-person” arm) and (2) participants with an app-detected hospital visit (the “remote” arm).

Methods

Study Design

This was a two-part study using data from the Health eHeart Study (www.health-eheartstudy.org), a world-wide online cardiovascular cohort study coordinated at the University of California, San Francisco (UCSF). English-speaking adults (age ≥ 18) with an active e-mail address were eligible to participate in the Health eHeart Study and were recruited through academic institutions, lay press, social media, and promotional events. Upon enrollment, participants were prompted to complete online questionnaires which included questions regarding demographics, personal and family medical history, physical activity, quality of life, and technology use. Individuals meeting inclusion criteria for the Health eHeart Study and who reported having either an iOS or Android-based smartphone were offered participation in the current study.

Remote Arm

In a “remote” arm of the study, we evaluated whether, in participants with an app-detected medical visit, there was a “true” medical visit as determined from the medical record (the positive predictive value of the app). Health eHeart participants with smartphones received an email invitation to download the smartphone app (developed by Ginger.io in collaboration with study investigators) in September 2013. Individuals who enrolled in the Health eHeart Study after that date could also download the app as part of several optional study activities. Therefore, all Health eHeart participants with a smartphone were eligible to join the study between September 4, 2013 and September 9, 2015. Of note, download of the app required additional consent (obtained remotely and electronically) specific to the capabilities and monitoring of the app. Participation in the remote arm was not limited to individuals with a scheduled hospital visit, and participants were not provided specific instructions, outside of the consent process, regarding the use of the app to document medical visits.

In the remote arm, an app-detected medical visit was defined as a hospital location detected by the app and confirmed by the participant as a medical visit through the app-based questionnaire. An app-based medical visit was considered a true positive if there was evidence from the medical record that participants visited the medical center within 24 hours of the time reported by the app. In instances where the app detected multiple visits with the same dates, the visits were considered to represent a single encounter if the end of one detected visit and the beginning of another were within 4 hours (the set threshold for detection) of one another.
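The two rules in this definition (merging detections separated by no more than 4 hours, and matching a detection to the medical record within 24 hours) can be illustrated with a minimal Python sketch. This is not the study's code. The function names, the use of the visit start as “the time reported by the app,” the inclusive comparisons, and the example timestamps are all assumptions made for illustration.

```python
from datetime import datetime, timedelta

MERGE_GAP = timedelta(hours=4)      # gap below which detections are one encounter (from the text)
MATCH_WINDOW = timedelta(hours=24)  # tolerance for matching the medical record (from the text)


def merge_visits(visits):
    """Collapse (start, end) detections whose gap is <= MERGE_GAP into single encounters."""
    merged = []
    for start, end in sorted(visits):
        if merged and start - merged[-1][1] <= MERGE_GAP:
            prev_start, prev_end = merged[-1]
            merged[-1] = (prev_start, max(prev_end, end))
        else:
            merged.append((start, end))
    return merged


def is_true_positive(app_time, record_times):
    """True if any medical-record visit time lies within MATCH_WINDOW of the app-reported time."""
    return any(abs(app_time - t) <= MATCH_WINDOW for t in record_times)


# Hypothetical detections: the first two are 2 hours apart and merge into one encounter.
detections = [
    (datetime(2015, 7, 1, 8, 0), datetime(2015, 7, 1, 13, 0)),
    (datetime(2015, 7, 1, 15, 0), datetime(2015, 7, 1, 20, 0)),
    (datetime(2015, 7, 3, 9, 0), datetime(2015, 7, 3, 14, 0)),
]
encounters = merge_visits(detections)
record_times = [datetime(2015, 7, 1, 7, 45)]  # hypothetical medical-record check-in time
for start, end in encounters:
    print(start, end, is_true_positive(start, record_times))
```

In this hypothetical example the first merged encounter matches the record and the second does not, so the second would count as a false positive under the stated definition.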

In-person Arm

In an “in-person” arm of the study, we evaluated (1) whether the app detected known hospital visits (the sensitivity of the app), (2) the correlation between the length of the hospital visit detected by the app compared to the medical record, and (3) feedback from participants at 1-week and 1-month after enrollment in the study. We enrolled consecutive, consenting patients scheduled for electrophysiology and cardiac catheterization procedures at UCSF from July to August 2015. Individuals that had previously downloaded the mobile app as part of the Health eHeart Study were excluded from this arm. Individuals were contacted by telephone and invited to enroll in the Health eHeart Study and install the mobile app on their smartphone. Participants were asked to bring the smartphone with the app pre-installed to the hospital on the day of their scheduled procedure and to respond to any app-based notifications to confirm the hospital visit.

This study was approved by the UCSF Committee on Human Research and all participants provided informed electronic consent. The consent was in a modular and hierarchical form, such that the basic Health eHeart consent was first required before participants were offered the specific Ginger.io app consent. The mobile app could be downloaded only after the specific app-related consent was obtained. Study data were collected and managed using Research Electronic Data Capture (REDCap) tools hosted at UCSF.10

Smartphone-based hospitalization assessment

Once downloaded, the app operated in the background to collect behavioral data, such as location data on Android and iOS-based devices, and self-reported data through application-based questionnaires. Data collection was passive: it did not require the app to be opened or any active engagement by the user, and the regular function of the smartphone was not affected. Patients received notifications on their smartphone when a questionnaire was available, such as to confirm a medical visit.

The app was programmed to “geofence” all U.S. hospitals using the Global Positioning System (GPS) and cell phone tower triangulation by defining a virtual perimeter around the geographical locations of hospitals. Using “location services” on the phone, if the phone was detected within the geofenced hospital location for ≥ 4 hours, a smartphone-based notification was sent to participants within 1 hour of leaving the hospital vicinity, asking them to respond to a smartphone-based questionnaire to confirm whether or not they visited the medical center for medical care. A 4-hour window was chosen to optimize the detection of as many true hospital visits as possible while attempting to minimize the potential for false positives for people within the “geofence” for non-medical reasons, such as visiting a patient or briefly passing through the area.
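The trigger logic described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the Ginger.io implementation. It assumes a circular fence defined by a center and a radius (the text says only “virtual perimeter”), and the coordinates, radius, and function names are hypothetical. Only the 4-hour dwell threshold and the 1-hour notification window come from the text.

```python
import math
from datetime import datetime, timedelta

DWELL_THRESHOLD = timedelta(hours=4)  # minimum stay inside the fence (from the text)
NOTIFY_WITHIN = timedelta(hours=1)    # notification window after leaving (from the text)


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two latitude/longitude points."""
    earth_radius_m = 6371000.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * earth_radius_m * math.asin(math.sqrt(a))


def inside_fence(lat, lon, fence):
    """True if the point lies within the (assumed circular) fence."""
    return haversine_m(lat, lon, fence["lat"], fence["lon"]) <= fence["radius_m"]


def questionnaire_deadline(entered, exited):
    """Latest time to send the notification, or None if the stay was under 4 hours."""
    if exited - entered < DWELL_THRESHOLD:
        return None
    return exited + NOTIFY_WITHIN


# Hypothetical fence and fence-crossing times, for illustration only.
fence = {"name": "Example Medical Center", "lat": 37.7630, "lon": -122.4580, "radius_m": 200.0}
entered = datetime(2015, 7, 1, 7, 30)
exited = datetime(2015, 7, 1, 13, 0)
print(inside_fence(37.7631, -122.4581, fence))
print(questionnaire_deadline(entered, exited))
```

Because only the entry and exit times are needed to evaluate the dwell rule, a design along these lines is compatible with the point made in the introduction that a fence does not require continuous location tracking.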

Patients had up to 16 hours to confirm the visit through the app-based questionnaire. After this window, the questionnaire was no longer available in order to collect the data in “real-time” and avoid confusion with hospitalizations on other dates. To enhance clarity, the smartphone-based questionnaire language was changed on May 2, 2014 (please see Supplemental Methods). A sensitivity analysis done to assess the accuracy of the app before and after the language changed revealed no meaningful differences.

“Technological eligibility” was defined as having the smartphone present, turned on, and with location and notification services enabled.

Electronic medical record-based hospitalization assessment

For participants who visited UCSF, actual hospitalization and duration of stay were determined from the electronic medical record. For participants in the remote arm who reported via the app that they had received medical care at another institution, an e-mail questionnaire was sent in December 2015 requesting confirmation of the medical visit. In order to obtain permission to search medical record data, patients confirming that they had received medical care during the time indicated by the mobile app were asked to provide consent and Health Insurance Portability and Accountability Act (HIPAA) authorization electronically via a workflow in the Health eHeart Study. Among those who did not fill out the e-mail questionnaire, attempts were made to contact participants by telephone, text message, or mail based on their self-reported contact preferences. For consenting and HIPAA-authorizing participants, we contacted the medical center where they had received care and requested the relevant medical records to confirm the visit(s).

Feedback and Usability Ascertainment

Participants in the in-person arm were contacted at one week and one month after hospital discharge and a Feedback and Usability Survey (Supplemental Methods) was administered either via telephone or in person when they returned to UCSF for a post-procedure visit. Among participants who did not respond to the app-based survey to confirm the hospital visit, we attempted to elicit the reason(s). All participants were asked to rate the ease of use of the app, how bothersome the use of the app was, and their level of interest in continuing to use the app on a scale of 1-10.

Covariate ascertainment

Demographics and medical information were self-reported by participants on the initial Health eHeart Study entry questionnaire. Self-reported medical information has been found to have high accuracy within the Health eHeart Study.11 Self-identified race and ethnicity were combined, with Hispanic ethnicity taking precedence over race, and were categorized as white, black, Asian/Pacific Islander, Hispanic, or other. For the in-person study, race was determined from the electronic medical record for participants who did not self-report race through the study questionnaire.

Statistical Analysis

Normally distributed continuous variables are presented as means ± SD and were compared using t-tests, and continuous variables with skewed distributions are presented as medians and interquartile ranges (IQR) and were compared using the Wilcoxon rank-sum test. Categorical variables were compared using the chi-squared test or Fisher's exact test, as appropriate.

In the in-person study, we excluded participants who had a hospital stay < 4 hours (n=3). Spearman rank correlation and a Bland-Altman plot were used to measure the correlation and agreement between smartphone-based and actual duration of hospital stay. Sensitivity was estimated using the in-person arm of the study and positive predictive value was estimated in the remote arm of the study. Patient feedback was collected on a 10-point scale and presented as median (IQR). A two-tailed p value <0.05 was considered statistically significant. Stata version 14 (College Station, Texas) was used for statistical analyses.
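For readers less familiar with these agreement measures, the following Python sketch shows how a Spearman rank correlation and Bland-Altman bounds can be computed. The analyses in this study were performed in Stata; this sketch is not that code. The duration values are hypothetical, and the bounds are assumed to be the conventional mean difference ± 1.96 standard deviations, which the text does not state explicitly.

```python
from statistics import mean, stdev


def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    numerator = sum((a - mx) * (b - my) for a, b in zip(x, y))
    denominator = (sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y)) ** 0.5
    return numerator / denominator


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


def bland_altman(x, y):
    """Mean difference (x - y) and the bounds mean ± 1.96 SD of the differences."""
    diffs = [a - b for a, b in zip(x, y)]
    bias = mean(diffs)
    half_width = 1.96 * stdev(diffs)
    return bias, bias - half_width, bias + half_width


# Hypothetical durations in hours (not study data).
app_hours = [5.0, 7.0, 9.0, 12.0, 30.0]
emr_hours = [6.0, 6.5, 10.0, 11.0, 26.0]
print(spearman(app_hours, emr_hours))
print(bland_altman(app_hours, emr_hours))
```

The correlation describes whether longer recorded stays correspond to longer app-detected stays, whereas the Bland-Altman bounds describe how far an individual app-based duration may differ from the recorded one.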

Results

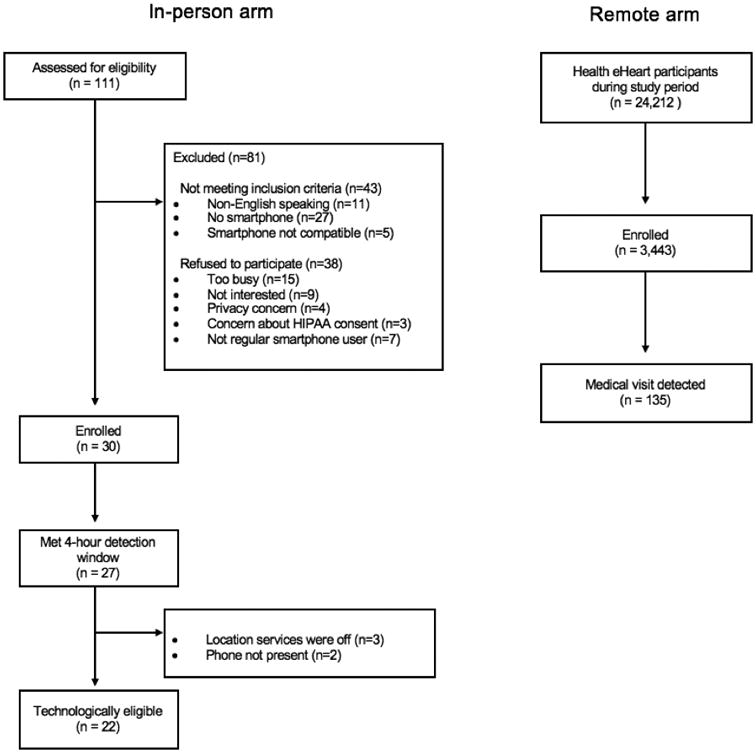

Among 68 individuals meeting inclusion criteria, 30 individuals were enrolled in the in-person arm of the study (Figure 1). Among those who did not consent to the study, concern for privacy was the cited reason in four individuals (6%). Among 24,212 participants in the Health eHeart Study, the mobile application was downloaded by 3,443 remote participants residing in all 50 states with 676 potential hospital locations from 32 states detected (Figure 2). The median study duration was 260.5 days (IQR: 134 to 334) and the median duration that the application was connected was 252 days (IQR: 134 to 330). Individuals in the in-person arm of the study were more likely to be male compared to those in the remote arm (Table 1). There was no evidence of differences in age, race, or smartphone type between the two arms of the study.

Figure 1. Enrollment and study process.

Figure 2.

Geographical distribution of participants (panel A) and hospitals (panel B). Location based on zip code. The number of records represented by relative size. Created with Tableau Software (www.tableau.com) and U.S. map provided under a CC BY-SA license from OpenStreetMap (www.openstreetmap.org/copyright), © OpenStreetMap contributors.

Table 1. Baseline characteristics of study participants.

| Characteristic | Total n=3,473 | In-person Arm n=30 | Remote Arm n=3,443 | P value |
|---|---|---|---|---|
| Mean age, y | 49 ± 14 | 53 ± 16 | 49 ± 14 | 0.10 |
| Male sex, n (%) | 1,022 (30%) | 19 (63%) | 1,003 (29%) | <0.001 |
| Race, n (%) | | | | |
| White | 2,726 (79%) | 19 (63%) | 2,707 (80%) | 0.08 |
| Black | 173 (5%) | 1 (3%) | 172 (5%) | |
| Asian/Pacific Islander | 157 (5%) | 2 (7%) | 155 (5%) | |
| Hispanic | 216 (6%) | 5 (17%) | 211 (6%) | |
| Other | 162 (5%) | 3 (10%) | 159 (5%) | |
| Smartphone type, n (%) | | | | |
| iOS | 2,162 (65%) | 21 (70%) | 2,141 (65%) | 0.72 |
| Android | 1,088 (33%) | 9 (30%) | 1,079 (33%) | |

In-person arm - Smartphone-based ascertainment of scheduled procedures

Participants in the in-person study were predominantly male, white, and iOS users (Table 2). Of 30 participants, 27 participants had a hospitalization of at least four hours during the study period. Of these, a hospital visit was detected for 17 individuals. No difference was found in demographics, hospital duration, or types of procedure between participants who had a visit detected and those who did not.

Table 2. Characteristics of “in-person” participants with and without hospitalization detected.

| Characteristic | Total | Hospitalization not detected n=10 | Hospitalization detected n=17 | P value |
|---|---|---|---|---|
| Age, median (IQR), y | 51 (38-69) | 42 (37-63) | 58 (44-69) | 0.15 |
| Male sex, n (%) | 17 (63%) | 5 (50%) | 12 (71%) | 0.29 |
| Race, n (%) | | | | |
| White | 17 (63%) | 4 (40%) | 13 (76%) | 0.15 |
| Black | 1 (4%) | 0 | 1 (6%) | |
| Asian/Pacific Islander | 2 (7%) | 1 (10%) | 1 (6%) | |
| Hispanic | 4 (15%) | 3 (30%) | 1 (6%) | |
| Other | 3 (11%) | 2 (20%) | 1 (6%) | |
| Procedure, n (%) | | | | |
| EP study & ablation | 14 (52%) | 7 (70%) | 7 (42%) | 0.44 |
| Diagnostic EP | 2 (7%) | 1 (10%) | 1 (6%) | |
| Right heart catheterization | 7 (26%) | 1 (10%) | 6 (35%) | |
| Pacemaker or ICD | 4 (15%) | 1 (10%) | 3 (18%) | |
| Length of hospital stay, median (IQR), h | 12 (6.5-27) | 13 (7.9-26) | 8 (6-27) | 0.80 |
| Smartphone type, n (%) | | | | |
| iOS | 19 (70%) | 8 (80%) | 11 (65%) | 0.67 |
| Android | 8 (30%) | 2 (20%) | 6 (35%) | |

Abbreviations: IQR, interquartile range; EP, electrophysiology; ICD, implantable cardioverter-defibrillator

Among the 22 participants who were “technologically eligible” to have the visit detected (Table 3), 17 visits were detected (sensitivity 77%; 95% confidence interval [CI] 55-92%). Among technologically eligible participants, we did not find any differences between those whose hospitalization was detected and those whose hospitalization was not (Table S1). None of these participants had another hospitalization detected by the app during the study period, yielding a positive predictive value of 100%. Of the 17 detected visits, 15 (88%) were user-confirmed through the smartphone app. In addition, participants reported high ease of use and low burden at the one-week survey, and high interest in continued use of the application at both the one-week and one-month surveys (Table 4).

Table 3. Summary of patient follow-up.

| Outcome | n (%) |
|---|---|
| Visit was not detected (n=10) | |
| Reason hospital visit not detected (n=10) | |
| Technologically ineligible | 5 (50%) |
| • Patient did not have phone/phone off | 3 (30%) |
| • Location services off | 2 (20%) |
| Unknown | 5 (50%) |
| Visit was detected (n=17) | |
| Response to app-based question (n=15): | |
| Were you at ___ Medical Center today? | |
| • Yes, for medical treatment | 15 (100%) |
| • Yes, another reason | 0 |
| • No, I was not there | 0 |
| Reason patients did not respond to question (n=2) | |
| • “I thought I did respond” | 1 (50%) |
| • “I meant to but forgot” | 1 (50%) |

Values are n (%) and based on the Feedback and Usability Survey, which can be found in the Supplemental Methods

Table 4. Participant feedback during follow-up.

| Survey item | Median (IQR) |
|---|---|
| 1-week survey (n=27) | |
| • Ease of use (1=difficult, 10=easy; n=24) | 8.5 (8-10) |
| • Bothersome (1=least, 10=most; n=23) | 1 (1-2) |
| • Interest in continued use (1=low, 10=high; n=27) | 7 (5-10) |
| 1-month survey | |
| • Interest in continued use (n=25) | 7 (5-8) |

Values are median (interquartile range) and based on the Feedback and Usability Survey (Supplemental Methods)

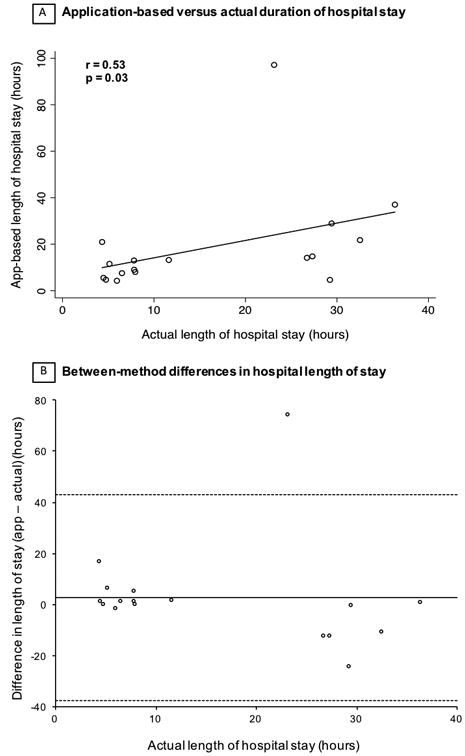

The geofencing app-reported length of stay had a moderate positive correlation with the actual hospital length of stay (r=0.53, p=0.03; Figure 3). The mean difference between the visit duration ascertained through the app and the actual duration was 2.6 hours (95% CI -38 to 43 hours).

Figure 3.

Comparison between application-based and actual duration of hospital stay. Actual length of hospital stay is based on the electronic medical record. A. Correlation between the two methods. B. Bland-Altman plot showing the difference between application (app)-based and actual duration of hospital stay. The solid line represents the mean difference between the app-based and actual length of stay. The dashed lines depict the upper and lower bounds of the 95% confidence interval.

Remote arm - Remote ascertainment of visits for medical treatment

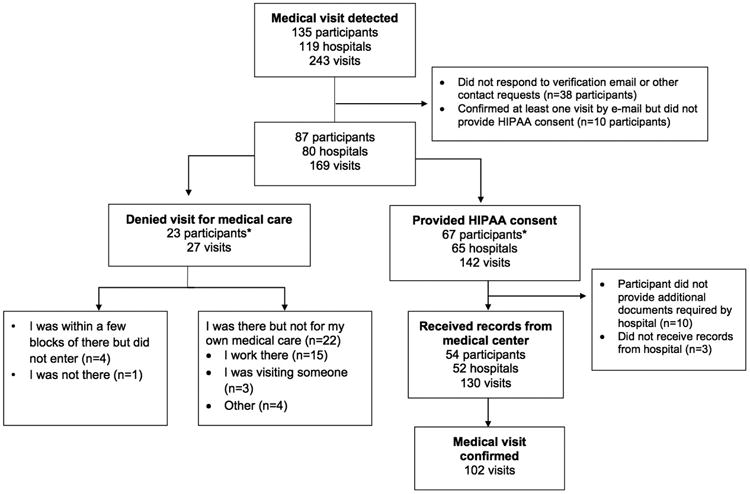

Among 3,443 remote participants, over 10,000 app-based questionnaires were sent to 800 unique participants asking them to confirm or deny a medical visit (Table S2). Of these, 135 participants indicated through the app that they were at the hospital for medical care. These participants had 243 hospital visits at 119 different hospitals. These individuals were more likely to be older, to be iOS users, and to have a history of hypertension, atrial fibrillation, and myocardial infarction compared to those who did not have an app-detected medical visit (Table S3). Among 87 participants who responded to the follow-up email survey to confirm hospitalization and using that email response as the reference standard, 102 out of 157 medical visits were correctly reported through the app (positive predictive value 65%; 95% CI 57-72%; Figure 4). Among 142 medical visits indicated as true positives by participants, there were 130 medical visits where sufficient permissions and hospital forms could be obtained to receive medical records. Of these, 102 were confirmed as true positives using the documented medical record as the reference standard (positive predictive value 78%; 95% CI 70-85%). Among 27 instances of an incorrect app-detected medical visit, the most common reason was that the participant was employed by the medical center (n=15).
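The proportions and confidence intervals reported in this section and for the in-person arm can be approximately reproduced with a short Python sketch. The interval method is not stated in the text; the sketch assumes exact binomial (Clopper-Pearson) intervals, solved numerically by bisection, and the function names are illustrative.

```python
from math import comb


def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p ** i * (1 - p) ** (n - i) for i in range(k + 1))


def solve_decreasing(f, target):
    """Bisection on [0, 1] for a function f that decreases in p, solving f(p) = target."""
    low, high = 0.0, 1.0
    for _ in range(60):
        mid = (low + high) / 2
        if f(mid) > target:
            low = mid
        else:
            high = mid
    return (low + high) / 2


def exact_ci(successes, n, alpha=0.05):
    """Clopper-Pearson (exact binomial) confidence interval for a proportion."""
    if successes == 0:
        lower = 0.0
    else:
        # Lower bound: P(X >= successes | p) = alpha / 2
        lower = solve_decreasing(lambda p: binom_cdf(successes - 1, n, p), 1 - alpha / 2)
    if successes == n:
        upper = 1.0
    else:
        # Upper bound: P(X <= successes | p) = alpha / 2
        upper = solve_decreasing(lambda p: binom_cdf(successes, n, p), alpha / 2)
    return lower, upper


# Counts taken from the text.
reported = [
    ("Sensitivity, in-person arm", 17, 22),
    ("PPV, e-mail reference", 102, 157),
    ("PPV, medical record reference", 102, 130),
]
for label, successes, total in reported:
    lower, upper = exact_ci(successes, total)
    print(f"{label}: {successes / total:.0%} ({lower:.0%} to {upper:.0%})")
```

Under this assumption, the computed intervals should fall close to the published values; small discrepancies would suggest that a different interval method was used.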

Figure 4.

Accuracy of medical visits confirmed through the application in “remote arm.” *Three participants were included in both groups (multiple visits detected with participants verifying via e-mail at least one visit for medical care and denied at least one visit for medical care). HIPAA = Health Insurance Portability and Accountability Act.

Discussion

Smartphone-based geofencing for the ascertainment of hospitalizations in electrophysiology and cardiac catheterization patients exhibited moderate sensitivity for hospitalization detection and moderate correlation with hospital length of stay. In the in-person study, participants reported a high ease and low burden of use, and high interest in continued use of the application. Among remote Health eHeart users in the U.S., the app had a positive predictive value of approximately 70%. Given the importance of obtaining hospital utilization data and the growing ubiquity of smartphones, this sort of technology may provide an efficient and cost-effective method to collect, share, and react to such data in real-time.

As the prevalence of chronic disease increases with the aging population, there is a need for improved health care monitoring and more timely treatment between encounters with health care providers.12, 13 The majority of Americans now use smartphones and patients are relying more on mobile apps to regularly monitor their health.14, 15 Smartphone devices already have the capability to nearly continuously identify a user's location in real-time, and publicly available datasets enable pre-specification of a myriad of location types. Push notifications via mobile apps (as was utilized in the current study) or automated calls or texts triggered by some activity on an app allow for customized messaging or questions in response to an app-detected condition. Our proof of concept study suggests that a mobile app might be used to detect healthcare encounters, but the theoretical implications extend to any location (or location type) that can be pre-determined (such as grocery stores, liquor stores, gymnasiums, pharmacies, or fast-food restaurants).

As a proof of concept, we focused on the feasibility of hospital-based geofencing for the detection of hospital and medical center visits. Although ascertainment of this information is critical, there is currently no optimal method to do so. While the medical record and administrative claims are generally considered to be the most reliable sources of this information, their use is time-intensive and costly. In addition, medical records may have inaccuracies and administrative data may be incomplete or delayed.4 Self-reported data offer convenience and reduced costs, but suffer from recall bias, and accuracy can vary based on diagnoses, recall timeframe, and type of utilization.16-18

The ability to record medical visits in real-time would substantially mitigate this limitation. In the in-person study, we found that when hospital locations were correctly detected, almost 90% of participants correctly used the app to confirm the visit. While the app had only moderate sensitivity for the detection of medical visits, we believe this could be improved upon with clearer instructions to participants, particularly regarding how to set up the app to allow notifications and access to location services. As participant feedback regarding the experience was generally positive, we believe that further iteration informed by ongoing feedback is likely feasible.

The positive predictive value found in the remote arm was only modest and, among those who provided a reason, the most common cause of an incorrectly reported medical visit was that the participant was actually in the hospital but not receiving care (such as being there for work). Therefore, the rationale and language of the application need to be clear and tested carefully to improve accuracy without being unnecessarily cumbersome. A natural next step would be to include a learning algorithm, such as adjusting the time window for those who indicate they work at a given hospital (for example, a trigger extended to localization in that hospital for more than 12 or 14 hours). For this initial study, we selected a 4-hour threshold in an attempt to optimize detection while reducing false positives. While GPS is more accurate, a combination of cell phone triangulation and GPS was used in order to minimize battery usage. We and others will continue to work on enhancing the accuracy of the app for the detection of a hospital location as well as the user-confirmation system to optimize true positives.
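The adaptive time window suggested above could take a form like the following Python sketch. This was not implemented in the study. The 12-hour employee threshold is one of the two example values mentioned in the text, while the `"work"` answer option, the function names, and the identifiers are hypothetical.

```python
from datetime import timedelta

DEFAULT_THRESHOLD = timedelta(hours=4)    # threshold used in this study
EMPLOYEE_THRESHOLD = timedelta(hours=12)  # example value from the text (12 or 14 hours)


def record_response(user_id, hospital_id, answer, workplaces):
    """Remember a hospital as a workplace when the user reports being there for work."""
    if answer == "work":  # hypothetical answer option, not in the study questionnaire
        workplaces.add((user_id, hospital_id))


def dwell_threshold(user_id, hospital_id, workplaces):
    """Dwell time required before a questionnaire is triggered for this user and hospital."""
    if (user_id, hospital_id) in workplaces:
        return EMPLOYEE_THRESHOLD
    return DEFAULT_THRESHOLD


workplaces = set()
print(dwell_threshold("user-1", "hospital-A", workplaces))
record_response("user-1", "hospital-A", "work", workplaces)
print(dwell_threshold("user-1", "hospital-A", workplaces))
print(dwell_threshold("user-1", "hospital-B", workplaces))
```

Keying the longer threshold to the specific user and hospital pair, rather than to the user alone, would leave detection at other hospitals unchanged for the same person.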

This pilot study provides some insights into the use of smartphone-based geofencing that may be improved upon in the future. Furthermore, we believe that this feature has potential use in multiple arenas. Early on, smartphone-based geofencing could be utilized as a research tool to better understand health-related behaviors or patterns. In the future, this technology might be used to offer interventions to help change these behaviors as they occur. The validation of this methodology could lead to its use in a myriad of other facilities: the geofencing of fitness centers, fast food restaurants, and grocery and liquor stores could lead to insights into patient behaviors and heart health. This could be further developed to provide real-time guidance once a location is detected. For example, once a grocery store location is detected, participants could be sent a notification reminding them to purchase healthy foods or vegetables.

While privacy is a concern with any app that detects and shares behavioral information, the number of individuals in the in-person arm who did not enroll due to privacy concerns was low. Additionally, many individuals are motivated to monitor their health and share the information with others for both research and health management purposes. For example, Chen, et al. found that among 67 individuals surveyed in a university setting, 77% were willing to share health-tracking data for research purposes.19 Importantly, the majority of individuals required assurance of privacy and viewed anonymity as important. In studies evaluating the use of telemonitoring to monitor blood pressure, blood glucose, and weight, patients found telemonitoring to be useful and cited the potential of their health providers to view the information as a motivating factor for behavior change.20, 21 In another study of the views of 17 seniors using a monitoring device to collect and transmit “activity data” to providers, 16 of the 17 participants had positive views and did not feel that it invaded their privacy.22

However, research has also found that some individuals who consent to health monitoring may not understand how their data is being tracked or may refuse to use or continue such technology due to privacy concerns.23 Therefore, taken together, we believe that many individuals are willing to share health-related information for research purposes as long as their information is kept private and secure. For clinical purposes, while there is increasing interest in telemonitoring, it is important to ensure that patients understand what data are being tracked and how the data will be used. An important advantage of our study is that geofencing allows individuals to only transmit information about specific types of locations. Therefore, an individual may opt to record and share information regarding hospital visits but not regarding fast food restaurants, if preferred. However, in some individuals, privacy concerns may outweigh the potential benefits of the use of geofencing, which would limit the applicability of its use in these individuals.

Our study had several limitations that are important to address. Technological eligibility was based on self-report; however, when possible, this information was confirmed at the clinic visit. Hospital visit duration ascertained from the electronic medical record may not reflect total time within the geofence (such as time spent in the hospital prior to check-in and after discharge). Participants in the in-person arm were scheduled for elective procedures, and thus the findings may not be generalizable to other hospitalization types. While all individuals not previously enrolled in Health eHeart who were scheduled for eligible procedures were invited to enroll in the in-person study and all Health eHeart study participants were eligible to enroll in the remote study during the study periods, those who chose to enroll may be different from those who did not, which may affect the generalizability of our results. However, this would not affect our internal validity, specifically the accuracy of the app to detect a hospital visit within the study populations. Reasons for declining to participate were not collected from remote arm participants, and privacy concerns may hinder similar efforts utilizing geolocation in the future. Although the medical record is commonly used as the reference standard, it may not have captured all medical visits, particularly those where a clinician was not seen, such as laboratory testing or radiology imaging. Similarly, we considered true positives in the remote arm to be a medical record-confirmed visit within 24 hours of the app-detected visit. However, it is possible that such a reference standard is not sufficiently sensitive to detect all visits. Finally, the study was not designed to determine specificities or negative predictive values (where, as an extreme example that would not be feasible, all hospitals in the US would need to be queried daily for all participants to assure “true negatives”).

Conclusions

Smartphone-based geofencing may enable real-time tracking of hospitalizations. Use of the tool yielded a moderate sensitivity and a positive predictive value of 65%. Future work should focus on optimizing the accuracy of geofencing applications both in detecting hospital locations and in collecting accurate user feedback. This concept may be used to leverage the ubiquitous use of smartphones to facilitate clinical research and ultimately to help optimize patient care.

Supplementary Material

What is Known

- Ascertainment of hospitalizations is critical in research studies, but an optimal method has not been identified.

- Smartphone users are increasingly relying on mobile applications to track and monitor their health.

- Smartphone-based “geofencing” theoretically allows for real-time tracking of hospital visits, yet its use for this purpose has not been validated.

What the Study Adds

- This study found that smartphone-based geofencing of hospital visits can be achieved with modest accuracy.

- Users reported generally positive feedback and high interest in continued use of the application.

Acknowledgments

Ms. Nguyen and Dr. Marcus had full access to all of the data in the study and take responsibility for the integrity of the data and the accuracy of the data analysis.

Sources of Funding: Research reported in this publication was supported by the Office of Behavioral and Social Sciences Research, National Institute of Biomedical Imaging and Bioengineering, National Institute of Neurological Disorders and Stroke, National Heart, Lung, and Blood Institute, and National Institute on Alcohol Abuse and Alcoholism of the NIH under award number 1U2CEB021881 and by the Patient Centered Outcomes Research Institute (PPRN-1306-04709). This publication was made possible in part by the Clinical and Translational Research Fellowship Program, a program of UCSF's Clinical and Translational Science Institute that is sponsored in part by the National Center for Advancing Translational Sciences, National Institutes of Health, through UCSF-CTSI Grant Number TL1 TR000144 and the Doris Duke Charitable Foundation (DDCF), and by R25MD006832 from the National Institute on Minority Health and Health Disparities (K.T.N.). This publication was also made possible in part by the Sarnoff Cardiovascular Research Foundation (M.A.C.). The contents are solely the responsibility of the authors and do not necessarily represent the official views of the NIH, UCSF, or the DDCF.

Footnotes

Disclosures: Drs. Moturu and Kaye are employees of Ginger.io. None of the other authors report any potential conflict of interest.

References

- 1.Hlatky MA, Douglas PS, Cook NL, Wells B, Benjamin EJ, Dickersin K, Goff DC, Hirsch AT, Hylek EM, Peterson ED, Roger VL, Selby JV, Udelson JE, Lauer MS. Future directions for cardiovascular disease comparative effectiveness research: report of a workshop sponsored by the National Heart, Lung, and Blood Institute. J Am Coll Cardiol. 2012;60:569–580. doi: 10.1016/j.jacc.2011.12.057.

- 2.Heckbert SR, Kooperberg C, Safford MM, Psaty BM, Hsia J, McTiernan A, Gaziano JM, Frishman WH, Curb JD. Comparison of self-report, hospital discharge codes, and adjudication of cardiovascular events in the Women's Health Initiative. Am J Epidemiol. 2004;160:1152–1158. doi: 10.1093/aje/kwh314.

- 3.Mell MW, Pettinger M, Proulx-Burns L, Heckbert SR, Allison MA, Criqui MH, Hlatky MA, Burwen DR, Workgroup WPW. Evaluation of Medicare claims data to ascertain peripheral vascular events in the Women's Health Initiative. J Vasc Surg. 2014;60:98–105. doi: 10.1016/j.jvs.2014.01.056.

- 4.Bhandari A, Wagner T. Self-reported utilization of health care services: improving measurement and accuracy. Med Care Res Rev. 2006;63:217–235. doi: 10.1177/1077558705285298.

- 5.Kwon L, Long KD, Wan Y, Yu H, Cunningham BT. Medical diagnostics with mobile devices: Comparison of intrinsic and extrinsic sensing. Biotechnol Adv. 2016;34:291–304. doi: 10.1016/j.biotechadv.2016.02.010.

- 6.Logan AG, McIsaac WJ, Tisler A, Irvine MJ, Saunders A, Dunai A, Rizo CA, Feig DS, Hamill M, Trudel M, Cafazzo JA. Mobile phone-based remote patient monitoring system for management of hypertension in diabetic patients. Am J Hypertens. 2007;20:942–948. doi: 10.1016/j.amjhyper.2007.03.020.

- 7.Vidrine DJ, Arduino RC, Lazev AB, Gritz ER. A randomized trial of a proactive cellular telephone intervention for smokers living with HIV/AIDS. AIDS. 2006;20:253–260. doi: 10.1097/01.aids.0000198094.23691.58.

- 8.Sama PR, Eapen ZJ, Weinfurt KP, Shah BR, Schulman KA. An evaluation of mobile health application tools. JMIR Mhealth Uhealth. 2014;2:e19. doi: 10.2196/mhealth.3088.

- 9.Mobile Technology Fact Sheet. Pew Research Center; Dec 27, 2013. [Accessed 10, 2016]. http://www.pewinternet.org/fact-sheets/mobile-technology-fact-sheet/

- 10.Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap) - A metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42:377–381. doi: 10.1016/j.jbi.2008.08.010.

- 11.Dixit S, Pletcher MJ, Vittinghoff E, Imburgia K, Maguire C, Whitman IR, Glantz SA, Olgin JE, Marcus GM. Secondhand smoke and atrial fibrillation: Data from the Health eHeart Study. Heart Rhythm. 2016;13:3–9. doi: 10.1016/j.hrthm.2015.08.004.

- 12.Bauer UE, Briss PA, Goodman RA, Bowman BA. Prevention of chronic disease in the 21st century: elimination of the leading preventable causes of premature death and disability in the USA. Lancet. 2014;384:45–52. doi: 10.1016/S0140-6736(14)60648-6.

- 13.Murray CJ, Vos T, Lozano R, Naghavi M, Flaxman AD, Michaud C, Ezzati M, Shibuya K, Salomon JA, Abdalla S, Aboyans V, Abraham J, Ackerman I, Aggarwal R, Ahn SY, Ali MK, Alvarado M, Anderson HR, Anderson LM, Andrews KG, Atkinson C, Baddour LM, Bahalim AN, Barker-Collo S, Barrero LH, Bartels DH, Basanez MG, Baxter A, Bell ML, Benjamin EJ, Bennett D, Bernabe E, Bhalla K, Bhandari B, Bikbov B, Bin Abdulhak A, Birbeck G, Black JA, Blencowe H, Blore JD, Blyth F, Bolliger I, Bonaventure A, Boufous S, Bourne R, Boussinesq M, Braithwaite T, Brayne C, Bridgett L, Brooker S, Brooks P, Brugha TS, Bryan-Hancock C, Bucello C, Buchbinder R, Buckle G, Budke CM, Burch M, Burney P, Burstein R, Calabria B, Campbell B, Canter CE, Carabin H, Carapetis J, Carmona L, Cella C, Charlson F, Chen H, Cheng AT, Chou D, Chugh SS, Coffeng LE, Colan SD, Colquhoun S, Colson KE, Condon J, Connor MD, Cooper LT, Corriere M, Cortinovis M, de Vaccaro KC, Couser W, Cowie BC, Criqui MH, Cross M, Dabhadkar KC, Dahiya M, Dahodwala N, Damsere-Derry J, Danaei G, Davis A, De Leo D, Degenhardt L, Dellavalle R, Delossantos A, Denenberg J, Derrett S, Des Jarlais DC, Dharmaratne SD, Dherani M, Diaz-Torne C, Dolk H, Dorsey ER, Driscoll T, Duber H, Ebel B, Edmond K, Elbaz A, Ali SE, Erskine H, Erwin PJ, Espindola P, Ewoigbokhan SE, Farzadfar F, Feigin V, Felson DT, Ferrari A, Ferri CP, Fevre EM, Finucane MM, Flaxman S, Flood L, Foreman K, Forouzanfar MH, Fowkes FG, Fransen M, Freeman MK, Gabbe BJ, Gabriel SE, Gakidou E, Ganatra HA, Garcia B, Gaspari F, Gillum RF, Gmel G, Gonzalez-Medina D, Gosselin R, Grainger R, Grant B, Groeger J, Guillemin F, Gunnell D, Gupta R, Haagsma J, Hagan H, Halasa YA, Hall W, Haring D, Haro JM, Harrison JE, Havmoeller R, Hay RJ, Higashi H, Hill C, Hoen B, Hoffman H, Hotez PJ, Hoy D, Huang JJ, Ibeanusi SE, Jacobsen KH, James SL, Jarvis D, Jasrasaria R, Jayaraman S, Johns N, Jonas JB, Karthikeyan G, Kassebaum N, Kawakami N, Keren A, Khoo JP, King CH, Knowlton LM, Kobusingye O, 
Koranteng A, Krishnamurthi R, Laden F, Lalloo R, Laslett LL, Lathlean T, Leasher JL, Lee YY, Leigh J, Levinson D, Lim SS, Limb E, Lin JK, Lipnick M, Lipshultz SE, Liu W, Loane M, Ohno SL, Lyons R, Mabweijano J, MacIntyre MF, Malekzadeh R, Mallinger L, Manivannan S, Marcenes W, March L, Margolis DJ, Marks GB, Marks R, Matsumori A, Matzopoulos R, Mayosi BM, McAnulty JH, McDermott MM, McGill N, McGrath J, Medina-Mora ME, Meltzer M, Mensah GA, Merriman TR, Meyer AC, Miglioli V, Miller M, Miller TR, Mitchell PB, Mock C, Mocumbi AO, Moffitt TE, Mokdad AA, Monasta L, Montico M, Moradi-Lakeh M, Moran A, Morawska L, Mori R, Murdoch ME, Mwaniki MK, Naidoo K, Nair MN, Naldi L, Narayan KM, Nelson PK, Nelson RG, Nevitt MC, Newton CR, Nolte S, Norman P, Norman R, O'Donnell M, O'Hanlon S, Olives C, Omer SB, Ortblad K, Osborne R, Ozgediz D, Page A, Pahari B, Pandian JD, Rivero AP, Patten SB, Pearce N, Padilla RP, Perez-Ruiz F, Perico N, Pesudovs K, Phillips D, Phillips MR, Pierce K, Pion S, Polanczyk GV, Polinder S, Pope CA, 3rd, Popova S, Porrini E, Pourmalek F, Prince M, Pullan RL, Ramaiah KD, Ranganathan D, Razavi H, Regan M, Rehm JT, Rein DB, Remuzzi G, Richardson K, Rivara FP, Roberts T, Robinson C, De Leon FR, Ronfani L, Room R, Rosenfeld LC, Rushton L, Sacco RL, Saha S, Sampson U, Sanchez-Riera L, Sanman E, Schwebel DC, Scott JG, Segui-Gomez M, Shahraz S, Shepard DS, Shin H, Shivakoti R, Singh D, Singh GM, Singh JA, Singleton J, Sleet DA, Sliwa K, Smith E, Smith JL, Stapelberg NJ, Steer A, Steiner T, Stolk WA, Stovner LJ, Sudfeld C, Syed S, Tamburlini G, Tavakkoli M, Taylor HR, Taylor JA, Taylor WJ, Thomas B, Thomson WM, Thurston GD, Tleyjeh IM, Tonelli M, Towbin JA, Truelsen T, Tsilimbaris MK, Ubeda C, Undurraga EA, van der Werf MJ, van Os J, Vavilala MS, Venketasubramanian N, Wang M, Wang W, Watt K, Weatherall DJ, Weinstock MA, Weintraub R, Weisskopf MG, Weissman MM, White RA, Whiteford H, Wiebe N, Wiersma ST, Wilkinson JD, Williams HC, Williams SR, Witt E, Wolfe F, Woolf 
AD, Wulf S, Yeh PH, Zaidi AK, Zheng ZJ, Zonies D, Lopez AD, AlMazroa MA, Memish ZA. Disability-adjusted life years (DALYs) for 291 diseases and injuries in 21 regions, 1990-2010: a systematic analysis for the Global Burden of Disease Study 2010. Lancet. 2012;380:2197–223. doi: 10.1016/S0140-6736(12)61689-4.

- 14.Smith A. US Smartphone Use in 2015. Pew Research Center; Apr 1, 2015.

- 15.Ramirez V, Johnson E, Gonzalez C, Ramirez V, Rubino B, Rossetti G. Assessing the Use of Mobile Health Technology by Patients: An Observational Study in Primary Care Clinics. JMIR Mhealth Uhealth. 2016;4:e41. doi: 10.2196/mhealth.4928.

- 16.Bergmann MM, Byers T, Freedman DS, Mokdad A. Validity of self-reported diagnoses leading to hospitalization: a comparison of self-reports with hospital records in a prospective study of American adults. Am J Epidemiol. 1998;147:969–77. doi: 10.1093/oxfordjournals.aje.a009387.

- 17.Coughlin SS. Recall bias in epidemiologic studies. J Clin Epidemiol. 1990;43:87–91. doi: 10.1016/0895-4356(90)90060-3.

- 18.Raina P, Torrance-Rynard V, Wong M, Woodward C. Agreement between self-reported and routinely collected health-care utilization data among seniors. Health Serv Res. 2002;37:751–774. doi: 10.1111/1475-6773.00047.

- 19.Chen J, Bauman A, Allman-Farinelli M. A Study to Determine the Most Popular Lifestyle Smartphone Applications and Willingness of the Public to Share Their Personal Data for Health Research. Telemed J E Health. 2016;22:655–665. doi: 10.1089/tmj.2015.0159.

- 20.Hanley J, Fairbrother P, McCloughan L, Pagliari C, Paterson M, Pinnock H, Sheikh A, Wild S, McKinstry B. Qualitative study of telemonitoring of blood glucose and blood pressure in type 2 diabetes. BMJ Open. 2015;5:e008896. doi: 10.1136/bmjopen-2015-008896.

- 21.Abdullah A, Liew SM, Hanafi NS, Ng CJ, Lai PS, Chia YC, Loo CK. What influences patients' acceptance of a blood pressure telemonitoring service in primary care? A qualitative study. Patient Prefer Adherence. 2016;10:99–106. doi: 10.2147/PPA.S94687.

- 22.Essen A. The two facets of electronic care surveillance: an exploration of the views of older people who live with monitoring devices. Soc Sci Med. 2008;67:128–136. doi: 10.1016/j.socscimed.2008.03.005.

- 23.Berridge C. Breathing Room in Monitored Space: The Impact of Passive Monitoring Technology on Privacy in Independent Living. Gerontologist. 2016;56:807–816. doi: 10.1093/geront/gnv034.
