Introduction

Measurement burst designs combine short-term and long-term longitudinal methods (e.g., a two-week daily diary study that recurs quarterly) (Nesselroade 1991; Sliwinski 2008). These designs are of particular interest to researchers studying within- and between-person associations across time. Although these designs can provide rich data regarding day-to-day behavior, participation in a measurement burst design might be more burdensome than participation in a cross-sectional or less intensive longitudinal design (Bolger and Laurenceau 2013; Sliwinski 2008). As a result, researchers should carefully consider unit nonresponse, which occurs when sample members do not provide any information at a given time point. Methods for analyzing measurement burst data (e.g., multilevel models) do not require that sample members be observed at every time point (Raudenbush and Bryk 2002, 199–200; Snijders and Bosker 1999, 52); however, as noted by Snijders and Bosker (1999, 52), “smaller groups will have a smaller influence on the results than the larger groups” (where “groups” in this context are participants and “group size” is defined as the number of observations obtained from each participant). For example, to the extent that females disproportionately respond to the daily surveys, findings about within-person associations might generalize primarily to females.

To date, little is known about response patterns in these designs. Specifically, a thorough characterization of response metrics at the various stages of data collection has not yet been documented. Although studies using these designs often mention that differential participation might affect the representativeness of the analytic sample, a better understanding is needed of where in the data collection process representativeness deteriorates. Using data from a measurement burst design examining substance use across the transition out of high school, we describe participation throughout the study by adapting response metrics commonly used in panel studies. We then address the following three research questions:

Are subgroups defined by sociodemographics, college plans, and substance use differentially represented in an analytic sample restricted to sample members completing at least one daily survey across all three 14-day bursts (i.e., 42 days)?

At which data collection stage do underrepresented subgroups differentially participate?

Do respondent characteristics predict the total number of daily surveys completed among daily survey respondents?

Background

Response metrics

From a survey methodological perspective, data collection using a web-based measurement burst design is very similar to data collection using an online panel (see Couper 2000). Commonly, both methods begin with the recruitment of a probability sample to participate in the study. Either as part of this recruitment process or immediately following it, sample members are invited to participate in an initial survey [called a “profile” or “welcome” survey in the panel literature (Callegaro and DiSogra 2009)] that collects basic information on potential study participants. Following completion of the profile survey, sample members are invited to participate in subsequent surveys (“target surveys”); in the case of measurement burst designs, these target surveys are commonly daily surveys.

Although there is little literature addressing the methodological aspects of measurement burst designs, there is an extensive literature on panel surveys, in general (e.g., Kasprzyk et al. 1989), and online panels, in particular (e.g., Couper 2000). Of particular relevance to the current study is how response rates to such panels can be quantified. Callegaro and DiSogra (2009) reviewed the variety of panel types and proposed a set of standardized response metrics. The recruitment rate is defined as the proportion of sample members who initially consent to participate in the panel. The profile rate is defined as the proportion of eligible sample members (based on their initial consent) who participate in the profile survey. The completion rate is the proportion of eligible sample members (based on their initial consent and completion of the profile survey) who complete a particular subsequent survey (target survey). Last, the cumulative response rate is the proportion of the initial sample that completes the target survey. Note that the cumulative response rate is the product of the recruitment, profile, and completion rates, reflecting the conditional nature of eligibility at subsequent stages of the panel design.
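The product identity holds because each stage's denominator is the previous stage's numerator, so the intermediate counts cancel. A minimal sketch that ignores cases of unknown eligibility and uses our own count notation (an assumption, not Callegaro and DiSogra's formulas): $N$ is the initial sample size, $n_R$ the number who consent (are recruited), $n_P$ the number who complete the profile survey, and $n_T$ the number who complete a given target survey.

```latex
\[
\text{recruitment} = \frac{n_R}{N}, \qquad
\text{profile} = \frac{n_P}{n_R}, \qquad
\text{completion} = \frac{n_T}{n_P}
\]
\[
\text{cumulative} = \frac{n_R}{N} \times \frac{n_P}{n_R} \times \frac{n_T}{n_P} = \frac{n_T}{N}
\]
```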

Sample representativeness

Many studies reporting the substantive findings from intensive longitudinal designs do not include discussions about unit nonresponse or attrition (Leigh 2000). Instead, the emphasis is placed on whether the analytic sample is representative of those invited to participate. Although this information helps the reader understand the generalizability of the results, it does not identify how the various data collection stages might contribute to differential nonresponse. To the extent that differential nonresponse is due to one stage or another (e.g., the profile survey vs. the daily surveys), data collection efforts can be tailored to improve participation at a particular stage and, ultimately, overall response.

Furthermore, although analytic samples are commonly restricted to include participants who have completed a certain number of daily surveys, it is not clear whether participants who complete more days are different from those who complete fewer days or none at all. This is an important distinction because predictors of who participates in any of the daily surveys might differ from predictors of the number of daily surveys (>0) completed. That is, even if respondents to the set of daily surveys are representative of the survey population, differential participation in the daily surveys might affect the generalizability of the estimated within-person associations.

Data and methods

In the spring of 2012, 440 12th-grade students from three Midwestern high schools (purposively selected to represent urban, suburban, and rural communities) were recruited to participate in the Young Adult Attitudes Survey (see Griffin and Patrick 2014). After completing a paper-and-pencil school-based baseline survey, approximately two thirds of the participants were randomized into a measurement burst group, and the remaining third were randomized into a control group. Four, eight, and twelve months after baseline (burst 1: September 2012, burst 2: January 2013, and burst 3: May 2013), young adults in the measurement burst group were invited to complete a 30-minute web survey (profile survey) followed by 14 days of daily web surveys (daily surveys). Only profile survey respondents were invited to participate in that burst’s daily surveys. Young adults in the control group were invited to participate in only the 30-minute follow-up web survey administered twelve months after baseline (i.e., the Wave 3 profile survey). The current study focuses on the 202 young adults randomly assigned to the measurement burst group (initial sample).

Plan of analysis

First, we report the following response metrics adapted from Callegaro and DiSogra (2009) and the American Association for Public Opinion Research (AAPOR 2011, 37):

Profile rate: the proportion of eligible initial sample members who complete a specific burst’s profile survey,

Daily survey completion rate: the proportion of profile survey respondents who complete a given daily survey,

Daily survey cumulative response rate: the proportion of the initial sample who complete a given daily survey,

Overall profile rate: the proportion of the initial sample who complete at least one profile survey,

Overall daily survey completion rate: the proportion of profile survey respondents (i.e., those completing at least one profile survey) who complete at least one daily survey, and

Overall daily survey cumulative response rate: the proportion of the eligible initial sample members who complete at least one daily survey.
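The six metrics above can be computed directly from person-level completion records. The sketch below assumes a hypothetical record layout (the `Member` class and its fields are illustrative, not the study's data structure). Following the burst-specific results, it uses burst-eligible members as the denominator for per-burst rates and the full initial sample for overall rates, which assumes every member was eligible for at least one burst (true here, since all 202 were eligible for burst 3).

```python
from dataclasses import dataclass

DAYS_PER_BURST = 14


@dataclass
class Member:
    """Hypothetical person-level record for one initial-sample member."""
    eligible: list[bool]     # eligible[b]: age-eligible for burst b's profile survey
    profile: list[bool]      # profile[b]: completed burst b's profile survey
    daily: list[list[bool]]  # daily[b][d]: completed daily survey d of burst b


def burst_metrics(sample: list[Member], b: int):
    """Profile rate plus per-day completion and cumulative response rates for burst b."""
    eligible = [m for m in sample if m.eligible[b]]
    profiled = [m for m in eligible if m.profile[b]]
    profile_rate = len(profiled) / len(eligible)
    completion, cumulative = [], []
    for d in range(DAYS_PER_BURST):
        # Only profile respondents are invited to the daily surveys.
        n_day = sum(m.daily[b][d] for m in profiled)
        completion.append(n_day / len(profiled))   # denominator: profile respondents
        cumulative.append(n_day / len(eligible))   # denominator: eligible sample members
    return profile_rate, completion, cumulative


def overall_metrics(sample: list[Member]) -> dict[str, float]:
    """Overall rates: at least one profile survey / at least one daily survey in any burst."""
    any_profile = [m for m in sample if any(m.profile)]
    any_daily = [m for m in any_profile if any(any(days) for days in m.daily)]
    n = len(sample)
    return {
        "overall_profile_rate": len(any_profile) / n,
        "overall_daily_completion_rate": len(any_daily) / len(any_profile),
        "overall_daily_cumulative_rate": len(any_daily) / n,
    }
```

Because each per-day cumulative rate is the number of daily completers divided by the eligible count, it equals the profile rate multiplied by that day's completion rate, mirroring the product identity used for panels.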

We then estimate a series of chi-square tests to evaluate whether any of the following self-reported baseline measures predict responding to at least one daily survey (overall daily survey cumulative response rate): sociodemographics (i.e., gender, race/ethnicity, parental education, and school location¹), college plans (i.e., whether the respondent reported that he or she would definitely graduate from a four-year college program after high school), and substance use (i.e., lifetime and past 12-month alcohol, marijuana, and other illegal drug use; and past two-week binge drinking). Question wording appears in the Appendix.

To determine the source of differential nonresponse to the daily surveys, we examine predictors of response at each stage of the design. Specifically, we estimate a series of chi-square tests to examine predictors of completing at least one profile survey (overall profile rate) and predictors of completing at least one daily survey among profile survey respondents (overall daily survey completion rate).
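Each of these comparisons is a test of independence on a respondent-by-characteristic contingency table. The stdlib-only sketch below computes the Pearson chi-square (no continuity correction), Cramér's V, and, for 1-df tables, a p-value; it is an illustration, not the SPSS procedure the study used. The example counts are approximations we back-calculated from the rounded percentages in Table 1 (37.4 percent of 91 respondents ≈ 34 males; 52.3 percent of the 111 nonrespondents, 202 − 91, ≈ 58 males), assuming no missing data, so the result (χ² ≈ 4.47) is close to, but not identical with, the reported 4.447.

```python
import math


def chi_square_cramers_v(table):
    """Pearson chi-square (no continuity correction), Cramér's V, df, and p (df = 1 only)."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, r_tot in enumerate(row_totals):
        for j, c_tot in enumerate(col_totals):
            expected = r_tot * c_tot / n  # expected count under independence
            chi2 += (table[i][j] - expected) ** 2 / expected
    df = (len(row_totals) - 1) * (len(col_totals) - 1)
    k = min(len(row_totals), len(col_totals))
    cramers_v = math.sqrt(chi2 / (n * (k - 1)))
    # With 1 df, chi-square is a squared standard normal, so p = erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2)) if df == 1 else None
    return chi2, cramers_v, df, p


# Rows: daily survey respondents, nonrespondents; columns: male, female.
# Approximate counts back-calculated from Table 1; not the study's raw data.
chi2, v, df, p = chi_square_cramers_v([[34, 57], [58, 53]])
print(f"chi2 = {chi2:.3f}, V = {v:.3f}, df = {df}, p = {p:.3f}")
```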

Last, to examine the representativeness of daily survey responses among daily survey respondents, we estimate a series of t-tests comparing the mean number of days completed between groups defined by the characteristics in question. All analyses were conducted using SPSS v. 20 (IBM Corporation 2011).
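The text does not say which t-test variant was used; SPSS's Independent-Samples T Test reports both the equal-variance and the unequal-variance (Welch) versions. The sketch below assumes Welch's version, which does not require equal group variances, and returns only the statistic and the Welch-Satterthwaite degrees of freedom; a p-value would need a t-distribution CDF (e.g., from SciPy), which is omitted to keep the example stdlib-only. The input data are hypothetical.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for two independent samples."""
    se2_a = variance(a) / len(a)  # squared standard error of the mean, group a
    se2_b = variance(b) / len(b)  # squared standard error of the mean, group b
    t = (mean(a) - mean(b)) / math.sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1))
    return t, df


# Hypothetical counts of daily surveys completed in two respondent subgroups.
t, df = welch_t([10, 12, 14], [4, 6, 8])
```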

Results

Response metrics

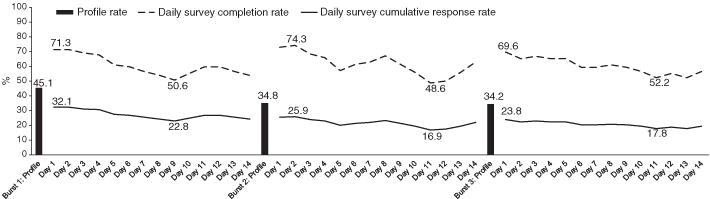

Burst-specific response metrics are illustrated in Figure 1; overall response metrics are illustrated in Figure 2. To assist in the interpretation of the burst-specific metrics, we first describe burst 1 in detail. In burst 1, 193 of the 202 young adults assigned to the measurement burst group were eligible (i.e., were at least 18 years old) to participate in the first wave of data collection. Of those 193 young adults, 87 completed the profile survey, resulting in a profile rate of 45.1 percent. Of the 87 young adults eligible to complete the daily surveys (because they completed the profile survey), between 44 and 62 completed a particular daily survey, resulting in daily survey completion rates ranging from 50.6 percent to 71.3 percent. The daily survey cumulative response rate is the product of the profile and completion rates and ranges from 22.8 percent to 32.1 percent.
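The burst 1 figures can be reproduced from the four counts given in the text. This short check only restates the reported arithmetic; it assumes, as the text implies, that 44 and 62 are the fewest and most completers of any single daily survey.

```python
# Burst 1 counts reported in the text.
eligible = 193                 # age-eligible sample members
profile_completers = 87        # completed the burst 1 profile survey
daily_min, daily_max = 44, 62  # fewest and most completers of any single daily survey

profile_rate = profile_completers / eligible
completion_range = [n / profile_completers for n in (daily_min, daily_max)]
# Cumulative rate = profile rate x completion rate, i.e., daily completers / eligible.
cumulative_range = [profile_rate * c for c in completion_range]

print(f"profile rate: {profile_rate:.1%}")                               # 45.1%
print("completion:", " to ".join(f"{c:.1%}" for c in completion_range))  # 50.6% to 71.3%
print("cumulative:", " to ".join(f"{c:.1%}" for c in cumulative_range))  # 22.8% to 32.1%
```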

Figure 1.

Burst-specific response metrics.

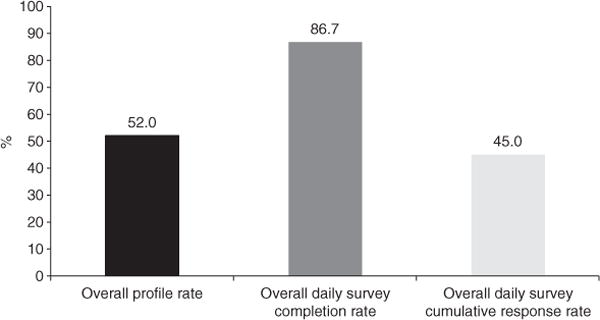

Figure 2.

Overall response metrics.

Due to age-eligibility restrictions (i.e., at least age 18), 201 and 202 sample members were eligible for the burst 2 and burst 3 profile surveys, respectively. Of those eligible, 34.8 percent and 34.2 percent completed the second and third profile surveys, respectively (profile rate). Of those who completed the profile survey for a given burst, completion rates for the daily surveys ranged from 48.6 percent to 74.3 percent in burst 2 and 52.2 percent to 69.6 percent in burst 3 (daily survey completion rate). Daily survey cumulative response rates ranged from 16.9 percent to 25.9 percent in burst 2 and 17.8 percent to 23.8 percent in burst 3.

As illustrated in Figure 2, more than half (52.0 percent) of the initial sample completed at least one profile survey. Among profile survey respondents, 86.7 percent completed at least one daily survey. The overall daily survey cumulative response rate was 45.0 percent. Thus, 45.0 percent of the initial sample completed at least one daily survey.
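The overall metrics obey the same product identity as the burst-specific ones. Using the counts in Table 1 (105 profile survey respondents and 91 daily survey respondents among the 202 initial sample members), a worked check:

```latex
\[
\underbrace{\frac{105}{202}}_{\text{overall profile rate} \,\approx\, 52.0\%}
\times
\underbrace{\frac{91}{105}}_{\text{overall completion rate} \,\approx\, 86.7\%}
= \frac{91}{202} \approx 45.0\%
\]
```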

Research Question 1: Predictors of Overall Daily Survey Cumulative Response

To evaluate the representativeness of an analytic sample restricted to sample members completing at least one daily survey across all three 14-day bursts (i.e., all 42 days), we examined predictors of overall daily survey cumulative response. Results are presented in Table 1. Nonrespondents to the set of daily surveys were more likely to be male, a race/ethnicity other than white, and from an urban high school. With respect to baseline substance use, nonrespondents were less likely to have reported binge drinking and more likely to have reported lifetime or past 12-month other illegal drug use. There were no differences between respondents and nonrespondents by parental education, college plans, alcohol use, or marijuana use.

Table 1.

Prediction of overall profile survey response, overall daily survey completion, and overall daily survey cumulative response.

| Characteristic | Profile responseᵃ: Total (n=202) % | Profile responseᵃ: R (n=105) % | Profile responseᵃ: NR (n=97) % | Profile responseᵃ: χ² | Profile responseᵃ: ΦC | Daily completionᵇ: Total (n=105) % | Daily completionᵇ: R (n=91) % | Daily completionᵇ: NR (n=14) % | Daily completionᵇ: χ² | Daily completionᵇ: ΦC | Daily cumulative responseᶜ: Total (n=202) % | Daily cumulative responseᶜ: R (n=91) % | Daily cumulative responseᶜ: NR (n=111) % | Daily cumulative responseᶜ: χ² | Daily cumulative responseᶜ: ΦC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Sociodemographics | | | | | | | | | | | | | | | |
| Male | 45.5 | 39.0 | 52.7 | 3.701 | 0.137 | 39.0 | 37.4 | 50.0 | 0.814 | 0.088 | 45.5 | 37.4 | 52.3 | 4.447* | 0.150 |
| White | 71.8 | 75.2 | 68.0 | 1.289 | 0.080 | 75.2 | 79.1 | 50.0 | 5.523* | 0.229 | 71.8 | 79.1 | 65.8 | 4.403* | 0.148 |
| Parental college education | 62.9 | 68.6 | 56.7 | 3.044 | 0.123 | 68.6 | 69.2 | 64.3 | 0.138 | 0.036 | 62.9 | 69.2 | 57.7 | 2.869 | 0.119 |
| School location | | | | 1.424 | 0.084 | | | | 10.970** | 0.323 | | | | 6.409* | 0.178 |
| Urban | 17.3 | 14.3 | 20.6 | | | 14.3 | 9.9 | 42.9 | | | 17.3 | 9.9 | 23.4 | | |
| Rural | 49.0 | 50.5 | 47.4 | | | 50.5 | 53.8 | 28.6 | | | 49.0 | 53.8 | 45.0 | | |
| Suburban | 33.7 | 35.2 | 32.0 | | | 35.2 | 36.3 | 28.6 | | | 33.7 | 36.3 | 31.5 | | |
| College plans | | | | | | | | | | | | | | | |
| Definite college plans | 50.0 | 52.5 | 47.3 | 0.516 | 0.052 | 52.5 | 52.9 | 50.0 | 0.040 | 0.020 | 50.0 | 52.9 | 47.7 | 0.521 | 0.052 |
| Substance use | | | | | | | | | | | | | | | |
| Alcohol: Lifetime | 70.1 | 75.5 | 64.6 | 2.763 | 0.119 | 75.5 | 75.6 | 75.0 | 0.002 | 0.004 | 70.1 | 75.6 | 24.4 | 2.212 | 0.107 |
| Alcohol: Past 12 months | 64.8 | 68.0 | 61.5 | 0.916 | 0.069 | 68.0 | 67.4 | 72.7 | 0.125 | 0.036 | 64.8 | 67.4 | 62.6 | 0.486 | 0.050 |
| Alcohol: Binge, past 2 weeks | 25.5 | 33.3 | 17.5 | 6.443* | 0.181 | 33.3 | 34.5 | 25.0 | 0.427 | 0.066 | 25.5 | 34.5 | 18.3 | 6.628* | 0.184 |
| Marijuana: Lifetime | 47.0 | 45.2 | 49.0 | 0.284 | 0.038 | 45.2 | 43.3 | 57.1 | 0.933 | 0.095 | 47.0 | 43.3 | 50.0 | 0.883 | 0.066 |
| Marijuana: Past 12 months | 37.9 | 37.9 | 37.9 | 0.000 | 0.000 | 37.9 | 36.0 | 50.0 | 1.014 | 0.099 | 37.9 | 36.0 | 39.4 | 0.254 | 0.036 |
| Other illegal drug: Lifetime | 14.4 | 9.7 | 18.8 | 3.359 | 0.130 | 9.7 | 6.7 | 30.8 | 7.528** | 0.270 | 14.4 | 6.7 | 20.2 | 7.449** | 0.193 |
| Other illegal drug: Past 12 months | 8.6 | 3.9 | 13.7 | 5.946* | 0.174 | 3.9 | 2.2 | 15.4 | 5.196 | 0.226 | 8.6 | 2.2 | 13.9 | 8.387** | 0.206 |

Note. R = respondent; NR = nonrespondent. χ² = chi-square test statistic for the test of difference between respondents and nonrespondents. ΦC = Cramér’s V coefficient (an effect size measure that is equivalent to the phi coefficient (Φ) in the case of a 2×2 contingency table).

ᵃ Completing at least one profile survey (among all 202 young adults).

ᵇ Completing at least one daily survey conditional on having completed at least one profile survey (i.e., among the 105 eligible young adults).

ᶜ Completing at least one daily survey overall (i.e., not conditional on having completed at least one profile survey; thus, among all 202 young adults).

* p < 0.05; ** p < 0.01; *** p < 0.001.
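For reference, the standard formula for Cramér's V on an r × c table with N observations is given below. With k = min(r, c) = 2, it reduces to the square root of χ²/N, the absolute value of Φ, which is why the two coincide for 2×2 tables. As a worked check against Table 1, the 3×2 school location comparison for overall daily survey completion (χ² = 10.970, n = 105) reproduces the reported value:

```latex
\[
V = \sqrt{\frac{\chi^2}{N\,(k-1)}}, \qquad k = \min(r, c)
\]
\[
V_{\text{school location}} = \sqrt{\frac{10.970}{105\,(2-1)}} \approx 0.323
\]
```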

Research Question 2: Predictors of Overall Profile Response and Overall Daily Survey Completion

To determine the source of differential nonresponse to the set of daily surveys, we examined predictors of response at each stage of the design. Specifically, we considered the representativeness of (1) participants who completed at least one profile survey (overall profile survey response) and (2) participants who completed at least one daily survey conditional on completing at least one profile survey (overall daily survey completion). Results are presented in Table 1. Nonrespondents to the set of profile surveys were less likely to have reported binge drinking at baseline and more likely to have reported past 12-month other illegal drug use. There were no differences between profile survey respondents and nonrespondents on sociodemographics, college plans, lifetime or past 12-month alcohol use, marijuana use, or lifetime other illegal drug use. Among profile survey respondents, nonrespondents to the set of daily surveys were more likely to report a race/ethnicity other than white, to have attended an urban high school, and to have reported lifetime other illegal drug use at baseline. There were no differences between daily survey respondents and nonrespondents on gender, parental education, college plans, alcohol use, marijuana use, or past 12-month other illegal drug use.

Research Question 3: Predictors of the Total Number of Daily Surveys Completed by Daily Survey Respondents

On average, participants who completed at least one daily survey in a given burst completed approximately 10 of the 14 daily surveys (burst 1: s = 4.20, median = 11; burst 2: s = 4.19, median = 11; burst 3: s = 4.55, median = 11). Participants who completed at least one daily survey across the three bursts completed an average of 21 of the 42 daily surveys (s = 13.49, median = 15); 13.2 percent completed all 42 daily surveys. We also computed mean differences in the number of daily surveys completed by respondent characteristics and tested them with t-tests (results not presented); none of the comparisons was statistically significant.

Discussion

In the current paper, we defined response at the various stages of the data collection and examined the source of nonresponse to the set of daily surveys. Generally speaking, response metrics were highest for the first request of a given type (i.e., response rates decreased over time) (see also Bailar 1989; Bolger and Laurenceau 2013). Notably, most participants (86.7 percent) who completed at least one profile survey also completed at least one daily survey. That is, once sample members had completed a profile survey, the task of completing the daily surveys did not seem so overwhelming that they opted out entirely.

Respondents to the set of daily surveys differed from the initial sample in important, but expected, ways. Perhaps more importantly, we found that patterns of nonresponse differed across data collection stages. For example, although participation in the profile surveys did not vary by race/ethnicity, participation in the daily surveys among profile survey respondents was lower among young adults who reported a race/ethnicity other than white, who attended an urban high school, or who reported lifetime other illegal drug use in high school. Conversely, although participation in the daily surveys among profile survey respondents did not vary by baseline binge drinking or past 12-month other illegal drug use, young adults who reported binge drinking were more likely, and those who reported past 12-month other illegal drug use were less likely, to participate in at least one profile survey. Last, among daily survey respondents, respondent characteristics did not predict the number of daily surveys completed.

Knowing the source of nonresponse can help inform efforts to improve the representativeness of daily survey respondents. Specifically, data collection methods can be tailored to encourage response among population subgroups at particular data collection stages. For example, when participation in the profile surveys is the primary driver of overall differential nonresponse, respondent contacts might emphasize completing the profile surveys. Conversely, when participation in the daily surveys is the primary driver, respondent contacts might highlight the importance of completing even a small number of daily surveys. Finally, when person-days are representative of daily survey respondents but daily survey respondents are not representative of the initial sample, efforts encouraging profile survey respondents to begin the daily surveys might do more to improve the representativeness of daily survey respondents than efforts encouraging daily survey respondents to complete a greater number of daily surveys.

In conclusion, the use of standardized response metrics for examining nonresponse will facilitate comparisons among studies using measurement burst designs and, thus, shared learning about the strengths and limitations of these designs. Additionally, more research about predictors of nonresponse in such designs and the use of responsive data collection strategies can help improve data collection methods and, ultimately, data quality.

Acknowledgments

The National Institute on Drug Abuse funded the study and the preparation of this manuscript (R21 DA031356 to M.E.P.). The content here is solely the responsibility of the authors and does not necessarily represent the official views of the sponsors.

Appendix

Table A1.

Baseline survey measures.

| Construct | Question stem | Response options and data coding |
|---|---|---|
| Sex | What is your sex? | 1 = Male; 0 = Female |
| Race/ethnicity | How do you describe yourself? (Mark all that apply.) | 1 = White (Caucasian); 0 = Black or African American; 0 = Mexican American or Chicano; 0 = Cuban American; 0 = Puerto Rican; 0 = Other Hispanic or Latino; 0 = Asian American; 0 = American Indian or Alaska Native; 0 = Native Hawaiian or Other Pacific Islander |
| Parental education | What is the highest level of schooling your father completed? What is the highest level of schooling your mother completed? | 0 = Completed grade school or less; 0 = Some high school; 0 = Completed high school; 1 = Some college; 1 = Completed college; 1 = Graduate or professional school after college; . = Don’t know, or does not apply |
| College plans | How likely is it that you will graduate from college (four-year program) after high school? | 0 = Definitely won’t; 0 = Probably won’t; 0 = Probably will; 1 = Definitely will |
| Alcohol: Lifetime | On how many occasions (if any) have you had an ALCOHOLIC BEVERAGE to drink – more than just a few sips… …in your lifetime? | 0 = 0 occasions; 1 = 1–2 occasions; 1 = 3–5 occasions; 1 = 6–9 occasions; 1 = 10–19 occasions; 1 = 20–39 occasions; 1 = 40 or more |
| Alcohol: Last 12 months | …during the last 12 months? | Same as Alcohol: Lifetime |
| Alcohol: Binge, last 2 weeks | Think back over the LAST TWO WEEKS. How many times have you had 5 OR MORE drinks in a row? | 0 = None; 1 = Once; 1 = Twice; 1 = Three to five times; 1 = Six to nine times; 1 = Ten or more times |
| Marijuana: Lifetime | On how many occasions (if any) have you used MARIJUANA OR HASHISH… …in your lifetime? | 0 = 0 occasions; 1 = 1–2 occasions; 1 = 3–5 occasions; 1 = 6–9 occasions; 1 = 10–19 occasions; 1 = 20–39 occasions; 1 = 40+ occasions |
| Marijuana: Last 12 months | …during the last 12 months? | Same as Marijuana: Lifetime |
| Other illegal drug use: Lifetime | On how many occasions (if any) have you used ANY OTHER ILLEGAL DRUGS… …in your lifetime? | |
| Other illegal drug use: Last 12 months | …during the last 12 months? | |

Footnotes

¹ As noted on the sampling frame.

Contributor Information

Jamie Griffin, Institute for Social Research, University of Michigan.

Megan E. Patrick, Institute for Social Research, University of Michigan.

References

- American Association for Public Opinion Research. Standard definitions: final dispositions of case codes and outcome rates for surveys. 7th ed. AAPOR; Deerfield, IL: 2011.

- Bailar BA. Information needs, surveys, and measurement errors. In: Kasprzyk D, Duncan GJ, Kalton G, Singh MP, editors. Panel surveys. John Wiley & Sons; New York: 1989.

- Bolger N, Laurenceau JP. Intensive longitudinal methods: an introduction to diary and experience sampling research. The Guilford Press; New York: 2013.

- Callegaro M, DiSogra C. Computing response metrics for online panels. Public Opinion Quarterly. 2009;72(5):1008–1032.

- Couper MP. Web surveys: a review of issues and approaches. Public Opinion Quarterly. 2000;64(4):464–494.

- Griffin J, Patrick ME. Nonresponse bias in a longitudinal measurement design examining substance use across the transition out of high school. Drug and Alcohol Dependence. 2014;143:232–238. doi: 10.1016/j.drugalcdep.2014.07.039.

- IBM Corporation. IBM SPSS Statistics for Windows Version 20.0. IBM Corporation; Armonk, NY: 2011.

- Kasprzyk D, Duncan GJ, Kalton G, Singh MP. Panel surveys. John Wiley & Sons; New York: 1989.

- Leigh BC. Using daily reports to measure drinking and drinking patterns. Journal of Substance Abuse. 2000;12(1–2):51–65. doi: 10.1016/s0899-3289(00)00040-7.

- Nesselroade JR. The warp and woof of the developmental fabric. In: Downs R, Liben L, Palermo D, editors. Visions of development, the environment, and aesthetics: the legacy of Joachim F. Wohlwill. Erlbaum; Hillsdale, NJ: 1991.

- Raudenbush SW, Bryk AS. Hierarchical linear models: applications and data analysis methods. Vol. 1. Sage; Thousand Oaks, CA: 2002.

- Sliwinski MJ. Measurement-burst designs for social health research. Social and Personality Psychology Compass. 2008;2(1):245–261.

- Snijders T, Bosker R. Multilevel analysis: an introduction to basic and advanced multilevel modeling. Sage; London: 1999.