Abstract

Pictorial examples during creative thinking tasks can lead participants to fixate on these examples and reproduce their elements even when yielding suboptimal creative products. Semantic memory research may illuminate the cognitive processes underlying this effect. Here, we examined whether pictures and words differentially influence access to semantic knowledge for object concepts depending on whether the task is close- or open-ended. Participants viewed either names or pictures of everyday objects, or a combination of the two, and generated common, secondary, or ad hoc uses for them. Stimulus modality effects were assessed quantitatively through reaction times and qualitatively through a novel coding system, which classifies creative output on a continuum from top-down-driven to bottom-up-driven responses. Both analyses revealed differences across tasks. Importantly, for ad hoc uses, participants exposed to pictures generated more top-down-driven responses than those exposed to object names. These findings have implications for accounts of functional fixedness in creative thinking, as well as theories of semantic memory for object concepts.

Keywords: creative problem solving, divergent thinking, object concepts, verbal and pictorial stimuli, semantic knowledge, object function, functional fixedness

The use of examples as instructional tools or as springboards for creative idea generation is widespread among students and professionals in many fields across science, engineering, design, and the arts. Psychological studies on creative problem solving have explored factors that determine whether or not one’s knowledge about the world or experience with a particular kind of problem or situation can facilitate efforts to solve a new problem with similar features. This phenomenon of analogical transfer is well-established in the creativity literature (e.g., Gick & Holyoak, 1980, 1983; Holyoak, 1984, 2005). However, analogical transfer is not always positive. Under certain circumstances, prior knowledge or experience with a particular example or solution strategy may have negative effects for creative thought (e.g., Gentner, 1983; Osman, 2008). Functional fixedness or fixation is an instance of such negative transfer, wherein a solver’s experience with a particular function of an object impedes using the object in a novel way during creative problem solving (Duncker, 1945; Scheerer, 1963).

A number of studies have demonstrated that the presence of pictorial examples may exacerbate functional fixedness during creative generation—or open-ended—tasks (i.e., tasks that do not appear to have one correct solution and for which the solution possibilities appear infinite). Such tasks are presumed to rely primarily on divergent thinking, a notion that was originally introduced by Guilford (1950; 1967) to describe a set of processes hypothesized to result in the generation of ideas that diverge from the ordinary. For example, Jansson and Smith (1991; see also Purcell & Gero, 1996) asked engineering design students and professionals to generate as many solutions as possible for a series of such open-ended design problems (e.g., design a bike-rack for a car). Participants who were shown example designs with the problems were significantly more likely to conform to those examples relative to participants who were asked to solve the problems without such examples. The phenomenon is not exclusive to design experts. Chrysikou and Weisberg (2005) have demonstrated that in similar open-ended design tasks, naïve-to-design participants who were shown a problematic pictorial example produced significantly more elements of the example in their solutions and included more flaws in their designs, relative to participants who were not shown such examples or who were explicitly instructed to avoid them. Similarly, Smith, Ward, and Schumacher (1993; see also Ward, Patterson, & Sifonis, 2004) asked participants to imagine and create designs for different categories (e.g., animals to inhabit a foreign planet, new toys). Participants who were shown pictorial examples tended to conform to these examples, even after completing a distractor task prior to generating their solutions or being instructed to avoid reproducing the example solutions.

Overall, research on design fixation suggests that naïve participants and experts alike are susceptible to the effects of negative transfer from pictures during divergent thinking tasks. That is, in open-ended creative problem-solving tasks, pictorial examples appear to influence how participants retrieve aspects of their knowledge about certain objects or situations to solve the problem at hand. As a result, they tend to fixate on pictorial examples and reproduce their elements, strikingly even in cases where the examples are explicitly described as problematic. Why would pictorial examples have such a constraining effect on creativity? In other words, why would pictures bias semantic memory retrieval in a particular way during creative generation (e.g., design, artistic) tasks? Although traditionally not discussed in this context, research on the organization and function of semantic memory may shed some light on the cognitive processes underlying functional fixedness from pictures during divergent thinking. Behavioral, neuropsychological, and neuroimaging evidence suggests that pictures and words may access different components of semantic memory, and, thus, may make certain aspects of our knowledge about the world more salient than others depending on context and circumstances.

Indeed, one of the key questions concerning research on the structure and organization of knowledge bears on the format of object knowledge representations (e.g., analog versus symbolic). Earlier theories (e.g., Paivio, 1986) examined the possibility of distinct systems through which this semantic knowledge would be represented, for example, a dual code for the processing of visual and verbal information. The influence of stimulus format (e.g., whether pictorial or verbal) on the retrieval of object knowledge has been explored in early investigations of semantic processing which revealed both similarities and differences in reaction times and accuracy for a variety of tasks (e.g., naming, lexical or object decision tasks, priming manipulations, interference effects; Glaser, 1992; Kroll & Potter, 1984; Potter & Faulconer, 1975; Potter, Valian, & Faulconer, 1977).

Later studies suggested that pictures might allow for privileged access to knowledge about functions and motor actions associated with the typical use of the object (relative to other semantic information), whereas words might permit direct access to phonological and lexical (prior to semantic) information (see Glaser & Glaser, 1989). For example, when asked to name and make action decisions (e.g., pour or twist?) and semantic/contextual decisions (e.g., found in kitchen?) about words or pictures of common objects, participants were faster at reading words than naming pictures, whereas they were faster in making action and semantic/contextual decisions for pictures than for words (Chainay & Humphreys, 2002; see also Rumiati & Humphreys, 1998; Saffran, Coslett, & Keener, 2003). Furthermore, using a free association task, Saffran, Coslett, and Keener (2003) reported that pictures elicited more verbs than did verbal stimuli, particularly for non-living, manipulable objects. Finally, Rumiati and Humphreys (1998) have shown that when generating a use-relevant gesture in response to the name or line drawing of an object, participants made more visual (relative to semantic) errors with pictures but not with words (i.e., they generated a gesture appropriate for an item that was visually similar to the target, but not associated with either the target or from the same functional category, e.g., making a hammering gesture in response to a picture of a razor).

Dissociations in performance on semantic knowledge tasks that use pictorial and verbal stimuli have also been reported in studies of neuropsychological patients. For instance, patients with optic aphasia exhibit an inability to retrieve the names of objects presented visually, whereas their performance with lexical/verbal stimuli remains unimpaired (e.g., Hillis & Caramazza, 1995; Riddoch & Humphreys, 1987). In contrast, Saffran, Coslett, Martin, and Boronat (2003) describe the case of a patient with progressive fluent aphasia who exhibited significantly better performance on certain object recognition tasks when she was prompted with pictures relative to words. These and other findings from patients with neuropsychological deficits (e.g., Lambon Ralph & Howard, 2000; McCarthy & Warrington, 1988; Warrington & Crutch, 2007; see also Humphreys & Riddoch, 2007; Riddoch, Humphreys, Hickman, Cliff, Daly, & Colin, 2006) further suggest that pictures and words may access different types of semantic information.

A number of positron emission tomography (PET) and functional magnetic resonance imaging (fMRI) studies using a variety of tasks (e.g., classification, similarity matching, working memory) with pictorial and verbal stimuli have offered evidence for a common semantic system for pictures and words in the ventral occipitotemporal cortex. However, modality-specific activations were also reported in posterior brain regions when action-related conceptual properties were accessed by pictures and in anterior temporal brain regions when more complex conceptual properties were accessed by words (Bright, Moss, & Tyler, 2004; Gates & Yoon, 2005; Postler, De Bleser, Cholewa, Glauche, Hamzei, & Weiller, 2003; Sevostianov, Horwitz, Nechaev, Williams, From, & Braun, 2002; Vandenberghe, Price, Wise, Josephs, & Frackowiak, 1996; Wright et al., 2008; see also Chao, Haxby, & Martin, 1999; Tyler, Stamatakis, Bright, Acres, Abdallah, Rodd, & Moss, 2004).

Overall, behavioral, neuropsychological, and neuroimaging findings support a common semantic knowledge system in which object concepts are distributed patterns of activation over multiple properties, including perceptual properties (e.g., shape, size, color), motoric properties (e.g., use-appropriate gesturing, mode of manipulation), and abstract properties (e.g., function, relational information) that can be differentially tapped by pictorial or verbal stimuli based on the requirements of a given task (Allport, 1985; Humphreys & Forde, 2001; Plaut, 2002; Shallice, 1993; Tyler & Moss, 2001; Warrington & McCarthy, 1987; see also Chainay & Humphreys, 2002; Rumiati & Humphreys, 1998; Thompson-Schill, 2003; Thompson-Schill, Kan, & Oliver, 2006). Particularly relevant to their potential influence on creative generation or divergent thinking tasks, stimuli in pictorial format may allow for direct access to functional, action-related information (e.g., use-appropriate gesturing, manner of manipulation, object-specific motion attributes), whereas stimuli in verbal format may allow for direct access to other lexical and semantic information.

The Present Study

The investigation of the differential tapping of semantic memory for object concepts by pictures and words has so far involved only simple classification tasks (e.g., naming, gesture generation, similarity judgments), yet these findings may also have important implications for creativity and divergent thinking, specifically in the context of everyday problem solving tasks involving common objects. Indeed, given the apparent link between pictorial stimuli and information related to an object’s canonical function and mode of manipulation as discussed above, pictorial stimuli may induce functional fixedness to an object’s normative or depicted use during creative problem solving. In other words, pictorial stimuli may render properties related to the already-learned actions associated with a given object more salient than others, hence impeding performance on divergent thinking tasks.

Despite its potential importance for understanding the cognitive processes underlying creative thinking, research exploring how the structure and function of semantic memory for objects may guide participants’ performance during open-ended tasks has been limited (e.g., Gilhooly, Fioratou, Anthony, & Wynn, 2007; Chrysikou, 2006, 2008; Keane, 1989; Vallée-Tourangeau, Anthony, & Austin, 1998; see also Walker & Kintsch, 1985). Notably, Vallée-Tourangeau et al. (1998) asked participants to instantiate taxonomic and ad hoc categories for objects and to report retrospectively the strategies they followed to perform the task. An analysis of these reports revealed that during category instantiation participants largely relied on the retrieval of examples from their personal experiences, and significantly less so on the retrieval of abstract, encyclopedic information about category members. In addition, Gilhooly et al. (2007) presented participants with the Alternative Uses divergent thinking task (Christensen & Guilford, 1958) in which they were asked to generate as many alternative uses as possible for six common objects. Some participants were asked to think aloud during the task and a record of their thought processes was analyzed according to the type of memory retrieval strategy participants followed during the task. It was found that participants’ earlier responses were based on a top-down strategy of retrieval from long-term memory of already known uses for the objects. In contrast, later responses were based on bottom-up strategies, such as generating object properties or disassembling the object into its components. Importantly, the novelty of the generated uses was positively correlated with the bottom-up, disassembling strategy.

Overall, past work has demonstrated that (a) the presence of pictorial examples may lead to functional fixedness in open-ended creative thinking tasks, (b) pictures and words may access different components of semantic memory, and (c) people may rely more or less on top-down or bottom-up strategies when accessing their knowledge about objects depending on task demands. However, despite the reported deleterious effects of pictorial examples for problem solving as discussed above, in conjunction with studies demonstrating privileged access to action-related information from pictorial stimuli in close-ended, convergent thinking tasks, no study has explored how the modality of the stimulus (verbal or pictorial) may influence whether participants will adopt a top-down or a bottom-up memory retrieval strategy in open-ended, divergent thinking tasks.

Accordingly, the present experiment examined whether pictures and words would differentially influence access to semantic knowledge for object concepts depending on whether the task is close- or open-ended. We built on previous work on semantic memory retrieval that has focused on close-ended, convergent thinking tasks (i.e., tasks having a specific correct response) by exploring the effects of verbal and pictorial stimuli on the Object Use task (a version of the Alternative Uses, divergent thinking task adapted from Christensen & Guilford, 1958). In each of three subcomponents of the task, the requirements vary such that participants can retrieve from memory and generate the typical function for an object (Common Use task, close-ended), or they are instructed, instead, to generate a secondary function for an object (Common Alternative Use task, finite number of eligible responses) or an ad hoc, non-canonical function for the object (Uncommon Alternative Use task, open-ended). This task, thus, allowed us to manipulate systematically the degree to which participants are asked a close- or open-ended question. In addition, we aimed to extend prior research on semantic memory retrieval strategies in open-ended tasks (e.g., Gilhooly et al., 2007; Vallée-Tourangeau et al., 1998) by manipulating stimulus modality (verbal, pictorial, or a combination of the two), to examine whether the type of stimulus would differentially guide participants’ responses as a function of the task requirements. In contrast to prior studies that involved multiple responses for the same stimulus (e.g., in the Alternative Uses task), here participants provided a single response for each study item, which additionally allowed for the collection of reaction time measures for the task. Finally, we aimed to develop and introduce a novel coding system for single-response data on the Object Use task.
Past assessments of creativity (e.g., the Torrance Tests of Creative Thinking, Torrance, 1974), have evaluated both verbal and figural aspects of divergent thought typically on fluency (i.e., the number of suitable ideas that were produced within a particular time period), flexibility (i.e., the number of unique ideas or types of solutions generated by a given person), and originality (i.e., the number of ideas generated by a given individual that were not produced by many other people), in addition to elaboration (the amount of detail in a given response). Although these traditional metrics are important for assessing creativity, they would not have been able to capture our particular interest in this study in top-down-driven relative to bottom-up-driven responses. As such, we developed a novel coding system that allows for the qualitative coding of responses on a continuum ranging from top-down responses that are based on the retrieval of abstract object properties (i.e., canonical function, use-specific mode of manipulation) to bottom-up responses that are based on the retrieval of concrete object properties (i.e., shape, size, materials, removable parts).

We hypothesized that: (a) if stimulus modality (verbal or pictorial) can influence the availability of object properties for retrieval, this should be significantly more pronounced during the open-ended components of the task (i.e., during the generation of secondary and, particularly, ad hoc uses). That is, when the task is open-ended, participants’ responses would differ depending on which object attributes are tapped by different stimulus modalities; however, when the task is close-ended, being prompted with the name or picture of the object (or a combination of the two) should not lead to differences across stimulus conditions, as measured by reaction times and our novel categorization system. We further hypothesized that: (b) if, as discussed above, pictorial materials render properties related to the learned actions associated with a given object more salient than other properties, the presence of pictorial stimuli will influence the extent to which participants’ responses are based on a top-down or a bottom-up semantic retrieval strategy, thus resulting in functional fixedness. That is, although they need not be associated with longer latencies, pictorial stimuli will interfere with the generation of non-canonical functions, leading to more top-down-based responses, relative to verbal stimuli.

Method

Participants

Sixty-three right-handed, native English speakers (N = 63; mean age = 21.12 years, 23 males) participated in this study for course credit. Participants were randomly assigned to one of three conditions, based on the type of stimuli they were shown: (a) the Name condition (n = 22; mean age = 22.39 years, 8 males); (b) the Picture condition (n = 23; mean age = 21.59 years, 6 males); or (c) the Name and Picture condition (n = 18; mean age = 21.88 years, 9 males). Participants across the three conditions did not differ in mean age or in the distribution of males to females. All participants provided informed consent according to university guidelines.

Materials

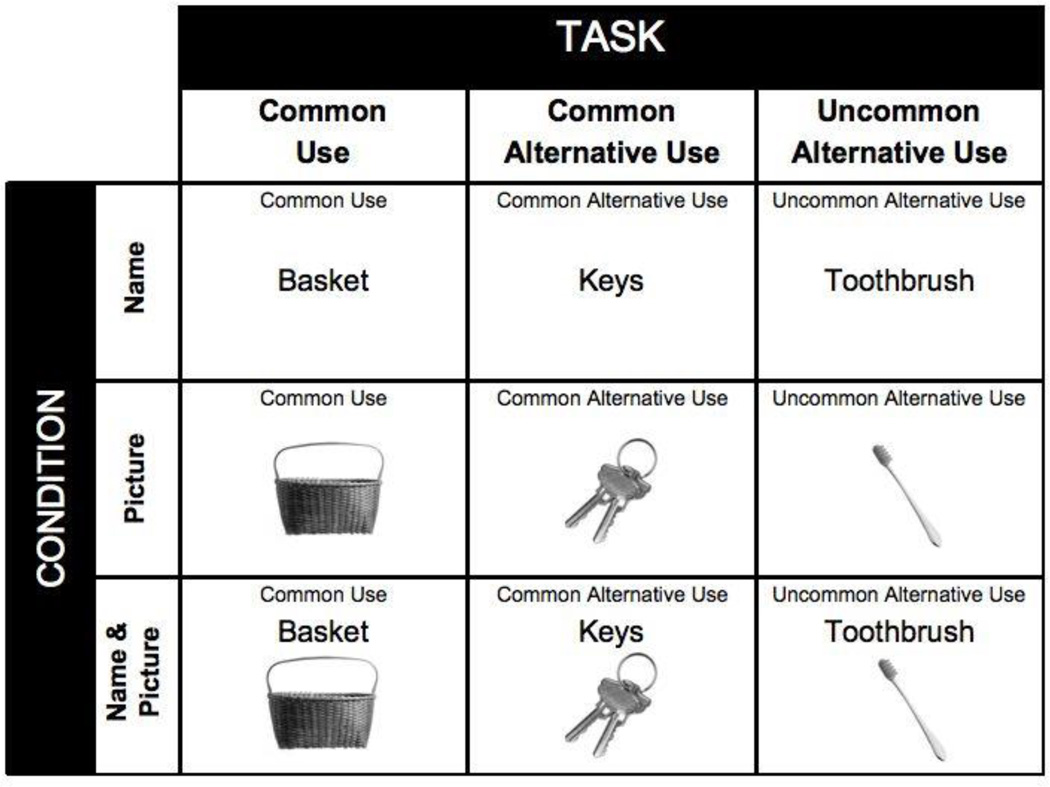

For the Picture condition, 144 black-and-white images of everyday objects, divided randomly into six blocks of 24 items, were used as stimuli. They were selected from a larger set of 220 items based on data from a different group of participants (N = 62, mean age = 20.14, 28 males), who completed a web-based survey asking for the name of each object and for common, common alternative, and uncommon alternative uses for each of them. They further reported how easy it was to generate each type of use for each item (on a 7-point Likert-like scale). Objects with high name agreement (> 75%) and ease of use-generation rating (> 5) were selected for the experiment. For the Name condition, the stimuli were the object names, as determined by the modal name produced by the majority of subjects in the norming study. For the Name and Picture condition, the stimuli consisted of the combination of the names and the pictures of the objects, with the image placed below the name of each object. Examples of stimuli are presented in Figure 1.
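The selection rule described above (name agreement above 75% and an ease-of-generation rating above 5) can be sketched as a simple filter. This is only an illustration: the `NormedItem` record, its field names, and the example values are hypothetical, and the sketch assumes one ease rating per item, whereas the text does not say how ratings for the three use types were combined.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NormedItem:
    """One candidate object from the norming survey (hypothetical record layout)."""
    name: str              # modal name given by norming participants
    name_agreement: float  # proportion producing the modal name (0 to 1)
    ease_rating: float     # ease-of-use-generation rating on the 7-point scale


def select_stimuli(items, min_agreement=0.75, min_ease=5.0):
    """Keep items whose name agreement and ease rating both exceed the thresholds."""
    return [
        item for item in items
        if item.name_agreement > min_agreement and item.ease_rating > min_ease
    ]


# Made-up values, for illustration only.
candidates = [
    NormedItem("chair", 0.98, 6.4),
    NormedItem("whisk", 0.71, 5.8),   # fails the name-agreement threshold
    NormedItem("trowel", 0.88, 4.2),  # fails the ease-rating threshold
    NormedItem("blanket", 0.93, 5.6),
]
selected = select_stimuli(candidates)
print([item.name for item in selected])
```

Both thresholds are applied as strict inequalities, matching the ">" signs in the text.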

Figure 1.

Examples of stimuli by condition and task

Each participant completed two blocks of each of the three experimental tasks (i.e., common use task, common alternative use task, and uncommon alternative use task) for a total of six blocks, the order of which was counterbalanced across participants. The assignment of stimuli to task conditions was also counterbalanced across subjects, and no stimuli were repeated during the experiment.
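The text does not specify the counterbalancing scheme, so the following is only one possible sketch. It assumes that block order cycles through all distinct orderings of the six task blocks and that stimulus blocks are rotated by one position per participant; the function names and the rotation rule are hypothetical.

```python
from itertools import permutations

TASKS = ("CU", "CAU", "UAU")


def block_orders():
    """All distinct orderings of six blocks that contain each task twice."""
    return sorted(set(permutations(TASKS * 2)))


def schedule_for(participant_index, n_stimulus_blocks=6):
    """Return six (task, stimulus_block) pairs for one participant.

    Assumed scheme: the task order cycles through the distinct orderings,
    and stimulus blocks are rotated by one position per participant, so
    no stimulus block is used twice within a session.
    """
    orders = block_orders()
    order = orders[participant_index % len(orders)]
    shift = participant_index % n_stimulus_blocks
    blocks = [(i + shift) % n_stimulus_blocks for i in range(n_stimulus_blocks)]
    return list(zip(order, blocks))


print(len(block_orders()))
print(schedule_for(0))
```

With two blocks per task there are 6!/(2!·2!·2!) = 90 distinct task orders, more than the 63 participants, so a full crossing of orders is not possible and some sampling or partial scheme must have been used.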

A desktop PC was used for stimulus presentation. A microphone compatible with the stimulus presentation program (E-Prime, Psychology Software Tools, Inc.) and a digital voice recorder (Sony Electronics, Inc.) were used to record participants’ voice onset and their verbal responses, respectively.

Procedure

For each experimental block participants performed either the Common Use (CU) task, or the Common Alternative Use (CAU) task, or the Uncommon Alternative Use (UAU) task. For the CU task, participants reported (aloud) the most typical or commonly-encountered use for each object (e.g., Kleenex tissue: use to wipe one’s nose); for the CAU task, participants reported a relatively common use for the object, that was frequent but not the most typical (e.g., Kleenex tissue: use to wipe up a spill); finally, for the UAU task, participants generated a novel use for the object, one they had not seen or attempted before or may have seen only once or twice in their lives, that would be plausible, yet, which would deviate significantly from the object’s common and common alternative uses (e.g., Kleenex tissue: use as stuffing in a box). Participants were informed that the tasks had no right or wrong answers and that they should feel free to produce any response they judged fit. They were instructed to respond as quickly as possible and to remain silent if unable to generate a response.

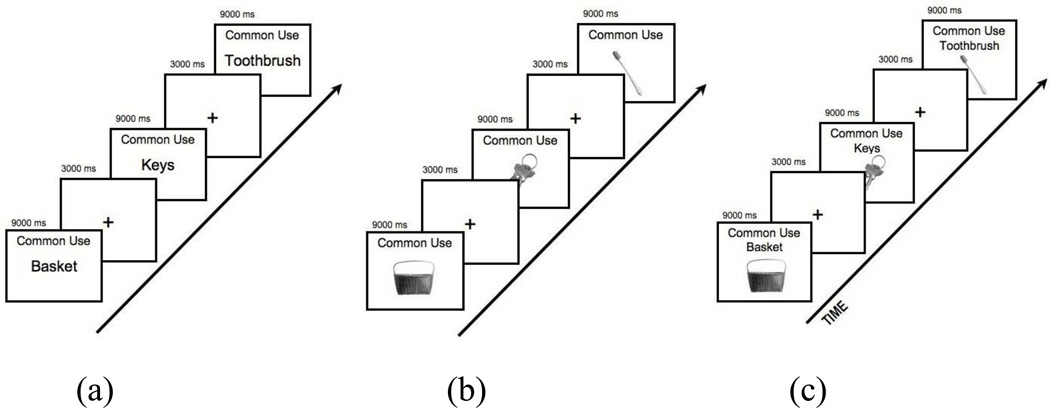

Each 7-minute block comprised 24 experimental trials, presented for 9000 ms followed by a fixation screen for 3000 ms (see Figure 2 for trial timing and composition). The task instructions were presented at the beginning of each block; a prompt also appeared above each trial item (i.e., “Common Use”, “Common Alternative Use”, or “Uncommon Alternative Use”; see Figure 1). Each subject completed a 5-minute training session consisting of three trials of each of the three experimental tasks. The experimental session lasted approximately one hour. At the end of the experiment, participants were debriefed on the purpose of the study and they were urged not to discuss the experiment with their classmates.

Figure 2.

Examples of trial timing and composition for: (a) the Name condition, (b) the Picture condition, and (c) the Name and Picture condition, for the Common use task. The timing and composition of the trials was the same for the Common alternative and Uncommon alternative tasks.

Results

Each participant’s voice-onset reaction times (RTs) per trial were recorded for quantitative analysis. Participants’ verbal responses were further recorded and later transcribed for qualitative analysis. We report the results for each of these measures separately in the sections that follow.

Analysis of Voice-Onset Reaction Times

Median voice-onset RTs were derived for each participant for each of the three experimental tasks (see Table 1), after eliminating any trials for which participants did not respond. Voice-onset RT data from one participant in the Picture condition were missing due to a software malfunction. Median RT data were subjected to a mixed, 3×3 analysis of variance (ANOVA), with type of task (CU, CAU, and UAU) as the within-subjects factor, and condition (Name, Picture, Name and Picture) as the between-subjects factor. The results revealed a significant main effect for task (F[2, 118] = 349.67, p < .001, η² = .86), but no main effect for condition (F[2, 59] = 0.26, p = .77, η² = .01), and no task by condition interaction (F[4, 118] = 0.65, p = .63, η² = .02). Across conditions, planned pairwise contrast comparisons showed that the common use task elicited significantly faster responses relative to the common alternative (F[1, 59] = 493.19, p < .001, η² = .89, Bonferroni correction) and uncommon alternative (F[1, 59] = 654.83, p < .001, η² = .92, Bonferroni correction) tasks, which did not reliably differ from each other (F[1, 59] = 2.22, p = .14, η² = .04). Overall, the generation of common uses was associated with significantly faster RTs relative to the generation of secondary and, particularly, ad hoc uses. Regarding the effects of condition, predominantly for the open-ended components of the task, the type of stimulus was not associated with reliable differences in RTs. However, according to our hypotheses and previous research on functional fixedness (e.g., Chrysikou & Weisberg, 2005), if during ad hoc use generation the presence of an object’s name or picture (or a combination of the two) influences the extent to which participants’ responses are based on top-down or bottom-up memory retrieval strategies, then these differences would be present in the kinds of functions participants would generate and not, necessarily, in the speed with which they would generate them. These are the differences we attempted to capture with the novel qualitative coding scheme for participants’ responses that we present in the following section.
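The RT preprocessing described above (dropping trials without a response, then taking one median per participant and task) can be sketched as follows. The tuple layout, the use of `None` for a missing response, and the example values are assumptions made for illustration; the original analysis pipeline is not described at this level of detail.

```python
from collections import defaultdict
from statistics import mean, median


def median_rts(trials):
    """Median voice-onset RT per (participant, task), skipping non-responses.

    `trials` is an iterable of (participant_id, task, rt_ms) tuples, where
    rt_ms is None when no response was recorded (an assumed encoding).
    """
    by_cell = defaultdict(list)
    for participant, task, rt_ms in trials:
        if rt_ms is not None:
            by_cell[(participant, task)].append(rt_ms)
    return {cell: median(rts) for cell, rts in by_cell.items()}


def mean_of_medians(medians, task):
    """Average the per-participant medians for one task."""
    return mean(value for (_, t), value in medians.items() if t == task)


# Made-up trials for two hypothetical participants.
trials = [
    ("p01", "CU", 1900), ("p01", "CU", 2300), ("p01", "CU", None),
    ("p01", "UAU", 4100), ("p01", "UAU", 4500), ("p01", "UAU", 3900),
    ("p02", "CU", 2000), ("p02", "CU", 2200), ("p02", "CU", 2600),
]
medians = median_rts(trials)
print(medians[("p01", "CU")], mean_of_medians(medians, "CU"))
```

Medians are less sensitive than means to the occasional very slow response, which is a common reason for summarizing voice-onset latencies this way.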

Table 1.

Mean Median Voice Onset RTs in milliseconds by Condition and Task (standard errors in parentheses)

| Condition | CU | CAU | UAU |
|---|---|---|---|
| Name Condition (n = 22) | 2111.80 (109.66) | 4073.05 (157.39) | 4166.80 (171.70) |
| Picture Condition (n = 22) | 2196.00 (99.87) | 4062.66 (193.54) | 4389.07 (161.96) |
| Name and Picture Condition (n = 18) | 2152.28 (88.66) | 4266.39 (169.18) | 4278.39 (162.64) |

Note. RT = Reaction times; CU = Common use task; CAU = Common alternative use task; UAU = Uncommon alternative use task.
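As a reading aid for the effect sizes in the RT analysis, the sketch below recomputes them from the reported F statistics and degrees of freedom using the standard identity for partial eta squared. The text does not state which eta-squared variant was reported; that the values are partial eta squared is an inference from the fact that this formula reproduces them.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F values and degrees of freedom as reported in the RT analysis.
print(round(partial_eta_squared(349.67, 2, 118), 2))  # main effect of task
print(round(partial_eta_squared(0.65, 4, 118), 2))    # task-by-condition interaction
print(round(partial_eta_squared(493.19, 1, 59), 2))   # CU vs. CAU contrast
```

These calls give .86, .02, and .89, matching the values reported for the corresponding tests.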

Qualitative Analysis of Verbal Responses

Description of coding system for object function

Participants’ verbal responses were analyzed with a novel coding system that classifies object function in one of four categories, on a continuum ranging from top-down responses that are based on the retrieval of abstract object properties (i.e., canonical function, typical mode of manipulation; Categories 1 and 2), to bottom-up responses that are based on the retrieval of concrete object properties (i.e., shape, size, materials, removable parts; Categories 3 and 4; see Table 2).

Table 2.

Qualitative Response Coding System for Object Function based on Top-down and Bottom-up Object Properties

| Category 1 (Top-down-driven, abstract properties) | Category 2 (Top-down-driven, abstract properties) | Category 3 (Bottom-up-driven, concrete properties) | Category 4 (Bottom-up-driven, concrete properties) |
|---|---|---|---|
| Use an object for its typical/common function (e.g., chair: to sit on) | Use an object as (instead of, in the place of) a different tool to allow for a different function (different handling but not modification) (e.g., football: to use as a life saver) | Modify an object to generate a different function based on the object’s bottom-up features/properties (i.e., properties of the object about which one does not need to already know and that are visible or available without that prior knowledge) (e.g., blanket: to use as a bag to carry things) | Generate a different function for the object based on its bottom-up features/properties (i.e., properties of the object about which one does not need to already know and that are visible or available without that prior knowledge) (e.g., flashlight: to open a beer bottle) |
| Use an object with the same function in a different context (e.g., chair: to sit on, on the beach) | Generate a new function for the object based on its top-down features/properties (i.e., properties of the object about which one already knows and that are not visible or available without that prior knowledge) (e.g., hairdryer: to blow leaves) | Use an object in the place of a different object based on its bottom-up features/properties (i.e., properties of the object about which one does not need to already know and that are visible or available without that prior knowledge) (e.g., bowl: to use as a hat) | Dissolve-deconstruct an object into its components (or materials) to allow for a different function based on its bottom-up features/properties (i.e., properties of the object about which one does not need to already know and that are visible or available without that prior knowledge) (e.g., chair: to burn as firewood) |
| | Modify/deconstruct an object to allow for a new function based on its top-down features/properties (i.e., properties of the object about which one already knows and that are not visible or available without that prior knowledge) (e.g., football: cut it in half and use to collect water) | Modify an object after a different object to allow for a different function based on its bottom-up features/properties (i.e., properties of the object about which one does not need to already know and that are visible or available without that prior knowledge) (e.g., tennis racket: add straps to use as snow-shoe) | |

In this system, responses are coded as belonging in Category 1 when they describe functions that are typical of the object’s canonical use (e.g., chair: to sit on) or reflect a use of the object in the same way but in a different context (e.g., chair: to sit on when on the beach).

Category 2 is meant to reflect functions that are not typical of the object, but which originate from top-down retrieval of object features that are associated with its canonical function and are not available simply by observing the object. Responses are also coded as belonging to Category 2 when the object is used to substitute for the function of another tool based on shared top-down or abstract properties (i.e., properties not visible or available without prior knowledge of what the object is); for example, using a football as a life saver is based on the knowledge that a football is filled with air and can float. This category is further used to describe the generation of a new function for an object, based on such top-down, abstract properties; for example, using a hairdryer to blow leaves is a function based on the top-down knowledge that a hairdryer is a device that blows air. Category 2 also includes responses for which an object is modified to allow for a new function based on top-down properties that cannot be inferred exclusively from its component features; for example, cutting a football in half and using it to collect water is a function based on the preexisting (i.e., not manifestly available) knowledge that a football is hollow. Responses that refer to common secondary functions for an object (e.g., ironing board: to fold clothes on) or which incorporate cultural and culturally-instantiated influences (e.g., sock: use as a stocking for Christmas) are further coded as belonging to Category 2.

Category 3 reflects functions that are distant from the object’s canonical function, and which originate from a consideration of the overall shape of an object after some modification. Category 3 describes functions generated from bottom-up properties of the object (i.e., properties visible or available without prior knowledge of the object’s functional identity) after minor modification. For example, folding a blanket and using it to carry things (i.e., as a bag) is a function originating from bottom-up properties of the item, which is far-removed from its use as a cover during sleep. Responses in which objects were used in the place of another object based on visual likeness are also coded as Category 3. For example, a bowl may prompt participants to generate the use “to wear as a hat;” in this case, top-down knowledge about the bowl (e.g., its use in food consumption) is overridden by the visual similarities to a hat (i.e., the round semicircular shape, the visibly hollow interior). Finally, functions classified under this category can further reflect the active modification or modeling of an object after a different item to allow for a function based on shared bottom-up or concrete properties (i.e., properties visible or readily available without existing knowledge of the object’s identity). For example, a response that suggests adding straps to a tennis racket to make a snowshoe is based on the visual similarities between the tennis racket and the snowshoe. This response does not refer to previous top-down knowledge or the common functions for a tennis racket (even though abstract properties of the second item, the snowshoe, are likely activated for the generation of this function).

Finally, Category 4 includes responses describing the generation of a function for the object based on specific bottom-up properties rather than the overall shape of the object. As with Category 3, these properties are visible or available without prior knowledge of the object’s identity; in Category 4, however, the function is not based on overall visual similarity with an already existing item, as was the case in Category 3. For example, using a flashlight to open a beer bottle is a function based on a concrete, visually observed property (having a thin, rigid edge) that does not reflect abstract, top-down knowledge about the object’s typical use. This category further incorporates responses involving the deconstruction of the object to allow for a different function based on the object’s concrete or bottom-up properties (e.g., chair: to burn and use as firewood). All responses that were vague, revealed a misunderstanding of a given object, indicated the participant’s failure to follow task instructions, or otherwise did not fall into Categories 1, 2, 3, or 4 were coded as miscellaneous.

The present coding system classifies responses on a top-down to bottom-up continuum, that is, as being either closer to an object’s abstract normative functional identity (e.g., chair: a piece of furniture, something to sit on), or as reflecting a distance from that identity and a stronger focus on stimulus-guided knowledge retrieval of the object’s concrete, bottom-up properties (e.g., chair: an artifact made of wood, to burn and use as fuel for a fire). That is, we emphasize that classification of an object’s function in one of the four categories does not imply an absolute either-or distinction between retrieval of top-down and bottom-up properties of an object’s representation. We further note that due to our particular interest in the effects of verbal or pictorial stimulus modality and the nature of the task, this coding system focuses on the retrieval of visual object properties; although not present in our dataset, the present coding system does not exclude bottom-up properties from other modalities (e.g., tactile, auditory).

Rating procedure

The total number of participants’ verbal responses, across conditions, exceeded 8,000 items. Three independent raters, blind to the participants’ condition, were trained on the use of the coding system and coded all responses. Regular biweekly meetings were conducted to ensure compliance with the coding system, in addition to resolving coding disagreements among the raters. Inter-rater reliability between rater pairs was examined by means of the Kappa statistic, which includes corrections for chance agreement levels. The average inter-rater reliability (Kappa coefficient) was .83 (p < .001), 95% CI (0.79, 0.87), ranging from .63 to .99, which is considered substantial to outstanding (Landis & Koch, 1977). Any differences among the raters were resolved in conference. The ratings across raters (after consensus) were used for subsequent analyses.
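As an illustration of the chance-corrected agreement measure described above, the following minimal sketch computes Cohen's kappa for one pair of raters. It assumes that the Kappa statistic used for each rater pair was Cohen's kappa; the function name `cohen_kappa` and the ten example codes are hypothetical and are not data from the study.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who coded the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must code the same non-empty set of items.")
    n = len(rater_a)
    # Observed agreement: proportion of items given the same code.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions per code.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[code] * counts_b[code] for code in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical code throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical codes (Categories 1-4) for ten responses; NOT data from the study.
rater_a = [1, 1, 2, 2, 3, 3, 4, 4, 1, 2]
rater_b = [1, 1, 2, 2, 3, 3, 4, 4, 2, 2]
kappa = cohen_kappa(rater_a, rater_b)
print(f"kappa = {kappa:.2f}")
```

With three raters, the same function would be applied to each of the three rater pairs and the resulting coefficients averaged.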

Analysis of response type

To achieve the most direct assessment of the experimental hypothesis, after coding and analyses were completed on the four-category coding system, we computed the percentage of each participant’s answers under each category for each task (CU, CAU, and UAU, out of the total number of answers they provided for that task; see Table 3 for average percentages by category, condition, and task). Subsequently, we combined the percentage of each subject’s answers for each task separately for categories 1 and 2 (top-down responses) and for categories 3 and 4 (bottom-up responses). We then classified categorically each participant’s performance overall for each task as predominantly either top-down- or bottom-up-driven, depending on whether the majority of their responses for each task fell under the one or the other category (see Figure 3 for an expression of these classifications in percentages by condition and task). Due to the qualitative nature of these results, we employed nonparametric statistics to examine whether participants generated predominantly top-down versus bottom-up responses for each task, based on the kind of stimulus they received. For the CU and the CAU tasks, all participants generated exclusively top-down responses (see Figure 3); hence, no measures of association were computed. For the UAU task, however, the association of stimulus condition (Name, Picture, Name & Picture) with response type (top-down, bottom-up) was significant (Pearson’s χ2 [2, N = 63] = 11.44, p = .003, two-tailed, Cramer’s ϕ = .43). Focused pairwise analyses by stimulus condition with Bonferroni-adjusted α = .017 showed that, as expected, more participants who were presented with the stimuli in the form of pictures than participants who were presented with the stimuli in the form of words generated responses that were judged to be based on a top-down strategy (Pearson’s χ2 [1, N = 45] = 10.29, p = .001, two-tailed, Cramer’s ϕ = .48; Fisher’s exact test p = .002). 
There was no difference between participants who were shown pictures and participants who were shown pictures and words (Pearson’s χ2 [1, N = 41] = 1.74, p = .19, two-tailed, Cramer’s ϕ = .21; Fisher’s exact test p = .30) or between participants who were shown words and participants who were shown a combination of pictures and words (Pearson’s χ2 [1, N = 40] = 3.74, p = .053, two-tailed, Cramer’s ϕ = .31; Fisher’s exact test p = .09). Overall, the qualitative analysis of subjects’ responses showed that, as predicted, for the open-ended UAU task, pictorial stimuli elicited significantly more top-down-driven responses, closer to the object’s canonical function, than did verbal stimuli.
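The reported test statistics can be checked for internal consistency with a short sketch. It assumes that the reported effect size is Cramér's V computed as the square root of χ2 / (N × min(rows − 1, columns − 1)), and it uses the closed-form upper-tail probabilities of the chi-square distribution that exist for 1 and 2 degrees of freedom. The function names are illustrative only.

```python
import math


def chi2_p_value(chi2, df):
    """Upper-tail p-value of the chi-square distribution (df = 1 or 2 only)."""
    if df == 1:
        return math.erfc(math.sqrt(chi2 / 2.0))
    if df == 2:
        return math.exp(-chi2 / 2.0)
    raise ValueError("Closed forms are implemented only for df = 1 or 2.")


def cramers_v(chi2, n, min_dim):
    """Cramér's V, where min_dim = min(rows - 1, columns - 1)."""
    return math.sqrt(chi2 / (n * min_dim))


# Values reported in the text for the UAU task: (label, chi-square, df, N).
reported = [
    ("Omnibus (three conditions)", 11.44, 2, 63),
    ("Picture vs. Name", 10.29, 1, 45),
    ("Picture vs. Name & Picture", 1.74, 1, 41),
    ("Name vs. Name & Picture", 3.74, 1, 40),
]
bonferroni_alpha = 0.05 / 3  # three pairwise comparisons, approximately .017

for label, chi2, df, n in reported:
    p = chi2_p_value(chi2, df)
    # Response type has two levels, so min(rows - 1, columns - 1) = 1.
    v = cramers_v(chi2, n, 1)
    print(f"{label}: p = {p:.3f}, V = {v:.2f}")
```

Only the pairwise p-values are compared against `bonferroni_alpha`; the omnibus test is evaluated at the conventional α = .05.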

Table 3.

Average Percentage of Responses Under Each Coding Scheme Category by Condition and Task

| Condition | CU Cat. 1 | CU Cat. 2 | CU Cat. 3 | CU Cat. 4 | CAU Cat. 1 | CAU Cat. 2 | CAU Cat. 3 | CAU Cat. 4 | UAU Cat. 1 | UAU Cat. 2 | UAU Cat. 3 | UAU Cat. 4 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Name Condition (n = 22) | 99.03 | 0.48 | 0.59 | 0.00 | 45.89 | 36.37 | 16.51 | 1.23 | 16.91 | 44.15 | 35.32 | 4.49 |
| Picture Condition (n = 22) | 99.26 | 0.55 | 0.19 | 0.00 | 56.43 | 31.31 | 11.57 | 1.57 | 25.77 | 42.76 | 28.65 | 2.35 |
| Name and Picture Condition (n = 18) | 99.77 | 0.23 | 0.00 | 0.00 | 48.29 | 35.96 | 15.49 | 0.26 | 19.10 | 42.57 | 33.87 | 4.46 |

Note. CU = Common use task; CAU = Common alternative use task; UAU = Uncommon alternative use task. Categories 1 and 2 are considered top-down driven, whereas Categories 3 and 4 are considered bottom-up-driven.
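The collapsing of the four categories into top-down (Categories 1 and 2) and bottom-up (Categories 3 and 4) responses can be sketched as follows, using the UAU averages from Table 3. In the study the majority rule was applied to each participant's own percentages; applying it to group averages here is only an illustration, and the function names are hypothetical.

```python
# Average percentages for the UAU task, transcribed from Table 3
# (Categories 1-4, in order).
uau_percentages = {
    "Name": [16.91, 44.15, 35.32, 4.49],
    "Picture": [25.77, 42.76, 28.65, 2.35],
    "Name and Picture": [19.10, 42.57, 33.87, 4.46],
}


def collapse(percentages):
    """Sum Categories 1+2 (top-down) and Categories 3+4 (bottom-up)."""
    top_down = percentages[0] + percentages[1]
    bottom_up = percentages[2] + percentages[3]
    return round(top_down, 2), round(bottom_up, 2)


def predominant_type(top_down, bottom_up):
    """Majority rule: label a profile by whichever response type is larger."""
    return "top-down" if top_down > bottom_up else "bottom-up"


for condition, percentages in uau_percentages.items():
    top_down, bottom_up = collapse(percentages)
    label = predominant_type(top_down, bottom_up)
    print(f"{condition}: top-down = {top_down:.2f}%, "
          f"bottom-up = {bottom_up:.2f}% ({label})")
```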

Figure 3.

Percentage of participants generating predominantly top-down responses by condition and task.

Analysis across the coding system categories

To examine differences among the conditions on the entire spectrum of the coding system, we first entered the percentage of each participant’s answers for each task (CU, CAU, and UAU; out of the total number of answers they provided for that task) for each category into a 4 × 3 mixed ANOVA, with category (1, 2, 3, or 4) as the within-subjects factor and the type of condition (Name, Picture, or Name & Picture) as the between-subjects factor. Because the vast majority of participants produced responses that were classified exclusively under Categories 1 and 2, for both the CU and the CAU tasks there was a main effect of category (ps < .001), but no effect of condition and no category by condition interaction (ps > .12). No post hoc comparisons across categories or tasks were significant (all ps > .11). In contrast, for the UAU task there was a main effect of category (F[3, 180] = 99.01, p < .001, η2 = .62) and a marginally significant main effect of condition (F[2, 60] = 3.09, p = .053, η2 = .09); the category by condition interaction was not significant (F[6, 180] = 1.48, p = .19, η2 = .05). Post hoc comparisons revealed a significant difference across categories between participants who received picture stimuli and those who received the objects’ names (Tukey’s honestly significant difference test, p = .044). None of the other pairwise comparisons reached significance (all ps > .30).

We subsequently entered the percentage of each participant’s answers for each task (CU, CAU, and UAU; out of the total number of answers they provided for that task) that were categorized as top-down-driven into a 3 × 3 repeated measures, mixed ANOVA, with the type of task (CU, CAU, and UAU) as the within-subjects factor and the type of condition (Name, Picture, or Name & Picture) as the between-subjects factor. Participants generated more top-down-driven responses when they were instructed to produce the common function for the objects relative to secondary and ad hoc functions (main effect of task, F[2, 120] = 205.65, p < .001, η2= .77). Although the task × condition interaction was not significant (F[4, 120] = 0.99, p = .42, η2 = .03), there was a significant main effect of condition (F[2, 60] = 3.16, p = .049, η2 = .10). Post-hoc comparisons revealed that participants in the Picture condition generated significantly more top-down-driven responses than did participants in the Name condition (Tukey’s honestly significant difference test, p = .049). This difference was not significant between participants in the Picture condition relative to participants in the Name and Picture condition (Tukey’s honestly significant difference test, p = .19) or between participants in the Name condition and those in the Name and Picture condition (Tukey’s honestly significant difference test, p = .86).
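The effect sizes reported for these ANOVAs can be recovered from the F statistics and their degrees of freedom. The sketch below assumes that the reported η2 values are partial eta squared, for which η2 = (F × df_effect) / (F × df_effect + df_error); the function name is illustrative only.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its dfs."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Values reported in the text: (effect, F, df_effect, df_error).
reported = [
    ("UAU: category", 99.01, 3, 180),
    ("UAU: condition", 3.09, 2, 60),
    ("UAU: category x condition", 1.48, 6, 180),
    ("Top-down: task", 205.65, 2, 120),
    ("Top-down: task x condition", 0.99, 4, 120),
    ("Top-down: condition", 3.16, 2, 60),
]

for effect, f_value, df_effect, df_error in reported:
    eta = partial_eta_squared(f_value, df_effect, df_error)
    print(f"{effect}: partial eta squared = {eta:.2f}")
```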

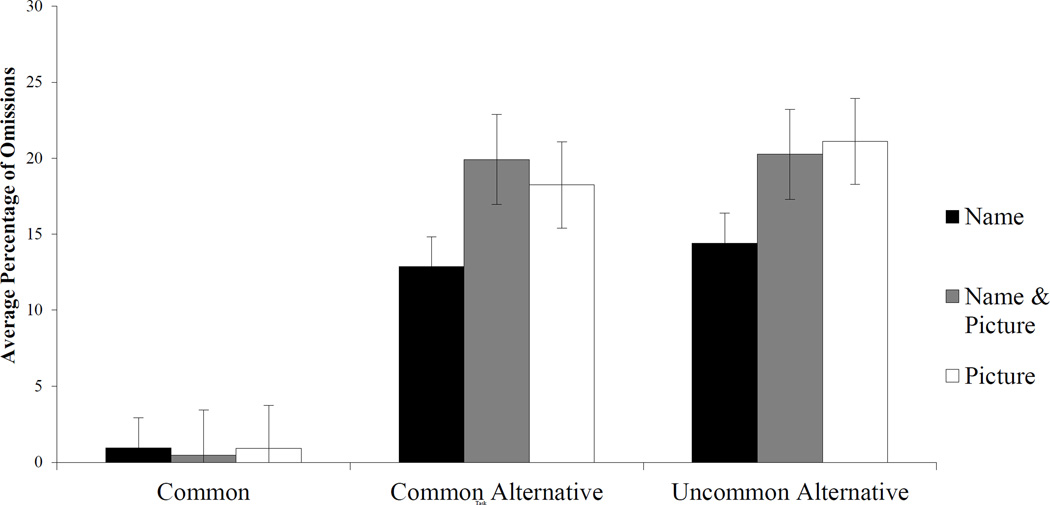

Analysis of omissions

To examine the possibility that the type of stimulus format might have influenced the number of trials for which participants did not give a response, particularly for the common and uncommon alternative tasks, we entered the percentage of non-responses by subject and task into a 3 × 3 mixed ANOVA, with the type of task (CU, CAU, and UAU) as the within-subjects factor and the type of condition (Name, Picture, or Name & Picture) as the between-subjects factor (see Figure 4). As expected, there was a main effect of task (F[2, 120] = 70.00, p < .001, η2 = .54), especially given that the number of omissions was minimal for the CU task relative to the other tasks; importantly, however, the results did not reveal a significant effect of condition (F[2, 60] = 1.26, p = .29, η2 = .04) or a task × condition interaction (F[4, 120] = 1.26, p = .29, η2 = .04). Post hoc pairwise comparisons (Tukey’s honestly significant difference test) between conditions for all tasks were not significant (all ps > .30), thus confirming that the type of stimulus did not influence the number of trials for which participants did not provide a response.

Figure 4.

Average percentage of omissions by condition and task. The error bars depict the standard error of the mean.

Discussion

Coming up with creative solutions to problems, designing new products, or creating novel pieces of art often involves exposure to examples either generated by others or the creators themselves. Although examples can facilitate creativity through analogical transfer (e.g., Holyoak, 1984, 2005) or by constraining the creative task space (see Sagiv, Arieli, Goldenberg, & Goldschmidt, 2010), they can also lead to functional fixedness, thus limiting the generation of novel ideas. In this study we focused on the influence of verbal and pictorial examples for creativity and divergent thinking. We examined whether memory retrieval (specifically the activation of object representations) based on the influence of verbal and pictorial stimuli would differentially bias participants’ responses in the Object Use task; this task allowed us to manipulate systematically the degree to which participants are asked a close- or open-ended question. Our results suggested that (1) participants showed different biases toward top-down or bottom-up semantic retrieval strategies depending on the nature of the task (i.e., CU, CAU, UAU), such that canonical uses were generated faster than secondary and ad hoc uses; (2) although across all three tasks participants generally employed more top-down than bottom-up retrieval strategies, in open-ended, creative thinking tasks that involve the generation of secondary, and, particularly, ad hoc, uncommon uses for objects, the kinds of responses participants generated were based on bottom-up retrieval strategies more so than during the generation of canonical uses. 
This analysis was possible only through the classification of responses by means of our novel coding system, which captures the extent to which a function is based on the retrieval of top-down or bottom-up attributes of the object’s representation. Finally, (3) the effects of stimulus type (name, picture, or a combination of the two) on the availability of object properties for retrieval were, as predicted, more pronounced during the generation of ad hoc, uncommon uses. Specifically, during the UAU task the presence of stimuli in pictorial format primed top-down, abstract aspects of object knowledge that are more closely tied to the object’s normative function, more so than the presence of an object’s name. Interestingly, the combination of the two types of stimuli (i.e., name and picture) seemed to elicit performance that fell somewhere between that of participants in the other two conditions (Name, Picture).

Our quantitative and qualitative results showed that for the UAU subcomponent of the task there is an increase in the generation of bottom-up-driven functions (measured by our novel coding scheme), in addition to an increase in processing time (measured by voice-onset RTs) as participants are forced to move away from a top-down strategy of retrieving the object’s canonical, abstract, and context-independent function, so as to generate an atypical, specific, and context-bound use for it. These results suggest that even though we typically categorize objects by accessing our top-down, abstract knowledge of their functions, under specific circumstances that require creativity and divergent thinking—when such abstract information would be counterproductive (i.e., when one needs to use an object in an ad hoc, goal-determined way, e.g., use a chair as firewood to keep oneself warm)—our conceptual system appears to allow for a temporary retreat or reorientation to more basic bottom-up knowledge, as guided by task demands.

Critically, we have shown that stimulus modality significantly influenced participants’ response type, such that pictorial stimuli led to more top-down-driven (and fewer bottom-up-driven) responses associated with the object’s canonical function, relative to verbal stimuli. This finding is consistent with the results of previous studies (e.g., Boronat et al., 2005; Chainay & Humphreys, 2002; Postler et al., 2003; Saffran, Coslett, & Keener, 2003) showing facilitated access to action- and manipulation-related information from pictures relative to words. Importantly, the present research extends earlier findings by demonstrating that the action-relevant information elicited by pictorial stimuli does not pertain to general actions one can perform with the object, guided exclusively by its affordances (see Gibson, 1979), but rather that the elicited action information is tightly linked to the object’s canonical, normative function.

Related to the effects of pictorial stimuli, we note that our results did not show a significant influence of type of stimulus on voice-onset RTs, particularly for the close-ended (CU) subcomponent of the task. In particular, the presence of pictures did not lead to faster RTs when participants were generating the common use of the objects. We note that previous studies have reported either facilitation with pictorial stimuli or comparable RTs between stimuli in verbal and pictorial format, depending on the nature of the semantic task. For example, Chainay and Humphreys (2002) have shown faster RTs for action-related decisions (e.g., does a teapot require a pouring action?) from pictures, but similar RT patterns for semantic/contextual decisions (e.g., is a teapot found in the kitchen?) from pictures and words. Regarding the question about typical object function in the CU task component of the present study, it is possible that canonical function representations are accessed equally rapidly from pictorial and verbal stimuli, even though specific types of properties that comprise object function (i.e., manipulation-related properties) are accessed faster through stimuli in pictorial format (see also Boronat et al., 2005; Saffran, Coslett, & Keener, 2003).

Our finding that participants in the Picture condition generated more top-down-driven responses during the ad hoc, creative use generation task, compared to participants in the Name condition, is consistent with studies of functional fixedness to pictorial examples in problem solving. As mentioned earlier, Chrysikou and Weisberg (2005) have shown that in an open-ended, design problem-solving task, participants prompted with pictorial examples were likely to reproduce in their solutions example design elements, even when these elements were explicitly described as flawed. In contrast, participants who were not given pictorial examples or who were explicitly instructed to avoid them, appeared immune to functional fixedness effects (see also Smith, Ward, & Schumacher, 1993). Similarly, participants’ responses in the Picture condition in the present study appeared strongly biased toward the retrieval of top-down, abstract properties linked to the objects’ canonical function during uncommon use generation. This finding may advance our understanding of functional fixedness from pictorial stimuli: Based on prior research on semantic memory retrieval from words and pictures as discussed above, we argue that the stronger bond between an object’s visual form (relative to its name) and function-related actions may have biased participants in the Picture condition toward the retrieval of features tied to the object’s canonical use. We further note that in the Object Use task a picture stimulus represents a single instance of the object category (e.g., a specific chair, knife, hairdryer) that is typical of that category and, as such, may prime the canonical function of the object. In contrast, a word can activate multiple instances of the object category across participants, which may also vary with respect to how typical they are of the object category. 
As a result, word stimuli may lead to increased variability in responses and reliance on bottom-up features depending on the specific instance of the category each participant will think about. Future work examining these effects with pictures of atypical instances of objects, as well as other modalities (e.g., auditory, tactile) may shed light on this issue. For example, a recent meta-analysis of 43 design studies (Sio, Kotovsky, & Cagan, 2015) suggested that fewer and less common examples might lead to more novel and appropriate responses during creative design problem solving tasks.

Finally, our results build on those of Gilhooly et al. (2007) who analyzed participants’ strategies while generating multiple uses for an object in the Alternative Uses task, a variant of the task employed in the present experiment. Specifically, Gilhooly and colleagues reported that participants’ initial responses were guided by a retrieval strategy of already-known uses for the objects, whereas subsequent responses for the same item were based on other strategies, including disassembly of the object and a search for broad categories for possible uses of the target object. Although participants in the present experiment generated only one function per object (either common, or common alternative, or uncommon alternative) given our intention to collect voice-onset reaction times, the types of responses generated for the ad hoc use conditions partially reflect the strategies detailed by Gilhooly et al. (2007). Our findings further extend this previous work by showing that stimulus modality (verbal or pictorial) can influence the type of retrieval strategy employed in open-ended tasks, with pictures leading to more top-down than bottom-up responses.

In sum, in this study we examined whether pictures and words would differentially influence access to semantic knowledge for object concepts depending on whether the task is close- or open-ended. Our results suggest that when generating ad hoc uses in an open-ended, creative thinking task, participants exposed to a picture as opposed to a word rely more on top-down-driven memory retrieval strategies and generate responses that are closer to an object’s typical function. Importantly, we have developed and applied a new coding system for object function that allows for a qualitative assessment of participants’ responses on a continuum ranging from top-down, context-independent, and abstract functions to bottom-up, context-bound, and concrete responses. Future research can benefit from the use of these assessments for a comprehensive evaluation of semantic knowledge for objects in studies with normal subjects and patients and for different kinds of stimuli and tasks, thereby further illuminating the organization of knowledge about objects and how this knowledge is accessed in various tasks by different stimulus modalities. Critically, future studies can employ this categorization scheme to evaluate novel idea generation in the context of creative design or artistic products, especially following exposure to different kinds of example prompts. Such applications may have important implications for the use of examples in various educational settings (e.g., industrial and engineering design or art schools) to ensure that these instructional tools promote innovation and creative thinking.

Acknowledgments

We thank the workshop participants for their feedback. We also thank Barbara Malt and the members of the Thompson-Schill lab for their suggestions on earlier versions of this manuscript. This research was supported by NIH grant R01-DC009209 to STS.

Footnotes

The groundwork for the coding system for the qualitative analysis of object function was originally developed for a presentation of the first author during the workshop ‘Principles of Repurposing’ that was held at the Santa Fe Institute (Santa Fe, NM, July 14–16, 2008).

References

- Allport DA. Distributed memory, modular systems, and dysphasia. In: Newman SK, Epstein R, editors. Current perspectives in dysphasia. Edinburgh, England: Churchill Livingstone; 1985. pp. 32–60.

- Boronat CB, Buxbaum LJ, Coslett HB, Tang K, Saffran EM, Kimberg DY, Detre JA. Distinctions between manipulation and function knowledge of objects: Evidence from functional magnetic resonance imaging. Cognitive Brain Research. 2005;23:361–373. doi: 10.1016/j.cogbrainres.2004.11.001.

- Bright P, Moss H, Tyler LK. Unitary vs multiple semantics: PET studies of word and picture processing. Brain and Language. 2004;89:417–432. doi: 10.1016/j.bandl.2004.01.010.

- Chainay H, Humphreys GW. Privileged access to action for objects relative to words. Psychonomic Bulletin & Review. 2002;9:348–355. doi: 10.3758/bf03196292.

- Chao LL, Haxby JV, Martin A. Attribute-based neural substrates in temporal cortex for perceiving and knowing about objects. Nature Neuroscience. 1999;2:913–919. doi: 10.1038/13217.

- Christensen PR, Guilford JP. Creativity/Fluency scales. Beverly Hills, CA: Sheridan Supply, Co.; 1958.

- Chrysikou EG. When shoes become hammers: Goal-derived categorization training enhances problem solving performance. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2006;32:935–942. doi: 10.1037/0278-7393.32.4.935.

- Chrysikou EG. Problem solving as a process of goal-derived, ad hoc categorization. Saarbrücken, Germany: VDM Verlag; 2008.

- Chrysikou EG, Weisberg RW. Following the wrong footsteps: Fixation effects of pictorial examples in a design problem-solving task. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2005;31:1134–1148. doi: 10.1037/0278-7393.31.5.1134.

- Duncker K. On problem-solving. Psychological Monographs. 1945;58(5, Whole No. 270).

- Gates L, Yoon MG. Distinct and shared cortical regions of the human brain activated by pictorial depictions versus verbal descriptions: an fMRI study. Neuroimage. 2005;24:473–486. doi: 10.1016/j.neuroimage.2004.08.020.

- Gentner D. Structure-mapping: A theoretical framework for analogy. Cognitive Science. 1983;7:155–170.

- Glaser WR. Picture naming. Cognition. 1992;42:61–105. doi: 10.1016/0010-0277(92)90040-o.

- Glaser WR, Glaser MO. Context effects in Stroop-like word and picture processing. Journal of Experimental Psychology: General. 1989;118:13–42. doi: 10.1037//0096-3445.118.1.13.

- Gick ML, Holyoak KJ. Analogical problem solving. Cognitive Psychology. 1980;12:306–355.

- Gick ML, Holyoak KJ. Schema induction and analogical transfer. Cognitive Psychology. 1983;15:1–38.

- Guilford JP. Creativity. American Psychologist. 1950;5:444–454. doi: 10.1037/h0063487.

- Guilford JP. The nature of human intelligence. New York: McGraw-Hill; 1967.

- Holyoak KJ. Analogical thinking and human intelligence. In: Sternberg RJ, editor. Advances in the psychology of human intelligence. Vol. 2. Hillsdale, NJ: Erlbaum; 1984. pp. 199–230.

- Holyoak KJ. Analogy. In: Holyoak KJ, Morrison RG, editors. The Cambridge handbook of thinking and reasoning. Cambridge, UK: Cambridge University Press; 2005. pp. 117–142.

- Gibson JJ. The ecological approach to visual perception. Boston: Houghton-Mifflin; 1979.

- Gilhooly KJ, Fioratou E, Anthony SH, Wynn V. Divergent thinking: Strategies and executive involvement in generating novel uses for familiar objects. British Journal of Psychology. 2007;98:611–625. doi: 10.1111/j.2044-8295.2007.tb00467.x.

- Hampton JA. Polymorphous concepts in semantic memory. Journal of Verbal Learning and Verbal Behavior. 1979;18:441–461.

- Hillis A, Caramazza A. Cognitive and neural mechanisms underlying visual and semantic processing: Implication from “optic aphasia”. Journal of Cognitive Neuroscience. 1995;7:457–478. doi: 10.1162/jocn.1995.7.4.457.

- Humphreys G, Forde E. Hierarchies, similarity, and interactivity in object recognition: “Category-specific” neuropsychological deficits. Behavioral and Brain Sciences. 2001;24:453–476.

- Humphreys GW, Riddoch MJ. How to define an object: Evidence from the effects of action on perception and attention. Mind & Language. 2007;22:534–547.

- Keane MT. Modeling “insight” in practical construction problems. Irish Journal of Psychology. 1989;11:202–215.

- Kroll JF, Potter MC. Recognizing words, pictures, and concepts: A comparison of lexical, object, and reality decisions. Journal of Verbal Learning and Verbal Behavior. 1984;23:39–66.

- Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;33:159–174.

- McCarthy RA, Warrington EK. Evidence for modality-specific meaning systems in the brain. Nature. 1988;334:428–430. doi: 10.1038/334428a0.

- Osman M. Evidence for positive transfer and negative transfer/Anti-learning of problem solving skills. Journal of Experimental Psychology: General. 2008;137:97–115. doi: 10.1037/0096-3445.137.1.97.

- Paivio A. Mental representations: A dual coding approach. New York: Oxford University Press; 1986.

- Plaut DC. Graded modality-specific specialization in semantics: A computational account of optic aphasia. Cognitive Neuropsychology. 2002;19:603–639. doi: 10.1080/02643290244000112.

- Postler J, De Bleser R, Cholewa J, Glauche V, Hamzei F, Weiller C. Neuroimaging the semantic system(s). Aphasiology. 2003;17:799–814.

- Potter MC, Faulconer BA. Time to understand pictures and words. Nature. 1975;253:437–438. doi: 10.1038/253437a0.

- Potter MC, Valian VV, Faulconer BA. Representation of a sentence and its pragmatic implications: Verbal, imagistic, or abstract? Journal of Verbal Learning and Verbal Behavior. 1977;16:1–12.

- Purcell AT, Gero JS. Design and other types of fixation. Design Studies. 1996;17:363–383.

- Riddoch MJ, Humphreys GW. Visual object processing in a case of optic aphasia: A case of semantic access agnosia. Cognitive Neuropsychology. 1987;4:131–185.

- Riddoch MJ, Humphreys GW, Hickman M, Cliff J, Daly A, Colin J. I can see what you are doing: Action familiarity and affordance promote recovery from extinction. Cognitive Neuropsychology. 2006;23:583–605. doi: 10.1080/02643290500310962.

- Rumiati RI, Humphreys GW. Recognition by action: Dissociating visual and semantic routes to action in normal observers. Journal of Experimental Psychology: Human Perception and Performance. 1998;24:631–647. doi: 10.1037//0096-1523.24.2.631.

- Saffran EM, Coslett HB, Keener MT. Differences in word associations to pictures and words. Neuropsychologia. 2003;41:1541–1546. doi: 10.1016/s0028-3932(03)00080-0.

- Saffran EM, Coslett HB, Martin N, Boronat C. Access to knowledge from pictures but not words in a patient with progressive fluent aphasia. Language and Cognitive Processes. 2003;18:725–757.

- Sagiv L, Arieli S, Goldenberg J, Goldschmidt A. Structure and freedom in creativity: The interplay between externally imposed structure and personal cognitive style. Journal of Organizational Behavior. 2010;31:1086–1110. http://doi.org/10.1002/job.664.

- Scheerer M. Problem-solving. Scientific American. 1963;208:118–128. doi: 10.1038/scientificamerican0463-118.

- Sevostianov A, Horwitz B, Nechaev V, Williams R, Fromm S, Braun AR. fMRI study comparing names versus pictures for objects. Human Brain Mapping. 2002;16:168–175. doi: 10.1002/hbm.10037.

- Shallice T. Multiple semantics: Whose confusions? Cognitive Neuropsychology. 1993;10:251–261.

- Smith SM, Ward TB, Schumacher JS. Constraining effects of examples in a creative generation task. Memory & Cognition. 1993;21:837–845. doi: 10.3758/bf03202751.

- Sio UN, Kotovsky K, Cagan J. Fixation or inspiration? A meta-analytic review of the role of examples on design processes. Design Studies. 2015;39(C):70–99. http://doi.org/10.1016/j.destud.2015.04.004.

- Thompson-Schill SL. Neuroimaging studies of semantic memory: Inferring “how” from “where”. Neuropsychologia. 2003;41:280–292. doi: 10.1016/s0028-3932(02)00161-6.

- Thompson-Schill SL, Kan IP, Oliver RT. Functional neuroimaging of semantic memory. In: Cabeza R, Kingstone A, editors. Handbook of functional neuroimaging of cognition. 2nd ed. Cambridge, MA: The MIT Press; 2006. pp. 149–190.

- Torrance EP. Norms-technical manual: Torrance tests of creative thinking. Lexington, MA: Ginn; 1974.

- Tyler LK, Stamatakis EA, Bright P, Acres K, Abdalah S, Rodd JM, Moss HE. Processing objects at different levels of specificity. Journal of Cognitive Neuroscience. 2004;16:351–362. doi: 10.1162/089892904322926692.

- Vallee-Tourangeau F, Anthony SH, Austin NG. Strategies for generating multiple instances of common and ad hoc categories. Memory. 1998;6:555–592. doi: 10.1080/741943085.

- Walker WH, Kintsch W. Automatic and strategic aspects of knowledge retrieval. Cognitive Science. 1985;9:261–283.

- Ward TB, Patterson MJ, Sifonis CM. The role of specificity and abstraction in creative idea generation. Creativity Research Journal. 2004;16:1–9.

- Warrington EK, Crutch SJ. A within-modality test of semantic knowledge: The Size/Weight Attribute Test. Neuropsychology. 2007;21:803–811. doi: 10.1037/0894-4105.21.6.803.

- Warrington EK, McCarthy R. Categories of knowledge: Further fractionations and an attempted integration. Brain. 1987;110:1273–1296. doi: 10.1093/brain/110.5.1273.

- Wright ND, Mechelli A, Noppeney U, Veltman DJ, Rombouts SARB, Glensman J, Haynes JD, Price CJ. Selective activation around the left occipito-temporal sulcus for words relative to pictures: Individual variability or false positives? Human Brain Mapping. 2008;29:986–1000. doi: 10.1002/hbm.20443.

- Vandenberghe R, Price C, Wise R, Josephs O, Frackowiak RSJ. Functional anatomy of a common semantic system for words and pictures. Nature. 1996;383:254–256. doi: 10.1038/383254a0.