Abstract

Background. The use of qualitative research (QR) methods is recommended as good practice in discrete choice experiments (DCEs). This study investigated the use and reporting of QR to inform the design and/or interpretation of healthcare-related DCEs and explored the perceived usefulness of such methods. Methods. DCEs were identified from a systematic search of the MEDLINE database. Studies were classified by the quantity of QR reported (none, basic, or extensive). Authors (n = 91) of papers reporting the use of QR were invited to complete an online survey eliciting their views about using the methods. Results. A total of 254 healthcare DCEs were included in the review; of these, 111 (44%) did not report using any qualitative methods; 114 (45%) reported “basic” information; and 29 (11%) reported or cited “extensive” use of qualitative methods. Studies reporting the use of qualitative methods used them to select attributes and/or levels (n = 95; 66%) and/or pilot the DCE survey (n = 26; 18%). Popular qualitative methods included focus groups (n = 63; 44%) and interviews (n = 109; 76%). Forty-four studies (31%) reported the analytical approach, with content (n = 10; 7%) and framework analysis (n = 5; 4%) most commonly reported. The survey identified that all responding authors (n = 50; 100%) found that qualitative methods added value to their DCE study, but many (n = 22; 44%) reported that journals were uninterested in the reporting of QR results. Conclusions. Despite recommendations that QR methods be used alongside DCEs, the use of QR methods is not consistently reported. The lack of reporting risks the inference that QR methods are of little use in DCE research, contradicting practitioners’ assessments. Explicit guidelines would enable more clarity and consistency in reporting, and journals should facilitate such reporting via online supplementary materials.

Keywords: discrete choice experiment, qualitative research, systematic review, survey

Discrete choice experiments (DCEs) are a stated preference method that uses a survey to systematically quantify individuals’ preferences. The method is used to understand which characteristics (termed attributes) are liked by consumers, how they balance these attributes, and the relative importance of each attribute in their decision to consume.1 In a DCE, respondents are asked to choose their preferred option from a series of hypothetical scenarios called choice-sets. DCEs are underpinned by 2 key economic theories: Random Utility Theory (RUT) and Lancaster’s Theory.2,3 Together, the 2 theories imply that respondents value options through their attributes and choose, from each choice-set, the option that provides them with the most satisfaction or “utility.” The method has been used to understand people’s preferences in a variety of settings, often when it is challenging to observe consumers making choices in real markets.4,5
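For reference, a standard textbook statement of the random utility model is sketched below. It is not taken from the studies reviewed here and assumes utility that is linear in the attribute levels, with independent, identically distributed Gumbel (type I extreme value) error terms, which yields the conditional logit model. Here $U_{nj}$ is respondent $n$’s utility for alternative $j$, $\mathbf{x}_{nj}$ is its vector of attribute levels (reflecting Lancaster’s idea that utility derives from attributes), $\boldsymbol{\beta}$ holds the preference weights, $C$ is the choice-set, and $y_n$ denotes the alternative chosen.

$$U_{nj} = V_{nj} + \varepsilon_{nj} = \boldsymbol{\beta}^{\top}\mathbf{x}_{nj} + \varepsilon_{nj}$$

$$y_n = i \iff U_{ni} > U_{nj} \ \text{ for all } j \in C,\ j \neq i$$

$$\Pr(y_n = i \mid C) = \frac{\exp(\boldsymbol{\beta}^{\top}\mathbf{x}_{ni})}{\sum_{j \in C}\exp(\boldsymbol{\beta}^{\top}\mathbf{x}_{nj})}$$

Under this specification, ratios of estimated coefficients, such as $\beta_k/\beta_l$, summarize how respondents trade off attributes $k$ and $l$.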

In healthcare, decision making may involve careful assessment of the health benefits of an intervention.6 However, decision makers may wish to go beyond traditional clinical measures and incorporate “non-health” values such as those derived from the process of healthcare delivery.7 DCEs allow for estimation of an individual’s preferences for both health and non-health benefits and can quantify the relative value of these different sources of benefit.8

Systematic reviews of published health-related DCEs have found that their designs are becoming increasingly complex, with more choice-sets presented and more designs containing attributes that are difficult to present and interpret, such as time or risk.9–11 Increased design complexity raises the potential for anomalous or inexplicable choices.12 Any increase in the cognitive burden of the task could result in poorer quality data and should be considered carefully.13 A number of studies have explored the implications of anomalous or inexplicable choice data for quantitative analysis, leading, for example, to the exclusion of respondents whose choices fail tests for monotonicity or transitivity or who exhibit sufficiently high levels of attribute nonattendance.14,15
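Monotonicity checks of this kind are often operationalized as a dominance test: a choice-set is included in which one alternative is at least as good as the other on every attribute and strictly better on at least one, so choosing the dominated alternative signals an anomalous response. The Python sketch below is a hypothetical illustration only; the `ChoiceTask` layout, the two-alternative design, and the coding convention that a higher attribute value is always better are assumptions, not the procedure used by any study cited here.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple


@dataclass(frozen=True)
class ChoiceTask:
    """A hypothetical two-alternative choice-set.

    Assumption: attribute levels are coded so that a higher value is always
    better (e.g. waiting time entered as a negative number of hours).
    """
    option_a: Tuple[float, ...]
    option_b: Tuple[float, ...]


def dominates(x: Sequence[float], y: Sequence[float]) -> bool:
    """True if x is at least as good as y on every attribute and strictly better on one or more."""
    pairs = list(zip(x, y))  # materialize so the pairs can be traversed twice
    return all(a >= b for a, b in pairs) and any(a > b for a, b in pairs)


def fails_dominance_test(task: ChoiceTask, choice: str) -> bool:
    """True if the chosen alternative ("A" or "B") is dominated by the rejected one."""
    if choice not in ("A", "B"):
        raise ValueError(f"choice must be 'A' or 'B', got {choice!r}")
    if choice == "A":
        chosen, rejected = task.option_a, task.option_b
    else:
        chosen, rejected = task.option_b, task.option_a
    return dominates(rejected, chosen)


def flag_respondents(tasks: List[ChoiceTask], responses: Dict[str, List[str]]) -> List[str]:
    """Return the sorted IDs of respondents who fail at least one dominance test.

    `responses` maps a respondent ID to that respondent's choices, listed in
    the same order as `tasks`. Tasks with no dominant alternative can never
    be failed, so they can be passed in alongside the dominance tasks.
    """
    return sorted(
        rid
        for rid, choices in responses.items()
        if any(fails_dominance_test(t, c) for t, c in zip(tasks, choices))
    )


if __name__ == "__main__":
    # Hypothetical attributes: waiting time in hours (negated) and success rate.
    tasks = [
        ChoiceTask((-2.0, 0.9), (-4.0, 0.8)),  # option A dominates option B
        ChoiceTask((-2.0, 0.7), (-3.0, 0.9)),  # a genuine trade-off: no dominance
    ]
    responses = {"r1": ["A", "B"], "r2": ["B", "A"], "r3": ["A", "A"]}
    print(flag_respondents(tasks, responses))  # ['r2']
```

Whether failing a single such test should lead to exclusion is a separate analytical decision that this sketch does not address.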

Qualitative research is increasingly advocated in the field of health economics.16,17 The term qualitative research refers to a broad range of philosophies, approaches, and methods used to acquire an in-depth understanding or explanation of people’s perceptions.18–21 In the context of preference research, a key strength of qualitative methods is their ability to collect important contextual data alongside quantitative preference data. This strength can be realized only if studies are conducted appropriately and reported with sufficient clarity that readers can understand the approach used and the interpretation of the findings.

There is some evidence that stated preference methods, other than DCEs, have benefited from the use of qualitative research methods in order to provide a deeper understanding of their results.22–24 General guidelines advising on best practice for healthcare DCEs state the importance of qualitative research methods in the design of the DCE survey.25,26 Some academics have made specific recommendations for the application of qualitative research methods alongside DCEs, paying particular attention to the identification of attributes and levels.27–29 However, there has been no explicit investigation of how well these recommendations have been translated into practice or the perceived usefulness of the qualitative methods in this context.

This study aimed to identify studies reporting the use of qualitative research methods to inform the design and/or interpretation of healthcare-related DCEs and explore the perceived usefulness of such methods. The objectives were to 1) identify and quantify the proportion of DCEs using qualitative research methods; 2) investigate the stages in the DCE at which qualitative research methods were used; 3) describe the methods and techniques currently used; 4) evaluate the quality of the reporting of qualitative research when possible; and 5) explore the views of authors of published DCEs about the usefulness of qualitative research methods.

Methods

This study used systematic review methods30 combined with a structured online survey.

Systematic Review

The systematic review focused on identifying all published healthcare DCEs within a defined time period (2001 to June 2012). The focus was on DCEs rather than other stated preference methods such as conjoint analysis, which are grounded in different economic theories and are therefore not directly relevant to this review.31

Inclusion and Exclusion Criteria

The primary inclusion criteria were that the empirical study was healthcare related and used a discrete choice experimental design (also known as choice-based conjoint analysis) with no adaptive elements. Studies from other fields, such as environmental, transport, or food research, were excluded. Conjoint analyses in which respondents were required to rate or rank alternatives were also excluded, as were non-English articles and reviews, guidelines, or protocols.

Search Strategy

An electronic search of MEDLINE (Ovid, 1966 to date) was conducted in June 2012. The strategy exactly replicated that of a published systematic review of DCEs.9 The search terms used were “discrete choice experiment(s),” “discrete choice model(l)ing,” “stated preference,” “part-worth utilities,” “functional measurement,” “paired comparisons,” “pairwise choices,” “conjoint analysis,” “conjoint measurement,” “conjoint studies,” and “conjoint choice experiment(s).”

Screening Process

Screening was conducted by an initial reviewer (C.V.) and duplicated by a second reviewer (K.P.). Following the initial screening, if an article could not be rejected with certainty on the basis of its abstract, the full text of the article was obtained for further evaluation. Papers were reviewed a second time to identify any articles relating to the same piece of research, thus limiting the problem of double counting a single study.

Data Extraction and Appraisal

In line with previous systematic reviews,22 this review defined qualitative research methods as any exploration of people’s thoughts or feelings through the collection of verbal or textual data. The studies were initially grouped into 3 categories: 1) those that reported no qualitative research (none); 2) those that contained basic information by reporting the aims, methods, analysis, or results of the qualitative component (basic); and 3) those that indicated that an extensive qualitative component had been conducted by reporting information on the aims, methods, analysis, and results (extensive). Studies in category 3, “extensive,” were deemed to contain sufficient detail for critical appraisal. The categorization of studies was initially conducted by C.V. and repeated by 2 other researchers (Martin Eden and Eleanor Heather).
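The three-way categorization can be read as a simple decision rule over the 4 reporting elements (aims, methods, analysis, results). The Python sketch below is a simplification for illustration only: the function and element names are hypothetical, and it ignores both the reviewer judgment involved in deciding whether an element was reported and the fact that studies could also be classified as “extensive” when the qualitative work appeared in a cited companion publication.

```python
# Hypothetical encoding of the 4 reporting elements used for categorization.
REPORTING_ELEMENTS = frozenset({"aims", "methods", "analysis", "results"})


def classify_reporting(reported: set) -> str:
    """Return 'none', 'basic', or 'extensive' for one DCE study.

    - 'extensive': aims, methods, analysis AND results of the qualitative
      component are all reported.
    - 'basic': at least one, but not all, of these elements is reported.
    - 'none': no qualitative research is reported.
    """
    unknown = set(reported) - REPORTING_ELEMENTS
    if unknown:
        raise ValueError(f"unrecognized reporting elements: {sorted(unknown)}")
    if set(reported) == REPORTING_ELEMENTS:
        return "extensive"
    if reported:
        return "basic"
    return "none"


if __name__ == "__main__":
    print(classify_reporting(set()))  # none
    print(classify_reporting({"methods"}))  # basic
    print(classify_reporting({"aims", "methods", "analysis", "results"}))  # extensive
```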

Data were extracted from each study, including the country setting, publishing journal, and year of publication. From studies in the “basic” or “extensive” categories, data were extracted about the purpose of the qualitative component, the qualitative research methods used, the approach taken to analyze or synthesize the qualitative data, and any software used in the process.

One researcher (C.V.) extracted data from the studies that reported basic details about the qualitative component of their study. An iterative process of identifying, testing, and critiquing existing appraisal tools with experienced qualitative researchers (Stephen Campbell and Gavin Daker-White) was used to produce a bespoke checklist (Appendix A, available online) for use when reporting qualitative methods used alongside a stated preference study. A separate paper is in preparation that focuses on the development, validation, and suggested use of this bespoke checklist.

Data Synthesis

Microsoft Excel was used to tabulate the extracted data. The data were then summarized and collated into a narrative report describing the findings.

Survey to Authors

An online survey (Appendix C) was designed to determine authors’ experiences and opinions of 1) using qualitative research methods alongside DCEs and 2) communicating the qualitative work they conducted in a journal article. Additional questions covered self-assessment of their own and their coauthors’ expertise in qualitative research, the number of DCEs they had conducted, and whether they agreed with the key findings of the systematic review. A preliminary version of the survey was piloted with 3 researchers experienced with DCEs whose own DCEs were not included in this review (because they were unpublished or in non-health subjects). All journal articles provided an e-mail address for the corresponding author; an online survey was therefore the most feasible method of contacting authors and eliciting their views. Authors were invited to participate via an e-mail (or electronic message) that explained the systematic review and included a brief abstract covering its background, methods, and results. The message also referenced the author’s study included in the systematic review (for authors with multiple included articles, the most recently published one).

Analysis of the survey responses involved production of descriptive statistics for each of the questions. The authors’ free-text comments were not thematically analyzed because of the limited textual data available (some authors chose not to comment).

Results

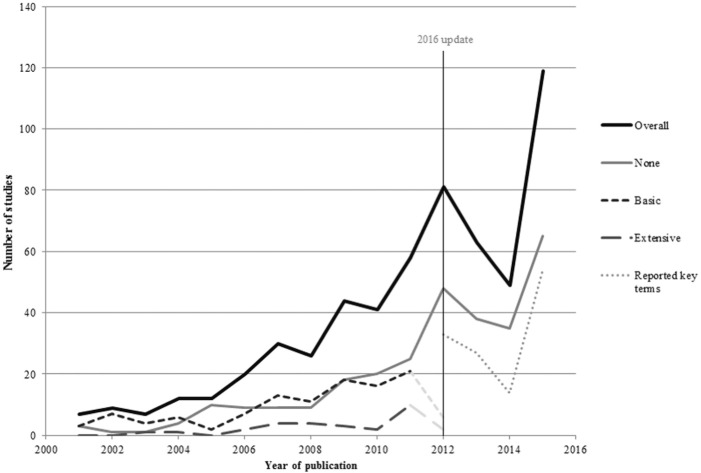

In total, 254 empirical studies published between 2001 and June 2012 were included in the final review (some studies were reported in more than one paper). A list of the included studies can be found in Appendix B. One hundred and twenty-nine studies had already been identified by previous systematic reviews.9,32 The updated search (2008 onward) identified 501 titles and abstracts published since the previous review. Two hundred and eight full papers were retrieved for further assessment, and 148 papers met the inclusion criteria. Figure 1 shows the stages involved in screening and the reasons for rejection of the excluded papers.

Figure 1.

Flow of studies through the systematic review.

Overview of Included DCEs

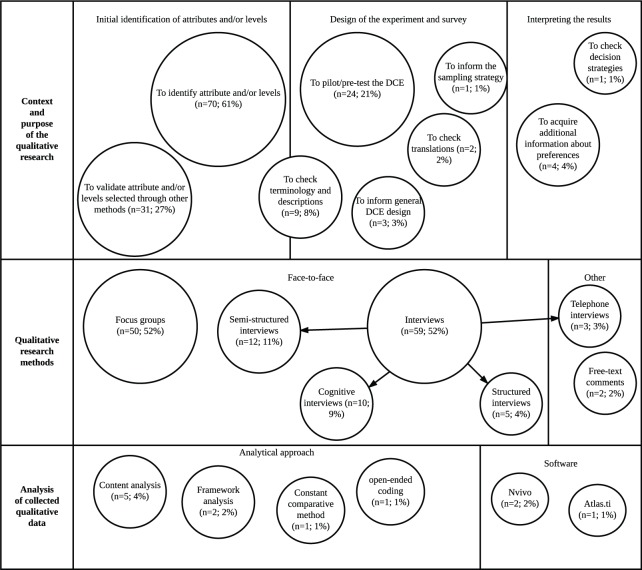

As shown in Figure 2, there was an increase in the number of DCEs published over time, with over half of the studies (n = 154, 56%) published since 2009. Half of the DCEs identified by this review were published in health services research journals (n = 139, 50%) and a third (n = 88) in specialized medical journals. Over half of the DCEs published were conducted in Europe (n = 186, 56%), and a quarter of the DCEs identified were carried out in the UK (n = 84, 25%). Other popular countries included the US (n = 49, 15%), the Netherlands (n = 38, 11%), Australia (n = 26, 8%), and Canada (n = 19, 6%).

Figure 2.

Trends in DCE publishing over time. “Overall” counts papers rather than studies. Data for 2012 are incomplete because the search was conducted in June 2012.

Overall, 111 studies (44%) did not report the use of any qualitative research methods; 114 studies (45%) were rated as “basic,” reporting minimal information on the use of qualitative methods; and 29 studies (11%) reported or explicitly cited extensive use of qualitative methods. A number of studies included in the review that reported no qualitative research went on to discuss the lack of qualitative data as a limitation of their study.33–35

Journals relating to specific disease areas were least likely to include the qualitative component of the research. In contrast, 70% of the DCE studies reporting the use of qualitative research were published in general (non-specialist) medical journals. There were also noticeable geographical patterns: 90% of the 11 DCE studies conducted in Africa reported details of the qualitative component of their research.

Basic Qualitative Research Reported

Almost all authors who reported using some qualitative research described its nature in the methods section of the paper (n = 113, 99%). Almost all of the studies that reported basic qualitative research (n = 113, 99%) used it before the DCE was implemented, in either the design or the piloting phase. Three studies (3%) reported using qualitative research at the end of the DCE to obtain additional information on preferences.36–38

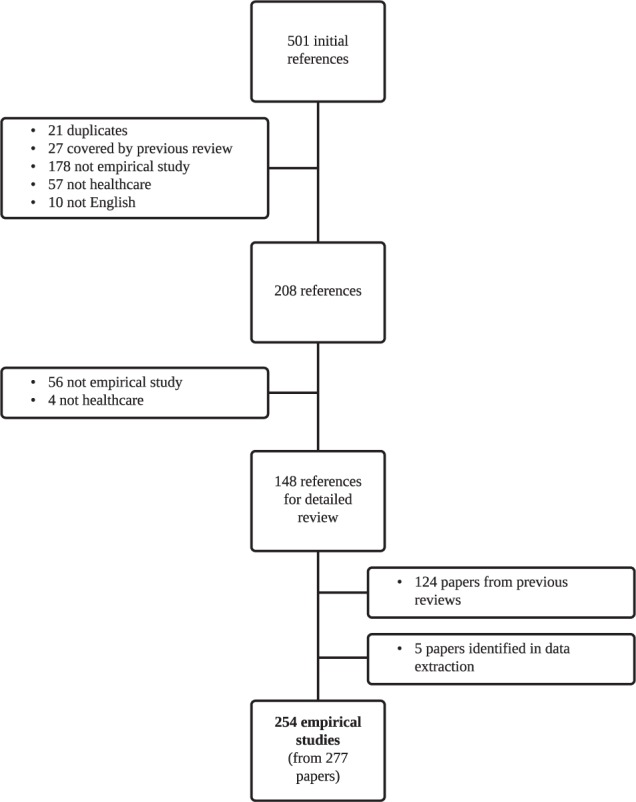

As Figure 3 shows, qualitative research methods were applied in a variety of ways. In the design of the DCE, researchers most commonly sought to identify attributes and/or assign levels (n = 70, 61%) or to validate attributes and/or levels identified through other methods (n = 31, 27%). Researchers also used qualitative research methods more specifically to check terminology, vignettes, and descriptions (n = 9, 8%) and to confirm translations (n = 2, 2%).

Figure 3.

Summary of methods and context of the studies (n = 114) reporting basic details about the qualitative component.

After the design phase, some studies also reported using qualitative research methods in piloting the DCE (n = 24, 21%). In the pilot stage, the methods were used specifically to check respondents’ decision strategies and to determine an appropriate sample for the final DCE. For example, one study39 used interviews to determine an appropriate age range for the final DCE. Another study40 used the qualitative data acquired in the piloting stage to predict preference heterogeneity and thus select an appropriate model for the choice data, and a third study41 used the qualitative research to predict the signs of the coefficients.

The most popular approach to qualitative data collection was interviews (n = 89, 78%), including structured and semistructured interviews. Ten studies (9%) also used cognitive interviews that included debriefing questions at the end of the task as well as a verbal protocol analytical technique called “think-aloud.” Focus groups were another popular approach to data collection (n = 50, 44%).

The most common participants in the qualitative component were patients (n = 46, 40%), healthcare professionals (n = 21, 18%), and experts (n = 11, 10%), although some studies (n = 14, 13%) used a mixture of participants. Of the 114 studies, 71 (62%) conducted the qualitative research with the same population as the DCE study, and 16 (14%) used a different population. In 23 studies (21%), it was unclear whether the populations for the DCE and the qualitative component were the same. In the remaining 4 studies (4%), the qualitative sample comprised the same individuals who completed the DCE survey.

Although analysis is a crucial step in drawing reliable and valid conclusions from qualitative data, only a minority of studies described their approach to analyzing the qualitative data (n = 15, 7%). Of these 15 studies, 5 reported using content analysis36,42–45 and 2 (2%) reported using framework analysis.46,47 Other analytical approaches included grounded theory methods such as the constant comparative method48 and open-ended coding.49 Three studies reported using specialist qualitative software: 2 studies36,50 (2%) used NVivo, and 1 study51 used Atlas.ti.

Extensive Qualitative Research Reported

Seven DCE studies described their use of qualitative research extensively within the main text of the paper.52–58 A further 22 studies were identified as having conducted extensive qualitative research by following up the references to the qualitative component of the work; these details tended to be reported in other peer-reviewed journals (n = 17) and commissioned reports (n = 5). Table 1 summarizes, for each of these studies, where the qualitative research is reported (the main text of the DCE paper or a separate publication), its application, the methods used, and the analysis conducted.

Table 1.

Description of the Qualitative Research Contained in Extensively Detailed Studies

| Authors, Country, Cited Research | Methods | Context | Analysis |
|---|---|---|---|
| Baker et al.50 (UK) | Focus groups | To identify attributes and levels | Thematic analysis |
| Bridges et al.51 (multi-country) | Open-ended interviews; open-ended telephone interviews | To identify attributes and levels; to compare to quantitative results | Frequency analysis and IPA |
| Bridges et al.52 (Germany) | Unstructured interviews | To understand how respondents complete the choice task (trading behavior) | Thematic analysis |
| Cheraghi-Sohi et al.54,87 (UK) | Think-aloud interviews | To understand how respondents complete the choice task (trading behavior) | Coded using a literature-derived framework |
| Fitzpatrick et al.70 (US); see also Fitzpatrick et al.88,89 | Semistructured interviews | To identify attributes and levels | Constant comparative method in Atlas.ti and “open-coding” |
| Gerard et al.56; see also Salisbury et al.90 | Ethnographical observation study; semistructured interviews | To identify attributes and levels; to refine descriptions of attributes | Constant comparative method in Atlas.ti |
| Grindrod et al.64 (Canada); see also Grindrod et al.91 | Focus groups | To identify attributes and levels | IPA content analysis |
| Hall et al.92 (Australia); see also Haas et al.93 | Semistructured interviews | To identify attributes and levels | Thematic analysis |
| Haughney et al.94 (multi-country); see also Haughney et al.95 | Semistructured interviews | To identify attributes and levels | Thematic analysis |
| Herbild et al.96 (US); see also Herbild97 | Focus groups | To identify attributes and levels | Thematic analysis |
| Hsieh et al.65 (US); see also Hsieh et al.98 | Focus groups | To identify attributes and levels | Content analysis using Atlas.ti |
| Lagarde et al.66 (Ghana); see also Smith Paintain et al.99 | Semistructured interviews | To identify attributes and levels | Content analysis using NVivo |
| Lloyd et al.63 (UK); see also Nafees et al.100 | Focus groups | To validate attributes and levels | Content analysis |
| Mark and Swait101 (US); see also Mark et al.102 | Focus groups | To identify attributes and levels | Thematic analysis |
| Morton et al.103 (Australia); see also Morton et al.104 | Semistructured interviews | To identify attributes and levels | Thematic synthesis |
| Naik-Panvelkar et al.68 (Australia); see also Naik-Panvelkar et al.1 | Semistructured interviews | To identify attributes and levels | Thematic analysis |
| Payne et al.59 (UK); see also Fargher et al.105 | Semistructured interviews; focus groups | To identify attributes and levels | Constant comparative analysis |
| Pitchforth et al.49 (UK) | Focus groups | To identify attributes and levels; to inform quantitative (subgroup) analysis | Constant comparative analysis |
| Potoglou et al.106 (UK); see also Netten et al.107 | Interviews | To identify attributes and levels | Thematic analysis |
| Poulos et al.60 (Vietnam); see also Nghi et al.108 | Interviews; focus groups | To identify attributes and levels | Theme-based code-book in NVivo |
| Richardson et al.61 (UK); see also Kennedy et al.109 | Focus groups; semistructured interviews | To identify attributes and levels | Framework analysis |
| Roux et al.55 (UK) | Focus groups | To identify attributes and levels | Thematic analysis |
| Ryan et al.57 (Australia); see also Netten et al.110 | Interviews | Piloting and pretesting | Content analysis |
| Ryan et al.53 (UK) | Think-aloud interviews | To understand how respondents complete the choice task (trading behavior) | Charting approach |
| Scotland et al.67 (UK); see also Cheyne et al.111 | Group interviews | To identify attributes and levels | Latent content analysis |
| Tappenden et al.58 (Australia); see also Bryan et al.112 | Semistructured interviews; semistructured interviews (telephone) | To identify attributes and levels | Open coding |
| Turner et al.71 (UK); see also Baker et al.113 | Semistructured interviews | To identify attributes and levels | Open coding (moving to structured) using NUDist software and NVivo |
| Van Empel et al.69 (multi-country); see also Dancet et al.114 | Focus groups | To identify attributes and levels | Constant comparative content analysis with a coding tree |
| Vujicic et al.62 (Vietnam); see also Witter et al.115 | Semistructured interviews; focus groups | To identify attributes and levels | Coding in NVivo |

“See also” directs readers to the qualitative study. IPA = interpretative phenomenological analysis.

Most of the studies (n = 25, 86%) reported the use of qualitative research methods to identify or validate attributes and/or levels for use in the DCE. Three studies55–57 used qualitative research methods to understand more about how respondents completed the choice task presented. Two studies52,55 also used the qualitative research to complement the quantitative analysis. Other studies used qualitative research methods to pilot and refine the survey.59,60

The most common data collection approach was interviews (n = 20, 69%). These interviews were mostly semistructured (n = 12, 41%) and face-to-face, although 2 studies used telephone interviews.54,61 Of the 3 studies55–57 using qualitative research to understand more about how people completed the DCE task, 2 of these56,57 used a think-aloud interview approach.

A number of studies also used focus groups (n = 13, 45%), and 4 studies used a combination of focus groups and interviews in their qualitative study.62–65 One study59 used the results of an ethnographic observational study to identify attributes and levels for the DCE and used semistructured interviews to refine the training materials and descriptions.

Most studies simply stated that they used thematic analysis (n = 10, 34%) or content analysis (n = 5, 17%)60,66–69 to categorize the qualitative data collected. One study also reported the use of a “latent” content approach to discover underlying themes.70 One study71 reported using thematic synthesis, a type of thematic analysis that involves a more explicit refinement of themes (possibly from multiple studies) and is well suited to reducing qualitative data to a small number of attributes and levels.

Other analytical approaches included framework analysis (n = 3, 10%)63,64,71 and a related approach called charting.56 Seven studies used constant comparative analysis (n = 5, 17%)52,59,62,72,73 or open coding (n = 3, 10%)61,73,74, at least in the initial stages of analysis; one study73 used both. Two studies54,67 used interpretative phenomenological analysis (IPA), which often takes an open-coding approach rather than relying on preexisting themes or frameworks. The software used was not always reported, but the most commonly reported packages were NVivo59,63,65,69 (n = 4, 14%) and Atlas.ti59,68,73 (n = 3, 10%).

Survey Results

A total of 143 studies (114 “basic” and 29 “extensive”) reported at least basic use of qualitative research methods, and all authors of these studies were invited to complete the survey. As some corresponding authors had multiple studies included in the review, 91 individual authors were sampled. After the first e-mail, sent on 1 May 2013, 38 authors completed the survey. Four authors declined to take part; for example, one author had not practiced in the field for a few years and so could not sufficiently recall his or her experiences, and another was a statistician who had been involved only in the DCE analysis. The questionnaire closed on 30 June 2013 with a total of 53 completed or partially completed responses, giving an overall response rate of 58%.
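The overall response rate follows from the figures above, assuming that the 91 individual authors invited form the denominator and that partially completed responses are counted as responses:

$$\text{response rate} = \frac{53 \ \text{responses}}{91 \ \text{authors invited}} \approx 0.582 \approx 58\%$$

By the same calculation, the 38 responses received after the first e-mail correspond to about $38/91 \approx 42\%$ of invited authors.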

Table 2 provides a summary of the authors’ responses to each of the survey questions and examples of free-text comments provided by authors. These free-text comments are presented to illuminate the quantitative findings.

Table 2.

Surveyed Authors’ Responses

| Survey question and response options | n | % | Key quotes from free text |
|---|---|---|---|
| How many DCEs in healthcare have you published, either as the first author or as a coauthor? | | | |
| 1 | 9 | 17 | |
| 2 | 10 | 19 | |
| 3 | 9 | 17 | |
| 4 | 0 | 0 | |
| 5–9 | 16 | 30 | |
| 10+ | 9 | 17 | |
| Why do you think there is this apparent absence/limited reporting of qualitative research methods? Some possible reasons are listed below, please tick all which apply. Qualitative research in DCEs . . . | | | Reporting these details could cause criticism by the reviewers and compromise acceptance of publication. (Author ID27) What is presented in a manuscript often is limited because of word limits or because some of the qualitative research is somewhat informal. (Author ID19) |
| Is not of interest to most of my peers. | 11 | 22 | |
| Is not of interest to journals. | 22 | 44 | |
| Is not of interest to funders. | 4 | 8 | |
| Is not important in the design of health DCEs. | 3 | 6 | |
| Does not affect the study outcomes. | 2 | 4 | |
| Is too complicated to report in detail. | 26 | 52 | |
| Is too time consuming to conduct properly. | 10 | 20 | |
| Other reasons | 28 | 56 | |
| For this question, please think about the study mentioned in the invitation e-mail. Was a member of the research team an expert in qualitative research? | | | Helping to disseminate guidelines/suggestions useful to report accurately but concisely details on qualitative research, in a way that it is accepted by the reviewers/readers, could help improve the transparency of the studies, hence their quality and results reliability. (Author ID27) |
| Yes, I have expertise in qualitative research. | 13 | 25 | |
| Yes, a member of the research team had expertise in qualitative research. | 31 | 58 | |
| Do not know. | 1 | 2 | |
| No, there was no expert in qualitative research in the research team. | 8 | 15 | |
| Do you think the paper accurately reflected the amount of qualitative research undertaken in the study? | | | I have suggested to editors to incorporate a material section in the webpage including transcripts of the focus groups, and in general all the qualitative work. (Author ID36) |
| Yes | 26 | 50 | |
| No | 24 | 46 | |
| Don’t know | 2 | 4 | |
| A key finding of my systematic review of DCEs in healthcare was that many studies report either limited or no qualitative research methods. Does this finding agree with your experience of reading or conducting healthcare DCEs? | | | The most important step: identifying and reporting the methods for selecting attributes and their levels in a consistent manner has received less attention. (Author ID11) |
| Yes | 42 | 79 | |
| No | 11 | 21 | |
| Do you feel that the qualitative research completed as part of this healthcare DCE added value? (n and % shown as: in this DCE / in DCEs generally) | | | The subjects were asked WHY they made that choice. This yielded wonderful insight into the beliefs and misconceptions behind prevention choices. (Author ID22) |
| It made a substantial improvement. | 29 / 31 | 58 / 74 | |
| It added a little value. | 21 / 11 | 42 / 26 | A necessary component of doing high quality DCE studies. (Author ID1) |
| None, it did not add any value at all. | 0 / 0 | 0 / 0 | |
| No, it hindered the study and had a negative role. | 0 / 0 | 0 / 0 | |

All respondents who answered the question on whether qualitative research methods added value to their DCE (n = 50, 100%) stated that they did. Respondents also reported that qualitative research methods added value to DCEs in general, with 74% (n = 31) stating that they made a “substantial improvement.” However, one respondent offered the following comment, which suggests some reservations about the use of qualitative research methods:

Qualitative methods often require a subjective component that doesn’t fit well with economics or quantitative methods. I am not convinced that qualitative work is always needed. (Author ID40)

A key finding of the systematic review was the poor reporting of qualitative research in journal articles. Most survey respondents (n = 42, 79%) agreed that this finding matched their experience of reading or conducting healthcare DCEs. When asked why reporting might be limited, the most frequently selected reason was that qualitative research was too complicated to report in detail (n = 26, 52%). Some respondents (n = 11, 22%) stated that qualitative research would not be of interest to their peers, and 22 (44%) felt it was not of interest to journals. Other respondents (n = 4, 8%) reported that they did not believe qualitative research was of interest to funders.

Three-quarters of the respondents (n = 40, 75%) did not report having personal expertise in qualitative research methods. Over half (n = 31, 58%) had a qualitative researcher as part of their team, but others (n = 8, 15%) did not. As shown in Table 2, one author commented on the lack of guidance on reporting standards for qualitative research conducted alongside a DCE.

Discussion

Existing systematic reviews of healthcare DCEs9,11,75 have not focused on the role of qualitative research, and none has contacted authors directly to determine whether the results detailed in their papers were subject to reporting bias. This review found that 89% (n = 225) of identified DCEs did not report the qualitative component of the study in detail. The survey of authors identified a variety of possible reasons for this lack of detail and for the complete omission of qualitative research in 44% (n = 111) of studies. One potential reason for the paucity of reporting is the lack of explicit reporting guidelines for qualitative research methods used alongside DCEs.

Numerous guidelines exist for conducting and appraising qualitative research in general.76–82 However, in the context of DCEs, detailed guidelines on the use of qualitative research methods only exist for the identification of attributes and levels.27,28 It was difficult to ascertain the degree to which these guidelines were followed due to the lack of detail reported in published DCEs. The systematic review found that the most common application of qualitative research was to select attributes and levels for use in the DCE; other applications, for which guidelines do not exist, were also identified.

Qualitative research was frequently used for pretesting or piloting the DCE survey and for refining or checking terminology. The review also found studies using qualitative research methods in other ways: for example, to predict preference heterogeneity, to select and specify a regression model, to identify the motives behind “irrational responses,” or to test for violations of the key axioms that underpin DCEs as a method.40,56,83 These broad-ranging applications show that qualitative research methods serve many purposes within DCE research. Best practice guidelines exist for many important steps in a DCE study, such as the experimental design84 and the analysis of choice data.85 Similar guidelines are needed for the broad range of applications of qualitative research methods; such guidelines may help improve both the quality and the reporting of qualitative research conducted alongside healthcare DCEs.

The author survey was sent to researchers with regular experience of designing and/or analyzing DCEs, and most respondents had published more than one DCE. The sample was therefore knowledgeable and likely to include experienced researchers whose views are representative of the wider research area. The survey provided evidence that researchers who design and analyze DCEs regard qualitative research methods as beneficial in a health DCE study. The underreporting of this beneficial and informative component could be rectified by clear and explicit reporting guidelines for all applications of qualitative research methods in the context of DCEs, and by the use of online appendices, particularly in journals with strict word limits. Otherwise, continued underreporting may erroneously imply that qualitative research is not useful in healthcare DCEs.

An updated rapid review was conducted covering all healthcare DCEs published between 1990 and February 2016, identified through a systematic search of the MEDLINE database. The full text of the 626 healthcare DCEs identified was then searched for key terms relating to qualitative research, drawn from a review of qualitative research in contingent valuation studies.22 Further details of the update are provided in Appendix D. The results of the updated review were consistent with those of this systematic review: Few DCE studies report the qualitative component of their research, and few provide details about the analytical approaches used to interpret the textual data.
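
The full-text key-term search described above can be sketched as a simple case-insensitive pattern match. This is a minimal Python illustration only: the term list (`KEY_TERMS`) and the helper names `matched_terms` and `screen` are hypothetical, since the actual terms adapted from the contingent valuation review are not listed here.

```python
import re

# Hypothetical key terms; the review's actual list is not reproduced here.
KEY_TERMS = (
    "qualitative",
    "focus group",
    "interview",
    "think aloud",
    "thematic analysis",
    "content analysis",
    "framework analysis",
)


def matched_terms(full_text: str, terms: tuple[str, ...] = KEY_TERMS) -> list[str]:
    """Return the key terms that appear in a paper's full text (case-insensitive)."""
    found = []
    for term in terms:
        # Join words with whitespace-or-hyphen so "think-aloud" matches "think aloud";
        # the trailing \w* also accepts plurals and inflections such as "interviews".
        pattern = r"\b" + r"[\s-]+".join(re.escape(w) for w in term.split()) + r"\w*"
        if re.search(pattern, full_text, flags=re.IGNORECASE):
            found.append(term)
    return found


def screen(papers: dict[str, str]) -> dict[str, list[str]]:
    """Map each (hypothetical) paper ID to the key terms found in its text."""
    return {paper_id: matched_terms(text) for paper_id, text in papers.items()}


# Usage with invented snippets standing in for full texts.
papers = {
    "dce_001": "Attributes were identified through semi-structured interviews and a focus group.",
    "dce_002": "A D-efficient design was generated and analysed with a mixed logit model.",
}
print(screen(papers))
```

A keyword hit only flags a paper for closer reading; it does not establish that qualitative research was conducted (for example, "interview" may describe an interviewer-administered survey), so flagged papers would still need manual checking.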

Limitations

A limitation of the systematic review was its focus on papers indexed in a single database, MEDLINE. This search strategy was chosen because the review updated and replicated a previously published review by De Bekker-Grob and others.6 Those authors chose MEDLINE because searching other databases, such as PubMed or Embase, identified duplicate papers rather than additional studies. Another limitation of the systematic review was its reliance on what was reported in the published papers; this was partially remedied by the survey of authors. However, the reliance on reporting was a particular challenge when assessing the analytical methods used by authors. For example, “content analysis” can refer to multiple approaches to interpreting qualitative data.86 It was often unclear whether authors had quantified themes numerically (a summative approach) or had taken a more conventional approach of developing themes either from the text or from an initial framework.
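
To illustrate the distinction, a summative approach reduces text to counts of predefined keywords per code, whereas a conventional approach derives codes from the text itself and cannot be reduced to a lookup table. The hypothetical Python sketch below shows only the counting step; the coding frame `CODES`, the function `summative_counts`, and the example quotes are invented for illustration.

```python
import re
from collections import Counter

# Hypothetical coding frame: each code is signalled by a set of keywords.
CODES = {
    "waiting time": ("wait", "waiting", "delay"),
    "cost": ("cost", "price", "pay"),
    "staff": ("doctor", "nurse", "pharmacist"),
}


def summative_counts(transcripts: list[str], codes: dict[str, tuple[str, ...]] = CODES) -> Counter:
    """Count exact keyword occurrences for each code across all transcripts."""
    totals: Counter = Counter()
    for text in transcripts:
        # Lower-case and split into word tokens (apostrophes kept, e.g. "didn't").
        words = re.findall(r"[a-z']+", text.lower())
        for code, keywords in codes.items():
            totals[code] += sum(words.count(k) for k in keywords)
    return totals


# Invented interview excerpts, for illustration only.
quotes = [
    "I didn't mind the wait, but the cost was a worry.",
    "The nurse explained everything; the delay was long and the waiting room busy.",
]
print(summative_counts(quotes).most_common())
```

Exact word matching misses synonyms, negation, and context, which is why summative content analysis normally pairs counts with interpretation of how the words are used; stating which of these steps was taken is the kind of detail that was often missing from published DCEs.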

Arguably, a more in-depth account of authors’ views and experiences could have been collected (for example, through one-to-one interviews), and a thorough thematic analysis of the free-text comments could have provided more robust results. The survey sampled only authors who reported using qualitative research methods. Authors who did not report the qualitative component of their DCE study may have omitted details because the research did not add value, which could bias the survey results. However, the survey results helped to explain the key findings of the systematic review, such as the drivers behind the lack of detail, and it is unlikely that further analysis or review would have identified anything that would significantly alter the findings.

An emerging variant of the DCE, the best-worst scaling (BWS) DCE, is an increasingly popular form of preference elicitation.87,88 In a BWS experiment, respondents select their most and least preferred items, which arguably reveals more about the strength of their preferences from a survey containing fewer choice-sets.89 BWS-DCE studies were not included in this systematic review because the search strategy, chosen to maintain methodological consistency with the previous DCE review,9 was not designed to capture these types of choice studies.
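
The claim that BWS reveals strength of preference can be made concrete with a simple counting analysis: for each item, subtract the number of times it was chosen as worst from the number of times it was chosen as best, then divide by the number of times it was shown. The Python sketch below implements this standardized best-minus-worst score; the function name `bw_scores`, the item labels, and the responses are hypothetical, and counting scores are a descriptive summary rather than a substitute for model-based estimation.

```python
from collections import Counter
from typing import Iterable

# One response to a choice-set: (items shown, item chosen as best, item chosen as worst).
Response = tuple[tuple[str, ...], str, str]


def bw_scores(responses: Iterable[Response]) -> dict[str, float]:
    """Standardized best-minus-worst score per item: (times best - times worst) / times shown."""
    best: Counter = Counter()
    worst: Counter = Counter()
    shown: Counter = Counter()
    for items, b, w in responses:
        if b == w or b not in items or w not in items:
            raise ValueError("best and worst must be two different items from the choice-set")
        shown.update(items)
        best[b] += 1
        worst[w] += 1
    # Scores run from -1 (always worst when shown) to +1 (always best when shown).
    return {item: (best[item] - worst[item]) / n for item, n in shown.items()}


# Hypothetical responses from one respondent to three choice-sets.
responses = [
    (("short wait", "low cost", "same doctor"), "same doctor", "low cost"),
    (("short wait", "low cost", "evening hours"), "short wait", "evening hours"),
    (("same doctor", "evening hours", "short wait"), "same doctor", "evening hours"),
]
for item, score in sorted(bw_scores(responses).items(), key=lambda kv: -kv[1]):
    print(f"{item}: {score:+.2f}")
```

Here "same doctor" scores +1.00 because it was picked as best every time it appeared, while "evening hours" scores -1.00; a standard DCE choice-set would record only the single preferred option, so this intensity information would not be observed directly.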

Another limitation of this study was the original review’s focus on papers published between 2001 and 2012. To address this, a rapid update to the review was conducted. Its results matched the findings of the original systematic review, supporting both the validity of the original review and the conclusion that qualitative research methods are inadequately reported in healthcare DCEs.

Conclusion

The results of the systematic review and survey of authors showed that DCE researchers were using qualitative research methods to answer multiple research questions and that these methods add value to DCE studies. However, the review demonstrated a paucity of detail about the qualitative component in most DCE articles. This lack of reporting could lead researchers to infer that qualitative research is not an important component of a DCE study. Authors and journal editors should make provision for reporting the details of the qualitative component of DCE research, for example through online appendices. Further research is required to develop guidelines for reporting qualitative research methods in stated preference studies, particularly for uses other than the identification of attributes and levels, which current guidelines do not cover.

Supplementary Material

Acknowledgments

The authors thank Martin Eden and Eleanor Heather for their help with screening papers included in this review. We also thank Dr. Gavin Daker-White and Professor Stephen Campbell for their assistance in developing the bespoke appraisal tool. We are grateful to Gene Sim, who assisted with an update to the review. We would like to acknowledge the discussions with colleagues who attended a seminar that presented preliminary results of the review and survey at the Health Economics Unit, University of Birmingham. In particular, we thank Dr. Emma Frew for her considered suggestions at this seminar.

Footnotes

Financial support for this study was provided entirely by a National Institute for Health Research (NIHR) School for Primary Care Research (SPCR) PhD Studentship for Caroline Vass. The funding agreement ensured the authors’ independence in designing the study, interpreting the data, and writing and publishing the report. The views expressed in this article are those of the authors and not of the funding body.

The authors have no financial conflicts of interest.

Under the explicit guidance of the University of Manchester’s Research Ethics Committee, no ethical approval was required for this study.

Supplementary material for this article is available on the Medical Decision Making Web site at http://journals.sagepub.com/home/mdm.

References

- 1. Naik-Panvelkar P, Armour C, Saini B. Community pharmacy-based asthma services—what do patients prefer? J Asthma. 2010;47:1085–93.

- 2. Lancaster KJ. A new approach to consumer theory. J Polit Econ. 1966;74:132–57.

- 3. McFadden D. Conditional logit analysis of qualitative choice behaviour. In: Zarembka P, ed. Frontiers in Econometrics. New York: Academic Press; 1974. p 105–42.

- 4. Hensher D. An exploratory analysis of the effect of numbers of choice sets in designed choice experiments: an airline choice application. J Air Transp Manag. 2001;7:373–9.

- 5. Smyth RL, Watzin MC, Manning RE. Investigating public preferences for managing Lake Champlain using a choice experiment. J Environ Manage. 2009;90:615–23.

- 6. De Bekker-Grob EW, Ryan M, Gerard K. Discrete choice experiments in health economics: a review of the literature. Health Econ. 2012;21:145–72.

- 7. Harrison M, Rigby D, Vass C, Flynn T, Louviere J, Payne K. Risk as an attribute in discrete choice experiments: a critical review. Patient. 2014;7:151–70.

- 8. Ryan M, Gerard K. Using discrete choice experiments to value health care programmes: current practice and future research reflections. Appl Health Econ Health Policy. 2003;2:55–64.

- 9. Flynn TN, Bilger M, Malhotra C, Finkelstein EA. Are efficient designs used in discrete choice experiments too difficult for some respondents? A case study eliciting preferences for end-of-life care. Pharmacoeconomics. 2015;34:273–84.

- 10. Hall J, Viney R, Haas M, Louviere J. Using stated preference discrete choice modeling to evaluate health care programs. J Bus Res. 2004;57:1026–32.

- 11. Lancsar E, Louviere J. Deleting “irrational” responses from discrete choice experiments: a case of investigating or imposing preferences? Health Econ. 2006;15:797–811.

- 12. Lagarde M. Investigating attribute non-attendance and its consequences in choice experiments with latent class models. Health Econ. 2012;22:554–67.

- 13. Coast J. The appropriate uses of qualitative methods in health economics. Health Econ. 1999;8:345–53.

- 14. Coast J, McDonald R, Baker R. Issues arising from the use of qualitative methods in health economics. J Health Serv Res Policy. 2004;9:171–6.

- 15. Braun V, Clarke V. Using thematic analysis in psychology. Qual Res Psychol. 2006;3:77–101.

- 16. Corbin J, Strauss A. Basics of Qualitative Research: Techniques and Procedures for Developing Grounded Theory. 3rd ed. Thousand Oaks, CA: Sage Publications; 2008.

- 17. Hsieh H-F, Shannon SE. Three approaches to qualitative content analysis. Qual Health Res. 2005;15:1277–88.

- 18. Pope C, Mays N. Qualitative Research in Health Care. 3rd ed. Oxford, UK: John Wiley & Sons; 2008.

- 19. Baker R, Robinson A, Smith R. How do respondents explain WTP responses? A review of the qualitative evidence. J Socio Econ. 2008;37:1427–42.

- 20. Chilton SM, Hutchinson WG. A qualitative examination of how respondents in a contingent valuation study rationalise their WTP responses to an increase in the quantity of the environmental good. J Econ Psychol. 2003;24:65–75.

- 21. Baker R, Robinson A. Responses to standard gambles: are preferences “well constructed”? Health Econ. 2004;13:37–48.

- 22. Bridges JF, Hauber AB, Marshall D, Lloyd A, Prosser L, Regier DA, Johnson F, Mauskopf J. Conjoint analysis applications in health-a checklist: a report of the ISPOR Good Research Practices for Conjoint Analysis Task Force. Value Health. 2011;14:403–13.

- 23. Lancsar E, Louviere J. Conducting discrete choice experiments to inform healthcare decision making: a user’s guide. Pharmacoeconomics. 2008;26:661–77.

- 24. Coast J, Al-Janabi H, Sutton E, Horrocks SA, Vosper J, Swancutt DR, Flynn T. Using qualitative methods for attribute development for discrete choice experiments: issues and recommendations. Health Econ. 2012;21:730–41.

- 25. Coast J, Horrocks SA. Developing attributes and levels for discrete choice experiments using qualitative methods. J Health Serv Res Policy. 2007;12:25–30.

- 26. Kløjgaard M, Bech M, Søgaard R. Designing a stated choice experiment: the value of a qualitative process. J Choice Model. 2012;5:1–18.

- 27. Centre for Reviews and Dissemination (CRD). Systematic Reviews. CRD’s Guidance for Undertaking Reviews in Health Care. Heslington, UK: University of York; 2008.

- 28. Louviere J, Flynn T, Carson RT. Discrete choice experiments are not conjoint analysis. J Choice Model. 2010;3:57–72.

- 29. Naik-Panvelkar P, Armour C, Saini B. Discrete choice experiments in pharmacy: a review of the literature. Int J Pharm Pract. 2013;21:3–19.

- 30. McTaggart-Cowan HM, Shi P, Fitzgerald JM, Anis A, Kopec JA, Bai TR, Soon JA, Lynd LD. An evaluation of patients’ willingness to trade symptom-free days for asthma-related treatment risks: a discrete choice experiment. J Asthma. 2008;45:630–8.

- 31. Bhatt M, Currie GR, Auld MC, Johnson DW. Current practice and tolerance for risk in performing procedural sedation and analgesia on children who have not met fasting guidelines: a Canadian survey using a stated preference discrete choice experiment. Acad Emerg Med. 2010;17:1207–15.

- 32. Hundley V, Ryan M. Are women’s expectations and preferences for intrapartum care affected by the model of care on offer? BJOG. 2004;111:550–60.

- 33. Ashcroft DM, Seston E, Griffiths CE. Trade-offs between the benefits and risks of drug treatment for psoriasis: a discrete choice experiment with UK dermatologists. Br J Dermatol. 2006;155:1236–41.

- 34. Caldow J, Bond CM, Ryan M, Campbell NC, Miguel FS, Kiger A, Lee A. Treatment of minor illness in primary care: a national survey of patient satisfaction, attitudes and preferences regarding a wider nursing role. Health Expect. 2007;10:30–45.

- 35. Kruk ME, Johnson JC, Gyakobo M, Agyei-Baffour P, Asabir K, Kotha SR, Kwansah J, Nakua E, Snow RC, Dzodzomenyo M. Rural practice preferences among medical students in Ghana: a discrete choice experiment. Bull World Health Organ. 2010;88:333–41.

- 36. Gyrd-Hansen D, Slothuus U. The citizen’s preferences for financing public health care: a Danish survey. Int J Health Care Finance Econ. 2002;2:25–36.

- 37. Kjaer T, Gyrd-Hansen D. Preference heterogeneity and choice of cardiac rehabilitation program: results from a discrete choice experiment. Health Policy. 2008;85:124–32.

- 38. Bech M. Politicians’ and hospital managers’ trade-offs in the choice of reimbursement scheme: a discrete choice experiment. Health Policy. 2003;66:261–75.

- 39. Hanson K, McPake B, Nakamba P, Archard L. Preferences for hospital quality in Zambia: results from a discrete choice experiment. Health Econ. 2005;14:687–701.

- 40. Johnson F, Özdemir S, Manjunath R, Hauber AB, Burch SP, Thompson TR. Factors that affect adherence to bipolar disorder treatments: a stated-preference approach. Med Care. 2007;45:545–52.

- 41. Mangham LJ, Hanson K. Employment preferences of public sector nurses in Malawi: results from a discrete choice experiment. Trop Med Int Health. 2008;13:1433–41.

- 42. Schwappach DL, Mulders V, Simic D, Wilm S, Thurmann PA. Is less more? Patients’ preferences for drug information leaflets. Pharmacoepidemiol Drug Saf. 2011;20:987–95.

- 43. Porteous T, Ryan M, Bond CM, Hannaford P. Preferences for self-care or professional advice for minor illness: a discrete choice experiment. Br J Gen Pract. 2006;56:911–7.

- 44. Burr J, Kilonzo M, Vale L, Ryan M. Developing a preference-based Glaucoma Utility Index using a discrete choice experiment. Optom Vis Sci. 2007;84:797–808.

- 45. Gidman W, Elliott RA, Payne K, Meakin GH, Moore J. A comparison of parents and pediatric anesthesiologists’ preferences for attributes of child daycase surgery: a discrete choice experiment. Paediatr Anaesth. 2007;17:1043–52.

- 46. Lim MK, Bae EY, Choi S-E, Lee EK, Lee T-J. Eliciting public preference for health-care resource allocation in South Korea. Value Health. 2012;15:S91–4.

- 47. Lim JNW, Edlin R. Preferences of older patients and choice of treatment location in the UK: a binary choice experiment. Health Policy. 2009;91:252–7.

- 48. Hodgkins P, Swinburn P, Solomon D, Yen L, Dewilde S, Lloyd A. Patient preferences for first-line oral treatment for mild-to-moderate ulcerative colitis: a discrete-choice experiment. Patient. 2012;5:33–44.

- 49. Pitchforth E, Watson V, Tucker J, Ryan M, van Teijlingen E, Farmer J, Ireland J, Thomson E, Kiger A, Bryers H. Models of intrapartum care and women’s trade-offs in remote and rural Scotland: a mixed-methods study. BJOG. 2008;115:560–9.

- 50. Baker R, Bateman I, Donaldson C, Jones-Lee M, Lancsar E, Loomes G, Mason H, Odejar M, Pinto Prades JL, Robinson A, et al. Weighting and valuing quality-adjusted life-years using stated preference methods: preliminary results from the Social Value of a QALY Project. Health Technol Assess. 2010;14:1–162.

- 51. Bridges JF, Gallego G, Kudo M, Okita K, Han K-H, Ye S-L, Blauvelt BM. Identifying and prioritizing strategies for comprehensive liver cancer control in Asia. BMC Health Serv Res. 2011;11:298.

- 52. Bridges JF, Kinter ET, Schmeding A, Rudolph I, Mühlbacher A. Can patients diagnosed with schizophrenia complete choice-based conjoint analysis tasks? Patient. 2011;4:267–75.

- 53. Ryan M, Watson V, Entwistle V. Rationalising the “irrational”: a think aloud study of discrete choice experiment responses. Health Econ. 2009;18:321–36.

- 54. Cheraghi-Sohi S, Hole AR, Mead N, McDonald R, Whalley D, Bower P, Roland M. What patients want from primary care consultations: a discrete choice experiment to identify patients’ priorities. Ann Fam Med. 2008;6:107–15.

- 55. Roux L, Ubach C, Donaldson C, Ryan M. Valuing the benefits of weight loss programs: an application of the discrete choice experiment. Obes Res. 2004;12:1342–51.

- 56. Gerard K, Salisbury C, Street D, Pope C, Baxter H. Is fast access to general practice all that should matter? A discrete choice experiment of patients’ preferences. J Health Serv Res Policy. 2008;13:3–10.

- 57. Ryan M, Netten A, Skatun D, Smith P. Using discrete choice experiments to estimate a preference-based measure of outcome—an application to social care for older people. J Health Econ. 2006;25:927–44.

- 58. Tappenden P, Brazier J, Ratcliffe J, Chilcott J. A stated preference binary choice experiment to explore NICE decision making. Pharmacoeconomics. 2007;25:685–93.

- 59. Payne K, Fargher EA, Roberts SA, Tricker K, Elliott RA, Ratcliffe J, Newman WG. Valuing pharmacogenetic testing services: a comparison of patients’ and health care professionals’ preferences. Value Health. 2011;14:121–34.

- 60. Poulos C, Yang JC, Levin C, Van Minh H, Giang KB, Nguyen D. Mothers’ preferences and willingness to pay for HPV vaccines in Vinh Long Province, Vietnam. Soc Sci Med. 2011;73:226–34.

- 61. Richardson G, Bojke C, Kennedy A, Reeves D, Bower P, Lee V, Middleton E, Gardner C, Gately C, Rogers A. What outcomes are important to patients with long term conditions? A discrete choice experiment. Value Health. 2009;12:331–9.

- 62. Vujicic M, Shengelia B, Alfano M, Thu HB. Physician shortages in rural Vietnam: using a labor market approach to inform policy. Soc Sci Med. 2011;73:970–7.

- 63. Lloyd A, Nafees B, Barnett A, Heller S, Ploug U, Lammert M, Bogelund M. Willingness to pay for improvements in chronic long-acting insulin therapy in individuals with type 1 or type 2 diabetes mellitus. Clin Ther. 2011;33:1258–67.

- 64. Grindrod KA, Marra CA, Colley L, Tsuyuki RT, Lynd LD. Pharmacists’ preferences for providing patient-centered services: a discrete choice experiment to guide health policy. Ann Pharmacother. 2010;44:1554–64.

- 65. Hsieh Y-H, Gaydos CA, Hogan MT, Uy OM, Jackman J, Jett-Goheen M, Albertie A, Dangerfield DT, Neustadt CR, Wiener ZS, et al. What qualities are most important to making a point of care test desirable for clinicians and others offering sexually transmitted infection testing? PLoS One. 2011;6:e19263.

- 66. Lagarde M, Smith Paintain L, Antwi G, Jones C, Greenwood B, Chandramohan D, Tagbor H, Webster J. Evaluating health workers’ potential resistance to new interventions: a role for discrete choice experiments. PLoS One. 2011;6:e23588.

- 67. Scotland GS, McNamee P, Cheyne H, Hundley V, Barnett C. Women’s preferences for aspects of labor management: results from a discrete choice experiment. Birth. 2011;38:36–46.

- 68. Naik-Panvelkar P, Armour C, Rose JM, Saini B. Patients’ value of asthma services in Australian pharmacies: the way ahead for asthma care. J Asthma. 2012;49:310–6.

- 69. Van Empel IWH, Dancet EAF, Koolman X, Nelen WLDM, Stolk EA, Sermeus W, D’Hooghe TM, Kremer JM. Physicians underestimate the importance of patient-centredness to patients: a discrete choice experiment in fertility care. Hum Reprod. 2011;26:584–93.

- 70. Fitzpatrick E, Coyle DE, Durieux-Smith A, Graham ID, Angus DE, Gaboury I. Parents’ preferences for services for children with hearing loss: a conjoint analysis study. Ear Hear. 2007;28:842–9.

- 71. Turner D, Tarrant C, Windridge K, Bryan S, Boulton M, Freeman G, Baker R. Do patients value continuity of care in general practice? An investigation using stated preference discrete choice experiments. J Health Serv Res Policy. 2007;12:132–7.

- 72. Clark M, Determann D, Petrou S, Moro D, de Bekker-Grob EW. Discrete choice experiments in health economics: a review of the literature. Pharmacoeconomics. 2014;32:883–902.

- 73. Critical Appraisal Skills Programme (CASP). 10 Questions to Help You Make Sense of Qualitative Research. Eaglestone, UK: Public Health Resource Unit; 2006.

- 74. Dixon-Woods M. The problem of appraising qualitative research. Qual Saf Health Care. 2004;13:223–5.

- 75. Long A, Godfrey M. An evaluation tool to assess the quality of qualitative research studies. Int J Soc Res Methodol. 2004;7:181–96.

- 76. National Center for the Dissemination of Disability Research (NCDDR). What are the standards for quality research? NCDDR; 2004. http://ktdrr.org/ktlibrary/articles_pubs/ncddrwork/focus/focus9/Focus9.pdf.

- 77. Pearson A, Field J. JBI Critical Appraisal Checklist for Qualitative Research. Adelaide, Australia: Joanna Briggs Institute; 2011.

- 78. Popay J, Rogers A, Williams G. Rationale and standards for the systematic review of qualitative literature in health services research. Qual Health Res. 1998;8:341–51.

- 79. Walsh D, Downe S. Appraising the quality of qualitative research. Midwifery. 2006;22:108–19.

- 80. Kjaer T, Gyrd-Hansen D, Willaing I. Investigating patients’ preferences for cardiac rehabilitation in Denmark. Int J Technol Assess Health Care. 2006;22:211–8.

- 81. Johnson F, Lancsar E, Marshall D, Kilambi V, Mühlbacher A, Regier D, Bresnahan B, Kanninen B, Bridges JF. Constructing experimental designs for discrete-choice experiments: report of the ISPOR conjoint analysis experimental design good research practices task force. Value Health. 2013;16:3–13.

- 82. Hauber A, Gonzalez JM, Groothuis-Oudshoorn C, Prior T, Marshall DA, Cunningham C, Ijzerman M, Bridges J. Statistical methods for the analysis of discrete-choice experiments: a report of the ISPOR Conjoint Analysis Good Research Practices Task Force. Value Health. 2016;19:300–15.

- 83. Hsieh H-F, Shannon SE. Three approaches to qualitative content analysis. Qual Health Res. 2005;15:1277–88.

- 84. Cheung KL, Wijnen BFM, Hollin IL, Janssen EM, Bridges JF, Evers SMAA, Hiligsmann M. Using best-worst scaling to investigate preferences in health care. Pharmacoeconomics. 2016;34:1195–209.

- 85. Mühlbacher A, Kaczynski A, Zweifel P, Johnson FR. Experimental measurement of preferences in health and healthcare using best-worst scaling: an overview. Health Econ Rev. 2016;6:2.

- 86. Flynn T, Louviere J, Peters T, Coast J. Best-worst scaling: what it can do for health care research and how to do it. J Health Econ. 2007;26:171–89.

- 87. Cheraghi-Sohi S, Bower P, Mead N, McDonald R, Whalley D, Roland M. Making sense of patient priorities: applying discrete choice methods in primary care using “think aloud” technique. Fam Pract. 2007;24:276–82.

- 88. Fitzpatrick E, Graham ID, Durieux-Smith A, Angus D, Coyle D. Parents’ perspectives on the impact of the early diagnosis of childhood hearing loss. Int J Audiol. 2007;46:97–106.

- 89. Fitzpatrick E, Angus D, Durieux-Smith A, Graham ID, Coyle D. Parents’ needs following identification of childhood hearing loss. Am J Audiol. 2008;17:38–49.

- 90. Salisbury C, Banks J, Goodall S, Baxter H, Montgomery A, Pope C, Gerard K, Simons L, Lattimer V, Sampson F, et al. An Evaluation of Advanced Access in General Practice. London, UK: National Co-ordinating Centre for NHS Service Delivery and Organisation Research and Development; 2007.

- 91. Grindrod KA, Rosenthal M, Lynd L, Marra CA, Bougher D, Wilgosh C, Tsuyuki RT. Pharmacists’ perspectives on providing chronic disease management services in the community—part I: current practice environment. Can Pharm J. 2009;142:234–9.

- 92. Hall J, Fiebig DG, King MT, Hossain I, Louviere JJ. What influences participation in genetic carrier testing? Results from a discrete choice experiment. J Health Econ. 2006;25:520–37.

- 93. Haas M, Hall J, De Abreu Lourenco R. It’s What’s Expected: Genetic Testing for Inherited Conditions. CHERE Discussion Paper No. 46. Sydney, Australia: University of Sydney; 2001.

- 94. Haughney J, Fletcher M, Wolfe S, Ratcliffe J, Brice R, Partridge M. Features of asthma management: quantifying the patient perspective. BMC Pulm Med. 2007;7:16.

- 95. Haughney J, Barnes G, Partridge M, Cleland J. The Living & Breathing Study: a study of patients’ views of asthma and its treatment. Prim Care Respir J. 2004;13:28–35.

- 96. Herbild L, Bech M, Gyrd-Hansen D. Estimating the Danish populations’ preferences for pharmacogenetic testing using a discrete choice experiment: the case of treating depression. Value Health. 2009;12:560–7.

- 97. Herbild L. Designing a DCE to outlay patients’ and the publics’ preferences for genetic screening in the treatment of depression. Health Economics Papers (University of Southern Denmark). 2007;1:1–31.

- 98. Hsieh Y-H, Hogan MT, Barnes M, Jett-Goheen M, Huppert J, Rompalo AM, Gaydos CA. Perceptions of an ideal point-of-care test for sexually transmitted infections—a qualitative study of focus group discussions with medical providers. PLoS One. 2011;5:e14144.

- 99. Smith Paintain L, Antwi G, Jones C, Amoako E, Adjei RO, Afrah NA, Greenwood B, Chandramohan D, Tagbor H, Webster J. Intermittent screening and treatment versus intermittent preventive treatment of malaria in pregnancy: provider knowledge and acceptability. PLoS One. 2011;6:e24035.

- 100. Nafees B, Lloyd A, Kennedy-Martin T, Hynd S. How diabetes and insulin therapy affects the lives of people with type 1 diabetes. Eur Diabetes Nurs. 2006;3:92–7.

- 101. Mark T, Swait J. Using stated preference modeling to forecast the effect of medication attributes on prescriptions of alcoholism medications. Value Health. 2003;6:474–82.

- 102. Mark TL, Kranzler HR, Poole VH, Hagen C, McLeod C, Crosse S. Barriers to the use of medications to treat alcoholism. Am J Addict. 2003;12:281–94.

- 103. Morton R, Snelling P, Webster AC, Rose JM, Masterson R, Johnson DW, Howard K. Factors influencing patient choice of dialysis versus conservative care to treat end-stage kidney disease. CMAJ. 2012;184:E277–83.

- 104. Morton R, Devitt J, Howard K, Anderson K, Snelling P, Cass A. Patient views about treatment of stage 5 CKD: a qualitative analysis of semistructured interviews. Am J Kidney Dis. 2010;55:431–40.

- 105. Fargher EA, Eddy C, Newman WG, Qasim F, Tricker K, Elliott RA, Payne K. Patients’ and healthcare professionals’ views on pharmacogenetic testing and its future delivery in the NHS. Pharmacogenomics. 2007;8:1511–9.

- 106. Potoglou D, Burge P, Flynn T, Netten A, Malley J, Forder J, Brazier J. Best-worst scaling vs. discrete choice experiments: an empirical comparison using social care data. Soc Sci Med. 2011;72:1717–27.

- 107. Netten A, Burge P, Malley J, Brazier J, Flynn T, Forder J. Outcomes of Social Care for Adults (OSCA): Interim Findings. London: Personal Social Services Research Unit; 2009.

- 108. Nghi NQ, LaMontagne S, Bingham A, Rafiq M, Phoung Mai LT, Lien NTP. Human papillomavirus vaccine introduction in Vietnam: formative research findings. Sex Health. 2010;7:262–70.

- 109. Kennedy A, Gately C, Rogers A. Examination of the implementation of the Expert Patients Programme within the structures and locality contexts of the NHS in England (PREPP study)—report II. Manchester, UK: National Primary Care Research and Development Centre; 2005.

- 110. Netten A, Ryan M, Smith P, Skatun D, Healey A, Knapp M, Wykes T. The Development of a Measure of Social Care Outcome for Older People. London: Personal Social Services Research Unit; 2002.

- 111. Cheyne BH, Terry R, Niven C, Dowding D, Hundley V, McNamee P. “Should I come in now?” A study of women’s early labour experiences. Br J Midwifery. 2007;15:604–9.

- 112. Bryan S, Williams I, McIver S. Seeing the NICE side of cost-effectiveness analysis: a qualitative investigation of the use of CEA in NICE technology appraisals. Health Econ. 2007;16:179–93.

- 113. Baker R, Freeman G, Boulton M, Brookes O, Windridge K, Tarrant C, Low J, Turner D, Hutton E, Bryan S. Continuity of Care: Patients’ and Carers’ Views and Choices in Their Use of Primary Care Services. London, UK: National Co-ordinating Centre for NHS Service Delivery and Organisation Research and Development; 2006;SDO/13b/20.

- 114. Dancet E, Van Empel I, Rober P, Nelen W, Kremer J, D’Hooghe T. Patient-centred infertility care: a qualitative study to listen to the patient’s voice. Hum Reprod. 2011;26:827–33.

- 115. Witter S, Thi Thu Ha B, Shengelia B, Vujicic M. Understanding the “four directions of travel”: qualitative research into the factors affecting recruitment and retention of doctors in rural Vietnam. Hum Resour Health. 2011;9.
