Abstract

According to the central limit theorem, the means of a random sample of size, n, from a population with mean, µ, and variance, σ², are approximately normally distributed with mean, µ, and variance, σ²/n, when n is sufficiently large. Using the central limit theorem, a variety of parametric tests have been developed under assumptions about the parameters that determine the population probability distribution. Compared to non-parametric tests, which do not require any assumptions about the population probability distribution, parametric tests produce more accurate and precise estimates with higher statistical power. However, many medical researchers use parametric tests to present their data without knowing how the central limit theorem contributed to the development of such tests. Thus, this review presents the basic concepts of the central limit theorem and its role in binomial distributions and the Student's t-test, and provides an example of the sampling distributions of a small population. A proof of the central limit theorem is also described, together with the mathematical concepts required for its near-complete understanding.

Keywords: Normal distribution, Probability, Statistical distributions, Statistics

Introduction

The central limit theorem is the most fundamental theorem in modern statistics. Without this theorem, parametric tests, which assume that sample data come from a population with fixed parameters that determine its probability distribution, would not exist. Because of the central limit theorem, parametric tests have higher statistical power than non-parametric tests, which do not require probability distribution assumptions. Currently, many parametric tests are used to assess the statistical validity of clinical studies performed by medical researchers; however, most researchers are unaware of the value of the central limit theorem, despite their routine use of parametric tests. Thus, clinical researchers would benefit from knowing what the central limit theorem is and how it became the basis for parametric tests. This review aims to address these topics. The proof of the central limit theorem is described in the appendix, together with the mathematical concepts (e.g., moment-generating function and Taylor's formula) required to understand it. However, some mathematical techniques (e.g., differential and integral calculus) are omitted due to space limitations.

Basic Concepts of the Central Limit Theorem

In statistics, a population is the set of all items, people, or events of interest. In reality, however, collecting all elements of the population requires considerable effort and is often impossible. For example, it is not possible to investigate the proficiency of every anesthesiologist worldwide in performing awake nasotracheal intubation. To make inferences about the population, a subset of the population (a sample) can be used instead. A randomly selected sample of sufficient size can be used to estimate the parameters of the population using inferential statistics. The number of samples obtainable from a finite population is finite and depends on the size of the sample and of the population itself. For example, all samples of size 1 obtained at random, with replacement, from the population {3,6,9,30} are {3},{6},{9}, and {30}. If the sample size is 2, a total of 4 × 4 = 4² = 16 ordered samples, namely {3,3},{3,6},{3,9},{3,30},{6,3},{6,6}...{9,30},{30,3},{30,6},{30,9}, and {30,30}, are possible. In this way, 4ⁿ samples of size n can be obtained from the population. Here, we consider the distribution of the sample means.

For example, consider the asymmetric population of size 4 presented above:

| {3, 6, 9, 30} |

The population mean, µ, and variance, σ², are:

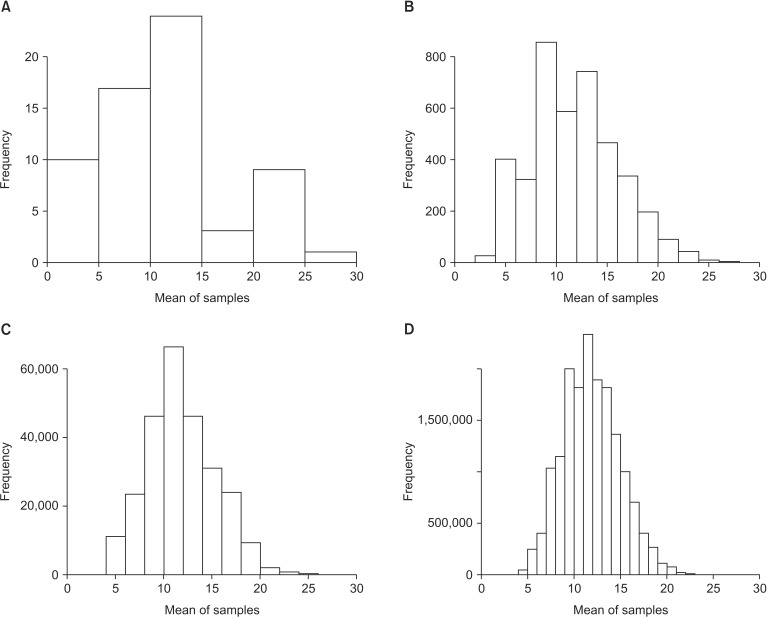
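
For readers without access to the image, the same two values can be worked out directly. This sketch assumes the population (divide-by-N) definition of the variance, with N = 4:

```latex
\mu = \frac{3 + 6 + 9 + 30}{4} = \frac{48}{4} = 12

\sigma^2 = \frac{(3 - 12)^2 + (6 - 12)^2 + (9 - 12)^2 + (30 - 12)^2}{4}
         = \frac{81 + 36 + 9 + 324}{4} = \frac{450}{4} = 112.5
```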

Simple random sampling with replacement from the population produces 4 × 4 × 4 = 4³ = 64 samples of size 3 (Table 1). The mean and variance of the 64 sample means are 12 (the population mean) and σ²/3 = 37.5, respectively; however, the distribution of the sample means is skewed (Fig. 1A).

Table 1. Samples with a Size of 3 and Their Means.

| Number | Value 1 | Value 2 | Value 3 | Sample mean |
|---|---|---|---|---|
| 1 | 3 | 3 | 3 | 3 |
| 2 | 3 | 3 | 6 | 4 |
| 3 | 3 | 3 | 9 | 5 |
| 4 | 3 | 3 | 30 | 12 |
| 5 | 3 | 6 | 3 | 4 |
| 6 | 3 | 6 | 6 | 5 |
| 7 | 3 | 6 | 9 | 6 |
| 8 | 3 | 6 | 30 | 13 |
| 9 | 3 | 9 | 3 | 5 |
| 10 | 3 | 9 | 6 | 6 |
| … | … | … | … | … |
| 54 | 30 | 6 | 6 | 14 |
| 55 | 30 | 6 | 9 | 15 |
| 56 | 30 | 6 | 30 | 22 |
| 57 | 30 | 9 | 3 | 14 |
| 58 | 30 | 9 | 6 | 15 |
| 59 | 30 | 9 | 9 | 16 |
| 60 | 30 | 9 | 30 | 23 |
| 61 | 30 | 30 | 3 | 21 |
| 62 | 30 | 30 | 6 | 22 |
| 63 | 30 | 30 | 9 | 23 |
| 64 | 30 | 30 | 30 | 30 |

Fig. 1. Histograms of the sample means for sample sizes of 3 (A), 6 (B), 9 (C), and 12 (D).

When simple random sampling with replacement is performed for samples of size 6, 4 × 4 × 4 × 4 × 4 × 4 = 4⁶ = 4,096 samples are possible (Table 2). The mean and variance of the 4,096 sample means are 12 (the population mean) and σ²/6 = 18.75, respectively. Compared with the distribution of the means of samples of size 3, that of the means of samples of size 6 is less skewed, and the sample means gather more closely around the population mean (Fig. 1B). Thus, the larger the sample size (n), the more closely and symmetrically the sample means gather around the population mean (µ), with a corresponding reduction in their variance (σ²/n) (Figs. 1C and 1D). If Figs. 1A to 1D are converted to probability distributions by replacing the variable “frequency” on the vertical axis with “probability” (the frequency divided by the total number of samples, 4ⁿ), their shapes remain unchanged.
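
The enumeration behind Tables 1 and 2 can be reproduced with a short script. This is a minimal sketch in Python (the article itself contains no code); the helper names `sample_means` and `skewness` are our own, and skewness is measured with the usual moment coefficient (third central moment divided by the variance raised to the power 1.5). Every ordered sample of size n is generated with `itertools.product`, so each of the 4ⁿ samples is counted once, as in the tables.

```python
from itertools import product
from statistics import fmean, pvariance

POPULATION = [3, 6, 9, 30]


def sample_means(population, n):
    """Means of all len(population)**n ordered samples of size n drawn with
    replacement; every sample is equally likely, as in Tables 1 and 2."""
    return [fmean(sample) for sample in product(population, repeat=n)]


def skewness(values):
    """Moment coefficient of skewness: third central moment / variance**1.5."""
    m = fmean(values)
    var = pvariance(values, mu=m)
    third = fmean([(v - m) ** 3 for v in values])
    return third / var ** 1.5


mu = fmean(POPULATION)          # population mean: 12.0
sigma2 = pvariance(POPULATION)  # population variance (divide by N): 112.5

for n in (3, 6):
    means = sample_means(POPULATION, n)
    print(f"n={n}: {len(means)} samples, mean of means={fmean(means):.2f}, "
          f"variance of means={pvariance(means):.2f} (sigma^2/n={sigma2 / n:.2f}), "
          f"skewness={skewness(means):.3f}")
```

Running it prints, for n = 3 and n = 6, the number of samples, the mean and variance of the sample means next to σ²/n, and a skewness that shrinks as n grows. Larger n (e.g., 9 or 12, as in Fig. 1) work the same way, but exhaustive enumeration of 4ⁿ samples quickly becomes slow.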

Table 2. Samples with a Size of 6 and Their Means.

| Number | Value 1 | Value 2 | Value 3 | Value 4 | Value 5 | Value 6 | Sample mean |
|---|---|---|---|---|---|---|---|
| 1 | 3 | 3 | 3 | 3 | 3 | 3 | 3 |
| 2 | 3 | 3 | 3 | 3 | 3 | 6 | 3.5 |
| 3 | 3 | 3 | 3 | 3 | 3 | 9 | 4 |
| 4 | 3 | 3 | 3 | 3 | 3 | 30 | 7.5 |
| 5 | 3 | 3 | 3 | 3 | 6 | 3 | 3.5 |
| 6 | 3 | 3 | 3 | 3 | 6 | 6 | 4 |
| 7 | 3 | 3 | 3 | 3 | 6 | 9 | 4.5 |
| 8 | 3 | 3 | 3 | 3 | 6 | 30 | 8 |
| 9 | 3 | 3 | 3 | 3 | 9 | 3 | 4 |
| 10 | 3 | 3 | 3 | 3 | 9 | 6 | 4.5 |
| … | … | … | … | … | … | … | … |
| 4087 | 30 | 30 | 30 | 30 | 6 | 9 | 22.5 |
| 4088 | 30 | 30 | 30 | 30 | 6 | 30 | 26 |
| 4089 | 30 | 30 | 30 | 30 | 9 | 3 | 22 |
| 4090 | 30 | 30 | 30 | 30 | 9 | 6 | 22.5 |
| 4091 | 30 | 30 | 30 | 30 | 9 | 9 | 23 |
| 4092 | 30 | 30 | 30 | 30 | 9 | 30 | 26.5 |
| 4093 | 30 | 30 | 30 | 30 | 30 | 3 | 25.5 |
| 4094 | 30 | 30 | 30 | 30 | 30 | 6 | 26 |
| 4095 | 30 | 30 | 30 | 30 | 30 | 9 | 26.5 |
| 4096 | 30 | 30 | 30 | 30 | 30 | 30 | 30 |

In general, as the sample size increases, the sample means gather more closely around the population mean and their variance decreases. Thus, as the sample size approaches infinity, the distribution of the sample means becomes increasingly close to a normal distribution with a mean, µ, and a variance, σ²/n. As shown above, the influence of the skewed population distribution on the distribution of the sample means diminishes as the sample size increases. Therefore, the central limit theorem states that if the sample size is sufficiently large, the means of samples obtained by random sampling with replacement are approximately normally distributed with the mean, µ, and the variance, σ²/n, regardless of the population distribution (provided that the population variance is finite). Refer to the appendix for a near-complete proof of the central limit theorem, as well as the basic mathematical concepts required for its proof.
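
In symbols, the statement above corresponds to the standard (Lindeberg–Lévy) form of the theorem. The notation below is our own shorthand; X₁, …, Xₙ are independent observations drawn from a population with mean µ and finite variance σ²:

```latex
\bar{X}_n = \frac{1}{n} \sum_{i=1}^{n} X_i, \qquad
E(\bar{X}_n) = \mu, \qquad
\operatorname{Var}(\bar{X}_n) = \frac{\sigma^2}{n}

Z_n = \frac{\bar{X}_n - \mu}{\sigma / \sqrt{n}}
\;\xrightarrow{\;d\;}\; N(0, 1) \quad \text{as } n \to \infty
```

Standardizing is what makes the limit well defined: the variance σ²/n itself shrinks to 0, so it is the rescaled quantity Zₙ whose distribution approaches a fixed normal distribution. For the population {3, 6, 9, 30}, E(X̄ₙ) = 12 and Var(X̄ₙ) = 112.5/n, which gives 37.5 for n = 3 and 18.75 for n = 6.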

Central Limit Theorem in the Real World

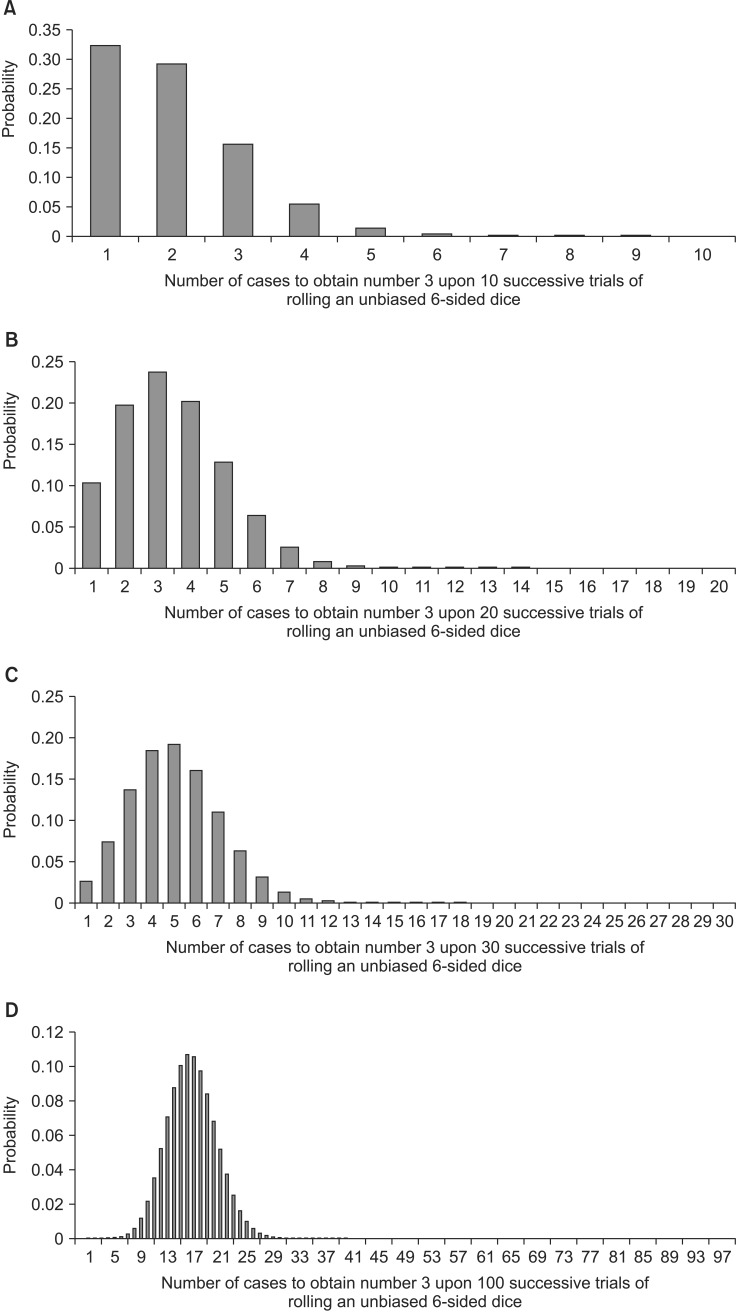

An unbiased, symmetric, six-sided die is rolled n times. The number of times, X, that the number 3 is rolled in n successive independent trials follows the binomial distribution, whose probability mass function is:

$$P(X = x) = \binom{n}{x} p^{x} (1 - p)^{n - x}, \qquad x = 0, 1, \ldots, n$$

n: number of independent trials (rolls of the die), x: number of times the number 3 is rolled in the n trials, p = 1/6: the probability of rolling the number 3 in each trial, 1 − p = 5/6: the probability of rolling a number other than 3 in each trial.

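The mathematical expectation of the random variable, X (i.e., the number of times that the number 3 is rolled in n trials), which is also referred to as the mean of the distribution, is:

$$E(X) = \sum_{x=0}^{n} x \binom{n}{x} p^{x} (1 - p)^{n - x} = np = \frac{n}{6}$$

The variance is:

$$\operatorname{Var}(X) = E\left[(X - np)^2\right] = np(1 - p) = \frac{5n}{36}$$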

When n = 10, the distribution is skewed (Fig. 2A); however, as n increases, it becomes more nearly symmetric about its mean (Figs. 2B–2D). This follows from the central limit theorem, because X is the sum of n independent trials, each contributing 1 if a 3 is rolled and 0 otherwise. As n approaches infinity, the binomial distribution approximates the normal distribution with a mean, np, and a variance, np(1 − p), where p is the constant probability that the specific event occurs in each trial.
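
The trend in Fig. 2 can be checked numerically. The sketch below (Python; the function names are our own) evaluates the binomial probabilities for p = 1/6 and computes the mean, variance, and moment skewness directly from them. For comparison, the exact skewness of a binomial distribution is (1 − 2p)/√(np(1 − p)), which for p = 1/6 equals 4/√(5n) and therefore shrinks toward 0, the skewness of a normal distribution, as n grows. The values of n used here are illustrative and are not necessarily those plotted in Fig. 2.

```python
from math import comb

P_THREE = 1 / 6  # probability of rolling a 3 with a fair six-sided die


def binomial_pmf(n, p=P_THREE):
    """P(X = x) for x = 0, 1, ..., n when X ~ Binomial(n, p)."""
    return [comb(n, x) * p**x * (1 - p) ** (n - x) for x in range(n + 1)]


def moments(pmf):
    """Mean, variance, and moment skewness of a distribution on 0..len(pmf)-1."""
    mean = sum(x * px for x, px in enumerate(pmf))
    var = sum((x - mean) ** 2 * px for x, px in enumerate(pmf))
    skew = sum((x - mean) ** 3 * px for x, px in enumerate(pmf)) / var**1.5
    return mean, var, skew


for n in (10, 40, 160, 640):
    mean, var, skew = moments(binomial_pmf(n))
    print(f"n={n:4d}  mean={mean:8.3f} (np={n * P_THREE:8.3f})  "
          f"var={var:8.3f} (np(1-p)={n * P_THREE * (1 - P_THREE):8.3f})  "
          f"skewness={skew:.3f}")
```

Each fourfold increase in n halves the skewness, while the mean and variance track np and np(1 − p) exactly.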

Fig. 2. The probability mass function of a binomial distribution with a probability parameter of 1/6 (i.e., the probability of rolling the number 3 in each trial), according to the number of trials.

Central Limit Theorem in the Student's t-test

Since the central limit theorem determines the sampling distribution of the means of samples of sufficient size, a specific mean (X̅) can be standardized and then located on the standard normal distribution, which has a mean of 0 and a variance of 1. In reality, however, the population variance (σ²) is usually unknown, which prevents this standardization:

$$Z = \frac{\bar{X} - \mu}{\sigma / \sqrt{n}}, \qquad \sigma^2 = \frac{1}{N} \sum_{i=1}^{N} (X_i - \mu)^2$$

Xi (i = 1, 2, ..., N): the elements of the population, N: the size of the population, µ: the mean of the population, n: the sample size.

Notably, the Student's t-distribution was developed to use the sample variance (S²) instead of the population variance (σ²):

$$t = \frac{\bar{X} - \mu}{S / \sqrt{n}}, \qquad S^2 = \frac{1}{n - 1} \sum_{i=1}^{n} (x_i - \bar{X})^2$$

xi (i = 1, 2, ..., n): a random sample from the population, n: sample size, X̅: the sample mean.
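
The difference between standardizing with σ and studentizing with S can be made concrete with the toy population from Table 1. The sketch below is a minimal Python illustration of the arithmetic only, not a full t-test (it returns the statistic and its degrees of freedom, not a p-value), and the population here is not normal, so it does not justify a t-test on these data; `z_statistic` and `t_statistic` are hypothetical helper names. It uses sample 8 of Table 1, {3, 6, 30}, whose mean is 13, with µ = 12 and σ² = 112.5 taken from the population. `statistics.stdev` uses the n − 1 denominator, matching S.

```python
from math import sqrt
from statistics import fmean, stdev


def z_statistic(sample, mu, sigma2):
    """Standardize the sample mean using the known population variance sigma2."""
    return (fmean(sample) - mu) / sqrt(sigma2 / len(sample))


def t_statistic(sample, mu):
    """Studentize the sample mean using the sample standard deviation S
    (denominator n - 1); returns the statistic and its degrees of freedom."""
    n = len(sample)
    return (fmean(sample) - mu) / (stdev(sample) / sqrt(n)), n - 1


sample = [3, 6, 30]  # sample 8 in Table 1; its mean is 13
z = z_statistic(sample, mu=12, sigma2=112.5)  # sigma known: 1 / sqrt(37.5)
t, df = t_statistic(sample, mu=12)            # sigma unknown: 1 / sqrt(73), df = 2
print(f"z = {z:.4f}, t = {t:.4f} with {df} degrees of freedom")
```

Here S² = 219 happens to exceed σ² = 112.5, so |t| is smaller than |z|. In general, the extra uncertainty from estimating σ with S is what the heavier tails of the t-distribution account for.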

The specific mean (X̅) is studentized, and its location is evaluated on the Student's t-distribution with n − 1 degrees of freedom. The shape of the Student's t-distribution depends on the degrees of freedom. With few degrees of freedom, the peak of the Student's t-distribution is lower than that of the normal distribution, and its tails are heavier (i.e., they take higher values far from the mean). As the degrees of freedom increase, the Student's t-distribution approaches the normal distribution; at 30 degrees of freedom, it is regarded as approximately equal to the normal distribution [1].

The underlying assumption of the Student's t-test is that samples are obtained from a normally distributed population. However, because the population distribution is usually unknown, it should be checked whether the sample data are normally distributed. This is particularly important for small samples. If a small sample is consistent with a normal distribution, the studentized distribution of the sample means follows the Student's t-distribution with n − 1 degrees of freedom. If a small sample is not normally distributed, non-parametric tests should be performed instead of the Student's t-test, since they do not require assumptions about the population distribution. If the sample size is 30 or more, the studentized sampling distribution approximates the standard normal distribution, and the assumption about the population distribution is no longer essential because, according to the central limit theorem, the sampling distribution of the mean is considered approximately normal. Therefore, even when the mean of a sample of size 30 or more is studentized using the sample variance, the standard normal distribution can be used as its probability distribution.
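
The comparison of shapes can be checked numerically. The sketch below (Python standard library only; `t_pdf` is our own helper) evaluates the textbook density of the Student's t-distribution with ν degrees of freedom, Γ((ν + 1)/2) / (√(νπ) Γ(ν/2)) · (1 + x²/ν)^(−(ν + 1)/2), and compares its height at the center (x = 0) and in the tail (x = 3) with the standard normal density from `statistics.NormalDist`.

```python
from math import gamma, pi, sqrt
from statistics import NormalDist

STANDARD_NORMAL = NormalDist(mu=0, sigma=1)


def t_pdf(x, df):
    """Density of the Student's t-distribution with df degrees of freedom."""
    coef = gamma((df + 1) / 2) / (sqrt(df * pi) * gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


for df in (2, 5, 10, 30):
    print(f"df={df:2d}: peak={t_pdf(0, df):.4f}, density at x=3: {t_pdf(3, df):.5f}")
print(f"normal: peak={STANDARD_NORMAL.pdf(0):.4f}, "
      f"density at x=3: {STANDARD_NORMAL.pdf(3):.5f}")
```

For every listed df the peak is lower and the density at x = 3 is higher than for the standard normal distribution, and both gaps narrow as df grows. Because `math.gamma` overflows for arguments above roughly 171, this direct formula is only suitable for moderate degrees of freedom.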

Conclusions

A comprehensive understanding of the central limit theorem will assist medical investigators in performing parametric tests to analyze their data with high statistical power. An understanding of this theorem will also aid in designing study protocols that use the most appropriate statistical methods.

Appendix

Moment-generating function
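
The definition used in the proof can be stated as follows. This is the standard textbook definition, written in our own notation M_X(t); the moment-generating function is assumed to exist for t in some interval (−h, h) around 0. It is a standard result that, when it exists there, the moment-generating function determines the distribution uniquely.

```latex
M_X(t) = E\left(e^{tX}\right), \qquad -h < t < h

M_X(0) = 1, \qquad M_X'(0) = E(X), \qquad M_X''(0) = E\left(X^2\right)

M_{aX + b}(t) = e^{bt} M_X(at)
```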

Moment-generating function of a normal distribution

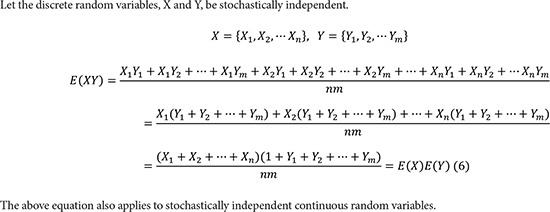
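
For readers without access to the image, the result for the normal distribution can be derived by completing the square in the exponent. This is a standard derivation, shown first for the standard normal variable Z and then extended to X = µ + σZ using M_{aX+b}(t) = e^{bt}M_X(at):

```latex
M_Z(t) = \int_{-\infty}^{\infty} e^{tz} \frac{1}{\sqrt{2\pi}} e^{-z^2/2} \, dz
       = e^{t^2/2} \int_{-\infty}^{\infty} \frac{1}{\sqrt{2\pi}} e^{-(z - t)^2/2} \, dz
       = e^{t^2/2}

X = \mu + \sigma Z \sim N(\mu, \sigma^2): \qquad
M_X(t) = e^{\mu t} M_Z(\sigma t) = \exp\left(\mu t + \frac{\sigma^2 t^2}{2}\right)
```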

Mathematical expectation of the product of stochastically independent random variables
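
The property used in the proof is that expectation factors over independent random variables. Applied to the functions e^{tX_i}, it turns the moment-generating function of a sum of independent, identically distributed variables into a power. This is a standard result, stated here without proof:

```latex
X, Y \text{ independent} \;\Longrightarrow\; E(XY) = E(X)\,E(Y)

X_1, \ldots, X_n \text{ i.i.d. with MGF } M(t): \qquad
M_{X_1 + \cdots + X_n}(t) = E\left(\prod_{i=1}^{n} e^{tX_i}\right)
= \prod_{i=1}^{n} E\left(e^{tX_i}\right) = \left[M(t)\right]^n
```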

Taylor's formula
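
The form of Taylor's formula needed for the proof is the second-order expansion with the Lagrange form of the remainder. Here f is assumed to be twice differentiable on an interval containing 0 and t:

```latex
f(t) = f(0) + f'(0)\,t + \frac{f''(\xi)}{2}\,t^2
\qquad \text{for some } \xi \text{ between } 0 \text{ and } t
```

Applied to the moment-generating function M of a variable with mean 0 and variance 1, for which M(0) = 1, M′(0) = 0, and M″(0) = 1, this gives M(t) = 1 + M″(ξ)t²/2.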

Central limit theorem
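
A compact sketch of the standard moment-generating-function argument follows. It makes the extra assumption that the moment-generating function of the population exists near 0 (the theorem itself needs only a finite variance and is usually proved with characteristic functions), and it relies on the continuity theorem for moment-generating functions: if M_{Zₙ}(t) → M_Z(t) for all t near 0, then Zₙ converges in distribution to Z.

```latex
% Standardize: Y_i = (X_i - \mu)/\sigma are i.i.d. with E(Y_i) = 0, E(Y_i^2) = 1, MGF m(t)
Z_n = \frac{\bar{X}_n - \mu}{\sigma/\sqrt{n}} = \frac{1}{\sqrt{n}} \sum_{i=1}^{n} Y_i,
\qquad
M_{Z_n}(t) = \left[m\!\left(\frac{t}{\sqrt{n}}\right)\right]^n

% Taylor's formula with m(0) = 1, m'(0) = 0; \xi_n lies between 0 and t/\sqrt{n}
m\!\left(\frac{t}{\sqrt{n}}\right) = 1 + \frac{m''(\xi_n)}{2} \cdot \frac{t^2}{n}

% \xi_n \to 0, so m''(\xi_n) \to m''(0) = 1; and (1 + a_n/n)^n \to e^{a} when a_n \to a
M_{Z_n}(t) = \left[1 + \frac{m''(\xi_n)\, t^2 / 2}{n}\right]^n
\;\longrightarrow\; e^{t^2/2} = M_Z(t), \qquad Z \sim N(0, 1)
```

Because e^{t²/2} is the moment-generating function of the standard normal distribution, Zₙ converges in distribution to N(0, 1), which is the statement of the central limit theorem.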

References

- 1. Kim TK. T test as a parametric statistic. Korean J Anesthesiol. 2015;68:540–546. doi: 10.4097/kjae.2015.68.6.540.