Researchers test whether and how onymity, as opposed to anonymity, promotes cooperation in a social dilemma experiment.

Keywords: Evolutionary Game Theory, human behavior, Prisoner’s Dilemma, defection, punishment, reciprocity

Abstract

One of the most elusive scientific challenges for over 150 years has been to explain why cooperation survives despite being a seemingly inferior strategy from an evolutionary point of view. Over the years, various theoretical scenarios aimed at solving the evolutionary puzzle of cooperation have been proposed, eventually identifying several cooperation-promoting mechanisms: kin selection, direct reciprocity, indirect reciprocity, network reciprocity, and group selection. We report the results of repeated Prisoner’s Dilemma experiments with anonymous and onymous pairwise interactions among individuals. We find that onymity significantly increases the frequency of cooperation and the median payoff per round relative to anonymity. Furthermore, we also show that the correlation between players’ ranks and the usage of strategies (cooperation, defection, or punishment) underwent a fundamental shift, whereby more prosocial actions are rewarded with a better ranking under onymity. Our findings prove that reducing anonymity is a valid promoter of cooperation, leading to higher payoffs for cooperators and thus suppressing an incentive—anonymity—that would ultimately favor defection.

INTRODUCTION

Why is cooperation so prevalent in nature? After all, Darwinian selection should result in individuals pursuing their own selfish interests (1). However, cooperation not only prevails, but its mass emergence is the main force behind the evolutionary transitions from single-cell organisms to complex animal and human societies (2). To systematically analyze the fundamental trade-off faced by individuals who choose between cooperating and free-riding behaviors, mathematicians M. M. Flood and M. Dresher devised in the 1950s a model of conflict and cooperation known as Prisoner’s Dilemma (PD) (3). In each realization of PD, two players must select between cooperation (C) and defection (D). Although mutual cooperation generates a higher collective payoff, both players are tempted to defect because they can do better individually by exploiting a cooperative opponent. Without any external help, natural selection favors mutual defection (4).

Attempts to offset the unfavorable evolutionary outcome of PD branched into two complementary lines of research. One was spurred by the result that in spatially structured populations, cooperators who aggregate into clusters may avoid being wiped out by defectors (5, 6). Subsequent interest in the role of spatial topology in this field led to the introduction of complex networked structures (7–9) and, more recently, to a number of experiments examining the evolution of cooperation in relation to network heterogeneity, dynamics, and updating rules (10–13). The other line of research focused on social mechanisms, whereby individuals rely on common knowledge about their opponents or otherwise actively try to reduce the potential benefit of free riders. Examples of these mechanisms include tit for tat (14), win-stay, lose-shift (15), reputation (16), reward (17), and costly punishment (18–25). The special attention devoted to costly punishment (P) as an independent strategy partly originated from the controversial readiness of punishers to incur a cost to make other individuals pay an even higher fine. The role of this strategy in the promotion of cooperation has been disputed, with particularly negative views expressed on the basis of social experiments (22, 24).

Much of the mentioned progress in understanding the evolution of cooperation has been ascribed to several mechanisms that promote cooperative behavior (26). Although the details of these mechanisms differ greatly, they have a common basis (27–30) interpretable as the information needed to reduce anonymity relative to a primitive, well-mixed population (31, 32). Therefore, in the context of theoretical hypotheses and experimental evidence (30, 33), a question of considerable interest arises: Would reducing the anonymity of opponents in an actual social experiment promote cooperation? We set to answer this question by investigating the differences between anonymous and onymous treatments in a repeated PD experiment. We consider extended PD with three strategies [cooperation (C), defection (D), and punishment (P)]—as in the study of Dreber et al. (22) and in the study of Wu et al. (24)—to further inspect the (controversial) effects of costly punishment and to obtain richer correlations between payoffs and the strategies used, as compared to what would be possible with traditional two-strategy PD.

A total of 154 undergraduates voluntarily participated in a repeated PD experiment at Yunnan University of Finance and Economics (for more details, see Materials and Methods and the Supplementary Materials). The experiment consisted of pairwise encounters under two treatments: anonymous (T1) and onymous (T2). In T1, each participant interacted anonymously with opponents, whereas in T2, opponents were made known. In either of the treatments, the participants did not know how many rounds a particular interaction would have, but they knew that the probability of entering the next round had been set to 75%. When an interaction ended, the next set of previously unrealized pairwise encounters was drawn randomly until all participants interacted with each other. Participants were instructed to choose one action in each round, where actions reflected the three possible strategies (C, D, or P). The outcomes of unilateral actions were defined such that cooperation (C) meant paying 1 unit for the opponent to receive 2 units, defection (D) meant gaining 1 unit at a cost of 1 unit for the opponent, and punishment (P) meant paying 1 unit for the opponent to lose 4 units. The resulting unilateral and bilateral payoff matrices are given in Eq. 1

| (1) |

RESULTS

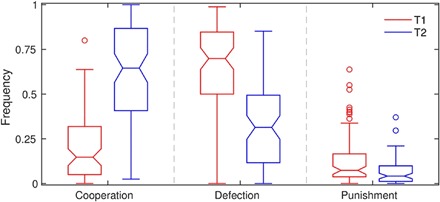

A comparison of the results from the two experimental treatments reveals emerging prosocial behavior under onymity (Fig. 1). We use the term prosocial in the sense of benefiting the collective. Cooperation is thus a prosocial action because if players in PD cooperate, then the group payoff is maximized. Conversely, defection is an antisocial action because defectors profit from exploiting cooperators, which in turn decreases the group payoff. Costly (altruistic) punishment is somewhat ambiguous because its immediate effect on the payoff is negative. However, a punisher’s presumable intention is to force a defector into a more cooperative frame of mind and, consequently, to generate a positive indirect effect on the group payoff. The empirical findings do not seem to confirm this indirect effect of punishment (22, 24)—an issue to which we devote considerable attention here. We find that the behavior of the participants differs significantly between anonymous and onymous treatments (χ2 test, χ2 = 2233, P < 10−6). Pairwise comparisons (Fig. 1) show an increase in the frequency of cooperation and a decrease in the frequency of defection, both significant at the 5% level, if anonymity is replaced with onymity. The frequency of punishment also notably decreases, but the difference in the medians is not significant. Overall, a more prosocial behavior emerges under onymity. This conclusion is consistent with the theoretical findings, whereby cooperation-promoting mechanisms—all of which preclude complete anonymity—can sometimes change the nature of PD (selection favors defection) to the point of eliminating the dilemma altogether (selection favors cooperation) (31).

Fig. 1. Onymity brings out the best in people.

Cooperation takes over defection as the dominant action if the anonymous treatment (T1) is replaced with the onymous one (T2). Pairwise comparisons indicate that the increase in the frequency of cooperation and the decrease in the frequency of defection between the two treatments are significant at the 5% level. The frequency of punishment decreases, but the difference between the medians is insignificant. Box-and-whisker plots with notches reveal the empirical distribution of the frequency of each action. Box height indicates the interquartile range with the median in between. Notches indicate the 95% confidence intervals for the median, thus permitting a visual pairwise comparison. Whisker height is such that 99.3% of the normally distributed data would be covered. The points drawn as outliers fall outside of the whisker coverage.

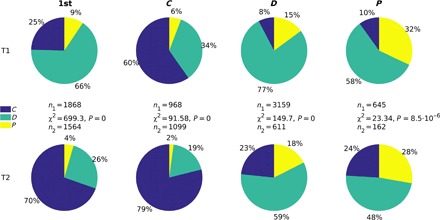

A closer look into participants’ behavior reveals that the action chosen in the first-round and the first-order conditional strategies (Fig. 2) becomes more prosocial under onymity (T2) compared to anonymity (T1). The increase in the frequency of cooperation and the decrease in the frequency of defection is particularly noticeable. In contrast, the response to punishment is decisively antisocial, with defection being the preferred choice followed by counter-punishment. In T1, punishment was countered with cooperation in less than 10% of cases. Although the situation improved in T2, cooperation still remained the least-preferred option. Hence, even if the intention of punishers is ultimately prosocial, the immediate result of their action is not. Another interesting outcome in T1 is that punishment was chosen as the first-round action about 100% more often than in the study of Dreber et al. (22), which is qualitatively similar to, but not as marked as, the result from the study of Wu et al. (24). The large difference between the choices in the two cited studies was attributed to the different cultural backgrounds of participants (Boston, MA and Beijing, China, respectively) (24). The same explanation may apply to our result relative to the study of Dreber et al. (22), given that the present experiment was also conducted in China. Among Chinese students, the frequency of punishment in the first round dropped below 5%—the level recorded in the Boston experiment—only after onymity had been introduced (that is, in T2). For a more detailed comparison between the present study and the study of Dreber et al. (22) and the study of Wu et al. (24), including the implications for the role of punishment in increasing the degree of cooperativeness in social dilemmas, see the Supplementary Materials.

Fig. 2. Onymity elicits prosocial behavior, but punishment does not.

The action in the first round (column 1st) and the first-order conditional strategies (columns C, D, and P, indicating the responses to cooperation, defection, and punishment, respectively) in later rounds are all significantly different between the two experimental treatments. The increase in cooperation and the decrease in defection by switching from the anonymous (T1) to the onymous (T2) treatment is particularly noticeable. Thus, onymity elicits prosocial behavior. In contrast, an immediate response to punishment—the preferred choice being defection followed by counter-punishment—is overwhelmingly antisocial even under onymity.

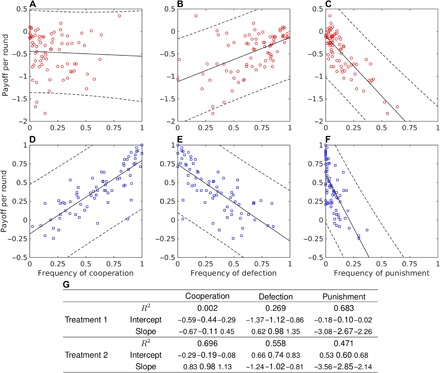

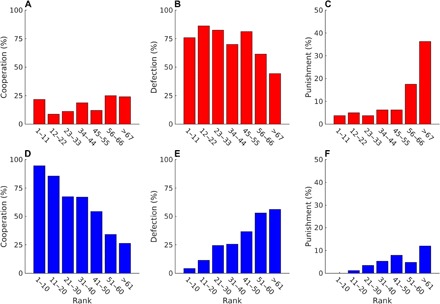

Because of the considerable changes in the behavior of participants between the two experimental treatments (Figs. 1 and 2), one could also anticipate major differences in the payoff per round. The median payoff per round of −0.319 under anonymity (T1) is significantly lower than the corresponding value of 0.395 under onymity (T2; Wilcoxon rank sum test, W = 8491, P < 10−6). Furthermore, the relationship between the choice of action and the performance of participants in terms of the payoff per round reveals contrasting outcomes between the two experimental treatments (Fig. 3). In T1, the payoff per round is uncorrelated with the frequency of cooperation (F test, F = 0.151, P = 0.698; however, see regression diagnostics in the Supplementary Materials), but it is positively correlated with the frequency of defection (F = 28.7, P < 10−6; Fig. 3, A and B). The latter result, although in contrast with the study of Dreber et al. (22), is not entirely surprising because in PD, selection favors defection. Given a sufficient number of cooperators to exploit, defectors necessarily attain a relative advantage in payoff. A conclusion is that, under anonymity, the antisocial action may produce a more desirable outcome in terms of the payoff per round than the prosocial one. However, in T2, the situation is reversed. The payoff per round becomes positively correlated with the frequency of cooperation (F = 165, P < 10−6) and negatively correlated with the frequency of defection (F = 91.0, P < 10−6; Fig. 3, D and E). Thus, onymity elicits prosocial behavior among participants, one that also pays back—winners play nice (Fig. 4).

Fig. 3. Playing nice under onymity pays off.

(A and B) When the opponent remains unknown (T1), the payoff per round does not correlate with the use of cooperation but correlates positively with the use of defection [in contrast with the study of Dreber et al. (22)], thus indicating that the prosocial action (that is, cooperation) is less desirable than the antisocial one (that is, defection). (D and E) When the opponent is known (T2), the payoff per round correlates positively with the use of cooperation and negatively with the use of defection, showing that the prosocial action is now more desirable than the antisocial one. (C and F) The only similarity between the two treatments is that the payoff per round correlates negatively with the use of punishment [the “winners don’t punish” effect (22, 24)]. Shown are the regression lines with the 95% prediction intervals (dashed curves). (G) In accompanying statistical analysis, the smaller font size indicates the 95% statistical confidence intervals.

Fig. 4. Onymity alters the factors of success in a social dilemma.

(A and B) Under anonymity (T1), the use of cooperation does not affect the ranking, whereas the use of defection is advantageous, albeit in a limited fashion. (D and E) Under onymity (T2), the use of cooperation is decisively advantageous for the ranking of the participants. In contrast, the use of defection is disadvantageous. (C and F) The “winners don’t punish” effect remains unaltered between the two experimental treatments. The standard competition ranking is used, such that a lower ranking number is better. The vertical axes report the average use of each action per 100 rounds. For clarity, the scale for the use of punishment is doubled. The number of bins in the histograms is kept the same for both treatments, although the number of participants was slightly higher in T1 than in T2 (80 versus 74, respectively), thus causing the bin sizes to slightly differ between the treatments.

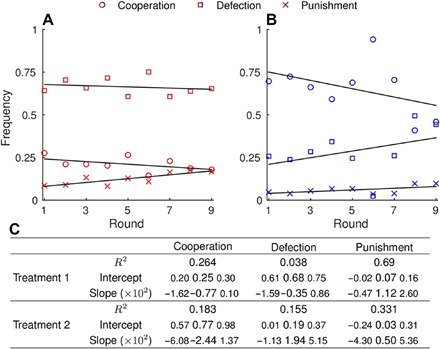

The consequences of the use of punishment on the payoff per round further corroborate the conclusion that winners play nice. We find that the payoff per round is negatively correlated with the frequency of punishment under anonymity (F = 168, P < 10−6) and even more so under onymity (F = 64.1, P < 10−6; Fig. 3, C and F). These results are a manifestation of the “winners don’t punish” effect observed in previous studies (22, 24). What is truly remarkable about this effect is its reproducibility even in the onymous treatment, which is, as we have seen, sufficient to drastically alter the outcomes of other actions (Fig. 4). The issue of reproducibility is an important one because not all outcomes of previous experimental studies are readily obtained. For instance, the frequency of punishment in the first round under anonymity, although double that of the study of Dreber et al. (22), is still 50% lower than the frequency found in the study of Wu et al. (24). This difference is quantitatively large, suggesting that the cultural background used to explain this result in the first place may be a dominant, but not the only, factor affecting the behavior of participants. In general, confounding factors other than cultural background may have affected our results. We had sufficient data to analyze two of these factors, gender and academic background. The results of these analyses are outlined in the Supplementary Materials. Another example of a result that differs from previous studies is our failure to observe the increasing frequency of defection with the number of rounds played (Fig. 5) (22, 24). The reason may again be the lack of a unique underlying mechanism—the study of Dreber et al. (22), for example, offers two possible explanations as to why the frequency of defection could increase with the number of rounds. The described mismatches between the experiments highlight the importance of understanding the mechanisms behind the statistically significant results. Presumably, the simpler and more stable these mechanisms are, the more likely the result is to be reproducible.

Fig. 5. Frequency of cooperation, defection, and punishment over the course of an interaction.

(A) In the anonymous treatment (T1), action frequencies do not show a statistically discernible trend as the interaction progresses, thus contrasting the results in the study of Dreber et al. (22) and in the study of Wu et al. (24). (B) In the onymous treatment (T2), the frequency of cooperation (defection) seems to be decreasing (increasing), but again, the results are statistically insignificant. Shown are the data points (circles, squares, and x marks for cooperation, defection, and punishment, respectively) and the regression lines. The latter were simultaneously fitted to ensure that the frequencies add up to unity. (C) In the accompanying statistical analysis, the smaller font size indicates the 95% statistical confidence intervals. We additionally tested whether the angle between the regression lines for cooperation and defection was significantly different from zero, but we obtained a negative result (P = 0.58 and P = 0.072 for T1 and T2, respectively).

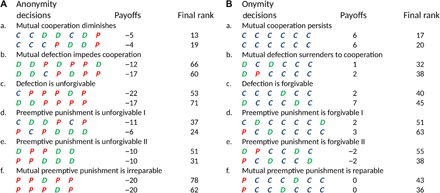

We took two preliminary steps toward a more mechanistic understanding of the observed empirical patterns. First, we peeked into the inner workings of anonymity and onymity by comparing typical interactions that, although they had started the same, ended up very differently depending on the treatment (Fig. 6). In the anonymous treatment (T1), even those interactions that had begun with mutual cooperation, more often than not, spiraled out of control in a series of antisocial decisions (namely, mutual defections or retaliatory punishments). Establishing and maintaining a cooperative outcome in instances in which the first round had consisted of decisions other than mutual cooperation proved all the more difficult. These results are in stark contrast with the typical interactions in the onymous treatment (T2). Under onymity, the relationship between two opponents was often reparable even after opening the interaction with mutual punishment. All other first-round decisions mostly led to establishing and maintaining a cooperative outcome, provided that the interaction lasted for a sufficient number of rounds.

Fig. 6. Same beginnings but vastly different endings.

Inner workings of anonymity and onymity through a direct comparison of some typical interactions that finished differently although they started the same. (A) In the anonymous treatment, even mutual cooperation occasionally gives way to defection and punishment. Other initial decisions make it only harder to reach and maintain a cooperative outcome. (B) In the onymous treatment, in contrast, even mutual preemptive punishment is reparable. Other initial decisions make it mostly easier to reach and maintain a cooperative outcome.

As a second step toward a more mechanistic understanding of the empirical patterns, we recreated the experiment using computer simulations (see the Supplementary Materials for details). By making three relatively straightforward assumptions, we could qualitatively and even quantitatively reproduce the experimental results and thus obtain some rudimentary indications of how to understand behaviorisms prompted by a social dilemma. Specifically, first-order conditional strategies seem to be followed on average, determining in the process whether prosocial tendencies will be rewarded or not. There is a considerable variability among individuals. However, overall behavior resembles the selection of strategies by trial and error, the main purpose of which seems to be filtering out maladjusted strategies rather than actively seeking well-adjusted ones.

DISCUSSION

In conclusion, onymity entices cooperation. To solidify this conclusion, we performed two additional control trials, one under anonymous and the other under onymous conditions (see the Supplementary Materials for details), wherein participants played a more traditional 2 × 2 PD game without punishment. Qualitatively similar results were obtained in these control trials, thus more firmly establishing the position of onymity as a powerful promoter of cooperation. Further quantitative comparisons between the controls and treatments T1 and T2 indicated that punishment, at best, failed in increasing the degree of cooperativeness among participants. However, an even more important result is that the level of cooperation may be sufficient to make onymous prosocial behavior advantageous—winners play nice. The conclusion that winners play nice under onymity is a major step forward from the previous studies in, at least, a twofold manner. The study of Dreber et al. (22), for example, establishes that successful individuals in an unfavorably structured social dilemma (of which PD is a prime example) avoid punishing others. This finding is complemented by our results because when the cloak of anonymity is removed, successful individuals should aspire to more than just staying shy of punishing others—they should behave truly prosocially and cooperate in the face of a strong temptation to defect. Furthermore, Wu et al. (24) report how costly punishment fails to increase cooperation. Although this result is interesting in itself, we actually offer a potential remedy for situations in which punishment has failed. Overall, the results are supportive of a notion that for cooperative behavior to emerge, opponents need some information about each other. This notion may have major implications for theoretical studies seeking mechanisms that promote cooperation. Instead of scouring an endless landscape of possible scenarios, the focus may shift toward available information for mutual recognition (27–30). At present, for instance, what degree of onymity effectively supports cooperation remains an interesting unknown.

Throughout the experimental sessions, we operationalized onymity simply by allowing opponents to learn each other’s name. However, for this to be effective, we relied on the fact that participants were students who had attended the same classes before. Thus, our sample exhibited two important characteristics that suggest a rather high level of onymity. One characteristic is that students had previous knowledge about one another, and the other is homogeneity in terms of age and interests, making mutual recognition and association relatively easy. This high level of onymity bears the question: To what extent could onymity be lowered and still promote cooperation? Although an answer is beyond the scope of the present study, we envisioned setups that would allow rigorous experimentation in this direction. For example, recruits need not be acquaintances before the experiment (nor do they have to originate from the same social groups) yet might be required to introduce themselves to others and even spend some time having a conversation about a given topic. This experimental design would certainly preclude complete anonymity, but neither mutual recognition nor association would be as easy as in the experiments presented here.

As with any experimental study, there is a danger in extrapolating the results outside the narrow set of controlled conditions and parameters prevalent during the experiment. We already highlighted several important differences between this and previous comparable studies, thereby hinting at plausible behavioral determinants operating in the face of a social dilemma (for example, cultural background). Aside from the cultural background, a slew of economic (for example, income and wealth) and biological (for example, age and gender) parameters may also play an important role, begging the question how robust the results of experimental studies truly are. It is somewhat encouraging in this context that computer simulations built on a small set of straightforward assumptions successfully reproduce the experimental results. These simulations justify our focus on the first-order conditional strategies in interpreting the raw data, but they fall short of explaining what overrides are triggered by onymity in order for defection in T1 to be replaced with cooperation in T2. At present, we can only speculate that participants anticipate to be reciprocated for prosocial actions under onymity despite being unable to spread rumors or to interact more than once (a few PD rounds) with any given individual.

MATERIALS AND METHODS

Experimental methods

We gathered 154 voluntary undergraduate students (49.4% women and 50.6% men, mean age of 21.6; for more details, see the Supplementary Materials) from three universities in Kunming, China, who major in mathematics (55 people), statistics (39 people), and eight other, mostly social sciences and humanities (60 people). Participants engaged in a repeated PD experiment at the computer laboratory of Statistics and Mathematics School, Yunnan University of Finance and Economics, consisting of around 100 isolated computer cubicles running z-Tree (34). A cubicle was randomly assigned to each participant, who would then read instructions about experimental procedures and have the understanding thereof tested via a questionnaire (fig. S1). Any two participants were separated by a vacant cubicle to help two supervisors, who could answer technical questions too, maintain order.

The experiment consisted of six sessions—three per treatment—which took place on 6 September, 11 October, and 15 November 2014 for the anonymous (T1; 26.7 participants per session) and on 20 September, 25 October, and 8 November 2014 for the onymous treatment (T2; 24.7). Each session began with a practice interaction, followed by a series of interactions in which every participant interacted with everyone else in a random order. An interaction meant that a pair of opponents repeatedly faced the same PD, where the next round would occur with 75% probability. Participants in both treatments had half a minute to inspect the result of the preceding round and their total payoff. Furthermore, in T2, participants—strictly classmates—could see each other’s name. Sessions lasted approximately 1.25 hours. No participant engaged in multiple sessions.

As an incentive, in addition to a ¥15 show-up fee, all participants started with an initial score of 50 points, and it was explained that their final score, if positive, would be exchanged for a real currency at the rate of ¥0.2 per point. This setup generated the average earnings of ¥18.5, ranging from ¥15.0 to ¥31.6.

Statistical methods and analyses

To get a sense of how the use of strategies (C, D, or P) is distributed without referring to a model distribution (for example, the normal distribution), we resorted to nonparametric descriptive statistics, that is, the median and the interquartile range. Subsequently, a direct comparison of the differences in the use of strategies between the two treatments (T1 and T2) was made on the basis of the median’s 95% confidence intervals. The first-order conditional strategies were naturally represented by means of 3 × 2 contingency tables, where table rows (columns) corresponded to C, D, and P (T1 and T2). Whether the strategies are independent of the treatments was tested with χ2 test. Using this test was justified by a large number of data points at our disposal.

The correlation between the payoff per round and the use of strategies was analyzed with ordinary least-squares regression and outlier detection methods, as presented in the study of Chatterjee and Hadi (35). Outlier detection was performed in conjunction with the tests of normality for residuals to identify instances in which a few data points had the potential to overwhelmingly affect the statistical inference. In contrast, in correlating the use of strategies with the number of rounds played, we used a custom-built regression routine for two reasons. First, the sum of the three strategies always equaled unity, implying a constraint that needed to be taken into account. Second, we used resampling (that is, bootstrapping) of the residuals because no indication of their true distribution was available from a low number of data points.

Supplementary Material

Acknowledgments

We are grateful to B. Zhang and Y. Tao for providing data on a similar experiment in Beijing and to S. Levin for useful suggestions. Funding: We appreciate the support from National Natural Science Foundation of China (grants nos. 11671348, 31170408, 31270433, 31300318, and 71161020), the National Science Fund for Distinguished Young Scholars (grant no. 31325005), National Science Foundation of China–Yunnan Joint Fund (grant no. U1302267), the West Light Foundation of the Chinese Academy of Sciences, the Special Fund for the Excellent Youth of the Chinese Academy of Sciences (grant no. KSCX2-EW-Q-9), the Japan Science and Technology Agency (Program to Disseminate Tenure Tracking System), Japan Society for the Promotion of Science Grant-in-Aid for General Scientific Research (B) (grant no. 24370011), and the National key research and development program (grant no. 2016YFB0800602). Author contributions: Z.W., R.-W.W., and L.S. designed and organized the experiment. Z.W. and M.J. performed the statistical analyses and executed the numerical simulations. All authors discussed the results and wrote the manuscript. Competing interests: The authors declare that they have no competing interests. Data and materials availability: All data needed to evaluate the conclusions in the paper are present in the paper and/or the Supplementary Materials. Additional data related to this paper may be requested from the authors.

SUPPLEMENTARY MATERIALS

Supplementary material for this article is available at http://advances.sciencemag.org/cgi/content/full/3/3/e1601444/DC1

Supplementary Materials and Methods

Supplementary Results

fig. S1. Snapshot of the questionnaire used to test the basic understanding of PD games.

fig. S2. Interface for playing the PD game in anonymous treatment.

fig. S3. Interface for playing the PD game in onymous treatment.

fig. S4. Control trials.

fig. S5. Regression diagnostics.

fig. S6. Computer-simulated recreations of the anonymous treatment (T1).

fig. S7. Computer-simulated recreations of the onymous treatment (T2).

fig. S8. Comparison with other similar studies.

table S1. Basic information on the experimental sessions.

table S2. Gender as a confounding factor.

table S3. Academic background as a confounding factor.

Reference (36)

REFERENCES AND NOTES

- 1.Pennisi E. On the origin of cooperation. Science 325, 1196–1199 (2009). [DOI] [PubMed] [Google Scholar]

- 2.J. Maynard Smith, E. Szathmáry, The Major Transitions in Evolution (Oxford Univ. Press, 1998). [Google Scholar]

- 3.A. Rapoport, A. M. Chammah, Prisoner’s Dilemma: A Study in Conflict and Cooperation (The University of Michigan Press, 1965). [Google Scholar]

- 4.Doebeli M., Hauert C., Killingback T.. The evolutionary origin of cooperators and defectors. Science 306, 859–862 (2004). [DOI] [PubMed] [Google Scholar]

- 5.Nowak M. A., May R. M., Evolutionary games and spatial chaos. Nature 359, 826–829 (1992). [Google Scholar]

- 6.Durrett R., Levin S., The importance of being discrete (and spatial). Theor. Popul. Biol. 46, 363–394 (1994). [Google Scholar]

- 7.Santos F. C., Pacheco J. M., Scale-free networks provide a unifying framework for the emergence of cooperation. Phys. Rev. Lett. 95, 098104 (2005). [DOI] [PubMed] [Google Scholar]

- 8.Ohtsuki H., Hauert C., Lieberman E., Nowak M. A., A simple rule for the evolution of cooperation on graphs and social networks. Nature 441, 502–505 (2006). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Szabó G., Fath G., Evolutionary games on graphs. Phys. Rep. 446, 97–216 (2007). [Google Scholar]

- 10.Traulsen A., Semmann D., Sommerfeld R. D., Krambeck H.-J., Milinski M., Human strategy updating in evolutionary games. Proc. Natl. Acad. Sci. U.S.A. 107, 2962–2966 (2010). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Rand D. G., Arbesman S., Christakis N. A., Dynamic social networks promote cooperation in experiments with humans. Proc. Natl. Acad. Sci. U.S.A. 108, 19193–19198 (2011). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Gracia-Lázaro C., Ferrer A., Ruiz G., Tarancón A., Cuesta J. A., Sánchez A., Moreno Y., Heterogeneous networks do not promote cooperation when humans play a Prisoner’s Dilemma. Proc. Natl. Acad. Sci. U.S.A. 109, 12922–12926 (2012). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Wang J., Suri S., Watts D. J., Cooperation and assortativity with dynamic partner updating. Proc. Natl. Acad. Sci. U.S.A. 109, 14363–14368 (2012). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Nowak M. A., Sigmund K., Tit for tat in heterogeneous populations. Nature 355, 250–253 (1992). [Google Scholar]

- 15.Imhof L. A., Fudenberg D., Nowak M. A., Tit-for-tat or win-stay, lose-shift? J. Theor. Biol. 247, 574–580 (2007). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Gallo E., Yan C., The effects of reputational and social knowledge on cooperation. Proc. Natl. Acad. Sci. U.S.A. 112, 3647–3652 (2015). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Sefton M., Shupp R., Walker J. M., The effect of rewards and sanctions in provision of public goods. Econ. Inq. 45, 671–690 (2007). [Google Scholar]

- 18.Fehr E., Gächter S., Altruistic punishment in humans. Nature 415, 137–140 (2002). [DOI] [PubMed] [Google Scholar]

- 19.Boyd R., Gintis H., Bowles S., Richerson P. J., The evolution of altruistic punishment. Proc. Natl. Acad. Sci. U.S.A. 100, 3531–3535 (2003). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Fowler J. H., Altruistic punishment and the origin of cooperation. Proc. Natl. Acad. Sci. U.S.A. 102, 7047–7049 (2005). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Henrich J., McElreath R., Barr A., Ensminger J., Barrett C., Bolyanatz A., Camilo Cardenas J., Gurven M., Gwako E., Henrich N., Lesorogol C., Marlowe F., Tracer D., Ziker J., Costly punishment across human societies. Science 312, 1767–1770 (2006). [DOI] [PubMed] [Google Scholar]

- 22.Dreber A., Rand D. G., Fudenberg D., Nowak M. A., Winners don’t punish. Nature 452, 348–351 (2008). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Eldakar O. T., Wilson D. S., Selfishness as second-order altruism. Proc. Natl. Acad. Sci. U.S.A. 105, 6982–6986 (2008). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Wu J.-J., Zhang B. Y., Zhou Z. X., He Q. Q., Zheng X. D., Cressman R., Tao Y., Costly punishment does not always increase cooperation. Proc. Natl. Acad. Sci. U.S.A. 106, 17448–17451 (2009). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Helbing D., Szolnoki A., Perc M., Szabó G., Evolutionary establishment of moral and double moral standards through spatial interactions. PLOS Comput. Biol. 6, e1000758 (2010). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Nowak M. A., Five rules for the evolution of cooperation. Science 314, 1560–1563 (2006). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Eshel I., Cavalli-Sforza L. L., Assortment of encounters and evolution of cooperativeness. Proc. Natl. Acad. Sci. U.S.A. 79, 1331–1335 (1982). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Lehmann L., Keller L., West S., Roze D., Group selection and kin selection: Two concepts but one process. Proc. Natl. Acad. Sci. U.S.A. 104, 6736–6739 (2007). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Tudge S. J., Brede M., A tale of two theorems: Comment on “Universal scaling for the dilemma strength in evolutionary games” by Z. Wang et al. Phys. Life Rev. 14, 49–51 (2015). [DOI] [PubMed] [Google Scholar]

- 30.Gómez-Gardeñes J., Reciprocity mechanisms meet together. A previous step to bridge the gap with experiments?: Comment on “Universal scaling for the dilemma strength in evolutionary games”, by Z. Wang, et al. Phys. Life Rev. 14, 54–55 (2015). [DOI] [PubMed] [Google Scholar]

- 31.Wang Z., Kokubo S., Jusup M., Tanimoto J., Universal scaling for the dilemma strength in evolutionary games. Phys. Life Rev. 14, 1–30 (2015). [DOI] [PubMed] [Google Scholar]

- 32.Wang Z., Kokubo S., Jusup M., Tanimoto J., Dilemma strength as a framework for advancing evolutionary game theory: Reply to comments on “Universal scaling for the dilemma strength in evolutionary games”. Phys. Life Rev. 14, 56–58 (2015). [DOI] [PubMed] [Google Scholar]

- 33.Sánchez A., Theory must be informed by experiments (and back): Comment on “Universal scaling for the dilemma strength in evolutionary games”, by Z. Wang et al. Phys. Life Rev. 14, 52–53 (2015). [DOI] [PubMed] [Google Scholar]

- 34.Fischbacher U., z-Tree: Zurich toolbox for ready-made economic experiments. Exp. Econ. 10, 171–178 (2007). [Google Scholar]

- 35.Chatterjee S., Hadi A. S., Influential observations, high leverage points, and outliers in linear regression. Stat. Sci. 1, 379–393 (1986). [Google Scholar]

- 36.Talhelm T., Zhang X., Oishi S., Shimin C., Duan D., Lan X., Kitayama S., Large-scale psychological differences within China explained by rice versus wheat agriculture. Science 344, 603–608 (2014). [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Supplementary material for this article is available at http://advances.sciencemag.org/cgi/content/full/3/3/e1601444/DC1

Supplementary Materials and Methods

Supplementary Results

fig. S1. Snapshot of the questionnaire used to test the basic understanding of PD games.

fig. S2. Interface for playing the PD game in anonymous treatment.

fig. S3. Interface for playing the PD game in onymous treatment.

fig. S4. Control trials.

fig. S5. Regression diagnostics.

fig. S6. Computer-simulated recreations of the anonymous treatment (T1).

fig. S7. Computer-simulated recreations of the onymous treatment (T2).

fig. S8. Comparison with other similar studies.

table S1. Basic information on the experimental sessions.

table S2. Gender as a confounding factor.

table S3. Academic background as a confounding factor.

Reference (36)