Abstract

Background

Shared decision-making (SDM) has become a policy priority, yet its implementation is not routinely assessed. To address this gap we tested the delivery of CollaboRATE, a 3-item patient reported experience measure of SDM, via multiple survey modes.

Objective

To assess CollaboRATE response rates and respondent characteristics across different modes of administration, the impact of mode and patient characteristics on SDM performance, and the cost of administration per response in a real-world primary care practice.

Design

Observational study design, with repeated assessment of SDM performance using CollaboRATE in a primary care clinic over 15 months of data collection. Different modes of administration were introduced sequentially including paper, patient portal, interactive voice response (IVR) call, text message and tablet computer.

Participants

Consecutive patients ≥18 years, or parents/guardians of patients <18 years, visiting participating primary care clinicians.

Main measures

CollaboRATE assesses three core SDM tasks: (1) explanation about health issues, (2) elicitation of patient preferences and (3) integration of patient preferences into decisions. Responses to each item range from 0 (no effort was made) to 9 (every effort was made). CollaboRATE scores are calculated as the proportion of participants who report a score of nine on each of the three CollaboRATE questions.

Key results

Scores were sensitive to mode effects: the paper mode had the highest average score (81%) and IVR had the lowest (61%). However, relative clinician performance rankings were stable across the different data collection modes used. Tablet computers administered by research staff had the highest response rate (41%), although this approach was costly. Clinic staff giving paper surveys to patients as they left the clinic had the lowest response rate (12%).

Conclusions

CollaboRATE can be introduced using multiple modes of survey delivery while producing consistent clinician rankings. This may allow routine assessment and benchmarking of clinician and clinic SDM performance.

Keywords: primary care, shared decision-making, patient-reported experience measure, patient-reported measurement, mode effects

Strengths and limitations of this study.

This study deployed a range of survey administration modes within 24 hours of clinic visits.

By consistently measuring performance of the same clinicians, we were able to assess effects of survey administration mode on clinician rank order and CollaboRATE score.

We accounted for patient characteristics in our analysis by including patient demographic and clinical data.

Data collection for 15 months led to respondent and organisational fatigue, impacting response rates.

Cost analysis relied on retrospective cost estimates.

Introduction

Assessing whether patients have experienced shared decision-making (SDM) in clinical settings has become a priority for multiple stakeholders.1 In the US context, the Consumer Assessment of Healthcare Providers and Systems Clinician and Group (CG-CAHPS) survey is a widely used patient reported experience measure (PREM).2 However, the measurement of SDM is tangential to the overall survey goals.3 In addition, recall bias may undermine the validity of results given that patients are asked to assess experiences over the preceding 6 months.4 Furthermore, CG-CAHPS response rates fall well below the 40% target set by the Agency for Healthcare Research and Quality. Alternative PREMs of SDM were designed primarily for research rather than clinical use.5–7

To address this gap we developed CollaboRATE, a 3-item PREM of SDM.8 9 CollaboRATE assesses three core SDM tasks during a clinic visit: (1) information and explanation about health issues, (2) elicitation of patient preferences and (3) integration of patient preferences into decisions.

CollaboRATE has demonstrated psychometric soundness,9 and has also been used in a demonstration project to assess differences in SDM across 34 clinical teams in England.10 In addition, Blue Shield of California is using CollaboRATE to assess SDM as part of a preauthorisation process for joint replacement.11 A research study at a Veterans Administration site found positive correlations between CollaboRATE scores and patient assessments of satisfaction and communication.12

CollaboRATE's brevity minimises respondent burden and facilitates varied data collection modes, including email, interactive voice response (IVR) automated telephone calls, short message service (SMS) text messages, electronic kiosk, tablet computer and paper. Tourangeau identified five administration-mode factors related to survey response: (1) respondent contact method (eg, in person or email); (2) survey medium (eg, paper or electronic); (3) administration method (interviewer or self-completed); (4) sensory input (eg, visual or oral) and (5) response mode (eg, handwritten, keyboard, voice).13 Data analysis needs to account for mode effects and differences in response rates, especially if results are intended as measures of performance. However, to date, there has been limited research on differences in validity, reliability and administration costs by mode in patient-reported measurement.14

Mixed modes of CG-CAHPS survey administration, including options to respond over the phone, have yielded response rates near 40%.15 These mixed-mode response rates are higher than those achieved by mail-back surveys alone15 while collecting comparable data.16 In a systematic review of survey response rates, email delivery was associated with response rates of 15%,17 similar to the 14% response rate for CG-CAHPS delivered by email.16 SMS administration has been associated with response rates ranging from 49% to 98%,18–21 and with data quality comparable to that collected by person-led phone interviews or IVR.21

The cost of PREM administration has significant implications, yet receives little attention.16 22 23 Achieving a reasonable response rate, at a realistic cost, is a key requirement if PREMs are to become sustainable sources of clinical performance data.

This project was designed to assess feasibility and identify challenges to the routine implementation and real-time delivery of CollaboRATE. In this paper, we aim to evaluate CollaboRATE as a measure of clinician performance with regard to response rates, CollaboRATE SDM scores at the level of individual clinicians, respondent characteristics across modes, impact of mode and patient characteristics on SDM scores, and cost of data collection per patient response. To do so, we conducted a single-site demonstration project where we sequentially introduced different modes of patient-reported data collection including paper, patient portal, IVR calls, SMS and electronic tablet in the clinic. We hypothesised that CollaboRATE scores would vary by clinician and would be lower in data collection modes completed outside the clinic setting.

Methods

Participants

Setting

The study was conducted with two clinical teams, consisting of 15 clinicians, in a primary care clinic of a rural academic medical centre in New Hampshire, with 16 000 registered patients.

Inclusion criteria

Consecutive patients 18 years or older visiting the participating clinical teams Monday through Friday beginning in April 2014 were eligible to complete CollaboRATE. Parents or guardians of patients under age 18 were eligible to complete CollaboRATE on behalf of their children.

Measures

CollaboRATE

CollaboRATE consists of three items (box 1).8 9

Box 1. CollaboRATE items.

Thinking about the appointment you have just had:

How much effort was made to help you understand your health issues?

How much effort was made to listen to the things that matter most to you about your health issues?

How much effort was made to include what matters most to you in choosing what to do next?

Responses to each item can range from 0 (No effort was made) to 9 (Every effort was made) for a maximum total of 27.

Responses to CollaboRATE were scored in a binary fashion: participants who responded to all three questions with a ‘9’ were considered to have experienced SDM, and all others were not. Participants with one or more missing responses on CollaboRATE were excluded from analyses. For each clinician, we calculated the proportion of participants who reported a score of 9 on each of the three CollaboRATE questions.9 24 This scoring technique aids interpretation while avoiding the ceiling effects common among clinician performance assessments completed by patients.9
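The top-score rule can be sketched in a few lines. This is a minimal illustration, assuming each response arrives as a (clinician, three-item list) pair with `None` marking a missing item; the function names `is_top_score` and `clinician_scores` are hypothetical and not part of any published CollaboRATE tooling.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Optional, Tuple


def is_top_score(items: List[Optional[int]]) -> Optional[bool]:
    """True if all three items are 9, False otherwise; None if the response is incomplete."""
    if len(items) != 3 or any(v is None for v in items):
        return None  # incomplete responses are excluded from analysis
    if any(not 0 <= v <= 9 for v in items):
        raise ValueError("CollaboRATE items must lie between 0 and 9")
    return all(v == 9 for v in items)


def clinician_scores(responses: Iterable[Tuple[str, List[Optional[int]]]]) -> Dict[str, float]:
    """Percentage of complete responses per clinician that are top scores (9, 9, 9)."""
    top: Dict[str, int] = defaultdict(int)
    n: Dict[str, int] = defaultdict(int)
    for clinician, items in responses:
        flag = is_top_score(items)
        if flag is None:
            continue
        n[clinician] += 1
        top[clinician] += int(flag)
    return {c: 100 * top[c] / n[c] for c in n}
```

For example, a clinician with responses (9, 9, 9), (9, 8, 9) and one incomplete response scores 50%: the incomplete response is dropped and only one of the two remaining responses is a top score.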

Data collection

Modes of CollaboRATE survey administration

We adopted five modes of CollaboRATE administration. In each mode, eligible patients were given opportunities to complete the survey within 24 hours of their clinic visits. Modes were implemented sequentially beginning in April 2014, each for a 3-month period (table 1). Clinicians were made aware of data collection at the start of the study. At the end of the study, we provided participating clinicians with a summary of the results, their individual scores and a brief presentation on how to achieve SDM in routine clinical settings.

Table 1.

Modes of CollaboRATE administration in chronological order

| Mode | Description |
|---|---|
| Paper survey | A paper-based version of CollaboRATE was given to patients by administrative staff as they left the clinic following their visits. Administrative staff added patient identifiers to the surveys to enable linkage to medical records data. Patients were asked to place completed surveys in a locked receptacle in the clinic. |
| Patient portal | CollaboRATE was delivered using an online patient portal (MyChart), part of the clinic's electronic medical record. The questionnaire was programmed by the medical centre's information systems department. As clinical encounters were completed, emails containing a web link to the CollaboRATE questionnaire were sent to those patients who had portal accounts. |
| Interactive voice response (IVR) | CollaboRATE was delivered to patients using an interactive voice response telephone system programmed by the medical centre's information systems department. An automated telephone call was made to each patient's cell phone at 19:00 on the day of their clinic visit. Before initiating the survey, the respondent was asked to confirm that they were the individual who had visited the clinic that day. On confirmation, numerical keypad responses to CollaboRATE questions were requested. |
| Short message service (SMS text messages) | Text messages, programmed by the medical centre's information systems department, were sent to patient cell phones at 19:00 on the day of their clinical visits. The first message introduced the survey and offered opt-out opportunities. Remaining messages each contained a single CollaboRATE question and response instructions. Subsequent CollaboRATE questions were triggered by each reply, sending a total of four text messages. |
| Tablet and mail | Using tablet computers, research assistants offered patients an opportunity to complete an online version of CollaboRATE hosted in Qualtrics (Provo, Utah, USA) as they left the clinic. Patients were asked for their name, age, gender and to indicate the clinician visited. Patients who declined the tablet opportunity were asked to respond by completing a paper-based survey to be returned in a postage-paid envelope. |
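The SMS mode in Table 1 is essentially a small reply-driven state machine. The sketch below illustrates that flow under stated assumptions: the introduction and first question are sent together at 19:00, each valid 0–9 reply triggers the next question (four messages in total), and replying STOP opts out. The message wording, the STOP keyword and the `SmsSurvey` class are hypothetical; the medical centre's actual implementation is not described.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical wording; the study's actual message texts are not reported.
INTRO = ("Thinking about the appointment you have just had, please answer "
         "3 short questions (reply 0-9). Reply STOP to opt out.")
QUESTIONS = [
    "How much effort was made to help you understand your health issues?",
    "How much effort was made to listen to the things that matter most to you about your health issues?",
    "How much effort was made to include what matters most to you in choosing what to do next?",
]


@dataclass
class SmsSurvey:
    """Reply-driven CollaboRATE SMS session for one patient visit (illustrative only)."""
    answers: List[int] = field(default_factory=list)
    opted_out: bool = False

    def start(self) -> List[str]:
        # Assumed: the introduction and the first question go out together.
        return [INTRO, QUESTIONS[0]]

    def reply(self, text: str) -> Optional[str]:
        """Process one incoming reply and return the next outgoing message, if any."""
        if self.opted_out or self.complete:
            return None
        token = text.strip().upper()
        if token == "STOP":
            self.opted_out = True
            return None
        if len(token) != 1 or token not in "0123456789":
            return None  # invalid reply ignored; re-prompting omitted for brevity
        self.answers.append(int(token))
        if self.complete:
            return None  # all three items answered; no further messages
        return QUESTIONS[len(self.answers)]

    @property
    def complete(self) -> bool:
        return len(self.answers) == len(QUESTIONS)

    @property
    def top_score(self) -> bool:
        # CollaboRATE top score: 9 on all three items.
        return self.complete and all(a == 9 for a in self.answers)
```

A patient who replies "9" three times receives exactly four messages (introduction, then one per question) and is recorded as a top score.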

Participant characteristics

For the first four modes, CollaboRATE responses were linked to patient demographic and clinical data, including patient age, gender, number of health conditions, visit type (annual wellness or other), marital status and socioeconomic status (SES). SES was estimated by the percentage of people living in the patient's zip code with income beneath the federal poverty level using data from the American Community Survey.25 26 These data were extracted from patients' medical records, anonymised by medical centre staff and shared with the research team. Data linkage was not undertaken for the tablet mode; clinician, patient age and gender were self-reported in that mode.

Data analysis

Respondent characteristics and response rates

Response rates were based on the number of patients attending the clinic during the data collection periods. Not all patients were registered with a patient portal or had a cellular telephone number in their record. We therefore calculated adjusted response rates for the patient portal, IVR and SMS modes, including only patients who could have received CollaboRATE. We compared the demographic characteristics of respondents with those of non-respondents for each mode, using Pearson's χ2 tests for categorical variables and Student's t-tests for continuous variables. Logistic regression analysis was used to confirm the descriptive findings and to examine whether an interaction between age and number of comorbidities predicted response; response was the binary outcome variable, and independent variables included age, number of comorbidities, the age × number of comorbidities interaction term, gender and whether the visit was for a wellness check-up. We also calculated the median time to achieve 25 completed CollaboRATE surveys per clinician per mode, which has been used as a minimum for obtaining stable score estimates;24 studies estimating the number of patient responses needed to obtain reliable information have found that around 25 completed questionnaires are needed.27
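The median time to 25 completions can be computed as sketched below. This is a minimal illustration, assuming completion dates are available per clinician for one mode and that clinicians who never reach 25 completions in that mode are left out of the median (the text does not say how they were handled). The function names are hypothetical.

```python
from datetime import date
from statistics import median
from typing import Dict, List, Optional


def days_to_nth_completion(start: date, completions: List[date], n: int = 25) -> Optional[int]:
    """Days from the start of a mode's data collection to a clinician's nth completed survey."""
    if len(completions) < n:
        return None  # threshold never reached in this mode
    return (sorted(completions)[n - 1] - start).days


def median_days_to_n(start: date, by_clinician: Dict[str, List[date]], n: int = 25) -> Optional[float]:
    """Median, across clinicians who reached n completions, of the days needed to do so."""
    days = [d for d in (days_to_nth_completion(start, c, n) for c in by_clinician.values())
            if d is not None]
    return median(days) if days else None
```

With one clinician completing a survey every day (25th completion on day 24), another every second day (day 48) and a third with only three completions, the median is 36 days; the third clinician does not contribute.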

Clinician SDM performance by mode

To assess clinician performance across modes, we estimated a logistic regression model including clinician indicators and all available patient characteristics as covariates, with the CollaboRATE top score indicator as the dependent variable. We used the model to estimate the CollaboRATE top score (accounting for patient characteristics) for each clinician in each mode, used these estimates to rank clinicians' performance and assessed concordance of the estimated rankings across modes. Clinicians who did not reach 25 patient responses in each of the four modes were excluded from the results.24 27
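The text does not name the statistic used to assess concordance of rankings. One standard choice is Kendall's coefficient of concordance, W, which equals 1 when every mode ranks the clinicians identically and 0 when there is no agreement. The sketch below assumes the same set of clinicians in every mode and no tied scores; `ranks_desc` and `kendalls_w` are hypothetical names, not the authors' code.

```python
from typing import Dict


def ranks_desc(scores: Dict[str, float]) -> Dict[str, int]:
    """Rank clinicians 1..n from highest to lowest adjusted score (ties not handled)."""
    ordered = sorted(scores, key=scores.get, reverse=True)
    return {clinician: i + 1 for i, clinician in enumerate(ordered)}


def kendalls_w(scores_by_mode: Dict[str, Dict[str, float]]) -> float:
    """Kendall's W = 12 S / (m^2 (n^3 - n)) for m modes ranking the same n clinicians."""
    modes = list(scores_by_mode)
    clinicians = sorted(scores_by_mode[modes[0]])
    m, n = len(modes), len(clinicians)
    rank_tables = [ranks_desc(scores_by_mode[mode]) for mode in modes]
    # Sum of each clinician's ranks across modes, and its expected value m(n + 1)/2.
    totals = [sum(r[c] for r in rank_tables) for c in clinicians]
    mean_total = m * (n + 1) / 2
    s = sum((t - mean_total) ** 2 for t in totals)
    return 12 * s / (m ** 2 * (n ** 3 - n))
```

Because W depends only on ranks, a uniform shift in scores between modes (such as the lower IVR scores) leaves W at 1 as long as the ordering of clinicians is unchanged.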

Effects of administration mode and patient demographics on CollaboRATE scores

To evaluate the effects of survey administration mode and patient characteristics on CollaboRATE scores, we conducted mixed effects logistic regression analysis with CollaboRATE score as the dependent variable. Fixed effects included administration mode and patient characteristics. Clinicians were included as a random effect to account for clustering of patients by clinician, allowing inferences to be generalisable beyond the clinicians in our study. The unit of analysis was the CollaboRATE score per individual visit. To address potential clustering of CollaboRATE responses by patients who had more than one visit, we also conducted the mixed effects logistic regression analysis described above including only the first CollaboRATE response from each patient.
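Written out, the model described above can be sketched as follows. This is our reading of the text rather than a reported specification: $Y_{ij}$ is the top-score indicator for visit $i$ with clinician $j$, paper is the reference mode, $M_{k,ij}$ are mode indicators, $\mathbf{x}_{ij}$ holds the patient covariates and $u_j$ is the clinician random intercept.

$$
\operatorname{logit}\Pr(Y_{ij}=1)=\beta_0+\sum_{k\in\{\text{portal},\,\text{IVR},\,\text{SMS}\}}\gamma_k\,M_{k,ij}+\boldsymbol{\beta}^{\top}\mathbf{x}_{ij}+u_j,\qquad u_j\sim\mathcal{N}(0,\sigma_u^2)
$$

Under this form, $e^{\gamma_k}$ corresponds to the mode odds ratios relative to paper reported in the Results, and $\sigma_u$ to the reported SD of the clinician random effect on the log-odds scale.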

Cost analysis by survey administration mode

The costs associated with each administration mode were estimated to calculate a cost per fully completed survey. Data included development costs (programming, testing and planning) and field costs (piloting, supplies and labels, technical and vendor support, management and staff time). We estimated the cost per completed survey by dividing the total cost for each mode by the number of completed surveys. Estimated costs per mode do not include costs associated with an institutional electronic medical record, patient portal or survey collection platform but do include variable costs such as technical support and charges associated with sending SMS messages and IVR calls. To estimate the likely long-term cost per completed survey, we repeated the analysis excluding research and set-up costs. Analysis was conducted in Stata V.13 and SPSS V.21. An α level of ≤0.05 was considered statistically significant.
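The cost calculation reduces to a single division, with and without one-off costs. The sketch below assumes costs for a mode have already been split into one-off set-up and research costs and recurring variable costs; this two-way split and the function name `cost_per_completed` are illustrative assumptions, since the study's own categories (development and field costs) do not map one-to-one onto it.

```python
def cost_per_completed(setup_and_research: float, recurring: float,
                       completed: int, include_setup: bool = True) -> float:
    """Cost per fully completed survey for one administration mode.

    setup_and_research: one-off costs (e.g. programming, testing, planning, research).
    recurring: ongoing costs (e.g. supplies, technical support, SMS/IVR charges, staff time).
    Fixed institutional costs (medical record, portal, survey platform) are assumed
    to have been excluded before calling this function.
    """
    if completed <= 0:
        raise ValueError("completed must be positive")
    total = recurring + (setup_and_research if include_setup else 0.0)
    return total / completed
```

With hypothetical inputs of US$1000 one-off and US$500 recurring costs for 250 completed surveys, the full cost is US$6.00 per completed survey and the long-term (variable-only) cost is US$2.00.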

Results

There were 4421 patients who completed the CollaboRATE survey over the 15-month study period (April 2014–October 2015), resulting in an overall CollaboRATE top score of 68% for the clinic. Only 19 incomplete CollaboRATE responses were recorded. Among eligible patients, the average response rate across modes was 25%, with the highest response rate in the combined tablet and mail mode (41%) followed by the patient portal mode (34%). The administration mode had significant effects on CollaboRATE scores, with the highest score recorded during the paper survey administration (81%), followed by the patient portal (71%), with the lowest overall CollaboRATE score recorded in the IVR survey administration mode (61%) (table 2).

Table 2.

Response rate and CollaboRATE scores across modes

| Mode | Response rate (n) | CollaboRATE score* (%) | Clinician score range (%) |
|---|---|---|---|
| Paper | | | |
| All patients | 12% (541/4692) | 81 | 72–93 |
| Patient portal | | | |
| All patients | 21% (1019/4939) | 71 | 59–83 |
| Eligible patients† | 34% (1019/3015) | | |
| IVR | | | |
| All patients | 19% (893/4814) | 61 | 42–75 |
| Eligible patients† | 25% (893/3589) | | |
| SMS | | | |
| All patients | 17% (757/4520) | 65 | 46–82 |
| Eligible patients† | 23% (757/3329) | | |
| Tablet and mail | | | |
| All patients | 41% (1211/2943) | 66 | 53–83 |

*CollaboRATE score represents the proportion of respondents marking 9 on all three items (totalling 27/27).

†These calculations exclude patients who did not have patient portal accounts or phone numbers on file at which to receive the CollaboRATE survey.

IVR, interactive voice response; SMS, short message service.
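The response rates in Table 2 can be reproduced from the reported counts. In the sketch below, the eligible denominator for the paper and tablet modes is taken to equal all patients, since no eligibility adjustment was made for those modes; pooling eligible patients across the five modes reproduces the reported overall rate of 25%. The dictionary layout is an illustrative choice.

```python
from typing import Dict, Tuple

# Counts transcribed from Table 2: (respondents, all patients, eligible patients).
# Paper and tablet had no eligibility adjustment, so eligible = all patients.
TABLE2: Dict[str, Tuple[int, int, int]] = {
    "Paper": (541, 4692, 4692),
    "Patient portal": (1019, 4939, 3015),
    "IVR": (893, 4814, 3589),
    "SMS": (757, 4520, 3329),
    "Tablet and mail": (1211, 2943, 2943),
}


def pct(numerator: int, denominator: int) -> int:
    """Percentage rounded to the nearest whole number."""
    return round(100 * numerator / denominator)


rates = {mode: (pct(r, all_pts), pct(r, elig)) for mode, (r, all_pts, elig) in TABLE2.items()}
total_respondents = sum(r for r, _, _ in TABLE2.values())
pooled_eligible_rate = pct(total_respondents, sum(e for _, _, e in TABLE2.values()))
```

Here `total_respondents` is 4421, matching the number of completed surveys reported in the text, and `pooled_eligible_rate` is 25.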

The shortest median time to reach 25 completions per clinician was in the tablet/mail-in mode (17 days), followed by paper in-clinic (25 days), patient portal (25 days), IVR (31 days) and SMS (40 days).

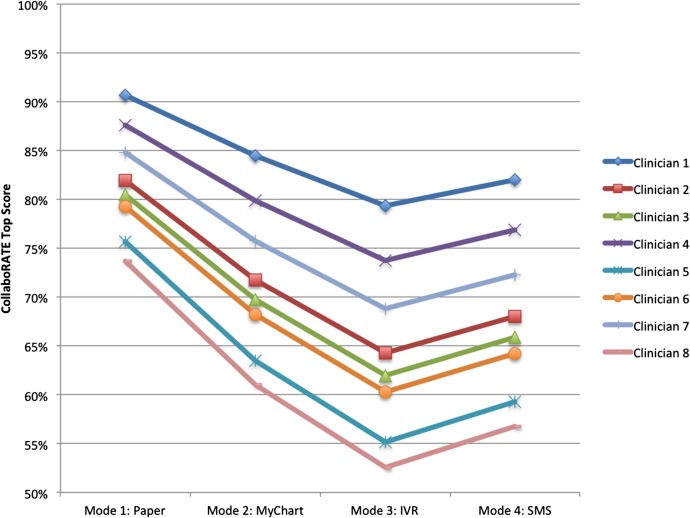

Clinician rank

Figure 1 demonstrates that clinician rank order by proportion of CollaboRATE top scores was identical across the four administration modes (adjusted for patient case mix; see online supplementary appendices 1 and 2), though the average top score differed across modes.

Figure 1.

Clinician scores by mode, adjusted for patient characteristics.* Although 15 clinicians participated in all four data collection modes, only eight reached 25 patient responses in every mode; therefore, eight of the 15 clinicians are shown. *During the electronic tablet/postal mail phase, responses were not linked to the electronic medical record; as a result, patient demographic data were unavailable.


Respondent characteristics by administration mode

Respondents versus non-respondents by mode

Demographic characteristics of all patients attending the clinic were similar across modes (table 3). Respondents were slightly older than non-respondents across all modes and represented the overall clinic population with regard to gender. Respondents were slightly more likely than non-respondents to be seen for an annual wellness visit in the patient portal and SMS modes. Respondents also had more comorbid conditions than non-respondents in the paper mode. Logistic regression analysis confirmed these descriptive findings and showed no significant interaction between age and number of comorbid health conditions in predicting response to the CollaboRATE survey (OR 1.00; 95% CI 0.99 to 1.00; full results available on request).

Table 3.

Respondent and non-respondent characteristics by mode

| Characteristic | Paper: respondents (n=541) | Paper: non-respondents (n=4151) | Patient portal: respondents (n=1019) | Patient portal: eligible non-respondents (n=1996) | IVR: respondents (n=893) | IVR: eligible non-respondents (n=2696) | SMS: respondents (n=757) | SMS: eligible non-respondents (n=2572) | Tablet and mail†‡: respondents (n=1211) |
|---|---|---|---|---|---|---|---|---|---|
| Female | 65.4% | 65.6% | 68.8% | 67.6% | 63.2% | 64.5% | 64.2% | 64.9% | 57.1% |
| Mean age (SD) | 54.09 (15.73) | 47.12* (21.54) | 54.12 (15.93) | 46.27* (10.98) | 44.61 (18.77) | 41.97* (20.02) | 46.56 (18.58) | 43.76* (19.78) | 49.57 (18.38) |
| Number of morbidities | * | | | | | | | | |
| 0 | 17.7% | 31.4% | 59.6% | 62.7% | 35.9% | 37.2% | 38.4% | 37.7% | – |
| 1 | 35.9% | 42.2% | 32.5% | 31.1% | 43.7% | 44.7% | 40.8% | 42.1% | – |
| 2 | 25.1% | 16.7% | 7.5% | 6.0% | 15.0% | 13.1% | 16.0% | 14.7% | – |
| 3 | 13.7% | 6.3% | 0.5% | 0.2% | 4.1% | 3.6% | 3.4% | 4.4% | – |
| 4 or more | 7.6% | 3.4% | 0% | 0.1% | 1.2% | 1.4% | 1.3% | 1.1% | – |
| Annual wellness visit | 20.3% | 17.4% | 25.3% | 19.3%* | 19.4% | 16.6% | 22.6% | 17.5% | – |

*Significantly different from respondent group, p<0.05.

†During the electronic tablet/postal mail phase, responses were not linked to the electronic medical record; as a result, non-respondent patient characteristics are unavailable.

‡An intervention was introduced midway through data collection.

IVR, interactive voice response; SMS, short message service.

Respondent characteristics by mode

Among respondents, gender (χ2=11.32, p=0.02), age (χ2=49.88, p<0.001), reason for visit (χ2=11.04, p=0.01) and number of comorbidities (χ2=288.39, p<0.001) showed statistically significant variation across the modes. Respondents to the SMS and IVR modes were on average 8–10 years younger than respondents to the paper and patient portal modes. There were differences in respondent health status between modes; in the paper mode ∼46% of respondents had two or more comorbidities compared with 21% in SMS and IVR modes and 7% in the patient portal mode.

Effect of administration mode and patient demographics on scores

Mixed effects logistic regression showed significantly lower CollaboRATE scores in the patient portal (OR 0.60, 95% CI 0.45 to 0.80), IVR (OR 0.45, 95% CI 0.34 to 0.59) and SMS (OR 0.51, 95% CI 0.38 to 0.67) modes compared with the paper mode. CollaboRATE scores increased slightly with patient age (OR 1.01 per year of age, 95% CI 1.01 to 1.02), but no other patient characteristics were associated with CollaboRATE scores (full results in online supplementary appendix 3). The estimated SD of the clinician random effect was 0.34 on the log-odds scale, implying substantial unexplained heterogeneity in clinicians' ratings even after adjusting for observed differences in patient case mix. A sensitivity analysis including only the first CollaboRATE response from each unique patient found very similar results (see online supplementary appendix 4). Although the annual visit predictor became significant in this analysis (OR 1.65, 95% CI 1.15 to 2.37), all other fixed effects retained similar magnitude and significance, and the estimated SD of the clinician random effect remained consistent at 0.33.
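One way to put the clinician random-effect SD on an interpretable scale is the median odds ratio (MOR), a standard transformation that the analysis above does not itself report: MOR = exp(√2 · σ · Φ⁻¹(0.75)), the median odds ratio between a higher-scoring and a lower-scoring clinician drawn at random, for patients with the same covariates. The sketch applies it to the reported SDs; it requires Python 3.8+ for `statistics.NormalDist`.

```python
import math
from statistics import NormalDist


def median_odds_ratio(sd_random_effect: float) -> float:
    """Median odds ratio for a random intercept with the given SD on the log-odds scale."""
    z75 = NormalDist().inv_cdf(0.75)  # 75th percentile of the standard normal, about 0.674
    return math.exp(math.sqrt(2) * sd_random_effect * z75)


mor_main = median_odds_ratio(0.34)         # main analysis
mor_first_visit = median_odds_ratio(0.33)  # first-response sensitivity analysis
```

An SD of 0.34 corresponds to an MOR of about 1.38 (about 1.37 for 0.33), comparable in size to the mode effects on the odds-ratio scale.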

Cost of data collection

Owing to high personnel involvement in administering the paper and tablet modes, these modes were the most expensive (see online supplementary appendix 5). The cost per unique response was US$20.56 and US$16.71 for the paper and tablet modes, respectively. The SMS, IVR and patient portal modes were substantially less expensive, at US$10.28, US$8.87 and US$6.39 per unique response, respectively. When set-up and research-related costs were excluded, the variable cost per completed response fell to US$16.38 and US$13.46 for the personnel-intensive tablet and paper modes, respectively, and to US$3.36, US$2.83 and US$2.20 for the SMS, IVR and patient portal modes.

Discussion

Principal findings

Clinician SDM performance rankings were stable across data collection modes, although absolute CollaboRATE scores, like response rates, were sensitive to mode effects. We achieved the highest response rate (41%) by using tablet computers administered in-clinic by research staff, although this approach was among the costliest. The lowest response rate (12%) occurred when clinic staff gave paper surveys to patients as they left the clinic.

Mode of administration and respondent characteristics were associated with CollaboRATE scores, with the lowest score in the IVR mode and the highest in the paper mode. We speculate that this finding may be explained, at least in part, by patients' perceived distance from the clinic experience and the perceived anonymity of the response. Across all modes, respondents were slightly older than non-respondents. Younger patients appeared to prefer, or to be more comfortable with, completing CollaboRATE by SMS and IVR; respondents in these modes were 8–10 years younger than respondents in the paper and patient portal modes. Across all modes, clinician identity and mode of survey administration had the greatest impact on CollaboRATE scores, while increasing patient age was associated with a small but statistically significant increase in CollaboRATE score.

If fixed institutional costs are excluded, the use of existing technological systems such as patient portals or SMS capabilities can reduce the cost of obtaining completed survey responses. The variable costs associated with collecting 25 responses per clinician are US$55 for patient portal, US$71 for IVR, US$84 for SMS, US$337 for paper in-clinic and US$410 for tablet, indicating that the modes requiring the least personnel involvement that maintained reasonable response rates (SMS, IVR, patient portal) cost the least. While the tablet mode had high response rates and was the most time-efficient data collection method, the personnel time required made it the most expensive mode.
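The per-clinician figures above appear consistent with multiplying each mode's variable cost per completed response (reported in the Results) by 25 and rounding half up to the nearest dollar. The sketch uses `Decimal` so that values such as 336.50 round up rather than to the nearest even number, as Python's built-in `round` would do.

```python
from decimal import ROUND_HALF_UP, Decimal

# Variable cost per completed response (US$), excluding set-up and research costs.
VARIABLE_COST_PER_RESPONSE = {
    "patient portal": Decimal("2.20"),
    "IVR": Decimal("2.83"),
    "SMS": Decimal("3.36"),
    "paper in-clinic": Decimal("13.46"),
    "tablet": Decimal("16.38"),
}


def cost_for_n_responses(per_response: Decimal, n: int = 25) -> int:
    """Variable cost of n completed responses, rounded half up to whole dollars."""
    return int((per_response * n).quantize(Decimal("1"), rounding=ROUND_HALF_UP))


cost_for_25 = {mode: cost_for_n_responses(c) for mode, c in VARIABLE_COST_PER_RESPONSE.items()}
```

This yields US$55, US$71, US$84, US$337 and US$410 for the patient portal, IVR, SMS, paper and tablet modes, matching the figures in the paragraph above.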

Strengths and limitations

We consider the work to have several strengths. First, we deployed a range of modes to deliver a PREM of SDM, daily and within 24 hours of the clinic visit. By consistently measuring the performance of the same clinicians, we were also able to assess mode effects on clinician rank order and CollaboRATE score, and to demonstrate the feasibility of this approach to performance measurement. We collected CollaboRATE routinely in a clinic setting and experienced the related practical challenges: competing priorities for administrative, information systems and clinical staff made survey implementation difficult. We faced significant challenges embedding these survey administration methods in institutional information systems, where programming the SMS, patient portal and IVR modes was by necessity secondary to other institutional deadlines and demands. However, we were able to access and link patient demographic and clinical data with CollaboRATE responses, which allowed us to account for patient characteristics.

The study also had limitations. Data collection for 15 months, using sequential administration modes, led to respondent and organisational fatigue, impacting response rates. Clinic staff were burdened by paper survey tasks. We received reports that patients who had previously completed the CollaboRATE survey perceived little value in repeating their evaluation. Our study design also did not account for potential repeated measures; since CollaboRATE's reference period is the patient's most recent clinical encounter, we assumed each CollaboRATE response to be independent and did not account for clustering by patient. Our sensitivity analysis excluding all but a patient's initial CollaboRATE response found results consistent with our original conclusions, suggesting only minimal potential influence of patient-level clustering on our results. Order of mode implementation was not randomised, and we were unable to account for potential order effects in our analysis.

In addition, significant changes in clinic management impeded paper administration of CollaboRATE. SMS and IVR administration modes were hampered because the clinic record system did not consistently distinguish between cellular and other telephone numbers. Selection bias, enabled by low response rates in some data collection modes and the potential for unmeasured demographic differences between respondents and non-respondents, may contribute to variation in scores between modes. However, we believe the risk of selection bias is low given the similar measured demographic characteristics of respondents and non-respondents. Our cost analysis methods also relied on retrospective cost estimates.

Context in existing literature

CollaboRATE provided a consistent estimate of the level of SDM practiced by clinicians, with no difference in rank order of clinicians across modes despite a shift in CollaboRATE top score. These findings are similar to those from the review by Hood et al14 where mode was not related to differences in the precision of 36-Item Short Form Health Survey (SF-36) scores, but survey administration (self-report or interviewer-led) and sensory stimuli (auditory, visual or both) were the factors that impacted the absolute mean scores. Using the Tourangeau13 framework, it appears that collecting data in the clinic setting results in the highest scores, compared with the lower scores obtained using the IVR or SMS modes. Lower scores when using IVR are also found by Rodriguez et al23 who reported primary care patients had significantly lower scores on the Ambulatory Care Experience Survey by IVR, after adjusting for patient characteristics, compared with mail and internet administration. In addition, our findings match others who report lower evaluations when patients are asked to respond outside clinic settings.28–30

Only the in-clinic electronic tablet/mail-in mode achieved the 40% response rate recommended for CAHPS.31 The patient portal response rate of eligible email recipients was 34%, double the average response rate found in a recent review,17 which supports the view that technological methods yield improved response rates, provided respondent burden is avoided.14 The use of SMS was not as successful; unreliable cellular coverage was a factor, and it remains unclear whether this mode can provide representative data given demographic differences in the use of cellphones and text messages.

Our cost analyses correspond with previous reports that the highest cost per completed survey is associated with in-clinic data collection.16 22 23 However, when set-up and research costs were excluded, all modes of administration were below US$20 per completed response and were <US$5 per completed response for three modes, namely patient portal, SMS and IVR.

Implications

Patient experience measurement is a relatively recent addition to healthcare settings and is widely viewed as a key method of assessing value.32 Nevertheless, there is a significant risk that the measurement of patient experience becomes viewed as a burden, where surveys are lengthy and administered out of context. Findings from our study suggest that CollaboRATE can overcome these challenges as it consists of only three items that can be delivered via multiple modes at a cost comparable to existing survey administration. In addition, clinician ranking was consistent, regardless of data collection mode. The decision of which mode is best for data collection will depend on the extent to which data collectors can harness technological tools and access patient information such as email addresses or telephone numbers to facilitate contact while maximising patient confidentiality. If multiple modes are used, it is important for administrators to account for mode effects when estimating CollaboRATE scores.

We now face the challenge of studying CollaboRATE in more settings to test the generalisability of our findings and establish benchmarks for clinic-level and clinician-level performance. There is also a need to investigate automated collection, analysis and visualisation of the data so that the results can serve their core purpose of providing timely feedback to improve clinical practice. Clinicians and clinics that score low on CollaboRATE will want to know what they can do to achieve SDM and, in turn, provide higher-quality healthcare to patients.

Acknowledgments

We acknowledge the following individuals for their invaluable contributions to the project: Erik Olson and Shelley Sanyal for programming data collection and reporting systems for IVR/SMS and online patient portal data collection modes, respectively; Margaret Menkov for data management and medical record data extraction; Jef Hale, Scott Farr and Charles Goff for IT oversight; Chelsea Worthen, Ethan Berke and Michelle L'Heureux for clinic leadership.

Footnotes

Twitter: Follow Paul Barr @BarrPaulJ

Contributors: PJB contributed to design of the work, drafting the article and final approval of the version to be published. RCF contributed to design of the work, data analysis and interpretation, drafting the article and final approval of the version to be published. RT contributed to design of the work, critical revision of the article and final approval of the version to be published. EMO contributed to design of the work, data collection, data analysis and interpretation, critical revision of the article and final approval of the version to be published. RA contributed to the design of the work and final approval of the version to be published. MGC contributed to design of the work, data collection, critical revision of the article and final approval of the version to be published. AJO contributed to design of the work, data interpretation, critical revision of the article and final approval of the version to be published. GE contributed to conception and design of the work, drafting the article and final approval of the version to be published.

Funding: This work was funded by the Gordon and Betty Moore Foundation, grant number 3929.

Competing interests: GE reports personal fees from Emmi Solutions LLC, personal fees from National Quality Forum, personal fees from Washington State Health Department, personal fees from Shared Decision Making 3rd edition, personal fees from Groups (Radcliffe Press), outside the submitted work; and GE has initiated and led the Option Grid patient decision aids Collaborative, which produces and publishes patient knowledge tools in the form of comparison tables (http://optiongrid.org/). GE has been a member of teams that have developed measures of shared decision-making and care integration. These tools and measures are published and are available for use. For further information see http://www.glynelwyn.com/.

Ethics approval: The study received ethics approval from the Dartmouth Committee for the Protection of Human Subjects (#24529).

Provenance and peer review: Not commissioned; externally peer reviewed.

Data sharing statement: No additional data are available.

References

- 1. Wagner E, Coleman K, Reid R et al. Guiding transformation: how medical practices can become patient-centered medical homes. Commonwealth Fund, 2012. http://www.commonwealthfund.org/~/media/Files/Publications/Fund%20Report/2012/Feb/1582_Wagner_guiding_transformation_patientcentered_med_home_v2.pdf (accessed 7 Dec 2016).

- 2. Dyer N, Sorra JS, Smith SA et al. Psychometric properties of the Consumer Assessment of Healthcare Providers and Systems (CAHPS®) Clinician and Group Adult Visit Survey. Med Care 2012;50:S28–34. doi:10.1097/MLR.0b013e31826cbc0d

- 3. U.S. Department of Health & Human Services. The CAHPS Program. http://www.ahrq.gov/cahps/about-cahps/cahps-program/index.html (accessed 24 Aug 2016).

- 4. U.S. Department of Health & Human Services. Clinician & Group Survey and Instructions. http://www.ahrq.gov/cahps/surveys-guidance/cg/instructions/index.html (accessed 24 Aug 2016).

- 5. Kasper J, Heesen C, Köpke S et al. Patients’ and observers’ perceptions of involvement differ. Validation study on inter-relating measures for shared decision making. PLoS ONE 2011;6:e26255. doi:10.1371/journal.pone.0026255

- 6. Scholl I, Koelewijn-van Loon M, Sepucha KR et al. Measurement of shared decision making—a review of instruments. Z Evid Fortbild Qual Gesundhwes 2011;105:313–24. doi:10.1016/j.zefq.2011.04.012

- 7. Barr PJ, Elwyn G. Measurement challenges in shared decision making: putting the ‘patient’ in patient-reported measures. Health Expect 2016;19:993–1001. doi:10.1111/hex.12380

- 8. Elwyn G, Barr PJ, Grande SW et al. Developing CollaboRATE: a fast and frugal patient-reported measure of shared decision making in clinical encounters. Patient Educ Couns 2013;93:102–7. doi:10.1016/j.pec.2013.05.009

- 9. Barr PJ, Thompson R, Walsh T et al. The psychometric properties of CollaboRATE: a fast and frugal patient-reported measure of the shared decision-making process. J Med Internet Res 2014;16:e2. doi:10.2196/jmir.3085

- 10. Thompson R, Elwyn G. Patient-reported measurement of shared decision making: the development, validation, and pilot implementation of CollaboRATE. In: International Conference on Communication in Healthcare. Amsterdam, 2014.

- 11. Rickert J. An innovative patient-centered total joint replacement program. Health Aff Blog 2016. http://healthaffairs.org/blog/2016/03/28/an-innovative-patient-centered-total-joint-replacement-program/ (accessed 8 Jul 2016).

- 12. Meterko M, Radwin L, Bokhour B. Measuring patient-centered care: a brief measure for use in point-of-care assessments. White Paper. US Department of Veterans Affairs, Office of Patient-centered Care and Cultural Transformation, 2015.

- 13. Tourangeau R, Rips L, Rasinski K. The psychology of survey response. Cambridge University Press, 2000.

- 14. Hood K, Robling M, Ingledew D et al. Mode of data elicitation, acquisition and response to surveys: a systematic review. Health Technol Assess 2012;16:1–162. doi:10.3310/hta16270

- 15. Elliott MN, Zaslavsky AM, Goldstein E et al. Effects of survey mode, patient mix, and nonresponse on CAHPS hospital survey scores. Health Serv Res 2009;44:501–18. doi:10.1111/j.1475-6773.2008.00914.x

- 16. Bergeson SC, Gray J, Ehrmantraut LA et al. Comparing Web-based with Mail Survey Administration of the Consumer Assessment of Healthcare Providers and Systems (CAHPS®) Clinician and Group Survey. Prim Health Care 2013;3:1000132. doi:10.4172/2167-1079.1000132

- 17. Gwaltney CJ, Shields AL, Shiffman S. Equivalence of electronic and paper-and-pencil administration of patient-reported outcome measures: a meta-analytic review. Value Health 2008;11:322–33. doi:10.1111/j.1524-4733.2007.00231.x

- 18. Kongsted A, Leboeuf-Yde C. The Nordic back pain subpopulation program—individual patterns of low back pain established by means of text messaging: a longitudinal pilot study. Chiropr Osteopat 2009;17:11. doi:10.1186/1746-1340-17-11

- 19. Axén I, Bodin L, Bergström G et al. The use of weekly text messaging over 6 months was a feasible method for monitoring the clinical course of low back pain in patients seeking chiropractic care. J Clin Epidemiol 2012;65:454–61. doi:10.1016/j.jclinepi.2011.07.012

- 20. Christie A, Dagfinrud H, Dale Ø et al. Collection of patient-reported outcomes;—text messages on mobile phones provide valid scores and high response rates. BMC Med Res Methodol 2014;14:52. doi:10.1186/1471-2288-14-52

- 21. Schober MF, Conrad FG, Antoun C et al. Precision and disclosure in text and voice interviews on smartphones. PLoS ONE 2015;10:e0128337. doi:10.1371/journal.pone.0128337

- 22. California HealthCare Foundation. Measuring and improving patient experience in the safety net. 2011. http://www.chcf.org/~/media/MEDIA%20LIBRARY%20Files/PDF/PDF%20M/PDF%20MeasuringImprovingPatientExperienceSummary.pdf

- 23. Rodriguez HP, von Glahn T, Rogers WH et al. Evaluating patients’ experiences with individual physicians: a randomized trial of mail, internet, and interactive voice response telephone administration of surveys. Med Care 2006;44:167–74. doi:10.1097/01.mlr.0000196961.00933.8e

- 24. Makoul G, Krupat E, Chang CH. Measuring patient views of physician communication skills: development and testing of the Communication Assessment Tool. Patient Educ Couns 2007;67:333–42. doi:10.1016/j.pec.2007.05.005

- 25. U.S. Census Bureau. American FactFinder. http://factfinder.census.gov/faces/nav/jsf/pages/index.xhtml (accessed 26 Aug 2016).

- 26. Colla CH, Lewis VA, Gottlieb DJ et al. Cancer spending and accountable care organizations: evidence from the Physician Group Practice Demonstration. Healthc (Amst) 2013;1:100–7. doi:10.1016/j.hjdsi.2013.05.005

- 27. Chisholm A, Askham J. What do you think of your doctor? A review of questionnaires for gathering patients’ feedback on their doctor. Oxford, UK: Picker Institute, 2006.

- 28. Bendall-Lyon D, Powers T. Time does not heal all wounds. Mark Health Serv 2001;21:10–14.

- 29. Jensen HI, Ammentorp J, Kofoed PE. User satisfaction is influenced by the interval between a health care service and the assessment of the service. Soc Sci Med 2010;70:1882–7. doi:10.1016/j.socscimed.2010.02.035

- 30. Bjertnaes OA. The association between survey timing and patient-reported experiences with hospitals: results of a national postal survey. BMC Med Res Methodol 2012;12:13. doi:10.1186/1471-2288-12-13

- 31. U.S. Department of Health & Human Services. Frequently Asked Questions About CAHPS. Agency for Healthcare Research & Quality. http://www.ahrq.gov/cahps/faq/index.html (accessed 18 Jan 2016).

- 32. Nelson EC, Mohr JJ, Batalden PB et al. Improving health care, Part 1: the clinical value compass. Jt Comm J Qual Improv 1996;22:243–58.

Supplementary Materials

bmjopen-2016-014681supp_appendices.pdf (83.3KB, pdf)