Abstract

Progress in diagnostic error research has been hampered by a lack of unified terminology and definitions. This article proposes a novel framework for considering diagnostic errors, offering a unified conceptual model for underdiagnosis, overdiagnosis, and misdiagnosis. The model clarifies the critical separation between ‘diagnostic process failures’ (incorrect workups) and ‘diagnosis label failures’ (incorrect diagnoses). By dividing processes into those that are substandard, suboptimal, or optimal, important distinctions are drawn between ‘preventable’, ‘reducible,’ and ‘unavoidable’ diagnostic errors. The new model emphasizes the importance of mitigating diagnosis-related harms, regardless of whether the solutions require traditional safety strategies (preventable errors), more effective evidence dissemination (reducible errors; harms from overtesting and overdiagnosis), or new scientific discovery (currently unavoidable errors). Doing so maximizes our ability to prioritize solving various diagnosis-related problems from a societal value perspective. This model should serve as a foundation for developing consensus terminology and operationalized definitions for relevant diagnostic-error categories.

Keywords: diagnosis, diagnostic errors, misdiagnosis, overdiagnosis, patient safety, underdiagnosis

Introduction

Despite recent advances in understanding the epidemiology and burden of diagnostic error, [1–3] the ‘basic science’ of medical misdiagnosis remains underdeveloped [4]. Progress in diagnostic error research has been hampered, in part, by a lack of unified terminology and definitions. Some authors use the term diagnostic error to refer to any erroneous diagnosis, regardless of whether a clinical mistake occurred during the diagnostic process, allowing for a ‘no-fault’ class of errors [5, 6]. Others insist that a diagnosis failure must be linked to a care process error to be considered a diagnostic error [7–9]. Some expand the notion of process errors to include all ‘suboptimal cognitive acts’, including documentation failures, [10] while others do not, suggesting we should identify ‘missed opportunities’ in diagnosis rather than errors, per se [2]. There is a lack of clarity on the distinction between ‘delayed’ and ‘missed’ diagnoses, since, to be identified as a diagnostic error, the diagnosis must eventually be proven wrong, leading some to suggest ‘missed’ be reserved for correct diagnoses discovered only post-mortem. It is also unclear when these late diagnoses should instead be considered ‘wrong’, rather than ‘delayed’ or ‘missed’, since, for some authors, these three categories are mutually exclusive [6]. The term ‘misdiagnosis’ is sometimes used synonymously with diagnostic error, [4] but other times restricted to the subset with ‘wrong’ diagnoses [7]. Among thought leaders in the field, there is even disagreement over the subtle linguistic difference between ‘diagnoSIS error’ and ‘diagnosTIC error’ (a distinction espoused by some, [7] but not others [11]). Terms such as overdiagnosis, [12, 13] underdiagnosis, [14, 15] and undiagnosed [16, 17] are now frequently used, but their relationship to diagnostic error remains undefined.

In this manuscript, I propose a new conceptual model that might serve as a basis for the development of a unified taxonomy and consensus definitions for terminology. In developing the new conceptual model, I sought to adhere to three guiding principles: (1) illustrate the important relationships between problems with diagnostic workups and problems rendering a diagnosis, linking these to different terms and taxonomies; (2) capture the full spectrum of diagnosis-related issues, including ‘no-fault’ errors, overdiagnosis, and misdiagnosis-related harms [4]; and (3) remain internally consistent. Where possible, I also sought to maintain congruence with existing terminology and limit potential confusion over subtle word differences.

Core elements of the new model

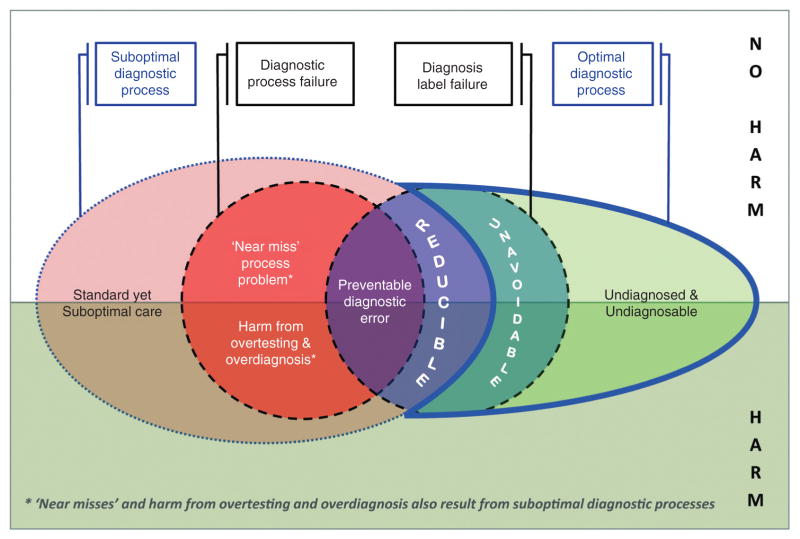

At the core of the model are diagnostic process failures and diagnosis label failures (Figure 1). Diagnostic process failures are problems in the diagnostic workup (e.g., failure to obtain an electrocardiogram in a patient with chest pain and possible acute coronary syndrome). Diagnosis label failures are problems in the named diagnosis given to the patient (e.g., diagnosing ‘gastroesophageal reflux’ when the true condition was ‘myocardial infarction’). Separating these core concepts is common to other models [7, 10] (e.g., diagnostic process failures have been called ‘diagnostic process errors’ [7] or ‘suboptimal cognitive acts’ [10]), as is identifying the subset in which misdiagnosis-related harm [4] occurs (also called ‘adverse events’ [7]).

Figure 1. Core elements of the model define preventable diagnostic errors and resemble prior conceptual models [7].

Diagnostic process failures come in many forms, including cognitive errors [18] and many types of systems errors [6] (e.g., communication failures, equipment malfunctions, problems with care coordination, and health-care access for patients). Diagnosis label failures come in two main forms – incorrect diagnosis label (e.g., myocardial infarction called reflux) and no diagnosis attempted (e.g., patient left against medical advice). Incorrect labels are sometimes named differently depending on whether the label was underspecified (e.g., labeling a patient with aneurysmal subarachnoid hemorrhage as ‘headache, not otherwise specified’ or ‘not yet diagnosed’ – sometimes called ‘delayed’ or ‘missed’) versus mis-specified (e.g., mislabeling a patient with aneurysmal subarachnoid hemorrhage as ‘migraine’ – sometimes called ‘wrong’). It is worth noting that thought leaders now generally agree there is probably little value in insisting on subdividing incorrect diagnosis labels as specifically ‘delayed’, ‘missed’, or ‘wrong’.

Diagnostic process failures and diagnosis label failures may be acts of omission or commission. Although it is tempting to think that, when processes fail, acts of omission (e.g., test underuse) lead to underdiagnosis (delayed/missed diagnoses), while acts of commission (e.g., test overuse) lead to wrong diagnosis (incorrect diagnoses) or overdiagnosis (correct diagnoses unlikely to impact patient health [12]), this is an oversimplification. Process failures in a single diagnostic workup often involve both underuse and overuse (e.g., obtaining a brain CT scan [overuse] in a patient with dizziness and suspected stroke, but not obtaining an MRI [underuse] [19]); and most wrong diagnoses represent underdiagnoses of true conditions as well as excess diagnoses of erroneous ones.

Relationship between diagnostic process and diagnosis label

Diagnostic process failures and diagnosis label failures may occur together or in isolation (Figure 1). Taxonomies generally agree that when diagnostic process failures lead to diagnosis label failures, the events should be called diagnostic errors. Here I call these preventable diagnostic errors (what some have called ‘missed opportunities’ in diagnosis [2]). By contrast, when diagnostic process and diagnosis label failures occur in isolation, there is disagreement among experts as to whether a ‘diagnostic error’ has occurred.

Diagnostic process failures sometimes occur without an incorrect diagnosis label. There are two discrete types – ‘lucky’ diagnoses (near-miss process failures) and correct diagnoses of underlying conditions that would better have gone undiagnosed (overdiagnoses [12]). Near misses include scenarios with fortunate outcomes despite undue diagnostic process risk (e.g., no lumbar puncture to rule out subarachnoid hemorrhage in new, thunderclap headache), but also latent or known errors that never influence the diagnosis, including those captured by redundant systems (e.g., missed pulmonary nodule by radiologist, identified by primary care doctor directly reviewing chest x-ray). Overdiagnoses include conditions that are intentionally sought but too mild to ever warrant treatment (e.g., with cancer screening [12]) or correct but unimportant conditions found incidentally in the pursuit of alternate diagnoses (so-called ‘incidentalomas’ [19]).

Conversely, diagnosis label failures sometimes occur without diagnostic process failures since, in clinical practice, diagnostic uncertainty often cannot be completely resolved [20, 21]. This is because most tests have imperfect sensitivity and specificity; obtaining some tests may risk more harm (or costs) than benefit; and scientific knowledge of diseases is incomplete. For example, many diseases have pre-symptomatic phases where the best available screening fails to detect the target conditions. New discoveries (tests or diseases) may make previously ‘undetectable’ or ‘unknown’ conditions diagnosable (e.g., a recently discovered, novel tick-borne pathogen [22]).

The new model and its implications

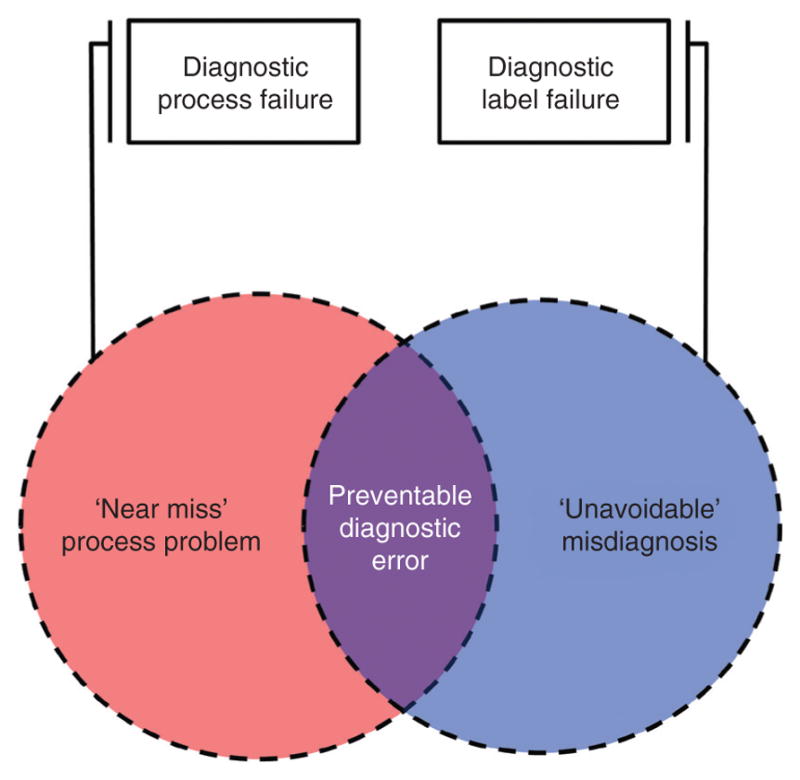

To the core model I have added two concepts not found in prior models [7, 10]: (1) suboptimal diagnostic processes and (2) optimal diagnostic processes (Figure 2). Suboptimal diagnostic processes are practices that are standard, accepted, and common in a given clinical setting, but do not represent the best possible care. Optimal diagnostic processes are those that represent the best possible care, given the state of current scientific knowledge (i.e., the most experienced and skilled clinician, practicing under optimized conditions, with full access to relevant tests, and no external constraints such as time pressure). Adding these concepts makes explicit that diagnostic standards vary locally and evolve with development of new diagnostic technologies, dissemination of new diagnostic methods, and discovery of new diseases. The result is two new classes of diagnostic error (‘reducible’ and ‘unavoidable’). These error classes are important because they may cause a substantial burden of misdiagnosis-related harm and because mitigation strategies are likely to differ from ‘preventable’ diagnostic errors. The new model also identifies a category of undiagnosed (no diagnosis label given) patients with undiagnosable conditions (pre-symptomatic or early-stage diseases below our current detection threshold and symptomatic diseases and disorders not yet known to science).

Figure 2. Combining new elements with the core elements of the model defines reducible and unavoidable diagnostic errors.

Reducible diagnostic errors are suboptimal diagnostic processes leading to a diagnosis label failure. These differ from ‘preventable’ diagnostic errors because the diagnostic process leading to the incorrect diagnosis is suboptimal but not substandard. The practice gap therefore reflects a defect in access to a new or improved diagnostic approach, technology, or system of care. Sometimes this practice gap is a transient phenomenon while diffusion of innovation occurs (e.g., adoption of thrombolytic therapy for myocardial infarction [23]). Other times the practice gap is sustained for various reasons, despite major dissemination efforts [24]. In some instances, this residual defect may be partly due to resource constraints in diagnostic testing [25]. Reducing these errors often involves more effective dissemination strategies for preferred diagnostic approaches. In certain clinical contexts or settings (e.g., resource-poor countries), some ‘reducible’ diagnostic errors deemed unacceptable elsewhere (e.g., industrialized countries) might be deemed tolerable.

Unavoidable diagnostic errors are diagnosis label failures despite optimal diagnostic processes. These have previously been referred to as ‘no-fault’ errors [5, 6]. I avoid use of ‘no-fault’ here because prior definitions are somewhat broader (e.g., including uncommon diseases that ‘likely would be missed by most physicians’ [5]) and because using this terminology risks implying ‘fault’ for all other classes of diagnostic error – a blaming culture risks impeding progress in diagnostic safety [26]. These unavoidable errors could not have been prevented at the time, even under the best possible circumstances. Decreasing unavoidable diagnostic errors requires new scientific discovery to improve the detection thresholds for diagnostic tests, decrease the risks of confirmatory testing (as with newer, non-invasive tests), or identify new diseases (as with focused exploratory investigation in patients with unusual, unexplained symptoms [27]).
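The three error classes defined above, together with the near-miss and overdiagnosis categories described earlier, can be read as a small decision table over two inputs: the quality of the diagnostic process and whether a diagnosis label failure occurred. The Python sketch below is only an illustration of that reading, not an operationalized definition. The names `ProcessQuality` and `classify_event` and the `overdiagnosis` flag are hypothetical, and the ‘no diagnostic error’ result for a suboptimal or optimal process without a label failure is an assumption, since the text does not name that cell explicitly.

```python
from enum import Enum


class ProcessQuality(Enum):
    """Quality tiers of the diagnostic process (hypothetical names)."""
    SUBSTANDARD = "substandard"  # a diagnostic process failure
    SUBOPTIMAL = "suboptimal"    # standard and accepted, but not the best possible care
    OPTIMAL = "optimal"          # best possible care given current scientific knowledge


def classify_event(process: ProcessQuality,
                   label_failure: bool,
                   overdiagnosis: bool = False) -> str:
    """Map one diagnostic event onto the categories named in the text.

    label_failure: True for an incorrect diagnosis label or no diagnosis attempted.
    overdiagnosis: True when a correct diagnosis would better have gone
                   undiagnosed; only consulted when there is no label failure.
    """
    if label_failure:
        # Label failure: the error class depends on the process tier.
        return {
            ProcessQuality.SUBSTANDARD: "preventable diagnostic error",
            ProcessQuality.SUBOPTIMAL: "reducible diagnostic error",
            ProcessQuality.OPTIMAL: "unavoidable diagnostic error",
        }[process]
    if process is ProcessQuality.SUBSTANDARD:
        # Process failure without a label failure.
        return "overdiagnosis" if overdiagnosis else "near miss"
    # Assumption: this cell is not named explicitly in the text.
    return "no diagnostic error"


if __name__ == "__main__":
    for tier in ProcessQuality:
        print(tier.value, "->", classify_event(tier, label_failure=True))
```

Harm is deliberately left out of the sketch: in the model, misdiagnosis-related harm may or may not accompany any of these categories, so it would be recorded as a separate attribute of the event rather than folded into the classification.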

The new model allows for a broader conceptualization of who engages in ‘diagnosis’ and for growth in the direction of shared diagnostic decision-making. Directing the diagnostic process and offering a diagnosis label have historically been the exclusive purview of physicians. With the proliferation of internet-based self-diagnosis [28] and direct-to-consumer marketing of diagnostic tests, [29] patients have taken an increasingly active role in the diagnostic process, sometimes with salutary, [30] and other times not-so-salutary effects [31, 32]. If a patient were to incorrectly diagnose their symptoms using internet resources, this could be readily classified in the new model as ‘unavoidable’ (in the clinician-centric sense) or ‘reducible’ (in the systems-of-care sense), even if most physicians would be uncomfortable classifying this as a ‘diagnostic process failure’ akin to failure to order an electrocardiogram in a patient with acute chest pain.

The structure of the new model also makes clear that for any given subset of diagnosis-related problems, harm may (or may not) result from testing, treatment, or disease (Figure 2) [4]. In the model, harms are not restricted to those from preventable diagnostic errors alone. The model therefore allows us to clarify and emphasize the importance of mitigating diagnosis-related harms, regardless of whether the solutions require traditional safety strategies (preventable diagnostic errors), more effective evidence dissemination (reducible diagnostic errors; harms from overtesting and overdiagnosis), or new scientific discovery (unavoidable diagnostic errors). Doing so maximizes our ability to prioritize solving various diagnosis-related problems from a societal value perspective [19]. High-value targets are clinical scenarios with a large burden of diagnosis-related harm that can be eliminated at relatively low net cost [19].

Unresolved issues

There remain important, unresolved questions that will need to be addressed going forward. Many of these relate to the temporal progress of disease and diagnosis, since both evolve over time (e.g., When does a disease ‘begin’? When does the diagnostic process ‘begin’, especially if we are considering diagnostic screening? How much ‘delay’ is acceptable for a given diagnosis, and how should we decide? Are the answers context dependent, as with patient age vis-à-vis cancer screening and overdiagnosis?). Some questions relate to the accuracy and precision of diagnosis specification (e.g., How detailed or ‘granular’ must a diagnosis be to be considered ‘correct’? If the diagnosis was mis-specified but close enough that it led to the correct next management action, was it an error?). Other questions relate to differing perspectives on error within a complex system of care (e.g., If a preventable diagnostic error is made but effectively mitigated by another agent within the health-care system, should this count as a diagnostic error… or a near miss? In an accountable-care health maintenance organization, is a patient’s failure to seek appropriate medical attention a ‘system’ error in diagnosis?). Finally, there are unsolved questions about ‘acceptable’ diagnostic errors (e.g., Should we be targeting a zero rate of preventable errors? If not, how should we pick a target? Under what circumstances might we tolerate (i.e., not seek to eliminate) reducible errors? Should standards differ for errors in diagnostic screening as opposed to symptomatic diagnosis?).

Conclusions

A new model incorporating concepts related to discovery and dissemination of new diagnostic knowledge provides a more robust platform for classifying diagnostic errors of all types and their associated harms. Going forward, it will be important to build consensus around the terms representing these concepts and to operationalize their definitions. This is no small task, since there is disagreement among leaders in the field, and many of the concepts have blurry boundaries. Nevertheless, the understanding and prevention of diagnostic errors is unlikely to progress rapidly without some degree of consensus on key definitions.

Acknowledgments

Sources of funding and support; an explanation of the role of sponsor(s): Preparation of this manuscript was partly supported by a grant from the Agency for Healthcare Research and Quality (Grant #R13HS019252). The funding agency was not involved in the writing, approval, or decision to publish this manuscript.

Footnotes

Authors’ contributions: David Newman-Toker: I declare that I am the sole author and approved the final version. I have no conflicts of interest.

Responsibility for manuscript: The corresponding author (David E. Newman-Toker) had full access to all the data in the study and takes responsibility for the integrity of the data and the accuracy of the data analysis. The corresponding author also had final responsibility for the decision to submit for publication.

Conflict of interest: No conflicts of interest. The author has no financial or personal relationships with other people or organizations that could inappropriately influence (bias) their work.

Role of medical writer or editor: No medical writer or editor was involved in the creation of this manuscript.

Information on previous presentation of the information reported in the manuscript: Elements of this work were presented at the 5th Annual International Diagnostic Error in Medicine Conference held in Baltimore, MD in November 2012 and at the 6th Annual International Diagnostic Error in Medicine Conference held in Chicago, IL in September 2013.

All persons who have made substantial contributions to the work but who are not authors: None.

IRB Approval: No human subjects were involved in the preparation of this manuscript, and no IRB approval was required.

Financial support: None.

References

- 1. Zwaan L, de Bruijne M, Wagner C, Thijs A, Smits M, van der Wal G, et al. Patient record review of the incidence, consequences, and causes of diagnostic adverse events. Arch Intern Med. 2010;170:1015–21. doi: 10.1001/archinternmed.2010.146.

- 2. Singh H, Giardina TD, Meyer AN, Forjuoh SN, Reis MD, Thomas EJ. Types and origins of diagnostic errors in primary care settings. JAMA Intern Med. 2013;173:418–25. doi: 10.1001/jamainternmed.2013.2777.

- 3. Graber ML. The incidence of diagnostic error in medicine. BMJ Qual Saf. 2013;22(Suppl 2):ii21–ii27. doi: 10.1136/bmjqs-2012-001615.

- 4. Newman-Toker DE, Pronovost PJ. Diagnostic errors – the next frontier for patient safety. J Am Med Assoc. 2009;301:1060–2. doi: 10.1001/jama.2009.249.

- 5. Kassirer JP, Kopelman RI. Cognitive errors in diagnosis: instantiation, classification, and consequences. Am J Med. 1989;86:433–41. doi: 10.1016/0002-9343(89)90342-2.

- 6. Graber ML, Franklin N, Gordon R. Diagnostic error in internal medicine. Arch Intern Med. 2005;165:1493–9. doi: 10.1001/archinte.165.13.1493.

- 7. Schiff GD, Kim S, Abrams R, Cosby K, Lambert B, Elstein AS, et al. Diagnosing diagnosis errors: lessons from a multi-institutional collaborative project. In: Henriksen K, Battles JB, Marks ES, Lewin DI, editors. Advances in patient safety: from research to implementation (vol. 2: Concepts and Methodology). Rockville (MD): Agency for Healthcare Research and Quality (US); 2005. pp. 255–78.

- 8. Schiff GD, Hasan O, Kim S, Abrams R, Cosby K, Lambert BL, et al. Diagnostic error in medicine: analysis of 583 physician-reported errors. Arch Intern Med. 2009;169:1881–7. doi: 10.1001/archinternmed.2009.333.

- 9. Singh H, Giardina TD, Forjuoh SN, Reis MD, Kosmach S, Khan MM, et al. Electronic health record-based surveillance of diagnostic errors in primary care. BMJ Qual Saf. 2012;21:93–100. doi: 10.1136/bmjqs-2011-000304.

- 10. Zwaan L, Thijs A, Wagner C, van der Wal G, Timmermans DR. Relating faults in diagnostic reasoning with diagnostic errors and patient harm. Acad Med. 2012;87:149–56. doi: 10.1097/ACM.0b013e31823f71e6.

- 11. Graber M. Diagnostic errors in medicine: a case of neglect. Jt Comm J Qual Patient Saf. 2005;31:106–13. doi: 10.1016/s1553-7250(05)31015-4.

- 12. Welch HG, Black WC. Overdiagnosis in cancer. J Natl Cancer Inst. 2010;102:605–13. doi: 10.1093/jnci/djq099.

- 13. Moynihan R, Doust J, Henry D. Preventing overdiagnosis: how to stop harming the healthy. Br Med J. 2012;344:e3502. doi: 10.1136/bmj.e3502.

- 14. Mitchell PB. Re: bipolar disorders: a shift to overdiagnosis or to accurate diagnosis? And underdiagnosis: which way to measure? Can J Psychiatry. 2013;58:372. doi: 10.1177/070674371305800612.

- 15. Hocagil H, Karakilic E, Hocagil C, Senlikci H, Buyukcam F. Underdiagnosis of anaphylaxis in the emergency department: misdiagnosed or miscoded? Hong Kong Med J. 2013;19:429–33. doi: 10.12809/hkmj133895.

- 16. Razzaghi H, Marcinkevage J, Peterson C. Prevalence of undiagnosed diabetes among non-pregnant women of reproductive age in the United States, 1999–2010. Prim Care Diab. 2013. doi: 10.1016/j.pcd.2013.10.004. pii: S1751-9918(13)00120-4.

- 17. Eyawo O, Hogg RS, Montaner JS. The holy grail: the search for undiagnosed cases is paramount in improving the cascade of care among people living with HIV. Can J Public Health. 2013;104:e418–9. doi: 10.17269/cjph.104.4054.

- 18. Croskerry P. The importance of cognitive errors in diagnosis and strategies to minimize them. Acad Med. 2003;78:775–80. doi: 10.1097/00001888-200308000-00003.

- 19. Newman-Toker DE, McDonald KM, Meltzer DO. How much diagnostic safety can we afford, and how should we decide? A health economics perspective. BMJ Qual Saf. 2013;22(Suppl 2):ii11–ii20. doi: 10.1136/bmjqs-2012-001616.

- 20. Kassirer JP. Our stubborn quest for diagnostic certainty. A cause of excessive testing. N Engl J Med. 1989;320:1489–91. doi: 10.1056/NEJM198906013202211.

- 21. Goodacre S. Safe discharge: an irrational, unhelpful and unachievable concept. Emerg Med J. 2006;23:753–5. doi: 10.1136/emj.2006.037903.

- 22. Krause PJ, Narasimhan S, Wormser GP, Rollend L, Fikrig E, Lepore T, et al. Human Borrelia miyamotoi infection in the United States. N Engl J Med. 2013;368:291–3. doi: 10.1056/NEJMc1215469.

- 23. Lau J, Antman EM, Jimenez-Silva J, Kupelnick B, Mosteller F, Chalmers TC. Cumulative meta-analysis of therapeutic trials for myocardial infarction. N Engl J Med. 1992;327:248–54. doi: 10.1056/NEJM199207233270406.

- 24. Eagles D, Stiell IG, Clement CM, Brehaut J, Taljaard M, Kelly AM, et al. International survey of emergency physicians’ awareness and use of the Canadian Cervical-Spine Rule and the Canadian Computed Tomography Head Rule. Acad Emerg Med. 2008;15:1256–61. doi: 10.1111/j.1553-2712.2008.00265.x.

- 25. Joubert J, Prentice LF, Moulin T, Liaw ST, Joubert LB, Preux PM, et al. Stroke in rural areas and small communities. Stroke. 2008;39:1920–8. doi: 10.1161/STROKEAHA.107.501643.

- 26. Singer S, Lin S, Falwell A, Gaba D, Baker L. Relationship of safety climate and safety performance in hospitals. Health Serv Res. 2009;44(2 Pt 1):399–421. doi: 10.1111/j.1475-6773.2008.00918.x.

- 27. Undiagnosed Diseases Program. National Institutes of Health; [cited 2013 September 15]. Available from: http://rarediseases.info.nih.gov/research/pages/27/undiagnosed-diseases-program.

- 28. Giles DC, Newbold J. Self- and other-diagnosis in user-led mental health online communities. Qual Health Res. 2011;21:419–28. doi: 10.1177/1049732310381388.

- 29. Lovett KM, Mackey TK, Liang BA. Evaluating the evidence: direct-to-consumer screening tests advertised online. J Med Screen. 2012;19:141–53. doi: 10.1258/jms.2012.012025.

- 30. Rostami K, Hogg-Kollars S. A Patient’s Journey. Non-coeliac gluten sensitivity. Br Med J. 2012;345:e7982. doi: 10.1136/bmj.e7982.

- 31. Avery N, Ghandi J, Keating J. The ‘Dr Google’ phenomenon – missed appendicitis. New Zealand Med J. 2012;125:135–7.

- 32. Pulman A, Taylor J. Munchausen by internet: current research and future directions. J Med Internet Res. 2012;14:e115. doi: 10.2196/jmir.2011.