Summary

Background

As healthcare moves towards technology-driven population health management, clinicians must adopt complex digital platforms to access health information and document care.

Objectives

This study explored information literacy, a set of skills required to effectively navigate population health information systems, among primary care providers in one Veterans Affairs (VA) medical center.

Methods

Information literacy was assessed during an 8-month randomized trial that tested a population health (panel) management intervention. Providers were asked about their use of and comfort with two VA digital tools for panel management at baseline, 16 weeks, and post-intervention. An 8-item scale (range 0-40) was used to measure information literacy (Cronbach’s α=0.84). Scores between study arms and provider types were compared using paired t-tests and ANOVAs. Associations between self-reported digital tool use and information literacy were measured via Pearson’s correlations.

Results

Providers showed moderate levels of information literacy (M= 27.4, SD 6.5). There were no significant differences in mean information literacy between physicians (M=26.4, SD 6.7) and nurses (M=30.5, SD 5.2, p=0.57 for difference), or between intervention (M=28.4, SD 6.5) and control groups (M=25.1, SD 6.2, p=0.12 for difference). Information literacy was correlated with higher rates of self-reported information system usage (r=0.547, p=0.001). Clinicians identified data access, accuracy, and interpretability as potential information literacy barriers.

Conclusions

Although the study is exploratory and its findings should be generalized with caution, it suggests that measuring and improving clinicians’ information literacy may play a significant role in the implementation and use of digital information tools, which are rapidly being deployed to enhance communication among care teams, improve health care outcomes, and reduce overall costs.

Keywords: Information systems, information literacy, health services needs and demand, informatics, human engineering or usability

1. Background and Significance

Clinical providers are responsible for integrating their knowledge, skills, and experience into effective patient care and have been increasingly tasked with adapting to new technology to deliver healthcare more efficiently [1, 2]. As clinical practice shifts towards population health management and technology makes information increasingly accessible, policies have driven the adoption of increasingly complex digital healthcare tools like electronic health record (EHR) systems [3, 4]. While there are many positive aspects to tools like EHRs, their adoption generates an overwhelming volume of unstructured data that is difficult to access and interpret [5–9, 10–14]. Moreover, complex information systems can get in the way of provider-to-provider as well as provider-to-patient communication [15–18].

To counter issues with the growing complexity of EHR systems, health care organizations are implementing data visualization and analytical aids, like animations and charts, that aim to simplify the presentation of patient information [19–21]. Clinical dashboards that combine multiple data sources into icons and graphs are also becoming increasingly common [22–25]. However, the capacity of clinicians to effectively utilize the full range of existing and emerging digital tools can be limited by their general knowledge of technology and associated understanding and skills.

The ability to navigate increasingly complex clinical information systems requires information literacy. Information literacy is the ability to recognize when information is needed, locate potential resources, develop appropriate search strategies, evaluate results, and apply relevant knowledge to decision-making [26]. As opposed to computer literacy, which involves general computing skills like word processing and email communications [27, 28], information literacy emphasizes users’ abilities to access, interact with, evaluate, understand and apply data and information to accomplish a specific task. Information literacy also includes users’ understanding of the social, legal and economic issues with respect to use of information.

Information literacy is especially important in team-based care settings like the patient-centered medical home (PCMH) model where multiple clinical providers are responsible for coordinating care for a panel, or set number, of patients [29]. Panel management (PM), a key component of the PCMH and the larger population health trend, involves tracking the health statuses of multiple patients in real-time, systematically identifying gaps in care, and providing proactive outreach to improve outcomes. These goals can be radically simplified with digital health tools. Via one centralized electronic system, providers can locate information about changes in a patient’s medications or treatment plan following a visit to a specialist or hospitalization. Studies examining information literacy have thus far focused on patients, linking limited health literacy to poor patient engagement and outcomes, and on medical trainees, where findings suggest limited statistical literacy [30–36].

In 2010, the Veterans Administration (VA) implemented its own version of the PCMH model [37]. The VA’s Patient Aligned Care Team (PACT) restructured over 800 primary care clinics into teams of 1–5 primary care providers (PCP), a registered nurse care manager (RNCM), a licensed practical nurse, and a clerk [38]. To support PACT, the VA also introduced two digital information tools: the Primary Care (PC) Almanac and the PACT Compass. The PC Almanac enables PACT providers to view and filter their patients with chronic conditions by last appointment date or specific clinical variables and find subgroups of patients with missing lab values, immunizations, or other care gaps. The PACT Compass allows comparisons of provider, team, facility, regional, and national benchmarks of quality measures such as continuity of care (i.e., proportion of patients seeing the same provider at each visit; proportion of patients contacted within two days of hospital discharge) and access (proportion of patients receiving same-day access). These tools were implemented as applications for providers to use in addition to the VA’s existing EHR system.

2. Objectives

PACT providers are expected to access the PC Almanac and PACT Compass regularly and act on data collected across clinical settings [39]. However, it is unclear whether PACT providers possess the appropriate information literacy to use these tools. This study explored PACT providers’ information literacy and knowledge of the tools within the context of a randomized trial evaluating the implementation of a non-clinical panel management assistant (PMA) in primary care. This randomized trial was conducted by the Program for Research on Outcome of VA Education (PROVE). To our knowledge, this is the first study to assess information literacy among providers following the implementation of a PM intervention. We hypothesized that information literacy among providers would be greater among teams receiving panel management support than among control group teams, and that information literacy would be associated with positive attitudes towards PM in general and increased use of PACT digital tools.

3. Methods

3.1 Study Design

The PROVE study was a cluster-randomized trial to investigate the impact of PM support on outcomes through the incorporation of a Panel Management Assistant (PMA). PMAs were trained, non-clinical staff assigned to help PACTs implement PM, including the use of the PC Almanac and PACT Compass. Six PMAs were randomly allocated to serve two PACTs each (12 total) at either the Manhattan or Brooklyn campuses of the New York Harbor VA for 8 months. The remaining 10 PACTs served as the control arm and only received static, monthly reports on their patients’ health. This study was approved by VA’s Institutional Review Board and Research and Development Committee. Additional details on the study design and primary outcomes from the trial can be found elsewhere [40, 41].

We conducted 35 semi-structured interviews with PACT providers at baseline, 16 weeks, and two months following the end of the PMA intervention. Clinicians were asked about their experience with PM and their expectations of the PMA assigned to their team. At months 4 and 8 we interviewed the PMAs to explore their experiences working with their team, successes and challenges in implementing PM strategies, and how PM could be sustained following the intervention.

In addition to the interviews, we conducted an online survey of PACT members before the PMA intervention and again two months after it ended. There were a total of 61 clinicians spread across 12 PACTs; all were invited to participate in the surveys. Of the available clinicians, 46 (75%) responded to the baseline survey and 47 (77%) responded to the post-intervention survey; 39 (64%) responded to both surveys. At baseline we measured team demographics, including gender, age, clinical role (RNCM or PCP), clinical site (Brooklyn or Manhattan), years at the VA, years in primary care, and days per week in the primary care clinic. Two months following the intervention, we measured PACT members’ perceptions of PACT, PM in general, and the PMA (for teams assigned one). We further measured providers’ information literacy as well as their use of the PM digital tools.

3.2 Information Literacy

An 8-item, self-report scale was derived from the larger surveys given to clinicians regarding perceived self-efficacy with PM to assess the information literacy of PCPs and RNCMs. The selected questions (▶ Table 1) asked about information system awareness, use of the information tools, preparedness to use the information tools, and availability of information to make clinical decisions. Respondents were asked how much they agreed with 8 statements on a 5-point Likert scale ranging from “strongly disagree” to “strongly agree”. Higher scores (max=40) indicated higher self-reported information literacy.

Table 1.

Information Literacy Scale Items

| 1. I am aware of and know how to access the PACT* Compass** and PC Almanac*** |

| 2. I routinely use the PACT Compass to see how my team is doing |

| 3. I routinely use the PC Almanac to see how our panel of patients is doing |

| 4. We routinely use data to improve the work of our team |

| 5. It is helpful to have resources like the PC Almanac and PACT Compass available |

| 6. I have sufficient training in using data tools to access information on our panel of patients |

| 7. I don’t like other people looking at data on our patients |

| 8. We have the right information at the right time to improve health outcomes for our patients |

* PACT: Patient Aligned Care Teams, VA’s Patient Centered Medical Home model

** The PACT Compass allows comparisons of local, regional, and national benchmarks of quality measures

*** The Primary Care (PC) Almanac enables providers to view and filter their patients with chronic conditions
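As a rough illustration of how a total score on this scale could be computed, the sketch below sums eight Likert responses. The numeric coding (1 for “strongly disagree” through 5 for “strongly agree”), the function name `score_information_literacy`, and the optional reverse-scoring parameter are assumptions made for illustration; the source does not state how responses were coded or whether the negatively worded item 7 was reversed.

```python
from typing import Sequence

# Assumed coding: 1 = strongly disagree ... 5 = strongly agree.
LIKERT_MIN, LIKERT_MAX = 1, 5
N_ITEMS = 8


def score_information_literacy(responses: Sequence[int],
                               reverse_items: Sequence[int] = ()) -> int:
    """Sum eight Likert responses into a total score (maximum 40).

    reverse_items holds 1-based item numbers to reverse-score. It is empty
    by default because the source does not say whether any item was reversed.
    """
    if len(responses) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} responses, got {len(responses)}")
    total = 0
    for item_number, value in enumerate(responses, start=1):
        if not LIKERT_MIN <= value <= LIKERT_MAX:
            raise ValueError(f"item {item_number}: response {value} is out of range")
        if item_number in reverse_items:
            # Reverse-scoring maps 1<->5 and 2<->4 and leaves 3 unchanged.
            value = LIKERT_MIN + LIKERT_MAX - value
        total += value
    return total


# Hypothetical respondent who answers "agree" (4) to every item.
print(score_information_literacy([4] * N_ITEMS))
```

Under this assumed coding the lowest possible total is 8 rather than 0, so a scale reported with a 0-40 range would imply a different coding than the one sketched here.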

3.3 Use of PM Digital Tools

Providers were asked general questions about their perceptions of PM, the results of which are described elsewhere [40], and about their actual usage of the PACT Compass and PC Almanac digital tools. Usage questions used a 5-point Likert scale ranging from “strongly disagree” to “strongly agree” and prompted providers to self-report whether they routinely used digital tools as part of PM. Higher scores (max=5) indicated higher self-reported, regular usage of a particular tool.

3.4 Quantitative Analysis

Descriptive statistics, presented as means with standard deviations, summarize PACT provider characteristics. The reliability, factor structure, range, and variability of the 8-item information literacy scale were measured. One-way ANOVA tests were conducted to compare information literacy scores between provider types (i.e., PCP or RNCM) and between the intervention (i.e., PACT + PMA) and control (i.e., PACT only) groups. Paired t-tests were used to compare pre- and post-intervention provider responses to questions about PM tool usage and attitudes towards PM. Pearson correlation coefficients were computed to determine the association between information literacy scores and providers’ attitudes and efficacy regarding PM implementation before and after the intervention. All analyses were completed in SPSS Version 21 (IBM Corporation) using two-tailed tests.
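The reliability check mentioned above is typically Cronbach’s alpha. The sketch below shows the standard formula, alpha = k/(k-1) × (1 − sum of item variances / variance of total scores), using only the Python standard library. It is an illustration, not the SPSS procedure used in the study; the function name `cronbach_alpha` and the sample responses are hypothetical.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(item_scores: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha, where item_scores[r][i] is respondent r's answer to item i."""
    n_respondents = len(item_scores)
    n_items = len(item_scores[0])
    if n_items < 2 or n_respondents < 2:
        raise ValueError("need at least 2 items and 2 respondents")
    # Sample variance of each item across respondents.
    item_variances = [
        variance([row[i] for row in item_scores]) for i in range(n_items)
    ]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in item_scores])
    if total_variance == 0:
        raise ValueError("total scores do not vary; alpha is undefined")
    return (n_items / (n_items - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical responses from four respondents to three items.
sample = [
    [4, 5, 4],
    [2, 2, 3],
    [3, 3, 3],
    [5, 4, 5],
]
print(round(cronbach_alpha(sample), 2))
```

Values closer to 1 indicate that the items vary together, which is what the reported alpha of 0.84 summarizes for the 8-item scale.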

3.5 Qualitative Analysis

Interviews with clinical staff and PMAs were recorded and transcribed. Transcripts were analyzed using thematic analysis [42]. Thematic analysis involves multiple phases in which researchers iteratively refine a set of themes or patterns they find when analyzing qualitative data. For this analysis, codes related to information literacy were defined by the research team after initial review of transcripts. Initial codes focused on the applications used to access information, data accuracy and completeness, information retrieval, and application use. Refined codes sought to find patterns in use of the applications to examine patient data trends, including summary metrics, graphs and charts, as these are important tasks for population health management. The codes were used to identify major themes across interviews. Two researchers (AEJ and KJB) performed primary coding and thematic analysis. A third researcher (BED) guided the thematic analysis, resolved conflicts, and refined interpretation of results.

4. Results

4.1 Participant Characteristics

Thirty-nine (64%) clinicians (10 RNCMs and 29 PCPs) spread across 12 PACTs completed the baseline and post-intervention surveys. Participant characteristics are summarized in ▶ Table 2. About half (51%) had been employed by the VA for at least 11 years. Most clinicians worked in the primary care clinic 4 to 5 days per week (59.5%) or 2 to 3 days per week (21.6%).

Table 2.

Characteristics of clinical staff responding to both pre- and post-intervention surveys

| Characteristic | % (n=39) |
|---|---|
| Site | |
| Brooklyn | 51 |
| Manhattan | 49 |
| Role | |
| PCP* | 74 |
| RNCM** | 26 |
| Gender | |
| Female | 65 |
| Male | 35 |
| Study Group | |
| Control | 31 |
| PM*** Support | 69 |
| Years at the VA**** | |
| 10 years or less | 49 |
| More than 10 years | 51 |

* Primary Care Provider (PCP)

** Nurse Care Manager (RNCM)

*** Panel Management (PM)

**** Veterans Administration (VA)

4.2 Quantitative Results

4.2.1 Information Literacy (Post-Intervention)

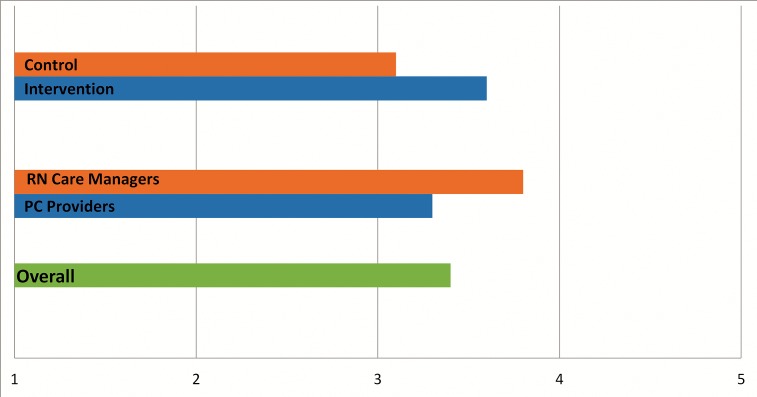

The information literacy scale (individual items listed in ▶ Table 1) was found to be reliable (Cronbach’s alpha=0.84), with the combined factor (comprising all 8 items) explaining 65% of the variance. Scores were modest overall, averaging 27.4 out of 40 (SD 6.5). Mean information literacy was similar for PCPs (M=26.4, SD=6.7) and RNCMs (M=30.5, SD=5.2, p=0.57 for difference), and for intervention (M=28.4, SD=6.5) and control groups (M=25.1, SD=6.2, p=0.12 for difference). In ▶ Figure 1, mean total scores were converted to Likert scale values to aid in interpretation. Converted scores were interpreted as modest.

Fig. 1.

Information Literacy Scale Mean Scores
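The conversion of total scores to Likert scale values is not described in detail. One plausible reading, assumed here for illustration only, is that each mean total was divided by the number of items (8) to give an average per-item response. The sketch applies that assumption to the group means reported in this section; the helper name `per_item_mean` is hypothetical.

```python
N_ITEMS = 8  # items in the information literacy scale

# Mean total scores reported in the Results (out of 40).
reported_means = {
    "All providers": 27.4,
    "PCPs": 26.4,
    "RNCMs": 30.5,
    "Intervention": 28.4,
    "Control": 25.1,
}


def per_item_mean(total_score: float, n_items: int = N_ITEMS) -> float:
    """Rescale a total score to the 5-point response scale by averaging over items."""
    return total_score / n_items


for group, total in reported_means.items():
    print(f"{group}: {per_item_mean(total):.2f} on the 5-point scale")
```

Under this assumption, the overall mean of 27.4 corresponds to roughly 3.4 per item, slightly above the scale midpoint of 3.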

4.2.2 PM Digital Tool Use

Overall reported use of the PM digital tools by participants increased from baseline to post-intervention. Reported frequency of use of the PC Almanac increased from 2.6 to 3.2, a mean increase of 0.5 (95% CI = 0.09, 0.94, p=0.02). Reported use of the PACT Compass increased from 2.3 to 3.2, a mean increase of 1.2 (95% CI = 0.70, 1.60, p<0.001). No differences were found between control and intervention arms in reported use of these information tools. At baseline, RNCMs and PCPs reported similar frequency of use but at post-intervention, RNCMs reported greater use of the PACT Compass (4.1 and 2.9 respectively, p=0.01) and PC Almanac (4.2 and 2.8 respectively, p=0.001) when compared to the PCPs.
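The pre/post comparisons above rest on paired t-tests. The sketch below shows how the mean change, its standard error, and the t statistic are obtained from paired responses, using only the Python standard library. The function name `paired_t` and the sample data are hypothetical; they are not the study’s responses.

```python
from math import sqrt
from statistics import mean, stdev
from typing import Sequence, Tuple


def paired_t(before: Sequence[float],
             after: Sequence[float]) -> Tuple[float, float, float, int]:
    """Return (mean difference, standard error, t statistic, degrees of freedom)."""
    if len(before) != len(after):
        raise ValueError("before and after must have the same length")
    if len(before) < 2:
        raise ValueError("need at least 2 pairs")
    # Work on within-respondent differences (after minus before).
    diffs = [a - b for b, a in zip(before, after)]
    n = len(diffs)
    mean_diff = mean(diffs)
    std_err = stdev(diffs) / sqrt(n)
    if std_err == 0:
        raise ValueError("differences do not vary; t is undefined")
    return mean_diff, std_err, mean_diff / std_err, n - 1


# Hypothetical 5-point usage ratings from four providers.
baseline = [2, 3, 2, 3]
post = [3, 4, 4, 3]
mean_diff, std_err, t_stat, df = paired_t(baseline, post)
print(f"mean change={mean_diff:.2f}, SE={std_err:.3f}, t={t_stat:.3f}, df={df}")
```

A 95% confidence interval is the mean difference plus or minus the critical t value for `df` degrees of freedom times the standard error; the critical value comes from the t distribution (for example via `scipy.stats.t.ppf`), which the standard library does not provide.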

4.2.3 Correlates of Information Literacy

Higher scores on the information literacy scale were significantly associated with higher self-reported use of the PC Almanac at baseline (r=0.55, p<0.001) but not with use of the PACT Compass (r=0.32, p=0.052) (see ▶ Table 3). Baseline PM self-efficacy (6-item scale) was not significantly correlated with information literacy (r=0.26, p=0.11); post-intervention PM self-efficacy, however, was (r=0.38, p=0.01). Information literacy was also correlated with post-intervention attitudes towards PM (r=0.35, p=0.018 for “PM is critical for improving the health of our patients”) and inversely correlated with agreement that “Outcomes at the panel level have little to do with my skills as a provider” (r=-0.46, p=0.002). Self-reported satisfaction with PM training was not significantly correlated with information literacy at baseline or post-intervention (p=0.063 and 0.25, respectively). Satisfaction with PACT implementation (3 items, Cronbach’s alpha=0.86) was correlated with information literacy (r=0.36, p=0.013). Finally, self-reported routine use of PM at baseline and post-intervention was associated with higher information literacy (p=0.006 and p<0.001, respectively).

Table 3.

Correlates of Information Literacy

| Variable | Information Literacy Pearson’s r (p) |
|---|---|
| Baseline Attitudes Toward Panel Management | |
| I routinely use the PACT* Compass** to see how my team is doing | 0.32 (0.052) |
| I routinely use the PC Almanac*** to see how our panel of patients is doing | 0.55 (<0.001) |
| PM**** is critical for improving the health of our patients | 0.32 (0.053) |
| Outcomes at the panel level have little to do with my skills as a provider | -0.10 (0.56) |
| I have sufficient training in PM | 0.30 (0.063) |
| I routinely use PM | 0.44 (0.006) |
| Panel Management Self-Efficacy (6-item scale) | 0.26 (0.11) |
| Post-Intervention Attitudes Toward Panel Management | |
| Satisfaction with PACT (3-item scale) | 0.36 (0.013) |
| PM is critical for improving the health of our patients | 0.35 (0.018) |
| Outcomes at the panel level have little to do with my skills as a provider | -0.46 (0.002) |
| I routinely use PM | 0.57 (<0.001) |
| I have sufficient training in PM | 0.17 (0.25) |
| Panel Management Self-Efficacy (6-item scale) | 0.38 (0.01) |

* PACT: Patient Aligned Care Teams, VA’s Patient Centered Medical Home model

** The PACT Compass allows comparisons of local, regional, and national benchmarks of quality measures

*** The Primary Care (PC) Almanac enables providers to view and filter their patients with chronic conditions

**** Panel Management (PM)
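For readers less familiar with the statistic in Table 3, the sketch below computes Pearson’s r from two sets of scores and the t statistic commonly used to test it, t = r × sqrt((n − 2) / (1 − r²)). It is a standard-library illustration, not the SPSS procedure used in the study; the function names and the sample data are hypothetical.

```python
from math import sqrt
from statistics import mean
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length of at least 2")
    mean_x, mean_y = mean(x), mean(y)
    # Sums of cross-products and squared deviations from the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined when either variable is constant")
    return sxy / sqrt(sxx * syy)


def t_statistic_for_r(r: float, n: int) -> float:
    """t statistic (n - 2 degrees of freedom) for testing r against zero."""
    if n < 3 or abs(r) >= 1:
        raise ValueError("need n >= 3 and |r| < 1")
    return r * sqrt((n - 2) / (1 - r * r))


# Hypothetical data: information literacy totals and 5-point tool-use ratings.
literacy = [22, 27, 31, 35]
tool_use = [2, 4, 3, 5]
r = pearson_r(literacy, tool_use)
print(f"r={r:.2f}, t={t_statistic_for_r(r, len(literacy)):.2f}")
```

The two-tailed p-values in Table 3 follow from comparing such a t statistic against the t distribution; the number of respondents contributing to each correlation is not stated per row, so the table’s p-values cannot be reproduced exactly from r alone.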

4.3 Qualitative Results

Interviews with PACT providers and PMAs highlighted three key themes associated with information literacy: 1) barriers to accessing and managing population data (including time, skills, and role management); 2) interpretation and application of population data; and 3) accuracy of population data (▶ Table 4). These themes are further highlighted with quotes from study participants:

Table 4.

Qualitative Themes Associated with Information Literacy

| Theme | # of PCPs* or RNCMs** who discussed theme (N=10) | # of quotes by PCPs or RNCMs | # of PMAs*** who discussed theme (N=6) | # of quotes by PMAs |
|---|---|---|---|---|
| 1) Barriers to accessing and using population data | 10 | 48 | 6 | 27 |
| 2) Interpretation and application of population data | 5 | 14 | 5 | 10 |
| 3) Accuracy and completeness of population data | 4 | 5 | 6 | 21 |

* PCP: Primary Care Providers

** RNCM: Nurse Care Managers

*** PMA: Panel Management Assistants working with the intervention group teams

4.3.1 Barriers to Accessing and Using Population Data

All PACT providers and PMAs expressed concerns regarding access to and management of population data. PACT providers suggested assigning PMAs the task of retrieving and compiling data from multiple sources and formatting it into simple, understandable lists for the clinical team. These sentiments stemmed from concerns held by the PACT providers that data management would require skills and time they are not afforded:

“[Panel Management is] new. It’s overwhelming. Like I knew the patients and there’s just a lot of data coming at you all the time. So if I take a day off…I have to spend an hour digging through every lab…x-ray…note…visit…” (PCP, Control Arm)

PMAs confirmed the need for this role, stating that they were often assigned to be a liaison between patient information and PACT. They expressed concerns with a lack of time and skills to appropriately manage and present population data to their clinical teams. They reported difficulties accessing data from multiple systems and verifying data due to incomplete or inaccurate information:

“…Managing the smokers is really tough because there’s not a sound database that says ‘this person is a smoker’…chart review becomes so labor intensive. I have 500 smokers. Before (calling one)…I’m reviewing: When was the last time they were here? What are they…smoking? What recent…prescriptions are they taking? So before every phone call you have (to review each individual) chart…” (PMA, Intervention Arm)

4.3.2 Interpretation and Application of Population Data

PACT providers and PMAs identified challenges with applying population data to patient care. While some providers embraced the PM digital tools…

“…wow I didn’t realize that 15% of my patients have uncontrolled hypertension (and that) of those 15%, half of them missed their last appointment…it gets you to stop for a minute and think about things.” (PCP, Intervention Arm)

…others expressed discomfort with the PM digital tools:

“Nobody really told me about [the (PC) Almanac]. I’m used to nursing individuals, not statistics, so it’s kind of difficult in that respect. Like do you want your patients to know you? To trust you? Or do you just want his blood pressure to be within the normal range?” (RNCM, Intervention Arm)

4.3.3 Accuracy of Population Data

Finally, concerns were expressed regarding the accuracy of population data provided by the PM digital tools. Many PACT providers and all PMAs reported that the PC Almanac and PACT Compass tools were updated only monthly and often lacked the most recent health information for their panel patients. This presented challenges for managing patients who require more frequent monitoring. It also led to miscommunication of information across the team and to patients. To compensate for these obstacles, PMAs and RNCMs reported that they spent additional time confirming data via individual chart reviews.

Overall, PACT providers and PMAs both indicated that accessing, managing, and applying population data presented major challenges to established clinical workflows and expectations for how providers spend their time caring for patients. There was a general consensus that while the digital tools could be helpful, incorporating them successfully into panel management would require time and additional human assistance.

5. Discussion

As part of a larger trial examining the implementation of PM in primary care at the VA, we assessed the information literacy of practicing clinicians. Further, we explored the potential impact a PMA intervention might have on information literacy. Clinicians’ information literacy is rarely measured; prior studies focused on patient or medical trainee populations [30–36]. Therefore, this exploratory analysis may be the first to examine information literacy in a population of experienced, practicing clinicians. Although the study is exploratory and its findings should be generalized with caution, it suggests that measuring and addressing clinicians’ information literacy may play a significant role in the implementation and use of digital information tools, which are rapidly being deployed to enhance communication among care teams, improve health care outcomes, and reduce overall costs.

Based on self-reported use, perceptions, and application of PM data available through digital information tools, we found that clinicians’ information literacy was associated with greater use of digital PM tools post-intervention. This correlation is expected given that individuals with high information literacy would be facile users of information systems. While the PMA intervention did not lead to differences in information literacy, the support provided by the PMAs enabled providers, who reported feeling overwhelmed by the transition to PM, to be more comfortable with the digital PM tools by the end of the study period. This was especially true for RNCMs, who were significantly more likely to use digital PM tools post-intervention when compared to PCPs. This suggests that PMAs can play a role in care teams, specifically in the management and use of electronic health information. The association between PMAs and digital tool usage suggests that, in an era where clinical teams are becoming increasingly multidisciplinary and communicating with a growing array of complex digital tools, an information liaison may be essential to the care team. Such a role may be particularly useful in settings like the VA, where PM tools are deployed with limited training for end users and use is strongly encouraged, but not required, to advance an organizational agenda towards improved population health. Future research should explore the ways in which a PMA-like role can support care teams, whether it be a trained informatician or a member of the team who receives special training in informatics.

Results also suggest that the current generation of information tools may not be sufficient for optimizing PM. PMAs and PACT providers reported static reports to be insufficient for clinical use. To be useful, data and information on patient populations should be updated and available in real time. Yet providing clinicians access to view raw data or information (e.g., a line list of diabetic patients) is not sufficient. Simplistic attempts result in inefficient methods for retrieving the information necessary for clinical decisions. For example, one PCP stated that he must spend “an hour digging through every lab…x-ray…note…visit…” Interfaces for searching and displaying panel information could be more user-friendly, something echoed across health systems in prior research [13, 43, 44].

5.1 Limitations

There are several limitations in this study. Most instruments that measure information and computer literacies are designed for patients and almost none target clinicians’ competencies [33, 45, 46], so this study by its nature was exploratory. While our information literacy construct showed strong internal consistency, it requires further refinement and validation in other clinician populations. A larger sample of clinicians with more even distribution between PCPs and RNCMs would likely increase power and reliability. Furthermore, information literacy was only measured post-intervention and within a VA primary care setting, limiting interpretation and generalization of the findings. The scale would therefore benefit from an expanded look at the wider array of population health management practiced outside the VA. In addition, future studies should seek to establish objective measurements of information literacy and effectiveness of PM tool use, because self-reported questionnaires can be subjective, particularly when sample sizes are small, as in this study.

6. Conclusions

To be effective, clinicians must have the information literacy skills necessary to access, locate, analyze, and apply clinical information from EHRs. However, we know little about clinicians’ mastery of these skills. Our exploratory analysis is a first step towards a greater understanding of practicing clinicians’ information and computer competencies. Information literacy plays an important role in the adoption and use of electronic clinical systems. An informatics liaison integrated into the clinical team could provide crucial support and promote access, analysis, interpretation, and use of PM data and information. Future research should focus on refining measures of information literacy for practicing clinicians and further explore how these competencies support team-based care as well as the delivery of population health.

Acknowledgements

This publication is derived from work supported under grants from VA HSR&D (EDU 08–428 and CIN 13–416). The views expressed in this publication are those of the authors and do not necessarily reflect the position or policy of the Department of Veterans Affairs or the United States government.

Footnotes

Clinical Relevance Statement

Clinicians need information literacy skills to access, analyze, and apply clinical information from EHRs, yet we know little about clinicians’ mastery of these skills. An informatics liaison role integrated into the clinical team may provide support for greater adoption and use of data and information for managing panels of patients. While the results presented here are limited, they show promise for additional work to measure information literacy and better support population health using informatics tools and personnel within health systems.

Conflicts of Interest

Several authors (BED, KJB, SES, MDS) are employed at either the VA New York Harbor Healthcare Systems in New York, New York, or the Richard L. Roudebush Veterans Affairs Medical Center in Indianapolis, Indiana. As such, they receive at least a portion of their annual salary from the U.S. Department of Veterans Affairs.

Protection of Human Subjects

The study was performed in compliance with the World Medical Association Declaration of Helsinki on Ethical Principles for Medical Research Involving Human Subjects, and was reviewed by the Institutional Review Board and Research and Development Committee at the New York Harbor Healthcare System.

References

- 1. Singh H, Spitzmueller C, Petersen NJ, Sawhney MK, Sittig DF. Information overload and missed test results in electronic health record-based settings. JAMA internal medicine 2013; 173(8): 702-704.

- 2. Zeldes N, Baum N. Information overload in medical practice. The Journal of medical practice management : MPM 2011; 26(5): 314-316.

- 3. Jha AK, Burke MF, DesRoches C, Joshi MS, Kralovec PD, Campbell EG, Buntin MB. Progress toward meaningful use: hospitals’ adoption of electronic health records. The American journal of managed care 2011; 17(12 Spec No.): SP117-SP124.

- 4. Xierali IM, Hsiao CJ, Puffer JC, Green LA, Rinaldo JC, Bazemore AW, Burke MT, Phillips RL Jr. The rise of electronic health record adoption among family physicians. Annals of family medicine 2013; 11(1): 14-19.

- 5. Whipple EC, Dixon BE, McGowan JJ. Linking health information technology to patient safety and quality outcomes: a bibliometric analysis and review. Inform Health Soc Care 2013; 38(1): 1-14.

- 6. Black AD, Car J, Pagliari C, Anandan C, Cresswell K, Bokun T, McKinstry B, Procter R, Majeed A, Sheikh A. The impact of eHealth on the quality and safety of health care: a systematic overview. PLoS medicine 2011; 8(1): e1000387.

- 7. Chaudhry B, Wang J, Wu S, Maglione M, Mojica W, Roth E, Morton SC, Shekelle PG. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Annals of Internal Medicine 2006; 144(10): 742-752.

- 8. Nadkarni PM, Ohno-Machado L, Chapman WW. Natural language processing: an introduction. J Am Med Inform Assoc 2011; 18(5): 544-551.

- 9. Chapman WW, Nadkarni PM, Hirschman L, D’Avolio LW, Savova GK, Uzuner O. Overcoming barriers to NLP for clinical text: the role of shared tasks and the need for additional creative solutions. J Am Med Inform Assoc 2011; 18(5): 540-543.

- 10. Dixon BE, Jones JF, Grannis SJ. Infection preventionists’ awareness of and engagement in health information exchange to improve public health surveillance. American journal of infection control 2013; 41(9): 787-792.

- 11. Cusack CM, Hripcsak G, Bloomrosen M, Rosenbloom ST, Weaver CA, Wright A, Vawdrey DK, Walker J, Mamykina L. The future state of clinical data capture and documentation: a report from AMIA’s 2011 Policy Meeting. J Am Med Inform Assoc 2013; 20(1): 134-140.

- 12. Walji MF, Kalenderian E, Tran D, Kookal KK, Nguyen V, Tokede O, White JM, Vaderhobli R, Ramoni R, Stark PC, Kimmes NS, Schoonheim-Klein ME, Patel VL. Detection and characterization of usability problems in structured data entry interfaces in dentistry. Int J Med Inform 2013; 82(2): 128-138.

- 13. Minshall S. A review of healthcare information system usability & safety. Studies in health technology and informatics 2013; 183: 151-156.

- 14. Flanagan ME, Saleem JJ, Millitello LG, Russ AL, Doebbeling BN. Paper- and computer-based workarounds to electronic health record use at three benchmark institutions. J Am Med Inform Assoc 2013; 20(e1): e59-e66.

- 15. Saleem JJ, Flanagan ME, Russ AL, McMullen CK, Elli L, Russell SA, Bennett KJ, Matthias MS, Rehman SU, Schwartz MD, Frankel RM. You and me and the computer makes three: variations in exam room use of the electronic health record. J Am Med Inform Assoc 2014; 21(e1): e147-e151.

- 16.Frankel RM. Computers in the Examination Room. JAMA internal medicine 2016; 176(1): 128-129. [DOI] [PubMed] [Google Scholar]

- 17.Duke P, Frankel RM, Reis S. How to integrate the electronic health record and patient-centered communication into the medical visit: a skills-based approach. Teaching and learning in medicine 2013; 25(4): 358-365. [DOI] [PubMed] [Google Scholar]

- 18.Ratanawongsa N, Barton JL, Lyles CR, Wu M, Yelin EH, Martinez D, Schillinger D. Association Between Clinician Computer Use and Communication With Patients in Safety-Net Clinics. JAMA internal medicine 2016; 176(1): 125-128. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Grannis SJ, Egg J, Overhage JM. Reviewing and managing syndromic surveillance SaTScan datasets using an open source data visualization tool. AMIA Annu Symp Proc 2005: 967. [PMC free article] [PubMed] [Google Scholar]

- 20.Chui KK, Wenger JB, Cohen SA, Naumova EN. Visual analytics for epidemiologists: understanding the interactions between age, time, and disease with multi-panel graphs. PloS one 2011; 6(2): e14683. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Cinnamon J, Schuurman N. Injury surveillance in low-resource settings using Geospatial and Social Web technologies. Int J Health Geogr 2010; 9: 25. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Dixon BE, Jabour AM, Phillips EO, Marrero DG. An informatics approach to medication adherence assessment and improvement using clinical, billing, and patient-entered data. J Am Med Inform Assoc 2014; 21(3): 517-521. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Dolan JG, Veazie PJ, Russ AJ. Development and initial evaluation of a treatment decision dashboard. BMC Med Inform Decis Mak 2013; 13: 51. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Duke JD, Li X, Grannis SJ. Data visualization speeds review of potential adverse drug events in patients on multiple medications. Journal of biomedical informatics 2010; 43(2): 326-331. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Dixon BE, Alzeer AH, Phillips EO, Marrero DG. Integration of Provider, Pharmacy, and Patient-Reported Data to Improve Medication Adherence for Type 2 Diabetes: A Controlled Before-After Pilot Study. JMIR medical informatics 2016; 4(1): e4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Norman CD, Skinner HA. eHealth Literacy: Essential Skills for Consumer Health in a Networked World. J Med Internet Res 2006; 8(2): e9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Hajar Safahieh, Asemi A. Computer literacy skills of librarians: a case study of Isfahan University libraries, Iran. The Electronic Library 2010; 28(1): 89-99. [Google Scholar]

- 28.Dixon BE, Newlon CM. How do future nursing educators perceive informatics? Advancing the nursing informatics agenda through dialogue. J Prof Nurs 2010; 26(2): 82-89. [DOI] [PubMed] [Google Scholar]

- 29.Neuwirth EE, Schmittdiel JA, Tallman K, Bellows J. Understanding panel management: a comparative study of an emerging approach to population care. The Permanente journal 2007; 11(3):12–20. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Eichner J, Dullabh P. Accessible Health Information Technology (IT) for Populations with Limited Literacy: A Guide for Developers and Purchasers of Health IT. Rockville, MD: Agency for Healthcare Research and Quality; 2007. [Google Scholar]

- 31.Schaefer CT. Integrated review of health literacy interventions. Orthop Nurs 2008; 27(5): 302-317. [DOI] [PubMed] [Google Scholar]

- 32.Forster M. A phenomenographic investigation into Information Literacy in nursing practice – Preliminary findings and methodological issues. Nurse education today 2012. [DOI] [PubMed] [Google Scholar]

- 33.Rao G, Kanter SL. Physician numeracy as the basis for an evidence-based medicine curriculum. Academic medicine : journal of the Association of American Medical Colleges 2010; 85(11): 1794-1799. [DOI] [PubMed] [Google Scholar]

- 34.Moyer VA. What we don‘t know can hurt our patients: physician innumeracy and overuse of screening tests. Ann Intern Med 2012; 156(5): 392-393. [DOI] [PubMed] [Google Scholar]

- 35.Anderson BL, Obrecht NA, Chapman GB, Driscoll DA, Schulkin J. Physicians‘ communication of Down syndrome screening test results: the influence of physician numeracy. Genetics in medicine: official journal of the American College of Medical Genetics 2011; 13(8): 744-749. [DOI] [PubMed] [Google Scholar]

- 36.Anderson BL, Gigerenzer G, Parker S, Schulkin J. Statistical Literacy in Obstetricians and Gynecologists. Journal for healthcare quality : official publication of the National Association for Healthcare Quality; 2012. [DOI] [PubMed] [Google Scholar]

- 37.Rosland AM, Nelson K, Sun H, Dolan ED, Maynard C, Bryson C, Stark R, Shear JM, Kerr E, Fihn SD, Schectman G. The patient-centered medical home in the Veterans Health Administration. The American journal of managed care 2013; 19(7): e263-e272. [PubMed] [Google Scholar]

- 38.Klein S. The Veterans Health Administration: Implementing Patient-Centered Medical Homes in the Nation‘s Largest Integrated Delivery System. New York: The Commonwealth Fund 2011 September. Report No.: Pub; 1537. [Google Scholar]

- 39.Piette JD, Holtz B, Beard AJ, Blaum C, Greenstone CL, Krein SL, Tremblay A, Forman J, Kerr EA. Improving chronic illness care for veterans within the framework of the Patient-Centered Medical Home: experiences from the Ann Arbor Patient-Aligned Care Team Laboratory. Behav Med Pract Policy Res 2011; 1(4): 615-623. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Strauss SM, Jensen AE, Bennett K, Skursky N, Sherman SE, Schwartz MD. Clinicians‘ panel management self-efficacy to support their patients‘ smoking cessation and hypertension control needs. Transl Behav Med 2015; 5(1):68–76. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Schwartz MD, Jensen A, Wang B, Bennett K, Dembitzer A, Strauss S, Schoenthaler A, Gillespie C, Sherman S. Panel Management to Improve Smoking and Hypertension Outcomes by VA Primary Care Teams: A Cluster-Randomized Controlled Trial. J Gen Intern Med 2015; 30(7): 916-923. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Charmaz K. Qualitative interviewing and grounded theory analysis. Gubrium J, Holstein J, editors. Handbook of Interview Research: Context & Method. Thousand Oaks, CA: Sage; 2002: 675-694. [Google Scholar]

- 43.Middleton B, Bloomrosen M, Dente MA, Hashmat B, Koppel R, Overhage JM, Payne TH, Rosenbloom ST, Weaver C, Zhang J. Enhancing patient safety and quality of care by improving the usability of electronic health record systems: recommendations from AMIA. J Am Med Inform Assoc 2013; 20(e1): e2-e8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Holden RJ, Voida S, Savoy A, Jones JF, Kulanthaivel A. Human Factors Engineering and Human–Computer Interaction: Supporting User Performance and Experience. Finnell JT, Dixon BE, editors. Clinical Informatics Study Guide: Text and Review. Zurich: Springer International Publishing; 2016: 287–307. [Google Scholar]

- 45.Rao G. Physician numeracy: essential skills for practicing evidence-based medicine. Family medicine 2008; 40(5): 354-358. [PubMed] [Google Scholar]

- 46.Storie D, Campbell S. Determining the information literacy needs of a medical and dental faculty1. Journal of the Canadian Health Libraries Association 2012; 33(02):48–59. [Google Scholar]