Summary

Background

Strategies to ensure timely diagnostic evaluation of hematuria are needed to reduce delays in bladder cancer diagnosis.

Objective

To evaluate the performance of electronic trigger algorithms to detect delays in hematuria follow-up.

Methods

We developed a computerized trigger to detect delayed follow-up action on a urinalysis result with high-grade hematuria (>50 red blood cells/high powered field). The trigger scanned clinical data within a Department of Veterans Affairs (VA) national data repository to identify all patient records with hematuria, then excluded those where follow-up was unnecessary (e.g., terminal illness) or where typical follow-up action was detected (e.g., cystoscopy). We manually reviewed a randomly-selected sample of flagged records to confirm delays. We performed a similar analysis of records with hematuria that were marked as not delayed (non-triggered). We used review findings to calculate trigger performance.

Results

Of 310,331 patients seen between 1/1/2012 and 12/31/2014, the trigger identified 5,857 patients with high-grade hematuria, of whom 495 were flagged as having experienced a delay. On manual review of 400 randomly selected triggered records and 100 non-triggered records, the trigger achieved positive and negative predictive values of 58% and 97%, respectively.

Conclusions

Triggers offer a promising method to detect delays in care of patients with high-grade hematuria and warrant further evaluation in clinical practice as a means to reduce delays in bladder cancer diagnosis.

Keywords: Electronic health records, medical informatics, urologic neoplasms, hematuria, delayed diagnosis, triggers, monitoring and surveillance, data mining

1. Introduction

Bladder cancer is the ninth most common cancer worldwide, contributing to over 165,000 deaths per year [1]. Prompt diagnosis and treatment are critical to preventing progression of disease and improving patient survival [2–4]. Despite this, many patients experience significant delays in diagnosis [5]. Recent research has shown that among patients who present with symptoms suggestive of bladder cancer, 18% required three or more visits before a diagnosis was made [6], and women are particularly likely to experience delays in care resulting in late stage at diagnosis [7–10]. Delays occur despite the presence of hematuria, a “red flag” suggestive of possible bladder or urinary tract cancer and where guidelines strongly recommend subsequent evaluation [11]. The causes of delays have been attributed to numerous factors [12, 13], including awareness of guidelines, variation in recommendations by different guidelines, low perceived yield of cystoscopy in patients with hematuria, time pressures, poor communication within and between clinical teams, and failures in patient adherence to prescribed plans [10, 14–23].

The rapidly growing use of electronic health records (EHRs) and the vast amounts of clinical data they collect and store may offer opportunities to detect and act on such delays in the diagnostic evaluation of hematuria. One method gaining traction in the study of patient safety is the use of triggers [24–27]. “Triggers” are algorithm-based computer programs designed to scan through vast amounts of electronic data to flag high-risk situations for further review. One early example of a trigger is an algorithm-based software program that identifies inpatient orders for naloxone to indicate potential adverse drug events from excessive narcotics administration [28, 29]. Application of triggers for detecting delayed action after an abnormal test result is relatively new [30–32], but shows promise in detecting missed opportunities for follow-up that would otherwise be too expensive or time-consuming to detect using consecutive or random chart reviews. Triggers have the potential to improve the timeliness of diagnostic evaluation after a cancer-related alarm feature is detected, and could prevent extended delays that allow the cancer to advance.

We previously evaluated triggers to identify delays in colorectal, lung, and prostate cancer and achieved predictive values useful in clinical practice [30, 32–34]. In this study, we expand on this work by developing and testing a trigger algorithm to detect delays in diagnostic evaluation of a urinalysis result with hematuria. The algorithm was applied on data contained within a nationwide Department of Veterans Affairs (VA) data warehouse.

2. Material and Methods

2.1 Setting and Participants

We developed and evaluated the algorithm within the VA’s national data warehouse hosted by the VA Informatics and Computing Infrastructure (VINCI) [35]. This database contains clinical data extracted from facility-level EHRs at all of the VA’s 152 medical centers and associated outpatient clinics. To facilitate chart review access for data verification, we limited evaluation of the algorithm to data from six Midwest regional hospitals and affiliated clinics. The local institutional review board and VA Research Office approved this study.

2.2 Trigger Development

We aimed to develop a trigger algorithm capable of identifying patients who required follow-up actions on “red flag” findings suggestive of bladder cancer, and thus were experiencing a delay in diagnostic evaluation. While this study used retrospective data to identify such cases, the intent was to perform initial testing of a trigger for future prospective use within a ‘population health’ approach that could identify patients overdue for follow-up, facilitate their re-entry into the diagnostic care pathway, and hence reduce delays in care. We developed the algorithm by performing literature reviews [11, 23, 36], gathering expert input from primary care providers and specialists (oncologists and urologists), and evaluating existing VA clinical follow-up pathways to develop a basic set of trigger criteria. The draft criteria were sent to all clinical experts for review and comment. Criteria were iteratively revised based on comments, and then resent to each clinical expert. Comments were shared with each clinician. Once each clinical expert agreed that no further changes were necessary, the trigger was considered finalized. The final algorithm was designed to identify all patients with a red flag, and then exclude patients who did not require action, either because follow-up was not needed (e.g., due to terminal illness) or because appropriate action had already occurred.

Because hematuria is commonly associated with delayed follow-up [10], we chose this as the red flag for the algorithm. We specifically limited this definition to >50 red blood cells per high-powered field based on work by Loo et al. [36] suggesting higher risk for cancer in both men and women at or above these levels. Among all unique patients with hematuria, we applied the following “clinical exclusion criteria” to electronically exclude patients not requiring follow-up action: previously diagnosed bladder cancer, terminal illness or palliative care, previously performed cystoscopy, stones or a urologic procedure known to cause hematuria during the past 3 months, active urinary tract infection within 7 days, or age <35 years, for which the likelihood of cancer is low [23]. To electronically exclude patients who received the usual, expected actions on hematuria, we created “expected follow-up criteria” to account for their completed follow-up. Because published guidelines offer variable recommendations for hematuria follow-up, “expected follow-up criteria” included any of several actions that could occur in response to hematuria, including a completed urology visit, abdominal or pelvic imaging, cystoscopy, and renal or bladder biopsy or surgery. No consensus for expected time to follow-up exists; thus, based on discussion with clinician experts, we defined a delay as lack of diagnostic evaluation within 60 days. This window allows adequate time for patients to move through usual follow-up care pathways while limiting delays significant enough to impact clinical outcomes.

All criteria were operationalized by programming each of them into a structured query language-based computer search algorithm designed to identify the presence of structured data codes in the EHR. These included test results, International Classification of Diseases (ICD) codes, and Current Procedural Terminology (CPT) codes. Each individual criterion was first individually evaluated by one of two reviewers who performed chart reviews to determine whether the trigger algorithm appropriately extracted the intended information. Once individually validated, individual criteria were combined into a complete algorithm and reviewed (► Table 1 and supplementary online ► Appendix).

Table 1.

Bladder Cancer Trigger Criteria and Algorithm

| Timeframe | Criteria |
|---|---|
| Step 1: Identify all patient records with the following Red Flag Criteria | |
| Urinalysis with >50 red blood cells per high-powered field | |
| Step 2: Exclude all patient records with any of the following Clinical Exclusion Criteria | |
| Within 1 year prior to red flag | Bladder cancer diagnosis; terminal illness diagnosis; hospice or palliative care enrollment; cystoscopy performed |
| Within 3 months prior to red flag | Diagnosis of renal or ureteral stones; potentially hematuria-causing procedure (bladder or prostate biopsy, renal stone surgery, ureteral stent, bladder or renal surgery) |
| Within 2 days prior to or 7 days after red flag | Evidence of active UTI (urinalysis or culture consistent with UTI, or antibiotics ordered for UTI) |
| On date of red flag | Age <35; history of total cystectomy |
| Within 60 days after red flag | Deceased; terminal illness diagnosis; hospice or palliative care enrollment |
| Step 3: Exclude all patient records with any of the following Expected Follow-up Criteria | |
| Within 60 days after date of red flag | Urology visit completed; abdominal or pelvic imaging (CT, MRI, ultrasound); cystoscopy performed; renal or bladder biopsy; renal or bladder surgery |

UTI = Urinary Tract Infection, CT = Computed Tomography, MRI = Magnetic Resonance Imaging
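
The three steps in Table 1 can be read as a filter pipeline. The following is a minimal Python sketch of that logic, not the study's SQL implementation. All field names are hypothetical, events are represented as day offsets relative to the red flag date (negative means before), and the day counts used for "1 year" (365) and "3 months" (90) as well as the inclusive window boundaries are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List


# Hypothetical record layout. Each list holds day offsets of coded events
# relative to the red-flag urinalysis (negative = before, positive = after).
@dataclass
class HematuriaRecord:
    rbc_per_hpf: int
    age: int
    total_cystectomy: bool = False
    # Bladder cancer dx, terminal illness dx, hospice/palliative care, cystoscopy
    one_year_exclusions: List[int] = field(default_factory=list)
    # Renal/ureteral stones or a potentially hematuria-causing procedure
    three_month_exclusions: List[int] = field(default_factory=list)
    # Urinalysis/culture consistent with UTI, or antibiotics ordered for UTI
    uti_evidence: List[int] = field(default_factory=list)
    # Death, terminal illness dx, hospice/palliative care after the red flag
    end_of_life_events: List[int] = field(default_factory=list)
    # Urology visit, abdominal/pelvic imaging, cystoscopy, biopsy, surgery
    follow_up_actions: List[int] = field(default_factory=list)


def any_within(offsets: List[int], start: int, end: int) -> bool:
    """Return True if any event offset falls inside [start, end] days."""
    return any(start <= day <= end for day in offsets)


def classify(rec: HematuriaRecord) -> str:
    # Step 1: red flag criterion (>50 red blood cells per high-powered field)
    if rec.rbc_per_hpf <= 50:
        return "no_red_flag"

    # Step 2: clinical exclusion criteria (window lengths are assumptions)
    excluded = (
        rec.age < 35
        or rec.total_cystectomy
        or any_within(rec.one_year_exclusions, -365, 0)
        or any_within(rec.three_month_exclusions, -90, 0)
        or any_within(rec.uti_evidence, -2, 7)
        or any_within(rec.end_of_life_events, 0, 60)
    )
    if excluded:
        return "excluded"

    # Step 3: expected follow-up within 60 days after the red flag
    if any_within(rec.follow_up_actions, 0, 60):
        return "followed_up"

    # Remaining records are flagged as a possible delay
    return "trigger_positive"
```

For example, under these assumptions a 60-year-old patient with 80 red blood cells per high-powered field and a urology visit on day 75 would be classified as `trigger_positive`, because the visit falls outside the 60-day window.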

2.3 Sample Size

We calculated the sample size necessary to obtain a 95% confidence interval for positive predictive value (PPV) and negative predictive value (NPV) that was no wider than 10 percentage points (i.e., ±5 percentage points around the point estimate). Because the outcome was binomial (delay versus non-delay), the required sample size is largest at a proportion of 50%; we therefore used 50% as the point estimate in our PPV calculations and obtained a minimum sample size of 384, which we increased to 400 records to account for any incomplete records or ambiguous cases. Similarly, we used a conservative point estimate of 95% NPV since our pilot work showed a nearly 100% correlation between trigger-detected exclusion criteria or already-completed follow-up and their actual occurrence. This calculation yielded a sample size of 73, which we increased to 100.
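
The reported sample sizes are consistent with the standard normal-approximation formula for estimating a proportion, n = z²·p·(1 − p)/d², with z = 1.96 and a half-width d of 5 percentage points. The text does not state which formula was used, so the sketch below is an assumption that happens to reproduce the reported figures; the function name is illustrative.

```python
def sample_size_for_proportion(p: float, half_width: float, z: float = 1.96) -> float:
    """Normal-approximation sample size so that the CI is p +/- half_width."""
    return (z ** 2) * p * (1 - p) / (half_width ** 2)


# PPV: worst-case point estimate of 50%, half-width of 5 percentage points
n_ppv = sample_size_for_proportion(0.50, 0.05)

# NPV: conservative point estimate of 95%, same half-width
n_npv = sample_size_for_proportion(0.95, 0.05)

print(round(n_ppv), round(n_npv))
```

Rounded to the nearest whole record, these evaluate to 384 and 73, matching the minimum sample sizes given above.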

2.4 Trigger Evaluation

Once the algorithm was finalized and developed into a computerized trigger, we applied the trigger to a new two-year data set (i.e., data not used in development or verification) within the clinical data warehouse in order to obtain a list of records flagged by the trigger as having a delay in diagnostic evaluation (i.e., “trigger positive”). Additionally, we extracted records with hematuria, but where follow up was not needed (e.g., the record contained a clinical exclusion or the patient already received follow-up) (i.e., “trigger negatives”). Two clinician reviewers (VV and LW), each with extensive experience reviewing electronic records for patient safety research studies, performed chart reviews on randomly-selected trigger positive and trigger-negative records. Blinded to whether each record was flagged by the trigger as positive or negative for a delay, reviewers performed their own assessment of whether a delay in diagnostic evaluation had occurred within 60 days using a pilot-tested chart review form (► Figure 1). Instances where a documented plan to follow up the result beyond 60 days existed were labeled as “needs tracking”, and the record would be re-reviewed 30 days after the date of planned follow-up to determine whether the subsequent follow-up truly occurred. To enable calculation of interrater reliability across reviews, 20% of trigger positive and trigger negative records were reviewed by both reviewers. We assessed interrater agreement using Cohen’s kappa. We assessed trigger performance by calculating sensitivity, specificity, positive predictive value, and negative predictive value.
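
As an illustration of the interrater agreement statistic, Cohen's kappa compares the observed agreement between two reviewers with the agreement expected by chance from each reviewer's label frequencies. The sketch below uses made-up toy ratings, not study data, and the function name is hypothetical.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(rater_a: Sequence[str], rater_b: Sequence[str]) -> float:
    """Cohen's kappa for two raters labeling the same items."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("Both raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed proportion of items on which the raters agree
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal label frequencies
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    labels = set(counts_a) | set(counts_b)
    expected = sum(counts_a[label] * counts_b[label] for label in labels) / (n * n)
    if expected == 1.0:
        return 1.0  # degenerate case: both raters used a single identical label
    return (observed - expected) / (1 - expected)


# Toy ratings for ten doubly reviewed records (illustrative only)
reviewer_1 = ["delay", "delay", "no delay", "no delay", "delay",
              "no delay", "delay", "no delay", "no delay", "delay"]
reviewer_2 = ["no delay", "delay", "no delay", "no delay", "delay",
              "no delay", "delay", "no delay", "no delay", "delay"]

kappa = cohens_kappa(reviewer_1, reviewer_2)
```

With these toy ratings the reviewers agree on 9 of 10 records, chance agreement is 0.5, and kappa is therefore 0.8.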

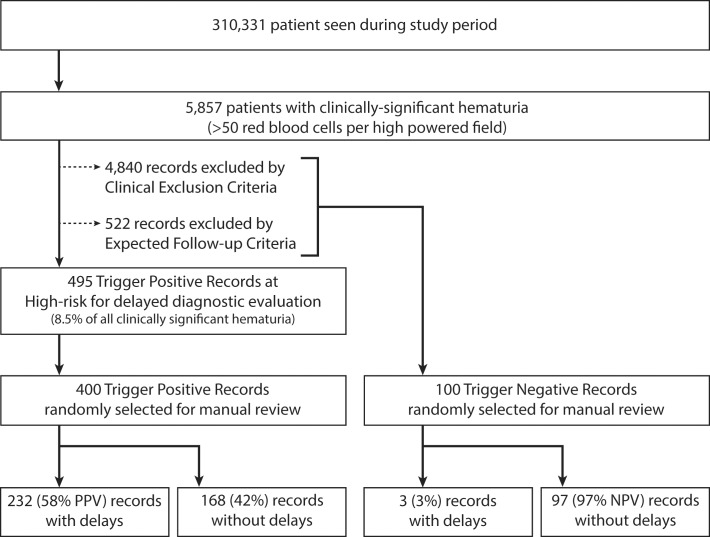

Fig. 1.

Study Flow

2.5 Data Analysis

We used SPSS (v22, IBM Corp, Armonk, NY) to analyze trigger performance and time to follow-up using Kaplan-Meier survival analysis. Fisher’s exact test was used to make comparisons between records with and without a delay. Reasons for lack of follow-up were analyzed and reported using descriptive statistics.

3. Results

We developed draft trigger criteria based on literature reviews and expert input, and operationalized them as a computerized trigger algorithm. We subsequently tested and refined the algorithm on a subset of data from 1/1/2010 to 12/31/2011 and performed 551 reviews, including 531 reviews of individual criteria and 20 reviews of the completed algorithm.

To test performance, we applied the finalized trigger to the records of all 310,331 patients seen between 1/1/2012–12/31/2014. The trigger identified 5,857 patients with elevated urine red blood cells of >50 cells per high power field. Of these, the trigger subsequently excluded 4,840 patients due to clinical exclusion criteria and 522 patients where it identified evidence of completed follow-up action (► Figure 1). This left 495 patients with delayed follow-up diagnostic evaluation for review. During manual reviews of 400 randomly-selected records (mean age (SD): 68.5 (13.6); 92% male), 232 records were found to truly contain a delay in care (true positives; PPV of 58% [95% CI: 53%-63%]), while 168 contained no delay (false positives). No records were identified with a documented plan to specifically pursue additional work-up beyond 60 days (i.e., needs tracking group). Interrater agreement was high, with a kappa of 0.90.
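
The record flow and the PPV estimate above can be checked with a few lines of arithmetic. The confidence interval method is not stated in the text; the sketch below assumes a simple Wald (normal-approximation) interval, which yields the same rounded bounds for the PPV. The function and variable names are illustrative.

```python
import math


def proportion_with_wald_ci(successes: int, n: int, z: float = 1.96):
    """Point estimate and Wald (normal-approximation) 95% CI for a proportion."""
    p = successes / n
    half_width = z * math.sqrt(p * (1 - p) / n)
    return p, max(0.0, p - half_width), min(1.0, p + half_width)


# Record flow reported in the Results
hematuria_records = 5857
clinical_exclusions = 4840
completed_follow_up = 522
trigger_positive = hematuria_records - clinical_exclusions - completed_follow_up

# PPV from manual review: 232 confirmed delays among 400 reviewed records
ppv, ci_low, ci_high = proportion_with_wald_ci(232, 400)

print(trigger_positive, f"{ppv:.0%}", f"{ci_low:.0%}", f"{ci_high:.0%}")
```

Under this assumption the calculation gives 495 trigger-positive records and a PPV of 58% with an interval of roughly 53% to 63%.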

Sixty-seven of 232 patients (28.9%) with delayed diagnostic evaluation received subsequent follow-up action within two years. Using a Kaplan-Meier survival analysis on data up to 2 years after the red flag, the estimated proportion who received follow-up (i.e., not “surviving”) was 29% ± 3%, and the time at which 25% of cases received follow-up was 434 days (note: less than half received follow-up, so median time to follow-up cannot be reported). Of all patients who experienced a delay, 14 were diagnosed with bladder or renal cancer within 2 years after the abnormal urinalysis.

In most (78%) records that were found to have delays, there was lack of any documentation that the result was reviewed. Other contributing factors for delays that could be discerned from documentation are listed in ► Table 2. Reasons for false positive records included hematuria proven to be from another non-cancer diagnosis (e.g., UTI or chronic hematuria previously evaluated), care received outside the facility that could only be detected in free-text progress notes, and documentation of patient’s declining further work-up (► Table 3).

Table 2.

Contributory Factors for Delays as Determined by Chart Documentation

| Reason | No. | % |
|---|---|---|
| No documented justification for the delay was identified | 182 | 78.4 |
| Follow up was planned, but not completed within 60 days | 14 | 6.0 |
| Treating physician failed to follow guidelines and only repeated urinalysis | 13 | 5.6 |
| Follow up was scheduled, but the patient did not show up to the appointment | 8 | 3.4 |
| Patient sought care at an outside facility, which was not completed or documented as completed until after 60 days | 6 | 2.6 |
| Follow up was ordered, but the patient canceled the follow-up appointment or patient later declined to follow up after 60 days | 4 | 1.7 |
| Other | 5 | 2.1 |
| Total | 232 | 100.0 |

Table 3.

Reasons for False Positive Trigger Results

| Reason | No. | % |
|---|---|---|
| The patient had a known non-malignant etiology for the hematuria | 68 | 40.5 |
| The patient received appropriate follow up at outside institution (including another VA facility) within 60 days | 48 | 28.6 |
| The patient declined follow-up, and this was documented within 60 days | 21 | 12.5 |
| The physician documented that the patient was menstruating when sample taken, and repeated the sample at a later time | 12 | 7.1 |
| The patient had a terminal illness or was in hospice, making follow-up unnecessary/inappropriate | 7 | 4.2 |
| The patient had a known recent history of bladder cancer within the past 1 year | 4 | 2.4 |
| The patient received appropriate follow up at the VA within 60 days, but follow-up was not identified by the trigger due to miscoded data | 1 | 0.6 |
| Other | 7 | 4.2 |
| Total | 168 | 100.0 |

Of 100 randomly selected trigger-negative records with a urine red blood cell count >50 cells per high powered field, 97 (NPV of 97%; 95% CI: 91%-99%) were identified by reviewers to truly have no delay. To calculate sensitivity and specificity of the trigger, we extrapolated the delay and no delay results to all 495 trigger positive and 5,362 trigger negative cases with hematuria (or 5,857 total hematuria cases). This resulted in 287 true positives, 208 false positives, 161 false negatives, and 5,201 true negatives, yielding sensitivity and specificity of 64% (95% CI: 59%-68%) and 96% (95% CI: 96%-97%), respectively.
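
The extrapolation described above can be reproduced as follows. This is a sketch of the arithmetic only: it assumes the sampled PPV and NPV are applied to the full trigger-positive and trigger-negative groups and rounded to whole records, which reproduces the reported counts; the function name is illustrative.

```python
def extrapolate_confusion(n_positive: int, n_negative: int, ppv: float, npv: float):
    """Scale sampled PPV/NPV to the full trigger-positive/negative groups."""
    true_pos = round(n_positive * ppv)
    false_pos = n_positive - true_pos
    true_neg = round(n_negative * npv)
    false_neg = n_negative - true_neg
    return true_pos, false_pos, false_neg, true_neg


# 495 trigger-positive and 5,362 trigger-negative hematuria records;
# PPV = 232/400 and NPV = 97/100 from manual review
tp, fp, fn, tn = extrapolate_confusion(495, 5362, 232 / 400, 97 / 100)

sensitivity = tp / (tp + fn)   # share of all delays that the trigger flagged
specificity = tn / (tn + fp)   # share of non-delays that the trigger left unflagged

print(tp, fp, fn, tn, f"{sensitivity:.0%}", f"{specificity:.0%}")
```

This yields 287 true positives, 208 false positives, 161 false negatives, and 5,201 true negatives, giving a sensitivity of about 64% and a specificity of about 96%.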

4. Comment

We developed a computerized algorithm to detect instances of delayed diagnostic evaluation in patients with hematuria and applied the algorithm to a large EHR data warehouse. We found a sensitivity and specificity of 64% and 96%, respectively, and a positive predictive value of 58%, indicating that more than one of every two patients identified by the trigger had a delay in follow-up of hematuria. The trigger's ability to identify a small number of at-risk patients among thousands at this level of accuracy suggests potential value for clinical practice. For instance, when applied to an organization’s data repository, a single individual or team can receive a list of identified patients from multiple clinics, confirm presence/absence of delays, and ensure that follow-up can occur for all the patients with confirmed delays. Our trigger’s ability to reduce the number of record reviews necessary by 91% (only 495 of 5,857 high-grade hematuria results required review) suggests that use of prospective triggers will be substantially more efficient in detection of delays compared to current non-selective methods, which often rely on reviewing all positive results. Thus, such triggers enable resources to focus on only those records that are truly at high risk for a delay.

As with prior work on this topic [30, 32–34], we found many instances of delays where no documented plan for subsequent follow-up was detected. This suggests that providers could be missing urinalysis results that return with hematuria, even when the red blood cell count is at a high enough level to warrant additional diagnostic evaluation for cancer. These situations contribute to preventable errors, poorer patient outcomes, and resultant litigation [37, 38]. In addition to other interventions that help support providers in ensuring appropriate and timely follow-up, trigger-based interventions offer one method to mitigate the negative impact of preventable delays in follow-up of test results. Such strategies have been recommended in recent policy reports, including the 2015 “Improving Diagnosis in Health Care” report from the National Academy of Medicine [39, 40]. The growing presence of data repositories makes mining this type of data increasingly feasible and cost-effective. Many of the false positive results arose because follow-up was documented only in the free-text portion of the EHR, which was not accessible to our trigger. Additional work will need to explore strategies to leverage this narrative data, such as use of natural language processing algorithms, to improve trigger performance. Furthermore, while 3% of reviewed trigger-negative records contained a delay that the trigger missed, this is likely far outweighed by the benefits of detecting the 232 records with delays in a timely manner, particularly given that 14 were subsequently diagnosed with bladder or renal cancer. We are unaware of any health care organizations using other non-trigger-based methods to identify such patients, suggesting that these methods are likely more useful than the status quo.

While our trigger was designed to serve as a “back-up system” for notifying clinicians when follow-up evaluation does not proceed as expected, the trigger development process and criteria identified may provide insight into better methods for development and validation of real-time clinical decision support alerts or at least help identify the information that providers need while processing test results. For example, if the presence of a terminal illness, recent prostate biopsy, or known bladder cancer diagnosis is helpful in making a decision about follow-up action, this information could also be made visible via the EHR interface to the provider initially receiving the result. Similarly, based on the expected follow-up criteria, EHRs could present order options (e.g., urology referrals, imaging orders) when they detect results with high-grade hematuria, allowing providers to quickly place orders for appropriate diagnostic evaluation [20, 41]. By incorporating the trigger algorithm’s logic into clinical decision support (e.g., providing subtle prompts at the time an abnormal result returns), such algorithms could further improve the timeliness of care.

Several limitations of our study require mention. First, triggers were applied to multiple institutions within the same health care system. While this may impact generalizability, triggers have been successfully applied at other institutions [30] and the triggers presented largely rely on standardized structured data, like CPT codes, suggesting they may be effective at a variety of institutions or in the growing presence of community-wide health information exchanges. Second, depending on the institution, red blood cells on a urinalysis are sometimes reported as a range instead of a specific number. Thus, our triggers were not always able to extract only results with >50 cells per high-powered field. However, this affected only a minority of cases, and we compensated by including results where the range crossed 50. Third, confirmation of action was identified via chart reviews, which may or may not reflect the care actually delivered, or the rationale for inaction. However, prior work has shown good correlation between provider awareness and documentation [42]. Fourth, the clinical logic developed for this trigger, based on expert clinical input, is but one example set of criteria that could have been selected. Future researchers are encouraged to explore use of additional clinical data and particularly the use of natural language processing techniques. Natural language processing has the potential to allow the computer algorithm to “understand” many of the confounding factors that we were not able to use in our algorithm. Finally, the study was not designed to evaluate impact on long-term clinical outcomes or personnel time necessary to implement a trigger-based intervention. However, we were able to detect 14 instances where cancer was subsequently diagnosed within two years and could have received action sooner. Additionally, prior work using trigger-based interventions suggests only a nominal time commitment is necessary to significantly improve time to follow-up for patients experiencing delays, and this study serves as a basis for future research in this area [30].

5. Conclusions

We developed and tested an algorithm to identify patients at risk for delayed diagnostic evaluation after lab findings of high-grade hematuria and found a performance level conducive for future application in clinical practice. Such triggers may serve as a resource for clinicians, informaticians, and patient safety professionals to help reduce delays in cancer care.

Key Definitions for Abbreviations

- CPT: Current Procedural Terminology

- EHR: electronic health record

- ICD: International Classification of Diseases

- NPV: negative predictive value

- PPV: positive predictive value

- VA: Department of Veterans Affairs

- VINCI: VA Informatics and Computing Infrastructure

Acknowledgements

The authors would like to thank Jeremy Shelton for his efforts in developing the bladder cancer trigger.

Funding Statement

This project is funded by a Veterans Affairs Health Services Research and Development CREATE grant (CRE-12–033) and partially funded by the Houston VA HSR&D Center for Innovations in Quality, Effectiveness and Safety (CIN 13–413). Dr. Murphy is additionally funded by an Agency for Healthcare Research & Quality Mentored Career Development Award (K08-HS022901) and Dr. Singh is additionally supported by the VA Health Services Research and Development Service (CRE 12–033; Presidential Early Career Award for Scientists and Engineers USA 14–274), the VA National Center for Patient Safety and the Agency for Health Care Research and Quality (R01HS022087). These funding sources had no role in the design and conduct of the study; collection, management, analysis, and interpretation of the data; and preparation, review, or approval of the manuscript.

Footnotes

Clinical Relevance Statement

Computerized triggers effectively detect delays in hematuria evaluation with performance levels sufficient for clinical application and allow more efficient detection of delays as compared to current non-selective methods. Such triggers may serve as a resource for clinicians, informaticians and patient safety professionals to help reduce delays in cancer care.

Conflict of Interest

The authors declare that they have no conflicts of interest in the research.

Protection of Human and Animal Subjects

The study was performed in compliance with the World Medical Association Declaration of Helsinki on Ethical Principles for Medical Research Involving Human Subjects. The protocol was reviewed by the Baylor College of Medicine Institutional Review Board (Protocol H-30995) and the Michael E. DeBakey Veterans Affairs Medical Center Research Office.

References

- 1.Ferlay J, Soerjomataram I, Dikshit R, Eser S, Mathers C, Rebelo M, Parkin DM, Forman D, Bray F. Cancer incidence and mortality worldwide: sources, methods and major patterns in GLOBOCAN 2012. Int J Cancer 2015; 136(5): E359-E386. [DOI] [PubMed] [Google Scholar]

- 2.Hollenbeck BK, Dunn RL, Ye Z, Hollingsworth JM, Skolarus TA, Kim SP, Montie JE, Lee CT, Wood DP, Miller DC. Delays in diagnosis and bladder cancer mortality. Cancer 2010; 116(22):5235–5242. [DOI] [PubMed] [Google Scholar]

- 3.Mahmud SM, Fong B, Fahmy N, Tanguay S, Aprikian AG. Effect of Preoperative Delay on Survival in Patients With Bladder Cancer Undergoing Cystectomy in Quebec: A Population Based Study. J Urol 2006; 175(1):78–83. [DOI] [PubMed] [Google Scholar]

- 4.Neal RD, Tharmanathan P, France B, Din NU, Cotton S, Fallon-Ferguson J, Hamilton W, Hendry A, Hendry M, Lewis R, Macleod U, Mitchell ED, Pickett M, Rai T, Shaw K, Stuart N, Tørring ML, Wilkinson C, Williams B, Williams N, Emery J. Is increased time to diagnosis and treatment in symptomatic cancer associated with poorer outcomes? Systematic review. Br J Cancer 2015; 112(s1):S92–S107. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Bergman J, Neuhausen K, Chamie K, Scales CD, Carter S, Kwan L, Lerman SE, Aronson W, Litwin MS. Building a Medical Neighborhood in the Safety Net: An Innovative Technology Improves Hematuria Workups. Urology 2013; 82(6):1277–1282. [DOI] [PubMed] [Google Scholar]

- 6.Lyratzopoulos G, Neal RD, Barbiere JM, Rubin GP, Abel GA. Variation in number of general practitioner consultations before hospital referral for cancer: findings from the 2010 National Cancer Patient Experience Survey in England. Lancet Oncol 2012; 13(4):353–365. [DOI] [PubMed] [Google Scholar]

- 7.Lyratzopoulos G, Abel GA, McPhail S, Neal RD, Rubin GP. Gender inequalities in the promptness of diagnosis of bladder and renal cancer after symptomatic presentation: evidence from secondary analysis of an English primary care audit survey. BMJ Open 2013; 3(6): e002861. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Dobruch J, Daneshmand S, Fisch M, Lotan Y, Noon AP, Resnick MJ, Shariat SF, Zlotta AR, Boorjian SA. Gender and Bladder Cancer: A Collaborative Review of Etiology, Biology, and Outcomes. Eur Urol 2016; 69(2):300–310. [DOI] [PubMed] [Google Scholar]

- 9.Cohn JA, Vekhter B, Lyttle C, Steinberg GD, Large MC. Sex disparities in diagnosis of bladder cancer after initial presentation with hematuria: a nationwide claims-based investigation. Cancer 2014; 120(4):555–561. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Nieder AM, Lotan Y, Nuss GR, Langston JP, Vyas S, Manoharan M, Soloway MS. Are patients with hematuria appropriately referred to Urology? A multi-institutional questionnaire based survey. Urol Oncol Semin Orig Investig 2010; 28(5):500–503. [DOI] [PubMed] [Google Scholar]

- 11.Davis R, Jones JS, Barocas DA, Castle EP, Lang EK, Leveillee RJ, Messing EM, Miller SD, Peterson AC, Turk TMT, Weitzel W. Diagnosis, evaluation and follow-up of asymptomatic microhematuria (AMH) in adults: AUA guideline. J Urol 2012; 188(s6):2473–2481. [DOI] [PubMed] [Google Scholar]

- 12.Sittig DF, Singh H. A new sociotechnical model for studying health information technology in complex adaptive healthcare systems. Qual Saf Health Care 2010; 19(s3): i68-i74. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Lyratzopoulos G, Vedsted P, Singh H. Understanding missed opportunities for more timely diagnosis of cancer in symptomatic patients after presentation. Br J Cancer 2015; 112(s1): S84-S91. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Tarkan S, Plaisant C, Shneiderman B, Hettinger AZ. Reducing Missed Laboratory Results: Defining Temporal Responsibility, Generating User Interfaces for Test Process Tracking, and Retrospective Analyses to Identify Problems. AMIA Annu Symp Proc 2011; 1382–1391. [PMC free article] [PubMed] [Google Scholar]

- 15.Månsson Å, Anderson H, Colleen S. Time Lag to Diagnosis of Bladder Cancer–Influence of Psychosocial Parameters and Level of Health-Care Provision. Scand J Urol Nephrol 1993; 27(3):363–369. [DOI] [PubMed] [Google Scholar]

- 16.Chen EH, Bodenheimer T. Improving Population Health Through Team-Based Panel Management: Comment on “Electronic Medical Record Reminders and Panel Management to Improve Primary Care of Elderly Patients.” Arch Intern Med 2011; 171(17):1558–1559. [DOI] [PubMed] [Google Scholar]

- 17.Murphy DR, Reis B, Kadiyala H, Hirani K, Sittig DF, Khan MM, Singh H. Electronic Health Record-Based Messages to Primary Care Providers: Valuable Information or Just Noise? Arch Intern Med 2012; 172(3): 283. [DOI] [PubMed] [Google Scholar]

- 18.Murphy DR, Reis B, Sittig DF, Singh H. Notifications received by primary care practitioners in electronic health records: a taxonomy and time analysis. Am J Med 2012; 125(2): 209.e1-7. [DOI] [PubMed] [Google Scholar]

- 19.Singh H, Spitzmueller C, Petersen NJ, Sawhney MK, Smith MW, Murphy DR, Espadas D, Laxmisan A, Sittig DF. Primary care practitioners’ views on test result management in EHR-enabled health systems: a national survey. J Am Med Inform Assoc 2013; 20(4):727–735. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Smith M, Murphy D, Laxmisan A, Sittig D, Reis B, Esquivel A, Singh H. Developing Software to “Track and Catch” Missed Follow-up of Abnormal Test Results in a Complex Sociotechnical Environment. Appl Clin Inform 2013; 4(3):359–375.

- 21.Schmidt-Hansen M, Berendse S, Hamilton W. The association between symptoms and bladder or renal tract cancer in primary care: a systematic review. Br J Gen Pract 2015; 65(640):e769–e775.

- 22.Murphy DR, Meyer AD, Russo E, Sittig DF, Wei L, Singh H. The Burden of Inbox Notifications in Commercial Electronic Health Records. JAMA Intern Med 2016; 176(4):559–560.

- 23.Nielsen M, Qaseem A; High Value Care Task Force of the American College of Physicians. Hematuria as a Marker of Occult Urinary Tract Cancer: Advice for High-Value Care From the American College of Physicians. Ann Intern Med 2016; 164(7):488–497.

- 24.Classen DC, Resar R, Griffin F, Federico F, Frankel T, Kimmel N, Whittington JC, Frankel A, Seger A, James BC. “Global Trigger Tool” Shows That Adverse Events In Hospitals May Be Ten Times Greater Than Previously Measured. Health Aff (Millwood) 2011; 30(4):581–589.

- 25.de Wet C, Bowie P. Screening electronic patient records to detect preventable harm: a trigger tool for primary care. Qual Prim Care 2011; 19(2):115–125.

- 26.Kaafarani HMA, Rosen AK, Nebeker JR, Shimada S, Mull HJ, Rivard PE, Savitz L, Helwig A, Shin MH, Itani KMF. Development of trigger tools for surveillance of adverse events in ambulatory surgery. Qual Saf Health Care 2010; 19(5):425–429.

- 27.Rosen AK, Mull HJ, Kaafarani H, Nebeker J, Shimada S, Helwig A, Nordberg B, Long B, Savitz LA, Shanahan CW, Itani K. Applying trigger tools to detect adverse events associated with outpatient surgery. J Patient Saf 2011; 7(1):45–59.

- 28.Griffin FA, Resar RK. IHI Global Trigger Tool for Measuring Adverse Events (Second Edition). Cambridge, MA: Institute for Healthcare Improvement; 2009. (IHI Innovation Series white paper). Available from: http://www.ihi.org/resources/pages/ihiwhitepapers/ihiglobaltriggertoolwhitepaper.aspx

- 29.Rozich J, Haraden C, Resar R. Adverse drug event trigger tool: a practical methodology for measuring medication related harm. Qual Saf Health Care 2003; 12(3):194–200.

- 30.Murphy DR, Wu L, Thomas EJ, Forjuoh SN, Meyer AND, Singh H. Electronic Trigger-Based Intervention to Reduce Delays in Diagnostic Evaluation for Cancer: A Cluster Randomized Controlled Trial. J Clin Oncol 2015; 33(31):3560–3567.

- 31.Kidney E, Berkman L, Macherianakis A, Morton D, Dowswell G, Hamilton W, Ryan R, Awbery H, Greenfield S, Marshall T. Preliminary results of a feasibility study of the use of information technology for identification of suspected colorectal cancer in primary care: the CREDIBLE study. Br J Cancer 2015; 112(s1):S70–S76.

- 32.Murphy DR, Laxmisan A, Reis BA, Thomas EJ, Esquivel A, Forjuoh SN, Parikh R, Khan MM, Singh H. Electronic health record-based triggers to detect potential delays in cancer diagnosis. BMJ Qual Saf 2014; 23(1):8–16.

- 33.Murphy DR, Thomas EJ, Meyer AND, Singh H. Development and Validation of Electronic Health Record-based Triggers to Detect Delays in Follow-up of Abnormal Lung Imaging Findings. Radiology 2015; 277(1):81–87.

- 34.Murphy DR, Meyer AND, Bhise V, Russo E, Sittig DF, Wei L, Wu L, Singh H. Computerized Triggers of Big Data to Detect Delays in Follow-up of Chest Imaging Results. Chest 2016; 150(3):613–620.

- 35.Fihn SD, Francis J, Clancy C, Nielson C, Nelson K, Rumsfeld J, Cullen T, Bates J, Graham GL. Insights from advanced analytics at the Veterans Health Administration. Health Aff (Millwood) 2014; 33(7):1203–1211.

- 36.Loo RK, Lieberman SF, Slezak JM, Landa HM, Mariani AJ, Nicolaisen G, Aspen AM, Jacobsen SJ. Stratifying Risk of Urinary Tract Malignant Tumors in Patients With Asymptomatic Microscopic Hematuria. Mayo Clin Proc 2013; 88(2):129–138.

- 37.Singh H, Sethi S, Raber M, Petersen LA. Errors in Cancer Diagnosis: Current Understanding and Future Directions. J Clin Oncol 2007; 25(31):5009–5018.

- 38.Gandhi TK, Kachalia A, Thomas EJ, Puopolo AL, Yoon C, Brennan TA, Studdert DM. Missed and Delayed Diagnoses in the Ambulatory Setting: A Study of Closed Malpractice Claims. Ann Intern Med 2006; 145(7):488–496.

- 39.Lorincz C, Drazen E, Sokol P, Neerukonda K, Metzger J, Toepp M, Maul L, Classen D, Wynia M. Research in Ambulatory Patient Safety 2000–2010: A 10-year review. Chicago, IL: American Medical Association; 2011.

- 40.Committee on Diagnostic Error in Health Care; Board on Health Care Services; Institute of Medicine; The National Academies of Sciences, Engineering, and Medicine. Improving Diagnosis in Health Care. Balogh EP, Miller BT, Ball JR, editors. Washington, DC: National Academies Press; 2015. [cited 2016 Aug 16]. Available from: http://www.ncbi.nlm.nih.gov/books/NBK338596/

- 41.Kawamoto K, Houlihan CA, Balas EA, Lobach DF. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ 2005; 330(7494):765.

- 42.Singh H, Arora HS, Vij MS, Rao R, Khan MM, Petersen LA. Communication Outcomes of Critical Imaging Results in a Computerized Notification System. J Am Med Inform Assoc 2007; 14(4):459–466.
