Abstract

As a result of the rapid development of smartphone-based indoor localization technology, location-based services in indoor spaces have become a topic of interest. However, to date, the rich data resulting from indoor localization and navigation applications have not been fully exploited, which is significant for trajectory correction and advanced indoor map information extraction. In this paper, an integrated location acquisition method utilizing activity recognition and semantic information extraction is proposed for indoor mobile localization. The location acquisition method combines pedestrian dead reckoning (PDR), human activity recognition (HAR) and landmarks to acquire accurate indoor localization information. Considering the problem of initial position determination, a hidden Markov model (HMM) is utilized to infer the user’s initial position. To provide an improved service for further applications, the landmarks are further assigned semantic descriptions by detecting the user’s activities. The experiments conducted in this study confirm that a high degree of accuracy for a user’s indoor location can be obtained. Furthermore, the semantic information of a user’s trajectories can be extracted, which is extremely useful for further research into indoor location applications.

Keywords: activity recognition, indoor localization, semantics

1. Introduction

Location-based services (LBSs) have been popular for many years. Although global navigation satellite systems (GNSSs) can provide good localization services outdoors, there is still no dominant indoor positioning technique [1]. Therefore, an alternative technology is required that can provide accurate and robust indoor localization and tracking. Moreover, the spatial structures of indoor spaces are usually more complex than the outdoor environment, and thus, distinctive information is needed to better describe locations for the LBS-based applications.

With the wide availability of smartphones, a large amount of research has been conducted in recent years targeting indoor localization. Most of the existing indoor localization technologies require additional infrastructure, such as ultra-wideband [2], laser scanning systems (LSSs), radiofrequency identification (RFID) [3] and Wi-Fi access points [4]. However, these approaches often require extensive labor and time. To solve this problem, pedestrian dead reckoning (PDR) has recently been proposed as one of the most promising technologies for indoor localization [5]. Differing from the above approaches, PDR uses the built-in smartphone inertial sensors (accelerometer, gyroscope and magnetometer) to estimate the position. However, PDR suffers from error accumulation when the travel time is long. To achieve improved localization results, a number of studies have been conducted under particular circumstances, but the applicability and accuracy are still limited.

In addition to the direct application in indoor localization, the built-in smartphone sensors can also be used to understand the user’s movements [6], as well as to identify the indoor environment. Sensing the implied location information about the user moving in the corresponding environment provides a new opportunity for indoor mobile localization. To exploit this underlying information, some studies have been presented based on human activity recognition (HAR) [7,8,9], which uses these sensors to identify user activity and then infers information about the context of the user’s location. Therefore, it is worth exploring how to use this information to assist with indoor localization.

Recently, semantic information in the indoor environment has received increased attention. In many cases, semantic information is as valuable as the location. For example, from a human cognition perspective, in comparing the position coordinates, it is more valuable to know if a location is a room, a corridor or stairs [10]. Furthermore, it is also more convenient for a user to obtain semantic information (e.g., “turn left”, “turn right”, “go upstairs”, “go downstairs” and “go into a room”) than information about a route. However, the extraction and description of the necessary semantic information remains an open challenge.

In this paper, a method that combines PDR, HAR and landmarks is developed to accurately determine indoor localization. The proposed method requires no additional devices or expensive labor, and the user trajectory can be corrected and displayed. In addition, to solve the initial position determination problem, a hidden Markov model (HMM) that considers the characteristics of the indoor environment is used to match the continuous trajectory. Furthermore, to describe the user’s indoor activities and trajectories, an indoor semantic landmark model is also constructed by detecting the user’s activities.

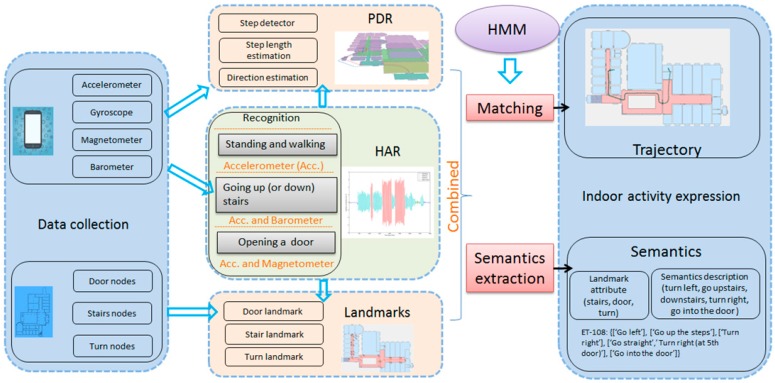

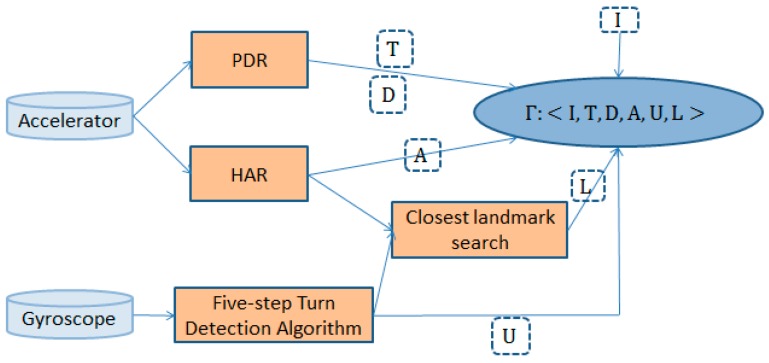

Figure 1 shows an overview of the proposed approach.

Figure 1.

The overall architecture. HAR, human activity recognition.

The remainder of the paper is organized as follows. The related works are briefly reviewed in Section 2. The primary methods are then introduced in Section 3. Section 4 presents the experimental process, and Section 5 discusses and analyzes the experimental results. Finally, the conclusions and recommendations for future work are presented in Section 6.

2. Related Works

Most of the existing indoor localization technologies require additional infrastructure or expensive labor and time. How to achieve reliable and accurate localization in indoor environments at a low cost is still a challenging task [11]. Compared to other methods of indoor localization, using the built-in smartphone sensors provides a more convenient and less expensive indoor localization method, which has the advantage of providing continuous localization across the whole indoor space [12]. Smartphone-based pedestrian dead reckoning (SmartPDR) [13] has been the subject of increased attention, and it is now considered a promising technology for low-cost and continuous indoor navigation [1]. However, a number of parameters, such as step length and walking direction, can easily affect PDR’s localization accuracy [14]. Furthermore, PDR suffers from error accumulation over time because of the low-cost sensors, and thus, it is necessary to combine it with other methods, such as Wi-Fi, indoor map assistance or landmark matching.

With Wi-Fi routers widely deployed in most buildings [15], many studies have combined Wi-Fi and PDR to complement the other’s drawbacks. An early attempt can be found in [16], in which a PDR-based particle filter was used to smooth Wi-Fi-based positioning results, and a Kalman filter based on Wi-Fi was used to correct the PDR errors. Furthermore, a barometer was used to identify upstairs and downstairs. Another study [17] used a Bayes filter to combine PDR and Wi-Fi fingerprinting, and PDR was used to update the motion model. The Wi-Fi fingerprinting method was used to correct the model. In order to improve the efficiency of Wi-Fi-based indoor localization, some new techniques have been recently adopted, such as the Light-Fidelity-assisted approach [18] and received signal strength estimation based on support vector regression [19].

Aside from Wi-Fi, indoor maps can also be used to reduce the accumulated error of PDR. A number of studies have used map information for location correction. In [20], the user’s location, stride length and direction were used as the state values of the particle filter. To achieve localization with less computational resources, a conditional random field (CRF)-based method was proposed in [21]. Maps as constraints were used in this work, and the Viterbi algorithm was used to generate a backtracked path. In [22], maps were also considered as constraints, and the impossible paths were eliminated when the user walks for a sufficient length. The data from the trajectory were then used to construct a Wi-Fi training set. A wider integration can be found in [23,24], where PDR, Wi-Fi and map information were combined to achieve pedestrian tracking in indoor environments. Particle filter-based approaches were used to match the maps in these approaches.

Like indoor maps, landmarks, which can be detected by the unique patterns of smartphone sensor data, can be used to correct the PDR trajectory [10]. Indoor landmarks detected by HAR provide a new opportunity for indoor localization [15]. Activities, such as going upstairs (or downstairs), turning or opening doors, can be treated as landmarks. In [25], an accelerometer was used to recognize standing, walking, stairs, elevators and escalators, achieving accurate recognition. In [15], Wi-Fi, PDR and landmarks were combined to provide a highly accurate localization system. In this work, a Kalman filter was used to combine the Wi-Fi- and PDR-based localization techniques with landmarks. Without relying on Wi-Fi infrastructure, the built-in accelerometer and magnetometer in a smartphone were used to record pedestrians’ walking patterns in [26], which were then matched to an indoor map.

Semantic information has also received attention from researchers. An integrated navigation system that considers both geometrics and semantics was presented in [27]. This work proposed a semantic model that can be used to describe indoor navigation paths. Similarly, a semantic description model derived from a spatial ontology was used to describe the basic elements of navigation paths in [28]. A human-centered semantic indoor navigation system was also proposed in [29]. In order to provide services involving human factors, the system used ontology-based knowledge representation and reasoning technologies.

Although many works have been done in this field, achieving an accurate and semantic-rich trajectory in mobile environments is still a challenging task [10,11]. To improve the accuracy of trajectory locations, the user’s motion information and the indoor map were also exploited to reduce the localization errors, such as in [30]. In their work, a sequence of navigation-related action were extracted from sensor data, and an HMM was used to match the user’s trajectory with the indoor map. Inspired by this idea, the semantic acquisition and utilization were further improved in the localization process in this work. The rich location-based semantic information was extracted based on the user’s activity recognition with HAR, and a semantic model was described and constructed for indoor navigation. The semantic model can be used to not only describe the user’s location, but also to improve the user’s localization efficiency. The simultaneous localization and semantic acquisition can be considered as a significant contribution of the proposed method.

3. Methods

3.1. Location Estimation and Activity Recognition

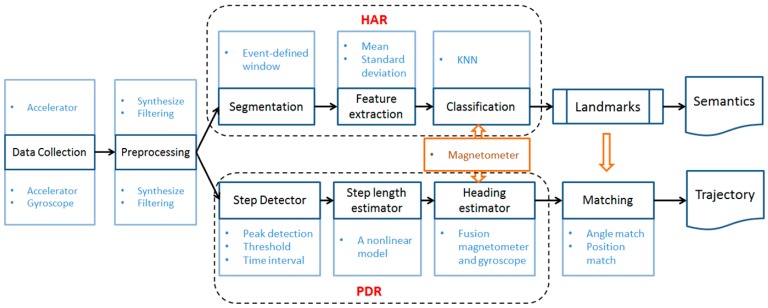

In this section, PDR is first introduced to estimate the user’s location, and then, landmarks are applied to correct the location. Next, with the help of multiple phone sensors, HAR is used to identify the user’s activity. Finally, an HMM is proposed in order to estimate the user’s initial position.

3.1.1. Landmark-Based PDR

The smartphone-based PDR system uses the inbuilt inertial and orientation sensors to track the user’s trajectory [31]. The main processes include step detection, step length estimation, direction estimation [20] and trajectory correction.

(a) Step detection:

Peak step detection [32], or the zero-crossing step detection algorithm [33], is the most frequently-used method to detect the user’s steps. To improve the robustness of the detection result, as in [34,35], the synthetic acceleration magnitude of a three-axis accelerometer is used. This is calculated as follows:

| (1) |

where a(t) is the synthetic acceleration reading at time t, and the constant component g represents the Earth’s gravity.

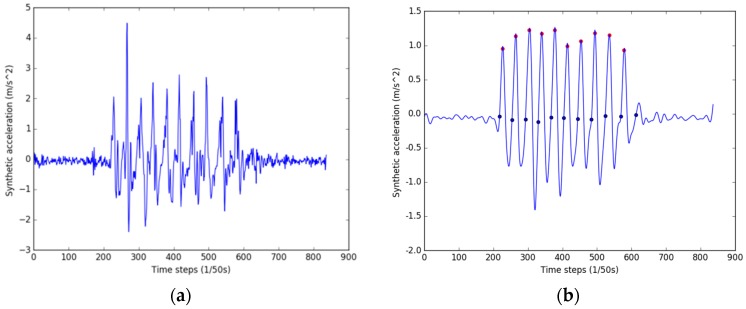

A low-pass filter is applied to smooth the data and to remove the spurious peaks, as shown in Figure 2. The step detection process is conducted according to the following conditions [10,35]:

is the local maximum and is larger than a given threshold .

The time between two consecutive detected peaks is greater than the minimum step period .

According to human walking posture, the start of a step is the zero-crossing point before the peak.

Figure 2.

Step detection. (a) Raw synthetic acceleration data; (b) filtered data and the step detection result.

Figure 2b shows the detected peaks marked with red circles, and the blue circles represent the start and end points of the steps.

(b) Step length estimation:

The length of a step depends on the physical features of the pedestrian (height, weight, age, health status, etc.) and the current state (walking speed and step frequency) [35]. Although step length varies from step to step, even in the same person, step length can be estimated by its corresponding acceleration. A nonlinear model [34] is used to effectively estimate step length.

| (2) |

where and are the maximum and minimum values of the synthetic acceleration during step k. The coefficient is the stride length parameter, and it can be corrected by the landmarks.

(c) Direction estimation:

Direction estimation is a challenging problem for PDR using a smartphone. The gyroscope and magnetometer in the smartphone are normally used to estimate the pedestrian’s walking direction [10]. The gyroscope and magnetometer obtain the steps’ direction during walking. An external environment can easily affect the magnetometer, which may lead to short-term heading estimation errors. Magnetic fields do not affect gyroscopes; however, gyroscopes do accumulate drift error over time [25]. In order to resolve each sensor’s drawbacks, both sensors are combined to enhance the direction estimation [13,34].

| (3) |

where and are the weighting parameters on the magnetometer’s estimated direction and the gyroscope’s estimated angle, respectively. The weight value changes according to the magnitude and correlation of the gyroscope and magnetometer.

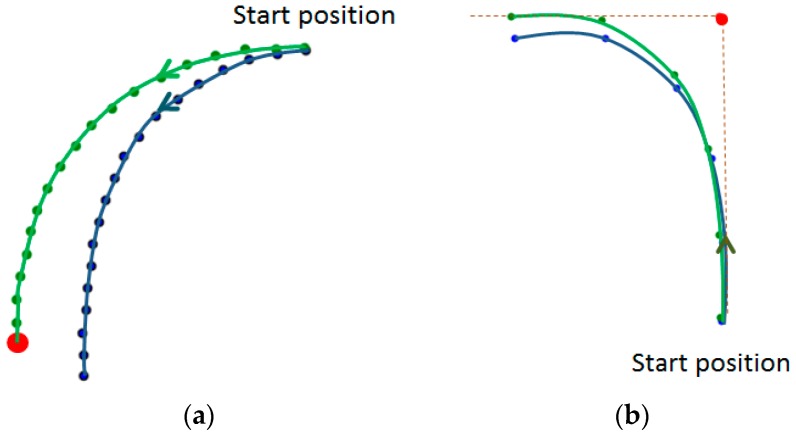

(d) Trajectory correction:

The raw pedestrian trajectory obtained through the above methods may encounter some bias because of the accumulated error of the PDR. To solve this problem, landmarks are used to recalibrate the errors. Depending on the location and angle of the landmarks, the step length and the angle in the raw trajectory are scaled to form a new corrected trajectory. In the process of a user’s indoor walking, two situations occur when passing a landmark. One is when a user goes straight through a landmark, as shown in Figure 3a. The other is when a user turns (see Figure 3b). As shown in Figure 3, the blue lines are the raw PDR trajectory, the green lines are the corrected trajectory and the red dots indicate the landmarks.

Figure 3.

Landmark corrections. (a) Go straight through a landmark; (b) Passing a landmark when the user turns.

3.1.2. Multiple Sensor-Assisted HAR

As in PDR, the synthetic three-axis accelerometer data are used as the base data in HAR. In addition, the smartphone’s magnetometer and barometer provide information about direction and height, respectively, which helps to improve the classification accuracy.

(a) Segmentation:

Three different windowing techniques have been used to divide the sensor data into smaller data segments: sliding windows, event-defined windows and activity-defined windows [36,37]. Since some specific events, such as the start and the end of a step length, are critical for pedestrian location estimation, the event-defined window approach is applied in our work. To use this approach, each step’s start and end points are detected, and then, the samples between them are regarded as a window. If no steps are detected over a period of time, the sliding window approach is used, in which two-second-long time windows with 50% overlap are selected [26,38].

(b) Feature extraction:

Two main types of data features are extracted from each time window. Time-domain features include the mean, max, min, standard deviation, variance and signal-magnitude area (SMA). Frequency-domain features include energy, entropy and time between peaks [8]. Two time-domain features are selected—the mean and standard deviation—because they are computationally inexpensive and sufficient to classify the activities.

(c) Classification:

A supervised learning method is adopted to infer user activities from the sensory data [7]. A number of different classification algorithms can be applied in HAR, such as decision tree (DT), k-nearest neighbor (KNN), support vector machine (SVM) and naive Bayes (NB) [8,9,38]. Due to the simplification and high accuracy of KNN, the KNN algorithm (see Algorithm 1) is selected to classify four activities: standing, going up (or down) stairs, walking and opening a door.

| Algorithm 1. KNN. | |

| Input: | Samples that need to be categorized: ; the known sample pairs: (, ) |

| Output: | Prediction classification: |

| 1: | for every sample in the dataset to be predicted do |

| 2: | calculate the distance between (, ) and the current sample |

| 3: | sort the distances in increasing order |

| 4: | select the k samples with the smallest distances to |

| 5: | find the majority class of the k samples |

| 6: | return the majority class as the prediction classification |

| 7: | end For |

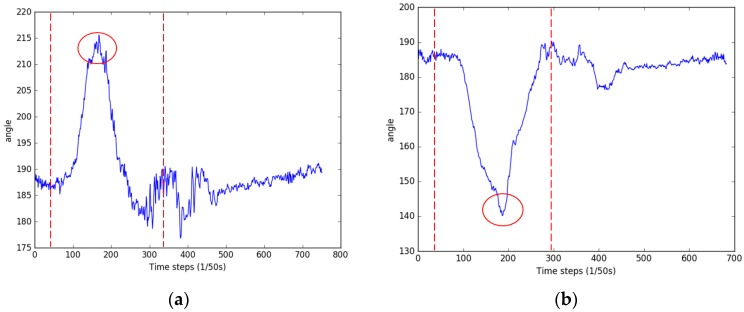

In order to improve the classification accuracy of indoor activities, a barometer is used to determine upstairs or downstairs and to locate the user’s floor. A magnetometer is used to assist in identifying the door-opening activity. Different ways of opening the door correspond to different magnetometer reactions. However, their similar performance patterns can be extracted by detecting the peak value change of the magnetometer in the sliding window. As shown in Figure 4, when the user opens a door, the magnetometer readings change significantly within a short time and then quickly return to the previous readings. Thus, the door-opening activities can be effectively identified.

Figure 4.

The magnetometer changes when a door is opened. (a) Opening a south-facing door, where the door handle is to the right; (b) opening a south-facing door, where the door handle is to the left.

3.1.3. The Hidden Markov Model

When the user’s initial location is unknown, HMM is used to match the motion sequence with indoor landmarks. PDR and HAR also provide useful information for matching and location estimation. As a widely-applied statistical model, HMM has a unique advantage in processing natural language, and it can capture the hidden states in a sequence of motion observations [30,36]. There are five basic elements in HMM: two sets of states (N, M) and three probability matrices (A, B, π).

Because of the unique indoor environment, HMM is presented as follows:

-

(1)

N represents the hidden states in the model, which can be transferred between each other. The hidden states in HMM are landmark nodes in the indoor environment, such as a door, stairs or a turning point.

-

(2)

M indicates the observations of each hidden state, which are the user’s direction selection (east, south, west and north) and the activity result from HAR.

-

(3)

A and B state the transition probability and the emission probability, respectively. The pedestrian moves indoors from one node to another, and when the direction of the current state is determined, the reachable nodes are reduced. In order to reduce the algorithm’s complexity, A and B are combined to give a transition probability set . [, , , ] represent the transition probabilities of different directions.

-

(4)

π is the distribution in the initial state. The magnetometer and barometer provide direction and altitude information when the user starts recording, which helps to reduce the number of candidate nodes in the initial environment. If the starting point is unknown, the same initial probability is given.

The Viterbi algorithm uses a recursive approach to find the most probable sequence of hidden states. It calculates the most probable path to a middle state, which achieves the maximum probability in the local trajectory. Choosing the state’s maximum local probability can determine the best global trajectory. However, in the indoor environment, using a partial maximum probability to obtain the global path is not appropriate, because the probability between hidden states could be zero, and a local best trajectory could become a dead trajectory in the next moment. In this study, the distance information from PDR and the activities information from HAR are combined with the Viterbi algorithm to compute the most likely trajectory. The improved Viterbi algorithm (Algorithm 2) is proposed as follows:

| Algorithm 2. Improved Viterbi algorithm. | |

| Input: | The proposed HMM tuples ; HAR classification results ; PDR distance information ; Initial direction of magnetometer ; Initial pressure of barometer ; is the distance threshold. |

| Output: | Prediction trajectory. |

| 1: | , /* Determine the initial orientation and floor |

| 2: | for from 1 to do |

| 3: | for each path pass through to do |

| 4: | if ((Distance(), )) - )<) and (>0) then /* Determine whether the distance between two landmark nodes coincides with the distance information estimated by PDR |

| 5: | Obtain the subset data |

| 6: | end if |

| 7: | end for |

| 8: | end for |

| 9: | for in do |

| 10: | Obtain the landmark data set |

| 11: | if match with HAR data then |

| 12: | Add this trajectory to the final trajectory data set |

| 13: | end for |

| 14 | return Max()/* Return the trajectory of the maximum probability |

With the determination of the user’s trajectory, the initial position can be obtained through the first landmark point and the PDR information.

3.2. Semantic Landmark Model

3.2.1. Trajectory Information Collection

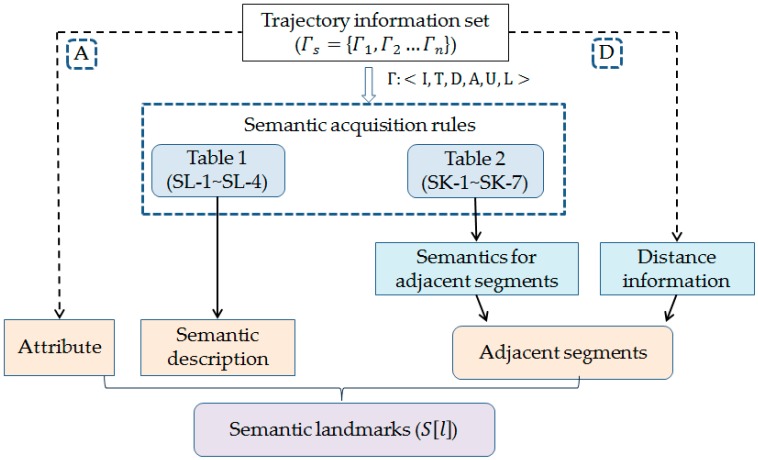

Definition 1. Trajectory information: A trajectory is defined as a six-tuple , where I is the ID of the trajectory, and and are the timestamp and position information, respectively, of each step. is the direction change list; is the activities information list; and L is the landmark list. Figure 5 shows the trajectory information collection process.

Figure 5.

The trajectory information collection process.

For example, if a user went from Entrance (ET) to Room 108, I is assigned ET–R108.

From PDR, the timestamp and xyz coordinate value for each step can be obtained and can be represented as:

| (4) |

| (5) |

where n denotes the number of steps detected.

Using the HAR method, the user’s activity information can be collected. Hence, can be given as follows:

| (6) |

The direction change list can be obtained by the gyroscope. It should be noted that we detected only large directional changes (>15°), and thus, walking along a smaller arc was not detected. Because most of the turns could be completed in less than five steps, a five-step turn detection method (see Algorithm 3) is proposed to determine the direction change activity.

| Algorithm 3. Five-step turn detection algorithm. | |

| Input: | Angle value sequence = [ |

| Output: | Direction change list . |

| 1: | Findpeaks()/* Find the local maximum sequence |

| 2: | for in do |

| 3: | if ( or ) and (>2) then |

| 4: | = |

| 5: | if () then |

| 6: | |

| 7: | else if () then |

| 8: | |

| 9: | else if () then |

| 10: | |

| 11: | else if () then |

| 12: | |

| 13: | else if () then |

| 14 | |

| 15: | end for |

| 16: | return |

For example, can be described as follows:

| (7) |

Since the landmarks are used as the key points in a trajectory, a landmark list can be used to denote a trajectory. According to the time series, a landmark list L is detected in a trajectory. Three types of landmarks (stairs, turns and doors) are added to list L according to the following rules:

If the going-up-(or -down)-stairs activity is detected, the nearest stairs landmark is added to L.

If direction change activity (see Algorithm 3) is detected, the nearest turn landmark is added to L.

If a door-opening activity is detected, the nearest door landmark is added to L.

3.2.2. Semantics Extraction

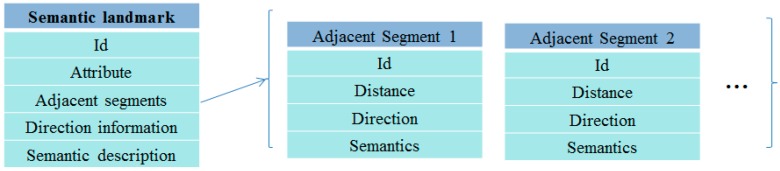

Definition 2. Semantic landmark: A semantic landmark consists of five parts: Id, attribute, adjacent segments, direction information and semantic description. Id is the landmark identifier. Attribute is one of the three types of landmarks: stairs, turn, or door. Adjacent segments contain the distance and semantic information between the current landmark and the next landmarks. Direction information and semantic description indicate the direction information and the semantic information when the user passes the landmark, respectively, as shown in Figure 6.

Figure 6.

Semantic landmark and adjacent segments. An adjacent segment consists of four parts: Id, distance, direction and semantic description. Id is the identifier of a segment. Distance represents the distance between the two landmarks that make up the segment. Direction represents the direction of the segment. Semantics indicates the semantic information that can be obtained.

A sequence of landmarks can denote a trajectory. Therefore, adding semantic information to the landmarks and their adjacent segments can describe the trajectory. The semantic description of trajectories is expressed as follows:

| (8) |

where indicates the semantics of trajectory , and is the number of landmarks for this trajectory. represents the semantics of landmark . denotes the semantics of the region from the start point to the first landmark point. Similarly, denotes the semantics of the region from the last landmark point to the end.

A semantic landmark or an adjacent segment can store multiple semantics and provides semantics based on the detected activity. According to the trajectory information , the semantic information can be obtained as shown in Table 1.

Table 1.

Semantics acquisition rules for landmarks.

| Id | Conditions (C) | Semantics (S) |

|---|---|---|

| SL-1 | = ‘Walking’, Detected () and Find () | ‘Go left’, ‘Go right’, ‘Turn left’, ‘Turn right’, ‘Turn around’ |

| SL-2 | = ‘Opening a door’, Find () and and | ‘Opening a door’ |

| SL-3 | = ‘Opening a door’, Find () and | ‘Go into the door’ |

| SL-4 | = ‘Opening a door’, Find () and | ‘Go out of the door’ |

SL indicates the identity of the rule. = {‘Standing’, ‘Walking’, ‘Going up (or down) stairs’, ‘Opening a door’}, = {‘Go left’, ‘Go right’, ‘Turn left’, ‘Turn right’, ‘Turn around’}. L is the landmark list: , are the turn, the stairs and the door landmarks. , and represent the current landmark, the previous landmark and the next landmark.

Detected () and Find () indicate that turn activity is detected and that a nearby turn landmark is found. If the user’s activity information is detected, but there are no corresponding landmarks nearby, this activity’s semantics is added to the corresponding adjacent segment, as shown in Table 2.

Table 2.

Semantics acquisition rules for landmark segments.

| Id | Conditions (C) | Semantics (S) |

|---|---|---|

| SK-1 | = ‘Standing’, Detected () | ‘Turn left’, ‘Turn right’, ‘Turn around’ |

| SK-2 | = ‘Walking’, Detected () and Unfound () | ‘Go left’, ‘Go right’, ‘Turn left’, ‘Turn right’, ‘Turn around’ |

| SK-3 | = ‘Walking’, Undetected (), > | ‘Go straight’ |

| SK-4 | = ‘Going up(or down) stairs’, Find () and < , < 5 s | ‘Go up the steps’ |

| SK-5 | = ‘ Going up(or down) stairs, Find () and > , < 5 s | ‘Go down the steps’ |

| SK-6 | = ‘ Going up(or down) stairs, Find () and < , > 5 s | ‘Go upstairs’ |

| SK-7 | = ‘ Going up(or down) stairs, Find () and > , > 5 s | ‘Go downstairs’ |

SK indicates the identity of the rule. = {‘Standing’, ‘Walking’, ‘Going up (or down) stairs’, ‘Opening a door’}, = {‘Go left’, ‘Go right’, ‘Turn left’, ‘Turn right’, ‘Turn around’}. L is the landmark list: , are the turn, the stairs, and the door landmarks. The duration of ‘Standing’, ’Walking’ and ‘Going up (or down) stairs’ activities are represented by , and , respectively. From D, we can obtain the distance and height information indicates the walk distance; represents the z value of the current landmark; and represents the z value of the next landmark.

According to the semantics acquisition rules shown in Table 1 and Table 2, the semantic landmarks are constructed as shown in Figure 7.

Figure 7.

Semantic landmark construction process.

As the above process shows, both semantic information and distance information are added to the semantic model. The order information (e.g., “Turn left at the 2nd turning point”) is added according to the following rule:

If the current landmark’s adjacent segments contain multiple turn or door landmarks and they have the same semantics, sort them by distance and then provide them with the order information or .

Set S denotes the semantics obtained, such as {S1: [‘Go left’], S2: [‘Go up the steps’], S3: [‘Turn left’]}. If the order information is obtained simultaneously, it can be expressed as {S_order: [‘at turn point’] or [‘at door’]}.

4. Experiment

The experiment was performed at the State Key Laboratory of Information Engineering in Surveying, Mapping and Remote Sensing (LIESMARS) at Wuhan University, China. An Android mobile phone and the indoor floor plans of LIESMARS were used in the experiment. It should be noted that, in this study, only the hand-held situation was considered. The experimental process is shown in Figure 8.

Figure 8.

The experiment’s overall process.

In the following, the pre-knowledge is first provided. Multiple user trajectories are then presented, including a trajectory on a single floor, a trajectory on multiple floors and a trajectory without knowing the starting point. Finally, the semantics acquisition process and results are described.

4.1. Pre-Knowledge

Door points, stair points and turn points were used as landmarks in the experiment, as shown in Figure 9. In indoor spaces, pedestrians tend to walk along a central line, and they tend to go in a straight line between places. The intersection of the corridor area’s centerline was therefore selected as a landmark. Most turn points near door points were not assumed to be landmarks, because the door points could replace them as landmarks. The above principles were used to generate the landmarks. The proposed approach does not focus on landmark extraction, but on trajectory generation and the steps in the semantics acquisition.

Figure 9.

Landmarks. (a) Landmarks of the first floor; (b) landmarks of the second floor.

The smartphone’s barometer and magnetometer were used to determine the initial orientation and locate the user’s floor, respectively. However, they need to be analyzed first. We collected barometer data at eight different locations on each layer, as shown in Table 3. As the change in barometer readings in the same layer was not significant, we used the average of the collected barometer readings as a benchmark to determine the user’s floor. If the current barometer reading is within ±0.1 hpa of B(f1) or B(f2), the corresponding floor is determined.

Table 3.

Barometer readings.

| Floor | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | Average (hpa) |

|---|---|---|---|---|---|---|---|---|---|

| f1 | 1020.91 | 1020.92 | 1020.92 | 1020.9 | 1020.88 | 1020.86 | 1020.85 | 1020.87 | B(f1) = 1020.89 |

| f2 | 1020.32 | 1020.34 | 1020.34 | 1020.33 | 1020.29 | 1020.3 | 1020.33 | 1020.32 | B(f2) = 1020.32 |

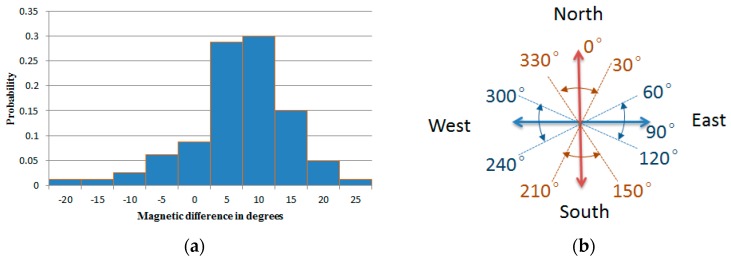

Compared to the barometer, the magnetometer is more unstable, so 80 north-facing magnetometer readings were collected at various locations within the building. The distribution of the difference between the collected magnetometer data and True North is shown in Figure 10a. Most of the magnetic differences are between −5° and 15° and occasionally more than 20°. Based on the above data, a threshold of 30° was chosen to determine the direction semantics of the initial position. Direction information like ‘north’ (330 < θ < 360, 0 ≤ θ < 30), ‘east’ (60 ≤ θ <120), ‘south’ (150 ≤ θ <210) and ‘west’ (240 ≤ θ < 300) can be obtained (Figure 10b) when the magnetometer reading of the user’s initial position is acquired.

Figure 10.

The direction information obtained by the magnetometer. (a) Distribution of the magnetic differences; (b) direction information.

In addition, the user’s step length parameters need to be determined over a short distance (0.45 in our experiment). When the user goes upstairs, the horizontal and vertical distances of each step are given as fixed values (0.3 m and 0.15 m in the experiment).

4.2. Trajectory Generation and Correction

To present a user’s trajectory, HAR is performed to identify landmarks, and then, these landmarks are used to correct the PDR trajectory.

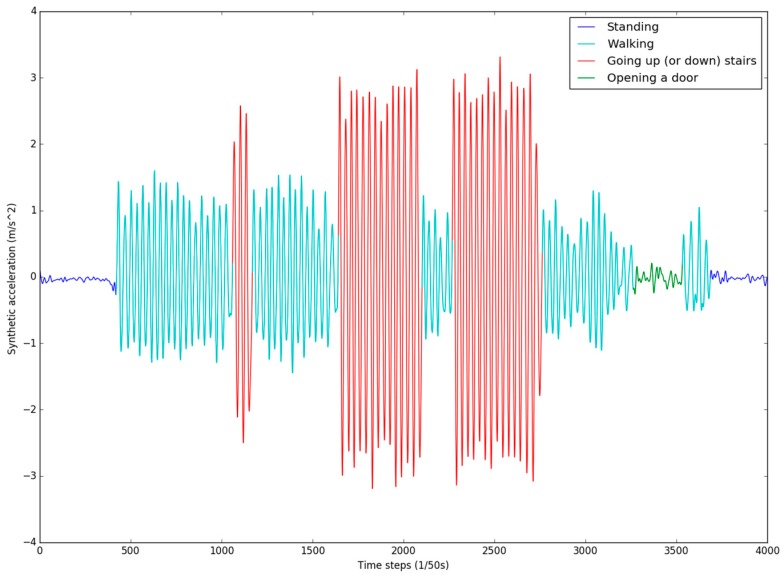

Because the HAR training set requires a variety of activities, 25 trajectories from the entrance to each room on the second floor were chosen. If a room has multiple doors, each door corresponds to a trajectory. In order to obtain information from standing activity samples, the user needs to stand for a while at the beginning point and the end point of each trajectory. A training sample is shown in Figure 11.

Figure 11.

The activity sample collection of trajectory Entrance (ET)–R201.

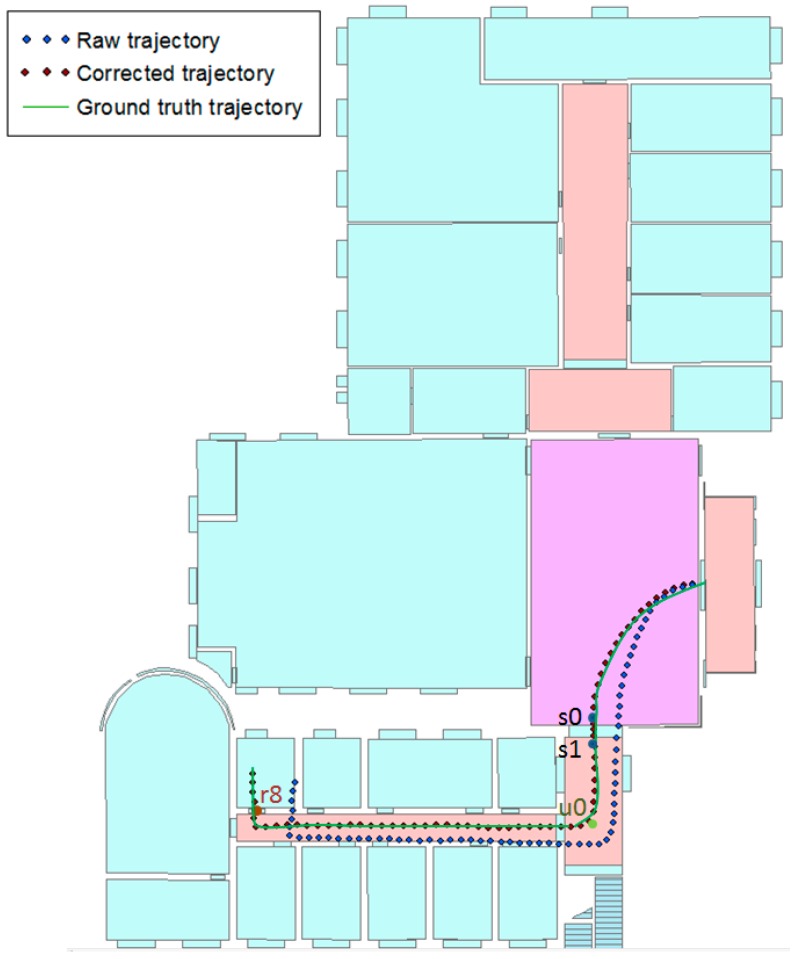

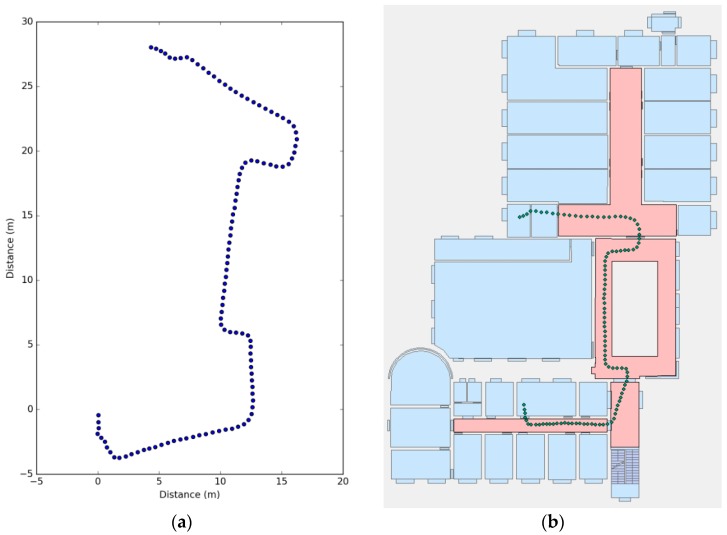

Landmarks that the user passes can be determined using the activity classification results provided by HAR, and then, the user’s trajectory can be corrected. Figure 12 shows the trajectory from the entrance to Room 108. The blue points indicate the raw trajectory, and the red points indicate the corrected trajectory. The corrected trajectory is extremely close to the ground truth trajectory. In addition, only four landmarks were used: stairs landmarks s0 and s1, turn landmark u0 and door landmark r8.

Figure 12.

The trajectory of ET–R108. The raw trajectory is without landmarks, and the corrected trajectory is with landmarks.

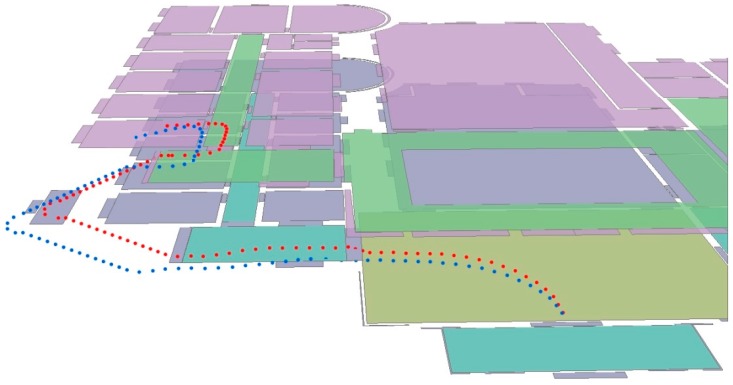

For trajectories on multiple floors, height information is added to each step point. Figure 13 shows the trajectory from the entrance to Room 201. The blue points and red points indicate the raw trajectory and the corrected trajectory, respectively.

Figure 13.

The trajectories on multiple floors.

When the user’s initial location is unknown, the direction observation sequence is obtained from the direction sensors. Information about position and activities is obtained from PDR and HAR, respectively. When the user trajectory is determined, the starting position can be inferred from PDR.

The user went from point to point . The trajectory when using only PDR is shown in Figure 14a, and the direction observation sequence is obtained.

Figure 14.

Trajectory matching results. (a) Raw PDR trajectory; (b) matching trajectory.

| (9) |

From PDR, the distance between the landmarks of two adjacent observation sequences is obtained, which is denoted by . For example, represents the distance from the starting point to the first landmark, and denotes the distance from the first landmark to the second landmark. represents the distance from the end point to the last landmark.

| (10) |

Information about activities is obtained from HAR.

| (11) |

The matching process used in the algorithm is shown in Table 4. To simplify the proposed model, a flag was abstracted to express similar landmarks. For example, stands for the adjacent doors at the north side of the corridor: [,,,,,], [,,,,], = [,,,], [,,,] (see Figure 9). The virtual landmark E indicates the connecting points between the doors and the corridor. For example, indicates the connecting point between door d3 and the corridor.

Table 4.

Trajectory matching results. E = east, S = south, W = west, N = north. s = standing, w = walking, u = going up stairs, d = going down stairs, o = opening a door.

| Observation Sequence | Trajectories | Distance and Activities Information | Trajectories after |

|---|---|---|---|

| {‘S’, ‘E’, ‘N’} | , | { = 1.86, = 1.69, = 10.9} { = (s, w, o), = (w), = (w)} |

|

| {‘S’, ‘E’, N’,’W’} | = 6.73, = (w) | ||

| {‘S’, ‘E’, N’,’W’, ‘N’} | = 2.1, = (w) | ||

| {‘S’, ‘E’, N’,’W’, ‘N’, ‘E’} | = 13.73, = (w) | ||

| {‘S’, ‘E’, N’,’W’, ‘N’, ‘E’, ‘N’} | = 4.1, = (w) | ||

| {‘S’, ‘E’, N’,’W’, ‘N’, ‘E’, N’,’W’} | , | = 4.1, = (w) | , |

| = 10.94, = 2.8 = (w, o, w) | , |

A trajectory is represented by a list, and the elements in the list represent the points that have been passed. The HAR results are denoted by . = (s, w, o) indicates the sequence of activities from the start point to the first landmark, which is “Standing-Walking-Opening a door”.

It should be noted that we used the real landmark coordinates to correct the PDR results when landmarks were detected. As the trajectory ended, de was given to estimate the final position, and the matching trajectory was obtained.

4.3. Semantics Extraction

According to the proposed semantics extraction method described in Section 3.2, the semantics for landmarks in trajectory ET–R108 (in Figure 12) were obtained as shown in Table 5. The start and end points were considered as virtual landmarks, which have no specific attributes or fixed locations.

Table 5.

Landmark semantics.

| Landmark | Name | Expression |

|---|---|---|

| Entrance | Id | ET |

| Attribute | Virtual landmarks | |

| Adjacent segments | {‘’:{‘semantics’: ‘Go left’, ‘distance’: 9.12, ‘direction’: ‘West-South’}} | |

| Direction information | ‘West’ | |

| Semantic description | ||

| Stairs s0 | Id | s0 |

| Attribute | Stairs | |

| Adjacent segments | {‘’:{‘semantics’: ‘Climb the steps’, ‘distance’: 0.99, ‘direction’: ‘South’}} | |

| Direction information | ‘South’ | |

| Semantic description | ||

| Stairs s1 | Id | s1 |

| Attribute | Stairs | |

| Adjacent segments | { ‘’:{‘semantics’: , ‘distance’: 5.05, ‘direction’: ‘South’}} | |

| Direction information | ‘South’ | |

| Semantic description | ||

| Turn u0 | Id | u0 |

| Attribute | Turn | |

| Adjacent segments | {‘’:{‘semantics’: [‘Go straight’, ‘Turn right’], ‘distance’: 19.13, ‘direction’: ‘West-North’}} | |

| Direction information | ‘South - West’ | |

| Semantic description | ‘Turn right’ | |

| Door r8 | Id | r8 |

| Attribute | Door | |

| Adjacent segments | {‘’:{‘semantics’: , ‘distance’: 2.03, ‘direction’: ‘North}} | |

| Direction information | ‘North’ | |

| Semantic description | ‘Go into the door’ |

After sufficient trajectories were acquired, the landmarks’ full semantics could be obtained. Taking the turn landmark (u0) as an example, the complete semantics are as shown in Table 6. In addition, the order of the landmarks could be obtained using the method described in Section 3.2.2.

Table 6.

Complete semantics of turn u0.

| Name | Expression |

|---|---|

| Id | u0 |

| Attribute | Turn |

| Adjacent segments | {‘’: {‘semantics’: ‘Turn left (at 1st door)’, ‘distance’: 5.19, ‘direction’: ‘West-South’}, ‘’: {‘semantics’: ‘Turn right (at 1st door)’, ‘distance’: 5.21, ‘direction’: ‘West-North’}, ‘’: {‘semantics’: ‘Turn left (at 2nd door)’, ‘distance’: 6.87, ‘direction’: ‘West-South’}, ‘’: {‘semantics’: ‘Turn right (at 2nd door)’, ‘distance’: 7.19, ‘direction’: ‘West-North’}, ‘’: {‘semantics’: ‘Turn right (at 3rd door)’, ‘distance’: 12.13, ‘direction’: ‘West-North’}, ‘’: {‘semantics’: ‘Turn left (at 3rd door)’, ‘distance’: 12.27, ‘direction’: ‘West-South’}, ‘’: {‘semantics’: ‘Turn left (at 4th door)’, ‘distance’: 14.11, ‘direction’: ‘West-South’}, ‘’: {‘semantics’: ‘Turn right (at 4th door)’, ‘distance’: 15.96, ‘direction’: ‘West-North’}, ‘’: {‘semantics’: ‘Turn left (at 5th door)’, ‘distance’: 17.75, ‘direction’: ‘West-South’}, ‘’: {‘semantics’: ‘Turn right (at 5th door)’, ‘distance’: 19.13, ‘direction’: ‘West-North’}, ‘’: {‘semantics’: ‘Go straight’, ‘distance’: 20.28, ‘direction’: ‘West’}, ‘’: {‘semantics’: ‘Turn left’, ‘distance’: 5.09, ‘direction’: ‘North}, ‘’: {‘semantics’: ‘Turn right’, ‘distance’: 2.84, ‘direction’: ‘South’}} |

| Direction information | ‘South-West’, ‘North-West’, ‘East-South’, ‘East-North’ |

| Landmark semantic | ‘Turn right’, ‘Turn left’ |

According to the above tables and our semantics model, the three trajectories presented in Section 4.2 can be described as follows:

ET–R108: {[‘Go left’], [‘Go up the steps’], [‘Turn right’], [‘Go straight’,’ Turn right (at 5th door)’], [‘Go into the door’]}

ET–R201: {[‘Go left’], [‘Go up the steps’], [‘Go straight’, ‘Go upstairs’], [‘Turn left], [‘Turn left (at 1st door)’], [‘Go into the door’]}

R204–R213: {[‘Go out the door’], [‘Turn left’], [‘Go straight’], [‘Turn left’], [‘Turn left’], [‘Turn right’], [‘Go straight’], [‘Turn right’], [‘Turn left’], [‘Turn left’], [‘Go straight’], [‘Go into the door’]}

The construction of complete semantics for all of the indoor landmarks requires a large amount of trajectory data. However, the proposed approach only considers the complete semantics of key landmarks and the partial semantics of non-key landmarks, because they can describe most of the user’s activities.

5. Discussion

Firstly, the performance of the HAR classification is evaluated. The location errors in a trajectory are then described. Finally, the accuracy of the landmark matching is analyzed.

5.1. Error Analysis

5.1.1. HAR Classification Error

In order to evaluate the performance of the HAR classification, 10-fold cross-validation [39] was used. In this method, the dataset is divided into 10 parts: nine parts are used for training, and one part is used for testing each iteration. The classification accuracy of the common classifiers was compared with the proposed classifier, and two different window segmentation approaches were compared. Since the error rate of our step detection is quite low, at 0.19% (total steps: 3092; error detection steps: six), it is more convenient to use the event-defined window approach to sense the user’s activity. As shown in Table 7, the results show that the event-defined window approach performs better than the sliding window approach, which applies two-second-long time windows with a 50% overlap.

Table 7.

Classification accuracy.

| Classifier | Accuracy | Accuracy |

|---|---|---|

| (Sliding Windows) | (Event-Defined Windows) | |

| DT | 98.62% | 98.69% |

| SVM | 96.55% | 97.73% |

| KNN | 98.83% | 98.95% |

Many different performance metrics could have been be used to evaluate the HAR classification [8]. A confusion matrix was adopted, which is a method commonly used to identify error types (false positives and negatives) [40]. Several different performance metrics—accuracy (the standard metric to express classification performance), precision, recall and F-measure—could be calculated based on the matrix [9]. Table 8 shows the confusion matrix used to evaluate the results of the KNN classification activities.

Table 8.

Confusion matrix.

| Actual Class | Predicted Class | Accuracy (%) | |||

|---|---|---|---|---|---|

| Standing | Walking | Going up (or down) Stairs | Opening a Door | ||

| Standing | 284 | 0 | 0 | 10 | 96.60% |

| Walking | 0 | 1981 | 3 | 0 | 99.85% |

| Going up (or down) stairs | 0 | 4 | 787 | 0 | 99.49% |

| Opening a door | 3 | 0 | 0 | 56 | 94.92% |

As shown in Table 8, the proposed method achieves an extremely high accuracy (>99%) in detecting stairs and walking activities. Some errors occur in identifying door-opening and standing activities. However, by detecting the magnetometer change, it is easy to distinguish between these two activities, thereby reducing the amount of errors.

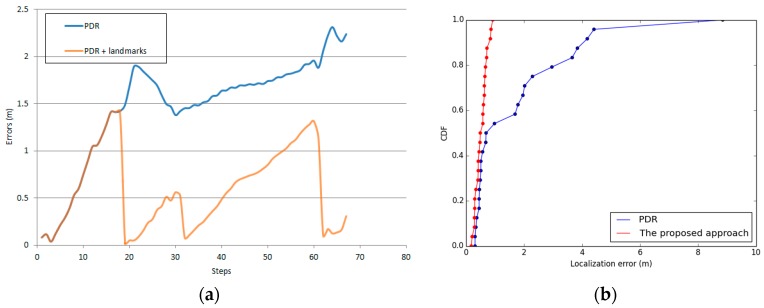

5.1.2. Localization Error

The localization error of trajectory ET–R108 is shown in Figure 15a; the blue line indicates the original PDR trajectory, and the orange line indicates the location errors after only the landmarks were corrected. The results show that the PDR errors increase with distance, and a high average localization accuracy (0.59 m) is achieved when we use the landmarks to correct the cumulative errors. Figure 15b shows the cumulative error distribution of the 25 test trajectories. We can see that the proposed approach is more stable than using only PDR, and the average error is reduced from 1.79 m to 0.52 m.

Figure 15.

Localization error. (a) Localization error of trajectory ET–R108; (b) the cumulative error distribution of the 25 test trajectories.

5.1.3. Landmark Matching Errors

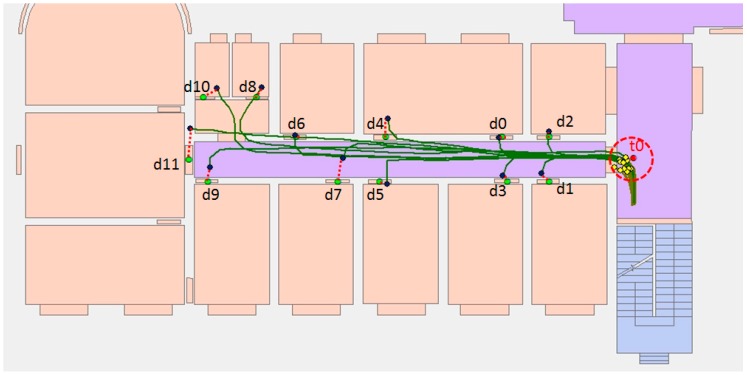

The shortest distance method was used to match the landmarks. As shown in Figure 16, the result matches the partial trajectories.

Figure 16.

Landmark matching. The red point is the turn landmark, and the green points are the door landmarks. The yellow points are the PDR position when a turn is detected. The blue points are the PDR position when opening a door is detected. The dashed red line indicates the nearest landmark points.

Although the trajectories were corrected at the turn landmark (the red point), the PDR-estimated user location still introduced errors, particularly when the user was far from the previous landmark. In the experiment, an error occurred because the distances of d18 and d20 were extremely close and far from the turning point landmark (t6). We can also see that a similar error occurred in turn landmark , which is matched to landmark (see Table 9).

Table 9.

Landmark matching errors.

| Landmark | Total | Wrong Match | Error Rate |

|---|---|---|---|

| Doors | 24 | 1 | 4.17% |

| Stairs | 96 | 0 | 0 |

| Turns | 63 | 1 | 1.59% |

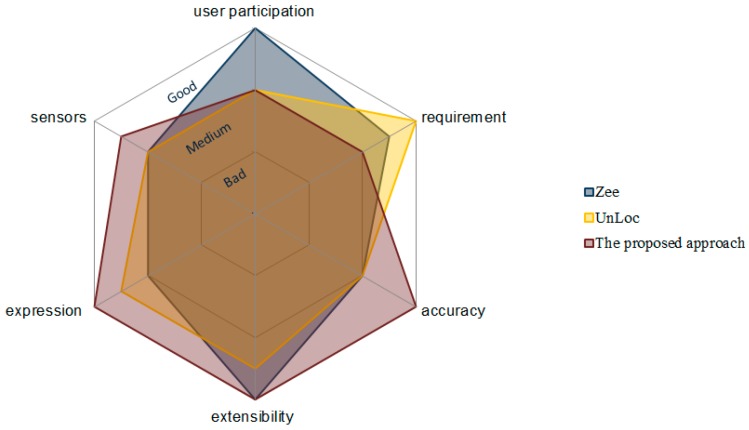

5.2. Comprehensive Comparison

Some similar indoor localization schemes, which require no additional devices or expensive labor, are compared in terms of requirement, sensors, user participation, accuracy, expression and extensibility in Table 10. Each technique has its own advantages. Zee [22] tracked a pedestrian’s trajectory without user participation, and a Wi-Fi training set was simultaneously collected, which can be used in Wi-Fi fingerprinting-based localization techniques. UnLoc [25] only needs a door location as the basic input information and simultaneously computes the user’s location and detects various landmarks. Compared to the above localization schemes, the proposed approach needs more basic information; however, the information allows us to obtain a better localization accuracy. Moreover, a semantic landmark model was constructed during the localization process, which can be used not only to describe the user’s trajectory, but also to improve the localization efficiency. The overall scores of the three approaches are shown in Figure 17.

Table 10.

Comparison with other localization systems.

| Name | Zee | UnLoc | The Proposed Approach |

|---|---|---|---|

| Requirement | Floorplan | A door location | Floorplan, landmarks |

| Sensors | Acc., Gyro., Mag., (Wi-Fi) | Acc., Gyro., Mag., (Wi-Fi) | Acc., Gyro., Mag., Baro. |

| User participation | None | Some | Some |

| Accuracy | 1–2 m | 1–2 m | <1 m |

| Expression | Trajectory | Trajectory | Trajectory, semantic description |

| Extensibility | Wi-Fi RSS distribution | Landmark distribution | Semantic landmark model |

Figure 17.

Overall score of Zee, UnLoc and the proposed approach.

5.3. Computational Complexity

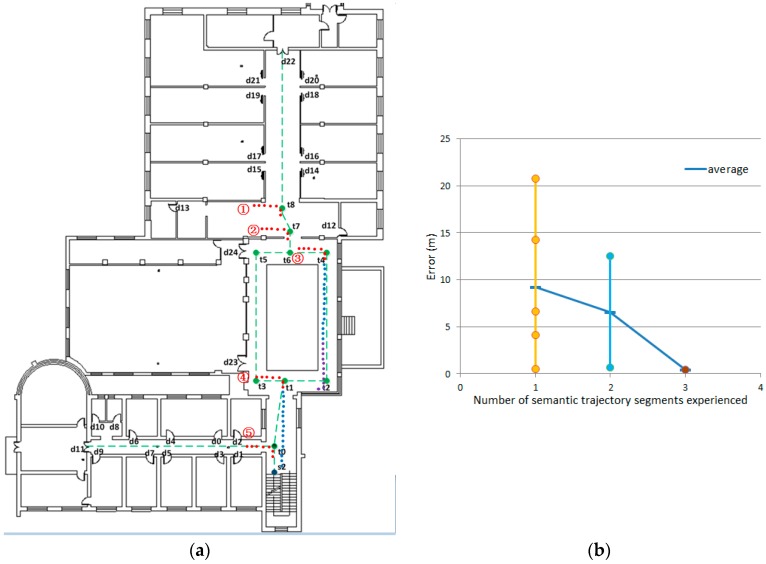

In order to verify the validity of the semantic model for localization and better analyze the computational complexity of semantics-assisted localization method, a further experiment was conducted (Figure 18a). In this experiment, the user started from any place on the second floor, and we wanted to determine the user’s trajectory as soon as possible. The overall error and time complexity in the trajectory matching process are used to evaluate the proposed method.

Figure 18.

Semantic matching of trajectories. (a) The trajectories after semantic matching; (b) the trajectory error.

As shown in Figure 18b, although only a few semantics are provided, the trajectory error drops rapidly (the average error drops from 9.25 m to 0.48 m). When the first semantic information is obtained from the trajectory, there are five trajectories satisfying the condition. In order to match the semantic information, each landmark point needs to be traversed once, so the time complexity is O(N). The next search only needs to traverse the semantics of the trajectory segments that satisfy the previous condition. These trajectory segments are ‘’, ‘’, ‘’, ‘’, ‘’, ‘’, ‘’, and the time complexity is O(7). The trajectory is determined after matching the third semantic information, and the trajectory error is similar to the previous trajectory localization experiment, where the initial location was known. The above description is provided in Table 11. Compared to the traditional trajectory matching method, which yields a time complexity of O(NT) or O(N2T), the proposed semantic matching method is more efficient. Since it does not need to traverse all of the states every time, the time complexity is much less than O(NT).

Table 11.

Semantic matching results.

| Trajectory | Trajectory Segment | Semantic | Time Complexity | Numbers 1 |

|---|---|---|---|---|

| Trajectory information | Segment 1 (red points) | ‘Turn right’ (‘East-South’) | O(N) | 5 |

| Segment 2 (blue points) | ‘Go straight’ (‘South’) | O(7) | 2 | |

| Segment 3 (purple points) | ‘Turn right’ (‘South-West’) | O(3) | 1 |

1 The number of trajectories after semantic matching.

6. Conclusions

In this paper, PDR, HAR and landmarks have been combined to achieve indoor mobile localization. The landmark information was extracted from indoor maps, and then, HAR was used to detect the landmarks. These landmarks were then used to correct the PDR trajectories and to achieve a high level of accuracy. Without knowing the initial position, HMM was performed to match the motion sequence to the indoor landmarks. Because semantic information was also assigned to the landmarks, the semantic description of a trajectory was obtained, which has the potential to provide more applications and better services. Moreover, the experiment was implemented in an indoor environment, to fully evaluate the proposed approach. The results not only show a high localization accuracy, but also confirm the value of semantic information.

More extensive research can be studied in the future. For example, phone sensing could be used to recognize more activities, particularly complex activities, and more semantic information could be extracted. In addition, complex experimental conditions, such as various trajectories, a location-independent mobile phone and all kinds of users, could be included in future studies. The semantic model used in this study does not contain all possible semantics, and rich semantic information could be obtained by using the data from crowdsourced trajectories. Furthermore, real-time localization (including semantic information) is also the priority of our future work.

Acknowledgments

This work is supported by The National Key Research and Development Program of China [grant number 2016YFB0502203], Mapping geographic information industry research projects of public interest Industry [grant number 201512009] and the LIESMARS special Research Funding.

Author Contributions

Sheng Guo conceived of and designed the study, performed the experiments and wrote the paper. Yan Zhou helped to improve some of the experiments. Hanjiang Xiong and Xianwei Zheng supervised the work and revised the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1.Deng Z., Wang G., Hu Y., Cui Y. Carrying Position Independent User Heading Estimation for Indoor Pedestrian Navigation with Smartphones. Sensors. 2016;16:677. doi: 10.3390/s16050677. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Gezici S., Tian Z., Giannakis G.B., Kobayashi H., Molisch A.F., Poor H.V., Sahinoglu Z. Localization via ultra-wideband radios: A look at positioning aspects for future sensor networks. IEEE Signal Proc. Mag. 2005;22:70–84. doi: 10.1109/MSP.2005.1458289. [DOI] [Google Scholar]

- 3.Zhu W., Cao J., Xu Y., Yang L., Kong J. Fault-tolerant RFID reader localization based on passive RFID tags. IEEE Trans. Parallel Distrib. Syst. 2014;25:2065–2076. doi: 10.1109/TPDS.2013.217. [DOI] [Google Scholar]

- 4.Deng Z., Xu Y., Ma L. Indoor positioning via nonlinear discriminative feature extraction in wireless local area network. Comput. Commun. 2012;35:738–747. doi: 10.1016/j.comcom.2011.12.011. [DOI] [Google Scholar]

- 5.Li H., Chen X., Jing G., Wang Y., Cao Y., Li F., Zhang X., Xiao H. An Indoor Continuous Positioning Algorithm on the Move by Fusing Sensors and Wi-Fi on Smartphones. Sensors. 2015;15:31244–31267. doi: 10.3390/s151229850. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Guinness R. Beyond Where to How: A Machine Learning Approach for Sensing Mobility Contexts Using Smartphone Sensors. Sensors. 2015;15:9962–9985. doi: 10.3390/s150509962. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Incel O.D., Kose M., Ersoy C. A Review and Taxonomy of Activity Recognition on Mobile Phones. BioNanoScience. 2013;3:145–171. doi: 10.1007/s12668-013-0088-3. [DOI] [Google Scholar]

- 8.Shoaib M., Bosch S., Incel O., Scholten H., Havinga P. A Survey of Online Activity Recognition Using Mobile Phones. Sensors. 2015;15:2059–2085. doi: 10.3390/s150102059. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Lara O.D., Labrador M.A. A Survey on Human Activity Recognition using Wearable Sensors. IEEE Commun. Surv. Tutor. 2013;15:1192–1209. doi: 10.1109/SURV.2012.110112.00192. [DOI] [Google Scholar]

- 10.Yang Z., Wu C., Zhou Z., Zhang X., Wang X., Liu Y. Mobility increases localizability: A survey on wireless indoor localization using inertial sensors. ACM Comput. Surv. (CSUR) 2015;47:1–34. doi: 10.1145/2676430. [DOI] [Google Scholar]

- 11.Deng Z., Wang G., Hu Y., Wu D. Heading Estimation for Indoor Pedestrian Navigation Using a Smartphone in the Pocket. Sensors. 2015;15:21518–21536. doi: 10.3390/s150921518. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Paucher R., Turk M. Location-based augmented reality on mobile phones; Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (CVPRW); San Francisco, CA, USA. 13–18 June 2010; pp. 9–16. [Google Scholar]

- 13.Kang W., Han Y. SmartPDR: Smartphone-Based Pedestrian Dead Reckoning for Indoor Localization. IEEE Sens. J. 2015;15:2906–2916. doi: 10.1109/JSEN.2014.2382568. [DOI] [Google Scholar]

- 14.Alzantot M., Youssef M. UPTIME: Ubiquitous pedestrian tracking using mobile phones; Proceedings of the 2012 IEEE Wireless Communications and Networking Conference (WCNC); Paris, France. 1–4 April 2012; pp. 3204–3209. [Google Scholar]

- 15.Chen Z., Zou H., Jiang H., Zhu Q., Soh Y., Xie L. Fusion of WiFi, Smartphone Sensors and Landmarks Using the Kalman Filter for Indoor Localization. Sensors. 2015;15:715–732. doi: 10.3390/s150100715. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Evennou F., Marx F. Advanced integration of WiFi and inertial navigation systems for indoor mobile positioning. Eurasip J. Appl. Signal Process. 2006;2006:164. doi: 10.1155/ASP/2006/86706. [DOI] [Google Scholar]

- 17.Waqar W., Chen Y., Vardy A. Incorporating user motion information for indoor smartphone positioning in sparse Wi-Fi environments; Proceedings of the 17th ACM international conference on Modeling, analysis and simulation of wireless and mobile systems); Montreal, QC, Canada. 21–26 September 2014; pp. 267–274. [Google Scholar]

- 18.Huang Q., Zhang Y., Ge Z., Lu C. Refining Wi-Fi Based Indoor Localization with Li-Fi Assisted Model Calibration in Smart Buildings; Proceedings of the International Conference on Computing in Civil and Building Engineering; Osaka, Japan. 6–8 July 2016. [Google Scholar]

- 19.Hernández N., Ocaña M., Alonso J.M., Kim E. Continuous Space Estimation: Increasing WiFi-Based Indoor Localization Resolution without Increasing the Site-Survey Effort. Sensors. 2017;17:147. doi: 10.3390/s17010147. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Li F., Zhao C., Ding G., Gong J., Liu C., Zhao F. A reliable and accurate indoor localization method using phone inertial sensors; Proceedings of the 2012 ACM Conference on Ubiquitous Computing; Pittsburgh, USA. 5–8 September 2012; pp. 421–430. [Google Scholar]

- 21.Xiao Z., Wen H., Markham A., Trigoni N. Lightweight map matching for indoor localisation using conditional random fields; IPSN-14 Proceedings of the 13th International Symposium on Information Processing in Sensor Networks; Berlin, Germany. 15–17 April 2014; pp. 131–142. [Google Scholar]

- 22.Rai A., Chintalapudi K.K., Padmanabhan V.N., Sen R. Zee: Zero-effort crowdsourcing for indoor localization; Proceedings of the 18th Annual International Conference on Mobile Computing and Networking; Istanbul, Turkey. 22–26 August 2012; pp. 293–304. [Google Scholar]

- 23.Leppäkoski H., Collin J., Takala J. Pedestrian navigation based on inertial sensors, indoor map, and WLAN signals. J. Signal Process. Syst. 2013;71:287–296. doi: 10.1007/s11265-012-0711-5. [DOI] [Google Scholar]

- 24.Wang H., Lenz H., Szabo A., Bamberger J., Hanebeck U.D. WLAN-based pedestrian tracking using particle filters and low-cost MEMS sensors; Proceedings of the 4th Workshop on Positioning, Navigation and Communication (WPNC’07); Hannover, Germany. 22 March 2007; pp. 1–7. [Google Scholar]

- 25.Wang H., Sen S., Elgohary A., Farid M., Youssef M., Choudhury R.R. No need to war-drive: unsupervised indoor localization; Proceedings of the 10th International Conference on Mobile Systems, Applications, and Services; Ambleside, UK. 25–29 June 2012; pp. 197–210. [Google Scholar]

- 26.Constandache I., Choudhury R.R., Rhee I. Towards mobile phone localization without war-driving; Proceedings of the 29th Conference on Computer Communications; San Diego, CA, USA. 15–19 March 2010; pp. 1–9. [Google Scholar]

- 27.Anagnostopoulos C., Tsetsos V., Kikiras P. OntoNav: A semantic indoor navigation system; Proceedings of the 1st Workshop on Semantics in Mobile Environments (SME’05); Ayia Napa, Cyprus. 9 May 2005. [Google Scholar]

- 28.Kolomvatsos K., Papataxiarhis V., Tsetsos V. Semantic Location Based Services for Smart Spaces. Springer; Boston, MA, USA: 2009. pp. 515–525. [Google Scholar]

- 29.Tsetsos V., Anagnostopoulos C., Kikiras P., Hadjiefthymiades S. Semantically enriched navigation for indoor environments. Int. J. Web Grid Serv. 2006;2:453–478. doi: 10.1504/IJWGS.2006.011714. [DOI] [Google Scholar]

- 30.Park J., Teller S. Motion Compatibility for Indoor Localization. Massachusetts Institute of Technology; Cambridge, MA, USA: 2014. [Google Scholar]

- 31.Harle R. A Survey of Indoor Inertial Positioning Systems for Pedestrians. IEEE Commun. Surv. Tutor. 2013;15:1281–1293. doi: 10.1109/SURV.2012.121912.00075. [DOI] [Google Scholar]

- 32.Sun Z., Mao X., Tian W., Zhang X. Activity classification and dead reckoning for pedestrian navigation with wearable sensors. Meas. Sci. Technol. 2008;20:15203. doi: 10.1088/0957-0233/20/1/015203. [DOI] [Google Scholar]

- 33.Kappi J., Syrjarinne J., Saarinen J. MEMS-IMU based pedestrian navigator for handheld devices; Proceedings of the 14th International Technical Meeting of the Satellite Division of the Institute of Navigation (ION GPS 2001); Salt Lake City, UT, USA. 11–14 September 2001; pp. 1369–1373. [Google Scholar]

- 34.Kang W., Nam S., Han Y., Lee S. Improved heading estimation for smartphone-based indoor positioning systems; Proceedings of the 2012 IEEE 23rd International Symposium on Personal Indoor and Mobile Radio Communications (PIMRC); Sydney, Australia. 9–12 September 2012; pp. 2449–2453. [Google Scholar]

- 35.Gusenbauer D., Isert C., Krösche J. Self-contained indoor positioning on off-the-shelf mobile devices; Proceedings of the 2010 International Conference on Indoor Positioning and Indoor Navigation (IPIN); Zurich, Switzerland. 15–17 September 2010; pp. 1–9. [Google Scholar]

- 36.Preece S.J., Goulermas J.Y., Kenney L.P., Howard D., Meijer K., Crompton R. Activity identification using body-mounted sensors—A review of classification techniques. Physiol. Meas. 2009;30:R1–R33. doi: 10.1088/0967-3334/30/4/R01. [DOI] [PubMed] [Google Scholar]

- 37.Banos O., Galvez J., Damas M., Pomares H., Rojas I. Window Size Impact in Human Activity Recognition. Sensors. 2014;14:6474–6499. doi: 10.3390/s140406474. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Wu W., Dasgupta S., Ramirez E.E., Peterson C., Norman G.J. Classification accuracies of physical activities using smartphone motion sensors. J. Med. Internet Res. 2012;14:e130. doi: 10.2196/jmir.2208. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Shoaib M., Bosch S., Incel O., Scholten H., Havinga P. Complex Human Activity Recognition Using Smartphone and Wrist-Worn Motion Sensors. Sensors. 2016;16:426. doi: 10.3390/s16040426. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Damaševičius R., Vasiljevas M., Šalkevičius J., Woźniak M. Human Activity Recognition in AAL Environments Using Random Projections. Comput. Math. Methods Med. 2016;2006:4073584. doi: 10.1155/2016/4073584. [DOI] [PMC free article] [PubMed] [Google Scholar]