Keywords: amygdala, monkey, penalty, reward, value

Abstract

Neurons in the lateral intraparietal (LIP) area of macaque monkey parietal cortex respond to cues predicting rewards and penalties of variable size in a manner that depends on the motivational salience of the predicted outcome (strong for both large reward and large penalty) rather than on its value (positive for large reward and negative for large penalty). This finding suggests that LIP mediates the capture of attention by salient events and does not encode value in the service of value-based decision making. It leaves open the question of whether neurons elsewhere in the brain encode value in the same task. To resolve this issue, we recorded neuronal activity in the amygdala while monkeys performed the task employed in the LIP study. We found that responses to reward-predicting cues were similar between areas, with the majority of reward-sensitive neurons responding more strongly to cues that predicted large reward than to those that predicted small reward. Responses to penalty-predicting cues were, however, markedly different. In the amygdala, unlike LIP, few neurons were sensitive to penalty size, few penalty-sensitive neurons favored large over small penalty, and the dependence of firing rate on penalty size was negatively correlated with its dependence on reward size. These results indicate that amygdala neurons encoded cue value under circumstances in which LIP neurons exhibited sensitivity to motivational salience. However, the representation of negative value, as reflected in sensitivity to penalty size, was weaker than the representation of positive value, as reflected in sensitivity to reward size.

NEW & NOTEWORTHY This is the first study to characterize amygdala neuronal responses to cues predicting rewards and penalties of variable size in monkeys making value-based choices. Manipulating reward and penalty size allowed distinguishing activity dependent on motivational salience from activity dependent on value. This approach revealed in a previous study that neurons of the lateral intraparietal (LIP) area encode motivational salience. Here, it reveals that amygdala neurons encode value. The results establish a sharp functional distinction between the two areas.

In tasks requiring monkeys to make value-based decisions, cues identifying promised rewards and threatened penalties elicit neuronal responses in many brain areas. These responses might depend either on motivational salience or on value. The two are dissociable because motivational salience increases with the size of either a promised reward or a threatened penalty, whereas value increases with reward size and decreases with penalty size (Barberini et al. 2012). We recently demonstrated that cue-related activity in the lateral intraparietal (LIP) area follows the pattern expected from neuronal sensitivity to motivational salience (Leathers and Olson 2012). Neurons fired more strongly in response to a cue predicting a reward if the reward was larger and likewise fired more strongly in response to a cue predicting a penalty if the penalty was larger. Neurons in that study displayed relatively little delay-period activity (Newsome et al. 2013); however, even neurons with strong delay-period activity were sensitive to salience as distinct from value (Leathers and Olson 2013). On the basis of our observations in LIP, we speculated that cue responses in LIP are related to the capture of attention by cues with motivational salience rather than to the representation of value. We surmised that the representation of value on which the monkeys based their decisions might reside not in LIP but in the limbic system, and in particular in the amygdala. This conjecture is concordant with prior indications that amygdala neurons are sensitive to the positive and negative associations of cues in other tasks (Belova et al. 2008; Paton et al. 2006), including tasks requiring value-based decision making (Grabenhorst et al. 2012; Grabenhorst et al. 2016; Hernádi et al. 2015). However, neuronal responses in the amygdala are subject to modulation by task context (Peck et al. 2014; Saez et al. 2015), and, in some task contexts, neurons appear to signal salience as distinct from value (Peck and Salzman 2014b; Shabel and Janak 2009). Testing whether amygdala neurons carry value signals under circumstances in which LIP neurons signal salience therefore required recording from the amygdala while monkeys performed a task identical to the one used in the study of LIP.
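The dissociation between the two variables can be made concrete with a toy coding of the four outcomes. In the sketch below, each outcome is given a hypothetical signed magnitude (illustrative numbers only, not the fluid volumes or timeout durations used in the task). A value signal then predicts opposite signs for the large-minus-small difference on reward and penalty cues, whereas a salience signal predicts the same sign for both.

```python
# Hypothetical signed magnitudes for the four outcomes (illustrative only):
# rewards are positive, penalties negative, larger outcomes farther from zero.
OUTCOMES = {
    "large reward": 2,
    "small reward": 1,
    "small penalty": -1,
    "large penalty": -2,
}


def value(outcome: str) -> int:
    """Value: increases with reward size, decreases with penalty size."""
    return OUTCOMES[outcome]


def salience(outcome: str) -> int:
    """Motivational salience: increases with the size of either outcome."""
    return abs(OUTCOMES[outcome])


def size_effect(signal, kind: str) -> int:
    """Large-minus-small difference of a signal for 'reward' or 'penalty' cues."""
    return signal(f"large {kind}") - signal(f"small {kind}")


for name, signal in (("value", value), ("salience", salience)):
    print(name, size_effect(signal, "reward"), size_effect(signal, "penalty"))
# value 1 -1
# salience 1 1
```

Only the signs matter here: any coding in which penalties are negative and grow in magnitude with size would give the same qualitative pattern.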

MATERIALS AND METHODS

Subjects

Two adult rhesus macaque monkeys were studied (monkey 1, female, laboratory designation Ju, and monkey 2, male, laboratory designation Je). All experimental procedures were approved by the Carnegie Mellon University Institutional Animal Care and Use Committee (IACUC) and the University of Pittsburgh IACUC and were in compliance with the guidelines set forth in the United States Public Health Service Guide for the Care and Use of Laboratory Animals. In each monkey, a surgically implanted plastic cranial cap held a post for head restraint and a cylindrical recording chamber 2 cm in diameter oriented vertically with its base centered approximately over the amygdala. Electrodes could be advanced along tracks forming a square grid with 1-mm spacing. The chambers overlay the right hemisphere in monkey 1 and the left hemisphere in monkey 2.

Reward-Penalty Task

On each trial, cues presented to the left and right of fixation predicted the outcomes that would result from leftward and rightward saccades later in the trial. The cues were selected from two sets of four digitized images representing background-free objects. In each set, one image predicted a small reward, one predicted a large reward, one predicted a small penalty, and one predicted a large penalty. In monkey 1, the small and large rewards were 0.20 and 0.30 ml of apple juice diluted 1:3 in water. In monkey 2, the small and large rewards were 0.04 and 0.12 ml of water. These design features were the result of adjustments made during training of each monkey to induce engagement with the task and consistent choice behavior. In each monkey, the small penalty was a 400-ms period of enforced eccentric fixation and the large penalty was a 3,200-ms period of enforced eccentric fixation.

Trials conformed to 12 choice conditions comprising all possible arrangements in which cues of unequal value could be placed at the left and right locations. A run consisted of 96 successfully completed trials using image set 1 followed by 96 successfully completed trials using image set 2. A trial was judged to be successful if the monkey completed it, regardless of whether the chosen outcome was optimal or suboptimal. The monkey completed 16 trials under each of 12 choice conditions during a session. The sequence of conditions was random except for 2 constraints. First, within each block of 24 trials, each condition had to be imposed twice. Second, following a fixation break resulting in premature termination of the trial, the identical condition was imposed again.
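The condition count and the two sequencing constraints can be sketched as follows. The 12 conditions are the 4 × 3 ordered (left, right) pairings of distinct cues. The scheduling code is a hypothetical reconstruction, not the laboratory's software: it assumes that each 24-trial block counts completed trials and that a condition is re-imposed until it is completed, and `completed` is an invented callback standing in for the monkey's behavior.

```python
import itertools
import random

CUES = ("large reward", "small reward", "small penalty", "large penalty")

# Every ordered (left cue, right cue) pairing of two different cues: 4 x 3 = 12 conditions.
CONDITIONS = list(itertools.permutations(CUES, 2))


def block_schedule(n_blocks: int, rng: random.Random) -> list:
    """Concatenate n_blocks 24-trial blocks, each containing every condition twice in random order."""
    schedule = []
    for _ in range(n_blocks):
        block = CONDITIONS * 2  # new list holding each condition twice
        rng.shuffle(block)
        schedule.extend(block)
    return schedule


def run_trials(schedule, completed) -> list:
    """Present conditions in schedule order, repeating a condition after each fixation break.

    `completed` is a hypothetical callback: completed(condition) -> True if the trial
    was completed (whatever the choice), False if it ended in a fixation break.
    Returns the list of (condition, completed) attempts.
    """
    attempts = []
    for condition in schedule:
        while True:
            ok = completed(condition)
            attempts.append((condition, ok))
            if ok:
                break
    return attempts


# One run: 8 blocks = 192 completed trials (96 per image set), 16 per condition.
schedule = block_schedule(8, random.Random(1))
```

Because each block contains every condition exactly twice, any whole number of blocks keeps the conditions balanced even if a run were cut short at a block boundary.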

At the outset of each trial, the monkey fixated a central white square subtending 0.1° for 300 ms. Then, two cues, each associated with a reward or a penalty of a particular size, appeared for 500 ms at diametrically opposed locations 12° to the left and right of fixation. The cues were digitized images of objects against a transparent background. Each image subtended 7° along whichever dimension, horizontal or vertical, was greater. After the disappearance of the cues, there was a delay during which the monkey had to maintain central fixation. The duration of the delay was adjusted during training to accommodate each monkey’s behavioral limits. For monkey 1, the delay was selected at random within the range 1,000–1,200 ms. For monkey 2, it was fixed at 500 ms. At the end of the delay period, the fixation spot vanished and two white square targets subtending 0.2° appeared at locations 12° to the left and right of fixation. The monkey was required to initiate a saccade to one of the targets within a limited time (600 ms in monkey 1 and 300 ms in monkey 2) and to reach the target within 100 ms after initiation. The sequence of events following a saccade depended on whether the target was at a location marked earlier in the trial by a reward-predicting or penalty-predicting cue.

Selection of a reward-predicting cue.

If the target was at a location marked earlier in the trial by a reward-predicting cue, monkey 1 was required to maintain fixation on it for 100 ms. At that time, the unselected target disappeared and the selected target was replaced by a version of the reward-predicting cue minified to 24% of its former size so as to render it suitable as an object of fixation. Reward was delivered after an additional interval selected at random within the range 300–450 ms. In monkey 2, we found it necessary to adopt a simplified procedure. On completion of the saccade, the target was immediately extinguished and the reward delivered.

Selection of a penalty-predicting cue.

If the target was at a location marked earlier in the trial by a penalty-predicting cue, then it was immediately replaced by a minified version of this cue. The monkey was required to maintain fixation on this image during a punitive timeout period. While the monkey maintained fixation, the cue flashed on and off to the accompaniment of a periodic sound. If the monkey broke fixation, then the cue remained steadily on. When the monkey renewed fixation, it again flashed on and off to the accompaniment of the sound. This continued until the cumulative fixation time matched the required duration. Enforced fixation, although not a classic biologically defined primary reinforcer, was aversive because it entailed both effort and delay, which are well known to be discounted against reward.
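The timeout rule amounts to a clock that advances only while the monkey fixates the minified penalty cue. A minimal sketch, assuming gaze is sampled as a boolean on-target flag every `sample_ms` milliseconds (the sampling scheme and the function name are hypothetical):

```python
from typing import Iterable, Optional


def timeout_end_ms(gaze_samples: Iterable[bool], required_ms: int, sample_ms: int = 1) -> Optional[int]:
    """Return the elapsed time (ms) at which cumulative fixation reaches required_ms.

    Each sample is True while gaze is on the minified penalty cue (cue flashing,
    sound on) and False during a break (cue steady, no sound). Only on-target
    samples advance the timeout clock. Returns None if the requirement is never met.
    """
    fixated_ms = 0
    for i, on_target in enumerate(gaze_samples):
        if on_target:
            fixated_ms += sample_ms
            if fixated_ms >= required_ms:
                return (i + 1) * sample_ms
    return None


SMALL_PENALTY_MS, LARGE_PENALTY_MS = 400, 3200

# 300 ms on target, a 200-ms break, then fixation resumes: the small penalty ends at 600 ms.
print(timeout_end_ms([True] * 300 + [False] * 200 + [True] * 500, SMALL_PENALTY_MS))  # 600
```

Because breaks pause rather than reset the clock, breaking fixation cannot shorten a penalty; it can only prolong the time spent in it.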

A premature fixation break led to consequences that depended on the outcome options available at the moment when fixation failed. 1) If the monkey failed to attain fixation on the central target or broke fixation before the outcome-predicting cues had appeared, then the trial simply terminated. 2) If, after the onset of the cues, the monkey broke central fixation, failed to launch a saccade in time, or failed to reach a target in time, then i) if the two options were both rewards, the trial simply terminated, ii) if one option was a penalty, then this penalty was imposed, and iii) if both options were penalties, then the larger penalty was imposed. 3) If the monkey made a saccade to a target at a location marked by a reward-predicting cue but broke eccentric fixation before delivery of reward, then i) if the two options were both rewards, the trial simply terminated, or ii) if the other option was a penalty, then this was delivered. The imposition of penalties after fixation breaks ensured that the monkeys remained familiar with the penalties even when choosing them rarely.
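The fixation-break rules can be expressed as a small decision function. This is a sketch under our own naming (`Phase`, `break_consequence`, and `is_penalty` are hypothetical); outcome strings follow the labels used in the text, and `None` stands for simple termination of the trial.

```python
from enum import Enum, auto
from typing import Optional, Tuple

PENALTY_MS = {"small penalty": 400, "large penalty": 3200}


class Phase(Enum):
    BEFORE_CUES = auto()       # fixation never attained, or broken before cue onset
    AFTER_CUES = auto()        # central break, late saccade, or target not reached in time
    ON_REWARD_TARGET = auto()  # eccentric fixation on a chosen reward broken before delivery


def is_penalty(outcome: str) -> bool:
    return outcome.endswith("penalty")


def break_consequence(phase: Phase, options: Tuple[str, str], chosen: Optional[str] = None) -> Optional[str]:
    """Penalty imposed after a premature fixation break, or None if the trial simply terminates."""
    if phase is Phase.BEFORE_CUES:
        return None
    if phase is Phase.AFTER_CUES:
        penalties = [o for o in options if is_penalty(o)]
        if not penalties:
            return None  # both options were rewards
        # One penalty on offer: impose it. Two penalties: impose the larger one.
        return max(penalties, key=PENALTY_MS.__getitem__)
    # Phase.ON_REWARD_TARGET: `chosen` is the selected reward; the two options always differ.
    other = next(o for o in options if o != chosen)
    return other if is_penalty(other) else None
```

Under these rules, breaking fixation after cue onset never yields a better outcome than completing the trial, which is consistent with the stated purpose of keeping the monkeys familiar with the penalties.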

Recording

At the beginning of the session each day, we lowered a guide tube through the dura to a point several millimeters above the amygdala. We then advanced a varnish-coated tungsten microelectrode with an initial impedance of several megohms at 1 kHz through the guide tube down to the level of the amygdala as established in a preliminary mapping phase. While the electrode was being advanced, the monkey performed the reward-penalty task. On encountering well-isolated neuronal activity, we restarted the task and began to collect data. Selection of the recording site was not contingent on any judgment by the experimenter other than that the spikes were well-isolated. We included in the final data set any neuron recorded with good isolation throughout an entire run of the task, as determined offline with commercially available spike-sorting software (Plexon Offline Sorter). The data set included 20 neurons recorded during 12 recording runs in monkey 1 and 30 neurons recorded during 19 recording runs in monkey 2. We confirmed that recording sites were in the amygdala by reference to frontoparallel MR images including fiducial markers placed at known locations within the chamber. In both monkeys, the recording sites lay within ±1 mm, in the anteroposterior (AP) and mediolateral (ML) dimensions, of a point 16 mm anterior and 11 mm lateral in the Horsley-Clarke reference frame. Although we cannot definitively assign any recording site to a particular subdivision of the amygdala, the recording sites spanned a region potentially encompassing portions of the basal and lateral nuclei (Paxinos et al. 2000). This region overlapped the zone established in prior studies to contain neurons responsive to cues predicting rewards and penalties without any obvious sign of regional heterogeneity (Belova et al. 2008; Paton et al. 2006; Peck et al. 2013; Peck and Salzman 2014b; Zhang et al. 2013).

Analysis of Population Activity

To assess the statistical significance of the dependence of the population firing rate on cues predicting reward and penalty, we considered trials in which the monkey chose optimally. Trials involving suboptimal choices were too rare to consider in their own right and would have introduced uncontrolled variance into the database. Analysis was based on an epoch 0–250 ms after cue onset both because this was the period of strongest responsiveness and because it fully encompassed the period during which value-based decisions typically occur in two-alternative choice tasks (Cai and Padoa-Schioppa 2014).
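The trial selection and the analysis epoch can be sketched as below. We assume spike times are expressed in milliseconds relative to cue onset and treat the epoch as half-open, [0, 250); neither convention is specified in the text, and the `Trial` record is hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Trial:
    spike_times_ms: List[float]  # spike times relative to cue onset (ms)
    chose_optimal: bool          # True if the monkey chose the better outcome


def epoch_rate(spike_times_ms, start_ms: float = 0.0, stop_ms: float = 250.0) -> float:
    """Firing rate (spikes/s) in the window [start_ms, stop_ms) after cue onset."""
    n_spikes = sum(1 for t in spike_times_ms if start_ms <= t < stop_ms)
    return n_spikes / ((stop_ms - start_ms) / 1000.0)


def optimal_epoch_rates(trials: List[Trial]) -> List[float]:
    """0-250 ms rates for optimal-choice trials only; suboptimal trials are discarded."""
    return [epoch_rate(tr.spike_times_ms) for tr in trials if tr.chose_optimal]
```

For example, three spikes falling between 0 and 250 ms correspond to 3 / 0.25 s = 12 spikes per second.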

The procedure involved two ANOVAs (α = 0.05). One ANOVA was based on those four trial conditions in which a reward cue (large or small) contralateral to the recording hemisphere was paired with a penalty cue (large or small) ipsilateral to the recording hemisphere and the monkey chose reward. Reward size and penalty size were fully counterbalanced across these conditions. A second ANOVA was based on those four trial conditions in which a contralateral penalty cue (large or small) was paired with an ipsilateral reward cue (large or small) and the monkey chose reward. Reward size and penalty size were fully counterbalanced across these conditions. Each ANOVA assessed the dependence of neuronal firing rate 0–250 ms after cue onset on three factors: reward size (large or small), penalty size (large or small), and neuron identity (1, 2, …, n), with n equal to the number of neurons in the sample. Inclusion of neuron identity as a factor buffered variance due to differences in mean firing rate across neurons.
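The two counterbalanced designs can be written down explicitly. In the sketch below (function names and data layout are hypothetical), each design is a 2 × 2 grid of (contralateral cue, ipsilateral cue) conditions indexed by reward-size and penalty-size levels; in every cell the reward is the optimal choice. Flattening the rates into (neuron, reward size, penalty size, rate) rows gives the layout that an ANOVA routine with neuron identity as a third factor would expect; the sketch does not specify which model terms such an ANOVA would include.

```python
import itertools

REWARDS = ("large reward", "small reward")
PENALTIES = ("large penalty", "small penalty")


def design_cells(reward_side: str) -> dict:
    """2 x 2 counterbalanced design for one of the two ANOVAs.

    reward_side='contra': contralateral reward cue vs. ipsilateral penalty cue.
    reward_side='ipsi':   ipsilateral reward cue vs. contralateral penalty cue.
    Keys are (reward size, penalty size) levels; values are (contra cue, ipsi cue).
    """
    if reward_side not in ("contra", "ipsi"):
        raise ValueError("reward_side must be 'contra' or 'ipsi'")
    cells = {}
    for reward, penalty in itertools.product(REWARDS, PENALTIES):
        levels = (reward.split()[0], penalty.split()[0])  # e.g. ('large', 'small')
        cells[levels] = (reward, penalty) if reward_side == "contra" else (penalty, reward)
    return cells


def long_format(rates_by_neuron: dict, reward_side: str) -> list:
    """Rows of (neuron, reward size, penalty size, rate) for reward-chosen trials.

    rates_by_neuron: hypothetical mapping neuron id -> {(contra cue, ipsi cue): [0-250 ms rates]}.
    """
    rows = []
    for neuron, by_condition in rates_by_neuron.items():
        for (r_level, p_level), condition in design_cells(reward_side).items():
            for rate in by_condition.get(condition, []):
                rows.append((neuron, r_level, p_level, rate))
    return rows
```

Each reward size appears once with each penalty size, so the two main effects are estimated from disjoint contrasts of the same four cells.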

It might seem desirable to conduct a single ANOVA involving two factors (value contralateral to the recording site and value ipsilateral to the recording site), each with four levels (large reward, small reward, small penalty, and large penalty). This approach would, however, have been suboptimal for both technical and conceptual reasons. The technical reasons are twofold. First, cells along the diagonal of the 4 × 4 matrix would have been empty because no trials offered options of equal value on the left and right. Second, because the monkeys nearly always chose the better option, the variables of interest would have covaried with saccade direction. The conceptual reason is that the factors in this analysis would not correspond to the variables of immediate interest in the context of the study (the impact of reward and penalty size at contralateral and ipsilateral locations). Carrying out a pair of ANOVAs, each with reward size at one location precisely counterbalanced against penalty size at the other location, solves all of these problems. There are no empty cells. Saccade direction is constant across the conditions entering each analysis. The factors are the variables of immediate interest. Using a pair of ANOVAs does not create a multiple-comparisons problem because the two analyses use different sets of trials to characterize different effects. One analysis characterizes the effects of reward contralateral to the recording site and penalty ipsilateral to the recording site. The other characterizes the effects of ipsilateral reward and contralateral penalty.
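Both technical problems with a single 4 × 4 design become visible when each (contralateral, ipsilateral) cue pair is tabulated with the direction of its optimal saccade. This sketch assumes the monkey always chooses the higher-value cue, which the Results report is nearly, but not exactly, true; the names are hypothetical.

```python
CUES = ("large reward", "small reward", "small penalty", "large penalty")  # descending value
RANK = {cue: i for i, cue in enumerate(CUES)}                              # 0 = best outcome


def optimal_direction(contra: str, ipsi: str):
    """Side of the optimal saccade, or None for equal-value pairs (never presented)."""
    if contra == ipsi:
        return None
    return "contra" if RANK[contra] < RANK[ipsi] else "ipsi"


# Rows: contralateral cue; columns: ipsilateral cue.
matrix = [[optimal_direction(c, i) for i in CUES] for c in CUES]
for cue, row in zip(CUES, matrix):
    print(f"{cue:>13}", [d or "-" for d in row])
```

The diagonal is empty, and every off-diagonal cell has a fixed saccade direction (contralateral above the diagonal, ipsilateral below), so a factor coding cue value at one location would be confounded with the direction of the upcoming saccade.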

To aid in understanding the results of the ANOVAs, we constructed plots representing firing rate as a function of time for the two levels (small or large) of each of four factors: contralateral reward size, ipsilateral reward size, contralateral penalty size, and ipsilateral penalty size. To construct the plots, we computed the mean population firing rate in each 1-ms bin and then applied a Gaussian smoothing function (σ = 10 ms).
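The plotting procedure (mean rate in 1-ms bins, then Gaussian smoothing with σ = 10 ms) might be sketched as follows. Truncating the kernel at ±4σ and renormalizing at the edges of the trace are our assumptions; the text does not specify them. `binned_rate` averages over whatever spike trains it is given, so a population curve would be obtained by averaging per-neuron curves.

```python
import math
from typing import List, Sequence


def binned_rate(spike_trains_ms: Sequence[Sequence[float]], t_start_ms: int, t_stop_ms: int) -> List[float]:
    """Mean firing rate (spikes/s) in 1-ms bins, averaged over the given spike trains."""
    n_bins = t_stop_ms - t_start_ms
    counts = [0] * n_bins
    for train in spike_trains_ms:
        for t in train:
            b = math.floor(t - t_start_ms)
            if 0 <= b < n_bins:
                counts[b] += 1
    return [c * 1000.0 / len(spike_trains_ms) for c in counts]


def gaussian_kernel(sigma_ms: float = 10.0, half_width_sd: float = 4.0) -> List[float]:
    """Normalized Gaussian weights on a 1-ms grid, truncated at +/- half_width_sd * sigma."""
    half = int(math.ceil(half_width_sd * sigma_ms))
    w = [math.exp(-0.5 * (k / sigma_ms) ** 2) for k in range(-half, half + 1)]
    total = sum(w)
    return [x / total for x in w]


def smooth(rate_per_ms_bin: Sequence[float], sigma_ms: float = 10.0) -> List[float]:
    """Convolve a 1-ms-binned rate trace with a Gaussian kernel.

    Near the edges, only kernel weights that fall inside the trace are used and
    they are renormalized, so a constant trace stays constant.
    """
    kernel = gaussian_kernel(sigma_ms)
    half = len(kernel) // 2
    n = len(rate_per_ms_bin)
    out = []
    for t in range(n):
        acc = wsum = 0.0
        for k, w in enumerate(kernel):
            j = t + k - half
            if 0 <= j < n:
                acc += w * rate_per_ms_bin[j]
                wsum += w
        out.append(acc / wsum)
    return out
```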

To assess the timing of the contralateral reward-size signal, we conducted an analysis based on trials in which the options were a contralateral reward and an ipsilateral penalty and the monkey chose reward. We computed, as a function of time following cue onset, the mean population firing rate on trials in which the cue predicted a large reward minus the mean population firing rate on trials in which the cue predicted a small reward. We normalized this difference measure according to the formula (I − B)/(P − B), where I was the instantaneous difference measure, B was the difference measure at time 0, and P was the peak difference measure in a window 0–350 ms following cue onset.
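The normalization, and the half-height latency reported in the Results (112 ms for the contralateral reward signal), can be sketched as below, assuming traces sampled every `dt_ms` with index 0 at cue onset. Defining half-height as the first sample at which the normalized trace reaches 0.5 is our assumption about how the crossing was identified.

```python
from typing import List, Optional, Sequence


def normalized_difference(large: Sequence[float], small: Sequence[float],
                          dt_ms: float = 1.0, peak_window_ms=(0.0, 350.0)) -> List[float]:
    """(I - B) / (P - B) applied to the large-minus-small population difference.

    Index 0 of each trace is cue onset. I is the instantaneous difference, B the
    difference at time 0, and P the peak difference within peak_window_ms.
    Assumes P != B.
    """
    diff = [a - b for a, b in zip(large, small)]
    baseline = diff[0]
    lo, hi = int(peak_window_ms[0] / dt_ms), int(peak_window_ms[1] / dt_ms)
    peak = max(diff[lo:hi + 1])
    return [(d - baseline) / (peak - baseline) for d in diff]


def half_height_ms(norm: Sequence[float], dt_ms: float = 1.0) -> Optional[float]:
    """First time after cue onset at which the normalized signal reaches 0.5."""
    for i, v in enumerate(norm):
        if v >= 0.5:
            return i * dt_ms
    return None
```

After normalization the trace runs from 0 at cue onset to 1 at its peak, so latencies can be compared across signals that differ in absolute size.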

Analysis of Single-Neuron Activity

To determine whether individual neurons were significantly sensitive to the size of the reward or penalty predicted by contralateral or ipsilateral cues, we carried out a pair of ANOVAs identical to those employed for population analysis, with the sole exception that, because neuron identity was not a factor, each ANOVA included only two factors (reward size and penalty size). For each possible main effect (contralateral reward size, ipsilateral reward size, contralateral penalty size, and ipsilateral penalty size), we determined, using a χ2-test with Yates correction, whether the number of neurons exhibiting a significant (α = 0.05) effect with a given sign (positive or negative) was greater than the number expected by chance (2.5% of the sample).
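For a single count, the test compares the observed split (neurons with a significant effect of the given sign vs. all other neurons) with the split expected by chance (2.5% vs. 97.5%). A minimal standard-library sketch follows (the function name is hypothetical); for 1 df the χ2 survival function equals erfc(√(χ2/2)), so no statistics package is needed. Because χ2 is non-directional, a caller should also confirm that the observed count exceeds the expected one.

```python
import math
from typing import Tuple


def excess_count_test(n_sig: int, n_total: int, p_chance: float = 0.025) -> Tuple[float, float]:
    """Yates-corrected chi-square goodness-of-fit test (1 df) for a count of neurons.

    Compares the observed split [n_sig, n_total - n_sig] with the chance split
    [n_total * p_chance, n_total * (1 - p_chance)]. The statistic is non-directional,
    so callers should also check that n_sig exceeds n_total * p_chance.
    Returns (chi2, p).
    """
    observed = (n_sig, n_total - n_sig)
    expected = (n_total * p_chance, n_total * (1.0 - p_chance))
    # Yates: shrink each |O - E| by 0.5, but not below zero.
    chi2 = sum(max(abs(o - e) - 0.5, 0.0) ** 2 / e for o, e in zip(observed, expected))
    # For 1 df, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p
```

With counts pooled from the per-monkey breakdowns given in the Results (contralateral R+: 10 + 11 = 21; contralateral R−: 3 + 1 = 4; contralateral P−: 2 + 4 = 6; n = 50), this sketch gives P ≈ 4.3e−68, 0.042, and 0.00012, matching the values reported for Fig. 5. That agreement suggests, though the text does not state it explicitly, that this two-cell form of the test was the one applied.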

To determine whether the pattern of reward-size dependence was correlated with the pattern of penalty-size dependence across neurons, we characterized the reward and penalty dependence of the firing rate of each neuron with indices capturing the effect of contralateral reward and penalty cues. Each index had the form (L − S)/(L + S) where L and S were the firing rates associated with a large and small outcome, respectively. We then computed the Pearson correlation across neurons between the reward and penalty indices.
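The index and the across-neuron correlation can be sketched in a few lines of standard-library Python (names hypothetical). Under value coding, neurons favoring large reward (positive reward index) should favor small penalty (negative penalty index), producing a negative correlation. Under salience coding, as in LIP, the correlation should be positive. The index is undefined when L + S = 0.

```python
import math
from typing import Sequence


def size_index(large: float, small: float) -> float:
    """(L - S) / (L + S): +1 if a neuron fires only for the large outcome, -1 if only for the small one."""
    return (large - small) / (large + small)


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)
```

Applied per neuron, `size_index` yields one reward index and one penalty index; `pearson_r` is then computed across neurons on the two resulting lists.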

RESULTS

This study had two main aims: first, to characterize neuronal activity in the amygdala in the context of the reward-penalty task and, second, to compare neuronal activity in the amygdala with neuronal activity in LIP as observed in the same task (Leathers and Olson 2012). We first present results from the amygdala as considered in isolation and then present the results of a series of comparisons carried out between the amygdala and LIP.

Amygdala

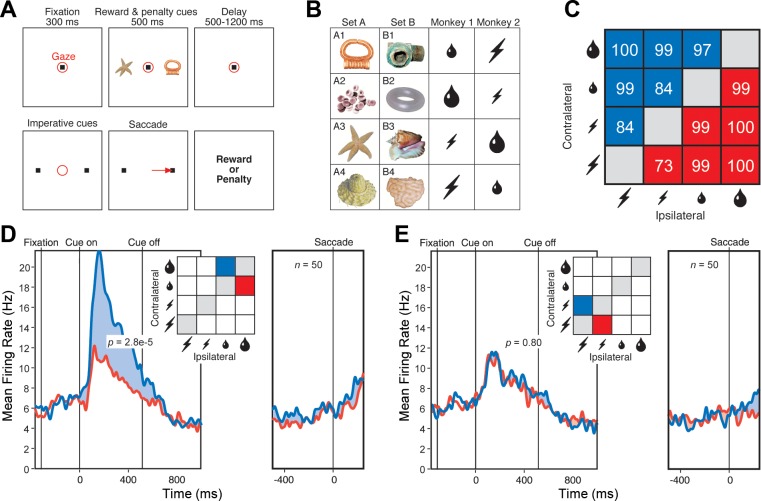

On each trial, the monkey chose between cues placed to the left and right of fixation (Fig. 1A). Each cue indicated that if the monkey made an eye movement to its location at the end of the trial a particular outcome would ensue (Fig. 1B). The possible outcomes, in order of declining value, were large reward (maximal volume of fluid), small reward (minimal volume of fluid), small penalty (minimal duration of enforced eccentric fixation), and large penalty (maximal duration of enforced eccentric fixation). The monkeys were sensitive to these manipulations. They ranked the cues correctly, in descending order from large reward to small reward to small penalty to large penalty, as evidenced by the high rate of optimal choices under all conditions (Fig. 1C). Reaction times indicated that they were also more motivated or aroused by the prospect of either a large reward or a large penalty. The mean reaction time was lower on large-reward than on small-reward trials (monkey 1: 10 ms, P = 0.0017, F = 10.47, 12 runs; monkey 2: 11 ms, P < 0.0001, F = 24.54, 19 runs; ANOVA with consideration restricted to trials pitting a reward against a penalty, with reward size, penalty size, and reward location as independent variables and with mean reaction time per run as the dependent variable). Likewise, the mean reaction time was lower on large-penalty than on small-penalty trials, with the effect approaching significance in monkey 1 (4 ms, P = 0.10, F = 2.76, 12 runs) and attaining significance in monkey 2 (5 ms, P = 0.0087, F = 7.08, 19 runs).

Fig. 1.

A: sequence of events in a single trial. The items in each panel were visible to the monkey with the exception of those indicating gaze location and saccade. The delay period varied randomly in the range 1,000–1,200 ms for monkey 1 and was fixed at 500 ms for monkey 2. B: stimuli and their contingencies. The 8 cues possessed different significance in the 2 monkeys as indicated. Large and small water drops: large and small reward. Large and small lightning bolts: large and small penalty. C: the monkeys nearly always chose optimally. The numbers indicate the percentage of trials on which they chose the better outcome, either at the contralateral location (blue) or at the ipsilateral location (red). D: population firing rate as a function of time during trials with a large-reward cue at the contralateral location and a small-reward cue at the ipsilateral location (blue) or vice versa (red). E: population firing rate as a function of time during trials with a large-penalty cue at the contralateral location and a small-penalty cue at the ipsilateral location (red) or vice versa (blue). In D and E, each pair of red and blue curves is based on conditions colored red and blue in the accompanying inset. In D and E, P is the outcome of a 2-tailed paired t-test, with n as indicated, comparing firing rates under the conditions represented in the panel.

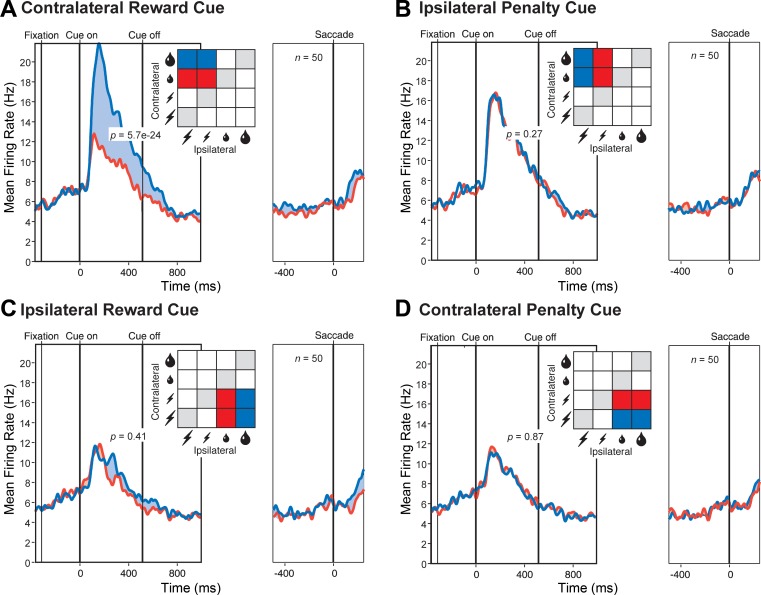

We proceeded to analyze neuronal data collected during trials in which the monkey made the optimal decision. The data set consisted of 20 neurons from monkey 1 and 30 neurons from monkey 2. Although these samples are comparatively small, all of the effects that we describe were sufficiently robust to attain significance in the combined data and were present in the amygdala of each monkey considered individually. We first examined trials in which the monkey chose between a large and a small reward. Population activity was significantly stronger (5.4 spikes per second, P = 2.8 e−5, t = 4.62, n = 50, 2-tailed paired t-test) when the large-reward cue was contralateral to the recording hemisphere and the small-reward cue was ipsilateral than when the same cues were in the opposite arrangement (Fig. 1D). The effect was present both in monkey 1 (6.3 spikes per second, P = 0.0030, t = 3.40, n = 20) and in monkey 2 (4.81 spikes per second, P = 0.0037, t = 3.16, n = 30). We next examined trials in which the monkey chose between a large and a small penalty. Population activity did not differ significantly (P = 0.80) between the two arrangements of the cues (Fig. 1E). Sensitivity to the arrangement of reward cues might be taken as indicating that neurons actively integrate reward and spatial information (Peck et al. 2013; Peck and Salzman 2014a; Peck and Salzman 2014b); however, it could also indicate that neurons process information primarily from one hemifield (Peck and Salzman 2014a). To resolve this issue, we carried out further analyses based on trials across which variations in reward size on one side were fully counterbalanced against variations in penalty size on the other side. First, we considered trials in which the reward cue was contralateral and the penalty cue was ipsilateral. Population activity was significantly stronger (4.8 spikes per second, P = 5.7 e−24, F = 351, n = 50, ANOVA) for large than for small reward (Fig. 2A).
The effect was present both in monkey 1 (4.8 spikes per second, P = 2.2 e−9, F = 111, n = 20) and in monkey 2 (4.8 spikes per second, P = 1.1 e−16, F = 284, n = 30). The population signal dependent on reward size attained half-height at 112 ms after cue onset. In contrast, population activity did not differ significantly (P = 0.27) as a function of penalty size (Fig. 2B). Next, we considered trials in which the reward cue was ipsilateral and the penalty cue was contralateral. Population activity appeared to be slightly stronger on large-reward compared with small-reward trials (Fig. 2C), but this effect did not attain significance (P = 0.41). Population activity likewise did not differ significantly (P = 0.87) as a function of penalty size (Fig. 2D). In neither of the above analyses did reward size at one location interact significantly with penalty size at the other location (P > 0.20). These results indicate that the main determinant of population activity was the size of the reward predicted by the cue at the location contralateral to the recording hemisphere.

Fig. 2.

The population firing rate increased with the size of the reward predicted by the contralateral cue. A: the contralateral cue predicted large (blue) or small (red) reward with other factors held constant or counterbalanced out. B: the ipsilateral cue predicted large (blue) or small (red) penalty with other factors held constant or counterbalanced out. C: the ipsilateral cue predicted large (blue) or small (red) reward with other factors held constant or counterbalanced out. D: the contralateral cue predicted large (blue) or small (red) penalty with other factors held constant or counterbalanced out. In A–D, each pair of red and blue curves is based on conditions colored red and blue in the accompanying inset. In A–D, P is the level of significance of the difference in population firing rate between the large and small outcomes associated with the cues in the panel as revealed by an ANOVA with n as indicated.

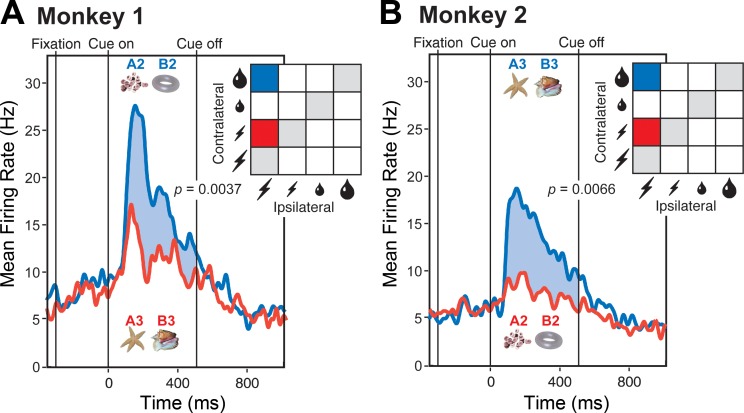

Cues predicting a large reward might conceivably have possessed a high level of physical salience and consequently have elicited a strong population response. To assess this possibility, we took advantage of the fact that the same images had different associations in the two monkeys. Cues predicting a large reward in monkey 1 predicted a small penalty in monkey 2 and vice versa (Fig. 1B). Analyzing data from trials in which these cues appeared at the contralateral location, with all other factors held constant or counterbalanced out, we found that population activity in each monkey was stronger for the cue predicting large reward than for the cue predicting small penalty (Fig. 3). The effect was significant both in monkey 1 (6.69 spikes per second, P = 0.0037, t = 3.31, n = 20, 2-tailed paired t-test) and in monkey 2 (5.25 spikes per second, P = 0.0066, t = 2.93, n = 30). The fact that population activity tracked the outcome predicted by the cue and not its physical identity rules out an interpretation based on physical salience.

Fig. 3.

The strength of the population response depended on the associations of the cues as distinct from their physical properties. A: in monkey 1, images A2 and B2 predicted a large reward, whereas images A3 and B3 predicted a small penalty. B: in monkey 2, the pairing of the images with the outcomes was reversed. In each monkey, the cues predicting a reward elicited a significantly stronger response (blue curve) than the cues predicting a penalty (red curve). In each panel, P is the outcome of a 2-tailed paired t-test, with n as indicated, comparing firing rates under the conditions represented in the panel.

Neurons visually selective for 1 or another of the large-reward cues might by chance have been sampled with unusually high frequency, with the consequence that the population appeared to respond more strongly to large-reward cues. To assess this possibility, we took advantage of the fact that completely different sets of images (image sets A and B as shown in Fig. 1B) were used during the 1st and 2nd 96-trial halves of the run. This design allowed unyoking reward-size selectivity from cue-identity selectivity, although it did not allow analyzing cue-identity selectivity as such due to the fact that cue identity was confounded with time during the run. For each neuron, for each half of the run, with consideration restricted to trials in which the contralateral cue signaled reward size and all other factors were held constant or counterbalanced out, we generated a reward index of the form (L − S)/(L + S) where L and S were the firing rates associated with large and small reward, respectively. If the reward signal depended solely on the incidental pairing of large reward with a cue possessing visual properties for which the neuron was selective, then the reward index based on data from the 1st 96 trials should have been uncorrelated across neurons with the reward index based on data from the 2nd 96 trials. On the contrary, there was a strong positive correlation (r = 0.44, P = 0.0015, F = 11.3, n = 50). We further divided the run into consecutive 48-trial quarters. If any component of the reward signal arose from incidental pairing of large reward with a cue possessing visual properties for which the neuron was selective, then there should have been a stronger correlation between reward indices computed during quarters 1 and 2 (when the images were the same) or between quarters 3 and 4 (when the images were the same) than between quarters 2 and 3 (when the images were different). 
In other words, the reward signal should have been greater when reward size was yoked to image identity than when it was not. On the contrary, the correlation coefficients were 0.34, 0.43, and 0.48 in the 1:2 (same images), 3:4 (same images), and 2:3 (different images) comparisons, respectively. We conclude that reward size exerted a major influence on neuronal activity even when unyoked from image identity and that the contribution of image identity to the reward signal was negligible.
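The quarter-by-quarter control can be sketched as below, assuming each neuron's trials are numbered in order of completion from 0 to 191, so that quarters 1 and 2 used one image set and quarters 3 and 4 the other. The data layout and function names are hypothetical, each quarter is assumed to contain both large- and small-reward trials, and `pearson_r` is redefined so the block stands alone.

```python
import math
from typing import Dict, List, Sequence, Tuple


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def quarter_indices(trials: Sequence[Tuple[int, str, float]], quarter_len: int = 48) -> List[float]:
    """Reward index (L - S)/(L + S) in each consecutive quarter of one neuron's run.

    trials: hypothetical (trial number, 'large' or 'small', 0-250 ms rate) tuples, restricted
    to trials in which the contralateral cue signalled reward size.
    """
    indices = []
    for q in range(4):
        lo, hi = q * quarter_len, (q + 1) * quarter_len
        large = [r for n, size, r in trials if lo <= n < hi and size == "large"]
        small = [r for n, size, r in trials if lo <= n < hi and size == "small"]
        L, S = sum(large) / len(large), sum(small) / len(small)
        indices.append((L - S) / (L + S))
    return indices


def quarter_pair_correlations(per_neuron: Sequence[Sequence[float]]) -> Dict[str, float]:
    """Across-neuron correlations for the 1:2 and 3:4 (same images) and 2:3 (different images) pairs."""
    pairs = {"1:2": (0, 1), "3:4": (2, 3), "2:3": (1, 2)}
    return {label: pearson_r([q[a] for q in per_neuron], [q[b] for q in per_neuron])
            for label, (a, b) in pairs.items()}
```

If image identity contributed to the reward signal, the "1:2" and "3:4" correlations should exceed the "2:3" correlation; the reported values (0.34, 0.43, and 0.48) show no such advantage.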

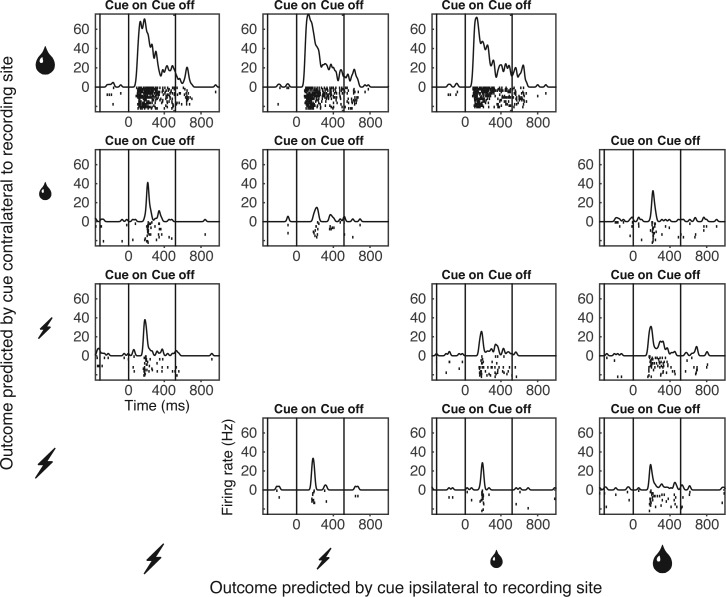

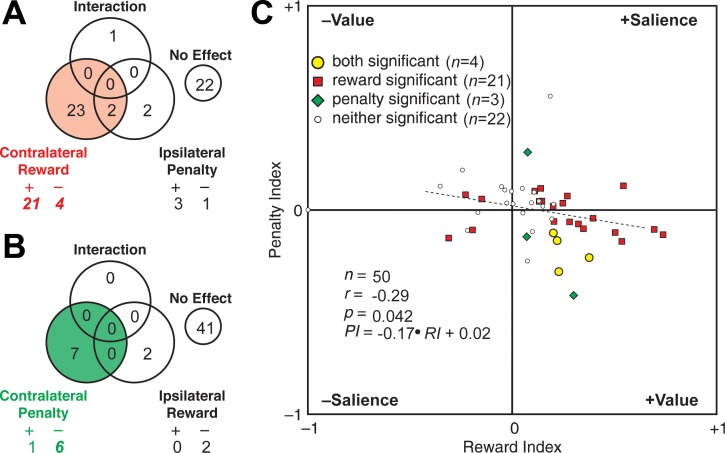

The population analyses described up to this point might have masked heterogeneous effects present at the neuronal level. Task-related signals carried by individual neurons were robust enough to allow for meaningful analysis as demonstrated by the example of a neuron sensitive to the size of the reward predicted by a cue contralateral to the recording hemisphere (Fig. 4). Accordingly, we carried out two analyses of variance on data from each neuron. One analysis assessed the impact of reward size contralateral to the recording hemisphere and penalty size ipsilateral to the recording hemisphere in a counterbalanced design. The counts of significant effects (α = 0.05) are given in Fig. 5A. Neurons exhibiting a significant main effect of contralateral reward size were preponderant (red sector of Fig. 5A). Main effects are broken down in Fig. 5A into cases with significantly stronger firing for large reward (R+), small reward (R−), large penalty (P+), and small penalty (P−). Counts significantly [χ2-test, 1 degree of freedom (df), Yates correction] in excess of the fraction (0.025) expected by chance are presented in italic boldface. Note that in a two-tailed test with α = 0.05, the fraction of positive effects expected by chance is 0.025 and likewise for negative effects. Cases with stronger firing for large reward (R+) were significantly more numerous than expected by chance (P = 4.3 e−68). This was also true of cases with stronger firing for small reward (R−; P = 0.042). The second analysis assessed the impact of contralateral penalty size and ipsilateral reward size in a counterbalanced design. The counts of significant effects are given in Fig. 5B. Neurons exhibiting a significant main effect of contralateral penalty size were preponderant (green sector of Fig. 5B). In particular, neurons firing more strongly for small penalty (P−) were significantly more numerous than expected by chance (P = 0.00012). 
Results broken down by individual monkey continued to conform to each of the patterns noted above. Reward sensitivity was more frequent for contralateral than for ipsilateral cues in monkey 1 (13 vs. 2 cases) and monkey 2 (12 vs. 0 cases). Contralateral cues elicited more R+ than R− responses in monkey 1 (10 vs. 3 cases) and monkey 2 (11 vs. 1 cases). Penalty sensitivity was more frequent for contralateral than for ipsilateral cues in monkey 1 (2 vs. 1 cases) and in monkey 2 (5 vs. 3 cases). Finally, contralateral penalty cues elicited more P− than P+ responses in monkey 1 (2 vs. 0 cases) and monkey 2 (4 vs. 1 cases). These findings at the single-neuron level are concordant with the population analysis in that the most numerous effect by far involves the neurons firing significantly more strongly when the contralateral cue predicts a large reward than when it predicts a small reward (contra R+). They go beyond the population analysis of reward-related activity in showing that although many neurons exhibit the effect observed at the population level (contralateral R+), a few exhibit the opposite effect (contralateral R−). They go beyond the population analysis of penalty-related activity in demonstrating the presence of sensitivity to penalty size (contralateral P−) in a few neurons.

Fig. 4.

Activity of a single neuron sensitive to the outcome offered by the cue contralateral to the recording site. The neuron strongly preferred large reward to small reward and weakly preferred small penalty to large penalty. The histogram-raster display in each panel represents firing as a function of time on trials conforming to 1 task condition. The panels are arranged in rows according to the outcome offered by the cue contralateral to the recording site (from large reward at the top to large penalty at the bottom) and in columns according to the identity of the outcome offered by the cue ipsilateral to the recording site (from large reward at the right to large penalty at the left). Only trials on which the monkey chose the optimal outcome are included.

Fig. 5.

Individual neurons were sensitive to predictions of reward size and penalty size when they were conveyed by contralateral cues. A: on trials involving a contralateral reward cue and an ipsilateral penalty cue, neurons sensitive to reward size were preponderant. Among reward-sensitive neurons, those firing more strongly for large reward (“+” = 21) outnumbered those firing more strongly for small reward (“–” = 4). B: on trials involving a contralateral penalty cue and an ipsilateral reward cue, neurons sensitive to penalty size were preponderant. Among penalty-sensitive neurons, those firing more strongly for small penalty (“–” = 6) outnumbered those firing more strongly for large penalty (“+” = 1). C: across all 50 neurons, there was a negative correlation between the penalty index (PI) and the reward index (RI), each having been defined as (L − S)/(L + S) where L and S were the firing rates associated with a large outcome and a small outcome, respectively. The observed pattern is compatible with the positive (+Value) or negative (–Value) signaling of value. It is incompatible with the positive (+Salience) or negative (–Salience) signaling of arousal, motivation, or salience. In C, P is the significance level at which the Pearson correlation coefficient (r) differs from 0, as assessed by a linear regression test on the indicated number of neurons (n).

Having found that neurons were sensitive to both contralateral penalty size and contralateral reward size, we proceeded to ask whether these effects arose from sensitivity to value (which increased with the size of the reward and decreased with the size of the penalty) or sensitivity to salience (which increased with the size of both the reward and the penalty). To answer this question, we analyzed how the reward and penalty signals covaried across the neuronal population. For each neuron, we generated a reward index and a penalty index. Each index was based on trials in which the size of the outcome predicted by the contralateral cue varied with all other factors held constant or counterbalanced out. Each index had the form (L − S)/(L + S) where L and S were the firing rates associated with a large and small predicted outcome, respectively. On plotting the penalty index against the reward index, we found that they were negatively correlated (Fig. 5C). The effect was significant in the combined data (r = −0.29, P = 0.042, F = 4.37, n = 50). In individual monkeys, the strength of the effect, as reflected in the correlation coefficient, was commensurate with its strength in the combined data, but, as expected from diminution of the sample size, significance was reduced to trend level in monkey 1 (r = −0.37, P = 0.11, F = 2.82, n = 20) and likewise in monkey 2 (r = −0.28, P = 0.13, F = 2.41, n = 30). We conclude that neurons firing more for large reward tended to fire less for large penalty and therefore that neuronal activity depended on the value of the predicted outcome as distinct from its salience.
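The index and correlation steps can be sketched as follows. This is an illustrative sketch, not the authors' code. The function names and the four-neuron firing rates are hypothetical. The sketch stops at the F statistic, F = r²(n − 2)/(1 − r²), because converting F(1, n − 2) to P requires an F or t distribution routine (for example, `scipy.stats.f.sf`), which the Python standard library lacks.

```python
import math

def outcome_index(large_rate, small_rate):
    """(L - S) / (L + S): L and S are mean firing rates (spikes/s) for a large
    and a small predicted outcome. Assumes L + S > 0."""
    return (large_rate - small_rate) / (large_rate + small_rate)

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def regression_f(r, n):
    """F(1, n - 2) for testing a simple linear regression slope against 0."""
    return r ** 2 * (n - 2) / (1.0 - r ** 2)

# Hypothetical (large, small) firing rates for 4 neurons, contralateral cue.
reward_rates = [(20.0, 10.0), (12.0, 12.0), (8.0, 16.0), (30.0, 15.0)]
penalty_rates = [(9.0, 11.0), (10.0, 10.0), (14.0, 10.0), (5.0, 10.0)]

reward_idx = [outcome_index(large, small) for large, small in reward_rates]
penalty_idx = [outcome_index(large, small) for large, small in penalty_rates]
r = pearson_r(reward_idx, penalty_idx)  # negative for these made-up rates
f_stat = regression_f(r, len(reward_idx))
```

As a plug-in check, the rounded values from the text (r = −0.29, n = 50) give F of about 4.4 from `regression_f`. That is close to the reported F = 4.37, and the small difference is consistent with r having been rounded.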

Amygdala in Comparison with LIP

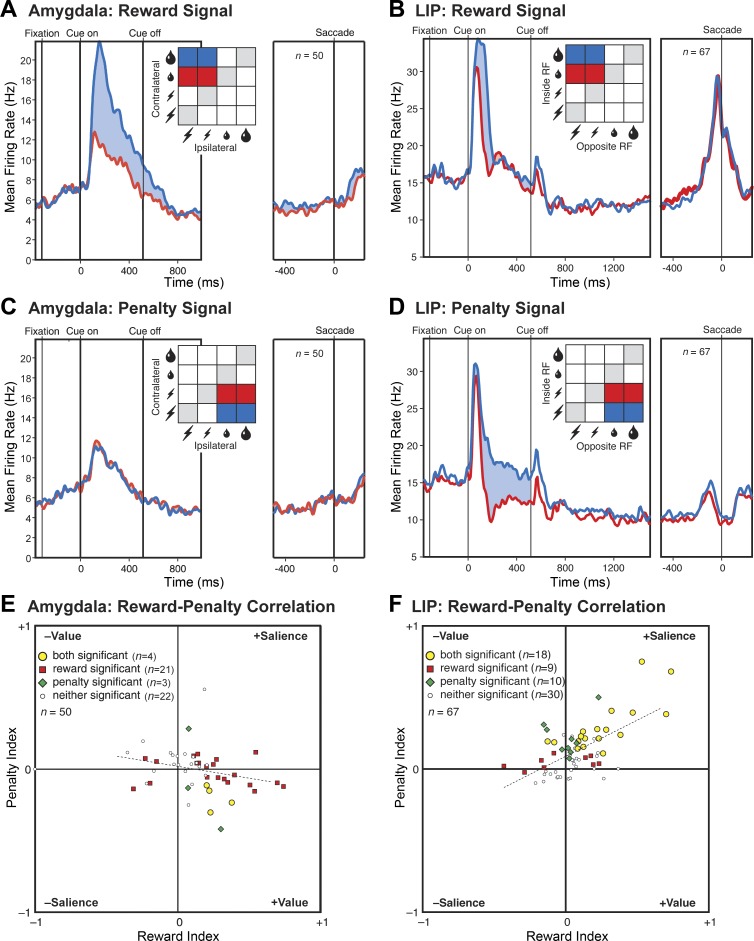

Results from the amygdala as described above allow direct comparison with results obtained in a previous study of LIP because they were obtained in the context of the same task and analyzed by means of the same measures (Leathers and Olson 2012). Here, we describe a series of comparisons between the amygdala and LIP focused successively on the reward signal (Fig. 6, A and B), the penalty signal (Fig. 6, C and D), and the cross-neuronal correlation between the two signals (Fig. 6, E and F). We also consider the degree to which cross-study differences in the monkeys' behavior (Fig. 7) could have contributed to cross-area differences in neuronal activity.

Fig. 6.

Comparison between the amygdala and LIP on 3 key effects. A and B: reward signal. Population mean firing rate as a function of time during the trial when the cue contralateral to the recording hemisphere predicted large (blue) vs. small (red) reward. Conventions as in Fig. 2A. C and D: penalty signal. Population mean firing rate as a function of time during the trial when the cue contralateral to the recording hemisphere predicted large (blue) vs. small (red) penalty. Conventions are as in Fig. 2D. E and F: correlation across neurons between the reward signal and the penalty signal. Conventions are as in Fig. 5C. Data from LIP are from a prior report by the authors (Leathers and Olson 2012).

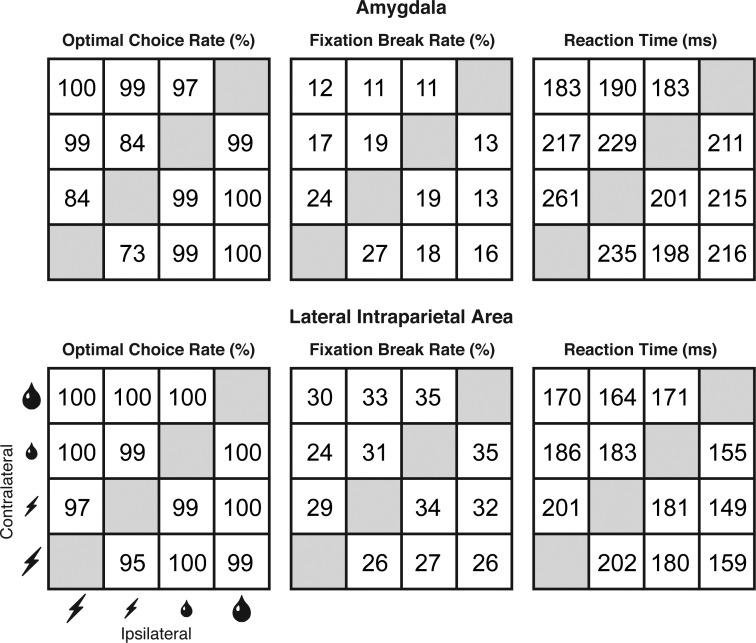

Fig. 7.

Impact of predicted reward size and predicted penalty size on 3 behavioral performance measures. Results from the current study of the amygdala are in the top row, and results from a prior study of LIP (Leathers and Olson 2012) are in the bottom row. In each panel, cells are arranged in rows according to the outcome offered by the cue contralateral to the recording hemisphere (from large reward at the top to large penalty at the bottom) and in columns according to the identity of the outcome offered by the cue ipsilateral to the recording hemisphere (from large reward at the right to large penalty at the left). Each measure represents the mean computed across all recording runs. There were 12 and 19 runs in amygdala monkeys 1 and 2, respectively (31 runs in total), and 16 and 20 runs in LIP monkeys 1 and 2, respectively (36 runs in total). Optimal Choice Rate: the percentage of trials on which the monkey chose the better offer, with offers ranked in descending order from large reward to small reward to small penalty to large penalty. Fixation Break Rate: out of all trials completed at least up to the point of the outcome-predicting display, the percentage subsequently terminated due to a fixation break. Reaction Time: the interval between offset of the central fixation spot and initiation of the saccade on trials culminating in choice and delivery of the optimal offer.

Comparison with LIP: reward signal.

We computed a reward-size signal for each neuron by subtracting the mean firing rate on small-reward trials from the mean firing rate on large-reward trials. The mean across neurons was 4.8 spikes per second in the amygdala and 4.5 spikes per second in LIP. We compared the distributions by use of a two-tailed t-test. This revealed that the means were statistically indistinguishable (P = 0.85, t = −0.19, df = 115). In each area, more neurons than expected by chance exhibited significant reward-dependent activity (25/50 in the amygdala and 27/67 in LIP), and, within this group, neurons favoring large reward were more numerous than expected by chance (21/25 in the amygdala and 20/27 in LIP). To compare the corresponding counts, we employed a χ2-test with Yates correction (df = 1). The fraction of neurons exhibiting significant dependence on reward size did not differ significantly between the two areas (P = 0.39, χ2 = 0.73). Among neurons exhibiting a significant dependence of firing rate on reward size, the fraction favoring large over small reward did not differ significantly between the two areas (P = 0.59, χ2 = 0.29). Thus reward signals in the amygdala and LIP were statistically indistinguishable with regard to both strength and frequency.
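The between-area count comparison can be sketched as a Yates-corrected χ2 test on a 2 × 2 table, with areas as rows and outcome categories as columns. The helper name `yates_chi2_2x2` is hypothetical. For 2 × 2 tables it should agree with `scipy.stats.chi2_contingency(table, correction=True)`. With the reward-sensitive counts above (25 of 50 in the amygdala, 27 of 67 in LIP), it gives χ2 ≈ 0.73 and P ≈ 0.39, matching the reported values.

```python
import math

def yates_chi2_2x2(a, b, c, d):
    """Yates-corrected chi-square test of independence (1 df) for the table
        [[a, b],
         [c, d]]
    with rows = areas and columns = outcome categories. Hypothetical helper."""
    n = a + b + c + d
    rows = (a + b, c + d)
    cols = (a + c, b + d)
    observed = ((a, b), (c, d))
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = rows[i] * cols[j] / n
            # Shrink |O - E| by 0.5 (not below zero) before squaring.
            dev = max(abs(observed[i][j] - expected) - 0.5, 0.0)
            chi2 += dev ** 2 / expected
    # Chi-square survival function for 1 df.
    p = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p

# Reward-sensitive neurons: amygdala 25 of 50, LIP 27 of 67.
chi2, p = yates_chi2_2x2(25, 50 - 25, 27, 67 - 27)
```

The same helper applies unchanged to the penalty counts (7/50 vs. 28/67) and to the value-vs.-salience counts (4/4 vs. 2/16) reported below.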

Comparison with LIP: penalty signal.

We computed a penalty-size signal for each neuron in each area by subtracting the mean firing rate on small-penalty trials from the mean firing rate on large-penalty trials. The mean across neurons was −0.042 spikes per second in the amygdala and 5.3 spikes per second in LIP. We compared the distributions by use of a two-tailed t-test. This revealed that the mean in LIP was significantly greater than the mean in the amygdala (P = 2.2 e−6, t = 5.0, df = 115). In the amygdala, only a few neurons (7/50) exhibited significant penalty-dependent activity; among these neurons, very few (1/7) favored large penalty. In LIP, many neurons (28/67) exhibited significant penalty-dependent activity; among these neurons, all (28/28) favored large penalty. To compare the relevant counts, we employed a χ2-test with Yates correction (df = 1). The fraction of neurons exhibiting significant dependence on penalty size was significantly greater in LIP than in the amygdala (P = 0.0023, χ2 = 9.26). Among neurons exhibiting significant sensitivity to penalty size, the fraction favoring large over small penalty was significantly greater in LIP than in the amygdala (P = 1.4 e−6, χ2 = 23.2). Thus penalty signals in the amygdala and LIP differed significantly in both strength and frequency.
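The reported degrees of freedom (df = 115 = 50 + 67 − 2) indicate a two-sample Student's t-test with pooled variance. A minimal sketch of the statistic follows. The helper name `pooled_t` and the example values are hypothetical. The two-tailed P value needs a t distribution routine, and `scipy.stats.ttest_ind(x, y)`, whose default is equal variances, should return the same t together with P. The sign of t depends only on which group is passed first.

```python
import math

def pooled_t(x, y):
    """Student's two-sample t statistic with pooled variance (hypothetical
    helper). Returns (t, df) with df = len(x) + len(y) - 2."""
    nx, ny = len(x), len(y)
    mx, my = sum(x) / nx, sum(y) / ny
    ssx = sum((v - mx) ** 2 for v in x)
    ssy = sum((v - my) ** 2 for v in y)
    df = nx + ny - 2
    pooled_var = (ssx + ssy) / df
    se = math.sqrt(pooled_var * (1.0 / nx + 1.0 / ny))
    return (mx - my) / se, df

t, df = pooled_t([1.0, 2.0, 3.0], [4.0, 5.0, 6.0])  # hypothetical values
```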

Comparison with LIP: correlation between reward and penalty signals.

The correlation across neurons between the normalized penalty signal and the normalized reward signal was significantly negative in the amygdala (r = −0.29) and significantly positive in LIP (r = 0.62). The fact that the correlations were individually significant and of opposite sign indicates that they were significantly different from each other. Direct statistical comparison of the coefficients confirmed this conclusion (P = 8.9 e−8, z = 5.35, n1 = 67, n2 = 50). A comparable effect was present at the level of neurons significantly sensitive to both reward size and penalty size. In 4 out of 4 such amygdala neurons, the sign of the reward effect was opposite to the sign of the penalty effect in accordance with an interpretation based on value. In 16 out of 18 such LIP neurons, the sign of the reward effect matched the sign of the penalty effect in accordance with an interpretation based on salience. A χ2-test with Yates correction revealed that the difference between the amygdala and LIP with regard to the distribution of counts between the value and salience categories was significant (P = 0.0028, χ2 = 8.94, df = 1). We conclude that the correlation between reward coding and penalty coding was genuinely reversed in the amygdala compared with LIP.
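Assuming the direct comparison used the standard Fisher r-to-z test for two independent correlations, it can be sketched as below. The helper name `compare_correlations` is hypothetical. Plugging in the rounded coefficients from the text (r = 0.62, n = 67 for LIP; r = −0.29, n = 50 for the amygdala) gives z of about 5.3. That is close to the reported z = 5.35, and the small gap is consistent with the coefficients having been rounded to two decimals.

```python
import math

def compare_correlations(r1, n1, r2, n2):
    """Two-tailed test for a difference between two independent Pearson
    correlations via Fisher's r-to-z transform (hypothetical helper)."""
    z1, z2 = math.atanh(r1), math.atanh(r2)
    se = math.sqrt(1.0 / (n1 - 3) + 1.0 / (n2 - 3))
    z = (z1 - z2) / se
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-tailed normal P value
    return z, p

z, p = compare_correlations(0.62, 67, -0.29, 50)  # LIP vs. amygdala
```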

Comparison with LIP: behavior.

The behavior of the monkeys in the amygdala and LIP studies was not identical (Fig. 7). This raises the possibility that the differences in neuronal results arose from neuronal sensitivity to behavioral differences present during data collection. To explore this possibility, we carried out analyses based on 5 measures of behavior: 1) the rate of choosing reward over penalty; 2) the rate of choosing large reward over small reward; 3) the rate of choosing small penalty over large penalty; 4) the fixation-break rate on trials pitting a reward against a penalty; and 5) the reaction time on trials pitting a reward against a penalty. Measures 1–3 were sensitive to the way in which the monkeys evaluated rewards and penalties. Measures 4–5 were potentially sensitive to global states such as motivation and arousal. For each neuron in the amygdala and LIP, we extracted all 5 behavioral measures from the run during which neuronal data collection occurred. The resulting data set consisted of 5 behavioral measures for each of 20 neurons in amygdala monkey 1, 30 neurons in amygdala monkey 2, 28 neurons in LIP monkey 1, and 39 neurons in LIP monkey 2.

We proceeded to carry out a series of analyses in which we first factored out variance in a given neural measure explained by the 5 behavioral variables in a multivariate regression analysis and then tested whether the amygdala and LIP differed significantly with respect to the neural residuals.
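The residualization step can be sketched with ordinary least squares. This is a sketch under stated assumptions, not the authors' procedure. It assumes a single regression with an intercept, fitted across all 117 neurons pooled over areas. The function name `behavior_residuals` is hypothetical, and the random numbers stand in for the 5 behavioral measures. By construction, the residuals are uncorrelated with every regressor. The area comparison is then an ordinary two-sample t-test on the residuals (for example, `scipy.stats.ttest_ind`).

```python
import numpy as np

def behavior_residuals(neural, behavior):
    """Residuals of `neural` (shape [n_neurons]) after ordinary least-squares
    regression on `behavior` (shape [n_neurons, n_measures]) plus an intercept.
    Hypothetical helper; assumes one fit pooled across both areas."""
    design = np.column_stack([np.ones(len(neural)), behavior])
    coef, *_ = np.linalg.lstsq(design, neural, rcond=None)
    return neural - design @ coef

# Hypothetical data: 50 "amygdala" neurons followed by 67 "LIP" neurons.
rng = np.random.default_rng(0)
behavior = rng.normal(size=(117, 5))  # stand-ins for the 5 behavioral measures
neural = behavior @ np.array([1.0, 0.0, 0.5, 0.0, -0.3]) + rng.normal(size=117)
is_lip = np.arange(117) >= 50

resid = behavior_residuals(neural, behavior)
area_difference = resid[is_lip].mean() - resid[~is_lip].mean()
```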

Reward signal.

After factoring out variance in the reward signal dependent on behavior, we found that signal strength was indistinguishable between the amygdala and LIP just as in the original analysis (P = 0.68, t = 0.42, df = 115, 2-tailed t-test).

Penalty signal.

After factoring out variance in the penalty signal dependent on behavior, we found that signal strength was still stronger in LIP than in the amygdala but that the difference was no longer significant (P = 0.195, t = 1.3, df = 115, 2-tailed t-test). This outcome could have occurred either because the penalty signal genuinely depended on behavior or because the penalty signal and behavior happened independently to vary across studies. If the effect were genuine, then the penalty signal should have depended on behavior within each monkey according to the same pattern in which it depended on behavior across monkeys. However, the within-monkey and across-monkey patterns of dependence, as reflected in regression coefficients, were anticorrelated (Table 1). Reaction time was the sole behavioral measure for which the within-monkey and across-monkey analyses yielded regression coefficients with the same sign. To test whether the difference between areas with regard to the penalty signal could have arisen from its dependence on reaction time, we factored out variance in the penalty signal dependent on reaction time. Contrary to the idea that the difference between areas with regard to the penalty signal was an artifact of the behavioral difference, we found that the residual penalty signal was significantly greater in LIP than in the amygdala (P = 0.0038, t = 2.95, df = 115, 2-tailed t-test).

Table 1.

Measures relevant to determining whether the difference between the amygdala and LIP with regard to the penalty signal arose from differences in behavior during the collection of data from the 2 areas

| | Penalty Signal, Spikes per Second | Choice Rate, %: Reward vs. Penalty | Choice Rate, %: Large vs. Small Reward | Choice Rate, %: Small vs. Large Penalty | Fixation Break Rate, % | Reaction Time, ms |
|---|---|---|---|---|---|---|
| AM M1 (12 runs) | −0.39 | 98.9 | 98.4 | 71.4 | 11.2 | 228 |
| AM M2 (19 runs) | 0.19 | 96.8 | 98.6 | 82.4 | 17.0 | 194 |
| LIP M1 (16 runs) | 6.49 | 99.5 | 100 | 97.1 | 7.0 | 188 |
| LIP M2 (20 runs) | 4.47 | 99.6 | 99.8 | 95.4 | 48.2 | 160 |
| Between-monkey β-coefficients | | 1.7 (P = 0.34) | 4.1 (P = 0.015) | 0.26 (P = 0.064) | 0.034 (P = 0.81) | −0.080 (P = 0.32) |
| Within-monkey β-coefficients | | −0.13 (P = 0.80) | −0.013 (P = 0.96) | −0.084 (P = 0.38) | −0.078 (P = 0.26) | −0.0098 (P = 0.79) |

For each of 2 monkeys in the current amygdala study (AM M1 and M2) and each of 2 monkeys in a prior LIP study (Leathers and Olson 2012), we provide the average of the following measures as computed across all neurons in the monkey. Penalty signal: firing rate on large-penalty trials minus firing rate on small-penalty trials for all trials in which the penalty cue was presented contralateral to the recording site, a reward cue was presented ipsilateral to the recording site and the monkey chose reward. Choice rate: reward vs. penalty: percentage of trials pitting a reward against a penalty in which the monkey chose 1 of the options and that option was reward. Choice rate: large vs. small reward: percentage of trials pitting a large reward against a small reward on which the monkey chose large reward. Choice rate: small vs. large penalty: percentage of trials pitting a small penalty against a large penalty on which the monkey chose small penalty. Fixation break rate: percentage of trials pitting a reward against a penalty on which the monkey aborted the trial by breaking fixation. Reaction time: interval between fixation-spot offset and initiation of the saccade on trials pitting a reward against a penalty on which the monkey chose reward. We also provide β-coefficients and associated P values from univariate regression analyses assessing the dependence of between-monkey variance and within-monkey variance on each of the behavioral variables. The data set consisted of the penalty signal and 5 behavioral measures for each of 50 neurons in the amygdala and 67 neurons in LIP. To isolate between-monkey variance, we substituted for each neuronal and behavioral measure the mean for the monkey. To isolate within-monkey variance, we substituted for each neuronal and behavioral measure its observed value minus its mean for the monkey. Note that between-monkey β-coefficients bear no systematic relation to within-monkey β-coefficients.
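The split into between-monkey and within-monkey variance described in this note can be sketched as follows. Each value is replaced either by its monkey mean or by its deviation from that mean, and a univariate regression slope is fitted to each version. The function names and the six-neuron, two-monkey data set are hypothetical. The toy values were chosen so that the two slopes of the penalty signal on reaction time have opposite signs, illustrating that the two analyses need not agree.

```python
from collections import defaultdict

def between_within(values, groups):
    """Split `values` into a between-group part (each value replaced by its
    group mean) and a within-group part (value minus its group mean)."""
    sums, counts = defaultdict(float), defaultdict(int)
    for v, g in zip(values, groups):
        sums[g] += v
        counts[g] += 1
    means = {g: sums[g] / counts[g] for g in sums}
    between = [means[g] for g in groups]
    within = [v - means[g] for v, g in zip(values, groups)]
    return between, within

def slope(x, y):
    """Univariate OLS slope (beta) of y on x with an intercept; x not constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx

# Hypothetical data: 3 neurons from each of 2 monkeys.
groups = ["M1", "M1", "M1", "M2", "M2", "M2"]
reaction_time = [200.0, 210.0, 220.0, 160.0, 170.0, 180.0]  # ms
penalty_signal = [0.0, 0.5, 1.0, 5.0, 5.5, 6.0]             # spikes/s

rt_between, rt_within = between_within(reaction_time, groups)
pen_between, pen_within = between_within(penalty_signal, groups)
beta_between = slope(rt_between, pen_between)  # -0.125 for these values
beta_within = slope(rt_within, pen_within)     # 0.05 for these values
```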

Correlation between reward and penalty signals.

After factoring out variance in the normalized reward signal and the normalized penalty signal dependent on behavior, we analyzed the correlation between the residuals. We found that the correlation was significantly negative in the amygdala (r = −0.29, F = 4.4, P = 0.041, n = 50) and significantly positive in LIP (r = 0.59, F = 34.5, P = 1.6 e−7, n = 67) and that the two correlation coefficients differed significantly from each other (P = 4.0 e−7, z = 5.07, n1 = 50, n2 = 67). We conclude that amygdala neurons genuinely differed from LIP neurons in exhibiting a value-based rather than salience-based pattern of correlation between reward and penalty signals.

DISCUSSION

A key goal of this study was to determine whether neurons of the amygdala are sensitive to value in the context of a task in which LIP neurons exhibit sensitivity to motivational salience (Leathers and Olson 2012). The principal conclusion is that amygdala neurons indeed are sensitive to value, as indicated by a negative correlation between reward and penalty signals, whereas LIP neurons are sensitive to motivational salience, as indicated by a positive correlation. Secondary observations include the following. There is a strong tendency in both the amygdala and LIP for neurons to respond more strongly to cues predicting large reward than to cues predicting small reward. However, the areas differ with regard to responses to penalty-predicting cues. Amygdala neurons are weakly sensitive to predicted penalty size and weakly favor small penalty over large, whereas LIP neurons are highly sensitive to predicted penalty size and strongly favor large penalty over small. It is possible although unlikely that the between-area difference with regard to the strength of the penalty signal arose from a difference in behavior at the time of data collection. This reservation does not, however, apply to the key finding of a distinction between sensitivity to value in the amygdala and sensitivity to motivational salience in LIP.

The results of this study are concordant with the general view that the amygdala belongs to a system, including orbitofrontal cortex, responsible for representing the values associated with cues, whereas LIP belongs to a system, including dorsolateral prefrontal cortex, responsible for generating behavioral responses to the cues (Cai and Padoa-Schioppa 2014; Hunt et al. 2015; Kim et al. 2008; Padoa-Schioppa 2011). The properties of amygdala neurons, as described here, closely resemble the properties of orbitofrontal cortex neurons as characterized previously in the context of a similar task (Roesch and Olson 2004). That task did not include conditions pitting large reward against large penalty, large reward against small reward, or large penalty against small penalty. Nevertheless, the results justify concluding that neurons in both areas exhibit a strong preference for large reward, a weak preference for small penalty, and a negative correlation between reward and penalty signals. We note further that neurons in the amygdala and orbitofrontal cortex exhibit little if any activity related to the direction of the saccade impending at the end of the trial (Roesch and Olson 2004), whereas direction-selective neuronal activity appears during the delay period both in LIP (Leathers and Olson 2012) and in dorsolateral prefrontal cortex (Cai and Padoa-Schioppa 2014; Hunt et al. 2015; Kim et al. 2008; Padoa-Schioppa 2011).

In the framework of the view that amygdala and orbitofrontal neurons encode value, it seems puzzling that they do not distinguish more forcefully between large and small penalties. Several explanations could reconcile the relative paucity of penalty-related activity with the idea that neurons encode value. First, the penalties used in our studies, periods of effortful fixation, may be less potent than the rewards. This interpretation fits with the observation that monkeys, when confronted with a choice between a large penalty and a small penalty, chose optimally less often than when confronted with a choice between a large reward and a small reward. Second, neuronal activity may have reflected chosen value rather than offer value (Padoa-Schioppa and Assad 2006). The conditions of the experiment allowed assessing the dependence of neuronal activity on penalties as offers but not on penalties as chosen outcomes, because penalties were rarely chosen. Finally, a larger sample of neurons might have revealed a more robust penalty signal.

We turn now to considering the relation between our results and the results of previous studies of the amygdala. Many previous reports have described neurons that fire more strongly in expectation of a reward than of a penalty and neurons that fire more strongly in expectation of a penalty than of a reward (Belova et al. 2008; Paton et al. 2006; Peck and Salzman 2014b; Saddoris et al. 2005; Sanghera et al. 1979; Schoenbaum et al. 1999; Zhang et al. 2013). Neurons in the two categories are intermingled anatomically but are distinct in functional connectivity (Zhang et al. 2013) and in the rate at which they adjust to changes in cue significance (Morrison et al. 2011). Although it is tempting to conclude that the two populations encode positive and negative value, respectively, we cannot draw this conclusion on the basis of the stated observations because reward and penalty may have differed in salience as well as value (Namburi et al. 2016). Inasmuch as the key question of the present study is whether amygdala neurons encode value or salience, we will confine comparative comments in ensuing paragraphs to studies based on designs marginally capable of distinguishing value from salience by virtue of having used cues that predict at least three outcomes: positive (reward), intermediate (small reward or none), and negative (penalty). We will comment successively on the reward signal, the penalty signal, and the correlation between the two signals, as established in animal studies. We will conclude with commentary on limitations affecting the ability of human functional imaging studies to distinguish the encoding of value from the encoding of salience in the amygdala.

Reward Signal

We observed a strong preponderance of neurons favoring large over small reward. An early report based on measuring delay-period activity preceding either reward or no reward indicated that neurons favoring reward over no reward and vice versa occur in approximately equal numbers: see Supplementary Fig. 2A of Paton et al. (2006). However, later reports based on cue-period activity indicated that neurons favoring large reward are moderately [see Fig. 4E of Belova et al. (2008)] or markedly [see Table 1 and Fig. 3A of Hirai et al. (2009)] preponderant. The preponderance of neurons firing more strongly for the better outcome is in accordance with the findings of the present study.

Penalty Signal

We found that large-penalty and small-penalty cues elicited indistinguishable responses at the level of the population. Some individual neurons did exhibit significant sensitivity to penalty size, with neurons favoring small penalty preponderant. Comparison with prior studies is difficult because these used only one size of penalty. An early report based on delay-period activity shows roughly equal numbers of neurons favoring air puff over a neutral outcome and vice versa: see Supplementary Fig. 2B of Paton et al. (2006). A later report based on cue-period activity shows a preponderance of neurons preferring neutral outcome over air puff: see cases denoted as statistically significant in Fig. 5D of Peck and Salzman (2014b). The latter result is like ours in that the majority of neurons favored the better outcome. Studies other than these employed comparisons that confound penalty and reward sensitivity. These include comparing a condition in which an air puff was impending with a condition in which a small reward was impending (Belova et al. 2008) and comparing a condition in which a reward was offered with a condition in which a penalty was threatened (Hirai et al. 2009). That neurons in prior studies differentiated a penalty from a neutral outcome and that in at least one prior study neurons preferring the neutral outcome were preponderant is concordant with our results.

Correlation Between the Reward Signal and the Penalty Signal

The key finding of the present study is that the penalty signal was negatively correlated with the reward signal across neurons. This outcome indicates that value coding was predominant and that neurons sensitive to salience, if present in our task, were a minority. No previous results allow direct comparison because no previous study has varied penalty size. However, certain prior findings add weight to the arguments for value or salience, as we now note.

Evidence for value sensitivity.

One prior report describes the reward signal (large vs. small reward) as being negatively correlated with a mixed signal (air puff vs. small reward): see Fig. 4E of Belova et al. (2008). This would constitute evidence for value coding if small reward were less intense than either large reward or air puff. However, for some monkeys, the appetitive value of a small reward exceeds in salience the aversive value of an air puff (Fiorillo et al. 2013).

Evidence for salience sensitivity.

Peck and Salzman (2014b) had monkeys perform a task in which two cues indicated the outcome that would follow from success or failure in detecting an event at the corresponding location later in the trial. This design placed a strategic advantage on attending to cues that promised a reward or threatened a penalty as distinct from a cue predicting a neutral outcome. Behavioral measures indicated that monkeys did indeed allocate attention preferentially to these cues. The occurrence of stronger neuronal responses to reward-predicting and penalty-predicting cues than to neutral cues can thus be explained on the assumption that neuronal activity in the amygdala is modulated by top-down attention as distinct from motivational salience. Shabel and Janak (2009), monitoring neuronal activity in rat amygdala in a Pavlovian paradigm, found that cues predicting a reward and cues predicting an electric shock elicited similar responses when each was referenced to the response elicited by a neutral cue. Although this result might indicate encoding of salience, complications in the design of the experiment render interpretation difficult. These include using a visual reward cue and an auditory shock cue, delivering reward and penalty in separate blocks, and referencing responses to both cues to responses elicited by a neutral visual cue in the reward block.

Evidence for unsigned prediction error.

Amygdala neurons have been reported to signal unsigned prediction error (Belova et al. 2007; Roesch et al. 2010; Roesch et al. 2012). This signal, representing the unsigned difference between predicted value and delivered value, is loosely analogous to a salience signal, which represents unsigned value. However, it is thought to contribute to learning through enhancing the associability of cues followed by unexpected outcomes, not to drive choice behavior and regulate motivation. Prediction errors were minimal in our study because the cue-outcome associations were highly overlearned.

From the preceding observations, we draw the following general conclusion. Prior studies have afforded hints that amygdala neurons are sensitive both to value and to salience; however, none has manipulated both reward size and penalty size so as to resolve the matter with a correlation analysis of the sort employed in this study.

Human Studies

Numerous reports based on functional imaging in humans have described salience-related activity in the amygdala (Anderson et al. 2003; Cooper and Knutson 2008; Garavan et al. 2001; Schlund and Cataldo 2010; Schlund et al. 2011; Small et al. 2003; Winston et al. 2005). The essential finding is that conditioned and unconditioned appetitive and aversive events elicit stronger responses than neutral events. This observation could genuinely reflect the presence of neurons sensitive to outcome salience, but it could also arise from the amygdala containing a mixture of positive-value and negative-value neurons. In harmony with the latter interpretation, a recent study using voxel-based decoding has provided evidence that the amygdala is sensitive to value, with positive-value and negative-value patterns predominating in different voxels (Jin et al. 2015). The distinction between value coding and salience coding, based as it is on how individual neurons put together information about reward size and penalty size, is subject to definitive resolution only at the single-neuron level.

GRANTS

Support was received from National Institutes of Health (NIH) Grants R01-EY-018620, R01-EY-024912, P50-MH-103204, and K08-MH-080329 and the Pennsylvania Department of Health Commonwealth Universal Research Enhancement Program. Technical support was received from NIH Grants P30-EY-008098 and P41-RR-03631.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the author(s).

AUTHOR CONTRIBUTIONS

M.L.L. and C.R.O. conceived and designed research; M.L.L. performed experiments; M.L.L. and C.R.O. analyzed data; M.L.L. and C.R.O. interpreted results of experiments; M.L.L. and C.R.O. prepared figures; M.L.L. and C.R.O. drafted manuscript; M.L.L. and C.R.O. edited and revised manuscript; M.L.L. and C.R.O. approved final version of manuscript.

ACKNOWLEDGMENTS

We thank Karen McCracken for technical assistance.

REFERENCES

- Anderson AK, Christoff K, Stappen I, Panitz D, Ghahremani DG, Glover G, Gabrieli JD, Sobel N. Dissociated neural representations of intensity and valence in human olfaction. Nat Neurosci 6: 196–202, 2003. doi: 10.1038/nn1001.

- Barberini CL, Morrison SE, Saez A, Lau B, Salzman CD. Complexity and competition in appetitive and aversive neural circuits. Front Neurosci 6: 170, 2012. doi: 10.3389/fnins.2012.00170.

- Belova MA, Paton JJ, Morrison SE, Salzman CD. Expectation modulates neural responses to pleasant and aversive stimuli in primate amygdala. Neuron 55: 970–984, 2007. doi: 10.1016/j.neuron.2007.08.004.

- Belova MA, Paton JJ, Salzman CD. Moment-to-moment tracking of state value in the amygdala. J Neurosci 28: 10023–10030, 2008. doi: 10.1523/JNEUROSCI.1400-08.2008.

- Cai X, Padoa-Schioppa C. Contributions of orbitofrontal and lateral prefrontal cortices to economic choice and the good-to-action transformation. Neuron 81: 1140–1151, 2014. doi: 10.1016/j.neuron.2014.01.008.

- Cooper JC, Knutson B. Valence and salience contribute to nucleus accumbens activation. Neuroimage 39: 538–547, 2008. doi: 10.1016/j.neuroimage.2007.08.009.

- Fiorillo CD, Song MR, Yun SR. Multiphasic temporal dynamics in responses of midbrain dopamine neurons to appetitive and aversive stimuli. J Neurosci 33: 4710–4725, 2013. doi: 10.1523/JNEUROSCI.3883-12.2013.

- Garavan H, Pendergrass JC, Ross TJ, Stein EA, Risinger RC. Amygdala response to both positively and negatively valenced stimuli. Neuroreport 12: 2779–2783, 2001. doi: 10.1097/00001756-200108280-00036.

- Grabenhorst F, Hernádi I, Schultz W. Prediction of economic choice by primate amygdala neurons. Proc Natl Acad Sci USA 109: 18950–18955, 2012. doi: 10.1073/pnas.1212706109.

- Grabenhorst F, Hernadi I, Schultz W. Primate amygdala neurons evaluate the progress of self-defined economic choice sequences. eLife 5: e18731, 2016. doi: 10.7554/eLife.18731.

- Hernádi I, Grabenhorst F, Schultz W. Planning activity for internally generated reward goals in monkey amygdala neurons. Nat Neurosci 18: 461–469, 2015. doi: 10.1038/nn.3925.

- Hirai D, Hosokawa T, Inoue M, Miyachi S, Mikami A. Context-dependent representation of reinforcement in monkey amygdala. Neuroreport 20: 558–562, 2009. doi: 10.1097/WNR.0b013e3283294a2f. [DOI] [PubMed] [Google Scholar]

- Hunt LT, Behrens TE, Hosokawa T, Wallis JD, Kennerley SW. Capturing the temporal evolution of choice across prefrontal cortex. eLife 4: e11945, 2015. doi: 10.7554/eLife.11945. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jin J, Zelano C, Gottfried JA, Mohanty A. Human amygdala represents the complete spectrum of subjective valence. J Neurosci 35: 15145–15156, 2015. doi: 10.1523/JNEUROSCI.2450-15.2015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kim S, Hwang J, Lee D. Prefrontal coding of temporally discounted values during intertemporal choice. Neuron 59: 161–172, 2008. doi: 10.1016/j.neuron.2008.05.010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Leathers ML, Olson CR. In monkeys making value-based decisions, LIP neurons encode cue salience and not action value. Science 338: 132–135, 2012. doi: 10.1126/science.1226405. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Leathers ML, Olson CR. Response to comment on “In monkeys making value-based decisions, LIP neurons encode cue salience and not action value”. Science 340: 430, 2013. doi: 10.1126/science.1233367. [DOI] [PubMed] [Google Scholar]

- Morrison SE, Saez A, Lau B, Salzman CD. Different time courses for learning-related changes in amygdala and orbitofrontal cortex. Neuron 71: 1127–1140, 2011. doi: 10.1016/j.neuron.2011.07.016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Namburi P, Al-Hasani R, Calhoon GG, Bruchas MR, Tye KM. Architectural representation of valence in the limbic system. Neuropsychopharmacology 41: 1697–1715, 2016. doi: 10.1038/npp.2015.358. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Newsome WT, Glimcher PW, Gottlieb J, Lee D, Platt ML. Comment on “In monkeys making value-based decisions, LIP neurons encode cue salience and not action value”. Science 340: 430, 2013. doi: 10.1126/science.1233214. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Padoa-Schioppa C. Neurobiology of economic choice: a good-based model. Annu Rev Neurosci 34: 333–359, 2011. doi: 10.1146/annurev-neuro-061010-113648. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Padoa-Schioppa C, Assad JA. Neurons in the orbitofrontal cortex encode economic value. Nature 441: 223–226, 2006. doi: 10.1038/nature04676. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Paton JJ, Belova MA, Morrison SE, Salzman CD. The primate amygdala represents the positive and negative value of visual stimuli during learning. Nature 439: 865–870, 2006. doi: 10.1038/nature04490. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Paxinos G, Huang XF, Toga AW. The Rhesus Monkey Brain in Stereotaxic Coordinates. San Diego, CA: Academic Press, 2000. [Google Scholar]

- Peck CJ, Lau B, Salzman CD. The primate amygdala combines information about space and value. Nat Neurosci 16: 340–348, 2013. doi: 10.1038/nn.3328. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Peck CJ, Salzman CD. The amygdala and basal forebrain as a pathway for motivationally guided attention. J Neurosci 34: 13757–13767, 2014a. doi: 10.1523/JNEUROSCI.2106-14.2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Peck CJ, Salzman CD. Amygdala neural activity reflects spatial attention towards stimuli promising reward or threatening punishment. eLife 3: e04478, 2014b. doi: 10.7554/eLife.04478. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Peck EL, Peck CJ, Salzman CD. Task-dependent spatial selectivity in the primate amygdala. J Neurosci 34: 16220–16233, 2014. doi: 10.1523/JNEUROSCI.3217-14.2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Roesch MR, Calu DJ, Esber GR, Schoenbaum G. Neural correlates of variations in event processing during learning in basolateral amygdala. J Neurosci 30: 2464–2471, 2010. doi: 10.1523/JNEUROSCI.5781-09.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Roesch MR, Esber GR, Li J, Daw ND, Schoenbaum G. Surprise! Neural correlates of Pearce-Hall and Rescorla-Wagner coexist within the brain. Eur J Neurosci 35: 1190–1200, 2012. doi: 10.1111/j.1460-9568.2011.07986.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Roesch MR, Olson CR. Neuronal activity related to reward value and motivation in primate frontal cortex. Science 304: 307–310, 2004. doi: 10.1126/science.1093223. [DOI] [PubMed] [Google Scholar]

- Saddoris MP, Gallagher M, Schoenbaum G. Rapid associative encoding in basolateral amygdala depends on connections with orbitofrontal cortex. Neuron 46: 321–331, 2005. doi: 10.1016/j.neuron.2005.02.018. [DOI] [PubMed] [Google Scholar]

- Saez A, Rigotti M, Ostojic S, Fusi S, Salzman CD. Abstract context representations in primate amygdala and prefrontal cortex. Neuron 87: 869–881, 2015. doi: 10.1016/j.neuron.2015.07.024. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sanghera MK, Rolls ET, Roper-Hall A. Visual responses of neurons in the dorsolateral amygdala of the alert monkey. Exp Neurol 63: 610–626, 1979. doi: 10.1016/0014-4886(79)90175-4. [DOI] [PubMed] [Google Scholar]

- Schlund MW, Cataldo MF. Amygdala involvement in human avoidance, escape and approach behavior. Neuroimage 53: 769–776, 2010. doi: 10.1016/j.neuroimage.2010.06.058. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Schlund MW, Magee S, Hudgins CD. Human avoidance and approach learning: evidence for overlapping neural systems and experiential avoidance modulation of avoidance neurocircuitry. Behav Brain Res 225: 437–448, 2011. doi: 10.1016/j.bbr.2011.07.054. [DOI] [PubMed] [Google Scholar]

- Schoenbaum G, Chiba AA, Gallagher M. Neural encoding in orbitofrontal cortex and basolateral amygdala during olfactory discrimination learning. J Neurosci 19: 1876–1884, 1999. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Shabel SJ, Janak PH. Substantial similarity in amygdala neuronal activity during conditioned appetitive and aversive emotional arousal. Proc Natl Acad Sci USA 106: 15031–15036, 2009. doi: 10.1073/pnas.0905580106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Small DM, Gregory MD, Mak YE, Gitelman D, Mesulam MM, Parrish T. Dissociation of neural representation of intensity and affective valuation in human gustation. Neuron 39: 701–711, 2003. doi: 10.1016/S0896-6273(03)00467-7. [DOI] [PubMed] [Google Scholar]

- Winston JS, Gottfried JA, Kilner JM, Dolan RJ. Integrated neural representations of odor intensity and affective valence in human amygdala. J Neurosci 25: 8903–8907, 2005. doi: 10.1523/JNEUROSCI.1569-05.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zhang W, Schneider DM, Belova MA, Morrison SE, Paton JJ, Salzman CD. Functional circuits and anatomical distribution of response properties in the primate amygdala. J Neurosci 33: 722–733, 2013. doi: 10.1523/JNEUROSCI.2970-12.2013. [DOI] [PMC free article] [PubMed] [Google Scholar]