Abstract

Objectives

We evaluated the accuracy of augmented reality (AR)-based navigation assistance through simulation of bone tumours in a pig femur model.

Methods

We developed an AR-based navigation system for bone tumour resection, which could be used on a tablet PC. To simulate a bone tumour in the pig femur, a cortical window was made in the diaphysis and bone cement was inserted. A total of 123 pig femurs were used and tumour resection was simulated with AR-assisted resection (164 resections in 82 femurs, half by an orthopaedic oncology expert and half by an orthopaedic resident) and resection with the conventional method (82 resections in 41 femurs). In the conventional group, resection was performed after measuring the distance from the edge of the condyle to the expected resection margin with a ruler as per routine clinical practice.

Results

The mean error of 164 resections in 82 femurs in the AR group was 1.71 mm (0 to 6). The mean error of 82 resections in 41 femurs in the conventional resection group was 2.64 mm (0 to 11) (p < 0.05, one-way analysis of variance). The probabilities of a surgeon obtaining a 10 mm surgical margin with a 3 mm tolerance were 90.2% in AR-assisted resections, and 70.7% in conventional resections.

Conclusion

We demonstrated that the accuracy of tumour resection was satisfactory with the help of the AR navigation system, with the tumour shown as a virtual template. In addition, this concept made the navigation system simple and available without additional cost or time.

Cite this article: H. S. Cho, Y. K. Park, S. Gupta, C. Yoon, I. Han, H-S. Kim, H. Choi, J. Hong. Augmented reality in bone tumour resection: An experimental study. Bone Joint Res 2017;6:137–143.

Keywords: Augmented reality, Bone tumour, Navigation

Article focus

Intra-operative navigation systems reportedly help to define the extent of the tumour, and allow more precise surgery to be performed.

A somewhat cumbersome registration process, additional surgical time and high cost are among the factors cited as hindering their routine use.

We evaluated the accuracy of bone tumour resection in pig femurs using an augmented reality (AR)-based navigation which could be used on a tablet PC.

Key messages

Accuracy of tumour resection was satisfactory with the help of an AR navigation system, with the tumour shown as a virtual template.

Even though most limb salvage surgery can be performed with conventional techniques, we believe that our AR-based navigation system deserves further attention.

Strengths and limitations

This study concluded that AR-based navigation improves accuracy of surgical margins in both experienced and inexperienced hands.

The system can help guide the surgeon without a complex set-up process or crowding in the operating room, with minimal additional time and cost.

Use of this programme is confined to surgery for long bones.

Introduction

The exact role of navigation in orthopaedic oncology continues to evolve. Traditionally, most limb salvage surgeries are successfully and safely performed with pre-operative planning based on MR images, with or without the assistance of intra-operative fluoroscopy. Intra-operative navigation systems reportedly help to define the extent of the tumour, and allow more precise surgery to be performed.1 In particular, they could be helpful for pelvic/sacral tumour resections and may enable a safe resection margin when a tumour is adjacent to the joint.2

Despite its potentially favourable attributes in terms of the resection margin, navigation has not been widely adopted in bone tumour surgery. A somewhat cumbersome registration process, additional surgical time and high cost are among the factors cited as hindering its routine use.3

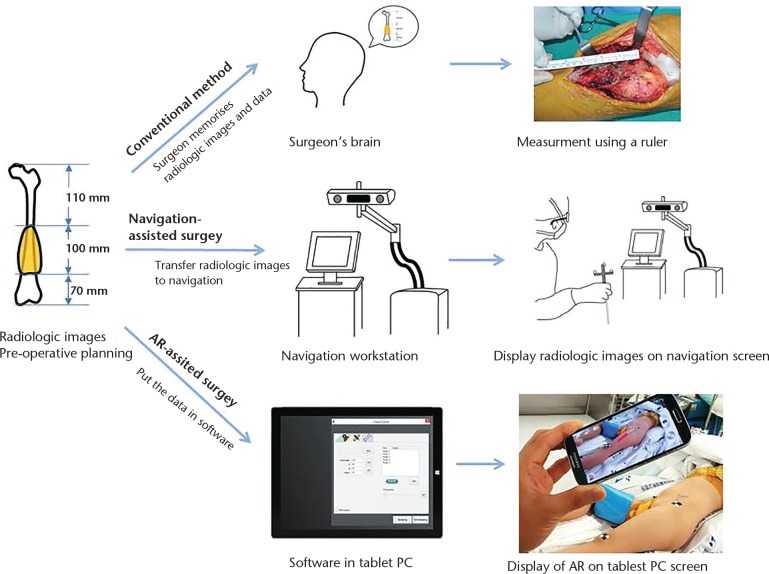

Recently, we developed an augmented reality (AR)-based navigation system for bone tumour resection, and reported its accuracy, simplicity and convenience.4 AR is a term describing the overlay of information over real-world imagery. In the medical arena, the information usually means computer-generated imagery or radiological data. The key focus of our software development was on simplifying the usage of navigation for resecting tumours in long bones. The information that a surgeon would like when resecting a malignant tumour from a long bone is fairly basic: the longitudinal relationships between the tumour, normal tissue and the adjacent joint. The navigation system in this study does not require radiological image segmentation for regions of interest, conventional registration or camera-tracker calibration. Instead of segmentation and the employment of an external tracker, we used a graphic model that represents a virtual ruler as a reference for bone cutting, and a portable tablet PC to track the tools and display the information measured (Fig. 1). In this study, we evaluated the accuracy of AR-based navigation assistance through simulation of bone tumours in a pig femur model. In addition, we describe a clinical case of a tumour in the tibial diaphysis which was resected with the help of the AR-based navigation.

Fig. 1.

Schematic diagrams of workflows according to the methods for determination of tumour location (AR, augmented reality).

Materials and Methods

Development of the AR-based navigation system

In this study, a tablet PC (Surface Pro 3; Microsoft, Redmond, Washington) served simultaneously as a work station and as a position tracker. We used the mono camera embedded in the tablet PC as the tracker, instead of the commercial tracker used in conventional navigation systems. The PC camera recorded 2.0 megapixel images at 30 frames per second. Position changes of the target object and surgical instrument were estimated from the images captured by the camera. We captured 30 chessboard images, each in a different position, and obtained the intrinsic camera parameters using Zhang’s camera calibration algorithm.5 The camera in the tablet PC tracks reference markers which have a square graphic pattern with binary code. The four corner points were obtained from the square pattern using an image processing technique by Nicolau et al6 and executed using the ArUco library.7 The position of the reference was computed by a direct least-squares perspective-n-points algorithm.8
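To illustrate what the intrinsic camera parameters contribute to marker tracking, the following is a minimal pinhole-projection sketch in plain Python. It is not the code used in the study: the focal lengths, principal point, marker size and marker depth are hypothetical values chosen for illustration, and lens distortion is ignored. A perspective-n-points solver estimates the marker pose by inverting this kind of mapping from the four detected corner points.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intrinsics:
    """Pinhole camera intrinsics in pixels (hypothetical values, not from the study)."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


def project(point_cam, k):
    """Project a 3D point in camera coordinates (mm) to pixel coordinates."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must lie in front of the camera")
    return (k.fx * x / z + k.cx, k.fy * y / z + k.cy)


def marker_corners(side_mm, depth_mm):
    """Corners of a square marker facing the camera, centred on the optical axis."""
    h = side_mm / 2.0
    return [(-h, -h, depth_mm), (h, -h, depth_mm), (h, h, depth_mm), (-h, h, depth_mm)]


# Assumed intrinsics for a 1920 x 1080 image; a real system would use calibrated values.
K = Intrinsics(fx=1400.0, fy=1400.0, cx=960.0, cy=540.0)

# A hypothetical 40 mm marker held 400 mm in front of the camera.
corners_px = [project(p, K) for p in marker_corners(side_mm=40.0, depth_mm=400.0)]
```

With these assumed values the marker spans 140 pixels on each side of the image, which shows how the apparent marker size encodes its distance from the camera once the intrinsics are known.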

The navigation system in this study does not require transfer of the radiological images to the work station. Instead, we simply input the longitudinal relationship data (the lengths of the entire bone and tumour and the distance between the tumour margin and the articular surface or the end of the bone) into the software of the tablet PC, and it, in turn, outputs the virtual bar as augmented reality (Fig. 2).

Fig. 2.

Bone tumour model with bone cement inserted in the pig femur, and augmented reality software and virtual template containing the information about the longitudinal relationship of the tumour and normal bone. ‘A’ indicates distance between the proximal end of bone and proximal margin of the tumour and ‘B’ indicates the distance between the distal end of the bone and distal margin of the tumour.

Virtual template and registration

In conventional navigation systems, the segmentation and patient-to-image registration are necessary in order to display spatial information about the tumour on the screen. However, we designed a simpler process using the virtual template for bone tumour resection. This template contains information on the longitudinal relationship between normal tissue and the tumour, and is obtained from radiological images. Thus, instead of displaying radiological images, a cylinder-shaped virtual template is overlaid on the real-world imagery. The virtual template cylinder is composed of five stacks (Fig. 2). The first and fifth stacks, coloured blue, represent a normal region. The third stack (red) denotes the extent of the tumour, whilst the second and fourth stacks highlighted in green represent a safety margin. To overlay accurately the virtual template onto the real bone, a simple registration process was necessary to match both ends of the virtual template and both ends of the real bone.
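The five-stack layout can be sketched from the longitudinal inputs alone. The function below is a minimal illustration, not the authors' software: the function name, argument names and example values are hypothetical, and the default 10 mm margin is an assumption that mirrors the safety margin planned in the experiment.

```python
def virtual_template(bone_len_mm, tumour_len_mm, proximal_gap_mm, margin_mm=10.0):
    """Return the five stacks as (colour, start_mm, end_mm) from the proximal bone end.

    proximal_gap_mm is the distance from the proximal end of the bone to the
    proximal margin of the tumour (distance 'A' in Fig. 2).
    """
    tumour_start = proximal_gap_mm
    tumour_end = proximal_gap_mm + tumour_len_mm
    cut_proximal = tumour_start - margin_mm  # planned proximal osteotomy level
    cut_distal = tumour_end + margin_mm      # planned distal osteotomy level
    if cut_proximal < 0 or cut_distal > bone_len_mm:
        raise ValueError("safety margin extends beyond the bone")
    return [
        ("blue", 0.0, cut_proximal),            # normal bone
        ("green", cut_proximal, tumour_start),  # proximal safety margin
        ("red", tumour_start, tumour_end),      # tumour
        ("green", tumour_end, cut_distal),      # distal safety margin
        ("blue", cut_distal, bone_len_mm),      # normal bone
    ]


# Hypothetical femur: 190 mm long, 58 mm tumour starting 70 mm from the proximal end.
stacks = virtual_template(bone_len_mm=190.0, tumour_len_mm=58.0, proximal_gap_mm=70.0)
```

For this hypothetical femur, the boundaries between the blue and green stacks fall at 60 mm and 138 mm from the proximal end, which are the two planned osteotomy levels.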

Production of the bone tumour model

A cortical window (1 cm × 2 cm) was made in the diaphysis of the pig femurs, and between 5 ml and 10 ml of bone cement (Depuy CMW; DePuy Synthes, Blackpool, United Kingdom) was inserted (Fig. 2). A CT scan was used to measure the length of the bones and the length of the inserted cement plug. The mean length of the 123 pig femurs was 190.7 mm (147 to 221), and the mean longitudinal length of the inserted cement plug was 57.5 mm (41 to 87).

Tumour resection was simulated in three different scenarios. The first was an AR-assisted resection by an expert orthopaedic oncologist (HSC). The second was an AR-assisted resection by an orthopaedic resident (YC), and the third was resection with conventional methods by the expert orthopaedic oncologist. A sample size calculation showed that 41 femurs for each group would be required at a significance level of 0.05 and 90% power, assuming a 2 mm difference in the resection error to be clinically significant between the groups (mean ± standard deviation (sd) 2 ± 3 mm for the AR group, compared with 4 ± 3 mm for the conventional resection group).9,10 A total of 123 pig femurs (purchased from a butcher) were used. This study was approved by the Institutional Animal Care and Use Committee at our hospital (No. BA1506-178/035-01).

Comparison of AR-assisted resection and conventional resection

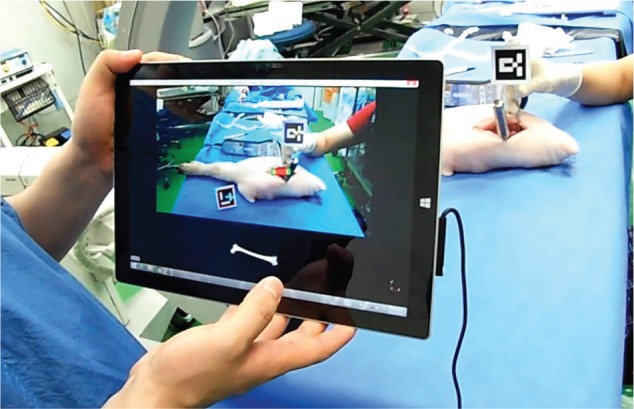

Bone tumour resection was simulated with a planned 1 cm safety margin proximally and distally to the bone cement. The 123 femurs were assigned through a 2:1 allocation to either the AR-assisted resection group (AR group) or the conventional resection group (conventional group) (Fig. 3). In the AR group, the resections were performed under AR guidance, and Figure 4 demonstrates the image seen by the surgeon intra-operatively. In the conventional group, resection was performed by the expert orthopaedic oncologist (HSC) after measurement of tumour dimensions based on CT images. The osteotomies were performed after measuring the distance from the edge of the condyle to the expected resection margin with a ruler as per routine practice.

Fig. 3.

Allocation of pig femurs (AR, augmented reality).

Fig. 4.

Determination of osteotomy site using augmented reality based navigation guidance.

The distance from the edge of the cement to the resection margin was measured once with a ruler by another orthopaedic surgeon (YKP), who was blinded to the type of resection. A total of 246 surgical margins of 123 femurs were assessed.

Outcomes measurement

We evaluated accuracy and precision of resection by comparing the surgical margins with the pre-operative plan. The difference between the obtained surgical margin and the planned surgical margin (10 mm) was regarded as the error of resection. The resection errors were classified into four grades: Grade A ⩽ 3 mm; Grade B > 3 mm to ⩽ 6 mm; Grade C > 6 mm to ⩽ 9 mm; Grade D > 9 mm, or any tumour violation.
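The grading scheme can be expressed as a short function. This is a minimal sketch: the function names are hypothetical, and treating the error as the absolute difference between the obtained and planned margins is an assumption, since the text does not state how the sign of the difference was handled.

```python
PLANNED_MARGIN_MM = 10.0  # planned surgical margin used in the experiment


def resection_error(obtained_margin_mm, planned_margin_mm=PLANNED_MARGIN_MM):
    """Error of resection, assumed here to be the absolute difference in mm."""
    return abs(obtained_margin_mm - planned_margin_mm)


def grade(error_mm, tumour_violated=False):
    """Classify a resection error into grades A to D as defined in the text."""
    if tumour_violated or error_mm > 9:
        return "D"
    if error_mm > 6:
        return "C"
    if error_mm > 3:
        return "B"
    return "A"
```

For example, an obtained margin of 12 mm gives an error of 2 mm and grade A, whereas any tumour violation is grade D regardless of the measured error.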

Statistical analysis

One-way analysis of variance was used for statistical comparison of the resection errors between the three groups. Statistical analysis was performed using SPSS version 12.0 (SPSS, Chicago, Illinois). P-values ⩽ 0.05 were deemed statistically significant.
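For readers unfamiliar with the test, the F statistic of a one-way analysis of variance can be computed as below. This is a minimal plain-Python sketch, not a reproduction of the SPSS analysis: the function name is hypothetical and the three small samples are invented solely to show the arithmetic. Converting F to a p-value requires the F distribution and is left out.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of numeric samples."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of the observations around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Invented resection errors in mm, for illustration only (not study data).
ar_expert = [1.0, 2.0, 3.0]
ar_resident = [1.0, 2.0, 3.0]
conventional = [3.0, 4.0, 5.0]

f_stat, df_b, df_w = one_way_anova_f([ar_expert, ar_resident, conventional])
```

With these invented samples, the between-group sum of squares is 8 and the within-group sum of squares is 6, giving F = 4 on 2 and 6 degrees of freedom.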

Results

Difference in surgical margins between the pre-operative plan and post-operative measurement

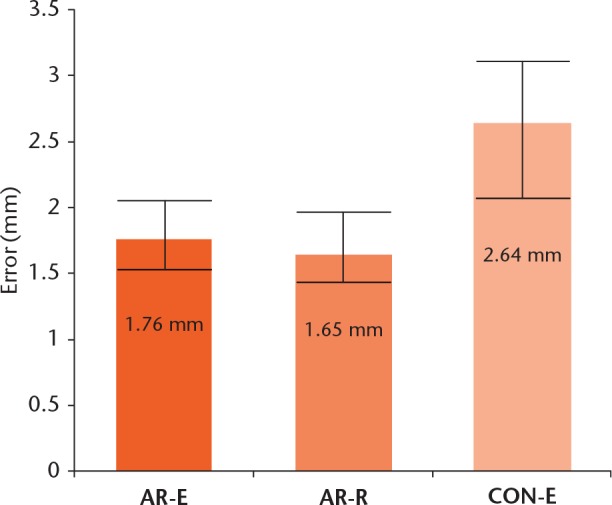

We evaluated the accuracy of resection by comparing the surgical margin with the pre-operative plan. The mean error of 164 resections in 82 femurs in the AR group was 1.71 mm (0 to 6) (1.76 mm in the expert resections and 1.65 mm in the resident resections, p = 0.58). The mean error of 82 resections in 41 femurs in the conventional resection group was 2.64 mm (0 to 11). A statistically significant difference was observed between AR-assisted and conventional resections (p < 0.05) (Fig. 5).

Fig. 5.

A statistically significant difference (p < 0.05) was observed between augmented reality (AR)-assisted and conventional resections (AR-E, AR-assisted resection by an expert; AR-R, AR-assisted resection by a resident; CON-E, conventional resection by the expert).

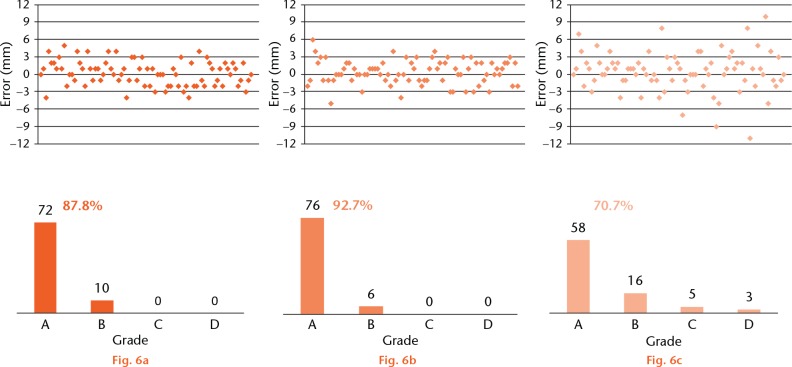

In the AR group, 148 resections were classified as grade A (72 expert resections, 76 resident resections) and 16 were classified as grade B (ten expert resections, six resident resections). No resections were classified as grades C or D in the AR group. In the conventional group, 58 resections were classified as grade A, 16 as grade B, five as grade C, and three as grade D. Although all resections were performed by an expert orthopaedic oncologist in the conventional resection group, three resections had tumour violation or an error larger than 10 mm. The probabilities of a surgeon obtaining a 10 mm surgical margin with a ⩽ 3 mm tolerance were 87.8% in AR-assisted resections by the expert, 92.7% in AR-assisted resections by the resident, and 70.7% in conventional resections by the expert (Fig. 6).

Fig. 6.

Distribution and grades of errors in the three groups: a) augmented reality (AR) assisted resection by an expert; b) AR-assisted resection by a resident; c) conventional resection.
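The reported probabilities follow directly from the grade A counts, with 82 resections in each of the three groups. The short sketch below reproduces that arithmetic; the variable and function names are hypothetical.

```python
RESECTIONS_PER_GROUP = 82

# Number of grade A resections (error of 3 mm or less) reported for each group.
GRADE_A = {"AR expert": 72, "AR resident": 76, "Conventional expert": 58}


def percent(count, total):
    """Proportion expressed as a percentage, rounded to one decimal place."""
    return round(100.0 * count / total, 1)


by_group = {name: percent(n, RESECTIONS_PER_GROUP) for name, n in GRADE_A.items()}

# Pooled AR result: both AR groups combined (148 of 164 resections).
ar_pooled = percent(GRADE_A["AR expert"] + GRADE_A["AR resident"], 2 * RESECTIONS_PER_GROUP)
```

This yields 87.8%, 92.7% and 70.7% for the three groups, and 90.2% for the pooled AR resections, matching the figures quoted in the text.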

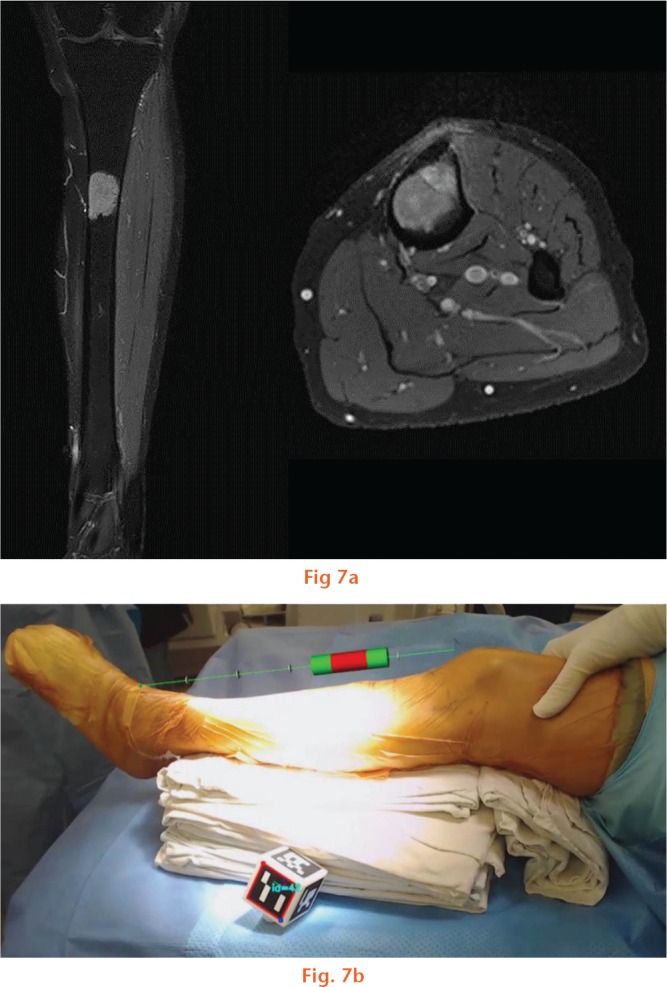

Case study

A 52-year-old woman was diagnosed with a low-grade osteosarcoma in the diaphysis of the tibia. Further evaluation excluded distant metastasis. The intercalary bone resection was planned and the extent of surgical resection was determined based on MRI. Osteotomies were performed under AR navigation guidance with a 1.5 cm safety margin proximally and distally. Pathological examination showed that the proximal resection margin was 1.4 cm and the distal margin was 1.7 cm (Fig. 7). The patient provided informed consent for the data concerning the case to be submitted for publication.

Fig. 7.

Images showing: a) intercalary resection was planned based on MR images and b) how the osteotomies were monitored under augmented reality based navigation guidance.

Discussion

Modern surgical navigation systems help surgeons to execute ‘what they planned pre-operatively’. For example, for the resection of a malignant tumour in a long bone, surgeons pre-operatively determine the extent of the resection, which is typically expressed as a longitudinal distance from some anatomical point (usually a joint surface) to the resection planes, based on radiological data. Navigation simply helps surgeons to carry out their pre-operative plans by visualising the radiological image intra-operatively. Similar to arthroplasty, however, many experienced surgeons are reluctant to use a navigation system during the resection of a malignant bone tumour, reasoning that most limb salvage surgeries can be performed without the help of navigation and that the additional cost/time is not justified.11 In addition, the size of the navigation unit itself may be considered a cumbersome appurtenance in already crowded operating rooms.3 We have tried to simplify the navigation system by applying AR technology to tumour resections in the long bones. The present report is a study derived from our research on computer-assisted bone tumour surgery.

Assimilating the AR technology would be expected to simplify the navigation system for bone tumour surgery without compromising accuracy. In this study, the accuracy of AR-based navigation assistance in bone tumour resection was evaluated through an experimental study with a simulation of bone tumours in a pig femur model.

AR is a technology that superimposes computer-generated objects and text onto real images and video. Its origins date back to the 1950s, when Morton Heilig, a cinematographer, envisaged cinema as something that could draw the viewer into the onscreen activity by engaging all the senses. In 1962, Heilig built a prototype of this vision, named Sensorama, which he had described in 1955 in “The Cinema of the Future” and which predated digital computing.12 Ivan Sutherland then invented the head-mounted display in 1966 and, in 1968, was the first to create an AR system using an optical see-through head-mounted display.13

In medicine, attempts have been made to apply AR in other surgical fields including neurosurgery,14 otolaryngology15,16 and maxillofacial surgery.17 In the pre-operative planning stage, most surgeons have a mental image of where the target lesion is and plan the route of exposure accordingly based on radiological studies. In the AR environment, marking structures of interest on radiographic images that can be superimposed onto live video camera images allows a surgeon to simultaneously visualise the surgical site and the overlaid graphic images. AR applications to orthopaedic surgery are not yet clinically available, but several research systems are being used to improve implant alignment.18 Variations on the application may include bone tumour resection.

In the field of orthopaedic oncology, the usefulness and accuracy of intra-operative navigation has been evaluated by many authors.19-21 Our group has found navigation to be most useful in the resection of pelvic ring tumours and metaphyseal tumours with the aim of preserving the adjacent joint.9 An experimental study by Cartiaux et al10 on the accuracy of tumour resection in the pelvis showed that the probability of an experienced surgeon obtaining a 1 cm surgical margin was only 52%. They attributed this inaccuracy to the complex 3D architecture of the pelvis, and suggested that the use of computer or robot-assisted technology could improve this.

We previously demonstrated that the advantages of navigation can be applied in limb salvage surgery and that, in selected cases, it can maximise the accuracy of surgical resection and help to preserve the adjacent joint.9,22 When a navigation system is employed during the resection of a long bone malignancy, the surgeon primarily wants to know the longitudinal relationships between the tumour, normal tissue and the adjacent joint.9,22 With the AR system in this study, we showed a simple diagrammatic relationship of a planned resected region and the normal bone, rather than the radiological image itself which is displayed in the conventional navigation system. This concept made the software simple, requiring a smaller memory than a conventional AR programme. As a result of the programme simplification, the AR navigation environment could be used on a tablet PC.

The outcomes in this study were evaluated in two respects: accuracy and precision, by comparing the mean errors of AR-assisted and conventional resections; and the distribution of errors. In the clinical setting, the latter is more meaningful because the use of navigation would be expected to prevent unacceptable resection. In this study, the probabilities of a surgeon obtaining a 10 mm surgical margin with a 3 mm tolerance were 90.2% in the AR group, and 70.7% in conventional resection. In addition, the ‘tumour’ was violated in three resections in the conventional resection group compared with none in the AR group.

Limitations of this study include simulation of the tumour by bone cement. Malignancies in the bone usually have poorly defined borders because of aggressive growth and oedema on the periphery. In our simulation of bone tumours with cement, the border of the tumour was well defined. However, although the determination of the tumour border is a real planning concern, this study aimed to evaluate the accuracy in the performance of planned resection with AR assistance, and we feel the cement aided our ability to measure margins accurately. Another limitation is that the use of this programme is confined to surgery for the long bone. In conventional navigation, the data provided by the system are the radiological images. They enable the surgeon to identify the location and contour of the tumour intra-operatively. The navigation guidance is helpful in surgeries for pelvic bone malignancies as well as those for long bones. The software used in this study only demonstrates the longitudinal relationship of the tumour to the bone. Therefore, it can only be used in long bone surgery. For pelvic malignancies, specific software would be required.

In this study, we demonstrated that the accuracy of tumour resection was satisfactory with the help of the AR navigation system, with the tumour shown as a virtual template. In addition, this concept made the navigation system simple and available without additional cost or time. Even though most limb salvage surgery can be performed with conventional techniques, we believe that our AR-based navigation system deserves further attention. We have shown that it improves accuracy of surgical margins in both experienced and inexperienced hands. Furthermore, it provides additional information about tumour location, and can help guide the surgeon at the time of the operation without a complex set-up process or overcrowding in the operating room, with minimal additional time and cost.

Footnotes

Author Contribution: H. S. Cho: Study design, Manuscript writing.

Y. K. Park: Animal study, Data collection and analysis.

S. Gupta: Manuscript writing.

C. Yoon: Animal study.

I. Han: Data analysis.

H-S. Kim: Study design.

H. Choi: Software development.

J. Hong: Software development.

ICMJE Conflict of Interest: None declared.

Funding Statement

This work was supported by a grant from the SNUBH Research Fund (grant no. 13-2014-008).

References

1. Wong KC, Kumta SM. Computer-assisted Tumor Surgery in Malignant Bone Tumors. Clin Orthop Relat Res 2013;471:750-761.

2. Young PS, Bell SW, Mahendra A. The evolving role of computer-assisted navigation in musculoskeletal oncology. Bone Joint J 2015;97-B:258-264.

3. Lanfranco AR, Castellanos AE, Desai JP, Meyers WC. Robotic surgery: a current perspective. Ann Surg 2004;239:14-21.

4. Cho HS, Park YK, Han I, Kim H-S, O’Donnell RJ. Augmented-Reality Assistance in Bone Tumor Surgery [abstract]. ISOLS meeting, 2015.

5. Zhang Z. A flexible new technique for camera calibration. IEEE Trans Pattern Anal Mach Intell 2000;22:1330-1334.

6. Nicolau SA, Pennec X, Soler L, et al. An augmented reality system for liver thermal ablation: design and evaluation on clinical cases. Med Image Anal 2009;13:494-506.

7. Garrido-Jurado S, Muñoz-Salinas R, Madrid-Cuevas FJ, Marín-Jiménez MJ. Automatic generation and detection of highly reliable fiducial markers under occlusion. Pattern Recognit 2014;47:2280-2292.

8. Hesch JA, Roumeliotis SI. A Direct Least-Squares (DLS) Method for PnP [proceedings]. Computer Vision (ICCV), 2011.

9. Cho HS, Oh JH, Han I, Kim H-S. Joint-preserving limb salvage surgery under navigation guidance. J Surg Oncol 2009;100:227-232.

10. Cartiaux O, Docquier P-L, Paul L, et al. Surgical inaccuracy of tumor resection and reconstruction within the pelvis: an experimental study. Acta Orthop 2008;79:695-702.

11. Randall RL. Metastatic Bone Disease: An Integrated Approach to Patient Care. New York: Springer-Verlag, 2016.

12. Heilig ML. El cine del futuro: the cinema of the future. Presence: Teleoperators and Virtual Environments 1992;1:279-294.

13. Sutherland IE. A head-mounted three dimensional display [proceedings]. Fall Joint Computer Conference, 1968.

14. Masutani Y, Dohi T, Yamane F, et al. Augmented reality visualization system for intravascular neurosurgery. Comput Aided Surg 1998;3:239-247.

15. Arora A, Lau LYM, Awad Z, et al. Virtual reality simulation training in Otolaryngology. Int J Surg 2014;12:87-94.

16. Mason TP, Applebaum EL, Rasmussen M, et al. Virtual temporal bone: creation and application of a new computer-based teaching tool. Otolaryngol Head Neck Surg 2000;122:168-173.

17. Badiali G, Ferrari V, Cutolo F, et al. Augmented reality as an aid in maxillofacial surgery: validation of a wearable system allowing maxillary repositioning. J Craniomaxillofac Surg 2014;42:1970-1976.

18. DiGioia AM, Jaramaz B, Blackwell M, et al. The Otto Aufranc Award. Image guided navigation system to measure intraoperatively acetabular implant alignment. Clin Orthop Relat Res 1998;355:8-22.

19. Cho HS, Kang HG, Kim HS, Han I. Computer-assisted sacral tumor resection. A case report. J Bone Joint Surg [Am] 2008;90-A:1561-1566.

20. Cho HS, Oh JH, Han I, Kim HS. The outcomes of navigation-assisted bone tumour surgery: minimum three-year follow-up. J Bone Joint Surg [Br] 2012;94-B:1414-1420.

21. Jeys L, Matharu GS, Nandra RS, Grimer RJ. Can computer navigation-assisted surgery reduce the risk of an intralesional margin and reduce the rate of local recurrence in patients with a tumour of the pelvis or sacrum? Bone Joint J 2013;95-B:1417-1424.

22. Wong KC, Kumta SM. Joint-preserving tumor resection and reconstruction using image-guided computer navigation. Clin Orthop Relat Res 2013;471:762-773.