Abstract

This article presents an evaluation of novel display concepts for an emergency department information system (EDIS) designed using cognitive systems engineering methods. EDISs assist emergency medicine staff with tracking patient care and ED resource allocation. Participants performed patient planning and orientation tasks using the EDIS displays and rated the displays' ability to support various cognitive performance objectives, along with the usability, usefulness, and predicted frequency of use of 18 system components. Mean ratings were positive for cognitive performance support objectives, usability, usefulness, and frequency of use, indicating that the design methods were successfully applied to create useful and usable EDIS concepts that provide cognitive support for emergency medicine staff. Nurses and providers had significantly different perceptions of the usability and usefulness of certain EDIS components, suggesting that the two roles have different information needs while working.

Keywords: Emergency department information systems, Patient tracking systems, Usability, Human factors methods

1. Introduction

Emergency department (ED) patient status boards display patient information and support the activities of ED staff, including physicians, nurses, other care providers (e.g., physician assistants), and technicians. Patient status boards are used to track the status of patients and resources, supporting task completion as well as shared awareness among clinical team members (Wears et al., 2007). Because the ED environment is characterized by high-acuity patients, intense time pressure, and irregular patient arrivals, patient status boards are useful for managing high cognitive workload and high decision density (Croskerry and Sinclair, 2001; Schenkel, 2000). They support situational awareness of patient flow and patient status for tasks such as patient hand-off, documentation, teaching, and consulting other medical experts (Patterson et al., 2010; Laxmisan et al., 2007; Bisantz et al., 2010).

Patient status boards have transitioned from dry-erase whiteboards to electronic emergency department information systems (EDIS) (Aronsky et al., 2008; Husk and Waxman, 2004). EDIS implementation has affected ED work practices in unintended ways, particularly with respect to communication among ED staff members and tracking of patient progress, indicating that the design of EDIS interfaces has not comprehensively considered the information and communication needs of end users (Bisantz et al., 2010; Hertzum and Simonsen, 2013). Recent regulations by the Office of the National Coordinator for Health Information Technology (ONC), Department of Health and Human Services, require vendors to attest to the use of user-centered design processes and to conduct usability studies on final products (ONC, 2015). These requirements emphasize the need for design and evaluation methods that integrate data on the cognitive and information needs of frontline users into the software development process.

Cognitive systems engineering (CSE) provides methods that can be employed in a user-centered design process. CSE offers a theoretical approach to the design of information displays that can support and enhance existing work practices by explicitly considering work systems, high-level tasks, strategies, socio-technical constraints, and required knowledge and skills (Hajdukiewicz and Burns, 2004; Vicente, 1999). CSE methods have been successfully applied to user-interface design in a wide range of safety-critical industries, including defense, process control, and aviation, as well as in healthcare settings such as neonatal intensive care, cardiac nursing, and anesthesiology (Naikar et al., 2006; Ahlstrom, 2005; Sharp and Helmicki, 1998; Watson et al., 2004; Yamaguchi and Tanabe, 2000; Seamster et al., 1997; Watson et al., 2000). However, CSE has not been used to design aspects of EDIS displays. Using CSE methods may support the design of information displays that provide necessary information more effectively, enabling clinicians and staff to work more efficiently.

This paper presents the results of a usability assessment of novel EDIS display concepts that were designed using CSE methods. This study is part of a larger research program that took a systematic CSE approach to identifying information needs and then iteratively refining and testing information displays based on those needs. In particular, based on a work-centered systems design framework, we were interested in identifying high-level cognitive requirements that an EDIS should support, and then designing display elements or areas that supported users in meeting those requirements (Wampler et al., 2006). To accomplish this, our research program 1) performed a CSE work domain analysis of the ED to identify needs for support and related information requirements; 2) used the results to design novel EDIS displays; and 3) evaluated the success of the design in several studies using multiple objective and subjective methods, including the study we describe here (Guarrera et al., 2013, 2015; Clark et al., 2014).

First, to identify information requirements, CSE work domain modeling methods drawn from a well-known theoretical framework, cognitive work analysis (Vicente, 1999), were used to describe hospital emergency departments. The work domain model consisted of nodes representing high-level system purposes, abstract constraints and functions, processes, and system resources. Next, information requirements related to these model elements were identified and used in an iterative design process, involving domain experts (emergency medicine physicians and a nurse) and human factors engineers, to create and improve prototype display concepts for a novel EDIS (see Guarrera et al., 2015, for a complete description of the modeling results and design process).

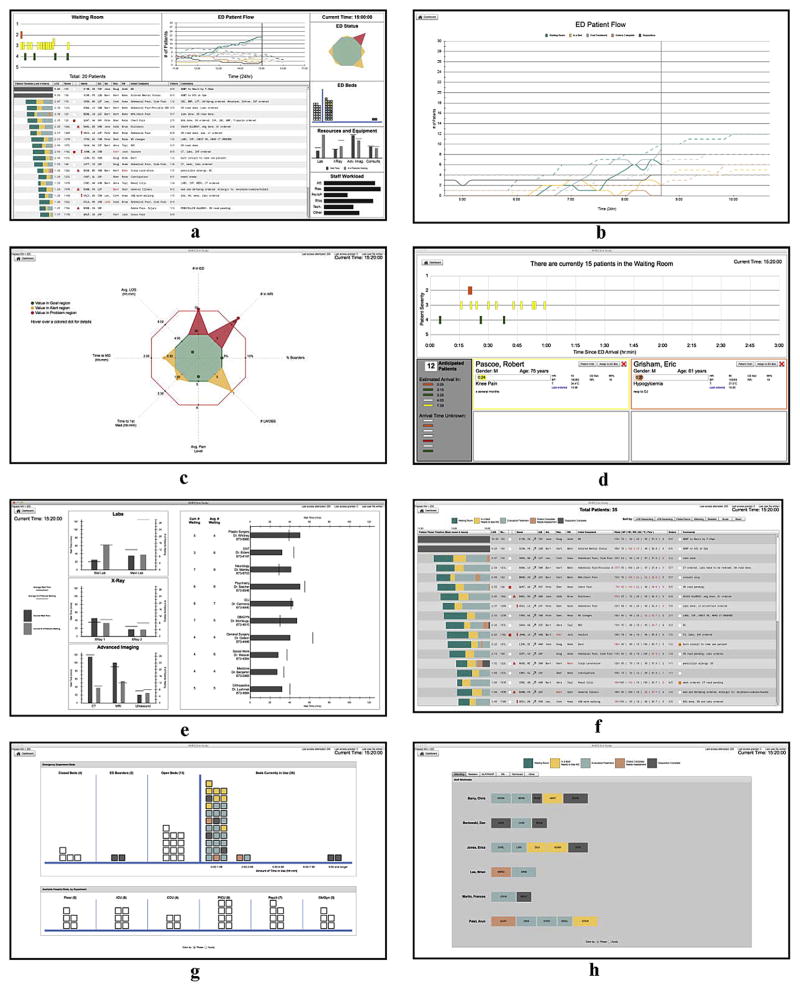

The resulting display concepts consist of seven display areas that can be accessed through a main dashboard (Fig. 1a). Together, the displays present information related to the management of patient care and patient flow through the ED, with a particular focus on maintaining situational awareness of the ED and of patient status, and on facilitating team communication and coordination. The displays were also designed to help users manage constraints related to patient care, such as the availability of laboratory and imaging facilities, personnel workloads, and bed availability throughout the hospital. Innovative features include: the ability to compare current and historical information regarding patient volumes (Fig. 1b); a quick-status graphic that indicates overall ED status across eight variables (Fig. 1c); a timeline representation of patients in the waiting room, coded by triage score and arranged according to a dynamically updating length of wait (Fig. 1d); patient wait times for tests or consultations (Fig. 1e); color-coded phases of care (i.e., waiting, in ED but not initially assessed, diagnosis/treatment, treatment/tests completed, and dispositioned), used both as part of a timeline (Fig. 1f) and to code other representations of patients in the bed status and staff workload displays (Fig. 1g and h); and a graphic representation of beds indicating whether or not they are open (Fig. 1g). The displays were implemented using Adobe Flash Builder 4.6 (Adobe Flash Builder, 2010).

Fig. 1.

Final EDIS displays and main dashboard (a–h). Design concepts were based on information and communication requirements identified in the cognitive systems engineering analysis of the emergency department. Note that all patient data is fictitious.
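The phase-of-care color coding and the waiting-room timeline described above can be illustrated with a small data model. This is a hypothetical Python sketch, not the Flash implementation used for the prototype: the class names, the hex colors, and the `triage` field (assumed here to be a 1 to 5 score with 1 the most acute) are all assumptions. The point it shows is that the "dynamically updating length of wait" is simply recomputed from arrival times on every refresh.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from enum import Enum


class Phase(Enum):
    """The five phases of care named in the text; the colors are hypothetical."""
    WAITING = "#9e9e9e"
    NOT_ASSESSED = "#ffb300"          # in ED but not initially assessed
    DIAGNOSIS_TREATMENT = "#1e88e5"
    TESTS_COMPLETED = "#43a047"       # treatment/tests completed
    DISPOSITIONED = "#8e24aa"


@dataclass
class Patient:
    name: str
    triage: int                       # assumed: 1 (most acute) to 5 (least acute)
    arrived: datetime
    phase: Phase = Phase.WAITING


def waiting_room_timeline(patients, now):
    """Return (name, triage, minutes waited) for waiting patients, longest wait first.

    Calling this again with a later `now` yields the updated wait lengths.
    """
    waiting = [p for p in patients if p.phase is Phase.WAITING]
    waiting.sort(key=lambda p: p.arrived)  # earliest arrival = longest wait
    return [(p.name, p.triage, int((now - p.arrived).total_seconds() // 60))
            for p in waiting]


now = datetime(2024, 1, 1, 12, 0)
patients = [
    Patient("A", 3, now - timedelta(minutes=15)),
    Patient("B", 2, now - timedelta(minutes=40)),
    Patient("C", 4, now - timedelta(minutes=5), Phase.DIAGNOSIS_TREATMENT),
]
print(waiting_room_timeline(patients, now))  # [('B', 2, 40), ('A', 3, 15)]
```

Patient C is omitted because it is no longer in the waiting phase; a display would draw each tuple as a bar colored by triage score.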

2. Material and methods

2.1. Approach

A three-phase usability assessment, consisting of an EDIS familiarization phase, a task performance phase, and an assessment phase, was conducted to evaluate the displays in terms of ease of use, utility, and the extent to which the displays support the work-oriented cognitive needs of emergency medicine clinical staff. Rating scales were derived from a work-centered, cognitive systems engineering approach previously used to evaluate prototype displays for a complex work system (Truxler et al., 2012), and were tailored specifically to ED tasks and goals identified through the CSE analysis of the ED described above (Guarrera et al., 2015). Detailed questions related to specific interface elements were included so that we could determine which specific aspects of the displays were (or were not) rated as useful or usable.

The assessments were designed to test two hypotheses regarding the design of the EDIS:

Hypothesis 1: User ratings of cognitive support, usability, and usefulness of the EDIS will be greater than neutral for all cognitive support objectives and all screen display ratings.

Hypothesis 2: User ratings of cognitive support, usability, and usefulness of the EDIS will be consistent across clinician roles.

2.2. Participant recruitment

Eighteen participants with emergency medicine experience were recruited from a large, multi-hospital system. A recruitment e-mail was distributed to ED staff members via institutional e-mail listservs, and staff members could participate on a voluntary basis. Nine physicians/mid-level providers (attending physicians, residents, and physician assistants) and nine nurses participated. This sample size (overall, and per provider group) reflects the practical challenges in recruiting experienced participants for a laboratory study but allowed comparison across nurse and provider categories. A brief survey was given to each participant to ascertain previous experience with an EDIS. Appropriate Institutional Review Board approval was obtained for this study.

2.3. Setting

The evaluations were conducted individually in a clinical simulation laboratory. The prototype displays were shown on a 27″ LCD monitor, and participants could navigate among display areas using a standard mouse. A set of scripted (fictitious) patient cases was used as the source data to populate the EDIS displays (Clark et al., 2014). The scripted patient information could be advanced to a new point to simulate the passage of time (see Lin et al., 2008, for a description of a similar ED simulation).
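The mechanism of advancing scripted patient data to a new point in time can be illustrated as an event list filtered by a simulated clock. This is a hypothetical sketch, not the authors' implementation (which is not described here): each scripted event is assumed to carry a minute offset from the start of the scenario, and the display state at time t is taken to be the latest value of each patient field at or before t. The `ScriptedEvent` class and its fields are illustrative names only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScriptedEvent:
    minute: int   # minutes after scenario start (hypothetical encoding)
    patient: str
    field: str    # e.g. "phase" or "bed"
    value: str


def snapshot(events, t):
    """State of every patient at simulated time t: the latest value per field."""
    state = {}
    for e in sorted(events, key=lambda e: e.minute):
        if e.minute > t:
            break  # events are sorted, so nothing later can apply
        state.setdefault(e.patient, {})[e.field] = e.value
    return state


events = [
    ScriptedEvent(0, "P1", "phase", "waiting"),
    ScriptedEvent(10, "P1", "phase", "diagnosis/treatment"),
    ScriptedEvent(30, "P2", "phase", "waiting"),
]
print(snapshot(events, 20))  # {'P1': {'phase': 'diagnosis/treatment'}}
print(snapshot(events, 40))
```

Under this design, the two task time points described in Section 2.6 (20 min after sign-over and a further 20 min later) would correspond to two calls to `snapshot` with increasing `t`.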

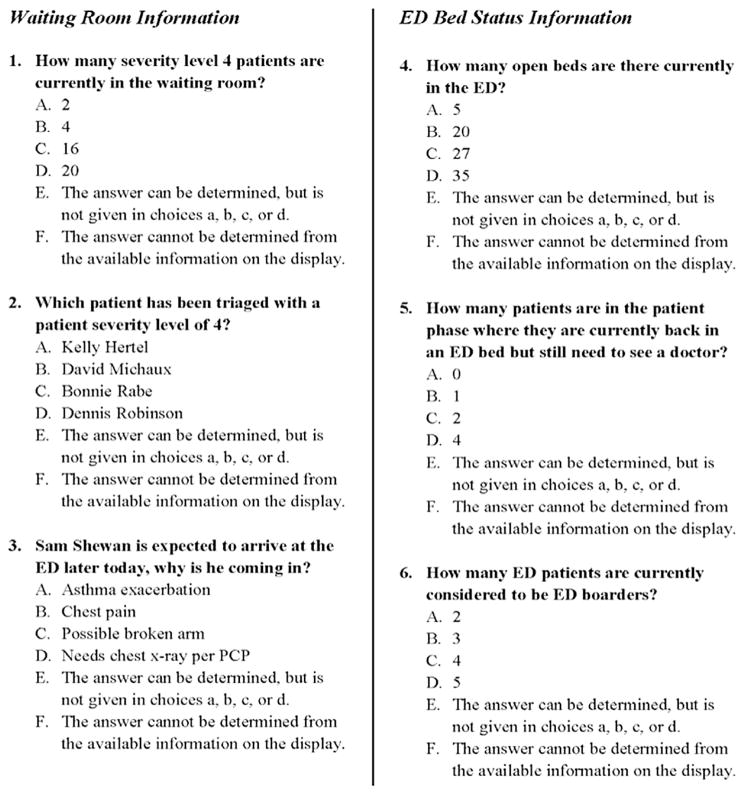

2.4. EDIS familiarization

Each participant watched a 12-min training video prior to the study. The video presented a voice-over tutorial of the EDIS and explained the basic navigation, information content, and functionality of each screen. Participants used the displays to answer 24 multiple-choice questions related to individual patient and overall ED information shown on the display screens (Fig. 2). The questions were grouped into sections by screen type, and the name of the corresponding screen was provided at the top of each section for reference. This task ensured that participants were exposed to various aspects of the EDIS. In addition, these questions provided an objective measure of participants’ understanding of the display components that complemented the subjective rating questions described below.

Fig. 2.

Example information familiarization questions for waiting room and bed status displays. Note that all patient data is fictitious.

2.5. Patient familiarization

Following the EDIS familiarization phase, each participant listened to an audio recording of an emergency medicine physician or nurse describing the patients in the system. The recording presented patient information that would typically be given to an ED staff member during a shift sign-over and was scripted by the emergency medicine domain experts on the research team. After the recording finished, participants were given an additional five minutes to review patient information and write handwritten notes, similar to those prepared at the start of a shift.

2.6. Task performance

Participants then used the EDIS to perform two tasks developed by the emergency medicine domain experts on the team. The purpose of these tasks was for participants to interact with various components of the interface in a more realistic, non-scripted manner. The first was an orientation task in which participants simulated re-orienting themselves to the patient status board after returning from a patient resuscitation; the second was a planning task in which participants were notified of a mass casualty incident and instructed to prepare for an influx of patients. During the first task, the displays showed patient data representing a time 20 min after the patient sign-over; the second task was set after an additional 20 min of elapsed time.

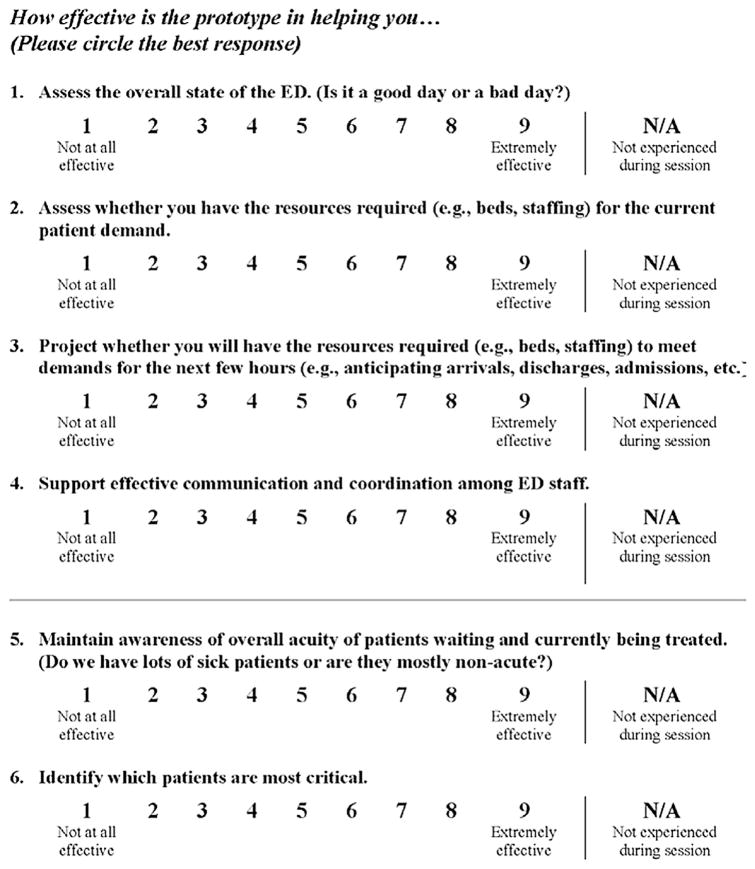

2.7. Subjective assessments

After the two tasks, participants completed two subjective assessments. The first consisted of a survey of 19 cognitive performance support objectives (Table 1 and Fig. 3) developed from the previous CSE analysis of the ED. Participants rated how effectively the display concepts supported each objective; the objectives were generally related to awareness of the overall ED state and the flow of patients through the ED, patient care, staff workload, and available resources. Each objective was rated on a 9-point scale, with 1 indicating “not at all effective” and 9 indicating “extremely effective.” Participants could also indicate “N/A” if that objective was “not experienced during the session.” Participants were allowed to use the EDIS during the evaluation to refresh their memory if needed.

Table 1.

Cognitive performance support objective mean scores.

| No. | Cognitive performance support objective (How effective is the prototype in helping you …) | Mean score | SD |
|---|---|---|---|
| 1 | Identify which patients have been in the ED the longest | 8.56 | 1.20 |
| 2 | Identify where patients are in the care process (across all patients) | 8.17 | 1.54 |
| 3 | Assess whether you have the resources required (e.g., beds, staffing) for the current patient demand | 7.83 | 1.29 |
| 4 | Identify bottlenecks or hold-ups preventing overall patient flow through the ED | 7.50 | 1.34 |
| 5 | Maintain awareness of overall acuity of patients waiting and currently being treated | 7.47 | 1.77 |
| 6 | Assess the current state of the ED with respect to balancing patients and workload across providers | 7.39 | 1.97 |
| 7 | Identify hold-ups in the care of an individual patient | 7.28 | 1.56 |
| 8 | Project whether you will have the resources required (e.g., beds, staffing) to meet demands for the next few hours (e.g., anticipating arrivals, discharges, admissions, etc.) | 7.17 | 1.20 |
| 9 | Identify which patients are most critical | 7.17 | 2.64 |
| 10 | Assess the overall state of the ED (Is it a good day or a bad day?) | 7.06 | 1.76 |
| 11 | Support effective planning for individual patient care | 6.88 | 1.69 |
| 12 | Identify the next patient I should sign up for (e.g., patients that need to be seen out of order or seen from the waiting room) | 6.88 | 1.96 |
| 13 | Support effective communication and coordination among ED staff, in regard to balancing patients and workload across providers | 6.83 | 1.89 |
| 14 | Understand whether individual patients are waiting for you to assess or treat them (i.e., if you are the hold-up) | 6.82 | 1.67 |
| 15 | Identify where only my patients are in the care process | 6.61 | 2.55 |
| 16 | Support effective communication and coordination among ED staff | 6.59 | 2.03 |
| 17 | Support effective communication and coordination among ED staff, in regard to an individual patient and that patient’s treatment plan | 6.56 | 1.54 |
| 18 | Maintain awareness of acuity and changes in acuity of individual ED patients (Do we have lots of sick patients or are they mostly non-acute?) | 6.44 | 2.18 |
| 19 | Provide support for prioritizing your tasks | 5.89 | 2.27 |

Fig. 3.

Example cognitive performance support objectives for emergency medicine staff. A complete list, with mean scores, is shown in Table 1.

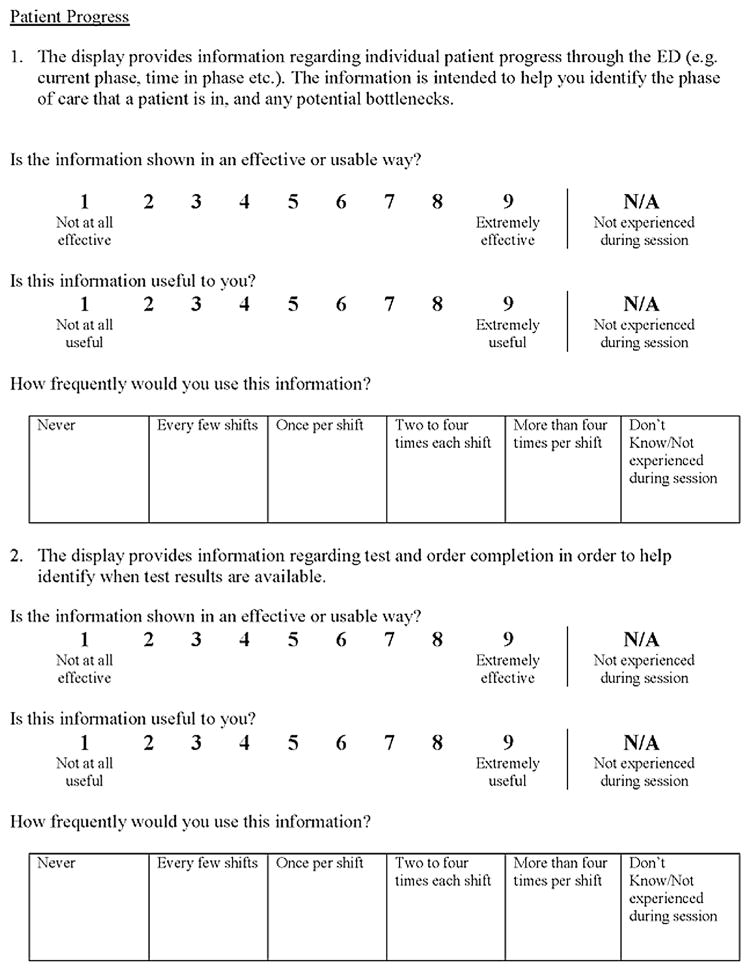

The second evaluation assessed the usability, usefulness, and frequency of use of 18 interface components and functions across the seven EDIS displays (Fig. 4). The evaluation contained a section for each EDIS screen (excluding the navigation dashboard). Each section gave the name of the screen being evaluated and asked the participant to rate the usability, usefulness, and predicted frequency of use of key features and functions on that screen. These questions were designed to allow us to evaluate which specific visualization components were (or were not) useful and usable. Participants could refer back to the EDIS if needed to answer specific questions. Participants rated the usefulness and usability of each component on a 9-point Likert scale ranging from “not at all effective” (score of 1) to “extremely effective” (score of 9). For both scales, a rating of 5 was considered neutral, and a score of 6 or above was considered positive. Participants were also asked to predict how frequently they would use each information display or function if the system were implemented in the clinical environment, using five options ranging from “never” (score of 1) to “more than 4 times per shift” (score of 5). Participants also had the option to select “Don’t know/Not experienced during session.”

Fig. 4.

Example usability, usefulness, and frequency of use questions for EDIS functions and features.
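The scoring rules above (9-point scales with 5 as neutral and 6 or above as positive, a five-option frequency scale, and an opt-out answer) can be made concrete with a small coding function. This is a sketch under stated assumptions: the function names are hypothetical, opt-out answers are assumed to be treated as missing data rather than as a score, and the intermediate frequency labels (options 2 to 4) are not needed because only the integer codes are used. Means are taken for the 9-point ratings and medians for the ordinal frequency codes, as described in Section 2.8.

```python
from statistics import mean, median
from typing import Iterable, Optional

NEUTRAL = 5         # midpoint of the 9-point scales
POSITIVE_FROM = 6   # a score of 6 and above was considered positive

# Assumption: opt-out answers are stored as text and treated as missing data.
OPT_OUT = {"n/a", "don't know/not experienced during session"}


def code_response(response: str, low: int, high: int) -> Optional[int]:
    """Return the integer score, or None for an opt-out answer."""
    normalized = response.strip().lower().replace("\u2019", "'")  # curly apostrophe
    if normalized in OPT_OUT:
        return None
    score = int(normalized)
    if not low <= score <= high:
        raise ValueError(f"score {score} outside {low}-{high}")
    return score


def summarize(ratings: Iterable[str], frequencies: Iterable[str]):
    """Return (mean rating, median frequency, above neutral?, positive?)."""
    r = [s for s in (code_response(x, 1, 9) for x in ratings) if s is not None]
    f = [s for s in (code_response(x, 1, 5) for x in frequencies) if s is not None]
    rating_mean = mean(r)
    return rating_mean, median(f), rating_mean > NEUTRAL, rating_mean >= POSITIVE_FROM


print(summarize(["7", "9", "8"], ["4", "5", "3", "Don’t know/Not experienced during session"]))
# (8, 4, True, True)
```

Dropping opt-outs before averaging keeps "not experienced" answers from being read as low scores; an analysis that instead imputed them would give different means.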

2.8. Data analysis

The percentage of correct responses was computed for each familiarization question. Unanswered questions, and questions with more than one choice selected, were counted as incorrect. Mean scores were calculated for each cognitive objective, usability, and usefulness rating (these responses used 9-point Likert scales), and median scores were computed for the frequency-of-use questions. Inspection of the data and residual plots for mean cognitive objective, usability, and usefulness scores did not indicate any serious violation of the assumptions of normality, independence, and equality of variance; therefore, ANOVA was used to identify significant main and interaction effects for these variables. Ratings for the cognitive performance support objectives were analyzed using a two-way mixed ANOVA. Participant role was included as a two-level (provider/nurse) between-subjects variable. Because all participants completed all cognitive support ratings, cognitive performance support objective was included as a within-subjects variable. A different mixed ANOVA model was used for the usability and usefulness evaluations. Role was again included as a between-subjects variable. Because all participants answered the usability and usefulness questions for all screens, measure type (usability/usefulness) and screen type were included as within-subjects variables. The questions regarding screen features were specific to each screen and were therefore treated as a nested variable. All analyses were performed using SAS 9.4 (SAS, 2012).
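For readers less familiar with the split-plot design, the sketch below shows how the sums of squares and F ratios of the first model (role between subjects, objective within subjects) are partitioned. It is a minimal NumPy sketch assuming a balanced design (equal participants per role and every objective rated by everyone, as stated above); it is not the SAS procedure used in the study, it covers only the first model (not the nested usability/usefulness model), and it omits sphericity corrections. P-values would follow from the F distribution, for example via `scipy.stats.f.sf`. The example ratings are randomly generated, not study data.

```python
import numpy as np


def mixed_anova(y):
    """Two-way mixed (split-plot) ANOVA for a balanced design.

    y has shape (a, n, b): a between-subjects groups (e.g., role),
    n subjects per group, b within-subjects levels (e.g., objective).
    Returns a dict mapping effect name -> (SS, df, F); F is None for error terms.
    """
    a, n, b = y.shape
    grand = y.mean()
    group = y.mean(axis=(1, 2))   # group means, shape (a,)
    subj = y.mean(axis=2)         # subject means, shape (a, n)
    level = y.mean(axis=(0, 1))   # within-level means, shape (b,)
    cell = y.mean(axis=1)         # group x level means, shape (a, b)

    ss_a = b * n * np.sum((group - grand) ** 2)
    ss_subj = b * np.sum((subj - group[:, None]) ** 2)
    ss_b = a * n * np.sum((level - grand) ** 2)
    ss_ab = n * np.sum((cell - group[:, None] - level[None, :] + grand) ** 2)
    resid = y - cell[:, None, :] - subj[:, :, None] + group[:, None, None]
    ss_err = np.sum(resid ** 2)

    df_a, df_subj = a - 1, a * (n - 1)
    df_b, df_ab = b - 1, (a - 1) * (b - 1)
    df_err = a * (n - 1) * (b - 1)
    ms_subj, ms_err = ss_subj / df_subj, ss_err / df_err
    return {
        "role": (ss_a, df_a, (ss_a / df_a) / ms_subj),  # tested against subjects
        "subjects within role": (ss_subj, df_subj, None),
        "objective": (ss_b, df_b, (ss_b / df_b) / ms_err),
        "role x objective": (ss_ab, df_ab, (ss_ab / df_ab) / ms_err),
        "objective x subjects within role": (ss_err, df_err, None),
    }


# Simulated 9-point ratings: 2 roles x 9 participants x 19 objectives.
rng = np.random.default_rng(0)
ratings = rng.integers(1, 10, size=(2, 9, 19)).astype(float)
for effect, (ss, df, f) in mixed_anova(ratings).items():
    print(effect, df, round(ss, 2), None if f is None else round(f, 2))
```

The design choice worth noting is the two error terms: the role effect is tested against variation between participants, while the objective and interaction effects are tested against the objective-by-participant residual.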

3. Results

3.1. Participation

Participants included five attending physicians, two resident physicians, two physician assistants, and nine registered nurses. All participants reported previous experience using at least one EDIS. On average, participants reported using a computer or other electronic device (tablet, handheld, or smartphone) for 36 h per week for work-related activities (SD = 12 h).

3.2. Familiarization

Familiarization scores provided an objective measure of the degree to which participants could use each component of the interface. Scores were 80% or higher for 18 of the 23 analyzed questions (78% of the questions), indicating a good level of understanding of display functionality. One of the 24 questions, from the staff workload screen section, was eliminated from the analysis because, after data collection, it was found that the question could not be answered from the information provided on the screen.
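The scoring rule from Section 2.8 (unanswered items and items with more than one selected option count as incorrect), the exclusion of an unanswerable question, and the 80% threshold can be sketched as follows. The response encoding (a set of selected options per participant and question) and the function names are hypothetical, and the example data are invented rather than taken from the study.

```python
def is_correct(selected: set, key: str) -> bool:
    """Correct only if exactly one option was chosen and it matches the key.

    An empty set (unanswered) or multiple selections are scored as incorrect.
    """
    return selected == {key}


def percent_correct(responses, answer_key, excluded=()):
    """Percentage of participants answering each retained question correctly.

    responses: list (one per participant) of dicts: question id -> set of options.
    answer_key: dict mapping question id -> correct option.
    excluded: question ids dropped from the analysis.
    """
    scores = {}
    for q, key in answer_key.items():
        if q in excluded:
            continue
        n_correct = sum(is_correct(r.get(q, set()), key) for r in responses)
        scores[q] = 100 * n_correct / len(responses)
    return scores


def share_at_or_above(scores, threshold=80.0):
    """Return (questions meeting threshold, questions analyzed, percentage)."""
    hits = sum(s >= threshold for s in scores.values())
    return hits, len(scores), 100 * hits / len(scores)


key = {"q1": "a", "q2": "b", "q3": "c"}
responses = [
    {"q1": {"a"}, "q2": {"b"}},                 # q3 left unanswered
    {"q1": {"a", "b"}, "q2": {"b"}, "q3": {"c"}},  # two options chosen for q1
]
scores = percent_correct(responses, key, excluded={"q3"})
print(scores)                     # {'q1': 50.0, 'q2': 100.0}
print(share_at_or_above(scores))  # (1, 2, 50.0)
```

Using `r.get(q, set())` means a question missing from a participant's record is scored exactly like a blank answer.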

3.3. Cognitive performance support objectives

All 19 cognitive performance support objectives received a mean score of 5 (neutral) or higher on the 9-point Likert scale, and 17 (89% of the objectives) had mean scores higher than 6.5, indicating high levels of support for the objectives (Table 1). The highest rating was for supporting the ability to determine which patients have been in the ED the longest (Table 1, objective 1), and the lowest rating was for supporting the ability to prioritize tasks (Table 1, objective 19). The two-way mixed ANOVA showed a significant main effect of cognitive performance support objective (p = 0.009), but no significant main effect of role (provider/nurse) and no significant interaction between role and objective. Pairwise comparisons showed overlap among objective mean scores: the mean score for objective 1 was similar to those for objectives 2 through 5 but significantly higher than those for objectives 6 through 19. The mean score for objective 19 was similar to those for objectives 11 through 18 but significantly lower than those for objectives 1 through 10.
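The summary counts in this paragraph can be checked directly against Table 1. The snippet below transcribes the 19 mean scores from the table and counts how many exceed the neutral point (5) and the 6.5 cutoff; it recomputes only descriptive figures, not the ANOVA or pairwise tests.

```python
# Mean scores for objectives 1-19, transcribed from Table 1.
means = [8.56, 8.17, 7.83, 7.50, 7.47, 7.39, 7.28, 7.17, 7.17, 7.06,
         6.88, 6.88, 6.83, 6.82, 6.61, 6.59, 6.56, 6.44, 5.89]

above_neutral = sum(m > 5 for m in means)
above_cutoff = sum(m > 6.5 for m in means)
share = round(100 * above_cutoff / len(means))

print(above_neutral, above_cutoff, share)  # 19 17 89
```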

3.4. Usability, usefulness, and frequency of use

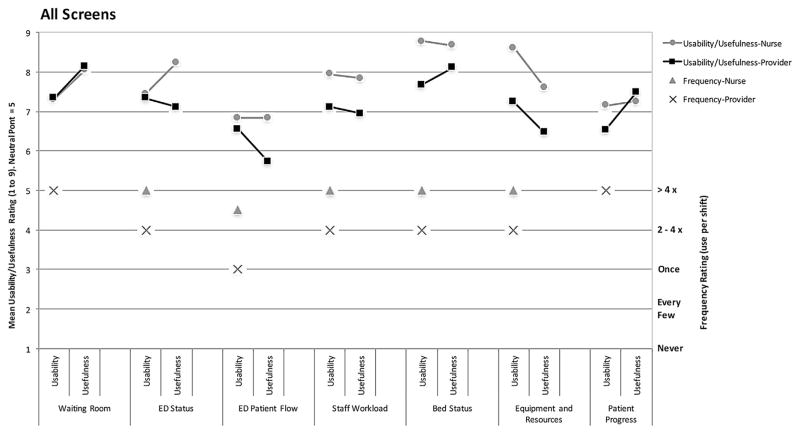

Fig. 5 shows the usability and usefulness scores averaged over all questions for each screen (left axis), along with median frequency-of-use scores (right axis). Mean usability and usefulness ratings were high (greater than 6) for 17 of the 18 (94%) rated display components across the seven display screens.

Fig. 5.

Mean usability, usefulness, and median frequency of use ratings for the seven EDIS displays separated according to role (providers/nurses). Usability and usefulness scores (left axes) ranged from 1 (Not at all effective/useful) to 9 (extremely effective/useful). Frequency scores (right axes) ranged from “never used in a shift” to “used more than 4 times”.

After nonsignificant interactions were removed, the final mixed ANOVA model for the usability and usefulness scores retained only one two-way interaction, between measure (usability/usefulness) and screen. This interaction was significant (p = 0.013), and simple effects tests showed that usefulness was rated higher than usability for the waiting room (p = 0.018) and patient progress (p = 0.0003) displays. There was also a significant main effect of role (p = 0.025), with nurses giving higher overall scores than providers (mean screen scores of 7.75 and 7.12, respectively).

Median scores were also high for frequency of use: for all frequency-of-use items, the median response was either 2 to 4 times per shift or 4 or more times per shift. The frequency-of-use scores (Fig. 5) indicate that for five of the seven screens, providers expected to use the information less frequently than nurses. As with the usability and usefulness scores, frequency of use was lowest for the ED patient flow screen.

4. Discussion

The primary purpose of this study was to evaluate the usefulness and usability of novel EDIS display concepts developed using cognitive systems engineering and user-centered design methods (as described in the Introduction). Overall, the displays received high scores for supporting 19 different cognitive performance support objectives. Individual features of the displays also received high scores for usefulness, usability, and frequency of use. Our first hypothesis, that scores would be greater than neutral (5), was supported for all of the cognitive performance support objective, usability, and usefulness scores. These findings support the use of CSE methods to identify the information required for cognitive support and the use of those findings as the basis for design criteria.

The four top-scoring cognitive performance support objectives (Table 1) were identifying which patients had been in the ED the longest, identifying where patients are in the care process, assessing whether there are enough resources to meet current patient demand, and identifying bottlenecks, or hold-ups, in patient flow. The individual task prioritization objective (Table 1, objective 19) received a significantly lower score than objectives 1 through 10, though it was still greater than neutral. We included this objective because the need to support individual task prioritization for providers and nurses was identified through the CSE analysis. However, we did not design a display specifically to support it, because it focused on the individual provider rather than on the ED as a whole (Guarrera et al., 2012). This likely explains why its rating was lower than those of the other objectives. Creating tools to support task prioritization and organization for individual clinical staff members is a goal for future research.

Our second hypothesis, that user perceptions would be consistent across roles (providers/nurses), was supported with respect to the cognitive performance support objective ratings: the ANOVA showed no significant difference in those scores between providers and nurses. However, the ANOVA did show a main effect of role on usability and usefulness ratings of individual display features, with nurses giving higher scores on average than providers (7.75 vs. 7.12). Inspection of Fig. 5 suggests that the higher scores were primarily due to displays that address various kinds of resource allocation: staff, ED and hospital beds, and imaging and laboratory requests. Negotiating staffing (nursing assignments to specific patients), finding beds for admitted patients, and managing patients waiting for and being transported to tests are tasks that fall primarily to nursing staff. Differences in workflow and patient care tasks between nurses and providers may therefore have led to the significant difference in usefulness and usability ratings for these displays.

Specific functions and features related to the waiting room (Fig. 1d), resources and equipment (Fig. 1e), bed status (Fig. 1g), and staff workload (Fig. 1h) received the highest mean scores for usability and usefulness (Fig. 5), indicating that information regarding resource allocation in the ED is useful and that the design of these EDIS displays was usable. Simple effects tests from the mixed ANOVA showed that mean usefulness scores for the waiting room and patient progress displays were significantly higher than mean usability ratings for these displays (though all mean scores were higher than 6). This suggests that, while the CSE methods used in this study correctly identified waiting room and patient progress information that is useful to ED staff members, the presentation of this information could be further improved.

Results from the familiarization questions also pointed to a potential design improvement for the waiting room display. One question, which only four of 18 participants (22%) answered correctly, concerned a feature of the waiting room screen (Fig. 1d) that allowed users to view information about incoming patients (see Fig. 2, question 3). Anticipated patient arrivals were shown by a colored bar representing the patient’s triage severity level and, for some patients, the time until arrival. Hovering over this area displayed additional information such as the patient’s name and chief complaint. The low percentage correct on this question suggests that the design of this feature could be improved to make this information more apparent and easily accessible.

Information related to historical ED data was rated as less useful and less usable than other display concepts. The ED patient flow display (Fig. 1b) and the equipment and resources display (Fig. 1e) presented historical ED patient flow trends and historical wait times for imaging studies and laboratory tests. Both the ED patient flow display overall and the historical wait time feature of the equipment and resources display were rated as less useful than other features. This historical data was included to help ED staff members determine whether current ED conditions, such as current patient volume and current wait times for laboratory tests and imaging studies, were abnormal or matched historical trends. The relatively low mean scores for usefulness and usability suggest that this information may not be useful to providers and nurses when completing typical patient care tasks during a shift, even though it was identified as a need in the first phase of the research. Participants also had lower scores on the (objective) familiarization questions related to this display screen, suggesting that the format in which the information was displayed may have caused interpretation problems. Future research is needed to determine whether such information can be provided in a way that is useful for decision making and patient care.

This study had several limitations. The EDIS being tested was a prototype that had not yet been used in the clinical environment. However, it was developed through a process that involved experts with extensive emergency medicine experience, and it displayed data representative of typical ED operations. The usability evaluations were performed individually in a simulation laboratory setting, which may have affected participants’ use of the EDIS. Future studies will involve implementing and optimizing the EDIS in a real clinical emergency medicine environment. To this end, we have recently developed a module within a deployed hospital EHR system that provides the staff workload display (Fig. 1h) based on real-time patient data. This display concept received high usability and usefulness ratings in this study (particularly from nurses) and was selected by the hospital as particularly useful and worthwhile for further development. We are in the process of implementing and assessing this display in a clinical environment and anticipate implementing more of the display concepts in a similar fashion.

The results presented here are based on subjective assessments of the displays, supplemented with objective measures of familiarization. Multiple methods, including objective measures of performance or situation awareness, can provide a more complete assessment and are recommended for usability studies (Ant Ozok et al., 2014). The tasks performed by participants in this study were designed to allow them to orient themselves to the information presented. However, because the interface did not support actions other than navigation, we did not measure use errors or time to complete tasks (e.g., time to sign up for or discharge a patient). Also, the information presented in this study was not dynamic but represented two static points in time. Therefore, targeted measures of situation awareness such as SAGAT (in which dynamic task simulations are “frozen” and participants respond to questions about variable values and states) were not appropriate. We did, however, collect objective measures of the degree to which participants could read and understand information on the display (through the familiarization questions). In addition, as part of our larger research project, we collected other measures regarding use of these displays in separate studies. In particular, as reported in McGeorge et al. (2015), we compared these displays to control displays that mimicked the systems participants currently used, in a dynamic experimental task performed by two-person teams (a physician and a nurse) in a clinical simulation environment. Objective measures of situation awareness (collected through the SAGAT technique), subjective workload measures, and ratings of cognitive support were collected. That study showed higher levels of cognitive support for the new displays than for the control displays, an increase in situation awareness across points in the scenario for the new displays, and no increase in subjective workload due to use of the new displays. The current study was designed, in part, to complement the simulation study by exposing participants to all aspects of the displays and investigating which particular components were most useful and usable.

Finally, the relatively small sample size (18 total participants, with 9 in each user group) was a limitation of the research. The sample size was driven primarily by the practical challenges of recruiting experienced ED personnel. While the sample was sufficient to identify some between-group differences (e.g., for usability and usefulness measures), we found no significant main effects or interactions for the cognitive support objective scores, which is somewhat surprising given the different needs that might be expected across the two groups. It is possible that larger samples would have revealed additional significant effects.

5. Conclusion

This study evaluated novel EDIS display concepts designed using cognitive systems engineering (CSE) techniques. Overall, the EDIS display concepts received ratings above the neutral point for cognitive support, usefulness, usability, and predicted frequency of use, which supports the use of CSE and user-centered methods for designing health IT displays. Nurses rated usability and usefulness significantly higher than providers did, possibly because the EDIS included displays supporting resource allocation tasks, which nursing staff engage in more often. The findings also support further research to create displays that support individual provider task prioritization, and to investigate how historical information regarding patient volumes and wait times for laboratory tests and imaging studies can best be presented and used.

Acknowledgments

This project was supported by grant number 1R18HS020433 from the Agency for Healthcare Research and Quality (Dr. Bisantz, PI). During the award period, Dr. Fairbanks was supported by an NIH Career Development Award from the National Institute of Biomedical Imaging and Bioengineering (K08EB009090). The authors would like to thank Emilie Roth for her comments on a revised version of this manuscript.

References

- Adobe Flash Builder. 4.6 ed. 2010.

- Ahlstrom U. Work domain analysis for air traffic controller weather displays. J Saf Res. 2005;36(2):159–169. doi: 10.1016/j.jsr.2005.03.001.

- Ant Ozok A, Wu H, Garrido M, Pronovost PJ, Gurses AP. Usability and perceived usefulness of personal health records for preventive health care: a case study focusing on patients’ and primary care providers’ perspectives. Appl Ergon. 2014;45(3):613–628. doi: 10.1016/j.apergo.2013.09.005.

- Aronsky D, Jones I, Lanaghan K, Slovis CM. Supporting patient care in the emergency department with a computerized whiteboard system. JAMIA. 2008;15(3):184–194. doi: 10.1197/jamia.M2489.

- Bisantz AM, Pennathur PR, Fairbanks R, et al. Emergency department status boards: a case study in information systems transition. J Cogn Eng Decis Mak. 2010;4(1):39–68. doi: 10.1518/155534310X495582.

- Clark L, Guarrera TK, McGeorge N, et al. Usability evaluation and assessment of a novel emergency department IT system developed using a cognitive systems engineering approach. Proc. HFES 2014 Int. Symp. Hum. Factors Ergon. Healthc. Lead. Way; Chicago, IL. 2014. pp. 76–80.

- Croskerry P, Sinclair D. Emergency medicine: a practice prone to error? Can J Emerg Med. 2001;3:271–276. doi: 10.1017/s1481803500005765.

- Guarrera T, Stephens RJ, Clark L, et al. Engineering better health IT: cognitive systems engineering of a novel emergency department IT system. Proc Int Symp Hum Factors Ergon Healthc. 2012;1:165.

- Guarrera T, McGeorge N, Stephens RJ, et al. Better pairing of providers and tools: development of an emergency department information system using cognitive engineering approaches. Proc Int Symp Hum Factors Ergon Healthc. 2013;2:63.

- Guarrera T, McGeorge N, Clark L, et al. Cognitive engineering design of an emergency department information system. In: Bisantz A, Fairbanks R, Burns C, editors. Cognitive Engineering for Better Health Care Systems. CRC Press, Taylor & Francis; Boca Raton: 2015.

- Hajdukiewicz J, Burns C. Ecological Interface Design. CRC Press, Inc; Boca Raton, FL: 2004.

- Hertzum M, Simonsen J. Work-practice changes associated with an electronic emergency department whiteboard. Health Inf J. 2013;19:46–60. doi: 10.1177/1460458212454024.

- Husk G, Waxman DA. Using data from hospital information systems to improve emergency department care. Acad Emerg Med. 2004;11(11):1237–1244. doi: 10.1197/j.aem.2004.08.019.

- Laxmisan A, Hakimzada F, Sayan OR, Green R, Zhang J, Patel VL. The multitasking clinician: decision-making and cognitive demand during and after team handoffs in emergency care. Int J Med Inf. 2007;76(11–12):801–811. doi: 10.1016/j.ijmedinf.2006.09.019.

- Lin L, Bisantz AM, Fairbanks R, et al. Development of a simulation environment for the study of IT impact in the ED. 2008 Agency Healthc. Res. Qual. Annu. Conf.; 2008.

- McGeorge NM, Hegde S, Berg RL, et al. Assessment of innovative emergency department information displays in a clinical simulation center. J Cogn Eng Decis Mak. 2015;9(4):329–346. doi: 10.1177/1555343415613723.

- Naikar N, Moylan A, Pearce B. Analysing activity in complex systems with cognitive work analysis: concepts, guidelines and case study for control task analysis. Theor Issues Ergon Sci. 2006;7(4):371–394. doi: 10.1080/14639220500098821.

- Office of the National Coordinator for Health Information Technology (ONC), Department of Health and Human Services. 2015 Edition Health Information Technology (Health IT) Certification Criteria, 2015 Edition Base Electronic Health Record (EHR) Definition, and ONC Health IT Certification Program Modifications. Published 10/16/2015. Downloaded on 10/26/2016 from https://www.federalregister.gov/documents/2015/10/16/2015-25597/2015-edition-health-information-technology-health-it-certification-criteria-2015-edition-base.

- Patterson ES, Rogers ML, Tomolo A, Wears RL, Tsevat J. Comparison of extent of use, information accuracy, and functions for manual and electronic patient status boards. Int J Med Inf. 2010;79(12):817–823. doi: 10.1016/j.ijmedinf.2010.08.002.

- SAS (Version 9.4) 2012.

- Schenkel S. Promoting patient safety and preventing medical error in emergency departments. Acad Emerg Med. 2000;7(11):1204–1222. doi: 10.1111/j.1553-2712.2000.tb00466.x.

- Seamster TL, Redding RE, Kaempf GL. Applied Cognitive Task Analysis in Aviation. Ashgate Publishing Limited; Aldershot, UK: 1997.

- Sharp T, Helmicki A. The application of the ecological interface design approach to neonatal intensive care medicine. Proc Hum Factors Ergon Soc 42nd Annu Meet. 1998;1(2):350–354.

- Truxler R, Roth E, Scott R, Smith S, Wampler J. Designing collaborative automated planners for agile adaptation to dynamic change. Proc Hum Factors Ergon Soc Annu Meet. 2012;56:223–227.

- Vicente KJ. Cognitive Work Analysis. Lawrence Erlbaum Associates; Mahwah, NJ: 1999.

- Wampler J, Roth E, Whitaker R, et al. Using work-centered specifications to integrate cognitive requirements into software development. Proc Hum Factors Ergon Soc Annu Meet. 2006;50:240–244. doi: 10.1037/e577572012-007.

- Watson M, Russell W, Sanderson P. Ecological interface design for anaesthesia monitoring. Australas J Inf Syst. 2000;7(2):109–114.

- Watson M, Sanderson P, Russell WJ. Tailoring reveals information requirements: the case of anaesthesia alarms. Interact Comput. 2004;16(2):271–293. doi: 10.1016/j.intcom.2003.12.002.

- Wears RL, Perry SJ, Wilson S, Galliers J, Fone J. Emergency department status boards: user-evolved artefacts for inter- and intra-group coordination. Cogn Technol Work. 2007;9:163–170. doi: 10.1007/s10111-006-0055-7.

- Yamaguchi Y, Tanabe F. Creation of interface system for nuclear reactor operation: practical implication of implementing EID concept on large complex system. Proc Hum Factors Ergon Soc Annu Meet. 2000:571–574.