Abstract

Comparisons of musicians and non-musicians have revealed enhanced cognitive and sensory processing in musicians, with longitudinal studies suggesting these enhancements may be due in part to experience-based plasticity. Here, we investigate the impact of primary instrument on the musician signature of expertise by assessing three groups of young adults: percussionists, vocalists, and non-musician controls. We hypothesize that primary instrument engenders selective enhancements reflecting the most salient acoustic features to that instrument, whereas cognitive functions are enhanced regardless of instrument. Consistent with our hypotheses, percussionists show more precise encoding of the fast-changing acoustic features of speech than non-musicians, whereas vocalists have better frequency discrimination and show stronger encoding of speech harmonics than non-musicians. There were no strong advantages to specialization in sight-reading vs. improvisation. These effects represent subtle nuances to the signature since the musician groups do not differ from each other in these measures. Interestingly, percussionists outperform both non-musicians and vocalists in inhibitory control. Follow-up analyses reveal that within the vocalists and non-musicians, better proficiency on an instrument other than voice is correlated with better inhibitory control. Taken together, these outcomes suggest the more widespread engagement of motor systems during instrumental practice may be an important factor for enhancements in inhibitory control, consistent with evidence for overlapping neural circuitry involved in both motor and cognitive control. These findings contribute to the ongoing refinement of the musician signature of expertise and may help to inform the use of music in training and intervention to strengthen cognitive function.

Keywords: music, expertise, auditory processing, speech

Graphical Abstract

We present the first evidence that percussionists outperform both non-musicians and vocalists in inhibitory control, implicating potential connections between motor and cognitive control. Subtle nuances to the neural signature of musical expertise are revealed: Percussionists have more precise encoding of the fast-changing acoustic features of speech than non-musicians, whereas vocalists have better frequency discrimination and show stronger encoding of speech harmonics.

Introduction

There is growing evidence that musical experience shapes the brain both structurally (see Gaser & Schlaug, 2003, 2009 for review) and functionally (Schlaug, 2001; Lappe et al., 2008). Cross-sectional comparisons of musicians and non-musicians have revealed musician advantages in various aspects of cognitive and sensory function including attention and inhibitory control (Bugos et al., 2007; Bialystok & Depape, 2009; Strait et al., 2010; Rodrigues et al., 2013; Moreno et al., 2014; Carey et al., 2015; Costa-Giomi, 2015), frequency discrimination (Tervaniemi et al., 2005; Micheyl et al., 2006) and backward masking (Strait et al., 2010) as well as neural processing of speech (Schön et al., 2004; Musacchia et al., 2007; Wong et al., 2007; Parbery-Clark et al., 2009; Strait et al., 2014). Although cross-sectional studies cannot differentiate effects of training from pre-existing differences, an increasing number of longitudinal studies shows the emergence of changes within individuals over time (Moreno et al., 2009; Moreno et al., 2011; Tierney et al., 2013; Chobert et al., 2014; Kraus et al., 2014; Putkinen et al., 2014; Slater et al., 2014; Slater et al., 2015), suggesting that at least some musician enhancements may be due, in part, to experience-based plasticity rather than innate differences between those who pursue music training and those who do not (see Kraus & White-Schwoch, 2016 for review).

The many forms of music-making present an opportunity to investigate how different elements of musical experience may play a role in shaping cognitive and sensory function. A small number of studies have shown that the imprint of musical experience on neural function may be fine-tuned by the specific instrument played, revealing enhancements in the neural processing of relevant sound features, such as an enhanced neural response to the timbre of the musician's own instrument (Pantev et al., 2001; Shahin et al., 2008; Margulis et al., 2009; Strait et al., 2012). There is also evidence that vocalists have unique auditory processing benefits over non-musicians and even instrumentalists of a similar level of expertise (Nikjeh et al., 2008; Halwani et al., 2011); however, this group has received relatively little attention in research to date.

There are very few studies investigating differential effects of musical expertise on cognitive function, although initial evidence suggests that cognitive enhancements may be independent of instrument (Carey et al., 2015) and extend to vocalists (Bialystok & Depape, 2009). However, Carey et al. (2015) found only weak evidence overall for the transfer of musical training to non-musical tasks, and the factors contributing to the generalization of effects remain a point of ongoing discussion (for example, see Benz et al., 2015; Costa-Giomi, 2015 for review). It has been suggested that broader cognitive benefits of music training may be mediated by inhibitory control (Degé et al., 2011; Moreno & Farzan, 2015); it is therefore of particular interest to identify the components of musical activity that may be effective in strengthening inhibitory control.

Here we assessed cognitive, perceptual and neural measures in two musician groups (percussionists and vocalists) and in a control group of non-musicians. We recruited musicians from a wide range of musical backgrounds and adopted less stringent musicianship requirements than previous studies with respect to age of onset of musical training, years of practice and current amount of practice, with the goal of teasing apart different aspects of expertise. We focused on measures that have previously been associated with a musician advantage in young adults and asked whether these advantages are influenced by a relative emphasis on rhythm and timing (percussion) or pitch and melody (voice). In addition, the range of musical backgrounds in our participants allowed us to assess whether cognitive function is influenced by specialization in sight-reading or improvisation.

Cognitive and perceptual measures included attention and inhibitory control, frequency discrimination and backward masking. Neural measures, assessed by the auditory frequency-following response (FFR), included encoding of speech harmonics (Parbery-Clark et al., 2009) and the neural differentiation of speech syllables (Parbery-Clark et al., 2012; Strait et al., 2014), which relies upon precise encoding of fast-changing acoustic information. Based on previous work we hypothesized that different primary instruments engender distinct signatures of neural enhancement, reflecting the acoustic properties most salient to that type of musical practice. Specifically, we predicted that musical experience with an emphasis on rhythm and timing precision (i.e. drums/percussion) is associated with greater neural differentiation of fast-changing characteristics of speech syllables, whereas experience with a relative emphasis on pitch (i.e. vocalists) is associated with enhanced neural encoding of speech harmonics during a sustained vowel. However, we hypothesized that there are general cognitive benefits of musical practice, irrespective of instrument, and we predicted both musician groups would outperform non-musicians in attention and inhibitory control.

Materials and methods

Participants

Participants were young adult males, aged 18–35 years, and were recruited from the Northwestern University community, broader Chicago area and via postings on Craigslist. Participants completed an extensive questionnaire addressing family history, musical practice history and educational background. Participants had no current external diagnosis of a language, reading, or attention disorder, air-conducted audiometric thresholds < 30 dB nHL for octaves from 125–8000 Hz, and a click-evoked auditory brainstem response within lab-internal age-based norms. All procedures were approved by Northwestern University’s Institutional Review Board and participants were compensated for their time.

Groups based on primary instrument (percussion vs. voice)

Participants were divided into three groups: percussionists (n=21), vocalists (n=21) and non-musicians (n=18). The groups did not differ on age, IQ as measured by the Test of Nonverbal Intelligence (TONI) (Brown et al., 1997), or pure-tone hearing thresholds (see Table 1). Musician participants had played consistently for at least the past five years with either drums/percussion or vocals as their primary instrument. The two musician groups (percussionists and vocalists) did not differ with respect to age at which musical training began, years of musical experience, current hours of practice per week, self-rated overall proficiency on their primary instrument or self-rated proficiency in sight-reading or improvisation. Non-musician participants had no more than four years of musical experience across their lifetime, with no regular musical activities within the seven years prior to the study. See Table 1 for summary of group characteristics and statistics, and Table 2 for details regarding instruments played, self-rated proficiency, format of training and total years of musical practice for all participants with musical experience (including non-musicians with prior music training).

Table 1.

| Measure | Non-musicians (n=18): M (SD) | Min | Max | Vocalists (n=21): M (SD) | Min | Max | Percussionists (n=21): M (SD) | Min | Max | Test statistic (p value) |
|---|---|---|---|---|---|---|---|---|---|---|
| Demographics | | | | | | | | | | H (p value) |
| Age (yrs) | Mdn=23.5 (3.5) | 18 | 30 | Mdn=23.0 (3.9) | 18 | 31 | Mdn=24.0 (5.4) | 18 | 35 | 0.208 (.901) |
| Non-verbal IQ (percentile) | 74.4 (19.5) | 21 | 99 | 72.7 (23.3) | 26 | 99 | 72.9 (21.4) | 21 | 95 | 0.017 (.991) |
| Pure-tone thresholds (R) | 8.9 (4.2) | 1.67 | 18.33 | 7.3 (3.6) | 3.33 | 13.33 | 7.9 (4.2) | −1.7 | 13.33 | 1.297 (.523) |
| Pure-tone thresholds (L) | 7.8 (4.9) | 0 | 20 | 7.1 (4.8) | −1.67 | 15 | 6.5 (4.9) | −5 | 16.67 | 0.408 (.816) |
| Musical history* | | | | | | | | | | U (p value) |
| Age of onset of musical training (yrs) | | | | Mdn=7.0 (3.5) | 3 | 18 | Mdn=9.0 (2.4) | 3 | 13 | 291.5 (.071) |
| Years of musical experience | | | | 16.1 (4.8) | 7 | 25 | 15.9 (6.7) | 8 | 32 | 200.0 (.605) |
| Current hours of practice per week | | | | 10.8 (8.4) | 1 | 30 | 12.4 (8.0) | 1 | 30 | 234.0 (.530) |
| Self-rated proficiency on primary instrument | | | | 8.2 (1.4) | 5 | 10 | 8.8 (1.2) | 6 | 10 | 281.5 (.115) |
| Sight-reading proficiency on primary instrument | | | | 6.9 (2.5) | 2 | 10 | 6.6 (2.4) | 1 | 10 | 197.0 (.548) |
| Improvisation proficiency on primary instrument | | | | 6.9 (1.7) | 4 | 10 | 7.5 (2.5) | 2 | 10 | 364.5 (.262) |

*Non-musician participants were excluded from group comparisons related to musical history.

Table 2.

| Primary instrument (overall proficiency rating) | Sight-reading proficiency (primary instrument) | Improvisation proficiency (primary instrument) | Other instruments (proficiency rating) | Format(s) of training | Years of musical practice |
|---|---|---|---|---|---|
| Voice (6) | 4 | 6 | Piano (3) | Private | 15 |
| Voice (8) | 6 | 8 | Piano (3) | Private, group | 15 |
| Voice (8) | 8 | 8 | Guitar (6), drums (6), bass (3), piano (2) | Private, group, self-taught | 15 |
| Voice (7) | 7 | 4 | Piano (1) | Private, group | 7 |
| Voice (8) | 7 | 4 | | Group | 12 |
| Voice (7) | 8 | 5 | Piano (3) | Private, group, self-taught | 17 |
| Voice (10) | 6 | 8 | Flute (8) | Private, group | 13 |
| Voice (9) | 10 | 10 | Bass (5), piano (4), percussion (5), guitar (6) | Private, group, self-taught | 20 |
| Voice (10) | 8 | 7 | Piano (4) | Private, group | 19 |
| Voice (8) | 2 | 8 | Trombone (3), harmonica (3) | Private, group, self-taught | 20 |
| Voice (8) | 9 | 8 | Piano (5) | Private | 16 |
| Voice (10) | 7 | 10 | Piano (5) | Group, self-taught | 14 |
| Voice (7) | 5 | 7 | Piano (2) | Private, group | 9 |
| Voice (9) | 8 | 6 | Piano (6), flute (5), violin (3) | Private, self-taught | 19 |
| Voice (9) | 9 | 8 | Guitar (9), drums (7), piano (6) | Private, self-taught | 21 |
| Voice (10) | 10 | 7 | Guitar (5), piano (4) | Private, group, self-taught | 25 |
| Voice (9) | 8 | 7 | Piano (5) | Private, group, self-taught | 14 |
| Voice (9) | 10 | 8 | | Private, group | 23 |
| Voice (8) | 2 | 4 | Guitar (8), banjo (5), saxophone (5), bass (5) | Private, self-taught | 22 |
| Voice (7) | 9 | 6 | Piano (2) | Suzuki, private | 13 |
| Voice (5) | 3 | 6 | Trombone (6) | Private, group | 10 |
| Drum set (9) | 8 | 9 | Piano, cello | Private, group, Suzuki | 13 |
| Drum set (7) | 7 | 6 | | Private, group, self-taught | 8 |
| Snare drum, drum set (10) | 1 | 6 | | Private, group | 10 |
| Drum set (8) | 2 | 9 | Guitar (8), piano (2) | Private, self-taught | 24 |
| Percussion (10) | 7 | 7 | | Private | 16 |
| Drum set, percussion (6) | 8 | 2 | | Private, group | 8 |
| Drum set, percussion (9) | 6 | 7 | | Private, self-taught | 11 |
| Percussion (9) | 7 | 6 | | Private | 22 |
| Drum set (10) | 8 | 10 | Guitar (5) | Group, self-taught | 10 |
| Percussion (9) | 8 | 5 | | Private, group | 17 |
| Drum set, mallets, percussion (10) | 5 | 7 | Voice (6), piano (4) | Private, self-taught | 20 |
| Drum set (8) | 2 | 9 | Guitar (8) | Private, self-taught | 32 |
| Drum set (9) | 7 | 10 | Guitar (7), piano (6), bass (5) | Private, mostly self-taught | 19 |
| Drum set (10) | 8 | 10 | Guitar (4), piano (5) | Private, group, self-taught | 26 |
| Drum set (10) | 9 | 9 | Piano (5), guitar (6), voice (4) | Private, group, self-taught | 24 |
| Percussion (10) | 8 | 10 | | Private | 15 |
| Drum set (10) | 10 | 3 | | Private, group | 14 |
| Percussion (9) | 6 | 4 | Piano (2), voice (1) | Self-taught, Suzuki | 11 |
| Drum set, percussion (7) | 9 | 8 | Piano (4) | Private, group, self-taught | 14 |
| Drum set (7), percussion (5) | 8 | 10 | | Private | 10 |
| Percussion (8) | 5 | 10 | Voice (4), ocarina (2) | Self-taught, group | 9 |
| Non-musician | n/a | n/a | Piano (1), trumpet (1) | Group | 1 |
| Non-musician | n/a | n/a | Piano (1) | Private | 1 |
| Non-musician | n/a | n/a | Trumpet (1) | Group | 1 |
| Non-musician | n/a | n/a | Trumpet (4) | Group | 2 |
| Non-musician | n/a | n/a | Piano (3) | Private | 2 |
| Non-musician | n/a | n/a | Guitar (1) | Private | 2 |
| Non-musician | n/a | n/a | Piano (3), trumpet (3) | Private, group | 3 |
| Non-musician | n/a | n/a | Piano (2) | Private | 3 |
| Non-musician | n/a | n/a | Clarinet (7) | Group | 3 |
| Non-musician | n/a | n/a | Sax (4), piano (2) | Private | 3 |
| Non-musician | n/a | n/a | Handbell (2), voice (2) | Group | 4 |

Groups based on sight-reading and improvisation proficiency

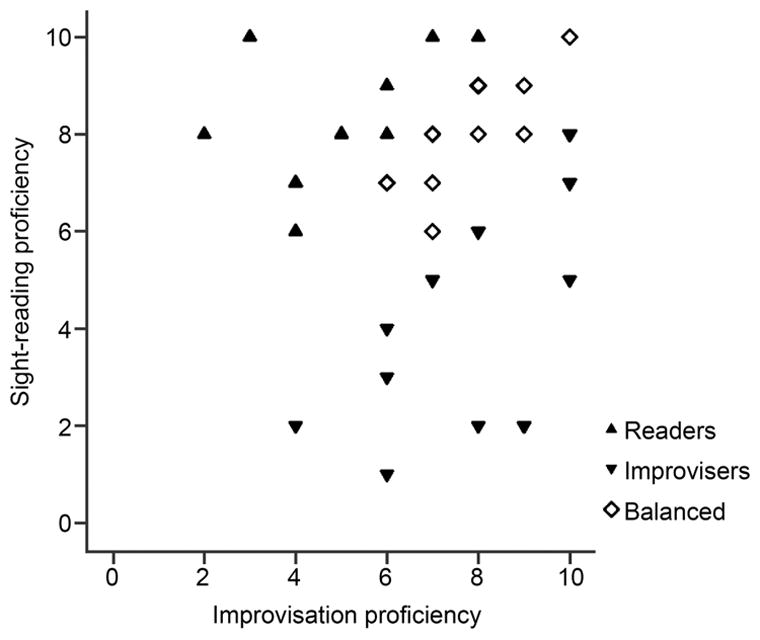

To assess the impact of specialization in sight-reading vs. improvisation on cognitive functions, we used the musicians’ self-reported proficiency scores on their primary instrument (the participants rated themselves on a scale of 1–10 in each skill). Given the range and combinations of different types of specialization across the participants, we grouped the participants by their dominant proficiency rather than treating proficiency as a continuous variable. To assess the extent of specialization, a difference score was calculated by subtracting self-rated proficiency in improvisation from proficiency in sight-reading. We then grouped the musician participants based on their difference score (see Figure 1): if the participant’s self-rated proficiency in sight-reading was at least two points higher than their proficiency in improvisation, they were added to the Readers group (n=11, 7 vocalists), and vice versa for the Improvisers group (n=18, 8 vocalists); if their proficiency scores differed by less than two points, the participant was added to the Balanced group (n=13, 6 vocalists). The non-musicians formed a fourth group (n=18). These groups did not differ on age, IQ or pure-tone thresholds (all p>.05). The three musician groups did not differ in their proficiency on primary instrument, age at which musical training began, hours per week or years of musical practice (all p>.05). The number of percussionists vs. vocalists in each group was balanced (χ2(2)=1.12, p=.572).
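The grouping rule described above can be sketched in a few lines of Python. This is an illustration rather than the analysis code used in the study; the function name `proficiency_group` and the `margin` parameter are our own labels, and the three rating pairs are rows taken from Table 2.

```python
def proficiency_group(sight_reading: int, improvisation: int, margin: int = 2) -> str:
    """Classify a musician by relative self-rated proficiency (1-10 scales)."""
    # Difference score: sight-reading minus improvisation, as in the text.
    difference = sight_reading - improvisation
    if difference >= margin:
        return "Readers"
    if difference <= -margin:
        return "Improvisers"
    return "Balanced"  # scores differ by less than `margin` points


# (sight-reading, improvisation) ratings for three participants in Table 2.
examples = [(7, 4), (6, 8), (9, 8)]
labels = [proficiency_group(s, i) for s, i in examples]
print(labels)  # ['Readers', 'Improvisers', 'Balanced']
```

Applying the same rule to every musician row of Table 2 reproduces the group sizes reported above (11 Readers, 18 Improvisers, 13 Balanced).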

Figure 1.

Participants span a range of proficiency in sight reading and improvisation, and are grouped according to their relative proficiency in each.

Testing Procedures

Attention and inhibitory control were assessed using the Integrated Visual and Auditory Plus Continuous Performance Test (Sandford & Turner, 1994). The test was administered in a soundproof booth on a laptop computer and was divided into four sections: warm-up, practice, test and cool-down. Instructions were delivered by the test software through Sennheiser HD 25-1 headphones, with corresponding visual cues. During the warm-up, participants were instructed to click the mouse when they saw or heard a “1”; the test proceeded with a 20-trial warm-up during which only the number “1” was spoken or presented visually, 10 times each. Next, participants completed a practice session during which they were reminded of the same instructions but were also asked not to click the mouse when they saw or heard a “2”; further practice trials were presented (10 auditory and 10 visual targets). During the main test, choice reaction time was recorded for participants’ responses to the target (“1”) and foil (“2”) stimuli on five sets of 100 trials for a total of 500 trials. Each set consisted of two blocks of 50 trials, with each trial lasting 1.5 s. The visual targets were presented for 167 ms and were 4 cm high, while the auditory stimuli lasted 500 ms and were spoken by a female speaker.

The first block of each set of the main test collects a measure of impulsivity by creating a ratio of target to foil of 5.25:1.0, resulting in 84% of trials (or 42 out of 50, per block) presenting targets intermixed with eight foils. The second block collects a measure of inattention by reversing the ratio and presenting many foils and few targets (165 targets over all five sets). Stimuli are presented in a pseudo-random order of visual and auditory stimuli. The entire assessment, including the introduction, practice, test and cool-down, lasts 20 min. The assessment generates age-normed composite scores for the “full scale attention quotient” and “full scale response control quotient,” a measure of inhibitory control.
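The trial counts for the main test can be checked with a few lines of arithmetic. The sketch below is illustrative only: the variable names are ours, and the duration it computes covers only the 500 main test trials, not the warm-up, practice and cool-down sections.

```python
from fractions import Fraction

TRIALS_PER_BLOCK = 50
TARGETS_PER_IMPULSIVITY_BLOCK = 42  # targets in the first block of each set
SETS = 5
BLOCKS_PER_SET = 2
TRIAL_SECONDS = 1.5

foils = TRIALS_PER_BLOCK - TARGETS_PER_IMPULSIVITY_BLOCK        # foils per block
ratio = Fraction(TARGETS_PER_IMPULSIVITY_BLOCK, foils)          # target:foil ratio
target_share = TARGETS_PER_IMPULSIVITY_BLOCK / TRIALS_PER_BLOCK # proportion of targets
total_trials = SETS * BLOCKS_PER_SET * TRIALS_PER_BLOCK
main_test_minutes = total_trials * TRIAL_SECONDS / 60

print(foils, float(ratio), target_share, total_trials, main_test_minutes)
# 8 5.25 0.84 500 12.5
```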

Frequency discrimination and backward masking thresholds were collected using sub-tests of the IHR Multicentre Battery for Auditory Processing (IMAP, developed by the Medical Research Council Institute of Hearing Research, Nottingham, UK). The test battery was administered in a sound-attenuated booth using a laptop computer. Responses were recorded using a 3-button response box. Stimuli were presented diotically through Sennheiser HD 25-1 headphones and were accompanied by animated visual stimuli. The subtests used an identical response paradigm, visual cues and response feedback. Each subtest was initiated by a practice session of easy trials, consisting of the same stimuli used for initial trials in each subtest (a 90 dB SPL target tone for backward masking and a 50% frequency difference between the target and standard tones for frequency discrimination). Correct responses on 4 out of 5 practice trials were required to continue. All participants achieved a minimum of 4 out of 5 correct responses for all practice sessions.

Frequency discrimination

The frequency discrimination paradigm employed a cued three-alternative forced choice presented as an animated computer game in which each of three characters opened their mouths to “speak” a sound. The target (“odd-one-out”) signal was presented with equal probability in one of the three intervals amidst a standard 1000 Hz tone that was presented twice for each trial. All tones had equal durations (200 ms) and were separated from one another by 400 ms. All stimuli incorporated 10 ms cosine ramps. The target differed in frequency from the standard, initially 50% higher in frequency but gaining in proximity to the standard with successful performance according to an adaptive staircase model (3 down, 1 up) that incorporated three diminishing step sizes (see Amitay et al., 2006 for further description). Incorrect responses resulted in a greater percent difference between the target and standard tones. Each of the cartoon characters corresponded to one of the three buttons on the response box and participants indicated which cartoon character presented the target by pressing the corresponding button. After correct responses, the character that “spoke” the target danced. Participants were given unlimited time to respond (response times were not logged). Trials continued until a total of three reversals was obtained. Threshold was determined by calculating the mean percent difference between the target and standard presented in the final two trials (Amitay et al., 2006; Moore et al., 2008).
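To make the adaptive procedure concrete, the following Python sketch simulates a 3-down, 1-up track that starts at a 50% frequency difference, stops after three reversals and takes the mean of the final two trials as threshold. Several details are assumptions made for illustration and are not taken from the IMAP software: the multiplicative step factors (2.0, 1.41, 1.19), the rule that the step shrinks after each reversal, and the deterministic simulated listener. See Amitay et al. (2006) for the actual procedure.

```python
def run_staircase(respond, start=50.0, factors=(2.0, 1.41, 1.19),
                  n_reversals=3, max_trials=200):
    """Simulate a 3-down, 1-up track on the percent frequency difference.

    respond(delta) -> bool reports whether the (simulated) listener is correct.
    The step factors and the shrink-after-reversal rule are hypothetical.
    """
    delta = start
    correct_run = 0      # consecutive correct responses at the current level
    direction = None     # direction of the most recent change: "down" or "up"
    reversals = 0
    history = []         # percent difference presented on each trial
    while reversals < n_reversals and len(history) < max_trials:
        history.append(delta)
        factor = factors[min(reversals, len(factors) - 1)]
        if respond(delta):
            correct_run += 1
            if correct_run < 3:
                continue  # three correct in a row are needed to step down
            new_direction, delta = "down", delta / factor
        else:
            new_direction, delta = "up", delta * factor  # one error: easier
        correct_run = 0
        if direction is not None and new_direction != direction:
            reversals += 1
        direction = new_direction
    last_two = history[-2:]
    threshold = sum(last_two) / len(last_two)
    return threshold, history


# Hypothetical listener who is correct whenever the difference is at least 1%.
threshold, history = run_staircase(lambda delta: delta >= 1.0)
print(len(history), round(threshold, 2))
```

With this idealized listener the track converges on a value close to the listener's true limit of 1%; real listeners respond probabilistically, so real tracks are noisier.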

Backward masking

The IMAP test battery includes two backward masking sub-tests, one with a 50 ms gap between the target and noise and one with no gap; we limited our analyses to the more difficult “no gap” condition, described here. Participants were instructed to attend to the computer screen and listen to a sequence of three “noise sounds” (bandpass noise with a center frequency of 1000 Hz, a width of 800 Hz, a duration of 300 ms, and a fixed spectrum level of 30 dB). A 20 ms, 1000 Hz target tone with 10 ms cosine ramp occurred immediately prior to the noise. Participants pressed the appropriate button on the response box to indicate which of three trials contained the target tone (as opposed to noise only). From the initial 90 dB SPL presentation, targets decreased in intensity according to an adaptive staircase model (3 down, 1 up) that incorporated three diminishing step sizes (see Amitay et al., 2006 for further description). This procedure yielded a minimum detectable threshold (target dB).

Electrophysiology

Stimuli

The speech syllables [ba], [da] and [ga] were presented in quiet. All stimuli were constructed using a Klatt-based synthesizer (Klatt, 1980). Each syllable is 170 ms long, with voicing (100 Hz F0) onset at 10 ms. The formant transitions last 50 ms and comprise a linearly rising F1 (400–720 Hz) and flat F4 (3300 Hz), F5 (3750 Hz), and F6 (4900 Hz). Ten ms of initial frication are centered at frequencies around F4 and F5. After the 50 ms formant transition period, F2 and F3 remain constant at their transition end point frequencies of 1240 and 2500 Hz, respectively, for the remainder of the syllable. The stimuli differ only in the starting points of F2 and F3. For [ba], F2 and F3 rise from 900 and 2400 Hz, respectively. For [da], F2 and F3 fall from 1700 and 2580 Hz, respectively. For [ga], F2 and F3 fall from 3000 and 3100 Hz, respectively.

Electrophysiological Procedure

The speech syllables were presented binaurally in alternating polarities at 80 dB sound pressure level (SPL) with an inter-stimulus interval of 83 ms (NeuroScan Stim 2; Compumedics) through insert earphones (ER-3; Etymotic Research). Responses were collected using the NeuroScan Acquire 4.3 recording system (Compumedics) with four Ag–AgCl scalp electrodes. Responses were differentially recorded at a 20 kHz sampling rate with a vertical montage (Cz active, forehead ground, and linked earlobe references), an optimal montage for recording brainstem activity (Galbraith et al., 1995; Chandrasekaran & Kraus, 2010). Contact impedance was 2 kΩ or less between electrodes. Six thousand artifact-free sweeps were recorded for each condition, with each condition lasting between 23 and 25 min. Participants watched a silent, captioned movie of their choice to facilitate a still yet wakeful state for the recording session. To limit the inclusion of low-frequency cortical activity, brainstem responses were off-line bandpass filtered from 70 to 2000 Hz (12 dB/octave, zero phase-shift). The filtered recordings were then epoched using a −40 to 213 ms time window with the stimulus onset occurring at 0 ms. Any sweep with activity greater than 35 μV was considered artifact and rejected. The subtracted responses were used in analyses, following Strait et al. (2014). Subtracting the responses to the two polarities emphasizes the temporal fine structure (TFS) and enables analysis of phase-locked neural activity in the frequency range in which the [ba] and [ga] stimuli differed (900–2480 Hz). Finally, responses were amplitude-baselined to the pre-stimulus period.
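The artifact rejection and polarity subtraction steps can be illustrated with a toy Python example. This is a sketch under stated assumptions, not the laboratory's pipeline: the function names are ours, the rejection criterion is applied to absolute amplitude, the subtracted response is halved (a scaling convention we chose), and filtering and baselining are omitted. The toy sweeps are built from a component that keeps its sign across polarities (`envelope`) and one that inverts with stimulus polarity (`fine_structure`).

```python
def average(sweeps):
    """Point-by-point mean across equal-length sweeps."""
    return [sum(samples) / len(sweeps) for samples in zip(*sweeps)]


def reject_artifacts(sweeps, limit_uv=35.0):
    """Keep sweeps whose absolute amplitude never exceeds the limit (microvolts)."""
    return [s for s in sweeps if max(abs(v) for v in s) <= limit_uv]


def subtracted_response(sweeps_pos, sweeps_neg, limit_uv=35.0):
    """Average each polarity after rejection, then take half the difference."""
    pos = average(reject_artifacts(sweeps_pos, limit_uv))
    neg = average(reject_artifacts(sweeps_neg, limit_uv))
    return [(p - n) / 2 for p, n in zip(pos, neg)]


# Epoch length implied by a -40 to 213 ms window at a 20 kHz sampling rate.
samples_per_epoch = (213 - (-40)) * 20_000 // 1000  # 5060 samples

# Toy data (hypothetical values, in microvolts).
envelope = [1.0, 2.0, 1.0, 0.0]          # same sign for both polarities
fine_structure = [0.5, -0.5, 0.5, -0.5]  # inverts with stimulus polarity
pos_sweep = [e + f for e, f in zip(envelope, fine_structure)]
neg_sweep = [e - f for e, f in zip(envelope, fine_structure)]
artifact = [100.0, 0.0, 0.0, 0.0]        # exceeds 35 microvolts, so it is dropped

result = subtracted_response([pos_sweep, pos_sweep, artifact], [neg_sweep, neg_sweep])
print(result)  # [0.5, -0.5, 0.5, -0.5]
```

In this toy case the subtraction cancels the polarity-invariant component and recovers the inverting component exactly, which is the sense in which subtraction emphasizes the temporal fine structure.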

Analytical and statistical methods

Frequency-following response

Neural encoding of speech harmonics

All data analyses were carried out in MATLAB 7.5.0 (Mathworks) with custom-coded routines. The neural data processing routines are available as part of a MATLAB toolbox from the Auditory Neuroscience Laboratory website. To assess the neural encoding of the stimulus spectrum, a fast Fourier transform was performed on the steady-state portion of the response (60–180 ms). Average spectral amplitudes of specific frequency bins were calculated from the resulting amplitude spectrum. As described in Parbery-Clark et al. (2009), each bin was 60 Hz wide and centered on the stimulus f0 (100 Hz) and the subsequent harmonics H2–H10 (200–1000 Hz; whole-integer multiples of the f0). To create a composite score representing the strength of the overall harmonic encoding, the average amplitudes of the H2 to H10 bins were summed. Analyses were performed on the composite harmonic measure since this is where a musician advantage was previously observed (Parbery-Clark et al., 2009).
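The composite harmonic measure can be sketched as follows. This Python example is not the MATLAB toolbox code: it evaluates a direct single-frequency Fourier sum rather than an FFT, samples each 60 Hz bin every 10 Hz (the `step` parameter is a hypothetical choice), and runs on synthetic tones at a toy sampling rate. Function names are ours.

```python
import cmath
import math


def amplitude_at(signal, fs, freq):
    """Amplitude of `signal` at `freq` Hz from a single-frequency Fourier sum."""
    acc = sum(x * cmath.exp(-2j * math.pi * freq * k / fs)
              for k, x in enumerate(signal))
    return 2 * abs(acc) / len(signal)


def harmonic_composite(response, fs, f0=100.0, harmonics=range(2, 11),
                       bin_width=60.0, step=10.0):
    """Sum over H2-H10 of the mean amplitude in a bin centred on each harmonic."""
    n_steps = int(bin_width / step)
    total = 0.0
    for h in harmonics:
        centre = h * f0
        freqs = [centre - bin_width / 2 + i * step for i in range(n_steps + 1)]
        total += sum(amplitude_at(response, fs, f) for f in freqs) / len(freqs)
    return total


fs = 4000                 # toy sampling rate (the recordings used 20 kHz)
n = fs * 120 // 1000      # 120 ms, the length of the 60-180 ms analysis window
h2_tone = [math.sin(2 * math.pi * 200 * k / fs) for k in range(n)]   # energy at H2
f0_tone = [math.sin(2 * math.pi * 100 * k / fs) for k in range(n)]   # energy at f0 only

print(round(amplitude_at(h2_tone, fs, 200), 6))  # 1.0
print(harmonic_composite(h2_tone, fs) > harmonic_composite(f0_tone, fs))  # True
```

A response with energy at a harmonic yields a larger composite than one with energy only at the fundamental, because the f0 bin is excluded from the H2–H10 sum.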

Neural differentiation of speech syllables

A cross-phaseogram was constructed according to Skoe et al. (2011) and provides a frequency- and time-specific measure of phase differences between two neural responses (/ba/ and /ga/). Based on Strait et al. (2014), analyses were constrained to 900–1400 Hz. Comparisons were performed on the time region corresponding to the dynamic formant transition (5–45 ms), where differences between musicians and non-musicians were previously observed.

Statistical Analyses

All statistical analyses were conducted using standard functions of SPSS (version 23.0, SPSS Inc., Chicago, IL). Statistical analyses were constrained to those measures in which previous studies had revealed a musician advantage. Kruskal-Wallis H tests were used for comparisons with three or more independent samples (i.e. Percussionists, Vocalists and Non-musicians, or Readers, Improvisers, Balanced and Non-musicians), with subsequent post hoc pairwise Mann-Whitney U tests conducted where significant group effects were found. Alpha levels were adjusted for multiple comparisons, as indicated in the text.
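For readers unfamiliar with the Kruskal-Wallis test, the H statistic can be computed from pooled ranks as in the Python sketch below. This is a textbook implementation with a tie correction, written for illustration; it is not the SPSS routine, the scores are hypothetical, and the last line reflects our reading that the adjusted alpha of .008 reported in the Results corresponds to dividing .05 across the six measures in Table 4, which the text does not state explicitly.

```python
from itertools import chain


def average_ranks(values):
    """Rank values from 1..N, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_h(*groups):
    """Kruskal-Wallis H with tie correction (undefined if all values are equal)."""
    pooled = list(chain.from_iterable(groups))
    n = len(pooled)
    ranks = average_ranks(pooled)
    weighted = 0.0
    start = 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        weighted += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12 / (n * (n + 1)) * weighted - 3 * (n + 1)
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    correction = 1 - sum(t ** 3 - t for t in counts.values()) / (n ** 3 - n)
    return h / correction


# Hypothetical scores for three groups of three participants each.
h = kruskal_wallis_h([1, 2, 3], [4, 5, 6], [7, 8, 9])
print(round(h, 3))  # 7.2

# Assumed Bonferroni-style adjustment across six measures (our interpretation).
adjusted_alpha = 0.05 / 6
print(round(adjusted_alpha, 4))  # 0.0083
```

H is compared against a chi-square distribution with (number of groups − 1) degrees of freedom, which is why the main effects in the Results are reported as χ2(2) or χ2(3).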

Results

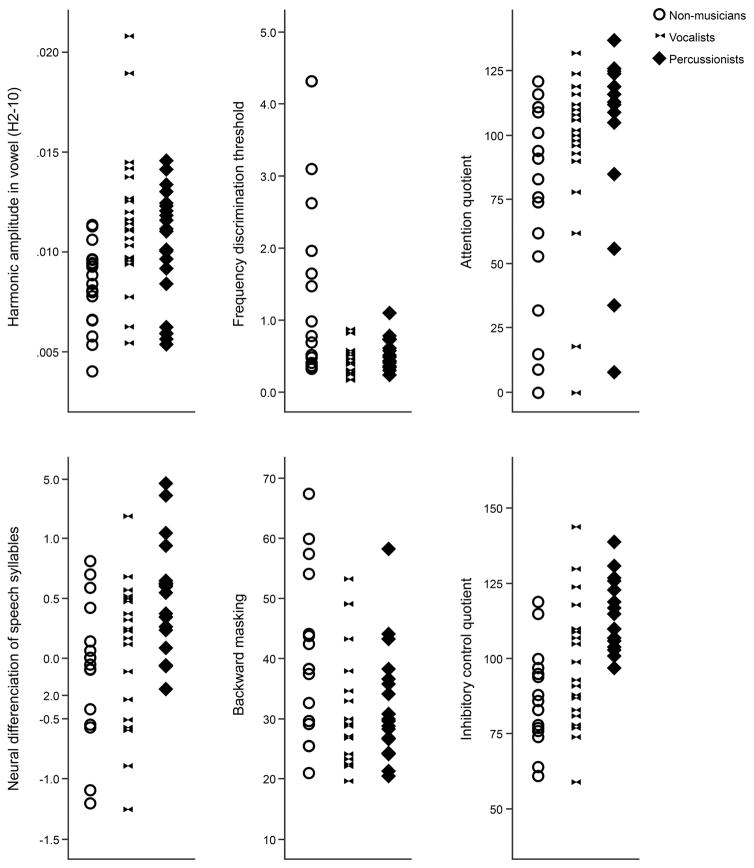

Scatterplots of individual data for all dependent variables are provided in Figure 2. Performance across measures is summarized in Table 3, and a summary of group comparison statistics is presented in Table 4.

Figure 2.

Scatterplots showing distribution of performance in cognitive and perceptual measures across groups of non-musicians, vocalists and percussionists.

Table 3.

| Measure | Non-musicians (n=18): M (SD), Mdn | Vocalists (n=21): M (SD), Mdn | Percussionists (n=21): M (SD), Mdn |
|---|---|---|---|
| Attention quotient | 71.7 (39.6), 79.5 | 94.2 (32.5), 102.0 | 102.4 (34.8), 114.5 |
| Inhibitory control quotient | 87.6 (16.2), 87.0 | 98.9 (20.7), 99.0 | 114.0 (11.5), 110.0 |
| Frequency discrimination (threshold, % difference) | 2.09 (2.89), 0.89 | 0.45 (0.21), 0.42 | 0.53 (0.21), 0.47 |
| Backward masking (threshold, dB) | 40.5 (13.6), 38.3 | 32.4 (10.9), 29.0 | 32.1 (8.9), 29.8 |

Table 4.

| Measure | Main effect (Kruskal-Wallis H test), PERC vs. VOC vs. NM: χ2(2) (p value) | PERC vs. NM: U (p) | PERC vs. NM: effect size (r) | VOC vs. NM: U (p) | VOC vs. NM: effect size (r) | PERC vs. VOC: U (p) | PERC vs. VOC: effect size (r) |
|---|---|---|---|---|---|---|---|
| Attention quotient | 9.315 (.009) | | | | | | |
| Inhibitory control quotient | 16.604 (<.001) | −22.160 (<.001) | −0.691 | −8.997 (.271) | −0.286 | −13.163 (.031) | −0.410 |
| Frequency discrimination | 10.535 (.005) | 11.000 (.133) | 0.335 | 17.7 (.004) | 0.539 | −6.700 (.582) | −0.206 |
| Backward masking | 4.730 (.094) | | | | | | |
| Harmonic encoding of vowel (H2-10) | 11.336 (.003) | −13.278 (.054) | −0.379 | −18.5 (.003) | −0.527 | 5.190 (1.0) | 0.149 |
| Neural differentiation of syllables | 9.962 (.007) | −16.3 (.009) | −0.496 | −4.212 (1.0) | −0.128 | −12.050 (.058) | −0.369 |

Pairwise comparisons were carried out using Mann-Whitney U tests following main effects that were significant at the adjusted alpha level (p<.008). Post hoc p values are adjusted for multiple pairwise comparisons.

Group comparisons based on primary instrument

Comparison of the Percussionist, Vocalist and Non-musician groups revealed significant main effects of group (at adjusted alpha level p<.008) for inhibitory control (χ2(2)=16.604, p<.001), frequency discrimination (χ2(2)=10.535, p=.005), neural encoding of speech harmonics (χ2(2)=11.336, p=.003) and the neural differentiation of syllables (χ2(2)=9.962, p=.007).

Post hoc pairwise comparisons revealed that the Vocalists outperformed Non-musicians on frequency discrimination (U=17.7, p=.004, r=.539) and had stronger neural encoding of harmonics than Non-musicians (U=−18.5, p=.003, r=−.527); the Percussionists did not differ from either Vocalists or Non-musicians on these measures.

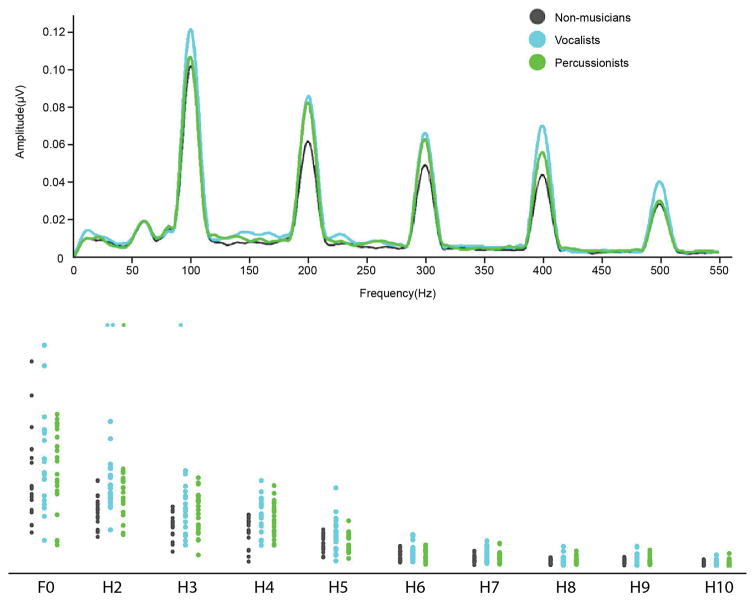

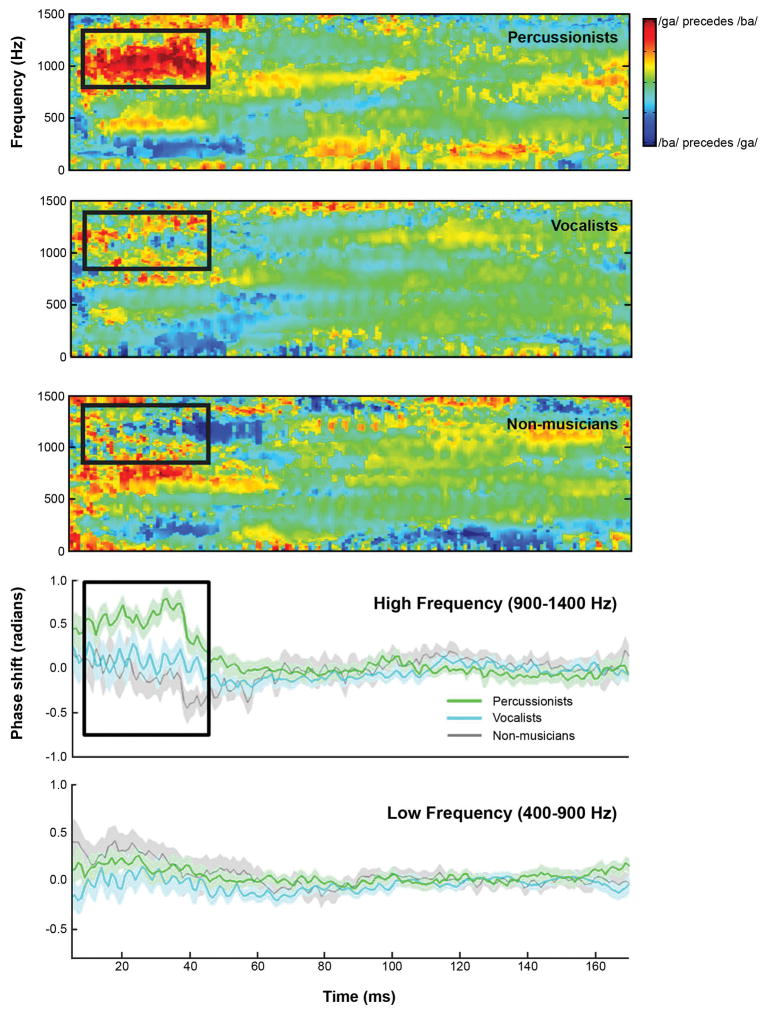

Percussionists had stronger neural differentiation of speech syllables than Non-musicians (U=−16.262, p=.009, r=−.496); Vocalists did not differ from either group on this measure. Individual participants’ spectral amplitudes by frequency (F0-H10) are shown in Figure 3 and the neural differentiation of speech sounds is illustrated by group in Figure 4.

Figure 3.

Scatterplots show the spectral energy in response to the vowel portion of the speech sound /da/ presented in quiet, at frequency bins centered at the fundamental frequency (100 Hz) and its harmonics. Vocalists have stronger encoding of speech harmonics in quiet at H2, H3 and H4. Percussionists also show stronger encoding of H2 than non-musicians. Inset shows averaged spectral amplitudes by group in the lower harmonics, where group differences were observed.

Figure 4.

Percussionists show greater neural differentiation of speech syllables [ba] and [ga] than the vocalist and non-musician groups, reflecting more precise encoding of the fast timing characteristics of speech. In the top three panels, this is visualized by the red patch in the high frequency range (900–1400 Hz) in the consonant-vowel transition portion of the response (between 5 and 45 ms). In the lower two panels, percussionists show greater phase shifts during the transition portion. This is evident in the high frequency range, but not in the low frequency range, shown for comparison. The black rectangle indicates the region of interest (5–45 ms, 900–1400 Hz).

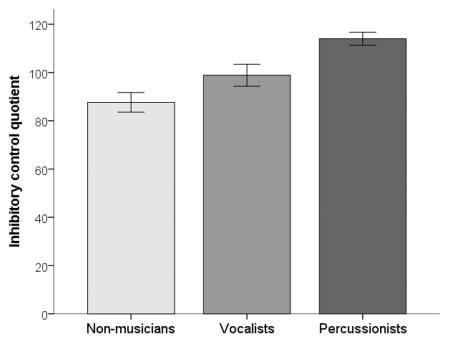

Contrary to our predictions, Percussionists outperformed both Non-musicians and Vocalists on inhibitory control (Percussionists vs. Non-musicians: U=−22.160, p<.001, r=−.691; Percussionists vs. Vocalists: U=−13.163, p=.031, r=−.410); group differences in attention were not significant with adjustment for multiple comparisons (see Table 4).

Group comparisons based on proficiency bias revealed a significant effect of group for inhibitory control (χ2(3)=10.643, p=.014). Post hoc pairwise comparisons determined that the Balanced group significantly outperformed the Non-musicians (U=17.841, p=.017, r=.536). No other pairwise comparisons were significant (p>.05).

To confirm that the effect of primary instrument on inhibitory control was not driven by general factors (age and non-verbal IQ), we performed a hierarchical regression analysis with inhibitory control as the dependent variable. In the first step, we included age and non-verbal IQ as predictor variables. The resulting model did not significantly predict variance in inhibitory control (F=1.091, p=.343, R²=.040, adjusted R²=.003). In the second step, we added group (Percussionists, Vocalists, Non-musicians) as a predictor variable. This resulted in a significant improvement in the model (R² change=.281, p<.001), with the overall model significantly predicting 28% of variance in inhibitory control (F=8.038, R²=.321, adjusted R²=.281).
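The two-step logic of a hierarchical regression can be illustrated with a short sketch. This is not the authors' analysis script: it uses NumPy with synthetic data, the names `age`, `iq`, `group` and `score` are hypothetical, and entering group as two dummy variables is an assumption, since the text does not state how group was coded.

```python
import numpy as np

def r_squared(X, y):
    """R^2 of an ordinary least squares fit of y on X (intercept added here)."""
    X1 = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(X1, y, rcond=None)
    resid = y - X1 @ beta
    ss_res = float(resid @ resid)
    ss_tot = float(((y - y.mean()) ** 2).sum())
    return 1.0 - ss_res / ss_tot

def hierarchical_step(X_base, X_added, y):
    """Compare a base model with a model that adds further predictors.

    Returns R^2 of step 1, R^2 of step 2, the R^2 change, and the
    F statistic for that change.
    """
    n = len(y)
    X_full = np.column_stack([X_base, X_added])
    r2_1 = r_squared(X_base, y)
    r2_2 = r_squared(X_full, y)
    k_full = X_full.shape[1]
    k_added = k_full - X_base.shape[1]
    f_change = ((r2_2 - r2_1) / k_added) / ((1.0 - r2_2) / (n - k_full - 1))
    return r2_1, r2_2, r2_2 - r2_1, f_change

# Synthetic data for illustration only (not the study data).
rng = np.random.default_rng(0)
n = 60
age = rng.normal(22, 3, n)
iq = rng.normal(60, 10, n)
group = np.repeat([0, 1, 2], n // 3)  # hypothetical codes for three groups
dummies = np.column_stack([group == 1, group == 2]).astype(float)
score = 50 + 8 * (group == 2) + rng.normal(0, 5, n)

X_base = np.column_stack([age, iq])
r2_1, r2_2, delta, f_change = hierarchical_step(X_base, dummies, score)
print(f"step 1 R2={r2_1:.3f}, step 2 R2={r2_2:.3f}, "
      f"R2 change={delta:.3f}, F change={f_change:.2f}")
```

The R² change isolates the variance explained by group beyond the covariates entered in the first step, which is the quantity the significance test of the second step addresses.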

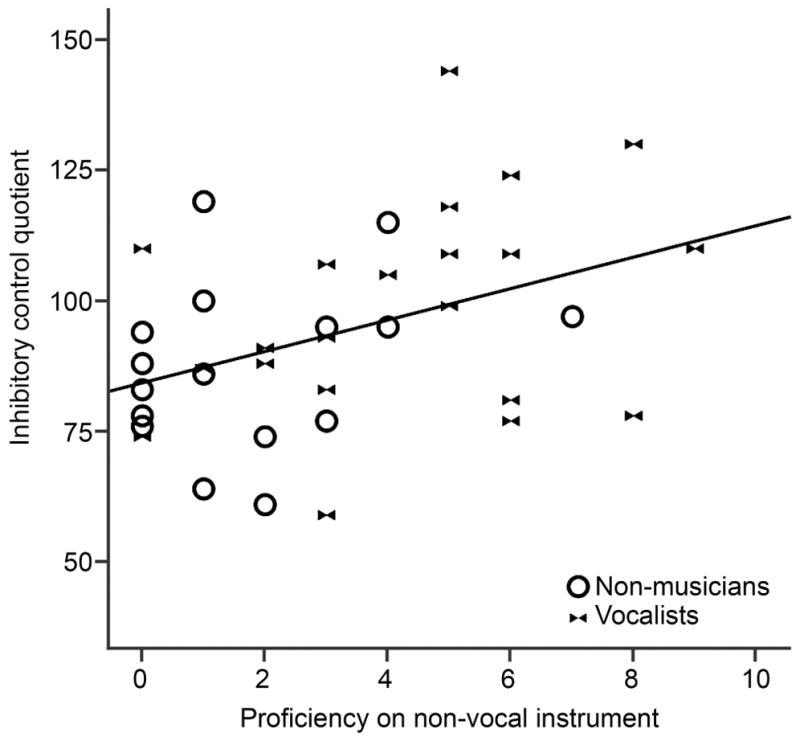

Inhibitory control and proficiency in secondary instruments

To further elucidate the factors contributing to inhibitory control performance, we included an exploratory analysis to determine whether the wide range of scores in the Vocalist and Non-musician groups could be explained by variation in experience with other instruments (i.e. Vocalists’ secondary instruments or Non-musicians’ prior instrumental experience). Within these participants (i.e. excluding the Percussionists) we found a significant correlation between self-rated proficiency on non-vocal instruments and inhibitory control (r=.400, p=.014, see Figure 5), which was strengthened when age and IQ were added as covariates (r=.437, p=.009).
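A correlation with covariates, as reported above, is commonly computed as a partial correlation: both variables are regressed on the covariates and the residuals are then correlated. The sketch below assumes a Pearson-type coefficient (the text does not state the coefficient type) and uses synthetic data with hypothetical variable names; it is an illustration rather than the authors' procedure.

```python
import numpy as np

def residualize(v, covariates):
    """Residuals of v after a least squares fit on the covariates plus intercept."""
    Z = np.column_stack([np.ones(len(v)), covariates])
    beta, *_ = np.linalg.lstsq(Z, v, rcond=None)
    return v - Z @ beta

def partial_corr(x, y, covariates):
    """Pearson correlation between x and y with the covariates partialled out."""
    rx = residualize(x, covariates)
    ry = residualize(y, covariates)
    return float(np.corrcoef(rx, ry)[0, 1])

# Synthetic data for illustration only (not the study data).
rng = np.random.default_rng(1)
n = 40
age = rng.normal(22, 3, n)
iq = rng.normal(60, 10, n)
proficiency = rng.uniform(0, 10, n)  # hypothetical self-rated proficiency
inhibitory = 40 + 1.5 * proficiency + 0.2 * iq + rng.normal(0, 6, n)

r_zero_order = float(np.corrcoef(proficiency, inhibitory)[0, 1])
r_partial = partial_corr(proficiency, inhibitory, np.column_stack([age, iq]))
print(f"zero-order r={r_zero_order:.3f}, partial r={r_partial:.3f}")
```

When a covariate contributes variance to the outcome that is unrelated to the predictor, removing it can raise the correlation, which is one way a coefficient may strengthen after covariates are added.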

Figure 5.

Vocalist and non-musician groups do not differ in inhibitory control; however, non-vocal instrumental proficiency is correlated with inhibitory control within these participants.

Discussion

We set out to examine whether musical experience with an emphasis on rhythm (percussionists) vs. pitch (vocalists) is reflected in distinct signatures of expertise. Consistent with our hypotheses we found modest evidence for nuances in the musician signature: In comparison with non-musicians, the vocalists show stronger encoding of speech harmonics, while the percussionists show greater neural differentiation of syllables, which relies upon precise encoding of the fast timing characteristics of speech (e.g. consonants). These outcomes are consistent with the relative emphasis on spectral vs. temporal characteristics of sound in these musicians’ practice.

We also observed enhanced inhibitory control in the Percussionist group, which we had not predicted based on previous research. However, there is accumulating evidence of overlapping neural circuitry between motor and cognitive control, particularly in relation to aspects of coordination and timing (Graybiel, 1997; Brown & Marsden, 1998; Schwartze & Kotz, 2013) and also in processing musical rhythm (Grahn & Brett, 2007; Chen et al., 2008; Grahn, 2012). It is therefore possible that the particular demands of playing percussion, including the extent of motor activation and the coordination of complex rhythmic sequences in time, may provide a “sweet spot” for strengthening neural networks important for cognitive control. Our post hoc observation that Vocalists and Non-musicians with more extensive experience in (non-vocal) instrumental playing showed better inhibitory control is consistent with the idea that more extensive motor activation associated with instrumental playing could contribute to the transfer of musical practice to more general cognitive control. This interaction of rhythm, motor and cognitive factors represents a promising area for future research.

Notably, we found no difference between musicians specialized in either sight-reading or improvisation; rather, it was the Balanced group (musician participants who rated themselves equally proficient in both skills) who outperformed non-musicians in inhibitory control. These preliminary outcomes suggest that, if anything, it may be versatility in playing style that leads to cognitive transfer of training, rather than specific expertise in one format or another. Although these analyses were based on small groups of participants, we note that the effect of instrument was clearly present despite the wide range of proficiency in reading vs. improvisation, suggesting that instrument was a more dominant factor than reading vs. improvisational proficiency in driving this effect. It is also possible that proficiency-based differences may emerge in other measures of neural or cognitive function that were not included in the present study. There is neuroimaging evidence to suggest that the style of playing does influence patterns of brain activation; for example, improvisation is associated with deactivation of dorsolateral prefrontal, lateral orbital and limbic regions, in combination with activation of medial prefrontal and sensorimotor regions, when contrasted with memorized playing or note reading (Limb & Braun, 2008; Donnay et al., 2014).

It is also important to note that primary instrument and style of playing are only some of the potential aspects of musical experience that may influence cognitive and sensory function. The method of teaching used to lay down the fundamentals of music can also affect musical proficiency later on, and may in turn affect the transfer of musical expertise to other domains. Studies of rhythm learning, for example, show that the method used affects the speed and accuracy with which musicians learn (Colley, 1987; Pierce, 1992). Typically used training techniques, such as ear playing, continuous practice, and silent analysis, have each been found to offer distinct benefits, showing that learning methods can be strategically used to develop and improve upon different aspects of musical proficiency (Rosenthal et al., 1988). Interestingly, there is also evidence that continued aural training shapes the way musicians listen to and think about music. It has been found that aurally trained musicians employ different and seemingly more effective strategies to remember melodies. For example, when asked to comment on their thought process during sight reading performance tasks for both instrument playing and singing, a majority of the musicians who had more experience with nontraditional music styles where aural skills are emphasized (i.e., jazz) commented that they could often predict where rhythms and melodies would go (Woody & Lehmann, 2010). These comments, in combination with the finding that the aurally-trained musicians required significantly fewer trials to play or sing the sight-read excerpts than the musicians trained with greater emphasis on reading, suggest that the musicians whose training focused more on aural experience were able to construct more meaningful and effective mental representations. The present findings suggest that versatility could be an important feature of musical experience to consider, in addition to expertise in one specific skill or another. For example, it is possible that an additional factor contributing to the stronger inhibitory control performance in vocalists who are also proficient in additional instruments, or in the musicians with balanced proficiency across reading and improvisational playing, is their experience juggling multiple musical “languages,” similar to the observed bilingual advantage in executive function (Bialystok & Depape, 2009; Bialystok et al., 2012; Krizman et al., 2012; Moreno et al., 2014). This provides further motivation to investigate not only distinct elements of experience in isolation, but also the ways in which the multiple facets of musical practice may complement one another in an enriched diet of expertise.

Our cross-sectional outcomes cannot speak to the causal effects of musical training, and could reflect pre-existing differences as well as experience-based effects. However, our findings are consistent with previous studies showing instrument-specific enhancements of auditory processing, and consistent with a model of auditory learning in which sound features that are meaningful to an individual become enhanced through repeated exposure and engagement with sound. In conjunction with increasing longitudinal evidence showing the emergence of the musician signature with music training (Kraus & White-Schwoch, 2016), these findings suggest that musical training is at least one of the factors contributing to these neural and cognitive enhancements. Most likely there are both genetic and environmental factors at play; for example, it has been shown that personality traits play an important role in the likelihood of an individual continuing with musical practice (Corrigall et al., 2013). Understanding this interaction between inherent predisposition and training is itself an important area for future investigation. It is also important to consider how an individual’s enthusiasm and suitability for their instrument affect outcomes, since it is well established that emotional engagement and reward are important factors in the potential for music to engender neural plasticity (Herholz & Zatorre, 2012).

The rewarding nature of music also makes it an especially powerful vehicle for training and remediation. Musicians have shown advantages in many of the same neural processes that are impaired in individuals with learning and language deficits such as dyslexia and autism, suggesting that music-based programs may be effective in educational and clinical settings to support the development and remediation of language and listening skills (reviewed in Tierney & Kraus, 2013b). Converging evidence reveals links between rhythm abilities and language skills (Thomson & Goswami, 2008; Corriveau & Goswami, 2009; Huss et al., 2011; Tierney & Kraus, 2013a; Slater & Kraus, 2016), suggesting that the transfer of skills from music to speech processing may be mediated in part by enhanced rhythm skills (Shahin, 2011). Rhythmic expertise therefore provides a particularly interesting context for future research given the potential role of rhythm and timing abilities as markers of language development (Holliman et al., 2008; Dellatolas et al., 2009; Tierney & Kraus, 2013a; Woodruff Carr et al., 2014). Intervention studies focusing on rhythm skills have shown some success in the remediation of language difficulties such as dyslexia (Overy, 2000; Overy, 2003; Bhide et al., 2013), suggesting not only an association between these abilities but the potential for training in rhythm to strengthen underlying mechanisms of language processing. Further, we reveal a specific advantage in inhibitory control for the percussionists, who outperform both non-musicians and vocalists. This has interesting implications in the context of recent work identifying executive function as an important factor in determining whether skills developed through musical practice transfer to other domains (Degé et al., 2011; Moreno & Farzan, 2015). Our findings may therefore help to inform future research by highlighting specific aspects of musical experience, such as rhythm and motor activity, which may be of particular value in clinical and educational settings.

Conclusions

The imprint of musical experience on the brain may be fine-tuned by specific elements of musical practice, and the rich diversity of music-making activities within a typical population provides a wealth of opportunity for research. To help bridge the gap between life-long expertise and short-term training and intervention, it is especially important to assess more readily accessible forms of musical activity, such as singing and drumming, which might more easily be incorporated into educational and clinical contexts. Understanding the nuances of the complex relationship between music and brain may shed light on mixed outcomes within the literature; for example, studies comparing musician and non-musician groups may be influenced by other factors such as extent of rhythmic expertise, number of instruments played, or the specific motor activities associated with a given instrument. Further, this line of research may help to inform training strategies for the development of musical skills as well as their transfer to non-musical domains.

Acknowledgments

The authors wish to thank Emily Spitzer and Manto Agouridou for assistance with data collection and processing, and Kali Woodruff Carr and Sebastian Otto-Meyer who provided comments on an earlier version of this manuscript. We also wish to thank Alexandra Parbery-Clark, Dana Strait and Erika Skoe, whose research provided the foundation for this study. This work was supported by the National Institutes of Health grant F31DC014891-01 to J.S. and the National Association of Music Merchants (NAMM). The authors declare no competing financial interests.

Footnotes

Author Contributions

Conceived and designed the experiments: JS NK. Performed the experiments: JS BS AA. Analyzed the data: JS TN. Wrote the paper: JS AA.

Data Accessibility

Data are available on the Open Science Framework at https://osf.io/vx3f7/. These data include performance on the behavioral tests as well as the frequency-following measures used in reported analyses.

References

- Amitay S, Irwin A, Hawkey DJ, Cowan JA, Moore DR. A comparison of adaptive procedures for rapid and reliable threshold assessment and training in naive listeners. J Acoust Soc Am. 2006;119:1616–1625. doi: 10.1121/1.2164988.

- Benz S, Sellaro R, Hommel B, Colzato LS. Music Makes the World Go Round: The Impact of Musical Training on Non-musical Cognitive Functions—A Review. Front Psychol. 2015;6. doi: 10.3389/fpsyg.2015.02023.

- Bhide A, Power A, Goswami U. A Rhythmic Musical Intervention for Poor Readers: A Comparison of Efficacy With a Letter-Based Intervention. Mind, Brain, and Education. 2013;7:113–123.

- Bialystok E, Craik FI, Luk G. Bilingualism: consequences for mind and brain. Trends Cogn Sci. 2012;16:240–250. doi: 10.1016/j.tics.2012.03.001.

- Bialystok E, Depape AM. Musical expertise, bilingualism, and executive functioning. J Exp Psychol Hum Percept Perform. 2009;35:565–574. doi: 10.1037/a0012735.

- Brown L, Sherbenou R, Johnsen SK. Test of nonverbal intelligence. A language free measure of cognitive ability. 1997.

- Brown P, Marsden C. What do the basal ganglia do? The Lancet. 1998;351:1801–1804. doi: 10.1016/s0140-6736(97)11225-9.

- Bugos J, Perlstein W, McCrae C, Brophy T, Bedenbaugh P. Individualized piano instruction enhances executive functioning and working memory in older adults. Aging and Mental Health. 2007;11:464–471. doi: 10.1080/13607860601086504.

- Carey D, Rosen S, Krishnan S, Pearce MT, Shepherd A, Aydelott J, Dick F. Generality and specificity in the effects of musical expertise on perception and cognition. Cognition. 2015;137:81–105. doi: 10.1016/j.cognition.2014.12.005.

- Chandrasekaran B, Kraus N. The scalp-recorded brainstem response to speech: Neural origins and plasticity. Psychophysiology. 2010;47:236–246. doi: 10.1111/j.1469-8986.2009.00928.x.

- Chen JL, Penhune VB, Zatorre RJ. Listening to musical rhythms recruits motor regions of the brain. Cereb Cortex. 2008;18:2844–2854. doi: 10.1093/cercor/bhn042.

- Chobert J, François C, Velay JL, Besson M. Twelve months of active musical training in 8-to 10-year-old children enhances the preattentive processing of syllabic duration and voice onset time. Cereb Cortex. 2014;24:956–967. doi: 10.1093/cercor/bhs377.

- Colley B. A comparison of syllabic methods for improving rhythm literacy. Journal of Research in Music Education. 1987;35:221–235.

- Corrigall KA, Schellenberg EG, Misura NM. Music training, cognition, and personality. Front Psychol. 2013;4. doi: 10.3389/fpsyg.2013.00222.

- Corriveau KH, Goswami U. Rhythmic motor entrainment in children with speech and language impairments: tapping to the beat. Cortex. 2009;45:119–130. doi: 10.1016/j.cortex.2007.09.008.

- Costa-Giomi E. The Long-Term Effects of Childhood Music Instruction on Intelligence and General Cognitive Abilities. Update: Applications of Research in Music Education. 2015;33:20–26.

- Degé F, Kubicek C, Schwarzer G. Music Lessons and Intelligence: A Relation Mediated by Executive Functions. Music Perception: An Interdisciplinary Journal. 2011;29:195–201.

- Dellatolas G, Watier L, Le Normand MT, Lubart T, Chevrie-Muller C. Rhythm reproduction in kindergarten, reading performance at second grade, and developmental dyslexia theories. Arch Clin Neuropsychol. 2009;24:555–563. doi: 10.1093/arclin/acp044.

- Donnay GF, Rankin SK, Lopez-Gonzalez M, Jiradejvong P, Limb CJ. Neural Substrates of Interactive Musical Improvisation: An fMRI Study of ‘Trading Fours’ in Jazz. PLoS One. 2014;9:e88665. doi: 10.1371/journal.pone.0088665.

- Galbraith GC, Arbagey PW, Branski R, Comerci N, Rector PM. Intelligible speech encoded in the human brain stem frequency-following response. Neuroreport. 1995;6:2363–2367. doi: 10.1097/00001756-199511270-00021.

- Gaser C, Schlaug G. Brain structures differ between musicians and non-musicians. J Neurosci. 2003;23:9240–9245. doi: 10.1523/JNEUROSCI.23-27-09240.2003.

- Grahn JA. Neural Mechanisms of Rhythm Perception: Current Findings and Future Perspectives. Top Cogn Sci. 2012;4:585–606. doi: 10.1111/j.1756-8765.2012.01213.x.

- Grahn JA, Brett M. Rhythm and beat perception in motor areas of the brain. J Cogn Neurosci. 2007;19:893–906. doi: 10.1162/jocn.2007.19.5.893.

- Graybiel AM. The basal ganglia and cognitive pattern generators. Schizophr Bull. 1997;23:459–469. doi: 10.1093/schbul/23.3.459.

- Halwani GF, Loui P, Rüber T, Schlaug G. Effects of Practice and Experience on the Arcuate Fasciculus: Comparing Singers, Instrumentalists, and Non-Musicians. Front Psychol. 2011;2:156. doi: 10.3389/fpsyg.2011.00156.

- Herholz SC, Zatorre RJ. Musical training as a framework for brain plasticity: behavior, function, and structure. Neuron. 2012;76:486–502. doi: 10.1016/j.neuron.2012.10.011.

- Holliman AJ, Wood C, Sheehy K. Sensitivity to speech rhythm explains individual differences in reading ability independently of phonological awareness. Br J Dev Psychol. 2008;26:357–367.

- Huss M, Verney JP, Fosker T, Mead N, Goswami U. Music, rhythm, rise time perception and developmental dyslexia: perception of musical meter predicts reading and phonology. Cortex. 2011;47:674–689. doi: 10.1016/j.cortex.2010.07.010.

- Hyde KL, Lerch J, Norton A, Forgeard M, Winner E, Evans AC, Schlaug G. Musical training shapes structural brain development. J Neurosci. 2009;29:3019–3025. doi: 10.1523/JNEUROSCI.5118-08.2009.

- Klatt DH. Software for a cascade/parallel formant synthesizer. J Acoust Soc Am. 1980;67:971–995.

- Kraus N, Slater J, Thompson EC, Hornickel J, Strait DL, Nicol T, White-Schwoch T. Music Enrichment Programs Improve the Neural Encoding of Speech in At-Risk Children. J Neurosci. 2014;34:11913–11918. doi: 10.1523/JNEUROSCI.1881-14.2014.

- Kraus N, White-Schwoch T. Neurobiology of Everyday Communication: What Have We Learned From Music? Neuroscientist. 2016. doi: 10.1177/1073858416653593.

- Krizman J, Marian V, Shook A, Skoe E, Kraus N. Subcortical encoding of sound is enhanced in bilinguals and relates to executive function advantages. Proceedings of the National Academy of Sciences. 2012;109:7877–7881. doi: 10.1073/pnas.1201575109.

- Lappe C, Herholz SC, Trainor LJ, Pantev C. Cortical plasticity induced by short-term unimodal and multimodal musical training. J Neurosci. 2008;28:9632–9639. doi: 10.1523/JNEUROSCI.2254-08.2008.

- Limb CJ, Braun AR. Neural substrates of spontaneous musical performance: an FMRI study of jazz improvisation. PLoS One. 2008;3:e1679. doi: 10.1371/journal.pone.0001679.

- Margulis EH, Mlsna LM, Uppunda AK, Parrish TB, Wong P. Selective neurophysiologic responses to music in instrumentalists with different listening biographies. Hum Brain Mapp. 2009;30:267–275. doi: 10.1002/hbm.20503.

- Micheyl C, Delhommeau K, Perrot X, Oxenham AJ. Influence of musical and psychoacoustical training on pitch discrimination. Hear Res. 2006;219:36–47. doi: 10.1016/j.heares.2006.05.004.

- Moore DR, Ferguson MA, Halliday LF, Riley A. Frequency discrimination in children: Perception, learning and attention. Hear Res. 2008;238:147–154. doi: 10.1016/j.heares.2007.11.013.

- Moreno S, Bialystok E, Barac R, Schellenberg EG, Cepeda NJ, Chau T. Short-term music training enhances verbal intelligence and executive function. Psychol Sci. 2011;22:1425–1433. doi: 10.1177/0956797611416999.

- Moreno S, Farzan F. Music training and inhibitory control: a multidimensional model. Ann N Y Acad Sci. 2015;1337:147–152. doi: 10.1111/nyas.12674.

- Moreno S, Marques C, Santos A, Santos M, Castro SL, Besson M. Musical training influences linguistic abilities in 8-year-old children: more evidence for brain plasticity. Cereb Cortex. 2009;19:712–723. doi: 10.1093/cercor/bhn120.

- Moreno S, Wodniecka Z, Tays W, Alain C, Bialystok E. Inhibitory control in bilinguals and musicians: event related potential (ERP) evidence for experience-specific effects. PLoS One. 2014;9:e94169. doi: 10.1371/journal.pone.0094169.

- Musacchia G, Sams M, Skoe E, Kraus N. Musicians have enhanced subcortical auditory and audiovisual processing of speech and music. Proceedings of the National Academy of Sciences. 2007;104:15894–15898. doi: 10.1073/pnas.0701498104.

- Nikjeh DA, Lister JJ, Frisch SA. Hearing of note: an electrophysiologic and psychoacoustic comparison of pitch discrimination between vocal and instrumental musicians. Psychophysiology. 2008;45:994–1007. doi: 10.1111/j.1469-8986.2008.00689.x.

- Overy K. Dyslexia, temporal processing and music: The potential of music as an early learning aid for dyslexic children. Psychology of Music. 2000;28:218–229.

- Overy K. Dyslexia and music. From timing deficits to musical intervention. Ann N Y Acad Sci. 2003;999:497–505. doi: 10.1196/annals.1284.060.

- Pantev C, Roberts LE, Schulz M, Engelien A, Ross B. Timbre-specific enhancement of auditory cortical representations in musicians. Neuroreport. 2001;12:169–174. doi: 10.1097/00001756-200101220-00041.

- Parbery-Clark A, Skoe E, Kraus N. Musical experience limits the degradative effects of background noise on the neural processing of sound. J Neurosci. 2009;29:14100–14107. doi: 10.1523/JNEUROSCI.3256-09.2009.

- Parbery-Clark A, Tierney A, Strait DL, Kraus N. Musicians have fine-tuned neural distinction of speech syllables. Neuroscience. 2012;219:111–119. doi: 10.1016/j.neuroscience.2012.05.042.

- Pierce MA. The effects of learning procedure, tempo, and performance condition on transfer of rhythm skills in instrumental music. Journal of Research in Music Education. 1992;40:295–305.

- Putkinen V, Tervaniemi M, Saarikivi K, Ojala P, Huotilainen M. Enhanced development of auditory change detection in musically trained school-aged children: a longitudinal event-related potential study. Developmental Science. 2014;17:282–297. doi: 10.1111/desc.12109.

- Rodrigues AC, Loureiro MA, Caramelli P. Long-term musical training may improve different forms of visual attention ability. Brain Cogn. 2013;82:229–235. doi: 10.1016/j.bandc.2013.04.009.

- Rosenthal RK, Wilson M, Evans M, Greenwalt L. Effects of different practice conditions on advanced instrumentalists’ performance accuracy. Journal of Research in Music Education. 1988;36:250–257.

- Sandford J, Turner A. IVA+ Plus: Interpretation manual. Chesterfield, VA: BrainTrain; 1994.

- Schlaug G. The brain of musicians. A model for functional and structural adaptation. Ann N Y Acad Sci. 2001;930:281–299.

- Schön D, Magne C, Besson M. The music of speech: Music training facilitates pitch processing in both music and language. Psychophysiology. 2004;41:341–349. doi: 10.1111/1469-8986.00172.x.

- Schwartze M, Kotz SA. A dual-pathway neural architecture for specific temporal prediction. Neurosci Biobehav Rev. 2013;37:2587–2596. doi: 10.1016/j.neubiorev.2013.08.005.

- Shahin AJ. Neurophysiological influence of musical training on speech perception. Front Psychol. 2011;2. doi: 10.3389/fpsyg.2011.00126.

- Shahin AJ, Roberts LE, Chau W, Trainor LJ, Miller LM. Music training leads to the development of timbre-specific gamma band activity. Neuroimage. 2008;41:113–122. doi: 10.1016/j.neuroimage.2008.01.067.

- Skoe E, Nicol T, Kraus N. Cross-phaseogram: objective neural index of speech sound differentiation. J Neurosci Methods. 2011;196:308–317. doi: 10.1016/j.jneumeth.2011.01.020.

- Slater J, Kraus N. The role of rhythm in perceiving speech in noise: a comparison of percussionists, vocalists and non-musicians. Cogn Process. 2016;17:79–87. doi: 10.1007/s10339-015-0740-7.

- Slater J, Skoe E, Strait DL, O’Connell S, Thompson E, Kraus N. Music training improves speech-in-noise perception: Longitudinal evidence from a community-based music program. Behav Brain Res. 2015;291:244–252. doi: 10.1016/j.bbr.2015.05.026.

- Slater J, Strait DL, Skoe E, O’Connell S, Thompson E, Kraus N. Longitudinal effects of group music instruction on literacy skills in low-income children. PLoS One. 2014;9:e113383. doi: 10.1371/journal.pone.0113383.

- Strait DL, Chan K, Ashley R, Kraus N. Specialization among the specialized: auditory brainstem function is tuned in to timbre. Cortex. 2012;48:360–362. doi: 10.1016/j.cortex.2011.03.015.

- Strait DL, Kraus N, Parbery-Clark A, Ashley R. Musical experience shapes top-down auditory mechanisms: evidence from masking and auditory attention performance. Hear Res. 2010;261:22–29. doi: 10.1016/j.heares.2009.12.021.

- Strait DL, O’Connell S, Parbery-Clark A, Kraus N. Musicians’ enhanced neural differentiation of speech sounds arises early in life: developmental evidence from ages 3 to 30. Cereb Cortex. 2014;24:2512–2521. doi: 10.1093/cercor/bht103.

- Tervaniemi M, Just V, Koelsch S, Widmann A, Schroger E. Pitch discrimination accuracy in musicians vs nonmusicians: an event-related potential and behavioral study. Exp Brain Res. 2005;161:1–10. doi: 10.1007/s00221-004-2044-5.

- Thomson JM, Goswami U. Rhythmic processing in children with developmental dyslexia: auditory and motor rhythms link to reading and spelling. J Physiol Paris. 2008;102:120–129. doi: 10.1016/j.jphysparis.2008.03.007.

- Tierney A, Kraus N. The ability to tap to a beat relates to cognitive, linguistic, and perceptual skills. Brain Lang. 2013a;124:225–231. doi: 10.1016/j.bandl.2012.12.014.

- Tierney A, Kraus N. Music training for the development of reading skills. Applying Brain Plasticity to Advance and Recover Human Ability Progress in Brain Research. 2013b. doi: 10.1016/B978-0-444-63327-9.00008-4.

- Tierney A, Krizman J, Skoe E, Johnston K, Kraus N. High school music classes enhance the neural processing of speech. Front Psychol. 2013;4:855. doi: 10.3389/fpsyg.2013.00855.

- Wong PC, Skoe E, Russo NM, Dees T, Kraus N. Musical experience shapes human brainstem encoding of linguistic pitch patterns. Nat Neurosci. 2007;10:420–422. doi: 10.1038/nn1872.

- Woodruff Carr K, White-Schwoch T, Tierney AT, Strait DL, Kraus N. Beat synchronization predicts neural speech encoding and reading readiness in preschoolers. Proceedings of the National Academy of Sciences. 2014. doi: 10.1073/pnas.1406219111.

- Woody RH, Lehmann AC. Student musicians’ ear-playing ability as a function of vernacular music experiences. Journal of Research in Music Education. 2010;58:101–115.