Abstract

Low‐dose X‐ray computed tomography (LDCT) imaging is highly recommended for use in the clinic because of growing concerns over excessive radiation exposure. However, the CT images reconstructed by the conventional filtered back‐projection (FBP) method from low‐dose acquisitions may be severely degraded with noise and streak artifacts due to excessive X‐ray quantum noise, or with view‐aliasing artifacts due to insufficient angular sampling. In 2005, the nonlocal means (NLM) algorithm was introduced as a non‐iterative edge‐preserving filter to denoise natural images corrupted by additive Gaussian noise, and showed superior performance. It has since been adapted and applied to many other image types and various inverse problems. This paper specifically reviews the applications of the NLM algorithm in LDCT image processing and reconstruction, and explicitly demonstrates its improving effects on the reconstructed CT image quality from low‐dose acquisitions. The effectiveness of these applications on LDCT and their relative performance are described in detail.

Keywords: denoising, dose reduction, nonlocal means, streak artifacts, view‐aliasing artifacts, X‐ray CT

1. Introduction

X‐ray computed tomography (CT) is widely used in the clinic for diagnosis, screening, image‐guided radiotherapy, and image‐guided surgeries. A CT scan is a radiation‐intensive procedure,1 and its potentially harmful effects, including genetic and cancerous diseases, have raised growing concerns in the medical physics community.2 Hence, many strategies have been proposed for dose reduction in CT scanning to decrease radiation‐associated risks.3, 4, 5 In addition to hardware improvements in CT systems, two cost‐effective strategies have been widely explored: (a) lowering the X‐ray tube current and exposure time [milliampere‐second (mAs)] or the X‐ray tube voltage [kilovoltage peak (kVp)] settings to reduce the X‐ray flux toward the patient (i.e., low‐flux acquisition); (b) reducing the number of projection views per rotation (i.e., sparse‐view acquisition). The first strategy inevitably increases the projection data noise, and the image reconstructed by the conventional filtered back‐projection (FBP) method (available on most commercial CT systems) may be degraded with excessive noise and streak artifacts due to quantum noise and/or electronic noise. The second strategy produces undersampled projection data, and the image reconstructed by the FBP method usually suffers from view‐aliasing artifacts due to insufficient angular sampling. The two strategies may be combined, leading to projection data that are both noisy and undersampled and further degrading the corresponding CT image reconstructed by the FBP method.

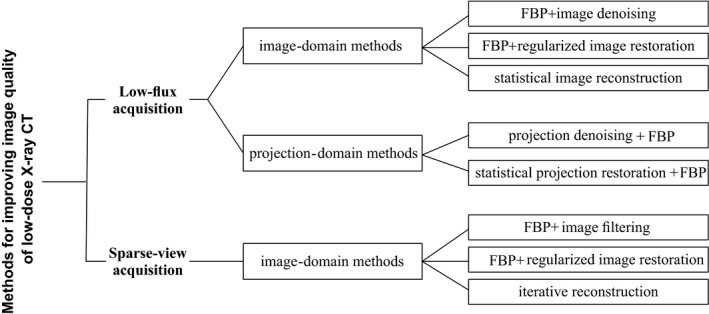

Numerous methods have been proposed to improve CT image quality from low‐dose acquisitions, as illustrated in Fig. 1. For low‐flux scanned data, the methods can work in either the image domain or the projection domain. One category of the image‐domain methods is the application of an edge‐preserving filter directly to the FBP reconstructed image.6, 7, 8, 9, 10, 11 However, because noise and streak artifacts in the CT image are typically non‐stationary and cannot be modeled with a general distribution, it is difficult to suppress them effectively while preserving fine structures and details. Another category includes regularized image restoration methods, which restore the FBP reconstructed image by optimizing an objective function consisting of a quadratic data‐fidelity term and a regularization term.12, 13 The third category includes statistical image reconstruction (SIR) methods, which reconstruct the CT image by optimizing a penalized maximum likelihood (pML) criterion or penalized weighted least‐squares (PWLS) criterion in the image domain.14, 15 The projection‐domain methods can either denoise the projection data with an edge‐preserving filter16, 17, 18, 19, 20, 21 or estimate the ideal noiseless projection data by optimizing a pML/PWLS criterion in the projection domain.22, 23, 24, 25 The denoised/restored projection data can then be reconstructed with the analytical FBP method. For sparse‐view scanned data, the methods generally work in the image domain. The first category attempts to suppress view‐aliasing artifacts in the FBP reconstructed image with an edge‐preserving filter, but is relatively ineffective when the view‐aliasing artifacts are severe.26, 27 The second category includes regularized image restoration methods that are exactly the same as those for low‐flux scanned data. The third category includes iterative reconstruction methods that typically exploit compressed sensing or other sparse representations as prior knowledge to reconstruct the CT image.28, 29, 30

Figure 1.

Overview of the methods proposed to improve CT image quality from low‐dose acquisitions. It is noted that statistical image reconstruction for low‐flux CT and iterative reconstruction for sparse‐view CT are grouped into image‐domain methods herein, because their objective function is typically a function of the reconstructed image. But strictly speaking, they should be considered as combined image‐domain and projection‐domain methods.

The nonlocal means (NLM) algorithm was originally proposed as a non‐iterative edge‐preserving filter to denoise natural images corrupted by additive white Gaussian noise.31, 32 Essentially, it is one of the neighborhood filters, which denoise each pixel with a weighted average of its neighboring pixels according to similarity. However, unlike previous neighborhood filters, the NLM filter calculates the similarity based on patches instead of pixels. The patch of a pixel can be defined as a square region centered at that pixel. Using patches strengthens the NLM filter because the intensity of a single pixel can be very noisy. The authors compared the performance of the NLM filter with an array of denoising algorithms including the Gaussian filter, anisotropic filter, total variation (TV), and Yaroslavsky neighborhood filter, and observed noticeable improvements over them. Motivated by this success, NLM‐based regularization models33, 34, 35 were also proposed and applied to various inverse problems including image denoising, image deblurring, image inpainting, image reconstruction, and image super‐resolution reconstruction. Inspired by its success in natural image scenarios, researchers also extended its use to medical images including MRI,36, 37, 38, 39, 40 ultrasound,41, 42 PET,43, 44, 45, 46 SPECT,47 and X‐ray CT. As the noise distribution and other degradations may vary for different image types, special adaptation may be needed for each of them. We specifically review the applications and adaptations of the NLM algorithm in low‐dose X‐ray CT (LDCT), and demonstrate how it improves the reconstructed CT image quality from low‐dose acquisitions.

The remainder of this paper is presented as follows. In Section 2, we provide an overview of the NLM algorithm as a filter or regularization model. In Section 3, we explicitly illustrate different applications of the NLM algorithm in LDCT from low‐flux or sparse‐view acquisitions, for both three‐dimensional and four‐dimensional scans. After discussing several issues, we provide conclusions in Section 4.

2. Overview of the NLM algorithm

2.A. NLM filter

The NLM filter was originally proposed to denoise the natural images corrupted by additive white Gaussian noise.31, 32 The filter exploits the high degree of redundancy that typically exists in an image, and reduces image noise by replacing the intensity of each pixel with a weighted average of its neighbors according to similarity. Although the similarity comparison can be performed between any two pixels within the entire image, in practice, this is typically limited to a fixed neighboring window area [called search‐window (SW), e.g., 17 × 17 in two‐dimensional (2D) case] of a target pixel for computational efficiency. Mathematically, the NLM filter can be described as:

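$$\hat{u}_j = \sum_{k \in SW_j} w_{jk}\, u_k \qquad (1)$$

where the vector $u$ represents the noisy image to be smoothed, $k$ denotes the pixel index within the SW of pixel $j$ (written $SW_j$), $w_{jk}$ is the weighting coefficient and satisfies the conditions $0 \le w_{jk} \le 1$ and $\sum_{k \in SW_j} w_{jk} = 1$, and $\hat{u}_j$ denotes the intensity of pixel $j$ after the NLM filtering.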

However, unlike previous neighborhood filters, the NLM filter calculates similarity based on patches instead of pixels. The patch of a pixel can be defined as a square region centered at that pixel (called patch‐window (PW), e.g., 5 × 5 in 2D case). Let $P(u_j)$ denote the patch centered at pixel $j$ and $P(u_k)$ denote the patch centered at pixel $k$. The similarity between pixels $j$ and $k$ depends on the weighted Euclidean distance of their patches, $\|P(u_j) - P(u_k)\|_{2,a}^{2}$, computed as the distance between two intensity vectors in high dimensional space with a Gaussian kernel ($a > 0$ is the standard deviation of the Gaussian kernel) to weight the contribution of each dimension. The exponential function converts the distance to the weighting coefficient that indicates the interaction degree between two pixels. With $SW_j$ denoting the SW of pixel $j$, the normalized weighting coefficient is given as:

$$w_{jk} = \frac{\exp\left(-\|P(u_j) - P(u_k)\|_{2,a}^{2} / h^{2}\right)}{\sum_{k' \in SW_j} \exp\left(-\|P(u_j) - P(u_{k'})\|_{2,a}^{2} / h^{2}\right)} \qquad (2)$$

where h is the filtering parameter that controls the exponential function decay and the weighting coefficient. When h is small, the image tends to be weakly smoothed; when h is large, the image tends to be strongly smoothed. As previously reported,31, 32 the filtering parameter h is a function of the standard deviation of Gaussian noise in the image. And if we further consider the size of the patch‐window, the filtering parameter h can be given as:40

| (3) |

where τ and η are free scalar parameters, σ is the standard deviation of Gaussian noise, and denotes the size of the patch‐window.
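As an illustration of Eqs. (1) and (2), the following is a minimal, unoptimized sketch of the NLM filter in Python with NumPy. It is not the implementation used in the reviewed works: the function name `nlm_filter`, the reflect padding at the image border, the normalization of the Gaussian kernel, and the toy step‐edge image are all assumptions made for this example, and h is passed directly rather than derived from Eq. (3).

```python
import numpy as np

def nlm_filter(u, sw=7, pw=3, h=0.3, a=1.0):
    """Filter a 2D image with an sw x sw search window and pw x pw patches."""
    u = np.asarray(u, dtype=float)
    rs, rp = sw // 2, pw // 2
    # Gaussian kernel (standard deviation a) that weights each patch element.
    ax = np.arange(-rp, rp + 1)
    g = np.exp(-(ax[:, None] ** 2 + ax[None, :] ** 2) / (2.0 * a ** 2))
    g /= g.sum()
    # Reflect-pad so border pixels also have full patches and search windows
    # (an assumption of this sketch; other border rules are possible).
    pad = rs + rp
    up = np.pad(u, pad, mode="reflect")
    out = np.empty(u.shape, dtype=float)
    for r in range(u.shape[0]):
        for c in range(u.shape[1]):
            rc, cc = r + pad, c + pad
            pj = up[rc - rp:rc + rp + 1, cc - rp:cc + rp + 1]
            num, wsum = 0.0, 0.0
            for dr in range(-rs, rs + 1):
                for dc in range(-rs, rs + 1):
                    rk, ck = rc + dr, cc + dc
                    pk = up[rk - rp:rk + rp + 1, ck - rp:ck + rp + 1]
                    d2 = np.sum(g * (pj - pk) ** 2)   # weighted patch distance
                    w = np.exp(-d2 / h ** 2)          # unnormalized weight
                    num += w * up[rk, ck]
                    wsum += w
            out[r, c] = num / wsum                    # weights sum to one
    return out

# Toy example: a vertical step edge corrupted by additive Gaussian noise.
rng = np.random.default_rng(0)
clean = np.zeros((16, 16))
clean[:, 8:] = 1.0
noisy = clean + 0.1 * rng.standard_normal(clean.shape)
filtered = nlm_filter(noisy, sw=7, pw=3, h=0.3)
print(np.mean((noisy - clean) ** 2), np.mean((filtered - clean) ** 2))
```

Patches that straddle the edge differ strongly from patches on either side, so their weights are close to zero and the edge is largely preserved while the flat regions are averaged. Increasing `h` flattens the weights and smooths more strongly.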

2.B. NLM‐based regularization models

Motivated by the success of the NLM filter, several NLM‐based regularization models were also proposed for various inverse problems including image denoising, deblurring, and reconstruction. The first regularization model takes a general form as:33

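$$R(u) = \sum_{j} \sum_{k \in SW_j} w_{jk}(u^{\mathrm{ref}})\, \varphi(u_j - u_k) \qquad (4)$$

where $\varphi$ denotes a positive potential function, one common choice being $\varphi(\Delta) = \Delta^{2}/2$, $SW_j$ denotes the SW of pixel $j$, and $u^{\mathrm{ref}}$ represents a reference image. The weighting coefficient is computed on the reference image and given as: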

| (5) |

The regularization in Eq. (4) is similar to the Markov random field (MRF) model‐based regularization,48 but a different method is used to calculate the weighting coefficient. In this regularization, the weighting coefficient is smaller when the patch distance between pixel j and pixel k is larger, and vice versa. In this way, the sharp edges in the image can be better preserved.

Another general form of the NLM‐based regularization is given as:34

| (6) |

This regularization model is similar to that in Eq. (4), but the idea varies slightly. This model assumes that the intensity of each pixel can be approximated by a weighted average of its neighbors according to similarity, which is also the original thought of the NLM filter.

The third general form for the NLM‐based regularization is described as:35

| (7) |

This regularization model is inspired by both the NLM filter and the compressed sensing theory. With p = 1, the regularization in Eq. (7) becomes the nonlocal TV regularization.49

The weighting coefficients in Eqs. (4), (6), and (7) are calculated from a reference image. For the image denoising or deblurring problem, the current noisy or blurred image could be used as the reference image, and for tomographic image reconstruction, the FBP reconstructed image was explored to serve as the reference image.49 However, because the quality of the reference image is generally low, the resulting regularization may lead to a suboptimal solution.

Alternatively, investigators modified the above regularization models by replacing the weighting coefficients computed on the reference image with $w_{jk}(u)$, described as:43, 50

| (8) |

In this way, the regularization models eliminate the need for a specific reference image, and the weighting coefficients are computed on the unknown image u. However, direct optimization of the resulting objective function can be complicated. In practice, an empirical one‐step‐late implementation is usually employed in an iterative approach to obtain the solution. In this implementation, the weighting coefficients are computed on the current image estimate, and are treated as constants when the image is updated.43, 50 Although this strategy inevitably increases the computational load, it may produce a more accurate solution than the use of a low quality reference image.
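For concreteness, the one‐step‐late scheme can be written as an alternation between a weight update and an image update. The notation below is an assumption made for illustration and is not taken from the cited works: $u^{(n)}$ is the current image estimate, $D(u)$ stands for whichever data‐fidelity term the inverse problem uses, $\beta > 0$ balances the two terms, $SW_j$ is the SW of pixel $j$, and the regularization is assumed to take the MRF‐like weighted‐sum form that the text attributes to Eq. (4).

```latex
\begin{aligned}
w_{jk}^{(n)} &= w_{jk}\big(u^{(n)}\big)
  && \text{(weights recomputed on the current estimate)} \\
u^{(n+1)} &= \arg\min_{u}\; D(u) + \beta \sum_{j} \sum_{k \in SW_j}
  w_{jk}^{(n)}\, \varphi(u_j - u_k)
  && \text{(weights held constant during the update)}
\end{aligned}
```

In practice the second step is often replaced by one or a few iterations toward its minimizer, which is where the additional computational load per iteration comes from.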

3. Applications of the NLM algorithm in LDCT

The pixel intensity of a CT image reflects the X‐ray attenuation coefficient of the scanned object or patient. Therefore, the conventional notation μ will be used instead of u, which represents a general image. In this section, we explicitly review the various applications of the NLM algorithm in LDCT, for both three‐dimensional and four‐dimensional CT scanning.

3.A. Three‐dimensional CT scanning

Three‐dimensional CT scanning refers to both fan‐beam CT and cone‐beam CT scanning (either in axial or helical acquisition mode) commonly used in the clinic to produce tomographic images of specific patient volumes.

3.A.1. NLM‐based image filtering

Simple image filtering

The NLM filter can be directly applied to the FBP reconstructed low‐flux CT images,6, 7, 8, 9, 10, 11 which are typically degraded with noise and streak artifacts, as shown in Fig. 2(b). However, because noise and streak artifacts are non‐stationary and cannot be modeled with a general distribution, it is difficult to determine the standard deviation σ in Eq. (3). The filtering parameter h is usually set as a global constant for the entire image, although this setting may lead to a suboptimal filtering result, because a spatially invariant filtering may be too strong for some regions (blurring much) but too weak for others (filtering little) across the image.10 Recently, Li et al. analytically derived a local noise level estimation method for low‐flux CT images by considering noise propagation from the projection data to the FBP reconstructed images.10 Based on the estimated noise map, the filtering parameter h employed for each pixel can be adjusted proportionally to the local noise level:

| (9) |

where $\sigma_j$ denotes the estimated noise level of pixel j. This is one way to introduce spatial adaptivity into traditional NLM filtering, and this spatially variant NLM filtering has demonstrated improved performance for low‐contrast objects.10 Thaipanich and Kuo suggested adaptively adjusting the SW according to the local structure information as follows: a large SW in the smooth regions, a small SW in strong edge/texture regions, and a medium SW for the other regions.51 This may be another way to introduce spatial adaptivity into NLM filtering, but the discrimination of the three different regions may be time‐consuming and technically challenging, especially when the CT images are severely degraded. The third way to incorporate spatial adaptivity is to employ a fixed SW but exclude patches that are very different from the patch of the central pixel. The selection process can be expressed as:40, 52

| (10) |

where $\mu^{LD} \in \mathbb{R}^{J \times 1}$ represents the FBP reconstructed LDCT image, and J is the number of pixels in the image; the test compares the mean and the variance of the patch of pixel j with those of the patch of pixel k; the parameters 0 < λ < 1 and 0 < ν < 1 can be chosen as, for example, λ = 0.95 and ν = 0.5. The rationale behind the adaptive SW and patch selection strategies is that increasing the number of patches tends to improve filtering in the smooth regions because they contain many similar patches; meanwhile, it tends to either blur or remove details in the edge/texture regions that contain fewer similar patches. Therefore, both strategies can make NLM filtering adaptive and improve the overall performance. In addition, Zheng et al. explored the point‐wise fractal dimension (PWFD) to achieve spatial adaptivity for NLM filtering of low‐flux CT images.11 The PWFD can provide local structure information, that is, the pixels in the smooth regions exhibit PWFDs close to zero and pixels near the edge regions have relatively large PWFDs. They designed a new weighting coefficient for NLM filtering by considering both the traditional patch distance and the new PWFD difference.
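Two of the adaptive strategies above can be sketched in a few lines of Python. This is a hedged illustration only: the proportionality constant `c`, the function names, and the small `eps` guard against division by zero are assumptions of this example, and the ratio form of the mean/variance test is assumed to follow the patch preselection of ref. 40.

```python
import numpy as np

def local_h(noise_map, c=1.0):
    """Filtering parameter proportional to the local noise level.

    c is a hypothetical proportionality constant chosen by the user.
    """
    return c * np.asarray(noise_map, dtype=float)

def keep_patch(pj, pk, lam=0.95, nu=0.5, eps=1e-12):
    """Return True if patch pk is similar enough to patch pj to be averaged.

    The patch passes when the ratio of patch means lies in (lam, 1/lam)
    and the ratio of patch variances lies in (nu, 1/nu).
    """
    mean_ratio = (pj.mean() + eps) / (pk.mean() + eps)
    var_ratio = (pj.var() + eps) / (pk.var() + eps)
    return bool(lam < mean_ratio < 1.0 / lam and nu < var_ratio < 1.0 / nu)

# A flat patch compared with a nearly identical flat patch and with an edge patch.
flat = np.full((5, 5), 0.02)
edge = flat.copy()
edge[:, 3:] = 0.04
print(keep_patch(flat, flat * 1.01), keep_patch(flat, edge))
```

In a full filter, `keep_patch` would be evaluated before the exponential weight is computed, so rejected patches receive zero weight and also cost no distance computation.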

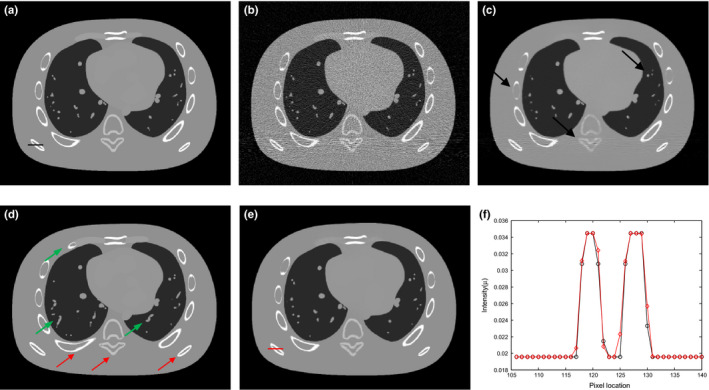

Figure 2.

Illustration of the preHQ‐guided NLM image filtering for low‐flux CT: (a) one transverse slice of the NCAT phantom; (b) FBP reconstruction from simulated noisy projection data; (c) traditional NLM filtering of low‐dose image in Fig. 2(b), SW = 33 × 33, PW = 5 × 5, h = 0.015; (d) another transverse slice of the NCAT phantom serving as the prior image for preHQ‐guided NLM filtering of low‐dose image in Fig. 2(b); (e) corresponding preHQ‐guided NLM filtering result, SW = 33 × 33, PW = 5 × 5, h = 0.007; (f) profile comparison between the ground truth Fig. 2(a) and the filtering result Fig. 2(e). [Colour figure can be viewed at wileyonlinelibrary.com]

While NLM filtering may suppress noise to a large extent, it is not as effective in removing the streak artifacts in low‐flux CT images resulting from photon starvation, as demonstrated in Fig. 2(c). The streak artifacts typically lie along the high‐attenuation paths and appear as directional patterns. To solve this issue, Chen et al. applied a directional one‐dimensional nonlinear diffusion in the stationary wavelet domain to suppress the streak artifacts first, followed by an NLM filtering step to reduce the noise.9 This approach showed advantages in streak artifact suppression over simple NLM filtering.

The CT images reconstructed by the FBP method from sparse‐view acquisition typically suffer from view‐aliasing artifacts, as shown in Fig. 3(b). The view‐aliasing artifacts in the images are also non‐stationary, and the conventional NLM filtering generally cannot remove them without sacrifice of image details.26, 27

Figure 3.

Illustration of the preHQ‐guided NLM image filtering for sparse‐view CT: (a) one transverse CT image of a patient; (b) FBP reconstruction from sparse‐view projection data; (c) conventional NLM filtering of low‐dose image in (b); (d) a deformed version of (a) serving as the prior image for preHQ‐guided NLM filtering; (e) prior image registered to (a); (f) registered prior image with simulated view‐aliasing artifacts; (g) the corresponding preHQ‐guided NLM filtering result with Eq. (11); (h) corresponding preHQ‐guided NLM filtering result with Eq. (13). (Figure reprinted from the work of Xu and Mueller26).

Previous high quality scan (preHQ)‐guided image filtering

Repeated CT scans are required in clinical applications such as disease monitoring, longitudinal studies, and image‐guided radiotherapy. To optimize radiation dose utility, a high quality scan can be performed first to set up the reference, followed by a series of low‐dose scans (e.g., low‐flux or sparse‐view acquisitions). In these applications, the previous high quality scan can be exploited as prior information because of the anatomical similarity between the reconstructed image series of the scans. Using a previous high quality image to improve low‐dose scan reconstruction has recently drawn great interest,26, 53, 54, 55, 56, 57, 58, 59, 60 and some of the proposed methods are based on the NLM algorithm. Because scans are acquired sequentially rather than simultaneously, misalignment or deformation may occur among the image series, so registration is usually needed to align the different scans. Fortunately, the NLM‐based methods do not heavily depend on registration accuracy because of the patch‐based search mechanism. A rough registration might be adequate in practice, although a more accurate one can reduce the computational load by allowing the use of a smaller SW.

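Ma et al. proposed a preHQ‐guided NLM filtering method for CT images from low‐flux acquisition, described as:61

$$\hat{\mu}_j = \sum_{k \in SW_j} w_{jk}\big(\mu^{LD}, \mu^{preHQ}_{registered}\big)\, \mu^{preHQ}_{registered,k} \qquad (11)$$

where $\mu^{LD}$ represents the FBP reconstructed low‐dose image, $\mu^{preHQ}_{registered}$ represents the previous high quality image registered to the current low‐dose image, $SW_j$ denotes the SW of pixel j, $w_{jk}(\mu^{LD}, \mu^{preHQ}_{registered})$ denotes the weighting coefficient, and $\hat{\mu}_j$ denotes the intensity of pixel j after filtering. Specifically, the weighting coefficient was given as:61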

| (12) |

One concern with this method is whether the registration accuracy may affect the filtering result. The red arrows in Fig. 2(d) indicate some structure deformations between Figs. 2(b) and 2(d). Without any registration between two images, the preHQ‐guided NLM filtering result [as shown in Fig. 2(e)] of Fig. 2(b) using Fig. 2(d) as prior image still retains good image quality, revealing that image registration accuracy may not be an obstacle. After comparing Fig. 2(c) with Fig. 2(e), we observed that preHQ‐guided NLM filtering reduces noise and streak artifacts better than traditional NLM filtering.
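The mechanism described above, in which patches of the low‐dose image are matched against patches of the prior image and the output is averaged from prior pixels, can be sketched as follows. This is an illustrative sketch rather than the published implementation: the function name `prehq_guided_nlm`, the unweighted mean squared patch distance, the reflect padding, and the toy images are assumptions. The prior is shifted by two pixels and left unregistered to mimic a rough alignment.

```python
import numpy as np

def prehq_guided_nlm(ld, prior, sw=7, pw=3, h=0.3):
    """Weights compare low-dose patches with prior patches; the output
    averages pixel values taken from the prior image."""
    ld = np.asarray(ld, dtype=float)
    prior = np.asarray(prior, dtype=float)
    rs, rp = sw // 2, pw // 2
    pad = rs + rp
    ldp = np.pad(ld, pad, mode="reflect")
    prp = np.pad(prior, pad, mode="reflect")
    out = np.empty(ld.shape, dtype=float)
    for r in range(ld.shape[0]):
        for c in range(ld.shape[1]):
            rc, cc = r + pad, c + pad
            pj = ldp[rc - rp:rc + rp + 1, cc - rp:cc + rp + 1]
            num, wsum = 0.0, 0.0
            for dr in range(-rs, rs + 1):
                for dc in range(-rs, rs + 1):
                    rk, ck = rc + dr, cc + dc
                    pk = prp[rk - rp:rk + rp + 1, ck - rp:ck + rp + 1]
                    # Assumed distance: unweighted mean of squared differences.
                    w = np.exp(-np.mean((pj - pk) ** 2) / h ** 2)
                    num += w * prp[rk, ck]            # value taken from the prior
                    wsum += w
            out[r, c] = num / wsum
    return out

# Toy example: noisy low-dose square, clean prior misaligned by two pixels.
rng = np.random.default_rng(0)
clean = np.zeros((16, 16))
clean[4:12, 4:12] = 1.0
ld = clean + 0.2 * rng.standard_normal(clean.shape)
prior = np.roll(clean, 2, axis=1)
guided = prehq_guided_nlm(ld, prior, sw=7, pw=3, h=0.3)
print(np.mean((ld - clean) ** 2), np.mean((guided - clean) ** 2))
```

Because the search window is wider than the misalignment, a matching prior patch is still found for each low‐dose patch. The sketch also makes the intensity dependence on the prior explicit: every output value is a convex combination of prior pixel values.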

Xu and Mueller used a similar approach to explore the preHQ‐guided NLM filtering method for sparse‐view CT.26 However, they found that using an artifact‐free image μ preHQ registered and a low‐dose image μ LD with severe view‐aliasing artifacts to calculate the weighting coefficient as in Eq. (12) may yield an inferior filtering result, because patch matching between the two becomes difficult. Thus, they proposed to transform μ preHQ registered into a tandem‐image μ preHQ registered+degraded with view‐aliasing artifacts resembling those in μ LD , and then employed μ preHQ registered+degraded and μ LD for patch matching. The preHQ‐guided NLM filtering method for sparse‐view CT with the artifact‐matched scheme can be described as:26

| (13) |

In Eq. (13), the high quality image is still used to determine the pixel estimates, although the degraded image μ preHQ registered+degraded is employed to calculate the weighting coefficients. Figure 3 illustrates that the filtering method in Eq. (13) performs better than that in Eq. (11) for sparse‐view CT images.

One potential drawback of the preHQ‐guided NLM filtering method is that the pixel intensity of the filtering result may resemble that of the prior image when there are attenuation differences (e.g., due to kVp mismatch, detector difference, etc.) between the current low‐dose image and the prior image. Figures 4(a) and 4(b) illustrate the same transverse slices of the NCAT phantom as Figs. 2(a) and 2(d) except for different kVp settings. Because the attenuation coefficient of X‐rays in materials is energy‐dependent, the pixel intensities of Figs. 2(a) and 4(a) at the same location are different, as indicated by the profiles in Fig. 4(c). The preHQ‐guided NLM filtering results of Fig. 2(b), using Figs. 4(a) and 4(b) as prior images, are shown in Figs. 4(d) and 4(e). It is observed that the pixel intensities of Figs. 4(d) and 4(e) are closer to those of the prior image in Fig. 4(a) than to those of the ground truth image in Fig. 2(a). To mitigate this issue, a modified preHQ‐guided NLM filtering method accounting for changes in pixel intensity was given as:61

| (14) |

where $C_{jk}$ is a local adjusting factor used to account for changes in pixel intensity due to different kVp settings. This strategy was also applied in perfusion CT imaging,54, 61 where the pixel intensity of different scans can change due to contrast enhancement. However, a large PW size may be desired to strengthen $C_{jk}$, because a small PW may lead to an imprecise estimate of this factor. Using Eq. (14) and a large PW, the filtering results in Figs. 4(g) and 4(h) demonstrate higher intensity accuracy than those in Figs. 4(d) and 4(e), as indicated by the profiles. Meanwhile, we also observed that this strategy may still induce small pixel intensity inaccuracies, especially for relatively non‐uniform regions. More sophisticated approaches may be required to further improve its performance.

Figure 4.

Illustration of the preHQ‐guided NLM image filtering for low‐flux CT: (a) the same transverse slice of NCAT phantom as Fig. 2(a) except for different kVp setting; (b) the same transverse slice of NCAT phantom as Fig. 2(d) except for different kVp setting; (c) profile comparison between Fig. 2(a) and Fig. 4(a); (d) preHQ‐guided NLM filtering of Fig. 2(b) using Eq. (11) with Fig. 4(a) as prior image, SW = 33 × 33, PW = 13 × 13, h = 0.007; (e) preHQ‐guided NLM filtering of Fig. 2(b) using Eq. (11) with Fig. 4(b) as prior image, SW = 33 × 33, PW = 13 × 13, h = 0.007; (f) profile comparison between Fig. 2(a) and the filtering results in Figs. 4(d) and 4(e); (g) preHQ‐guided NLM filtering of Fig. 2(b) using Eq. (14) with Fig. 4(a) as prior image, SW = 33 × 33, PW = 13 × 13, h = 0.007; (h) preHQ‐guided NLM filtering of Fig. 2(b) using Eq. (14) with Fig. 4(b) as prior image, SW = 33 × 33, PW = 13 × 13, h = 0.007; (i) profile comparison between Fig. 2(a) and the filtering results in Figs. 4(g) and 4(h). [Colour figure can be viewed at wileyonlinelibrary.com]

Finally, we observed that the low‐contrast objects and subtle structures in Figs. 2(e), 3(g), 3(h) and 4(g), 4(h) are not as well‐preserved as the high‐contrast objects. One reason for this observation is that a fixed SW size and constant filtering parameter h were used for both cases, resulting in adequate filtering for high‐contrast objects but excessive filtering for other regions. Using the adaptive filtering strategies illustrated in Section 3.A.1(1), the preHQ‐guided filtering results may be further improved, especially for low‐contrast objects and subtle structures.

Database‐assisted image filtering

Because a previous high quality image of the same patient may not always be available in some clinical applications, Xu et al. extended the approach to use a database of high quality CT images from other patients for guided NLM image filtering.62 However, the CT images from other patients are typically less similar to the current low‐dose image than those from the same patient. Therefore, the authors first picked a set of images from the database with anatomical structures similar to those of the current low‐dose image, and registered them to the current low‐dose image to construct the set of database priors. Then, they subdivided the current low‐dose image and the database priors into blocks (e.g., 129 × 129), and performed block registration and guided NLM filtering in a blockwise fashion using Eq. (11). A set of database priors is constructed rather than a single one because a single database prior is unlikely to match a target image from a different patient, so using several improves the final filtering result considerably. In their study, the authors found that three database priors are sufficient to faithfully restore all the features, for either low‐flux or sparse‐view CT images.62

To avoid the need for error‐prone image registration and massive image storage, Ha and Mueller also proposed to replace the high quality image database with a database of high quality small patches (e.g., 7 × 7). Two types of patch database were investigated for NLM filtering of low‐flux CT image,63 a localized patch database with anatomical region tags (e.g., lung, heart, spine) and a global patch database with no tags. For each pixel j in the low‐flux CT image, the patch is first extracted and matched with the database patches to find a certain number of most similar patches. Then, the patch database‐assisted NLM image filtering was expressed as:63

| (15) |

where $SP_j$ denotes the selected patches from the database for pixel j, l indexes the selected patches, and each selected patch contributes the intensity of its central pixel. This method was validated on low‐flux CT images, and demonstrated better recovery of the image features than the conventional NLM filtering. Also, the global patch database‐assisted filtering removed less structural information than the localized patch database, because of a larger patch variety.63 Nevertheless, it is still unknown whether the method works on sparse‐view CT images, which are degraded with severe view‐aliasing artifacts that may jeopardize the search for matched patches from the database.
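The patch database procedure described above (match the patch of a pixel against the database, keep the most similar patches, then average their central pixels) can be sketched for a single pixel as follows. The function name `database_nlm_pixel`, the number of selected patches `n_select`, the mean squared patch distance, and the normalization of the weights over the selected patches are assumptions of this sketch, not details confirmed by ref. 63.

```python
import numpy as np

def database_nlm_pixel(patch, db_patches, n_select=5, h=0.3):
    """Estimate one pixel from the database patches most similar to `patch`.

    patch      : (pw, pw) array extracted around the pixel in the low-flux image
    db_patches : (N, pw, pw) array of high quality database patches
    """
    db = np.asarray(db_patches, dtype=float)
    d2 = np.mean((db - patch) ** 2, axis=(1, 2))   # distance to every database patch
    idx = np.argsort(d2)[:n_select]                # indices of the selected patches
    # Subtracting the smallest distance leaves the normalized weights unchanged
    # and avoids a zero denominator when every distance is large.
    w = np.exp(-(d2[idx] - d2[idx].min()) / h ** 2)
    w /= w.sum()
    centre = db.shape[1] // 2
    return float(np.sum(w * db[idx, centre, centre]))

# Toy database of three constant patches and a slightly biased query patch.
db = np.stack([np.full((3, 3), v) for v in (0.0, 1.0, 2.0)])
query = np.full((3, 3), 1.05)
print(database_nlm_pixel(query, db, n_select=3))
```

For a realistic database the exhaustive distance computation would be replaced by an approximate nearest‐neighbor search, which is an implementation choice outside the scope of this sketch.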

3.A.2. NLM‐regularized image restoration

Generic NLM‐regularized image restoration

In addition to applying the filter directly to a FBP reconstructed LDCT image, the low‐dose image can also be restored by solving the following problem:

$$\mu^{*} = \arg\min_{\mu} \left\{ \|\mu - \mu^{LD}\|_{2}^{2} + \beta R(\mu) \right\} \qquad (16)$$

where $\mu^{LD}$ represents the FBP reconstructed low‐dose image, as defined above. The first term in Eq. (16) is a data‐fidelity term, the second term $R(\mu)$ is a regularization term, and β > 0 is a scalar control parameter that balances the two terms. A β that is too small may not eliminate noise/streaks in the LDCT image, while a β that is too large may remove valuable image features.

The NLM‐based regularization models illustrated in Section 2.B can be employed in Eq. (16) for the restoration of FBP reconstructed LDCT images. Hashemi et al. explored the nonlocal TV regularization for low‐flux CT image restoration with promising results.12 For sparse‐view CT, however, μ LD is severely degraded with view‐aliasing artifacts and some structures are hardly resolved, so the resulting image restoration may yield only inferior image quality because similar patches are difficult to find within the image.
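A restoration of this kind can be sketched with plain gradient descent. The example below is an assumption‐laden illustration, not the method of any cited work: it uses a quadratic data term without a factor of 1/2, the quadratic potential φ(Δ) = Δ²/2, unnormalized weights computed once on a reference image (here the noisy image itself), periodic image borders, and a conservative fixed step size. All function names are hypothetical.

```python
import numpy as np

def shift(img, dr, dc):
    """Return s with s[r, c] = img[r + dr, c + dc], using periodic borders."""
    return np.roll(img, (-dr, -dc), axis=(0, 1))

def nlm_weights(ref, sw=5, pw=3, h=0.3):
    """Unnormalized NLM weights, one array per search-window offset."""
    rs, rp = sw // 2, pw // 2
    weights = {}
    for dr in range(-rs, rs + 1):
        for dc in range(-rs, rs + 1):
            if (dr, dc) == (0, 0):
                continue
            diff2 = (ref - shift(ref, dr, dc)) ** 2
            # Average the squared differences over the patch window.
            d2 = sum(shift(diff2, pr, pc)
                     for pr in range(-rp, rp + 1)
                     for pc in range(-rp, rp + 1)) / (pw * pw)
            weights[(dr, dc)] = np.exp(-d2 / h ** 2)
    return weights

def nonlocal_diff(mu, weights):
    """For every pixel j, the sum over neighbors k of w_jk * (mu_j - mu_k)."""
    return sum(w * (mu - shift(mu, dr, dc)) for (dr, dc), w in weights.items())

def objective(mu, ld, weights, beta):
    """Quadratic data term plus beta times the weighted quadratic penalty."""
    reg = sum(np.sum(w * (mu - shift(mu, dr, dc)) ** 2) / 2.0
              for (dr, dc), w in weights.items())
    return float(np.sum((mu - ld) ** 2) + beta * reg)

def restore(ld, ref, beta=0.5, n_iter=100, **kw):
    weights = nlm_weights(ref, **kw)
    degree = sum(weights.values())
    # Step size from an upper bound on the curvature of the objective.
    step = 1.0 / (2.0 + 4.0 * beta * degree.max())
    mu = ld.copy()
    for _ in range(n_iter):
        # The weights are symmetric (w_jk = w_kj), which gives the factor 2.
        grad = 2.0 * (mu - ld) + 2.0 * beta * nonlocal_diff(mu, weights)
        mu = mu - step * grad
    return mu, weights

rng = np.random.default_rng(0)
clean = np.zeros((16, 16))
clean[:, 8:] = 1.0
ld = clean + 0.1 * rng.standard_normal(clean.shape)
restored, w = restore(ld, ld, beta=0.5, sw=5, pw=3, h=0.3)
print(objective(ld, ld, w, 0.5), objective(restored, ld, w, 0.5))
```

Replacing `ref` with a registered prior image, or recomputing the weights from the current estimate every few iterations, would give the preHQ‐induced and one‐step‐late variants discussed in the text.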

preHQ‐regularized image restoration

As discussed in Section 3.A.1(2), if a previous high quality CT image of the same patient is available, the prior image can be formulated into the NLM‐based regularization models in Section 2.B, to generate the preHQ‐induced NLM regularization models, which are given as:54, 58

| (17) |

| (18) |

| (19) |

where

| (20) |

The preHQ‐induced NLM regularizations in Eqs. (17), (18), and (19) can all potentially be employed in Eq. (16) for the restoration of FBP reconstructed LDCT images. The prior image contains similar anatomical structures and provides excellent correspondence to the current low‐dose image, which can further improve image restoration over the use of generic regularizations.

3.A.3. NLM‐regularized image reconstruction

Generic NLM‐regularized image reconstruction

The NLM‐based regularization models in Section 2.B can also be employed for statistical image reconstruction (SIR) of LDCT images. In practice, the SIR method uses either calibrated transmitted photons (before log‐transform) with the pML criterion or calibrated line integrals (after log‐transform) with the PWLS criterion.64 Because of its computational advantage, the PWLS criterion is a common choice and is described as:

$$\mu^{*} = \arg\min_{\mu} \left\{ (y - A\mu)^{T} \Sigma^{-1} (y - A\mu) + \beta R(\mu) \right\} \qquad (21)$$

where $y \in \mathbb{R}^{I \times 1}$ is the vector of the measured line integrals, and I is the total number of line integral measurements; $\mu \in \mathbb{R}^{J \times 1}$ is the vector of attenuation coefficients of the object to be reconstructed, and J is the number of image pixels; $A$ is the system or projection matrix; $\Sigma$ is the covariance matrix, and because the measurements from different detector bins are assumed to be independent, the covariance matrix is diagonal and expressed as $\Sigma = \mathrm{diag}\{\sigma_i^{2}\}$;64 the symbols T and −1 indicate transpose and inverse operators, respectively. The first term in Eq. (21) is a data‐fidelity term that models the statistics of projection measurements, while the second term can be the NLM‐based regularization demonstrated in Section 2.B.
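To make the role of the diagonal covariance matrix concrete, the following sketch evaluates a PWLS objective and the gradient of its data‐fidelity term on a toy problem. The names `y`, `A`, and `var`, the random toy system, and the variance values are hypothetical choices for this example. The penalty is left as an optional callable, so any of the regularization models of Section 2.B could be plugged in.

```python
import numpy as np

def pwls_objective(mu, y, A, var, beta=0.0, penalty=None):
    """(y - A mu)^T Sigma^{-1} (y - A mu) + beta * R(mu), with Sigma = diag(var)."""
    r = y - A @ mu
    value = r @ (r / var)            # dividing by var applies the diagonal inverse
    if penalty is not None:
        value += beta * penalty(mu)
    return float(value)

def pwls_data_gradient(mu, y, A, var):
    """Gradient of the data-fidelity term with respect to mu."""
    return -2.0 * A.T @ ((y - A @ mu) / var)

# Toy problem with I = 8 measurements and J = 4 unknowns (hypothetical values).
rng = np.random.default_rng(1)
A = rng.uniform(0.0, 1.0, size=(8, 4))
mu_true = np.array([0.2, 0.5, 0.1, 0.3])
var = rng.uniform(0.01, 0.2, size=8) ** 2          # one variance per detector bin
y = A @ mu_true + np.sqrt(var) * rng.standard_normal(8)

# Without a penalty, the minimizer is the weighted least-squares solution.
W = np.diag(1.0 / var)
mu_wls = np.linalg.solve(A.T @ W @ A, A.T @ W @ y)
print(pwls_objective(mu_wls, y, A, var), pwls_objective(mu_true, y, A, var))
```

Measurements with a large variance are divided by a large number and therefore contribute little to the objective, which is how the statistical weighting down‐weights noisy rays.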

Chen et al. first explored the NLM‐based regularization for Bayesian reconstruction of emission tomography43 and X‐ray CT,65 and impressive reconstruction results were observed in noise suppression and tissue feature preservation. Later, they also applied a joint estimation strategy to solve the convergence problem caused by the weight update in iterations.66 Zhang et al. compared the performance of NLM‐based regularization with traditional MRF model‐based regularizations for SIR of LDCT, and found the NLM‐based regularization can provide better noise reduction and resolution preservation for both phantoms and patient data.50, 67, 68

Similarly to NLM filtering, the use of locally adaptive filtering parameter h in NLM‐based regularization can also improve the image quality of the resulting reconstruction.68 While the local noise level in the SIR reconstructed image is difficult to estimate, an empirical approach to determine locally adaptive filtering parameter was given as:68

| (22) |

where s and t are two constants. The rationale behind this expression is that the value of h should depend on the similarity between the patch of the target pixel and the patches within the corresponding SW. When the SW contains many patches similar to $P(\mu_j)$, h needs to be decreased to reduce the influence of the other patches. Conversely, when very few similar patches exist in the SW for $P(\mu_j)$, h needs to be increased to relax the selection.69

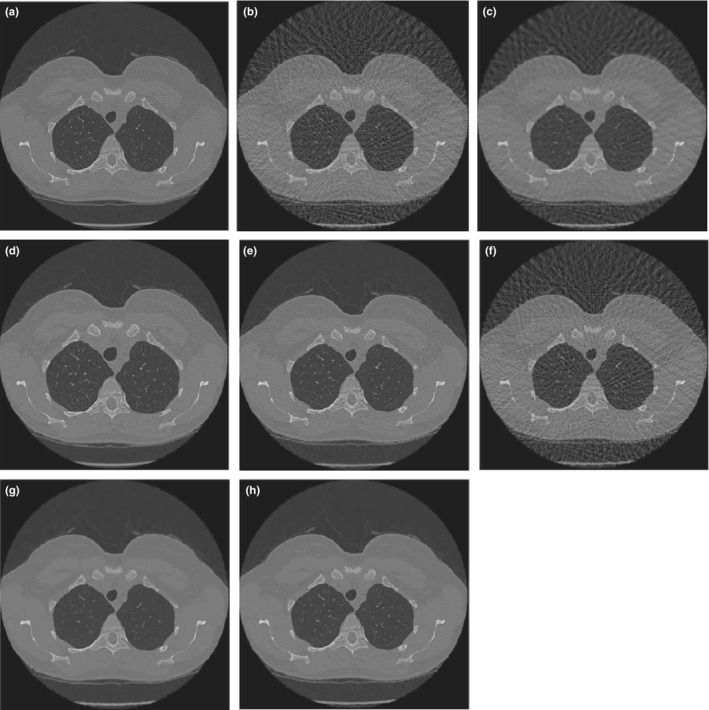

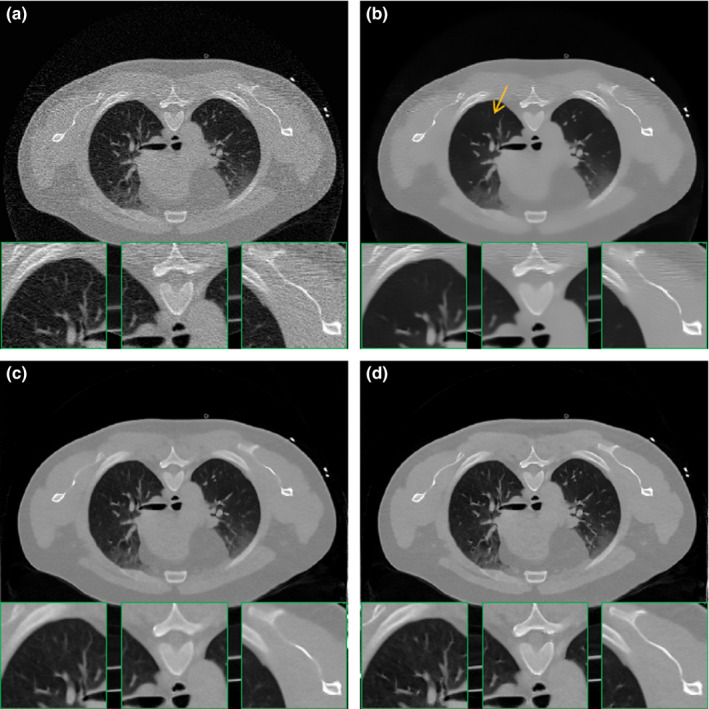

Figure 5 shows the images reconstructed from the low‐dose projection data (20 mAs, 120 kVp) of a patient by the following methods: the FBP method, the FBP reconstruction followed by NLM filtering (referred to as FBP + NLM filtering), the NLM‐regularized SIR using a constant filtering parameter (referred to as PWLS‐NLM), and the NLM‐regularized SIR using the adaptive filtering parameters in Eq. (22) (referred to as PWLS‐adaptiveNLM). The FBP + NLM filtering method cannot eliminate the streak artifacts within the image, but the two NLM‐regularized SIR methods do not have this problem because of the statistical modeling of the projection data. As observed in the zoom‐in views of three detailed regions, the PWLS‐adaptiveNLM outperforms the PWLS‐NLM in the reconstruction of subtle structures, demonstrating the need to introduce spatial adaptivity into NLM‐based regularization. Besides adjusting the filtering parameter h, other approaches may also be used to make the NLM‐based regularization adaptive, such as those mentioned in Section 3.A.1(1).

Figure 5.

One reconstructed slice of a patient from low‐flux projection data: (a) FBP reconstruction; (b) FBP reconstruction followed by NLM filtering (SW = 17², PW = 5², h = 0.012); (c) PWLS‐NLM reconstruction (SW = 17², PW = 5², h = 0.008); (d) PWLS‐adaptiveNLM reconstruction (SW = 17², PW = 5², s = 1 × 10⁻³, t = 4 × 10⁻⁶). All images are displayed with the same window. (Figure reprinted from the work of Zhang et al.68).

The NLM‐regularized image reconstruction for sparse‐view CT27 can also be formulated as Eq. (21). While a penalized least‐squares criterion (i.e., the covariance matrix is set to the identity matrix) may be adequate in relatively high‐flux imaging applications, a more accurate modeling of the projection measurements becomes important when only a limited number of X‐ray photons reach each detector bin.70 Thus, the PWLS criterion in Eq. (21) can be a better choice to account for the credibility of each measurement in both sparse‐view and low‐flux cases.

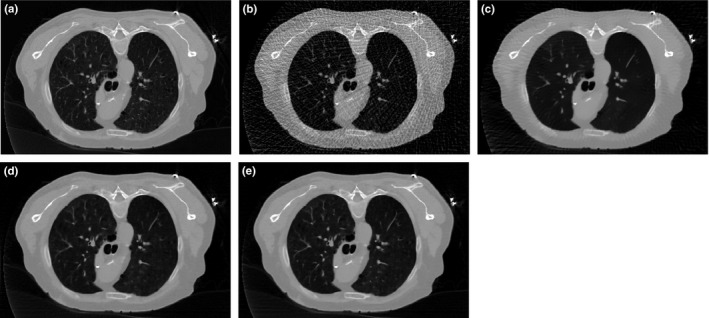

Figure 6(a) illustrates one transverse CT image of a patient, which is reconstructed from the full set of 1160 projection views with the FBP method and serves as the ground truth for reference. Figures 6(b)–6(e) show the images reconstructed by the FBP method, FBP + NLM filtering, PWLS‐NLM, and PWLS‐adaptiveNLM from 145 projection views, which were evenly extracted from the 1160 projection views. The NLM‐regularized image reconstructions can suppress the view‐aliasing artifacts more effectively than the FBP and FBP + NLM filtering methods while retaining good image quality. Also, the PWLS‐adaptiveNLM is superior to the PWLS‐NLM in preserving the subtle structures within the lung region, which may be of great importance for diagnosis.
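The even extraction of 145 views from 1160 corresponds to keeping every eighth view. A minimal sketch of this subsampling is given below; the variable names and the assumption of a full 360° rotation are illustrative and are not taken from the reviewed study.

```python
import numpy as np

n_full = 1160                     # projection views in the full scan
n_sparse = 145                    # views kept for the sparse-view experiment
step = n_full // n_sparse         # keep every `step`-th view

# Indices of the retained views, evenly spread over the full set.
view_idx = np.arange(0, n_full, step)

# Assumed geometry: views spread evenly over one 360-degree rotation.
angles_deg = view_idx * 360.0 / n_full

# For a sinogram stored as (views, detector_bins), the sparse-view data
# would simply be sinogram[view_idx].
```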

Figure 6.

A reconstructed slice of one patient dataset: (a) FBP reconstruction from the full 1160 projection views; (b) FBP reconstruction from 145 projection views; (c) FBP + NLM filtering from 145 projection views (SW = 17², PW = 5², h = 0.01); (d) PWLS‐NLM reconstruction from 145 projection views (SW = 17², PW = 5², h = 0.006); (e) PWLS‐adaptiveNLM reconstruction from 145 projection views (SW = 17², PW = 5², s = 1 × 10⁻³, t = 4 × 10⁻⁶). All images are displayed with the same window.

preHQ‐regularized image reconstruction

The preHQ‐induced NLM regularizations described in Section 3.A.2(2) were also used for low‐flux and sparse‐view CT image reconstruction as in Eq. (21) with promising reconstruction results.58, 71, 72 It was also demonstrated that they may outperform the generic NLM‐based regularizations in terms of reconstructed image quality, or potentially yield good images from lower dose acquisition, because of the introduction of a previous high quality image.58, 71, 72 Finally, because optimization of the criterion in Eq. (21) is routinely performed by an iterative algorithm and the reconstructed image is updated after each iteration, the artifact‐matched scheme may not be needed as in Section 3.A.1(2).

3.A.4. NLM‐based projection data denoising

The NLM filter can also be applied to CT projection data before image reconstruction.16 The accessible projection data are commonly calibrated transmitted photons (before log‐transform) or calibrated line integrals (after log‐transform).64 The calibrated transmitted photons can be described by a Poisson distribution or ‘Poisson + Gaussian’ distribution with consideration of the electronic noise, while the calibrated line integrals can be approximated by a Gaussian distribution with a nonlinear signal‐dependent variance.64 Therefore, the local noise level of the projection data can be easily determined. Taking the calibrated line integrals as an example, the variance of each measurement can be given as:73, 74, 75

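$$\sigma_{y_i}^{2} = \frac{1}{N_{0i}} \exp(\bar{y}_i)$$

or

$$\sigma_{y_i}^{2} = \frac{1}{N_{0i}} \exp(\bar{y}_i) \left( 1 + \frac{1}{N_{0i}} \exp(\bar{y}_i) \left( \sigma_e^{2} - 1.25 \right) \right) \qquad (23)$$

where $y_i$ represents the line integral measurement along the ith X‐ray path, $\bar{y}_i$ and $\sigma_{y_i}^{2}$ are the mean and variance of $y_i$, $N_{0i}$ denotes the mean number of incident photons, and $\sigma_e^{2}$ denotes the variance of electronic noise. As a result, the filtering parameter h employed for each projection measurement can be adjusted adaptively as $h_i = \varepsilon\,\sigma_{y_i}$, where ɛ is a constant, which is similar to Eq. (10) but defined in the projection domain. The NLM‐based projection data denoising can then be performed similarly to that in Eq. (1).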
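The signal‐dependent variance of the line integrals and the resulting adaptive filtering parameter can be sketched as follows. The sketch assumes the commonly used model in which the variance grows as exp(ȳ_i)/N_0i, optionally with an electronic‐noise correction, and it assumes that h is proportional to the local standard deviation through a constant ɛ. The function names and numerical values are hypothetical.

```python
import numpy as np

def variance_quantum(y_mean, n0):
    """Variance of a line integral when only quantum noise is modeled."""
    return np.exp(y_mean) / n0

def variance_with_electronic(y_mean, n0, sigma_e2):
    """Variance of a line integral with an electronic-noise correction term."""
    base = np.exp(y_mean) / n0
    return base * (1.0 + base * (sigma_e2 - 1.25))

def adaptive_h(variance, eps):
    """Assumed rule: filtering parameter proportional to the local noise std."""
    return eps * np.sqrt(variance)

# Example: line integrals between 0 and 6 with 1e4 incident photons per bin.
y_mean = np.linspace(0.0, 6.0, 7)
var = variance_with_electronic(y_mean, n0=1e4, sigma_e2=10.0)
h = adaptive_h(var, eps=15.0)   # larger h where the data are noisier
```

Because the variance increases exponentially with the line integral, the filtering strength increases along strongly attenuating paths, where the measurements are least reliable.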

If the projection data of both a previous high quality scan and the current LDCT scan are available, the same strategy as that used in the image domain in Eq. (11) can potentially be used to perform preHQ‐guided projection data denoising. However, registration of projection data can be more challenging than registration of images, which may impede its practical application.

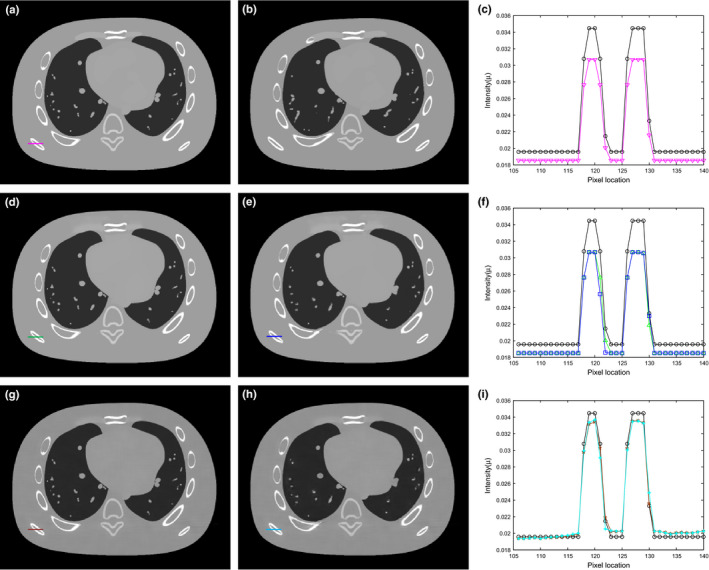

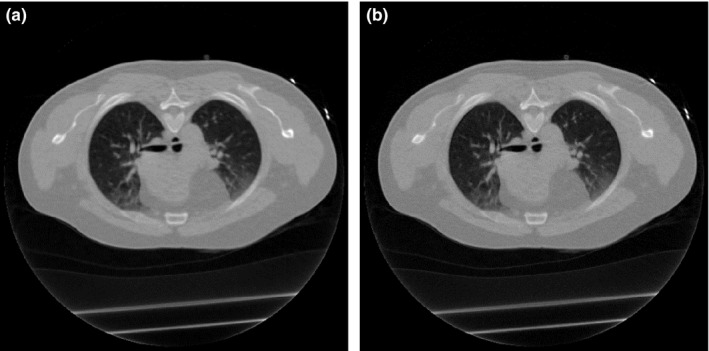

The denoised projection data can then be reconstructed by the FBP method. CT projection data denoising is advantageous because noise is removed early in the pipeline, preventing its propagation into the reconstruction process.17 However, the edges in the projection domain are usually not as well defined as those in the image domain, which may result in a loss of edge sharpness in the final reconstructed image, as shown in Fig. 7(a).

Figure 7.

One reconstructed slice from the same low‐flux projection data as Fig. 5: (a) NLM‐based projection data denoising followed by FBP reconstruction (SW = 5², PW = 3², ɛ = 15); (b) NLM‐regularized statistical projection restoration followed by FBP reconstruction (SW = 5², PW = 3², ɛ = 3).

3.A.5. NLM‐regularized statistical projection restoration

NLM‐based regularizations can also be used for statistical projection restoration of low‐flux CT. Similar to the SIR methods, statistical projection restoration can optimize either the pML or PWLS criterion in the projection domain to estimate the ideal noiseless projection data from the acquired noisy ones.22, 23, 24, 25 For instance, the PWLS criterion in the projection domain is given as:

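$$q^{*} = \arg\min_{q \ge 0} \left\{ (y - q)^{T} \Sigma^{-1} (y - q) + \beta U(q) \right\} \qquad (24)$$

where $q = (q_1, \ldots, q_I)^{T}$ is the vector of ideal noiseless line integrals to be estimated, $y$ and $\Sigma$ are the measured line integrals and their covariance matrix as in Eq. (21), $\beta$ is the scalar control parameter, and U(q) denotes a penalty term in the projection domain. Although this projection restoration is also typically optimized by an iterative algorithm, it is computationally much faster than the SIR.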
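To make the projection‐domain PWLS criterion concrete, the sketch below restores a one‐dimensional projection profile by minimizing a weighted least‐squares term plus a quadratic roughness penalty. The quadratic penalty is only a stand‐in chosen because it admits a closed‐form solution; an NLM‐based penalty would normally require an iterative solver. The nonnegativity constraint is ignored, and all names are illustrative assumptions.

```python
import numpy as np

def pwls_restore_1d(y, var, beta):
    """Minimize (y - q)^T W (y - q) + beta * ||D q||^2 with W = diag(1 / var).

    D is the first-difference operator, so the penalty discourages jumps
    between neighboring detector bins. Setting the gradient to zero gives
    the linear system (W + beta * D^T D) q = W y.
    """
    n = y.size
    W = np.diag(1.0 / var)
    D = np.diff(np.eye(n), axis=0)          # (n - 1) x n difference matrix
    return np.linalg.solve(W + beta * D.T @ D, W @ y)

# Toy profile: the last bin is much noisier than the others.
y = np.array([1.0, 1.2, 0.9, 1.1, 3.0])
var = np.array([0.01, 0.01, 0.01, 0.01, 1.0])
q = pwls_restore_1d(y, var, beta=50.0)
```

In this toy case the unreliable last measurement is pulled strongly toward its neighbors, while the low‐variance measurements stay close to their observed values.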

Similar to the regularization model of Eq. (4) in the image domain, the corresponding NLM‐based regularization in the projection domain can be described as:

| (25) |

where the weighting coefficient is also computed as:

| (26) |

Also, similar to the preHQ‐induced NLM regularization model of Eq. (17) in the image domain, the corresponding regularization in the projection domain can be described as:

| (27) |

where 1 represents the projection data of previous high quality scan registered to the projection data of current low‐dose scan, and the weighting coefficient is given as:

| (28) |

The other NLM‐based regularization models can also be adapted from the image domain to the projection domain. All of these regularization models can potentially be used for the statistical projection restoration of low‐dose projection data with Eq. (24).

The restored projection data are then reconstructed by the analytical FBP method. The NLM‐regularized statistical projection restoration may have similar drawbacks to the NLM‐based projection data denoising, that is, the ultimate FBP reconstructed image may lose edge sharpness, as illustrated in Fig. 7(b).

3.B. Four‐dimensional CT scanning

For imaging sites affected by respiratory motion, such as the thoracic and upper abdominal regions, four‐dimensional CT (4D‐CT) and four‐dimensional cone‐beam CT (4D‐CBCT) have been widely used to resolve organ motions and reduce motion artifacts.13, 76, 77 To achieve low‐dose imaging, the same strategies (i.e., low‐flux or sparse‐view acquisition) can be employed as in three‐dimensional CT scanning. In 4D‐CT and 4D‐CBCT, the projection data can be sorted into different groups corresponding to different breathing phases. After phase binning, each phase image can be reconstructed independently with either analytical (e.g., FBP, FDK) or iterative reconstruction methods. Each phase image can also be improved with the methods presented in Section 3.A. However, these methods neglect the high temporal correlation between the images at successive phases, which could be exploited to improve the image quality of each phase. The same anatomical features may exist in successive phases, with slight motion and deformation. Therefore, Li et al. developed a partial temporal nonlocal means filter for 4D‐CT, which uses a partial temporal profile to determine the similarity between pixels and exploits redundant information in both temporal and spatial domains to achieve noise reduction.78 In addition, a temporal NLM (TNLM) method was proposed for image enhancement and reconstruction of 4D‐CT and 4D‐CBCT, to exploit the temporal redundancy among images at successive phases.13, 76, 77

Let us divide a respiratory cycle into N phases labeled by n = 1, 2, …, N. The image of phase n is denoted by $\mu^{\{n\}}$, and a periodic boundary condition along the temporal direction is assumed, i.e., $\mu^{\{N+1\}} = \mu^{\{1\}}$. The TNLM regularization model is described as:13, 76, 77

| (29) |

where

| (30) |

According to Section 2.B, the TNLM regularization model can also be given as:

| (31) |

| (32) |

It can be seen that the TNLM regularization models are very similar to those described in Section 3.A.2(2), although they exploit neighboring phases instead of a previous high quality image.
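The idea of borrowing information from neighboring phases can be illustrated with a simple filter‐style sketch: each pixel of phase n is estimated as a weighted average of pixels in the two adjacent phases, with weights given by Gaussian‐type patch similarity and a periodic boundary in time. This is only one possible realization written under those assumptions; it is not the exact formulation of the TNLM models above, and the function name and default parameters are hypothetical.

```python
import numpy as np

def tnlm_filter_phase(phases, n, sw=2, pw=1, h=0.05):
    """Estimate phase n from its two temporal neighbors.

    phases : array of shape (N, H, W) holding one 2D image per phase.
    sw, pw : half-widths of the search window and the patch window.
    h      : filtering parameter controlling the decay of the weights.
    """
    N, H, W = phases.shape
    pad = sw + pw
    padded = np.pad(phases, ((0, 0), (pad, pad), (pad, pad)), mode="reflect")
    neighbors = [(n - 1) % N, (n + 1) % N]   # periodic boundary along time
    out = np.zeros((H, W))
    for r in range(H):
        for c in range(W):
            rc, cc = r + pad, c + pad
            ref = padded[n, rc - pw:rc + pw + 1, cc - pw:cc + pw + 1]
            acc, wsum = 0.0, 0.0
            for m in neighbors:
                for dr in range(-sw, sw + 1):
                    for dc in range(-sw, sw + 1):
                        rr, c2 = rc + dr, cc + dc
                        patch = padded[m, rr - pw:rr + pw + 1,
                                       c2 - pw:c2 + pw + 1]
                        d2 = np.mean((ref - patch) ** 2)   # patch distance
                        w = np.exp(-d2 / h ** 2)
                        acc += w * padded[m, rr, c2]
                        wsum += w
            out[r, c] = acc / wsum
    return out
```

For example, `tnlm_filter_phase(phases, 0)` estimates the first phase from the last and the second phases of the stack, which is where the periodic boundary condition enters.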

3.B.1. TNLM‐regularized image restoration

Let 1 denote the reconstructed image of phase n by the analytical FBP/FDK method from the phase binned projection data. The TNLM‐based image restoration or enhancement method for 4D‐CT/4D‐CBCT can be given as:13

| (33) |

3.B.2. TNLM‐regularized image reconstruction

Let $y^{\{n\}}$ denote the vector of phase binned line integrals of phase n, $A^{\{n\}}$ represent the projection matrix of phase n that maps the image $\mu^{\{n\}}$ into a set of projections corresponding to various projection angles, and $\Sigma^{\{n\}}$ represent the covariance matrix for the phase binned line integrals of phase n; then the TNLM‐based image reconstruction method for 4D‐CT/4D‐CBCT can be described as:13, 76, 77

| (34) |

Also, as discussed in Section 3.A.3(1), Eq. (34) may be replaced with the following form when the X‐ray flux is relatively high:

| (35) |

In these optimization problems, the images at every phase are reconstructed altogether instead of independently.

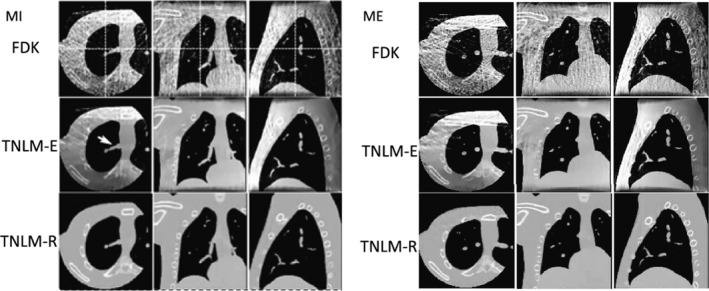

Figure 8 illustrates the 4D‐CBCT images of the NCAT phantom from sparse‐view acquisitions (30 projection views spaced evenly over a 360° rotation), obtained by the following methods: analytical FDK reconstruction, TNLM‐regularized image restoration or enhancement (referred to as TNLM‐E) in Eq. (33), and TNLM‐regularized image reconstruction (referred to as TNLM‐R) in Eq. (35). Both the TNLM‐E and TNLM‐R methods mitigate the view‐aliasing artifacts to a large extent, as compared with the FDK method. In the absence of other problems such as data truncation, the TNLM‐R method can yield higher image quality than the TNLM‐E method.13

Figure 8.

4D‐CBCT images of the NCAT phantom at the maximum inhale (MI) and the maximum exhale (ME) phases generated by three different methods from sparse‐view acquisitions. (Figure reprinted from the work of Jia et al.13).

In addition, Kazantsev et al.79, 80 argued that structurally similar features may exist in much more distant time frames than simply adjacent ones, although the two neighboring frames have a higher probability of being structurally similar to the central frame. Therefore, they proposed to employ all available temporal data to improve the spatial resolution of each individual time frame. However, this approach would inevitably further increase the computational burden, although the patch selection as in Eq. (10) can be utilized to accelerate the process to some extent.

4. Discussions and conclusions

This paper reviews various applications of the NLM algorithm in LDCT from low‐flux and sparse‐view acquisitions. The NLM algorithm can be used as a filter for image/projection filtering, which is a typical non‐iterative procedure. The NLM algorithm can also be formulated as a regularization term for regularized image/projection restoration and image reconstruction, routinely performed by optimizing an objective function with an iterative algorithm. Depending on the form of the objective function, iterative algorithms including gradient descent, conjugate gradient, expectation maximization, iterative coordinate descent, and separable paraboloidal surrogates81 may be employed to obtain the solution. Moreover, acceleration techniques including ordered subsets,82 nonhomogeneous update,83 the alternating direction method of multipliers,84 and first‐order methods based on Nesterov's algorithm85 can also be combined with them. Generally, the computational load of the NLM‐regularized strategies is much higher than that of the NLM‐based filtering strategies because of the iterations. However, CT image quality may be remarkably improved despite the increased computational time. For instance, NLM‐regularized image reconstruction takes advantage of projection data (e.g., statistical modeling) and imaging geometry (e.g., projection matrix), and can produce better image quality than NLM‐based filtering or restoration strategies, as demonstrated in Figs. 5, 7, and 8.

For CT from low‐flux acquisitions, the NLM strategies for noise and streak artifact reduction can operate in both the image and projection domains. While the noise and streak artifacts in low‐flux CT images cannot be modeled with a general distribution, the noise properties of low‐flux projection data are fairly well understood.64 Comparing Fig. 7(a) with Fig. 5(b) shows that projection denoising performs better than image denoising in terms of noise and streak artifact suppression for this specific patient study. This finding is consistent with the results of a study by Xia et al., who applied a partial diffusion equation based denoising technique for breast CBCT to both projection data and reconstructed images.86 However, projection denoising and statistical projection restoration may lose edge sharpness in the final reconstructed image (as shown in Fig. 7), because the edges in the projection domain are usually not as well defined as those in the image domain. Comparing Fig. 5(d) with Fig. 7(b) shows that NLM‐regularized statistical image reconstruction (operating in the image domain) preserves edges and details better than NLM‐regularized statistical projection restoration (operating in the projection domain). This finding is also consistent with a previous study87 that compared the performance of image‐domain and projection‐domain PWLS implementations using anisotropic diffusion filter‐based regularization. Overall, the NLM‐regularized statistical image reconstruction in Eq. (21), which optimizes an objective function defined in the image domain and also takes advantage of the noise properties of the projection data, seems to yield the best image quality of all the NLM strategies.

While the use of a previous high quality scan to improve current LDCT image reconstruction has become an active research topic, one major limitation is that the previous high quality image usually needs to be registered to the current low‐dose image. The NLM‐based strategies do not depend heavily on registration accuracy; a rough registration can be sufficient because of the patch‐based search mechanism. Because direct filtering of the LDCT image is usually problematic, the introduction of a previous high quality image can significantly improve current low‐dose image filtering, as shown in Figs. 2 and 3. Similarly, a previous high quality image can also be incorporated into the NLM‐based regularization models to improve image restoration or reconstruction of the current LDCT. While generic NLM‐regularized image restoration or reconstruction may already be able to generate good image quality for LDCT, preHQ‐regularized image restoration or reconstruction can potentially further improve image quality or yield an equally good image from a lower‐dose acquisition. Finally, although preHQ‐guided projection data denoising and preHQ‐regularized statistical projection restoration can be applied in theory, they may not be practical for the following reasons: (a) the registration of the projection data is more challenging than the registration of the images; (b) it is more difficult to find sufficient similar patches in the projection data than in the images. Instead, the previous high quality scan‐induced NLM strategies in the projection domain can be converted to the image‐domain strategies (i.e., preHQ‐guided image filtering, preHQ‐regularized image restoration, and preHQ‐regularized statistical image reconstruction) after the FBP reconstruction of the previous high quality projection data, so that the edges and details of the resulting image can be better preserved.

Introducing spatial adaptivity to the NLM algorithm can improve the image quality of LDCT, especially for low‐contrast objects and subtle structures, as illustrated in Figs. 5 and 6. The reason is that a uniform smoothing strength can be too weak for some regions but too strong for others within the image, hence the need for spatially variant adaptive smoothing. Spatial adaptivity can be incorporated into the NLM algorithm in different ways, including designing an adaptive filtering parameter h, employing an adaptive SW, and excluding dissimilar patches within the SW. So far, these adaptive approaches have mainly been explored for generic NLM strategies but rarely for preHQ‐induced NLM strategies, for two main reasons: (a) it is more difficult to incorporate spatial adaptivity for preHQ‐induced NLM strategies because of the introduction of the previous high quality image; and (b) it is less important to incorporate spatial adaptivity because the previous high quality image can provide more correspondence to the current LDCT image for structure recovery. However, spatially variant adaptive smoothing may still be beneficial to preHQ‐induced NLM strategies and may be an interesting topic for further investigation. Finally, since anisotropic structure is commonly observed in real‐world images, some anisotropic NLM algorithms have also been proposed to improve the denoising performance, such as substituting the square patches of fixed size with spatially adaptive shapes,88 using image gradient information to estimate the edge orientation,89 and incorporating the structure tensor into the weight calculation.90 Investigation of these strategies for LDCT applications can be another interesting research topic.

While NLM‐based strategies have been successfully used in the LDCT scenario, they have two drawbacks: sophisticated parameter tuning and a heavy computational burden. For NLM‐based image/projection filtering, four parameters generally need to be tuned: SW size, PW size, standard deviation a of the Gaussian kernel, and filtering parameter h. More parameters may be needed when an adaptive scheme is used. For NLM‐regularized image/projection restoration or image reconstruction, an additional parameter (the scalar control parameter β) needs to be selected. Determining the optimal values for these parameters is challenging, and in practice, they are usually set empirically via an extensive trial‐and‐error process. It was found that SW size, PW size, and standard deviation a do not usually have noticeable effects on the reconstructed image when set in a reasonable range, whereas filtering parameter h and control parameter β need to be manually tuned. Also, the superior performance of NLM‐based strategies comes at the cost of increased computational time. One time‐consuming procedure is the calculation of patch distances within a large SW. Various schemes have been proposed to speed up this process, including preselecting patches,52, 91 neglecting the Gaussian kernel,92, 93 taking advantage of symmetry,10 and employing GPUs for parallel processing.10, 94 For NLM‐regularized image reconstruction, employing an iterative algorithm to optimize the objective function is also time‐consuming because of multiple re‐projection and back‐projection operation cycles in the projection and image domains. Many software15, 83, 84, 85 and hardware95, 96, 97, 98, 99 approaches have been investigated to substantially reduce the reconstruction time. With advancements in fast computation through the development of dedicated software and hardware, the computational burden may not be a major challenge in the future, and the NLM‐based strategies may move closer to clinical use.
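The four parameters discussed above can be seen in a basic, unoptimized NLM filter such as the sketch below. It is a minimal illustration written for clarity rather than speed, assuming Gaussian‐weighted patch distances and exponential weights; the function names and defaults are hypothetical, and window sizes are given as half‐widths (for example, `sw_half=8` corresponds to a 17 × 17 SW).

```python
import numpy as np

def gaussian_kernel(pw_half, a):
    """Normalized Gaussian kernel with standard deviation a over the patch."""
    ax = np.arange(-pw_half, pw_half + 1)
    xx, yy = np.meshgrid(ax, ax)
    k = np.exp(-(xx ** 2 + yy ** 2) / (2.0 * a ** 2))
    return k / k.sum()

def nlm_filter(img, sw_half=3, pw_half=1, a=1.0, h=0.1):
    """Basic NLM filter exposing SW size, PW size, a, and h."""
    H, W = img.shape
    pad = sw_half + pw_half
    p = np.pad(img, pad, mode="reflect")
    k = gaussian_kernel(pw_half, a)
    out = np.empty_like(img, dtype=float)
    for r in range(H):
        for c in range(W):
            rc, cc = r + pad, c + pad
            ref = p[rc - pw_half:rc + pw_half + 1, cc - pw_half:cc + pw_half + 1]
            acc, wsum = 0.0, 0.0
            for dr in range(-sw_half, sw_half + 1):
                for dc in range(-sw_half, sw_half + 1):
                    rr, c2 = rc + dr, cc + dc
                    patch = p[rr - pw_half:rr + pw_half + 1,
                              c2 - pw_half:c2 + pw_half + 1]
                    d2 = np.sum(k * (ref - patch) ** 2)  # weighted patch distance
                    w = np.exp(-d2 / h ** 2)             # similarity weight
                    acc += w * p[rr, c2]
                    wsum += w
            out[r, c] = acc / wsum
    return out
```

The nested loops over the SW make the cost per pixel proportional to the product of the SW and PW areas, which is why the acceleration schemes listed above focus on the patch‐distance calculation. Choosing a very large `a` makes the kernel nearly uniform, which approximates the shortcut of neglecting the Gaussian kernel.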

We have only reviewed the applications of the NLM algorithm in a standard LDCT scenario (low‐flux or sparse‐view acquisition); however, it may also be applied to other low‐dose problems such as interior CT and short‐scan CT, or to suppress artifacts caused by other imperfections. Moreover, the NLM algorithm may also be used in perfusion CT,54, 61, 78, 100 dual‐energy CT,101, 102 and multi‐energy CT103 scenarios, although special adjustment strategies are needed to account for changes in pixel intensity due to contrast perfusion or energy differences. We hope this review will motivate more studies in this field.

Finally, it is worth mentioning that many other filters may potentially be applied to LDCT in a manner similar to that demonstrated in this review, and the NLM filter is essentially one of them. Because of their use of patches, NLM‐based strategies may yield better image quality than traditional filter‐based strategies. Recently, sparse dictionary learning104, 105 has drawn great interest; it also takes advantage of patches and can be considered an extension of the NLM algorithm.106 Successful applications of dictionary learning have been reported in the LDCT scenario for projection denoising,107, 108, 109 image denoising,110, 111, 112 and reconstruction.113, 114, 115 For instance, Chen et al.112 proposed an image‐domain artifact‐suppressed dictionary learning method to suppress the streak artifacts and noise in LDCT images, in which novel discriminative dictionaries were designed to cancel the streak artifacts in high‐frequency bands. Xu et al.114 used dictionary learning‐based sparsification as the regularization term in SIR for LDCT, and demonstrated superior performance in noise reduction and structural feature preservation. The other strategies reviewed in this paper may also be explored for dictionary learning in the future.

Conflict of interest

The authors have no relevant conflict of interest to disclose.

Acknowledgments

This work was supported in part by the National Natural Science Foundation of China under Grant Nos. 81371544 & 61571214, the National Science and Technology Major Project of the Ministry of Science and Technology of China under Grant No. 2014BAI17B02, the Guangdong Natural Science Foundation under Grant No. 2015A030313271, the Science and Technology Program of Guangdong, China under Grant No. 2015B020233008, the Science and Technology Program of Guangzhou, China under Grant No. 201510010039, and in part by the Scientific Research Foundation of Southern Medical University, Guangzhou, China under Grant No. CX2015N002. DZ was partially supported by China Postdoctoral Science Foundation funded project under Grant No. 2016M602489. HZ and ZL were partially supported by the National Institutes of Health (Nos. R01 CA143111 & R01 CA206171). JW was supported in part by the National Institutes of Health (No. R01 EB020366) and the American Cancer Society (No. RSG‐13‐326‐01‐CCE). The authors would like to thank Dr. Damiana Chiavolini for editing the paper. The authors also acknowledge anonymous reviewers for their constructive suggestions.

Contributor Information

Zhengrong Liang, Email: jerome.liang@stonybrook.edu.

Jianhua Ma, Email: jhma@sum.edu.cn.

References

- 1. Kalender WA. Dose in x‐ray computed tomography. Phys Med Biol. 2014;59:R129.

- 2. Brenner DJ, Hall EJ. Computed tomography—an increasing source of radiation exposure. N Engl J Med. 2007;357:2277–2284.

- 3. Hsieh J. Computed Tomography: Principles, Design, Artifacts, and Recent Advances. Bellingham, WA: SPIE press; 2009.

- 4. McCollough CH, Primak AN, Braun N, Kofler J, Yu L, Christner J. Strategies for reducing radiation dose in CT. Radiol Clin North Am. 2009;47:27–40.

- 5. Yu L, Liu X, Leng S, et al. Radiation dose reduction in computed tomography: techniques and future perspective. Imaging in Medicine. 2009;1:65–84.

- 6. Giraldo JR, Kelm ZS, Guimaraes LS, et al. Comparative study of two image space noise reduction methods for computed tomography: bilateral filter and nonlocal means. In: Annual International Conference of the Engineering in Medicine and Biology Society (EMBC). Piscataway, NJ: IEEE; 2009.

- 7. Kelm ZS, Blezek D, Bartholmai B, Erickson BJ. Optimizing non‐local means for denoising low dose CT. In: IEEE International Symposium on Biomedical Imaging: From Nano to Macro (ISBI). Piscataway, NJ: IEEE; 2009.

- 8. Chen Y, Chen W, Yin X, et al. Improving low‐dose abdominal CT images by weighted intensity averaging over large‐scale neighborhoods. Eur J Radiol. 2011;80:e42–e49.

- 9. Chen Y, Yang Z, Hu Y, et al. Thoracic low‐dose CT image processing using an artifact suppressed large‐scale nonlocal means. Phys Med Biol. 2012;57:2667.

- 10. Li Z, Yu L, Trzasko JD, et al. Adaptive nonlocal means filtering based on local noise level for CT denoising. Med Phys. 2014;41:011908.

- 11. Zheng X, Liao Z, Hu S, Li M, Zhou J. Improving spatial adaptivity of nonlocal means in low‐dosed CT imaging using pointwise fractal dimension. Comput Math Methods Med. 2013;2013:902143.

- 12. Hashemi S, Beheshti S, Cobbold RS, Paul NS. Non‐local total variation based low‐dose Computed Tomography denoising. In: 36th Annual International Conference of the Engineering in Medicine and Biology Society (EMBC). Piscataway, NJ: IEEE; 2014.

- 13. Jia X, Tian Z, Lou Y, Sonke J‐J, Jiang SB. Four‐dimensional cone beam CT reconstruction and enhancement using a temporal nonlocal means method. Med Phys. 2012;39:5592–5602.

- 14. Sauer K, Bouman C. A local update strategy for iterative reconstruction from projections. IEEE Trans Signal Process. 1993;41:534–548.

- 15. Fessler JA. Statistical image reconstruction methods for transmission tomography. In: Handbook of Medical Imaging, Vol. 2. Bellingham, WA: SPIE; 2000: 1–70.

- 16. Huang K, Zhang D, Wang K, Li M. Adaptive non‐local means denoising algorithm for cone‐beam computed tomography projection images. In: Fifth International Conference on the Image and Graphics (ICIG). Piscataway, NJ: IEEE; 2009.

- 17. Maier A, Wigström L, Hofmann HG, et al. Three‐dimensional anisotropic adaptive filtering of projection data for noise reduction in cone beam CT. Med Phys. 2011;38:5896–5909.

- 18. Manduca A, Yu L, Trzasko JD, et al. Projection space denoising with bilateral filtering and CT noise modeling for dose reduction in CT. Med Phys. 2009;36:4911–4919.

- 19. Hsieh J. Adaptive streak artifact reduction in computed tomography resulting from excessive x‐ray photon noise. Med Phys. 1998;25:2139–2147.

- 20. Demirkaya O. Reduction of noise and image artifacts in computed tomography by nonlinear filtration of projection images. Proc SPIE Med Imaging. 2001;4322:917–923.

- 21. Kachelriess M, Watzke O, Kalender WA. Generalized multi‐dimensional adaptive filtering for conventional and spiral single‐slice, multi‐slice, and cone‐beam CT. Med Phys. 2001;28:475–490.

- 22. Li T, Li X, Wang J, et al. Nonlinear sinogram smoothing for low‐dose X‐ray CT. IEEE Trans Nucl Sci. 2004;51:2505–2513.

- 23. La Rivière PJ. Penalized‐likelihood sinogram smoothing for low‐dose CT. Med Phys. 2005;32:1676–1683.

- 24. La Rivière PJ, Bian J, Vargas PA. Penalized‐likelihood sinogram restoration for computed tomography. IEEE Trans Med Imaging. 2006;25:1022–1036.

- 25. Wang J, Li T, Lu H, Liang Z. Penalized weighted least‐squares approach to sinogram noise reduction and image reconstruction for low‐dose X‐ray computed tomography. IEEE Trans Med Imaging. 2006;25:1272–1283.

- 26. Xu W, Mueller K. Efficient low‐dose CT artifact mitigation using an artifact‐matched prior scan. Med Phys. 2012;39:4748–4760.

- 27. Zhang H, Ma J, Wang J, et al. Adaptive nonlocal means‐regularized iterative image reconstruction for sparse‐view CT. In: IEEE Nuclear Science Symposium and Medical Imaging Conference (NSS/MIC). Piscataway, NJ: IEEE; 2014.

- 28. Sidky EY, Kao C‐M, Pan X. Accurate image reconstruction from few‐views and limited‐angle data in divergent‐beam CT. J X‐ray Sci Technol. 2006;14:119–139.

- 29. Chen G‐H, Tang J, Leng S. Prior image constrained compressed sensing (PICCS): a method to accurately reconstruct dynamic CT images from highly undersampled projection data sets. Med Phys. 2008;35:660–663.

- 30. Sidky EY, Pan X. Image reconstruction in circular cone‐beam computed tomography by constrained, total‐variation minimization. Phys Med Biol. 2008;53:4777. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31. Buades A, Coll B, Morel J‐M. A non‐local algorithm for image denoising. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Piscataway, NJ: IEEE; 2005. [Google Scholar]

- 32. Buades A, Coll B, Morel J‐M. A review of image denoising algorithms, with a new one. Multiscale Model Simul. 2005;4:490–530. [Google Scholar]

- 33. Kindermann S, Osher S, Jones PW. Deblurring and denoising of images by nonlocal functionals. Multiscale Model Simul. 2005;4:1091–1115. [Google Scholar]

- 34. Buades A, Coll B, Morel J‐M. Image enhancement by non‐local reverse heat equation. Preprint CMLA. 2006;22:2006.

- 35. Elmoataz A, Lezoray O, Bougleux S. Nonlocal discrete regularization on weighted graphs: a framework for image and manifold processing. IEEE Trans Image Process. 2008;17:1047–1060.

- 36. Coupé P, Yger P, Barillot C. Fast non local means denoising for 3D MR images. Med Image Comput Computer‐Assist Interv. 2006;9:33–40.

- 37. Adluru G, Tasdizen T, Schabel MC, DiBella EV. Reconstruction of 3D dynamic contrast‐enhanced magnetic resonance imaging using nonlocal means. J Magn Reson Imaging. 2010;32:1217–1227.

- 38. Manjón JV, Coupé P, Martí‐Bonmatí L, Collins DL, Robles M. Adaptive non‐local means denoising of MR images with spatially varying noise levels. J Magn Reson Imaging. 2010;31:192–203.

- 39. Manjón JV, Carbonell‐Caballero J, Lull JJ, García‐Martí G, Martí‐Bonmatí L, Robles M. MRI denoising using non‐local means. Med Image Anal. 2008;12:514–523.

- 40. Coupé P, Yger P, Prima S, Hellier P, Kervrann C, Barillot C. An optimized blockwise nonlocal means denoising filter for 3‐D magnetic resonance images. IEEE Trans Med Imaging. 2008;27:425–441.

- 41. Coupé P, Hellier P, Kervrann C, Barillot C. Nonlocal means‐based speckle filtering for ultrasound images. IEEE Trans Image Process. 2009;18:2221–2229.

- 42. Li L, Hou W, Zhang X, Ding M. GPU‐based block‐wise nonlocal means denoising for 3D ultrasound images. Comput Math Methods Med. 2013;2013:921303.

- 43. Chen Y, Ma J, Feng Q, Luo L, Shi P, Chen W. Nonlocal prior Bayesian tomographic reconstruction. J Math Imaging Vision. 2008;30:133–146.

- 44. Jie L. A non‐local means approach for PET image denoising. In: World Congress on Medical Physics and Biomedical Engineering. Berlin, Germany: Springer; 2009.

- 45. Dutta J, Leahy RM, Li Q. Non‐local means denoising of dynamic PET images. PLoS One. 2013;8:e81390.

- 46. Chan C, Fulton R, Barnett R, Feng DD, Meikle S. Postreconstruction nonlocal means filtering of whole‐body PET with an anatomical prior. IEEE Trans Med Imaging. 2014;33:636–650.

- 47. Chun SY, Fessler JA, Dewaraja YK. Post‐reconstruction non‐local means filtering methods using CT side information for quantitative SPECT. Phys Med Biol. 2013;58:6225.

- 48. Geman S, Geman D. Stochastic relaxation, Gibbs distributions, and the Bayesian restoration of images. IEEE Trans Pattern Anal Mach Intell. 1984;6:721–741.

- 49. Lou Y, Zhang X, Osher S, Bertozzi A. Image recovery via nonlocal operators. J Sci Comput. 2010;42:185–197.

- 50. Zhang H, Ma J, Wang J, Liu Y, Lu H, Liang Z. Statistical image reconstruction for low‐dose CT using nonlocal means‐based regularization. Comput Med Imaging Graph. 2014;38:423–435.

- 51. Thaipanich T, Kuo C‐CJ. An adaptive nonlocal means scheme for medical image denoising. In: Proc. SPIE Medical Imaging 7623. Bellingham, WA: SPIE; 2010: 76230M.

- 52. Mahmoudi M, Sapiro G. Fast image and video denoising via nonlocal means of similar neighborhoods. IEEE Signal Process Lett. 2005;12:839–842.

- 53. Nett B, Tang J, Aagaard‐Kienitz B, Rowley H, Chen G‐H. Low radiation dose C‐arm cone‐beam CT based on prior image constrained compressed sensing (PICCS): including compensation for image volume mismatch between multiple data acquisitions. Proc SPIE Medical Imaging. 2009;7258:725803–725812.

- 54. Ma J, Zhang H, Gao Y, et al. Iterative image reconstruction for cerebral perfusion CT using a pre‐contrast scan induced edge‐preserving prior. Phys Med Biol. 2012;57:7519.

- 55. Stayman JW, Dang H, Ding Y, Siewerdsen JH. PIRPLE: a penalized‐likelihood framework for incorporation of prior images in CT reconstruction. Phys Med Biol. 2013;58:7563.

- 56. Dang H, Wang A, Sussman MS, Siewerdsen J, Stayman J. dPIRPLE: a joint estimation framework for deformable registration and penalized‐likelihood CT image reconstruction using prior images. Phys Med Biol. 2014;59:4799.

- 57. Zhang H, Han H, Wang J, et al. Deriving adaptive MRF coefficients from previous normal‐dose CT scan for low‐dose image reconstruction via penalized weighted least‐squares minimization. Med Phys. 2014;41:041916.

- 58. Zhang H, Huang J, Ma J, et al. Iterative reconstruction for X‐ray computed tomography using prior‐image induced nonlocal regularization. IEEE Trans Biomed Eng. 2014;61:2367–2378.

- 59. Zhang H, Han H, Liang Z, et al. Extracting information from previous full‐dose CT scan for knowledge‐based Bayesian reconstruction of current low‐dose CT images. IEEE Trans Med Imaging. 2016;35:860–870.

- 60. Yan H, Zhen X, Cerviño L, Jiang SB, Jia X. Progressive cone beam CT dose control in image‐guided radiation therapy. Med Phys. 2013;40:060701.

- 61. Ma J, Huang J, Feng Q, et al. Low‐dose computed tomography image restoration using previous normal‐dose scan. Med Phys. 2011;38:5713–5731.

- 62. Xu W, Ha S, Mueller K. Database‐assisted low‐dose CT image restoration. Med Phys. 2013;40:031109.

- 63. Ha S, Mueller K. Low dose CT image restoration using a database of image patches. Phys Med Biol. 2015;60:869.

- 64. Zhang H, Wang J, Ma J, Lu H, Liang Z. Statistical models and regularization strategies in statistical image reconstruction of low‐dose X‐ray computed tomography: a survey. arXiv preprint arXiv:1412.1732; 2014.

- 65. Chen Y, Gao D, Nie C, et al. Bayesian statistical reconstruction for low‐dose X‐ray computed tomography using an adaptive‐weighting nonlocal prior. Comput Med Imaging Graph. 2009;33:495–500.

- 66. Chen Y, Li Y, Yu W, Luo L, Chen W, Toumoulin C. Joint‐MAP tomographic reconstruction with patch similarity based mixture prior model. Multiscale Model Simul. 2011;9:1399–1419.

- 67. Zhang H, Ma J, Liu Y, et al. Nonlocal means‐based regularizations for statistical CT reconstruction. Proc SPIE Med Imaging. 2014;9033:903337.

- 68. Zhang H, Ma J, Wang J, et al. Statistical image reconstruction for low‐dose CT using nonlocal means‐based regularization. Part II: an adaptive approach. Comput Med Imaging Graph. 2015;43:26–35.

- 69. Coupé P, Manjón JV, Fonov V, Pruessner J, Robles M, Collins DL. Patch‐based segmentation using expert priors: application to hippocampus and ventricle segmentation. NeuroImage. 2011;54:940–954.

- 70. Xu J, Tsui B. Quantifying the importance of the statistical assumption in statistical x‐ray CT image reconstruction. IEEE Trans Med Imaging. 2014;33:61–73.

- 71. Zhang H, Bian Z, Ma J, et al. Sparse angular X‐ray cone beam CT image iterative reconstruction using normal‐dose scan induced nonlocal prior. In: IEEE Nuclear Science Symposium and Medical Imaging Conference (NSS/MIC). Piscataway, NJ: IEEE; 2012.

- 72. Liang JZ, Ma J, Zhang H, Moore W, Han H. Iterative reconstruction for X‐Ray computed tomography using prior‐image induced nonlocal regularization, US Patent 20,160,055,658; 2016.

- 73. Wang J, Lu H, Liang Z, et al. An experimental study on the noise properties of x‐ray CT sinogram data in Radon space. Phys Med Biol. 2008;53:3327.

- 74. Ma J, Liang Z, Fan Y, et al. Variance analysis of x‐ray CT sinograms in the presence of electronic noise background. Med Phys. 2012;39:4051–4065.

- 75. Thibault J‐B, Bouman CA, Sauer KD, Hsieh J. A recursive filter for noise reduction in statistical iterative tomographic imaging. In: Proc. SPIE Electronic Imaging 6065. Bellingham, WA: SPIE; 2006: 60650X.

- 76. Jia X, Lou Y, Dong B, Tian Z, Jiang S. 4D computed tomography reconstruction from few‐projection data via temporal non‐local regularization. Med Image Comput Computer‐Assist Interv. 2010;2010:143–150.

- 77. Tian Z, Jia X, Dong B, Lou Y, Jiang SB. Low‐dose 4DCT reconstruction via temporal nonlocal means. Med Phys. 2011;38:1359–1365.

- 78. Li Z, Yu L, Leng S, et al. A robust noise reduction technique for time resolved CT. Med Phys. 2016;43:347–359.

- 79. Kazantsev D, Thompson WM, Van Eyndhoven G, et al. 4D‐CT reconstruction with unified spatial‐temporal patch‐based regularization. Inverse Probl Imaging. 2015;9:447–467.

- 80. Kazantsev D, Guo E, Kaestner A, et al. Temporal sparsity exploiting nonlocal regularization for 4D computed tomography reconstruction. J X‐ray Sci Technol. 2016;24:207–219.

- 81. Erdogan H, Fessler JA. Ordered subsets algorithms for transmission tomography. Phys Med Biol. 1999;44:2835.

- 82. Hudson HM, Larkin RS. Accelerated image reconstruction using ordered subsets of projection data. IEEE Trans Med Imaging. 1994;13:601–609.

- 83. Yu Z, Thibault J‐B, Bouman CA, Sauer KD, Hsieh J. Fast model‐based X‐ray CT reconstruction using spatially nonhomogeneous ICD optimization. IEEE Trans Image Process. 2011;20:161–175.

- 84. Ramani S, Fessler JA. A splitting‐based iterative algorithm for accelerated statistical X‐ray CT reconstruction. IEEE Trans Med Imaging. 2012;31:677–688.

- 85. Choi K, Wang J, Zhu L, Suh T‐S, Boyd S, Xing L. Compressed sensing based cone‐beam computed tomography reconstruction with a first‐order method. Med Phys. 2010;37:5113–5125.

- 86. Xia JQ, Lo JY, Yang K, Floyd CE Jr, Boone JM. Dedicated breast computed tomography: volume image denoising via a partial‐diffusion equation based technique. Med Phys. 2008;35:1950–1958.

- 87. Zhang H, Liu Y, Han H, et al. A comparison study of sinogram‐ and image‐domain penalized re‐weighted least‐squares approaches to noise reduction for low‐dose cone‐beam CT. In: Proc. SPIE Medical Imaging 8668. Bellingham, WA: SPIE; 2013: 86683E.

- 88. Deledalle C‐A, Duval V, Salmon J. Anisotropic non‐local means with spatially adaptive patch shapes. In: International Conference on Scale Space and Variational Methods in Computer Vision. Berlin, Germany: Springer; 2011.

- 89. Maleki A, Narayan M, Baraniuk RG. Anisotropic nonlocal means denoising. Appl Comput Harmon Anal. 2013;35:452–482.

- 90. Wu X, Liu S, Wu M, et al. Nonlocal denoising using anisotropic structure tensor for 3D MRI. Med Phys. 2013;40:101904.

- 91. Brox T, Kleinschmidt O, Cremers D. Efficient nonlocal means for denoising of textural patterns. IEEE Trans Image Process. 2008;17:1083–1092.

- 92. Darbon J, Cunha A, Chan TF, Osher S, Jensen GJ. Fast nonlocal filtering applied to electron cryomicroscopy. In: 5th IEEE International Symposium on Biomedical Imaging: From Nano to Macro (ISBI). Piscataway, NJ: IEEE; 2008.

- 93. Liu Y‐L, Wang J, Chen X, Guo Y‐W, Peng Q‐S. A robust and fast non‐local means algorithm for image denoising. J Comput Sci Technol. 2008;23:270–279.

- 94. Zhuang Z, Chen Y, Shu H, Luo L, Toumoulin C, Coatrieux J‐L. Fast low‐dose CT image processing using improved parallelized nonlocal means filtering. In: 2014 International Conference on Medical Biometrics (ICMB). Piscataway, NJ: IEEE; 2014.

- 95. Xu F, Mueller K. Accelerating popular tomographic reconstruction algorithms on commodity PC graphics hardware. IEEE Trans Nucl Sci. 2005;52:654–663.

- 96. Jia X, Lou Y, Li R, Song WY, Jiang SB. GPU‐based fast cone beam CT reconstruction from undersampled and noisy projection data via total variation. Med Phys. 2010;37:1757–1760.

- 97. Kole J, Beekman F. Evaluation of accelerated iterative x‐ray CT image reconstruction using floating point graphics hardware. Phys Med Biol. 2006;51:875.

- 98. Knaup M, Kalender WA, Kachelrieß M. Statistical cone‐beam CT image reconstruction using the cell broadband engine. In: IEEE Nuclear Science Symposium Conference Record. Piscataway, NJ: IEEE; 2006.

- 99. Yan H, Wang X, Shi F, et al. Towards the clinical implementation of iterative low‐dose cone‐beam CT reconstruction in image‐guided radiation therapy: cone/ring artifact correction and multiple GPU implementation. Med Phys. 2014;41:111912.

- 100. Li B, Lyu Q, Ma J, Wang J. Iterative reconstruction for CT perfusion with a prior‐image induced hybrid nonlocal means regularization: phantom studies. Med Phys. 2016;43:1688–1699.

- 101. Zhao W, Niu T, Xing L, et al. Using edge‐preserving algorithm with non‐local mean for significantly improved image‐domain material decomposition in dual‐energy CT. Phys Med Biol. 2016;61:1332.

- 102. Li Y, Hao J, Jin X, Zhang L, Kang K. Towards dose reduction for dual‐energy CT: a non‐local image improvement method and its application. Nucl Instrum Methods Phys Res, Sect A. 2015;770:211–217.

- 103. Zeng D, Huang J, Zhang H, et al. Spectral CT image restoration via an average image‐induced nonlocal means filter. IEEE Trans Biomed Eng. 2016;63:1044–1057.