Abstract

Anticipatory timing plays a critical role in many aspects of human and non-human animal behavior. Timing has been consistently observed in the range of milliseconds to hours, and demonstrates a powerful influence on the organization of behavior. Anticipatory timing is acquired early in associative learning and appears to guide association formation in important ways. Timing also participates in regulating goal-directed behaviors under many schedules of reinforcement, and plays a critical role in value-based decision making under concurrent schedules. In addition to playing a key role in fundamental learning processes, timing often dominates when temporal cues are available concurrently with other stimulus dimensions. Such control by the passage of time has even been observed when other cues provide more accurate information, and it can lead to sub-optimal behaviors. The dominance of temporal cues in governing anticipatory behavior suggests that time may be inherently more salient than many other stimulus dimensions. Discussions of the interface of the timing system with other cognitive processes are provided to demonstrate the powerful and primitive nature of time as a stimulus dimension.

Keywords: time perception, interval timing, classical conditioning, instrumental conditioning, choice

Timing is Everything

Humans and a range of non-human animals are highly sensitive to the passage of time (Lejeune & Wearden, 1991), and this sensitivity goes beyond simply recognizing if an individual is late to a meeting, or being aware of whether a child counted to ten too quickly in a game of hide-and-seek. Timing is involved in activities as disparate as the millisecond differentiation of continuous language into distinct words and sentences (see Mauk & Buonomano, 2004; Tallal, Miller, & Fitch, 1993), and the activity of our body’s hormonal cycles depending on the time of day (see Hastings, O’Neill, & Maywood, 2007). Timing is distributed across a wide range of brain regions, and different circuits are involved in processing different time scales. The sense of time is also unique due to its omnipresent nature. While closing one’s eyes or covering one’s ears allows for blocking visual or auditory stimulation, one cannot escape the passage of time – it is “the primordial context” (Gibbon, Malapani, Dale, & Gallistel, 1997, p. 170). As a result, the passage of time is inherently involved in multiple cognitive processes that collectively permit individuals to progress and function in daily life.

The goal of this review is to illuminate the involvement of temporal processing in multiple cognitive phenomena, coupled with discussions of many cases where temporal cues dominate in controlling behavior. While there are several recently published reviews of interval timing (e.g., Allman, Teki, Griffiths, & Meck, 2014; Buhusi & Meck, 2005; Coull, Cheng, & Meck, 2011; Grondin, 2010), there are no recent comprehensive reviews discussing the involvement of interval timing mechanisms across such a broad range of other cognitive processes. Therefore, the primary objective of the current review is to synthesize research across a range of phenomena for which the common denominator is interval timing, thus providing evidence for the inherent contribution of timing processes to basic aspects of cognition. Indeed, such an analysis may reveal that temporal perception and anticipation contribute in important ways to other, more commonly studied mechanisms. Accordingly, an incidental effect of this review may be the provision of relevant paradigmatic information to those researchers interested in implementing timing-based analyses in their research. Ultimately, the current review argues for a re-conceptualization of how the perception of time is understood and studied: it is not only a behavior to be analyzed, but also an explanatory mechanism that may ultimately account for behavior across multiple fields of psychological research.

Timing is Everywhere

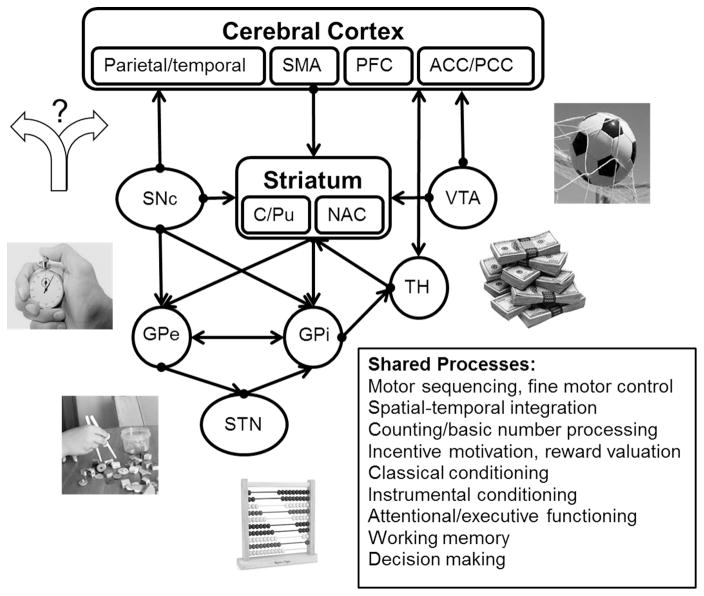

Temporal processing extends broadly across cortical and subcortical brain circuits (Morillon, Kell, & Giraud, 2009). The primary neural system implicated in core interval timing processes is the cortico-striatal-thalamic circuit (e.g., Coull et al., 2011; Gibbon et al., 1997; Matell & Meck, 2004), depicted in Figure 1, which consists of the basal ganglia, the nigrostriatal pathway from the substantia nigra pars compacta (SNc) to the caudate/putamen (C/Pu), and the mesolimbic pathway from the ventral tegmental area (VTA) to the nucleus accumbens (NAC) and pre-frontal cortex (PFC). Additional key regions associated with interval timing include the supplementary motor area (SMA), premotor cortex, medial agranular cortex, and parietal cortex (e.g., Coull & Nobre, 2008; Leon & Shadlen, 2003; Matell, Shea-Brown, Gooch, Wilson, & Rinzel, 2011; Rao, Mayer, & Harrington, 2001; Schwartze, Rothermich, & Kotz, 2012).

Figure 1.

Cortico-striatal loops comprising the timing system. The “Shared Processes” box reflects other cognitive processes that have been associated with these same regions of the timing system identified in the diagram, suggesting a strong interaction between various cognitive phenomena and interval timing. SMA = supplementary motor area; PFC = prefrontal cortex; ACC = anterior cingulate cortex; PCC = posterior cingulate cortex; SNc = substantia nigra pars compacta; C/Pu = caudate/putamen; NAC = nucleus accumbens; VTA = ventral tegmental area; GPe = external segment of the globus pallidus; GPi = internal segment of the globus pallidus; TH = thalamus; STN = subthalamic nucleus.

Of greater intrigue is the overlap between areas involved in interval timing and those involved in basic cognitive and psychological functions. For example, in conjunction with an associated role in interval timing, the mesolimbic pathway has been implicated in prediction error learning/classical conditioning and incentive motivation (e.g., Berridge & Robinson, 2003; Schultz, Dayan, & Montague, 1997), and the PFC has been implicated in working memory, attention, and decision-making (e.g., Miller & Cohen, 2001). As a result, dysregulation of any of these phenomena has the potential to affect interval timing, and vice versa. Therefore, temporal processing may stand as one of the most fundamental cognitive mechanisms. Consistent with this idea is accumulating evidence suggesting that time serves as a powerful determinant of a range of cognitive processes.

Involvement of Timing in Fundamental Cognitive Processes

Timing is critical for many cognitive processes, at a minimum, due to the arrow of time (Eddington, 1928), which refers to the sensation of forward movement in time. The arrow of time provides a differentiation of the past from the future, which is essential for cognitive functions that involve ordinal sequencing, coincidence detection, causal inference formation, and any other time-dependent coding of information. Indeed, Stephen Hawking, in his highly influential book, “A Brief History of Time,” proposed that the arrow of time is the basis of all other intellectual capabilities due to its foundational nature in disambiguating the past, present, and future (Hawking, 1988). To the extent that this is true, we would expect to see a major interface between the timing system and other cognitive systems, thereby reflecting the foundational role of the timing system in a broad range of cognitive processes.

Figure 1 provides an account of the most well documented shared systems, tying in those cognitive systems with the neural circuits discussed in the previous section. For example, motor sequencing and fine motor control are connected with timing in the basal ganglia and cerebellum (Ferrandez et al., 2003; Harrington, Lee, Boyd, Rapcsak, & Knight, 2004; Ivry & Keele, 1989; Lewis & Miall, 2003; Morillon et al., 2009; Stevens, Kiehl, Pearlson, & Calhoun, 2007; Tregellas, Davalos, & Rojas, 2006). The relationship between timing and motor control is apparent in Parkinson’s disease (see Ivry, 1996). Another shared process is counting and rhythm, which most likely relies on shared systems within cortical regions such as the prefrontal and parietal cortices (Harrington, Haaland, & Knight, 1998; Lewis & Miall, 2003; Morillon et al., 2009; Schubotz, Friederici, & von Cramon, 2000). Incentive motivation, reward valuation, basic conditioning, and aspects of decision making emerge through shared processes involving reward valuation circuitry (VTA to NAC and SNc to striatum; see Galtress, Marshall, & Kirkpatrick, 2012; Kirkpatrick, 2014; Schultz et al., 1997). Attentional and executive functioning are associated with frontal cortico-striatal circuits, which are also implicated in regulating attention to time (Coull, 2004; Coull, Vidal, Nazarian, & Macar, 2004; Ferrandez et al., 2003; Grondin, 2010; Meck & Benson, 2002; Zakay & Block, 2004).

The emergence of timing from several distributed neural systems presents opportunities for an interface of timing with multiple cognitive systems, as well as the availability of those cognitive systems for participation in timing processes. These shared cognitive and neural systems create the possibility for reciprocal relationships to occur between timing and other cognitive functions. The core timing system is comprised of separate neural systems dedicated to different aspects of functioning including systems for circadian timing, short interval (< 2 s) and motor timing, interval timing in the seconds to minutes range, temporal sequencing and counting, and temporal decisions (Carr, 1993; Coull et al., 2011; Dibner, Schibler, & Albrecht, 2010; Mauk & Buonomano, 2004; Morillon et al., 2009; Reppert & Weaver, 2002). From here forward, the core interval timing system, or time collating system (Morillon et al., 2009), will be the primary focus of this paper. However, the other systems are worth mentioning in this broad sense to show that the principles that we discuss in the interval timing system may be more broadly applicable across the whole of the timing system.

The time collating system is responsible for automatically tracking durations of events, regardless of whether individuals are actively engaged in timing. This system allows for prospective timing, when attention is engaged, as well as retrospective timing, when individuals may not explicitly track time (Zakay & Block, 1997). The prospective/retrospective component of the time collating system is associated with both attention and working memory. Attentional switching or gating is proposed to regulate attention used for prospective timing (Lejeune, 1998; Zakay & Block, 1996) and working memory likely plays a key role in retrospective timing (Block & Zakay, 1997; Zakay & Block, 2004), as well as the maintenance of temporal information during prospective timing (Brown, 1997). It appears that working memory and interval timing may rely not only on the same brain regions (including the basal ganglia, VTA, SNc, and PFC), but also on the same basic neural coding mechanisms, in which information regarding stimulus identity is extracted from particular cortical neurons that are associated with the representation, and duration-related information is derived from their relative phase (Lustig, Matell, & Meck, 2005). Overall, it appears that timing and working memory are highly interrelated.

The aforementioned research reflects the intricate relationship between timing mechanisms and a range of cognitive phenomena, suggesting that methods for assessing temporal processing capabilities belong in the toolboxes of a wide range of experimental psychologists. Otherwise, multiple explanations for seemingly discrepant behaviors will persist in the literature, even though a more overarching explanation for these behaviors in terms of interval timing mechanisms may be achievable. Thus, to more closely examine the general reciprocal role of timing with other cognitive processes, three examples are explored here in greater depth: classical conditioning/prediction error learning (emerging from the mesolimbic pathway), goal-directed actions in schedules of reinforcement (emerging from the nigrostriatal pathway), and decision making (emerging from cortical regions such as the PFC, anterior cingulate cortex, and posterior cingulate cortex).

Classical Conditioning and Prediction Error Learning

In a number of ways, Pavlov (1927) recognized the importance of timing processes in classical conditioning. He regularly reported responding in time bins, an approach that is more typical in the timing literature than in the classical conditioning field, and he wrote about time as a conditioned stimulus (CS) in his discussion of temporal conditioning. Temporal conditioning involves presentation of an unconditioned stimulus (US; e.g., food) at regular intervals (e.g., 1 min). Over the course of training, conditioned responding develops during the US-US interval and responding is non-random. Instead, conditioned responses (CRs) increase in frequency or amplitude as a function of time in the US-US interval (e.g., Kirkpatrick & Church, 2003).

Simple conditioning effects

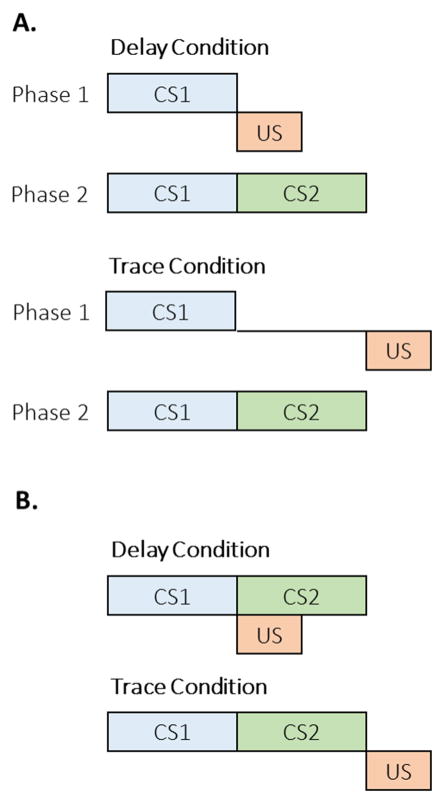

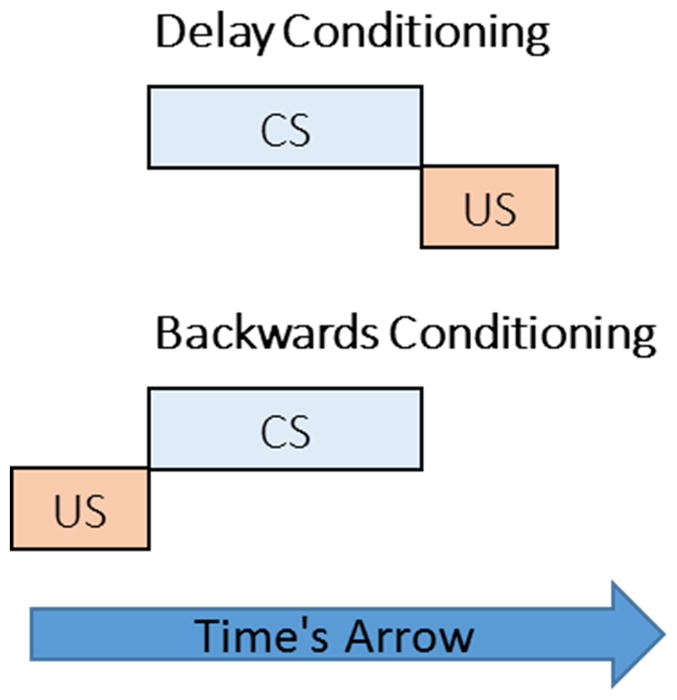

Given that classical conditioning involves the presentation of CSs and USs that unfold in time, it is not surprising that conditioning is inherently governed by temporal variables. For example, conditioning is most robust when the CS occurs before the US in paradigms such as delay conditioning, whereas conditioning is generally poor when the US occurs before the CS in backwards conditioning (Figure 2). This effect is known as priority in time and it is one of the most fundamental facets of conditioning. The arrow of time provides a means for differentiating the order of events in conditioning paradigms, and without it there would be no basis for the effects of priority in time on conditioning.

Figure 2.

The importance of time’s arrow in disambiguating the order of conditioned stimulus (CS) and unconditioned stimulus (US) deliveries in delay and backwards conditioning paradigms. Without a sense of the movement of time, it would not be possible to discern the ordinal difference between these two paradigms. The fact that delay conditioning results in superior conditioning compared to backwards conditioning in a wide variety of paradigms and species testifies to the importance of time’s arrow in basic conditioning.

Another important consideration is that CRs are timed appropriately at their earliest point of occurrence in a range of appetitive and aversive procedures in multiple species (Balsam, Drew, & Yang, 2002; Davis, Schlesinger, & Sorenson, 1989; Drew, Zupan, Cooke, Couvillon, & Balsam, 2005; Kehoe, Ludvig, Dudeney, Neufeld, & Sutton, 2008; Kirkpatrick & Church, 2000a; Ohyama & Mauk, 2001). The observation of CR timing at the start of learning indicates that learning whether the US will occur and learning when it will occur (in relation to the CS) most likely emerge in parallel and at a similar point in conditioning. This is not surprising given that the brain circuits implicated in associative learning (particularly the mesolimbic and nigrostriatal pathways) are also involved in interval timing processes (e.g., Coull et al., 2011; Kable & Glimcher, 2007, 2009; Kirkpatrick, 2014; Morillon et al., 2009; Waelti, Dickinson, & Schultz, 2001). Essentially, timing and associative learning emerge in parallel because these forms of learning most likely originate from shared cognitive and neural systems.

Another important temporal variable in conditioning is that interval durations directly affect the strength and/or probability of CR occurrence in simple conditioning (Holland, 2000; Kirkpatrick & Church, 2000a; Lattal, 1999), and this relationship is observed regardless of the events that cue the onset of the interval. The relationship also holds when comparing responding during the CS and intertrial intervals (ITIs) of different durations (Jennings, Bonardi, & Kirkpatrick, 2007; Kirkpatrick, 2002). While the mean interval duration primarily affects response rate, the variability of interval durations affects the pattern of responding in simple conditioning procedures. For example, random intervals lead to generally constant rates of responding, whereas fixed intervals lead to increasing rates of responding over the course of the CS-US interval (Kirkpatrick, 2002; Kirkpatrick & Church, 1998, 2000a, 2003, 2004).

A final critical factor of simple conditioning is the duration of the CS (trial, or T) relative to the ITI duration (I), the I:T ratio. Larger I:T ratios, in which T is proportionately shorter than I, promote faster acquisition of CRs. The effect of I:T ratios on conditioning has been proposed to occur through the same mechanism that produces differences in response rates as a function of interval duration (Kirkpatrick, 2002; Kirkpatrick & Church, 2003). Alternatively, Rate Estimation Theory (Gallistel & Gibbon, 2000) proposes that the I and T durations are each associated with an information value that is determined by the rate and pattern of reinforcement, with conditioning determined by the ratio of information values. Ultimately, classical conditioning, in terms of CR rate, CR patterns, and speed of acquisition, is critically governed by I:T ratios as well as the individual I and T values. In other words, as the same set of parameter values elicit individual differences in classical conditioning (see Gallistel, Fairhurst, & Balsam, 2004), classical conditioning is conceivably driven by an individual’s sensitivity to the absolute and relative durations of the CS and ITI. In many ways, this is not surprising given that most classical conditioning paradigms involve learning about events that unfold predictably in time. Accordingly, further research on classical conditioning should incorporate individuals’ sensitivities to the passage of time as a key factor in the analysis of psychological mechanisms of simple classical conditioning phenomena. In cases where timing analyses have been incorporated, it is clear that animals time important events even when the associative contingencies should discourage responding altogether, such as in paradigms with zero or negative contingencies (Kirkpatrick & Church, 2004; Williams, Lawson, Cook, Mather, & Johns, 2008), verifying the importance of including such analyses in associative learning studies.
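The I:T ratio described above is simple arithmetic, and a minimal sketch may help readers who want to compute it for their own procedures. The function name `it_ratio` and the durations are hypothetical and chosen only for illustration; the sketch computes the ratio itself and makes no prediction about acquisition speed.

```python
def it_ratio(iti_s: float, cs_s: float) -> float:
    """Return the I:T ratio: intertrial interval (I) divided by CS duration (T)."""
    if iti_s <= 0 or cs_s <= 0:
        raise ValueError("durations must be positive")
    return iti_s / cs_s


# Hypothetical (I, T) durations in seconds, for illustration only.
conditions = {"short CS": (120.0, 10.0), "long CS": (120.0, 40.0)}
ratios = {name: it_ratio(i, t) for name, (i, t) in conditions.items()}

# Doubling both I and T leaves the ratio unchanged.
scaled = it_ratio(240.0, 20.0)

print(ratios)  # {'short CS': 12.0, 'long CS': 3.0}
print(scaled)  # 12.0
```

Under the account in the text, the "short CS" condition, with the larger ratio, would be expected to support faster acquisition than the "long CS" condition.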

Cue integration and competition effects

In addition to these simpler forms of temporal effects on conditioning, complex learning involving multiple CSs is also strongly governed by temporal factors. One of the most striking effects comes from a substantial series of studies on temporal-map formation by Miller and colleagues. Figure 3A displays the design of the study by Cole, Barnet, and Miller (1995), in which a 5-s CS1 was paired with the US in either a delay or trace conditioning arrangement. In delay conditioning, the US immediately followed CS1 whereas in the trace condition, the US occurred following a 5-s gap. In a subsequent Phase 2, CS1 was immediately followed by a 5-s CS2 without any US deliveries, a second-order conditioning arrangement. The interesting facet of their design is that the delay condition resulted in better first-order conditioning in Phase 1, and thus should support better transfer of conditioning in Phase 2, according to associative learning principles. Instead, they found stronger conditioning to CS2 in the trace group. These and other related findings led to the proposal of the temporal encoding hypothesis (Arcediano & Miller, 2002; Savastano & Miller, 1998), which posits that the representations of CS1, CS2, and the US are combined into a temporal map. The temporal maps for the delay and trace groups are displayed in Figure 3B. In the trace condition, the representation of CS2 is in a forward contiguous relationship with the representation of the US, whereas in the delay condition, CS2 is in a simultaneous relationship with the US. Thus, CS2 leads to superior second-order conditioning in the trace conditioning arrangement due to the advantageous temporal map. Evidence for temporal map formation has been found in a range of conditioning paradigms including overshadowing, blocking, and conditioned inhibition paradigms (e.g., Barnet, Arnold, & Miller, 1991; Barnet, Cole, & Miller, 1997; Barnet, Grahame, & Miller, 1993; Barnet & Miller, 1996; Blaisdell, Denniston, & Miller, 1998; Cole et al., 1995). The formation of temporal maps indicates that time plays a fundamental role not only in simple conditioning, but also in the integration of key pieces of information across experiences.

Figure 3.

A. The design of a study on temporal encoding of durations by Cole, Barnet, and Miller (1995). The delay condition involved successive presentations of conditioned stimulus (CS) 1 and the unconditioned stimulus (US) in Phase 1 followed by CS1→CS2 presentations in Phase 2. The trace condition involved a 5-s gap between CS1 and US in Phase 1, followed by CS1→CS2 presentations in Phase 2. B. The proposed temporal map resulting from the exposure to the delay and trace conditions. Note that although the trace condition resulted in weaker conditioning in Phase 1, the resulting temporal map is more advantageous for promoting conditioned responding to CS2.
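The logic of the temporal map can be sketched by placing the events from both phases on a single timeline anchored at CS1 onset. This is an illustrative reconstruction of the design described in the text (5-s CSs, a 0-s or 5-s gap), not a model from the original studies; the names `Event`, `temporal_map`, and `cs2_us_relation` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    name: str
    onset: float   # seconds from CS1 onset
    offset: float  # seconds from CS1 onset


def temporal_map(gap_s: float, cs_s: float = 5.0):
    """Superimpose Phase 1 (CS1 -> US) and Phase 2 (CS1 -> CS2) on one timeline."""
    cs1 = Event("CS1", 0.0, cs_s)
    us_time = cs1.offset + gap_s                        # Phase 1: US after the gap
    cs2 = Event("CS2", cs1.offset, cs1.offset + cs_s)   # Phase 2: CS2 follows CS1
    return cs1, cs2, us_time


def cs2_us_relation(gap_s: float) -> str:
    """Classify where the US falls relative to CS2 in the integrated map."""
    _, cs2, us_time = temporal_map(gap_s)
    if us_time == cs2.onset:
        return "simultaneous"
    if us_time == cs2.offset:
        return "forward contiguous"
    return "other"


print(cs2_us_relation(0.0))  # delay condition: simultaneous
print(cs2_us_relation(5.0))  # trace condition: forward contiguous
```

With these durations, the expected US time coincides with CS2 onset in the delay condition and with CS2 offset in the trace condition, which is the asymmetry the temporal encoding hypothesis appeals to.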

Timing also contributes to cue competition paradigms such as overshadowing (Pavlov, 1927), where two CSs of different properties are associated with the same US. Here, the more salient CS usually results in more robust conditioning. Interestingly, in accordance with the strong relationship between classical conditioning and interval timing, the temporal properties of the CSs interact with overshadowing. Specifically, weaker overshadowing occurs with variable duration CSs than with fixed duration CSs (Jennings, Alonso, Mondragón, & Bonardi, 2011) and with longer duration CSs compared with shorter duration CSs (Fairhurst, Gallistel, & Gibbon, 2003; Hancock, 1982; Kehoe, 1983; but see Jennings et al., 2007; McMillan & Roberts, 2010), consistent with the idea that both shorter and less variable CSs (i.e., CSs that should be timed with more absolute precision) may be more salient due to their higher information value in predicting the US (Balsam, Drew, & Gallistel, 2010).

Temporal variables also influence cue competition within blocking paradigms (Kamin, 1968, 1969), which involves pre-training with a CS1→US pairing followed by later CS1+CS2→US pairings. For example, shifts in CS1 duration between phases attenuate blocking in some cases (Barnet et al., 1993; Schreurs & Westbrook, 1982), consistent with the temporal encoding hypothesis (but see Kohler & Ayres, 1979, 1982; Maleske & Frey, 1979). The relationship between CS durations may also affect blocking, with a longer CS1 blocking a shorter CS2 (Gaioni, 1982; Kehoe, Schreurs, & Amodei, 1981), but not vice versa (Jennings & Kirkpatrick, 2006), while other studies have reported little or no asymmetry in blocking (Barnet et al., 1993; Kehoe, Schreurs, & Graham, 1987) or the opposite result with stronger blocking by a shorter CS1 (Fairhurst et al., 2003; McMillan & Roberts, 2010). Despite mixed results, timing processes appear to play a key role in cue competition, consistent with an interaction between timing and associative learning processes. Thus, fundamental learning processes in classical conditioning paradigms are explained, at least in part, by interval timing mechanisms. Indeed, given the preceding discussion of the overlap between timing and conditioning neural pathways (Figure 1), such interactions are far from unexpected. Yet, the involvement of interval timing in associative learning mechanisms and their corresponding interactions represent only a minority of studies within the associative learning literature. Thus, further research is clearly needed to disentangle the nature of these interactions at both the behavioral and neurobiological levels, providing more information as to the fundamental role of interval timing in many classical conditioning phenomena.

Schedules of Reinforcement

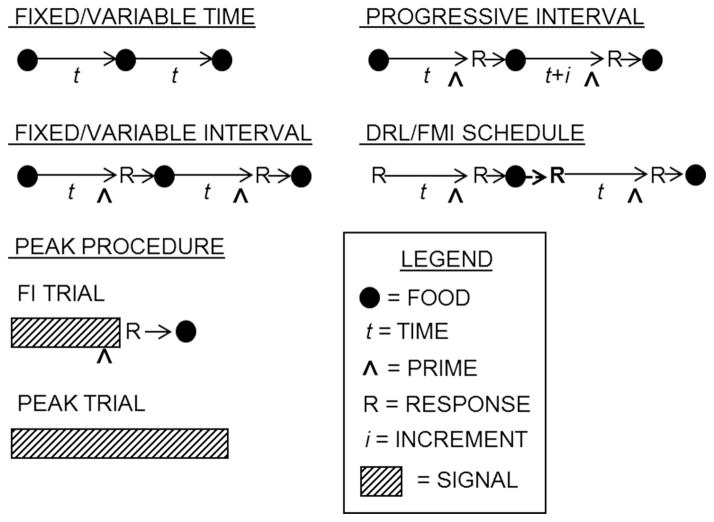

All schedules of reinforcement are time-based to varying degrees, as responses and reinforcers are emitted over time. Some schedules are explicitly time-based, including fixed time (FT), variable time (VT), fixed interval (FI), variable interval (VI), progressive interval, differential reinforcement of low rate (DRL), and fixed minimum interval (FMI) schedules. These schedules are diagrammed in Figure 4. FT and VT schedules do not require any responses, delivering outcomes after a fixed or variable criterion time, respectively. FT schedules are the same as temporal conditioning. FI and VI schedules require a response after a criterion time (t) since the last reinforcer. Progressive interval schedules are a variant on FI schedules, but with the criterion time incrementing from one reinforcer to the next. For example, a progressive interval 10-s schedule would start with a criterion time t (e.g., 10 s) and then increment by t for each subsequent interval. Finally, DRL and FMI schedules (e.g., Mechner & Guevrekian, 1962), or two-lever DRL schedules (Soffié & Lejeune, 1991), require response inhibition during select time periods. In a DRL schedule, individuals must wait for a criterion time between responses, and the clock resets if responses occur early (see the bold “R” in Figure 4). Thus, DRL schedules encourage the development of interresponse times (IRTs) that are longer than the criterion time. The FMI is a variant of the DRL where the response that starts the clock is different from the response required to obtain reinforcement. For example, a rat might have to press the left lever to initiate the interval and the right to collect the reinforcer. If the right lever is pressed too soon, then the interval resets.

Figure 4.

Common time-based schedules of reinforcement. In all procedures, events unfold in time, with specific delays marked by time, t. Food delivery is indicated by a filled circle. In schedules where food is response contingent, the time when food is primed is marked separately (^) from food delivery, and the time of the response that produces the food is similarly noted (R). Procedures involving discrete cues such as tones and lights are denoted by a signal marker (hatched bars). DRL = differential reinforcement of low rate; FMI = fixed minimum interval; FI = fixed interval.
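The contingencies of two of these schedules can be stated compactly in code. The following is a minimal sketch rather than a description of any particular laboratory's control software: the function names are hypothetical, and it assumes that the first response of a session only starts the DRL clock and is never itself reinforced.

```python
def drl_reinforced(response_times, criterion_s):
    """Return the response times that earn reinforcement on a DRL schedule.

    A response is reinforced only if at least `criterion_s` seconds have
    elapsed since the previous response. Every response restarts the clock,
    so an early response resets the interval.
    """
    reinforced = []
    last = None  # assumption: the first response only starts the clock
    for t in response_times:
        if last is not None and t - last >= criterion_s:
            reinforced.append(t)
        last = t
    return reinforced


def progressive_criteria(step_s, n_intervals):
    """Criterion times for a progressive interval schedule: t, 2t, 3t, ..."""
    return [step_s * k for k in range(1, n_intervals + 1)]


responses = [0.0, 4.0, 15.0, 20.0, 31.0]  # hypothetical response times (s)
print(drl_reinforced(responses, criterion_s=10.0))  # [15.0, 31.0]
print(progressive_criteria(10.0, 4))                # [10.0, 20.0, 30.0, 40.0]
```

In the example, the responses at 4 s and 20 s come too soon after the preceding response, so they earn nothing and reset the clock, whereas the responses at 15 s and 31 s follow interresponse times of 11 s and are reinforced.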

Given their inherent temporal structure, these aforementioned schedules of reinforcement have been widely used to study mechanisms of interval timing, ultimately revealing the intricate relationship between temporal processing and schedule-controlled behavior. One of the more common instrumental conditioning paradigms to study interval timing is the peak procedure (Roberts, 1981), in which discrete-trial FI trials are intermixed with peak trials (see Figure 4). On FI trials, a signal is presented and then food is primed after a target delay (e.g., 30 s). The first response after the prime results in food delivery and signal termination. Peak trials are the same as FI trials except that the signal is presented for longer than normal and responses have no consequence (i.e., there are no food deliveries). Responding on peak trials increases until the expected time of food delivery and then decreases thereafter (i.e., a “peak” in responding; Roberts, 1981). In addition, these peak functions display scalar variance (see, e.g., Leak & Gibbon, 1995), which is a hallmark of interval timing (Gibbon, 1977). Accordingly, anticipatory goal-directed behavior within schedules of reinforcement is strongly governed by time-based factors, suggesting that understanding subject-specific sensitivities to time may elucidate individual differences in various learning phenomena.
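Scalar variance means that the spread of the peak function grows in proportion to the timed interval, so peak functions for different targets superimpose when time is expressed relative to the target. The sketch below illustrates this property under stated assumptions: the Gaussian shape, the coefficient of variation of 0.25, and the maximum rate are illustrative choices, not values taken from the studies cited.

```python
import math


def peak_rate(t, target_s, cv=0.25, max_rate=60.0):
    """Gaussian-shaped response rate centered on the target time.

    Scalar variance is imposed by making the standard deviation
    proportional to the target: sd = cv * target_s.
    """
    sd = cv * target_s
    return max_rate * math.exp(-0.5 * ((t - target_s) / sd) ** 2)


# Evaluate two targets at the same relative times (t / target).
relative_times = [0.5, 0.75, 1.0, 1.25, 1.5]
short = [peak_rate(r * 15.0, 15.0) for r in relative_times]
long_ = [peak_rate(r * 60.0, 60.0) for r in relative_times]

# The two functions superimpose on a relative time axis.
superimpose = all(math.isclose(a, b) for a, b in zip(short, long_))
print(superimpose)  # True
```

If the standard deviation were instead held constant across targets, the two functions would no longer superimpose on the relative time axis.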

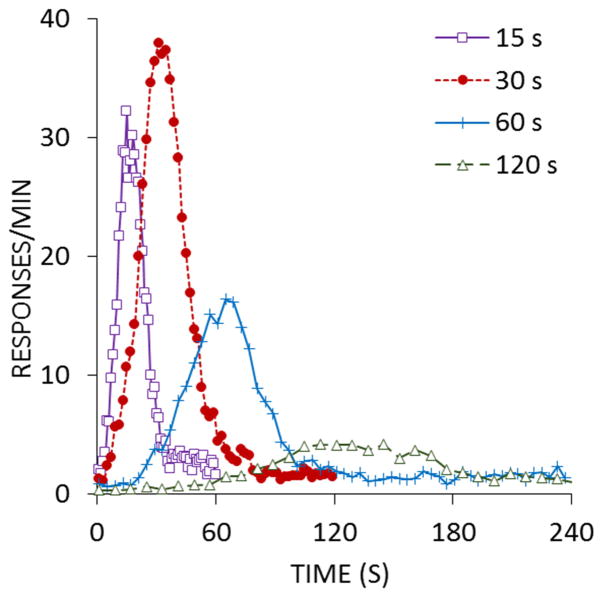

The peak procedure has also been implemented in the absence of any programmed response contingencies, thus producing a Pavlovian peak procedure. In the Pavlovian variant, a CS is followed by a US, usually in a delay conditioning arrangement (Figure 2), to induce CRs during the CS. Intermixed with the normal conditioning trials are peak trials in which the CS is presented for longer than normal. Figure 5 displays the results from a Pavlovian peak procedure from Kirkpatrick and Church (2000a; also see Balsam, Drew, & Yang, 2002). The response for the Pavlovian peak procedure was goal-tracking behavior, measured by the rate of responses to the food cup. The Pavlovian procedure yielded very similar patterns of behavior compared to the more common instrumental peak procedure (see, e.g., Roberts, 1981). The most striking difference is that interval duration has a more pronounced effect on response rate in the Pavlovian procedure, which most likely reflects the effect of the response contingency in maintaining response rates in the instrumental procedure. However, despite differences in response-reinforcer contingencies, the timing of responses is highly similar in the two procedures. While one explanation for these results is that different types of conditioning are similarly affected by temporal factors, it is more parsimonious to suggest that temporal processing and conditioning mechanisms are inseparably connected, such that conditioning and timing are not distinct phenomena, but two components of the same process. Accordingly, greater understanding of an individual’s temporal processing abilities will undeniably contribute to explaining that same individual’s ability to learn by association and trial-and-error.

Figure 5.

The rate of goal tracking responses (in responses per minute) as a function of time during peak trials in a Pavlovian peak procedure. Adapted from Kirkpatrick and Church (2000a).

In addition to studying behavior on fixed delay schedules, the peak procedure has been used to examine responding on variable interval schedules (Church, Lacourse, & Crystal, 1998). When intervals are uniformly distributed, so that there is an increasing hazard function (Evans, Hastings, & Peacock, 2000), response rates show a fairly characteristic peak, with the width of the peak dependent on the mean and variance of the uniform distribution (but see Harris, Gharaei, & Pincham, 2011). Thus, similar to what was discussed with Pavlovian conditioning, rats time even when intervals are variable in duration, and they are sensitive to fairly subtle differences in the distribution of intervals (Church & Lacourse, 2001). It is therefore clear that interval timing mechanisms are not only involved when the interval separating two events is fixed; instead, timing processes are broadly engaged whenever there are delays to important events, whether or not those delays are fixed.
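The increasing hazard function for uniformly distributed intervals follows directly from its definition as the probability density of reinforcement at time t divided by the probability that reinforcement has not yet occurred. A minimal sketch, with a hypothetical function name and hypothetical bounds of 0 and 60 s:

```python
def uniform_hazard(t, low, high):
    """Hazard of a uniform(low, high) interval at elapsed time t.

    hazard = density / survival = (1 / (high - low)) / ((high - t) / (high - low)),
    which simplifies to 1 / (high - t).
    """
    if not (low <= t < high):
        raise ValueError("t must satisfy low <= t < high")
    density = 1.0 / (high - low)
    survival = (high - t) / (high - low)
    return density / survival


times = [0.0, 15.0, 30.0, 45.0]  # elapsed time in seconds
hazards = [uniform_hazard(t, 0.0, 60.0) for t in times]
print(hazards)
```

The hazard rises from 1/60 at the start of the interval to 1/15 at 45 s: the longer the interval has run without reinforcement, the more imminent reinforcement becomes.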

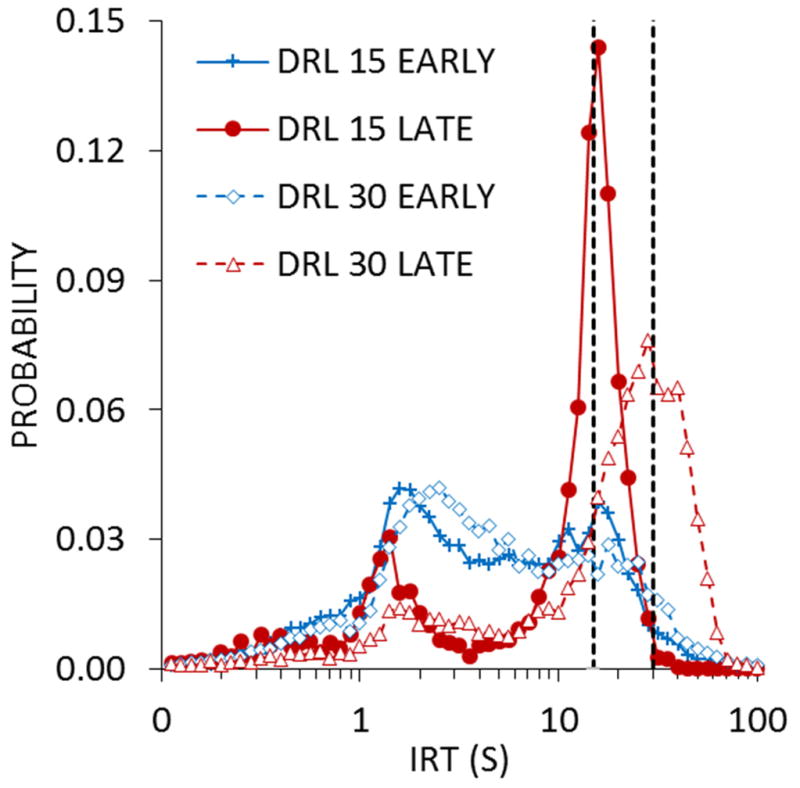

While timing on most interval schedules involves examining response rates, the most common metric in DRL and FMI schedules is the distribution of IRTs (Droit, 1994; Ellen, Wilson, & Powell, 1964; Soffié & Lejeune, 1992). The distribution of IRTs has been shown to be roughly centered on the criterion time for reinforcement (Kramer & Rilling, 1970) or systematically later (Wearden, 1990; also see Balci et al., 2011), and IRTs become more variable as the criterion time increases (Jasselette, Lejeune, & Wearden, 1990; Richardson & Loughead, 1974; Sanabria & Killeen, 2008). As the IRT criterion is lengthened, the peak in responding may fall short of the criterion time, reflecting the increase in difficulty in inhibiting responding for longer durations (Doughty & Richards, 2002; Pizzo, Kirkpatrick, & Blundell, 2009; Richards, Sabol, & Seiden, 1993; Richards & Seiden, 1991). In that respect, performance on DRL/FMI schedules is similar to peak timing performance, where responses congregate around the time of reinforcement and their temporal variability is generally proportional to the time to reinforcement (Roberts, 1981). These schedules are also often used to measure response inhibition capacity (Bardo, Cain, & Bylica, 2006; Hill, Covarrubias, Terry, & Sanabria, 2012; Sanabria & Killeen, 2008) and depressive-like properties of behavior (O’Donnell & Seiden, 1983). Accordingly, these procedures provide potential explanatory links between inhibitory and interval timing processes. An example of this connection is shown in Figure 6, which displays IRT distributions for 15- and 30-s criteria as a function of sessions of training. In this study, rats were trained to lever press on DRL schedules with the two different criteria and their distribution of IRTs was measured over the course of training (Pizzo et al., 2009). The IRTs early in training were generally short, peaking at around 1–2 s, which is consistent with the bout-like nature of lever pressing (Shull, Gaynor, & Grimes, 2001, 2002; Shull & Grimes, 2003; Shull, Grimes, & Bennett, 2004). Over time, however, the short IRTs were suppressed, while the IRTs surrounding the criterion time increased in frequency, which can be seen by comparing the early and late IRT functions for the two DRL criteria.

Figure 6.

Inter-response time (IRT) distributions in log-spaced bins as a function of sessions of training for differential reinforcement of low rate (DRL) criteria of 10 or 30 s. The early distributions were from sessions 1–2 of training, and the late distributions, from sessions 9–10. The vertical dashed lines denote the IRT criterion in effect for the DRL schedules. Adapted from Pizzo, Kirkpatrick, and Blundell (2009).
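
To make the log-spaced binning of IRTs concrete, the following Python sketch builds bin edges that are equally spaced on a logarithmic scale and converts a list of IRTs into relative frequencies. The function names, the 1–64 s range, and the sample IRTs are hypothetical and serve only to illustrate the general approach; they do not reproduce the analysis in the cited study.

```python
def log_spaced_edges(lo, hi, n_bins):
    """Return n_bins + 1 edges spaced by a constant ratio between lo and hi."""
    ratio = (hi / lo) ** (1.0 / n_bins)
    return [lo * ratio ** i for i in range(n_bins + 1)]


def irt_relative_frequencies(irts, edges):
    """Proportion of IRTs falling in each half-open bin [edge_i, edge_i+1)."""
    counts = [0] * (len(edges) - 1)
    for irt in irts:
        for i in range(len(counts)):
            if edges[i] <= irt < edges[i + 1]:
                counts[i] += 1
                break
    total = sum(counts)
    return [c / total for c in counts] if total else [0.0] * len(counts)


# Hypothetical data: two short burst-like IRTs and two longer IRTs (seconds).
edges = log_spaced_edges(1, 64, 6)            # approximately 1, 2, 4, ..., 64
freqs = irt_relative_frequencies([1.5, 1.5, 10, 40], edges)
```

Log-spaced bins give short, burst-like IRTs and long, criterion-length IRTs comparable resolution on the same axis, which is useful when variability grows with the duration being timed.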

The short IRTs are an indicator of impulsive behaviors (Cheng, MacDonald, & Meck, 2006; Jentsch & Taylor, 1999; Peterson, Wolf, & White, 2003; Sanabria & Killeen, 2008) and difficulty in inhibiting short IRTs on tasks such as DRL schedules can serve as a marker of poor executive functioning (Solanto, 2002), further demonstrating the link between timing and executive processes. Therefore, the schedules of reinforcement that have been traditionally used to determine how well individuals can time the separation between their responses (i.e., DRL, FMI) may also provide significant insight into inhibitory and executive processes, ultimately reflecting the core connection between temporal processing and multiple fundamental cognitive phenomena. For example, deficits in executive functioning and inhibitory processes may be explained, at least in part, by deficits in temporal processing, a factor that deserves further attention in future research.

Translational applications

While much of the previously discussed research has been conducted in non-human animals, there are equivalent time-based schedules that are used for human participants including FI (Baron, Kaufman, & Stauber, 1969), DRL (Gordon, 1979; also see Çavdaroğlu, Zeki, & Balcı, 2014), and FMI (also known as a temporal production task; Bizo, Chu, Sanabria, & Killeen, 2006; Carrasco, Guillem, & Redolat, 2000). Additionally, variations on the peak procedure (Rakitin et al., 1998) have also been developed for measuring human timing. A more recently developed human timing task, called the beat-the-clock task, is designed so that participants are rewarded for making a single response as close as possible to the end of an interval; the more closely they respond to interval termination, the greater the reward (Simen, Balcı, deSouza, Cohen, & Holmes, 2011). In general, human performance on all of the tasks with animal analogs closely mirrors timing behavior in non-human animals, although humans often show slightly less variability in their timing (Buhusi & Meck, 2005). However, when experimental instructions are removed and consummatory responses are required, humans then behave much like non-human animals in various schedules of reinforcement (see Matthews, Shimoff, Catania, & Sagvolden, 1977). Thus, future experiments analyzing various cognitive phenomena in human participants could easily include an evaluation of temporal processing capabilities, as individual or group differences in other cognitive behaviors may be elucidated and perhaps even explained by individual differences in interval timing. This would be beneficial for determining whether the interface between the timing system and other systems, such as executive functioning, holds across species.

Decision Making

Arguably, daily life is a continual experience of, and selection between, multiple concurrent (i.e., simultaneously presented) schedules of reinforcement, reflecting the classic problem of behavioral allocation. Accordingly, given the innate relationship between temporal processing and multiple fundamental cognitive phenomena within schedules of reinforcement, it should not be surprising that temporal processing is critically involved in choices between schedules of reinforcement that unfold in time. Indeed, choice behavior in the laboratory is typically studied with more complex instrumental conditioning schedules that involve two or more concurrently available options with potentially different outcomes. As with simpler conditioning procedures, many choice procedures involve events that unfold in time, and differences in delays may form a vital component of the decision process. In these cases, one would expect to see a central function for timing processes in decision making.

For example, impulsive choice (also referred to as delay discounting or intertemporal choice) procedures involve delivering choices between a smaller reward that is available sooner (the SS outcome) versus a larger reward that is available after a longer delay (the LL outcome). Choices of the SS are usually a marker of impulsive choice behavior, particularly when choosing the SS results in relatively fewer overall rewards earned. Thus, impulsive choice involves a trade-off between delay and amount. The predominant model of impulsive choice is the hyperbolic discounting model, $V = A/(1 + kD)$, which proposes that subjective value, V, decays from the veridical amount, A, as a function of delay, D, a process known as delay discounting. The rate of discounting is determined by the parameter k, and impulsive choice/k-values have been proposed to serve as a stable trait variable in both humans (Baker, Johnson, & Bickel, 2003; Jimura et al., 2011; Johnson, Bickel, & Baker, 2007; Kirby, 2009; Matusiewicz, Carter, Landes, & Yi, 2013; Ohmura, Takahashi, Kitamura, & Wehr, 2006; Peters & Büchel, 2009) and rats (Galtress, Garcia, & Kirkpatrick, 2012; Garcia & Kirkpatrick, 2013; Marshall, Smith, & Kirkpatrick, 2014).
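
The trade-off between delay and amount in the hyperbolic model, V = A/(1 + kD), can be sketched numerically. The Python example below uses the 10-s, 1-pellet SS and 30-s, 2-pellet LL pairing that appears in the intervention study discussed later in this section; the function names and the particular k-values are hypothetical and chosen only to show how the preferred option depends on the discounting rate.

```python
def hyperbolic_value(amount, delay, k):
    """Subjective value under hyperbolic discounting: V = A / (1 + k * D)."""
    return amount / (1.0 + k * delay)


def indifference_k(ss_amount, ss_delay, ll_amount, ll_delay):
    """Discounting rate at which the SS and LL outcomes have equal value.

    Obtained by setting A_ss / (1 + k*D_ss) equal to A_ll / (1 + k*D_ll)
    and solving for k. Assumes A_ss * D_ll > A_ll * D_ss so that k > 0.
    """
    return (ll_amount - ss_amount) / (ss_amount * ll_delay - ll_amount * ss_delay)


# SS: 1 pellet after 10 s; LL: 2 pellets after 30 s.
k_star = indifference_k(1, 10, 2, 30)

# Hypothetical low and high discounting rates on either side of k_star.
low_k_prefers_ll = hyperbolic_value(2, 30, 0.05) > hyperbolic_value(1, 10, 0.05)
high_k_prefers_ss = hyperbolic_value(1, 10, 0.5) > hyperbolic_value(2, 30, 0.5)
```

Under these assumptions, an individual with a k-value below the indifference point would favor the LL outcome, whereas one with a higher k-value would favor the SS outcome, which is the sense in which k indexes impulsive choice.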

Recent research has begun examining the underlying mechanisms of impulsive choice and there is growing evidence implicating core timing processes as a key underlying cognitive component to impulsive choice behavior. In humans, impulsive individuals overestimate interval durations (Baumann & Odum, 2012) and exhibit earlier start times on FI schedules (Darcheville, Rivière, & Wearden, 1992). Similarly, interval timing mechanisms have been implicated in delayed gratification tasks (McGuire & Kable, 2012, 2013), in which an individual can choose to accept a smaller reward at any time prior to the availability of a delayed larger reward. Specifically, decisions to forego waiting longer for the larger reward have been suggested to reflect the subjective belief that the longer an individual has already waited for the larger reward, the longer s/he will have to keep waiting, whereas decisions to accept the smaller reward more quickly (i.e., wait for shorter durations before choosing the smaller reward) have been suggested to reflect faster internal clocks (see McGuire & Kable, 2013).

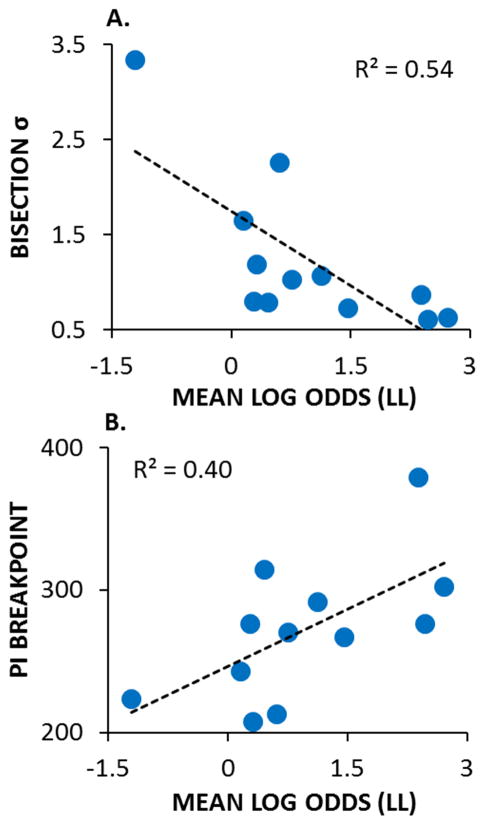

Additionally, research with rats has indicated that temporal discrimination/temporal precision abilities may play a key role in impulsive choice/delay discounting (Marshall et al., 2014; McClure, Podos, & Richardson, 2014). Specifically, McClure et al. (2014) found that the rats that timed with greater precision in a peak procedure (i.e., narrower peak functions) also made fewer impulsive choices. In addition, Marshall et al. (2014) tested rats on an impulsive choice procedure and conducted additional measurements of timing and delay aversion using a temporal bisection task (Church & Deluty, 1977) and a progressive interval schedule, respectively. Figure 7A displays the correlational patterns for individual rats in their study. Rats with higher standard deviations of their bisection functions, indicating poorer temporal discrimination, displayed greater impulsive choice (lower LL choices). These rats also displayed more delay intolerance, with lower breakpoints on a progressive interval schedule indicating that they gave up sooner when the delay was increased (Figure 7B). This pattern of results indicates a strong interrelationship between interval timing processes, delay tolerance, and impulsive choice, and suggests that core timing processes may play a critical role in the fundamental cognitive process of decision making in time-based tasks. Accordingly, future research on impulsive choice and decision making phenomena may benefit from real-time assessments of temporal processing ability, instead of simply reporting sensitivity to time through fitting choice data with modifications of the hyperbolic discounting model described above (Myerson & Green, 1995).

Figure 7.

A. The relationship between the log odds of larger-later (LL) choices, an indicator of impulsivity (with lower scores indicating more impulsive choices) and the standard deviation (σ) of the temporal bisection function (with higher values indicating poorer timing precision/temporal discrimination). B. The relationship between the log odds of LL choices and the progressive interval breakpoint indicating delay tolerance, with higher breakpoints associated with greater delay tolerance. Overall, poorer timing precision, poorer delay tolerance, and greater impulsive choice were inter-correlated. Adapted from Marshall, Smith, and Kirkpatrick (2014).

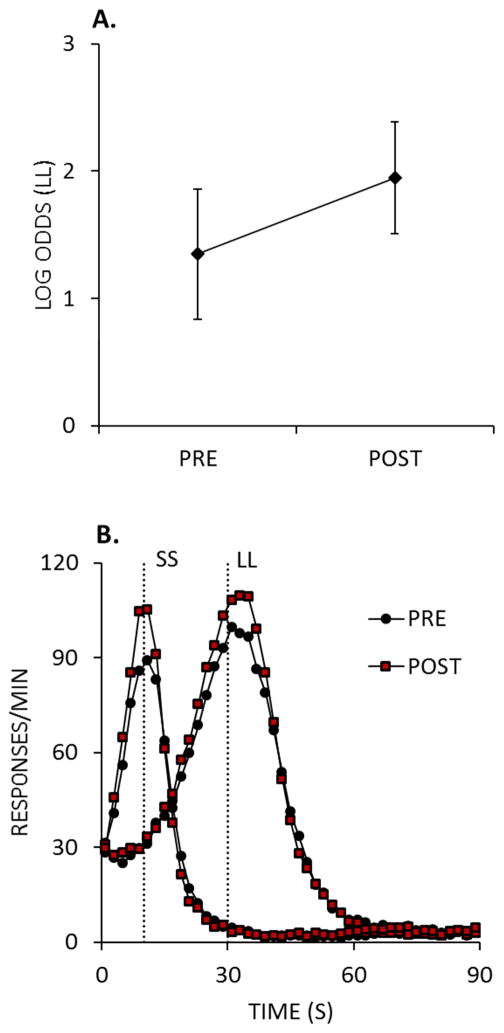

Following on from these findings, Smith, Marshall, and Kirkpatrick (2015) examined whether time-based interventions could mitigate impulsive choice by delivering FI, VI, and DRL schedules between a pre- and post-intervention assessment of impulsive choice behavior. All three schedules decreased post-intervention impulsive choices in normal (Sprague-Dawley) rats, and the effects of the FI and VI schedules were weaker and shorter-lived in Lewis rats, a potential animal model of disordered impulsive choice (Anderson & Diller, 2010; Anderson & Woolverton, 2005; Garcia & Kirkpatrick, 2013; García-Lecumberri et al., 2010; Huskinson, Krebs, & Anderson, 2012; Madden, Smith, Brewer, Pinkston, & Johnson, 2008; Stein, Pinkston, Brewer, Francisco, & Madden, 2012). The effects of the DRL schedule on choice behavior are shown in Figure 8A, where rats were tested for choices of a 10-s, 1 pellet SS versus a 30-s, 2 pellet LL before (PRE) and after (POST) the intervention. In addition to decreasing impulsive choices, the intervention also improved timing behavior, as shown in Figure 8B. Here, the main effects were to decrease the standard deviation (width) of the peak and increase peak rate. These effects on the peak are reflective of improvements in temporal discrimination/timing precision, consistent with the correlational patterns in Figure 7A. The combined results suggest that good temporal discrimination abilities are critical for being able to wait for outcomes that are delayed, which may ultimately relate to making better-informed choices. Accordingly, temporal processing abilities may explain individual differences not only in subjective optimality but also in objective optimality in terms of rewards earned per unit time, reflecting the central notion of the innate involvement of temporal processing mechanisms in basic cognitive processes (i.e., decision making).

Figure 8.

A. The log odds of making larger-later (LL) choices during the pre- and post-intervention tests of impulsive choice. B. Response rates of lever pressing on peak trials as a function of time. Peak trials were administered during the choice task during the pre- and post- intervention tests of impulsive choice. Adapted from Smith, Marshall, and Kirkpatrick (2015).

The central involvement of interval timing in a cognitive phenomenon as fundamental as decision-making between differentially delayed outcomes has been supported by recent proposals that hyperbolic discounting is inherently rooted in the scalar property of interval timing. Specifically, the hyperbolic delay discounting model and the results from impulsive choice procedures indicate that increases in the delay to reward reduce the subjective value of reward. The function relating delay to subjective value is best approximated by a hyperbolic function. In his seminal paper, Gibbon (1977) derived a hyperbolic expectancy function for the expectancy of reward (h) over time (t) from scalar timing processes by proposing that $h_t = H/(x - t)$, where H is the incentive value of the reward, x is the expected delay to reward, and t is the time elapsed since the start of the delay, so that x − t is the time remaining until reward delivery. Gibbon’s solution proposes that the value of H, which is essentially the same as A in the hyperbolic discounting equation, is spread over the time remaining in the interval. This relates to the scalar property because shorter delays would result in comparatively less spread of value than longer delays. At the start of the delay ($t = 0$), $h_t$ is equal to the overall reinforcement rate associated with that delay (amount/delay); as time proceeds in the interval, expectancy increases hyperbolically as the expected time of reward approaches. It is worth noting that this formulation is a precursor to the later Gibbon and Balsam (1981) model that eventually led to the development of Rate Estimation Theory (Gallistel & Gibbon, 2000) described above. Thus, Gibbon’s solution is relevant not only to choice behavior, but also to reinforcer valuation in basic conditioning and learning paradigms. This connection demonstrates the fundamental interconnection between timing processes and a variety of basic learning phenomena.
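
The expectancy function can be evaluated directly from the expression H/(x − t). The Python sketch below takes t to be the time elapsed since the start of the delay, so that x − t is the time remaining; the function name and the values (a 2-pellet reward expected after 30 s) are hypothetical and intended only to show the shape of the function implied by the equation as written.

```python
def expectancy(incentive, expected_delay, elapsed):
    """Expectancy h_t = H / (x - t), with t measured from delay onset."""
    if not 0 <= elapsed < expected_delay:
        raise ValueError("elapsed must satisfy 0 <= elapsed < expected_delay")
    return incentive / (expected_delay - elapsed)


# Hypothetical case: H = 2 pellets, x = 30 s.
h_start = expectancy(2, 30, 0)     # equals amount / delay at delay onset
h_middle = expectancy(2, 30, 15)   # same incentive spread over 15 s remaining
h_late = expectancy(2, 30, 29)     # same incentive spread over 1 s remaining
```

With these values, the expectancy at delay onset equals the overall reinforcement rate (2/30 pellets per second) and then rises as the same incentive value is spread over a progressively shorter remaining time.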

Gibbon’s original account has been expanded upon more recently by Cui (2011). The key connection in Cui’s derivation is through Weber’s law, which is the fundamental tenet behind the scalar property of interval timing. Cui relies on the observation that subjective judgments of quantities, in this case the amount of reward and the delay to reward, are determined by Weber fractions a and b, respectively. This means that both amount and time are subject to scalar variance. Drawing on this principle, the overall subjective value of a delayed reward, V, at time t, can be found given the following explicit solution: $V_t = (1 - a)^{\ln(t)/\ln(1 + b)}$, producing diminishing reductions in subjective value as a function of time, in accordance with hyperbolic discounting (see Mazur, 1987; Myerson & Green, 1995; Rodriguez & Logue, 1988). In this equation, time is logarithmically scaled [$\ln(t)$], consistent with proposals that a logarithmic representation of time is responsible for hyperbolic discounting and the preference reversals in choice behavior that are observed at differential delays (see Takahashi, 2005; Takahashi, Han, & Nakamura, 2012; Takahashi, Oono, & Radford, 2008).

Thus, hyperbolic discounting can be derived from the scalar property of interval timing, further linking timing and choice phenomena and providing an explanation for the relationship between temporal discrimination/precision and basic decision making. Indeed, some individuals with temporal processing deficits also exhibit decision making deficits, such as drug addicts (Bickel & Marsch, 2001; Wittmann, Leland, Churan, & Paulus, 2007), schizophrenics (Allman & Meck, 2012; Heerey, Robinson, McMahon, & Gold, 2007), and attention deficit hyperactivity disorder patients (Barkley, Edwards, Laneri, Fletcher, & Metevia, 2001; Toplak, Dockstader, & Tannock, 2006), lending further support to these proposed links.

Time as an Explanatory Mechanism

The research described thus far has demonstrated the role of core timing processes in a variety of time-based learning paradigms. If time is as fundamental as the aforementioned results suggest, then time might be expected to exert strong stimulus control, perhaps even superseding discrete cues such as auditory and/or visual stimuli. Such observations would further implicate the timing system as primitive and foundational for other cognitive functions.

The Dominance of Temporal Cues

One of the seminal studies demonstrating the power of time as a cue was conducted by Williams and LoLordo (1995). Within a fear conditioning paradigm, conditioning to a tone CS was blocked by temporal cues, but temporal cues were not blocked by the tone CS. This asymmetry suggested that time may govern behavior to a greater degree than a discrete cue such as an auditory tone within an aversive paradigm. Kirkpatrick and Church (2000b) extended the findings of Williams and LoLordo (1995) to an appetitive paradigm; temporal cues employed in initial training continued to control behavior more than subsequently-introduced auditory stimulus cues, even though the auditory cues were more contiguous with food delivery (also see Goddard & Jenkins, 1988). Therefore, a discrete stimulus that was more predictive of reward delivery was superseded in terms of stimulus control by a distant temporal cue (or, time marker).

Furthermore, Caetano, Guilhardi, and Church (2012) presented rats with three different FI schedules signaled by distinct stimuli (e.g., houselight → FI 30 s; white noise → FI 60 s; clicker stimulus → FI 120 s). In one experiment, each session involved 60 presentations of one of the stimulus-FI pairings, and each session employed a different stimulus-FI pairing relative to the prior session (e.g., Session 1: houselight → FI 30 s; Session 2: clicker stimulus → FI 120 s). At the end of each of the sessions, the rats’ timing behavior was indicative of sensitivity to the current FI schedule. However, despite the extensive training with each of the stimulus-FI pairings, the rats’ responding early in the sessions did not differ across FI schedules. In other words, the rats’ absolute response gradients across FI schedules were approximately superposed early in each of the sessions, but became more differentiated in accordance with the current FI schedule as the session progressed. Ultimately, these results suggest that the particular stimulus that reliably predicted the current FI schedule in each session did not control behavior as much as the time-based nature of the task itself did. In accordance with Williams and LoLordo (1995), Caetano et al. (2012) suggested that temporal memories regarding food delivery may exceed other relevant stimuli in salience. Therefore, in conjunction with the results described above, this result supports the strong control of time over behavior and the considerable involvement of temporal processing in multiple cognitive phenomena.

Simultaneous Temporal Processing

Based on the superiority of time-based cues, it could be assumed that with a greater number of effective time markers present, the uncertainty in estimating the time to reward delivery should substantially decrease. Previous research has shown that non-human animals, such as rats and pigeons, are able to combine information conveyed by multiple time markers to predict the time of food delivery (Church, Guilhardi, Keen, MacInnis, & Kirkpatrick, 2003; Guilhardi, Keen, MacInnis, & Church, 2005; Kirkpatrick & Church, 2000a; Leak & Gibbon, 1995; MacInnis, 2007; MacInnis, Marshall, Freestone, & Church, 2010; Meck & Church, 1984). Such time-based cue integration occurs in simultaneous temporal processing paradigms where overlapping intervals are signaled by different time markers (e.g., Church et al., 2003; Kirkpatrick & Church, 2000a; Meck & Church, 1984). The ability to combine multiple cues of temporal information without the consequence of information overload provides support for the involvement of temporal processing in basic cognitive phenomena.

While the analysis of interval timing has primarily focused on the timing of a single interval, simultaneous timing occurs in many natural and artificial situations. If a previous food delivery signals a 120-s interval before the next food delivery, then that food delivery serves as a time marker; the possible onset (and offset) of an embedded stimulus within the food-to-food interval is another time marker that serves as a more contiguous predictor of upcoming food delivery. While an initial prediction may be that animals should ignore the first time marker in favor of the second, more contiguous time marker, animals do not, but instead combine the temporal information provided by these distinct cues (Guilhardi et al., 2005; Kirkpatrick & Church, 2000a, 2000b). In fact, patterns of anticipatory responding become more complex with each additional time marker (e.g., Dews, 1965), so that embedded stimuli presented closer to reinforcement exert greater control over behavior than less temporally-proximate stimuli (see Fairhurst et al., 2003; Farmer & Schoenfeld, 1966; Snapper, Kadden, Shimoff, & Schoenfeld, 1975).

Despite the historical divergence of the conditioning and timing sub-fields of experimental psychology (see Kalafut, Freestone, MacInnis, & Church, 2014; Kirkpatrick & Church, 1998), the paradigms used in both conditioning and timing experiments converge in their use of multiple time markers. Given the simultaneous emergence of timing and associative learning, as described above, several conditioning phenomena may be more parsimoniously explained via simultaneous temporal processing. For example, recent evidence has suggested that the large differences in response rates traditionally observed in instrumental versus classical conditioning paradigms (Domjan, 2010) may actually be driven by differences in time markers rather than differences in either the type of learning or response contingency; that is, response non-contingent reinforcement in classical conditioning paradigms in itself serves as an effective time marker to reduce response rate until reinforcement (Freestone, MacInnis, & Church, 2013). Moreover, the evidence that larger I:T ratios produce faster acquisition in conditioning paradigms, as described above, may be at least partially explained by simultaneous temporal processing and time marker effectiveness in terms of the time marker’s temporal position relative to reinforcement (Holland, 2000; Kirkpatrick & Church, 2000a; Lattal, 1999). Given the necessity of learning for individual adaptation, such an explanation would therefore suggest that time is in fact a powerful stimulus (cf., Balsam et al., 2010). Furthermore, it suggests that, as described above, the perception of time is the fundamental cornerstone of basic cognition.

Such immediate time-based cues are far from the only sources of temporal information that an individual concurrently processes. For example, the stimulus-to-food interval is not only embedded within a food-to-food interval, but within the duration of the experimental session, the light:dark cycle, the day, month, year, and so on. Accordingly, given the evidence for the simultaneous timing of multiple intervals, it should come as no surprise that animals track durations beyond those experienced at the individual trial level. Wilson and Crystal (2012) showed that rats that were fed immediately following an experimental session within the operant chamber performed more poorly during a temporal bisection task as the time to the post-session feeding approached, compared to rats that were not provided an immediate post-session meal. Importantly, the former experimental group continued to show adequate temporal discrimination performance, while also exhibiting elevated rates of head-entry behavior in anticipation of the post-session feeding, whereas the latter control group did not show anticipatory behavior (see Wilson & Crystal, 2012). Interestingly, Wilson, Pizzo, and Crystal (2013) subsequently showed that presentation of a time-marker before the post-session meal produced a rapid increase in rats’ head-entry behavior, while the rats also exhibited adequate temporal discrimination performance. This steep increase in head-entry behavior given a late-onset time-marker is comparable to the within-trial patterns of responding observed in FI schedules when time-markers are embedded very proximal to the time of food availability (Guilhardi et al., 2005), suggesting an interesting overlap between the effects of time-markers on between- versus within-trial behavior.

Furthermore, Plowright (1996) implemented a procedure in which pigeons responded on a tandem VI 5 – FI 10 s schedule of reinforcement (i.e., the FI schedule began once the VI schedule was completed), with each component on a separate key. The procedure also scheduled a larger reinforcer at either 6 or 16 minutes into the 20-minute experimental session; the larger reinforcer was delivered contingent on completion of the VI 5 s schedule following either of these longer delays. Consistent with the results presented by Wilson and Crystal (2012), Plowright (1996) showed that pigeons accurately timed the FI 10 s schedule of reinforcement while also exhibiting increases in response rate on the VI 5 s schedule at approximately the expected time of the larger reinforcement. As simultaneous temporal processing analyses have typically involved the overlap of separate intervals in anticipation of the same reinforcer, the results from these studies are striking, emphasizing the simultaneous impact of time-based stimuli on behavior at both molecular and molar scales. Specifically, rather than an animal selectively attending to a single stimulus, temporal information from multiple sources is integrated so as to best predict upcoming events (i.e., reinforcement).

The ease with which simultaneous time markers are integrated may be due to the presence of multiple and independent temporal signatures in the brain. Buhusi and Meck (2009) presented rats with a modified tri-peak procedure. Each of three levers delivered food on an FI 10, FI 30, or FI 90 s schedule; on each trial, signaled by houselight onset, one lever was randomly selected to deliver food according to the FI schedule of reinforcement. On a subset of peak trials, the houselight signal was disrupted 15 s into the trial for 1, 3, 10, or 30 s (i.e., “gap” trials). A 10-s gap differentially shifted timing on the 10-s, 30-s, and 90-s peak trials, suggesting that the simultaneous timing of the three intervals was distinctly altered by the same 10-s gap. Buhusi and Meck (2009) concluded that the timing of the intervals was simultaneous but separate, so that multiple internal clocks independently timed each interval. Accordingly, simultaneous temporal processing may engage multiple clocks so that the information processing system does not become overloaded. Thus, our timing system may have adapted to account for a plethora of simultaneous time markers by employing multiple temporal signatures. Ultimately, the mechanisms of simultaneous temporal processing supply explanatory potential of the inherent involvement of timing mechanisms in fundamental cognitive processes that involve multiple events unfolding together in time.

Time as Information

Time-markers are assumed to convey temporal information; that is, information in terms of the expected time to reinforcement. A time-marker may be more effective, and thus convey more information, when the rate of reward signaled by that time marker exceeds that signaled by a second time marker. For example, in Rate Estimation Theory (Gallistel & Gibbon, 2000), the stimulus (i.e., time-marker) begins to control behavior when the ratio of the rate of reinforcement in the presence of the stimulus to the rate in its absence exceeds a given threshold (i.e., time marker strength is high). Furthermore, Delay Reduction Theory computes the difference between the food-to-food interval and the stimulus-to-food interval; the larger the difference, the greater the reward rate in the stimulus-to-food interval and the more the stimulus (i.e., time-marker) controls behavior (Fantino, Preston, & Dunn, 1993). Therefore, in conjunction with results describing differential stimulus control over behavior given differential CS-US contingencies (e.g., Rescorla, 1968), time-marker effectiveness may be quantified in terms of the information provided regarding changes in reward rate (also see Egger & Miller, 1962). Accordingly, the intimate involvement of interval timing in fundamental learning processes may be explained by the possibility that events in time represent the best signals for when an individual should adapt his or her behavior to most effectively interact with the environment.
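
The two quantities described above can be sketched in a few lines of Python. The example below is a simplified illustration, not an implementation of either theory: the function names, the reward counts, and the durations are hypothetical, and the decision threshold used in Rate Estimation Theory is deliberately left out.

```python
import math


def delay_reduction(food_to_food, stimulus_to_food):
    """Difference between the food-to-food and stimulus-to-food intervals (s)."""
    return food_to_food - stimulus_to_food


def reinforcement_rate_ratio(rewards_in, time_in, rewards_out, time_out):
    """Reward rate in the presence of a stimulus relative to its absence."""
    rate_in = rewards_in / time_in
    rate_out = rewards_out / time_out
    # With no rewards outside the stimulus the ratio is unbounded.
    return math.inf if rate_out == 0 else rate_in / rate_out


# Hypothetical 120-s food-to-food interval with an early or a late time marker.
early_marker = delay_reduction(120, 90)   # stimulus begins 90 s before food
late_marker = delay_reduction(120, 30)    # stimulus begins 30 s before food

# Hypothetical session: 10 rewards in 100 s of stimulus, 2 in 400 s without it.
ratio = reinforcement_rate_ratio(10, 100, 2, 400)
```

In this sketch the later time marker yields the larger delay reduction (90 s versus 30 s), which corresponds to the greater control expected of more temporally proximal stimuli.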

A second conceptualization is conveyed by information theory (Cover & Thomas, 1991). Based on the pioneering work of Shannon (1948), information in a simple delay-conditioning paradigm is calculated as the difference in entropies of two distinct memory distributions as to when food will be delivered, one for the previous food (food-to-food interval) and one for the stimulus (stimulus-to-food interval), plus a scalar variance factor (Balsam et al., 2010; Balsam, Fairhurst, & Gallistel, 2006; Balsam & Gallistel, 2009). Intuitively, this refers to calculating the reduction in uncertainty of the time when food will be available based on the addition of a second time marker (i.e., stimulus). Indeed, the application of information theory to classical conditioning can explain the blocking and overshadowing results of cue competition studies (Balsam & Gallistel, 2009), essentially in terms of how effective each stimulus is as a time-marker. Accordingly, learning is dependent on the length of the food-to-food and stimulus-to-food intervals (Balsam & Gallistel, 2009). In other words, learning is temporally-dependent, thus supporting the current proposal that the processing of time serves as a fundamental cornerstone in basic components of cognition as well as emphasizing the necessity to assess temporal processing within other cognitive tasks.
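
One way to see how an entropy difference depends on the two intervals is the following Python sketch. It rests on assumptions that are not stated in the text above and should be read as illustrative only: the food-to-food memory is treated as an exponential distribution, the stimulus-to-food memory as a Gaussian whose standard deviation is a fixed proportion of the interval (scalar variance), and the Weber fraction of 0.15 is a hypothetical value. All function names are likewise hypothetical.

```python
import math


def exponential_entropy(mean):
    """Differential entropy (nats) of an exponential distribution."""
    return 1.0 + math.log(mean)


def gaussian_entropy(sd):
    """Differential entropy (nats) of a Gaussian distribution."""
    return 0.5 * math.log(2.0 * math.pi * math.e * sd ** 2)


def temporal_information_bits(food_to_food, stimulus_to_food, weber=0.15):
    """Reduction in uncertainty (bits) about food time given the stimulus."""
    before = exponential_entropy(food_to_food)           # food as time marker
    after = gaussian_entropy(weber * stimulus_to_food)   # stimulus as marker
    return (before - after) / math.log(2.0)


# Hypothetical intervals (s): the same stimulus with a longer food-to-food gap.
info_short_cycle = temporal_information_bits(120, 30)
info_long_cycle = temporal_information_bits(240, 30)
```

Under these assumptions the result reduces to the base-2 logarithm of the ratio of the two intervals plus a constant that depends only on the Weber fraction, so doubling the food-to-food interval adds one bit and proportional changes in both intervals leave the information unchanged.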

Pigeons and Time

Although many, if not all, species appear to be heavily reliant on timing systems, an interesting result that has pervaded the literature is that pigeons seem to be especially sensitive to temporal cues and particularly apt to use those cues. One striking example is the mid-session reversal procedure, in which the reinforcement contingencies switch in the middle of a session. In the pigeon variant of the procedure, pecks to one key (e.g., red) result in reinforcement at the start of the session while pecks to the other key (e.g., green) do not. Then, half-way through the session, the contingencies reverse, a classic example of a win-stay/lose-shift contingency. The most efficient way to solve this task is to stay on the reinforced option until the first time that non-reinforcement occurs, and then switch, which is the strategy employed by rats (Rayburn-Reeves, Stagner, Kirk, & Zentall, 2013). Pigeons, on the other hand, anticipate the upcoming switch and also continue to make some responses on the non-reinforced option after the switch (Cook & Rosen, 2010; Laude, Stagner, Rayburn-Reeves, & Zentall, 2014; McMillan & Roberts, 2015; Stagner, Michler, Rayburn-Reeves, Laude, & Zentall, 2013), although the extent to which they do this may depend somewhat on other cues that are available (McMillan & Roberts, 2015) or on the ITI duration (Rayburn-Reeves, Laude, & Zentall, 2013). By performing special tests with ITI durations that were shorter or longer than the training ITI, McMillan and Roberts (2012) demonstrated that the pigeons were using the time from session onset to anticipate the switch in contingencies. This is a highly inefficient strategy which resulted in many errors on the pigeons’ part, and further testifies that timing cues often dominate even when superior local reinforcement cues are available. Presumably, pigeons would not rely on temporal cues unless their use was adaptive. Accordingly, future research is needed to better understand individual- and species-specific sensitivities to temporal cues when the use of such cues is seemingly irrational but subjectively optimal.
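
The difference in efficiency between the two strategies can be illustrated with a deterministic Python sketch. It is a deliberately simplified model rather than a description of the cited procedures: the function names, the 80-trial session, and the size of the timing error are hypothetical, and the timing strategy is reduced to a single switch at an estimated reversal trial.

```python
def correct_key(trial, reversal_trial):
    """Key A is reinforced before the reversal, key B afterwards."""
    return "A" if trial < reversal_trial else "B"


def win_stay_lose_shift_errors(n_trials, reversal_trial):
    """Errors made when switching keys only after a non-reinforced choice."""
    choice, errors = "A", 0
    for trial in range(n_trials):
        if choice != correct_key(trial, reversal_trial):
            errors += 1
            choice = "B" if choice == "A" else "A"   # lose-shift
    return errors


def timed_switch_errors(n_trials, reversal_trial, estimated_reversal):
    """Errors made when switching at an estimated (timed) reversal point."""
    errors = 0
    for trial in range(n_trials):
        choice = "A" if trial < estimated_reversal else "B"
        if choice != correct_key(trial, reversal_trial):
            errors += 1
    return errors


# Hypothetical 80-trial session with the reversal after trial 40.
local_cue_errors = win_stay_lose_shift_errors(80, 40)
early_estimate_errors = timed_switch_errors(80, 40, 35)   # anticipatory errors
late_estimate_errors = timed_switch_errors(80, 40, 45)    # perseverative errors
```

In this simplified model, relying on the local outcome costs a single error per session, whereas any misestimate of the reversal time costs one error per trial of misestimation, in either the anticipatory or the perseverative direction.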

A similar case of the dominance of temporal cues can be seen in response-initiated delay schedules. In these schedules, one cue signals trial onset (e.g., red) and another cue signals delay onset (e.g., green). Following food delivery, the trial onset cue is presented. The first peck to that cue results in the initiation of the fixed delay (T) to reward and its associated cue. The time between trial onset and the keypeck is denoted as the waiting time (t) and this delay is under the control of the animal. Therefore, in this schedule, the optimal strategy for the pigeon is to respond as soon as the trial onset cue is presented to minimize waiting time. Wynne and Staddon (1988; see also Wynne & Staddon, 1992) assessed performance in different variants of response-initiated delay schedules. In one condition, T was fixed regardless of t, the classic response-initiated delay schedule, and in other conditions T was adjusted to compensate for t to neutralize the cost of longer waiting times on the overall delay to reinforcement. Birds were tested under different values of T and the relationship between T and t was assessed. They found that waiting times were linearly related to the delay to reward, regardless of the contingencies in place, and that the distribution of waiting times was scalar. This means that the pigeons’ waiting times tracked the delay to reinforcement, even when doing so was sub-optimal. These results provide further evidence that pigeons possess a built-in timing mechanism that automatically tracks delays to reinforcement and adjusts behavior accordingly, even when doing so is costly.
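
The cost of waiting in the classic response-initiated delay schedule follows from simple arithmetic, sketched below in Python. The example assumes that each cycle consists only of the waiting time t and the fixed delay T (ignoring time spent consuming food), and the linear waiting rule with a slope of 0.25 is a hypothetical stand-in for the linear relation reported in the text; all names and values are illustrative.

```python
def reward_rate(waiting_time, delay):
    """Rewards per second when each cycle lasts waiting_time + delay."""
    return 1.0 / (waiting_time + delay)


def linear_waiting_time(delay, slope=0.25, intercept=0.0):
    """Hypothetical linear waiting rule: t = slope * T + intercept."""
    return slope * delay + intercept


# Hypothetical fixed delay of T = 20 s.
optimal_rate = reward_rate(0.0, 20.0)                        # respond at once
observed_like_rate = reward_rate(linear_waiting_time(20.0), 20.0)
```

Under these assumptions, a waiting time that scales with the delay lowers the obtained reward rate relative to responding immediately (0.04 versus 0.05 rewards per second in this example), which is why tracking the delay in this way is sub-optimal when T is fixed.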

Another example of dominance of temporal cues was shown by Roberts, Coughlin, and Roberts (2000), when testing pigeons' use of timing versus number cues. Pigeons were presented with a variant on the peak procedure in which a flashing light was reinforced either after a certain number of flashes (a fixed number or FN schedule) or after a fixed amount of time (an FI schedule). The FN and FI schedules were cued with differently-colored lights, and pigeons received the two schedules intermixed. Following training, the pigeons received mixed test trials in which the counting cue would be turned on for 10 s and then would switch to the timing cue or vice versa. In the counting→timing test trials, the pigeons peaked at the FI value, indicating that they were timing from the start of the trial even though they were cued to count at the start. On the timing→counting test trials, the pigeons switched from timing to counting when cued to do so. The results indicate that the pigeons timed regardless of cueing, but that they could count when cued to do so at the end of the trial. In essence, the pigeons counted when cued, but always timed. Rats, on the other hand, appear to be more balanced. Meck and Church (1983) trained rats with pulsing tones where either the number of pulses or the total duration of the tone pulses (2 pulses/2 s vs. 8 pulses/8 s) could serve as cues in a temporal bisection task. In a subsequent test with variations in either the pulse number or duration, it was found that rats showed strong control by both aspects and that the psychophysical functions for time and number were essentially indistinguishable. Thus, rats appeared to simultaneously time and count. The finding that both pigeons and rats “always time” may reflect the omnipresent aspect of time discussed earlier in the paper. However, because it appears that pigeons may be particularly sensitive to this aspect of time, additional research is warranted to better elucidate the comparative use of temporal cues.

Pigeons are also prone to exhibit oscillatory processes on peak procedures (Kirkpatrick-Steger, Miller, Betti, & Wasserman, 1996). Pigeons were trained on a standard peak procedure, with variations in the ratio of the peak trial duration to the FI trial duration. When those durations were in a 4:1 ratio, the pigeons showed a strong oscillatory response, peaking at the FI and then again at 3 times the FI value. The second peak was often associated with a rate of response that was nearly as high as the original peak. Even more striking was the observation of longer-term oscillations when special peak trials were included that were 8 times the original duration. In this case, four peaks were observed at regular intervals, but with dampened oscillation so that the height of the peaks declined over time. These observations suggest that pigeons may engage in behavioral oscillations that reflect a strong underlying control by timing cues, further emphasizing the core involvement of temporal processing mechanisms in goal-directed actions. Thus far, similar results have not been shown in rats, suggesting a further divergence in control by temporal cues between these two species. Ultimately, our understanding of time and individual/species differences in temporal processing is still in its infancy. Further comparative, neuroscientific, and psychological research on differential cognitive mechanisms and their relationship to individual and species differences in interval timing processes represents the most critical avenue in our understanding of brain and behavior mechanisms. Specifically, if the perception of time underlies fundamental aspects of basic cognition, then it should be a more widely studied and appreciated psychological process in future research.

What is Left to be Discovered

The pervasiveness of interval timing processes across brain and behavior mechanisms does not preclude the further exploration of such phenomena. Rather, it raises even greater questions. For example, there has been long-standing debate regarding whether mental representations of time are linearly or logarithmically scaled (Cerutti & Staddon, 2004; Church & Deluty, 1977; Gibbon, 1977; Gibbon & Church, 1981; Yi, 2009), a question that fundamentally addresses the function mapping objective time to subjective time, and yet there are no clear answers to this problem. Accordingly, different theories of behavior that are centered on or incorporate interval timing posit different representations of time. For example, a logarithmic representation of time is assumed within the Behavioral Economic Model (Jozefowiez, Staddon, & Cerutti, 2009) and the Multiple Timescale Model (Staddon & Higa, 1999), while a linear representation of time is assumed within Scalar Expectancy Theory (Gibbon, 1977, 1991), the Behavioral Theory of Timing (Killeen & Fetterman, 1988), and the Learning to Time theory (Machado, 1997). It is challenging to understand the mechanisms of interval timing if our theoretical accounts disagree on fundamental processes, such as how time is psychologically scaled. Thus, greater understanding of the mental representations of time will aid the convergence of distinct theoretical accounts into a centralized and comprehensive theory of behavior. That being said, the role of temporal processing in fundamental cognitive processes warrants the inclusion of an interval timing module in extant and future theories of many forms of cognition, some of which were discussed here, as such an inclusion may provide a more comprehensive theoretical account of behavior across multiple experimental manipulations.
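To make the distinction between linear and logarithmic scaling concrete, the following minimal sketch computes the duration that lies midway between two anchor durations on each kind of subjective scale. The function name and the "subjective midpoint" readout are illustrative assumptions, not part of any of the cited models; the actual theories also differ in their assumptions about variability and decision rules, so this sketch shows only how the two mappings differ and is not a test between them.

```python
import math


def subjective_midpoint(short, long, scale):
    """Duration whose subjective value lies midway between two anchors.

    scale="linear": subjective time proportional to objective time,
                    so the midpoint is the arithmetic mean.
    scale="log":    subjective time proportional to log(objective time),
                    so the midpoint is the geometric mean.
    Hypothetical helper for illustration only.
    """
    if short <= 0 or long <= 0:
        raise ValueError("durations must be positive")
    if scale == "linear":
        return (short + long) / 2
    if scale == "log":
        return math.exp((math.log(short) + math.log(long)) / 2)
    raise ValueError("scale must be 'linear' or 'log'")


if __name__ == "__main__":
    # Anchor durations of 2 s and 8 s, as in the bisection example in the text.
    print(subjective_midpoint(2, 8, "linear"))  # arithmetic mean: 5.0
    print(subjective_midpoint(2, 8, "log"))     # geometric mean: approximately 4
```

For anchors of 2 s and 8 s, the two scales place the midpoint at different durations (5 s versus about 4 s), which illustrates why the form of the mapping from objective to subjective time matters for quantitative predictions.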

A second open question involves the neurobiology and neuropathology of interval timing. There is no single disease that purely reflects a deficit in interval timing, although many neuropathologies are characterized by dysfunctional temporal processing (see Allman & Meck, 2012; Balci, Meck, Moore, & Brunner, 2009; Coull et al., 2011; Ward, Kellendonk, Kandel, & Balsam, 2012). With a better understanding of the basic facets of the timing system, as well as the intimate connection between timing and basic components of cognitive function, perhaps those predisposed to such disorders could receive the necessary neurocognitive and pharmacological treatments to temporarily or permanently delay pathogenesis while they are pre-symptomatic. Indeed, interval timing deficits have been observed in individuals with schizophrenia and individuals at high risk for developing schizophrenia (Allman & Meck, 2012; Penney, Meck, Roberts, Gibbon, & Erlenmeyer-Kimling, 2005), suggesting that longitudinal evaluations of interval timing may also provide insight into disease prognosis.

As has been conveyed throughout this review, interval timing is closely intertwined with fundamental cognitive processes such as learning and decision making. Indeed, learning and decision-making deficits may reflect deficits in the processing and integration of sequential (or simultaneous) temporal events and durations. Accordingly, efforts to alleviate such deficits may be more effective if temporal processing is a part of the focus of therapeutic techniques. Moreover, individual differences in interval timing may ultimately explain individual differences in those mechanisms that are tied to suboptimal, maladaptive, and health-deficient behaviors (e.g., Marshall et al., 2014). For instance, if dysfunctional interval timing can explain impulsivity in drug abusers (see Wittmann & Paulus, 2008), then testing interval timing early in life may help identify those individuals prone to substance abuse, allowing targeted interventions prior to addiction. Given the prevalence of standardized testing, physical education/health programs in elementary and secondary schools could implement standardized assessments of basic cognitive functions, such as interval timing, thereby permitting the subsequent administration of individually-specific therapies to deter such potential outcomes. Overall, the interaction of the temporal processing system with the neurobiological correlates and psychological processes of attention, learning, incentive valuation, decision making, working memory, etc., suggests that greater understanding of the perception of time may ultimately unveil key answers to the questions that have continued to perplex psychological scientists and neuroscientists alike.

Regardless of the task or paradigm, time is the single most ubiquitous component of each and every experimental procedure across multiple disciplines and sub-disciplines. The concept of time may refer to the dimension of a predictive stimulus, the function by which a person, action, or event changes, or, simply, the global experimental context, in which rest more tangible and discrete contexts. Such omnipresence and, ultimately, omnipotence is well-captured by the multitude of potential neurobiological correlates of temporal processing and the horde of cognitive mechanisms that involve interval timing. Accordingly, if temporal processing mechanisms contribute to other cognitive mechanisms, then psychologists, cognitive scientists, cognitive neuroscientists, and the like have the responsibility to administer assessments of timing abilities in subsequent behavioral experiments. Only then may a comprehensive and thorough understanding and theoretical account of the interaction between brain mechanisms and behavioral processes be achieved.

Acknowledgments

Some of the research described in this paper was supported by grant NIMH-R01 085739 awarded to Kimberly Kirkpatrick and Kansas State University.

References

- Allman MJ, Meck WH. Pathophysiological distortions in time perception and timed performance. Brain. 2012;135:656–677. doi: 10.1093/brain/awr210.

- Allman MJ, Teki S, Griffiths TD, Meck WH. Properties of the internal clock: first- and second-order principles of subjective time. Annu Rev Psychol. 2014;65:743–771. doi: 10.1146/annurev-psych-010213-115117.

- Anderson KG, Diller JW. Effects of acute and repeated nicotine administration on delay discounting in Lewis and Fischer 344 rats. Behavioural Pharmacology. 2010;21:754–764. doi: 10.1097/FBP.0b013e328340a050.

- Anderson KG, Woolverton WL. Effects of clomipramine on self-control choice in Lewis and Fischer 344 rats. Pharmacology, Biochemistry and Behavior. 2005;80:387–393. doi: 10.1016/j.pbb.2004.11.015.

- Arcediano F, Miller RR. Some constraints for models of timing: A temporal coding hypothesis perspective. Learning and Motivation. 2002;33:105–123. doi: 10.1006/lmot.2001.1102.

- Baker F, Johnson MW, Bickel WK. Delay discounting in current and never-before cigarette smokers: similarities and differences across commodity, sign, and magnitude. Journal of Abnormal Psychology. 2003;112:382–392. doi: 10.1037/0021-843X.112.3.382.

- Balci F, Freestone D, Simen P, deSouza L, Cohen JD, Holmes P. Optimal temporal risk assessment. Frontiers in Integrative Neuroscience. 2011;5. doi: 10.1177/0269881110364272.

- Balci F, Meck WH, Moore H, Brunner D. Timing deficits in aging and neuropathology. In: Bizon JL, Woods A, editors. Animal Models of Human Cognitive Aging. Totowa, NJ: Humana Press; 2009. pp. 161–201.

- Balsam PD, Drew MR, Gallistel CR. Time and associative learning. Comparative Cognition & Behavior Reviews. 2010;5:1–22. doi: 10.3819/ccbr.2010.50001.

- Balsam PD, Drew MR, Yang C. Timing at the start of associative learning. Learning and Motivation. 2002;33:141–155. doi: 10.1006/lmot.2001.1104.

- Balsam PD, Fairhurst S, Gallistel CR. Pavlovian contingencies and temporal information. Journal of Experimental Psychology: Animal Behavior Processes. 2006;32:284–294. doi: 10.1037/0097-7403.32.3.284.

- Balsam PD, Gallistel CR. Temporal maps and informativeness in associative learning. Trends in Neurosciences. 2009;32:73–78. doi: 10.1016/j.tins.2008.10.004.

- Bardo MT, Cain ME, Bylica KE. Effect of amphetamine on response inhibition in rats showing high or low response to novelty. Pharmacology Biochemistry and Behavior. 2006;85:98–104. doi: 10.1016/j.pbb.2006.07.015.

- Barkley RA, Edwards G, Laneri M, Fletcher K, Metevia L. Executive functioning, temporal discounting, and sense of time in adolescents with attention deficit hyperactivity disorder (ADHD) and oppositional defiant disorder (ODD). Journal of Abnormal Child Psychology. 2001;29:541–556. doi: 10.1023/A:1012233310098.

- Barnet RC, Arnold HM, Miller RR. Simultaneous conditioning demonstrated in second-order conditioning: Evidence for similar associative structure in forward and simultaneous conditioning. Learning and Motivation. 1991;22. doi: 10.1016/0023-9690(91)90008-V.