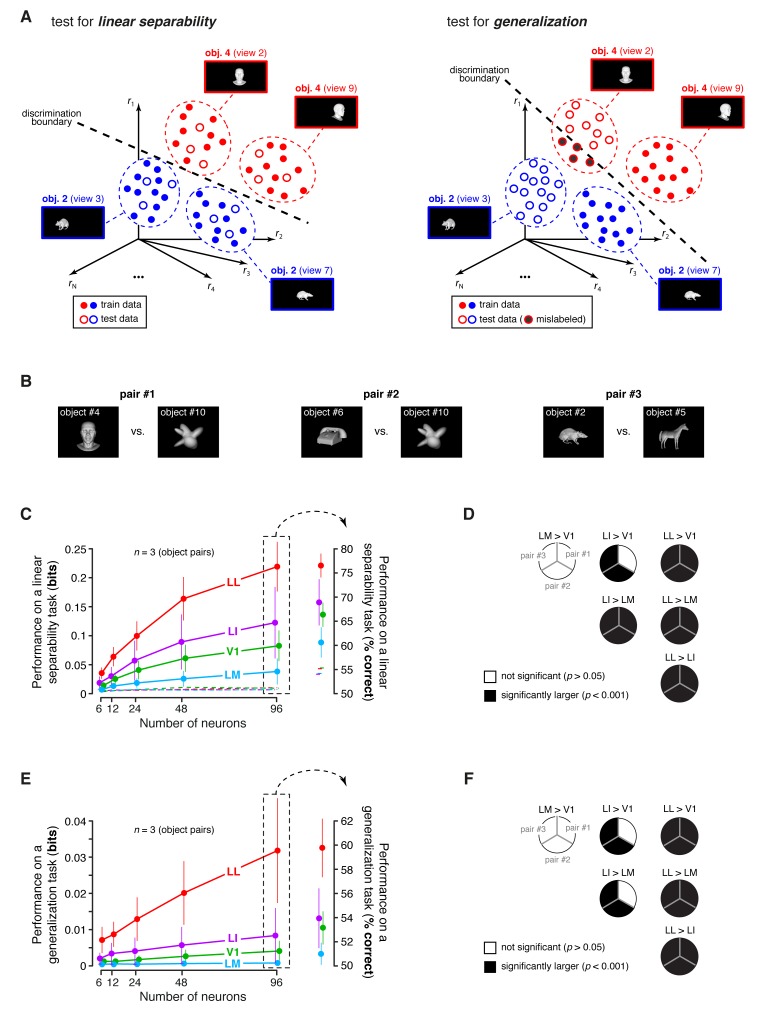

Figure 8. Linear separability and generalization of object representations, tested at the level of neuronal populations.

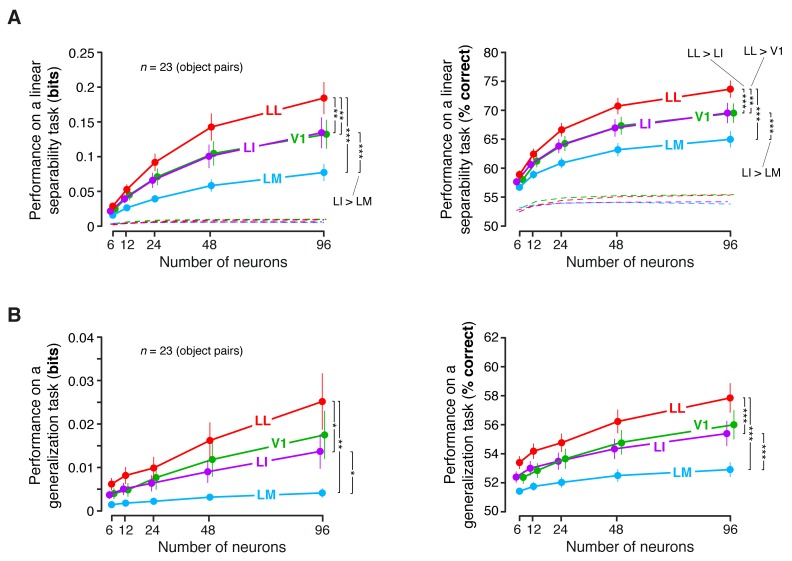

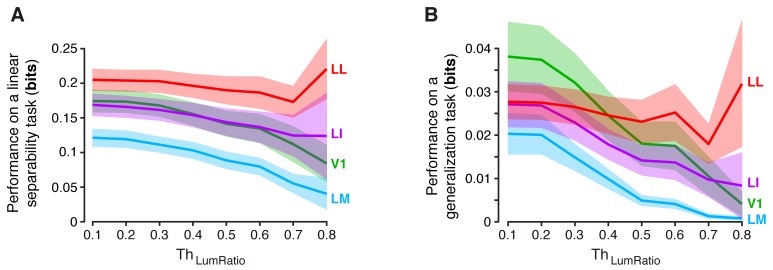

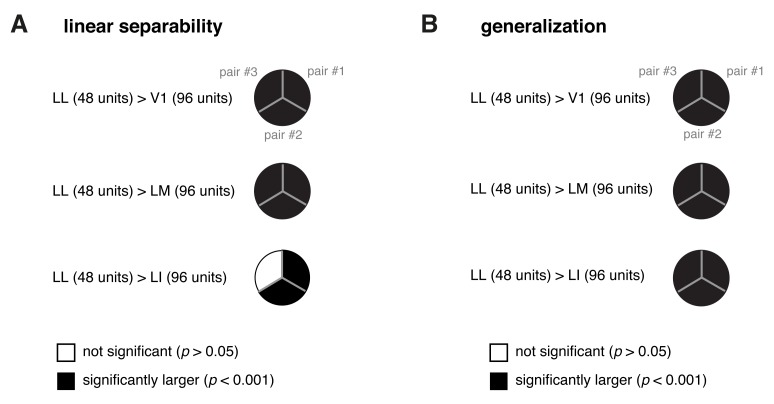

(A) Illustration of the population decoding analyses used to test linear separability and generalization. The clouds of dots show the sets of response population vectors produced by different views of two objects. Left: for the test of linear separability, a binary linear decoder is trained with a fraction of the response vectors (filled dots) to all the views of both objects, and then tested with the left-out response vectors (empty dots), using the previously learned discrimination boundary (dashed line). The cartoon depicts the ideal case of two object representations that are perfectly separable. Right: for the test of generalization, a binary linear decoder is trained with all the response vectors (filled dots) produced by a single view per object, and then tested for its ability to correctly discriminate the response vectors (empty dots) produced by the other views, using the previously learned discrimination boundary (dashed line). As illustrated here, perfect linear separability does not guarantee perfect generalization to untrained object views (see the black-filled, mislabeled response vectors in the right panel). (B) The three pairs of visual objects that were selected for the population decoding analyses shown in (C–F), based on the fact that their luminance ratio fulfilled the constraint of being larger than for at least 96 neurons in each area. (C) Classification performance of the binary linear decoders in the test for linear separability, as a function of the number of neurons N used to build the population vector space. Performances were computed for the three pairs of objects shown in (B). Each dot shows the mean of the performances obtained for the three pairs (± SE). The performances are reported as the mutual information between the actual and the predicted object labels (left). In addition, for N = 96, they are also shown in terms of classification accuracy (right).
The dashed lines (left) and the horizontal marks (right) show the linear separability of arbitrary groups of views of two objects (same three pairs used in the main analysis; see Results). (D) The statistical significance of each pairwise area comparison, in terms of linear separability, is reported for each individual object pair (1-tailed U-test, Holm-Bonferroni corrected). In the pie charts, a black slice indicates that the test was significant (p < 0.001) for the corresponding pairs of objects and areas (e.g., LL > LI). (E) Classification performance of the binary linear decoders in the test for generalization across transformations. Same description as in (C). (F) Statistical significance of each pairwise area comparison, in terms of generalization across transformations. Same description as in (D). The same analyses, performed over a larger set of object pairs, after setting , are shown in Figure 8—figure supplement 1. The dependence of linear separability and generalization on is shown in Figure 8—figure supplement 2. The statistical comparison between the performances achieved by a population of 48 LL neurons and V1, LM and LI populations of 96 neurons is reported in Figure 8—figure supplement 3.
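
The two decoding tests described in (A), and the mutual information measure used in (C) and (E), can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the population vectors are synthetic (`simulate_responses` is a hypothetical helper, not the recorded data), a least-squares linear classifier stands in for whichever linear decoder was actually used, and the 80/20 split and all parameter values are arbitrary choices.

```python
import numpy as np


def simulate_responses(rng, n_neurons=96, n_views=5, n_trials=20, noise=1.0):
    """Synthetic population vectors for two objects (hypothetical data)."""
    X, obj, view = [], [], []
    for o in range(2):
        obj_mean = rng.normal(0.0, 1.0, n_neurons)        # object identity signal
        for v in range(n_views):
            view_shift = rng.normal(0.0, 1.0, n_neurons)  # view-dependent modulation
            trials = obj_mean + view_shift + noise * rng.normal(size=(n_trials, n_neurons))
            X.append(trials)
            obj += [o] * n_trials
            view += [v] * n_trials
    return np.vstack(X), np.array(obj), np.array(view)


def fit_linear_decoder(X, y):
    """Least-squares binary linear decoder (a stand-in, not the study's decoder)."""
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])  # append a bias column
    targets = 2.0 * y - 1.0                        # map labels {0, 1} to {-1, +1}
    w, *_ = np.linalg.lstsq(Xb, targets, rcond=None)
    return w


def predict(w, X):
    """Assign each response vector to one side of the learned boundary."""
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])
    return (Xb @ w > 0).astype(int)


def mutual_information_bits(y_true, y_pred):
    """Mutual information (bits) between actual and predicted binary labels."""
    joint = np.zeros((2, 2))
    for t, p in zip(y_true, y_pred):
        joint[t, p] += 1
    joint /= joint.sum()
    p_true = joint.sum(axis=1, keepdims=True)
    p_pred = joint.sum(axis=0, keepdims=True)
    nz = joint > 0                                 # skip empty cells (0 * log 0 = 0)
    return float(np.sum(joint[nz] * np.log2(joint[nz] / (p_true @ p_pred)[nz])))


def score(y_true, y_pred):
    """Return (classification accuracy, mutual information in bits)."""
    return float(np.mean(y_true == y_pred)), mutual_information_bits(y_true, y_pred)


def separability_test(X, obj, rng, train_frac=0.8):
    """Train on a random fraction of vectors from all views; test on the rest."""
    idx = rng.permutation(len(obj))
    n_train = int(train_frac * len(obj))
    train, test = idx[:n_train], idx[n_train:]
    w = fit_linear_decoder(X[train], obj[train])
    return score(obj[test], predict(w, X[test]))


def generalization_test(X, obj, view, train_view=0):
    """Train on one view per object; test on vectors from all other views."""
    train = view == train_view
    w = fit_linear_decoder(X[train], obj[train])
    return score(obj[~train], predict(w, X[~train]))


rng = np.random.default_rng(0)
X, obj, view = simulate_responses(rng)
sep_acc, sep_mi = separability_test(X, obj, rng)
gen_acc, gen_mi = generalization_test(X, obj, view)
print(f"separability:   accuracy={sep_acc:.2f}, MI={sep_mi:.2f} bits")
print(f"generalization: accuracy={gen_acc:.2f}, MI={gen_mi:.2f} bits")
```

The only difference between the two tests is how the training set is drawn: across all views for linear separability, from a single view per object for generalization. This is why a population can score well on the first test and still fail the second.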