Abstract

We present an overview of the Centers for Quantitative Imaging Excellence (CQIE) program, which was initiated in 2010 to establish a resource of clinical trial-ready sites within the National Cancer Institute (NCI)-designated Cancer Centers (NCI-CCs) network. The intent was to establish imaging centers within the NCI-CC network that are capable of conducting treatment trials with advanced quantitative imaging end points. We describe the motivations for establishing the CQIE, the process used to initiate the network, the methods of site qualification for positron emission tomography, computed tomography, and magnetic resonance imaging, and the results of the evaluations over the subsequent 3 years.

Keywords: Scanner qualification, cancer imaging, phantom imaging, imaging accreditation, imaging credentialing, clinical trials

INTRODUCTION

Background and Objectives

Advanced imaging methodologies play a pivotal role in cancer care, providing early detection of tumors and guidance of therapy as well as subsequent disease monitoring and surveillance. Advantages inherent in imaging assays include the ability to obtain spatially localized information over large volumes of tissue or the entire body compared to the limited sampling available for biopsy-driven histopathology or in vitro blood- or serum-based assays and their inherent drawbacks. In addition, in vivo imaging assays have the ability to provide multiple evaluations of a molecular target or tumor metabolism over time, allowing for adaptive therapy without invasive procedures.

Continued progress in research and development of imaging agents, methodologies, and technologies holds promise for better cancer care—for example, with improved tumor detection and biological characterization. New imaging agents and approaches exploit various pathophysiologic characteristics of tumors with evaluations of phenomena such as metabolism, proliferation, hypoxia, angiogenesis, essential signal pathway blockage(s), and other tumor microenvironment modifications. These refined imaging procedures have the potential to be surrogate or primary biomarkers in oncologic patient evaluation. In addition, the use of validated molecular imaging probes is critical both to the National Cancer Institute (NCI) drug discovery and development process and the ongoing NCI commitment to further our understanding of cancer biology.

While imaging for patient care in clinical oncology practice is predominately focused on tumor diagnostics, imaging within oncology clinical trials has expanded to assess tumor biologic characteristics, including pretreatment patient stratification and functional changes during and after therapeutic interventions. This expanded focus on oncologic imaging necessitates a more stringent quality management program to ensure that imaging devices are functioning appropriately and that properly controlled imaging acquisition protocols are being used at radiology sites performing imaging on patients in clinical trials. It is essential that the resulting imaging examinations are of sufficient quality to assess the desired end points, and that the imaging assessment is performed in a consistent manner across sites. Finally, imaging data in clinical trials should be appropriately preserved for central analysis, regulatory documentation, and potential downstream secondary studies. As such, there has been increased recognition of the need to standardize imaging protocols in clinical trials (1–5).

With these objectives in mind, the NCI Cancer Imaging Program (CIP) initiated the Centers for Quantitative Imaging Excellence (CQIE) program in 2010 to establish a resource of clinical trial-ready sites within the NCI-designated Cancer Centers (NCI-CCs) network, capable of conducting treatment trials that contain integral molecular and functional advanced quantitative imaging end points. The NCI-CC sites serve as centers for transdisciplinary, translational, and clinical research, and link cancer research to health service delivery systems outside the center via proactive dissemination programs. Such centers were optimal sites in which to support and promote advanced quantitative imaging for measurement of response.

Delays can often occur in opening treatment trials with advanced imaging aims within a multicenter setting. Areas of delay may include site selection based on qualification of advanced imaging capabilities, dissemination of relevant qualification standards for molecular and/or functional imaging modalities, and lack of coordinated collaboration among imaging and treatment/research teams at a site. These areas of concern were addressed by organizing and implementing the CQIE program under the auspices of NCI CIP, with administrative coordination and oversight from the American College of Radiology Imaging Network (ACRIN), NCI’s cooperative group with an exemplary history of performing large phase II and III studies to evaluate imaging methods and agents for enhanced cancer management. This report on CQIE progress details the experience and lessons learned in the course of qualifying approximately 60 NCI-CC sites across the nation; these centers have now demonstrated competence in key areas of advanced imaging within the modalities of static and dynamic positron emission tomography (PET), volumetric computed tomography (vCT) or magnetic resonance (vMR), and dynamic contrast-enhanced MR imaging (DCE-MRI) in body and/or brain. This CQIE network is now a proven resource supporting development and clinical implementation of quantitative imaging for measurement of response to therapy, with the potential to be extended to other NCI and National Institutes of Health programs that support advanced imaging within clinical trials under the NCI Divisions of Cancer Treatment and Diagnosis as well as the Division of Cancer Prevention.

Roles and Responsibilities of the CQIE Partners

With NCI funding and oversight, ACRIN has developed and maintained a series of site qualification guidelines and standard operating procedures (SOPs) for multicenter imaging trials for PET use in both static and dynamic mode, vCT/vMR, and DCE-MRI, and for all four major imaging device manufacturers—General Electric (GE), Siemens, Philips, and Toshiba. Furthermore, as the CQIE coordinating center, ACRIN developed a set of data transfer and management procedures and then implemented the qualification process at NCI-CC sites, as detailed in a manual of procedure used across the network. More specifically, they:

collected and maintained the images and data submitted by sites for their initial qualification and yearly renewal processes;

performed both qualitative and quantitative analysis of images submitted by sites to validate and approve the qualification process; and

loaned phantoms and calibrators to sites if needed to enable the qualification process.

The SOPs were made freely available on the web and have been used by other clinical trial organizations in the UK, Europe, and Japan, as well as by the pharmaceutical industry.

Participating NCI-CC sites in turn agreed to:

participate in the CQIE process for 3 years, starting with completing online learning modules, baseline and annual phantom calibration, and clinical case test submission;

comply with qualification and quality control (QC) guidelines and employ imaging standards for all three modalities for potential use in future NCI-sponsored clinical trials; and

identify on-site project leaders and modality-specific imaging contacts to serve as CQIE liaison with ACRIN in implementing the agreed-upon imaging best practices standard.

Detailed summaries of the qualification process, including online learning tool examples, scanner image acquisition benchmarks, and phantom calibration procedures for PET, CT, and MRI methods, are presented next, along with metrics derived for quantitative imaging results from participating NCI-CC sites through the duration of the program.

METHODS

Site Identification and Contact

The study cohort was drawn from 58 active NCI-CC sites in the continental US as of 2010. Outreach was performed by e-mailing the radiology contacts identified by the NCI-CC director at each site. The initial contact introduced the imaging leader(s) to the ACRIN core laboratory leadership team and the goals of the CQIE program. Outreach to half of the NCI-CC sites (arbitrarily chosen) was initiated at the beginning of year one, and to the other half 6 months later. If these contacts failed to respond to initial outreach, the scope of e-mail contact was broadened to include radiology chairs and imaging modality leaders at these centers.

During the initial outreach to the NCI-CC sites and their imaging liaisons, the CQIE team informed the sites that funds were available to reimburse the programs for their time and effort in the initial year of the program. The team also explained that CQIE personnel could visit the site for any or all of the three modalities to provide guidance in year one qualification activities. Following selection of the site CQIE project leader, primary modality technical leaders in PET, CT, and MRI were identified.

Once contact was established with an imaging project leader at each NCI-CC site, a calendar for qualification activities was established. To provide adequate resources from the ACRIN core laboratory for year one qualification activities, we staggered site initiation timelines across the first 10 months of the initial funding year (resulting in between five and seven new NCI-CC sites initiating CQIE procedures per month). Once initiated, each NCI-CC site was provided an estimated 3-month time window for completion of qualification activities in all three modalities, although in many cases qualification activities in one or more modality extended beyond this time frame. Regardless of the actual time frame in which qualification activities were successfully completed, the time for renewal in years two and three was set based on the initial assigned qualification window in year one. This “fixed” calendar (regardless of actual date of successful qualification) was established to ensure unified timelines at a given NCI-CC site for PET, CT, and MRI, and to ensure the opportunity to qualify all participating NCI-CC sites annually in each of the modalities throughout the course of the 4-year funding period for the CQIE program.

General Procedures

Year One

For year one activities, imaging centers were given the option of an on-site visit by each of the three modality teams to facilitate scanning and qualification. Centers could opt for on-site visits for any or all modalities, in which case scheduling of core laboratory modality team visits was based on ACRIN and NCI-CC site imaging personnel availability, and on anticipated scanner availability as determined by the imaging centers.

Each site was requested to select one scanner to demonstrate phantom scans for each modality.

Modality-, vendor-, and software-specific imaging acquisition procedures were supplied to each site based on scanner information supplied by the sites to the ACRIN core laboratory. Phantoms and CQIE SOP materials were then forwarded to the site within 2 weeks of the planned site visit. Methods for imaging transfer to ACRIN via secure file transfer protocol (sFTP) were also initiated at this time. Sites were also encouraged to review the learning modules, which were created as an introduction describing the importance of the program, prior to their site visit; completion was tracked by an electronic confirmation. Modality-specific procedures are discussed in subsequent sections.

Years Two and Three

Unlike year one, no on-site visits by ACRIN core laboratory personnel were arranged for years two and three, and no reimbursement was provided for qualification activities. Instead, sites were sent reminders of the need to requalify their scanner(s) by e-mail 3 months in advance of their requalification deadline. Requalification materials, including phantoms and teaching slides, were made available to each site. Sites requesting additional assistance were given access to real-time telephone consultation by ACRIN core laboratory specialists to facilitate qualification activities. Sites initially qualifying under CQIE in year one were sent reminders in year three, regardless of the degree and/or success of their participation in year two.

PET

Sources of Variability

To obtain usable quantitative imaging information from multicenter imaging trials, standardized imaging procedures were essential across all imaging modalities. Variability is inherent in studies when multiple radiologists interpret images, multiple technologists perform different image acquisition procedures, and different centers use varying makes and models of scanners and software. This is particularly true for PET assessment, where the most commonly used measure of activity, the standardized uptake value (SUV), is affected by multiple technical factors, including dosing accuracy with PET isotope delivery, normalization to body weight (total or lean) versus surface area, regularity of scanner calibration, choice of image reconstruction algorithm, correction factors employed for attenuation, and region of interest (ROI) definition method.
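To make the dependence of SUV on these factors concrete, the following is a minimal sketch of a body-weight-normalized SUV calculation. It is illustrative only and is not the CQIE analysis procedure: the function names are hypothetical, the tracer is assumed to be an 18F compound with simple exponential decay, and 1 mL of tissue is assumed to weigh 1 g.

```python
import math

F18_HALF_LIFE_MIN = 109.77  # physical half-life of fluorine-18, in minutes


def decay_corrected_dose_mbq(injected_mbq, minutes_elapsed,
                             half_life_min=F18_HALF_LIFE_MIN):
    """Dose remaining after minutes_elapsed, assuming exponential decay."""
    return injected_mbq * math.exp(-math.log(2) * minutes_elapsed / half_life_min)


def suv_body_weight(activity_kbq_per_ml, injected_mbq, weight_kg, minutes_elapsed):
    """Body-weight SUV: tissue concentration / (decay-corrected dose / weight).

    Assumes 1 mL of tissue weighs 1 g, so the result is dimensionless.
    """
    dose_kbq = decay_corrected_dose_mbq(injected_mbq, minutes_elapsed) * 1000.0
    weight_g = weight_kg * 1000.0
    return activity_kbq_per_ml / (dose_kbq / weight_g)
```

In this sketch an error in the assayed dose, the recorded weight, or the elapsed time propagates directly into the SUV, which is one reason dose calibrator cross-calibration and accurate time recording matter in multicenter work.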

To address these issues, CQIE created and distributed PET-specific learning modules for initial and annual site qualification.

Site Initiation

ACRIN-CQIE PET leads provided assistance with technical issues associated with standardizing PET acquisition protocols across multiple scanner models, and familiarized sites with phantom scanning procedures and regular QC testing practices. Each center was asked to provide ACRIN with benchmark cases, including two whole-body and two brain 18F-fluoro-2-deoxyglucose (FDG)-PET or PET/CT scans for each qualified scanner, along with data forms for qualification review and resolution of any technical or quality issues. These images (preferably made within the prior month) were acquired and reconstructed with the standard imaging protocol utilized by the site; both attenuation and nonattenuation corrected images were submitted.

Clinical Test Cases

For initial and annual qualification assessments, two test cases for brain and body fields of view (FOV) were submitted for review for overall technical image quality. The test cases could be any 18F-FDG study with or without abnormal findings. Test cases were acquired according to the site’s standard protocols within 1 month of the submission timepoint. Analysis included a comparison of the technical parameters in the Digital Imaging and Communications in Medicine (DICOM) header to those recorded on the Site Assessment Form, an analysis of the quality of the PET and CT fusion, and an overall evaluation for anything that may hinder a reviewer’s ability to interpret the study.

Phantom Testing

Baseline and annual phantom tests were performed with both uniform cylinder and American College of Radiology (ACR) PET phantoms (provided if not available at the site); images and data forms were sent to ACRIN for review and analysis. The uniform cylinder phantom (internal diameter 18–22 cm with no internal structure) has the ability to evaluate uniformity and noise characteristics of an individual PET or PET/CT scanner. Acceptance criteria for body and brain FOV 10–20 minute static acquisition scans (two- and one-bed position, respectively) and body FOV dynamic acquisition scans (one-bed position) are shown in Table 1. The body FOV dynamic acquisition was acquired using the following dynamic sequence: 16 bins of 5 seconds duration, 7 bins of 10 seconds duration, 5 bins of 30 seconds duration, 5 bins of 1 minute duration, and 5 bins of 3 minutes duration.
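As a quick arithmetic check on the dynamic sequence described above, the sketch below tallies the frames and total acquisition time. The frame counts and durations are taken from the text; the variable and function names are illustrative only.

```python
# (number of frames, frame duration in seconds), as listed in the text
FRAMING = [(16, 5), (7, 10), (5, 30), (5, 60), (5, 180)]


def frame_start_times(framing):
    """Return the start time (s) of every frame, in acquisition order."""
    starts, t = [], 0
    for count, duration in framing:
        for _ in range(count):
            starts.append(t)
            t += duration
    return starts


def total_duration_s(framing):
    """Total acquisition time in seconds."""
    return sum(count * duration for count, duration in framing)


n_frames = sum(count for count, _ in FRAMING)
print(n_frames, total_duration_s(FRAMING) / 60)  # 38 frames, 25.0 minutes
```

The sequence therefore comprises 38 frames over 25 minutes, with the shortest frames placed at the start of the acquisition.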

TABLE 1.

Acceptance Criteria

| Body and Brain FOV Static Acquisitions |

|

| Body FOV Dynamic Acquisition |

|

FOV, field of view; SUV, standardized uptake value.

Materials required to perform this test include:

uniform cylinder phantom (ACR phantom could be used if cold rods and spheres were removed and the “flat” lid was used)

60 mL and tuberculin syringes

timer and data forms

dose of 18F-FDG or 18F-fluoride scaled to the phantom volume

The fully assembled ACR phantom (Fig 1) has the ability to evaluate quantitative accuracy and the “hot” and “cold” contrast resolution for a given scanner. Pass/fail ACR phantom SUV acceptance criteria are shown in Table 2. Materials required to perform this test include:

ACR phantom (provided by ACRIN on loan if needed)

1000 mL bag/bottle distilled water or saline

three 60 mL and two tuberculin syringes (large-bore 18 gauge needles)

timer and SUV Analysis data forms

CQIE-ACR PET Phantom dilution worksheet

either 18F-FDG or 18F-fluoride

Figure 1.

American College of Radiology phantom.

TABLE 2.

SUV Acceptance Criteria

| Based on ACR's 2010 Pass/Fail Criteria |

|

SUV, standardized uptake value.

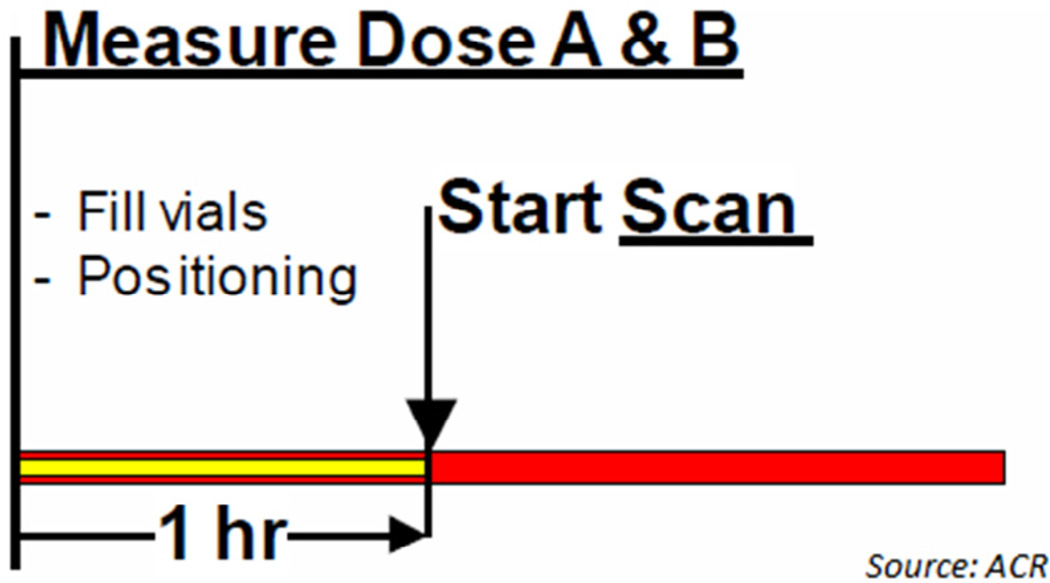

The time sequence and A and B dose measurement procedure used for ACR phantom testing are shown in Figure 2.

Figure 2.

Time sequence and dose measurement.

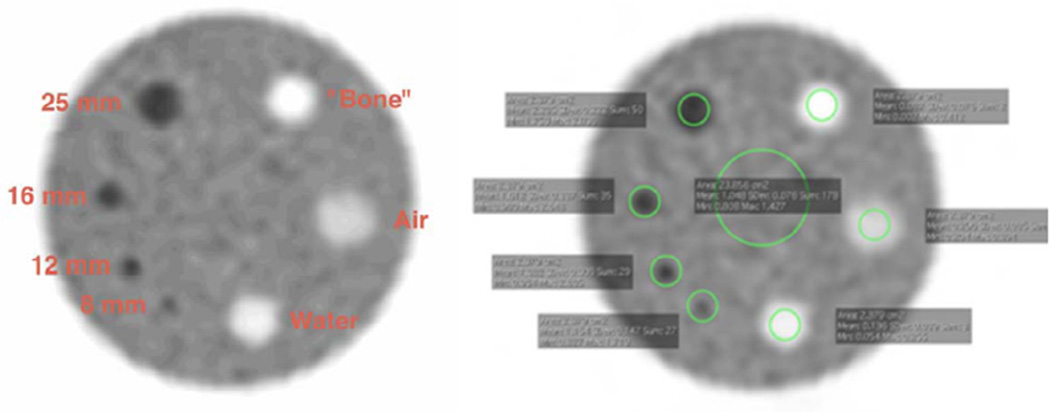

Each site performed its own SUV analysis before submitting the images to ACRIN, according to the ACR standard analysis instructions and using the locally preferred software package. The slice that best showed the four “hot” cylinders was selected, then both background and small cylinder ROIs were drawn. The mean and maximum SUV values were measured for each ROI and recorded on CQIE-ACR PET Phantom SUV Analysis data forms (one each for body and brain FOV). As part of the core laboratory analysis, we also recorded the SUV peak measurement for each of the hot cylinders (Fig 3).

Figure 3.

Screen capture of regions of interest.
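The mean and maximum ROI measurements can be illustrated with a short sketch. This is a simplified stand-in for the vendor or third-party software the sites actually used: the image is a plain list of rows of SUV values, the ROI is a circle defined in pixel units, and the function name is hypothetical. SUV peak is not covered here because the text does not specify how it was defined.

```python
def roi_stats(image, center_row, center_col, radius_px):
    """Mean and maximum of the pixels whose centers lie inside a circular ROI."""
    values = [
        value
        for r, row in enumerate(image)
        for c, value in enumerate(row)
        if (r - center_row) ** 2 + (c - center_col) ** 2 <= radius_px ** 2
    ]
    if not values:
        raise ValueError("ROI contains no pixels")
    return sum(values) / len(values), max(values)


# Toy 5 x 5 slice: uniform background of SUV 1.0 with one hot pixel.
image = [[1.0] * 5 for _ in range(5)]
image[2][2] = 3.0
mean_suv, max_suv = roi_stats(image, 2, 2, 1)
```

Because the maximum depends on a single pixel while the mean depends on ROI size and placement, the two values respond differently to noise and to how the ROI is drawn.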

Data Submission and Analysis

Upon receipt of phantom data sets and forms via sFTP, ACRIN Imaging Core Laboratory performed a submission-level QC review and informed sites of any missing qualification component that might preclude evaluation. Each submission received a Pass/Fail score based on modality-specific criteria. Sites had to pass at least one scanner for each of the imaging modalities (PET, CT, MRI) to be CQIE qualified. ACRIN worked with each site to identify any deficiencies and suggest remedies.
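The site-level rule stated above (at least one passing scanner in each of PET, CT, and MRI) can be expressed as a small sketch. The data layout and function name are assumptions made for illustration and do not describe ACRIN's actual tracking system.

```python
REQUIRED_MODALITIES = ("PET", "CT", "MRI")


def site_is_qualified(scanner_results):
    """scanner_results: iterable of (modality, passed) pairs, one per scanner."""
    passed = {modality for modality, ok in scanner_results if ok}
    return all(modality in passed for modality in REQUIRED_MODALITIES)


# Two CT scanners were submitted; only one needs to pass.
example = [("PET", True), ("CT", False), ("CT", True), ("MRI", True)]
print(site_is_qualified(example))  # True
```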

QC Routine

Sites were encouraged to incorporate CQIE-recommended QC activities into their imaging instrument QC review (Table 3). Ensuring patient safety and image quality is increasingly important as quantitative imaging end points are incorporated into multicenter trials. Sites documented the nature and frequency of QC testing in response to the ACRIN audit questionnaire used during annual review to maintain their qualification status.

TABLE 3.

CQIE-Recommended QC Activities

| Test | Purpose | Frequency |
|---|---|---|
| Physical inspection | Check gantry covers in tunnel and patient handling system. | Daily |
| Daily detector check | Test and visualize proper functioning of detector modules. | Daily |
| Blank scan | Visually inspect sinograms for apparent streaks and consistency. | Daily |
| Normalization | Determine system response to activity inside the FOV. | At least 1 × 3 months, after software upgrades and hardware service |
| Uniformity | Estimate axial uniformity across image planes by imaging a uniformly filled object. | After maintenance, new setups, normalization, and software upgrades |
| Attenuation-correction calibration | Determine calibration factor from image voxel intensity to true activity concentration. | At least 1 × 6 months, after normalization |
| Cross-calibration | Identify discrepancies between PET camera and dose calibrator. | At least 1 × 3 months, after upgrades, new setups, normalization |
| Spatial resolution | Measure spatial resolution of point source in sinogram and image space. | At least annually |
| Count rate performance | Measure count rate as a function of given activity concentration. | After new setups, normalization, recalibrations |
| Sensitivity | Measure volume response of system to a source of given activity concentration. | At least 1 × 6 months |
| Image quality | Check hot and cold spot image quality of standardized image quality phantom. | At least annually |

CQIE, Centers for Quantitative Imaging Excellence; FOV, field of view; PET, positron emission tomography; QC, quality control.

CT

Phantom Scanning

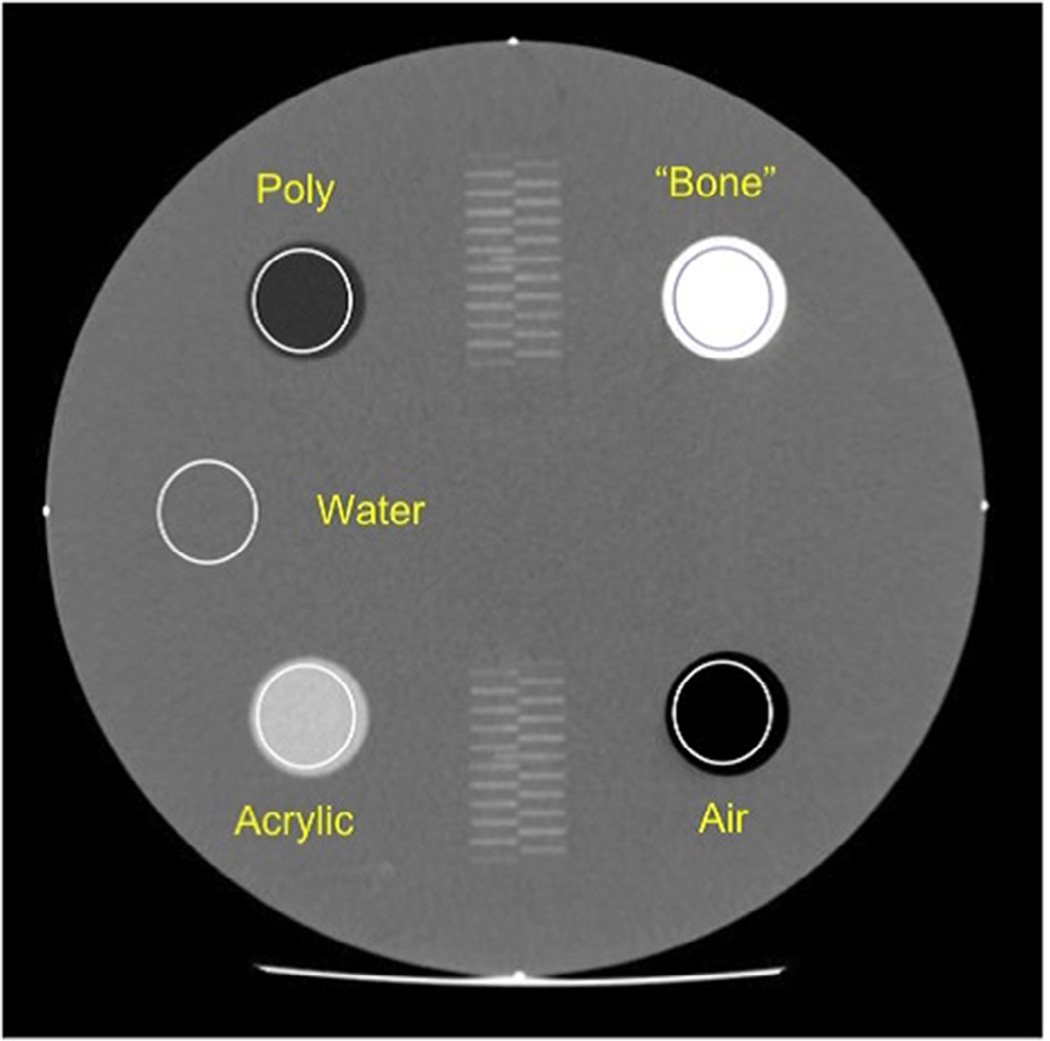

Phantom tests were performed utilizing the ACR CT Accreditation phantom (Gammex 464; Fig 4). Phantoms were provided by ACRIN to imaging centers on loan if they were not available at the site. The ACR CT Accreditation phantom is a solid phantom containing four modules and is constructed primarily from a water-equivalent material (6). Each module is 4 cm deep and 20 cm in diameter.

Figure 4.

American College of Radiology computed tomography phantom.

Phantom Module 1 assessed positioning and alignment, CT number accuracy, and slice thickness. Module 2 assessed low-contrast resolution; Module 3 assessed CT number uniformity and distance accuracy; and Module 4 assessed high-contrast (spatial) resolution. Additional materials required for phantom testing, including phantom-leveling base and CQIE CT phantom data worksheet, were also provided to the participating sites.

Each site was required to scan the ACR CT phantom using three different acquisition protocols, as shown in Tables 4 and 5: the CQIE Volumetric Chest Protocol, the Adult Volumetric Liver Protocol, and a Routine Adult Abdomen Protocol. Protocol parameters for four manufacturers (GE, Philips, Siemens, and Toshiba) were included.

TABLE 4.

Volumetric Adult Chest CT Protocol

| Parameter | GE | Philips | Siemens | Toshiba |
|---|---|---|---|---|
| Display FOV (reconstruction FOV) | 21 cm | 210 mm | 230 mm | 21 cm |
| Reconstructed slice width | 1.25 mm | 1.25 mm | 1–1.5 mm | 1–1.5 mm |
| Reconstruction algorithm | STD | B | B30f | FC10 |
| Matrix | 512 × 512 | 512 × 512 | 512 × 512 | 512 × 512 |
| Scan FOV | Small body | Small body | Small body | Small body |
| mAs | 240 ± 20 | 240 ± 20 | 240 ± 20 | 240 ± 20 |
| kVp | 120 | 120 | 120 | 120 |
| Scan mode | Axial | Axial | Axial | Axial |

CT, computed tomography; FOV, field of view.

TABLE 5.

Acquisition Parameters for Phantom Scans 2 and 3

| Scan | Protocol | Scan FOV | Display FOV | Slice Width | Recon Algorithm | Scan Mode |
|---|---|---|---|---|---|---|
| 2 | Volumetric liver | Small body (or ~25 cm) | 21–25 cm | 2.5–3 mm | Per routine clinical protocol | Helical |
| 3 | Adult abdomen | Large (or ~50 cm) | 38 cm | 5 mm | | |

FOV, field of view.

For year one, ACRIN core laboratory personnel provided guidance in phantom positioning and image acquisition during on-site testing. When feasible, on-site analysis of the imaging data was also performed—with repeat imaging undertaken when required based on scanner availability in the course of the site visit. Centers not requesting on-site visits in year one were instructed to evaluate the acquired phantom scans for pass/fail per acceptance criteria prior to submitting the images to ACRIN for analysis. During years two and three, all sites were encouraged to perform on-site analysis prior to submitting phantom images to ACRIN.

Phantom Image Analysis

Final phantom analysis for all three years was performed at the ACRIN core laboratory. Quantitative image analysis was performed using OsiriX software. In addition to slice thickness and FOV, key DICOM header fields including values representing spatial resolution, exposure, reconstruction algorithms, and pitch factors were inspected for compliance.
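A header compliance check of this kind might look like the sketch below, which tests a few of the volumetric chest protocol values from Table 4 (120 kVp, 240 ± 20 mAs, 512 × 512 matrix). It is an assumption-laden illustration and not the core laboratory's tooling: the header is represented as a plain dictionary keyed by DICOM attribute keywords (`KVP`, `Exposure`, `Rows`, `Columns`), and the function name is hypothetical.

```python
def check_chest_protocol(header):
    """Return a list of deviations; an empty list means the header complies."""
    problems = []
    if header.get("KVP") != 120:
        problems.append(f"kVp is {header.get('KVP')}, expected 120")
    mas = header.get("Exposure")  # assumed to hold the exposure in mAs
    if mas is None or abs(mas - 240) > 20:
        problems.append(f"mAs is {mas}, expected 240 +/- 20")
    if (header.get("Rows"), header.get("Columns")) != (512, 512):
        problems.append("matrix is not 512 x 512")
    return problems


compliant = {"KVP": 120, "Exposure": 250, "Rows": 512, "Columns": 512}
deviating = {"KVP": 140, "Exposure": 300, "Rows": 512, "Columns": 512}
```

Returning a list of deviations rather than a single pass/fail flag mirrors the practice described in this report of informing sites which specific criteria were not met.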

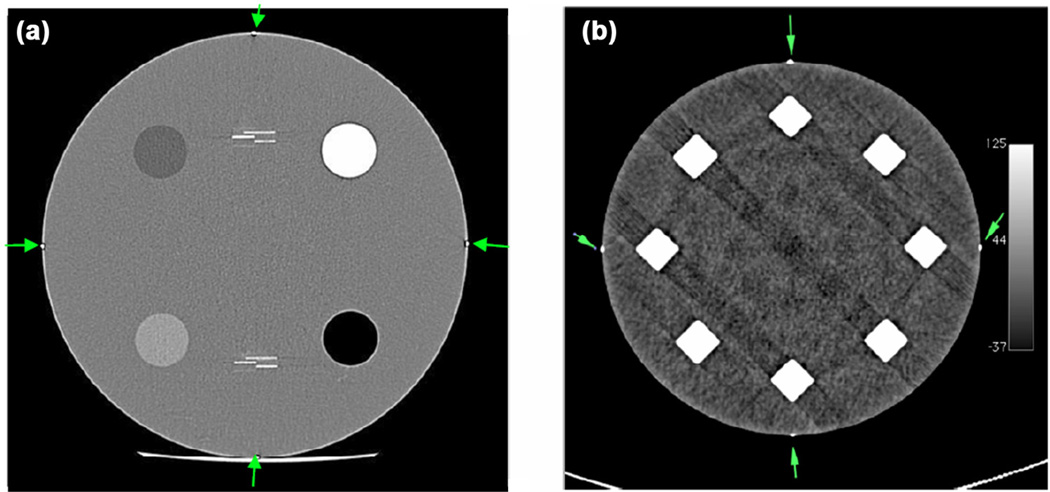

The scanner alignment test (evaluated for volumetric chest data set only) was considered “pass” if, by visual examination of the images, all four markers were visible at S0 and S120 locations as depicted in Figure 5a and b and proper headfirst supine image orientation was observed.

Figure 5.

(a) Markers visible at scan location S0. (b) Markers visible at scan location S120.

The CT number was evaluated on datasets acquired per all three protocols using Module 1. The best image from scanning Module 1 was selected (Fig 6). The mean CT number from a circular ROI was expected to fall within the range for each material (Table 6). Slice thickness accuracy was assessed by correlating the number of resolved central wires to the prescribed slice thickness as indicated in the DICOM header.

Figure 6.

Cross-sectional image of Module 1 with properly placed regions of interest.

TABLE 6.

CT Number Pass Criteria

| Material | CT Number (HU) |
|---|---|
| Bone | +850 to +970 |
| Air | −1005 to −970 |
| Acrylic | +110 to +135 |
| Water | −7 to +7 |
| Polyethylene | −107 to −87 |

CT, computed tomography; HU, Hounsfield unit.

Contrast-to-noise ratio (CNR) was evaluated for datasets acquired per the abdomen and liver protocols using Module 2 with the following pass criteria: CNR should be >1.0 for abdomen datasets, and >0.75 for liver datasets.

CT number uniformity was assessed in Module 3 consistent with ACR credentialing protocol through the use of five ROIs placed centrally and at 3, 6, 9, and 12 o’clock. The CT number difference between edge and central ROIs was expected to be less than 5 Hounsfield units (HU). Distance accuracy was also confirmed in this module by a 100 mm linear measurement between the two embedded markers.

High-contrast resolution was assessed for the abdominal and volumetric chest series in Module 4 with the 5 lp/cm pattern expected to be resolved on the abdominal series, and resolution of 6 lp/cm or greater for the chest series.
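The Module 2, 3, and 4 criteria above can be summarized in a short sketch. The thresholds come from the text; the function names are hypothetical, and the CNR definition used here (absolute difference between target and background means, divided by the background standard deviation) is an assumption about the underlying ACR method rather than something stated in this report.

```python
CNR_MINIMUM = {"abdomen": 1.0, "liver": 0.75}
MIN_LP_PER_CM = {"abdomen": 5, "chest": 6}


def contrast_to_noise(target_mean_hu, background_mean_hu, background_sd_hu):
    """Assumed CNR definition: |target - background| / background SD."""
    return abs(target_mean_hu - background_mean_hu) / background_sd_hu


def cnr_passes(protocol, cnr):
    """CNR must exceed the protocol-specific minimum."""
    return cnr > CNR_MINIMUM[protocol]


def uniformity_passes(center_hu, edge_hus, limit_hu=5.0):
    """Every edge ROI must differ from the central ROI by less than limit_hu."""
    return all(abs(edge - center_hu) < limit_hu for edge in edge_hus)


def resolution_passes(protocol, resolved_lp_per_cm):
    """The finest resolved bar pattern must meet the protocol minimum."""
    return resolved_lp_per_cm >= MIN_LP_PER_CM[protocol]
```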

Passing Criteria

Sites that successfully provided phantom image sets meeting all the required criteria on central ACRIN core laboratory analysis were deemed as CQIE CT qualified. Sites whose CT phantom analysis did not meet passing criteria in one or more areas were informed of the deficiency(ies) and were encouraged to resubmit new phantom images for re-analysis.

CQIE also required a minimum set of routine periodic QC standards to help ensure the fidelity of CT data generated over time. The types of QC testing and their recommended frequencies are identified in Table 7. To successfully complete CQIE CT requirements, sites were to attest to the prior and continued performance of these recommended ongoing CT activities. Data documenting actual compliance with these mandates were not collected.

TABLE 7.

Standardized QC Tests for CT

| Test | Minimum Frequency |
|---|---|
| Water CT number and standard deviation | Daily (technologist) |
| Artifacts | Daily (technologist) |
| Scout prescription and alignment light accuracy | Annually |
| Imaged slice thickness (slice sensitivity profile, SSP) | Annually |
| Table travel/slice positioning accuracy | Annually |
| Radiation beam width | Annually |
| High-contrast (spatial) resolution | Annually |
| Low-contrast sensitivity and resolution | Annually |
| Image uniformity and noise | Annually |
| CT number accuracy | Annually |
| Artifact evaluation | Annually |
| Dosimetry/CTDI | Annually |

CT, computed tomography; QC, quality control; CTDI, Computed Tomography Dose Index; SSP, slice sensitivity profile.

MRI

Phantom Scanning and Image Submission

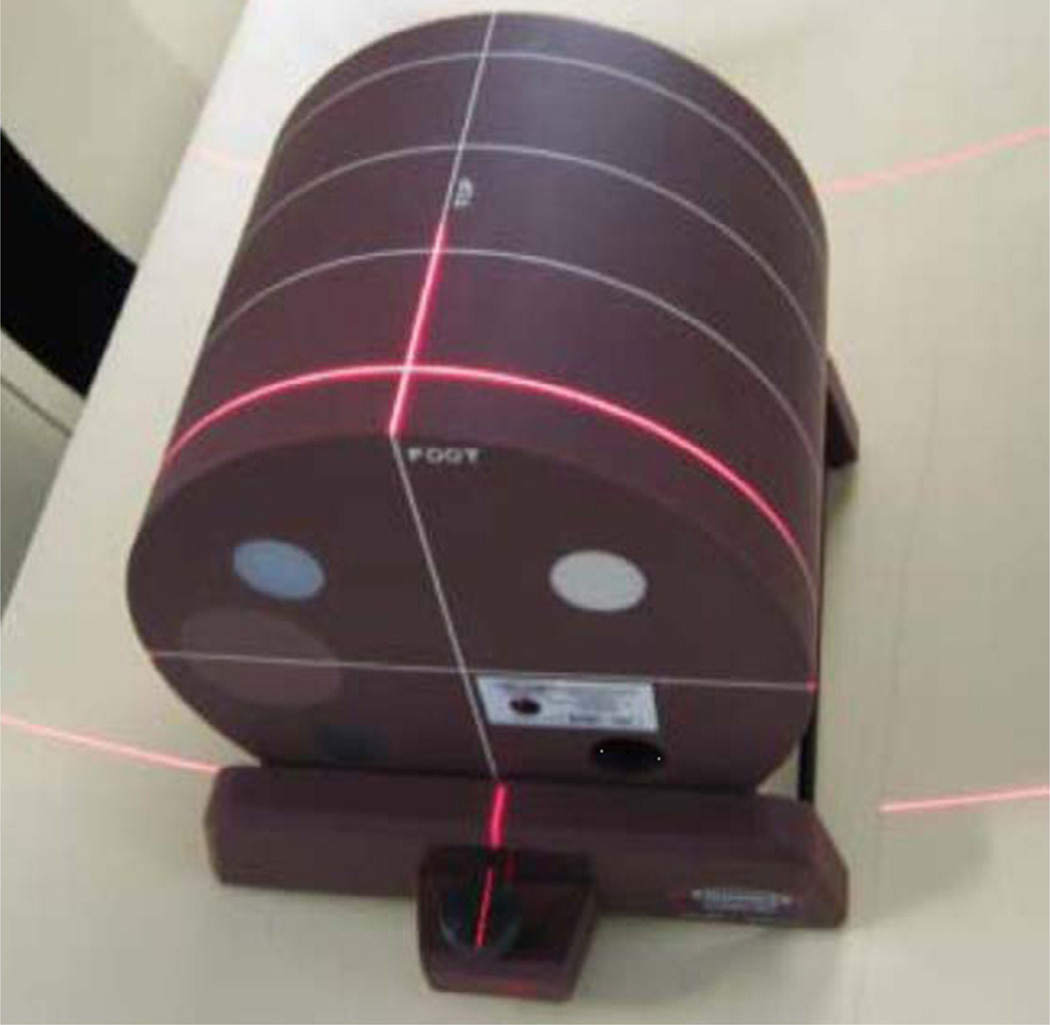

Two types of phantoms were used for CQIE MRI accreditation. The ACR MRI accreditation phantom (Fig 7) was utilized to test for basic machine performance. This phantom is constructed of acrylate plastic, glass, and silicone rubber, and is filled with 10 millimolar (mM) nickel chloride solution containing sodium chloride (75 mM). Modules within the phantom are designed to test for landmark accuracy, signal-to-noise ratio, slice offset, slice thickness, slice gap and position, geometric distortion, spatial resolution, low contrast detectability, and image uniformity (7).

Figure 7.

American College of Radiology magnetic resonance phantom.

In addition, to provide a larger range of image intensities for procedures common in DCE-MRI (e.g., R1 mapping, coil sensitivity calculations), a specific R1 phantom was developed for use in the CQIE MRI module. The R1 phantom was designed specifically by The Phantom Laboratory in conjunction with CQIE personnel. It contained vials with varying concentrations of gadolinium to simulate tissue and vascular intensities during a DCE-MRI study.

The ACR MRI phantom was made available to sites on loan each year, as necessary. The phantom was imaged using a head coil. The following phantom examinations were required.

Sequence 1: ACR protocol consisting of sagittal localizer and T1 and T2 spin echo

Sequence 2: Sagittal 3D volumetric protocol

Sequence 3: Variable flip angle (VFA) imaging/R1 mapping series (5)

Sequence 4: Dynamic volumetric multiphase imaging for DCE-MRI
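For readers unfamiliar with variable flip angle R1 mapping, the sketch below shows one common way such a series can be turned into an R1 estimate: the linearized spoiled gradient-echo fit. This report does not state which fitting method was used, so the approach, the function names, and the example TR and flip angles are all assumptions chosen for illustration.

```python
import math


def spgr_signal(m0, t1_ms, tr_ms, flip_deg):
    """Ideal spoiled gradient-echo signal (T2* decay ignored)."""
    e1 = math.exp(-tr_ms / t1_ms)
    a = math.radians(flip_deg)
    return m0 * math.sin(a) * (1 - e1) / (1 - e1 * math.cos(a))


def fit_r1_per_s(signals, flip_degs, tr_ms):
    """Estimate R1 (1/s) from the linearized VFA relation.

    S/sin(a) = E1 * S/tan(a) + M0 * (1 - E1), so the slope of a straight-line
    fit of y = S/sin(a) against x = S/tan(a) is E1 = exp(-TR/T1).
    """
    xs = [s / math.tan(math.radians(a)) for s, a in zip(signals, flip_degs)]
    ys = [s / math.sin(math.radians(a)) for s, a in zip(signals, flip_degs)]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    t1_ms = -tr_ms / math.log(slope)
    return 1000.0 / t1_ms


# Noise-free example with five flip angles (illustrative values only).
flips = [2, 5, 10, 15, 20]
signals = [spgr_signal(1000.0, 1000.0, 5.0, a) for a in flips]
r1 = fit_r1_per_s(signals, flips, tr_ms=5.0)
```

With noise-free synthetic data the fit recovers the simulated T1 of 1000 ms (R1 of 1 per second); with real phantom data, inconsistent scaling between the flip angle acquisitions would distort the fit.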

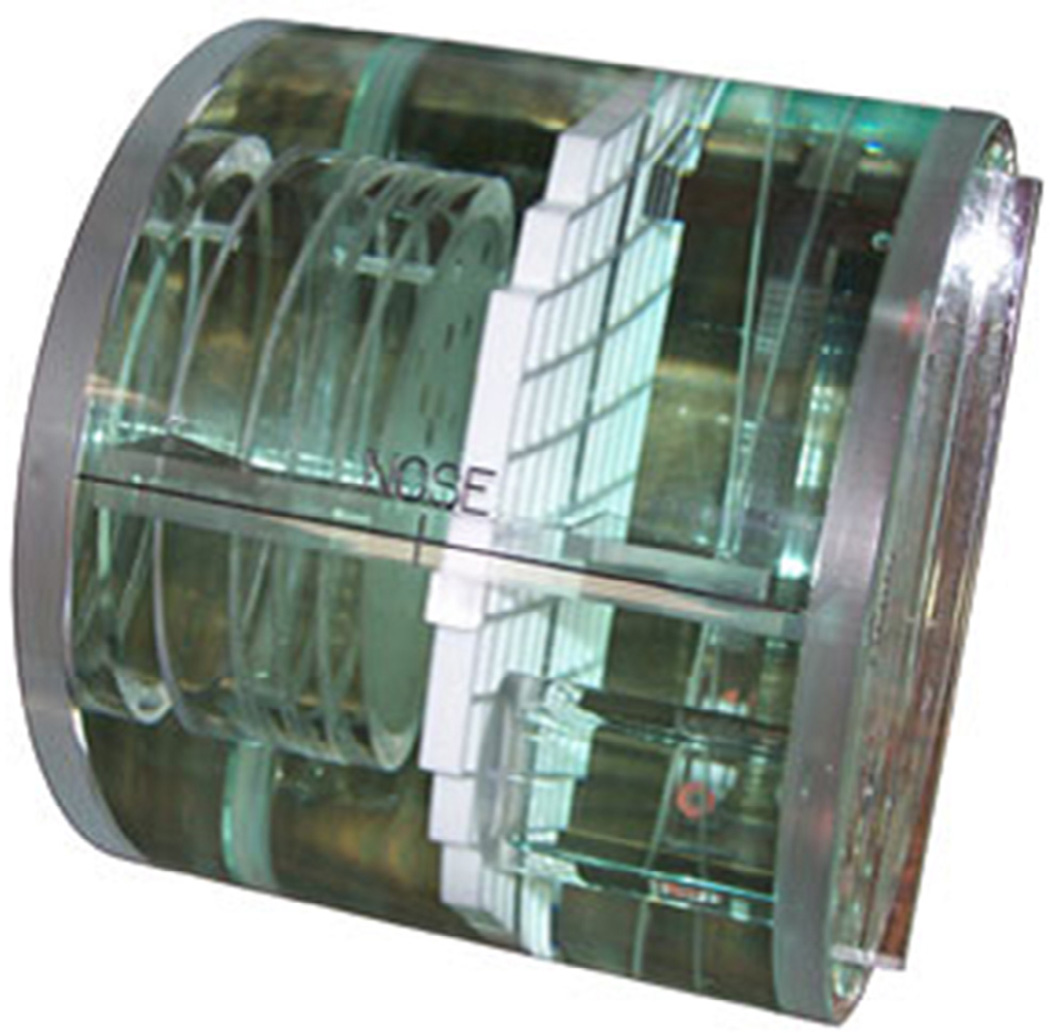

The body DCE-MRI phantom was not commercially available (Fig 8). Thus, the phantom was provided by ACRIN to all sites for baseline and annual qualification testing. The following imaging sequences were required for the DCE-MRI phantom:

Sequence 5: VFA imaging for R1 mapping (3).

Sequence 6: Coil map sensitivity profiles using both torso array and body only receive modes (2).

Sequence 7: Dynamic volumetric multiphase imaging for DCE-MRI

Figure 8.

Body dynamic contrast-enhanced magnetic resonance imaging phantom.

Sites were also required to submit a diffusion-weighted MR series of the brain from a human subject (with or without pathologic findings).

Image Analysis

For ACR MR phantom image sequence 1, data were analyzed in accordance with ACR accreditation methodology for geometric accuracy, high-contrast spatial resolution, slice thickness accuracy, slice position accuracy, image intensity uniformity, percent-signal ghosting, low-contrast object detectability, and compliance with image acquisition protocols.

For ACR phantom sequence 2, images were visually inspected for overall image quality and compliance with imaging specification through evaluation of key DICOM header values. For sequence 3, consistency between acquisitions of the five variable flip angle R1 mapping series was assessed. For the DCE-MRI sequence (sequence 4), image quality and protocol compliance were assessed, and a temporal resolution of less than 8 seconds (ideally 6 seconds or less) between imaging phases was required.
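The temporal resolution requirement can be sketched as a simple classification of the interval between consecutive dynamic phases. The thresholds are those given in the text; the function name, the labels, and the use of the longest interval as the deciding value are assumptions made for illustration.

```python
REQUIRED_MAX_S = 8.0  # temporal resolution must be less than this
IDEAL_MAX_S = 6.0     # ideal temporal resolution is this or less


def classify_temporal_resolution(phase_times_s):
    """Classify a dynamic series from the acquisition time of each phase (s)."""
    gaps = [b - a for a, b in zip(phase_times_s, phase_times_s[1:])]
    if not gaps:
        raise ValueError("need at least two phases")
    worst = max(gaps)
    if worst <= IDEAL_MAX_S:
        return "ideal"
    if worst < REQUIRED_MAX_S:
        return "acceptable"
    return "fail"
```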

The human brain diffusion weighted image (DWI) submission was qualitatively assessed. Specifically, scans were inspected for image quality and were required to be free of artifacts, demonstrate sufficient anatomical coverage, and include apparent diffusion coefficient (ADC) maps.

Passing Criteria

Sites that successfully provided phantom and human image sets meeting all the required criteria on central ACRIN core laboratory analysis were deemed as CQIE MR qualified. Sites whose MR phantom analysis did not meet passing criteria in one or more areas were informed of the deficiency(ies) and were encouraged to resubmit new phantom images for re-analysis.

Quality Control Routine

CQIE also required a minimum set of routine periodic QC standards to help ensure the fidelity of MR data generated over time. The types of QC testing and their recommended frequencies are identified in Table 8. To successfully complete CQIE MR requirements, sites were to attest to the prior and continued performance of these recommended ongoing MR activities. Data documenting actual compliance with these mandates were not collected.

TABLE 8.

Standardized QC Tests for MRI

| Test | Minimum Frequency |
|---|---|
| Center frequency | Weekly |
| Table positioning | Weekly |
| Signal to noise | Weekly |
| Artifact analysis | Weekly |
| Geometric accuracy | Weekly |
| High-contrast resolution | Weekly |
| Low-contrast resolution | Weekly |
| Magnetic field homogeneity | Quarterly |
| Slice position accuracy | Quarterly |
| Slice thickness accuracy | Quarterly |
| Radiofrequency coil checks | Annually |

MRI, magnetic resonance imaging; QC, quality control.

RESULTS

Year One

In year one, all 58 continental NCI-CCs were contacted through the outreach described previously. All 58 centers were eventually engaged to participate in year one CQIE activities. The majority of these sites opted for on-site visits by the CT, MR, or PET CQIE team. A total of 73 CT scanners, 83 MRI scanners, and 65 PET scanners were subject to CQIE testing during year one. Based on the CQIE procedures, the on-site teams provided guidance and immediate image analysis (with repeat scanning as required during the site visit), so as to approach a 100% pass rate (ie, one scanner passing for each modality). For scanners that could not be issued a passing grade during the on-site visit (due to constraints on the CQIE team’s time and/or machine access), the CQIE team continued to interact remotely with the site personnel in attempts to obtain repeat phantom imaging.

Initial (first attempt) pass rates for scanners during year one were 89% (CT), 83% (MRI), and 38% (PET). Overall, successful qualifications per scanner during the first year were 95% (CT), 89% (MRI), and 98% (PET). All 58 centers were able to obtain a site passing score (at least one scanner per modality qualified) during the year one procedures for CT and MRI. Fifty-six of the 58 centers were willing and/or capable of qualifying for PET in year one.

Year Two

In year two, sites that participated in year one were sent reminders that requalification was needed. No specific follow-up to the year two outreach was undertaken. In addition, no on-site visits were provided, and sites were not given access to funds to defray costs associated with scanner qualification. As expected, site participation decreased dramatically in year two relative to year one. Of the 58 centers participating in year one, 28 (48%) participated in year two for CT, 31 (53%) for MRI, and 33 (57%) for PET. The number of participating scanners also fell, as shown in Table 9.

TABLE 9.

Differences in Scanner Qualification from Year 1–Year 3

| Year | Modality | No. of Sites Submitted | No. of Sites Passing | Percent Sites Passing | No. of Scanners Submitted | No. of Scanners Passing (First Attempt) | Percent Scanners Passing (First Attempt) | No. of Scanners Passed | Percent Scanners Passed |
|---|---|---|---|---|---|---|---|---|---|
| Year 1 | CT | 58 | 58 | 100 | 73 | 65 | 89 | 69 | 95 |
| Year 1 | MR | 58 | 58 | 100 | 83 | 69 | 83 | 74 | 89 |
| Year 1 | PET | 56 | 56 | 100 | 65 | 25 | 38 | 64 | 98 |
| Year 2 | CT | 28 | 26 | 93 | 40 | 34 | 85 | 35 | 88 |
| Year 2 | MR | 31 | 23 | 74 | 48 | 37 | 77 | 41 | 85 |
| Year 2 | PET | 33 | 32 | 97 | 39 | 33 | 85 | 39 | 100 |
| Year 3 | CT | 53 | 44 | 83 | 82 | 66 | 80 | 66 | 80 |
| Year 3 | MR | 53 | 49 | 92 | 88 | 75 | 85 | 75 | 85 |
| Year 3 | PET | 51 | 48 | 92 | 52 | 35 | 67 | 48 | 92 |

CT, computed tomography; MR, magnetic resonance; PET, positron emission tomography.

Despite the lack of on-site support, success rates for initial (first attempt) scanner qualification remained high in year two: 34 of 40 (85%) for CT, 37 of 48 (77%) for MRI, and 33 of 39 (85%) for PET. For CT and MRI, these rates are comparable to those obtained on the first attempt during year one (89% and 83%, respectively); for PET, the rate was well above the 38% obtained in year one. Subsequent phantom submissions increased scanner qualification rates in all modalities during this time. Overall rates of site qualification by modality were 93% (CT), 74% (MRI), and 97% (PET).

Year Three

Based on the decline in participation in year two, the CQIE team increased outreach efforts in year three in an attempt to improve the rate of site participation. As shown in Table 9, these efforts resulted in substantial improvements in the rate of site participation, with a concomitant increase in the number of scanners undergoing qualification during year three. First attempt scanner passing rates in year three were 80% (CT), 85% (MRI), and 67% (PET), and remained broadly comparable to year two for CT and MRI despite the influx of sites that had declined to participate in year two CQIE activities. Relative to year two, this represented a slight decline for CT (from 85%), an increase for MRI (from 77%), and a larger decline for PET (from 85%). However, we were able to improve the PET scanner qualification rate in year three to 92% by providing feedback and encouraging phantom scan resubmission. Overall site qualification rates in year three were 83% (CT), 92% (MRI), and 92% (PET).

Data summarizing year one through year three participation and scanner qualification rates are shown in Table 9.
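The scanner-level percentages quoted in the text can be reproduced from the counts in Table 9. The sketch below is only an arithmetic check; the data layout and function names are illustrative assumptions, and rounding half up to the nearest whole percent is assumed because it matches the tabulated values.

```python
# (scanners submitted, passed on first attempt, passed overall), from Table 9.
SCANNERS = {
    1: {"CT": (73, 65, 69), "MR": (83, 69, 74), "PET": (65, 25, 64)},
    2: {"CT": (40, 34, 35), "MR": (48, 37, 41), "PET": (39, 33, 39)},
    3: {"CT": (82, 66, 66), "MR": (88, 75, 75), "PET": (52, 35, 48)},
}


def percent(part, whole):
    """Whole-number percentage, rounding half up (assumed convention)."""
    return int(100 * part / whole + 0.5)


def scanner_rates(year, modality):
    """Return (first attempt %, overall %) for one year and modality."""
    submitted, first_attempt, overall = SCANNERS[year][modality]
    return percent(first_attempt, submitted), percent(overall, submitted)


if __name__ == "__main__":
    for year, by_modality in SCANNERS.items():
        for modality in by_modality:
            first, overall = scanner_rates(year, modality)
            print(f"Year {year} {modality}: first attempt {first}%, overall {overall}%")
```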

Scanner Distribution by Manufacturer

Table 10 shows a breakdown of scanner submissions by manufacturer throughout the duration of the program. Sites were not required to submit imaging on the same scanners annually. This factor, combined with mixed participation between years one and two, resulted in a somewhat varied distribution of manufacturers year over year.

TABLE 10.

Manufacturers Represented in CQIE Testing Program

| Scanner Modality | Manufacturer | Year 1 | Year 2 | Year 3 |
|---|---|---|---|---|
| CT | GE | 24 | 23 | 34 |
| CT | Siemens | 31 | 12 | 7 |
| CT | Philips | 12 | 1 | 29 |
| CT | Toshiba | 6 | 4 | 9 |
| MRI | GE | 28 | 17 | 22 |
| MRI | Siemens | 44 | 24 | 13 |
| MRI | Philips | 11 | 7 | 52 |
| MRI | Toshiba | 0 | 0 | 1 |
| PET | GE | 36 | 21 | 28 |
| PET | Siemens | 7 | 4 | 5 |
| PET | Philips | 21 | 14 | 20 |

CQIE, Centers for Quantitative Imaging Excellence; CT, computed tomography; MRI, magnetic resonance imaging; PET, positron emission tomography.

Reasons for Scanner Qualification Failure

To better determine the source of failed scanner qualification, we tabulated reasons for failure by modality for both initial and overall phantom scan submissions for years two and three. These data are shown individually for each modality in Tables 11–13. The set of failure reasons was not recorded for year one because, for the CT and MR tests, the CQIE physicist performed cursory analysis of these acquisitions on-site as time permitted. This often resulted in an immediate repeat acquisition at sites undergoing a year one visit and therefore any collection of failure reasons was considered to be biased.

TABLE 11.

MRI Year 2 and Year 3 Phantom Study Failure Reasons on First Submission

| Series | Year 2 Area Failed | Number | Year 3 Area Failed | Number |
|---|---|---|---|---|
| ACR Phantom Imaging: ACR series, T1/T2 SE* | Low-contrast detectability | 3 | Low-contrast detectability | 1 |
| ACR Phantom Imaging: ACR series, T1/T2 SE* | Image uniformity | 3 | Image uniformity | 4 |
| ACR Phantom Imaging: ACR series, T1/T2 SE* | Position accuracy | 2 | Incomplete submission | 1 |
| ACR Phantom Imaging: ACR series, T1/T2 SE* | Acquisition compliance (slice thickness) | 1 | - | - |
| 3D Volumetric series* | Acquisition compliance (incomplete phantom coverage) | 1 | Incomplete submission | 1 |
| DCE body* | Incomplete submission | 2 | Incomplete submission | 4 |
| DCE body* | Acquisition compliance (temporal resolution) | 1 | Acquisition compliance (temporal resolution) | 2 |
| DCE body* | Artifact | 2 | - | - |
| DCE body* | Acquisition compliance (FOV) | 1 | Acquisition compliance (flip angle) | 2 |
| DCE body* | Acquisition compliance (scan duration) | 4 | - | - |
| DCE brain* | Acquisition compliance (temporal resolution) | 1 | Acquisition compliance (temporal resolution) | 1 |
| DCE brain* | Acquisition compliance (scan duration) | 2 | - | - |

3D, three-dimensional; ACR, American College of Radiology; DCE, dynamic contrast-enhanced; FOV, field of view; MRI, magnetic resonance imaging; T1, T2, relaxation times, SE, spin echo.

Some submissions had multiple issues.

TABLE 13.

PET Year 2 and Year 3 Phantom Study Failure Reasons on First Submission

| Series | Acquisition | Year 2 Reason | Year 2 # fails (protocol) | Year 3 Reason | Year 3 # fails (protocol) |
|---|---|---|---|---|---|
| Uniform Cylinder PET Phantom* | Static | SUV out of specification | 2 (brain) | SUV out of specification | 3 (brain, body) |
| Uniform Cylinder PET Phantom* | Static | Incomplete submission | 1 (body + brain) | Incomplete submission | 1 (brain, body) |
| Uniform Cylinder PET Phantom* | Static | Problem with forms | 1 (body + brain) | Uniformity problem | 2 (brain) |
| Uniform Cylinder PET Phantom* | Static | Improper acquisition | 1 (body + brain) | - | - |
| Uniform Cylinder PET Phantom* | Dynamic | SUV out of specification | 1 (body) | SUV out of specification | 5 (body) |
| Uniform Cylinder PET Phantom* | Dynamic | Reconstruction problem | 1 (body) | Reconstruction problem | 7 (body) |
| Uniform Cylinder PET Phantom* | Dynamic | Incomplete submission | 1 (body) | Incomplete submission | 1 (body) |
| Uniform Cylinder PET Phantom* | Dynamic | Improper acquisition | 1 (body) | - | - |
| Uniform Cylinder PET Phantom* | Dynamic | Problem with forms | 1 (body) | - | - |
| ACR PET Phantom* | | SUV out of specification | 1 (brain) | SUV out of specification | 2 (brain, body) |
| ACR PET Phantom* | | Incomplete submission | 1 (body, brain) | Incomplete submission | 3 (brain, body) |
| ACR PET Phantom* | | Improper acquisition | 3 (body), 2 (brain) | Phantom filling issue | 1 (body) |
| ACR PET Phantom* | | - | - | Problem with forms | 2 (body) |

ACR, American College of Radiology; PET, positron emission tomography; SUV, standardized uptake value.

Some submissions had multiple issues.

DISCUSSION

Obtaining reliable quantitative and semi-quantitative data in multicenter clinical trial settings is both a major opportunity and a challenge for imaging biomarker research. When properly performed, the scanner images should be comparable and reproducible across sites in regard to technical performance, lesion detectability, and quantitative accuracy. Standardization to ensure consistent imaging performance longitudinally across multiple imaging centers requires regular quality control procedures and adherence to specified imaging protocols at each study site.

In their most recent guidance document on imaging in clinical trials, the Food and Drug Administration provides the following statement:

With a clinical trial standard for image acquisition and interpretation, sponsors should address the features highlighted within the subsequent sections of this guidance. These features, including various aspects of data standardization, exceed those typically used in medical practice (8).

The goal of the CQIE program was to establish a resource of clinical trial-ready sites within the NCI-CCs network, capable of participating in oncologic therapeutic trials that included integral and integrated functional advanced quantitative imaging end points. The CQIE program was not designed to be a prospective study of the impact of rigorous site quality assurance and quality control (QA/QC) on image metrics or study power, etc., although a few studies have demonstrated reduction of bias and variability when site qualification and QA/QC procedures are implemented (9,10). However, we are aware of only one study showing the cost-effectiveness of the level (ie, rigor) of site qualification and QA/QC versus the impact on image metrics or study power (11).

This review has summarized the scope, extent, procedures, results, and lessons learned from the CQIE program. The procedures and criteria described previously for site qualification and QA/QC were based on the consensus opinion of imaging experts in each modality (MR, CT, PET). Where possible, existing procedures and criteria found in established accreditation and qualification specifications were used; primary sources included:

- ACR accreditation standards for MR, CT, and PET oncology imaging

- ACRIN site qualification and QA/QC procedures for imaging in oncology trials

- Quantitative Imaging Biomarkers Alliance Profiles, documents specifying achievable measurement accuracy and the standards required to meet them (12)

- Methods developed in the Quantitative Imaging Network (QIN). The NCI-sponsored QIN is at the exploratory end of the development spectrum in developing and implementing quantitative imaging methods and tools for clinical trials (13).

A primary concern during development of site qualification and QA/QC procedures for the CQIE program was feasibility. We therefore undertook this pilot program to test the feasibility of instituting basic, though rigorous, standardized quality control programs across the NCI-designated Cancer Centers network in advance of (and separate from) specific NCI clinical trials. Our goal was to demonstrate that a rigorous set of qualification procedures could be carried out in a standardized manner across an array of imaging sites in leading academic centers across the country.

Accordingly, we used different procedures in year one than in years two and three. In year one, sites were highly incentivized to participate. The CQIE program provided monetary reimbursement for personnel and scanner time. In addition, CQIE personnel made on-site visits to most centers during year one to personally familiarize imaging personnel with the program requirements and to provide expert real-time feedback on scanning procedures and QC results. Near 100% participation and compliance with the CQIE qualification standards was achieved across all three modalities during year one.

In years two and three, we sought to determine whether the experience of successful qualification in year one could be extended to a multiyear program without the benefit of monetary compensation or on-site assistance. The results of this later experience were more varied. In year two, we noted a substantial drop in the level of participation in our cohort, with site participation at roughly 50% of that of the prior year. However, in year three, we noted a substantial increase in participation, approaching that of year one in all three modalities. We attribute the decline in year two participation to “qualification fatigue,” noting that because of timeline limitations for the 4-year funding program, sites that were delayed in achieving year one qualification were not able to “reset” their year two calendar. As such, in a number of instances, sites were notified of the need for requalification for year two only several months after receiving a final year one “passing” grade.

Despite the lower level of participation in year two, passing rates (both initially and on subsequent test image submissions) remained high in years two and three (Table 9) and were broadly comparable to those seen in year one. In fact, in both years two and three, the rate of initial successful CQIE PET qualification exceeded that of year one, suggesting that sites were able to incorporate their prior year’s experience to improve the quality of their initial submissions. Specifically, the PET first attempt passing rate was 38% in year one, 85% in year two, and 67% in year three. Although we are not sure of the cause, or whether there is a single reason, we speculate that the sites that remained in year two were likely to be those that routinely participated in site qualification and QA/QC procedures. In combination with the training provided by CQIE and the qualification process itself, this selection effect would be expected to increase the passing rate. In year three, as discussed previously, there was an increased outreach effort to improve the rate of site participation. This may have recruited sites with less rigorous qualification and QA/QC procedures, thus reducing the passing rate compared to year two. However, we note that the PET passing rate was higher in year three than in year one. Because the number of sites was similar in both periods (56 vs 51), we attribute the increased passing rate to the CQIE training and the qualification process.

The first attempt passing rates and the final Percent Scanners Passed over the three periods show several patterns across modalities. The changes described previously in the PET first attempt passing rate are not replicated for CT and MR. In addition, there was a general, but not consistent, decrease in both rates over the three periods. We are not sure of the reasons, but note that for sites undergoing a year one visit for CT and MR, the CQIE team often assisted on site until the scanners were qualified. This was not the case for the year one PET studies because of the extended processing times sometimes required, which may have contributed to the differences between the PET rates and the CT and MR rates. In addition, the lack of on-site CQIE staff during the year two and year three qualifications could be expected to reduce success rates.

We also endeavored to tabulate specific reasons for qualification failure to identify areas needing more attention in future scanner qualification activities. Our data indicate that qualification failures included both technical (scanner performance) and practical (human performance) aspects of the qualification process. As noted in Tables 11–13, in all modalities failures included aspects of scanner technical performance (eg, SUV quantification, CT number accuracy, or MR contrast detectability). In other cases, qualification failure could be attributed to failure of imaging personnel to accurately prescribe imaging sequences or to complete imaging and form submission.

The tabulation across multiple years of the reasons for qualification failure (Tables 11–13) clearly indicates the need for continuing qualification on a regular basis. In other words, if a site is only qualified at the beginning of a multiyear trial, errors in data reporting and biases in measured image values will invariably occur, thus decreasing study power below what is expected. This is likely a contributor to the current concerns about reproducibility, even for randomized clinical trials (14,15). It is hard to quantitatively estimate the impact of such errors, but Doot et al. reported that, to maintain study power in an example scenario, the required sample size increased from 8 to 126 as PET measurement precision worsened from 10% to 40% (16). Because the PET component collected more quantitative information with multiple SUV measures, a more detailed analysis of the PET data is ongoing (17).
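A rough way to see why sample size grows so quickly with measurement imprecision: under a normal approximation, for a fixed effect size and power, the required sample size scales with the variance of the measurement. The sketch below applies only that scaling rule. It is not the power calculation used by Doot et al., and the function name and inputs are illustrative assumptions.

```python
import math


def scaled_sample_size(n_ref, sd_ref, sd_new):
    """Scale a reference sample size by the variance ratio (sd_new / sd_ref) ** 2.

    Assumes a normal approximation with fixed effect size, significance
    level, and power, so that n is proportional to the variance.
    """
    return math.ceil(n_ref * (sd_new / sd_ref) ** 2)


if __name__ == "__main__":
    # Precision worsening from 10% to 40% quadruples the SD, so variance is 16x.
    print(scaled_sample_size(8, 10, 40))  # prints 128
```

The scaled value of 128 is close to, but not the same as, the 126 reported in (16), which presumably reflects the details of that study's own calculation.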

These results point to areas where core laboratory and other clinical trial resources can be utilized to guide imaging centers on methods to improve their performance in imaging biomarker studies. When technical scanner performance deficiencies are detected, these can usually be corrected on-site with manufacturer assistance. When documented procedures are not followed correctly by imaging personnel, the sites and personnel can be promptly notified of these deficiencies such that procedures can be completed correctly in short order. In both cases, these experiences can be harnessed to improve the timeline for clinical trial participation in trials with advanced imaging end points.

The CQIE program is the most complex and extensive undertaking for multisite, multimodality advanced imaging qualification in the United States. Other scientific and commercial groups have undertaken multisite imaging qualification in the course of clinical trials. These include academic ventures such as the Alzheimer’s Disease Neuroimaging Initiative for MRI, and the Society of Nuclear Medicine and Molecular Imaging Clinical Trial Network (CTN) Scanner Validation Program, which works to ensure that PET/CT systems used in clinical trials operate optimally (18). To date, CTN has validated more than 230 scanners, many of which have been used in international CTN-pharma partnered trials. Data gathered by the CTN Program on the scanners themselves, as well as staff training at individual centers, have proven to be a valuable resource for many drug companies considering use of FDG-PET as an assessment tool in developing oncology and other therapeutic programs. The European Association of Nuclear Medicine launched the resEARch 4 Life (EARL) initiative in 2010 to improve nuclear medicine practice within the European Union by providing an accreditation system and calibration service to member institutions (19).

Advanced imaging has also been undertaken in CT, MRI, and PET in numerous early-phase pharmaceutical trials, with qualification programs provided by imaging-based contract research organizations (CROs). However, in many of these cases, these activities are streamlined for a more limited number of imaging centers and are geared specifically for site participation in a specific clinical trial. In contrast, the CQIE program constituted one of the larger-scale endeavors for advanced multimodality oncologic imaging qualification outside of specific clinical trials in the United States.

Although the overall goal of the CQIE program was to provide a means to streamline and standardize the imaging qualification procedures that often hamper or delay site participation in imaging biomarker trials, we did not in this exercise seek to document successful achievement of more rapid imaging qualification. And although we were able to capture anecdotal information from sites that were able to use the CQIE certificate to bypass qualification requirements in other clinical trials, we did not systematically capture this information across the entire cohort of CQIE participants. Indeed, there remain efforts within the NCI and other groups supporting clinical trials with imaging to augment the number of advanced imaging biomarker trials in the United States. To this end, the NCI has reformulated the funding of their National Clinical Trials Network (NCTN) groups, to include the formation of the Imaging and Radiation Oncology Consortium (IROC) for imaging QC efforts in these trials (20,21).

IROC has been founded as an amalgamation of preexisting core laboratories to facilitate QA and QC activities for imaging and radiation oncology within the NCTN clinical trials portfolio. Furthermore, as part of the IROC mechanism, scientific expertise and consultation early in the development of hypothesis generation serve to ensure robust imaging end points and the appropriate delivery of protocol-specified therapy.

This study has limitations. The nature of the qualification activities did not always match the evolving needs of advanced imaging in oncologic clinical trials. When the CQIE program was initiated, DCE-MRI perfusion studies of tumors were a more frequently encountered advanced imaging biomarker in early-stage clinical trials, whereas quantitative DWI for ADC quantification is now more frequently utilized. In other cases, advanced imaging techniques were included in the CQIE program, but these remain areas that are still in development and are utilized rarely in clinical trials (eg, dynamic PET imaging).

In summary, this report highlights the multiyear experience of the ACRIN core laboratory in undertaking broad cross-modality imaging site qualification in advance of oncologic imaging biomarker trials. The experiences and results from this effort provide insight into the opportunities and challenges in achieving broad levels of advanced imaging “readiness” in academic medical centers, a pressing need to help streamline the use of imaging biomarkers in oncologic therapy trials.

TABLE 12.

CT Year 2 and Year 3 Phantom Study Failure Reasons on First Submission

| Series | Year 2 Area Failed | Number | Year 3 Area Failed | Number |
|---|---|---|---|---|
| Volumetric lung* | CT no. accuracy | 1 | CT no. accuracy | 2 |
| Volumetric lung* | Incomplete submission | 2 | Acquisition compliance (FOV) | 1 |
| Volumetric lung* | Acquisition compliance (slice interval) | 1 | Acquisition compliance (slice interval) | 3 |
| Volumetric liver* | Low-contrast resolution | 3 | Low-contrast resolution | 9 |
| Volumetric liver* | Positioning accuracy/slice prescription | 1 | CT no. accuracy | 3 |
| Volumetric liver* | - | - | Acquisition compliance (FOV) | 1 |
| Abdominal* | Low-contrast resolution | 3 | Low-contrast resolution | 8 |
| Abdominal* | CT no. accuracy | 2 | CT no. accuracy | 5 |
| Abdominal* | - | - | Positioning accuracy/slice prescription | 1 |
| Abdominal* | - | - | Acquisition compliance (FOV) | 1 |

CT, computed tomography; FOV, field of view.

Some submissions had multiple issues.
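To see which failure areas dominate across series, the per-series counts can be pooled by reason. The sketch below does this for the year three CT entries of Table 12; the data layout and function name are illustrative assumptions. Because some submissions had multiple issues, the totals count failure reasons, not scanners.

```python
from collections import Counter

# (series, area failed, number) for year three, transcribed from Table 12.
CT_YEAR3_FAILURES = [
    ("Volumetric lung", "CT no. accuracy", 2),
    ("Volumetric lung", "Acquisition compliance (FOV)", 1),
    ("Volumetric lung", "Acquisition compliance (slice interval)", 3),
    ("Volumetric liver", "Low-contrast resolution", 9),
    ("Volumetric liver", "CT no. accuracy", 3),
    ("Volumetric liver", "Acquisition compliance (FOV)", 1),
    ("Abdominal", "Low-contrast resolution", 8),
    ("Abdominal", "CT no. accuracy", 5),
    ("Abdominal", "Positioning accuracy/slice prescription", 1),
    ("Abdominal", "Acquisition compliance (FOV)", 1),
]


def tally_by_reason(rows):
    """Sum failure counts per reason across all series."""
    totals = Counter()
    for _series, reason, count in rows:
        totals[reason] += count
    return totals


if __name__ == "__main__":
    for reason, count in tally_by_reason(CT_YEAR3_FAILURES).most_common():
        print(f"{count:3d}  {reason}")
```

Pooled this way, low-contrast resolution (17) and CT number accuracy (10) account for most of the 34 recorded year three CT failure reasons.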

Acknowledgments

The authors gratefully acknowledge the contributions of the following individuals for their time and effort in support of this publication: Joshua Scheuermann, Walter Witschey, Deborah Harbison, Mehdi Adineh, Janet Reddin, Sereivutha Chao, Howard Higley, Ying Tang, Linda Doody, and Lauren Uzdienski. (Funded through Leidos Biomedical Research Inc., subcontract number 10XS070 to ACRIN for the Cancer Imaging Program, DCTD, NCI. This project has been funded in part with Federal funds from the National Cancer Institute, National Institutes of Health, under NIH grants U01CA148131, U01CA190254, U10CA180820, and Contract No. HHSN261200800001E. The content of this publication does not necessarily reflect the views or policies of the Department of Health and Human Services, nor does mention of trade names, commercial products, or organizations imply endorsement by the U.S. Government.)

REFERENCES

- 1. Shankar LK. The clinical evaluation of novel imaging methods for cancer management. Nat Rev Clin Oncol. 2012;9:738–744. doi: 10.1038/nrclinonc.2012.186. Available at: http://www.ncbi.nlm.nih.gov/pubmed/23149888.

- 2. Boellaard R, Oyen WJ, Hoekstra CJ, et al. The Netherlands protocol for standardisation and quantification of FDG whole body PET studies in multicentre trials. Eur J Nucl Med Mol Imaging. 2008;35:2320–2333. doi: 10.1007/s00259-008-0874-2.

- 3. Buckler AJ, Boellaard R. Standardization of quantitative imaging: the time is right, and 18F-FDG PET/CT is a good place to start. J Nucl Med. 2011;52:171–172. doi: 10.2967/jnumed.110.081224.

- 4. Buckler AJ, Schwartz LH, Petrick N, et al. Data sets for the qualification of volumetric CT as a quantitative imaging biomarker in lung cancer. Opt Express. 2010;18:15267–15282. doi: 10.1364/OE.18.015267.

- 5. Fahey FH, Kinahan PE, Doot RK, et al. Variability in PET quantitation within a multicenter consortium. Med Phys. 2010;37:3660–3666. doi: 10.1118/1.3455705.

- 6. McCollough CH, Bruesewitz MR, McNitt-Gray MF, et al. The phantom portion of the American College of Radiology (ACR) Computed Tomography (CT) accreditation program: practical tips, artifact examples, and pitfalls to avoid. Med Phys. 2004;31:2423–2442. doi: 10.1118/1.1769632.

- 7. Clarke GD. Overview of the ACR MRI Accreditation Phantom, MRI PHANTOMS & QA TESTING, AAPM Literature. Available at: https://www.aapm.org/meetings/99AM/pdf/2728-58500.pdf.

- 8. U.S. Department of Health and Human Services, Food and Drug Administration, Center for Drug Evaluation and Research (CDER), Center for Biologics Evaluation and Research (CBER). Guidance for Industry: Standards for Clinical Trial Imaging Endpoints. Draft Guidance. 2011 Aug. Available at: http://www.fda.gov/downloads/Drugs/GuidanceComplianceRegulatoryInformation/Guidances/UCM268555.pdf.

- 9. Scheuermann JS, Saffer JR, Karp JS, et al. Qualification of PET scanners for use in multicenter cancer clinical trials: the American College of Radiology Imaging Network experience. J Nucl Med. 2009;50:1187–1193. doi: 10.2967/jnumed.108.057455.

- 10. Trembath L, Opanowski A. Clinical trials in molecular imaging: the importance of following the protocol. J Nucl Med Technol. 2011;39:63–69. doi: 10.2967/jnmt.110.083691.

- 11. Kurland BF, Doot RK, Linden HA, et al. Multicenter trials using 18F-fluorodeoxyglucose (FDG) PET to predict chemotherapy response: effects of differential measurement error and bias on power calculations for unselected and enrichment designs. Clin Trials. 2013;10:886–895. doi: 10.1177/1740774513506618.

- 12. Buckler AJ, Bresolin L, Dunnick NR, et al. A collaborative enterprise for multi-stakeholder participation in the advancement of quantitative imaging. Radiology. 2011;258:906–914. doi: 10.1148/radiol.10100799.

- 13. Yankeelov TE, Mankoff DA, Schwartz LH, et al. Quantitative imaging in cancer clinical trials. Clin Cancer Res. 2016;22:284–290. doi: 10.1158/1078-0432.CCR-14-3336.

- 14. Ioannidis JPA. Why most published research findings are false. PLoS Med. 2005;2:e124. doi: 10.1371/journal.pmed.0020124.

- 15. Macleod MR, Michie S, Roberts I, et al. Biomedical research: increasing value, reducing waste. Lancet. 2014;383:101–104. doi: 10.1016/S0140-6736(13)62329-6.

- 16. Doot RK, Kurland BF, Kinahan PE, et al. Design considerations for using PET as a response measure in single site and multicenter clinical trials. Acad Radiol. 2012;19:184–190. doi: 10.1016/j.acra.2011.10.008.

- 17. Scheuermann J, Opanowski A, Maffei J, et al. Qualification of NCI-designated comprehensive cancer centers for quantitative PET/CT imaging in clinical trials. J Nucl Med. 2013;54(suppl 2). Abstract.

- 18. Opanowski A, Kiss T. Education and scanner validation: keys to standardizing PET imaging research. SNMMI-TS Uptake Newsletter. 2013;19. Available at: http://snmmi.files.cms-plus.com/docs/Uptake-SeptOct_2013.pdf.

- 19. European Association of Nuclear Medicine (EANM). ResEARch 4Life: an EANM initiative. About EARL. Available at: http://earl.eanm.org/cms/website.php?id=/en/about_earl.htm. Accessed June 27, 2014.

- 20. Fitzgerald TJ, Bishop-Jodoin M, Followill DS, et al. Imaging and data acquisition in clinical trials for radiation therapy. Int J Radiat Oncol Biol Phys. 2016;94:404–411. doi: 10.1016/j.ijrobp.2015.10.028.

- 21. Fitzgerald TJ, Bishop-Jodoin M, Bosch WR, et al. Future vision for the quality assurance of oncology clinical trials. Front Oncol. 2013;3:31. doi: 10.3389/fonc.2013.00031.