Significance

Science is said to be suffering a reproducibility crisis caused by many biases. How common are these problems, across the wide diversity of research fields? We probed for multiple bias-related patterns in a large random sample of meta-analyses taken from all disciplines. The magnitude of these biases varied widely across fields and was on average relatively small. However, we consistently observed that small, early, highly cited studies published in peer-reviewed journals were likely to overestimate effects. We found little evidence that these biases were related to scientific productivity, and we found no difference between biases in male and female researchers. However, a scientist’s early-career status, isolation, and lack of scientific integrity might be significant risk factors for producing unreliable results.

Keywords: bias, misconduct, meta-analysis, integrity, meta-research

Abstract

Numerous biases are believed to affect the scientific literature, but their actual prevalence across disciplines is unknown. To gain a comprehensive picture of the potential imprint of bias in science, we probed for the most commonly postulated bias-related patterns and risk factors, in a large random sample of meta-analyses taken from all disciplines. The magnitude of these biases varied widely across fields and was overall relatively small. However, we consistently observed a significant risk that small, early, and highly cited studies overestimate effects and that studies not published in peer-reviewed journals underestimate them. We also found at least partial confirmation of previous evidence suggesting that US studies and early studies might report more extreme effects, although these effects were smaller and more heterogeneously distributed across meta-analyses and disciplines. Authors publishing at high rates and receiving many citations were, overall, not at greater risk of bias. However, effect sizes were likely to be overestimated by early-career researchers, those working in small or long-distance collaborations, and those responsible for scientific misconduct, supporting hypotheses that connect bias to situational factors, lack of mutual control, and individual integrity. Some of these patterns and risk factors might have modestly increased in intensity over time, particularly in the social sciences. Our findings suggest that researchers should be routinely cautious that published small, highly cited, and earlier studies may yield inflated results and that the feasibility and costs of interventions to attenuate biases in the literature might need to be discussed on a discipline-specific and topic-specific basis.

Numerous biases have been described in the literature, raising concerns for the reliability and integrity of the scientific enterprise (1–4). However, it is yet unknown to what extent bias patterns and postulated risk factors are generalizable phenomena that threaten all scientific fields in similar ways and whether studies documenting such problems are reproducible (5–7). Indeed, evidence suggests that biases may be heterogeneously distributed in the literature. The ratio of studies concluding in favor vs. against a tested hypothesis increases, moving from the physical, to the biological and to the social sciences, suggesting that research fields with higher noise-to-signal ratio and lower methodological consensus might be more exposed to positive-outcome bias (5, 8, 9). Furthermore, multiple independent studies suggested that this ratio is increasing (i.e., positive results have become more prevalent), again with differences between research areas (9–11), and that it may be higher among studies from the United States, possibly due to excessive “productivity” expectations imposed on researchers by the tenure-track system (12–14). Most of these results, however, are derived from varying, indirect proxies of positive-outcome bias that may or may not correspond to actual distortions of the literature.

Nonetheless, concerns that papers reporting false or exaggerated findings might be widespread and growing have inspired an expanding literature of research on research (aka meta-research), which points to a postulated core set of bias patterns and factors that might increase the risk for researchers to engage in bias-generating practices (15, 16).

The bias patterns most commonly discussed in the literature, which are the focus of our study, include the following:

Small-study effects: Studies that are smaller (of lower precision) might report effect sizes of larger magnitude. This phenomenon could be due to selective reporting of results or to genuine heterogeneity in study design that results in larger effects being detected by smaller studies (17).

Gray literature bias: Studies might be less likely to be published if they yielded smaller and/or statistically nonsignificant effects and might be therefore only available in PhD theses, conference proceedings, books, personal communications, and other forms of “gray” literature (1).

Decline effect: The earliest studies to report an effect might overestimate its magnitude relative to later studies, due to a decreasing field-specific publication bias over time or to differences in study design between earlier and later studies (1, 18).

Early-extreme: An alternative scenario to the decline effect might see earlier studies reporting extreme effects in any direction, because extreme and controversial findings have an early window of opportunity for publication (19).

Citation bias: The number of citations received by a study might be correlated to the magnitude of effects reported (20).

US effect: Publications from authors working in the United States might overestimate effect sizes, a difference that could be due to multiple sociological factors (14).

Industry bias: Industry sponsorship may affect the direction and magnitude of effects reported by biomedical studies (21). We generalized this hypothesis to nonbiomedical fields by predicting that studies with coauthors affiliated to private companies might be at greater risk of bias.

Among the many sociological and psychological factors that may underlie the bias patterns above, the most commonly invoked include the following:

Pressures to publish: Scientists subjected to direct or indirect pressures to publish might be more likely to exaggerate the magnitude and importance of their results to secure many high-impact publications and new grants (22, 23). One type of pressure to publish is induced by national policies that connect publication performance with career advancement and public funding to institutions.

Mutual control: Researchers working in close collaborations are able to mutually control each other’s work and might therefore be less likely to engage in questionable research practices (QRP) (24, 25). If so, risk of bias might be lower in collaborative research but, adjusting for this factor, higher in long-distance collaborations (25).

Career stage: Early-career researchers might be more likely to engage in QRP, because they are less experienced and have more to gain from taking risks (26).

Gender of scientist: Males are more likely to take risks to achieve higher status and might therefore be more likely to engage in QRP. This hypothesis was supported by statistics of the US Office of Research Integrity (27), which, however, may have multiple alternative explanations (28).

Individual integrity: Narcissism or other psychopathologies underlie misbehavior and unethical decision making and therefore might also affect individual research practices (29–31).

One can explore whether these bias patterns and postulated causes are associated with the magnitude of effect sizes reported by studies performed on a given scientific topic, as represented by individual meta-analyses. The prevalence of these phenomena across multiple meta-analyses can be analyzed with multilevel weighted regression analysis (14) or, more straightforwardly, by conducting a second-order meta-analysis on regression estimates obtained within each meta-analysis (32). Bias patterns and risk factors can thus be assessed across multiple topics within a discipline, across disciplines or larger scientific domains (social, biological, and physical sciences), and across all of science.

To gain a comprehensive picture of the potential imprint of bias in science, we collected a large sample of meta-analyses covering all areas of scientific research. We recorded the effect size reported by each primary study within each meta-analysis and assessed, using meta-regression, the extent to which a set of parameters reflecting hypothesized patterns and risk factors for bias was indeed associated with a study’s likelihood to overestimate effect sizes.

Each bias pattern and postulated risk factor listed above was turned into a testable hypothesis, with specific predictions about how the magnitude of effect sizes reported by primary studies in meta-analyses should be associated with some measurable characteristic of primary study or author (Table 1). To test these hypotheses, we searched for meta-analyses in each of the 22 mutually exclusive disciplinary categories used by the Essential Science Indicators database, a bibliometric tool that covers all areas of science and was used in previous large-scale studies of bias (5, 11, 33). These searches yielded an initial list of over 116,000 potentially relevant titles, which through successive phases of screening and exclusion yielded a final sample of 3,042 usable meta-analyses (Fig. S1). Of these, 1,910 meta-analyses used effect-size metrics that could all be converted to log-odds ratio (n = 33,355 nonduplicated primary data points), whereas the remaining 1,132 meta-analyses (n = 15,909) used a variety of other metrics, which are not readily interconvertible to log-odds ratio (Table S1). In line with previous studies, we focused our main analysis on the former subsample, which represents a relatively homogeneous population, and we included the second subsample only in robustness analyses.
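As an illustration of what converting effect-size metrics to a log-odds ratio can involve, the sketch below uses standard textbook formulas. It is a minimal example under stated assumptions, not the conversion pipeline used in the study: the function names are hypothetical, the 0.5 continuity correction is a common convention assumed here, and the standardized-mean-difference conversion relies on the logistic approximation ln(OR) = d·π/√3.

```python
import math

def log_odds_ratio_from_counts(a, b, c, d):
    """Log odds ratio and its SE from a 2x2 table.

    a, b: events and non-events in group 1; c, d: events and non-events in group 2.
    """
    # Assumed convention: add 0.5 to every cell when any cell is zero.
    if 0 in (a, b, c, d):
        a, b, c, d = (x + 0.5 for x in (a, b, c, d))
    log_or = math.log((a * d) / (b * c))
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    return log_or, se

def log_odds_ratio_from_smd(d, se_d):
    """Logistic approximation: ln(OR) = d * pi / sqrt(3); the SE scales by the same factor."""
    k = math.pi / math.sqrt(3)
    return d * k, se_d * k

def smd_from_correlation(r, se_r):
    """Standardized mean difference from a correlation: d = 2r / sqrt(1 - r^2)."""
    d = 2 * r / math.sqrt(1 - r ** 2)
    se_d = 2 * se_r / (1 - r ** 2) ** 1.5
    return d, se_d

# Hypothetical example: a correlation converted first to d, then to a log-odds ratio.
d, se_d = smd_from_correlation(0.6, 0.05)
log_or, se_log_or = log_odds_ratio_from_smd(d, se_d)
```

Metrics such as simple means or proportions have no comparable closed-form mapping, which is consistent with their being kept in a separate subsample.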

Table 1.

Summary of each bias pattern or risk factor for bias that was tested in our study, parameters used to test these hypotheses via meta-regression, predicted direction of the association of these parameters with effect size, and overall assessment of results obtained

| Hypothesis type | Hypothesis tested | Specific factor tested | Variables measured to test the hypothesis | Predicted association with effect size | Result |
|---|---|---|---|---|---|
| Postulated bias patterns | Small-study effect | | Study SE | + | S |
| | Gray literature bias | | Gray literature (any type) vs. journal article | − | S |
| | Decline effect | | Year order in MA | − | P |
| | Early extremes | | Year order in MA, regressed on absolute effect size | − | N |
| | Citation bias | | Total citations to study | + | S |
| | US effect | | Study from author in the US vs. any other country | + | P |
| | Industry bias | | Studies with authors affiliated with private industry vs. not | + | P |
| Postulated risk factors for bias | Pressures to publish | Country policies | Cash incentive | + | N |
| | | | Career incentive | + | N |
| | | | Institutional incentive | + | N |
| | | Author’s productivity | (First/last) author’s total publications, publications per year | + | N |
| | | Author’s impact | (First/last) total citations, average citations, average normalized citations, average journal impact, % top10 journals | + | N |
| | Mutual control | | Team size | − | S |
| | | | Countries/author, average distance between addresses | + | S |
| | Individual risk factors | Career level | Years in activity (first/last) author | − | S |
| | | Gender | (First/last) author’s female name | − | N |
| | | Research integrity | (First/last) author with ≥1 retraction | + | P |

Symbols indicate whether the association between factor and effect size is predicted to be positive (+) or negative (−). Conclusions as to whether results indicate that the hypothesis was fully supported (S), partially supported (P), or not supported (N) are based on main analyses as well as secondary and robustness tests, as described in the main text.

Fig. S1.

Flow diagram illustrating the stages of selection of meta-analyses to include in the study. Electronic search in the Web of Science yielded an initial list of potentially relevant titles. From this list 30,225 titles were actually screened and, in successive phases of selection, a total of 14,885 titles were identified for potential inclusion. A total of 10,485 studies were eventually excluded for failing to meet one or more of the criteria, whereas 1,457 were deemed of unclear status. Of the latter, 187 were identified too late in the process to be included, whereas multiple attempts were made to contact the authors of the 1,270 previously identified studies. The authors of 516 of these studies responded, and communications with them led to discarding 417 studies, which either did not meet our inclusion criteria or had unretrievable data. Therefore, 99 meta-analyses could be included, bringing our final sample to a total of 3,042 distinct meta-analyses. Meta-analyses in the multidisciplinary category (MU) were reclassified by hand.

Table S1.

Metrics used by the meta-analyses included in the study and their frequency in each of the 22 disciplines used for sampling

| Metric | MA | GE | CS | CH | EN | MB | BB | MI | NB | PA | EE | IM | PT | CM | AG | PP | EB | SO | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Odds ratio | 1 | 0 | 1 | 2 | 3 | 255 | 42 | 12 | 153 | 10 | 23 | 105 | 83 | 213 | 7 | 59 | 2 | 67 | 1,038 |
| Risk ratio | 0 | 0 | 0 | 0 | 0 | 14 | 21 | 15 | 42 | 10 | 29 | 39 | 59 | 173 | 30 | 26 | 1 | 40 | 499 |
| Stand.mean.diff. | 0 | 0 | 2 | 0 | 0 | 3 | 11 | 0 | 70 | 13 | 22 | 16 | 14 | 56 | 14 | 165 | 8 | 82 | 476 |
| Correlation coefficient | 0 | 1 | 6 | 0 | 1 | 0 | 3 | 0 | 16 | 23 | 12 | 2 | 1 | 10 | 2 | 95 | 39 | 42 | 253 |
| Mean difference | 0 | 0 | 0 | 0 | 0 | 3 | 17 | 0 | 27 | 7 | 4 | 11 | 18 | 80 | 27 | 7 | 1 | 16 | 218 |
| Proportion | 0 | 0 | 0 | 0 | 1 | 2 | 5 | 7 | 21 | 2 | 2 | 40 | 12 | 78 | 1 | 14 | 1 | 15 | 201 |
| Hedges’ g | 0 | 1 | 2 | 0 | 0 | 0 | 2 | 1 | 17 | 9 | 2 | 0 | 3 | 7 | 2 | 42 | 0 | 24 | 112 |
| Hazard ratio | 0 | 0 | 0 | 0 | 0 | 3 | 2 | 0 | 2 | 0 | 0 | 4 | 3 | 31 | 1 | 2 | 0 | 3 | 51 |
| Prop.diff. | 0 | 1 | 0 | 0 | 1 | 0 | 1 | 0 | 5 | 2 | 2 | 2 | 5 | 14 | 2 | 4 | 3 | 3 | 45 |
| Fisher's Z | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 2 | 2 | 8 | 0 | 0 | 1 | 0 | 8 | 0 | 1 | 24 |
| Simple mean | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 2 | 4 | 3 | 1 | 2 | 3 | 0 | 0 | 0 | 0 | 17 |
| Other | 0 | 0 | 0 | 1 | 1 | 2 | 4 | 0 | 11 | 7 | 17 | 7 | 10 | 9 | 6 | 8 | 11 | 14 | 108 |
| Total | 1 | 3 | 11 | 3 | 7 | 284 | 109 | 36 | 368 | 89 | 124 | 227 | 210 | 675 | 92 | 430 | 66 | 307 | 3,042 |

Abbreviations of column headings are defined in Methods in the main text. Stand.mean.diff, standardized mean difference; Prop.diff, proportion difference.

On each included meta-analysis, the possible effect of each relevant independent variable was measured by standard linear meta-regression. The resulting regression coefficients were then summarized in a second-order meta-analysis to obtain a generalized summary estimate of each pattern and factor across all included meta-analyses. Analyses were also repeated using an alternative method, in which primary data are standardized and analyzed with multilevel regression (see SI Multilevel Meta-Regression Analysis for further details).
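The two-stage procedure described above can be sketched as follows. This is a minimal illustration under stated assumptions rather than the study's actual code: the first stage is an inverse-variance weighted regression with a single predictor, the second stage uses the DerSimonian-Laird random-effects estimator (one common choice, assumed here), and all function names and toy data are hypothetical.

```python
import math

def meta_regression_slope(effects, ses, predictor):
    """Stage 1: inverse-variance weighted fit of effect = a + b * predictor.

    Returns the slope b and its SE, treating each study's SE as known.
    """
    w = [1.0 / s ** 2 for s in ses]
    sw = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, predictor)) / sw
    y_bar = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, predictor))
    if sxx == 0:
        # A predictor with zero variance cannot be tested in this meta-analysis.
        raise ValueError("predictor has zero variance")
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar)
              for wi, xi, yi in zip(w, predictor, effects))
    return sxy / sxx, math.sqrt(1.0 / sxx)

def second_order_summary(slopes, slope_ses):
    """Stage 2: DerSimonian-Laird random-effects pooling of per-meta-analysis slopes."""
    k = len(slopes)
    w = [1.0 / s ** 2 for s in slope_ses]
    sw = sum(w)
    fixed = sum(wi * bi for wi, bi in zip(w, slopes)) / sw
    q = sum(wi * (bi - fixed) ** 2 for wi, bi in zip(w, slopes))
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - (k - 1)) / c) if c > 0 else 0.0
    i2 = max(0.0, (q - (k - 1)) / q) * 100 if q > 0 else 0.0
    w_re = [1.0 / (s ** 2 + tau2) for s in slope_ses]
    b = sum(wi * bi for wi, bi in zip(w_re, slopes)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    # Consistency ratio tau/|b|: the smaller the ratio, the more consistent the effect.
    consistency = math.sqrt(tau2) / abs(b) if b != 0 else float("inf")
    return {"b": b, "se": se, "t": b / se, "tau2": tau2, "I2": i2,
            "consistency": consistency}

# Toy data (hypothetical): each tuple holds primary effect sizes and their SEs.
toy_meta_analyses = [
    ([0.30, 0.50, 0.90], [0.10, 0.20, 0.40]),
    ([0.10, 0.35, 0.40], [0.05, 0.15, 0.30]),
    ([0.20, 0.20, 0.60], [0.10, 0.25, 0.35]),
]
# Small-study effect: regress each study's effect size on its own SE.
per_ma = [meta_regression_slope(y, se, se) for y, se in toy_meta_analyses]
summary = second_order_summary([b for b, _ in per_ma], [s for _, s in per_ma])
```

The returned dictionary mirrors the quantities reported in the figures: the summary estimate b, its t score (b/SE), the heterogeneity I², and the consistency ratio τ/b. The sketch takes the absolute value of b in that ratio so that it stays positive for negative effects, which is a choice made here for illustration.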

Results

Bias Patterns.

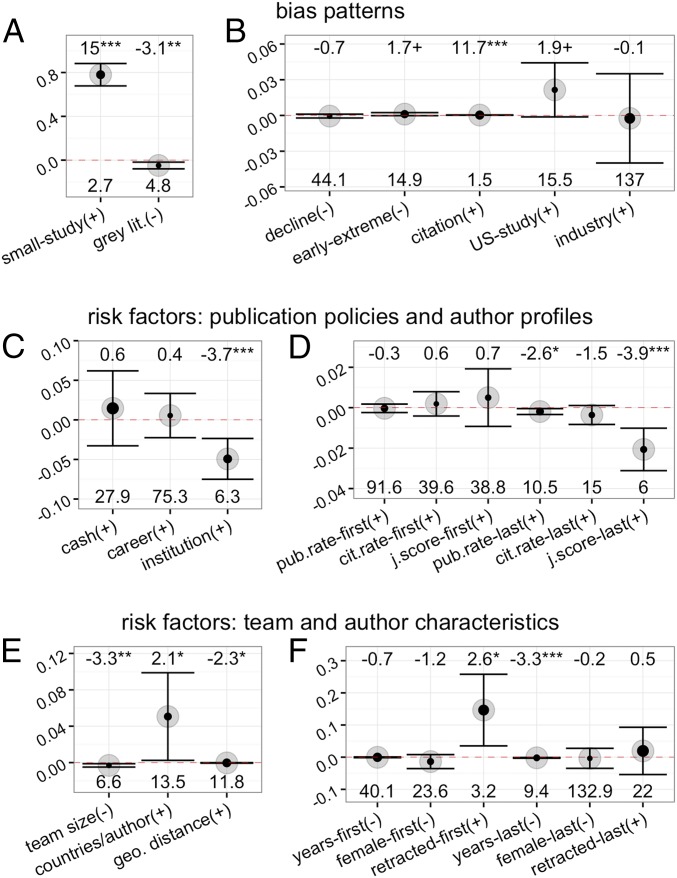

Bias patterns varied substantially in magnitude as well as direction across meta-analyses, and their distribution usually included several extreme values (Fig. S2; full numerical results in Dataset S1). Second-order meta-analysis of these regression estimates yielded highly statistically significant support for the presence of small-study effects, gray literature bias, and citation bias (Fig. 1 A and B). These patterns were consistently observed in all secondary and robustness tests, which repeated all analyses without adjusting for study precision, with standardized meta-regression estimates, and either without coining the meta-analyses or coining them with different thresholds (see Methods for details; all numerical results are in Dataset S2).

Fig. S2.

Histograms of meta-regression coefficients measured for each bias pattern and risk factor tested in the study. Symbols in parentheses indicate whether the association between factor and effect size is predicted to be positive (+) or negative (−). Numbers in parentheses indicate the number of meta-analyses that could be used in the analysis (i.e., in which the corresponding independent variable had nonzero variance). Histograms are centered on zero, marked by a vertical red bar.

Fig. 1.

(A–F) Meta-meta–regression estimates of bias patterns and bias risk factors, adjusted for study precision. Each panel shows second-order random-effects meta-analytical summaries of meta-regression estimates [i.e., b ± 95% confidence interval (CI)], measured across the sample of meta-analyses. Symbols in parentheses indicate whether the association between factor and effect size is predicted to be positive (+) or negative (−). The gray area within each circle is proportional to the percentage of total variance explained by between–meta-analysis variance (i.e., heterogeneity, measured by I²). To help visualize effect sizes and statistical significance, numbers above error bars display t scores (i.e., summary effect size divided by its corresponding SE, b/SE) and conventional significance levels (i.e., +P < 0.1, *P < 0.05, **P < 0.01, ***P < 0.001). Numbers below each error bar reflect the cross–meta-analytical consistency of effects, measured as the ratio of between–meta-analysis SD divided by summary effect size (i.e., τ/b; the smaller the ratio, the higher the consistency). A few of the tested variables were omitted from D for brevity (full numerical results for all variables are in Dataset S2). See main text and Table 1 for details of each variable.
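The mapping from a t score (b/SE) to the significance markers used in the figure can be sketched as below. This is a hedged illustration only: it assumes a standard normal approximation for the two-sided P value, which may differ from the reference distribution actually used in the study, and the function names are hypothetical.

```python
import math

def two_sided_p(t_score):
    """Two-sided P value for a t score under a standard normal approximation (assumed)."""
    return math.erfc(abs(t_score) / math.sqrt(2.0))

def significance_marker(p):
    """Markers follow the caption: + for P < 0.1, * for P < 0.05, ** for P < 0.01, *** for P < 0.001."""
    if p < 0.001:
        return "***"
    if p < 0.01:
        return "**"
    if p < 0.05:
        return "*"
    if p < 0.1:
        return "+"
    return ""

# Hypothetical summary estimate and SE, converted to a t score and a marker.
b, se = 0.12, 0.04
marker = significance_marker(two_sided_p(b / se))
```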

The decline effect, measured as a linear association between year of study publication and reported effect size, was not statistically significant in our main analysis (Fig. 1B), but was highly significant in all robustness tests. Moreover, secondary analyses conducted with the multilevel regression approach suggest that most or all of this effect might actually consist of a “first-year” effect, in which the decline is not linear and just the very earliest studies are likely to overestimate findings (see SI Multilevel Meta-Regression Analysis, Multilevel Analyses, Secondary Tests of Early Extremes, Proteus Phenomenon and Decline Effect).

The early-extreme effect was, in most robustness tests, marginally significant in the opposite direction to what was predicted, but was measured to high statistical significance in the predicted (i.e., negative) direction when not adjusted for small-study effects (Dataset S2). In other words, it appears to be true that earlier studies may report extreme effects in either direction, but this effect is mainly or solely due to the lower precision of earlier studies.

The US effect exhibited associations in the predicted direction, was marginally significant in our main analyses (Fig. 1B), and was significant in some of the robustness tests, particularly when meta-analysis coining was done more conservatively (Dataset S2; see Methods for further details).

Industry bias was absent in our main analyses (Fig. 1B) but was statistically significant when meta-analyses were coined more conservatively (Dataset S2).

Standardizing these various biases to estimate their relative importance is not straightforward, but results using different methods suggested that small-study effects are by far the most important source of potential bias in the literature. Second-order meta-analyses of standardized meta-regression estimates, for example, yield similar results to those in Fig. 1 (Dataset S2). Calculation of pseudo-R² in multilevel regression suggests that small-study effects account for around 27% of the variance of primary outcomes, whereas gray literature bias, citation bias, decline effect, industry sponsorship, and US effect, each tested as an individual predictor and not adjusted for study precision, account for only 1.2%, 0.5%, 0.4%, 0.2%, and 0.04% of the variance, respectively (see SI Multilevel Meta-Regression Analysis, Multilevel Analyses, Relative Strength of Biases for further details).

Risk Factors for Bias.

The pressure to publish hypothesis was overall negatively supported by our analyses, which included tests at the country (i.e., policy) as well as the individual level. At the country level, we found that authors working in countries that incentivize publication performance by distributing public funding to institutions (i.e., Australia, Belgium, New Zealand, Denmark, Italy, Norway, and the United Kingdom) were significantly less likely to overestimate effects. Countries in which publication incentives operate on an individual basis, for example via a tenure-track system (i.e., Germany, Spain, and the United States), and countries in which performance is rewarded with cash-bonus incentives (China, Korea, and Turkey), however, exhibited no significant difference in either direction (Fig. 1C). If country was tested separately for first and last authors, results were even more conservative, with last authors working in countries from the latter two categories being significantly less likely to overestimate results (Dataset S2). At the individual level, we again found little consistent support for our predictions. Publication and impact performance of first and last authors, measured in terms of publications per year, citations per paper, and normalized journal impact score (Fig. 1D) as well as additional related measures (Dataset S2), were either not or negatively associated with overestimation of results. The most productive and impactful last authors, in other words, were significantly less likely to report exaggerated effects (Fig. 1D).

The mutual control hypothesis was supported overall, suggesting a negative association of bias with team size and a positive one with country-to-author ratio (Fig. 1E). Geographic distance exhibited a negative association, against predictions, but this result was not observed in any robustness test, unlike the other two (Dataset S2).

The career level of authors, measured as the number of years in activity since the first publication in the Web of Science, was overall negatively associated with reported effect size, although the association was statistically significant and robust only for last authors (Fig. 1F). This finding is consistent with the hypothesis that early-career researchers would be at greater risk of reporting overestimated effects (Table 1).

Gender was inconsistently associated with reported effect size: In most robustness tests, female authors exhibited a tendency to report smaller (i.e., more conservative) effect sizes (e.g., Fig. 1F), but the only statistically significant effect detected among all robustness tests suggested the opposite, i.e., that female first authors are more likely to overestimate effects (Dataset S2).

Scientists who had one or more papers retracted were significantly more likely to report overestimated effect sizes, albeit solely in the case of first authors (Fig. 1F). This result, consistently observed across most robustness tests (Dataset S2), offers partial support to the individual integrity hypothesis (Table 1).

The between–meta-analysis heterogeneity measured for all bias patterns and risk factors was high (Fig. 1, Fig. S2, and Dataset S2), suggesting that biases are strongly dependent on contingent characteristics of each meta-analysis. The associations most consistently observed, estimated as the value of between–meta-analysis variance divided by summary effect observed, were, in decreasing order of consistency, citation bias, small-study effects, gray literature bias, and the effect of a retracted first author (Fig. 1, bottom numbers).

Differences Between Disciplines and Domains.

Part of the heterogeneity observed across meta-analyses may be accounted for at the level of discipline (Fig. S3) or domain (Fig. 2 and Fig. S4), as evidenced by the lower levels of heterogeneity and higher levels of consistency observed within some disciplines and domains. The social sciences, in particular, exhibited effects of equal or larger magnitude than the biological and the physical sciences for most of the biases (Fig. 2) and some of the risk factors (Fig. S4).

Fig. S3.

Bias patterns and bias risk factors tested in our study, partitioned by discipline (see Methods for details). Each panel reports the second-order random-effects meta-analytical summaries of meta-regression estimates (b ± 95% CI) measured across the sample of meta-analyses. The shaded portion of the area of each circle is proportional to the percentage of total variance that is explained by between–meta-analysis variance (i.e., heterogeneity, measured by I²; all numerical results are available as Supporting Information). Symbols in parentheses indicate whether the association between factor and effect size is predicted to be positive (+) or negative (−). Percentages above each panel report between-discipline heterogeneity. Meta-analyses in the “multidisciplinary” category were reclassified by hand into one of the other disciplines, and those from the physical sciences disciplines, being few in number, were combined in one category. Abbreviations are defined in Methods in the main text. CEGM, Chemistry + Engineering + Geosciences + Mathematics. To help visualize effects, numbers on the right of error bars display t scores and statistical significance levels (i.e., °P < 0.1, *P < 0.05, **P < 0.01, ***P < 0.001). Numbers on the left of each error bar reflect the cross–meta-analytical consistency of effects, measured as the ratio of between–meta-analysis variance divided by summary effect size (i.e., τ²/b; the smaller the ratio is, the higher the consistency).

Fig. 2.

Bias patterns partitioned by disciplinary domain. Each panel reports the second-order random-effects meta-analytical summaries of meta-regression estimates (b ± 95% CI) measured across the sample of meta-analyses. Symbols in parentheses indicate whether the association between factor and effect size is predicted to be positive (+) or negative (−). The gray area within each circle is proportional to the percentage of total variance explained by between–meta-analysis variance (i.e., heterogeneity, measured by I²). To help visualize effect sizes and statistical significance, numbers above error bars display t scores (i.e., summary effect size divided by its corresponding SE, b/SE) and conventional significance levels (i.e., +P < 0.1, *P < 0.05, **P < 0.01, ***P < 0.001). Numbers below each error bar reflect the cross–meta-analytical consistency of effects, measured as the ratio of between–meta-analysis SD divided by summary effect size (i.e., τ/b; the smaller the ratio is, the higher the consistency). The sample was partitioned between meta-analyses from journals classified based on discipline indicated in Thomson Reuters’ Essential Science Indicators database. Using abbreviations described in Methods, discipline classification is the following: physical sciences (P), MA, PH, CH, GE, EN, CS; social sciences (S), EB, PP, SO; and biological sciences (B), all other disciplines. See main text and Table 1 for further details.

Fig. S4.

Bias risk factors tested in our study, partitioned by disciplinary domain. Each panel reports the second-order random-effects meta-analytical summaries of meta-regression estimates (b ± 95% CI) measured across the sample of meta-analyses. Symbols in parentheses indicate whether the association between factor and effect size is predicted to be positive (+) or negative (−). The transparent portion of the area of each circle is proportional to the percentage of total variance that is explained by between–meta-analysis variance (i.e., heterogeneity, measured by I²; all numerical results are available as Supporting Information). The sample was partitioned between meta-analyses from journals in the physical (P), biological (B), and social (S) sciences, as classified by Thomson Reuters’ Essential Science Indicators database. To help visualize effects, numbers above error bars display t scores and statistical significance levels (i.e., °P < 0.1, *P < 0.05, **P < 0.01, ***P < 0.001). Numbers below each error bar reflect the cross–meta-analytical consistency of effects, measured as the ratio of between–meta-analysis variance divided by summary effect size (i.e., τ²/b; the smaller the ratio is, the higher the consistency). Using abbreviations described in Methods, discipline classification is the following: physical sciences (P), MA, PH, CH, GE, EN, CS; social sciences (S), EB, PP, SO; and biological sciences (B), all other disciplines.

Trends over Time.

We assessed whether the magnitude of bias patterns or risk factors measured within meta-analyses had changed over time, measured by year of publication of meta-analysis. Because individual-level bibliometric information might be less accurate going backwards in time, this analysis was limited to study-level parameters. Across seven tested effects, we observed a significant increase in the coefficients of collaboration distance and small-study effects (respectively, b = 0.012 ± 0.005/y, b = 0.020 ± 0.010/y; Dataset S3). Analyses partitioned by domain suggest that the social sciences, in particular, may have registered a significant worsening of small-study effects, decline effect, and social control effects (Fig. S5; see Dataset S4 for all numerical results). These trends, however, were small in magnitude and not consistently observed across robustness analyses (Dataset S4).
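A trend of this kind can be estimated by regressing the per-meta-analysis coefficients on the publication year of each meta-analysis. The sketch below is a simplified illustration, not the study's model: it weights each coefficient by 1/(SE² + τ²), and the residual heterogeneity `tau2` is supplied by the caller rather than estimated, which is an assumption made to keep the example short. The function name and data are hypothetical.

```python
import math

def trend_per_year(years, coefs, coef_ses, tau2=0.0):
    """Weighted regression of meta-regression coefficients on year of meta-analysis.

    Returns the slope (change in coefficient per year) and its SE.
    tau2 is an assumed, externally supplied between-meta-analysis variance.
    """
    w = [1.0 / (s ** 2 + tau2) for s in coef_ses]
    sw = sum(w)
    year_bar = sum(wi * yi for wi, yi in zip(w, years)) / sw
    coef_bar = sum(wi * ci for wi, ci in zip(w, coefs)) / sw
    sxx = sum(wi * (yi - year_bar) ** 2 for wi, yi in zip(w, years))
    if sxx == 0:
        raise ValueError("all meta-analyses share the same year")
    sxy = sum(wi * (yi - year_bar) * (ci - coef_bar)
              for wi, yi, ci in zip(w, years, coefs))
    return sxy / sxx, math.sqrt(1.0 / sxx)

# Hypothetical coefficients from meta-analyses published in three different years.
slope, slope_se = trend_per_year([2000, 2005, 2010], [0.00, 0.05, 0.10],
                                 [0.02, 0.02, 0.02])
```

A positive slope for a pattern predicted to be positive, such as the small-study effect, would indicate that the pattern has strengthened in more recent meta-analyses.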

Fig. S5.

Estimate of changes over time of three of the bias patterns tested (all numerical results are in Dataset S4). Circles in each panel are meta-regression estimates plotted by year of the corresponding meta-analysis. Regression lines and values in red were produced by a second-order mixed-effects meta-regression. Symbols in parentheses indicate whether the association between factor and effect size is predicted to be positive (+) or negative (−). See main text and Table 1 for further details. To help visualize trends, asterisks display conventional statistical significance levels (i.e., +P < 0.1, *P < 0.05, **P < 0.01, ***P < 0.001). Using abbreviations described in Methods, discipline classification is the following: physical sciences (P), MA, PH, CH, GE, EN, CS; social sciences (S), EB, PP, SO; and biological sciences (B), all other disciplines.

Robustness of Results.

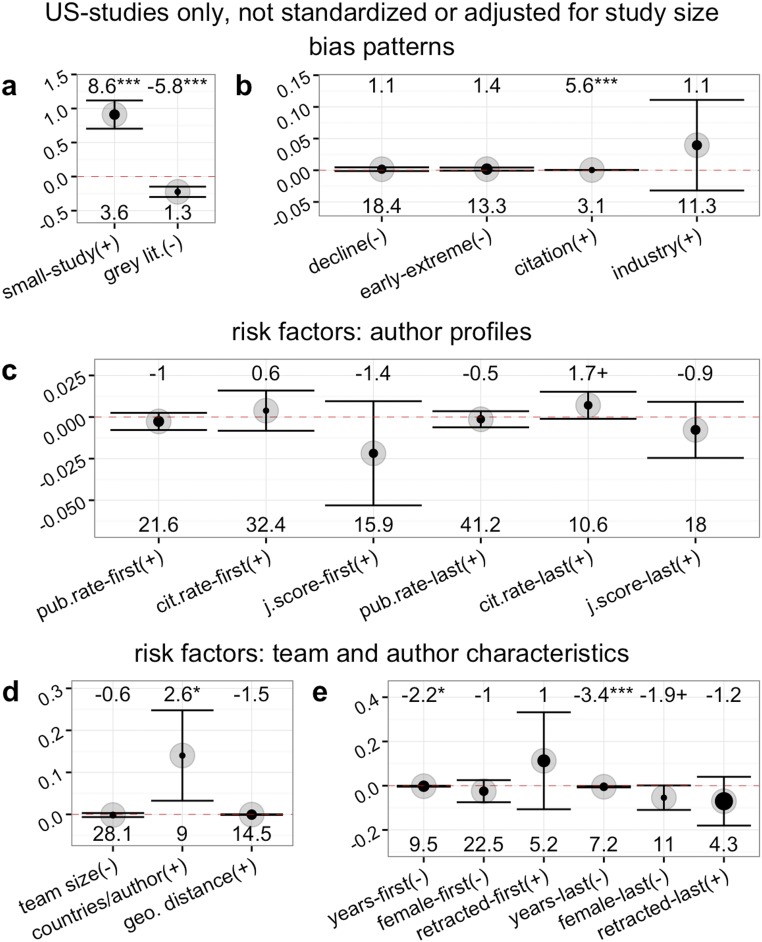

Similar results were obtained if analyses were run including the noninterconvertible meta-analyses, although doing so yields extreme levels of heterogeneity and implausibly high regression coefficients in some disciplines (Dataset S5). We also reached similar conclusions if we analyzed the sample using multilevel weighted regression analysis. This method also allowed us to test all variables in a multivariable model, which confirmed that measured bias patterns and risk factors are all independent from one another (see analyses and discussion in SI Multilevel Meta-Regression Analysis, Multilevel Analyses, Relative Independence of Biases). Country of activity of an author might represent a significant confounding factor in our analyses, particularly if country is a surrogate for the way research is done and rewarded. For example, countries with incentives to publish may also have higher research standards, on average. Moreover, the accuracy of individual-level data may be lower for authors from many developing countries that have limited presence in English-speaking journals. However, very similar results were obtained when analyses were limited to studies from authors based in the United States (Fig. S6).

Fig. S6.

Meta-meta–regression estimates of bias patterns and bias risk factors, not adjusted for study precision, limited to US studies. (A–E) The figure reports exactly the same analysis as reported in Fig. 1, with the exception of B, from which the test of the US effect, which does not apply to this sub-analysis, was removed. Details of each analysis and panel are equivalent to those reported in the main text. Each panel plots the second-order random-effects meta-analytical summaries of standardized meta-regression estimates (i.e., beta ± 95% CI), measured across the sample of meta-analyses. Symbols in parentheses indicate whether the association between factor and effect size is predicted to be positive (+) or negative (−). The gray area within each circle is proportional to the percentage of total variance explained by between–meta-analysis variance (i.e., heterogeneity, measured by I²). To help visualize effect sizes and statistical significance, numbers above error bars display t scores (i.e., summary effect size divided by its corresponding SE, b/SE) and conventional significance levels (i.e., +P < 0.1, *P < 0.05, **P < 0.01, ***P < 0.001). Numbers below each error bar reflect the cross–meta-analytical consistency of effects, measured as the ratio of between–meta-analysis SD divided by summary effect size (i.e., τ/b; the smaller the ratio is, the higher the consistency). A few of the tested variables were omitted from D for brevity (full numerical results for all variables are in Dataset S2). See main text and Table 1 for details of each variable.

Discussion

Our study asked the following question: “If we draw at random from the literature a scientific topic that has been summarized by a meta-analysis, how likely are we to encounter the bias patterns and postulated risk factors most commonly discussed, and how strong are their effects likely to be?” Our results consistently suggest that small-study effects, gray literature bias, and citation bias might be the most common and influential issues. Small-study effects, in particular, had by far the largest magnitude, suggesting that these are the most important source of bias in meta-analysis, which may be the consequence either of selective reporting of results or of genuine differences in study design between small and large studies. Furthermore, we found consistent support for common speculations that, independent of small-study effects, bias is more likely among early-career researchers, those working in small or long-distance collaborations, and those that might be involved with scientific misconduct.

Our results are based on very conservative methodological choices (SI Limitations of Results), which allow us to draw several conclusions. First, there is a good match between the focus of meta-research literature and the actual prevalence of different biases. Small-study effects, whether due to the file-drawer problem or to heterogeneity in study design, are the most widely understood and studied problem in meta-analysis (1). Gray literature bias, which partially overlaps but is distinct from the file-drawer problem, is also widely studied and discussed, and so is citation bias (1, 34). Moreover, we found at least partial support for all other bias patterns tested, which confirms that these biases may also represent significant phenomena. However, these biases appeared less consistently across meta-analyses, suggesting that they emerge in more localized disciplines or fields.

Second, our study allows us to get a sense of the relative magnitude of bias patterns across all of science. Due to the limitations discussed above, our study is likely to have underestimated the magnitude of all effects measured. Nonetheless, the effects measured for most biases are relatively small (they accounted for 1.2% or less of the variance in reported effect sizes). This suggests that most of these bias patterns may induce highly significant distortions within specific fields and meta-analyses, but do not invalidate the scientific enterprise as a whole. Small-study effects were an exception, explaining as much as 27% of the variance in effect sizes. Nevertheless, meta-regression estimates of small-study effects on odds ratio may run a modest risk of type I error (35) and were measured by our study more completely and accurately than the other biases (SI Multilevel Meta-Regression Analysis, Reliability and Consistency of Collected Data). Therefore, whereas all other biases in our study were measured conservatively, small-study effects are unlikely to be more substantive than what our results suggest. Moreover, small-study effects may result not necessarily from QRP but also from legitimate choices made in designing small studies (17, 36). Choices in study design aimed at maximizing the detection of an effect might be justified in some discovery-oriented research contexts (37).

Third, the literature on scientific integrity, unlike that on bias, is only partially aiming at the right target. Our results supported the hypothesis that early-career researchers might be at higher risk from bias, largely in line with previous results on retractions and corrections (16) and with predictions of mathematical models (26). The reasons why early-career researchers are at greater risk of bias remain to be understood. Our results also suggest that there is a connection between bias and retractions, offering some support to a responsible conduct of research program that assumes negligence, QRP, and misconduct to be connected phenomena that may be addressed by common interventions (38). Finally, our results also support the notion that mutual control between team members might protect a study from bias. The team characteristics that we measured are very indirect proxies of mutual control. However, in a previous study these proxies yielded similar results on retractions and corrections (16), which were also significantly predicted by sociological hypotheses about the academic culture of different countries (a hypothesis that this study was not designed to test). Therefore, our findings support the view that a culture of openness and communication, whether fostered at the institutional or the team level, might represent a beneficial influence.

Even though several hypotheses taken from the research integrity literature were supported by our results, the risk factors that were not supported included phenomena that feature prominently in such literature. In particular, the notion that pressures to publish have a direct effect on bias was not supported and even contrary evidence was seen: The most prolific researchers and those working in countries where pressures are supposedly higher were significantly less likely to publish overestimated results, suggesting that researchers who publish more may indeed be better scientists and thus report their results more completely and accurately. A previous study testing the risk of producing retracted and corrected articles, with the latter assumed to represent a proxy of integrity, had similarly falsified the pressures to publish hypothesis as conceptualized here (16), and so did historical trends of individual publication rates (39). Therefore, accumulating evidence offers little support for the dominant speculation that pressures to publish force scientists to publish excessive numbers of articles and seek high impact at all costs (40–42). A link between pressures to publish and questionable research practices cannot be excluded, but is likely to be modulated by characteristics of study and authors, including the complexity of methodologies, the career stage of individuals, and the size and distance of collaborations (14, 39, 43). The latter two factors, currently overlooked by research integrity experts, might actually be growing in importance, at least in the social sciences (Fig. S5).

In a previous, smaller study, two of us documented the US effect (14). We did measure again a small US effect but this may not be easy to explain simply by pressures to publish, as previously speculated. Future testable hypotheses to explain the US effect include a greater likelihood of US researchers to engage in long-distance collaborations and a greater reliance on early-career researchers as first authors.

Other general conclusions of previous studies are at least partially supported. Systematic differences in the risk of bias between physical, biological, and social sciences were observed, particularly for the most prominent biases, as was expected based on previous evidence (5, 11, 33). However, it is not known whether the disciplinary and domain differences documented in this study are the result of different research practices in primary studies (e.g., higher publication bias in some disciplines) or whether they result from differences in methodological choices made by meta-analysts of different disciplines (e.g., lower inclusion of gray literature). Similarly, whereas our results support previous observations that bias may have increased in recent decades, especially in the social sciences (11), future research will need to determine whether and to what extent these trends might reflect changes in meta-analytical methods, rather than an actual worsening of research practices.

In conclusion, our analysis offered a “bird’s-eye view” of bias in science. It is likely that more complex, fine-grained analyses targeted to specific research fields will be able to detect stronger signals of bias and its causes. However, such results would be hard to generalize and compare across disciplines, which was the main objective of this study. Our results should reassure scientists that the scientific enterprise is not in jeopardy, that our understanding of bias in science is improving, and that efforts to improve scientific reliability are addressing the right priorities. However, our results also suggest that feasibility and costs of interventions to attenuate distortions in the literature might need to be discussed on a discipline- and topic-specific basis and adapted to the specific conditions of individual fields. Besides a general recommendation to interpret with caution results of small, highly cited, and early studies, there may be no one-size-fits-all solution that can rid science efficiently of even the most common forms of bias.

Methods

During December 2013, we searched Thomson Reuters’ Web of Science database, using the string (“meta-analy*” OR “metaanaly*” OR “meta analy*”) as topic and restricting the search to document types “article” or “review.” Sampling was randomized and stratified by scientific discipline, by restricting each search to the specification of journal names included in each of the 22 disciplinary categories used by Thomson Reuters’ Essential Science Indicators database. These disciplines and the abbreviations used in Fig. 2 and Figs. S1 and S3 are the following: AG, agricultural sciences; BB, biology and biochemistry; CH, chemistry; CM, clinical medicine; CS, computer science; EB, economics and business; EE, environment/ecology; EN, engineering; GE, geosciences; IM, immunology; MA, mathematics; MB, molecular biology and genetics; MI, microbiology; MS, materials science; MU, multidisciplinary; NB, neuroscience and behavior; PA, plant and animal sciences; PH, physics; PP, psychiatry/psychology; PT, pharmacology and toxicology; SO, social sciences, general; and SP, space sciences. Studies retrieved from MU were later reclassified based on topic.

In successive phases of selection, meta-analyses were screened for potential inclusion (Fig. S1), based on the following inclusion criteria: (i) tested a specified empirical question, not a methodological one; (ii) sought to answer such question based on results of primary studies that had pursued the same or a very similar question; (iii) identified primary studies via a dedicated literature search and selection; (iv) produced a formal meta-analysis, i.e., a weighted summary of individual outcomes of primary studies; and (v) the meta-analysis included at least five independent primary studies (SI Methods for details).

For each primary study in each included meta-analysis we recorded reported effect size and measure of precision provided (i.e., confidence interval, SE, or N) and we retrieved available bibliometric information, using multiple techniques and databases and attempting to complete missing data with hand searches. Further details of each parameter collected and how variables tested in the study were derived are provided in SI Methods.

Each dataset was standardized following previously established protocols (14). Moreover, we inverted the sign of (i.e., multiplied by −1) all primary studies within meta-analyses whose pooled summary estimates were smaller than zero, a process known as “coining.” All analyses presented in the main text refer to meta-analyses coined with a threshold of zero, whereas results obtained with uncoined data and data with a more conservative coining threshold are reported in Datasets S1–S5.

Each individual bias or risk factor was first tested within each meta-analysis, using simple meta-regression. The meta-regression slopes thus obtained were then summarized by a second-order meta-analysis, weighting by the inverse square of their respective SEs, assuming random variance of effects across meta-analyses.

We also analyzed data using weighted multilevel regression, a method that yields similar but more conservative estimates (30). A complete discussion of this method and all results obtained with it can be found in Supporting Information.

SI Methods

During December 2013, we searched Thomson Reuters’ Web of Science database, using the string (“meta-analy*” OR “metaanaly*” OR “meta analy*”) as topic (which captures terms used anywhere in the database record, including title, abstract, keywords, and expanded keywords), restricting the search to document types “article” or “review.” This search strategy was identified as the most efficient way to retrieve suitable and usable meta-analyses. Sampling was stratified by scientific discipline, by restricting each search to the specification of journal names included in each of the 22 disciplinary categories used by Thomson Reuters’ Essential Science Indicators database. These disciplines and the abbreviations used throughout the text and figures are defined in Methods in the main text.

All potentially relevant records were retrieved for each discipline and their order was randomized so that subsequent phases of inspection and inclusion would yield randomized samples. From each discipline, an initial list of potentially relevant records was retrieved based on title and abstract. The pdf file for all these records was retrieved. When the pdf was not available, attempts were made to contact the author of the meta-analysis to obtain it. All retrieved pdfs were then inspected for final inclusion or exclusion.

To be included in the final sample, meta-analyses needed to meet the following criteria:

i) Tested a specified empirical question, not a methodological one. Meta-studies that, for example, report statistics on methodological characteristics of previous studies were excluded.

ii) Sought to answer such question based on results of primary studies that had pursued the same or a very similar question. This criterion was necessary because, if primary studies had been conceived for completely different hypotheses from that of the meta-analysis, then there is no reason to suppose that the effects extracted from them would be biased with respect to the meta-analysis’ hypothesis. To this end, we excluded meta-studies that used data from studies designed for a completely different research question (for example, a study that pools the weight of a farm animal breed by taking values of weight from any previous study done on that breed). We also excluded meta-analyses whose primary objective was to test a meta-question using meta-regression (for example, a meta-analysis that assesses the sensitivity of various species to habitat degradation by comparing ecological surveys that were conducted in different habitats for purposes other than measuring the effects of habitat degradation).

iii) Identified primary studies via a dedicated literature search and selection. Meta-analyses that reanalyzed data sets compiled by previous meta-analyses were excluded.

iv) Produced a formal meta-analysis, i.e., a weighted summary of individual outcomes of primary studies. Studies that had only produced a systematic review (e.g., presented tabulated results but had not pooled results in a summary estimate) and studies that adopted semiquantitative methods, such as vote counting, were excluded. We excluded studies that, instead of summarizing the outcomes of different experiments, had recompiled a single dataset from primary sources, and thus do not report primary study-level data and statistics. We also excluded genome-wide association studies (GWAS) and meta-analyses of neuroimaging data (e.g., fMRI) because their methods and outcomes are too different to be effectively compared with those of standard meta-analyses.

v) The meta-analysis included at least five independent primary studies. When studies had conducted multiple meta-analyses or had partitioned the meta-analysis into different subgroups, with no attempt at an overall summary, then we selected the submeta-analysis that had the largest number of independent primary studies.

Whenever in doubt about the inclusion or exclusion status of a paper or whenever the pdf and supporting information did not include the meta-analysis’s primary data, attempts were made to contact the author of the meta-analysis by email, to ask for clarifications and/or data. A first attempt was made to contact all authors, using an email with standard text but that was personalized by specifying the name of the author and the title of the meta-analysis of interest. All responses were then acknowledged and dealt with by direct email exchanges with D.F. Authors who did not reply the first time were sent a reminder 1 wk later, followed by another reminder 4 wk later. When no response was received, the study was excluded.

Fig. S1 reports a summary flow diagram of the selection process as it occurred in each discipline. All of the potentially relevant titles were inspected for 17 of the 22 disciplines. In the remaining 5 disciplines, a random sample of titles was inspected instead.

The initial selection of potentially relevant titles was conducted by D.F. Then pdfs were downloaded by an assistant (Annaelle Winand) who operated a first screening, excluding all titles that were obviously not systematic reviews or meta-analyses. All decisions about inclusion and exclusion of the remaining pdfs were made by D.F.

Data Extraction.

For each primary study in each included meta-analysis we recorded the study’s identification number, its reported effect size, and the measure of precision provided (i.e., confidence interval, SE, or N). In most cases, primary data were available directly in numerical form, either within the meta-analysis’s forest plot or in tables. When the meta-analysis presented data exclusively in graphic form (i.e., forest plot with no numbers), primary data were extracted using plot-digitizing software. The nature of the metric used by the meta-analysis was also recorded.

When the meta-analysis did not provide primary data in any form (and/or when the inclusion/exclusion status of the meta-analysis was in doubt), multiple attempts were made to contact the authors of the meta-analysis.

All primary data were extracted by a team of research assistants (i.e., Sophy Ouch, Sonia Jubinville, Mélanie Vanel, Fréderic Normandin, Annaelle Winand, Sébastien Richer, Felix Langevin, Hugo Vaillancourt, Asia Krupa, Julie Wong, Gabe Lewis, and Aditya Kumar) and subsequently inspected, corrected, and completed by D.F.

Study and Author Characteristics.

Sources of bibliometric data.

For each primary datum reported in each meta-analysis we searched and retrieved available bibliometric information, using multiple techniques and databases. Each reference was searched in the Web of Science Core Collection (WOSCC) using, whenever possible, links that are provided automatically by the WOSCC to all cited references of a text (in our case, of the meta-analyses). If the primary study was present in the WOSCC database, its entire bibliometric record was downloaded, as well as the bibliometric profile of all its authors (described below). When the study was not available in the WOSCC database, its entire bibliographic reference, as reported in the meta-analysis’s publication, was recorded instead. Studies that were cited in meta-analyses as being unpublished were recorded as such. All meta-analyses had been obtained from the WOSCC, and therefore all had a corresponding bibliometric record that was also retrieved.

To ensure maximum completeness of information, records that did not appear to be accessible via direct link from the meta-analysis were searched again by hand in the WOSCC, using looser criteria (e.g., using key words in the title and/or authors); when such search was unsuccessful, author names and key words in the study's title were searched in the entire Web of Science (which includes multiple alternative databases: BIOSIS Citation Index, CABI: CAB Abstracts and Global Health, Current Contents Connect, Data Citation Index, Derwent Innovations Index, Inspec, KCI-Korean Journal Database, MEDLINE, Russian Science Citation Index, SciELO Citation Index, Zoological Record) and, if still unsuccessful, in Scopus. The corresponding bibliometric records, when available, were recorded.

Moreover, for all records for which bibliometric data were not available, we searched the name of the first author to identify, in the WOSCC, an alternative paper from which author bibliometric information could be extracted (these parameters are described below).

All primary study characteristics were recorded and searched multiple times by different research assistants, and results were inspected, corrected, and completed by D.F. Further details of each parameter collected and how variables tested in the study were derived are provided in Supporting Information.

For studies for which no identifier was available, as much information as possible was extracted from the bibliographic reference available in the meta-analysis. Information available in these bibliographic references usually included year of publication, number of authors, and name and type of source (i.e., book, thesis, unpublished data, conference proceeding, journal, personal communication, etc.).

Individual author characteristics.

The complete bibliometric profile of all authors of all studies for which a WOSCC record was available (including authors for whom an alternative record had been retrieved, described above) was obtained using a disambiguation algorithm (44). This algorithm clusters papers recorded in the database around individual author names, using decision rules that weight multiple items of information available in the database records (e.g., first name, email, affiliation, etc.). Depending on the level of information available, the precision of this algorithm varies between 94.4% and 100% (16). From each corpus associated with each author in our sample, we calculated the following bibliometric parameters:

First publication year: Year of the first publication of each author (for journals covered by WOS). This information was used to estimate the career level of the author (parameter below).

Last publication year: Year of the last publication of each author (for journals covered by WOS).

Number of papers: Total number of publications (article, review, and letter) counted up to the year 2014.

Total citations: Total number of citations (excluding author self-citations) for all of the publications, considering citations received up to the year 2014.

Average citations: Mean citation score of the publications calculated as the ratio of total citations to total number of publications.

Average normalized citations: Mean field-normalized citation score of the publications. This is calculated by dividing each paper’s citations by the mean number of citations received by papers in the same WOS Subject Categories and year and then averaging these normalized scores across all papers published by the author.

Average journal impact: Mean field-normalized citation score of all journals in which authors have published. This measure would be conceptually similar to taking the average Journal Impact Factor of an author but, unlike the latter, it is not restricted to a 2-y time window and is normalized by field.

Proportion of top 10 journals: Proportion of publications of an author that belong to the top 10% most cited papers of their WOS Subject Categories.

Country of author: Country was attributed based on the linkages between authors and affiliations recorded in all their papers available in the WOS. Because not all records have affiliation information, and because authors might report different affiliations throughout their careers, country was attributed based on a majority rule, taking into account all of the countries associated with the author in all of the author's publications. The country that we indicate for an author, in other words, corresponds to the best estimate of the place of most frequent activity of the author.

Full first name: This could be obtained by having at least one paper in the author’s corpus that reported his/her complete name.

Number of retracted publications coauthored by the author.
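Two of the author-level parameters above lend themselves to a brief illustration. The following is a minimal sketch, not the procedure actually run on the WOS data: the function names, the input layout (one country entry per paper, `None` when no affiliation is recorded), and the tie-breaking behavior are all assumptions made for the example.

```python
from collections import Counter
from statistics import mean


def majority_country(affiliation_countries):
    """Return the country most often associated with an author, or None.

    `affiliation_countries` holds one entry per paper; None marks a record
    with no affiliation information and is ignored. Ties are resolved by
    first appearance, which is an arbitrary (hypothetical) choice.
    """
    known = [c for c in affiliation_countries if c is not None]
    if not known:
        return None
    return Counter(known).most_common(1)[0][0]


def average_normalized_citations(papers, field_year_means):
    """Mean of each paper's citations divided by its field-and-year mean.

    `papers` is a list of dicts with keys "citations", "field", "year";
    `field_year_means` maps (field, year) to the mean citation count of
    papers in that subject category and year (hypothetical layout).
    """
    scores = [
        p["citations"] / field_year_means[(p["field"], p["year"])]
        for p in papers
    ]
    return mean(scores)
```

For instance, an author recorded in the United States on two papers and in Canada on one would be attributed to the United States under this majority rule.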

Based on the sources of information above, we derived the following independent variables:

Gray literature vs. journal article: Any record that could be unambiguously attributed to sources other than a peer-reviewed journal article was classified as “gray literature.” This category includes all personal communications, unpublished material, working papers, conference presentations, graduation thesis (any degree), book sources, reports, and patents. Whenever in doubt, the record was classified as journal article, to ensure a maximally conservative analysis.

Year of publication within meta-analysis: This corresponds to the publication year, rescaled to the oldest year within each meta-analysis. For gray literature and other records lacking bibliometric information, year was derived from manual search or indirect sources (e.g., from the bibliographic reference provided in the meta-analysis).

Citations received by each study.

US study vs. not: Any study or author that could be unambiguously attributed to the United States as opposed to any other country. Country attribution followed a priority rule: It was based on corresponding address (or any other reliable geographic information pertaining to a study) whenever this was available, and secondarily it was based on the country ascribed to the first author and, when this was also unavailable, to the country of last author.

Industry collaboration vs. other: Any study in which one or more authors indicated, as one of his/her affiliations, a private sector organization. This attribution is based on computerized routines that identify the private-organization status of an affiliation based on indicative acronyms or details in the reported addresses (e.g., “inc.,” “ltd.,” etc.) (45). Because not all industry partnerships may be formally indicated (or identifiable) in the addresses reported in WOS records, this variable yields a conservative estimate. Nonetheless, it is a more reliable indicator than sponsorship status indicated in acknowledgements, the proxy normally used to assess industry influence, because sponsorship information in acknowledgment sections is available only since 2009 and may not reliably reflect industry influence, given that sponsors are usually required to play no role in study design and execution.

Publication policy of country of author: This was based on country of study, attributed following the same priority rule used for the US-study variable described above. In secondary analyses, we also tested this parameter, distinguishing countries attributed as first and last author. Policy classification was based on categories proposed by previous independent literature (16).

Author publication rate: Total number of papers divided by total number of years of publication activity (author’s last publication year minus author’s first publication year plus one).

Author total number of papers, total citations, average citations, average normalized citations, average journal impact, and proportion of top 10 journals, as described above.

Team size: Number of authors, as described above. When bibliometric data were missing, number of authors was extracted from the author list, when available, or excluded in all other cases.

Country-to-author ratio: Number of countries included in corresponding addresses list, divided by total number of authors.

Average distance between author addresses, expressed in hundreds of kilometers: Geographic distance was calculated following the methodology of ref. 46, based on a geocoding of affiliations covered in the Web of Science (45).

Career stage of author: Number of years occurring between an author’s first publication and the year of publication of the paper included in our sample.

Female author vs. male author: Gender was attributed based on a combination of name and, whenever available, main country of author’s activity. The majority of names were classified using an online service (genderapi.com), and unclassified records were later completed, to any extent possible, by hand, using nation-specific lists of baby names and similar sources. Names that could not be attributed reliably to one gender were classified as “unknown.”

Retracted author vs. not: Dummy variable identifying authors that had coauthored at least one retracted paper. This estimation was based on the author bibliometric data above. Authors for which no bibliometric data were available were ascribed no retractions, making this a conservative estimate.
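Several of the derived variables above are simple arithmetic or rule-based transformations of the bibliometric parameters. The sketch below illustrates four of them under stated assumptions: all function and argument names are hypothetical, missing information is represented by `None`, and the country-to-author ratio counts distinct countries, which is one reading of the definition.

```python
def publication_rate(n_papers, first_year, last_year):
    """Papers per year of activity; the +1 counts both endpoint years."""
    return n_papers / (last_year - first_year + 1)


def career_stage(first_pub_year, study_year):
    """Years between an author's first publication and the sampled paper."""
    return study_year - first_pub_year


def country_to_author_ratio(address_countries, n_authors):
    """Distinct countries in the address list divided by number of authors.

    Counting distinct countries is an assumption of this sketch.
    """
    return len(set(address_countries)) / n_authors


def study_country(corresponding, first_author, last_author):
    """Priority rule: corresponding address, then first author, then last."""
    for candidate in (corresponding, first_author, last_author):
        if candidate is not None:
            return candidate
    return None


def is_us_study(corresponding, first_author, last_author):
    """True only when the attributed country is the United States."""
    return study_country(corresponding, first_author, last_author) == "US"
```

As an example, an author with 20 papers published between 2005 and 2014 has a publication rate of 20 / (2014 − 2005 + 1) = 2 papers per year.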

Data Preparation and Analyses.

Data standardization.

Data from meta-analyses that used interconvertible metrics (i.e., Cohen’s d, Hedges’ g, correlation coefficient, Fisher’s z, odds ratios and any metric whose description corresponded to one of these) were all transformed to log-odds ratios—these data were used in all main analyses. The remaining metrics were left untransformed. Whenever possible, we recorded as primary study’s unit of precision (for subsequent weighting) the study’s SE, which could in most cases be retrieved directly from the publication or recalculated from other data available in the meta-analysis (e.g., confidence intervals or sample size in the case of correlation coefficients). In a few meta-analyses that used unorthodox metrics and for which only sample size was available, precision was calculated as the inverse of the sample size. Primary studies whose outcomes appeared more than once within a meta-analysis were included only once, to ensure that each primary dataset consisted of independent studies.
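The text does not list the conversion formulas, so the following sketch is only an illustration that uses the standard textbook conversions between effect-size metrics (d to log-odds ratio via π/√3, r to d, and Fisher’s z to r). Treating Hedges’ g as equivalent to d is an approximation, and the metric labels and function names are hypothetical.

```python
import math


def d_to_log_odds(d):
    """Standardized mean difference to log-odds ratio: d * pi / sqrt(3)."""
    return d * math.pi / math.sqrt(3)


def r_to_d(r):
    """Correlation coefficient to standardized mean difference."""
    return 2 * r / math.sqrt(1 - r ** 2)


def fisher_z_to_r(z):
    """Fisher's z back to a correlation coefficient."""
    return math.tanh(z)


def se_from_ci(lower, upper, z_crit=1.96):
    """SE recovered from a symmetric 95% CI on the analysis scale."""
    return (upper - lower) / (2 * z_crit)


def to_log_odds(value, metric):
    """Convert one reported effect size to a log-odds ratio.

    `metric` labels are hypothetical; Hedges' g is treated like Cohen's d,
    which ignores the small-sample correction.
    """
    if metric == "odds_ratio":
        return math.log(value)
    if metric in ("cohen_d", "hedges_g"):
        return d_to_log_odds(value)
    if metric == "correlation":
        return d_to_log_odds(r_to_d(value))
    if metric == "fisher_z":
        return d_to_log_odds(r_to_d(fisher_z_to_r(value)))
    raise ValueError(f"metric not interconvertible: {metric}")
```

Metrics outside this set raise an error here, mirroring the choice to leave noninterconvertible metrics untransformed and out of the main analyses.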

Each dataset was subsequently standardized following previously established protocols (14). Specifically, from each meta-analytical primary data set obtained above we recalculated a random-effects weighted summary and subtracted this value from each primary effect size within that meta-analysis, essentially centering all primary outcomes by meta-analysis.
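The centering step can be sketched as follows. The text does not state which between-study variance estimator was used to recalculate the random-effects summary; the DerSimonian-Laird estimator below is an assumption chosen for illustration, and the function names are hypothetical.

```python
def random_effects_summary(effects, ses):
    """DerSimonian-Laird random-effects summary of one meta-analysis.

    Returns (pooled estimate, SE of pooled estimate, tau^2).
    """
    k = len(effects)
    w = [1 / se ** 2 for se in ses]  # inverse-variance weights
    sw = sum(w)
    fixed = sum(wi * y for wi, y in zip(w, effects)) / sw
    # Cochran's Q: weighted squared deviations from the fixed-effect mean
    q = sum(wi * (y - fixed) ** 2 for wi, y in zip(w, effects))
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - (k - 1)) / c) if c > 0 else 0.0
    # Re-weight with the between-study variance added to each study
    w_star = [1 / (se ** 2 + tau2) for se in ses]
    sw_star = sum(w_star)
    pooled = sum(wi * y for wi, y in zip(w_star, effects)) / sw_star
    return pooled, (1 / sw_star) ** 0.5, tau2


def center_by_meta_analysis(effects, ses):
    """Subtract the random-effects summary from every primary effect size."""
    pooled, _, _ = random_effects_summary(effects, ses)
    return [y - pooled for y in effects]
```

After centering, each primary outcome expresses its deviation from the summary of its own meta-analysis, which is what allows effects from different topics to be compared.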

Coining by expected direction of primary outcomes’ biases.

Depending on the specific phenomenon studied and the metric used, the effects tested by studies in a meta-analysis might be expected to be positive or negative. Biases that lead to overestimation of effects would be expressed in the positive direction in the former case and in the negative direction in the latter. In previous studies we classified the direction of expected effects by hand (what we called the “expectation factor”), but the method was deemed to be inapplicable to most disciplines (14). However, it is reasonable to assume that in meta-analyses in which the pooled summary values are negative, the tested effect is negative and biases are therefore expressed in that direction. Therefore, we inverted the sign of (i.e., multiplied by −1) all primary studies within meta-analyses whose pooled summary estimates were smaller than zero—a process known as coining. All analyses presented in figures refer to meta-analyses coined with a threshold of zero. Secondary results obtained with uncoined data, and data with a more conservative coining threshold (i.e., 1 SD of primary effect sizes, described below) are reported in Datasets S1–S5.
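The coining rule itself is a one-line transformation. The sketch below assumes that the pooled summary estimate of the meta-analysis has already been computed and is passed in; the function name and signature are hypothetical.

```python
def coin(effects, pooled_summary, threshold=0.0):
    """Invert the sign of all primary effects if the pooled summary is
    smaller than `threshold`; otherwise return them unchanged.

    threshold=0.0 corresponds to the main analyses. A lower (negative)
    threshold is more conservative, because fewer meta-analyses are flipped.
    """
    if pooled_summary < threshold:
        return [-y for y in effects]
    return list(effects)
```

With the default threshold, a meta-analysis with a pooled summary of −0.2 has all of its primary effects multiplied by −1, whereas with a threshold of −1.11 the same meta-analysis would be left as it is.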

Analyses.

All analyses reported in the main text were obtained by running meta-analyses at multiple levels. Each individual bias or risk factor was first tested for its effects within each meta-analysis, using simple meta-regression (the full set of meta-regression estimates and their SEs are reported in Dataset S1). The meta-regression slopes thus obtained were then summarized by a second-order meta-analysis, weighting by the inverse square of their respective SEs. This second-level meta-analysis assumed random effects, i.e., allowed each effect to vary at random across meta-analyses.
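The two-level procedure can be sketched as follows. This is an illustration under stated assumptions rather than the code used for the study: the first level is written as an inverse-variance weighted least-squares regression with known variances, and the second level uses a DerSimonian-Laird random-effects pooling; both choices, and all function names, are assumptions.

```python
import numpy as np

def meta_regression_slope(y, x, se):
    # First level: regress primary effect sizes (y) on a study
    # characteristic (x), weighting each study by 1 / SE^2.
    y, x, se = (np.asarray(a, dtype=float) for a in (y, x, se))
    w = 1.0 / se ** 2
    X = np.column_stack([np.ones_like(x), x])
    xtwx_inv = np.linalg.inv(X.T @ (w[:, None] * X))
    beta = xtwx_inv @ (X.T @ (w * y))
    # Slope and its SE under the known-variance assumption.
    return float(beta[1]), float(np.sqrt(xtwx_inv[1, 1]))

def pool_random_effects(estimates, ses):
    # Second level: random-effects summary of the slopes obtained
    # from the individual meta-analyses.
    b = np.asarray(estimates, dtype=float)
    v = np.asarray(ses, dtype=float) ** 2
    w = 1.0 / v
    fixed = np.sum(w * b) / np.sum(w)
    q = np.sum(w * (b - fixed) ** 2)
    c = np.sum(w) - np.sum(w ** 2) / np.sum(w)
    tau2 = max(0.0, (q - (len(b) - 1)) / c) if c > 0 else 0.0
    w_re = 1.0 / (v + tau2)
    pooled = float(np.sum(w_re * b) / np.sum(w_re))
    return pooled, float(np.sqrt(1.0 / np.sum(w_re))), float(tau2)
```

In use, `meta_regression_slope` would be called once per meta-analysis, and the resulting slopes and SEs passed together to `pool_random_effects`.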

The robustness of our results was assessed by repeating all analyses on uncoined data (described above), data coined with a more conservative threshold (i.e., one negative SD of the log-odds ratio values in our sample, value equal to −1.11), meta-regressions not adjusted for study size, and standardized meta-regression estimates (i.e., each first-level meta-regression estimate was divided by the SD of its corresponding independent variable).

To assess the relative independence of biases measured by second-order meta-analysis, we also analyzed data using weighted multilevel regression, a method that yields similar but more conservative estimates (32). A complete discussion of this method and all results obtained with it can be found in Supporting Information.

Study characteristics.

We downloaded the entire database record of all primary studies (and meta-analyses) for which a database identifier was available. From these records, using computational methods, the following study characteristics were extracted: (i) year of publication, (ii) total citations received at time of sampling (i.e., December 2013), (iii) number of authors, and (iv) corresponding address.

SI Limitations of Results

First, we assumed that the expected direction of an effect corresponds to the direction of the summary effect of the meta-analysis, because this is the best guess given the data. Following this assumption, all primary effect sizes within meta-analyses whose summary estimate was below zero had their signs inverted (a process known as coining). Our results were robust to relaxing this assumption by allowing meta-analyses not to be coined and were actually strengthened (i.e., yielded additional statistically significant results) if more conservative coining assumptions were made (Dataset S2). Nonetheless, it is likely that our analysis included occasional errors, i.e., cases of meta-analyses in which the expected direction of effects was negative. Hand coding the direction of expected effects might have avoided some errors, but the procedure would be unfeasible and/or unavoidably subjective in many disciplines (14). Second, we tested all bias patterns and risk factors, assuming simple linear relationships and adjusting at most for one covariate (i.e., study precision). Multivariable analyses, however, support our general conclusions by showing that, once all predictors are included in the same model, the relative strengths of biases and risk factors are similar (Supporting Information). Third, our study relies on data reported by published meta-analyses, which could have errors or biases of their own. Empirical surveys suggest that such errors are common (47). Nondifferential measurement errors within meta-analysis are associated with deflated associations, so the impact of bias may be even larger in some cases. Fourth, we considered only meta-analyses with at least five studies, whereas it is common for many scientific topics to have only one or a few studies available (48).
Fifth, the accuracy and internal consistency of data collected were very high for most variables (Table S7), but occasional measurement errors or missing data may have introduced noise that, if present, would lead to an underestimation of measured effects. Sixth, we assumed random effects in all second-order meta-analyses, an assumption that generalizes our findings at the cost of reducing statistical power, particularly in the presence of heterogeneity.

Table S6.

Relative magnitude of biases, measured by variance explained: Comparison of effect size values of different biases, measured by each bias’s ability to explain the variance of primary studies’ effect sizes

| Variable | Pseudo-R² | Standard R² | Standard R², unweighted |
| --- | --- | --- | --- |
| Small-study effect | 0.27145 | 0.0055 | 0.03507 |
| Gray literature bias | 0.01197 | 0.00029 | 0.00045 |
| Citation bias (effect size as independent variable) | 0.01897 | 0.0003 | 0.00103 |
| Citation bias (citations as independent variable) | 0.00545 | 0.0003 | 0.00103 |
| Decline effect | 0.00432 | 0.00023 | 0.00053 |
| Early extremes (same as decline effect, but on deviation score) | 0.00199 | 0.00138 | 0.0014 |
| Industry | 0.00038 | 0.00004 | 0.00008 |
| US effect | 0.00036 | 0.00005 | 0.0000004 |

For each variable, the table shows the total variance explained via pseudo-R² calculated on the multilevel regression model (i.e., weighted mixed-effects model), the standard R² obtained from simple linear weighted regression, and the standard R² of a linear, nonweighted regression.

Table S7.

Estimation of consistency and completeness of primary data: Average consistency of data collected, estimated as Spearman rank correlation (for continuous and ordinal variables) or proportion of matching values (for nominal categories)

| Variable | Correlation/overlap | Missing | N of pairs |
| --- | --- | --- | --- |
| Effect size | 1 | 0 | 47 |
| SE/sample size | 1 | 0 | 47 |
| Citation | 0.98 | 0.07 | 46 |
| Publication year | 0.98 | 0.01 | 47 |
| Team size | 0.97 | 0.09 | 46 |
| Countries/author | 0.98 | 0.09 | 46 |
| Geographic distance | 0.99 | 0.09 | 46 |
| Years, first | 0.98 | 0.07 | 46 |
| Publication rate, first | 0.98 | 0.06 | 46 |
| Citation rate, first | 0.99 | 0.06 | 46 |
| j score, first | 0.99 | 0.06 | 46 |
| Years, last | 0.98 | 0.09 | 46 |
| Publication rate, last | 0.99 | 0.09 | 46 |
| Citation rate, last | 0.98 | 0.09 | 46 |
| j score, last | 0.99 | 0.09 | 46 |
| Gray literature | 0.99 | 0 | 47 |
| US study | 1 | 0.06 | 47 |
| Industry | 0.99 | 0 | 47 |
| Female, first | 1 | 0.2 | 46 |
| Retracted, first | 1 | 0.06 | 46 |
| Cash | 1 | 0.06 | 47 |
| Career | 0.99 | 0.06 | 47 |
| Institution | 0.99 | 0.06 | 47 |
| Female, last | 1 | 0.24 | 46 |
| Retracted, last | 1 | 0.09 | 46 |

The values reported are weighted means of correlations obtained from pairs of meta-analyses for which all data were collected twice independently. N of pairs: number of pairs of meta-analyses with nonmissing and nonzero variance, on which similarity indexes could be estimated.

SI Multilevel Meta-Regression Analysis

Preliminary Technical Note.

In a previous study with similar design but more limited scope (14) primary studies extracted from meta-analyses in Web of Science Subject Categories of “Psychiatry” and “Genetics & Heredity” were analyzed using a multilevel weighted regression approach. All independent variables (i.e., study characteristics) were standardized by meta-analysis and all primary outcomes were centered around their respective meta-analytical random-effects summary, to be analyzed as a nested population of individual data points, using inverse-variance weighted regression. The main analysis ran such a regression on a measure of how extreme a reported result is, which was called “deviation score,” calculated as the absolute value of primary outcomes, square-root transformed twice to approximate normality. The model included a random intercept to account for differences in overall dispersion of data points between meta-analyses. The second main analysis assessed the effect of a binary variable encoding the expected direction of outcome in each meta-analysis (i.e., whether the desired effect was positive or negative, a measure we called expectation factor) on the nonabsolute values of standardized primary outcomes.
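The deviation score described above amounts to a fourth root of the absolute centered outcome. A minimal sketch, with a hypothetical function name:

```python
import numpy as np

def deviation_score(centered_effects):
    # Absolute value of each centered primary outcome,
    # square-root transformed twice (i.e., |x| ** 0.25).
    x = np.asarray(centered_effects, dtype=float)
    return np.sqrt(np.sqrt(np.abs(x)))
```

Because the sign is discarded, the score measures how extreme a result is regardless of its direction.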

It was later shown that a reanalysis of the data using a simpler meta-meta–analytical approach (i.e., the method used in the present study) yielded results substantially similar in content and actually easier to interpret (32). The meta-meta–analytical approach has the advantage of yielding direct estimates of effects of bias within and across meta-analyses and also provides direct estimations of between–meta-analysis variance in effects. However, this method has the disadvantage of performing regressions on what are typically small numbers of studies per meta-analysis. This method therefore hampers multivariable regression analysis, because the number of variables that can be tested in any individual analysis is limited by the size of the smallest meta-analyses included. The average size of meta-analysis in the literature is rather small and our sample, which aimed at being representative of the population of meta-analyses at large, included any meta-analysis with k ≥ 5.

The multilevel weighted regression approach avoids the limitation imposed by the small number of studies in the included meta-analyses, but requires standardization and transformations that make results less straightforward to interpret. Furthermore, multilevel regression accounts for heterogeneity at the lowest level of analysis (i.e., the level of primary studies), using a multiplicative factor (49) and not an additive factor (50). This difference makes results of multilevel models rather sensitive to distributional properties of data (normal distribution of errors is assumed at all levels of analysis) as well as of weights [large studies have more weight in a multivariable model than they would in a random-effects meta-analysis with additive random-effects correction (49)].

To summarize, the meta-meta–analytical approach presented in the main text estimates more closely and directly the amount of bias that may be encountered in meta-analysis. To assess the relative independence of multiple factors, however, multilevel weighted regression (henceforth, referred to simply as “regression”) represents a more powerful approach. In Supporting Information we therefore follow this latter approach to test for the relative independence of biases and bias risk factors measured in the main text and run further secondary analyses.

Multilevel Analyses.

Before proceeding with the analyses, we assessed the validity of the regression method by attempting to replicate previous results on the new sample (14). To this end, we selected from our new sample all meta-analyses that the Web of Science Subject Category system (i.e., not the Essential Science Indicators classification but the Subject Category classification) had classified under Psychiatry or Genetics & Heredity. To match the characteristics of the original sample, year of publication of meta-analysis was limited to the years 2009–2012 and size of meta-analysis to between 10 and 20 independent primary studies. We thus obtained a subsample of 42 behavioral and 29 nonbehavioral meta-analyses (852 studies in total), which is comparable in all characteristics with that tested previously.

To replicate the previous analysis, we analyzed the effects on deviation score (described above) of small-study effects, US effect, and year in meta-analysis. In our previous study we estimated confidence intervals by using a Markov chain Monte Carlo sampling algorithm, which, however, has since been discontinued from the R package (lme4, which provides the lmer function) that we used for these analyses (49). Therefore, confidence intervals are here derived by a simple Wald method. Because errors appear to be symmetrically heavy tailed in these data, confidence intervals estimated in this analysis are bound to be very conservative (i.e., statistical significance is underestimated).

Overall, results of this analysis are substantially similar to those previously reported (49), suggesting that relatively larger effect sizes are reported by US studies among behavioral meta-analyses and not in the nonbehavioral ones (Table 1, leftmost columns). However, the values of this effect are shifted in the negative direction and confidence intervals are very large. Inspection of the data revealed the presence of a few meta-analyses with extremely low reported variances, which give a few data points a vastly inflated weight in the analysis. The largest weight in the sample is 6.1 × 10⁷, which represents over 63% of all weights in the sample combined, clearly an unacceptable imbalance.
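The kind of imbalance described here can be checked with a short diagnostic. The sketch below assumes inverse-variance weights (1/SE²) and uses a hypothetical function name; it is not taken from the original analysis code.

```python
import numpy as np

def largest_weight_share(ses):
    # Share of the total inverse-variance weight carried by the
    # single most heavily weighted study.
    w = 1.0 / np.asarray(ses, dtype=float) ** 2
    return float(w.max() / w.sum())
```

A single study with an SE far smaller than the rest will push this share toward 1, which is the pattern that motivates rescaling.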

The distribution of weights improved only slightly if SEs were rescaled and centered by meta-analysis, using the same formula as in previous analyses (14); i.e.,

where $SE_{ij}$ is the SE of study i in meta-analysis j, $\overline{SE}_j$ is the average SE in meta-analysis j, and $\overline{SE}$ is the average of all SEs in the sample. This rescaling of SEs shifted results closer to those previously obtained (Table 1, center column), but still produced some extreme variance values: the largest weight was equal to 1.3 × 10⁷, i.e., 26% of the total. To avoid the biasing effects of extreme weights, we therefore rescaled by meta-analysis not the SEs, but the weights themselves, with the formula