Abstract

The recognition of facial expressions of emotion is adaptive for human social interaction, but the ability to do this and the manner in which it is achieved differ among individuals. Previous functional neuroimaging studies have demonstrated that some brain regions, such as the inferior frontal gyrus (IFG), are active during the response to emotional facial expressions in healthy participants, and lesion studies have demonstrated that damage to these structures impairs the recognition of facial expressions. However, it remains to be established whether individual differences in the structure of these regions are associated with differences in the ability to recognize facial expressions. We investigated this issue by acquiring structural magnetic resonance imaging (MRI) data and assessing the performance of healthy adults in recognizing the facial expressions of six basic emotions. The gray matter volume of the right IFG correlated positively with the total accuracy of facial expression recognition. This suggests that individual differences in the ability to recognize facial expressions are associated with differences in the structure of the right IFG. Furthermore, mirror neuron activity in the IFG may be important for establishing efficient facial mimicry, which facilitates emotion recognition.

Keywords: cerebellum, facial expression recognition, inferior frontal gyrus, superior temporal gyrus, voxel-based morphometry

Introduction

Facial expressions are indispensable for effective social interaction. They make it possible to understand another’s emotional state, communicate intentions (Keltner and Kring, 1998) and trigger appropriate behavior (Blair, 2003). Consistent with this adaptive function, previous studies have demonstrated that the ability to recognize facial expressions accurately has a positive effect on functional outcomes, such as social adjustment (e.g. Edwards et al., 1984) and mental health (e.g. Carton et al., 1999). Conversely, people with impaired emotion recognition, such as individuals with autism spectrum disorder (ASD) or schizophrenia, have marked difficulties in social interaction (Mancuso et al., 2011; Uono et al., 2011, 2013). These findings highlight the need to understand the neurocognitive mechanisms underlying the ability to recognize facial expressions.

Some evidence suggests that the large individual differences in the ability to recognize facial expressions, which are stable over time, relate to differences in the underlying brain structures. Several studies have reported that individuals with ASD and schizophrenia show marked difficulties recognizing facial expressions compared with typical participants (Kohler et al., 2010; Uljarevic and Hamilton, 2013; Kret and Ploeger, 2015) and have genetically inherited atypical brain structures (see Crespi and Badcock, 2008 for a review). Many studies have noted gender differences in the ability to recognize facial expressions (Kret and de Gelder, 2012) and in brain structures involved in social behavior (Ruigrok et al., 2014). A recent study revealed that individual differences in expression recognition may be related to certain genotypes involved in the development of brain structures (Lin et al., 2012). Given that facial expression processing varies widely even in the typical population (Palermo et al., 2013), brain structure may also vary correspondingly within that population.

A number of neuroimaging studies using functional magnetic resonance imaging (fMRI) and positron emission tomography have provided clues as to which brain regions are crucial for recognizing facial expressions. Two recent meta-analyses indicated that the processing of emotional facial expressions involves prefrontal regions, such as the inferior frontal gyrus (IFG); the superior temporal sulcus (STS) region (Allison et al., 2000), including the posterior STS and the adjacent middle temporal gyrus (MTG) and superior temporal gyrus (STG); and the amygdala (Fusar-Poli et al., 2009; Sabatinelli et al., 2011). These regions may be involved, respectively, in understanding another’s intentions by matching the visual representation of the other’s action with one’s own motor representation of that action (IFG: Rizzolatti et al., 2001; Gallese et al., 2004), in the visual analysis of changeable aspects of faces such as expressions (STS region: Haxby et al., 2002), and in the extraction of emotional information from stimuli (amygdala: Calder et al., 2003). The recognition of another’s facial expressions could thus be implemented by motor, visual and emotional processing in the IFG, the STS region and the amygdala, respectively.

Previous studies have revealed that damage to or interruption of these brain regions impairs the recognition of facial expressions across emotions. A study showing anatomical double dissociations between cognitive and emotional empathy demonstrated that damage to the right IFG decreases the ability to recognize emotional expressions from the region around the eyes (Shamay-Tsoory et al., 2009). Lesion symptom mapping studies have reported that lesions in the bilateral frontal and temporal cortices, including the IFG and the STS region as well as other regions, impair the understanding of others’ facial expressions (Adolphs et al., 2000; Dal Monte et al., 2013). A recent transcranial magnetic stimulation (TMS) study also showed that transient disruption of the STS region impairs facial expression recognition but not identity recognition (Pitcher, 2014). Other studies have revealed that damage to specific brain regions impairs the recognition of specific emotions; for example, patients with amygdala damage show impaired recognition of certain negative facial expressions, especially fear (e.g. Adolphs et al., 1994, 1999).

Taken together, these studies have demonstrated that some brain regions are active in response to emotional facial expressions, and that damage to these structures impairs the ability to recognize them. However, it remains to be determined whether individual differences in the structure of these regions are associated with differences in recognition ability in healthy participants. Research on potential associations between expression recognition and brain structure will provide insight into the roles that the identified regions, and their associated cognitive processes, play in determining individual differences in the ability to recognize facial expressions in typical and atypical individuals.

We investigated this issue by conducting voxel-based morphometry (VBM) on structural MRI data and assessing the performance of healthy adults with respect to recognition of the facial expressions of six basic emotions. VBM studies in healthy individuals have demonstrated that individual differences in task performance are reflected in the volumes of specific brain regions (e.g. Carlson et al., 2012; Takeuchi et al., 2012; Gilaie-Dotan et al., 2013). This approach enables the investigation of the relationship between task performance and structures throughout the entire brain; the areas under investigation are not restricted to those activated under a specific task or those damaged in patients. The ability to recognize facial expressions was measured using a label-matching paradigm featuring six basic emotions. This paradigm has previously revealed differences in the ability to recognize facial expressions between typical and clinical populations (e.g. Uono et al., 2011, 2013; Sato et al., 2002; Okada et al., 2015). Based on previous functional neuroimaging and lesion studies, we hypothesized that the gray and white matter volume of the IFG, the STS region and the amygdala would correlate with the ability to recognize facial expressions.

Materials and methods

Participants

Fifty healthy young Japanese adults participated in this study (24 females and 26 males; mean ± s.d. age, 22.4 ± 4.4 years). One additional participant was excluded from the analysis because she was very familiar with the stimuli. Verbal and performance intelligence quotients (IQ) were measured using the Japanese version of the Wechsler Adult Intelligence Scale, third edition (Fujita et al., 2006). All participants had IQs within the normal range (full-scale IQ: M = 121.4, s.d. = 8.6; verbal IQ: M = 121.5, s.d. = 9.3; performance IQ: M = 116.7, s.d. = 10.4). Based on the Japanese version of the Mini International Neuropsychiatric Interview (Otsubo et al., 2005), a psychiatrist confirmed that none of the participants had any neurological or psychiatric symptoms at a clinical level. All participants were right-handed, as assessed by the Edinburgh Handedness Inventory (Oldfield, 1971), and all had normal or corrected-to-normal visual acuity.

Following an explanation of the procedures, all participants provided written informed consent. This study was part of a broad research project exploring mind–brain relationships. The project was approved by the ethics committee of the Primate Research Institute, Kyoto University. The experiment was conducted in accordance with the guidelines of the Declaration of Helsinki.

Emotion recognition task

A total of 48 photographs of faces expressing the six basic emotions (anger, disgust, fear, happiness, sadness and surprise), posed by four Caucasian and four Japanese individuals, were used as stimuli (Ekman and Friesen, 1976; Matsumoto and Ekman, 1988). The experiment was run with Presentation software (version 14.9, Neurobehavioral Systems) on a Windows computer (HP Z200 SFF, Hewlett-Packard Company). The images were presented in random order on a 19-in. CRT monitor (HM903D-A, Iiyama). Written labels of the six basic emotions were presented around each photograph, and the positions of the labels were counterbalanced across blocks. Participants were asked to indicate which label best described the emotion expressed in each photograph and were instructed to consider all alternatives before responding. Accordingly, no time limit was set: each photograph remained on the screen until a verbal response was made, and an experimenter carefully recorded the response. No feedback was given on any trial, and each photograph was presented only once. Participants completed all 48 trials in approximately 10 min. Before the experimental trials, we confirmed that all participants understood the meanings of the written labels, and they performed two training trials to familiarize themselves with the procedure.
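The stimulus set is a full crossing of six emotions and eight posers (6 × 8 = 48). The Python sketch below builds such a trial list in random order. The poser IDs and the label-rotation scheme are hypothetical: the text does not specify the block structure or exactly how label positions were counterbalanced, and this is not the authors’ Presentation script.

```python
import random

# Six basic emotion categories used in the task.
EMOTIONS = ["anger", "disgust", "fear", "happiness", "sadness", "surprise"]

# Hypothetical poser IDs: four Caucasian (C1-C4) and four Japanese (J1-J4) individuals.
POSERS = [f"C{i}" for i in range(1, 5)] + [f"J{i}" for i in range(1, 5)]


def make_trial_list(seed=None):
    """Return all 48 (poser, emotion) stimuli in a random order.

    Each photograph appears exactly once, matching the single-presentation
    design; timing and feedback are not modelled here.
    """
    stimuli = [(poser, emotion) for poser in POSERS for emotion in EMOTIONS]
    rng = random.Random(seed)
    rng.shuffle(stimuli)
    return stimuli


def label_layout(block_index):
    """Rotate the six label positions by one slot per block (a hypothetical scheme)."""
    shift = block_index % len(EMOTIONS)
    return EMOTIONS[shift:] + EMOTIONS[:shift]
```

For example, `make_trial_list(seed=1)` yields a reproducible order, and `label_layout(b)` gives the clockwise label order for block `b` under the assumed rotation.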

MRI acquisition

The emotion recognition task and MRI acquisition were conducted on separate days. Image scanning was performed on a 3-T scanning system (MAGNETOM Trio, A Tim System, Siemens) at the ATR Brain Activity Imaging Center using a 12-channel array coil. T1-weighted high-resolution anatomical images were obtained using a magnetization-prepared rapid gradient-echo sequence (repetition time = 2250 ms, echo time = 3.06 ms, flip angle = 9°, inversion time = 1000 ms, field of view = 256 × 256 mm, matrix size = 256 × 256, voxel size = 1 × 1 × 1 mm).

Data analysis

Emotion recognition task. The percent accuracy in each emotion category and the average score across categories were calculated.
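Because each emotion category contains eight photographs (one per poser), each category score moves in steps of 12.5%, and with equal numbers of trials per category the average across categories equals the overall percent correct. A minimal Python sketch of this scoring, assuming responses are stored as (true emotion, chosen label) pairs (a hypothetical format):

```python
from collections import defaultdict

EMOTIONS = ["anger", "disgust", "fear", "happiness", "sadness", "surprise"]


def score(responses):
    """Return percent accuracy per emotion category and the average across categories.

    responses: iterable of (true_emotion, chosen_label) pairs, one per trial.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for true_emotion, chosen in responses:
        total[true_emotion] += 1
        if chosen == true_emotion:
            correct[true_emotion] += 1
    # Percent accuracy within each category that has at least one trial.
    per_category = {e: 100.0 * correct[e] / total[e] for e in EMOTIONS if total[e]}
    # Unweighted mean of the category scores.
    average = sum(per_category.values()) / len(per_category)
    return per_category, average
```

With two errors on anger trials and all other trials correct, anger scores 75.0% and the average is 46/48, or about 95.8%.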

Image analysis. Image and statistical analyses were performed using the SPM8 statistical parametric mapping package (http://www.fil.ion.ucl.ac.uk/spm) and the VBM8 toolbox (http://dbm.neuro.uni-jena.de) implemented in MATLAB R2012b (MathWorks). Image preprocessing was performed with the VBM8 toolbox using its default settings. Structural T1 images were segmented into gray matter, white matter and cerebrospinal fluid using an adaptive maximum a posteriori approach (Rajapakse et al., 1997). Intensity inhomogeneity in the MR images was modeled as a slowly varying spatial function and corrected during this estimation. The segmented images were then used for partial volume estimation with a simple model of mixed tissue types to improve segmentation (Tohka et al., 2004). A spatially adaptive non-local means denoising filter was applied to address spatially varying noise levels (Manjón et al., 2010), and a Markov random field cleanup was used to improve image quality. The gray and white matter images in native space were subsequently normalized to the standard stereotactic space defined by the Montreal Neurological Institute (MNI) using the diffeomorphic anatomical registration through exponentiated Lie algebra (DARTEL) algorithm (Ashburner, 2007). We used the predefined templates provided with the VBM8 toolbox, which were derived from 550 healthy brains in the IXI database (http://www.brain-development.org). The normalized images were modulated using Jacobian determinants with non-linear warping only (i.e. the m0 images in the VBM8 outputs), thereby accounting for individual differences in total intracranial volume. Finally, the normalized modulated images were resampled to a resolution of 1.5 × 1.5 × 1.5 mm and smoothed with an isotropic Gaussian kernel of 12 mm full width at half-maximum (FWHM) to compensate for anatomical variability among participants.
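The smoothing kernel is specified by its FWHM; the standard deviation of a Gaussian is FWHM / (2√(2 ln 2)), or roughly FWHM / 2.355. The sketch below converts the 12 mm kernel to a standard deviation in millimetres and in 1.5 mm voxels and builds a normalized 1-D kernel (an isotropic 3-D Gaussian is separable into three such passes). It illustrates the arithmetic only; SPM’s own kernel discretization may differ in detail.

```python
import math


def fwhm_to_sigma(fwhm):
    """Convert a Gaussian full width at half-maximum to its standard deviation."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def gaussian_kernel_1d(sigma_vox, radius=None):
    """Discrete, normalized 1-D Gaussian kernel truncated at about 3 sigma."""
    if radius is None:
        radius = int(math.ceil(3 * sigma_vox))
    weights = [math.exp(-0.5 * (i / sigma_vox) ** 2) for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


FWHM_MM = 12.0   # smoothing kernel width reported in the text
VOXEL_MM = 1.5   # resampled voxel size reported in the text

sigma_mm = fwhm_to_sigma(FWHM_MM)   # about 5.10 mm
sigma_vox = sigma_mm / VOXEL_MM     # about 3.40 voxels
kernel = gaussian_kernel_1d(sigma_vox)
```

Expressed in voxels, the 12 mm kernel spans 8 voxels at half-maximum, which is large relative to the 1.5 mm grid; this favours detection of spatially extended effects over focal ones.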

Multiple regression analyses were performed with the averaged percent accuracy across emotion categories as the independent variable of interest and sex, age and full-scale IQ as covariates. Positive and negative relationships between gray and white matter volumes and the averaged percent accuracy were tested using t-statistics. We selected the bilateral IFG, MTG and amygdala as regions of interest (ROIs). Co-ordinates were derived from Sabatinelli et al. (2011) as follows: IFG (right: x = 42, y = 25, z = 3; left: x = −42, y = 25, z = 3), MTG (right: x = 53, y = −50, z = 4; left: x = −53, y = −50, z = 4) and amygdala (right: x = 20, y = −4, z = −15; left: x = −20, y = −6, z = −15). The co-ordinates of the left MTG were generated by flipping those of the right MTG across the midline, because Sabatinelli et al. (2011) did not report involvement of the left MTG; these co-ordinates are similar to those reported in another meta-analysis (x = −56, y = −58, z = 4; Fusar-Poli et al., 2009). To restrict the search volume, each ROI was defined as the intersection of a sphere of 12 mm radius centered on these co-ordinates with the corresponding anatomically defined mask provided by the WFU PickAtlas (Maldjian et al., 2003). We performed small volume correction (Worsley et al., 1996) within each ROI: significant voxels were identified at a height threshold of P < 0.001 (uncorrected), and a family-wise error (FWE) correction for multiple comparisons was then applied (P < 0.05). Outside the ROIs, FWE correction was applied across the entire brain volume. For exploratory purposes, we also performed the analysis using a height threshold of P < 0.001 (uncorrected) with a liberal extent threshold of 100 contiguous voxels. The same whole-brain and exploratory analyses were also conducted with the percent accuracy for each emotion category as the independent variable.
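The ROI construction can be sketched as follows: mirror a co-ordinate across the midline (as was done for the left MTG) and collect grid points within 12 mm of the centre, optionally keeping only those inside an anatomical mask. This is a simplified illustration, assuming a 1.5 mm grid aligned on the ROI centre and a hypothetical `mask` callable standing in for the WFU PickAtlas label; it does not reproduce PickAtlas itself or SPM’s small volume correction.

```python
import math


def flip_hemisphere(coord):
    """Mirror an MNI co-ordinate across the midline by negating x."""
    x, y, z = coord
    return (-x, y, z)


def sphere_roi(center, radius_mm=12.0, voxel_mm=1.5, mask=None):
    """Return grid points (mm) lying within radius_mm of center.

    Assumes a grid aligned on the centre (a simplification). If given, mask is
    a hypothetical callable returning True for points inside the anatomical label.
    """
    n = int(math.floor(radius_mm / voxel_mm))
    cx, cy, cz = center
    roi = []
    for i in range(-n, n + 1):
        for j in range(-n, n + 1):
            for k in range(-n, n + 1):
                dx, dy, dz = i * voxel_mm, j * voxel_mm, k * voxel_mm
                if dx * dx + dy * dy + dz * dz <= radius_mm ** 2:
                    point = (cx + dx, cy + dy, cz + dz)
                    # Intersect the sphere with the anatomical mask, if any.
                    if mask is None or mask(point):
                        roi.append(point)
    return roi


right_mtg = (53, -50, 4)
left_mtg = flip_hemisphere(right_mtg)   # (-53, -50, 4), as used in the text
```

Note that only the MTG was mirrored; the amygdala co-ordinates were taken separately for each hemisphere and are not exact mirror images (y = −4 vs. y = −6).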
The brain structures were anatomically labeled using Talairach Client (Lancaster et al., 2007) and the SPM Anatomy Toolbox (Eickhoff et al., 2005).
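At each voxel, the analysis amounts to an ordinary least-squares regression of tissue volume on accuracy plus covariates, followed by a t-test on the accuracy coefficient. The pure-Python sketch below does this for a single voxel (SPM fits the same kind of general linear model at every voxel). With 50 participants and five columns in the design matrix (intercept, accuracy, sex, age and full-scale IQ), the residual degrees of freedom are 50 − 5 = 45, consistent with the T(45) values reported in Table 2. The function names and data layout are illustrative assumptions, not SPM’s API.

```python
import math


def invert_matrix(a):
    """Invert a small square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("design matrix is rank deficient")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def voxel_t(volume, accuracy, covariates):
    """Return (t, df) for the accuracy regressor at one voxel.

    volume: tissue volume per participant; accuracy: averaged percent accuracy;
    covariates: list of covariate vectors, e.g. [sex, age, full_scale_iq].
    """
    n = len(volume)
    # Design matrix columns: intercept, accuracy, then each covariate.
    x = [[1.0, accuracy[i]] + [c[i] for c in covariates] for i in range(n)]
    p = len(x[0])
    xtx = [[sum(x[r][i] * x[r][j] for r in range(n)) for j in range(p)] for i in range(p)]
    xty = [sum(x[r][i] * volume[r] for r in range(n)) for i in range(p)]
    inv = invert_matrix(xtx)
    beta = [sum(inv[i][j] * xty[j] for j in range(p)) for i in range(p)]
    resid = [volume[r] - sum(x[r][j] * beta[j] for j in range(p)) for r in range(n)]
    df = n - p
    sigma2 = sum(e * e for e in resid) / df
    # Standard error of the accuracy coefficient (contrast [0, 1, 0, ...]).
    se = math.sqrt(sigma2 * inv[1][1])
    return beta[1] / se, df
```

A negative relationship is tested with the opposite contrast, whose t-value is simply −t from the same fit.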

Results

The mean percent accuracy for each condition is shown in Table 1.

Table 1.

Mean (with s.d.) percentages of accurate facial expression recognition in each emotion category

| Category | Anger | Disgust | Fear | Happiness | Sadness | Surprise | Total |
|---|---|---|---|---|---|---|---|
| Mean (%) | 57.8 | 63.8 | 64.0 | 99.8 | 86.0 | 92.0 | 77.2 |
| s.d. | 20.0 | 22.1 | 24.7 | 1.8 | 15.1 | 16.7 | 7.1 |
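Because every category contains the same number of trials, the Total column should equal the mean of the six category means. A quick Python check using the values in Table 1:

```python
from statistics import mean

# Category means (%) from Table 1.
category_means = {
    "anger": 57.8, "disgust": 63.8, "fear": 64.0,
    "happiness": 99.8, "sadness": 86.0, "surprise": 92.0,
}
total = mean(category_means.values())
print(f"{total:.1f}")  # prints 77.2
```

The s.d. of the total (7.1) cannot be recovered in the same way, because it also depends on the correlations between category scores across participants.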

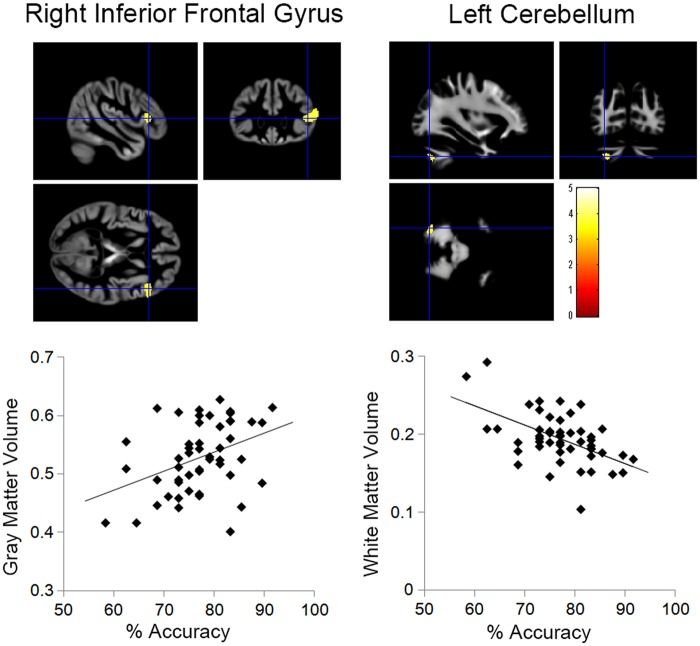

To identify brain regions associated with emotion recognition, the structural MRI data were analyzed using multiple regression, with the average percent accuracy across emotion categories as the independent variable and sex, age and full-scale IQ as covariates. The ROI analysis indicated a significant positive relationship with the gray matter volume of the right IFG (P < 0.05, FWE corrected; Figure 1 and Table 2). No other significant clusters were found within the ROIs or elsewhere in the brain. The exploratory analysis using a liberal extent threshold (k > 100) revealed a negative relationship with the white matter volume of the left cerebellum (Figure 1 and Table 2).
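The exploratory threshold combines a height threshold with an extent threshold: supra-threshold voxels are grouped into spatially contiguous clusters, and only clusters containing at least the required number of voxels are reported. A minimal Python sketch, assuming the t-map is given as a dictionary keyed by integer voxel indices and using face (6-neighbour) connectivity for simplicity; SPM’s own connectivity rule may differ, and the t-value corresponding to P < 0.001 is left as a parameter rather than computed.

```python
from collections import deque

# Face-connected neighbourhood (6 neighbours); a simplifying assumption.
NEIGHBOURS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def extent_threshold(t_map, height, min_voxels):
    """Return clusters of supra-threshold voxels with at least min_voxels members.

    t_map: dict mapping integer voxel indices (i, j, k) to t-values.
    height: t-value corresponding to the height threshold.
    Each returned cluster is a set of voxel indices.
    """
    supra = {v for v, t in t_map.items() if t > height}
    seen = set()
    clusters = []
    for start in supra:
        if start in seen:
            continue
        # Breadth-first flood fill over contiguous supra-threshold voxels.
        seen.add(start)
        queue = deque([start])
        cluster = {start}
        while queue:
            i, j, k = queue.popleft()
            for di, dj, dk in NEIGHBOURS:
                nb = (i + di, j + dj, k + dk)
                if nb in supra and nb not in seen:
                    seen.add(nb)
                    cluster.add(nb)
                    queue.append(nb)
        if len(cluster) >= min_voxels:
            clusters.append(cluster)
    return clusters
```

At the 1.5 mm resampled resolution each voxel occupies 3.375 mm³, so, assuming the cluster sizes in Table 2 are counted in resampled voxels, the 555-voxel right IFG cluster corresponds to about 1873 mm³.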

Fig. 1.

Gray and white matter regions showing positive or negative relationships with the recognition accuracy of overall facial expressions. For display purposes, voxels are included above a threshold of P < 0.001 (uncorrected), with an extent threshold of 100 contiguous voxels. The positive correlation between overall emotion recognition and gray matter volume of the right inferior frontal gyrus was significant in the ROI analysis with an FWE correction for multiple comparisons. The blue cross indicates the location of the peak voxel. The red–white color scale represents the T-value. Scatter plots show the gray matter volume of the right inferior frontal gyrus (left bottom) and white matter volume of the left cerebellum (right bottom) as functions of the recognition accuracy of overall facial expressions at the peak voxels.

Table 2.

Brain regions showing correlations between the percent accuracy of facial expression recognition and gray and white matter volume

| Region | Side | BA | Correlation | x | y | z | T(45)-value | Cluster size (voxels) |
|---|---|---|---|---|---|---|---|---|
| All, gray matter | | | | | | | | |
| Inferior frontal gyrus, pars triangularis^a | R | 45 | + | 48 | 27 | 7 | 4.05 | 555 |
| All, white matter | | | | | | | | |
| Cerebellum, crus II | L | | − | −32 | −73 | −44 | 3.93 | 154 |
| Anger, gray matter | | | | | | | | |
| Superior parietal lobule | L | 7 | − | −20 | −76 | 49 | 4.14 | 1263 |
| Inferior parietal lobule | R | 40 | − | 47 | −40 | 52 | 3.93 | 321 |
| Orbitofrontal cortex | R | 10 | − | 24 | 68 | −2 | 3.37 | 207 |
| Disgust, gray matter | | | | | | | | |
| Precentral gyrus | L | 6 | + | −45 | −4 | 21 | 4.01 | 190 |
| Fear, gray matter | | | | | | | | |
| Thalamus | R | | − | 18 | −13 | 15 | 3.96 | 142 |
| Sadness, gray matter | | | | | | | | |
| Superior temporal gyrus | R | 39 | + | 51 | −52 | 24 | 3.79 | 204 |
| Superior temporal gyrus | R | 21 | − | 66 | −9 | −5 | 3.77 | 180 |
| Sadness, white matter | | | | | | | | |
| Superior temporal gyrus^b | L | 41^c | − | −59 | −22 | 7 | 4.79 | 774 |
| Surprise, gray matter | | | | | | | | |
| Superior temporal gyrus | L | 22 | − | −63 | 2 | 0 | 4.42 | 233 |
| Parahippocampal gyrus | L | 36 | − | −20 | −34 | −14 | 4.23 | 238 |

x, y, z: peak co-ordinates (mm) in MNI space. Displayed data are thresholded at P < 0.001 (uncorrected) with a liberal extent threshold of 100 contiguous voxels.

BA, Brodmann area; L, left; R, right; +, positive correlation; −, negative correlation.

^a Significant positive correlation in the ROI analysis with FWE correction for multiple comparisons (P < 0.05).

^b Significant negative correlation in the whole-brain analysis with FWE correction for multiple comparisons (P < 0.05).

^c Nearest cortical gray matter.

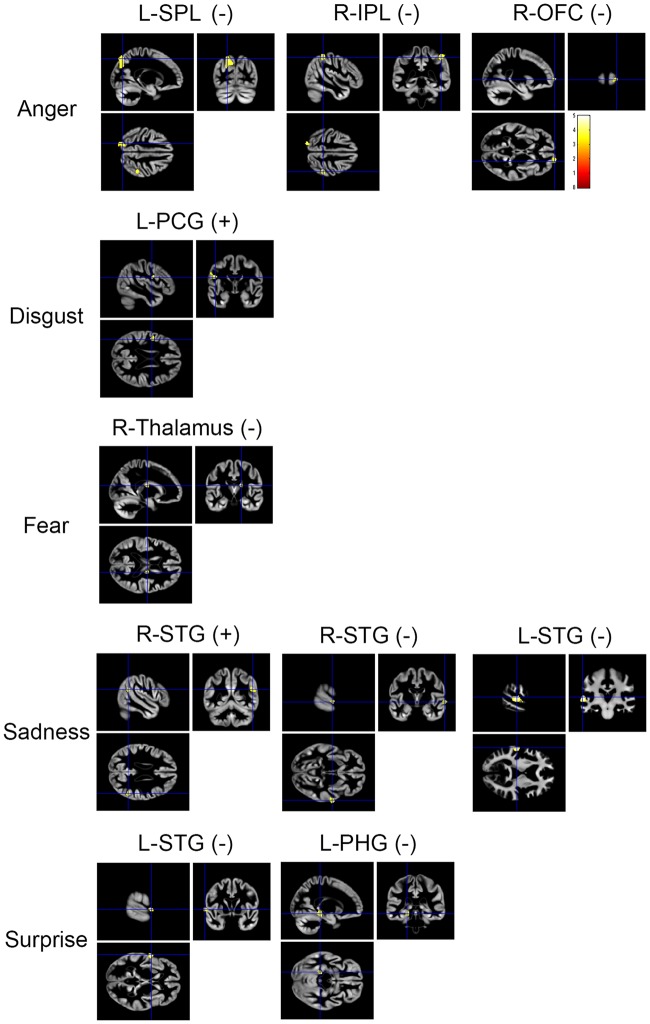

Multiple regression analyses were also conducted on the relationship between the structural MRI data and the percent accuracy for each emotion category. Although there were no significant clusters within the ROIs, the white matter volume of the left STG correlated negatively with sadness recognition in the whole-brain analysis (P < 0.05, FWE corrected; see Figure 2 and Table 2). Exploratory analyses using a liberal extent threshold (k > 100) showed that several brain regions were associated with the ability to recognize specific facial expressions (see Figure 2 and Table 2). For anger recognition, negative relationships were found with the gray matter volumes of the left superior parietal lobule, the right inferior parietal lobule and the right orbitofrontal cortex. For disgust recognition, a positive relationship was found with the gray matter volume of the left precentral gyrus. For fear recognition, a negative relationship was found with the gray matter volume of the right thalamus. For sadness recognition, a positive relationship was found with the gray matter volume of the right STG (BA 39), and a negative relationship with the gray matter volume of another part of the right STG (BA 21). For surprise recognition, negative relationships were found with the gray matter volumes of the left STG and parahippocampal gyrus.

Fig. 2.

Gray and white matter regions showing a positive or negative relationship with recognition accuracy in each emotion category. For display purposes, voxels are included above a threshold of P < 0.001 (uncorrected), with an extent threshold of 100 contiguous voxels. The negative correlation between sadness recognition and white matter volume of the left superior temporal gyrus was significant in the whole brain analysis with an FWE correction for multiple comparisons. The blue cross indicates the location of the peak voxel. The red–white color scale represents the T-value. (+) and (–) show positive and negative correlation, respectively. IPL, inferior parietal lobule; OFC, orbitofrontal cortex; PHG, parahippocampal gyrus; PCG, precentral gyrus; SPL, superior parietal lobule; STG, superior temporal gyrus.

Discussion

The present study reveals that the gray matter volume of the right IFG is associated with the ability to recognize facial expressions, across six emotion categories. This result is consistent with meta-analyses of neuroimaging data, which have reported that the IFG is reliably activated during the observation of facial expressions (Fusar-Poli et al., 2009; Sabatinelli et al., 2011). Furthermore, lesion studies have demonstrated that the right IFG is involved in the ability to recognize facial expressions (Adolphs et al., 2000; Shamay-Tsoory et al., 2009; Dal Monte et al., 2013). Previous studies have also suggested that people with more accurate expression recognition have a larger gray matter volume in the right IFG. For example, women, who can recognize facial expressions more accurately than men (Kret and de Gelder, 2012), have a larger right IFG compared with men (Ruigrok et al., 2014). No previous studies have revealed a relationship between individual differences in the ability to recognize overall facial expressions and brain structure in healthy individuals, although there is some evidence for a relationship in patients with psychiatric and neurological disorders (e.g. frontotemporal dementia: Van den Stock et al., 2016; Parkinson’s disease: Ibarretxe-Bilbao et al., 2009). Our data suggest that individual differences in the ability to recognize emotions from facial cues are reflected in individual structural differences in the right IFG.

Although we are unable to conclusively identify a functional role of the right IFG, we suggest that it may contribute toward the ability to recognize facial expressions via a mechanism involving mimicking the other person’s facial expression. It has been suggested that the IFG contains mirror neurons that discharge when observing and executing specific actions, and matching these representations allows us to understand each other’s actions (Rizzolatti et al., 2001; Gallese et al., 2004). Consistent with this concept, behavioral studies have reported that observing facial expressions induces facial mimicry, and automatic and intentional facial imitation modulate the process of recognizing facial expressions of other people (Niedenthal, 2007; Oberman et al., 2007; Sato et al., 2013a; Hyniewska and Sato, 2015). Previous fMRI studies have shown that observing and imitating facial expressions activates the right IFG (Carr et al., 2003; Hennenlotter et al., 2005; Pfeifer et al., 2008). Some studies have provided further evidence for the role of the right IFG, indicating that the right IFG shows greater activation when participants imitate emotional facial expressions compared with ingestive facial expressions (Lee et al., 2006), and that stronger congruent facial movements with another’s facial expressions correlate with increased activation of the right IFG (Lee et al., 2006; Likowski et al., 2012). Together with these previous findings, it is possible that the increased volume of the right IFG reflects enhanced facial mimicry, which facilitates the ability to recognize facial expressions.

Interestingly, our exploratory analysis suggests that the white matter volume of the left cerebellum correlates negatively with the ability to recognize facial expressions. Recent studies have indicated that the cerebellum contributes not only to motor function but also to cognitive and emotional function (Stoodley and Schmahmann, 2009). Consistent with this, a meta-analysis of fMRI studies revealed that the left cerebellum is active while observing others’ facial expressions (Fusar-Poli et al., 2009). However, only a few studies have investigated the role of the cerebellum in expression recognition. Previous studies have reported impaired recognition in individuals with cerebellar infarction (Adamaszek et al., 2014) and spinocerebellar ataxias (D’Agata et al., 2011), and a stimulation study demonstrated that transcranial direct current stimulation of the cerebellum enhanced the processing of negative facial expressions (Ferrucci et al., 2012). The cerebellum is part of a large-scale network that involves the neocortex. The identified voxels were located in Crus II of the left hemisphere, which is structurally and functionally interconnected with the contralateral prefrontal cortex (Kelly and Strick, 2003; O’Reilly et al., 2010). Both the right IFG and the left cerebellum have been associated with congruent facial reactions to the facial expressions of others (Likowski et al., 2012). Based on these findings, we speculate that the network linking the right IFG and the left cerebellum might play a critical role in recognizing others’ facial expressions.

It should be noted that the IFG and the cerebellum are also involved in general cognitive functions (Stoodley and Schmahmann, 2009; Aron et al., 2014), and a previous study suggested that executive function is related to the recognition of facial expressions (Circelli et al., 2013). Individual differences in these functions might therefore explain the relationship between the ability to recognize facial expressions and the volume of the identified brain regions. However, in our study, care was taken to ensure that all participants understood the meanings of the written labels before the experiment. No time limits were set, and the stimuli and written labels remained on screen until the participant’s response was recorded. The task should thus have placed few demands on attentional processing, working memory, language processing or inhibitory control. Moreover, because the significant relationship between brain volume and emotion recognition ability was found after controlling for participants’ intellectual ability, it is unlikely that the relationship can be explained solely by individual differences in general cognitive function.

The present study may also provide insight into the functional role of the right IFG in clinical populations. For example, individuals with ASD, who are characterized by social and communication impairments, have difficulty recognizing facial expressions (Uljarevic and Hamilton, 2013). Individuals with ASD have been reported to show reduced facial mimicry (McIntosh et al., 2006; Yoshimura et al., 2015) and reduced right IFG activation in response to the facial expressions of other people (Dapretto et al., 2006; Hadjikhani et al., 2007; Sato et al., 2012). Anatomical studies have demonstrated a relationship between reduced gray matter volume in the right IFG and impaired social communication (Kosaka et al., 2010; Yamasaki et al., 2010). These data are consistent with our speculation that increased gray matter volume of the right IFG is associated with enhanced facial mimicry, which facilitates emotion recognition. It is therefore possible that impaired facial mimicry, implemented by the IFG, underlies the impaired ability to recognize facial expressions in ASD. A VBM study with a large clinical sample is needed to investigate the relationship between structural abnormalities and impaired emotion recognition in ASD.

The exploratory analyses conducted for each emotion category found that the gray and white matter volumes of distinct brain regions correlate with recognition accuracy. Importantly, the recognition accuracy for sad and surprised faces correlated with the volumes of subregions of the bilateral STS region. Previous lesion symptom mapping studies demonstrated that the STS region and frontal cortex are critical for recognizing another’s facial emotions (Adolphs et al., 2000; Dal Monte et al., 2013), and a recent study in healthy adults showed that TMS of the STS region impairs facial expression recognition (Pitcher, 2014). These findings accord with the proposed role of the STS region in the visual processing of changeable aspects of faces (Haxby et al., 2002). Consistent with the suggestion described above, the STS region is directly connected with the IFG (Catani et al., 2002), and this connection may implement motor mimicry (Hamilton, 2008). Although some correlations found in the present study have also been reported previously (angry faces and the orbitofrontal cortex: Blair and Cipolotti, 2000; fearful faces and the thalamus: Williams et al., 2001), we did not detect other frequently reported associations, such as fearful faces and the amygdala (e.g. Adolphs et al., 1994) or disgusted faces and the insula and/or basal ganglia (e.g. Calder et al., 2000). Methodological differences and the relatively small sample size might explain these discrepancies. Future studies combining structural and functional MRI in larger samples are needed.

The present study had additional limitations. First, recognition of happy faces was not analyzed separately, because all but one participant recognized happy faces at ceiling level. Applying computer morphing to the stimulus photographs to equate difficulty across emotion categories would be useful (e.g. Sato et al., 2002). Second, the results did not suggest any significant involvement of brain regions related to rapid emotional processing, such as the amygdala (e.g. Sato et al., 2013b). This might be because the task imposed no time pressure; requiring speeded responses may reveal significant relationships between performance and the volume of such regions (cf. Zhao et al., 2013). Third, although we emphasized facial mimicry as a functional role of the right IFG in emotion recognition, there is no evidence as to whether the volume of the right IFG correlates with the magnitude of facial muscle activity in response to another’s facial expressions. The emotion recognition task used here involves several processing stages, such as perceptual processing, motor resonance and lexical access to emotional meaning. For example, a previous study suggested that damage to the right frontal cortex affects the naming, rather than the rating, of another’s facial expressions (Adolphs et al., 2000). Future studies should directly investigate the relationships among facial mimicry, emotion recognition and brain volume.
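The Table 1 values for happy faces (mean 99.8%, s.d. 1.8%) are consistent with all but one participant performing at ceiling. Assuming that the remaining participant missed exactly one of the eight happy trials (87.5%), a quick Python check reproduces both values:

```python
from statistics import mean, stdev

# Assumption: 49 participants scored 8/8 on happy faces and one scored 7/8.
happy_scores = [100.0] * 49 + [100.0 * 7 / 8]
print(f"{mean(happy_scores):.1f} {stdev(happy_scores):.1f}")  # prints 99.8 1.8
```

With so little variance (one non-ceiling score among 50), a correlation between happiness accuracy and brain volume would be driven almost entirely by a single participant, which is why this category was not analyzed.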

In summary, the ability to recognize facial expressions across emotion categories correlates with the gray matter volume of the right IFG. Given previous findings that the IFG is involved in facial imitation and that facial mimicry modulates the ability to recognize facial expressions, a larger gray matter volume of the right IFG may contribute to efficient facial mimicry, which in turn facilitates emotion recognition.

Funding

This study was supported by the JSPS Funding Program for Next Generation World-Leading Researchers (LZ008). The funding sources had no involvement in study design; in the collection, analysis and interpretation of data; in the writing of the report; and in the decision to submit the article for publication. The authors have declared that no competing interests exist.

Conflict of interest. None declared.

Acknowledgements

We thank the ATR Brain Activity Imaging Center for their support in acquiring the data. We also thank Kazusa Minemoto, Akemi Inoue, and Emi Yokoyama for their technical support and the reviewers for their helpful advice.

References

- Adamaszek M., D’Agata F., Kirkby K.C., et al. (2014). Impairment of emotional facial expression and prosody discrimination due to ischemic cerebellar lesions. Cerebellum, 13(3), 338–45.

- Adolphs R., Tranel D., Damasio H., Damasio A. (1994). Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature, 372(6507), 669–72.

- Adolphs R., Tranel D., Hamann S., et al. (1999). Recognition of facial emotion in nine individuals with bilateral amygdala damage. Neuropsychologia, 37(10), 1111–7.

- Adolphs R., Damasio H., Tranel D., Cooper G., Damasio A.R. (2000). A role for somatosensory cortices in the visual recognition of emotion as revealed by three-dimensional lesion mapping. The Journal of Neuroscience, 20(7), 2683–90.

- Allison T., Puce A., McCarthy G. (2000). Social perception from visual cues: role of the STS region. Trends in Cognitive Sciences, 4(7), 267–78.

- Aron A.R., Robbins T.W., Poldrack R.A. (2014). Inhibition and the right inferior frontal cortex: one decade on. Trends in Cognitive Sciences, 18(4), 177–85.

- Ashburner J. (2007). A fast diffeomorphic image registration algorithm. Neuroimage, 38(1), 95–113.

- Blair R.J.R. (2003). Facial expressions, their communicatory functions and neuro-cognitive substrates. Philosophical Transactions of the Royal Society of London. Series B: Biological Sciences, 358(1431), 561–72.

- Blair R.J.R., Cipolotti L. (2000). Impaired social response reversal: a case of ‘acquired sociopathy’. Brain, 123(Pt 6), 1122–41.

- Calder A.J., Keane J., Manes F., Antoun N., Young A.W. (2000). Impaired recognition and experience of disgust following brain injury. Nature Neuroscience, 3(11), 1077–8.

- Calder A.J., Lawrence A.D., Young A.W. (2003). Neuropsychology of fear and loathing. Nature Reviews Neuroscience, 2(5), 352–63.

- Carlson J.M., Beacher F., Reinke K.S., et al. (2012). Nonconscious attention bias to threat is correlated with anterior cingulate cortex gray matter volume: a voxel-based morphometry result and replication. Neuroimage, 59(2), 1713–8.

- Carr L., Iacoboni M., Dubeau M.C., Mazziotta J.C., Lenzi G.L. (2003). Neural mechanisms of empathy in humans: a relay from neural systems for imitation to limbic areas. Proceedings of the National Academy of Sciences of the United States of America, 100(9), 5497–502.

- Carton J.S., Kessler E.A., Pape C.L. (1999). Nonverbal decoding skills and relationship well-being in adults. Journal of Nonverbal Behavior, 23(1), 91–100.

- Catani M., Howard R.J., Pajevic S., Jones D.K. (2002). Virtual in vivo interactive dissection of white matter fasciculi in the human brain. Neuroimage, 17(1), 77–94.

- Circelli K.S., Clark U.S., Cronin-Golomb A. (2013). Visual scanning patterns and executive function in relation to facial emotion recognition in aging. Neuropsychology, Development, and Cognition. Section B, Aging, Neuropsychology and Cognition, 20(2), 148–73.

- Crespi B., Badcock C. (2008). Psychosis and autism as diametrical disorders of the social brain. Behavioral and Brain Sciences, 31(3), 241–61.

- D’Agata F., Caroppo P., Baudino B., et al. (2011). The recognition of facial emotions in spinocerebellar ataxia patients. Cerebellum, 10(3), 600–10.

- Dal Monte O., Krueger F., Solomon J.M., et al. (2013). A voxel-based lesion study on facial emotion recognition after penetrating brain injury. Social Cognitive & Affective Neuroscience, 8(6), 632–9.

- Dapretto M., Davies M.S., Pfeifer J.H., et al. (2006). Understanding emotions in others: mirror neuron dysfunction in children with autism spectrum disorders. Nature Neuroscience, 9(1), 28–30.

- Edwards R., Manstead A.S.R., Macdonald C.J. (1984). The relationship between children’s sociometric status and ability to recognize facial expressions of emotion. European Journal of Social Psychology, 14(2), 235–8.

- Eickhoff S.B., Stephan K.E., Mohlberg H., et al. (2005). A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. Neuroimage, 25(4), 1325–35.

- Ekman P., Friesen W.V. (1976). Pictures of Facial Affect. Palo Alto, CA: Consulting Psychologists Press.

- Ferrucci R., Giannicola G., Rosa M., et al. (2012). Cerebellum and processing of negative facial emotions: cerebellar transcranial DC stimulation specifically enhances the emotional recognition of facial anger and sadness. Cognition and Emotion, 26(5), 786–99.

- Fujita K., Maekawa H., Dairoku H., Yamanaka K. (2006). Japanese Wechsler Adult Intelligence Scale-III. Tokyo: Nihon-Bunka-Kagaku-sha.

- Fusar-Poli P., Placentino A., Carletti F., et al. (2009). Functional atlas of emotional faces processing: a voxel-based meta-analysis of 105 functional magnetic resonance imaging studies. Journal of Psychiatry and Neuroscience, 34(6), 418–32.

- Gallese V., Keysers C., Rizzolatti G. (2004). A unifying view of the basis of social cognition. Trends in Cognitive Sciences, 8(9), 396–403.

- Gilaie-Dotan S., Kanai R., Bahrami B., Rees G., Saygin A.P. (2013). Neuroanatomical correlates of biological motion detection. Neuropsychologia, 51(3), 457–63.

- Hadjikhani N., Joseph R.M., Snyder J., Tager-Flusberg H. (2007). Abnormal activation of the social brain during face perception in autism. Human Brain Mapping, 28(5), 441–9.

- Hamilton A.F. (2008). Emulation and mimicry for social interaction: a theoretical approach to imitation in autism. Quarterly Journal of Experimental Psychology (Hove), 61(1), 101–15.

- Haxby J.V., Hoffman E.A., Gobbini M.I. (2002). Human neural systems for face recognition and social communication. Biological Psychiatry, 51(1), 59–67.

- Hennenlotter A., Schroeder U., Erhard P., et al. (2005). A common neural basis for receptive and expressive communication of pleasant facial affect. Neuroimage, 26(2), 581–91.

- Hyniewska S., Sato W. (2015). Facial feedback affects valence judgments of dynamic and static emotional expressions. Frontiers in Psychology, 6, 291.

- Ibarretxe-Bilbao N., Junque C., Tolosa E., et al. (2009). Neuroanatomical correlates of impaired decision-making and facial emotion recognition in early Parkinson's disease. European Journal of Neuroscience, 30(6), 1162–71.

- Kelly R.M., Strick P.L. (2003). Cerebellar loops with motor cortex and prefrontal cortex of a nonhuman primate. The Journal of Neuroscience, 23(23), 8432–44.

- Keltner D., Kring A.M. (1998). Emotion, social function, and psychopathology. Review of General Psychology, 2(3), 320–42.

- Kohler C.G., Walker J.B., Martin E.A., Healey K.M., Moberg P.J. (2010). Facial emotion perception in schizophrenia: a meta-analytic review. Schizophrenia Bulletin, 36(5), 1009–19.

- Kosaka H., Omori M., Munesue T., et al. (2010). Smaller insula and inferior frontal volumes in young adults with pervasive developmental disorders. Neuroimage, 50(4), 1357–63.

- Kret M.E., de Gelder B. (2012). A review on sex differences in processing emotional signals. Neuropsychologia, 50(7), 1211–21.

- Kret M.E., Ploeger A. (2015). Emotion processing deficits: a liability spectrum providing insight into comorbidity of mental disorders. Neuroscience and Biobehavioral Reviews, 52, 153–71.

- Lancaster J.L., Tordesillas-Gutiérrez D., Martinez M., et al. (2007). Bias between MNI and Talairach coordinates analyzed using the ICBM-152 brain template. Human Brain Mapping, 28(11), 1194–205.

- Lee T.W., Josephs O., Dolan R.J., Critchley H.D. (2006). Imitating expressions: emotion-specific neural substrates in facial mimicry. Social Cognitive & Affective Neuroscience, 1(2), 122–35.

- Likowski K.U., Mühlberger A., Gerdes A.B., Wieser M.J., Pauli P., Weyers P. (2012). Facial mimicry and the mirror neuron system: simultaneous acquisition of facial electromyography and functional magnetic resonance imaging. Frontiers in Human Neuroscience, 6, 214.

- Lin M.-T., Huang K.-H., Huang C.-L., Huang Y.-J., Tsai G.E., Lane H.-Y. (2012). MET and AKT genetic influence on facial emotion perception. PLoS One, 7(4), e36143.

- Maldjian J.A., Laurienti P.J., Kraft R.A., Burdette J.H. (2003). An automated method for neuroanatomic and cytoarchitectonic atlas-based interrogation of fMRI data sets. Neuroimage, 19(3), 1233–9.

- Mancuso F., Horan W.P., Kern R.S., Green M.F. (2011). Social cognition in psychosis: multidimensional structure, clinical correlates, and relationship with functional outcome. Schizophrenia Research, 125(2–3), 143–51.

- Manjón J.V., Coupé P., Martí-Bonmatí L., Collins D.L., Robles M. (2010). Adaptive non-local means denoising of MR images with spatially varying noise levels. Journal of Magnetic Resonance Imaging, 31(1), 192–203.

- Matsumoto D., Ekman P. (1988). Japanese and Caucasian Facial Expressions of Emotion. San Francisco, CA: Intercultural and Emotion Research Laboratory, Department of Psychology, San Francisco State University.

- McIntosh D.N., Reichmann-Decker A., Winkielman P., Wilbarger J.L. (2006). When the social mirror breaks: deficits in automatic, but not voluntary, mimicry of emotional facial expressions in autism. Developmental Science, 9(3), 295–302.

- Niedenthal P.M. (2007). Embodying emotion. Science, 316(5827), 1002–5.

- O’Reilly J.X., Beckmann C.F., Tomassini V., Ramnani N., Johansen-Berg H. (2010). Distinct and overlapping functional zones in the cerebellum defined by resting state functional connectivity. Cerebral Cortex, 20(4), 953–65.

- Oberman L.M., Winkielman P., Ramachandran V.S. (2007). Face to face: blocking facial mimicry can selectively impair recognition of emotional expressions. Social Neuroscience, 2(3–4), 167–78.

- Okada T., Kubota Y., Sato W., Murai T., Pellion F., Gorog F. (2015). Common impairments of emotional facial expression recognition in schizophrenia across French and Japanese cultures. Frontiers in Psychology, 6, 1018.

- Oldfield R.C. (1971). The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia, 9(1), 97–113.

- Otsubo T., Tanaka K., Koda R., et al. (2005). Reliability and validity of Japanese version of the mini-international neuropsychiatric interview. Psychiatry and Clinical Neurosciences, 59(5), 517–26.

- Palermo R., O’Connor K.B., Davis J.M., Irons J., McKone E. (2013). New tests to measure individual differences in matching and labelling facial expressions of emotion, and their association with ability to recognise vocal emotions and facial identity. PLoS One, 8(6), e68126.

- Pfeifer J.H., Iacoboni M., Mazziotta J.C., Dapretto M. (2008). Mirroring others’ emotions relates to empathy and interpersonal competence in children. Neuroimage, 39(4), 2076–85.

- Pitcher D. (2014). Facial expression recognition takes longer in the posterior superior temporal sulcus than in the occipital face area. The Journal of Neuroscience, 34(27), 9173–7.

- Rajapakse J.C., Giedd J.N., Rapoport J.L. (1997). Statistical approach to segmentation of single-channel cerebral MR images. IEEE Transactions on Medical Imaging, 16(2), 176–86.

- Rizzolatti G., Fogassi L., Gallese V. (2001). Neurophysiological mechanisms underlying the understanding and imitation of action. Nature Reviews Neuroscience, 2(9), 661–70.

- Ruigrok A.N., Salimi-Khorshidi G., Lai M.C., et al. (2014). A meta-analysis of sex differences in human brain structure. Neuroscience and Biobehavioral Reviews, 39, 34–50.

- Sabatinelli D., Fortune E.E., Li Q., et al. (2011). Emotional perception: meta-analyses of face and natural scene processing. Neuroimage, 54(3), 2524–33.

- Sato W., Fujimura T., Kochiyama T., Suzuki N. (2013a). Relationships among facial mimicry, emotional experience, and emotion recognition. PLoS One, 8(3), e57889.

- Sato W., Kochiyama T., Uono S., et al. (2013b). Rapid and multiple-stage activation of the human amygdala for processing facial signals. Communicative & Integrative Biology, 6(4), e24562.

- Sato W., Kubota Y., Okada T., Murai T., Yoshikawa S., Sengoku A. (2002). Seeing happy emotion in fearful and angry faces: qualitative analysis of facial expression recognition in a bilateral amygdala-damaged patient. Cortex, 38(5), 727–42.

- Sato W., Toichi M., Uono S., Kochiyama T. (2012). Impaired social brain network for processing dynamic facial expression in autism spectrum disorders. BMC Neuroscience, 13, 99.

- Shamay-Tsoory S.G., Aharon-Peretz J., Perry D. (2009). Two systems for empathy: a double dissociation between emotional and cognitive empathy in inferior frontal gyrus versus ventromedial prefrontal lesions. Brain, 132(Pt 3), 617–27.

- Stoodley C.J., Schmahmann J.D. (2009). Functional topography in the human cerebellum: a meta-analysis of neuroimaging studies. Neuroimage, 44(2), 489–501.

- Takeuchi H., Taki Y., Sassa Y., et al. (2012). Regional gray and white matter volume associated with Stroop interference: evidence from voxel-based morphometry. Neuroimage, 59(3), 2899–907.

- Tohka J., Zijdenbos A., Evans A. (2004). Fast and robust parameter estimation for statistical partial volume models in brain MRI. Neuroimage, 23(1), 84–97.

- Uljarevic M., Hamilton A. (2013). Recognition of emotions in autism: a formal meta-analysis. Journal of Autism and Developmental Disorders, 43(7), 1517–26.

- Uono S., Sato W., Toichi M. (2011). The specific impairment of fearful expression recognition and its atypical development in pervasive developmental disorder. Social Neuroscience, 6(5–6), 452–63.

- Uono S., Sato W., Toichi M. (2013). Common and unique impairments in facial-expression recognition in pervasive developmental disorder-not otherwise specified and Asperger’s disorder. Research in Autism Spectrum Disorders, 7(2), 361–8.

- Van den Stock J., De Winter F.L., de Gelder B., et al. (2016). Impaired recognition of body expressions in the behavioral variant of frontotemporal dementia. Neuropsychologia, 75, 496–504.

- Williams L.M., Phillips M.L., Brammer M.J., et al. (2001). Arousal dissociates amygdala and hippocampal fear responses: evidence from simultaneous fMRI and skin conductance recording. Neuroimage, 14(5), 1070–9.

- Worsley K.J., Marrett S., Vandal A.C., Neelin P., Friston K.J., Evans A.C. (1996). A unified statistical approach for determining significant signals in images of cerebral activation. Human Brain Mapping, 4(1), 58–73.

- Yamasaki S., Yamasue H., Abe O., et al. (2010). Reduced gray matter volume of pars opercularis is associated with impaired social communication in high-functioning autism spectrum disorders. Biological Psychiatry, 68(12), 1141–7.

- Yoshimura S., Sato W., Uono S., Toichi M. (2015). Impaired overt facial mimicry in response to dynamic facial expressions in high-functioning autism spectrum disorders. Journal of Autism and Developmental Disorders, 45(5), 1318–28.

- Zhao K., Yan W.J., Chen Y.H., Zuo X.N., Fu X. (2013). Amygdala volume predicts inter-individual differences in fearful face recognition. PLoS One, 8(8), e74096.