Abstract

Recent behavioral and neuroimaging studies demonstrate that labeling one’s emotional experiences and perceptions alters those states. Here, we used a comprehensive meta-analysis of the neuroimaging literature to systematically explore whether the presence of emotion words in experimental tasks has an impact on the neural representation of emotional experiences and perceptions across studies. Using a database of 386 studies, we assessed brain activity when emotion words (e.g. ‘anger’, ‘disgust’) and more general affect words (e.g. ‘pleasant’, ‘unpleasant’) were present in experimental tasks vs not present. As predicted, when emotion words were present, we observed more frequent activations in regions related to semantic processing. When emotion words were not present, we observed more frequent activations in the amygdala and parahippocampal gyrus, bilaterally. The presence of affect words did not have the same effect on the neural representation of emotional experiences and perceptions, suggesting that our observed effects are specific to emotion words. These findings are consistent with the psychological constructionist prediction that in the absence of accessible emotion concepts, the meaning of affective experiences and perceptions is ambiguous. Findings are also consistent with the regulatory role of ‘affect labeling’. Implications of the role of language in emotion construction and regulation are discussed.

Keywords: emotion, language, concepts, meta-analysis

Introduction

It is often assumed that language merely labels or communicates emotional states that have already been generated (Ekman and Cordaro, 2011; Wood and Niedenthal, 2015). However, psychological constructionist models of emotion suggest that words that name emotion concepts (‘fear’, ‘disgust’, ‘anger’) are in fact constitutive of emotions. In these models, emotion words support the conceptual knowledge that helps the brain make meaning of affective sensations in a given context. In doing so, conceptual knowledge helps ‘construct’ emotions because it transforms ambiguous affective sensations into experiences and perceptions of certain discrete emotions (Barrett et al., 2007; Lindquist and Gendron, 2013; Lindquist et al., 2015a,b).

Consistent with the psychological constructionist view, a growing body of research demonstrates the behavioral and cognitive impact of emotion concept words on emotional experiences and perceptions (Pennebaker and Beall, 1986; Fugate et al., 2010; Widen and Russell, 2010; Lieberman, 2011; Lieberman et al., 2011; Kircanski et al., 2012; Kassam and Mendes, 2013; Lindquist and Gendron, 2013; Lindquist et al., 2015a, b; Niles et al., 2015, for reviews). Yet only a handful of neuroimaging experiments have explicitly assessed how emotion concept words impact the neural representation of emotional experiences and perceptions (Banks et al., 2007; Lieberman et al., 2007; Satpute et al., in press). We thus performed a comprehensive meta-analysis of the neuroimaging literature on emotion to examine whether the presence or absence of emotion concept words in experimental paradigms alters brain activity during experiences and perceptions of emotion. This meta-analysis allowed us to examine whether emotion concept words consistently influence the neural representation of emotions, which would demonstrate that emotion concept words have more than a trivial impact on how the brain processes affective stimuli.

Language and the psychological construction of emotion

Psychological constructionist models uniquely predict a constitutive role of language in emotion. In particular, the psychological constructionist approach predicts that emotions are the product of more basic affective and conceptual processes (Lindquist and Barrett, 2008). Consistent with recent predictive coding approaches to perception (Panichello et al., 2012), the psychological constructionist view hypothesizes that the brain is continuously making affective predictions about how stimuli in the world will impact the organism, and then refining those affective predictions into more specific emotional experiences and perceptions using conceptual knowledge about emotion (e.g. anger, disgust, fear, etc.) (Cunningham et al., 2013; Lindquist, 2013; Barrett and Simmons, 2015). The brain’s initial affective predictions help to determine how stimuli will impact the body (whether something is good, bad, arousing or calming) and prepare the organism for action. These predictions are referred to as ‘core affect’ (Russell and Barrett, 1999; Russell, 2003) and are supported by limbic and paralimbic cortices (Barrett and Bliss-Moreau, 2009; Barrett and Simmons, 2015). In particular, a distributed network consisting of the dorsal and ventral anterior insula, dorsal and ventral anterior cingulate cortex, basal ganglia, and amygdala (i.e. a ‘salience’ or ‘ventral attention’ network; Seeley et al., 2007; Lindquist et al., 2012; Barrett and Simmons, 2015; Lindquist et al., 2016b) is hypothesized to compute these early affective predictions. The amygdala is thought to play a key role in this core affective network by marshaling autonomic changes in the body and increasing sensory processing to particularly uncertain and motivationally relevant stimuli (for discussions, see Cunningham and Brosch, 2012; Lindquist et al., 2012).
Uncertainty about the meaning of a stimulus suggests that the brain needs to make further predictions to ascertain that stimulus’ impact for the organism.

The psychological constructionist account predicts that initial affective predictions are subsequently refined into experiences and perceptions of discrete emotions when the brain draws on semantic knowledge to improve predictions about the more specific meaning of core affective sensations in that context (e.g. that feelings of unpleasantness and arousal are an indication that a stimulus is disgusting vs fearful) (Cunningham et al., 2013; Lindquist, 2013; Barrett, 2014). This type of prediction is referred to as ‘conceptualization’ and is supported by regions that are thought to represent prior experiences and semantic knowledge such as the dorsal and ventral medial prefrontal cortex, posterior cingulate cortex, hippocampus, lateral temporal cortex, anterior temporal lobe and the ventrolateral prefrontal cortex (i.e. the ‘default network’; Raichle et al., 2001; ‘association network’; Schacter et al., 2007; ‘context network’; Bar, 2009; or ‘conceptual hub network’; Binder, 2016) (Lindquist and Barrett, 2012; Barrett and Satpute, 2013). It is known that conceptualization helps make predictions about the meaning of visual sensations by spontaneously retrieving semantic knowledge that exerts a top-down predictive influence on ongoing perceptions (e.g. to determine if an object is a gun vs hairdryer) (Bar, 2007; Chaumon et al., 2014). A psychological constructionist view predicts that one’s own internal core affective sensations (Russell, 2003; Barrett, 2006; Lindquist, 2013) or visual sensations of another person’s core affective facial muscle movements (Barrett et al., 2007; Lindquist and Gendron, 2013) are similarly made meaningful as instances of anger, disgust, fear, etc. by drawing on emotion concept knowledge acquired over prior experiences and organized by linguistic category labels such as ‘anger’, ‘disgust’, etc.
Ventral aspects of the lateral prefrontal cortex (vlPFC), in particular, play a key role in this network by retrieving these semantic representations (Thompson-Schill et al., 2007) and autobiographical representations (Simons and Spiers, 2003; St. Jacques et al., 2012) of prior experiences for use in the moment. Activity within vlPFC is thus often considered evidence that the brain is accessing the rich cache of situation-specific knowledge about a concept for online use.

Important to the present paper, there is much evidence that in humans, conceptualization works in tandem with language. Research demonstrates that language not only helps individuals acquire new concepts (Xu, 2002; Lupyan et al., 2007) but that access to linguistic concepts also shapes online processing of visual sensations (Lupyan, 2012). For instance, activating linguistic concepts warps memories of perceptual objects towards more categorical representations (Hemmer and Persaud, 2014), and even shapes online visual perception (Lupyan and Spivey, 2010). The relationship between conceptual knowledge and language is especially critical for abstract concepts such as emotion categories, which are comprised of embodied representations of prior experiences combined with culturally acquired knowledge about the situations, bodily feelings and facial expressions associated with a particular emotion category (Vigliocco et al., 2009; Lindquist et al., 2015b). The psychological constructionist approach thus hypothesizes that emotion words play a role in constituting emotional experiences and perceptions because they help people store and then access the conceptual knowledge about emotions used to make predictions about the meaning of external (e.g. visual) and internal (i.e. interoceptive) sensations in the moment.

Of course, not every emotional experience or perception occurs in the explicit presence of emotion words, or is explicitly categorized with language. However, the psychological constructionist approach predicts that conceptualization occurs implicitly when semantic knowledge about emotion categories is used to make meaning of ambiguous affective predictions in a given context (Lindquist and Barrett, 2008; Wilson-Mendenhall et al., 2011). In a particular instance, an individual’s conceptual knowledge is rapidly and implicitly integrated to make meaning of ongoing sensory experiences, which allows ambiguous affective sensations from the body and environment to be made meaningful as instances of a specific emotion. For instance, access to the linguistic concept of ‘fear’ (vs ‘anger’ or no emotion-specific concepts) transforms unpleasant and highly aroused core affect into an experience of fear, as indicated by participants’ increased perceptions of threat in the environment (Lindquist and Barrett, 2008). Therefore, an emotion is a constructed event that is a product of core affect, conceptualization and the features of the present context.

Importantly, according to our psychological constructionist approach, not all words are created equal when it comes to constructing emotional experiences and perceptions. We hypothesize that words that name emotion concepts (e.g. ‘anger’, ‘disgust’, ‘fear’, ‘sadness’, ‘joy’, ‘amusement’, etc.) possess greater predictive power than words that name affective states (e.g. ‘pleasant’, ‘unpleasant’, ‘positive’, ‘negative’, ‘good’, ‘bad’) insofar as emotion concept words help refine the meaning of otherwise ambiguous core affective predictions by transforming them into experiences and perceptions of specific emotion categories (e.g. transforming a feeling of unpleasant, high arousal into the experience of fear vs anger; Lindquist and Barrett, 2008). We thus hypothesize that words that name emotion concepts will be more likely than words that name affective concepts to prompt semantic retrieval of specific instances of prior discrete emotional experiences and perceptions. Specifically, we predict that discrete emotion words, but not affect words, will help the brain make meaning of ambiguous core affective predictions, refining those core affective representations into experiences and perceptions of anger, fear, sadness, joy, amusement and so on.

Neuroimaging evidence for the role of language in emotion

Most research thus far has focused on the role of language in emotion using behavioral experiments (Lindquist and Gendron, 2013; Lindquist et al., 2015a, b, 2016a, for reviews), but growing evidence from neuroimaging is also consistent with the idea that emotion concepts supported by language help constitute emotions. A meta-analysis of neuroimaging studies on emotion (Lindquist et al., 2012) found that brain regions consistently activated across studies of semantic processing such as the dorsomedial prefrontal cortex, ventrolateral prefrontal cortex, lateral temporal cortex and anterior temporal lobe (Binder et al., 2009) also showed consistent increases in activation across studies of emotion (see Lindquist et al., 2015b for areas of consistent overlap during emotions and semantics). A neuroimaging study observed that thinking about emotions, experiencing an emotion, and attending to body feelings share overlapping activity within the ‘default network’ hypothesized to support semantic retrieval and use (Oosterwijk et al., 2012). In particular, the anterior temporal lobe, a brain region involved in representing semantic knowledge (Patterson et al., 2007) had greater activity during emotions as compared to general positive and negative body feelings (Oosterwijk et al., 2012). These findings suggest that emotional experiences and perceptions may draw on conceptual knowledge of emotion during the construction of emotional states.

A separate, yet compatible, line of research has specifically investigated how the neural representation of emotion changes when participants are asked to explicitly label their affective states. This research finds that explicit, forced-choice labeling of negative facial expressions during an emotion perception task increases activity in the ventrolateral prefrontal cortex and decreases amygdala activity (Hariri et al., 2000; Lieberman et al., 2007). The strength of connectivity between vlPFC and amygdala is also increased by the presence of emotion concept words, which results in decreased amygdala activity (Torrisi et al., 2013). Additionally, labeling negative emotional experiences and pairing aversive stimuli with emotion category labels reduces autonomic reactivity to threatening images (Tabibnia et al., 2008), and increases the efficacy of exposure interventions in clinical populations such as sufferers of public speaking anxiety (Niles et al., 2015) and arachnophobia (Kircanski et al., 2012). Due to the overlap of regions involved in ‘affect labeling’ and emotion regulation (Payer et al., 2012; but see Burklund et al., 2014 for some subtle distinctions), it is argued that affect labeling serves as a form of implicit emotion regulation (Lieberman, 2011).

A neuroimaging meta-analysis of the role of language in emotion

Although individual studies suggest that language may play a constitutive role in emotion by transforming initial affective predictions into experiences and perceptions of discrete emotions, no study has systematically addressed this hypothesis on a large scale. In the present report, we use a comprehensive coordinate-based meta-analysis of the neuroimaging literature on emotion to build upon the predictions of the psychological constructionist approach and previous empirical findings. In particular, we use meta-analysis to systematically investigate how emotion concept words alter brain activity in emotion. If emotion words had no impact on how the brain represented emotion, then this would suggest that language is indeed epiphenomenal to emotion (Ekman and Cordaro, 2011). In contrast, if the neural basis of emotions is fundamentally different when emotion concept words are present vs absent in tasks, then this would suggest that emotion words are impacting emotional experiences and perceptions in more than trivial ways.

Meta-analysis is particularly advantageous for addressing questions about the role of language in emotion because meta-analysis reveals brain regions that are consistently activated during certain conditions, even when individual studies were not specifically designed to address the hypothesis at hand. In the present meta-analysis, we coded individual contrasts from 386 studies containing 7333 participants reporting peak coordinates from 876 contrasts (see Supplementary Table S1 in Supplementary Material). Studies were published between 1993 and the end of 2014. Studies were coded to indicate whether those contrasts included experimental tasks with emotion words or affect words present. We hypothesized that the presence of emotion words anywhere throughout a task would implicitly prime emotion concepts, causing participants to draw on conceptual knowledge of certain discrete emotion categories when making meaning of initial affective predictions about stimuli. This would, in turn, reduce the ambiguity of those initial affective predictions by refining them as being about an instance of anger, fear, etc. We thus predicted that experimental contrasts in which emotion concept words were present would be associated with consistent activations in brain regions involved in the retrieval and representation of semantic knowledge, such as ventrolateral prefrontal cortex and aspects of the lateral temporal lobe (including superior temporal gyrus and anterior temporal lobe) (Visser et al., 2010). In contrast, in studies that did not explicitly include emotion concept words throughout their experiment, we predicted that participants would be relatively less likely to access emotion concepts and thus fail to further elaborate on and refine initial affective predictions into experiences and perceptions of discrete emotions, causing those initial affective predictions to remain ambiguous.
We thus predicted that in contrasts in which emotion words were absent, we would observe consistent activity in the amygdala, an aspect of the salience network particularly activated by uncertainty (Whalen, 2007). Finally, we predicted that when compared to emotion concept words, affect words (e.g. ‘unpleasant’, ‘pleasant’) would have less of an impact on the neural basis of emotional experiences and perceptions because they would not provide the same amount of refinement about the meaning of initial affective predictions.

Methods

Database

The database of studies used in this meta-analysis included fMRI and PET studies that employed tasks related to emotional (e.g. anger, fear, disgust, happy, sad, etc.) and affective (e.g. positive and negative) experiences and perceptions published between January 1993 and December 2014. See Supplementary Materials for specific details about the database and a list of studies included. We began by coding contrasts from individual studies to indicate whether the experimental tasks included emotion concept words or not, and the frequency with which emotion words were present in that task. For example, contrasts were considered to have emotion words present if they contained words that were present in verbal or visual instructions at points throughout the task (e.g. an instructions screen at the beginning of each experimental block told participants they would see ‘angry’ faces on the next block of trials) or if words were present on the screen in every trial (e.g. asking for ratings of discrete emotions with response options like ‘anger’, ‘disgust’, etc. after the neural response to the stimulus was modeled on every trial). To be able to titrate the effect of the presence of emotion concept words, we separated contrasts that had a lot of word priming (e.g. on every trial throughout the contrast; Level 2; 33 studies) and those that had relatively less (e.g. at the beginning or end of blocks; Level 1; 67 studies). Studies were considered to not have emotion words present (Level 0; 264 studies) if the methods section explicitly reported that no emotion words were used or if the methods did not mention the use of emotion words during instructions, as response options, or as stimuli. To be conservative, studies were not included if they included the very minimal use of emotion words prior to scanning (e.g. emotion words were present in a behavioral task performed prior to scanning, or included in a verbal instruction phase conducted outside of the scanner).
Of note, half the studies including emotion words modeled the neural response to an emotionally evocative stimulus (e.g. an image, autobiographical memory, scenario, sound, facial expression, etc.) separately from portions of the task in which any words were on screen. Thus, in many studies, the only difference between Levels 0, 1 and 2 contrasts was that emotion words had not been primed (Level 0) or had been primed (Levels 1 and 2) at some point preceding emotional experiences and perceptions.

To address the role of emotion concept words vs more general affect words, contrasts were additionally coded for the presence of affect-related words in tasks. Coding followed that for emotion words, but specifically applied to affect-general words such as ‘positive’, ‘negative’, ‘pleasant’ and ‘unpleasant’ (Level 2; 13 studies; Level 1; 49 studies; Level 0; 319 studies). We focused specifically on words describing valence since this described the majority of affect-related words in tasks (i.e. arousal words were less frequent).
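The three-level coding scheme described above can be summarized as a small decision rule. The following is only an illustrative sketch: the names `WordLevel` and `code_contrast`, and the three boolean flags, are hypothetical and are not taken from the coding materials. It assumes that the every-trial criterion takes precedence when several criteria apply.

```python
from enum import IntEnum
from typing import Optional


class WordLevel(IntEnum):
    """Hypothetical labels for the three coding levels described in the text."""
    ABSENT = 0        # no emotion (or affect) words reported in the task
    INTERMITTENT = 1  # words at points in the task, e.g. block instructions
    EVERY_TRIAL = 2   # words on screen in every trial


def code_contrast(on_every_trial: bool,
                  at_points_in_task: bool,
                  only_before_scanning: bool) -> Optional[WordLevel]:
    """Return the coding level, or None if the contrast would be excluded."""
    if on_every_trial:
        # Assumption: the strongest exposure wins when several flags are set.
        return WordLevel.EVERY_TRIAL
    if at_points_in_task:
        return WordLevel.INTERMITTENT
    if only_before_scanning:
        # Minimal pre-scan exposure: excluded rather than coded as Level 0.
        return None
    return WordLevel.ABSENT
```

The same rule would apply to affect words by substituting valence terms for emotion terms when setting the flags.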

Multilevel kernel density analysis

The present meta-analysis used a Multi-level Kernel Density Analysis (MKDA) (Wager et al., 2007) implemented in NeuroElf (http://neuroelf.net). This technique summarizes the overlap in peak activations from individual study contrasts in order to report voxels that show consistent increases in activation for a given meta-analytic contrast (e.g. emotion words present vs not present). The MKDA treats each individual contrast as the unit of analysis, rather than each individual study, which keeps single studies that report several highly related contrasts from unduly influencing the meta-analytic results. Contrasts from all studies assessing emotional experiences and perceptions were included in the present meta-analysis, including contrasts assessing a difference in neural activity between emotion categories (e.g. anger vs disgust) and those assessing neural activity associated with a specific emotion category (e.g. anger vs neutral).

Following the standard MKDA procedure (Wager et al., 2007; Kober et al., 2008; Lindquist et al., 2016b), coordinates from each study contrast were first convolved with 12 mm spheres to produce binary indicator maps. The resulting maps for each contrast were then weighted to control for differences in sample size and rigor of statistical analysis: each individual contrast map was weighted by the square root of the sample size used so that studies with higher sample sizes would have greater influence on the results, and studies which used fixed-effects analyses were down-weighted by 0.75 to reduce their contribution to the meta-analytic results. Finally, we computed meta-analytic contrasts to assess the role of emotion and affect words in shaping neural activity associated with emotion (described in more detail below). For each meta-analytic contrast, inferences were made by comparing the proportion of study contrasts that report activation in a given voxel to an empirically derived null distribution created using Monte Carlo simulations. On each simulation, an MKDA map was calculated based on the probability of a particular proportion of studies reporting activation near a given voxel, and was compared to a null distribution calculated through random sampling of scrambled peak activations across the whole brain, excluding ventricles and white matter. Five thousand simulations were performed for each voxel-level analysis and voxels surpassing the primary threshold of P < 0.001 were retained. The resulting maps were cluster-level thresholded using a family wise error rate of P < 0.05.
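The weighting steps above can be illustrated with a minimal sketch. This is not the NeuroElf implementation. It assumes that the 12 mm value is a sphere radius, that voxels are given as coordinates in mm, and that the density statistic is the weighted proportion of contrasts with a peak near each voxel. The function names and the dictionary keys (`peaks`, `n`, `fixed_effects`) are hypothetical.

```python
import math


def indicator_map(peaks, voxels, radius_mm=12.0):
    """Binary map: 1.0 where a voxel lies within radius_mm of any reported peak."""
    return [
        1.0 if any(math.dist(v, p) <= radius_mm for p in peaks) else 0.0
        for v in voxels
    ]


def contrast_weight(n_subjects, fixed_effects):
    """Square root of sample size, down-weighted by 0.75 for fixed-effects analyses."""
    return math.sqrt(n_subjects) * (0.75 if fixed_effects else 1.0)


def density_map(contrasts, voxels, radius_mm=12.0):
    """Weighted proportion of contrasts reporting activation near each voxel."""
    weights = [contrast_weight(c["n"], c["fixed_effects"]) for c in contrasts]
    maps = [indicator_map(c["peaks"], voxels, radius_mm) for c in contrasts]
    total = sum(weights)
    return [
        sum(w * m[i] for w, m in zip(weights, maps)) / total
        for i in range(len(voxels))
    ]
```

Given a list of contrast dictionaries and a list of voxel coordinates, `density_map` returns one value between 0 and 1 per voxel. The Monte Carlo thresholding step is not shown.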

Isolating the effect of emotion words

In our first analysis, we wanted to implement the most conservative test of the hypothesis that emotion concept words impact the neural representation of emotions. To do this, we sought a meta-analytic contrast that would isolate the presence vs absence of emotion concept words while keeping constant as many other task features as possible. We thus targeted consistent neural activity that was associated with labeling faces with emotion words vs. labeling faces with gender words, and vice-versa. There were 23 studies including 66 contrasts in our database that asked participants to categorize emotional stimuli (in particular, faces and voices) with emotion labels and 34 studies including 86 contrasts in our database that asked participants to categorize similar stimuli by gender (Supplementary Table S1 in Supplementary Material). Importantly, both types of studies used emotional facial or vocal expressions as their target stimuli and required participants to use a category label to make a response on every trial, meaning that the only difference between the conditions was the presence of emotion or gender words. Making this comparison also reduced the likelihood that there were differences between the conditions related to degrees of attention or cognitive control enlisted by the tasks. To assess the difference between emotion and gender categorizations, we computed two meta-analytic contrasts: (1) ‘emotion words present’ vs ‘gender words present’, and (2) ‘gender words present’ vs ‘emotion words present’.

Demonstrating the broader effect of emotion words on the neural representation of emotions

After completing the first, narrower, test of our hypothesis, we extended our analysis to our entire database of studies to assess whether our results would generalize across a wide variety of experimental tasks (see Supplementary Materials for details on the tasks and stimuli used by the studies). This analysis was less controlled than the first set of analyses we ran since studies varied in the tasks they employed. However, this analysis was more powerful, insofar as it examines whether the impact of emotion concept words on the neural basis of emotion generalizes across a variety of experimental contexts. To first assess the role of emotion words in shaping neural activity related to a variety of emotional experiences and perceptions, we computed two meta-analytic contrasts: (1) ‘emotion words present’ vs ‘emotion words not present’, and (2) ‘emotion words not present’ vs ‘emotion words present’. Here, we examined the effect of any emotion words present (Levels 1 and 2) vs no words present (Level 0) and vice versa. We also ran four additional contrasts to assess incremental effects caused by varying degrees of emotion concept knowledge present in experimental tasks: (1) ‘emotion words present in every trial’ vs ‘emotion words present throughout the task’ (Level 2 > Level 1), (2) ‘emotion words present throughout the task’ vs ‘emotion words present in every trial’ (Level 1 > Level 2), (3) ‘emotion words present throughout the task’ vs ‘emotion words not present’ (Level 1 > Level 0) and (4) ‘emotion words not present’ vs ‘emotion words present throughout the task’ (Level 0 > Level 1).
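One plausible reading of these meta-analytic contrasts, sketched below for a single voxel, is a difference between the weighted proportions of contrasts activating near that voxel in each group of coding levels. This is an assumption about the computation, not a description confirmed by the text. The names `weighted_proportion` and `level_difference` and the dictionary keys are hypothetical.

```python
import math


def weighted_proportion(contrasts):
    """Weighted share of contrasts that are active at one voxel."""
    weights = [
        math.sqrt(c["n"]) * (0.75 if c["fixed_effects"] else 1.0)
        for c in contrasts
    ]
    active = sum(w * c["active"] for w, c in zip(weights, contrasts))
    return active / sum(weights)


def level_difference(contrasts, first_levels=(1, 2), second_levels=(0,)):
    """Difference in weighted proportions between two groups of coding levels.

    The defaults correspond to 'emotion words present' (Levels 1 and 2)
    vs 'emotion words not present' (Level 0).
    """
    first = [c for c in contrasts if c["level"] in first_levels]
    second = [c for c in contrasts if c["level"] in second_levels]
    return weighted_proportion(first) - weighted_proportion(second)
```

Swapping the two level arguments gives the reverse contrast, and passing `first_levels=(2,)` with `second_levels=(1,)` gives the Level 2 > Level 1 comparison. In practice the resulting difference would still need to be compared against the Monte Carlo null distribution.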

Testing the effect of emotion words on the neural representation of experiences and perceptions of emotion and positive and negative emotions

To examine whether language played a comparable role across all types of emotional states (experiences or perceptions and positive or negative emotions), we next computed the main contrasts ‘emotion words present’ vs ‘emotion words not present’ (Levels 1 and 2 > Level 0), and ‘emotion words not present’ vs ‘emotion words present’ (Level 0 > Levels 1 and 2) on four subsets of the database: (1) studies of emotion experience including positive and negative emotions, (2) studies of emotion perception including positive and negative emotions, (3) studies of emotion experience and perception which focused on negative emotions and (4) studies of emotion experience and perception which focused on positive emotions.

Testing the effect of affect-related words

Finally, to examine whether emotion concept words had a differential impact on the neural basis of emotions than affect words, we performed two analyses. First, we computed a meta-analytic contrast comparing the presence of emotion concept words (Levels 1 and 2) with the presence of affect words (Levels 1 and 2). Second, we replicated the main emotion word analyses with affect words [any affect word present (Levels 1 and 2) > no affect word present (Level 0)].

Results

Isolating the effect of emotion words

The first meta-analytic contrast focused on studies of emotional face and voice perception where categorization of either emotion or gender was required on every trial. Within this subset of the database, we computed two meta-analytic contrasts: emotion words present > gender words present and gender words present > emotion words present (Figure 1A and B). We observed a single cluster in the left inferior frontal gyrus (IFG; centered on −47, 15, 4; k = 998) for the emotion words present > gender words present contrast. The left IFG is implicated in semantic retrieval and use (Thompson-Schill et al., 1997; Grindrod et al., 2008; Huang et al., 2012), consistent with our constructionist hypothesis that emotion words prompt retrieval of emotion concepts.

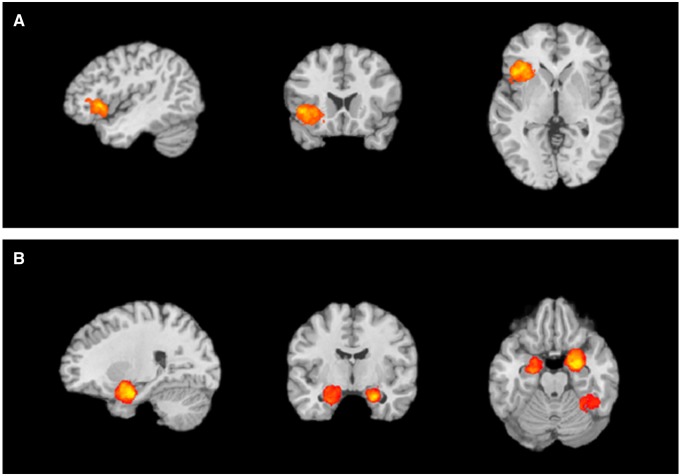

Fig. 1.

Regions with greater activity for (A) the emotion categorization > gender categorization contrast, including clusters in left inferior frontal gyrus (IFG), left dorsal anterior insula and left superior temporal gyrus, and (B) the gender categorization > emotion categorization contrast, including clusters in right and left parahippocampal gyrus (PHG), amygdala, and right declive, culmen and fusiform gyrus.

Also consistent with predictions, we observed clusters in the right amygdala and left PHG (centered on 24, −10, −22; k = 761 and −24, −14, −24; k = 385) for the gender words present > emotion words present contrast. That the amygdala had more frequent activation to facial and vocal expressions in the presence of gender words suggests that in the absence of emotion words, the affective meaning of faces may be relatively more ambiguous and prompt further processing in the brain. Finally, we observed consistent activity in the cerebellum (right declive centered on 32, −50, −16; k = 502) for this contrast.

Demonstrating the broader effect of emotion words on the neural representation of emotions

After running the emotion words present > gender words present analysis on a subset of the database, we sought to observe whether the impact of emotion words generalized to the rest of the database, including both studies of emotion experience and perception and a much larger variety of experimental tasks. For the main emotion words present > emotion words not present (Levels 1 and 2 > Level 0) contrast (Table 1), we observed consistent activations in large clusters of voxels in the right and left superior temporal gyrus (STG) and in the right middle temporal gyrus and inferior frontal gyrus (Figure 2A), all regions that are consistently implicated in semantic retrieval and use (Binder et al., 2009; Visser et al., 2010).

Table 1.

Effect of emotion words on emotion experience and perception

| Region | x | y | z | k | Max | Mean |
|---|---|---|---|---|---|---|
| Contrast: emotion words present > not present (Levels 1 and 2 > Level 0) | | | | | | |
| R Superior Temporal Gyrus | 48 | 10 | −11 | 351 | 0.07 | 0.05 |
| R Superior Temporal Gyrus | 48 | 10 | −11 | | 0.07 | 0.05 |
| R Superior Temporal Gyrus | 50 | 5 | −2 | | 0.06 | 0.05 |
| R Superior Temporal Gyrus | 50 | 10 | −18 | | 0.06 | 0.05 |
| R Superior Temporal Gyrus | 52 | 13 | −2 | | 0.06 | 0.05 |
| R Inferior Frontal Gyrus | 47 | 15 | −5 | | 0.06 | 0.05 |
| R Superior Temporal Gyrus | 50 | 4 | −14 | | 0.06 | 0.05 |
| R Middle Temporal Gyrus | 50 | 3 | −19 | | 0.05 | 0.05 |
| L Superior Temporal Gyrus | −53 | −51 | 15 | 234 | 0.09 | 0.05 |
| L Superior Temporal Gyrus | −53 | −51 | 15 | | 0.09 | 0.06 |
| L Superior Temporal Gyrus | −50 | −51 | 11 | | 0.07 | 0.05 |
| L Superior Temporal Gyrus | −53 | −44 | 17 | | 0.06 | 0.05 |
| L Superior Temporal Gyrus | −54 | −49 | 8 | | 0.06 | 0.05 |
| L Superior Temporal Gyrus | −49 | −45 | 12 | | 0.06 | 0.05 |
| Contrast: emotion words not present > present (Level 0 > Levels 1 and 2) | | | | | | |
| R Parahippocampal Gyrus/Amygdala | 23 | −7 | −10 | 1416 | 0.20 | 0.10 |
| L Parahippocampal Gyrus/Amygdala | −21 | −4 | −12 | 1191 | 0.16 | 0.08 |
| L Parahippocampal Gyrus/Amygdala | −21 | −4 | −12 | | 0.16 | 0.09 |
| L Parahippocampal Gyrus/Amygdala | −31 | −5 | −15 | | 0.13 | 0.07 |
| L Lentiform Nucleus/Putamen | −26 | −11 | −6 | | 0.11 | 0.07 |
| L Fusiform Gyrus | −39 | −52 | −14 | 680 | 0.1 | 0.06 |
| L Fusiform Gyrus | −39 | −52 | −14 | | 0.1 | 0.06 |
| L Fusiform Gyrus | −39 | −56 | −9 | | 0.09 | 0.06 |
| L Cerebellum (Declive) | −39 | −58 | −16 | | 0.09 | 0.06 |
| L Fusiform Gyrus | −37 | −50 | −9 | | 0.09 | 0.06 |
| L Fusiform Gyrus | −36 | −42 | −10 | | 0.07 | 0.05 |
| L Fusiform Gyrus | −43 | −62 | −10 | | 0.07 | 0.06 |
| R Fusiform Gyrus | 30 | −46 | −12 | 398 | 0.07 | 0.05 |
| R Fusiform Gyrus | 30 | −46 | −12 | | 0.07 | 0.05 |
| R Inferior Occipital Gyrus | 43 | −75 | −3 | | 0.07 | 0.05 |
| R Middle Occipital Gyrus | 46 | −79 | 8 | | 0.07 | 0.05 |
| R Fusiform Gyrus | 40 | −49 | −12 | | 0.06 | 0.05 |
| R Middle Occipital Gyrus | 40 | −77 | 5 | | 0.06 | 0.05 |
| R Fusiform Gyrus | 46 | −54 | −11 | | 0.06 | 0.05 |
| R Inferior Occipital Gyrus | 37 | −76 | −7 | | 0.06 | 0.05 |
| R Fusiform Gyrus | 41 | −59 | −12 | | 0.05 | 0.05 |
| R Fusiform Gyrus | 46 | −68 | −7 | | 0.05 | 0.05 |
| Contrast: emotion words every trial > throughout task (Level 2 > Level 1) | | | | | | |
| L Middle Temporal Gyrus | −45 | −62 | 6 | 371 | 0.14 | 0.1 |
| L Middle Temporal Gyrus | −45 | −62 | 6 | | 0.14 | 0.11 |
| L Middle Temporal Gyrus | −44 | −66 | 12 | | 0.13 | 0.11 |
| L Middle Occipital Gyrus | −43 | −70 | 4 | | 0.12 | 0.1 |
| L Middle Temporal Gyrus | −48 | −62 | −1 | | 0.1 | 0.1 |
| L Middle Temporal Gyrus | −43 | −62 | 1 | | 0.1 | 0.1 |
| L Middle Occipital Gyrus | −53 | −71 | 8 | | 0.1 | 0.09 |
| L Middle Temporal Gyrus | −48 | −71 | 11 | | 0.1 | 0.09 |
| Contrast: emotion words throughout task > every trial (Level 1 > Level 2) | | | | | | |
| L Subcallosal Gyrus | −15 | 2 | −12 | 555 | 0.19 | 0.12 |
| L Subcallosal Gyrus | −15 | 2 | −12 | | 0.18 | 0.12 |
| L Subcallosal Gyrus | −21 | 4 | −13 | | 0.18 | 0.13 |
| L Parahippocampal Gyrus/Amygdala | −27 | 3 | −14 | | 0.17 | 0.12 |
| L Parahippocampal Gyrus/Amygdala | −25 | −3 | −16 | | 0.17 | 0.12 |
| L Lentiform Nucleus | −12 | 0 | −5 | | 0.13 | 0.10 |
| L Parahippocampal Gyrus/Amygdala | −24 | −9 | −17 | | 0.15 | 0.11 |
| Contrast: emotion words throughout task > no words (Level 1 > Level 0) | | | | | | |
| L Cerebellum (Culmen) | −4 | −53 | −13 | 289 | 0.10 | 0.06 |
| L Cerebellum (Culmen) | −4 | −53 | −13 | | 0.10 | 0.06 |
| L Cerebellum (Declive) | −10 | −59 | −17 | | 0.07 | 0.06 |
| R Cerebellum (Culmen) | 10 | −51 | −14 | | 0.07 | 0.05 |
| R Superior Frontal Gyrus | 8 | 50 | 30 | 267 | 0.07 | 0.06 |
| R Superior Frontal Gyrus | 8 | 50 | 30 | | 0.07 | 0.06 |
| L Medial Frontal Gyrus | 0 | 50 | 28 | | 0.07 | 0.06 |
| L Superior Frontal Gyrus | −11 | 48 | 29 | | 0.07 | 0.06 |
| Contrast: no emotion words > emotion words throughout task (Level 0 > Level 1) | | | | | | |
| R Parahippocampal Gyrus/Amygdala | 27 | −5 | −12 | 1052 | 0.18 | 0.09 |
| R Parahippocampal Gyrus/Amygdala | 27 | −5 | −12 | | 0.18 | 0.09 |
| R Amygdala | 23 | −7 | −7 | | 0.17 | 0.12 |
| R Lentiform Nucleus | 17 | −7 | −4 | | 0.14 | 0.09 |
| R Lentiform Nucleus | 25 | −9 | −2 | | 0.14 | 0.08 |
| L Parahippocampal Gyrus/Amygdala | −31 | −7 | −10 | 746 | 0.12 | 0.07 |
| L Parahippocampal Gyrus/Amygdala | −31 | −7 | −10 | | 0.12 | 0.08 |
| L Parahippocampal Gyrus/Amygdala | −31 | −5 | −15 | | 0.11 | 0.07 |
| L Parahippocampal Gyrus/Amygdala | −22 | −9 | −12 | | 0.11 | 0.07 |
| L Parahippocampal Gyrus/Amygdala | −21 | −4 | −12 | | 0.11 | 0.07 |
| L Parahippocampal Gyrus/Hippocampus | −24 | −14 | −11 | | 0.10 | 0.07 |
| L Lentiform Nucleus/Putamen | −25 | −9 | −1 | | 0.09 | 0.06 |
| L Lentiform Nucleus | −18 | −13 | −4 | | 0.09 | 0.07 |
| L Claustrum | −31 | −2 | −3 | | 0.08 | 0.06 |
| L Superior Temporal Gyrus | −35 | 3 | −12 | | 0.08 | 0.06 |
| L Parahippocampal Gyrus | −22 | −14 | −15 | | 0.07 | 0.06 |
| L Fusiform Gyrus | −39 | −52 | −12 | 818 | 0.12 | 0.08 |
| L Fusiform Gyrus | −39 | −52 | −12 | | 0.12 | 0.08 |
| L Cerebellum (Declive) | −39 | −58 | −16 | | 0.1 | 0.07 |
| L Fusiform Gyrus | −43 | −62 | −6 | | 0.09 | 0.07 |
| R Fusiform Gyrus | 44 | −56 | −12 | 828 | 0.1 | 0.06 |
| R Fusiform Gyrus | 44 | −56 | −12 | | 0.1 | 0.07 |
| R Fusiform Gyrus | 36 | −49 | −8 | | 0.1 | 0.07 |
| R Middle Occipital Gyrus | 50 | −68 | 5 | | 0.08 | 0.06 |
| R Middle Occipital Gyrus | 44 | −77 | 7 | | 0.07 | 0.06 |
| R Inferior Occipital Gyrus | 43 | −71 | −2 | | 0.07 | 0.06 |
| R Inferior Occipital Gyrus | 46 | −77 | 2 | | 0.07 | 0.06 |
| R Inferior Occipital Gyrus | 45 | −77 | −3 | | 0.06 | 0.06 |
| L Middle Occipital Gyrus | −53 | −69 | 9 | 329 | 0.09 | 0.06 |
| L Middle Occipital Gyrus | −53 | −69 | 9 | | 0.09 | 0.06 |
| L Middle Occipital Gyrus | −48 | −69 | 4 | | 0.08 | 0.06 |
| L Inferior Temporal Gyrus | −47 | −73 | −1 | | 0.06 | 0.06 |

Note: voxel-wise P < 0.001, cluster-wise FWER P < 0.05.

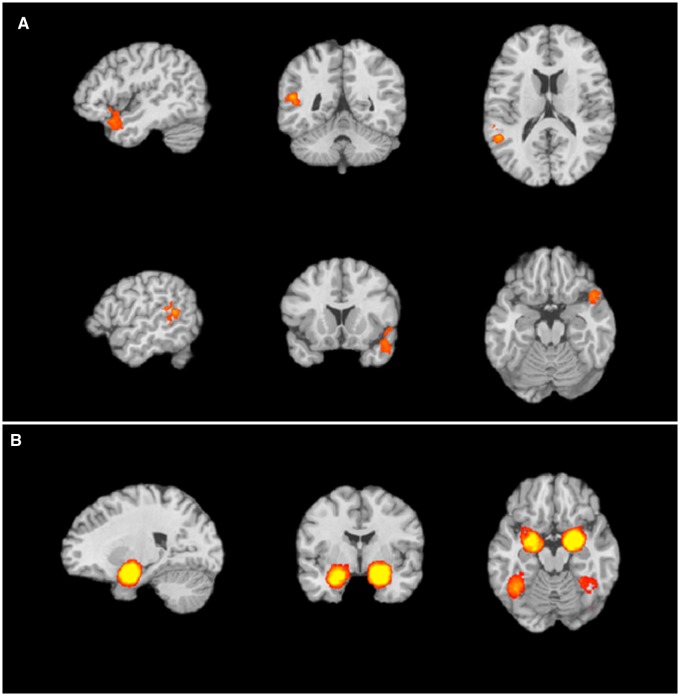

Fig. 2.

Regions with greater activity for (A) the emotion words present > emotion words not present contrast, including clusters in left and right superior temporal gyrus (STG), and (B) the emotion words not present > emotion words present contrast, including clusters in bilateral parahippocampal gyrus (PHG) and amygdala and bilateral fusiform gyrus.

We also examined regions that were modulated by the presence of emotion words by isolating regions that had more frequent activations when emotion words were not present relative to when they were present. For this emotion words not present > emotion words present contrast (Level 0 > Levels 1 and 2) (Table 1), we observed a set of findings similar to those observed when gender words, but not emotion words, were present. Specifically, we observed consistent activation in clusters in bilateral amygdala/parahippocampal gyrus (PHG) and bilateral fusiform gyrus (Figure 2B).

To assess whether any incremental effects could be observed between tasks with varying frequencies of emotion words present (i.e. emotion words present on the screen in every trial vs included in instruction screens at the beginning of each block), we computed four additional contrasts across the whole database (Table 1). For the emotion words present in every trial > emotion words present throughout the task (Level 2 > Level 1) contrast, we observed consistent activations in left middle temporal gyrus extending to middle occipital gyrus. For the emotion words present throughout the task > emotion words present in every trial (Level 1 > Level 2) contrast, we observed consistent activations in a large cluster anchored in the left subcallosal gyrus, including left amygdala and PHG.

For the emotion words present throughout the task > no emotion words present (Level 1 > Level 0) contrast, we observed a pattern similar to the emotion words present > emotion words not present (Levels 1 and 2 > Level 0) contrast. In particular, we again found consistent activations in right STG. We also found consistent activations in the left medial frontal gyrus, right superior frontal gyrus, and aspects of the cerebellum. For the emotion words not present > emotion words present throughout the task (Level 0 > Level 1) contrast, we again observed consistent activations in bilateral amygdala/PHG, fusiform gyrus and middle occipital gyrus.
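As an illustration only, and not the analysis code used for this meta-analysis, the six contrasts reported in Table 1 can be read as partitions of the database by word level (Level 0: no emotion words; Level 1: emotion words present throughout the task; Level 2: emotion words present in every trial). The Python sketch below shows just this grouping step. The names `StudyContrast`, `META_CONTRASTS` and `split_by_contrast`, and the study identifiers, are hypothetical, and none of the spatial statistics or thresholding is represented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StudyContrast:
    """One experimental contrast from the database (hypothetical structure)."""
    study_id: str    # made-up identifier, for illustration only
    word_level: int  # 0 = no emotion words, 1 = throughout task, 2 = every trial


# Each meta-analytic contrast compares one set of word levels against another:
# (levels in the first group, levels in the second group).
META_CONTRASTS = {
    "words present > not present": ({1, 2}, {0}),
    "words not present > present": ({0}, {1, 2}),
    "every trial > throughout task": ({2}, {1}),
    "throughout task > every trial": ({1}, {2}),
    "throughout task > no words": ({1}, {0}),
    "no words > throughout task": ({0}, {1}),
}


def split_by_contrast(contrasts, name):
    """Return the two groups of study contrasts compared by the named contrast."""
    first_levels, second_levels = META_CONTRASTS[name]
    group_a = [c for c in contrasts if c.word_level in first_levels]
    group_b = [c for c in contrasts if c.word_level in second_levels]
    return group_a, group_b


if __name__ == "__main__":
    # Toy database with made-up entries.
    database = [
        StudyContrast("study_a", 0),
        StudyContrast("study_b", 1),
        StudyContrast("study_c", 2),
        StudyContrast("study_d", 0),
    ]
    for name in META_CONTRASTS:
        group_a, group_b = split_by_contrast(database, name)
        print(name, len(group_a), len(group_b))
```

Under this reading, the Level 1 vs Level 2 contrasts exclude Level 0 studies altogether, which is why they isolate the frequency of word presentation rather than presence vs absence.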

Testing the effect of emotion words on the neural representation of emotion experiences or perceptions

The results for studies that focused on emotion experience were generally consistent with those observed for our targeted facial expressions contrast as well as our contrasts on the broader database of experience and perception studies (Table 2). For studies of emotion experience when emotion words were present > no emotion words present (Levels 1 and 2 > Level 0), we observed consistent activations in a cluster located in the cerebellum. In contrast, when no emotion words were present > emotion words present (Level 0 > Levels 1 and 2) we observed clusters in bilateral amygdala/PHG and left fusiform gyrus.

Table 2.

Effect of emotion words on emotion experience or perception

| Region | x | y | z | k | max | mean |
|---|---|---|---|---|---|---|
| Contrast: emotion experience, emotion words present > not present (Levels 1 and 2 > Level 0) | | | | | | |
| R Cerebellum (Fastigium) | 6 | −58 | −22 | 302 | 0.09 | 0.06 |
| R Cerebellum (Fastigium) | 6 | −53 | −19 | | 0.09 | 0.07 |
| R Cerebellum (Culmen) | 4 | −50 | −18 | | 0.08 | 0.06 |
| R Nodule | 0 | −56 | −24 | | 0.07 | 0.06 |
| R Cerebellum (Declive) | 8 | −63 | −18 | | 0.07 | 0.06 |
| R Cerebellum (Declive) | 4 | −59 | −16 | | 0.06 | 0.05 |
| R Nodule | 1 | −52 | −28 | | 0.06 | 0.05 |
| Contrast: emotion experience, emotion words not present > present (Level 0 > Levels 1 and 2) | | | | | | |
| R Parahippocampal Gyrus/Amygdala | 23 | −3 | −13 | 1087 | 0.19 | 0.10 |
| R Lentiform Nucleus/Putamen | 31 | −9 | −6 | | 0.10 | 0.07 |
| L Parahippocampal Gyrus/Amygdala | −27 | −3 | −15 | 1031 | 0.16 | 0.08 |
| L Parahippocampal Gyrus/Amygdala | −22 | −9 | −12 | | 0.15 | 0.09 |
| L Parahippocampal Gyrus/Amygdala | −30 | −9 | −11 | | 0.14 | 0.09 |
| L Lentiform Nucleus/Medial Globus Pallidus | −16 | −11 | −6 | | 0.10 | 0.07 |
| L Parahippocampal Gyrus/Hippocampus | −24 | −14 | −11 | | 0.10 | 0.07 |
| L Lentiform Nucleus/Putamen | −25 | −9 | −1 | | 0.07 | 0.06 |
| L Superior Temporal Gyrus | −35 | 3 | −12 | | 0.07 | 0.06 |
| L Fusiform Gyrus | −41 | −56 | −10 | 682 | 0.11 | 0.07 |
| L Fusiform Gyrus | −46 | −54 | −11 | | 0.11 | 0.08 |
| L Fusiform Gyrus | −41 | −54 | −15 | | 0.11 | 0.08 |
| L Parahippocampal Gyrus | −38 | −44 | −8 | | 0.09 | 0.07 |
| Contrast: emotion perception, emotion words present > not present (Levels 1 and 2 > Level 0)* | | | | | | |
| R Superior Temporal Gyrus | 48 | −18 | 1 | 609 | 0.11 | 0.07 |
| R Superior Temporal Gyrus | 55 | −19 | 1 | | 0.10 | 0.07 |
| R Superior Temporal Gyrus | 55 | −16 | −4 | | 0.10 | 0.07 |
| R Superior Temporal Gyrus | 54 | −9 | 0 | | 0.10 | 0.07 |
| R Superior Temporal Gyrus | 62 | −5 | −2 | | 0.09 | 0.07 |
| R Superior Temporal Gyrus | 53 | 3 | 2 | | 0.08 | 0.06 |
| R Superior Temporal Gyrus | 47 | −5 | 1 | | 0.08 | 0.07 |
| R Precentral Gyrus | 51 | −2 | 8 | | 0.07 | 0.06 |
| R Precentral Gyrus | 47 | −9 | 8 | | 0.07 | 0.06 |
| R Superior Temporal Gyrus | 47 | −8 | −4 | | 0.07 | 0.06 |
| R Insula | 40 | −23 | −5 | | 0.07 | 0.06 |
| R Middle Temporal Gyrus | 57 | −22 | −9 | | 0.07 | 0.06 |
| R Superior Temporal Gyrus | 64 | −8 | 6 | | 0.06 | 0.06 |
| Contrast: emotion perception, emotion words not present > present (Level 0 > Levels 1 and 2) | | | | | | |
| R Sub-lobar Amygdala | 25 | −9 | −10 | 1252 | 0.25 | 0.14 |
| L Parahippocampal Gyrus/Amygdala | −25 | −1 | −16 | 839 | 0.19 | 0.11 |
| L Parahippocampal Gyrus/Amygdala | −28 | −7 | −16 | | 0.19 | 0.11 |
| L Parahippocampal Gyrus/Amygdala | −22 | −7 | −11 | | 0.16 | 0.11 |
| L Inferior Frontal Gyrus | −32 | 6 | −9 | | 0.13 | 0.09 |
| L Lentiform Nucleus/Putamen | −29 | 0 | −5 | | 0.11 | 0.08 |
| L Parahippocampal Gyrus | −14 | −13 | −13 | | 0.09 | 0.08 |

Note: voxel-wise P < 0.001, except where noted by *P < 0.005, cluster-wise FWER P < 0.05.

The results for studies that focused on emotion perception also revealed a similar pattern of activations to the results from our previous analyses. For studies of emotion perception when emotion words were present > no emotion words present (Levels 1 and 2 > Level 0), there were no consistent activations at our primary threshold, but we observed consistent activations in a single cluster located in the right STG at P < 0.005. For studies of emotion perception when no emotion words were present > emotion words present (Level 0 > Levels 1 and 2), we observed consistent activations in clusters in bilateral amygdala/PHG. Taken together, the consistency of the findings when assessed separately for studies of emotion experience and perception suggests that the results from the whole database were not solely driven by studies on emotion experience or emotion perception. Consistent with psychological constructionist hypotheses (Lindquist et al., 2015a), language may play a similar role in emotion whether involved in making meaning of sensations internal to one’s body (as in emotion experiences) or sensations external to one’s body (as in perceptions of someone else’s facial, bodily or vocal expressions).

Testing the effect of emotion words on the neural representation of positive or negative emotions

For studies of negative emotion experiences and perceptions when emotion words were present > no emotion words present (Levels 1 and 2 > Level 0), we observed no consistent activations at P < 0.001. However, we observed consistent activations in one cluster in the right claustrum, extending to STG and dorsal anterior insula, at P < 0.005 (Table 3). Like the STG, the claustrum and dorsal anterior insula have been implicated in semantic retrieval during priming (Rossell et al., 2001; Rissman et al., 2003). For studies of negative emotion experiences and perceptions when no emotion words were present > emotion words present (Level 0 > Levels 1 and 2), we again observed consistent activity within bilateral amygdala/PHG and the left fusiform gyrus at P < 0.001 (Table 3). For studies that focused on positive emotion experiences and perceptions, we found no consistent activations at any threshold for the emotion words present > no emotion words present (Levels 1 and 2 > Level 0) contrast. This finding may be related to the fact that studies often only include a single positive emotion category (happiness), which limits the brain’s need to retrieve and differentiate between same-valence concepts when making meaning of positive affective predictions. However, for the no emotion words present > emotion words present (Level 0 > Levels 1 and 2) contrast we once again observed clusters in bilateral amygdala/PHG (Table 3).

Table 3.

Effect of emotion words on negative or positive emotions

| Region | x | y | z | k | max | mean |
|---|---|---|---|---|---|---|
| Contrast: negative emotions, emotion words present > not present (Levels 1 and 2 > Level 0)* | | | | | | |
| R Claustrum | 30 | 8 | 2 | 559 | 0.10 | 0.06 |
| R Lentiform Nucleus/Putamen | 17 | 5 | 2 | | 0.08 | 0.06 |
| L Lentiform Nucleus/Putamen | 24 | 11 | 3 | | 0.08 | 0.06 |
| R Claustrum | 36 | 2 | 4 | | 0.08 | 0.06 |
| R Superior Temporal Gyrus | 50 | 5 | −2 | | 0.07 | 0.06 |
| R Middle Temporal Gyrus | 48 | 4 | −15 | | 0.07 | 0.05 |
| R Superior Temporal Gyrus | 48 | 12 | −12 | | 0.06 | 0.05 |
| R Insula | 44 | 11 | 1 | | 0.06 | 0.05 |
| R Insula | 42 | 1 | 6 | | 0.06 | 0.05 |
| R Insula | 41 | 14 | 6 | | 0.06 | 0.05 |
| R Middle Temporal Gyrus | 47 | 1 | −18 | | 0.06 | 0.05 |
| Contrast: negative emotions, emotion words not present > present (Level 0 > Levels 1 and 2) | | | | | | |
| R Parahippocampal Gyrus/Amygdala | 23 | −7 | −10 | 1226 | 0.25 | 0.12 |
| L Sub-lobar Amygdala | −22 | −7 | −9 | 1084 | 0.19 | 0.10 |
| L Parahippocampal Gyrus/Amygdala | −27 | −1 | −15 | | 0.19 | 0.10 |
| L Parahippocampal Gyrus/Hippocampus | −30 | −9 | −16 | | 0.17 | 0.11 |
| L Parahippocampal Gyrus/Hippocampus | −26 | −14 | −11 | | 0.14 | 0.10 |
| L Lentiform Nucleus/Medial Globus Pallidus | −16 | −11 | −6 | | 0.13 | 0.08 |
| L Fusiform Gyrus | −41 | −56 | −12 | 517 | 0.11 | 0.08 |
| L Fusiform Gyrus | −47 | −58 | −11 | | 0.10 | 0.08 |
| L Fusiform Gyrus | −43 | −58 | −17 | | 0.10 | 0.07 |
| L Fusiform Gyrus | −44 | −48 | −13 | | 0.09 | 0.07 |
| L Inferior Occipital Gyrus | −43 | −68 | −5 | | 0.07 | 0.06 |
| Contrast: positive emotions, emotion words not present > present (Level 0 > Levels 1 and 2) | | | | | | |
| R Parahippocampal Gyrus/Amygdala | 25 | −3 | −14 | 665 | 0.21 | 0.13 |
| R Lentiform Nucleus/Lateral Globus Pallidus | 19 | −3 | −8 | | 0.19 | 0.13 |
| R Sub-lobar Amygdala | 25 | −11 | −7 | | 0.18 | 0.12 |
| L Parahippocampal Gyrus/Amygdala | −25 | −1 | −16 | 457 | 0.18 | 0.11 |
| L Parahippocampal Gyrus/Amygdala | −28 | −9 | −13 | | 0.15 | 0.11 |
| L Subcallosal Gyrus | −27 | 4 | −14 | | 0.13 | 0.10 |
| L Claustrum | −32 | 8 | −6 | | 0.13 | 0.10 |
| L Insula | −39 | 17 | 1 | | 0.12 | 0.10 |
| L Insula | −28 | 16 | −3 | | 0.12 | 0.10 |
| L Inferior Frontal Gyrus | −41 | 19 | −4 | | 0.11 | 0.10 |

Note: voxel-wise P < 0.001, except where noted by *P < 0.005, cluster-wise FWER P < 0.05.

Testing the effect of affect-related words

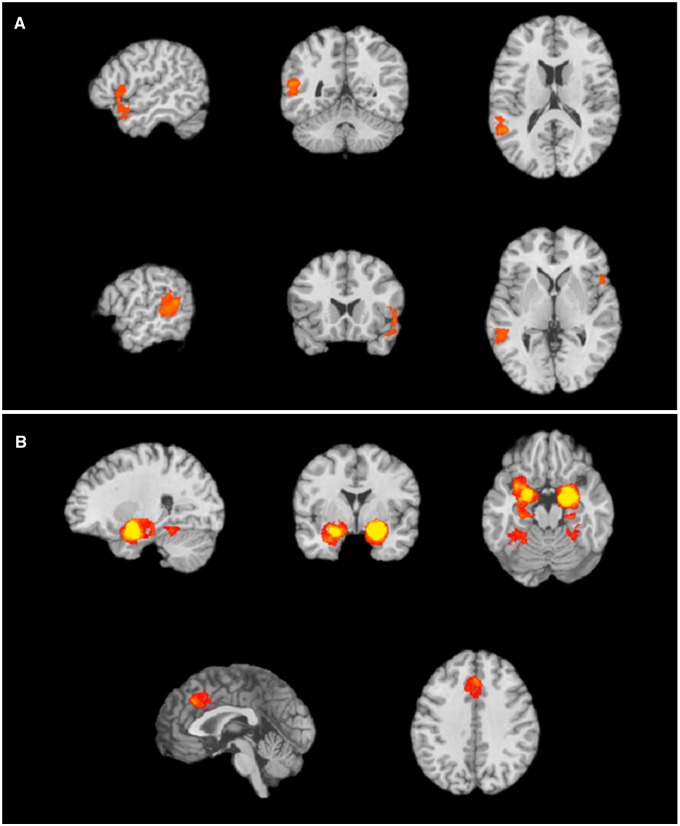

Finally, to specifically address our prediction that emotion words would be more effective at reducing amygdala activity than affect words, we ran a meta-analytic contrast comparing frequent brain activations in studies that used emotion words vs affect words. In the emotion words present > affect words present contrast, we observed a cluster in STG (Figure 3A), replicating previous findings that emotion concept words prompt semantic processing. In contrast, in the affect words present > emotion words present contrast, we observed consistent amygdala activity (Figure 3B), replicating our previous analyses and underscoring the fact that affective predictions remain ambiguous in the presence of affect words, but not emotion words.

Fig. 3.

Regions with greater activity for (A) the emotion words present > affect words present contrast, including clusters in left middle temporal gyrus and right STG, and (B) the affect words present > emotion words present contrast, including clusters in bilateral PHG/amygdala, left cingulate gyrus and left and right culmen.

To examine more closely how affect words impacted the neural basis of emotion, we next focused specifically on experimental contrasts that contained only affect words. In the affect words present > no affect words present contrast, we did not find any clusters that survived the P < 0.001 threshold. However, at the very lenient threshold of P < 0.05, we observed a large cluster in the parahippocampal gyrus/amygdala. There were no significant activations for the no affect words present > affect words present contrast. Together, these findings suggest that, as predicted, the presence of affect words in studies did not serve the same function as emotion words.

Discussion

In the present meta-analysis, we found that emotion words impact the neural representation of emotion, as reflected by more frequent activations in the IFG, STG and MTG when emotion words are present across emotional experiences and perceptions. The IFG is routinely implicated in semantic retrieval (Thompson-Schill et al., 1997; Grindrod et al., 2008; Huang et al., 2012) and the STG and middle temporal gyrus also have an undisputed role in language (Friederici et al., 2003; Bigler et al., 2007). More anterior portions of STG, such as what we observed in our meta-analysis, are involved in the representation of semantic knowledge and are thought to be a hub in a distributed semantic network containing the IFG, lateral temporal cortices, medial temporal lobe and midline cortical areas such as the medial prefrontal cortex and posterior cingulate cortex (see Visser et al., 2010 for a meta-analysis). Together, these findings suggest that tasks involving emotion words prompted semantic retrieval and use of relevant emotion concept knowledge during the experience and perception of emotions. This is notable given that in many experiments in our database, the neural response to an emotional stimulus was modeled separately from the neural response to emotion words involved in instructions or trial-by-trial labeling—thus our findings do not merely reflect processing words per se but accessing conceptual knowledge during emotional experiences and perceptions.

In contrast, when emotion studies across the literature did not involve emotion words, we consistently observed frequent amygdala activity. In fact, we observed frequent amygdala activity any time emotion concept words were not present in a task. Our findings are consistent with a role for the amygdala in signaling uncertainty about the meaning of affective sensations when emotion concept knowledge is not readily accessible (as predicted by a psychological constructionist approach; see Lindquist et al., 2015a). This hypothesized role of the amygdala is also consistent with recent accounts suggesting that the amygdala more generally responds to uncertainty, arousal and the ‘motivational salience’ of visual stimuli in particular (Cunningham and Brosch, 2012; Touroutoglou et al., 2014). According to the psychological constructionist view, the uncertainty of the meaning of affective stimuli is resolved when conceptual knowledge about emotion is made more readily accessible and used to categorize the meaning of affective sensations.

Frequent amygdala activity even occurred in the more conservative case of gender vs emotion categorizations of emotional facial expressions and when affect words were present, suggesting that not just any type of word decreases the frequency of amygdala activity; the effect appeared to be specific to the presence of emotion concept words across studies. This is likely because emotion concept words name discrete emotion concepts that help refine the meaning of otherwise ambiguous affective states. Once a person categorizes unpleasant affect as, e.g. fear, he knows what his affective state means, what to do about it, and even how to regulate it. This process would in turn decrease the arousing nature of those stimuli, performing an implicit emotion regulation function (Lieberman, 2011). Consistent with this interpretation, when emotion words were absent from paradigms, we also observed more frequent activations in the parahippocampal gyrus and aspects of ventral temporal cortex (e.g. fusiform), suggesting that participants may have been engaging in increased episodic memory retrieval and sensory processing to make meaning of the affective stimuli they were experiencing and perceiving in the absence of readily available emotion concept knowledge. The parahippocampal gyrus is also implicated in the processing of visual context (Aminoff et al., 2013), potentially suggesting a greater reliance on contextual cues to resolve uncertain affective experiences in the absence of specific conceptual knowledge. Given the role of the fusiform in downstream visual processing important for successful object and face recognition (Haxby et al., 2001), frequent activations in this region when words are not present could reflect more elaborate visual processing of emotional stimuli when conceptual knowledge is not readily available to make meaning of visual input.

Implications

Taken together, our findings are consistent with the psychological constructionist hypothesis that language helps constitute emotion by representing conceptual knowledge that is necessary to make meaning of otherwise ambiguous affective states. These findings are important in that they begin to shine light on the dynamics of neural systems that help construct emotions. It is common to observe increased activity within brain regions associated with semantics during emotions (Kober et al., 2008; Vytal and Hamann, 2010; Lindquist et al., 2012; see Lindquist et al., 2015a). However, it is unclear from these findings whether language is merely an epiphenomenon to emotion, only labeling emotions after the fact, or whether these regions are performing another function entirely. In contrast, our meta-analytic neuroimaging findings suggest that the mere presence vs absence of emotion words in a task changes emotional brain activity in a consistent manner.

The idea that affective feelings are ambiguous in the absence of conceptual knowledge about emotion is consistent with findings suggesting that ‘affect labeling’ helps a person regulate their feelings (Pennebaker and Beall, 1986; Lieberman, 2011). Understanding more specifically what you are feeling helps you know what caused the feeling and what to do about it. It is thus no surprise that learning to label feelings is at the core of many types of psychotherapy. Similarly, re-conceptualizing the meaning of a feeling with a different linguistic category (as in the cognitive reappraisal tasks used in standard emotion regulation paradigms) would also help regulate emotions by helping transform one type of experience (e.g. fear) into another (e.g. anger). Not surprisingly, reappraisal, although it does not explicitly involve affect labeling, involves many of the same brain regions involved in semantics such as the ventrolateral prefrontal cortex, medial prefrontal cortex, anterior temporal lobe and posterior cingulate cortex (Buhle et al., 2014; Burklund et al., 2014). There is debate about whether the processes involved in emotion regulation are the same as or different from those involved in emotion generation (Gross and Barrett, 2011), but evidence suggests that the neural mechanisms involved in both are similar (Ochsner et al., 2012). These findings underscore the psychological constructionist point that conceptualization is a fundamental ‘ingredient’ in emotions (Wilson-Mendenhall et al., 2011; Lindquist, 2013; Barrett, 2014).

The present meta-analysis is also an important step in extending behavioral research (Lindquist et al., 2015a,b) that investigates the impact of language on emotion. Our results are particularly striking given the wide variety of tasks included in our meta-analytic database. That the general pattern of less frequent amygdala activity when words are present holds for the entire database of studies strongly suggests emotion words confer an implicit emotion regulation effect, but none of the studies included in our database assessed emotion regulation. Showing that the mere presence of emotion words can confer this consistent effect is a pivotal step in investigating the degree to which language is constitutive of emotion. These findings additionally present a cautionary methodological note, as researchers studying emotion should be as aware as possible of how subtle task characteristics such as the inclusion of emotion words can actually have a large influence over the affective processes under investigation (Kassam and Mendes, 2013).

Limitations

Despite its promise, this work is limited by existing neuroimaging meta-analysis techniques, which rely on studies with subtraction analyses to isolate regions more frequently activated for one task condition vs another. Additionally, a general issue with neuroimaging meta-analysis is that one can never truly know the details and idiosyncrasies of every individual task that comprises the meta-analytic database in use, and further, whether there are qualitative differences between the tasks in each meta-analytic condition used (e.g. ‘words present’ vs ‘words not present’) that could bias the results. For instance, it is possible that meta-analytic conditions differed in the amount of arousal content shown, cognitive load or in other task demand characteristics. Our examination of the database did not reveal systematic differences in the arousal content (furthermore, most neuroimaging studies tend to use highly arousing stimuli; see Lindquist et al., 2016b). However, it remains a possibility that there are unknown qualitative differences that impacted our findings. Nonetheless, we are reassured by the fact that our findings replicate the findings of single ‘affect labeling’ neuroimaging studies (Hariri et al., 2000; Lieberman et al., 2007) that tightly control for these confounds, suggesting that these additional confounds are unlikely to account for our findings. Furthermore, the stability of our findings across our different analyses reduces this concern; we demonstrate the same effects when we restricted our analyses to a tightly controlled subset of the database (in which both emotion and gender labeling conditions were nearly identical in terms of task constraints) as well as when examining a more heterogeneous set of contrasts using different methods of inducing emotional experience and perceptions.

Our meta-analysis is also limited more specifically by which hypotheses can be tested at the meta-analytic level. Future research should advance our meta-analytic results using individual neuroimaging studies to address more fine-grained questions about the parametric role of labeling on brain activity and how language impacts activity within and between broad-scale neural networks supporting core affect and conceptualization. Another possibility for future neuroimaging studies would be to use multivariate techniques to assess whether patterns of neural activity elicited by emotional stimuli can be classified by the degree of conceptual knowledge present in the task. We look forward to future research that will continue to explore the important, yet often overlooked, role of language as it shapes our experiences, perceptions and regulation of emotions.

Supplementary data

Supplementary data are available at SCAN online.

Conflict of interest. None declared.

Funding

Construction of the meta-analytic database was supported by the Army Research Institute (W5J9CQ-11-C-0046).

References

- Aminoff E.M., Kveraga K., Bar M. (2013). The role of the parahippocampal cortex in cognition. Trends in Cognitive Sciences, 17(8), 379–90. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Banks S.J., Eddy K.T., Angstadt M., Nathan P.J., Phan K.L. (2007). Amygdala-frontal connectivity during emotion regulation. Social Cognitive and Affective Neuroscience, 2(4), 303–12. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bar M. (2007). The proactive brain: using analogies and associations to generate predictions. Trends in Cognitive Sciences, 11, 280–9. [DOI] [PubMed] [Google Scholar]

- Bar M. (2009). The proactive brain: memory for predictions. Philosophical Transactions of the Royal Society B: Biological Sciences, 364(1521), 1235–43. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Barrett L.F. (2006). Solving the emotion paradox: categorization and the experience of emotion. Personality and Social Psychology Review, 10, 20–46. [DOI] [PubMed] [Google Scholar]

- Barrett L.F. (2014). The conceptual act theory: a précis. Emotion Review, 6, 292–7. [Google Scholar]

- Barrett L.F., Bliss-Moreau E. (2009). Affect as a psychological primitive. Advances in Experimental Social Psychology, 41, 167–218. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Barrett L.F., Lindquist K.A., Gendron M. (2007). Language as context for the perception of emotion. Trends in Cognitive Sciences, 11, 327–32. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Barrett L.F., Satpute A. (2013). Large-scale brain networks in affective and social neuroscience: towards an integrative architecture of the human brain. Current Opinion in Neurobiology, 23, 361–72. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Barrett L.F., Simmons W.K. (2015). Interoceptive predictions in the brain. Nature Reviews Neuroscience, 16, 419–29. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bigler E.D., Mortensen S., Neeley E.S., et al. (2007). Superior temporal gyrus,language function, and autism. Developmental Neuropsychology, 31(2), 217–38. [DOI] [PubMed] [Google Scholar]

- Binder J.R. (2016). In defense of abstract conceptual representations. Psychonomic Bulletin & Review, 1, 13.

- Binder J.R., Desai R.H., Graves W.W., Conant L.L. (2009). Where is the semantic system? A critical review and meta-analysis of 120 functional neuroimaging studies. Cerebral Cortex, 19(12), 2767–96.

- Buhle J.T., Silvers J.A., Wager T.D., et al. (2014). Cognitive reappraisal of emotion: a meta-analysis of human neuroimaging studies. Cerebral Cortex, 24, 2981–90.

- Burklund L.J., Creswell J.D., Irwin M., Lieberman M.D. (2014). The common and distinct neural bases of affect labeling and reappraisal in healthy adults. Frontiers in Psychology, 5(221), 1–10.

- Chaumon M., Kveraga K., Barrett L.F., Bar M. (2014). Visual predictions in the orbitofrontal cortex rely on associations. Cerebral Cortex, 24, 2899–907.

- Cunningham W.A., Brosch T. (2012). Motivational salience: amygdala tuning from traits, needs, values, and goals. Current Directions in Psychological Science, 21, 54–9.

- Cunningham W.A., Dunfield K., Stillman P. (2013). Emotional states from affective dynamics. Emotion Review, 5, 344–55.

- Ekman P., Cordaro D. (2011). What is meant by calling emotions basic. Emotion Review, 3, 364–70.

- Friederici A.D., Rüschemeyer S., Hahne A., Fiebach C.J. (2003). The role of left inferior frontal and superior temporal cortex in sentence comprehension: localizing syntactic and semantic processes. Cerebral Cortex, 13(2), 170–7.

- Fugate J., Gouzoules H., Barrett L.F. (2010). Reading chimpanzee faces: evidence for the role of verbal labels in categorical perception of emotion. Emotion, 10(4), 544.

- Grindrod C.M., Bilenko N.Y., Myers E.B., Blumstein S.E. (2008). The role of the left inferior frontal gyrus in implicit semantic competition and selection: an event-related fMRI study. Brain Research, 1229, 167–78.

- Gross J.J., Barrett L.F. (2011). Emotion generation and emotion regulation: one or two depends on your point of view. Emotion Review, 3, 8–16.

- Hariri A.R., Bookheimer S.Y., Mazziotta J.C. (2000). Modulating emotional responses: effects of a neocortical network on the limbic system. NeuroReport, 11(1), 43–8.

- Haxby J.V., Gobbini M.I., Furey M.L., Ishai A., Schouten J.L., Pietrini P. (2001). Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science, 293(5539), 2425–30.

- Hemmer P., Persaud K. (2014). Interaction between categorical knowledge and episodic memory across domains. Frontiers in Psychology, 5, 584.

- Huang J., Zhu Z., Zhang J.X., Wu M., Chen H.C., Wang S. (2012). The role of left inferior frontal gyrus in explicit and implicit semantic processing. Brain Research, 1440, 56–64.

- Kassam K.S., Mendes W.B. (2013). The effects of measuring emotion: physiological reactions to emotional situations depend on whether someone is asking. PLoS One, 8(6), e64959.

- Kircanski K., Lieberman M.D., Craske M.G. (2012). Feelings into words: contributions of language to exposure therapy. Psychological Science, 23, 1086–91.

- Kober H., Barrett L.F., Joseph J., Bliss-Moreau E., Lindquist K.A., Wager T.D. (2008). Functional grouping and cortical-subcortical interactions in emotion: a meta-analysis of neuroimaging studies. NeuroImage, 42, 998–1031.

- Lieberman M.D. (2011). Why symbolic processing of affect can disrupt negative affect: social cognitive and affective neuroscience investigations. In: Todorov A., Fiske S.T., Prentice D., editors. Social Neuroscience: Toward Understanding the Underpinnings of the Social Mind. Oxford: Oxford University Press.

- Lieberman M.D., Eisenberger N.I., Crockett M.J., Tom S.M., Pfeifer J.H., Way B.M. (2007). Putting feelings into words. Psychological Science, 18, 421–8.

- Lieberman M.D., Inagaki T.K., Tabibnia G., Crockett M.J. (2011). Subjective responses to emotional stimuli during labeling, reappraisal, and distraction. Emotion, 11, 468–80.

- Lindquist K.A. (2013). Emotions emerge from more basic psychological ingredients: a modern psychological constructionist model. Emotion Review, 5, 356–68.

- Lindquist K.A., Barrett L.F. (2008). Constructing emotion: the experience of fear as a conceptual act. Psychological Science, 19, 898–903.

- Lindquist K.A., Barrett L.F. (2012). A functional architecture of the human brain: insights from emotion. Trends in Cognitive Sciences, 16, 533–40.

- Lindquist K.A., Gendron M. (2013). What's in a word? Language constructs emotion perception. Emotion Review, 5, 66–71.

- Lindquist K.A., Gendron M., Satpute A.B. (2016a). Language and emotion: putting words into feelings and feelings into words. In: Barrett L.F., Lewis M., Haviland-Jones J.M., editors. Handbook of Emotions, 4th edn. New York: Guilford.

- Lindquist K.A., MacCormack J.K., Shablack H. (2015a). The role of language in emotion: predictions from psychological constructionism. Frontiers in Psychology, 6, 444.

- Lindquist K.A., Satpute A.B., Gendron M. (2015b). Does language do more than communicate emotion? Current Directions in Psychological Science, 24, 99–108.

- Lindquist K.A., Satpute A.B., Wager T.D., Weber J., Barrett L.F. (2016b). The brain basis of positive and negative affect: evidence from a meta-analysis of the human neuroimaging literature. Cerebral Cortex, 5, 1910–22.

- Lindquist K.A., Wager T.D., Kober H., Bliss-Moreau E., Barrett L.F. (2012). The brain basis of emotion: a meta-analytic review. Behavioral and Brain Sciences, 35, 121–43.

- Lupyan G. (2012). Linguistically modulated perception and cognition: the label-feedback hypothesis. Frontiers in Psychology, 3, 54.

- Lupyan G., Rakison D.H., McClelland J.L. (2007). Language is not just for talking: labels facilitate learning of novel categories. Psychological Science, 18(12), 1077–83.

- Lupyan G., Spivey M.J. (2010). Making the invisible visible: verbal but not visual cues enhance visual detection. PLoS One, 5, e11452.

- Niles A.N., Craske M.G., Lieberman M.D., Hur C. (2015). Affect labeling enhances exposure effectiveness for public speaking anxiety. Behaviour Research and Therapy, 68, 27–35.

- Ochsner K.N., Silvers J.A., Buhle J.T. (2012). Functional imaging studies of emotion regulation: a synthetic review and evolving model of the cognitive control of emotion. Annals of the New York Academy of Sciences, 1251, E1–E24.

- Oosterwijk S., Lindquist K.A., Anderson E., Dautoff R., Moriguchi Y., Barrett L.F. (2012). States of mind: emotions, body feelings, and thoughts share distributed neural networks. NeuroImage, 62, 2110–28.

- Panichello M.F., Cheung O.S., Bar M. (2012). Predictive feedback and conscious visual experience. Frontiers in Psychology, 3, 620.

- Patterson K., Nestor P.J., Rogers T.T. (2007). Where do you know what you know? The representation of semantic knowledge in the human brain. Nature Reviews Neuroscience, 8, 976–87.

- Payer D.E., Baicy K., Lieberman M.D., London E.D. (2012). Overlapping neural substrates between intentional and incidental down-regulation of negative emotions. Emotion, 2, 229–35.

- Pennebaker J.W., Beall S.K. (1986). Confronting a traumatic event: toward an understanding of inhibition and disease. Journal of Abnormal Psychology, 95(3), 274–81.

- Raichle M.E., MacLeod A.M., Snyder A.Z., Powers W.J., Gusnard D.A., Shulman G.L. (2001). A default mode of brain function. Proceedings of the National Academy of Sciences of the United States of America, 98(2), 676–82.

- Rissman J., Eliassen J.C., Blumstein S.E. (2003). An event-related fMRI investigation of implicit semantic priming. Journal of Cognitive Neuroscience, 15(8), 1160–75.

- Rossell S.L., Bullmore E.T., Williams S.C.R., David A.S. (2001). Brain activation during automatic and controlled processing of semantic relations: a priming experiment using lexical-decision. Neuropsychologia, 39, 1167–76.

- Russell J.A. (2003). Core affect and the psychological construction of emotion. Psychological Review, 110, 145–72.

- Russell J.A., Barrett L.F. (1999). Core affect, prototypical emotion episodes, and other things called emotion: dissecting the elephant. Journal of Personality and Social Psychology, 76(5), 805–19.

- Satpute A.B., Nook E.C., Narayanan S., Weber J., Shu J., Ochsner K.N. (in press). Emotions in “black or white” or shades of gray? How we think about emotion shapes our perception and neural representation of emotion. Psychological Science.

- Schacter D.L., Addis D.R., Buckner R.L. (2007). Remembering the past to imagine the future: the prospective brain. Nature Reviews Neuroscience, 8(9), 657–61.

- Seeley W.W., Menon V., Schatzberg A.F., et al. (2007). Dissociable intrinsic connectivity networks for salience processing and executive control. The Journal of Neuroscience, 27(9), 2349–56.

- Simons J.S., Spiers H.J. (2003). Prefrontal and medial temporal lobe interactions in long-term memory. Nature Reviews Neuroscience, 4(8), 637–48.

- St. Jacques P.L., Rubin D.C., Cabeza R. (2012). Age-related effects on the neural correlates of autobiographical memory retrieval. Neurobiology of Aging, 33(7), 1298–310.

- Tabibnia G.T., Lieberman M.D., Craske M.G. (2008). The lasting effect of words on feelings: words may facilitate exposure effects to threatening images. Emotion, 8, 307–17.

- Thompson-Schill S.L., D’Esposito M., Aguirre G.K., Farah M.J. (1997). Role of left inferior prefrontal cortex in retrieval of semantic knowledge: a reevaluation. Proceedings of the National Academy of Sciences of the United States of America, 94, 14792–7.

- Thompson-Schill S.L., Schnur T.T., Hirshorn E., Schwartz M.F., Kimberg D. (2007). Regulatory functions of prefrontal cortex during single word production. Brain and Language, 103(1), 171–2.

- Torrisi S.J., Lieberman M.D., Bookheimer S.Y., Altshuler L.L. (2013). Advancing understanding of affect labeling with dynamic causal modeling. NeuroImage, 82, 481–8.

- Touroutoglou A., Bickart K.C., Barrett L.F., Dickerson B.C. (2014). Amygdala task-evoked activity and task-free connectivity independently contribute to feelings of arousal. Human Brain Mapping, 10, 5316–27.

- Vigliocco G., Meteyard L., Andrews M., Kousta S.T. (2009). Toward a theory of semantic representation. Language and Cognition, 1, 219–47.

- Visser M., Jefferies E., Lambon Ralph M.A. (2010). Semantic processing in the anterior temporal lobes: a meta-analysis of the functional neuroimaging literature. Journal of Cognitive Neuroscience, 22(6), 1083–94.

- Vytal K., Hamann S. (2010). Neuroimaging support for discrete neural correlates of basic emotions: a voxel-based meta-analysis. Journal of Cognitive Neuroscience, 22(12), 2864–85.

- Wager T.D., Lindquist M., Kaplan L. (2007). Meta-analysis of functional neuroimaging data: current and future directions. Social Cognitive and Affective Neuroscience, 2, 150–8.

- Whalen P.J. (2007). The uncertainty of it all. Trends in Cognitive Sciences, 11, 499–500.

- Widen S.C., Russell J.A. (2010). Children’s scripts for social emotions: causes and consequences are more central than are facial expressions. British Journal of Developmental Psychology, 28, 565–81.

- Wilson-Mendenhall C.D., Barrett L.F., Simmons W.K., Barsalou L.W. (2011). Grounding emotion in situated conceptualization. Neuropsychologia, 49, 1105–27.

- Wood A., Niedenthal P. (2015). Language limits the experience of emotions: comment on “The quartet theory of human emotions: an integrative and neurofunctional model” by Koelsch S. et al. Physics of Life Reviews, 13, 95–8.

- Xu F. (2002). The role of language in acquiring object kind concepts in infancy. Cognition, 85, 223–50.
