Abstract

Humans cannot help but attribute human emotions to non-human animals. Although such attributions are often regarded as gratuitous anthropomorphisms and held apart from the attributions humans make about each other’s internal states, they may be the product of a general mechanism for flexibly interpreting adaptive behavior. To examine this, we used functional magnetic resonance imaging (fMRI) in humans to compare the neural mechanisms associated with attributing emotions to human and non-human animal behavior. While undergoing fMRI, participants first passively observed the facial displays of humans, non-human primates and domestic dogs, and subsequently judged the acceptability of emotional (e.g. ‘annoyed’) and facial descriptions (e.g. ‘baring teeth’) for the same images. For all targets, emotion attributions selectively activated regions in prefrontal and anterior temporal cortices associated with causal explanation in prior studies. These regions were similarly activated by both human and non-human targets even during the passive observation task; moreover, the degree of neural similarity was dependent on participants’ self-reported beliefs in the mental capacities of non-human animals. These results encourage a non-anthropocentric view of emotion understanding, one that treats the idea that animals have emotions as no more gratuitous than the idea that humans other than ourselves do.

Keywords: anthropomorphism, emotion attribution, face perception, social cognition

Introduction

‘But man himself cannot express love and humility by external signs, so plain as does a dog, when with drooping ears, hanging lips, flexuous body, and wagging tail, he meets his beloved master. Nor can these movements in the dog be explained by acts of volition or necessary instincts, any more than the beaming eyes and smiling cheeks of a man when he meets an old friend.’ (Darwin, 1872: 10–1)

In this brief passage from his treatise ‘The Expression of the Emotions in Man and Animals’, Charles Darwin provides an excellent illustration of the human mind’s capacity to see in the behavior of non-human animals the same kinds of covert emotional states it sees in the behavior of other humans. From an anthropocentric viewpoint, the attribution of humanlike emotion to non-human animals represents a clear case of ‘anthropomorphism’, the projection of our own attributes onto non-human entities (Epley et al., 2007), and is not unlike the attribution of beliefs, motives and intentions to other non-human entities, such as moving circles and triangles (Heider and Simmel, 1944), robots (Fussell et al., 2008) and hurricanes (Barker and Miller, 1990). Unsurprisingly, then, Darwin’s sophisticated attributions of emotion to non-human animals are still the subject of intense debate regarding whether and how emotion should be used in the science of animal behavior (de Waal, 2011). Nevertheless, whether the animal is the beloved family dog or the genetically similar chimpanzee or bonobo, humans cannot help but attribute human emotions to animals. In this study, we designed a behavioral task for isolating the cognitive processes that produce reliable attributions of emotional states to the behavior of non-human animals. Using this task in conjunction with functional magnetic resonance imaging (fMRI), we directly compared, for the first time, the neural basis of attributing the same emotions to human and non-human animals.

What is involved when one human attributes an emotion to another human? Taking Darwin’s example above, the target’s overt facial behaviors (e.g. ‘beaming eyes’, ‘smiling cheeks’) are viewed as expressions of a specific covert emotional state presumed to exist in the target. This assumption, that observable behaviors are caused by unobservable states of mind such as beliefs and emotions, is the foundation of what the philosopher Daniel Dennett termed ‘the intentional stance’ (Dennett, 1989), and of what psychology and, more recently, social neuroscience have come to call a ‘theory of mind’ (ToM) (Gallagher and Frith, 2003; Saxe et al., 2004). Our proclivity for making such attributions about behavior has been well studied by social and developmental psychologists, yielding several frameworks for characterizing the dimensions on which we make such attributions. For instance, there are two-dimensional schemes based on experience and agency (Gray et al., 2007) or on competence and warmth (Fiske et al., 2007). Specifically for faces, there may be dimensions of trustworthiness and dominance (Todorov et al., 2008). In all these cases, the dimensions on which we represent emotion in humans concern attributes that cannot be directly observed but must be inferred from information that is already known or directly observable, such as non-verbal behavior. In other words, representations of emotion tend to be conceptually abstract, with only a loose correspondence to specific sensorimotor events (Vallacher and Wegner, 1987; Spunt et al., 2016).

There is also a literature suggesting that very similar, or identical, psychological dimensions characterize the attributions humans tend to make about a variety of non-human entities, ranging from non-human animals to robots to gods (Epley et al., 2007). Although such anthropomorphic attributions are typically placed in a special category and often treated as irrational and gratuitous (e.g. Wynne, 2004), they may actually be the rational consequence of fundamental similarities in both the form and function of human and non-human animal behaviors (de Waal, 2011). The form of the human body and face, including its capacity for expression, has much in common with other mammalian species (Brecht and Freiwald, 2012), particularly other primates (Sherwood et al., 2003; Parr et al., 2007). Not surprisingly, then, untrained human observers show an ability to extract affective information from novel dog facial expressions that rivals their ability to do the same for human infant expressions (Schirmer et al., 2013). Conversely, evidence suggests that domesticated dogs have, through selective breeding and adaptation to human ecologies, evolved an ability to discriminate affective information in human faces (Berns et al., 2012; Axelsson et al., 2013; Cuaya et al., 2016).

To our knowledge, no prior neuroimaging study has experimentally manipulated the demand to attribute emotions to non-human animals in order to identify its neural basis and evaluate its similarity to the well-known neural basis of reasoning about the mental states of another human being. Several studies have compared the neural correlates of passively observing dogs and humans under different conditions; these studies largely demonstrate that regions associated with processing salient features of human behavior, such as facial expression and biological motion, are also activated when observing corresponding features in dogs (Blonder et al., 2004; Franklin et al., 2013). These studies collectively indicate that humans spontaneously deploy similar higher-level visual processes across human and non-human animal targets. However, because they did not experimentally control the demand to anthropomorphize the non-human animal targets, their findings leave open the neural basis of anthropomorphism itself.

A related line of prior neuroimaging studies demonstrates that individual differences in prior experience and beliefs related to non-human animals affect the brain regions humans recruit when observing non-human animals. For instance, prior experience with dogs was associated with increased activity in the posterior superior temporal sulcus during the passive viewing of meaningful dog gestures (Kujala et al., 2012), presumably reflecting increased engagement of cortical regions concerned with processing biological motion. A more recent study compared the neural response to observing domesticated dogs in pet owners and non-pet owners and found that pet owners more strongly activated a set of cortical regions spanning insular, frontal, and occipital cortices (Hayama et al., 2016). Related work examining brain structure suggests that individual differences in anthropomorphism for non-human animals (see Waytz et al., 2010) correlate with gray matter volume in a region of left TPJ thought to be involved in aspects of ToM (Cullen et al., 2014). These studies suggest the importance of distinguishing between the ‘capacity’ to appreciate anthropomorphic descriptions of non-human animals and the ‘tendency’ to deploy that capacity spontaneously, in the absence of an explicit prompt to do so (Keysers and Gazzola, 2014).

Although the neural basis of attributing emotion to animals remains unknown, the neural basis of attributing mental states to other humans is known to be reliably associated with a set of cortical regions commonly referred to as the ToM or mentalizing network (Fletcher et al., 1995; Goel et al., 1995; Happé et al., 1996; Gallagher and Frith, 2003; Saxe et al., 2004; Amodio and Frith, 2006). In a series of prior studies of healthy adults, we have shown that attributions about social situations activate an anatomically well-defined network of brain regions (Spunt et al., 2010, 2011; Spunt and Lieberman, 2012a,b). In a recent study, we found that, remarkably, this same neural system appears to be engaged when we make causal attributions about completely non-social events, such as attributing the sight of water gushing out of a gutter to an unseen rainstorm (Spunt and Adolphs, 2015). In line with prior neuroimaging studies of social and/or non-social reasoning (see Van Overwalle, 2011), activation of this inferential process was reliably stronger for social situations. Thus, it appears that social attributions are executed by processes that, though intrinsically domain-general, may acquire specialization for the social domain over the course of social development.

Here, we extend this research to identify the neural mechanisms supporting anthropomorphism during the perception of facial expressions that we see in non-human primates and dogs. Specifically, we examined three questions. First, do we use the same mechanisms to attribute emotions to the facial expressions of humans and non-human animals, when asked in a task to make such attributions? Second, do we spontaneously recruit these mechanisms to similar degrees when merely passively watching human and non-human animal behavior, in the absence of an explicit attribution task? Third, is the level of spontaneous recruitment dependent on individual differences in experience with, and attitudes towards, humans and non-human animals?

Methods

Participants

Eighteen adults from the Los Angeles metropolitan area participated in the study in exchange for financial compensation. All participants were screened to ensure that they were right-handed, neurologically and psychiatrically healthy, had normal or corrected-to-normal vision, spoke English fluently, had IQ in the normal range (as assessed using the Wechsler Abbreviated Scale of Intelligence), and were not pregnant or taking any psychotropic medications at the time of the study. All participants provided written informed consent according to a protocol approved by the Institutional Review Board of the California Institute of Technology.

Exclusions. For the Explicit Task described below, data from two participants were excluded from the analysis, one due to unusually poor task performance (no response to 46% of trials) and one due to excessive head motion during image acquisition (translation > 8 mm). This left 16 participants (8 males, 8 females; mean age = 29.00, age range = 21–46) for the analysis of the Explicit Task. For the Implicit Task described below, data from one participant were excluded from analysis due to unusually poor task performance (no response to 42% of catch trials). This left 17 participants (9 males, 8 females; mean age = 28.71, age range = 21–46) in the analysis of the Implicit Task.

Power analysis. The open-source MATLAB toolbox fmripower was used to estimate power for detecting effects in the ‘Emotion > Expression’ contrast for each of the a priori ROIs used to test our primary hypotheses in the Explicit Task (described below). As the reference data for these estimates, we used the ‘Mind > Body’ contrast from Spunt et al. (2015), which featured an event-related design similar to that of this study. For the four ROIs used to test our primary hypotheses, 90% detection power could be achieved with an average of 9.25 subjects (SD = 3.95, MIN/MAX = 6/15). We thus aimed to obtain data from at least 15 subjects, a sample size sufficiently large to test our primary hypotheses, and recruited n = 18 in the expectation that a few participants might drop out for technical reasons, as indeed a few did.
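The fmripower calculation itself is not reproduced here. As a rough, generic illustration of how a per-ROI standardized effect size translates into a required sample size, the sketch below uses the textbook normal approximation to the power of a two-sided one-sample test. The function names `approx_power` and `required_n` and the example effect size are hypothetical. Because the normal approximation ignores the heavier tails of the t distribution, it slightly underestimates the required n for small samples.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(d: float, n: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided one-sample test of a group-level contrast.

    d is a standardized effect size (mean / between-subject SD of the contrast).
    Uses the normal approximation and ignores the negligible opposite tail.
    """
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    return NormalDist().cdf(d * sqrt(n) - z_crit)


def required_n(d: float, alpha: float = 0.05, target_power: float = 0.90) -> int:
    """Smallest sample size whose approximate power reaches target_power."""
    if d <= 0:
        raise ValueError("effect size must be positive")
    n = 2
    while approx_power(d, n, alpha) < target_power:
        n += 1
    return n


# Hypothetical ROI: contrast mean 0.30, between-subject SD 0.30, so d = 1.0
print(required_n(0.30 / 0.30))  # 11
```

An exact calculation would replace the normal distribution with the noncentral t distribution; the loop structure stays the same.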

Experimental design

For the study, participants were asked to perform two tasks, each of which was intended to capture distinctive features of emotion attribution. Both tasks featured the same stimulus set containing photographs of human, non-human primate, and dog facial expressions. The tasks differed primarily in the overt task that participants had to perform for each stimulus. In the following sections, we describe in detail the components of the experimental design, beginning with the stimulus set.

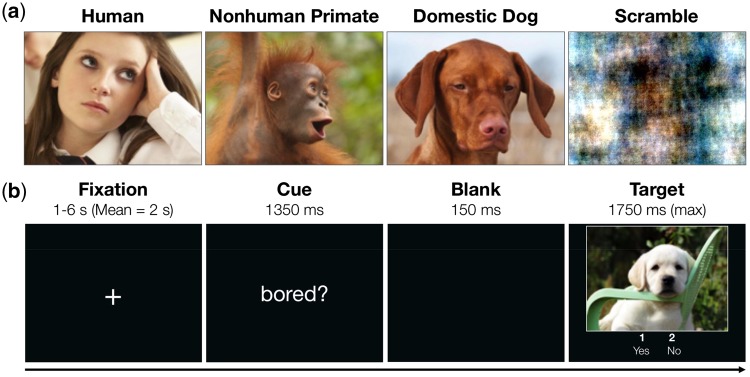

Stimuli. The experimental stimuli comprised 30 naturalistic photographs of facial expressions from each of three categories: Humans, Non-human Primates and Dogs (see Figure 1 for examples and Supplementary Figures S1–S3 for the full stimulus sets). For brevity, we henceforth use the term ‘Primate’ to refer to the Non-human Primate photographs. Because three of the Primate photographs show the animal in restricted conditions (e.g. behind the bars of a cage), we clarify here that we describe the photographs as ‘naturalistic’ from the perspective of a human observer rather than that of the depicted targets. Insofar as humans often observe primates while the animal is in captivity (e.g. in a zoo or laboratory), photographs of animals in restricted conditions are ‘naturalistic’ from the human observer’s perspective.

Fig. 1.

Experimental design. (a) In the Implicit Task, participants perform a visual one-back task on a series of naturalistic images showing human, non-human primate and dog facial displays. Images appear in an event-related design intermixed with a phase-scrambled subset of the same images, which provided a baseline for univariate contrasts. We refer to the task as ‘implicit’ only to designate that, at the time of performing the task, participants were not explicitly directed to attend to or think about the images in a particular way, and were naïve to the fact that in a subsequent task they would be asked to consider the emotional states of each target. (b) The sequence of screens from an example trial in the Explicit Task, which participants learn about only after completing the Implicit Task. The task features the same images used in the Implicit Task (excluding scrambles). Each image is presented twice, once preceded by a verbal cue directing participants to accept or reject an emotion attribution about the target, and once by a verbal cue directing participants to accept or reject an expression attribution about the target (all verbal cues are presented in Table 1). Once the image appears, participants have 1750 ms to commit a ‘Yes/No’ manual response. Every cue preceded an equal number of images from each target type and elicited a response of either ‘Yes’ (two-thirds of trials) or ‘No’ (one-third of trials) in the majority of respondents in an independent sample.

We acquired stimuli from a variety of online stock photography sources. Each set contained 26 color photographs and four grayscale photographs; these were not distinguished in analyses. Moreover, the three sets were matched on valence, on face area as a proportion of total image area, and on estimated luminance (all Ps > 0.84 from independent-samples t-tests comparing the means). We used Amazon.com’s web service Mechanical Turk to collect normative ratings of photograph valence from ∼30 native English-speaking US citizens (on a seven-point Likert scale; 1 = Extremely Negative, 4 = Neutral, 7 = Extremely Positive).
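The matching tests above were independent-samples t-tests. As a distribution-free alternative (a swapped-in technique, not the one used in the study), a permutation test on the difference between set means can be sketched with the standard library alone. The function name `permutation_p` is hypothetical, and the valence values below are placeholders, not the normative ratings.

```python
import random
from itertools import combinations
from statistics import mean


def permutation_p(a, b, n_perm=5000, seed=0):
    """Two-sided permutation p-value for a difference between two group means."""
    rng = random.Random(seed)
    observed = abs(mean(a) - mean(b))
    pooled = list(a) + list(b)
    count = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)  # randomly reassign items to the two sets
        diff = abs(mean(pooled[:len(a)]) - mean(pooled[len(a):]))
        if diff >= observed - 1e-12:  # tolerance for floating-point round-off
            count += 1
    return (count + 1) / (n_perm + 1)  # add-one correction keeps p > 0


# Hypothetical mean valence ratings (1-7 scale) for a few images per set
sets = {
    "Human": [4.2, 5.1, 3.0, 4.8, 2.9, 5.5],
    "Primate": [4.0, 5.3, 3.2, 4.6, 3.1, 5.2],
    "Dog": [4.4, 4.9, 2.8, 5.0, 3.3, 5.4],
}
for x, y in combinations(sets, 2):
    print(x, "vs", y, round(permutation_p(sets[x], sets[y]), 3))
```

As with the t-tests, a large p-value here is only the absence of evidence for a difference; it does not by itself establish equivalence of the sets.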

Explicit task. In the Explicit Task, we experimentally manipulated mental state attribution by presenting participants with each stimulus twice, once with the demand to evaluate a description of the target's emotional state (e.g. bored?), and once with the demand to instead evaluate a description of the physical characteristics of their facial expression (e.g. mouth open?). The Explicit Task can thus be described as a 2 (Cue: Emotion vs Expression) × 3 (Target: Humans vs Non-human Primates vs Dogs) factorial design. In numerous published studies, we have shown that conceptually similar manipulations provide a contrast that robustly and selectively modulates activity in the regions of the brain associated with mental state attribution (Spunt et al., 2011; Spunt and Lieberman, 2012a,b; Spunt and Adolphs, 2014).

Table 1 displays the 10 verbal cues featured in the study. Emotion cues concerned the mental state of the focal animal in the photograph (e.g. bored?), while Expression cues concerned an observable motor behavior (e.g. gazing up?). Within each stimulus category, each cue was paired with four photographs designed to elicit the response ‘yes’ and two photographs designed to elicit the response ‘no’. These pairings were selected based on the responses of an independent sample of Mechanical Turk respondents, with each cue-stimulus pairing evaluated by ∼30 native English-speaking US citizens. We retained only those pairings that produced the same response (accept or reject) in the majority (>80%) of respondents. In our subsequent analysis of participant performance, these consensus data were used to code responses as normative vs counternormative. Independent-samples t-tests showed that cue-photograph consensus did not differ across the three stimulus sets (Ps > 0.60).
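The consensus criterion and the normative coding of participant responses can be sketched as follows. The cue/image identifiers (e.g. `dog_03`), vote counts and function names are hypothetical; coding a missing response as its own category mirrors the separate handling of no-response trials in the single-subject models rather than any code from the study.

```python
from typing import Dict, List, Optional, Tuple

Pairing = Tuple[str, str]  # (verbal cue, hypothetical image identifier)


def majority(votes: List[bool]) -> Tuple[bool, float]:
    """Majority answer (True = accept) and the proportion of raters giving it."""
    prop_yes = sum(votes) / len(votes)
    return (True, prop_yes) if prop_yes >= 0.5 else (False, 1 - prop_yes)


def retain_pairings(norms: Dict[Pairing, List[bool]],
                    threshold: float = 0.80) -> Dict[Pairing, bool]:
    """Keep only pairings whose majority answer exceeds the agreement threshold."""
    kept = {}
    for pairing, votes in norms.items():
        answer, agreement = majority(votes)
        if agreement > threshold:
            kept[pairing] = answer
    return kept


def code_response(pairing: Pairing, response: Optional[bool],
                  kept: Dict[Pairing, bool]) -> str:
    """Code a participant's answer against the normative (consensus) answer."""
    if response is None:
        return "no response"
    return "normative" if response == kept[pairing] else "counternormative"


norms = {
    ("bored?", "dog_03"): [True] * 27 + [False] * 3,   # 90% agreement: retained
    ("bored?", "dog_07"): [True] * 20 + [False] * 10,  # 67% agreement: dropped
}
kept = retain_pairings(norms)
print(kept)  # {('bored?', 'dog_03'): True}
print(code_response(("bored?", "dog_03"), False, kept))  # counternormative
```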

Table 1.

Verbal cues used in the Explicit Task to experimentally control the incidence of emotion attribution when observing the facial expressions of Humans, Non-human Primates and Dogs.

| Attentional focus: Emotion | Attentional focus: Expression |
|---|---|
| annoyed? | baring teeth? |
| bored? | gazing up? |
| confident? | looking at the camera? |
| excited? | mouth closed? |
| reflective? | mouth open? |

Emotion cues prompted participants to evaluate the emotional state implied by the target expression, while Expression cues prompted participants to evaluate a factual statement about the target expression itself. Each cue was paired with six targets from each stimulus category. Every target appeared twice during the Explicit Task, once paired with an Emotion cue and once with an Expression cue. Thus, because the images are identical across conditions, the Emotion > Expression contrast isolates a difference in attentional focus rather than in visual input.

Implicit task. The Explicit Task allowed us to tackle our primary research question directly: do people activate the same neural regions, and hence presumably engage the same psychological processes, to attribute mental states to the facial expressions of humans and non-human animals alike? However, because anthropomorphism in the Explicit Task is prompted by extrinsic task demands, that task is limited in its ability to address our secondary and tertiary research questions, which ask whether humans commonly attribute human-like emotions to non-human animals spontaneously, in the absence of any extrinsic demand to do so. To better capture this spontaneously expressed motivation to anthropomorphize non-human animals, we had participants perform a simple visual one-back task on all stimuli. To minimize demand characteristics, participants always performed this Implicit Task before being introduced to the Explicit Task. This ensured that the evoked responses to each stimulus category were maximally spontaneous and not primed by the words used in the Explicit Task.

In the Implicit Task, the 90 stimuli were presented in pseudo-random order in an event-related fashion along with 30 phase-scrambled images, which were created by phase-scrambling the entire set of 90 stimuli and selecting a subset of 30 matched on luminance. Each stimulus appeared onscreen for 2 s. Trials were separated by an inter-stimulus interval during which a fixation cross appeared onscreen; this interval ranged from 0.5 to 5 s, with a mean of 1.5 s. During stimulus presentation, participants performed a visual one-back task, pressing a button with the right index finger whenever the presented image was the same as the one previously shown. Three one-back ‘catch’ trials were included for each of the four stimulus conditions and excluded from the fMRI analysis. The percentage of one-backs detected by participants was high (Mean = 96.57%, Min = 75.00%), indicating that all participants attended to the images.
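Scoring the one-back catch trials amounts to locating immediate repeats in the presentation sequence and checking for a button press on each. A minimal sketch, assuming hypothetical image identifiers and a per-trial press flag; it computes the hit rate only, since false alarms are not reported above. The same repeat indices identify the catch trials that are modeled separately in the fMRI analysis.

```python
from typing import List, Sequence


def one_back_targets(sequence: Sequence[str]) -> List[int]:
    """Indices of trials whose image repeats the immediately preceding image."""
    return [i for i in range(1, len(sequence)) if sequence[i] == sequence[i - 1]]


def hit_rate(sequence: Sequence[str], pressed: Sequence[bool]) -> float:
    """Percentage of one-back repeats on which the participant pressed the button."""
    targets = one_back_targets(sequence)
    hits = sum(1 for i in targets if pressed[i])
    return 100.0 * hits / len(targets)


# Hypothetical run: image identifiers and button presses
sequence = ["h01", "d04", "d04", "p12", "s07", "s07", "h02"]
pressed = [False, False, True, False, False, False, False]
print(one_back_targets(sequence))   # [2, 5]
print(hit_rate(sequence, pressed))  # 50.0
```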

Post-task stimulus ratings and personality measurement. Immediately following their scan, all subjects also rated each of the facial expression images on two scales. To assess the extent to which participants understood the emotional state of each target, participants answered the question ‘Do you understand what he or she is feeling?’ (1–9 scale; 1 = Not at all, 9 = Completely). To assess the valence of the participant’s emotional reaction to viewing each target, participants answered the question ‘How does the photograph make you feel?’ (1–9 scale; 1 = Very bad, 9 = Very good).

In addition, participants completed several questionnaires designed to measure personality attributes we predicted would affect their tendency to anthropomorphize animals: the Belief in Animal Mind Questionnaire (BAM; Hills, 1995), the Empathy to Animals Scale (ETA; Paul, 2000), the Pet Attitude Scale (PAS; Templer et al., 1981) and the Individual Differences in Anthropomorphism Questionnaire (IDAQ; Waytz et al., 2010). Because responses to the BAM, ETA and PAS showed restricted range in our small sample, we excluded these questionnaires from further analysis. Moreover, given our exclusive focus on the anthropomorphization of non-human animals, as well as prior work indicating distinct correlates of the living and non-living subscales of the IDAQ (Cullen et al., 2014), we restricted our analysis to the animal anthropomorphization subscale, which demonstrated both excellent internal reliability (α = 0.90) and a wide range of responses across participants (Scale Limits: 1–9; Score Range: 2.2–9). Due to scheduling constraints, responses to the BAM, ETA and IDAQ were available for only 16 participants.
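Internal reliability of the kind reported for the IDAQ animal subscale is conventionally Cronbach's alpha, α = k/(k − 1) · (1 − Σ item variances / variance of the summed score). A minimal sketch with sample variances; the item responses below are hypothetical, and the number of items is not taken from the questionnaire.

```python
from statistics import variance
from typing import List


def cronbach_alpha(items: List[List[float]]) -> float:
    """Cronbach's alpha for k items, each a list of scores (one per participant)."""
    k = len(items)
    n = len(items[0])
    totals = [sum(item[j] for item in items) for j in range(n)]  # summed score per participant
    sum_item_var = sum(variance(item) for item in items)         # sample variances
    return k / (k - 1) * (1 - sum_item_var / variance(totals))


# Hypothetical responses: 3 items x 5 participants
items = [
    [2, 5, 7, 8, 9],
    [3, 4, 7, 9, 8],
    [2, 6, 6, 8, 9],
]
print(round(cronbach_alpha(items), 2))
```

Alpha approaches 1 as items covary strongly relative to their individual variances; perfectly redundant items give exactly 1.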

Experimental procedures

For both tasks, trials were presented to participants in a pseudo-randomized event-related design (Figure 1). For the Implicit Task, the order and onsets of trials were optimized to maximize the efficiency of separately estimating the Face > Scramble contrast for each of the three target categories. For the Explicit Task, the order and onsets of trials were optimized to maximize the efficiency of separately estimating the Emotion > Expression contrast for each of the three targets; in addition, the order in which the Emotion/Expression cues appeared was counterbalanced across stimuli within each target. For both tasks, design optimization was achieved by generating the design matrices for 1 million pseudo-randomly generated designs and, for each design, summing the efficiencies of estimating each contrast of interest. The most efficient design for each task was retained and used for all participants.
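The random-search optimization described above can be sketched at small scale: generate many random trial orders, build an HRF-convolved design matrix for each, score it by the summed efficiency 1 / (c′(X′X)⁻¹c) over the contrasts of interest, and keep the best. This is a simplified illustration, not the study's code: all function names are hypothetical, the double-gamma HRF parameters are assumed SPM-like values, trials use a fixed stimulus-onset asynchrony (so only order, not onset jitter, is optimized), and TR = 1 s.

```python
import math
import random
from typing import List, Sequence, Tuple


def canonical_hrf(tr: float = 1.0, length: float = 32.0) -> List[float]:
    """Double-gamma HRF (assumed: gamma(6) peak minus 1/6 gamma(16) undershoot), sum = 1."""
    h = []
    for i in range(int(length / tr)):
        t = i * tr
        peak = t ** 5 * math.exp(-t) / math.gamma(6)
        undershoot = t ** 15 * math.exp(-t) / math.gamma(16)
        h.append(peak - undershoot / 6.0)
    total = sum(h)
    return [v / total for v in h]


def regressor(onsets: Sequence[int], duration: int, n_scans: int, hrf: List[float]) -> List[float]:
    """Boxcar of `duration` scans at each onset, convolved with the HRF."""
    box = [0.0] * n_scans
    for onset in onsets:
        for t in range(onset, min(onset + duration, n_scans)):
            box[t] = 1.0
    return [sum(box[t - k] * hrf[k] for k in range(min(t + 1, len(hrf))))
            for t in range(n_scans)]


def solve(a: List[List[float]], b: Sequence[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def efficiency(columns: List[List[float]], contrast: Sequence[float]) -> float:
    """Efficiency 1 / (c' (X'X)^-1 c) for a design given as a list of columns."""
    xtx = [[sum(u * v for u, v in zip(ci, cj)) for cj in columns] for ci in columns]
    z = solve(xtx, contrast)
    return 1.0 / sum(c * zi for c, zi in zip(contrast, z))


def optimize(conditions: Sequence[str], n_per_cond: int, soa: int, duration: int,
             contrasts: List[List[float]], n_designs: int,
             seed: int = 0) -> Tuple[List[str], float]:
    """Random search over trial orders, keeping the largest summed efficiency."""
    rng = random.Random(seed)
    hrf = canonical_hrf()
    n_scans = len(conditions) * n_per_cond * soa + len(hrf)
    best_order, best_score = [], -math.inf
    for _design in range(n_designs):
        order = [cond for cond in conditions for _ in range(n_per_cond)]
        rng.shuffle(order)
        cols = [regressor([i * soa for i, c in enumerate(order) if c == cond],
                          duration, n_scans, hrf) for cond in conditions]
        cols.append([1.0] * n_scans)  # intercept
        score = sum(efficiency(cols, c) for c in contrasts)
        if score > best_score:
            best_order, best_score = order, score
    return best_order, best_score


# Hypothetical Implicit-Task-like example: Face > Scramble for each face category
conds = ["Human", "Primate", "Dog", "Scramble"]
contrasts = [[1, 0, 0, -1, 0], [0, 1, 0, -1, 0], [0, 0, 1, -1, 0]]  # last weight: intercept
order, score = optimize(conds, n_per_cond=6, soa=4, duration=2,
                        contrasts=contrasts, n_designs=20)
print(order[:8], round(score, 3))
```

Summing efficiencies across contrasts, as in the text, favors designs that estimate every contrast of interest reasonably well rather than one contrast very well.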

Stimulus presentation and response recording used the Psychophysics Toolbox (version 3.0.9; Brainard, 1997) operating in MATLAB. An LCD projector was used to present the task on a screen at the rear of the scanner bore that was visible to participants through a mirror positioned on the head coil. Participants were given a button box and made their responses using their right-hand index and middle fingers. Before the experimental tasks, participants were introduced to the task structure and performed brief practice versions featuring stimuli not included in the experimental task.

Image acquisition

All imaging data were acquired at the Caltech Brain Imaging Center using a Siemens Trio 3.0 Tesla MRI scanner outfitted with a 32-channel phased-array head coil. We acquired 1330 whole-brain T2*-weighted echoplanar image volumes (EPIs; multi-band acceleration factor = 4, slice thickness = 2.5 mm, in-plane resolution = 2.5 × 2.5 mm, 56 slices, TR = 1000 ms, TE = 30 ms, flip angle = 60°, FOV = 200 mm) for the two experimental tasks. Participants’ in-scan head motion was minimal (max translation = 2.78 mm, max rotation = 1.88°). We also acquired an additional 904 EPI volumes for each participant for use in a separate study. Finally, we acquired a high-resolution anatomical T1-weighted image (1 mm isotropic) and field maps used to estimate and correct for inhomogeneity-induced image distortion.

Image preprocessing

Images were processed using Statistical Parametric Mapping (SPM12 version 6685, Wellcome Department of Cognitive Neurology, London, UK) operating in MATLAB. Prior to statistical analysis, each participant's images for each task were subjected to the following preprocessing steps: (i) the first four EPI volumes were discarded to account for T1-equilibration effects; (ii) slice-timing correction was applied; (iii) the realign and unwarp procedure was used to perform distortion correction and concurrent motion correction; (iv) each participant’s T1 structural volume was co-registered to the mean of the corrected EPI volumes; (v) the group-wise DARTEL registration method included in SPM12 (Ashburner, 2007) was used to normalize the T1 structural volume to a common group-specific space, with subsequent affine registration to Montreal Neurological Institute (MNI) space; (vi) all EPI volumes were normalized to MNI space using the deformation flow fields generated in the previous step, which simultaneously re-sampled volumes (2 mm isotropic) and applied spatial smoothing (Gaussian kernel of 6 mm isotropic, full width at half maximum); and finally, (vii) a log-transformation was applied to the EPI timeseries for each task to ensure that the regression weights estimated from our single-subject models were interpretable as percent signal change.
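Step (vii) works because ln(x(1 + p)) − ln(x) = ln(1 + p) ≈ p for small p, so differences in log-scaled signal approximate fractional change regardless of a voxel's baseline intensity. A worked sketch, assuming a scaling factor of 100 so that units are percent (the factor is an assumption, not stated above):

```python
import math
from typing import List, Sequence


def log_scale(series: Sequence[float]) -> List[float]:
    """Scale a positive-valued timeseries as 100 * ln(x); the factor 100 is assumed."""
    return [100.0 * math.log(v) for v in series]


# Hypothetical raw signal: baseline 1000, then a 2% increase
scaled = log_scale([1000.0, 1020.0])
delta = scaled[1] - scaled[0]
print(round(delta, 2))  # 1.98
```

A voxel with a baseline of 500 and the same 2% increase yields the same difference, which is what makes regression weights comparable across voxels.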

Single-subject analysis

For each participant, general linear models were used to model the EPI timeseries for each task. Models for both tasks included as covariates of no interest the six motion parameters estimated from image realignment and a separate predictor for every timepoint at which in-brain global signal change (GSC) deviated from the mean GSC by more than 2.5 SD, or at which estimated motion exceeded 0.5 mm of translation or 0.5° of rotation. In addition, covariates were convolved with the canonical (double-gamma) hemodynamic response function, the data were high-pass filtered at 1/100 Hz, and models were estimated using the SPM12 RobustWLS toolbox, which implements the robust weighted least-squares estimation algorithm (Diedrichsen and Shadmehr, 2005).
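The outlier-censoring rule can be sketched as follows: flag volumes whose GSC is an outlier or whose motion exceeds the thresholds, then add one indicator ("spike") regressor per flagged volume. The function names are hypothetical, and treating both GSC and motion as frame-to-frame changes is an assumption about how the thresholds were applied. Note that a single transient spike in the global signal flags two consecutive volumes, because both the rise and the fall are large changes.

```python
from statistics import mean, stdev
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def flag_volumes(global_signal: Sequence[float], translations: Sequence[Vec3],
                 rotations: Sequence[Vec3], z_thresh: float = 2.5,
                 trans_mm: float = 0.5, rot_deg: float = 0.5) -> List[int]:
    """Indices of volumes to censor (assumption: GSC and motion are frame-to-frame changes)."""
    n = len(global_signal)
    gsc = [0.0] + [global_signal[t] - global_signal[t - 1] for t in range(1, n)]
    mu, sd = mean(gsc), stdev(gsc)
    flagged = set()
    for t in range(n):
        if abs(gsc[t] - mu) > z_thresh * sd:
            flagged.add(t)
        if t > 0:
            d_trans = max(abs(a - b) for a, b in zip(translations[t], translations[t - 1]))
            d_rot = max(abs(a - b) for a, b in zip(rotations[t], rotations[t - 1]))
            if d_trans > trans_mm or d_rot > rot_deg:
                flagged.add(t)
    return sorted(flagged)


def spike_regressors(flagged: Sequence[int], n: int) -> List[List[float]]:
    """One indicator column per flagged volume."""
    return [[1.0 if t == f else 0.0 for t in range(n)] for f in flagged]


# Hypothetical 20-volume run with one signal spike and one head jerk
gs = [1000.0] * 20
gs[10] = 1050.0
trans = [(0.0, 0.0, 0.0)] * 20
trans[15] = (0.8, 0.0, 0.0)
rots = [(0.0, 0.0, 0.0)] * 20
flagged = flag_volumes(gs, trans, rots)
print(flagged)  # [10, 11, 15, 16]
```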

Explicit task. The model for the Explicit Task included six covariates of interest corresponding to the six cells created by crossing the factors Cue (‘Emotion’ vs ‘Expression’) and Target (‘Humans’ vs ‘Non-human Primates’ vs ‘Dogs’). These covariates excluded foil trials (i.e. trials to which the normative response was to reject) and trials to which the participant gave either no response or the counternormative response. These excluded trials were modeled in a separate covariate of no interest. The neural response to each trial was defined with variable epochs spanning the onset and offset of the target stimulus (Grinband et al., 2008). The onset of the verbal cues preceding each target was also modeled in an additional covariate of no interest. A final covariate of no interest modeled variability in response time (RT) across all trials included in the covariates of interest.

Implicit task. The model for the Implicit Task included four covariates of interest corresponding to the four stimulus types presented to participants (‘Humans’, ‘Primates’, ‘Dogs’ and ‘Scrambles’). These covariates excluded all one-back ‘catch’ trials, which were modeled in a separate covariate of no interest. Given that stimulus duration was fixed across conditions, we modeled the neural response to each trial using fixed 2-s epochs spanning the onset and offset of each image.

Group analysis

Contrasts of interest. To test for a relationship with emotion attribution across all three targets (humans, primates, dogs), we used paired-samples t-tests to identify those regions that independently showed an effect in the Emotion > Expression contrast of the Explicit Task for each of the three targets. We followed this by testing for spontaneous responses, in the absence of an explicit attribution demand, using the Face > Scramble contrast in the Implicit Task for each stimulus category separately.

We interrogated several additional contrasts to identify effects that reliably differed between the human and non-human targets. Our primary objective here was to identify effects for which Human targets differed from both Primate and Dog targets. For the Explicit Task, we examined two contrasts: one for the Cue × Target interaction, ‘Human[Emotion > Expression] > Non-human[Emotion > Expression]’; and one for the main effect of Target, ‘Human[Emotion + Expression] > Non-human[Emotion + Expression]’. For the Implicit Task, we examined the ‘Human > Non-human’ comparison. Finally, exploratory analyses of the ‘Primate > Dog’ comparison are reported in Supplementary Table S2. These analyses indicate that in both the Explicit and Implicit Tasks, responses in regions of interest were not reliably different across the two non-human targets.
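Contrast weights for the 2 × 3 design can be generated from simple rules over the six cells. In this sketch the cell order (Cue outer, Target inner) and the choice to give each non-human target a weight of −0.5, so that Primate and Dog are averaged against Human, are assumptions; in SPM the actual column order depends on how the factorial model is specified.

```python
from itertools import product
from typing import Callable, Dict, List

CUES = ("Emotion", "Expression")
TARGETS = ("Human", "Primate", "Dog")
CELLS = list(product(CUES, TARGETS))  # assumed order of the six cell regressors


def weights(rule: Callable[[str, str], float]) -> List[float]:
    """Evaluate a weighting rule for every (cue, target) cell."""
    return [rule(cue, target) for cue, target in CELLS]


def cue_sign(cue: str) -> float:
    return 1.0 if cue == "Emotion" else -1.0


def human_vs_nonhuman(target: str) -> float:
    # Non-human targets share a weight of -0.5 so that they are averaged.
    return 1.0 if target == "Human" else -0.5


# Emotion > Expression within each target (the inputs to the conjunction)
emotion_gt_expression: Dict[str, List[float]] = {
    t: weights(lambda cue, target, t=t: cue_sign(cue) if target == t else 0.0)
    for t in TARGETS
}
interaction = weights(lambda cue, target: cue_sign(cue) * human_vs_nonhuman(target))
main_target = weights(lambda cue, target: human_vs_nonhuman(target))

print(interaction)  # [1.0, -0.5, -0.5, -1.0, 0.5, 0.5]
print(main_target)  # [1.0, -0.5, -0.5, 1.0, -0.5, -0.5]
```

Both comparison vectors sum to zero, so neither is confounded with the overall mean response across cells.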

Regions of interest. Each effect of interest was first interrogated using a set of independently defined ROIs based on the group-level Why/How contrasts from Study 1 (n = 29) and Study 3 (n = 21) reported in Spunt and Adolphs (2014). These images are publicly available on NeuroVault (http://neurovault.org/collections/445/). In Spunt and Adolphs (2015), we observed evidence for domain-general responses to the Why > How contrast in four left-hemisphere ROIs: the dorsomedial PFC, lateral OFC, TPJ and anterior STS. Two of these regions (the dorsomedial PFC and lateral OFC) showed a response to the Why > How contrast that was reliably stronger for facial expressions than for non-social events. Building on these findings, our ROI analyses here focused on the same four ROIs. The peak coordinate and spatial extent of each ROI are provided in Supplementary Table S1. For each ROI, we tested our hypotheses with t-tests on the parameter estimates averaged across the ROI’s voxels. For each test, we report P-values corrected for multiple comparisons across ROIs using the false-discovery rate (FDR) procedure described in Benjamini and Yekutieli (2001). Confidence intervals (CIs) for these effects were estimated using the bias-corrected and accelerated percentile method (10 000 random samples with replacement; implemented using the BOOTCI function in MATLAB).
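The Benjamini–Yekutieli procedure is the Benjamini–Hochberg step-up procedure with an extra factor c(m) = Σ 1/i (i = 1…m), which keeps the FDR controlled under arbitrary dependence between tests. A minimal sketch of the adjusted P-values; the ROI labels and uncorrected P-values are hypothetical.

```python
from typing import List


def by_adjust(pvals: List[float]) -> List[float]:
    """Benjamini-Yekutieli FDR-adjusted p-values (valid under arbitrary dependence)."""
    m = len(pvals)
    c_m = sum(1.0 / i for i in range(1, m + 1))  # harmonic correction factor
    order = sorted(range(m), key=lambda i: pvals[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Step down from the largest p-value, enforcing monotonicity and a cap of 1.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvals[i] * m * c_m / rank)
        adjusted[i] = running_min
    return adjusted


# Hypothetical uncorrected P-values for the four ROIs
rois = ["dmPFC", "lOFC", "TPJ", "aSTS"]
raw = [0.001, 0.01, 0.02, 0.04]
for roi, p in zip(rois, by_adjust(raw)):
    print(roi, round(p, 4))
```

With four tests, c(4) = 25/12, so each adjusted P-value is roughly twice its Benjamini–Hochberg counterpart.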

Whole-brain. ROI analyses were complemented by whole-brain analyses. To model the 2 × 3 design of the Explicit Task at the group-level, we entered participants’ contrast images for the six cells of the design into a random-effects analysis using the flexible factorial repeated-measures analysis of variance (ANOVA) module within SPM12 (within-subject factors: Cue, Target; blocking factor: Subject). To examine the one-way design of the Implicit Task, we conducted one-sample t-tests on single-subject contrast images for effects of interest. When interrogating the group-level model for both tasks, we tested the conjunction null for comparisons of interest using the minimum statistic method (Nichols et al., 2005).
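The minimum-statistic test of the conjunction null declares a voxel active only if every contrast entering the conjunction exceeds the threshold, which is equivalent to thresholding the voxel-wise minimum t. A minimal sketch on flat lists of voxel t-values; the t-values and the critical value `t_crit` are hypothetical.

```python
from typing import List, Sequence


def min_statistic(t_maps: Sequence[Sequence[float]]) -> List[float]:
    """Voxel-wise minimum t across the contrasts entering the conjunction."""
    return [min(values) for values in zip(*t_maps)]


def conjunction_mask(t_maps: Sequence[Sequence[float]], t_crit: float) -> List[bool]:
    """A voxel survives only if every contrast exceeds t_crit (conjunction null)."""
    return [t > t_crit for t in min_statistic(t_maps)]


# Hypothetical t-values at three voxels for Emotion > Expression in each target
human = [4.0, 5.0, 1.0]
primate = [3.9, 2.0, 6.0]
dog = [4.2, 4.5, 4.0]
print(conjunction_mask([human, primate, dog], t_crit=3.7))  # [True, False, False]
```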

We interrogated the resulting group-level t-statistic images by applying a cluster-forming (voxel-level) threshold of P < 0.001 followed by cluster-level correction for multiple comparisons at a family-wise error (FWE) of 0.05. Cluster-level correction was achieved for conjunction images by identifying the maximum cluster extent threshold necessary to achieve cluster-wise correction across the individual images entering the conjunction. For visual presentation, thresholded t-statistic maps were overlaid on the average of the participants' T1-weighted anatomical images.
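The minimum-statistic conjunction combined with the "largest required cluster extent" rule can be sketched as follows. Assumptions: `t_maps` are voxelwise t-statistic arrays in a common space; `k_per_map` holds the cluster-extent thresholds (in voxels) that each individual contrast would need for cluster-level FWE correction, which in practice come from SPM and are hypothetical inputs here; clusters use SciPy's default face connectivity, which may differ from SPM's neighbourhood definition; the error degrees of freedom (20) are only an example. The function name is hypothetical.

```python
import numpy as np
from scipy import ndimage, stats


def conjunction_clusters(t_maps, t_thresh, k_per_map):
    """Minimum-statistic conjunction (Nichols et al., 2005) with a cluster-extent filter.

    Returns the voxelwise minimum t-map and a boolean mask of surviving voxels.
    """
    t_min = np.min(np.stack(t_maps), axis=0)   # conjunction null: the weakest effect must pass
    supra = t_min > t_thresh                   # cluster-forming (voxel-level) threshold
    labels, _ = ndimage.label(supra)           # connected components of suprathreshold voxels
    sizes = np.bincount(labels.ravel())        # sizes[0] counts background voxels
    k = max(k_per_map)                         # most conservative extent across contrasts
    big = np.flatnonzero(sizes >= k)
    big = big[big != 0]                        # never keep the background label
    return t_min, np.isin(labels, big)


# One-sided voxel-level P < 0.001 expressed as a t threshold (df = 20 assumed for illustration).
T_THRESH = stats.t.isf(0.001, df=20)
```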

Exploratory analysis of individual differences. We conducted between-subject analyses to explore possible individual differences in the experimentally unconstrained brain activity observed during the Implicit Task. We specifically explored two interrelated a priori hypotheses regarding individual differences in the attribution of human-like emotions to non-human animals. The first hypothesis follows from our finding that brain regions associated with anthropomorphism in the Explicit Task were reliably activated by the stimuli in the Implicit Task. If these activations reflect spontaneous anthropomorphism, then they should be strongest in those participants who, on our post-scanning questionnaire, reported the highest levels of understanding of the animal stimuli, as well as in those who endorsed a disposition to anthropomorphize non-human animals in their everyday lives. To explore this first hypothesis, we extracted signal from each ROI in the Primate > Scramble and Dog > Scramble contrasts and computed its Pearson correlation with the scores derived from the post-task stimulus ratings and personality questionnaires.
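A minimal sketch of these ROI-by-score correlations, assuming one contrast estimate per participant per ROI and one score per participant per measure, with rows aligned by participant. The function name is hypothetical, and the P-values it returns are uncorrected.

```python
import numpy as np
from scipy import stats


def roi_score_correlations(roi_betas, scores):
    """Pearson r and p between each ROI estimate and each score, across participants.

    roi_betas: array (n_participants, n_rois); scores: array (n_participants, n_scores).
    Returns two arrays of shape (n_scores, n_rois), mirroring the rows/columns of a results table.
    """
    roi_betas = np.asarray(roi_betas, dtype=float)
    scores = np.asarray(scores, dtype=float)
    n_rois, n_scores = roi_betas.shape[1], scores.shape[1]
    r = np.empty((n_scores, n_rois))
    p = np.empty_like(r)
    for i in range(n_scores):
        for j in range(n_rois):
            r[i, j], p[i, j] = stats.pearsonr(roi_betas[:, j], scores[:, i])
    return r, p
```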

The second hypothesis adopts a different approach to interpreting the activity observed in the Implicit Task. Namely, if spontaneous activity in response to non-human animals reflects anthropomorphism, then the extent to which that activity is similar to the same individual’s spontaneous activity in response to humans should be associated with their tendency to anthropomorphize non-human animals. To explore this hypothesis, for each participant we correlated their whole-brain (gray-matter masked) response pattern for human faces to their response pattern for each of the non-human animal face conditions. These correlations were computed on the t-statistic images and subsequently Fisher’s z-transformed for the between-subject analysis. This produced two measures of human/non-human neural similarity for each participant, which we also correlated with the scores derived from the post-task stimulus ratings and personality questionnaires.
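The similarity measure described above reduces to a Pearson correlation over masked voxels followed by the Fisher transform z = arctanh(r). A NumPy sketch, assuming each participant's t-maps and the gray-matter mask share one voxel grid; both function names are hypothetical.

```python
import numpy as np


def pattern_similarity(t_human, t_animal, gm_mask):
    """Fisher z-transformed Pearson r between two t-maps, restricted to a gray-matter mask."""
    x = np.asarray(t_human, dtype=float)[gm_mask]    # masked voxels as a 1-D vector
    y = np.asarray(t_animal, dtype=float)[gm_mask]
    r = np.corrcoef(x, y)[0, 1]
    return np.arctanh(r)                             # Fisher z = 0.5 * ln((1 + r) / (1 - r))


def similarity_by_subject(human_maps, animal_maps, gm_mask):
    """One human/non-human similarity value per participant, ready for between-subject correlation."""
    return np.array([pattern_similarity(h, a, gm_mask)
                     for h, a in zip(human_maps, animal_maps)])
```

The resulting per-participant z values can then be correlated with questionnaire scores in the same way as the ROI estimates.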

We chose to estimate similarity across the whole-brain response because it requires no assumptions about the content of the brain states being compared. Rather, it simply asks to what extent a participant responds similarly when naturally observing humans and non-human animals, and is agnostic regarding ‘what’ specific mental contents and processes are involved. In adopting this strategy, we recognized that within-subject similarities would likely be underestimated due to the inclusion of functional responses that are irrelevant to anthropomorphism, such as early visual processing. However, we reasoned that this underestimation would likely wash out in our analysis, which concerns between-subject variability rather than the absolute level of similarity.

Results

Behavioral outcomes

Table 2 summarizes cue acceptance and RT data from the Explicit Task and post-task stimulus ratings of emotion understanding and emotional valence. Because ceiling effects on cue acceptance produced highly non-normal distributions, we did not subject cue acceptance data to statistical tests, and we excluded counternormative attributions from our analyses of both the remaining behavioral outcomes (RT and post-task ratings) and the estimated neural response (as described in the ‘Methods’ section).

Table 2.

Means and SDs (parenthetically) summarizing the frequency and RT with which participants accepted normative Emotion and Expression cues for each of the three targets in the Explicit Task, as well as their ratings of each stimulus target collected post-task

| Target | Acceptance (%): Emotion | Acceptance (%): Expression | Acceptance RT (ms): Emotion | Acceptance RT (ms): Expression | Post-task rating: Understanding | Post-task rating: Valence |
|---|---|---|---|---|---|---|
| Human | 97.19 (4.46) | 98.12 (3.10) | 914 (126) | 789 (99) | 6.93 (1.03) | 5.31 (.48) |
| Primate | 94.95 (5.20) | 89.95 (6.82) | 960 (112) | 849 (108) | 5.83 (.91) | 5.07 (.62) |
| Dog | 84.80 (12.18) | 95.24 (6.98) | 948 (114) | 848 (94) | 6.05 (1.11) | 5.30 (.47) |

Participants indicated the extent to which they understood what each target was feeling (‘Understanding’), and the extent to which each target made them feel good versus bad (‘Valence’). See the main text for further details and Supplementary Table S2 for statistical analysis of these outcomes.

Explicit task performance. We used a series of repeated-measures ANOVAs to examine the remaining behavioral outcomes in the Explicit Task. Complete results are presented in Supplementary Table S2. When examining the simultaneous effects of Cue and Target on Acceptance RT, we observed significant main effects of both Cue and Target but no interaction. The main effect of Cue, namely that RTs to accept Emotion cues were longer than RTs to accept Expression cues, parallels a reliable behavioral effect observed in the Why/How Task from which this study’s task was adapted (Spunt and Adolphs, 2014, 2015). Critically, the absence of an interaction suggests that this behavioral effect was of similar magnitude for all three targets. Post-hoc t-tests indicated that the RT difference between Emotion and Expression cues was reliably above zero for all three targets (all Ps < 0.0001), and we observed no evidence that the magnitude of this RT effect differed in any of the pairwise comparisons of targets (all Ps > 0.20). Post-hoc t-tests further demonstrated that the main effect of Target on acceptance RT was driven by reliably longer RTs for judgments of non-human targets than for the same judgments of human targets (all Ps < 0.0001).
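Post-hoc tests of this kind can be sketched in Python with SciPy: compute each participant's Emotion minus Expression RT difference per target, test each difference against zero, and compare the differences between targets with paired t-tests. This is an illustrative sketch on simulated RTs, not the procedure behind Supplementary Table S2; the function name is hypothetical, and the tests are two-sided and uncorrected.

```python
import numpy as np
from scipy import stats


def cue_effect_tests(rt_emotion, rt_expression):
    """Post-hoc tests on the Emotion - Expression RT difference.

    rt_emotion / rt_expression: dicts mapping target name -> per-participant mean RTs (ms),
    with participants in the same order in every array.
    """
    diffs = {t: np.asarray(rt_emotion[t], float) - np.asarray(rt_expression[t], float)
             for t in rt_emotion}
    # Is the Cue effect reliably different from zero within each target?
    within = {t: stats.ttest_1samp(d, 0.0) for t, d in diffs.items()}
    # Does the size of the Cue effect differ between any pair of targets?
    names = list(diffs)
    pairwise = {}
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            pairwise[(a, b)] = stats.ttest_rel(diffs[a], diffs[b])
    return within, pairwise


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    expr = {t: rng.normal(850, 100, 20) for t in ("Human", "Primate", "Dog")}  # simulated
    emo = {t: expr[t] + 100 + rng.normal(0, 30, 20) for t in expr}             # simulated
    within, pairwise = cue_effect_tests(emo, expr)
    print({t: round(float(r.pvalue), 6) for t, r in within.items()})
```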

We do not believe that the main effect of Target on RT poses a significant impediment to interpreting our fMRI-derived measures of brain activity. As described earlier, our regression model of the fMRI time series for the Explicit Task additionally included a parametric covariate modeling RT-related variability in response amplitude across all included trials. As we have shown in multiple published papers (Spunt and Adolphs, 2014; Spunt et al., 2016), RT variability does not sufficiently explain the univariate response in regions associated with attentional manipulations akin to the Emotion/Expression manipulation used here.
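To make the RT covariate concrete, the sketch below builds one common form of parametric modulator: stick functions at trial onsets scaled by mean-centered RT, convolved with a double-gamma HRF and sampled once per scan. Assumptions: the HRF only approximates SPM's canonical shape (gamma densities with shapes 6 and 16 and a 1/6 undershoot ratio), no orthogonalization step is applied, and the function names, onsets, RTs and TR are hypothetical. SPM constructs such regressors internally; this is not its code.

```python
import numpy as np
from scipy.stats import gamma


def double_gamma_hrf(dt, length=32.0):
    """Double-gamma HRF (response peak near 5 s, undershoot near 15 s) sampled every dt seconds."""
    t = np.arange(0.0, length, dt)
    h = gamma.pdf(t, 6) - gamma.pdf(t, 16) / 6.0   # positive response minus 1/6 undershoot
    return h / h.sum()                               # unit-sum scaling (a choice made in this sketch)


def rt_modulated_regressor(onsets, rts, n_scans, tr, dt=0.1):
    """RT parametric modulator: mean-centered RT sticks convolved with the HRF, one value per scan."""
    n_fine = int(round(n_scans * tr / dt))
    sticks = np.zeros(n_fine)
    amps = np.asarray(rts, dtype=float) - np.mean(rts)   # trials at the average RT contribute 0
    idx = np.round(np.asarray(onsets, dtype=float) / dt).astype(int)
    np.add.at(sticks, idx, amps)                         # accumulate if two onsets share a bin
    conv = np.convolve(sticks, double_gamma_hrf(dt))[:n_fine]
    scan_idx = np.round(np.arange(n_scans) * tr / dt).astype(int)
    return conv[scan_idx]                                # sample at the start of each scan


if __name__ == "__main__":
    reg = rt_modulated_regressor([10.0, 30.0, 50.0], [0.8, 1.0, 1.2], n_scans=40, tr=2.0)
    print(np.round(reg, 4))
```

Mean-centering means the modulator captures trial-by-trial deviations from the average response, leaving the unmodulated condition regressors to carry the mean effect.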

Post-task ratings. A one-way ANOVA revealed a significant main effect of Target on participants’ post-task ratings of emotion understanding. Post-hoc contrasts revealed that this effect was driven by higher levels of understanding for human targets compared with each non-human target, with no reliable difference between the two non-human targets. Finally, a one-way ANOVA revealed no evidence for an effect of Target on participants’ post-task ratings of valence.
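For a one-way within-subject design such as the post-task ratings (three targets rated by every participant), the F-test can be computed by partitioning the sums of squares into target, participant and error components. A minimal NumPy/SciPy sketch, assuming complete data in a participants × targets array and applying no sphericity correction; the function name is hypothetical, and the software used for the reported tests is not specified here.

```python
import numpy as np
from scipy import stats


def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA.

    data: array (n_participants, n_conditions). Returns F, df_effect, df_error, p.
    """
    x = np.asarray(data, dtype=float)
    n, k = x.shape
    grand = x.mean()
    ss_cond = n * np.sum((x.mean(axis=0) - grand) ** 2)   # between-condition variability
    ss_subj = k * np.sum((x.mean(axis=1) - grand) ** 2)   # stable participant differences
    ss_total = np.sum((x - grand) ** 2)
    ss_err = ss_total - ss_cond - ss_subj                 # condition-by-participant residual
    df_cond, df_err = k - 1, (n - 1) * (k - 1)
    f_stat = (ss_cond / df_cond) / (ss_err / df_err)
    p = stats.f.sf(f_stat, df_cond, df_err)
    return f_stat, df_cond, df_err, p
```

Removing participant variability from the error term is what distinguishes this from a between-subjects one-way ANOVA such as `scipy.stats.f_oneway`.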

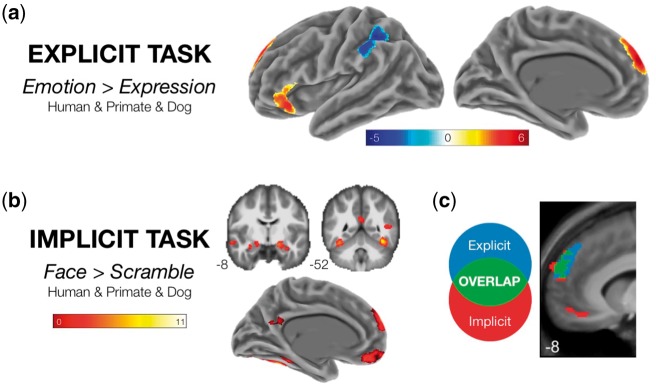

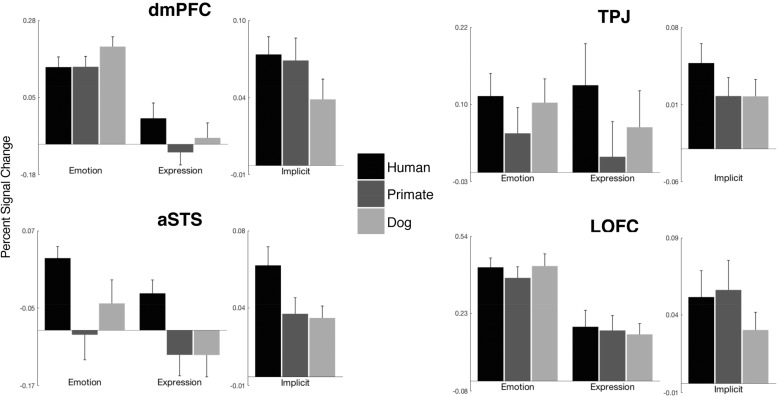

Target-independent effects

As listed in Table 3 and displayed in Figure 2, two ROIs showed an independently significant association with the ‘Emotion > Expression’ contrast for all three targets: the dorsomedial prefrontal cortex (dmPFC) and the lateral orbitofrontal cortex (LOFC). The anterior superior temporal sulcus (aSTS) showed this association for human and dog targets but not for non-human primates. Notably, the left TPJ failed to demonstrate an association with the ‘Emotion > Expression’ contrast for any of the three targets, including humans. As displayed in Figure 3a and listed in Supplementary Table S3, a whole-brain analysis of the ‘Emotion > Expression’ conjunction across targets demonstrated target-independent responses in regions of the dmPFC and LOFC similar in location to our a priori ROIs for those regions. These findings strongly suggest that explicitly attributing emotions (as opposed to merely describing expressions) to non-human animal faces relies on the same core inferential mechanisms supporting the attribution of emotion to the faces of other humans.

Table 3.

Outcomes of ROI tests on the within-stimulus comparisons from the Explicit and Implicit Tasks

| ROI | Humans: t | Humans: pFDR | Humans: 95% CI | Primates: t | Primates: pFDR | Primates: 95% CI | Dogs: t | Dogs: pFDR | Dogs: 95% CI |
|---|---|---|---|---|---|---|---|---|---|
| Explicit Task: Emotion > Expression | | | | | | | | | |
| LOFC* | 5.415 | <0.001 | [0.34, 0.68] | 5.357 | <0.001 | [0.25, 0.51] | 7.531 | <0.001 | [0.41, 0.69] |
| TPJ | 0.203 | 1.000 | [−0.17, 0.24] | 1.896 | 0.161 | [−0.01, 0.29] | 1.958 | 0.144 | [0.01, 0.30] |
| aSTS | 3.532 | 0.013 | [0.09, 0.31] | 2.086 | 0.151 | [0.00, 0.21] | 3.168 | 0.018 | [0.07, 0.26] |
| dmPFC* | 3.192 | 0.017 | [0.13, 0.52] | 5.668 | <0.001 | [0.32, 0.63] | 6.913 | <0.001 | [0.36, 0.64] |
| Implicit Task: Face > Scramble | | | | | | | | | |
| LOFC* | 2.911 | 0.021 | [0.12, 0.51] | 2.788 | 0.027 | [0.11, 0.54] | 2.720 | 0.032 | [0.05, 0.29] |
| TPJ* | 4.171 | 0.002 | [0.26, 0.68] | 2.798 | 0.027 | [0.10, 0.48] | 2.878 | 0.030 | [0.13, 0.53] |
| aSTS* | 5.777 | <0.001 | [0.20, 0.39] | 3.734 | 0.008 | [0.08, 0.25] | 4.821 | 0.002 | [0.09, 0.21] |
| dmPFC* | 5.620 | <0.001 | [0.42, 0.86] | 4.364 | 0.004 | [0.35, 0.88] | 2.980 | 0.030 | [0.13, 0.61] |

*ROIs that showed an effect across all stimulus categories. P-values are adjusted for multiple ROIs using an FDR procedure. Further details on the ROIs used are provided in the main text and Supplementary Table S1. L, Left; LOFC, lateral orbitofrontal cortex; TPJ, temporoparietal junction; aSTS, anterior superior temporal sulcus; dmPFC, dorsomedial prefrontal cortex.

Fig. 3.

Whole-brain analysis of target-independent effects. Statistical maps are cluster-level corrected at a FWE rate of 0.05. (a) ‘Explicit Task’. Regions showing significantly positive or negative responses in the ‘Emotion > Expression’ contrast for all targets. (b) ‘Implicit Task’. Regions showing a significantly positive response in the ‘Face > Scramble’ contrast for all targets. (c) ‘Explicit/Implicit Task Conjunction’. The region of dorsomedial prefrontal cortex showing a target-independent effect in both tasks.

Fig. 2.

Percent signal change in a priori ROIs. For each ROI, the leftmost set of bars represents the mean response across voxels (relative to fixation baseline) to the six conditions of the Explicit Task; the rightmost set of bars represents the mean response across voxels to each target in the Implicit Task (relative to the response to the scramble stimulus condition). For further details on the ROIs, see the main text and Supplementary Table S1. Statistical tests corresponding to the data plotted here are presented in Tables 3 and 4. OFC, orbitofrontal cortex; TPJ, temporoparietal junction; aSTS, anterior superior temporal sulcus; dmPFC, dorsomedial prefrontal cortex.

We next tested for target-general responses in the Implicit Task. As also listed in Table 3, every ROI, including the left TPJ, showed an independently significant association with the comparison to Scrambled images for all three targets. As displayed in Figure 3b and listed in Supplementary Table S3, a whole-brain analysis of the Face > Scramble conjunction across targets demonstrated target-independent responses in a distributed set of cortical regions spanning the fusiform and inferior occipital gyri bilaterally, the amygdala/parahippocampal gyrus bilaterally, the precuneus, and both the vmPFC and dmPFC. These findings expand on those observed in the Explicit Task by demonstrating that non-human animal facial expressions elicit similar regional responses in the brain even in the absence of an explicit emotion attribution task, and that these regional responses include those associated with emotion attribution in the Explicit Task as well as additional regions more closely associated with face perception and social attention in prior studies (Blonder et al., 2004; Kujala et al., 2012; Franklin et al., 2013; Hayama et al., 2016).

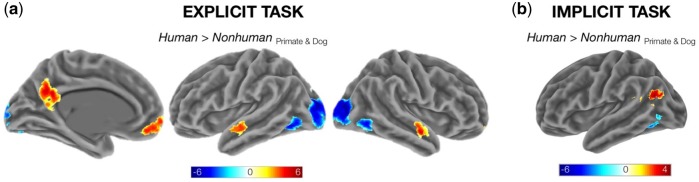

Target-dependent effects

As listed in Table 4 and displayed in Figure 4, we observed scant evidence for univariate responses in any ROI that reliably discriminated the human from the non-human stimuli. When examining each of the direct, pairwise comparisons of the three targets, only the left aSTS and left TPJ demonstrated reliable effects. The left aSTS showed greater activation for human compared with primate faces in both the Explicit and Implicit Tasks, and for human compared with dog faces in the Explicit Task. The left TPJ showed greater activation for human compared with primate faces only in the Explicit Task. Although of potential interest, these effects are more plausibly interpreted in terms of factors other than the human vs non-human distinction, such as prior experience and conceptual familiarity, which would naturally be greater for facial expressions of dogs and humans than for those of non-human primates.

Table 4.

Outcomes of ROI tests examining contrasts of human to non-human targets in the Explicit and Implicit Tasks

| ROI | Human > Primate: t | Human > Primate: pFDR | Human > Primate: 95% CI | Human > Dog: t | Human > Dog: pFDR | Human > Dog: 95% CI |
|---|---|---|---|---|---|---|
| Explicit Task: Human (Emotion > Expression) > Non-human (Emotion > Expression) | | | | | | |
| LOFC | −0.156 | 1.000 | [−0.22, 0.17] | −1.038 | 0.877 | [−0.42, 0.12] |
| TPJ | −1.862 | 0.343 | [−0.27, −0.01] | −1.102 | 0.877 | [−0.35, 0.10] |
| aSTS | 2.013 | 0.343 | [0.01, 0.17] | 0.629 | 1.000 | [−0.08, 0.16] |
| dmPFC | −1.157 | 0.741 | [−0.25, 0.05] | −1.401 | 0.877 | [−0.44, 0.07] |
| Explicit Task: Human (Emotion + Expression) > Non-human (Emotion + Expression) | | | | | | |
| LOFC | 0.380 | 1.477 | [−0.08, 0.14] | 0.543 | 1.335 | [−0.06, 0.10] |
| TPJ | 4.469 | 0.002 | [0.11, 0.29] | 0.851 | 1.335 | [−0.04, 0.12] |
| aSTS* | 5.352 | 0.001 | [0.12, 0.24] | 3.659 | 0.021 | [0.06, 0.20] |
| dmPFC | 2.971 | 0.026 | [0.05, 0.22] | 0.476 | 1.335 | [−0.07, 0.12] |
| Implicit Task: Human > Non-human | | | | | | |
| LOFC | −0.256 | 1.000 | [−0.08, 0.06] | 1.077 | 0.620 | [−0.04, 0.11] |
| TPJ | 2.257 | 0.160 | [0.01, 0.11] | 3.002 | 0.070 | [0.03, 0.13] |
| aSTS | 4.334 | 0.004 | [0.03, 0.08] | 2.667 | 0.070 | [0.01, 0.11] |
| dmPFC | 0.372 | 1.000 | [−0.03, 0.06] | 1.789 | 0.257 | [−0.02, 0.13] |

*ROIs showing effects in both non-human targets. P-values are adjusted for multiple ROIs using a FDR procedure. Further details on ROIs are provided in the main text and Supplementary Table S1. L, Left; LOFC, lateral orbitofrontal cortex; TPJ, temporoparietal junction; aSTS, anterior superior temporal sulcus; dmPFC, dorsomedial prefrontal cortex.

Fig. 4.

Whole-brain analysis of target-dependent effects. Statistical maps are cluster-level corrected at a FWE rate of 0.05. (a) ‘Explicit Task’. Regions showing a differential response to Human targets relative to both Non-human targets (collapsing the ‘Emotion/Expression’ factor). (b) ‘Implicit Task’. Regions showing a differential response to Human targets relative to both Non-human targets.

Exploratory analysis of individual differences

Table 5 summarizes the results of exploratory between-subject analyses examining the relationship between brain activity during the Implicit Task and participants’ post-task ratings of emotion understanding and the non-human animal subscale of the IDAQ. Univariate contrasts in our ROIs were not consistently associated with ratings of emotion understanding but did show preliminary evidence of a positive association with the IDAQ subscale. Multivariate response similarity between the human and each non-human target revealed stronger evidence for an association between ‘human-like’ brain activity and both higher levels of emotion understanding and dispositional anthropomorphism towards animals.

Table 5.

Outcomes of exploratory between-subject ROI analyses of variability in spontaneous activation in the Implicit Task, and in the similarity of each participant’s whole-brain (gray-matter masked) response pattern for human faces to their response pattern for each of the non-human animal face conditions

| Measure | Primate > Scramble: LOFC | Primate > Scramble: TPJ | Primate > Scramble: aSTS | Primate > Scramble: dmPFC | Dog > Scramble: LOFC | Dog > Scramble: TPJ | Dog > Scramble: aSTS | Dog > Scramble: dmPFC | Similarity to human: Primate | Similarity to human: Dog |
|---|---|---|---|---|---|---|---|---|---|---|
| Target-specific emotion understanding: Humans | −0.19 | 0.03 | −0.25 | −0.31 | −0.37 | −0.41 | −0.32 | −0.31 | 0.46 | 0.45 |
| Target-specific emotion understanding: Primates | 0.14 | 0.03 | 0.15 | 0.14 | −0.25 | −0.09 | −0.03 | −0.43 | 0.65** | 0.56* |
| Target-specific emotion understanding: Dogs | 0.15 | 0.22 | −0.10 | −0.05 | −0.07 | −0.41 | −0.20 | −0.25 | 0.60* | 0.53* |
| IDAQ (animals subscale) | 0.37 | 0.23 | 0.10 | 0.54* | 0.32 | 0.18 | 0.49 | 0.11 | 0.54* | 0.41 |

We extracted signal from each ROI in the Primate > Scramble and Dog > Scramble contrasts and computed the Pearson correlation with the scores derived from the post-task emotion understanding ratings and with scores on the animal-specific subscale of the IDAQ. Response pattern similarity was calculated as the Pearson correlation between the t-statistic images representing the response pattern for each Target in the Implicit Task, and then Fisher’s z-transformed for the between-subject analysis. This produced two measures of human/non-human neural similarity for each participant, which we also correlated with the collected post-task measures. L, Left; LOFC, lateral orbitofrontal cortex; TPJ, temporoparietal junction; aSTS, anterior superior temporal sulcus; dmPFC, dorsomedial prefrontal cortex.

Note: *P < 0.05, **P < 0.01.

Discussion

We identified the brain regions underlying a basic form of anthropomorphism, namely, the attribution of human-like emotion to the facial expressions of non-human animals. Using an adapted version of the Yes/No Why/How Task (Spunt and Adolphs, 2014), we were able to independently identify the neural basis of attributing the same emotional states to other humans, to non-human primates, and to dogs. In both region-of-interest and whole-brain analyses, we found no evidence for a uniquely human neural substrate for the attribution of emotion. Instead, we found that attributions of emotion to each of the three targets draw on a shared neural mechanism spanning the dorsomedial prefrontal and lateral orbitofrontal cortices.

Thus, to answer our first research question: ‘Yes’, attributions of emotion to both humans and non-human animals draw on the same neural mechanism. Rather than viewing this result as the misapplication of an inferential mechanism for attributing mental states to other humans, we instead view it as the rational consequence of fundamental continuities in both the form and function of human and many non-human animal behaviors (de Waal, 2011; Brecht and Freiwald, 2012). As noted earlier, the human body has much in common with those of other mammalian species, making it natural that many non-human animals would behave in ways that physically resemble human emotional behaviors. A distinct yet related view follows from the observation that, due to common descent and/or common ecology, many non-human animal species produce behaviors with functions that resemble those ascribed to human emotional behaviors, for instance, social communication. And, while it may be the case that we cannot know what it feels like to be a dog or a chimpanzee, it is just as much the case that we cannot know what it feels like to be ‘any’ human other than ourselves.

Our second research question attempted to distinguish the ‘ability’ to attribute emotion to non-human animal behavior from the ‘tendency’ to recruit that ability spontaneously, in the absence of the kinds of explicit verbal cues used to manipulate emotion attribution in the Explicit Task. Thus, before introducing participants to the Explicit Task, we asked them to observe the experimental stimuli while performing a minimally demanding one-back task. In this Implicit Task, we found that human and non-human facial expressions both elicited activity in a distributed network of brain regions, including those regions associated with emotion attribution in the subsequent Explicit Task. Of course, this does not permit the reverse inference that participants were making emotion attributions while performing the one-back task. Rather, it reinforces the conclusion that the similar functional responses observed for human and non-human targets in the Explicit Task were not merely the product of the strong demand characteristics imposed by the experimental protocol. Thus, to answer our second research question: ‘Yes’, when observing non-human animal facial expressions, participants activate this mechanism spontaneously even in the absence of explicit verbal cues to attribute emotion.

Our final research question built on extant research demonstrating that the tendency to attribute emotion to non-human animals is a measurable trait that shows considerable variability in the general population (Waytz et al., 2010). In a set of exploratory analyses of both univariate contrasts in our a priori ROIs and multivariate response pattern similarity across the whole brain, we found evidence consistent with the proposition that individuals who are more dispositionally prone to attribute mental states to non-human animals show more similar neural responses to humans and non-human animals. Thus, to provide a preliminary answer to our third and final research question: ‘Yes’, the extent to which humans and non-human animals elicit similar spontaneous neural responses appears to be somewhat related to individual differences in beliefs about the mental capacities of non-human animal species.

Future studies with larger sample sizes should further investigate individual differences in animal emotion attribution as they appear in both typically developing populations and in psychiatric disorders. Such studies would profit by considering other idiographic variables that influence how individuals perceive and think about non-human animals, such as adhering to a vegetarian or vegan diet for ethical reasons. Doing so may shed light on the mechanisms by which human–animal relationships, in particular, pet ownership, can have positive effects on mental health and symptom improvement in a wide variety of disorders (Matchock, 2015).

Finally, it is well known that fMRI can provide evidence that neural processes are separable but cannot definitively establish that two processes are the same. It is thus possible that emotion attribution for human faces, non-human primate faces and dog faces recruits different neural mechanisms. Although we consider this possibility unlikely, it could arise from separate but intermingled populations of neurons within the same brain regions. Future fMRI studies using multivoxel analyses or adaptation protocols could further test this possibility, as could single-unit recordings from neurosurgical patients (although, with the exception of dmPFC, the regions we found are rarely implanted with electrodes).

We used a novel adaptation of the Yes/No Why/How Task (Spunt and Adolphs, 2014) to examine the neural basis of a common form of anthropomorphism, namely, the attribution of complex emotional states to the facial expressions of non-human animals. By comparing such attributions to those made about the facial expressions of humans, we were able to show that the attribution of emotion to non-human animals relies on the same executive processes already known to be critical for understanding the behavior of other humans in terms of mental states (Spunt et al., 2011; Spunt and Lieberman, 2012a), and is consistent with our recent work suggesting that these processes can be flexibly deployed to understand the reasons for phenomena in non-social domains (Spunt and Adolphs, 2015). Thus, attribution of emotion to non-human animals may represent one of the many possible expansions humans have made on their capacity to reason about the causes of human behavior. More broadly and in the spirit of Darwin’s seminal treatise on human and non-human animal emotion, our findings further encourage a non-anthropocentric view of emotion understanding, one that treats the idea that animals have emotions as no more gratuitous than the idea that humans other than ourselves do.

Supplementary Material

Acknowledgements

The authors would like to acknowledge Mike Tyszka and the Caltech Brain Imaging Center for help with the neuroimaging; the Della Martin Foundation for postdoctoral fellowship support to R.P.S.; Samuel P. and Frances Krown for sponsoring a Summer Undergraduate Research Fellowship to E.E.; and three anonymous reviewers for helpful comments.

Funding

This work was supported in part by the National Institutes of Health (R01 MH080721-03 to R.A.). Additional funding was provided by the Caltech Conte Center for the Neurobiology of Social Decision-Making (P50MH094258-01A1 to R.A.).

Supplementary data

Supplementary data are available at SCAN online.

Conflict of interest. None declared.

References

- Amodio D.M., Frith C.D. (2006). Meeting of minds: the medial frontal cortex and social cognition. Nature Reviews Neuroscience, 7(4), 268–77. [DOI] [PubMed] [Google Scholar]

- Ashburner J. (2007). A fast diffeomorphic image registration algorithm. Neuroimage, 38(1), 95–113. [DOI] [PubMed] [Google Scholar]

- Axelsson E., Ratnakumar A., Arendt M.L., et al. (2013). The genomic signature of dog domestication reveals adaptation to a starch-rich diet. Nature, 495(7441), 360–4. [DOI] [PubMed] [Google Scholar]

- Barker D., Miller D. (1990). Hurricane gilbert: anthropomorphising a natural disaster. Area, 22(2), 107–16. Available: http://www.jstor.org/stable/20002812 [Google Scholar]

- Benjamini Y., Yekutieli D. (2001). The control of the false discovery rate in multiple testing under dependency. Annals of Statistics, 1165–88. Available: http://www.jstor.org/stable/2674075 [Google Scholar]

- Berns G.S., Brooks A.M., Spivak M. (2012). Functional MRI in awake unrestrained dogs. PLoS One, 7(5),e38027.. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Blonder L.X., Smith C.D., Davis C.E., et al. (2004). Regional brain response to faces of humans and dogs. Brain Research Reviews, Cognitive Brain Research, 20(3), 384–94. [DOI] [PubMed] [Google Scholar]

- Brainard D.H. (1997). The psychophysics toolbox. Spatial Vision, 10(4), 433–6. [PubMed] [Google Scholar]

- Brecht M., Freiwald W.A. (2012). The many facets of facial interactions in mammals. Current Opinion in Neurobiology, 22(2), 259–66. [DOI] [PubMed] [Google Scholar]

- Cuaya L.V., Hernández-Pérez R., Concha L. (2016). Our faces in the dog’s brain: functional imaging reveals temporal cortex activation during perception of human faces. PLoS One 11(3), e0149431.. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cullen H., Kanai R., Bahrami B., Rees G. (2014). Individual differences in anthropomorphic attributions and human brain structure. Social Cognitive and Affective Neuroscience, 9, 1276–80. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Darwin C. (1872). The Expression of the Emotions in Man and Animals. London: J. Murray. [Google Scholar]

- de Waal F.B. (2011). What is an animal emotion. Annals of the New York Academy Sciences, 1224, 191–206. [DOI] [PubMed] [Google Scholar]

- Dennett D.C. (1989). The Intentional Stance. Cambridge: The MIT Press. [Google Scholar]

- Diedrichsen J., Shadmehr R. (2005). Detecting and adjusting for artifacts in fmri time series data. Neuroimage, 27(3), 624–34. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Epley N., Waytz A., Cacioppo J.T. (2007). On seeing human: A three-factor theory of anthropomorphism. Psychological Review, 114(4), 864–86. [DOI] [PubMed] [Google Scholar]

- Fiske S.T., Cuddy A.J., Glick P. (2007). Universal dimensions of social cognition: warmth and competence. Trends in Cognitive Science, 11(2), 77–83. [DOI] [PubMed] [Google Scholar]

- Fletcher P.C., Happe F., Frith U., et al. (1995). Other minds in the brain: A functional imaging study of "theory of mind" in story comprehension. Cognition, 57(2), 109–28. [DOI] [PubMed] [Google Scholar]

- Franklin R.G., Nelson A.J., Baker M., et al. (2013). Neural responses to perceiving suffering in humans and animals. Social Neuroscience, 8(3), 217–27. [DOI] [PubMed] [Google Scholar]

- Fussell S.R., Kiesler S., Setlock L.D., Yew V. (2008). How people anthropomorphize robots. Proceedings from the 3rd International conference on human robot interaction (HRI). New York, NY, USA.

- Gallagher H.L., Frith C.D. (2003). Functional imaging of ‘theory of mind’. Trends in Cognitive Sciences, 7(2), 77–83. [DOI] [PubMed] [Google Scholar]

- Goel V., Grafman J., Sadato N., Hallett M. (1995). Modeling other minds. NeuroReport, 6(13), 1741–6. [DOI] [PubMed] [Google Scholar]

- Gray H.M., Gray K., Wegner D.M. (2007). Dimensions of mind perception. Science, 315(5812), 619.. [DOI] [PubMed] [Google Scholar]

- Grinband J., Wager T.D., Lindquist M., Ferrera V.P., Hirsch J. (2008). Detection of time-varying signals in event-related fmri designs. Neuroimage, 43(3), 509–20. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Happé F., Ehlers S., Fletcher P., et al. (1996). ‘theory of mind’ in the brain. Evidence from a pet scan study of asperger syndrome. NeuroReport, 8(1), 197–201. [DOI] [PubMed] [Google Scholar]

- Hayama S., Chang L., Gumus K., King G.R., Ernst T. (2016). Neural correlates for perception of companion animal photographs. Neuropsychologia, 85, 278–86. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Heider F., Simmel M. (1944). An experimental study of apparent behavior. The American Journal of Psychology, 57(2), 243. [Google Scholar]

- Hills A.M. (1995). Empathy and belief in the mental experience of animals. Anthroz Jour Inter Peo Ani, 8(3), 132–42. [Google Scholar]

- Keysers C., Gazzola V. (2014). Dissociating the ability and propensity for empathy. Trends in Cognitive Sciences, 18(4), 163–6. doi:10.1016/j.tics.2013.12.011 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kujala M.V., Kujala J., Carlson S., Hari R. (2012). Dog experts’ brains distinguish socially relevant body postures similarly in dogs and humans. PLoS One, 7(6),e39145.. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Matchock R.L. (2015). Pet ownership and physical health. Current Opinioin in Psychiatry, 28(5), 386–92. [DOI] [PubMed] [Google Scholar]

- Nichols T., Brett M., Andersson J., Wager T., Poline J.B. (2005). Valid conjunction inference with the minimum statistic. Neuroimage, 25(3), 653–60. [DOI] [PubMed] [Google Scholar]

- Parr L.A., Waller B.M., Vick S.J., Bard K.A. (2007). Classifying chimpanzee facial expressions using muscle action. Emotion, 7(1), 172–81. doi:10.1037/1528-3542.7.1.172 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Paul E.S. (2000). Empathy with animals and with humans: are they linked. Anthroz Jour Inter Peo Ani, 13(4), 194–202. [Google Scholar]

- Saxe R., Carey S., Kanwisher N. (2004). Understanding other minds: Linking developmental psychology and functional neuroimaging. Annual Review of Psychology, 55, 87–124. [DOI] [PubMed] [Google Scholar]

- Schirmer A., Seow C.S., Penney T.B. (2013). Humans process dog and human facial affect in similar ways. PLoS One, 8(9),e74591.. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sherwood C.C., Holloway R.L., Gannon P.J., et al. (2003). Neuroanatomical basis of facial expression in monkeys, apes, and humans. Annals of New York Academy of Sciences, 1000, 99–103. Available: http://www.ncbi.nlm.nih.gov/entrez/query.fcgi?cmd=Retrieve&db=PubMed&dopt=Citation&list_uids=14766625 [DOI] [PubMed] [Google Scholar]

- Spunt R.P., Adolphs R. (2014). Validating the why/how contrast for functional MRI studies of theory of mind. NeuroImage, 99, 301–11.

- Spunt R.P., Adolphs R. (2015). Folk explanations of behavior: A specialized use of a domain-general mechanism. Psychological Science, 26(6), 724–36.

- Spunt R.P., Falk E.B., Lieberman M.D. (2010). Dissociable neural systems support retrieval of how and why action knowledge. Psychological Science, 21(11), 1593–8.

- Spunt R.P., Kemmerer D., Adolphs R. (2016). The neural basis of conceptualizing the same action at different levels of abstraction. Social Cognitive and Affective Neuroscience, 11(7), 1141–51.

- Spunt R.P., Lieberman M.D. (2012a). An integrative model of the neural systems supporting the comprehension of observed emotional behavior. NeuroImage, 59(3), 3050–9.

- Spunt R.P., Lieberman M.D. (2012b). Dissociating modality-specific and supramodal neural systems for action understanding. Journal of Neuroscience, 32(10), 3575–83.

- Spunt R.P., Meyer M.L., Lieberman M.D. (2015). The default mode of human brain function primes the intentional stance. Journal of Cognitive Neuroscience, 27(6), 1116–24.

- Spunt R.P., Satpute A.B., Lieberman M.D. (2011). Identifying the what, why, and how of an observed action: an fMRI study of mentalizing and mechanizing during action observation. Journal of Cognitive Neuroscience, 23(1), 63–74.

- Templer D.I., Salter C.A., Dickey S., Baldwin R., Veleber D.M. (1981). The construction of a pet attitude scale. The Psychological Record, 31(3), 343–8. Available: http://psycnet.apa.org/psycinfo/1982-06859-001

- Todorov A., Said C.P., Engell A.D., Oosterhof N.N. (2008). Understanding evaluation of faces on social dimensions. Trends in Cognitive Sciences, 12(12), 455–60.

- Vallacher R.R., Wegner D.M. (1987). What do people think they’re doing? Action identification and human behavior. Psychological Review, 94(1), 3–15.

- Van Overwalle F. (2011). A dissociation between social mentalizing and general reasoning. NeuroImage, 54(2), 1589–99.

- Waytz A., Cacioppo J., Epley N. (2010). Who sees human? The stability and importance of individual differences in anthropomorphism. Perspectives on Psychological Science, 5(3), 219–32.

- Wynne C.D. (2004). The perils of anthropomorphism. Nature, 428(6983), 606.
