Abstract

Objective: The United States Office of the National Coordinator for Health Information Technology sponsored the development of a “high-priority” list of drug-drug interactions (DDIs) to be used for clinical decision support. We assessed current adoption of this list and current alerting practice for these DDIs with regard to alert implementation (presence or absence of an alert) and display (alert appearance as interruptive or passive).

Materials and methods: We conducted evaluations of electronic health records (EHRs) at a convenience sample of health care organizations across the United States using a standardized testing protocol with simulated orders.

Results: Evaluations of 19 systems were conducted at 13 sites using 14 different EHRs. Across systems, 69% of the high-priority DDI pairs produced alerts. Implementation and display of the DDI alerts tested varied between systems, even when the same EHR vendor was used. Across the drug pairs evaluated, implementation and display of DDI alerts differed, ranging from 27% (4/15) to 93% (14/15) implementation.

Discussion: Currently, there is no standard of care covering which DDI alerts to implement or how to display them to providers. Opportunities to improve DDI alerting include using differential displays based on DDI severity, establishing improved lists of clinically significant DDIs, and thoroughly reviewing organizational implementation decisions regarding DDIs.

Conclusion: DDI alerting is clinically important but not standardized. There is significant room for improvement and standardization around evidence-based DDIs.

Keywords: clinical decision support, electronic health records, drug-drug interactions

BACKGROUND AND SIGNIFICANCE

An estimated 1.5 million adverse drug events (ADEs) occur annually in the United States, leading to significant increases in hospital cost, length of stay, and mortality.1–3 Many of these ADEs are caused by drug-drug interactions (DDIs) involving drugs known to interact.4,5 DDIs can never be entirely eliminated, because providers sometimes knowingly co-prescribe interacting drugs when no better alternatives exist. However, harm also occurs when providers prescribe drugs without being aware of their potential interactions or safer alternatives. This threat to patient safety is largely preventable, as many strategies to mitigate the risk of DDIs exist. One such strategy is to implement interruptive point-of-care DDI alerts within computerized provider order entry (CPOE) systems. Previous work has shown that properly developed and implemented alerts have the potential to prevent ADEs and improve patient safety.6–9

While point-of-care alerts have the potential to prevent DDIs, they have had limited success thus far due to a number of factors, including poor implementation within user workflows and out-of-date or poorly tiered (based on interaction severity) DDI knowledge bases (KBs).5,9,10 As a result, these alerts are often ignored or overridden; some studies estimate that as many as 98% of DDI alerts are overridden.11–14 This issue will only become more pervasive as more health care organizations implement DDI alerts to meet meaningful use mandates.15–17

Despite high override rates, recent research suggests that clinicians recognize the importance of such alerts to avoid prescribing “never” combinations.18 However, best practices for reducing the noise from undesired alerts remain elusive. There have been a number of previous attempts to develop a list of high-priority DDIs for implementation,6,18–20 but concerns were raised about establishing and maintaining such a list due to the delicate balance between DDI prevention and alert fatigue.18,19 One such list, developed by Phansalkar et al., was created with sponsorship from the Office of the National Coordinator for Health Information Technology. It contains 15 DDI pairs, approved by a panel as “contraindicated for concurrent use,” that should “always be alerted on” (ie, brought to the prescriber’s attention) as a standard for implementation across electronic health records (EHRs).19 However, the extent to which institutions and EHRs have implemented this list has not been assessed. To better understand current DDI alerting practices, we evaluated the implementation of these 15 DDI pairs in a variety of EHRs and health care organizations across the United States.

OBJECTIVE

We sought to assess current DDI alerting practice for high-priority DDIs with regard to alert implementation (presence or absence of an alert) and display (alert appearance as interruptive or passive). Through this assessment, we addressed the following research questions:

Is there a standard of care regarding high-priority DDI alert implementation that spans institutions and EHRs?

What impact does the EHR vendor have on DDI alert implementation and display?

What impact does the health care organization have on DDI alert implementation and display?

MATERIALS AND METHODS

Many of the DDI pairs on the list by Phansalkar et al.19 are specified at the drug-class level. Thus, we first established a list of specific, orderable medications representing each class-level DDI. We selected the most common medications representing each class-level DDI using the number of prescriptions at Brigham and Women’s Hospital as a proxy for medication commonality. A pharmacist reviewed the list (Supplement 1) to ensure that a significant interaction existed between the specified medications. Based on the pharmacist’s expertise, changes were made when a different medication provided a more representative example of the DDI.
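The drug-selection step can be sketched as picking, for each drug class, the orderable medication with the most prescriptions and then letting a pharmacist's choice take precedence. This is a minimal illustration under assumptions: the function name `pick_representatives`, the class labels, and all prescription counts are hypothetical placeholders, not the study's Brigham and Women's Hospital data (the actual medication list is given in Supplement 1), and the alphabetical tie-break is an arbitrary choice added for determinism.

```python
from __future__ import annotations

from typing import Mapping, Optional


def pick_representatives(
    prescription_counts: Mapping[str, Mapping[str, int]],
    pharmacist_overrides: Optional[Mapping[str, str]] = None,
) -> dict[str, str]:
    """Choose one orderable medication to stand in for each drug class.

    prescription_counts: drug class -> {medication: number of prescriptions},
        used as a proxy for how common each medication is.
    pharmacist_overrides: drug class -> medication that a reviewing pharmacist
        judged a more representative example of the interaction.
    """
    overrides = dict(pharmacist_overrides or {})
    chosen: dict[str, str] = {}
    for drug_class, counts in prescription_counts.items():
        if drug_class in overrides:
            # Pharmacist review takes precedence over prescription volume
            chosen[drug_class] = overrides[drug_class]
        elif counts:
            # Most-prescribed medication; ties broken alphabetically for determinism
            chosen[drug_class] = min(counts, key=lambda med: (-counts[med], med))
    return chosen


if __name__ == "__main__":
    # Hypothetical counts, for illustration only
    counts = {
        "SSRIs": {"sertraline": 900, "citalopram": 850, "fluoxetine": 400},
        "MAOIs": {"selegiline": 12, "phenelzine": 3},
    }
    print(pick_representatives(counts))
    print(pick_representatives(counts, {"MAOIs": "phenelzine"}))
```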

To obtain a set of EHRs in which to test the DDIs, we contacted a convenience sample of medical informaticians at medical centers across the United States. Various internally and commercially built EHRs were used at these organizations. Consenting informaticians participated in a 30-minute web-based evaluation of their system, during which they placed orders for each of the 15 medication pairs on a test patient. To gather baseline characteristics about participants’ EHRs as implemented, we requested the following pieces of information:

Which EHR vendor and version are you using for your CPOE?

How long has this system been in use at your institution?

Are there different “levels” of alerts within your CPOE system? If so, what are the levels?

At what severity level are DDI alerts visible to users in your CPOE system?

What KB do you use for DDI alerting?

What (if any) changes have you made to your KB?

Prior to the evaluation, we asked participants to prepare an adult test patient with no active medications on their medications list within their production environment. We did not specify additional patient characteristics. When the production environment could not be used for the evaluation, we used a test environment that closely mirrored the production environment. During the evaluation, we asked participants to share their screens so that the ordering process within their CPOE systems could be observed. We instructed each participant to order the medications representing each of the 15 DDIs on the previously prepared test patient. Participants completed the ordering process to the point of alert appearance; at some sites this was upon placing the order, and at others it was upon signing and completing the order. If a medication was not available at an institution, a different medication within the same class was substituted. If all drugs within a drug class were unavailable for a particular DDI, the participant skipped that DDI.
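The substitution rule in this protocol amounts to a short fallback search. The sketch below is one hedged reading of it: the helper names (`choose_orderable`, `plan_pair`), the ordering of same-class alternatives, and the formulary contents are illustrative assumptions rather than details reported by the study.

```python
from __future__ import annotations

from typing import AbstractSet, Optional, Sequence, Tuple

# One side of a DDI pair: (preferred medication, same-class alternatives in order of preference)
Side = Tuple[str, Sequence[str]]


def choose_orderable(side: Side, formulary: AbstractSet[str]) -> Optional[str]:
    """Return the medication to order for one side of a pair, or None if its class is unavailable."""
    preferred, alternatives = side
    for med in (preferred, *alternatives):
        if med in formulary:
            return med
    return None


def plan_pair(a: Side, b: Side, formulary: AbstractSet[str]) -> Optional[Tuple[str, str]]:
    """Medications to order on the test patient for one DDI, or None if the DDI is skipped."""
    med_a = choose_orderable(a, formulary)
    med_b = choose_orderable(b, formulary)
    if med_a is None or med_b is None:
        return None  # every drug in one class is unavailable at this site: skip the DDI
    return med_a, med_b


if __name__ == "__main__":
    site_formulary = {"sertraline", "phenelzine"}  # hypothetical site formulary
    print(plan_pair(("fluoxetine", ["sertraline"]), ("selegiline", ["phenelzine"]), site_formulary))
    print(plan_pair(("fluoxetine", ["sertraline"]), ("selegiline", []), site_formulary))
```

Returning `None` for the whole pair, rather than ordering only one side, reflects the rule that a DDI was skipped when every drug in one of its classes was unavailable.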

The following information was recorded for each DDI pair:

Presence of an alert: Was there some indication to the user that the 2 drugs interacted?

Alert severity level: If an alert was presented, was the interaction described as mild, moderate, severe, etc.?

Alert display: If an alert was presented, was the alert interruptive or passive with respect to the user’s workflow?

Passive alert appearance: If a passive alert was presented, was it displayed as a symbol such as an icon, or was it displayed as a portion of informational text indicating the interaction?

Override capability: If an interruptive alert was presented, was the user able to complete the order for the medication, or was there a hard stop?

Override reason requirement: If an interruptive alert was presented, was the user forced to enter a reason for proceeding with the order?
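One way to capture these fields for each tested pair is a small record type that also rejects combinations the protocol rules out (for example, an override setting on a passive alert). The class and field names below, and the four display categories, are hypothetical choices for illustration, not the study's data collection instrument.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Display(Enum):
    NONE = "no alert"
    PASSIVE_ICON = "passive (icon)"
    PASSIVE_TEXT = "passive (text)"
    INTERRUPTIVE = "interruptive"


@dataclass(frozen=True)
class DDIObservation:
    """What was recorded for one DDI pair in one system (hypothetical schema)."""

    system_id: int
    ddi_pair: str
    display: Display
    severity_label: Optional[str] = None  # wording shown by the system, e.g. "severe"
    hard_stop: bool = False  # True if the order could not be completed
    override_reason_required: bool = False  # True if a reason had to be entered

    def __post_init__(self) -> None:
        # Override fields are only meaningful for interruptive alerts
        if self.display is not Display.INTERRUPTIVE and (
            self.hard_stop or self.override_reason_required
        ):
            raise ValueError("override fields apply only to interruptive alerts")
        # Severity is only recorded when some alert was presented
        if self.display is Display.NONE and self.severity_label is not None:
            raise ValueError("no severity can be recorded when no alert was shown")

    @property
    def alert_present(self) -> bool:
        return self.display is not Display.NONE


if __name__ == "__main__":
    obs = DDIObservation(1, "SSRIs + MAOIs", Display.INTERRUPTIVE, "severe",
                         override_reason_required=True)
    print(obs.alert_present)
```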

After testing the 15 DDIs, the researchers asked the participants whether they were surprised by anything that occurred during the evaluation. Responses to all questions were summarized and compared across institutions and EHR systems. This study was reviewed and approved by the Partners Healthcare Human Subjects Review Committee.

RESULTS

We contacted 21 medical informaticians at 17 institutions across the United States. Of those, 19 responded and 17 (81%) completed the evaluation of their CPOE system. In addition, 2 freely available EHRs were evaluated (Table 1).

Table 1.

Summary of sites completing our study

| System | Version | Site | Location | Setting | Environment | KB | Changes to KB? |
|---|---|---|---|---|---|---|---|
| Epic | 2012 | Baylor College of Medicine | Houston, TX | Outpatient | Production | Medi-Span | No |
| Epic | 2014 | Brigham and Women's Hospital | Boston, MA | Inpatient/Outpatient | Production | First DataBank | Yes |
| Epic | 2014 | Brigham and Women's Hospital | Boston, MA | Inpatient/Outpatient | Test | First DataBank | Yes |
| LMR | June 2015 | Brigham and Women's Hospital | Boston, MA | Inpatient/Outpatient | Production | Custom | N/A |
| CPRS | 30A | Veterans Affairs Medical Center | Houston, TX | Inpatient/Outpatient | Production | Custom | N/A |
| Meditech | 5.6.6 | HCA Gulf Coast Division | Houston, TX | Inpatient | Production | First DataBank | No |
| Allscripts Sunrise | 6.1 SU7 PR 21 | Holy Spirit Hospital - A Geisinger Affiliate | Camp Hill, PA | Inpatient | Production | Multum | Yes |
| Epic | 2014 | Kaiser Permanente Northwest | Portland, OR | Inpatient/Outpatient | Production | First DataBank | Yes |
| Cerner | 2012.1.34 | Memorial Hermann | Houston, TX | Inpatient | Test | Multum | Yes |
| eClinical Works | 10.0.80 | Memorial Hermann | Houston, TX | Outpatient | Test | Medi-Span | No |
| GE CPS | 12 | Physicians at Sugar Creek - An affiliate of Memorial Hermann | Houston, TX | Outpatient | Production | Medi-Span | No |
| Epic | 2014 | The Ohio State University Medical Center | Columbus, OH | Inpatient/Outpatient | Test | Medi-Span | Yes |
| Allscripts Enterprise | 11.4.1 | The University of Texas (Physicians) | Houston, TX | Outpatient | Production | Medi-Span | No |
| Wiz Order | 2.0 | Vanderbilt University Medical Center | Nashville, TN | Inpatient | Test | Custom | N/A |
| Epic | 2014 | Weill Cornell Medical College | New York, NY | Inpatient/Outpatient | Production | Medi-Span | Yes |
| Athena Clinicals | 16.2 | Women's Health Specialists of St Louis | St Louis, MO | Outpatient | Production | First DataBank | No |
| NextGen | 5.8.1 | WVP Health Authority | Salem, OR | Outpatient | Production | First DataBank | No |
| Dr. Chrono | Asclepius | N/A; Self-evaluation | Web-based | Self-evaluation | Production | Lexi-Comp | No |
| Practice Fusion | 3.6.1.32.28 | N/A; Self-evaluation | Web-based | Self-evaluation | Production | Medi-Span | No |

N/A: not applicable.

System Information

We tested 19 EHR implementations at 13 sites using 14 different EHR systems. The systems tested, along with the associated version number, institution, setting, environment, and DDI KB in use, are displayed in Table 1. Multiple instances of Epic were tested because it is widely used in academic and large community settings.21 A majority of the systems (74%) allowed for testing in their production environment.22 Different DDI alert severity levels were available in 17 of 19 systems (89%). However, 4 systems (21%) only showed the most severe alerts to users, while 8 (42%) displayed all alert severity levels to users.
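The percentages in this paragraph follow from simple counts. As a consistency check, the sketch below recomputes them: the system and environment columns are transcribed from Table 1, while the severity-level counts (17, 4, and 8 systems) come from the text because Table 1 does not report them per system. Rounding to whole percentages is assumed to match the reporting convention.

```python
# (system, environment) for each row of Table 1, in table order
TABLE_1 = [
    ("Epic", "Production"), ("Epic", "Production"), ("Epic", "Test"),
    ("LMR", "Production"), ("CPRS", "Production"), ("Meditech", "Production"),
    ("Allscripts Sunrise", "Production"), ("Epic", "Production"),
    ("Cerner", "Test"), ("eClinical Works", "Test"), ("GE CPS", "Production"),
    ("Epic", "Test"), ("Allscripts Enterprise", "Production"),
    ("Wiz Order", "Test"), ("Epic", "Production"),
    ("Athena Clinicals", "Production"), ("NextGen", "Production"),
    ("Dr. Chrono", "Production"), ("Practice Fusion", "Production"),
]


def pct(count: int, total: int) -> int:
    """Percentage rounded to the nearest whole number."""
    return round(100 * count / total)


n_systems = len(TABLE_1)
n_ehrs = len({name for name, _ in TABLE_1})
n_epic = sum(name == "Epic" for name, _ in TABLE_1)
n_production = sum(env == "Production" for _, env in TABLE_1)

print(n_systems, n_ehrs, n_epic)  # 19 14 6
print(pct(n_production, n_systems))  # 74 (tested in production)
print(pct(17, n_systems), pct(4, n_systems), pct(8, n_systems))  # 89 21 42
```

The same helper also reproduces the 81% completion rate reported above (`pct(17, 21)`).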

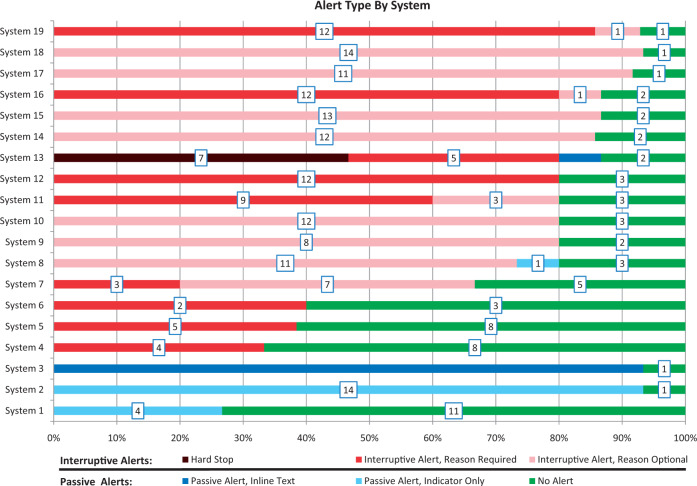

DDI alerts by system

Figure 1 shows the number and type of alerts by system. No system alerted on all of the DDI pairs tested, though 1 system alerted on 14 of the 15 pairs (93%). Across all systems, 58% of the DDI pairs produced interruptive alerts, while an additional 12% produced passive alerts. However, alert display varied greatly across systems: in 1 system all alerts were interruptive, while in another all alerts were passive. Only 1 system (system 13) used hard stops (alerts that could not be overridden), applying them to 7 of the DDIs evaluated.
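A per-system tally of this kind, and the pooled shares of interruptive and passive alerts, can be computed as follows. This is a sketch under stated assumptions: the observation layout and display labels are hypothetical, and pairs that could not be tested at a site (recorded as `None`) are assumed to be excluded from the denominator, which the text does not specify.

```python
from __future__ import annotations

from collections import Counter
from typing import Dict, Optional, Tuple

# system id -> {DDI pair: "interruptive", "passive", "none", or None if the pair was not testable}
Observations = Dict[str, Dict[str, Optional[str]]]


def summarize(observations: Observations) -> Tuple[Dict[str, Counter], Dict[str, int]]:
    """Per-system counts of alert types, plus pooled percentages across all tested pairs."""
    per_system: Dict[str, Counter] = {}
    pooled: Counter = Counter()
    for system, results in observations.items():
        tested = [display for display in results.values() if display is not None]
        per_system[system] = Counter(tested)
        pooled.update(tested)
    total = sum(pooled.values())
    shares = {display: round(100 * n / total) for display, n in pooled.items()} if total else {}
    return per_system, shares


if __name__ == "__main__":
    # Hypothetical results for two systems
    example = {
        "A": {"d1": "interruptive", "d2": "passive", "d3": "none"},
        "B": {"d1": "interruptive", "d2": "interruptive", "d3": None},
    }
    print(summarize(example))
```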

Figure 1.

DDI alert type by system, with number of DDIs associated with each alert type indicated in boxes

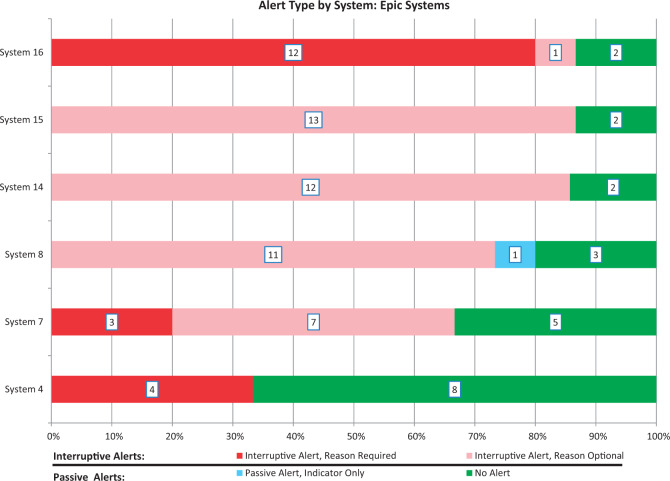

DDI alerts in Epic systems

To assess intra-EHR variability, we completed this DDI evaluation in 6 Epic systems at 5 different sites. Although each of these 6 systems used the same EHR vendor (2 different versions, 2 different DDI KBs), considerable diversity was observed in the distribution of implemented alerts and display modes (Figure 2). Notably, we evaluated both the production and test instances of an Epic system at 1 site and found that they did not yield identical results.22
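Comparing systems in this way can be made explicit. The sketch below, using hypothetical system identifiers and alert labels, lists pairs of systems with the same alerting profile, either by distribution of alert types (counts only) or, more strictly, by identical alert type for every DDI pair. It is an illustration, not the study's analysis.

```python
from __future__ import annotations

from collections import Counter
from itertools import combinations
from typing import Dict, List, Tuple


def matching_pairs(systems: Dict[str, Dict[str, str]], per_ddi: bool = False) -> List[Tuple[str, str]]:
    """Pairs of systems that produced the same alerting profile.

    per_ddi=False compares only how many DDIs fell into each alert type (the
    distribution); per_ddi=True also requires the same alert type for every DDI.
    """
    def profile(results: Dict[str, str]):
        return dict(results) if per_ddi else Counter(results.values())

    profiles = {sid: profile(results) for sid, results in systems.items()}
    return [(a, b) for a, b in combinations(sorted(profiles), 2) if profiles[a] == profiles[b]]


if __name__ == "__main__":
    # Hypothetical: a production and a test instance with swapped alert types
    example = {
        "prod": {"d1": "interruptive", "d2": "passive"},
        "test": {"d1": "passive", "d2": "interruptive"},
        "other": {"d1": "interruptive", "d2": "none"},
    }
    print(matching_pairs(example), matching_pairs(example, per_ddi=True))
```

The strict comparison matters because two systems can share a distribution while alerting differently on individual pairs.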

Figure 2.

DDI alert types observed in Epic systems, with number of DDIs associated with each alert type indicated in boxes

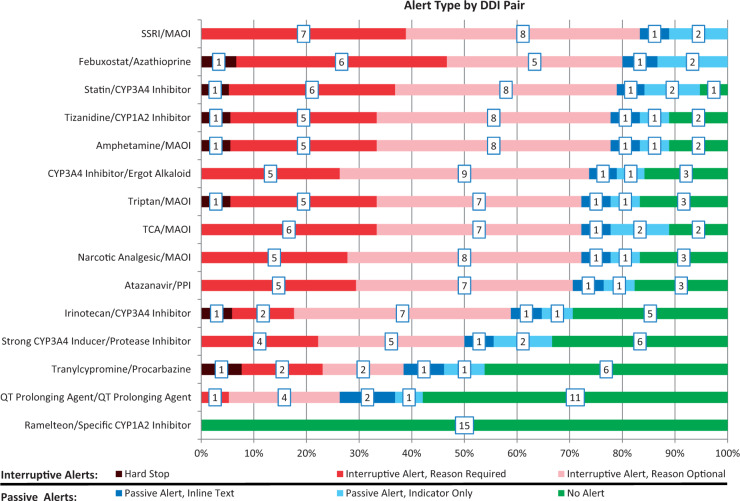

Alert type by DDI pair

Across the drug pairs, the number of systems that produced an alert varied considerably. Alerting ranged from near universal (for the DDI between selective serotonin reuptake inhibitors and monoamine oxidase inhibitors) to nonexistent (for the DDI between ramelteon and strong CYP1A2 inhibitors). In addition, the type of alert generated differed markedly across the drug pairs (Figure 3).
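The per-pair view can be computed by counting, for each DDI pair, the systems that alerted out of the systems where the pair could be evaluated. In this hypothetical sketch, the `None` convention for untestable pairs and the `"none"` label for an absent alert are assumptions; the second helper identifies pairs that alerted everywhere they were evaluated, the criterion used in the Discussion.

```python
from __future__ import annotations

from typing import Dict, List, Optional, Tuple


def alert_rate_by_ddi(observations: Dict[str, Dict[str, Optional[str]]]) -> Dict[str, Tuple[int, int]]:
    """DDI pair -> (systems that alerted, systems where the pair could be evaluated)."""
    tallies: Dict[str, Tuple[int, int]] = {}
    for results in observations.values():
        for ddi, display in results.items():
            if display is None:
                continue  # pair skipped at this system, so it is left out of the denominator
            alerted, evaluated = tallies.get(ddi, (0, 0))
            tallies[ddi] = (alerted + int(display != "none"), evaluated + 1)
    return tallies


def always_alerted(tallies: Dict[str, Tuple[int, int]]) -> List[str]:
    """Pairs that produced an alert in every system where they could be evaluated."""
    return sorted(ddi for ddi, (alerted, evaluated) in tallies.items()
                  if evaluated and alerted == evaluated)


if __name__ == "__main__":
    # Hypothetical results for two systems
    example = {
        "A": {"x": "interruptive", "y": "none", "z": "passive"},
        "B": {"x": "passive", "y": "none", "z": None},
    }
    tallies = alert_rate_by_ddi(example)
    print(tallies, always_alerted(tallies))
```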

Figure 3.

Alert type by DDI pair, with number of systems associated with each alert type in boxes

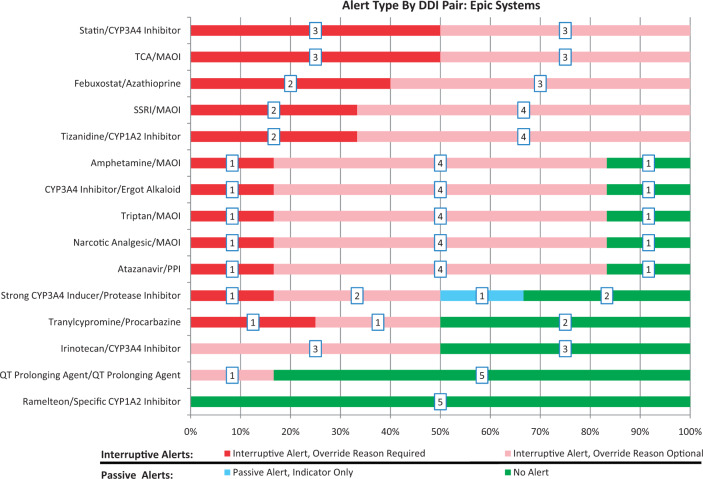

Alert type by DDI pair in Epic systems

When restricting our examination of alert type by DDI pair to systems with an Epic EHR, a wide variety of alert types remained. A common difference in alert type between systems was whether an override reason was required for interruptive alerts. However, considerable variation was also seen in whether an alert was present at all (Figure 4).

Figure 4.

Alert type by DDI pair at Epic sites, with number of systems associated with each alert type in boxes

DISCUSSION

EHR vendors and DDI KBs differed across systems, but nearly all systems had different severity levels of DDI alerts available. Across institutions, the implementation and display of the DDI alerts tested varied widely, even between institutions using the same EHR vendor. Similarly, across DDI pairs, the implementation and display of DDI alerts were highly diverse, ranging from sporadic to near universal implementation. Variation in alert implementation extended to institutions using the same EHR, although the display type of DDI alerts was largely constant within each of these institutions.

A standard of care for DDI alerts?

The high-priority list of DDIs upon which this study was based was proposed as a standard for implementation across all EHRs.19 While these DDIs may indeed be high priority, our results suggest that they are not consistently implemented and that there is, at present, no consistent standard of care with regard to implementation of DDIs as alerts or the manner in which the alerts should be displayed. Only 2 of the 15 DDIs tested produced alerts in every system where the DDI could be evaluated. For each DDI pair, there was considerable variation in the display of the alert. For all DDI pairs, with the exception of ramelteon and strong CYP1A2 inhibitors (for which no system had an alert enabled), there was at least 1 system with a passive alert and 1 system with an interruptive alert (Figure 3). These findings demonstrate the lack of a current standard for DDI alerting. This could be due, at least in part, to variations in the KBs themselves,23,24 as well as local considerations such as formulary selection, modifications to the KBs, and configuration of the EHR alerting display.

The range of DDI alerting behavior observed is well illustrated by the use of hard stop alerts across systems. While there is controversy over whether some alerts should be implemented as hard stops,25,26 our results suggest that hard stops are rarely employed: only 1 of 19 systems tested had a hard stop enabled for any of the DDIs tested. Moreover, for 6 of the 7 DDIs that produced hard stops in system 13, at least 1 other system had no alert enabled. In other words, for 6 of the 15 DDI pairs tested, observed alerts ranged from hard stops to the absence of alerts altogether. These opposing findings further exemplify the lack of agreement around DDI alerting.
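The hard-stop comparison reduces to a set intersection: pairs that are a hard stop somewhere and produce no alert somewhere else. A minimal sketch with hypothetical labels (`"hard stop"`, `"none"`) and made-up system identifiers follows.

```python
from __future__ import annotations

from typing import Dict, List, Optional


def hard_stop_to_none(observations: Dict[str, Dict[str, Optional[str]]]) -> List[str]:
    """DDI pairs that are a hard stop in at least one system and produce no alert in another."""
    hard_stop, no_alert = set(), set()
    for results in observations.values():
        for ddi, display in results.items():
            if display == "hard stop":
                hard_stop.add(ddi)
            elif display == "none":
                no_alert.add(ddi)
    return sorted(hard_stop & no_alert)


if __name__ == "__main__":
    # Hypothetical results; None marks a pair that could not be tested
    example = {
        "s13": {"a": "hard stop", "b": "hard stop", "c": "passive"},
        "s1": {"a": "none", "b": "interruptive", "c": "none"},
        "s2": {"a": "passive", "b": None, "c": "none"},
    }
    print(hard_stop_to_none(example))
```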

While current results indicate that the choice of which DDI alerts to implement and how to display them is discretionary, there should be a standard for DDI alerts across institutions. In other words, the DDIs producing alerts, and the display of these alerts, should be constant across health care institutions. Much work remains to achieve this standard, especially given the complexity of the problem.26–29 Of course, effective DDI alerting is not dependent simply on content. For such alerting to be optimally effective, clinical workflows, EHR user interfaces, and data quality (particularly accuracy and currency of medication lists) must be improved.

The role of the EHR vendor

While the EHR vendor may be the typical target of complaints about unnecessary or irrelevant DDI alerts, our results suggest that the true conundrum may lie with the configuration and implementation decisions of the institution. Looking specifically at Epic systems, no 2 Epic implementations (including the 2 environments at Brigham and Women’s Hospital) produced the same distribution of alerts (Figure 2). In addition, for 10 of the 15 DDI pairs tested, there was at least 1 Epic site that did not have an alert enabled (Figure 4). These findings are consistent with previous studies21 suggesting that the presence or absence of an alert is determined largely by institutional decisions and the DDI KB in place, both of which varied across the Epic systems. Possible limitations of vendor-supplied defaults likely also play a role in the issue of DDI over- and under-alerting, but our findings suggest that health care organizations play a key role in determining which DDIs are shown. Thus, the dialogue surrounding DDI alert improvement needs to include the health care organization in addition to EHR vendors and KB developers.30

Severity tiering for DDI alerts

Different severity tiers of alerts were available in 17 of the 19 EHR systems tested. Given the potential for tiering to increase compliance with DDI alerts,26,29 this is likely beneficial for users. However, within individual systems the alert display types visible to end users varied very little (Figure 1). In other words, although multiple severity tiers of alerts were often available, for the most part only 1 such tier was actually active. Indeed, 13 of the 19 systems demonstrated only 1 alert display type across the 15 DDI pairs tested. These results agree with findings that many institutions intentionally limit DDI alerting to the major or severe category in order to reduce alert fatigue.31–33 Reducing alert fatigue is necessary, but this decision must be weighed against the safety risk that arises when a significant DDI alert fails to fire and the patient is subsequently harmed.34 Moreover, this effort appears to have eliminated differentiation among display types of DDI alerts. Greater differentiation in the display of alerts for DDIs with differing levels of severity or likelihood may therefore help to improve compliance with these alerts. Doing so requires solving the as-yet unsolved problem of balancing these factors to reduce alert fatigue, avoid false negatives, and provide a smooth clinician workflow.35
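To illustrate the differential display discussed here, the sketch below maps hypothetical severity tiers to distinct display modes and shows how suppressing the lower tiers collapses what users see to a single display type. The three tiers, the mapping, and the function names are assumptions for illustration; actual tier names and counts differ across DDI KBs, and this is not a recommended configuration.

```python
from __future__ import annotations

from enum import IntEnum
from typing import Iterable, Set


class Severity(IntEnum):
    MINOR = 1
    MODERATE = 2
    MAJOR = 3


# Hypothetical policy: each tier gets its own display mode rather than one mode for all alerts
DISPLAY_BY_SEVERITY = {
    Severity.MINOR: "on request",
    Severity.MODERATE: "passive",
    Severity.MAJOR: "interruptive",
}


def display_for(severity: Severity, lowest_shown: Severity = Severity.MINOR) -> str:
    """Display mode for one alert; tiers below lowest_shown are suppressed entirely."""
    if severity < lowest_shown:
        return "suppressed"
    return DISPLAY_BY_SEVERITY[severity]


def display_types_seen(severities: Iterable[Severity],
                       lowest_shown: Severity = Severity.MINOR) -> Set[str]:
    """Distinct display modes an end user would actually encounter."""
    return {display_for(s, lowest_shown) for s in severities} - {"suppressed"}


if __name__ == "__main__":
    print(sorted(display_types_seen(list(Severity))))
    print(sorted(display_types_seen(list(Severity), lowest_shown=Severity.MAJOR)))
```

With `lowest_shown=Severity.MAJOR`, every alert a user encounters is interruptive, mirroring the single-display-type pattern observed in most systems; keeping lower tiers visible in passive or on-request form is one way to preserve differentiation, although its effect on alert fatigue would need to be evaluated.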

Recommendations

Payne et al.36 previously identified a series of best practices for DDI alerts. These recommendations offer practical, actionable usability suggestions related to 7 elements of DDI alerts, which could help to reduce interinstitutional variability and improve provider compliance, thereby improving patient safety around DDIs. We endorse these recommendations.

Based on the results of the present investigation, we have identified 2 additional recommendations for improved DDI alert implementation across institutions. First and foremost, given the significant variability in alert content and implementation approaches observed, institutions should carefully review their DDI alerting approaches. In particular, institutions should assess their DDI KBs for clinical significance. We observed wide heterogeneity in the implementation of even these high-priority DDI alerts. It is important that institutions review their DDI alerts to ensure that the proper interactions generate alerts. Institutions should also review their approach to tiering, how they decide which alerts are displayed interruptively, passively, or only on request, and when and how override reasons are required.

Second, at a broader level, we recommend revamping the national and international alerting strategy for DDIs. The lack of a standard of care for even high-priority DDIs is concerning. We therefore recommend creating an officially approved, standardized DDI KB and considering a safe harbor or other legal protection for sites that implement that KB. Because a standardized DDI alerting methodology will require knowledge and input from pharmacists, physicians, and informaticians, we recommend that a national or international committee of these stakeholders be convened to develop it.

Limitations

This study has a number of important limitations. We used a convenience sample of EHR systems when evaluating the list of high-priority DDIs. While we made an effort to select representative institutions using common EHR systems, it is unclear how well these conclusions would generalize to other sites and EHR systems. We tested drug pairs from a single list of high-priority DDIs. This list has not been publicly accepted as a standard, nor is its implementation financially incentivized. Other lists of high-priority DDIs exist and may generate different conclusions regarding DDI alert implementation and display. In future work, it would be useful to study other lists, particularly the pediatric DDI list,20 which could be tested in pediatric settings. When collecting information on the EHR systems evaluated, we collected the DDI KB in place, but not the version number of that KB. The version of the DDI KB may also play a role in the implementation and display of DDI alerts. When evaluating the role of the EHR vendor, we only tested multiple instances of the Epic system. It is unclear how well these conclusions would extend to other EHR vendors.

CONCLUSION

We present an assessment of the implementation and display of previously described high-priority DDI alerts across various institutions and EHR systems. There does not appear to be a standard of alerting across institutions or across individual DDI pairs, even for a single widely used EHR vendor’s product. Despite widely available severity tiering options, there is little variation in the alert types shown to end users at an institution. Much work is required to reach a true standard of alerting and to better utilize tiering options. Developing a standard that covers which DDIs to alert on and how to display those alerts will likely require the collaboration of clinical informaticians, pharmacists, and physicians. This standard will have to be vetted at health care organizations across the country and implemented as a standard by EHR vendors. The problem of DDIs is far from solved and will require additional effort to ensure patient safety.


CONTRIBUTORS

Mr McEvoy assisted in the design of this research, coordinated the collection of data across sites, assisted in the analysis and interpretation of data, and wrote the initial draft of the manuscript with supervision from Dr Wright. Dr Sittig, Dr McCoy, and Dr Wright coordinated the design of this research and provided input for the primary analysis and interpretation of data for this manuscript. Dr Amato assisted in the design and analysis of this work, particularly around the selection of drugs for use in this study, as well as the pharmacological implications of this work. Ms Hickman, Ms Aaron, and Ms Ai assisted in the collection of data across sites. Dr Bauer, Dr Fraser, Mr Harper, Ms Kennemer, Dr Krall, Dr Lehmann, Dr Malhotra, Dr Murphy, Ms O’Kelley, Dr Samal, Dr Schreiber, Dr Singh, Dr Thomas, Dr Vartian, and Dr Westmorland facilitated the collection of data from each of their respective sites and made substantial contributions to the interpretation of the data. All authors provided critical revisions to the manuscript, approved the final version of the manuscript, and take responsibility for the integrity and accuracy of this work.

FUNDING

This work was supported by National Library of Medicine of the National Institutes of Health grant number R01LM011966. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

COMPETING INTERESTS

Dr Singh and Dr Murphy are partially supported by the Houston Veterans Administration Health Services Research and Development Center for Innovations in Quality, Effectiveness, and Safety (CIN 13–413). Dr Schreiber owns a few shares of Allscripts stock. Dr Krall is an Epic Emeritus Physician and has participated in limited funding engagements through the Epic Emeritus Program (2014–2016). The authors have no other competing interests or funding sources to disclose. These funding sources and competing interests did not impact the decision to participate in this study, the content of the research, the content of the manuscript, or the decision to submit the manuscript for publication.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

REFERENCES

- 1. Chiatti C, Bustacchini S, Furneri G, et al. The economic burden of inappropriate drug prescribing, lack of adherence and compliance, adverse drug events in older people: a systematic review. Drug Saf 2012;35(Suppl 1):73–87.

- 2. Wu C, Bell CM, Wodchis WP. Incidence and economic burden of adverse drug reactions among elderly patients in Ontario emergency departments: a retrospective study. Drug Saf 2012;35(9):769–781.

- 3. Makary MA, Daniel M. Medical error: the third leading cause of death in the US. BMJ 2016;353:i2139.

- 4. Aspden P, Wolcott J, Bootman JL, Cronenwett LR, editors. Preventing medication errors: quality chasm series. National Academies Press; 2006 Dec 11.

- 5. Lazarou J, Pomeranz BH, Corey PN. Incidence of adverse drug reactions in hospitalized patients: a meta-analysis of prospective studies. JAMA 1998;279(15):1200–1205.

- 6. Classen DC, Phansalkar S, Bates DW. Critical drug-drug interactions for use in electronic health records systems with computerized physician order entry: review of leading approaches. J Patient Saf 2011;7(2):61–65.

- 7. Nuckols TK, Smith-Spangler C, Morton SC, et al. The effectiveness of computerized order entry at reducing preventable adverse drug events and medication errors in hospital settings: a systematic review and meta-analysis. Syst Rev 2014;3(1):1.

- 8. Bates DW, Teich JM, Lee J, et al. The impact of computerized physician order entry on medication error prevention. J Am Med Inform Assoc 1999;6(4):313–321.

- 9. Horsky J, Phansalkar S, Desai A, Bell D, Middleton B. Design of decision support interventions for medication prescribing. Int J Med Inform 2013;82(6):492–503.

- 10. McCoy AB, Waitman LR, Lewis JB, et al. A framework for evaluating the appropriateness of clinical decision support alerts and responses. J Am Med Inform Assoc 2012;19(3):346–352.

- 11. Weingart SN, Simchowitz B, Shiman L, et al. Clinicians' assessments of electronic medication safety alerts in ambulatory care. Arch Intern Med 2009;169(17):1627–1632.

- 12. Shah NR, Seger AC, Seger DL, et al. Improving acceptance of computerized prescribing alerts in ambulatory care. J Am Med Inform Assoc 2006;13(1):5–11.

- 13. Bryant A, Fletcher G, Payne T. Drug interaction alert override rates in the Meaningful Use era: no evidence of progress. Appl Clin Inform 2013;5(3):802–813.

- 14. van der Sijs H. Drug safety alerting in computerized physician order entry: unraveling and counteracting alert fatigue. 2009. Available at: http://repub.eur.nl/pub/16936.

- 15. Drug Interaction Checks. 2014. Available at: https://www.healthit.gov/providers-professionals/achieve-meaningful-use/core-measures/drug-interaction-check. Accessed December 17, 2015.

- 16. Classen DC, Bates DW. Finding the meaning in meaningful use. N Engl J Med 2011;365(9):855–858.

- 17. Weingart SN, Seger AC, Feola N, Heffernan J, Schiff G, Isaac T. Electronic drug interaction alerts in ambulatory care. Drug Saf 2011;34(7):587–593.

- 18. Slight SP, Berner ES, Galanter W, et al. Meaningful use of electronic health records: experiences from the field and future opportunities. JMIR Med Inform 2015;3(3):e30.

- 19. Phansalkar S, Desai AA, Bell D, et al. High-priority drug-drug interactions for use in electronic health records. J Am Med Inform Assoc 2012;19(5):735–743.

- 20. Harper MB, Longhurst CA, McGuire TL, Tarrago R, Desai BR, Patterson A. Core drug-drug interaction alerts for inclusion in pediatric electronic health records with computerized prescriber order entry. J Patient Saf 2014;10(1):59–63.

- 21. Koppel R, Lehmann CU. Implications of an emerging EHR monoculture for hospitals and healthcare systems. J Am Med Inform Assoc 2015;22(2):465–471.

- 22. Wright A, Aaron S, Sittig DF. Testing electronic health records in the “production” environment: an essential step in the journey to a safe and effective healthcare system. J Am Med Inform Assoc 2016. doi:10.1093/jamia/ocw039.

- 23. Abarca J, Malone DC, Armstrong EP, et al. Concordance of severity ratings provided in four drug interaction compendia. J Am Pharm Assoc (2003) 2004;44(2):136–141.

- 24. Ramos G, Guaraldo L, Japiassú A, Bozza F. Comparison of two databases to detect potential drug-drug interactions between prescriptions of HIV/AIDS patients in critical care. J Clin Pharm Ther 2015;40(1):63–67.

- 25. Strom BL, Schinnar R, Aberra F, et al. Unintended effects of a computerized physician order entry nearly hard-stop alert to prevent a drug interaction: a randomized controlled trial. Arch Intern Med 2010;170(17):1578–1583.

- 26. Ammenwerth E, Hackl WO, Riedmann D, Jung M. Contextualization of automatic alerts during electronic prescription: researchers' and users' opinions on useful context factors. Stud Health Technol Inform 2010;169:920–924.

- 27. Sax PE. EHR and Drug Prescribing Warnings: The Good, the Bad, the Ugly. September 20, 2015. Available at: http://blogs.jwatch.org/hiv-id-observations/index.php/ehr-and-drug-prescribing-warnings-the-good-the-bad-the-ugly/2015/09/20/. Accessed December 17, 2015.

- 28. Patapovas A, Dormann H, Sedlmayr B, et al. Medication safety and knowledge-based functions: a stepwise approach against information overload. Br J Clin Pharmacol 2013;76(S1):14–24.

- 29. Paterno MD, Maviglia SM, Gorman PN, et al. Tiering drug-drug interaction alerts by severity increases compliance rates. J Am Med Inform Assoc 2009;16(1):40–46.

- 30. Ash JS, Sittig DF, McMullen CK, et al. Studying the vendor perspective on clinical decision support. AMIA Annu Symp Proc 2011;2011:80–87.

- 31. Ong MS, Coiera E. Evaluating the effectiveness of clinical alerts: a signal detection approach. AMIA Annu Symp Proc 2011;2011:1036–1044.

- 32. Gaba DM, Howard SK. Fatigue among clinicians and the safety of patients. N Engl J Med 2002;347(16):1249–1255.

- 33. Smithburger PL, Buckley MS, Bejian S, Burenheide K, Kane-Gill SL. A critical evaluation of clinical decision support for the detection of drug-drug interactions. Expert Opin Drug Saf 2011;10(6):871–882.

- 34. Ridgely MS, Greenberg MD. Too many alerts, too much liability: sorting through the malpractice implications of drug-drug interaction clinical decision support. St Louis U J Health Law Policy 2011;5:257.

- 35. Singh K, Wright A. Clinical decision support. In: Finnell JT, Dixon BE, eds. Clinical Informatics Study Guide. Heidelberg: Springer; 2016:111–133.

- 36. Payne TH, Hines LE, Chan RC, et al. Recommendations to improve the usability of drug-drug interaction clinical decision support alerts. J Am Med Inform Assoc 2015;22(6):1243–1250.
