Abstract

Background

Understanding the relationships between clinical tests, the processes they measure, and the brain networks underlying them is critical if clinicians are to move beyond aphasia syndrome classification toward specification of individual language process impairments.

Objective

To understand the cognitive, language, and neuroanatomical factors underlying scores of commonly used aphasia tests.

Methods

Twenty-five behavioral tests were administered to a group of 38 chronic left hemisphere stroke survivors, and a high-resolution MRI was obtained. Test scores were entered into a principal components analysis to extract the latent variables (factors) measured by the tests. Multivariate lesion-symptom mapping was used to localize lesions associated with the factor scores.

Results

The principal components analysis yielded four dissociable factors, which we labeled Word Finding/Fluency, Comprehension, Phonology/Working Memory Capacity, and Executive Function. While many tests loaded onto the factors in predictable ways, some relied heavily on factors not commonly associated with the tests. Lesion symptom mapping demonstrated discrete brain structures associated with each factor, including frontal, temporal, and parietal areas extending beyond the classical language network. Specific functions mapped onto brain anatomy largely in correspondence with modern neural models of language processing.

Conclusions

An extensive clinical aphasia assessment identifies four independent language functions, relying on discrete parts of the left middle cerebral artery territory. A better understanding of the processes underlying cognitive tests and the link between lesion and behavior may lead to improved aphasia diagnosis, and may yield treatments better targeted to an individual’s specific pattern of deficits and preserved abilities.

INTRODUCTION

Most survivors of left hemisphere (LH) stroke never fully recover their language abilities and are left with chronic aphasia1. Rather than diagnosing classical aphasia syndromes (e.g., Broca’s aphasia), the primary goal of modern aphasia assessment is to identify deficits in specific language processes2. Assessing damage to specific aspects of language can help clinicians focus the rehabilitation plan for the individual patient, potentially improving outcomes.

However, identifying a given patient’s deficits requires clear understanding of the mapping between common language tests and the dissociable underlying processes they measure. Further, examining correspondences between lesion location and dissociable language processes rather than individual test scores may provide a more comprehensive way to examine the brain’s language systems.

Factor analysis, a data reduction technique used to extract the underlying factors in a dataset, can reduce a large number of behavioral scores to a few “latent variables” that best explain the variability in the data3. This approach has been used to examine dissociations between semantics and phonology in people with left hemisphere stroke4,5 as well as more broadly to determine the most important factors underlying large testing batteries for aphasia assessment6,7. These studies then combined the extracted factors with lesion-symptom mapping techniques, revealing the brain areas associated with each factor. Here, we similarly administer a detailed language assessment battery that includes standardized tests commonly used in clinical practice and then identify core language variables via a factor analysis of scores on these tests. Our aim is to identify core language and cognitive processes affected by left hemisphere strokes, and to determine the degree to which tasks commonly used in clinical assessment rely upon one or more of the core language variables. We then use a multivariate lesion-symptom mapping technique to determine the brain areas associated with the core language processes identified by our factor analysis. The technique was designed to have predictive power and high generalizability in that it is less vulnerable to the influence of single patients or small groups of patients within the sample. We believe this combination of a factor analysis that includes tests commonly used in clinics with a multivariate, generalizable lesion-symptom mapping technique will be of interest to clinicians working with people with aphasia at all severity levels and with deficits in all parts of the language system.

METHODS

Participants

38 native English speakers with chronic LH stroke (> 6 months post-stroke) participated in the study. Participants had no history of other neurological problems or significant psychiatric disorders (see Table 1). Though our decision to include only LH stroke patients limits interpretation of our results to some degree, it has been standard practice for lesion-symptom mapping studies of aphasia5,6,8–10. Our cohort was more inclusive than those of many other studies in that we included people with all levels of aphasia severity as well as people who had “recovered,” and we included left-handed people. The study was approved by the Georgetown University IRB (study #PRO00000315), and all participants provided informed consent.

Table 1.

Participant Demographic and Clinical Information

| | Age | Sex | Years of Education | Time since stroke (months) | WAB Aphasia Quotient | WAB Diagnosis | Type of Stroke | Edinburgh Handedness Score |
|---|---|---|---|---|---|---|---|---|
| Average | 62.97 | 25 M | 16.23 | 59.72 | 64.31 | 3 recovered (AQ >93.8) | 30 ischemic | 32 right-handed |
| Standard Deviation | 12.41 | 13 F | 4.08 | 41.12 | 26.41 | 17 anomic; 14 Broca’s | 8 hemorrhagic | 2 ambidextrous |
| Range | 38–78 | | 12–22 | 7.92–151.18 | 13.8–98.6 | 2 Wernicke’s; 3 conduction | | 4 left-handed |
| | | | | | | 1 transcortical sensory | | |
| | | | | | | 1 global | | |

Behavioral testing methods

The behavioral evaluation was designed to assess language and cognition thoroughly and through different modalities. It was administered by a speech-language pathologist or PhD cognitive scientist and was completed in a single day over 3–5 hours, with breaks as needed. Tests were administered in the same order (listed below) for all participants and were videotaped to resolve any scoring issues. A brief description of each test follows:

Western Aphasia Battery-Revised (WAB)11

The WAB includes subtests of spontaneous speech (conversational questions and picture description), auditory verbal comprehension (yes/no questions, auditory word recognition, and sequential commands), repetition (of words, phrases, and sentences), and naming and word-finding (object naming, category fluency, sentence completion and responsive speech). The test calculates an aphasia quotient (AQ) for each patient (Table 1).

Cognitive Linguistic Quick Test (CLQT)12

The CLQT is a test that assesses several aspects of cognition, and was designed to be appropriate for use in individuals with acquired language impairments. Here, we use the executive function composite score (which comprises symbol trails, category and letter fluency, mazes, and design generation).

Philadelphia Naming Test (PNT)13

The PNT is a confrontation naming test of black and white drawings. We use a reduced 60-item version.

Apraxia Battery for Adults (ABA), Increasing word length subtest14

Participants are asked to repeat words that increase in number of syllables as suffixes are added (e.g., “thick, thicken, thickening”). Deterioration in performance as word length increases is the measure of apraxia severity.

Pseudoword Repetition

In this in-house test, pseudowords increase in length from 1 to 5 syllables as the 30-item test progresses.

Category and Letter Fluency

Participants must generate as many items as possible within certain categories (animals, items in a supermarket, things you wear) or that begin with certain letters (M, F, A, S) in 60 seconds.

Philadelphia Naming Test (PNT) – Written

A subset of 30 items from the PNT are presented again for written confrontation naming.

Digit Span

Number strings of increasing length are presented for repetition, first forward and then backward.

Pyramids and Palm Trees15

Participants must determine which of two pictures matches better with a third picture. It is designed to test nonverbal semantics.

Reading real words

Participants are presented with single words, 3–7 letters long, of all parts of speech and levels of concreteness and asked to read them aloud.

Reading pseudowords

Participants are asked to read aloud pseudowords, 3–4 letters in length.

Auditory word-to-picture matching

This in-house test requires participants to identify a picture of an object from among 5 semantic foils after the object name is presented.

Boston Diagnostic Aphasia Examination (BDAE; excerpts)16

The embedded sentences, semantic probe, and complex ideational material subtests of the BDAE were used. The embedded sentences subtest requires the participant to choose 1 of 4 pictures described by a grammatically complex, auditorily-presented sentence. The semantic probe is a series of yes/no questions about the category, physical features, and function of common objects. The complex ideational material subtest also asks yes/no questions, of common knowledge and about brief auditorily-presented paragraphs.

Factor Analysis Methods

Raw scores on all 25 behavioral tests were entered into a principal components factor analysis of the correlation matrix in SPSS 21. A standard eigenvalue > 1 cutoff was used to determine the number of factors extracted. Varimax rotation with Kaiser normalization was used to create orthogonal factors, and the rotated component matrix was used to examine the loading of each test on the extracted factors.
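The extraction and rotation steps described above can be illustrated with a short NumPy sketch. This is not the SPSS implementation used in the study; the function names, the synthetic data, and the convergence settings are assumptions made purely for illustration. It extracts components from the correlation matrix, keeps those with eigenvalue > 1, and applies a varimax rotation with Kaiser normalization.

```python
import numpy as np

def kaiser_pca_loadings(scores):
    """Unrotated loadings for components whose eigenvalue exceeds 1.

    scores: array of shape (n_participants, n_tests).
    """
    corr = np.corrcoef(scores, rowvar=False)      # tests x tests correlation matrix
    eigvals, eigvecs = np.linalg.eigh(corr)       # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    keep = eigvals > 1.0                          # eigenvalue > 1 cutoff
    return eigvecs[:, keep] * np.sqrt(eigvals[keep])

def varimax_kaiser(loadings, max_iter=100, tol=1e-8):
    """Orthogonal varimax rotation with Kaiser (row) normalization."""
    communality_root = np.sqrt((loadings ** 2).sum(axis=1, keepdims=True))
    unit_rows = loadings / communality_root       # scale each test to unit communality
    n_tests, n_factors = unit_rows.shape
    rotation = np.eye(n_factors)
    previous = 0.0
    for _ in range(max_iter):
        rotated = unit_rows @ rotation
        target = rotated ** 3 - rotated * (rotated ** 2).sum(axis=0) / n_tests
        u, s, vt = np.linalg.svd(unit_rows.T @ target)
        rotation = u @ vt                         # closest orthogonal matrix
        if previous and s.sum() / previous < 1 + tol:
            break
        previous = s.sum()
    return (unit_rows @ rotation) * communality_root  # undo the normalization

# Synthetic illustration only: 200 simulated participants, 7 tests, 2 latent factors.
rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 2))
pattern = np.array([[1, 0]] * 4 + [[0, 1]] * 3, dtype=float)
scores = latent @ pattern.T + 0.3 * rng.normal(size=(200, 7))
unrotated = kaiser_pca_loadings(scores)
rotated = varimax_kaiser(unrotated)
```

Because the rotation is orthogonal, each test's communality (the row sum of squared loadings) is unchanged; only the distribution of that variance across factors differs, which is what makes the rotated matrix easier to interpret.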

Imaging Methods

High-resolution 3D T1-weighted MRIs were acquired on a 3.0 T Siemens TIM Trio scanner within one week of behavioral testing, with the following parameters: TR = 1900 ms; TE = 2.56 ms; flip angle = 9°; 160 contiguous 1 mm sagittal slices; field of view = 250 × 250 mm; matrix size = 246 × 256; voxel size = 1 mm³.

Lesion tracings

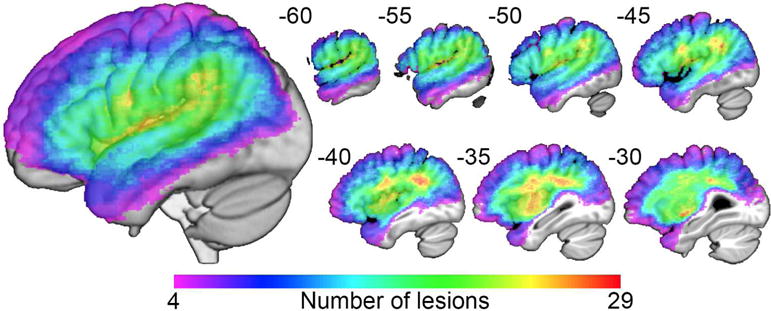

Each lesion mask was drawn manually on the T1-weighted images in native space using MRIcron (http://mccauslandcenter.sc.edu/CRNL/clinical-toolbox) and reviewed by two neurologists (S.X. and P.E.T.) blinded to the behavioral data. T1-weighted images were normalized to a standard older T1 brain template in Montreal Neurological Institute (MNI) space via the unified segmentation approach provided by the VBM8 toolbox in SPM8 running under Matlab R2014a. The lesioned area in T1 native space was masked out before spatial normalization. The derived deformations were subsequently applied to the lesion masks (see Figure 1).

Figure 1. Composite map of all patient lesions.

Lesion overlap map of 38 patients with left-hemisphere lesions. The color scale moves toward red as more patients have damage to those voxels.

Lesion-symptom mapping methods

Multivariate lesion-symptom mapping was carried out on each factor score using Support Vector Regression-Based Lesion-Symptom Mapping (SVR-LSM) running under Matlab R2014a (https://cfn.upenn.edu/~zewang/). SVR-LSM is similar to the univariate technique, Voxel-based Lesion Symptom Mapping (VLSM), in that it uses binary masks of lesions in a standard anatomical space to assess relationships between lesion location and behavior. However, SVR-LSM addresses some of the limitations of univariate methods such as VLSM. Given multiple possible lesion locations associated with a deficit, VLSM will favor the one more common in the sample of lesions9. Modeling evidence suggests that when a behavior depends on more than one brain area, lesion covariance can lead to mislocalization of lesion-behavior associations,17 but SVR-LSM reduces these errors10. Multivariate lesion-symptom mapping techniques must be interpreted with caution, because the feature weights do not necessarily reflect the importance of the corresponding voxels to the cognitive process being studied18. However, Zhang et al. (2014) directly compared SVR-LSM to VLSM using both real and simulated behavioral data and showed that results of the two techniques were strikingly similar, except that VLSM failed to detect some voxels their simulation model had established to be important to the deficit. They also found that the lesion parameters dictated by vascular architecture systematically introduce error into mass-univariate techniques.

SVR-LSM works by using a machine learning-based multivariate support vector regression algorithm to find lesion-symptom relationships10. The model is trained to predict behavioral scores using damaged and undamaged voxels as independent variables, and then a threshold is applied that favors prediction accuracy over fit accuracy. The model is therefore not as vulnerable to single patients or small groups of patients within the cohort and better generalizes to the population9.

VLSM and the related technique, voxel-based correlational methodology (VBCM), typically control for lesion volume by including it as a covariate of no interest in the statistical analysis. SVR-LSM provides a direct total lesion volume control (dTLVC) method to control for relationships between total lesion size, stroke distribution, and factor scores10. This method weights lesioned voxels in inverse proportion to the square root of the lesion size. Lesion volume control is applied directly to the normalized vectors from all participants combined into one matrix, which has the benefit of controlling for lesion volume without being overly conservative. It also improves sensitivity to detect relationships with behavior in voxels in which lesion status correlates with lesion size10. Because SVR-LSM considers all voxels simultaneously in a single, non-linear regression model, correction for multiple comparisons is not considered necessary10.
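The lesion volume weighting described above can be sketched as follows. This is a minimal illustration, assuming that each participant's lesion is stored as a flat binary vector and that "lesion size" means the number of lesioned voxels; the function name `dtlvc_normalize` is hypothetical and this is not the SVR-LSM toolbox code.

```python
import math

def dtlvc_normalize(lesion_masks):
    """Scale each binary lesion vector by 1 / sqrt(number of lesioned voxels).

    lesion_masks: list of equal-length lists of 0/1 values, one per participant.
    After scaling, every non-empty lesion vector has unit Euclidean norm.
    """
    normalized = []
    for mask in lesion_masks:
        volume = sum(mask)                      # lesion size in voxels
        if volume == 0:                         # no lesion: leave as all zeros
            normalized.append([0.0] * len(mask))
            continue
        scale = 1.0 / math.sqrt(volume)
        normalized.append([voxel * scale for voxel in mask])
    return normalized

# Toy example: a 1-voxel lesion and a 4-voxel lesion in a 4-voxel "brain".
small, large = dtlvc_normalize([[1, 0, 0, 0], [1, 1, 1, 1]])
```

In this toy example the single lesioned voxel of the small lesion keeps a weight of 1, while each voxel of the larger lesion is down-weighted to 0.5, so that large lesions do not dominate the regression simply because they cover more voxels.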

Only voxels damaged in at least 10% of participants were included in our analysis. Voxel-level statistical significance was determined based on 10,000 Monte Carlo-style permutations in which the factor scores were randomly associated with the lesion masks, and the resulting P-map was thresholded at P < .005, with an arbitrary cluster threshold of 500 mm³.
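The voxel inclusion rule and the permutation procedure can be sketched as below. Several things here are assumptions rather than details of the study: the voxel statistic is a simple univariate mean difference used as a stand-in for the SVR-derived weights, the test is one-tailed (damage associated with lower scores), the p-value uses the common (count + 1) / (permutations + 1) form, and all function names are hypothetical. Cluster-extent thresholding is not shown.

```python
import numpy as np

def overlap_mask(lesions, min_fraction=0.10):
    """True for voxels lesioned in at least min_fraction of participants.

    lesions: binary array of shape (n_participants, n_voxels).
    """
    return lesions.mean(axis=0) >= min_fraction

def mean_difference(lesions, scores):
    """Stand-in voxel statistic: mean score with damage minus mean score without.

    Assumes every voxel is lesioned in some, but not all, participants.
    """
    n_lesioned = lesions.sum(axis=0)
    lesioned_sum = lesions.T @ scores
    spared_sum = scores.sum() - lesioned_sum
    return lesioned_sum / n_lesioned - spared_sum / (len(scores) - n_lesioned)

def permutation_pvalues(lesions, scores, statistic, n_perm=10000, seed=0):
    """One-tailed voxelwise p-values from shuffling scores across lesion masks."""
    rng = np.random.default_rng(seed)
    observed = statistic(lesions, scores)
    at_least_as_low = np.zeros(observed.shape)
    for _ in range(n_perm):
        shuffled = rng.permutation(scores)      # break the lesion-score pairing
        at_least_as_low += statistic(lesions, shuffled) <= observed
    return (at_least_as_low + 1) / (n_perm + 1)

# Toy data: 20 participants, 3 voxels; only voxel 0 is related to the score.
lesions = np.zeros((20, 3), dtype=int)
lesions[:10, 0] = 1          # lesioned in the 10 low-scoring participants
lesions[0, 1] = 1            # lesioned in 1 of 20 (5%), so excluded
lesions[::2, 2] = 1          # lesioned in half, unrelated to the score
scores = np.array([-1.0] * 10 + [1.0] * 10)

mask = overlap_mask(lesions)
p_map = permutation_pvalues(lesions[:, mask], scores, mean_difference, n_perm=2000)
significant = p_map < 0.005
```

Passing the statistic in as a function keeps the permutation logic independent of the model, so the same loop could in principle wrap a multivariate model's voxel weights instead of the univariate stand-in used here.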

RESULTS

Factor analysis of behavioral tests

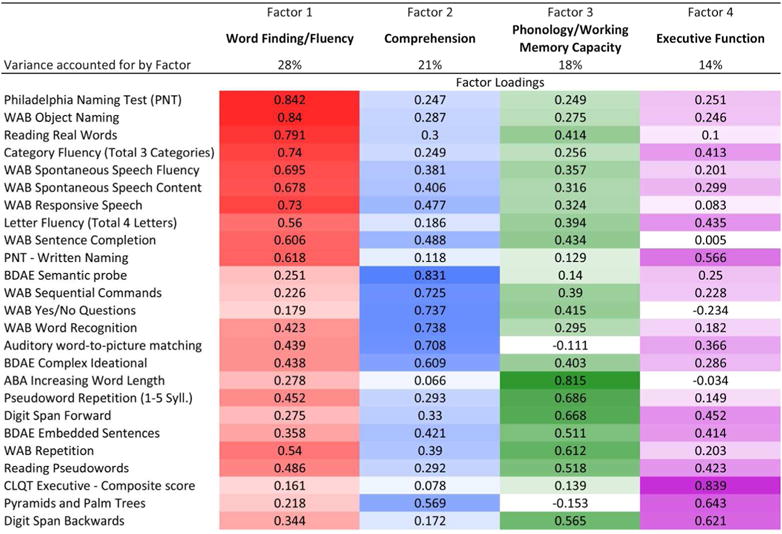

The factor analysis of behavioral scores yielded four factors that accounted for 81% of the variance in patient test scores (Figure 2). Based on the maximal loading score for each test, the factors clearly corresponded to four coherent language processes putatively measured by the tests. Tests loading mainly onto Factor 1 (labeled Word-Finding/Fluency) included tests of naming (Philadelphia Naming Test, Philadelphia Naming Test-Written, WAB object naming), word finding (WAB sentence completion, WAB spontaneous speech content) and verbal fluency (WAB fluency, category fluency). Tests loading mainly onto Factor 2 (labeled Comprehension) included those measuring comprehension at the word or sentence level (e.g., yes/no questions, sequential commands, and word recognition from the WAB, and the Auditory word-to-picture matching test). Tests loading mainly onto Factor 3 (labeled Phonology/Working Memory Capacity) included tests of output phonology, mainly repetition tasks (e.g., Apraxia Battery for Adults Increasing word length subtest, Pseudoword repetition, forward digit span). Tests loading onto Factor 4 (labeled Executive Function) included two tests widely thought to assess executive functions (Cognitive Linguistic Quick Test Executive function composite score, backward digit span).

Figure 2.

Results of factor analysis after orthogonal rotation for all behavioral tests. Displays factor loadings for principal component analysis after varimax rotation with Kaiser normalization. Rotation converged in 8 iterations. Colors used for each factor are as follows: Word Finding/Fluency (red), Comprehension (blue), Phonology/Working Memory Capacity (green), and Executive Function (purple). Higher loadings are in darker shades. Shading moves toward white as loadings approach 0. Percent of variance accounted for by each factor is also shown. (PNT=Philadelphia Naming Test; WAB=Western Aphasia Battery; BDAE=Boston Diagnostic Aphasia Examination; ABA=Apraxia Battery for Adults; CLQT=Cognitive Linguistic Quick Test)

Examining the relative differences in loading scores between factors revealed other informative patterns. Tests generally thought to be relatively pure measures of the individual language processes above loaded strongly and specifically on the corresponding factors. For instance, naming tasks loaded specifically onto Word-Finding/Fluency with low loading scores for other factors (e.g., Philadelphia Naming Test Factors 1, 2, 3, 4: .84, .25, .25, .25; see Figure 2). In contrast, tests like letter fluency that are thought to rely on multiple processes (Word-finding and Executive function, Factors 1 and 4), loaded onto both of the predicted processes (Letter Fluency Factors 1, 2, 3, 4: .56, .19, .39, .44). Similarly, auditory comprehension tests at the single word level (Auditory word-to-picture matching, WAB word recognition) loaded secondarily on the Word-Finding/Fluency Factor, whereas tests requiring more working memory (WAB sequential commands, WAB yes/no questions) loaded secondarily on the Phonology/Working Memory Capacity Factor.

The factor analysis also revealed that a few tests did not load primarily onto the factors one might expect, considering what the tests were designed to assess. The Boston Diagnostic Aphasia Examination embedded sentences task, a classic test that putatively assesses grammatical comprehension, loaded fairly evenly across the four factors, with primary loading on the Phonology/Working Memory Capacity Factor, and secondary on Comprehension and Executive Function (Factors 1, 2, 3, 4: .36, .42, .51, .41). The Pyramids and Palm Trees test, which putatively assesses nonverbal semantic ability, loaded primarily onto Executive Function (.64).
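The distinction between tests that load specifically on one factor and tests that spread across several can be made concrete using the loadings quoted in the text. In the sketch below, the loadings are taken from the paragraphs above, while the `specificity` index (the share of a test's summed squared loadings carried by its largest loading) is a hypothetical illustration and not a measure used in the study.

```python
FACTORS = (
    "Word-Finding/Fluency",
    "Comprehension",
    "Phonology/Working Memory Capacity",
    "Executive Function",
)

# Rotated loadings on Factors 1-4 as quoted in the text.
LOADINGS = {
    "Philadelphia Naming Test": (0.84, 0.25, 0.25, 0.25),
    "Letter Fluency": (0.56, 0.19, 0.39, 0.44),
    "BDAE embedded sentences": (0.36, 0.42, 0.51, 0.41),
}

def rank_factors(loadings):
    """Factor names paired with loadings, strongest first."""
    return sorted(zip(FACTORS, loadings), key=lambda pair: pair[1], reverse=True)

def specificity(loadings):
    """Hypothetical index: largest squared loading / sum of squared loadings."""
    squared = [value * value for value in loadings]
    return max(squared) / sum(squared)

for test, values in LOADINGS.items():
    primary, secondary = rank_factors(values)[:2]
    print(f"{test}: primary={primary[0]}, secondary={secondary[0]}, "
          f"specificity={specificity(values):.2f}")
```

On these values the Philadelphia Naming Test has the highest index and the embedded sentences task the lowest, matching the description of the former as a relatively pure measure and the latter as loading fairly evenly across factors.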

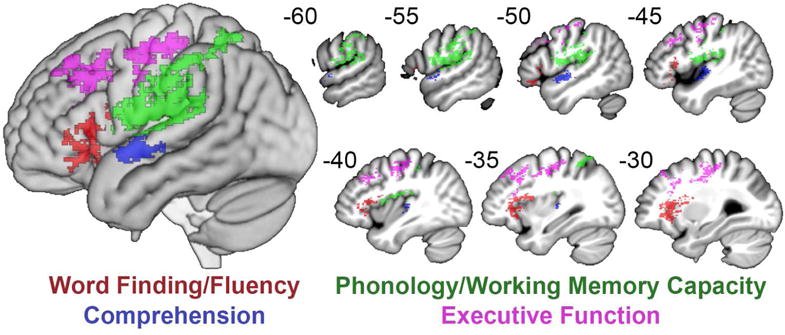

Lesion-symptom mapping results

Before conducting lesion-symptom mapping, we tested for relationships between the factor scores and demographic factors (age, time since stroke, education) using exploratory bivariate correlations and partial correlations controlling for stroke size. No relationships were observed (all P > .10), so these variables were not considered further during lesion-symptom mapping. The SVR-LSM analysis of factor scores demonstrated that lesions to discrete areas of the LH result in impairment in the different language factors (Figure 3 and Table 2). Word-Finding/Fluency deficits were primarily associated with lesions to inferior frontal areas, including all three subregions of the inferior frontal gyrus (IFG) and the anterior insula. A smaller area in the dorsal parietal white matter was also associated with Word-Finding/Fluency deficits. Comprehension deficits were associated with lesions to the superior temporal cortex anterior to Heschl’s gyrus. Phonology/Working Memory Capacity deficits were associated with lesions to ventral motor and somatosensory cortex, supramarginal gyrus, and the posterior planum temporale. Executive Function deficits were associated with the middle frontal gyrus (dorsolateral prefrontal cortex), dorsal Rolandic cortex, and posterior frontal white matter in the superior longitudinal fasciculus. There was no overlap between the lesion symptom maps for the four factors.
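The exploratory partial correlations mentioned at the start of this section (factor scores against demographic variables, controlling for stroke size) could be computed along the following lines. This is a sketch under assumptions: it uses the residual method with a single covariate, the function name `partial_corr` and the simulated data are hypothetical, and significance testing is omitted.

```python
import numpy as np

def partial_corr(x, y, covariate):
    """Pearson correlation of x and y after regressing the covariate out of both."""
    x, y, z = (np.asarray(v, dtype=float) for v in (x, y, covariate))
    design = np.column_stack([np.ones_like(z), z])    # intercept + covariate
    resid_x = x - design @ np.linalg.lstsq(design, x, rcond=None)[0]
    resid_y = y - design @ np.linalg.lstsq(design, y, rcond=None)[0]
    return float(np.corrcoef(resid_x, resid_y)[0, 1])

# Simulated illustration: two variables related only through a shared covariate.
rng = np.random.default_rng(1)
lesion_size = rng.normal(size=200)
factor_score = 3 * lesion_size + rng.normal(size=200)
demographic = 3 * lesion_size + rng.normal(size=200)

raw_r = float(np.corrcoef(factor_score, demographic)[0, 1])
partial_r = partial_corr(factor_score, demographic, lesion_size)
```

In the simulated data the raw correlation is high because both variables depend on lesion size, whereas the partial correlation is close to zero once that shared dependence is removed.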

Figure 3.

Lesion-symptom maps of four factors. SVR-LSMs shown at P<.005 with a cluster threshold of 500 mm³ for Word Finding/Fluency (red), Comprehension (blue), Phonology/Working Memory Capacity (green), and Executive Function (purple).

Table 2.

SVR-LSM Results for the Four Factor Scores

| | Cluster Volume (mm³) | X | Y | Z |
|---|---|---|---|---|
| Word-Finding/Fluency | | | | |
| Inferior Frontal Cortex and Anterior Insula | 4,897 | | | |
| Inferior Frontal Gyrus | | −34 | 22 | 10 |
| Inferior Frontal White Matter | | −34 | 12 | 14 |
| Anterior Insula | | −28 | 21 | −4 |
| Anterior Insula | | −32 | 26 | 0 |
| Parietal White Matter | 1,046 | | | |
| Parietal White Matter | | −21 | −32 | 38 |
| Comprehension | | | | |
| Superior Temporal Cortex | 1,836 | | | |
| Superior Temporal Gyrus | | −46 | −8 | 0 |
| Heschl’s Gyrus | | −40 | −22 | 4 |
| Phonology/Working Memory Capacity | | | | |
| Ventral Rolandic, Inferior Parietal, and Posterior Temporal Cortex | 10,402 | | | |
| Postcentral Gyrus/Rolandic Operculum | | −56 | −8 | 10 |
| Precentral Gyrus/Rolandic Operculum | | −50 | −4 | 12 |
| Postcentral Gyrus/Rolandic Operculum | | −56 | −14 | 12 |
| Supramarginal Gyrus/Parietal Operculum | | −60 | −26 | 16 |
| Supramarginal Gyrus/Parietal Operculum | | −51 | −24 | 16 |
| Supramarginal Gyrus/Parietal Operculum | | −52 | −30 | 18 |
| Heschl’s Gyrus | | −40 | −22 | 12 |
| Superior Temporal Gyrus/Planum Temporale | | −57 | −30 | 14 |
| Superior Temporal Gyrus | | −51 | −39 | 22 |
| Superior Temporal Gyrus | | −57 | −40 | 24 |
| Supramarginal Gyrus | 662 | | | |
| Supramarginal Gyrus | | −60 | −38 | 45 |
| Executive Function | | | | |
| Dorsolateral Prefrontal Cortex and White Matter | 2,811 | | | |
| Middle Frontal Gyrus | | −39 | 22 | 34 |
| Middle Frontal Gyrus | | −33 | 20 | 42 |
| Frontal White Matter | | −28 | 14 | 33 |
| Dorsal Rolandic Cortex and White Matter | 5,528 | | | |
| Frontal White Matter | | −28 | −4 | 36 |
| Frontal White Matter | | −32 | −14 | 40 |
| Frontal White Matter | | −28 | −15 | 45 |
| Frontal White Matter | | −22 | −26 | 44 |
| Precentral Gyrus | | −45 | −6 | 33 |
| Precentral Gyrus | | −50 | −4 | 33 |
| Precentral Gyrus | | −26 | −22 | 46 |
| Precentral Gyrus | | −39 | −14 | 46 |
| Precentral Gyrus | | −44 | −9 | 46 |

DISCUSSION

Language is complex and relies on multiple brain areas and cognitive processes. In order to accurately assess language deficits in stroke patients, one must understand the discrete language processes measured by clinical language assessments, and the degree to which performance on individual tests relies on each of these processes. This is not always obvious, even for common bedside language tests. Our findings demonstrate that a thorough aphasia assessment including common clinical tests can identify four statistically dissociable language processes linked to discrete parts of the left middle cerebral artery territory. The majority of tests in our battery loaded most strongly on the factors they were designed to assess. However, many tests relied equally on more than one factor or most strongly on an unexpected factor, indicating that performance on widely used aphasia assessments, such as the WAB, should be considered in the context of a patient’s overall language and cognitive abilities. For example, the WAB repetition subtest loaded most strongly on Phonology/Working Memory Capacity, but it could be helpful for clinicians to consider that it also loaded on Comprehension and Word Finding/Fluency. Our factor analysis also quantifies the complexity of tasks like the Boston Diagnostic Aphasia Examination embedded sentences task, which requires auditory comprehension of a grammatically complex sentence while simultaneously processing four pictures containing different named objects, by showing that this task loaded onto all four factors. When the goal of a brief clinical examination is to assess a specific function, the tests we found to load most specifically on a single factor may be the most useful. Alternatively, when time is short and assessment of overall language ability is the goal, tests relying on several factors might be more appropriate.

The use of subtests from aphasia batteries as independent scores here presents an interesting opportunity for comparison of our results with other recent studies using data reduction techniques on full scores of language and cognition tests for aphasia6,7. Most tests used in these previous studies were longer and more detailed assessment tools, yet our results replicate the factors these studies found and produce similar neural correlates. Though there was no exact overlap in the tests used (with the exception of forward and backward digit span), Halai et al. found four factors similar to ours (phonology, semantics, cognition, and speech quanta or fluency)7. Our replication of these results indicates that factor analysis is a robust technique for understanding the cognitive and neural underpinnings of aphasic deficits regardless of the measures used.

Though the results were similar, there were some notable differences. The previous studies included “speech quanta” measures (e.g., words per minute) in their factor analysis, which could explain some of the differences in the way their tasks broke down onto the other factors compared to ours, and why their lesion maps placed the fluency factor posterior to ours (precentral gyrus vs. inferior frontal gyrus). Examining the one test that was exactly the same between the studies, digit span, we see that both forward and backward digit span loaded strongly and almost exclusively on their phonology factor, whereas in our analysis, forward digit span loaded most strongly on Phonology/Working Memory Capacity and backward digit span on Executive Function. Though the factor loadings differed, the extracted factors and their neural correlates were still quite similar across two different labs, test batteries, and groups of subjects.

Our lesion-symptom mapping results demonstrated that discrete parts of the left middle cerebral artery distribution related to the underlying language processes identified in the factor analysis. The current study contributes to a growing understanding of the neuroanatomy of specific language functions that may help clinicians move beyond aphasia syndrome classification and better understand acquired cognitive and language deficits.

Our finding that IFG lesions were associated with deficits in Word Finding/Fluency corresponds with the results of functional neuroimaging studies on verbal fluency in healthy participants, which typically show robust activity in this area19. Notably, since repetition tasks segregated into an independent factor with a different localization, the role of the IFG here in Word Finding/Fluency cannot be attributed to articulatory-motor planning for speech. Some have argued that the IFG performs syntactic functions specific to language20,21. A few of the tasks contributing to the Word Finding/Fluency factor do involve grammatical production or comprehension (e.g., WAB spontaneous speech fluency, WAB sentence completion). However, the tasks that loaded most specifically on the Word Finding/Fluency factor were naming tasks, particularly the Philadelphia Naming Test, which only tests concrete nouns and hence excludes any syntactic or morphological processing. Alternatively, some have suggested the IFG plays a more general role in cognitive control in situations that require selection between competing responses22,23. The naming and fluency tasks in our battery require this type of control24,25. In a prior study, both the degree of IFG activity in controls and the lesion burden in this area in stroke survivors related to performance on a word production task designed to exaggerate competition during word selection26. However, the factor analysis also showed that category and letter fluency tasks relied on the Executive Function factor as well as the Word Finding/Fluency factor, suggesting that executive control functions involved in word-finding may have segregated into this separate factor. Instead, the localization of Word Finding/Fluency here most likely reflects the roles of the anterior and posterior portions of the IFG in lexical semantic and phonological processing,27,28 both of which are required for word retrieval and production.

As suggested by many modern neural models of speech and language processing,29,30 our results provide further evidence that the brain’s language systems extend well beyond the classical Broca’s and Wernicke’s areas. Our SVR-LSM analysis localizes Comprehension to regions anterior to Heschl’s gyrus, outside the classical posterior location of Wernicke’s area. Indeed, a previous lesion-symptom mapping study found that lesions to Wernicke’s area did not significantly affect language comprehension31. A recent analysis of cortical atrophy in primary progressive aphasia supports our findings, showing word-level comprehension is subserved by anterior temporal cortex32. Although this study suggested that sentence-level comprehension relies on distributed cortical areas, a large-scale meta-analysis of functional imaging studies found that auditory processing of increasingly complex stimuli from words to passages activated progressively more anterior portions of the superior temporal cortex33.

The Phonology/Working Memory Capacity factor was associated with dorsal processing stream regions mapping phonology to articulation34,35. The tests with the highest weights for this factor require little to no grounding in semantics (Apraxia Battery for Adults Increasing word length subtest, Pseudoword Repetition, Forward Digit Span) and should therefore rely largely on phonology and verbal working memory capacity. The lesion-symptom map of the Phonology/Working Memory Capacity factor correspondingly identified areas consistent with previous studies providing evidence for a dorsal phonological processing stream. For example, other recent factor analyses mapped speech production or phonology factors to similar parietal areas,5–7 as did a study combining lesion-symptom mapping with white matter tractography in acute aphasia to determine lesions affecting pseudoword repetition36. Similarly, a combined fMRI and lesion-overlap map of conduction aphasia localized a sensorimotor interface for speech to the posterior planum temporale37. A study testing patients with inferior parietal damage showed that they performed disproportionately poorly on verbal working memory tasks such as repetition, while patients with inferior frontal lesions were not impaired on these tasks38.

The Cognitive Linguistic Quick Test executive function composite score loaded most strongly on the final factor, along with backward digit span, a classic test of executive function. Further, the lesion maps showed that this factor was associated with the well-established frontoparietal executive/attention network39. The role of dorsolateral prefrontal cortex in executive function is well-known,40,41 and our SVR-LSM results agree with this localization. The results showed lesions extending into underlying parts of the superior longitudinal fasciculus and frontal subcortical white matter, consistent with studies showing impaired executive function associated with white matter damage42–44. A recent study using the Trail Making Test, which simultaneously measures processing speed, attention, visuospatial ability, and working memory, showed that lesions involving the superior longitudinal fasciculus are associated with executive deficits in set-shifting45. Some language tests in our battery loaded on the Executive Function factor to a surprising extent (e.g., Pyramids and Palm Trees, Philadelphia Naming Test-Written). Another recent factor analysis found that the Camels and Cactus test, which is similar to the Pyramids and Palm Trees test used here, also loaded strongly onto a “cognitive” factor6. These similar findings suggest that caution should be used in attributing poor performance to the semantic deficits these tests are intended to measure. Though both tests assess semantics without requiring an oral response, neither tests semantics without also testing executive function.

A better understanding of the mapping between common language tests and the underlying processes they measure could improve aphasia diagnosis and allow clinicians to identify redundant tests and streamline their assessments, ultimately yielding treatments better tailored to an individual’s deficits. Here, we have demonstrated the value and limits of typical aphasia tests in assessing deficits in discrete language processes and the brain structures underlying them.

Acknowledgments

We would like to thank Laura Hussey, Molly Stamp, Jessica Friedman, Lauren Taylor, and Katherine Spiegel for their diligent assistance with data collection for this study, and a very helpful reviewer for guidance with the manuscript.

Footnotes

Conflict of Interest Statement: The authors declare that there is no conflict of interest.

Contributor Information

Elizabeth H. Lacey, Department of Neurology, Georgetown University Medical Center, MedStar National Rehabilitation Hospital.

LM Skipper-Kallal, Department of Neurology, Georgetown University Medical Center.

S Xing, Department of Neurology, Georgetown University Medical Center, Department of Neurology, First Affiliated Hospital of Sun Yat-Sen University, Guangzhou, China.

ME Fama, Department of Neurology, Georgetown University Medical Center.

PE Turkeltaub, Department of Neurology, Georgetown University Medical Center, MedStar National Rehabilitation Hospital.

References

- 1. Laska AC, Martensson B, Kahan T, von Arbin M, Murray V. Recognition of depression in aphasic stroke patients. Cerebrovascular diseases. 2007;24(1):74–79. doi: 10.1159/000103119.

- 2. Fama ME, Turkeltaub PE. Treatment of Poststroke Aphasia: Current Practice and New Directions. Semin Neurol. 2014;34:504–513. doi: 10.1055/s-0034-1396004.

- 3. Santos NC, Costa PS, Amorim L, et al. Exploring the factor structure of neurocognitive measures in older individuals. PLoS One. 2015;10(4):e0124229. doi: 10.1371/journal.pone.0124229.

- 4. Lambon Ralph MA, Moriarty L, Sage K. Anomia is simply a reflection of semantic and phonological impairments: Evidence from a case-series study. Aphasiology. 2002;16(1–2):56–82.

- 5. Mirman D, Chen Q, Zhang Y, et al. Neural organization of spoken language revealed by lesion-symptom mapping. Nature communications. 2015;6:6762. doi: 10.1038/ncomms7762.

- 6. Butler RA, Lambon Ralph MA, Woollams AM. Capturing multidimensionality in stroke aphasia: mapping principal behavioural components to neural structures. Brain. 2014 Dec;137(Pt 12):3248–3266. doi: 10.1093/brain/awu286.

- 7. Halai AD, Woollams AM, Lambon Ralph MA. Using principal component analysis to capture individual differences within a unified neuropsychological model of chronic post-stroke aphasia: Revealing the unique neural correlates of speech fluency, phonology and semantics. Cortex. 2016 Apr 29; doi: 10.1016/j.cortex.2016.04.016.

- 8. Bates E, Wilson SM, Saygin AP, et al. Voxel-based lesion-symptom mapping. Nat Neurosci. 2003 May;6(5):448–450. doi: 10.1038/nn1050.

- 9. Mirman D, Zhang Y, Wang Z, Coslett HB, Schwartz MF. The ins and outs of meaning: Behavioral and neuroanatomical dissociation of semantically-driven word retrieval and multimodal semantic recognition in aphasia. Neuropsychologia. 2015 Sep;76:208–219. doi: 10.1016/j.neuropsychologia.2015.02.014.

- 10. Zhang Y, Kimberg DY, Coslett HB, Schwartz MF, Wang Z. Multivariate lesion-symptom mapping using support vector regression. Human Brain Mapping. 2014 Jul 16;35(12):5861–5876. doi: 10.1002/hbm.22590.

- 11. Kertesz A. Western aphasia battery test manual. Psychological Corp; 1982.

- 12. Helm-Estabrooks N. Cognitive Linguistic Quick Test. San Antonio, TX: The Psychological Corporation; 2001.

- 13. Roach A, Schwartz MF, Martin N, Grewal RS, Brecher A. The Philadelphia Naming Test: Scoring and rationale. Clinical Aphasiology. 1996;24:121–133.

- 14. Dabul B. Apraxia Battery for Adults. Austin TX: PRO-ED; 1979.

- 15. Howard D, Patterson KE. The Pyramids and Palm Trees Test: A test of semantic access from words and pictures. Thames Valley Test Company; 1992.

- 16. Goodglass H, Kaplan E, Barresi B. BDAE: The Boston Diagnostic Aphasia Examination. Lippincott Williams & Wilkins; 2001.

- 17. Mah YH, Husain M, Rees G, Nachev P. Human brain lesion-deficit inference remapped. Brain. 2014 Jun 28;137(Pt 9):2522–2531. doi: 10.1093/brain/awu164.

- 18. Haufe S, Meinecke F, Gorgen K, et al. On the interpretation of weight vectors of linear models in multivariate neuroimaging. Neuroimage. 2014 Feb 15;87:96–110. doi: 10.1016/j.neuroimage.2013.10.067.

- 19. Wagner S, Sebastian A, Lieb K, Tuscher O, Tadic A. A coordinate-based ALE functional MRI meta-analysis of brain activation during verbal fluency tasks in healthy control subjects. BMC Neurosci. 2014;15:19. doi: 10.1186/1471-2202-15-19.

- 20. Embick D, Marantz A, Miyashita Y, O’Neil W, Sakai KL. A syntactic specialization for Broca’s area. Proc Natl Acad Sci U S A. 2000 May 23;97(11):6150–6154. doi: 10.1073/pnas.100098897.

- 21. Musso M, Moro A, Glauche V, et al. Broca’s area and the language instinct. Nat Neurosci. 2003 Jul;6(7):774–781. doi: 10.1038/nn1077.

- 22. Thompson-Schill SL, D’Esposito M, Kan IP. Effects of repetition and competition on activity in left prefrontal cortex during word generation. Neuron. 1999 Jul;23(3):513–522. doi: 10.1016/s0896-6273(00)80804-1.

- 23. Thompson-Schill SL, Bedny M, Goldberg RF. The frontal lobes and the regulation of mental activity. Curr Opin Neurobiol. 2005 Apr;15(2):219–224. doi: 10.1016/j.conb.2005.03.006.

- 24. Noppeney U, Phillips J, Price C. The neural areas that control the retrieval and selection of semantics. Neuropsychologia. 2004;42(9):1269–1280. doi: 10.1016/j.neuropsychologia.2003.12.014.

- 25. Kan IP, Thompson-Schill SL. Effect of name agreement on prefrontal activity during overt and covert picture naming. Cogn Affect Behav Neurosci. 2004 Mar;4(1):43–57. doi: 10.3758/cabn.4.1.43.

- 26. Schnur TT, Schwartz MF, Kimberg DY, Hirshorn E, Coslett HB, Thompson-Schill SL. Localizing interference during naming: convergent neuroimaging and neuropsychological evidence for the function of Broca’s area. Proc Natl Acad Sci U S A. 2009 Jan 6;106(1):322–327. doi: 10.1073/pnas.0805874106.

- 27. Poldrack RA, Wagner AD, Prull MW, et al. Functional specialization for semantic and phonological processing in the left inferior prefrontal cortex. Neuroimage. 1999;10:15–35. doi: 10.1006/nimg.1999.0441.

- 28. Gough PM, Nobre AC, Devlin JT. Dissociating linguistic processes in the left inferior frontal cortex with transcranial magnetic stimulation. The Journal of Neuroscience. 2005 Aug 31;25(35):8010–8016. doi: 10.1523/JNEUROSCI.2307-05.2005.

- 29. Hickok G, Poeppel D. The cortical organization of speech processing. Nature Reviews Neuroscience. 2007 May;8(5):393–402. doi: 10.1038/nrn2113.

- 30. Rauschecker JP, Scott SK. Maps and streams in the auditory cortex: nonhuman primates illuminate human speech processing. Nat Neurosci. 2009 Jun;12(6):718–724. doi: 10.1038/nn.2331.

- 31. Dronkers NF, Wilkins DP, Van Valin RD, Jr, Redfern BB, Jaeger JJ. Lesion analysis of the brain areas involved in language comprehension. Cognition. 2004 May-Jun;92(1–2):145–177. doi: 10.1016/j.cognition.2003.11.002.

- 32. Mesulam MM, Thompson CK, Weintraub S, Rogalski EJ. The Wernicke conundrum and the anatomy of language comprehension in primary progressive aphasia. Brain. 2015 Aug;138(Pt 8):2423–2437. doi: 10.1093/brain/awv154.

- 33. DeWitt I, Rauschecker JP. Phoneme and word recognition in the auditory ventral stream. Proceedings of the National Academy of Sciences of the United States of America. 2012 Feb 21;109(8):E505–514. doi: 10.1073/pnas.1113427109.

- 34. Walker GM, Hickok G. Bridging computational approaches to speech production: The semantic-lexical-auditory-motor model (SLAM). Psychon Bull Rev. 2015 Jul 30; doi: 10.3758/s13423-015-0903-7.

- 35. Hickok G. Computational neuroanatomy of speech production. Nature Reviews Neuroscience. 2012;13(2):135–145. doi: 10.1038/nrn3158.

- 36. Kummerer D, Hartwigsen G, Kellmeyer P, et al. Damage to ventral and dorsal language pathways in acute aphasia. Brain. 2013 Feb;136(Pt 2):619–629. doi: 10.1093/brain/aws354.

- 37. Buchsbaum BR, Baldo J, Okada K, et al. Conduction aphasia, sensory-motor integration, and phonological short-term memory – An aggregate analysis of lesion and fMRI data. Brain and language. 2011;119(3):119–128. doi: 10.1016/j.bandl.2010.12.001.

- 38. Baldo JV, Dronkers NF. The role of inferior parietal and inferior frontal cortex in working memory. Neuropsychology. 2006 Sep;20(5):529–538. doi: 10.1037/0894-4105.20.5.529.

- 39. Raz A, Buhle J. Typologies of attentional networks. Nat Rev Neurosci. 2006 May;7(5):367–379. doi: 10.1038/nrn1903.

- 40. Luria AR. The Working Brain. 1973.

- 41. Smith EE, Jonides J. Storage and executive processes in the frontal lobes. Science. 1999 Mar 12;283(5408):1657–1661. doi: 10.1126/science.283.5408.1657.

- 42. Cummings JL. Frontal-subcortical circuits and human behavior. Arch Neurol. 1993 Aug;50(8):873–880. doi: 10.1001/archneur.1993.00540080076020.

- 43. Perry ME, McDonald CR, Hagler DJ, Jr, et al. White matter tracts associated with set-shifting in healthy aging. Neuropsychologia. 2009 Nov;47(13):2835–2842. doi: 10.1016/j.neuropsychologia.2009.06.008.

- 44. Biesbroek JM, Kuijf HJ, van der Graaf Y, et al. Association between subcortical vascular lesion location and cognition: a voxel-based and tract-based lesion-symptom mapping study. The SMART-MR study. PLoS One. 2013;8(4):e60541. doi: 10.1371/journal.pone.0060541.

- 45. Muir RT, Lam B, Honjo K, et al. Trail Making Test Elucidates Neural Substrates of Specific Poststroke Executive Dysfunctions. Stroke. 2015 Oct;46(10):2755–2761. doi: 10.1161/STROKEAHA.115.009936.