Abstract

Recently, the alternating direction method of multipliers (ADMM) has received intensive attention from a broad spectrum of areas. The generalized ADMM (GADMM) proposed by Eckstein and Bertsekas is an efficient and simple acceleration scheme of ADMM. In this paper, we take a deeper look at the linearized version of GADMM where one of its subproblems is approximated by a linearization strategy. This linearized version is particularly efficient for a number of applications arising from different areas. Theoretically, we show the worst-case 𝒪(1/k) convergence rate measured by the iteration complexity (k represents the iteration counter) in both the ergodic and a nonergodic senses for the linearized version of GADMM. Numerically, we demonstrate the efficiency of this linearized version of GADMM by some rather new and core applications in statistical learning. Code packages in Matlab for these applications are also developed.

Keywords: Convex optimization, alternating direction method of multipliers, convergence rate, variable selection, discriminant analysis, statistical learning

AMS Subject Classifications: 90C25, 90C06, 62J05

1 Introduction

A canonical convex optimization model with a separable objective function and linear constraints is:

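$$\min_{x,\,y}\ f_1(x) + f_2(y) \quad \text{s.t.} \quad Ax + By = b,\ \ x \in \mathcal{X},\ y \in \mathcal{Y}, \tag{1}$$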

where A ∈ ℝ^{n×n1}, B ∈ ℝ^{n×n2}, b ∈ ℝ^n, 𝒳 ⊂ ℝ^{n1} and 𝒴 ⊂ ℝ^{n2} are closed convex nonempty sets, and f1 : ℝ^{n1} → ℝ and f2 : ℝ^{n2} → ℝ are convex but not necessarily smooth functions. Throughout our discussion, the solution set of (1) is assumed to be nonempty, and the matrix B is assumed to have full column rank.

The motivation for discussing the particular model (1) with separable structure is that each function fi may have its own properties, and we need to exploit these properties effectively in algorithmic design in order to develop efficient numerical algorithms. A typical scenario is where one of the functions represents a data-fidelity term and the other is a regularization term; such applications are easily found in many areas such as inverse problems, statistical learning and image processing. For example, the famous least absolute shrinkage and selection operator (LASSO) model introduced in [44] is a special case of (1) where f1 is the ℓ1-norm term for promoting sparsity, f2 is a least-squares term multiplied by a trade-off parameter, A = I_{n×n}, B = −I_{n×n}, b = 0, and 𝒳 = 𝒴 = ℝ^n.

To solve (1), a benchmark is the alternating direction method of multipliers (ADMM) proposed originally in [24] which is essentially a splitting version of the augmented Lagrangian method in [34,42]. The iterative scheme of ADMM for solving (1) reads as

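$$\begin{cases} x^{t+1} = \arg\min_{x \in \mathcal{X}} \left\{ f_1(x) - x^T A^T \gamma^t + \frac{\rho}{2}\|Ax + By^t - b\|^2 \right\},\\[2pt] y^{t+1} = \arg\min_{y \in \mathcal{Y}} \left\{ f_2(y) - y^T B^T \gamma^t + \frac{\rho}{2}\|Ax^{t+1} + By - b\|^2 \right\},\\[2pt] \gamma^{t+1} = \gamma^t - \rho\,(Ax^{t+1} + By^{t+1} - b), \end{cases} \tag{2}$$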

where γ ∈ ℝ^n is the Lagrange multiplier, ρ > 0 is a penalty parameter, and ||·|| is the Euclidean 2-norm. An important feature of ADMM is that the functions f1 and f2 are treated individually; thus the decomposed subproblems in (2) might be significantly easier than the original problem (1). Recently, the ADMM has received wide attention from a broad spectrum of areas because of its easy implementation and impressive efficiency. We refer to [6,13,23] for excellent reviews of the history and applications of ADMM.

In [21], the ADMM was explained as an application of the well-known Douglas-Rachford splitting method (DRSM) in [36] to the dual of (1); and in [14], the DRSM was further explained as an application of the proximal point algorithm (PPA) in [37]. Therefore, it was suggested in [14] to apply the acceleration scheme in [25] for the PPA to accelerate the original ADMM (2). A generalized ADMM (GADMM for short) was thus proposed:

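$$\begin{cases} x^{t+1} = \arg\min_{x \in \mathcal{X}} \left\{ f_1(x) - x^T A^T \gamma^t + \frac{\rho}{2}\|Ax + By^t - b\|^2 \right\},\\[2pt] y^{t+1} = \arg\min_{y \in \mathcal{Y}} \left\{ f_2(y) - y^T B^T \gamma^t + \frac{\rho}{2}\|\alpha Ax^{t+1} + (1-\alpha)(b - By^t) + By - b\|^2 \right\},\\[2pt] \gamma^{t+1} = \gamma^t - \rho\,\big(\alpha Ax^{t+1} + (1-\alpha)(b - By^t) + By^{t+1} - b\big), \end{cases} \tag{3}$$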

where the parameter α ∈ (0, 2) is a relaxation factor. Obviously, the generalized scheme (3) reduces to the original ADMM scheme (2) when α = 1. The GADMM (3) preserves the main advantage of the original ADMM, namely treating the objective functions f1 and f2 individually, so it is just as easy to implement; at the same time, it can numerically accelerate (2) for some values of α, e.g., α ∈ (1, 2). We refer to [2,8,12] for empirical studies of the acceleration performance of the GADMM.

It is necessary to discuss how to solve the decomposed subproblems in (2) and (3). We refer to [41] for the ADMM’s generic case where no special property is assumed for the functions f1 and f2, and thus the subproblems in (2) must be solved approximately subject to certain inexactness criteria in order to ensure the convergence of inexact versions of the ADMM. For some concrete applications such as those arising in sparse or low-rank optimization models, one function (say, f1) is nonsmooth but well-structured (more precisely, the resolvent of ∂f1 has a closed-form representation), and the other function f2 is smooth and simple enough that the y-subproblem is easy (e.g., when f2 is a least-squares term). In such a case, instead of discussing a generic strategy for solving the x-subproblem in (2) or (3) approximately, we prefer to seek particular strategies that take advantage of the special structure of f1. More specifically, when f1 is a special function such as the ℓ1-norm or the nuclear norm, which arise often in applications, we prefer to linearize the quadratic term of the x-subproblem in (2) or (3) so that the linearized x-subproblem has a closed-form solution (amounting to evaluating the resolvent of ∂f1) and thus no inner iteration is required. The efficiency of this linearization strategy for the ADMM has been well illustrated in the literature; see, e.g., [52] for image reconstruction problems, [48] for the Dantzig selector model, and [50] for some low-rank optimization models. Inspired by these applications, we consider the linearized version of the GADMM (“L-GADMM” for short), where the x-subproblem in (3) is linearized:

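$$\begin{cases} x^{t+1} = \arg\min_{x \in \mathcal{X}} \left\{ f_1(x) - x^T A^T \gamma^t + \frac{\rho}{2}\|Ax + By^t - b\|^2 + \frac{1}{2}\|x - x^t\|_G^2 \right\},\\[2pt] y^{t+1} = \arg\min_{y \in \mathcal{Y}} \left\{ f_2(y) - y^T B^T \gamma^t + \frac{\rho}{2}\|\alpha Ax^{t+1} + (1-\alpha)(b - By^t) + By - b\|^2 \right\},\\[2pt] \gamma^{t+1} = \gamma^t - \rho\,\big(\alpha Ax^{t+1} + (1-\alpha)(b - By^t) + By^{t+1} - b\big), \end{cases} \tag{4}$$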

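where G ∈ ℝ^{n1×n1} is a symmetric positive definite matrix. Note that we use the notation ||x||G to denote the quantity $\sqrt{x^T G x}$. Clearly, if 𝒳 = ℝ^{n1} and we choose G = τI_{n1} − ρAᵀA with the requirement τ > ρ||AᵀA||2, where ||·||2 denotes the spectral norm of a matrix, the x-subproblem in (4) reduces to evaluating the resolvent of ∂f1:

$$x^{t+1} = \arg\min_{x \in \mathbb{R}^{n_1}} \left\{ f_1(x) + \frac{\tau}{2}\left\| x - \frac{1}{\tau}\Big( (\tau I_{n_1} - \rho A^T A)\,x^t - \rho A^T B y^t + A^T \gamma^t + \rho A^T b \Big) \right\|^2 \right\},$$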

which has a closed-form solution in some cases, such as f1(x) = ||x||1. The scheme (4) thus includes the linearized version of ADMM (see, e.g., [48,50,52]) as a special case with G = τI_{n1} − ρAᵀA and α = 1.
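The reduction to a resolvent can be checked by expanding the x-subproblem around x^t. The following is a sketch of that calculation, assuming the multiplier sign convention γ^{t+1} = γ^t − ρ(·) that is implied by the identities used in Section 2; "const" collects terms that do not depend on x.

```latex
\begin{aligned}
&-x^{T}A^{T}\gamma^{t} + \tfrac{\rho}{2}\|Ax + By^{t} - b\|^{2}
  + \tfrac{1}{2}\|x - x^{t}\|_{G}^{2},
  \qquad G = \tau I_{n_1} - \rho A^{T}A \\
&\quad= \tfrac{\rho}{2}(x - x^{t})^{T}A^{T}A(x - x^{t})
  + \rho\,(x - x^{t})^{T}A^{T}(Ax^{t} + By^{t} - b)
  - (x - x^{t})^{T}A^{T}\gamma^{t} \\
&\qquad + \tfrac{\tau}{2}\|x - x^{t}\|^{2}
  - \tfrac{\rho}{2}(x - x^{t})^{T}A^{T}A(x - x^{t}) + \text{const} \\
&\quad= \tfrac{\tau}{2}\|x - x^{t}\|^{2}
  + (x - x^{t})^{T}g^{t} + \text{const},
  \qquad g^{t} := A^{T}\big[\rho\,(Ax^{t} + By^{t} - b) - \gamma^{t}\big] \\
&\quad= \tfrac{\tau}{2}\big\|x - \big(x^{t} - \tfrac{1}{\tau}g^{t}\big)\big\|^{2} + \text{const}.
\end{aligned}
```

The two AᵀA terms cancel, which is exactly what the choice G = τI − ρAᵀA is designed to achieve. When f1 = ||·||1, the minimizer is the componentwise soft-thresholding of x^t − g^t/τ with threshold 1/τ.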

The convergence analysis of ADMM has appeared in the earlier literature; see, e.g., [22,20,29,30]. Recently, it has also become popular to estimate ADMM’s worst-case convergence rate measured by the iteration complexity (see, e.g., [39,40] for the rationale of measuring the convergence rate of an algorithm by means of its iteration complexity). In [31], a worst-case 𝒪(1/k) convergence rate in the ergodic sense was established for both the original ADMM scheme (2) and its linearized version (i.e., the special case of (4) with α = 1), and then a stronger result in a nonergodic sense was proved in [32]. We also refer to [33] for an extension of the result in [32] to the DRSM for the general problem of finding a zero point of the sum of two maximal monotone operators, [11] for the linear convergence of the ADMM under additional stronger assumptions, and [5,27] for the linear convergence of ADMM for the special case of (1) where both f1 and f2 are quadratic functions.

This paper aims at further studying the L-GADMM (4) both theoretically and numerically. Theoretically, we shall establish the worst-case 𝒪 (1/k) convergence rate in both the ergodic and a nonergodic senses for L-GADMM. This is the first worst-case convergence rate for L-GADMM, and it includes the results in [8,32] as special cases. Numerically, we apply the L-GADMM (4) to solve some rather new and core applications arising in statistical learning. The acceleration effectiveness of embedding the linearization technique with the GADMM is thus verified.

The rest of this paper is organized as follows. We summarize some preliminaries which are useful for further analysis in Section 2. Then, we derive the worst-case convergence rate for the L-GADMM (4) in the ergodic and a nonergodic senses in Sections 3 and 4, respectively. In Section 5, we apply the L-GADMM (4) to solve some statistical learning applications and verify its numerical efficiency. Finally, we draw some conclusions in Section 6.

2 Preliminaries

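First, as is well known in the literature (see, e.g., [30,31]), solving (1) is equivalent to solving the following variational inequality (VI) problem: find w* = (x*, y*, γ*) ∈ Ω := 𝒳 × 𝒴 × ℝ^n such that

$$f(u) - f(u^*) + (w - w^*)^T F(w^*) \ge 0, \quad \forall\, w \in \Omega, \tag{5}$$

where f(u) = f1(x) + f2(y) and

$$u = \begin{pmatrix} x \\ y \end{pmatrix}, \quad w = \begin{pmatrix} x \\ y \\ \gamma \end{pmatrix}, \quad F(w) = \begin{pmatrix} -A^T \gamma \\ -B^T \gamma \\ Ax + By - b \end{pmatrix}. \tag{6}$$

We denote by VI(Ω, F, f) the problem (5)–(6). It is easy to see that the mapping F(w) defined in (6) is affine with a skew-symmetric matrix; it is thus monotone:

$$(w - \bar{w})^T \big(F(w) - F(\bar{w})\big) \ge 0, \quad \forall\, w, \bar{w} \in \Omega.$$

This VI reformulation will provide significant convenience for the theoretical analysis later. The solution set of (5), denoted by Ω*, is guaranteed to be nonempty under our assumption that the solution set of (1) is nonempty.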
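As a quick numerical illustration of the monotonicity claim, the sketch below evaluates (w − w̄)ᵀ(F(w) − F(w̄)) for a small made-up instance. It assumes the form F(w) = (−Aᵀγ, −Bᵀγ, Ax + By − b), which matches the multiplier convention used in the identities of this section; the matrices and points are arbitrary illustrative values, and all function names are hypothetical.

```python
def matvec(M, v):
    """Return M v for a matrix stored as a list of rows."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def mat_t_vec(M, v):
    """Return M^T v for a matrix stored as a list of rows."""
    return [sum(M[i][j] * v[i] for i in range(len(M))) for j in range(len(M[0]))]


def vi_operator(x, y, gamma, A, B, b):
    """Assumed VI mapping F(w) = (-A^T gamma, -B^T gamma, Ax + By - b)."""
    top = [-g for g in mat_t_vec(A, gamma)]
    middle = [-g for g in mat_t_vec(B, gamma)]
    bottom = [ax + by - bi for ax, by, bi in zip(matvec(A, x), matvec(B, y), b)]
    return top + middle + bottom


def monotonicity_gap(w1, w2, A, B, b):
    """Compute (w1 - w2)^T (F(w1) - F(w2)); zero for a skew-symmetric affine map."""
    f1 = vi_operator(*w1, A, B, b)
    f2 = vi_operator(*w2, A, B, b)
    flat1 = w1[0] + w1[1] + w1[2]
    flat2 = w2[0] + w2[1] + w2[2]
    return sum((p - q) * (r - s) for p, q, r, s in zip(flat1, flat2, f1, f2))


# Illustrative data: n = 2, n1 = 2, n2 = 1.
A = [[1.0, 2.0], [0.0, 1.0]]
B = [[1.0], [3.0]]
b = [1.0, -1.0]
w = ([1.0, 0.0], [2.0], [0.5, -1.0])
w_bar = ([-1.0, 2.0], [0.0], [3.0, 1.0])
gap = monotonicity_gap(w, w_bar, A, B, b)
```

Because the linear part of F is skew-symmetric, the gap is not merely nonnegative but vanishes, so the inequality above holds with equality.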

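Then, we define two auxiliary sequences for the convenience of analysis. More specifically, for the sequence {wt} generated by the L-GADMM (4), let

$$\tilde{w}^t = \begin{pmatrix} \tilde{x}^t \\ \tilde{y}^t \\ \tilde{\gamma}^t \end{pmatrix} = \begin{pmatrix} x^{t+1} \\ y^{t+1} \\ \gamma^t - \rho\,(Ax^{t+1} + By^t - b) \end{pmatrix} \quad \text{and} \quad \tilde{u}^t = \begin{pmatrix} \tilde{x}^t \\ \tilde{y}^t \end{pmatrix}. \tag{7}$$

Note that, by the definition of γt+1 in (4), we get

$$\gamma^{t+1} = \gamma^t - \rho B\,(y^{t+1} - y^t) - \alpha\rho\,(Ax^{t+1} + By^t - b).$$

Plugging the identities ρ(Axt+1 + Byt − b) = γt − γ̃t and yt+1 = ỹt (see (7)) into the above equation, it holds that

$$\gamma^{t+1} = \gamma^t - \big[ -\rho B\,(y^t - \tilde{y}^t) + \alpha\,(\gamma^t - \tilde{\gamma}^t) \big]. \tag{8}$$

Then we have

$$w^{t+1} = w^t - M\,(w^t - \tilde{w}^t), \tag{9}$$

where M is defined as

$$M = \begin{pmatrix} I_{n_1} & 0 & 0 \\ 0 & I_{n_2} & 0 \\ 0 & -\rho B & \alpha I_n \end{pmatrix}. \tag{10}$$

For notational simplicity, we define two matrices that will be used later in the proofs:

$$H = \begin{pmatrix} G & 0 & 0 \\ 0 & \frac{\rho}{\alpha} B^T B & \frac{1-\alpha}{\alpha} B^T \\ 0 & \frac{1-\alpha}{\alpha} B & \frac{1}{\alpha\rho} I_n \end{pmatrix}, \qquad Q = \begin{pmatrix} G & 0 & 0 \\ 0 & \rho B^T B & (1-\alpha) B^T \\ 0 & -B & \frac{1}{\rho} I_n \end{pmatrix}. \tag{11}$$

It is easy to verify that

$$Q = HM. \tag{12}$$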

3 A worst-case 𝒪(1/k) convergence rate in the ergodic sense

In this section, we establish a worst-case 𝒪(1/k) convergence rate in the ergodic sense for the L-GADMM (4). This is a more general result than that in [8] which focuses on the original GADMM (3) without linearization.

We first prove some lemmas. The first lemma is to characterize the accuracy of the vector w̃t to a solution point of VI(Ω, F, f).

Lemma 1

Let the sequence {wt} be generated by the L-GADMM (4) and the associated sequence {w̃t} be defined in (7). Then we have

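$$f(u) - f(\tilde{u}^t) + (w - \tilde{w}^t)^T F(\tilde{w}^t) \ge (w - \tilde{w}^t)^T Q\,(w^t - \tilde{w}^t), \quad \forall\, w \in \Omega, \tag{13}$$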

where Q is defined in (11).

Proof

This lemma is proved by deriving the optimality conditions for the minimization subproblems in (4) and performing some algebraic manipulation. By deriving the optimality condition of the x-subproblem of (4), as shown in [31], we have

| (14) |

Using x̃t and γ̃t defined in (7), (14) can be rewritten as

| (15) |

Similarly, deriving the optimality condition for the y-subproblem in (4), we have

| (16) |

From (8), we get

| (17) |

Substituting (17) into (16) and using the identity yt+1 = ỹt, we obtain that

| (18) |

Meanwhile, the third row of (7) implies that

| (19) |

Combining (15), (18) and (19), we get

| (20) |

By the definition of F in (6) and Q in (11), (20) can be written as

The assertion (13) is proved.

Recall the VI characterization (5)–(6) of the model (1). Thus, according to (13), the accuracy of w̃t to a solution of VI(Ω, F, f) is measured by the quantity (w − w̃t)ᵀQ(wt − w̃t). In the next lemma, we further explore this term and express it in terms of some quadratic terms, with which it becomes more convenient to estimate the accuracy of w̃t and thus to estimate the convergence rate for the scheme (4). Note that the matrix B is of full column rank and the matrix G is positive definite. Thus, the matrix H defined in (11) is positive definite for α ∈ (0, 2) and ρ > 0; and recall that we use the notation

$$\|w\|_H = \sqrt{w^T H w}$$

for further analysis.

Lemma 2

Let the sequence {wt} be generated by the L-GADMM (4) and the associated sequence {w̃t} be defined in (7). Then for any w ∈ Ω, we have

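$$(w - \tilde{w}^t)^T Q\,(w^t - \tilde{w}^t) = \frac{1}{2}\Big( \|w - w^{t+1}\|_H^2 - \|w - w^t\|_H^2 \Big) + \frac{1}{2}\|x^t - \tilde{x}^t\|_G^2 + \frac{2-\alpha}{2\rho}\|\gamma^t - \tilde{\gamma}^t\|^2. \tag{21}$$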

Proof

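Using Q = HM and M(wt − w̃t) = (wt − wt+1) (see (9)), it follows that

$$(w - \tilde{w}^t)^T Q\,(w^t - \tilde{w}^t) = (w - \tilde{w}^t)^T H\,(w^t - w^{t+1}). \tag{22}$$

For vectors a, b, c, d in the same space and a symmetric matrix H of appropriate dimension, we have the identity

$$(a - b)^T H\,(c - d) = \frac{1}{2}\Big( \|a - d\|_H^2 - \|a - c\|_H^2 \Big) + \frac{1}{2}\Big( \|c - b\|_H^2 - \|d - b\|_H^2 \Big).$$

In this identity, we take

$$a = w, \quad b = \tilde{w}^t, \quad c = w^t, \quad d = w^{t+1},$$

and plug them into the right-hand side of (22). The resulting equation is

$$(w - \tilde{w}^t)^T H\,(w^t - w^{t+1}) = \frac{1}{2}\Big( \|w - w^{t+1}\|_H^2 - \|w - w^t\|_H^2 \Big) + \frac{1}{2}\Big( \|w^t - \tilde{w}^t\|_H^2 - \|w^{t+1} - \tilde{w}^t\|_H^2 \Big).$$

The remaining task is to prove

$$\|w^t - \tilde{w}^t\|_H^2 - \|w^{t+1} - \tilde{w}^t\|_H^2 = \|x^t - \tilde{x}^t\|_G^2 + \frac{2-\alpha}{\rho}\|\gamma^t - \tilde{\gamma}^t\|^2. \tag{23}$$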

By the definition of H given in (11), we have

| (24) |

On the other hand, we have by (17) and the definition that ũt = ut+1,

| (25) |

Subtracting (25) from (24), performing some algebra yields (23), and the proof is completed.

Lemmas 1 and 2 together yield a simple proof of the convergence of the L-GADMM (4) from the perspective of contraction-type methods.

Theorem 1

The sequence {wt} generated by the L-GADMM (4) satisfies that for all w* ∈ Ω*

| (26) |

where

| (27) |

Proof

Setting w = w* in the assertion of Lemma 2, we get

On the other hand, by using (13) and the monotonicity of F, we have

Consequently, we obtain

| (28) |

Since ỹt = yt+1, it follows from (9) that

Substituting it into (28), we obtain

| (29) |

Note that (16) is true for any integer t ≥ 0. Thus we have

| (30) |

Setting y = yt and y = yt+1 in (16) and (30), respectively, we get

and

Adding the above two inequalities yields

| (31) |

Substituting it in (29) and using x̃t = xt+1, we obtain

and the assertion of this theorem follows directly.

Remark 1

Since the matrix H defined in (11) is positive definite, the assertion (26) implies that the sequence {wt} generated by the L-GADMM (4) is contractive with respect to Ω* (according to the definition in [4]). Thus, the convergence of {wt} can be trivially derived by applying the standard technique of contraction methods.

Remark 2

For the special case where α = 1, i.e., the L-GADMM (4) reduces to the split inexact Uzawa method in [52,53], due to the structure of the matrix H (see (11)), the inequality (26) is simplified to

Moreover, when α = 1 and G = 0, i.e., the L-GADMM (4) reduces to the ADMM (2), the inequality (26) becomes

where v and H0 are defined in (27). This is exactly the contraction property of the ADMM (2) analyzed in the appendix of [6].

Now, we are ready to establish a worst-case 𝒪(1/k) convergence rate in the ergodic sense for the L-GADMM (4). Lemma 2 plays an important role in the proof.

Theorem 2

Let H be given by (11) and {wt} be the sequence generated by the L-GADMM (4). For any integer k > 0, let ŵk be defined by

$$\hat{w}_k = \frac{1}{k+1} \sum_{t=0}^{k} \tilde{w}^t, \tag{32}$$

where w̃t is defined in (7). Then, we have ŵk ∈ Ω and

Proof

First, because of (7) and wt ∈ Ω, it holds that w̃t ∈ Ω for all t ≥ 0. Thus, together with the convexity of 𝒳 and 𝒴, (32) implies that ŵk ∈ Ω. Second, due to the monotonicity of F, we have

| (33) |

thus Lemma 1 and Lemma 2 imply that for all w ∈ Ω,

| (34) |

Summing the inequality (34) over t = 0, 1, …, k, we obtain that for all w ∈ Ω

Using the notation ŵk, this can be written as: for all w ∈ Ω,

| (35) |

Since f(u) is convex and

we have that

Substituting it into (35), the assertion of this theorem follows directly.

For an arbitrary substantial compact set 𝒟 ⊂ Ω, we define

where w0 = (x0, y0, γ0) is the initial point. After k iterations of the L-GADMM (4), we can find a ŵk ∈ Ω such that

A worst-case 𝒪(1/k) convergence rate in the ergodic sense is thus proved for the L-GADMM (4).

4 A worst-case 𝒪(1/k) convergence rate in a nonergodic sense

In this section, we prove a worst-case 𝒪(1/k) convergence rate in a nonergodic sense for the L-GADMM (4). The result includes the assertions in [32] for the ADMM and its linearized version as special cases.

On the right-hand side of the equality (21) in Lemma 2, the first term is already in the form of the difference of the distances from w to two consecutive iterates, which is ideal for recursive algebraic reasoning in the proof of the convergence rate (see the theorems later). Now we have to deal with the last two terms on the right-hand side of (21) for the same purpose. In the following lemma, we find a bound in terms of $\|w^t - w^{t+1}\|_H^2$ for the sum of these two terms.

Lemma 3

Let the sequence {wt} be generated by the L-GADMM (4) and the associated sequence {w̃t} be defined in (7). Then we have

| (36) |

where

| (37) |

Proof

Similar to (24), it follows from x̃t = xt+1 and the definition of H that

| (38) |

From (31), (8) and the assumption α ∈ (0, 2), we have

| (39) |

Since α ∈ (0, 2), we get (2 − α)/α > 0, 1 ≥ cα > 0 and

The proof is completed.

With Lemmas 1, 2 and 3, we can bound the accuracy of w̃t to a solution point of VI(Ω, F, f) in terms of some quadratic terms. We show this in the next theorem.

Theorem 3

Let the sequence {wt} be generated by the L-GADMM (4) and the associated sequence {w̃t} be defined in (7). Then for any w ∈ Ω, we have

| (40) |

where H and cα > 0 are defined in (11) and (37), respectively.

Proof

Using the monotonicity of F(w) (see (33)) and replacing the right-hand side term in (13) with the equality (21), we obtain that

The assertion (40) follows by plugging (36) into the above inequality immediately.

Now we show an important inequality for the scheme (4) by using Lemmas 1, 2 and 3. This inequality immediately shows the contraction of the sequence generated by (4), and based on this inequality we can establish its worst-case 𝒪(1/k) convergence rate in a nonergodic sense.

Theorem 4

The sequence {wt} generated by the L-GADMM (4) satisfies

| (41) |

where H and cα > 0 are defined in (11) and (37), respectively.

Proof

Setting w = w* in (40), we get

On the other hand, since w̃t ∈ Ω, and w* ∈ Ω*, we have

From the above two inequalities, the assertion (41) is proved.

Remark 3

Theorem 4 also explains why the relaxation parameter α is restricted to the interval (0, 2) for the L-GADMM (4). In fact, if α ≤ 0 or α ≥ 2, then the constant cα defined in (37) is non-positive and the inequality (41) does not suffice to ensure the strict contraction of the sequence generated by (4); it is thus difficult to establish its convergence. We will empirically verify the failure of convergence for the case of (4) with α = 2 in Section 5.

To establish a worst-case 𝒪(1/k) convergence rate in a nonergodic sense for the scheme (4), we first have to mention that the term $\|w^t - w^{t+1}\|_H^2$ can be used to measure the accuracy of an iterate. This result has been proved in the literature, e.g., in [32] for the original ADMM.

Lemma 4

For a given wt, let wt+1 be generated by the L-GADMM (4). When $\|w^t - w^{t+1}\|_H^2 = 0$, w̃t defined in (7) is a solution to (5).

Proof

By Lemma 1 and (22), we have, for all w ∈ Ω,

| (42) |

As H is positive definite, the right-hand side of (42) vanishes if $\|w^t - w^{t+1}\|_H^2 = 0$, since we have H(wt+1 − wt) = 0 whenever $\|w^t - w^{t+1}\|_H^2 = 0$. The assertion is proved.

Now, we are ready to establish a worst-case 𝒪(1/k) convergence rate in a nonergodic sense for the scheme (4). First, we prove some lemmas.

Lemma 5

Let the sequence {wt} be generated by the L-GADMM (4) and the associated {w̃t} be defined in (7); the matrix Q be defined in (11). Then, we have

| (43) |

Proof

Setting w = w̃t+1 in (13), we have

| (44) |

Note that (13) is also true for t := t + 1 and thus for all w ∈ Ω,

Setting w = w̃t in the above inequality, we obtain

| (45) |

Adding (44) and (45) and using the monotonicity of F, we get (43) immediately. The proof is completed.

Lemma 6

Let the sequence {wt} be generated by the L-GADMM (4) and the associated {w̃t} be defined in (7); the matrices M, H and Q be defined in (10) and (11). Then, we have

| (46) |

Proof

Adding the equation

to both sides of (43), we get

| (47) |

Using (see (9))

to the term wt − wt+1 in the left-hand side of (47), we obtain

and the lemma is proved.

We then prove that the sequence {||wt − wt+1||H} is monotonically non-increasing.

Theorem 5

Let the sequence {wt} be generated by the L-GADMM (4) and the matrix H be defined in (11). Then, we have

| (48) |

Proof

Setting a = M(wt − w̃t) and b = M(wt+1 − w̃t+1) in the identity

$$\|a\|_H^2 - \|b\|_H^2 = 2\,a^T H\,(a - b) - \|a - b\|_H^2,$$

we obtain that

| (49) |

Inserting (46) into the first term of the right-hand side of (49), we obtain that

| (50) |

where the last inequality is by the fact that (QT + Q) − MTHM is positive definite. This is derived from the following calculation:

Since we have assumed that G is positive definite, it is clear that (QT + Q) − MTHM is positive definite. By (50), we have shown that

Recall the fact that wt − wt+1 = M(wt − w̃t). The assertion (48) follows immediately.

We now prove the worst-case 𝒪(1/k) convergence rate in a nonergodic sense for the L-GADMM (4).

Theorem 6

Let {wt} be the sequence generated by the L-GADMM (4) with α ∈ (0, 2). Then we have

| (51) |

where H and cα > 0 are defined in (11) and (37), respectively.

Proof

It follows from (41) that

| (52) |

As shown in Theorem 5, the sequence $\{\|w^t - w^{t+1}\|_H^2\}$ is non-increasing. Therefore, we have

| (53) |

The assertion (51) follows from (52) and (53) immediately.

Notice that Ω* is convex and closed. Let w0 be the initial iterate and d := inf{||w0 − w*||H | w* ∈ Ω*}. Then, for any given ε > 0, Theorem 6 shows that the L-GADMM (4) needs at most ⌈d²/(cαε) − 1⌉ iterations to ensure that $\|w^k - w^{k+1}\|_H^2 \le \varepsilon$. It follows from Lemma 4 that w̃k is a solution of VI(Ω, F, f) if $\|w^k - w^{k+1}\|_H^2 = 0$. A worst-case 𝒪(1/k) convergence rate in a nonergodic sense for the L-GADMM (4) is thus established in Theorem 6.
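The iteration bound above is easy to evaluate numerically. The following sketch assumes that the constant in (37) takes the form cα = min{(2 − α)/α, 1}, which is consistent with the inequalities (2 − α)/α > 0 and 1 ≥ cα > 0 stated in the proof of Lemma 3 but is not spelled out in the surviving text; the function names are illustrative.

```python
import math


def c_alpha(alpha):
    """Assumed form of the constant in (37): min((2 - alpha) / alpha, 1)."""
    if not 0.0 < alpha < 2.0:
        raise ValueError("the relaxation factor alpha must lie in (0, 2)")
    return min((2.0 - alpha) / alpha, 1.0)


def max_iterations(d, alpha, eps):
    """Worst-case bound ceil(d^2 / (c_alpha * eps) - 1) on the iteration count.

    d     : H-norm distance from the initial iterate to the solution set
    alpha : relaxation factor in (0, 2)
    eps   : target accuracy for ||w^k - w^{k+1}||_H^2
    """
    if eps <= 0.0:
        raise ValueError("eps must be positive")
    return max(0, math.ceil(d * d / (c_alpha(alpha) * eps) - 1.0))
```

Under this assumed form, cα equals 1 for every α ∈ (0, 1] and decreases to 0 as α approaches 2, so the worst-case bound grows for α close to 2 even though such values are often faster in practice.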

5 Numerical Experiments

In the literature, the efficiency of both the original ADMM (2) and its linearized version has been very well illustrated. The emphasis of this section is to further verify the acceleration performance of the L-GADMM (4) over the linearized version of the original ADMM. That is, we want to show that for the scheme (4), the general case with α ∈ (1, 2) can lead to better numerical results than the special case with α = 1. The examples we test include some rather new and core applications in statistical learning. All codes were written in Matlab 2012a, and all experiments were run on a MacBook Pro with a 2.9 GHz Intel i7 processor and 16 GB of memory.

5.1 Sparse Linear Discriminant Analysis

In this and the upcoming subsection, we apply the scheme (4) to solve two rather new and challenging statistical learning models, i.e. the linear programming discriminant rule in [7] and the constrained LASSO model in [35]. The efficiency of the scheme (4) will be verified. In particular, the acceleration performance of (4) with α ∈ (1, 2) will be demonstrated.

We consider the problem of binary classification. Let {(xi, yi), i = 1, …, n} be n samples drawn from a joint distribution of (X, Y) ∈ ℝ^d × {0, 1}. Given a new observation x ∈ ℝ^d, the goal is to determine the associated value of Y. A common way to solve this problem is linear discriminant analysis (LDA); see, e.g., [1]. Assume Gaussian conditional distributions with a common covariance matrix, i.e., (X|Y = 0) ~ N(μ0, Σ) and (X|Y = 1) ~ N(μ1, Σ), and let the prior probabilities be π0 = ℙ(Y = 0) and π1 = ℙ(Y = 1). Denote by Ω = Σ^{−1} the precision matrix, and let μa = (μ0 + μ1)/2 and μd = μ1 − μ0. Under the normality assumption and when π0 = π1 = 0.5, by Bayes’ rule, we have the linear classifier

$$\ell(x; \mu_0, \mu_1, \Sigma) = \begin{cases} 1, & \text{if } (x - \mu_a)^T \Omega\, \mu_d > 0, \\ 0, & \text{otherwise.} \end{cases} \tag{54}$$

As the covariance matrix Σ and the means μ0, μ1 are unknown, given n0 samples of (X|Y = 0) and n1 samples of (X|Y = 1), a natural way to build a linear classifier is to plug the sample means μ̂0, μ̂1 and the inverse sample covariance matrix Σ̂^{−1} into (54) to obtain ℓ(x; μ̂0, μ̂1, Σ̂). However, in the high-dimensional case where d > n, this plug-in rule does not work because Σ̂ is not of full rank.
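The Bayes rule above can be sketched in a few lines. This is an illustrative implementation that assumes the classifier assigns Y = 1 exactly when (x − μa)ᵀΩμd > 0, i.e. when the Gaussian log-likelihood ratio favours class 1 under equal priors; the function name and the toy inputs are hypothetical.

```python
def lda_classify(x, mu0, mu1, Omega):
    """Return 1 if (x - mu_a)^T Omega mu_d > 0 and 0 otherwise.

    mu_a is the midpoint of the two class means, mu_d their difference,
    and Omega the precision matrix stored as a list of rows.
    """
    mu_a = [(a + b) / 2.0 for a, b in zip(mu0, mu1)]
    mu_d = [b - a for a, b in zip(mu0, mu1)]
    # Discriminant direction beta = Omega mu_d.
    beta = [sum(o * m for o, m in zip(row, mu_d)) for row in Omega]
    score = sum((xi - ai) * bi for xi, ai, bi in zip(x, mu_a, beta))
    return 1 if score > 0 else 0


# Toy example with identity precision matrix.
identity = [[1.0, 0.0], [0.0, 1.0]]
near_class_1 = lda_classify([1.5, 0.0], [0.0, 0.0], [2.0, 0.0], identity)
near_class_0 = lda_classify([0.2, 5.0], [0.0, 0.0], [2.0, 0.0], identity)
```

Only the product β = Ωμd enters the rule, which is why a sparsity assumption placed directly on β is sufficient for classification.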

To resolve this problem, we consider the linear programming discriminant (LPD) rule proposed in [7], which imposes sparsity directly on the discriminant direction β = Ωμd instead of on μd or Ω. It is formulated as follows. We first estimate the sample means and sample covariance matrices μ̂0, Σ̂0 of (X|Y = 0) and μ̂1, Σ̂1 of (X|Y = 1), respectively. Let $\hat{\Sigma} = (n_0 \hat{\Sigma}_0 + n_1 \hat{\Sigma}_1)/n$, where n0, n1 are the sample sizes of (X|Y = 0) and (X|Y = 1), respectively, and n = n0 + n1. The LPD model proposed in [7] is

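$$\min_{\beta \in \mathbb{R}^d}\ \|\beta\|_1 \quad \text{s.t.} \quad \|\hat{\Sigma}\beta - \hat{\mu}_d\|_\infty \le \lambda, \tag{55}$$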

where λ is a tuning parameter; μ̂d = μ̂1 − μ̂0, and || · ||1 and || · ||∞ are the ℓ1 and ℓ∞ norms in Euclidean space, respectively.

Clearly, the model (55) is essentially a linear programming problem, thus interior point methods are applicable. See e.g. [7] for a primal-dual interior point method. However, it is well known that interior point methods are not efficient for large-scale problems because the involved systems of equations which are solved by Newton type methods are too computationally demanding for large-scale cases.

Here, we apply the L-GADMM (4) to solve (55). To use (4), we first reformulate (55) as

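$$\min_{\beta,\,y}\ \|\beta\|_1 \quad \text{s.t.} \quad \hat{\Sigma}\beta - \hat{\mu}_d = y, \quad y \in \mathcal{Y} := \{ y \in \mathbb{R}^d : \|y\|_\infty \le \lambda \}, \tag{56}$$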

which is a special case of the model (1), and thus the scheme (4) is applicable.

We then elaborate on the resulting subproblems when (4) is applied to (56). First, let us see the application of the original GADMM scheme (3) without linearization to (56):

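$$\beta^{t+1} = \arg\min_{\beta \in \mathbb{R}^d} \left\{ \|\beta\|_1 + \frac{\rho}{2}\left\| \hat{\Sigma}\beta - \hat{\mu}_d - y^t - \frac{\gamma^t}{\rho} \right\|^2 \right\}, \tag{57}$$

$$y^{t+1} = \arg\min_{y \in \mathcal{Y}} \ \frac{\rho}{2}\left\| y - \Big( \alpha\hat{\Sigma}\beta^{t+1} + (1-\alpha)(y^t + \hat{\mu}_d) - \hat{\mu}_d - \frac{\gamma^t}{\rho} \Big) \right\|^2, \tag{58}$$

$$\gamma^{t+1} = \gamma^t - \rho\,\Big( \alpha\hat{\Sigma}\beta^{t+1} + (1-\alpha)(y^t + \hat{\mu}_d) - \hat{\mu}_d - y^{t+1} \Big). \tag{59}$$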

For the β-subproblem (57), since Σ̂ is not a full-rank matrix in the model (55) (in the high-dimensional setting, the rank of Σ̂ is much smaller than d), there is no closed-form solution. As described in (4), we choose G = τI_d − ρΣ̂ᵀΣ̂ and consider the following linearized version of the β-subproblem (57):

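$$\beta^{t+1} = \arg\min_{\beta \in \mathbb{R}^d} \left\{ \|\beta\|_1 + \rho\,(\beta - \beta^t)^T v^t + \frac{\tau}{2}\|\beta - \beta^t\|^2 \right\}, \tag{60}$$

where $v^t := \hat{\Sigma}^T\big( \hat{\Sigma}\beta^t - \hat{\mu}_d - y^t - \gamma^t/\rho \big)$ is the gradient of the quadratic term $\frac{1}{2}\|\hat{\Sigma}\beta - \hat{\mu}_d - y^t - \gamma^t/\rho\|^2$ at β = βt. It is seen that (60) is equivalent to

$$\beta^{t+1} = \arg\min_{\beta \in \mathbb{R}^d} \left\{ \|\beta\|_1 + \frac{\tau}{2}\left\| \beta - \Big( \beta^t - \frac{\rho}{\tau} v^t \Big) \right\|^2 \right\}. \tag{61}$$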

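Let shrinkage(u, η) := sign(u) · max(0, |u| − η) be the soft shrinkage operator, applied componentwise, where sign(·) is the sign function. The closed-form solution of (61) is given by

$$\beta^{t+1} = \mathrm{shrinkage}\left( \beta^t - \frac{\rho}{\tau}\,\hat{\Sigma}^T\Big( \hat{\Sigma}\beta^t - \hat{\mu}_d - y^t - \frac{\gamma^t}{\rho} \Big),\ \frac{1}{\tau} \right). \tag{62}$$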
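The soft shrinkage operator defined above can be written directly from its formula. The following is a minimal sketch for vectors stored as Python lists; the function name mirrors the text, and the sample input is only for illustration.

```python
def shrinkage(u, eta):
    """Componentwise soft shrinkage: sign(u_i) * max(0, |u_i| - eta)."""
    result = []
    for ui in u:
        magnitude = max(0.0, abs(ui) - eta)
        if ui > 0:
            result.append(magnitude)
        elif ui < 0:
            result.append(-magnitude)
        else:
            result.append(0.0)
    return result


# Entries with |u_i| <= eta are set to zero; the others move toward zero by eta.
shrunk = shrinkage([3.0, -0.5, -3.0, 0.0], 1.0)
```

Each evaluation costs O(d) operations, which is why replacing the exact β-subproblem by its linearized form removes the need for any inner iteration.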

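For the y-subproblem (58), a simple calculation shows that its solution is the componentwise projection

$$y^{t+1} = \min\Big( \max\Big( \alpha\hat{\Sigma}\beta^{t+1} - \alpha\hat{\mu}_d + (1-\alpha)\,y^t - \frac{\gamma^t}{\rho},\ -\lambda \Big),\ \lambda \Big). \tag{63}$$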

Overall, the application of the L-GADMM (4) to the model (55) is summarized in Algorithm 1.

Algorithm 1.
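The body of Algorithm 1 is not reproduced here, so the following is only a sketch of the iteration it summarizes, written in pure Python for a small dense problem. It assumes the reformulation Σ̂β − μ̂d = y with ||y||∞ ≤ λ, the multiplier update γ ← γ − ρ(·) of scheme (4), and a fixed iteration count instead of the residual-based stopping rule. The default choice of τ uses the bound ||Σ̂ᵀΣ̂||2 ≤ ||Σ̂||F², which is an assumption of this sketch and is more conservative than the tuned values reported in the experiments; all names are illustrative.

```python
def matvec(M, v):
    """Return M v for a matrix stored as a list of rows."""
    return [sum(a * b for a, b in zip(row, v)) for row in M]


def soft_threshold(u, eta):
    """Componentwise sign(u_i) * max(0, |u_i| - eta)."""
    return [max(ui - eta, 0.0) - max(-ui - eta, 0.0) for ui in u]


def l_gadmm_lpd(Sigma, mu_d, lam, rho=1.0, alpha=1.5, tau=None, iters=500):
    """Sketch of L-GADMM for min ||beta||_1 s.t. ||Sigma beta - mu_d||_inf <= lam."""
    d = len(mu_d)
    Sigma_T = [list(col) for col in zip(*Sigma)]
    if tau is None:
        # Assumption: the squared Frobenius norm bounds ||Sigma^T Sigma||_2,
        # so this tau satisfies tau > rho * ||Sigma^T Sigma||_2.
        tau = 1.01 * rho * sum(a * a for row in Sigma for a in row)
    beta, y, gamma = [0.0] * d, [0.0] * d, [0.0] * d
    for _ in range(iters):
        # Linearized beta-step: gradient of the quadratic term at beta^t,
        # followed by soft shrinkage with threshold 1 / tau.
        s_beta = matvec(Sigma, beta)
        resid = [s_beta[i] - mu_d[i] - y[i] - gamma[i] / rho for i in range(d)]
        v = matvec(Sigma_T, resid)
        beta = soft_threshold([beta[i] - rho / tau * v[i] for i in range(d)], 1.0 / tau)
        # Relaxed point alpha * (Sigma beta^{t+1} - mu_d) + (1 - alpha) * y^t.
        s_beta = matvec(Sigma, beta)
        r = [alpha * (s_beta[i] - mu_d[i]) + (1.0 - alpha) * y[i] for i in range(d)]
        # y-step: projection onto the box [-lam, lam]^d.
        y_new = [min(max(r[i] - gamma[i] / rho, -lam), lam) for i in range(d)]
        # Multiplier update with the relaxed residual.
        gamma = [gamma[i] - rho * (r[i] - y_new[i]) for i in range(d)]
        y = y_new
    return beta, y, gamma


# Toy check: with Sigma = I the problem decouples, and the solution is
# beta = (0.75, 0) for mu_d = (1, 0) and lam = 0.25.
beta_hat, y_hat, gamma_hat = l_gadmm_lpd([[1.0, 0.0], [0.0, 1.0]], [1.0, 0.0], 0.25)
```

Setting alpha=1.0 in this sketch gives the linearized version of the original ADMM, so the same function can be used to compare the two regimes on a given instance.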

To implement Algorithm 1, we use the stopping criterion described in [6]: let the primal residual at the t-th iteration be rt = ||Σ̂βt − μ̂d − yt|| and the dual residual be st = ||ρΣ̂(yt − yt−1)||; let the tolerances of the primal and dual residuals at the t-th iteration be εpri and εdua, respectively, which are defined as in [6] in terms of a parameter ε that is chosen differently for different applications; then the iteration is stopped when rt < εpri and st < εdua.

5.1.1 Simulated Data

We first test some synthetic datasets. Following the settings in [7], we consider three schemes, and for each scheme we take n0 = n1 = 150, d = 400 and set μ0 = 0, μ1 = (1, …, 1, 0, …, 0)ᵀ, λ = 0.15 for Scheme 1, and λ = 0.1 for Schemes 2 and 3, where the number of 1’s in μ1 is s = 10. The details of the schemes to be tested are listed below:

[Scheme 1]: Ω = Σ^{−1}, where Σjj = 1 for 1 ≤ j ≤ d and Σjk = 0.5 for j ≠ k.

[Scheme 2]: Ω = Σ^{−1}, where Σjk = 0.6^{|j−k|} for 1 ≤ j, k ≤ d.

[Scheme 3]: Ω = (B + δI)/(1 + δ), where B = (bjk)d×d with independent bjk = bkj = 0.5 × Ber(0.2) for 1 ≤ j, k ≤ s, j ≠ k; bjk = bkj = 0.5 for s + 1 ≤ j < k ≤ d; bjj = 1 for 1 ≤ j ≤ d, where Ber(0.2) is a Bernoulli random variable taking the value 1 with probability 0.2 and 0 with probability 0.8, and δ = max(−Λmin(B), 0) + 0.05, where Λmin(W) is the smallest eigenvalue of the matrix W, to ensure the positive definiteness of Ω.
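The deterministic parts of these settings are straightforward to generate. The sketch below builds the population covariance matrices of Schemes 1 and 2 and the sparse mean vector μ1 as plain Python lists; the helper names are illustrative, and Scheme 3 is omitted because it needs random draws and an eigenvalue computation.

```python
def scheme1_covariance(d):
    """Scheme 1: unit diagonal, all off-diagonal entries equal to 0.5."""
    return [[1.0 if j == k else 0.5 for k in range(d)] for j in range(d)]


def scheme2_covariance(d, base=0.6):
    """Scheme 2: Sigma_jk = base ** |j - k| (autoregressive-type decay)."""
    return [[base ** abs(j - k) for k in range(d)] for j in range(d)]


def sparse_mean(d, s):
    """Mean vector mu_1 with s leading ones followed by d - s zeros."""
    return [1.0] * s + [0.0] * (d - s)


# Small instances for inspection; the experiments use d = 400 and s = 10.
sigma1 = scheme1_covariance(3)
sigma2 = scheme2_covariance(4)
mu1 = sparse_mean(5, 2)
```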

For Algorithm 1, we set the parameters ρ = 0.05 and τ = 2.1||Σ̂ᵀΣ̂||2. These values are tuned via experiments. The starting points are β0 = 0, y0 = 0 and γ0 = 1. We set ε = 5 × 10^{−4} for the stopping criterion. Note that, as described in [7], we add δI_d to the sample covariance matrix to avoid ill-conditioning, where δ = 10^{−12}.

Since synthetic datasets are considered, we repeat each scheme ten times and report the averaged numerical performance. In particular, we plot the evolution of the number of iterations and the computing time in seconds with respect to different values of α in the interval [1.0, 1.9] with an equal spacing of 0.1, and we further choose a finer grid in the interval [1.91, 1.98] with an equal spacing of 0.01, in Figure 1. To see the performance of Algorithm 1 with α ∈ [1, 2) clearly, for the last simulation case of Scheme 2, we plot the evolution of the objective function value, primal residual and dual residual with respect to iterations for different values of α in Figure 2. The acceleration performance of the relaxation factor α ∈ [1, 2), especially when α is close to 2, over the case where α = 1 is clearly demonstrated. Also, we see that when α is close to 2 (e.g., α = 1.9), the objective value and the primal residual decrease faster than in the cases where α is close to 1.

Fig. 1.

Algorithm 1: Evolution of the number of iterations and computing time in seconds w.r.t. different values of α for the synthetic datasets.

Fig. 2.

Algorithm 1: Evolution of objective value, primal residual and dual residual w.r.t. some values of α for Scheme 2

As we have mentioned, the β-subproblem (57) has no closed-form solution; Algorithm 1 handles it by linearizing its quadratic term, so that the β-subproblem is solved in a single step because the linearized β-subproblem has a closed-form solution. This is actually very important for ensuring the efficiency of ADMM-like methods for some particular cases of (1), as emphasized in [48]. One may be curious about a comparison with the case where the β-subproblem (57) is solved iteratively by a generic strategy rather than by the special linearization strategy. Note that the β-subproblem (57) is a standard ℓ1−ℓ2 model, and we can simply apply the ADMM scheme (2) to solve it iteratively by introducing an auxiliary variable v = β and thus reformulating it as a special case of (1). This case is denoted by “ADMM2” when we report numerical results because the ADMM is used both externally and internally. As analyzed in [14,41], to ensure the convergence of ADMM2, the accuracy of solving the β-subproblem (57) should keep increasing. Thus, in the implementation of ADMM for the subproblem (57), we gradually decrease the tolerance for the inner problem from ε = 5 × 10^{−2} to ε = 5 × 10^{−4}. Specifically, we take ε = 5 × 10^{−2} when min(rt/εpri, st/εdua) > 50; ε = 5 × 10^{−3} when 10 < max(rt/εpri, st/εdua) < 50; and ε = 5 × 10^{−4} when max(rt/εpri, st/εdua) < 10. We further set the maximal iteration numbers to 1,000 and 40,000 for the inner and outer loops executed by ADMM2, respectively.

Table 1.

Numerical comparison between the averaged performance of Algorithm 1 and ADMM2

| | | Alg. 1 (α = 1) | Alg. 1 (α = 1.9) | ADMM2 |
|---|---|---|---|---|
| Scheme 1 | CPU time (s) | 100.54 | 72.25 | 141.86 |
| | Objective value | 2.1826 | 2.1998 | 2.2018 |
| | Violation | 0.0081 | 0.0073 | 0.0182 |
| Scheme 2 | CPU time (s) | 54.70 | 28.33 | 481.44 |
| | Objective value | 14.3276 | 14.3345 | 14.2787 |
| | Violation | 0.0099 | 0.0098 | 0.0183 |
| Scheme 3 | CPU time (s) | 49.67 | 27.94 | 223.15 |
| | Objective value | 2.4569 | 2.4537 | 2.4912 |
| | Violation | 0.0078 | 0.0080 | 0.0176 |

We compare the averaged performance of Algorithm 1 and ADMM2. In Table 1, we list the averaged computing time in seconds and the objective function values for Algorithm 1 with α = 1 and α = 1.9, and for ADMM2. Recall that Algorithm 1 with α = 1 is the linearized version of the original ADMM (2), and that ADMM2 is the case where the β-subproblem (57) is solved iteratively by the original ADMM scheme. The data in this table show that, at the same level of objective function values, Algorithm 1 with α = 1.9 is faster than Algorithm 1 with α = 1 (thus, the acceleration performance of the GADMM with α ∈ (1, 2) is illustrated), and Algorithm 1 with either α = 1 or α = 1.9 is much faster than ADMM2 (thus, the necessity of linearization for the β-subproblem (57) is clearly demonstrated). We also list the averaged primal feasibility violations of the solutions generated by the algorithms in the table. The violation is defined as ||Σ̂β̂ − μ̂d − w||, where β̂ is the output solution and w = min(max(Σ̂β̂ − μ̂d, −λ), λ) ∈ ℝ^d, with the min and max taken componentwise. It is clearly seen in the table that Algorithm 1 achieves better feasibility than ADMM2.
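The violation measure is the Euclidean distance from Σ̂β̂ − μ̂d to the box [−λ, λ]^d. A minimal sketch of that computation, with illustrative names and toy inputs, is:

```python
import math


def feasibility_violation(Sigma, beta, mu_d, lam):
    """Return ||Sigma beta - mu_d - w|| with w the clipping of Sigma beta - mu_d to [-lam, lam]."""
    residual = [sum(a * b for a, b in zip(row, beta)) - m for row, m in zip(Sigma, mu_d)]
    clipped = [min(max(r, -lam), lam) for r in residual]
    return math.sqrt(sum((r - c) ** 2 for r, c in zip(residual, clipped)))


identity = [[1.0, 0.0], [0.0, 1.0]]
# Feasible point: Sigma beta - mu_d = (-0.25, 0) lies inside the box for lam = 0.25.
feasible = feasibility_violation(identity, [0.75, 0.0], [1.0, 0.0], 0.25)
# Infeasible point: the first component is -0.5, which is 0.25 outside the box.
infeasible = feasibility_violation(identity, [0.5, 0.0], [1.0, 0.0], 0.25)
```

A value of zero means the constraint of (55) holds exactly, so smaller entries in the "Violation" rows indicate solutions closer to feasibility.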

5.1.2 Real Dataset

In this section, we compare Algorithm 1 with ADMM2 on the real microarray dataset in [38]. The dataset contains 13,182 microarray samples from Affymetrix’s HGU133a platform. The raw data contains 2,711 tissue types (e.g., lung cancers, brain tumors, Ewing tumor, etc.). In particular, we select 60 healthy samples and 60 samples from those with breast cancer. We use the first 1,000 genes to conduct the experiments.

It is believed that different tissues are associated with different sets of genes, and microarray data have been heavily used to classify tissues; see, e.g., [28, 47]. Our aim is to distinguish breast cancer tissues from healthy tissues. We randomly select 60 samples from each group as our training set and use another 60 samples from each group as the testing set. The tuning parameter λ is chosen by five-fold cross validation as described in [7].

We set ρ = 1 and τ = 2||Σ̂T Σ̂||2 for Algorithm 1, and take the tolerance ε = 10−3 in the stopping criterion of Algorithm 1. The starting points are β0 = 0, z0 = 0 and γ0 = 0. The comparison among Algorithm 1 with α = 1, Algorithm 1 with α = 1.9, and ADMM2 is shown in Table 2, where the pair “a/b” means there are “a” errors out of “b” samples when the iteration is terminated. It is seen that Algorithm 1 outperforms ADMM2 in both accuracy and efficiency; also, the case where α = 1.9 accelerates Algorithm 1 with α = 1.

Table 2.

Numerical comparison between Algorithm 1 and ADMM2 for microarray dataset

| | Alg. 1 (α = 1) | Alg. 1 (α = 1.9) | ADMM2 |
|---|---|---|---|
| Training Error | 1/60 | 1/60 | 1/60 |
| Testing Error | 3/60 | 3/60 | 3/60 |
| CPU time (s) | 201.17 | 167.61 | 501.95 |
| Objective Value | 24.67 | 24.85 | 24.81 |
| Violation | 0.0121 | 0.0121 | 0.0241 |

5.2 Constrained Lasso

In this subsection, we apply the L-GADMM (4) to the constrained LASSO model proposed recently in [35].

Consider the standard linear regression model

y = Xβ + ε,

where X ∈ ℝn×d is a design matrix; β ∈ ℝd is a vector of regression coefficients; y ∈ ℝn is a vector of observations, and ε ∈ ℝn is a vector of random noises. In the high-dimensional setting where the number of observations is much smaller than the number of regression coefficients, n ≪ d, the traditional least-squares method does not perform well. To overcome this difficulty, as with the sparse LDA model (55), certain sparsity conditions are assumed for the linear regression model. With the sparsity assumption on the vector of regression coefficients β, we have the following model

where pλ(·) is a penalization function. Different penalization functions have been proposed in the literature, such as the LASSO in [44] where pλ(β) = λ||β||1, the SCAD [16], the adaptive LASSO [54], and the MCP [51]. Inspired by significant applications such as portfolio selection [18] and monotone regression [35], the constrained LASSO (CLASSO) model was proposed recently in [35]:

| (64) |

where A ∈ ℝm×d and b ∈ ℝm, with m < d in some applications such as portfolio selection [18]. It is worth noting that many statistical problems, such as the fused LASSO [45] and the generalized LASSO [46], can be formulated in the form of (64). Thus, it is important to find efficient algorithms for solving (64). Although (64) is a quadratic programming problem that can, in theory, be solved by interior point methods, for high-dimensional cases interior point methods are again numerically inefficient because of their extremely expensive computation. Note that existing algorithms for the LASSO cannot be trivially extended to solve (64).

In fact, introducing a slack variable to the inequality constraints in (64), we reformulate (64) as

| (65) |

which is a special case of the model (1) and thus the L-GADMM (4) is applicable. More specifically, the iterative scheme of (4) with G = τId − ρ(AT A) for solving (65) reads as

| (66) |

| (67) |

| (68) |

where .

Now, we delineate the subproblems (66)–(68). First, the β-subproblem (66) is equivalent to

| (69) |

where wt = XT y + βt − ρut, and Id ∈ ℝd×d is the identity matrix. Deriving the optimality condition of (69), the solution of (69), βt+1 has to satisfy the following equation:

where C = (XT X + τId). As XT X is positive semidefinite and Id is of full rank with all positive eigenvalues, we have that C is invertible. We have a closed-form solution for (66) that

| (70) |
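Since C = XT X + τId is symmetric positive definite, a linear system with coefficient matrix C can be solved through a Cholesky factorization. The following plain-Python sketch illustrates this on a tiny example; the helper names `gram_plus_tau` and `cholesky_solve`, the small matrix X, and the right-hand side w are assumptions chosen for illustration and are not taken from the Matlab packages.

```python
import math

def gram_plus_tau(X, tau):
    """Form C = X^T X + tau * I for an n-by-d matrix X (list of rows)."""
    n, d = len(X), len(X[0])
    return [[sum(X[r][i] * X[r][j] for r in range(n)) + (tau if i == j else 0.0)
             for j in range(d)] for i in range(d)]

def cholesky_solve(C, w):
    """Solve C x = w for a symmetric positive definite matrix C."""
    d = len(C)
    L = [[0.0] * d for _ in range(d)]
    for i in range(d):
        for j in range(i + 1):
            s = C[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    # Forward substitution: L u = w
    u = [0.0] * d
    for i in range(d):
        u[i] = (w[i] - sum(L[i][k] * u[k] for k in range(i))) / L[i][i]
    # Back substitution: L^T x = u
    x = [0.0] * d
    for i in reversed(range(d)):
        x[i] = (u[i] - sum(L[k][i] * x[k] for k in range(i + 1, d))) / L[i][i]
    return x

# Here n = 2 < d = 3, so X^T X alone is singular, but C is still invertible.
X = [[1.0, 2.0, 0.0], [0.0, 1.0, 1.0]]
C = gram_plus_tau(X, tau=0.5)
w = [1.0, -2.0, 3.0]  # hypothetical right-hand side
beta = cholesky_solve(C, w)
```

Because C does not change across iterations, an implementation could compute the factor once and reuse it for every β-update; whether the original code does so is not stated here.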

Then, for the z-subproblem (67), its closed-form solution is given by

| (71) |

Overall, the application of the L-GADMM (4) to the CLASSO model (64) is summarized in Algorithm 2.

We consider two schemes to test the efficiency of Algorithm 2.

[Scheme 1]: We first generate an n × d matrix X with independent standard Gaussian entries where n = 100 and d = 400, and we standardize the columns of X to have unit norms. After that, we set the coefficient vector β = (1, …, 1, 0, …, 0)T with the first s = 5 entries to be 1 and the rest to be 0. Next, we generate an m × d matrix A with independent standard Gaussian entries where m = 100, and we generate b = Aβ + ε̂ where the entries of ε̂ are independent random variables uniformly distributed in [0, σ], and the vector of observations y is generated by y = Xβ + ε with ε ~ N(0, σ2Id) where σ = 0.1. We set λ = 1 in the model (64).

[Scheme 2]: We follow the same procedures of Scheme 1, but we change σ to be 0.3.
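The data generation described in the two schemes can be sketched as follows in plain Python. The function name `make_scheme`, its argument names, and the use of a fixed seed are assumptions made for this illustration; the original experiments were run in Matlab and may differ in details such as the random number generator.

```python
import math
import random

def make_scheme(n=100, d=400, m=100, s=5, sigma=0.1, seed=0):
    """Generate (X, y, A, b, beta) following the description of Scheme 1."""
    rng = random.Random(seed)

    def matvec(M, v):
        return [sum(a * c for a, c in zip(row, v)) for row in M]

    # Design matrix with independent standard Gaussian entries
    X = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(n)]
    # Standardize every column of X to unit Euclidean norm
    for j in range(d):
        norm = math.sqrt(sum(X[i][j] ** 2 for i in range(n)))
        for i in range(n):
            X[i][j] /= norm
    # First s coefficients equal to 1, the rest equal to 0
    beta = [1.0] * s + [0.0] * (d - s)
    # Constraint matrix with independent standard Gaussian entries
    A = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(m)]
    # b = A beta + eps_hat with eps_hat uniform on [0, sigma]
    b = [ab + rng.uniform(0.0, sigma) for ab in matvec(A, beta)]
    # y = X beta + eps with eps ~ N(0, sigma^2 I)
    y = [xb + rng.gauss(0.0, sigma) for xb in matvec(X, beta)]
    return X, y, A, b, beta

X, y, A, b, beta = make_scheme()            # Scheme 1
```

Calling `make_scheme(sigma=0.3)` would correspond to Scheme 2. Since the entries of ε̂ are nonnegative, the generating vector β satisfies Aβ ≤ b componentwise by construction.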

Algorithm 2.

To implement Algorithm 2, we use the stopping criterion proposed by [48]: Let the primal and dual residual at the t-th iteration be pt = ||Aβt − zt || and dt = ||γt − γt+1||, respectively; the tolerance of primal and dual residuals are set by the criterion described in [6] where and ; then the iteration is stopped when pt < εpri and dt < εdua. We choose ε = 10−4 for our experiments. We set ρ = 10−3 and τ = 20||AT A||2 and the starting iterate as β0 = 0, z0 = 0 and γ0 = 0.

Since a synthetic dataset is considered, we repeat the simulation ten times and report the averaged numerical performance of Algorithm 2. In Figure 3, we plot the evolution of the number of iterations and the computing time in seconds for Algorithm 2 with different values of α ∈ [1, 2], chosen in the same way as in the previous subsection. The acceleration performance when α ∈ (1, 2) over the case where α = 1 is clearly shown by the curves in this figure. For example, the case where α = 1.9 is about 30% faster than the case where α = 1. We also see that when α = 2, Algorithm 2 does not converge after 100,000 iterations. This coincides with the failure of strict contraction of the sequence generated by Algorithm 2, as we have mentioned in Remark 3.

Fig. 3.

Algorithm 2: Evolution of number of iterations and computing time in seconds w.r.t. different values of α for synthetic dataset

As in Section 5.1.1, we also compare the averaged performance of Algorithm 2 with the application of the original ADMM (2), where the resulting β-subproblem is solved iteratively by the ADMM. The penalty parameter is set to 1 and the ADMM is implemented to solve the β-subproblem, whose tolerance ε is gradually decreased from 10−2 to 10−4 obeying the same rule as that mentioned in Section 5.1.1. We further set the maximal iteration numbers to be 1,000 and 10,000 for the inner and outer loops executed by ADMM2, respectively. Again, this case is denoted by “ADMM2”. In Table 3, we list the computing time in seconds to achieve the same level of objective function values for three cases: Algorithm 2 with α = 1, Algorithm 2 with α = 1.9, and ADMM2. We see that Algorithm 2 with either α = 1 or α = 1.9 is much faster than ADMM2; thus, the necessity of linearization when ADMM-like methods are applied to solve the constrained LASSO model (64) is illustrated. Also, the acceleration performance of the GADMM with α ∈ (1, 2) is demonstrated, as Algorithm 2 with α = 1.9 is faster than Algorithm 2 with α = 1. We also report the averaged primal feasibility violations of the solutions generated by each algorithm. The violation is defined as ||Aβ̂ − w||, where β̂ is the output solution and w = min(b, Aβ̂) ∈ ℝm. It is seen in the table that Algorithm 2 achieves better feasibility than ADMM2.

Table 3.

Numerical comparison of the averaged performance between Algorithm 2 and ADMM2

| | | Alg. 2 (α = 1) | Alg. 2 (α = 1.9) | ADMM2 |
|---|---|---|---|---|
| Scheme 1 | CPU time (s) | 88.03 | 71.01 | 166.17 |
| | Objective Value | 5.4679 | 5.4678 | 5.4823 |
| | Violations | 0.0033 | 0.0031 | 0.2850 |
| Scheme 2 | CPU time (s) | 97.19 | 82.49 | 165.90 |
| | Objective Value | 5.3861 | 5.3864 | 5.6364 |
| | Violations | 0.0032 | 0.0032 | 0.1158 |

5.3 Dantzig Selector

Last, we test the Dantzig Selector model proposed in [9]. In [48], this model has been suggested to be solved by the linearized version of ADMM which is a special case of (4) with α = 1. We now test this example again to show the acceleration performance of (4) with α ∈ (1, 2).

The Dantzig selector model in [9] deals with the case where the number of observations is much smaller than the number of regression coefficients, i.e., n ≪ d. In particular, the Dantzig selector model is

| (72) |

where δ > 0 is a tuning parameter, and || · ||∞ is the infinity norm.
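To make the role of δ and the infinity norm concrete, the sketch below evaluates ||XT(Xβ − y)||∞ for a candidate β, which is the quantity appearing in the feasibility violation reported later for this model. It assumes the constraint of (72) has the standard form ||XT(Xβ − y)||∞ ≤ δ from [9]; the function name `dantzig_constraint_value` and the tiny data are illustrative assumptions.

```python
def dantzig_constraint_value(X, y, beta):
    """Return the infinity norm of X^T (X beta - y)."""
    n, d = len(X), len(X[0])
    # Residual of the linear model
    resid = [sum(X[i][j] * beta[j] for j in range(d)) - y[i] for i in range(n)]
    # Correlation of every column of X with the residual
    corr = [sum(X[i][j] * resid[i] for i in range(n)) for j in range(d)]
    return max(abs(c) for c in corr)

# Hypothetical toy data with n = 2 observations and d = 3 coefficients
X = [[1.0, 0.0, 1.0], [0.0, 1.0, 1.0]]
y = [1.0, 2.0]
beta = [0.0, 0.0, 1.0]
delta = 1.0
is_feasible = dantzig_constraint_value(X, y, beta) <= delta
```

Under the stated assumption, a candidate β is feasible exactly when this value does not exceed δ.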

As elaborated in [48], the model (72) can be formulated as

| (73) |

where x ∈ ℝd is an auxiliary variable. Obviously, (73) is a special case of (1) and thus the L-GADMM (4) is applicable. In fact, applying (4) with G = Id − τ||(XTX)T(XTX)||2 to (73), we obtain the following subproblems:

| (74) |

| (75) |

| (76) |

where . Note that τ ≥ 2||(XTX)T(XTX)||2 is required to ensure convergence; see [48] for the detailed proof.

The β-subproblem (74) can be rewritten as

whose closed-form solution is given by

| (77) |

Moreover, the solution of the x-subproblem (75) is given by

| (78) |
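Closed-form solutions of this kind are typically built from two componentwise operators: soft-thresholding, which solves an ℓ1-regularized proximal problem, and projection onto the box {x : ||x||∞ ≤ δ}. The sketch below shows both operators in plain Python. It is written under the assumption that (77) and (78) take these standard forms; the function names and test vectors are illustrative only.

```python
def soft_threshold(v, kappa):
    """Componentwise minimizer of kappa * |b| + 0.5 * (b - v_i)^2."""
    out = []
    for x in v:
        if x > kappa:
            out.append(x - kappa)
        elif x < -kappa:
            out.append(x + kappa)
        else:
            out.append(0.0)  # entries with |x| <= kappa are set to zero
    return out

def project_linf_ball(v, delta):
    """Euclidean projection onto the set {x : ||x||_inf <= delta}."""
    return [min(max(x, -delta), delta) for x in v]

v = [3.0, -0.5, -2.0]
shrunk = soft_threshold(v, kappa=1.0)
clipped = project_linf_ball(v, delta=1.0)
```

Both operators cost O(d) per call, which is why subproblems that reduce to them are inexpensive compared with solving a subproblem by an inner iterative loop.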

Overall, the application of the L-GADMM (4) to the Dantzig Selector model (72) is summarized in Algorithm 3.

Algorithm 3.

5.3.1 Synthetic Dataset

We first test a synthetic dataset for the Dantzig Selector model (72). We follow the simulation setup in [9] to generate the design matrix X, whose columns all have unit norm. Then, we randomly choose a set S of cardinality s. The vector β is generated by

where ξ ~ U(−1, 1) (i.e., the uniform distribution on the interval (−1, 1)) and ai ~ N(0, 1). Finally, y is generated by y = Xβ + ε with ε ~ N(0, σ2I).

We consider four schemes as listed below:

[Scheme 1]: (n, d, s) = (720, 2560, 80), σ = 0.03.

[Scheme 2]: (n, d, s) = (720, 2560, 80), σ = 0.05.

[Scheme 3]: (n, d, s) = (1440, 5120, 160), σ = 0.03.

[Scheme 4]: (n, d, s) = (1440, 5120, 160), σ = 0.05.

We take .

To implement Algorithm 3, we set the penalty parameter ρ = 0.1 and τ = 2.5||(XTX)T(XTX)||2. Again, we use the stopping criterion described in [6]: Let the primal and dual residual at the t-th iteration be rt = ||XTXβt − xt − XTy|| and st = ||ρXTX(γt − γt−1)||, respectively; let the tolerance of the primal and dual residual at the t-th iteration be and , respectively; then the iteration is stopped when rt < εpri and st < εdua simultaneously. We choose ε = 10−4 for our experiments. We set the starting points as β0 = 0, x0 = 0 and γ0 = 0.

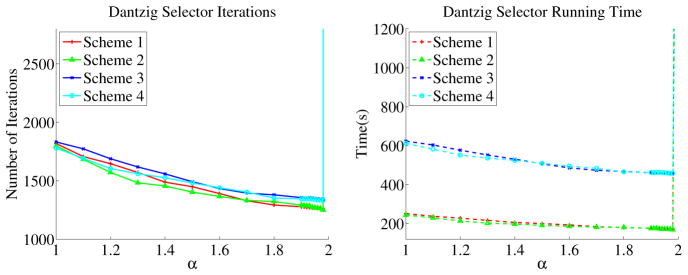

In Figure 4, we plot the evolution of the number of iterations and the computing time in seconds with respect to different values of α ∈ [1, 2], chosen for Algorithm 3 in the same way as in the previous subsections. The curves in Figure 4 clearly show the acceleration performance of Algorithm 3 with α ∈ (1, 2). Also, if α = 2, Algorithm 3 does not converge after 10,000 iterations.

Fig. 4.

Algorithm 3: Evolution of number of iterations and computing time w.r.t. different values of α for synthetic dataset

As in the previous sections, we compare the averaged performance of our method with ADMM2, which solves the β-subproblem iteratively by ADMM, for all four schemes. When using ADMM to solve the β-subproblem, we use the same stopping criterion as described in the previous sections, and the tolerance ε is gradually decreased from 10−2 to 10−4 obeying the same rule as in Section 5.1.1. We further set the maximal iteration numbers to be 1,000 and 10,000 for the inner and outer loops executed by ADMM2, respectively. Also, we set the penalty parameter for the inner loop to 1. For the outer loop, we set the tolerance ε = 10−4 for both Algorithm 3 and ADMM2. In Table 4, we present the computing time in seconds to achieve the same level of objective values for three cases: Algorithm 3 with α = 1, Algorithm 3 with α = 1.9, and ADMM2. It is seen that Algorithm 3 with either α = 1 or α = 1.9 is much faster than ADMM2, and Algorithm 3 with α = 1.9 is faster than Algorithm 3 with α = 1; we have thus demonstrated the necessity of linearization when ADMM-like methods are applied to solve the Dantzig selector model (72) and the acceleration performance of GADMM with α ∈ (1, 2). Also, we list the averaged primal feasibility violation of each algorithm. The violation is defined as ||XT(Xβ̂ − y) − w||, where β̂ is the output solution and w = min(max(XT(Xβ̂ − y), −δ), δ) ∈ ℝd. Algorithm 3 achieves better feasibility than ADMM2, as illustrated in the table.

Table 4.

Numerical comparison of the averaged performance between Algorithm 3 and ADMM2

| | | Alg. 3 (α = 1) | Alg. 3 (α = 1.9) | ADMM2 |
|---|---|---|---|---|
| Scheme 1 | CPU time (s) | 215.12 | 154.7 | 1045.62 |
| | Objective Value | 60.6777 | 60.6821 | 60.8028 |
| | Violation | 0.0048 | 0.0050 | 0.1464 |
| Scheme 2 | CPU time (s) | 213.80 | 147.29 | 1184.98 |
| | Objective Value | 54.7704 | 54.7729 | 54.7915 |
| | Violation | 0.0049 | 0.0052 | 0.1773 |
| Scheme 3 | CPU time (s) | 613.20 | 462.41 | 5514.81 |
| | Objective Value | 124.0258 | 124.0208 | 124.2314 |
| | Violation | 0.0072 | 0.0072 | 0.2361 |
| Scheme 4 | CPU time (s) | 607.80 | 463.58 | 5357.55 |
| | Objective Value | 111.8513 | 111.8489 | 112.0675 |
| | Violation | 0.0072 | 0.0071 | 0.2137 |
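The speedups implied by the CPU times in Table 4 can be made explicit with a few lines of arithmetic. The snippet below is only a convenience calculation on the reported numbers; the dictionary layout and the function name `speedups` are assumptions of this sketch.

```python
# CPU times in seconds copied from Table 4: (alpha = 1, alpha = 1.9, ADMM2)
cpu_times = {
    "Scheme 1": (215.12, 154.7, 1045.62),
    "Scheme 2": (213.80, 147.29, 1184.98),
    "Scheme 3": (613.20, 462.41, 5514.81),
    "Scheme 4": (607.80, 463.58, 5357.55),
}

def speedups(t_alpha1, t_alpha19, t_admm2):
    """Ratios of the other two running times to that of alpha = 1.9."""
    return t_alpha1 / t_alpha19, t_admm2 / t_alpha19

for name, times in cpu_times.items():
    over_alpha1, over_admm2 = speedups(*times)
    print(f"{name}: {over_alpha1:.2f}x vs alpha = 1, {over_admm2:.2f}x vs ADMM2")
```

On these numbers, Algorithm 3 with α = 1.9 is between 1.3 and 1.5 times faster than with α = 1, and roughly 7 to 12 times faster than ADMM2.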

5.3.2 Real Dataset

Next, we test the Dantzig Selector model (72) on a real dataset. In particular, we use the same dataset as in Section 5.1.2 and look at another disease, Ewing’s sarcoma, which has drawn much attention in the literature; see, e.g., [26]. We have 27 samples diagnosed with Ewing’s sarcoma. Then, we randomly select 55 healthy samples. Next, we select 30 healthy samples and 15 samples diagnosed with Ewing’s sarcoma as the training set, and let the remaining 37 samples be the testing set. We use the first 2,000 genes to conduct the analysis. Thus, this dataset corresponds to the model (72) with n = 45 and d = 2,000. In the implementation, the parameter δ in (72) is chosen by five-fold cross validation, we set ρ = 0.02 and τ = 9||(XTX)TXTX||2 for Algorithm 3, and we use the same stopping criterion with ε = 4 × 10−4. The comparison between Algorithm 3 and ADMM2 is listed in Table 5. From this table, we see that Algorithm 3 with either α = 1 or α = 1.9 outperforms ADMM2 significantly: it requires much less computing time to achieve the same level of objective function values with similar primal feasibility, and it attains the same level of training and testing error. In addition, the acceleration performance of the case where α = 1.9 over the case where α = 1 is again demonstrated for Algorithm 3.

Table 5.

Numerical comparison between Algorithm 3 and ADMM2 for microarray dataset

| | Alg. 3 (α = 1) | Alg. 3 (α = 1.9) | ADMM2 |
|---|---|---|---|
| Training Error | 0/45 | 0/45 | 0/45 |
| Testing Error | 0/37 | 0/37 | 0/37 |
| CPU time (s) | 1006.68 | 532.96 | 2845.27 |
| Objective Value | 17.596 | 17.579 | 17.771 |
| Violation | 0.0131 | 0.0131 | 0.0285 |

6 Conclusion

In this paper, we take a deeper look at the linearized version of the generalized alternating direction method of multipliers (ADMM) and establish its worst-case 𝒪(1/k) convergence rate in both the ergodic and a nonergodic senses. This result subsumes some existing results established for the original ADMM and generalized ADMM schemes, and it provides accountable and novel theoretical support to the numerical efficiency of the generalized ADMM. Further, we apply the linearized version of the generalized ADMM to solve some important statistical learning applications, and thereby enlarge the application range of the generalized ADMM. Finally, we mention that the worst-case 𝒪(1/k) convergence rate established in this paper amounts to a sublinear speed of convergence. If certain conditions are assumed (e.g., some error bound conditions) or the model under consideration has some special properties, it is possible to establish a linear convergence rate for the linearized version of the generalized ADMM by using techniques similar to those in, e.g., [5, 11, 27]. We omit the details of such an analysis and focus only on the convergence rate analysis from the iteration complexity perspective in this paper.

Appendices

We show that our analysis in Sections 3 and 4 can be extended to the case where both the x- and y-subproblems in (3) are linearized. The resulting scheme, called the doubly linearized version of the GADMM (“DL-GADMM” for short), reads as

| (79) |

where the matrices G1 ∈ ℝn1×n1 and G2 ∈ ℝn2×n2 are both symmetric and positive definite.

For further analysis, we define two matrices, which are analogous to H and Q in (11), respectively, as

| (80) |

Obviously, we have

| (81) |

where M is defined in (10). Note that the equalities (8) and (9) still hold.

A A worst-case 𝒪(1/k) convergence rate in the ergodic sense for (79)

We first establish a worst-case 𝒪(1/k) convergence rate in the ergodic sense for the DL-GADMM (79). Indeed, using the relationship (81), the resulting proof is nearly the same as that in Section 3 for the L-GADMM (4). We thus only list two lemmas (analogous to Lemmas 1 and 2) and one theorem (analogous to Theorem 2) to demonstrate a worst-case 𝒪(1/k) convergence rate in the ergodic sense for (79), and omit the details of proofs.

Lemma 7

Let the sequence {wt} be generated by the DL-GADMM (79) with α ∈ (0, 2) and the associated sequence {w̃t} be defined in (7). Then we have

| (82) |

where Q2 is defined in (80).

Lemma 8

Let the sequence {wt} be generated by the DL-GADMM (79) with α ∈ (0, 2) and the associated sequence {w̃t} be defined in (7). Then for any w ∈ Ω, we have

| (83) |

Theorem 7

Let H2 be given by (80) and {wt} be the sequence generated by the DL-GADMM (79) with α ∈ (0, 2). For any integer k > 0, let ŵk be defined by

| (84) |

where w̃t is defined in (7). Then, ŵk ∈ Ω and

B A worst-case 𝒪(1/k) convergence rate in a nonergodic sense for (79)

Next, we prove a worst-case 𝒪(1/k) convergence rate in a nonergodic sense for the DL-GADMM (79). Note that Lemma 4 still holds by replacing H with H2. That is, if , w̃t defined in (7) is an optimal solution point to (5). Thus, for the sequence {wt} generated by the DL-GADMM (79), it is reasonable to measure the accuracy of an iterate by .

Proofs of the following two lemmas are analogous to those of Lemmas 5 and 6, respectively. We thus omit them.

Lemma 9

Let the sequence {wt} be generated by the DL-GADMM (79) with α ∈ (0, 2) and the associated {w̃t} be defined in (7); the matrix Q2 be defined in (80). Then, we have

Lemma 10

Let the sequence {wt} be generated by the DL-GADMM (79) with α ∈ (0, 2) and the associated {w̃t} be defined in (7); the matrices M, H2, Q2 be defined in (10) and (80). Then, we have

Based on the above two lemmas, we see that the sequence {||wt − wt+1||H2} is monotonically non-increasing. That is, we have the following theorem.

Theorem 8

Let the sequence {wt} be generated by the DL-GADMM (79) and the matrix H2 be defined in (80). Then, we have

Note that for the DL-GADMM (79), the y-subproblem is also proximally regularized, and we cannot extend the inequality (31) to this new case. This is indeed the main difficulty in proving a worst-case 𝒪(1/k) convergence rate in a nonergodic sense for the DL-GADMM (79). A more elaborate analysis is needed. Let us first show a lemma to bound the left-hand side in (31).

Lemma 11

Let {yt} be the sequence generated by the DL-GADMM (79) with α ∈ (0, 2). Then, we have

| (85) |

Proof

It follows from the optimality condition of the y-subproblem in (79) that

| (86) |

Similarly, we also have,

| (87) |

Setting y = yt in (86) and y = yt+1 in (87), and summing them up, we have

where the second inequality holds by the fact that . The assertion (85) is proved.

Two more lemmas should be proved in order to establish a worst-case 𝒪(1/k) convergence rate in a nonergodic sense for the DL-GADMM (79).

Lemma 12

Let the sequence {wt} be generated by the DL-GADMM (79) with α ∈ (0, 2) and the associated {w̃t} be defined in (7). Then, we have

| (88) |

where cα is defined in (37).

Proof

By the definition of Q2, M and H2, we have

which implies the assertion (88) immediately.

In the next lemma, we refine the bound of (w − w̃t)T Q2(wt − w̃t) in (82). The refined bound consists of terms in recursive form, which is favorable for establishing a worst-case 𝒪(1/k) convergence rate in a nonergodic sense for the DL-GADMM (79).

Lemma 13

Let {wt} be the sequence generated by the DL-GADMM (79) with α ∈ (0, 2). Then, w̃t ∈ Ω and

| (89) |

where M is defined in (10), and H2 and Q2 are defined in (80).

Proof

By the identity Q2(wt − w̃t) = H2(wt − wt+1), it holds that

Setting a = w, b = w̃t, c = wt and d = wt+1 in the identity

we have

| (90) |

Meanwhile, we have

where the last equality comes from the identity Q2 = H2M.

Substituting the above identity into (90), we have, for all w ∈ Ω,

Plugging this identity into (82), our claim follows immediately.
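The identity invoked in the proof of Lemma 13 is not reproduced above. Arguments of this type commonly rely on the four-point identity (a − b)T H(c − d) = ½(||a − d||²H − ||a − c||²H) + ½(||c − b||²H − ||d − b||²H), which holds for any symmetric matrix H. Assuming this is the form intended, the following plain-Python sketch checks it numerically on random data; all names in the snippet are illustrative.

```python
import random

def h_inner(u, H, v):
    """Return u^T H v."""
    return sum(u[i] * H[i][j] * v[j] for i in range(len(u)) for j in range(len(v)))

def h_norm_sq(u, H):
    """Return the squared H-norm u^T H u."""
    return h_inner(u, H, u)

def sub(u, v):
    return [ui - vi for ui, vi in zip(u, v)]

def four_point_gap(a, b, c, d, H):
    """Absolute difference between the two sides of the four-point identity."""
    lhs = h_inner(sub(a, b), H, sub(c, d))
    rhs = (0.5 * (h_norm_sq(sub(a, d), H) - h_norm_sq(sub(a, c), H))
           + 0.5 * (h_norm_sq(sub(c, b), H) - h_norm_sq(sub(d, b), H)))
    return abs(lhs - rhs)

rng = random.Random(0)
n = 4
B = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(n)]
# H = B^T B + I is symmetric positive definite
H = [[sum(B[k][i] * B[k][j] for k in range(n)) + (1.0 if i == j else 0.0)
      for j in range(n)] for i in range(n)]
a, b, c, d = ([rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(4))
print(four_point_gap(a, b, c, d, H))
```

The printed gap is at the level of floating-point rounding, consistent with the identity being exact for symmetric H.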

Then, we show the boundedness of the sequence {wt} generated by the DL-GADMM (79), which essentially implies the convergence of {wt}.

Theorem 9

Let {wt} be the sequence generated by the DL-GADMM (79) with α ∈ (0, 2). Then, it holds that

| (91) |

where H2 is defined in (80).

Proof

Setting w = w* in (89), we have

Then, recalling (5), we have

It is easy to see that . Thus, it holds

which completes the proof.

Finally, we establish a worst-case 𝒪(1/k) convergence rate in a nonergodic sense for the DL-GADMM (79).

Theorem 10

Let the sequence {wt} be generated by the scheme DL-GADMM (79) with α ∈ (0, 2). It holds that

| (92) |

Proof

By the definition of H2 in (80), we have

| (93) |

Using (85), (88), (91) and (93), we obtain

By Theorem 8, the sequence {||wt − wt+1||H2} is non-increasing. Thus, we have

and the assertion (92) is proved.

Recall that for the sequence {wt} generated by the DL-GADMM (79), it is reasonable to measure the accuracy of an iterate by . Thus, Theorem 10 demonstrates a worst-case 𝒪(1/k) convergence rate in a nonergodic sense for the DL-GADMM (79).
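The last step of the proof of Theorem 10 follows a standard pattern, which can be written out with generic symbols. In the sketch below, a_t denotes the nonnegative accuracy measure of the t-th iterate and C denotes a constant bounding its partial sums; both symbols are assumptions of this illustration and stand in for the specific quantities obtained from (85), (88), (91) and (93).

```latex
a_{t+1} \le a_t \quad (t \ge 0), \qquad \sum_{t=0}^{k} a_t \le C
\quad\Longrightarrow\quad
(k+1)\, a_k \;\le\; \sum_{t=0}^{k} a_t \;\le\; C
\quad\Longrightarrow\quad
a_k \;\le\; \frac{C}{k+1} \;=\; \mathcal{O}(1/k).
```

Monotonicity is what allows the bound on the sum to be transferred to the last iterate, which is why the non-increasing property of Theorem 8 is needed in addition to the summability estimate.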

Contributor Information

Ethan X. Fang, Department of Operations Research and Financial Engineering, Princeton University

Bingsheng He, International Centre of Management Science and Engineering, and Department of Mathematics, Nanjing University, Nanjing, 210093, China. This author was supported by the NSFC Grant 91130007 and the MOEC fund 20110091110004.

Han Liu, Department of Operations Research and Financial Engineering, Princeton University.

Xiaoming Yuan, Department of Mathematics, Hong Kong Baptist University, Hong Kong. This author was supported by the Faculty Research Grant from HKBU: FRG2/13-14/061 and the General Research Fund from Hong Kong Research Grants Council: 203613.

References

- 1. Anderson TW. An introduction to multivariate statistical analysis. 3rd ed. Wiley-Interscience; 2003.

- 2. Bertsekas DP. Constrained optimization and Lagrange multiplier methods. Academic Press; New York: 1982.

- 3. Bickel PJ, Levina E. Some theory for Fisher’s linear discriminant function, naive Bayes’, and some alternatives when there are many more variables than observations. Bernoulli. 2004;6:989–1010.

- 4. Blum E, Oettli W. Mathematische Optimierung. Grundlagen und Verfahren. Ökonometrie und Unternehmensforschung. Springer-Verlag; Berlin-Heidelberg-New York: 1975.

- 5. Boley D. Local linear convergence of ADMM on quadratic or linear programs. SIAM J Optim. 2013;23(4):2183–2207.

- 6. Boyd S, Parikh N, Chu E, Peleato B, Eckstein J. Distributed Optimization and Statistical Learning via the Alternating Direction Method of Multipliers. Found Trends Mach Learn. 2011;3:1–122.

- 7. Cai TT, Liu W. A Direct estimation approach to sparse linear discriminant analysis. J Amer Stat Assoc. 2011;106:1566–1577.

- 8. Cai X, Gu G, He B, Yuan X. A proximal point algorithm revisit on alternating direction method of multipliers. Sci China Math. 2013;56(10):2179–2186.

- 9. Candès EJ, Tao T. The Dantzig selector: statistical estimation when p is much larger than n. Ann Stat. 2007;35:2313–2351.

- 10. Clemmensen L, Hastie T, Witten D, Ersbøll B. Sparse discriminant analysis. Technometrics. 2011;53:406–413.

- 11. Deng W, Yin W. On the global and linear convergence of the generalized alternating direction method of multipliers. Manuscript. 2012.

- 12. Eckstein J. Parallel alternating direction multiplier decomposition of convex programs. J Optim Theory Appli. 1994;80(1):39–62.

- 13. Eckstein J, Yao W. Augmented Lagrangian and alternating direction methods for convex optimization: a tutorial and some illustrative computational results. RUTCOR Research Report RRR 32-2012. Dec, 2012.

- 14. Eckstein J, Bertsekas D. On the Douglas-Rachford splitting method and the proximal point algorithm for maximal monotone operators. Math Program. 1992;55:293–318.

- 15. Fan J, Fan Y. High dimensional classification using features annealed independence rules. Ann Stat. 2008;36:2605–2637. doi: 10.1214/07-AOS504.

- 16. Fan J, Li R. Variable selection via nonconcave penalized likelihood and its oracle properties. J Amer Stat Assoc. 2001;96:1348–1360.

- 17. Fan J, Feng Y, Tong X. A road to classification in high dimensional space: the regularized optimal affine discriminant. J R Stat Soc Series B Stat Methodol. 2012;74:745–771. doi: 10.1111/j.1467-9868.2012.01029.x.

- 18. Fan J, Zhang J, Yu K. Vast portfolio selection with gross-exposure constraints. J Amer Stat Assoc. 2012;107:592–606. doi: 10.1080/01621459.2012.682825.

- 19. Fazel M, Hindi H, Boyd S. A rank minimization heuristic with application to minimum order system approximation. Proceedings of the American Control Conference; 2001.

- 20. Fortin M, Glowinski R. Augmented Lagrangian Methods: Applications to the Numerical Solutions of Boundary Value Problems. Stud Math Appl. Vol. 15. North-Holland; Amsterdam: 1983.

- 21. Gabay D. Applications of the method of multipliers to variational inequalities. In: Fortin M, Glowinski R, editors. Augmented Lagrange Methods: Applications to the Solution of Boundary-valued Problems. North Holland; Amsterdam, The Netherlands: 1983. pp. 299–331.

- 22. Gabay D, Mercier B. A dual algorithm for the solution of nonlinear variational problems via finite-element approximations. Comput Math Appli. 1976;2:17–40.

- 23. Glowinski R. On alternating direction methods of multipliers: a historical perspective. Springer Proceedings of a Conference Dedicated to J Periaux. To appear.

- 24. Glowinski R, Marrocco A. Approximation par éléments finis d’ordre un et résolution par pénalisation-dualité d’une classe de problémes non linéaires. RAIRO. 1975;R2:41–76.

- 25. Gol’shtein EG, Tret’yakov NV. Modified Lagrangian in convex programming and their generalizations. Math Program Study. 1979;10:86–97.

- 26. Grier HE, Krailo MD, Tarbell NJ, Link MP, Fryer CJ, Pritchard DJ, Gebhardt MC, Dickman PS, Perlman EJ, Meyers PA, et al. Addition of ifosfamide and etoposide to standard chemotherapy for Ewing’s sarcoma and primitive neuroectodermal tumor of bone. New England Journal of Medicine. 2003;348:694–701. doi: 10.1056/NEJMoa020890.

- 27. Han D, Yuan X. Local linear convergence of the alternating direction method of multipliers for quadratic programs. SIAM J Numer Anal. 2013;51(6):3446–3457.

- 28. Hans CP, Weisenburger DD, Greiner TC, Gascone RD, Delabie J, Ott G, Müller-Hermelink H, Campo E, Braziel R, Elaine S, et al. Confirmation of the molecular classification of diffuse large B-cell lymphoma by immunohistochemistry using a tissue microarray. Blood. 2004;103:275–282. doi: 10.1182/blood-2003-05-1545.

- 29. He B, Liao L-Z, Han DR, Yang H. A new inexact alternating directions method for monotone variational inequalities. Math Program. 2002;92:103–118.

- 30. He B, Yang H. Some convergence properties of a method of multipliers for linearly constrained monotone variational inequalities. Oper Res Let. 1998;23:151–161.

- 31. He B, Yuan X. On the O(1/n) convergence rate of Douglas-Rachford alternating direction method. SIAM J Numer Anal. 2012;50:700–709.

- 32. He B, Yuan X. On nonergodic convergence rate of Douglas-Rachford alternating direction method of multipliers. Numerische Mathematik. To appear.

- 33. He B, Yuan X. On convergence rate of the Douglas-Rachford operator splitting method. Math Program. To appear.

- 34. Hestenes MR. Multiplier and gradient methods. J Optim Theory Appli. 1969;4:302–320.

- 35. James GM, Paulson C, Rusmevichientong P. The constrained LASSO. Manuscript. 2012.

- 36. Lions PL, Mercier B. Splitting algorithms for the sum of two nonlinear operator. SIAM J Numer Anal. 1979;16:964–979.

- 37. Martinet B. Regularisation, d’inéquations variationelles par approximations succesives. Rev Francaise d’Inform Recherche Oper. 1970;4:154–159.

- 38. McCall MN, Bolstad BM, Irizarry RA. Frozen robust multiarray analysis (fRMA). Biostatistics. 2010;11:242–253. doi: 10.1093/biostatistics/kxp059.

- 39. Nemirovsky AS, Yudin DB. Problem Complexity and Method Efficiency in Optimization. Wiley-Interscience Series in Discrete Mathematics. John Wiley & Sons; New York: 1983.

- 40. Nesterov YE. A method for solving the convex programming problem with convergence rate O(1/k^2). Dokl Akad Nauk SSSR. 1983;269:543–547.

- 41. Ng MK, Wang F, Yuan X. Inexact alternating direction methods for image recovery. SIAM J Sci Comput. 2011;33(4):1643–1668.

- 42. Powell MJD. A method for nonlinear constraints in minimization problems. In: Fletcher R, editor. Optimization. Academic Press; 1969.

- 43. Shao J, Wang Y, Deng X, Wang S. Sparse linear discriminant analysis by thresholding for high dimensional data. Ann Stat. 2011;39:1241–1265.

- 44. Tibshirani R. Regression shrinkage and selection via the lasso. J R Stat Soc Series B Stat Methodol. 1996;58:267–288.

- 45. Tibshirani R, Saunders M, Rosset S, Zhu J, Knight K. Sparsity and smoothness via the fused lasso. J R Stat Soc Series B Stat Methodol. 2005;67:91–108.

- 46. Tibshirani RJ, Taylor J. The solution path of the generalized lasso. Ann Stat. 2011;39:1335–1371.

- 47. Wang L, Zhu J, Zou H. Hybrid huberized support vector machines for microarray classification and gene selection. Bioinformatics. 2008;24:412–419. doi: 10.1093/bioinformatics/btm579.

- 48. Wang X, Yuan X. The linearized alternating direction method of multipliers for Dantzig Selector. SIAM J Sci Comput. 2012;34:2782–2811.

- 49. Witten DM, Tibshirani R. Penalized classification using Fisher’s linear discriminant. J R Stat Soc Series B Stat Methodol. 2011;73:753–772. doi: 10.1111/j.1467-9868.2011.00783.x.

- 50. Yang J, Yuan X. Linearized augmented Lagrangian and alternating direction methods for nuclear norm minimization. Math Comput. 2013;82:301–329.

- 51. Zhang C-H. Nearly unbiased variable selection under minimax concave penalty. Ann Stat. 2010;38:894–942.

- 52. Zhang XQ, Burger M, Osher S. A unified primal-dual algorithm framework based on Bregman iteration. J Sci Comput. 2010;6:20–46.

- 53. Zhang XQ, Burger M, Bresson X, Osher S. Bregmanized nonlocal regularization for deconvolution and sparse reconstruction. SIAM J Imag Sci. 2010;3(3):253–276.

- 54. Zou H. The adaptive lasso and its oracle properties. J Amer Stat Assoc. 2006;101:1418–1429.