Abstract

This study investigated a technology-enhanced training protocol to facilitate dissemination of therapist training on cognitive behavior therapy (CBT) for anxiety disorders. Seventy community clinicians received an online tutorial followed by live remote observation of clinical skills via videoconference. Impact of training on patient outcomes was also assessed. Training resulted in a significant increase in both trainee knowledge of CBT concepts and techniques and therapist competence in applying these skills. Patients treated by trainees following training had significant reductions in anxiety and depression. Ratings of user satisfaction were high. Results provide support for the use of these technologies for therapist training in CBT.

The critical shortage of therapists trained in empirically based treatments (EBTs) remains a major public health concern (Institute of Medicine, 2015). This shortage is a significant barrier for patients in the community seeking access to these effective treatments (Cartreine, Ahern, & Locke, 2010; Olfson et al., 2009; U.S. Department of Health and Human Services, 1999; Weissman et al., 2006; Weisz, Hawley, & Doss, 2004; Williams & Martinez, 2008). Facilitating access to professional training on EBTs has been proposed as one solution to this problem. It has been identified as a national priority by the National Institute of Mental Health (NIMH) and by major mental health professional organizations (APA Presidential Task Force on Evidence-Based Practice, 2006; National Institute of Mental Health, 2007).

Although academic programs have important initiatives to facilitate training of clinicians in EBTs (American Psychological Association, 1993, 2002), there remains a shortage of such clinical training programs (National Institute of Mental Health, 2007; Pidano & Whitcomb, 2012; U.S. Department of Health and Human Services, 1999; Weissman et al., 2006). Thus, the need for clinicians prepared to deliver such interventions in the community is not currently being met. Large-scale dissemination efforts, such as those of the Veterans Health Administration, involve considerable cost in time and money. This cost applies both to the initial training and to ongoing monitoring and reinforcement of fidelity after initial training (Eftekhari et al., 2013). As a result, large-scale dissemination is feasible only as a system-wide intervention (Fixsen, Blase, Duda, Naoom, & Van Dyke, 2010). Because many agencies lack the resources for such efforts, attempts to train large numbers of clinicians have been minimal (Beveridge et al., 2015). Outside these large systems, continuing education options through which practicing clinicians can learn to deliver EBTs, such as workshops, presentations, and conferences, are not widely available. Moreover, the limited continuing education that is available has not resulted in increased uptake and dissemination (Cartreine et al., 2010; Herschell, Kolko, Baumann, & Davis, 2010; Joyce & Showers, 2002; Miller & Mount, 2001; Schoenwald, Kelleher, & Weisz, 2008). Nor has it produced long-term changes in therapists’ behaviors or improvements in patient outcomes (Herschell et al., 2010). This is likely due to the limited format of current continuing education efforts (Herschell et al., 2010). Thus, novel training options are needed both to reach more practicing clinicians and to make training interventions more effective.

Compounding the problem of limited opportunities for clinicians to learn EBTs is a lack of trainers available to teach other clinicians these techniques. The recent Institute of Medicine report on developing evidence-based standards for psychosocial interventions found that EBTs are not routinely taught in academic programs or routinely used in clinical practice (Institute of Medicine, 2015). The 2007 NIMH panel on integrating evidence-based mental health practices into social work education and practice reached a similar conclusion (National Institute of Mental Health, 2007).

Another limitation of current dissemination efforts is the format of one-time workshops. These workshops are the most common way that clinicians obtain EBT training (Kobak, Craske, Rose, & Wolitzky-Taylor, 2013), yet they typically provide only didactic training, with no examination of applied skills or follow-up on implementation. Dissemination and implementation are both crucial challenges for EBTs (Schoenwald, McHugh, & Barlow, 2012). Increased access to training can help improve the dissemination of EBTs. However, ensuring that clinicians actually implement the new skills with their patients, and implement them correctly, is a posttraining challenge (Decker, Jameson, & Naugle, 2011; McHugh & Barlow, 2010). Didactic training alone does not necessarily yield improvements in clinical skills (Dimeff et al., 2015; Herschell et al., 2010; Kobak, Engelhardt, Williams, & Lipsitz, 2004). American Psychological Association guidelines state that clinicians should not only demonstrate knowledge of EBT theories and methods but also demonstrate skill in implementing EBT intervention strategies (American Psychological Association, 2007). However, when clinicians do use EBTs with patients, the treatments are often not delivered correctly (Kessler, Merikangas, & Wang, 2007; Stobie, Taylor, Quigley, Ewing, & Salkovskis, 2007). Thus, the most effective way to train clinicians in EBTs and ensure proper implementation appears to be didactic training plus ongoing expert consultation and feedback (Beidas, Edmunds, Marcus, & Kendall, 2012; Edmunds, Beidas, & Kendall, 2013; Nadeem, Gleacher, & Beidas, 2013; Rose et al., 2011). The number of posttraining consultation hours has been found to significantly predict therapist adherence and skill at 3-month follow-up (Beidas et al., 2012). Yet access to experts for training and ongoing supervision is limited by both trainer availability and the logistics of reaching the training location.

The use of new technologies has been suggested as a way to overcome these problems and increase the dissemination, implementation, and quality of EBT training (Barnett, 2011; Fairburn & Cooper, 2011; Kobak et al., 2013; Shafran et al., 2009). Web-based training offers several advantages over traditional methods (Khanna & Kendall, 2015):

- 24-hr accessibility.
- Standardization of training, which helps ensure the quality of instruction.
- Personalization (e.g., self-pacing, allowing for repetition and review).
- Interactive exercises and multimedia training components (e.g., audio, video, animation), which have been found to enhance knowledge retention (Gardner, 1993).

Web-based training is not constrained by enrollment limits due to class size or trainer availability. It is also cost-effective, and trainees can work on their own schedules. Indeed, a recent study found time and cost to be the strongest predictors of unwillingness to obtain training in empirically based treatments (Stewart, Chambless, & Baron, 2012). Studies have shown web-based therapist training to be more effective than paper-based manualized training alone, without clinician input (Sholomskas & Carroll, 2006). In one study, it was also superior to live training (Dimeff et al., 2009). Web-based training has also outperformed paper-based manuals in long-term knowledge retention and use of skills in clinical practice (Dimeff, Woodcock, Harned, & Beadnell, 2011).
Recent research has examined a two-stage training model that combines a web-based tutorial for didactic training with live remote observation of clinical skills via videoconference. This model significantly improves both applied clinical skills and conceptual knowledge (Curran et al., 2015; Kobak, Lipsitz, Williams, Engelhardt, & Bellew, 2005; Kobak et al., 2013; Kobak, Engelhardt, & Lipsitz, 2006; Weingardt, Cucciare, Bellotti, & Lai, 2009) and can help ensure proper implementation over time (Kobak et al., 2007).

In response to the NIMH’s call for research on the use of technology to facilitate the dissemination of evidence-based treatments, we developed a technology-enhanced training protocol to facilitate therapist training on cognitive behavior therapy (CBT) for anxiety disorders. The program consists of two components: (a) a web-based tutorial on CBT concepts and skills and (b) a series of live remote applied training sessions conducted via videoconference. In these sessions, trainees apply the skills learned in the tutorial while being observed by a trainer who provides feedback. The goal of the current study was to examine whether the two-part, technology-enhanced training program (a) improved trainees’ knowledge of CBT concepts and techniques and (b) improved trainees’ skill in actually applying these techniques in practice. To evaluate the relative impact of each training component (i.e., the online tutorial and live remote applied training via videoconference) on clinical skills, we tested trainees’ applied clinical skills at three time points: before any training, after the online tutorial, and after live applied training. The secondary goals of the study were to examine two questions. The first was whether training increased the frequency with which clinicians used CBT techniques with patients. The second was whether patients treated by clinicians who received the training showed improvements in self-reported anxiety. Research suggests that didactic training alone is insufficient for clinician skill improvement and uptake. We therefore posited that adding expert feedback and skill practice via videoconference would produce a further incremental improvement in CBT skill competency. In a prior study (Kobak et al., 2013), we pilot tested the model using social anxiety disorder as the focus.
In the current study, we expanded the tutorial to teach clinicians how to apply these skills across the range of anxiety-related disorders (i.e., obsessive compulsive disorder [OCD], panic disorder, generalized anxiety disorder [GAD], posttraumatic stress disorder [PTSD], and social anxiety disorder).

Method

Participants

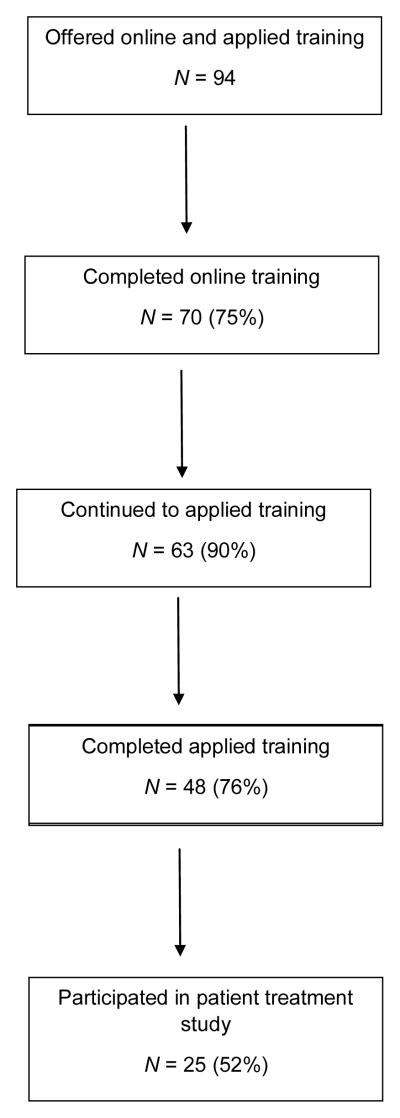

Clinicians were recruited through advertisements in the National Association of Social Workers newsletter and the American Psychological Association’s monthly journal. Because our goal was to evaluate how well the training protocol worked outside academic centers of excellence, we focused on recruiting clinicians practicing in the community. A diagram of study flow is shown in Figure 1. Ninety-four clinicians inquired about the study and were offered participation. Of these, 70 completed the online training tutorial, 63 continued to the applied training phase, and 48 completed it. Finally, 25 trainees participated in the posttraining follow-up of the impact of training on patient outcomes; these were trainees whose employment situations allowed them to access clients for research purposes. The study was approved by the Allendale Institutional Review Board, and all participants signed informed consent statements.

The 70 community clinicians who completed the online tutorial were 83% female. By race, 85% were Caucasian, 10% African American, and 5% other or mixed race. Participants came from 24 states in the continental United States; two were from Cyprus and one was from Mexico. The mean age was 47.7 years (SD = 13.7, range: 23 to 86 years), and the mean years of clinical experience was 12.2 (SD = 9.5, range: 1–35 years). Fifty (72%) were social workers, 17 (24%) were psychologists, and three (4%) were marriage and family therapists. Twenty-eight (40%) reported having received some type of prior formal training in CBT, including 24 (34%) through continuing education workshops and two (3%) through their employer. Only 14 (20%) reported receiving training as part of their formal coursework or practicum in graduate school. Only eight (11%) had actually been observed conducting CBT as part of their training, either through role play or with actual patients.
Fifty-one (73%) reported using some CBT techniques in their practice prior to participation in the study, including thought challenging (51%), diaphragmatic breathing (53%), exposure therapy (20%), and downward arrow (14%).

Figure 1.

Study flow.

Procedure

This study used a within-subjects additive design. In this approach, we empirically examined the impact of the online tutorial on clinical skills and then examined the added value of live, applied training on these skills.

Before beginning any training, trainees completed two pretests: one on their didactic knowledge of CBT concepts and principles and one on their applied clinical skills in conducting CBT with a mock patient (described below). Following the pretests, they were given a username and password to access the web-based training and completed the online tutorial at their own pace. Trainees could email the instructors with questions about the material. After completing the online tutorial, trainees took two posttests: one testing their conceptual knowledge and one evaluating their clinical skills with a standardized mock patient.

Following the two posttutorial tests, trainees received four 1-hr live applied training sessions conducted via videoconference. During each training session, the trainer portrayed a standardized patient with a specific anxiety disorder while the trainee role-played the therapist. The trainer (Dr. Wolitzky-Taylor) provided feedback in real time, after and sometimes during each role play. Trainees could also use this time to ask any questions they had about CBT concepts or techniques. After the four training sessions, trainees completed a final posttest of their applied clinical skills.

Some trainees’ employment situations allowed them to conduct research with clinic patients. After completing the training and final posttest, these trainees recruited at least one patient from their clinical practice who was initiating treatment for an anxiety disorder. Patients were evaluated weekly for 8 weeks with brief self-report measures of anxiety (GAD-7; Spitzer, Kroenke, Williams, & Lowe, 2006) and depression (PHQ-2; Lowe, Kroenke, & Grafe, 2005) to examine the impact of training on patient outcomes. More detailed descriptions of the online tutorial, the applied training and testing process, and the poststudy follow-up are provided below.

Online tutorial content

The online tutorial consisted of nine core modules covering the theory and practice of CBT for anxiety disorders:

1. Welcome & Introduction
2. Principles of CBT
3. Teaching Clients About the Nature of Anxiety
4. Explaining Treatment Rationale to Clients
5. Teaching Patients Self-Assessment Skills
6. Helping Clients Develop a Fear Hierarchy
7. Teaching Clients Breathing Techniques
8. Managing Anxious Thinking (Cognitive Restructuring)
9. Exposure Therapy

For continuing education credits, modules were grouped by general theme into four clusters: (a) Modules 1–5, (b) Modules 6 and 7, (c) Module 8, and (d) Module 9. Three additional optional modules provided a more in-depth review of specific topics: Advanced Principles of CBT, The Therapeutic Relationship in CBT, and Enhancing Client Motivation (using motivational interviewing techniques). The last optional module was included because several studies have found enhanced outcomes for patients with anxiety disorders when motivational interviewing techniques are added to CBT (Westra, Arkowitz, & Dozois, 2009; Westra & Dozois, 2006, 2008). These techniques include exploring ambivalence, developing discrepancy, dealing with resistance, and enhancing self-efficacy.

CBT concepts and techniques were illustrated for five anxiety-related disorders (i.e., OCD, panic disorder, GAD, PTSD, and social anxiety disorder) so that trainees could learn how to conduct CBT across a range of anxiety disorders. Clinicians typically work with patients who have a variety of problems. The tutorial therefore aimed to equip them with the skills needed to apply CBT principles and techniques across this range in their clinical practice. Each anxiety disorder has its own features and issues that need to be understood and addressed when conducting CBT. Learners initially chose one of the five anxiety disorders and followed it as a track throughout the course. The course then presented CBT concepts and skills using examples based on the selected disorder. The course remembered that choice but still allowed learners to switch tracks to see the same skills demonstrated with a different disorder. For example, a trainee who chose the OCD track would first see thought challenging illustrated in OCD. The trainee could then switch focus and see the same skill illustrated with panic disorder. In this way, trainees gained broad knowledge, and a single course could meet the needs of different practitioners.
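The track behavior described above can be sketched as a small class. The class and method names are hypothetical, and the returned strings stand in for whatever media the course actually displayed. This sketch assumes only what the text states: the chosen track is remembered, and viewing another disorder does not change it.

```python
from typing import Optional

# The five disorder tracks named in the tutorial description.
DISORDERS = ("OCD", "panic disorder", "GAD", "PTSD", "social anxiety disorder")


class TrackedLearner:
    """Hypothetical sketch of a learner's remembered disorder track."""

    def __init__(self, track: str) -> None:
        self._check(track)
        self.track = track  # remembered across modules

    @staticmethod
    def _check(disorder: str) -> None:
        if disorder not in DISORDERS:
            raise ValueError(f"unknown disorder track: {disorder!r}")

    def illustration(self, skill: str, disorder: Optional[str] = None) -> str:
        """Label of the example shown for a skill.

        Defaults to the remembered track. Passing another disorder shows the
        same skill with that disorder without changing the stored track.
        """
        shown = self.track if disorder is None else disorder
        self._check(shown)
        return f"{skill} illustrated with {shown}"


learner = TrackedLearner("OCD")
print(learner.illustration("thought challenging"))                    # thought challenging illustrated with OCD
print(learner.illustration("thought challenging", "panic disorder"))  # thought challenging illustrated with panic disorder
```

Keeping the remembered track separate from the disorder currently being viewed mirrors the text's distinction between choosing a track and switching focus to a different example.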

The tutorial material was presented in a variety of formats, including interactive exercises, animations, graphical illustrations, and videos of expert clinicians (Drs. Craske and Rose) demonstrating the techniques with a mock client. Throughout the course, “challenge questions” on new material were used as a way to involve learners, reinforce learning, and enhance retention (Roediger & Karpicke, 2006). Interactivity was maximized in order to engage students in the learning process and enhance knowledge retention (Gardner, 1993; Vincent & Ross, 2001). Principles of instructional design were used to guide the presentation of material in ways that enhanced learning (Mayer & Moreno, 2003).

Applied training

After completing the online tutorial, trainees received four remote applied training sessions with an experienced CBT trainer via videoconference (i.e., WebEx, a commercially available videoconference service). Each session lasted about 1 hr. In each session, the trainer portrayed a standardized patient with a specific anxiety disorder of the trainee’s choosing. All four applied training sessions focused on the same anxiety disorder, but with a different clinical presentation each time. Each session began with the “patient” describing who he or she was, a little about his or her background, and the problems he or she was having.

The applied training focused on two specific CBT techniques: cognitive restructuring and exposure therapy. Three cognitive and three behavioral/exposure skills were practiced in each session:

- Cognitive skills (all disorders): downward arrow, challenging likelihood overestimation, and decatastrophizing.
- Behavioral skills (all disorders): creating a fear hierarchy and preparing for/assigning an exposure task.
- Third behavioral skill (varied by disorder): exposure debriefing (OCD and social anxiety disorder), conducting an interoceptive exposure assessment (panic disorder), or conducting an imaginal exposure (PTSD and GAD).

The trainer provided feedback in real time after, and sometimes during, each role-play therapy session. Trainees could use these sessions to ask any questions they had about the material. Training was conducted by a licensed clinical psychologist and assistant professor at the University of California, Los Angeles (Dr. Wolitzky-Taylor). The trainer had extensive experience providing CBT supervision and training through her academic teaching, graduate, and postdoctoral work.
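The session structure above amounts to a fixed core of five skills plus one disorder-specific behavioral skill. A lookup-table sketch makes the mapping explicit. The skill labels are taken from the text; the constant and function names are illustrative only.

```python
# Skills practiced in every applied training session, as listed in the text.
COGNITIVE_SKILLS = (
    "downward arrow",
    "challenging likelihood overestimation",
    "decatastrophizing",
)
SHARED_BEHAVIORAL_SKILLS = (
    "creating a fear hierarchy",
    "preparing for/assigning an exposure task",
)
# The third behavioral skill depends on the disorder the trainee chose.
THIRD_BEHAVIORAL_SKILL = {
    "OCD": "exposure debriefing",
    "social anxiety disorder": "exposure debriefing",
    "panic disorder": "conducting an interoceptive exposure assessment",
    "PTSD": "conducting an imaginal exposure",
    "GAD": "conducting an imaginal exposure",
}


def session_skills(disorder: str) -> list:
    """Return the six skills (three cognitive, three behavioral) for a disorder."""
    if disorder not in THIRD_BEHAVIORAL_SKILL:
        raise ValueError(f"no skill set defined for {disorder!r}")
    return [*COGNITIVE_SKILLS, *SHARED_BEHAVIORAL_SKILLS, THIRD_BEHAVIORAL_SKILL[disorder]]


for d in THIRD_BEHAVIORAL_SKILL:
    print(d, "->", session_skills(d)[-1])
```

The six entries returned for any disorder correspond to the six skills later rated on the YACS.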

Measures

Online tutorial

Improvement in trainees’ knowledge of CBT concepts and techniques was evaluated using a 46-item multiple-choice pre- and posttest. Item development followed continuing education guidelines from the American Psychological Association and the National Association of Social Workers on the number of test items per hour of study and the balance of multiple-choice versus yes/no questions. Items were developed by domain experts (Drs. Craske, Rose, and Kobak) to ensure they accurately reflected the range of tutorial content. Items covered trainees’ understanding of CBT concepts and procedures. They also covered trainees’ understanding of common difficulties encountered and how to address them (Fairburn & Cooper, 2011). Trainees were not told the correct answers after the pretest. Posttests were given immediately after the modules covering the corresponding topic. The coefficient alpha of the test was .73 in our previous study (Kobak et al., 2013), and prestudy piloting showed good sensitivity to change.
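Coefficient alpha is reported for the knowledge test here and again for the YACS and the SUS below. The sketch shows the standard formula applied to a small made-up score matrix. It does not reflect the study's data or the software the authors used.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Coefficient alpha for a respondents-by-items matrix (one row per respondent).

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores)),
    using sample variances throughout. For items scored 0/1 (right/wrong
    multiple-choice items), this is equivalent to KR-20.
    """
    k = len(scores[0])
    if k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need a rectangular matrix with at least two items")
    item_variances = [variance([row[j] for row in scores]) for j in range(k)]
    total_variance = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Toy data: four respondents, two items.
print(round(cronbach_alpha([[1, 2], [2, 1], [3, 4], [4, 3]]), 2))  # 0.75
```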

Applied training

Improvements in clinical skill were evaluated using the Yale Adherence and Competence Scale (YACS; Carroll et al., 2000; Nuro et al., 2000). The YACS is a well-validated scale for rating therapist adherence and competence in delivering cognitive behavioral treatments for substance use disorders. In our previous study (Kobak et al., 2013), it showed good interrater reliability (ICC = .88) and internal consistency (coefficient α = .89). It also correlates well with measures of therapist alliance (Carroll et al., 2000). Each YACS item was rated on a 7-point scale ranging from very poor (1) to excellent (7). Each anchor point was operationalized, and guidelines were provided for rating it. The scale contained six items: three evaluating therapist skill in conducting cognitive restructuring and three evaluating therapist skill in conducting exposure therapy. These items correspond to the six skills practiced during the applied training.
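The Results report YACS totals (e.g., 14.8 at baseline, 32.6 after applied training) and treat a total of 30 or greater as adequate. This implies that the six item ratings were summed into a total with a possible range of 6–42. The sketch below assumes that summing; the function names are illustrative.

```python
YACS_ITEMS = 6                  # three cognitive restructuring + three exposure items
RATING_MIN, RATING_MAX = 1, 7   # very poor (1) to excellent (7)


def yacs_total(ratings):
    """Sum six 1-7 item ratings into a YACS total (possible range 6-42)."""
    if len(ratings) != YACS_ITEMS:
        raise ValueError(f"expected {YACS_ITEMS} ratings, got {len(ratings)}")
    if any(not RATING_MIN <= r <= RATING_MAX for r in ratings):
        raise ValueError("each rating must be between 1 and 7")
    return sum(ratings)


def share_at_or_above(totals, cutoff=30):
    """Proportion of trainees whose total meets the cutoff (30 = 'adequate' in the Results)."""
    return sum(t >= cutoff for t in totals) / len(totals)


print(yacs_total([5, 5, 5, 5, 5, 5]))         # 30
print(share_at_or_above([29, 30, 31, 12]))    # 0.5
```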

To evaluate the relative contribution of didactic and applied training to applied clinical skills, applied skills were evaluated with the YACS at three time points:

- (a) before the online tutorial,
- (b) after the online tutorial but before the applied training, and
- (c) after the applied training.

At each testing session, the trainee conducted the six CBT therapist skills covered in the applied training (described previously) with a mock patient. Each testing session was audiotaped and blindly rated by an independent, experienced CBT clinician who was not the CBT trainer. The two independent raters were experienced CBT clinicians: one current and one former postdoctoral fellow at the UCLA Anxiety Disorders Research Center and the Collaborative Center for Integrative Medicine, respectively. Both were licensed clinical psychologists with extensive experience conducting CBT and with published research on CBT interventions.

Before the study, raters were trained and calibrated on the YACS to ensure interrater reliability. Each rater independently coded six tapes, and the ratings and their rationales were discussed until the raters reached a consensus score and a common understanding of the YACS guidelines. To minimize expectancy bias, raters were blinded to whether a tape came from the initial, middle, or final testing session. Tapes were prescreened to edit out any comments that would identify the testing session number. This step was taken because knowing the temporal order of sessions significantly affects raters’ judgments of improvement (Quinn et al., 2002). During testing sessions, the clinician portraying the patient was instructed not to “break role” to provide feedback.
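The text describes two blinding safeguards: editing out spoken cues and hiding which testing session a tape came from. The second safeguard can be sketched as assigning opaque codes after shuffling. This is a hypothetical illustration, not the procedure the authors report using. The function name, the code format, and the idea of a separately held key are all assumptions.

```python
import random


def blind_tapes(tapes, seed=None):
    """Assign opaque codes to (trainee_id, session_number) tapes.

    Returns (rating_queue, key). The rater sees only the shuffled codes, while
    the key mapping codes back to trainee and session is held by someone else.
    Codes are assigned after shuffling, so their numbers carry no session order.
    """
    rng = random.Random(seed)
    order = list(tapes)
    rng.shuffle(order)
    key = {f"TAPE-{i:03d}": tape for i, tape in enumerate(order, start=1)}
    return list(key), key


queue, key = blind_tapes([("A", 1), ("A", 2), ("A", 3), ("B", 1), ("B", 2), ("B", 3)], seed=7)
print(queue)  # codes only; session numbers are recoverable solely through `key`
```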

User satisfaction

Satisfaction with the technical aspects of the tutorial was assessed using the System Usability Scale (SUS; Bangor, Kortum, & Miller, 2009; Brooke, 1996). The SUS is a reliable, well-validated 10-item scale designed to evaluate the usability of, and user satisfaction with, web-based applications and other technologies. Items are rated on a 5-point scale, with descriptions provided for the endpoints (1 = strongly disagree, 5 = strongly agree). A global rating of user-friendliness is also obtained. The SUS has shown good internal consistency in assessing usability across diverse types of user interfaces (e.g., web, interactive voice response [IVR], cell phone); in our sample, α = .87.
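The section does not describe how SUS responses were converted to a score. The sketch below follows the standard scoring from Brooke (1996). It assumes the ten items are given in their published order, with odd-numbered items positively worded and even-numbered items negatively worded.

```python
def sus_score(responses):
    """Standard SUS score (0-100) from ten 1-5 responses, item 1 first.

    Odd-numbered items contribute (response - 1); even-numbered items
    contribute (5 - response). The summed contributions (0-40) are
    multiplied by 2.5.
    """
    if len(responses) != 10:
        raise ValueError("the SUS has exactly 10 items")
    if any(not 1 <= r <= 5 for r in responses):
        raise ValueError("responses must be between 1 and 5")
    total = 0
    for item_number, r in enumerate(responses, start=1):
        total += (r - 1) if item_number % 2 == 1 else (5 - r)
    return total * 2.5


print(sus_score([3] * 10))      # 50.0 (neutral on every item)
print(sus_score([5, 1] * 5))    # 100.0 (best possible pattern)
```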

Descriptive data were gathered on user satisfaction with the clinical content of the online tutorial. Learning goals for each module were developed a priori (as required by APA continuing education guidelines), and participants were asked at the end of each module whether these goals were met (see Table 1). Participants were also asked to rate the course content along four dimensions using a 4-point scale (strongly agree, agree, disagree, strongly disagree; see Table 2). Global ratings of how much was learned from the online tutorial and overall user satisfaction were also included. Descriptive data were also gathered on user satisfaction with the applied training. Participants rated their experience along six dimensions, including the impact of training on one’s clinical skills, the ability to apply the skills learned with clients, and the effectiveness of the instructor. Items were rated on a 4-point scale (strongly agree, agree, disagree, strongly disagree; see Table 3). A global rating of how much was learned as a result of the applied training was also included.

Table 1.

Learning Objectives by Module: Cognitive Behavior Therapy (CBT) Tutorial

| Module | Learning Goals |
|---|---|
| 1: Welcome Video | Describe the goals of the tutorial |
| 2: Theoretical Principles of CBT | Describe what cognitive behavior therapy is; Summarize the difference between cognitive therapy and behavior therapy; Explain the nature of the therapeutic relationship in CBT; Describe how to enhance client motivation in CBT |
| 3: Teaching Clients About the Nature of Anxiety | Describe the normal function of anxiety; Describe the purpose of the physical symptoms of anxiety; Explain the difference between normal anxiety and anxiety disorders |
| 4: Explaining Treatment Rationale to Clients | Explain the CBT treatment approach; Describe why the client’s understanding of the treatment rationale is important; Describe the three parts of anxiety; Explain the anxiety cycle and how it works |
| 5: Teaching Clients Self-Assessment Skills | Explain to clients the purpose of ongoing recording of anxiety; Teach clients how, what, and when to record anxiety |
| 6: Helping Clients Develop a Fear Hierarchy | Recognize different forms of avoidant behavior; Explain to clients how avoidance maintains and exacerbates their anxiety; Help clients develop a fear hierarchy |
| 7: Teaching Clients Breathing Techniques | Describe the mechanics of breathing and what it means to overbreathe; Teach clients diaphragmatic breathing as a skill to prevent overbreathing |
| 8: Managing Anxious Thinking (Cognitive Restructuring) | Explain the three steps of cognitive restructuring; Describe to clients the impact of thoughts on emotions; Teach clients how to examine and challenge their negative thinking; Teach clients how to challenge catastrophic thoughts; Initiate the thought challenging process; Handle common obstacles encountered in thought challenging |
| 9: Exposure Therapy | Explain the difference between cognitive restructuring and exposure therapy; Describe the goals and critical factors in exposure therapy; Explain the therapist’s role in exposure therapy; List the 7 steps in designing exposure practice; Describe the most common reasons clients don’t complete exposure assignments and how to handle them; Explain the rationale for the frequency, timing, and duration of exposure sessions |

Table 2.

Mean Satisfaction Ratings on the Clinical Content of the Online Tutorial (N = 70)

| Item | M (SD) |
|---|---|
| 1. The material was presented in an interesting manner. | 3.4 (0.62) |
| 2. The concepts were clearly presented and easy to understand. | 3.5 (0.61) |
| 3. I would recommend this course to others. | 3.5 (0.65) |
| 4. I enjoyed taking this tutorial. | 3.7 (0.48) |
| 5. Overall, how satisfied were you with this tutorial? | 3.5 (0.59) |

Note. Items 1–4 were rated on a scale of 1 = strongly disagree, 2 = disagree, 3 = agree, 4 = strongly agree. Item 5 was rated on a scale of 1 = very dissatisfied, 2 = dissatisfied, 3 = satisfied, 4 = very satisfied.

Table 3.

Mean Satisfaction Ratings on Applied Training Conducted via Videoconference (N = 48)

| Item | M (SD) |
|---|---|
| 1. I feel able to apply the skills reviewed in the training sessions with clients. | 3.5 (0.55) |
| 2. The program enhanced my professional expertise. | 3.7 (0.47) |
| 3. The instructor knew the subject matter. | 3.9 (0.31) |
| 4. The instructor answered my questions effectively. | 3.8 (0.41) |
| 5. Overall, I was satisfied with the applied training sessions. | 3.7 (0.45) |

Note. Items 1–4 were rated on a scale of 1 = strongly disagree, 2 = disagree, 3 = agree, 4 = strongly agree. Item 5 was rated on a scale of 1 = very dissatisfied, 2 = dissatisfied, 3 = satisfied, 4 = very satisfied.

Clinical outcomes

To evaluate whether training improved treatment outcomes, participants were asked to follow at least one patient initiating treatment for an anxiety disorder for 8 weeks after completing training. Twenty-five trainees participated, and 33 patients were followed. The treating clinicians determined patients’ diagnoses via unstructured clinical interview: GAD (n = 14), panic disorder (n = 8), social anxiety disorder (n = 5), PTSD (n = 5), and OCD (n = 1).

Patients were evaluated weekly with the GAD-7, a self-report measure of anxiety (Bandelow & Brasser, 2009; Delgadillo et al., 2012; Kroenke, Spitzer, Williams, & Lowe, 2010; Kroenke, Spitzer, Williams, Monahan, & Lowe, 2007; Spitzer, Kroenke, Williams, & Lowe, 2006). The GAD-7 has good reliability and good criterion and construct validity. It has been used both as a screener and as an outcome measure for general anxiety severity across a range of anxiety disorders, including GAD, panic disorder, PTSD, and social anxiety disorder (Kroenke et al., 2010; Kroenke et al., 2007). Because depression is often comorbid with anxiety disorders, we also measured weekly levels of depressive symptomatology with the PHQ-2 (Kroenke, Spitzer, & Williams, 2003). The PHQ-2 has good psychometric properties for evaluating both depression severity and change over time.
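The section does not state how the weekly GAD-7 and PHQ-2 responses were scored. The sketch below uses the conventional scoring. GAD-7 items are rated 0–3 and summed to 0–21, with the commonly used severity cut points of 5, 10, and 15 (Spitzer et al., 2006). PHQ-2 items are rated 0–3 and summed to 0–6. The weekly series in the usage line is made up.

```python
def gad7_total(items):
    """Sum seven GAD-7 items, each scored 0-3 (total range 0-21)."""
    if len(items) != 7 or any(not 0 <= x <= 3 for x in items):
        raise ValueError("GAD-7 needs seven items scored 0-3")
    return sum(items)


def gad7_severity(total):
    """Conventional GAD-7 severity bands (cut points 5, 10, 15)."""
    if total >= 15:
        return "severe"
    if total >= 10:
        return "moderate"
    if total >= 5:
        return "mild"
    return "minimal"


def phq2_total(items):
    """Sum the two PHQ-2 items, each scored 0-3 (total range 0-6)."""
    if len(items) != 2 or any(not 0 <= x <= 3 for x in items):
        raise ValueError("PHQ-2 needs two items scored 0-3")
    return sum(items)


def pre_post_change(weekly_totals):
    """Change from first to last weekly total (negative = improvement)."""
    return weekly_totals[-1] - weekly_totals[0]


weekly_gad7 = [14, 12, 9, 7]  # hypothetical patient, weeks 1-4
print(gad7_severity(weekly_gad7[0]), "->", gad7_severity(weekly_gad7[-1]))  # moderate -> mild
print(pre_post_change(weekly_gad7))  # -7
```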

Use in clinical practice

Because both dissemination and implementation were study goals, we assessed the extent to which trainees actually used CBT with their clients. Trainees answered this single question before training and again via email 2 months after training: “How often do you use CBT with your clients?” (never, rarely, occasionally, often, very often, almost exclusively).

Statistical Analyses

Paired t tests were used to test mean change in conceptual knowledge from pretest to posttest on the online tutorial. They were also used to test pre- to posttreatment change in self-reported anxiety (GAD-7) and depression (PHQ-2). A repeated-measures analysis of variance (ANOVA) was used to evaluate change in YACS scores across three time points: pretest, post-online tutorial, and post-applied training. Pairwise comparisons were conducted with Bonferroni correction. All tests were two-tailed. Standardized mean effect sizes (Cohen’s d) for the change measures were calculated following Cohen (1988).1 Effect sizes of .20, .50, and .80 were considered small, medium, and large, respectively (Cohen, 1992). Assuming a medium effect size of .50, a sample of 50 participants would have power of .93 to detect significant change on the YACS.
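The power figure can be checked approximately by simulation. The text does not say how .93 was obtained. This sketch assumes a two-tailed paired comparison of two time points at α = .05, with d defined on the difference scores. The omnibus three-level repeated-measures ANOVA would give a somewhat different value. The critical t for df = 49 is taken from t tables rather than computed.

```python
import math
import random

N = 50               # sample size from the text
EFFECT_SIZE = 0.5    # assumed standardized mean change (medium effect)
T_CRIT = 2.0096      # two-tailed critical t, alpha = .05, df = N - 1 = 49 (t table value)


def simulated_power(reps=20000, seed=1):
    """Monte Carlo power of a two-tailed paired t test with N pairs.

    Each replicate draws N difference scores from Normal(EFFECT_SIZE, 1), so the
    standardized mean difference equals EFFECT_SIZE, and counts |t| > T_CRIT.
    """
    rng = random.Random(seed)
    rejections = 0
    for _ in range(reps):
        diffs = [rng.gauss(EFFECT_SIZE, 1.0) for _ in range(N)]
        mean = sum(diffs) / N
        sd = math.sqrt(sum((x - mean) ** 2 for x in diffs) / (N - 1))
        t = mean / (sd / math.sqrt(N))
        if abs(t) > T_CRIT:
            rejections += 1
    return rejections / reps


print(round(simulated_power(), 2))  # close to the .93 stated in the text
```

Because T_CRIT is hard-coded for df = 49, N is kept as a module constant rather than a function argument. Changing N would require a new critical value.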

Results

Online Tutorial

Improvement in conceptual knowledge

The mean number of correct answers changed significantly from pre- to posttutorial, from 20.2 (SD = 3.8) to 34.8 (SD = 4.1), t(69) = 25.56, p < .001, Cohen’s d = 3.68, 95% CI [3.40, 3.97]. An examination of results by course content found significant changes across all content areas (see Table 4). After taking the tutorial, 46 trainees (66%) scored 75% correct or better (i.e., 34 of 45 items correct), compared with none before taking the tutorial.
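The value of Cohen's d depends on the standardizer used. The paper's own formula (its Note 1) is not reproduced in this section, so the sketch below compares two common choices using the summary statistics reported above. Dividing the mean change by the pooled pre/post SD gives about 3.69, close to the reported 3.68. Dividing by the SD of the change scores, recovered from t and n, gives about 3.06. This illustrates the arithmetic only; it makes no claim about which formula the authors used.

```python
import math


def d_pooled(m_pre, sd_pre, m_post, sd_post):
    """Mean change divided by the root mean square of the pre and post SDs."""
    return (m_post - m_pre) / math.sqrt((sd_pre ** 2 + sd_post ** 2) / 2)


def d_from_paired_t(t, n):
    """Mean change divided by the SD of the difference scores (often called d_z)."""
    return t / math.sqrt(n)


# Summary statistics reported above for the knowledge test.
print(round(d_pooled(20.2, 3.8, 34.8, 4.1), 2))  # 3.69
print(round(d_from_paired_t(25.56, 70), 2))      # 3.06
```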

Table 4.

Mean Number of Correct Answers on Pre- and Posttest and Effect Sizes by Module: Online CBT Tutorial (N = 70)

| Module | M (SD) pretest | M (SD) posttest | t | p | Effect size (d) | 95% CI for effect size |
|---|---|---|---|---|---|---|
| All modules | 20.2 (3.8) | 34.8 (4.1) | 25.56 | < .001 | 3.68 | [3.40, 3.97] |
| Modules 1–5 (Principles of CBT, Nature of Anxiety, Orientation to Treatment, Self-Assessment Skills) | 5.0 (1.7) | 7.9 (1.5) | 11.73 | < .001 | 1.78 | [1.48, 2.08] |
| Modules 6–7 (Developing Fear Hierarchy, Breathing Techniques) | 3.7 (1.6) | 8.1 (1.2) | 18.67 | < .001 | 2.88 | [2.57, 3.19] |
| Module 8 (Managing Anxious Thinking [Cognitive Restructuring]) | 5.3 (1.8) | 8.1 (1.4) | 11.12 | < .001 | 1.80 | [1.48, 2.13] |
| Module 9 (Exposure Therapy) | 6.1 (1.7) | 10.6 (1.8) | 15.85 | < .001 | 2.55 | [2.23, 2.87] |

Learning objectives

Thirty learning objectives were identified a priori for the nine core modules of the online tutorial (see Table 1). After completing the tutorial, trainees rated the learning objectives as met 97% of the time on average. Their mean rating of how much they had learned from the tutorial was 4.3 (on a scale from 1 = very little to 5 = a great deal).

Applied Training

Improvements in applied clinical skills

A significant difference was found between the three time points on mean YACS score, F(2, 94) = 121.77, p < .001. Bonferroni-corrected pairwise comparisons are presented in Table 5. The mean YACS score improved significantly from baseline to posttutorial (mean change = 10.0, SD = 6.5, p < .001), from posttutorial to postapplied training (mean change = 7.7, SD = 6.6, p < .001), and from baseline to postapplied training (mean change = 17.7, SD = 5.6, p < .001). The overall improvement from baseline (14.8) to postapplied training (32.6) corresponds roughly to an increase from poor (defined as the therapist showing a clear lack of expertise, understanding, and competence) to the midpoint between adequate (defined as characteristic of the average, “good enough” therapist) and good (defined as slightly better than the average clinician). Only one trainee (2%) scored 30 or greater (adequate) on the YACS at baseline; 18 (38%) did so after the online tutorial, and 33 (70%) after the applied training.

Table 5.

Mean (SD) Yale Adherence and Competence Scale (YACS) Total Scores: Pairwise Comparisons by Time Point

| Comparison | M (SD), earlier time point | M (SD), later time point | M (SD) change | p | Effect size (d) | 95% CI for effect size |
|---|---|---|---|---|---|---|
| Baseline vs. posttutorial | 14.8 (5.5) | 24.9 (7.4) | 10.0 (6.5) | < .001 | 1.54 | [1.08, 2.26] |
| Posttutorial vs. postapplied training | 24.9 (7.4) | 32.6 (5.8) | 7.7 (6.6) | < .001 | 1.16 | [0.77, 1.55] |
| Baseline vs. postapplied training | 14.8 (5.5) | 32.6 (5.8) | 17.7 (5.6) | < .001 | 3.14 | [2.62, 3.67] |

Note. N = 70 at baseline (Time 1) and posttutorial (Time 2); N = 48 at postapplied training (Time 3).
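Bonferroni correction, as applied to the pairwise comparisons in Table 5, multiplies each unadjusted p value by the number of comparisons (here three) and caps the result at 1; equivalently, each comparison is tested at α = .05 / 3 ≈ .0167. The p values below are hypothetical, chosen only to show a case where the correction changes a conclusion; the three YACS comparisons reported above (all p < .001) are significant with or without the adjustment. The function name is illustrative.

```python
def bonferroni(p_values):
    """Return Bonferroni-adjusted p values: p * m, capped at 1.0."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical unadjusted p values for three pairwise comparisons
raw = {
    "Time 1 vs. Time 2": 0.0004,
    "Time 2 vs. Time 3": 0.012,
    "Time 1 vs. Time 3": 0.030,
}
adjusted = bonferroni(list(raw.values()))
for (label, p_raw), p_adj in zip(raw.items(), adjusted):
    verdict = "significant" if p_adj < 0.05 else "not significant"
    print(f"{label}: raw p = {p_raw:.4f}, adjusted p = {p_adj:.4f} ({verdict})")
```

With these made-up inputs, the third comparison (raw p = .030) is no longer significant once multiplied by 3.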

A significant difference between the three time points was also found on both YACS subscales, F(2, 94) = 92.72, p < .001, for the Cognitive Skills subscale, and F(2, 94) = 88.23, p < .001, for the Exposure Skills subscale, suggesting that the overall improvement was driven by changes in both skill sets. Pairwise comparisons were significant for improvements from baseline to posttutorial (mean change: Cognitive subscale = 7.7, SD = 3.4, p < .001; Exposure subscale = 7.1, SD = 3.0, p < .001), from posttutorial to postapplied training (Cognitive subscale = 13.3, SD = 4.2, p < .001; Exposure subscale = 11.5, SD = 4.2, p < .001), and from baseline to postapplied training (Cognitive subscale = 16.5, SD = 2.9, p < .001; Exposure subscale = 16.1, SD = 3.6, p < .001).

User Satisfaction

Technical feasibility

The mean score on the System Usability Scale (SUS), which evaluated user satisfaction with the technical aspects of the online tutorial, was 88.2 (SD = 13.2; possible range 0–100), which corresponds to excellent on the SUS adjective scale (see Table 6). Every trainee’s SUS score fell in the OK range or higher. The mean score on the single-item global rating of user friendliness was 5.8 (between good and excellent; range = 1 [worst imaginable] to 7 [best imaginable]).

Table 6.

System Usability Scale (SUS) Scores for the Online Tutorial (N = 70)

| Adjective | SUS cutoff score | M (SD) in the current study |
|---|---|---|
| Worst imaginable | 12.5 | |
| Awful | 20.3 | |
| Poor | 35.7 | |
| OK | 50.9 | |
| Good | 71.4 | |
| Excellent | 85.5 | 88.2 (13.2) |
| Best imaginable | 90.0 | |

Note. SUS cutoff scores were obtained from Bangor et al. (2009).
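The SUS total reported above is conventionally computed with Brooke's (1996) scoring rule: each odd-numbered (positively worded) item contributes its 1–5 response minus 1, each even-numbered (negatively worded) item contributes 5 minus the response, and the sum is multiplied by 2.5 to give a 0–100 score. The sketch pairs that rule with the Table 6 cutoffs. Treating those values as lower bounds (a score receives the highest adjective whose cutoff it reaches) is our reading of the table, and the single respondent's answers and the function names are hypothetical.

```python
from bisect import bisect_right

# Cutoffs as listed in Table 6 (Bangor et al., 2009), in ascending order
SUS_CUTOFFS = [
    (12.5, "Worst imaginable"),
    (20.3, "Awful"),
    (35.7, "Poor"),
    (50.9, "OK"),
    (71.4, "Good"),
    (85.5, "Excellent"),
    (90.0, "Best imaginable"),
]


def sus_score(responses):
    """Standard SUS scoring (Brooke, 1996) for ten 1-5 Likert responses."""
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("expected ten responses on a 1-5 scale")
    total = 0
    for i, r in enumerate(responses, start=1):
        # Odd-numbered items are positively worded, even-numbered negatively.
        total += (r - 1) if i % 2 == 1 else (5 - r)
    return total * 2.5


def sus_adjective(score):
    """Highest Table 6 adjective whose cutoff the score reaches;
    scores below the lowest cutoff are labeled 'Worst imaginable'."""
    values = [cut for cut, _ in SUS_CUTOFFS]
    idx = bisect_right(values, score) - 1
    return SUS_CUTOFFS[max(idx, 0)][1]


resp = [5, 1, 5, 2, 4, 1, 5, 1, 5, 2]  # hypothetical single respondent
score = sus_score(resp)
print(score, sus_adjective(score))  # 92.5 Best imaginable
print(sus_adjective(88.2))          # Excellent (the study's mean score)
```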

Online tutorial

Satisfaction with the clinical content of the tutorial is presented in Table 2. Mean ratings on all items fell between agree and strongly agree, and the mean overall satisfaction rating fell between satisfied and very satisfied. Ninety-five percent of participants said they would recommend the tutorial to other clinicians.

Applied training

Clinician feedback on the applied training conducted by videoconference is presented in Table 3. Ratings on all dimensions were at or above the midpoint between agree and strongly agree. The mean rating of how much they learned as a result of the applied training sessions was 4.6 (rated on a scale from 1 = very little to 5 = a great deal).

Patient Outcomes

Because the ultimate goal of disseminating CBT training is to improve patient outcomes, we evaluated patients whom trainees treated after completing the training protocol, as a secondary and preliminary measure of the clinical impact of the training on real patients. Patient scores on the GAD-7 were examined before the start of CBT and after 8 weeks of treatment. Mean GAD-7 scores dropped from 13.7 (SD = 4.9) at baseline to 6.6 (SD = 5.2) at Week 8, t(32) = 7.39, p < .001, Cohen’s d = 1.39, 95% CI [1.00, 1.77]. A score of 10 represents a “moderate” level of anxiety, and a score under 5 is considered subclinical (Spitzer, et al., 2006). As a secondary clinical outcome measure, we examined pre- and posttreatment depression scores on the PHQ-2. The mean PHQ-2 score dropped from 2.7 (SD = 1.7) at baseline to 1.3 (SD = 1.7) at 8 weeks, t(32) = 4.95, p < .001, Cohen’s d = 0.86, 95% CI [0.50, 1.21]. A score of 3 or greater on the PHQ-2 indicates a clinical level of depressive symptomatology (Kroenke, et al., 2003).
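The GAD-7 interpretation above follows the severity bands of Spitzer et al. (2006): totals of 0–4, 5–9, 10–14, and 15–21 indicate minimal, mild, moderate, and severe anxiety. For the PHQ-2, a total of 3 or more is the usual screening cutoff (Kroenke et al., 2003). The helper functions below are a hypothetical sketch. Applying the bands to group means, as in the last lines, is only a rough summary, since individual patients will fall on both sides of each cutoff.

```python
def gad7_severity(score):
    """Map a GAD-7 total (0-21) to the Spitzer et al. (2006) severity bands."""
    if not 0 <= score <= 21:
        raise ValueError("GAD-7 totals range from 0 to 21")
    if score < 5:
        return "minimal"
    if score < 10:
        return "mild"
    if score < 15:
        return "moderate"
    return "severe"


def phq2_screen_positive(score, cutoff=3):
    """True when a PHQ-2 total (0-6) meets the usual screening cutoff of 3."""
    if not 0 <= score <= 6:
        raise ValueError("PHQ-2 totals range from 0 to 6")
    return score >= cutoff


# Group means reported above (not individual patients' scores)
for label, gad7_mean, phq2_mean in [("baseline", 13.7, 2.7), ("week 8", 6.6, 1.3)]:
    print(label, gad7_severity(gad7_mean), phq2_screen_positive(phq2_mean))
# baseline moderate False
# week 8 mild False
```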

Use of CBT in Clinical Practice

We examined the extent to which clinicians reported using CBT in their clinical practice after the training protocol compared with before the training. At 2-month follow-up, the percentage of clinicians who reported using CBT techniques “often” or “very often” increased significantly, from 51% before training to 87% after training, χ²(1) = 17.47, p < .001.
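For a 2 × 2 table of counts, the Pearson chi-square statistic sums (observed − expected)² / expected over the four cells, where each expected count is row total × column total / N; with 1 df its p value equals erfc(√(χ²/2)), so no statistics library is needed. The clinician counts behind the 51% and 87% figures are not given in this section, so the counts below are hypothetical, and whether the reported test used a continuity correction is not stated (this sketch applies none). The function name is illustrative.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the 2x2 table
    [[a, b], [c, d]], returned with its df = 1 p value.

    For df = 1 the chi-square survival function equals erfc(sqrt(x / 2)).
    """
    n = a + b + c + d
    rows = (a + b, c + d)
    cols = (a + c, b + d)
    observed = ((a, b), (c, d))
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = rows[i] * cols[j] / n
            chi2 += (observed[i][j] - expected) ** 2 / expected
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# Hypothetical counts: rows = before/after training,
# columns = "often or very often" vs. less often
chi2, p = chi_square_2x2(10, 20, 20, 10)
print(f"chi2(1) = {chi2:.2f}, p = {p:.4f}")
```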

Discussion

EBTs are vastly underutilized in community settings. In this study, our goal was to evaluate a training methodology designed to increase the dissemination of therapist training to community therapists. We evaluated the training program’s effectiveness in improving therapists’ skill, subsequent client outcomes, and the extent to which the skills were implemented in clinical practice following training. The results demonstrate that this training methodology was acceptable and effective in training clinicians in CBT for anxiety disorders.

Another key goal of the study was to examine the relative impact of didactic and applied training on clinical skills, specifically, whether adding applied training incrementally improves clinician skill beyond that developed via the web tutorial. When cost effectiveness is paramount, the degree to which applied training improves clinical skills beyond didactic training alone needs to be evaluated, given the additional time and cost that live training involves. Although didactic training produced significant skill improvement, the question is whether it alone is enough to equip clinicians to conduct CBT adequately. Didactic training raised the mean YACS score from 14.8 (poor) to 24.9 (midpoint between acceptable and adequate). However, the addition of applied training further improved the mean YACS score to 32.6 (midpoint between adequate and good). These findings suggest that applied training improves skills further, to a clinically significant degree, and that investing in live, expert videoconference training may be worthwhile. Indeed, prior research has found that other, presumably less resource-intensive options for ongoing training after web-based training result in decreases in knowledge over time (e.g., peer consultation; Chu, Carpenter, Wyszynski, Conklin, & Comer, 2015). Our findings are also in line with research on clinical supervision of EBPs showing that role plays with expert supervisors lead to better use of EBPs than discussion during supervision (Bearman et al., 2013). Supplementing online training with motivational interviewing and regular group calls with a supervisor after training has also been shown to increase clinical proficiency and knowledge of course content (Harned et al., 2014).
Taken together, a growing body of research points to the value of role-play skill practice and expert involvement after didactic training for maintaining high fidelity to CBT and other EBPs.

Thus, although we did not rate treatment fidelity during actual sessions with real patients following training, we can speculate that the improvement in skill level on the YACS that resulted from the applied training increases the likelihood that treatment is delivered with fidelity in real clinical practice, an issue stressed by the Institute of Medicine’s recent report on evidence-based standards for psychosocial treatments (Institute of Medicine, 2015). The report’s call for “performance samples” to evaluate the quality with which an EBT is delivered also speaks to the need for an applied training component. It should be noted that although 73% of trainees reported using some CBT techniques prior to training, their mean pretraining YACS score of 14.8 (poor) suggests that these techniques may not have been implemented correctly. Moreover, most clinicians (87%) reported using CBT often or very often after training, compared with about half (51%) before training. Although these data are limited to self-report, which may not reflect actual practice, they are promising and suggest that the applied training may have increased clinicians’ self-efficacy to deliver CBT more regularly in clinical practice. Future research should more thoroughly examine clinicians’ uptake of EBTs after training (e.g., by rating a subsample of real therapy tapes a few months following training) and explore provider-level factors that may contribute to this uptake (e.g., increased self-efficacy to deliver CBT).

A caveat to these findings is that although we observed significant pre- to posttraining increases in trainees’ clinical skills, we did not evaluate trainees’ clinical outcomes with actual clients before training. Because there was no pretraining assessment of trainees’ effectiveness with real patients, we do not know how much the training improved clinical outcomes in real clinical practice. Thus, although the therapy administered by the trained clinicians was effective, we do not know whether the training made it more effective than it would have been without the training. Another limitation is that the analyses included only trainees who completed each phase of the study; it is possible that those who completed all phases were those who benefited most from the intervention.

An important factor in the success of any online training intervention is the degree to which users find the program engaging and worthwhile, which in turn affects whether clinicians complete the training and implement the skills successfully with patients. In the current study, user satisfaction was high, and completion rates among participants who started the didactic and applied components were about 75% (70 of 94 and 48 of 63, respectively). This is somewhat higher than completion rates for typical online courses (Park & Choi, 2009; Yang, Sinha, Adamson, & Rose, 2013), although the overall completion rate (including participants who completed Part 1 but dropped out before starting Part 2) was 51% (48 of 94). Possible contributors to dropout from the applied training include time and scheduling constraints (live one-on-one training is time and labor intensive, even though videoconferencing reduces geographic barriers) and performance anxiety (many trainees were not used to being observed in practice, as this is not currently the norm in continuing education). Although these factors may limit feasibility to some extent, applied training still represents a net gain given its general absence from continuing education.

As discussed in a recent series of commentaries (Cabaniss, Wainberg, & Oquendo, 2015; Hollon, 2015; Roy-Byrne, 2015), the Institute of Medicine (2015) also recently advocated for an “elements” approach to future training in EBTs, in which the common “active ingredients” of effective treatments across therapeutic modalities are identified. Although the tutorial does offer a modular approach to specific skills such as cognitive restructuring and exposure therapy, it is unknown whether teaching these skills “à la carte” would have the same impact on therapist skill and client outcomes.

It should be noted that lack of access to training in EBTs is only one reason for their underutilization. Other reasons include lack of time and support for such training among those working in community organizations (Herschell, et al., 2010) and negative beliefs about EBTs (e.g., that they impair the therapeutic relationship, limit clinical decision making, are too manualized and thus robotic, and do not translate well outside the research environment; Curran, et al., 2015; Shafran, et al., 2009; Stewart, et al., 2012). Negative beliefs about specific treatment components, such as fears that exposure therapy will harm clients, have also been identified (Deacon et al., 2013) and discredited by scientific evidence (for a review, see Olatunji, Deacon, & Abramowitz, 2009). Curran et al. (2015) described several approaches for overcoming these biases, including providing clinicians with empirical evidence to counter these beliefs and listening to and working with clinicians to flexibly integrate EBTs within their current practice approach.

Although this study advances the literature on developing and evaluating cost-effective, novel approaches to clinician training in EBTs, some limitations are worth noting. First, practical constraints necessitated a within-subjects design. The lack of a randomized controlled design precludes direct comparison of this training method with other training methods (e.g., web training vs. web training plus applied training). However, the within-subjects design does allow us to evaluate the incremental effectiveness of each step of the protocol. Similarly, we did not use a randomized controlled trial to compare patient outcomes between clinicians who participated in the training and those who did not; thus, we do not know whether patients treated by the participating clinicians would have done equally well had the clinicians not undergone the training. A future randomized controlled trial focusing on clinical outcomes is warranted. Finally, only about half of the clinicians who completed the applied training participated in the posttraining follow-up on patient impact. Because participation was not random but depended on limits placed on clinicians by their employers, we do not know whether similar results would generalize to all clinicians.

Conclusions

These results provide evidence supporting this two-stage training model, which uses new technologies to improve clinician knowledge and skill in conducting CBT for anxiety disorders. User satisfaction was high, and treatment administered posttraining was effective. Future research randomizing community clinicians to training versus no training would help determine the validity of these findings. If confirmed, such findings would support the use of this technology as a viable method for increasing the dissemination of CBT training. Future research on therapist training interventions should include patient-reported outcomes in the methodology.

Acknowledgments

Funding: This study was funded with federal funds from the National Institute of Mental Health, National Institutes of Health, Department of Health and Human Services, under Small Business Innovation Research (SBIR) Grant No. R43MH086951.

Footnotes

Statistical software was provided by David B. Wilson, PhD (http://mason.gmu.edu/~dwilsonb/ma.html) and accessed through the APA website (http://www.apa.org/pubs/journals/pst/resources.aspx).

Conflict of Interest: Drs. Kobak, Craske, and Rose own the intellectual property described in this research and have a financial interest in the online tutorial.

Compliance With Ethical Standards:

Ethical Approval: All procedures performed in this study involving human participants were in accordance with the ethical standards of the Institutional Review Board and with the 1964 Declaration of Helsinki and its later amendments. Informed consent was obtained from all individual participants included in the study. This article does not contain any studies with animals performed by any of the authors.

References

- American Psychological Association. Task force on promotion and dissemination of psychological procedures. 1993. Retrieved from www.apa.org/divisions/div12/est/chamble2.pdf.

- American Psychological Association. Criteria for evaluating treatment guidelines. American Psychologist. 2002;57:1052–1059. doi: 10.1037//0003-066X.57.12.1052.

- American Psychological Association. Guidelines and principles for accreditation of programs in professional psychology. 2007. Retrieved from www.apa.org/ed/accreditation/about/policies/guiding-principles.pdf.

- APA Presidential Task Force on Evidence-Based Medicine. Evidence-based practice in psychology. American Psychologist. 2006;61:271–285. doi: 10.1037/0003-066X.61.4.271.

- Bandelow B, Brasser M. Clinical suitability of GAD-7 scale compared to hospital anxiety and depression scale-A for monitoring treatment effects in generalized anxiety disorder. European Neuropsychopharmacology. 2009;19:S604–S605. doi: 10.1016/S0924-977X(09)70970-8.

- Bangor A, Kortum P, Miller J. Determining what individual SUS scores mean: Adding an adjective rating scale. Journal of Usability Studies. 2009;4:114–123.

- Barnett JE. Utilizing technological innovations to enhance psychotherapy supervision, training, and outcomes. Psychotherapy (Chic). 2011;48:103–108. doi: 10.1037/a0023381.

- Bearman SK, Weisz JR, Chorpita BF, Hoagwood K, Ward A, Ugueto AM, … The Research Network on Youth Mental Health. More practice, less preach? The role of supervision processes and therapist characteristics in EBP implementation. Administration and Policy in Mental Health. 2013;40:518–529. doi: 10.1007/s10488-013-0485-5.

- Beidas RS, Edmunds JM, Marcus SC, Kendall PC. Training and consultation to promote implementation of an empirically supported treatment: A randomized trial. Psychiatric Services. 2012;63:660–665. doi: 10.1176/appi.ps.201100401.

- Beveridge RM, Fowles TR, Masse JJ, McGoron L, Smith MA, Parrish BP, … Widdoes N. State-wide dissemination and implementation of parent–child interaction therapy (PCIT): Application of theory. Children and Youth Services Review. 2015;48:38–48. doi: 10.1016/j.childyouth.2014.11.013.

- Brooke J. SUS: A “quick and dirty” usability scale. In: Jordan PW, Thomas B, Weerdmeester BA, McClelland IL, editors. Usability evaluation in industry. London, England: Taylor & Francis; 1996. pp. 189–194.

- Cabaniss DL, Wainberg ML, Oquendo MA. Evidence-based psychosocial interventions: Novel challenges for training and implementation. Depression and Anxiety. 2015;32:802–804. doi: 10.1002/da.22437.

- Carroll KM, Nich C, Sifry RL, Nuro KF, Frankforter TL, Ball SA, Rounsaville BJ. A general system for evaluating therapist adherence and competence in psychotherapy research in the addictions. Drug and Alcohol Dependence. 2000;57:225–238. doi: 10.1016/s0376-8716(99)00049-6.

- Cartreine JA, Ahern DK, Locke SE. A roadmap to computer-based psychotherapy in the United States. Harvard Review of Psychiatry. 2010;18:80–95. doi: 10.3109/10673221003707702.

- Chu BC, Carpenter AL, Wyszynski CM, Conklin PH, Comer JS. Scalable options for extended skill building following didactic training in cognitive-behavioral therapy for anxious youth: A pilot randomized trial. Journal of Clinical Child and Adolescent Psychology. 2015. Advance online publication. doi: 10.1080/15374416.2015.1038825.

- Cohen J. Statistical power analysis for the behavioral sciences. 2nd ed. Hillsdale, NJ: Erlbaum; 1988.

- Cohen J. A power primer. Psychological Bulletin. 1992;112:155–159. doi: 10.1037//0033-2909.112.1.155.

- Curran GM, Woo SM, Hepner KA, Lai WP, Kramer TL, Drummond KL, Weingardt K. Training substance use disorder counselors in cognitive behavioral therapy for depression: Development and initial exploration of an online training program. Journal of Substance Abuse Treatment. 2015;58:33–42. doi: 10.1016/j.jsat.2015.05.008.

- Deacon BJ, Farrell NR, Kemp JJ, Dixon LJ, Sy JT, Zhang AR, McGrath PB. Assessing therapist reservations about exposure therapy for anxiety disorders: The Therapist Beliefs About Exposure Scale. Journal of Anxiety Disorders. 2013;27:772–780. doi: 10.1016/j.janxdis.2013.04.006.

- Decker SE, Jameson MT, Naugle AE. Therapist training in empirically supported treatments: A review of evaluation methods for short- and long-term outcomes. Administration and Policy in Mental Health. 2011;38:254–286. doi: 10.1007/s10488-011-0360-1.

- Delgadillo J, Payne S, Gilbody S, Godfrey C, Gore S, Jessop D, Dale V. Brief case finding tools for anxiety disorders: Validation of GAD-7 and GAD-2 in addictions treatment. Drug and Alcohol Dependence. 2012;125:37–42. doi: 10.1016/j.drugalcdep.2012.03.011.

- Dimeff LA, Harned MS, Woodcock EA, Skutch JM, Koerner K, Linehan MM. Investigating bang for your training buck: A randomized controlled trial comparing three methods of training clinicians in two core strategies of dialectical behavior therapy. Behavior Therapy. 2015;46:283–295. doi: 10.1016/j.beth.2015.01.001.

- Dimeff LA, Koerner K, Woodcock EA, Beadnell B, Brown MZ, Skutch JM, Harned MS. Which training method works best? A randomized controlled trial comparing three methods of training clinicians in dialectical behavior therapy skills. Behaviour Research and Therapy. 2009;47:921–930. doi: 10.1016/j.brat.2009.07.011.

- Dimeff LA, Woodcock EA, Harned MS, Beadnell B. Can dialectical behavior therapy be learned in highly structured learning environments? Results from a randomized controlled dissemination trial. Behavior Therapy. 2011;42:263–275. doi: 10.1016/j.beth.2010.06.004.

- Edmunds JM, Beidas RS, Kendall PC. Dissemination and implementation of evidence-based practices: Training and consultation as implementation strategies. Clinical Psychology (New York). 2013;20:152–165. doi: 10.1111/cpsp.12031.

- Eftekhari A, Ruzek JI, Crowley JJ, Rosen CS, Greenbaum MA, Karlin BE. Effectiveness of national implementation of prolonged exposure therapy in Veterans Affairs care. JAMA Psychiatry. 2013;70:949–955. doi: 10.1001/jamapsychiatry.2013.36.

- Fairburn CG, Cooper Z. Therapist competence, therapy quality, and therapist training. Behaviour Research and Therapy. 2011;49:373–378. doi: 10.1016/j.brat.2011.03.005.

- Fixsen DL, Blase KA, Duda MA, Naoom SF, Van Dyke M. Implementation of evidence-based treatments for children and adolescents: Research findings and their implications for the future. In: Weisz JR, Kazdin AE, editors. Evidence-based psychotherapies for children and adolescents. 2nd ed. New York, NY: Guilford Press; 2010. pp. 435–450.

- Gardner H. Multiple intelligences: The theory in practice. New York, NY: Basic Books; 1993.

- Harned MS, Dimeff LA, Woodcock EA, Kelly T, Zavertnik J, Contreras I, Danner SM. Exposing clinicians to exposure: A randomized controlled dissemination trial of exposure therapy for anxiety disorders. Behavior Therapy. 2014;45:731–744. doi: 10.1016/j.beth.2014.04.005.

- Herschell AD, Kolko DJ, Baumann BL, Davis AC. The role of therapist training in the implementation of psychosocial treatments: A review and critique with recommendations. Clinical Psychology Review. 2010;30:448–466. doi: 10.1016/j.cpr.2010.02.005.

- Hollon SD. IOM report on psychosocial treatments: CBT perspective. Depression and Anxiety. 2015;32:790–792. doi: 10.1002/da.22444.

- Institute of Medicine. Psychosocial interventions for mental and substance use disorders: A framework for establishing evidence based standards. Washington, DC: National Academies Press; 2015.

- Joyce B, Showers B. Meta-analysis on the effects of training and coaching. Chapel Hill, NC: National Implementation Research Network; 2002. (Publication No. 231)

- Kessler RC, Merikangas KR, Wang PS. Prevalence, comorbidity, and service utilization for mood disorders in the United States at the beginning of the twenty-first century. Annual Review of Clinical Psychology. 2007;3:137–158. doi: 10.1146/annurev.clinpsy.3.022806.091444.

- Khanna MS, Kendall PC. Bringing technology to training: Web-based therapist training to promote the development of competent cognitive-behavioral therapists. Cognitive and Behavioral Practice. 2015;22:291–301. doi: 10.1016/j.cbpra.2015.02.002.

- Kobak KA, Craske MG, Rose RD, Wolitsky-Taylor K. Web-based therapist training on cognitive behavior therapy for anxiety disorders: A pilot study. Psychotherapy. 2013;50:235–247. doi: 10.1037/a0030568. http://dx.doi.org/10.1037/a0030568. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kobak KA, Engelhardt N, Lipsitz JD. Enriched rater training using Internet based technologies: A comparison to traditional rater training in a multi-site depression trial. Journal of Psychiatric Research. 2006;40:192–199. doi: 10.1016/j.jpsychires.2005.07.012. http://dx.doi.org/10.1016/j.jpsychires.2005.07.012. [DOI] [PubMed] [Google Scholar]

- Kobak KA, Engelhardt N, Williams JB, Lipsitz JD. Rater training in multicenter clinical trials: Issues and recommendations. Journal of Clinical Psychopharmacology. 2004;24:113–117. doi: 10.1097/01.jcp.0000116651.91923.54. http://dx.doi.org/10.1097/01.jcp.0000116651.91923.54. [DOI] [PubMed] [Google Scholar]

- Kobak K, Lipsitz JD, Williams JB, Engelhardt N, Bellew KM. A new approach to rater training and certification in a multicenter clinical trial. Journal of Clinical Psychopharmacology. 2005;25:407–412. doi: 10.1097/01.jcp.0000177666.35016.a0. http://dx.doi.org/10.1097/01.jcp.0000177666.35016.a0. [DOI] [PubMed] [Google Scholar]

- Kobak K, Lipsitz J, Williams JBW, Engelhardt N, Jeglic E, Bellew K. Are the effects of rater training sustainable? Results from a multi-center trial. Journal of Clinical Psychopharmacology. 2007;27:534–535. doi: 10.1097/JCP.0b013e31814f4d71. http://dx.doi.org/10.1097/JCP.0b013e31814f4d71. [DOI] [PubMed] [Google Scholar]

- Kroenke K, Spitzer RL, Williams JB. The Patient Health Questionnaire-2: Validity of a two-item depression screener. Medical Care. 2003;41:1284–1292. doi: 10.1097/01.MLR.0000093487.78664.3C. http://dx.doi.org/10.1097/01.MLR.0000093487.78664.3C. [DOI] [PubMed] [Google Scholar]

- Kroenke K, Spitzer RL, Williams JB, Lowe B. The Patient Health Questionnaire Somatic, Anxiety, and Depressive Symptom Scales: A systematic review. General Hospital Psychiatry. 2010;32:345–359. doi: 10.1016/j.genhosppsych.2010.03.006. http://dx.doi.org/10.1016/j.genhosppsych.2010.03.006S0163-8343(10)00056-3. [DOI] [PubMed] [Google Scholar]

- Kroenke K, Spitzer RL, Williams JB, Monahan PO, Lowe B. Anxiety disorders in primary care: Prevalence, impairment, comorbidity, and detection. Annals of Internal Medicine. 2007;146:317–325. doi: 10.7326/0003-4819-146-5-200703060-00004. [DOI] [PubMed] [Google Scholar]

- Mayer RE, Moreno R. Nine ways to reduce cognitive load. Educational Psychologist. 2003;38:43–52. http://dx.doi.org/10.1207/S15326985EP3801_6. [Google Scholar]

- McHugh RK, Barlow DH. The dissemination and implementation of evidence-based psychological treatments: A review of current efforts. American Psychologist. 2010;65:73–84. doi: 10.1037/a0018121. http://dx.doi.org/10.1037/a00181212010-02208-010. [DOI] [PubMed] [Google Scholar]

- Miller WR, Mount KA. A small study of training in motivational interviewing: Does one workshop change clinician and client behavior? Behavioural and Cognitive Psychotherapy. 2001;29:457–471. http://dx.doi.org/10.1017/S1352465801004064. [Google Scholar]

- Nadeem E, Gleacher A, Beidas RS. Consultation as an implementation strategy for evidence-based practices across multiple contexts: Unpacking the black box. Administration and Policy in Mental Health. 2013;40:439–450. doi: 10.1007/s10488-013-0502-8. http://dx.doi.org/10.1007/s10488-013-0502-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- National Institute of Mental Health. Partnerships to integrate evidence-based mental health practices into social work education and research. Paper presented at the Institute for the Advancement of Social Work Research conference; Washington, DC. 2007. [Google Scholar]

- Nuro KF, Maccarelli L, Baker SM, Martino S, Rounsaville BJ, Carroll KM. Yale Adherence and Competence Scale (YACSII) guidelines. West Haven, CT: Yale University Psychotherapy Development Center; 2000.

- Olatunji BO, Deacon BJ, Abramowitz JS. The cruelest cure? Ethical issues in the implementation of exposure-based treatments. Cognitive and Behavioral Practice. 2009;16:172–180. doi: 10.1016/j.cbpra.2008.07.003.

- Olfson M, Mojtabai R, Sampson NA, Hwang I, Druss B, Wang PS, Kessler RC. Dropout from outpatient mental health care in the United States. Psychiatric Services. 2009;60:898–907. doi: 10.1176/appi.ps.60.7.898.

- Park JH, Choi HJ. Factors influencing adult learners’ decision to drop out or persist in online learning. Educational Technology & Society. 2009;12:207–217.

- Pidano AD, Whitcomb JM. Training to work with children and families: Results from a survey of psychologists and doctoral students. Training and Education in Professional Psychology. 2012;6:8–17. doi: 10.1037/a0026961.

- Quinn J, Moore M, Benson DF, Clark CM, Doody R, Jagust W, … Kaye JA. A videotaped CIBIC for dementia patients: Validity and reliability in a simulated clinical trial. Neurology. 2002;58:433–437. doi: 10.1212/wnl.58.3.433.

- Roediger HL, Karpicke JD. Test-enhanced learning: Taking memory tests improves long-term retention. Psychological Science. 2006;17:249–254. doi: 10.1111/j.1467-9280.2006.01693.x.

- Rose RD, Lang AJ, Welch SS, Campbell-Sills L, Chavira DA, Sullivan G, … Craske MG. Training primary care staff to deliver a computer-assisted cognitive-behavioral therapy program for anxiety disorders. General Hospital Psychiatry. 2011;33:336–342. doi: 10.1016/j.genhosppsych.2011.04.011.

- Roy-Byrne P. Translating research to practice: Too much research, not enough practice? Depression and Anxiety. 2015;32:785–786. doi: 10.1002/da.22443.

- Schoenwald SK, Kelleher K, Weisz JR. Building bridges to evidence-based practice: The MacArthur Foundation Child System and Treatment Enhancement Projects (Child STEPs). Administration and Policy in Mental Health. 2008;35:66–72. doi: 10.1007/s10488-007-0160-9.

- Schoenwald SK, McHugh RK, Barlow DH. The science of dissemination and implementation. In: McHugh RK, Barlow DH, editors. Dissemination and implementation of evidence-based psychological interventions. New York, NY: Oxford University Press; 2012.

- Shafran R, Clark DM, Fairburn CG, Arntz A, Barlow DH, Ehlers A, … Wilson GT. Mind the gap: Improving the dissemination of CBT. Behaviour Research and Therapy. 2009;47(11):902–909. doi: 10.1016/j.brat.2009.07.003.

- Sholomskas DE, Carroll KM. One small step for manuals: Computer-assisted training in twelve-step facilitation. Journal of Studies on Alcohol. 2006;67:939–945. doi: 10.15288/jsa.2006.67.939.

- Spitzer RL, Kroenke K, Williams JB, Lowe B. A brief measure for assessing generalized anxiety disorder: The GAD-7. Archives of Internal Medicine. 2006;166:1092–1097. doi: 10.1001/archinte.166.10.1092.

- Stewart RE, Chambless DL, Baron J. Theoretical and practical barriers to practitioners’ willingness to seek training in empirically supported treatments. Journal of Clinical Psychology. 2012;68:8–23. doi: 10.1002/jclp.20832.

- Stobie B, Taylor T, Quigley A, Ewing S, Salkovskis PM. Contents may vary: A pilot study of treatment histories of OCD patients. Behavioural and Cognitive Psychotherapy. 2007;35:273–282. doi: 10.1017/S135246580700358X.

- U.S. Department of Health and Human Services. Mental health: A report of the Surgeon General. Executive summary. 1999. Retrieved from http://mentalhealth.samhsa.gov/features/surgeongeneralreport/chapter6/sec2.asp.

- Vincent A, Ross D. Learning style awareness: A basis for developing teaching and learning strategies. Journal of Research on Technology in Education. 2001;33:1–10.

- Weingardt KR, Cucciare MA, Bellotti C, Lai WP. A randomized trial comparing two models of web-based training in cognitive-behavioral therapy for substance abuse counselors. Journal of Substance Abuse Treatment. 2009;37:219–227. doi: 10.1016/j.jsat.2009.01.002.

- Weissman MM, Verdeli H, Gameroff MJ, Bledsoe SE, Betts K, Mufson L, … Wickramaratne P. National survey of psychotherapy training in psychiatry, psychology, and social work. Archives of General Psychiatry. 2006;63:925–934. doi: 10.1001/archpsyc.63.8.925.

- Weisz JR, Hawley KM, Doss AJ. Empirically tested psychotherapies for youth internalizing and externalizing problems and disorders. Child and Adolescent Psychiatric Clinics of North America. 2004;13:729–815. doi: 10.1016/j.chc.2004.05.006.

- Westra HA, Arkowitz H, Dozois DJA. Adding a motivational interviewing pretreatment to cognitive behavioral therapy for generalized anxiety disorder: A preliminary randomized controlled trial. Journal of Anxiety Disorders. 2009;23:1106–1117. doi: 10.1016/j.janxdis.2009.07.014.

- Westra HA, Dozois DJA. Preparing clients for cognitive behavioral therapy: A randomized pilot study of motivational interviewing for anxiety. Cognitive Therapy and Research. 2006;30:481–498. doi: 10.1007/s10608-006-9016-y.

- Westra HA, Dozois DJA. Integrating motivational interviewing into the treatment of anxiety. In: Arkowitz H, Westra HA, Miller WR, Rollnick S, editors. Motivational interviewing in the treatment of psychological problems. New York, NY: Guilford Press; 2008. pp. 26–56.

- Williams C, Martinez R. Increasing access to CBT: Stepped care and CBT self-help models in practice. Behavioural and Cognitive Psychotherapy. 2008;36:675–683. doi: 10.1017/S1352465808004864.

- Yang D, Sinha T, Adamson D, Rose CP. “Turn on, tune in, drop out”: Anticipating student dropouts in massive open online courses. Paper presented at the NIPS Workshop on Data Driven Education; Lake Tahoe, NV. December 2013.